Abel: Integrating Humanoid Body, Emotions, and Time Perception to Investigate Social Interaction and Human Cognition

Abstract

1. Introduction

- believable aesthetics and expressivity, so that the robot can convey emotions more effectively

- an embodied cognitive system, with an internal emotional representation, that can flexibly adapt its behavior based on both the robot’s internal and external scenarios

- the possibility of endowing the robot with its own subjective time

2. Humanoid Body, Emotions, and Subjective Time

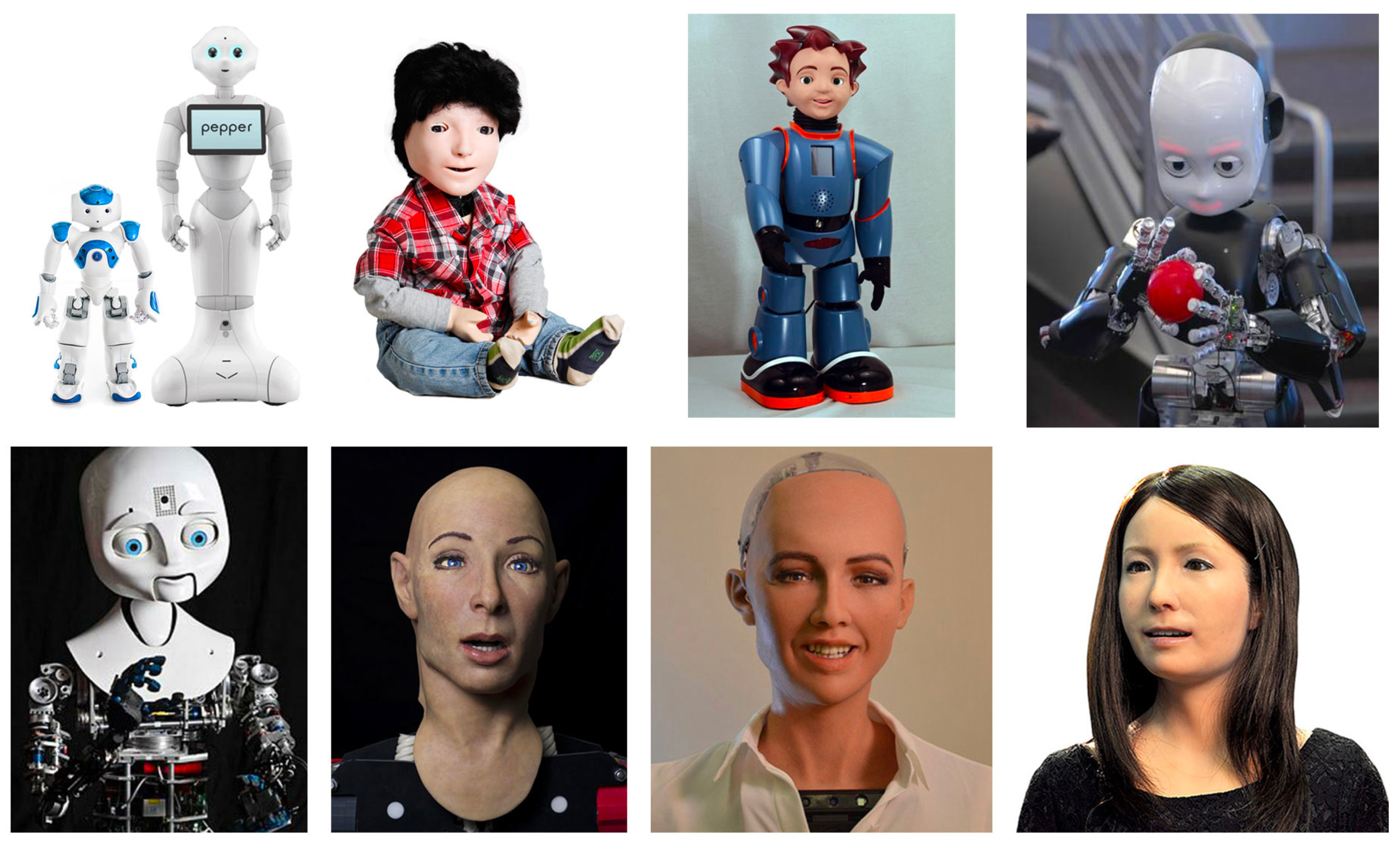

2.1. Humanoid Body

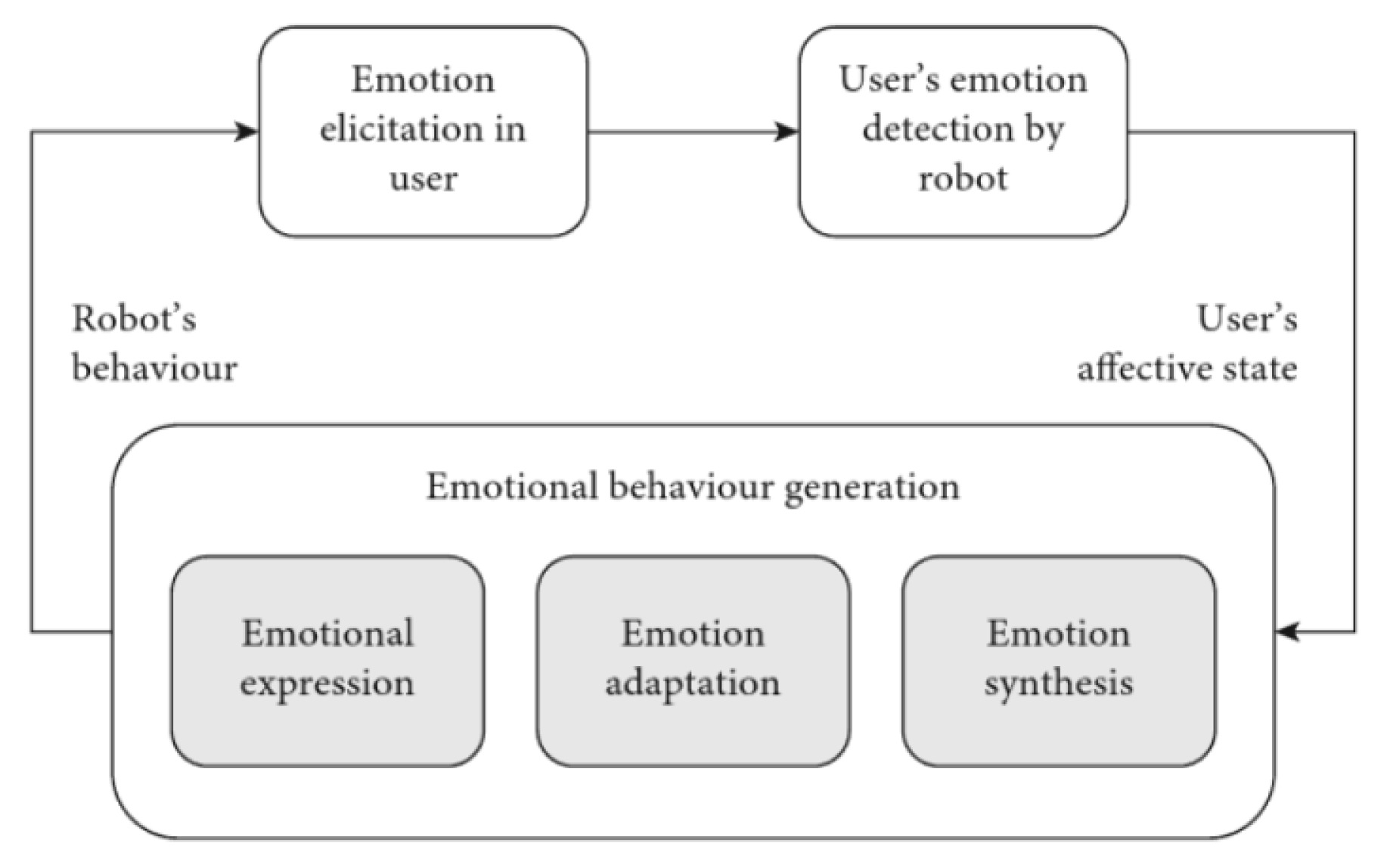

2.2. Humanoid Emotions

2.3. Humanoid Perception of Time

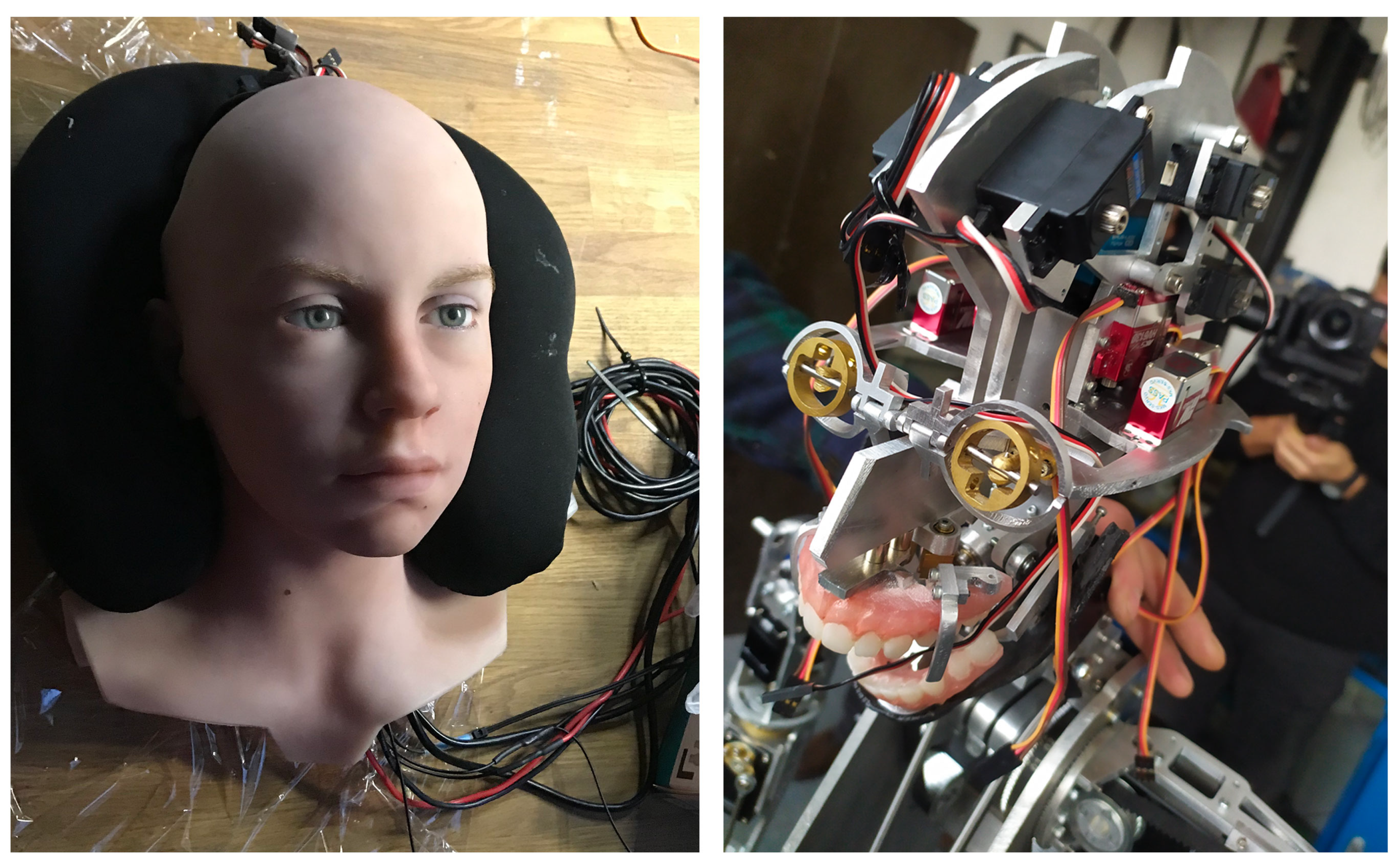

3. The Making of Abel

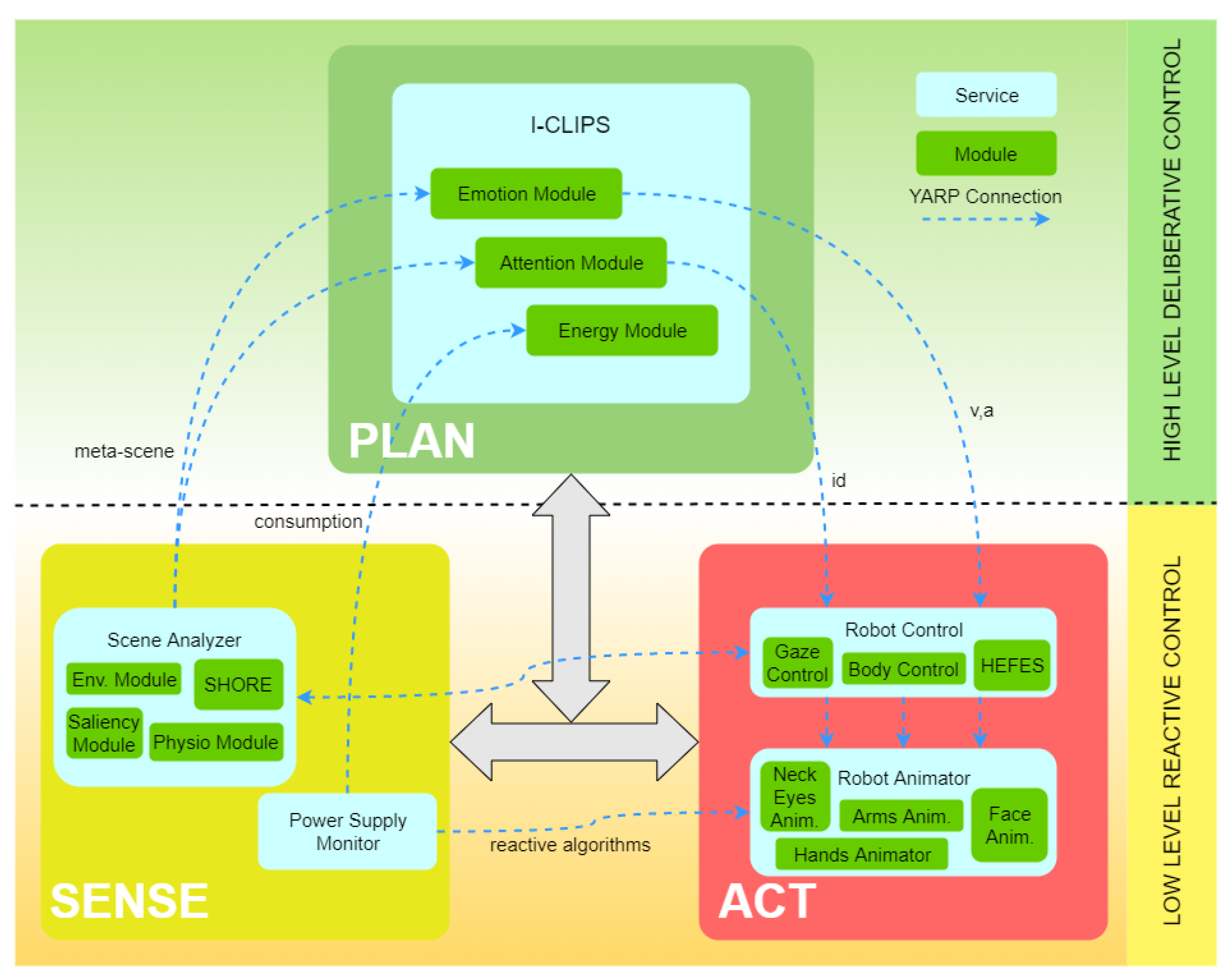

4. The Mind of Abel

5. Discussion and Future Works

5.1. Body

5.2. Emotion

5.3. Time

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Cresswell, K.; Cunningham-Burley, S.; Sheikh, A. Health care robotics: Qualitative exploration of key challenges and future directions. J. Med. Internet Res. 2018, 20, e10410. [Google Scholar] [CrossRef] [PubMed]

- Belpaeme, T.; Kennedy, J.; Ramachandran, A.; Scassellati, B.; Tanaka, F. Social robots for education: A review. Sci. Robot. 2018, 3, eaat5954. [Google Scholar] [CrossRef] [PubMed]

- Reidsma, D.; Charisi, V.; Davison, D.; Wijnen, F.; van der Meij, J.; Evers, V.; Cameron, D.; Fernando, S.; Moore, R.; Prescott, T.; et al. The EASEL project: Towards educational human-robot symbiotic interaction. In Proceedings of the Conference on Biomimetic and Biohybrid Systems, Edinburgh, UK, 19–22 July 2016; pp. 297–306. [Google Scholar]

- Shamsuddin, S.; Yussof, H.; Ismail, L.; Hanapiah, F.A.; Mohamed, S.; Piah, H.A.; Zahari, N.I. Initial response of autistic children in human-robot interaction therapy with humanoid robot NAO. In Proceedings of the 2012 IEEE 8th International Colloquium on Signal Processing and its Applications, Melaka, Malaysia, 23–25 March 2012; pp. 188–193. [Google Scholar]

- Mazzei, D.; Billeci, L.; Armato, A.; Lazzeri, N.; Cisternino, A.; Pioggia, G.; Igliozzi, R.; Muratori, F.; Ahluwalia, A.; De Rossi, D. The face of autism. In Proceedings of the 19th International Symposium in Robot and Human Interactive Communication, Viareggio, Italy, 13–15 September 2010; pp. 791–796. [Google Scholar]

- Kerzel, M.; Strahl, E.; Magg, S.; Navarro-Guerrero, N.; Heinrich, S.; Wermter, S. NICO—Neuro-inspired companion: A developmental humanoid robot platform for multimodal interaction. In Proceedings of the 2017 26th IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN), Lisbon, Portugal, 28 August–1 September 2017; pp. 113–120. [Google Scholar]

- Park, H.W.; Grover, I.; Spaulding, S.; Gomez, L.; Breazeal, C. A model-free affective reinforcement learning approach to personalization of an autonomous social robot companion for early literacy education. In Proceedings of the AAAI Conference on Artificial Intelligence 2019, Honolulu, HI, USA, 27 January–1 February 2019; Volume 33, pp. 687–694. [Google Scholar]

- Diftler, M.A.; Ambrose, R.O.; Tyree, K.S.; Goza, S.; Huber, E. A mobile autonomous humanoid assistant. In Proceedings of the 4th IEEE/RAS International Conference on Humanoid Robots, Santa Monica, CA, USA, 10–12 November 2004; Volume 1, pp. 133–148. [Google Scholar]

- Vouloutsi, V.; Blancas, M.; Zucca, R.; Omedas, P.; Reidsma, D.; Davison, D.; Charisi, V.; Wijnen, F.; van der Meij, J.; Evers, V.; et al. Towards a synthetic tutor assistant: The Easel project and its architecture. In Proceedings of the Conference on Biomimetic and Biohybrid Systems, Edinburgh, UK, 19–22 July 2016; pp. 353–364. [Google Scholar]

- Brooks, R.A.; Breazeal, C.; Irie, R.; Kemp, C.C.; Marjanovic, M.; Scassellati, B.; Williamson, M.M. Alternative essences of intelligence. AAAI/IAAI 1998, 1998, 961–968. [Google Scholar]

- Atkeson, C.G.; Hale, J.G.; Pollick, F.; Riley, M.; Kotosaka, S.; Schaul, S.; Shibata, T.; Tevatia, G.; Ude, A.; Vijayakumar, S.; et al. Using humanoid robots to study human behavior. IEEE Intell. Syst. Their Appl. 2000, 15, 46–56. [Google Scholar] [CrossRef]

- Adams, B.; Breazeal, C.; Brooks, R.A.; Scassellati, B. Humanoid robots: A new kind of tool. IEEE Intell. Syst. Their Appl. 2000, 15, 25–31. [Google Scholar] [CrossRef]

- Kemp, C.C.; Fitzpatrick, P.; Hirukawa, H.; Yokoi, K.; Harada, K.; Matsumoto, Y. Humanoids. Exp. Psychol. 2009, 56, 1–3. [Google Scholar]

- Webb, B. Can robots make good models of biological behaviour? Behav. Brain Sci. 2001, 24, 1033–1050. [Google Scholar] [CrossRef]

- Chatila, R.; Firth-Butterflied, K.; Havens, J.C.; Karachalios, K. The IEEE Global Initiative for Ethical Considerations in Artificial Intelligence and Autonomous Systems [Standards]. IEEE Robot. Autom. Mag. 2017, 24, 110. [Google Scholar] [CrossRef]

- Wang, S.; Lilienfeld, S.O.; Rochat, P. The Uncanny Valley: Existence and Explanations. Rev. Gen. Psychol. 2015, 19, 393–407. [Google Scholar] [CrossRef]

- Mori, M. Bukimi no tani [the uncanny valley]. Energy 1970, 7, 33–35. [Google Scholar]

- Gee, F.; Browne, W.N.; Kawamura, K. Uncanny valley revisited. In Proceedings of the ROMAN 2005, IEEE International Workshop on Robot and Human Interactive Communication, Okayama, Japan, 22 September 2004; pp. 151–157. [Google Scholar]

- Weis, P.P.; Wiese, E. Cognitive conflict as possible origin of the uncanny valley. In Proceedings of the Human Factors and Ergonomics Society Annual Meeting; SAGE Publications Sage CA: Los Angeles, CA, USA, 2017; Volume 61, pp. 1599–1603. [Google Scholar]

- Lepora, N.F.; Verschure, P.; Prescott, T.J. The state of the art in biomimetics. Bioinspir. Biomimetics 2013, 8, 013001. [Google Scholar] [CrossRef] [PubMed]

- Fink, J. Anthropomorphism and human likeness in the design of robots and human-robot interaction. In Proceedings of the International Conference on Social Robotics. Springer, Chengdu, China, 29–31 October 2012; pp. 199–208. [Google Scholar]

- Breazeal, C. Emotion and sociable humanoid robots. Int. J. Hum.-Comput. Stud. 2003, 59, 119–155. [Google Scholar] [CrossRef]

- Chaminade, T.; Cheng, G. Social cognitive neuroscience and humanoid robotics. J. Physiol.-Paris 2009, 103, 286–295. [Google Scholar] [CrossRef] [PubMed]

- Leslie, K.R.; Johnson-Frey, S.H.; Grafton, S.T. Functional imaging of face and hand imitation: Towards a motor theory of empathy. Neuroimage 2004, 21, 601–607. [Google Scholar] [CrossRef] [PubMed]

- Gouaillier, D.; Hugel, V.; Blazevic, P.; Kilner, C.; Monceaux, J.; Lafourcade, P.; Marnier, B.; Serre, J.; Maisonnier, B. Mechatronic design of NAO humanoid. In Proceedings of the 2009 IEEE International Conference on Robotics and Automation, Kobe, Japan, 12–17 May 2009; pp. 769–774. [Google Scholar]

- Pandey, A.K.; Gelin, R. A mass-produced sociable humanoid robot: Pepper: The first machine of its kind. IEEE Robot. Autom. Mag. 2018, 25, 40–48. [Google Scholar] [CrossRef]

- Dautenhahn, K.; Nehaniv, C.L.; Walters, M.L.; Robins, B.; Kose-Bagci, H.; Assif, N.; Blow, M. KASPAR—A minimally expressive humanoid robot for human–robot interaction research. Appl. Bionics Biomech. 2009, 6, 369–397. [Google Scholar] [CrossRef]

- Hanson, D.; Baurmann, S.; Riccio, T.; Margolin, R.; Dockins, T.; Tavares, M.; Carpenter, K. Zeno: A cognitive character. In Proceedings of the AAAI National Conference, Chicago, IL, USA, 13–17 July 2008. [Google Scholar]

- Metta, G.; Sandini, G.; Vernon, D.; Natale, L.; Nori, F. The iCub humanoid robot: An open platform for research in embodied cognition. In Proceedings of the 8th Workshop on Performance Metrics for Intelligent Systems, Gaithersburg, MD, USA, 19–21 August 2008; pp. 50–56. [Google Scholar]

- Allman, T. The Nexi Robot; Norwood House Press: Chicago, IL, USA, 2009. [Google Scholar]

- Hanson, D. Hanson Robotics Website. Available online: https://www.hansonrobotics.com/sophia/ (accessed on 31 December 2020).

- Nishio, S.; Ishiguro, H.; Hagita, N. Geminoid: Teleoperated android of an existing person. Humanoid Robot. New Dev. 2007, 14, 343–352. [Google Scholar]

- Kiverstein, J.; Miller, M. The embodied brain: Towards a radical embodied cognitive neuroscience. Front. Hum. Neurosci. 2015, 9, 237. [Google Scholar] [CrossRef]

- Dautenhahn, K.; Ogden, B.; Quick, T. From embodied to socially embedded agents–implications for interaction-aware robots. Cogn. Syst. Res. 2002, 3, 397–428. [Google Scholar] [CrossRef]

- Pfeifer, R.; Lungarella, M.; Iida, F. Self-organization, embodiment, and biologically inspired robotics. Science 2007, 318, 1088–1093. [Google Scholar] [CrossRef]

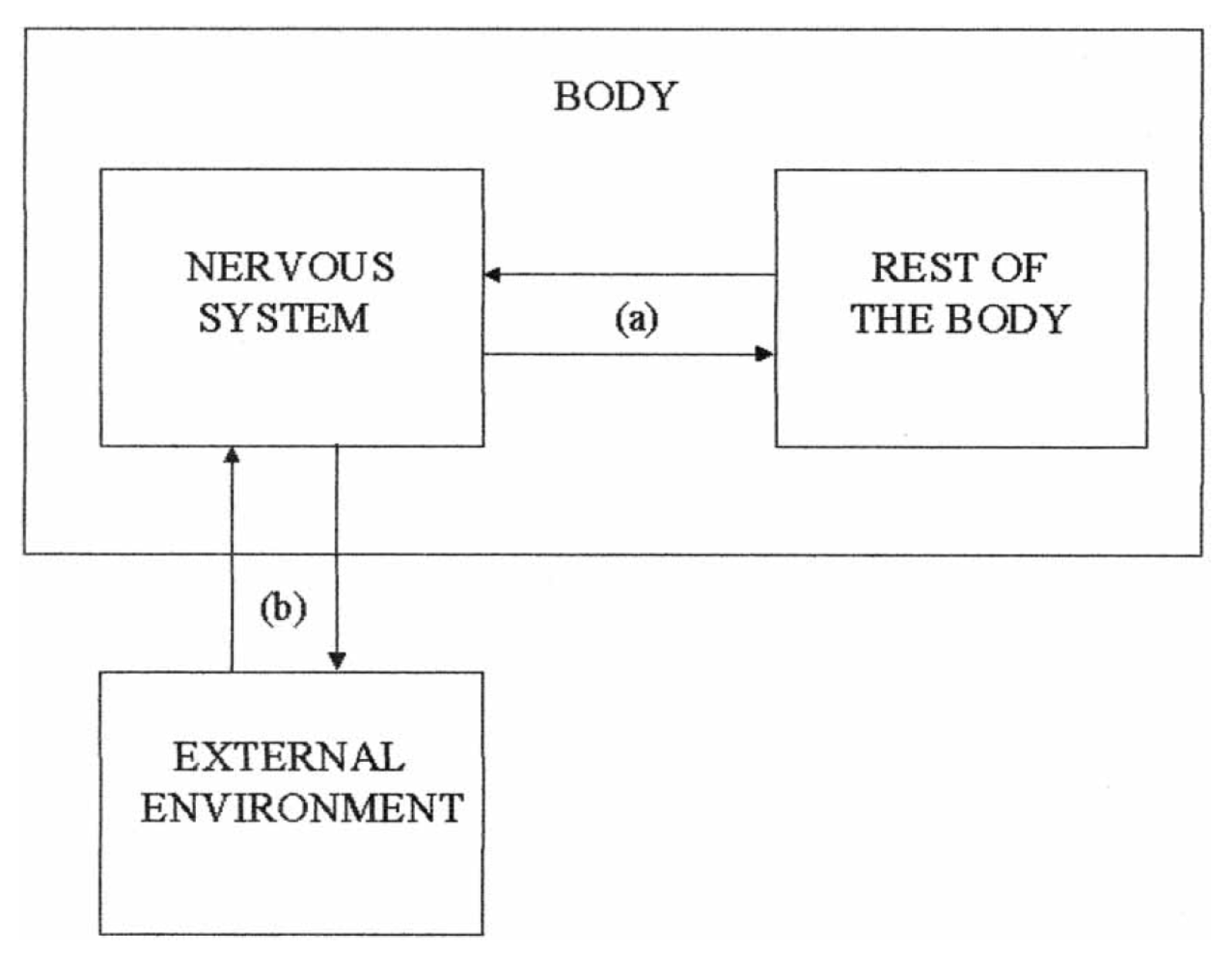

- Parisi, D. The other half of the embodied mind. In Embodied and Grounded Cognition; Frontiers Media: Lausanne, Switzerland, 2011. [Google Scholar]

- Parisi, D. Internal robotics. Connect. Sci. 2004, 16, 325–338. [Google Scholar] [CrossRef]

- Dennett, D.C. Kinds of Minds: Toward an Understanding of Consciousness; Basic Books: New York, NY, USA, 1996. [Google Scholar]

- Helm, B.W. The significance of emotions. Am. Philos. Q. 1994, 31, 319–331. [Google Scholar]

- Dennett, D.C. The Intentional Stance; MIT Press: Cambridge, MA, USA, 1989. [Google Scholar]

- Dennett, D.C. Consciousness Explained; Little, Brown & Company: Boston, MA, USA, 1991. [Google Scholar]

- Damasio, A. Descartes’ Error: Emotion, Reason, and the Human Brain; Grosset/Putnam: New York, NY, USA, 1994. [Google Scholar]

- Ledoux, J. The Emotional Brain: The Mysterious Underpinnings of Emotional Life; Simon & Schuster: New York, NY, USA, 1998. [Google Scholar]

- Bechara, A.; Damasio, H.; Tranel, D.; Damasio, A.R. Deciding advantageously before knowing the advantageous strategy. Science 1997, 275, 1293–1295. [Google Scholar] [CrossRef] [PubMed]

- Damasio, A. The Feeling of What Happens. Body and Emotion in the Making of Consciousness. In Spektrum Der Wissenschaft; Harcourt Brace: San Diego, CA, USA, 2000; p. 104. [Google Scholar]

- Ficocelli, M.; Terao, J.; Nejat, G. Promoting Interactions Between Humans and Robots Using Robotic Emotional Behavior. IEEE Trans. Cybern. 2016, 46, 2911–2923. [Google Scholar] [CrossRef]

- Lazzeri, N.; Mazzei, D.; Greco, A.; Rotesi, A.; Lanatà, A.; De Rossi, D.E. Can a Humanoid Face be Expressive? A Psychophysiological Investigation. Front. Bioeng. Biotechnol. 2015, 3, 64. [Google Scholar] [CrossRef]

- Paiva, A.; Leite, I.; Ribeiro, T. Emotion modeling for social robots. In The Oxford Handbook of Affective Computing; Oxford University Press: Oxford, UK, 2014; pp. 296–308. [Google Scholar]

- Michaud, F.; Pirjanian, P.; Audet, J.; Létourneau, D. Artificial emotion and social robotics. In Distributed Autonomous Robotic Systems 4; Springer: Berlin/Heidelberg, Germany, 2000; pp. 121–130. [Google Scholar]

- Samsonovich, A.V. On a roadmap for the BICA Challenge. Biol. Inspired Cogn. Archit. 2012, 1, 100–107. [Google Scholar] [CrossRef]

- Goertzel, B.; Lian, R.; Arel, I.; De Garis, H.; Chen, S. A world survey of artificial brain projects, Part II: Biologically inspired cognitive architectures. Neurocomputing 2010, 74, 30–49. [Google Scholar] [CrossRef]

- Franklin, S.; Madl, T.; D’mello, S.; Snaider, J. LIDA: A systems-level architecture for cognition, emotion, and learning. IEEE Trans. Auton. Ment. Dev. 2013, 6, 19–41. [Google Scholar] [CrossRef]

- Sun, R. The Motivational and Metacognitive Control in CLARION; Oxford University Press: Oxford, UK, 2007. [Google Scholar]

- Kokinov, B. Micro-level hybridization in the cognitive architecture DUAL. In Connectionist-Symbolic Integration: From Unified to Hybrid Approaches; Psychology Press: East Sussex, UK, 1997; pp. 197–208. [Google Scholar]

- Bach, J. The micropsi agent architecture. In Proceedings of the ICCM-5, International Conference on Cognitive Modeling, Bamberg, Germany, 10–12 April 2003; pp. 15–20. [Google Scholar]

- Hart, D.; Goertzel, B. Opencog: A software framework for integrative artificial general intelligence. In Proceedings of the 2008 Conference on Artificial General Intelligence 2008, Memphis, TN, USA, 1–3 March 2008; pp. 468–472. [Google Scholar]

- Russell, J.A. 13-reading emotion from and into faces: Resurrecting a dimensional-contextual perspective. In The Psychology of Facial Expression; Cambridge University Press: Cambridge, UK, 1997; pp. 295–320. [Google Scholar]

- Lazarus, R.S. Cognition and motivation in emotion. Am. Psychol. 1991, 46, 352. [Google Scholar] [CrossRef]

- Plutchik, R. Emotions: A general psychoevolutionary theory. Approaches Emot. 1984, 1984, 197–219. [Google Scholar]

- Smith, C.A. Dimensions of appraisal and physiological response in emotion. J. Personal. Soc. Psychol. 1989, 56, 339. [Google Scholar] [CrossRef]

- Howard, M.W.; MacDonald, C.J.; Tiganj, Z.; Shankar, K.H.; Du, Q.; Hasselmo, M.E.; Eichenbaum, H. A unified mathematical framework for coding time, space, and sequences in the hippocampal region. J. Neurosci. 2014, 34, 4692–4707. [Google Scholar] [CrossRef] [PubMed]

- MacDonald, C.J.; Lepage, K.Q.; Eden, U.T.; Eichenbaum, H. Hippocampal “time cells” bridge the gap in memory for discontiguous events. Neuron 2011, 71, 737–749. [Google Scholar] [CrossRef]

- O’keefe, J.; Nadel, L. The Hippocampus as a Cognitive Map; Clarendon Press: Oxford, UK, 1978. [Google Scholar]

- Droit-Volet, S.; Meck, W.H. How emotions colour our perception of time. Trends Cogn. Sci. 2007, 11, 504–513. [Google Scholar] [CrossRef] [PubMed]

- Droit-Volet, S.; Gil, S. The emotional body and time perception. Cogn. Emot. 2016, 30, 687–699. [Google Scholar] [CrossRef]

- Zakay, D.; Block, R.A. The role of attention in time estimation processes. In Advances in Psychology; Elsevier: Amsterdam, The Netherlands, 1996; Volume 115, pp. 143–164. [Google Scholar]

- Brown, S.W. Time perception and attention: The effects of prospective versus retrospective paradigms and task demands on perceived duration. Percept. Psychophys. 1985, 38, 115–124. [Google Scholar] [CrossRef]

- Zakay, D. The impact of time perception processes on decision making under time stress. In Time Pressure and Stress in Human Judgment and Decision Making; Springer: Berlin/Heidelberg, Germany, 1993; pp. 59–72. [Google Scholar]

- Droit-Volet, S.; Fayolle, S.; Lamotte, M.; Gil, S. Time, emotion and the embodiment of timing. Timing Time Percept. 2013, 1, 99–126. [Google Scholar] [CrossRef]

- Arel, I.; Rose, D.; Coop, R. Destin: A scalable deep learning architecture with application to high-dimensional robust pattern recognition. In Proceedings of the 2009 AAAI Fall Symposium Series, Arlington, VA, USA, 5–7 November 2009. [Google Scholar]

- Arel, I.; Rose, D.; Karnowski, T. A deep learning architecture comprising homogeneous cortical circuits for scalable spatiotemporal pattern inference. In Proceedings of the NIPS 2009 Workshop on Deep Learning for Speech Recognition and Related Applications, Whistler, BC, Canada, 11–12 December 2009; pp. 23–32. [Google Scholar]

- Project, T. Official Website. Available online: http://timestorm.eu/ (accessed on 31 December 2020).

- Center, E.P.R. University of Pisa. Available online: https://www.centropiaggio.unipi.it/ (accessed on 31 December 2020).

- Hoegen, G. Biomimic Studio. Available online: https://www.biomimicstudio.org/ (accessed on 31 December 2020).

- Breazeal, C.L. Sociable Machines: Expressive Social Exchange between Humans and Robots. Ph.D. Thesis, Massachusetts Institute of Technology, Cambridge, MA, USA, 2000. [Google Scholar]

- Kozima, H.; Nakagawa, C.; Yano, H. Can a robot empathize with people? Artif. Life Robot. 2004, 8, 83–88. [Google Scholar] [CrossRef]

- Cominelli, L.; Mazzei, D.; De Rossi, D.E. SEAI: Social emotional artificial intelligence based on Damasio’s theory of mind. Front. Robot. AI 2018, 5, 6. [Google Scholar] [CrossRef]

- Metta, G.; Fitzpatrick, P.; Natale, L. YARP: Yet another robot platform. Int. J. Adv. Robot. Syst. 2006, 3, 43–48. [Google Scholar] [CrossRef]

- Mazzei, D.; Cominelli, L.; Lazzeri, N.; Zaraki, A.; De Rossi, D. I-clips brain: A hybrid cognitive system for social robots. In Biomimetic and Biohybrid Systems; Springer: Berlin/Heidelberg, Germany, 2014; pp. 213–224. [Google Scholar]

- Zaraki, A.; Pieroni, M.; De Rossi, D.; Mazzei, D.; Garofalo, R.; Cominelli, L.; Dehkordi, M.B. Design and Evaluation of a Unique Social Perception System for Human-Robot Interaction. IEEE Trans. Cogn. Dev. Syst. 2017, 9, 341–355. [Google Scholar] [CrossRef]

- Mazzei, D.; Lazzeri, N.; Hanson, D.; De Rossi, D. HEFES: An hybrid engine for facial expressions synthesis to control human-like androids and avatars. In Proceedings of the 4th IEEE RAS & EMBS International Conference on Biomedical Robotics and Biomechatronics (BioRob), Rome, Italy, 24–27 June 2012; pp. 195–200. [Google Scholar]

- Giarratano, J.C.; Riley, G. Expert Systems; PWS Publishing Co.: Boston, MA, USA, 1998. [Google Scholar]

- Cominelli, L.; Mazzei, D.; Pieroni, M.; Zaraki, A.; Garofalo, R.; De Rossi, D. Damasio’s Somatic Marker for Social Robotics: Preliminary Implementation and Test. In Biomimetic and Biohybrid Systems; Springer: Berlin/Heidelberg, Germany, 2015; pp. 316–328. [Google Scholar]

- Cominelli, L.; Garofalo, R.; De Rossi, D. The Influence of Emotions on Time Perception in a Cognitive System for Social Robotics. In Proceedings of the 2019 28th IEEE International Conference on Robot and Human Interactive Communication (RO-MAN), New Delhi, India, 14–18 October 2019; pp. 1–6. [Google Scholar]

- Cominelli, L.; Mazzei, D.; Carbonaro, N.; Garofalo, R.; Zaraki, A.; Tognetti, A.; De Rossi, D. A Preliminary Framework for a Social Robot “Sixth Sense”. In Proceedings of the Conference on Biomimetic and Biohybrid Systems, Edinburgh, UK, 19–22 July 2016; pp. 58–70. [Google Scholar]

- Russell, J.A. Is there universal recognition of emotion from facial expression? A review of the cross-cultural studies. Psychol. Bull. 1994, 115, 102–141. [Google Scholar] [CrossRef] [PubMed]

- Maniadakis, M.; Trahanias, P. Temporal cognition: A key ingredient of intelligent systems. Front. Neurorobot. 2011, 5, 2. [Google Scholar] [CrossRef] [PubMed]

- Maniadakis, M.; Trahanias, P. Time models and cognitive processes: A review. Front. Neurorobot. 2014, 8, 7. [Google Scholar] [CrossRef] [PubMed][Green Version]

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Cominelli, L.; Hoegen, G.; De Rossi, D. Abel: Integrating Humanoid Body, Emotions, and Time Perception to Investigate Social Interaction and Human Cognition. Appl. Sci. 2021, 11, 1070. https://doi.org/10.3390/app11031070