Expressing Robot Personality through Talking Body Language

Abstract

1. Introduction

2. Emotion Expression in Robots

3. Sentiment to Expression Conversion

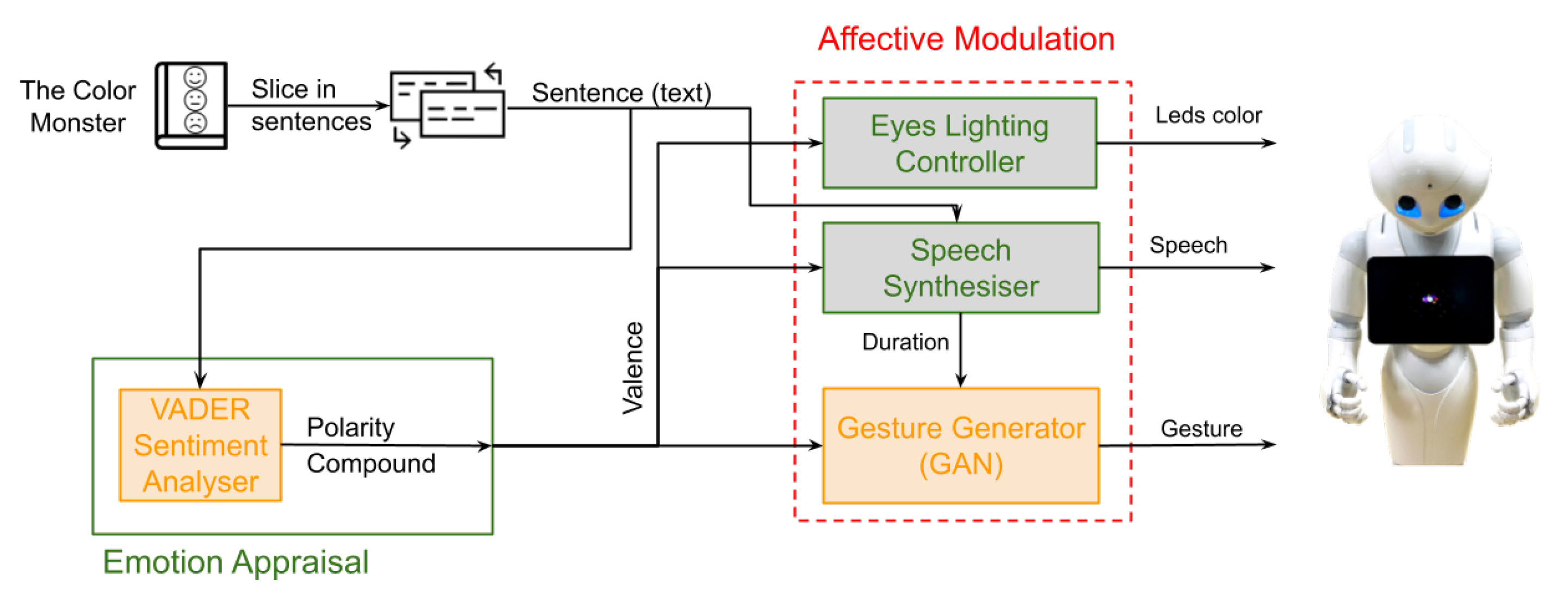

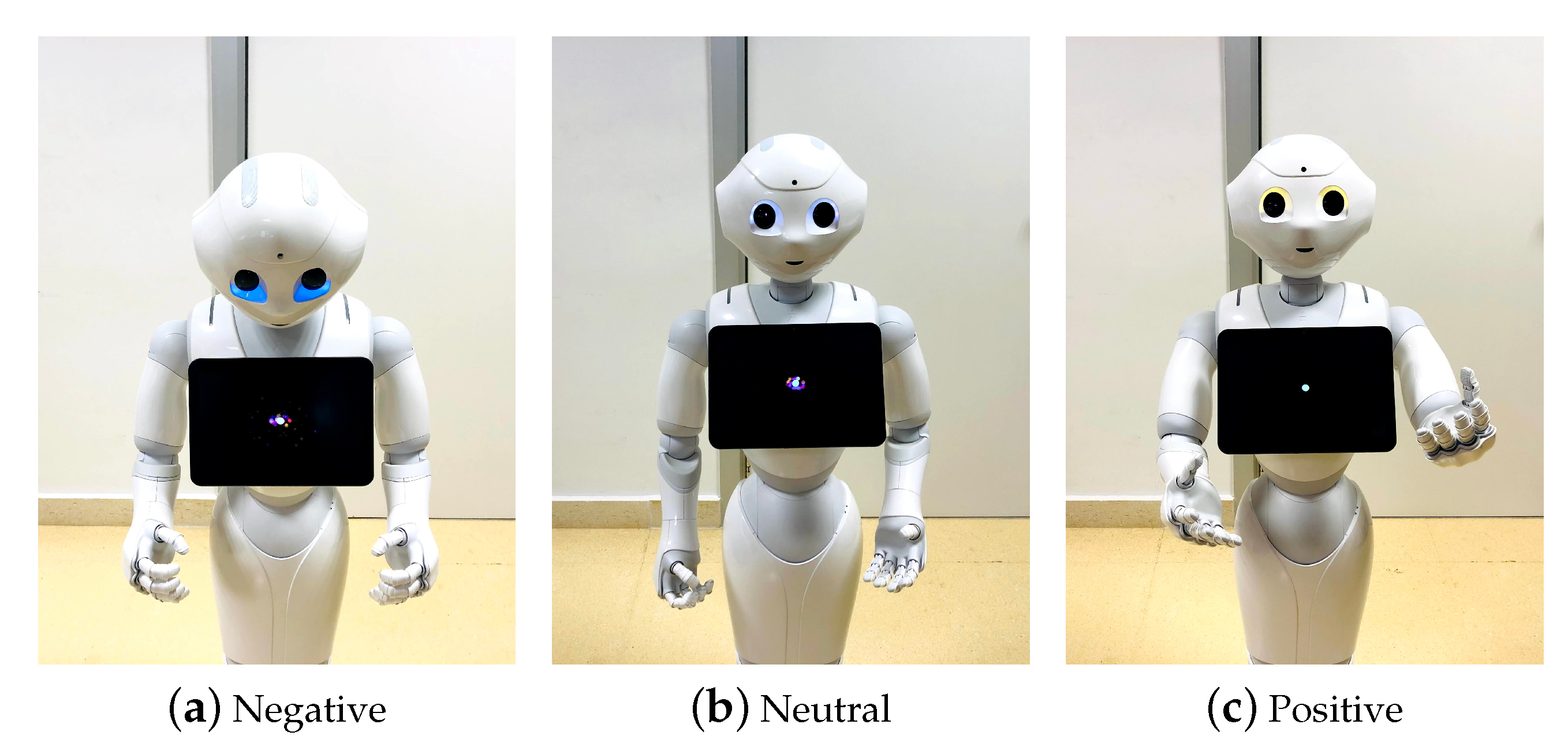

3.1. Affective Input

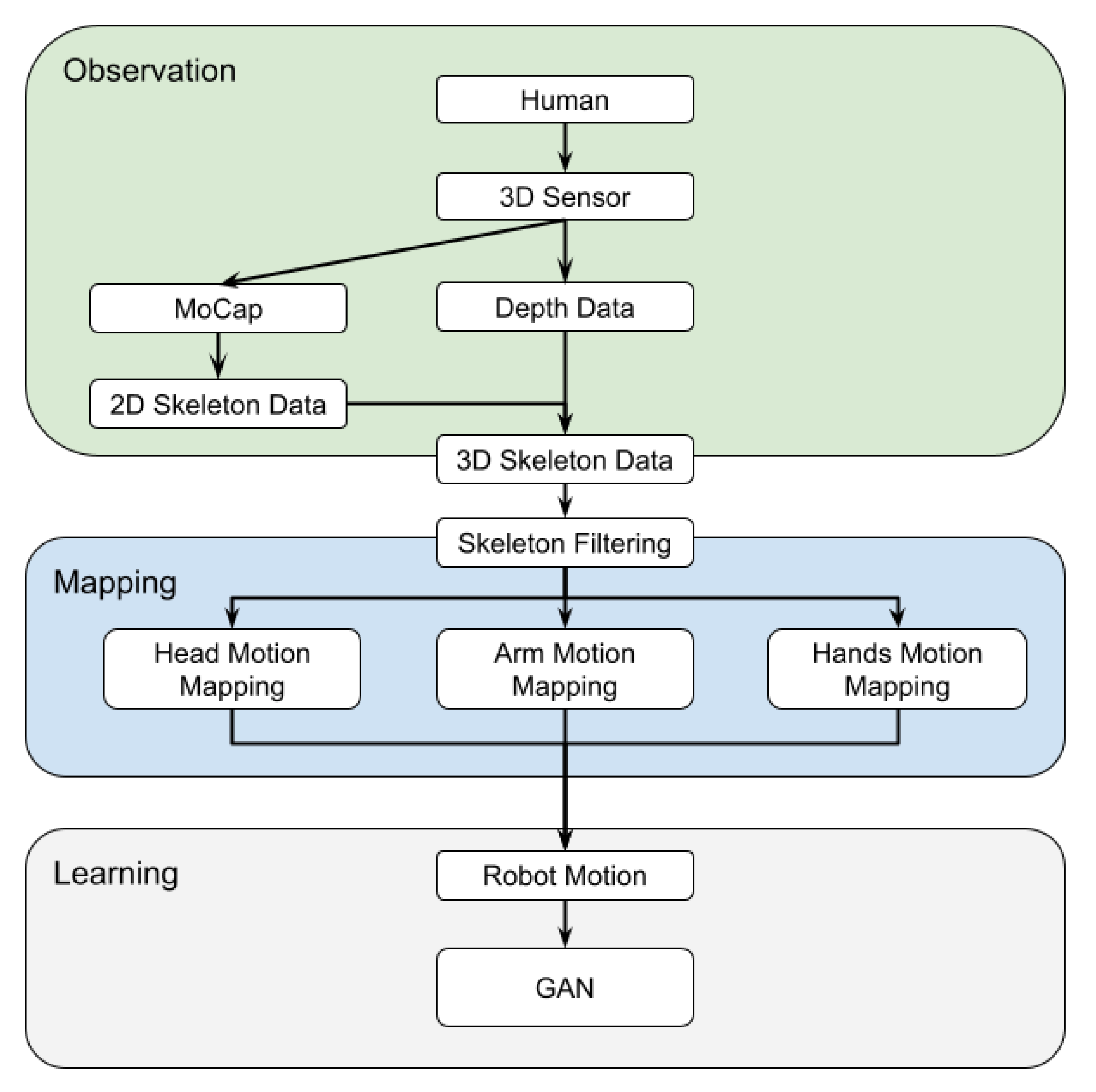

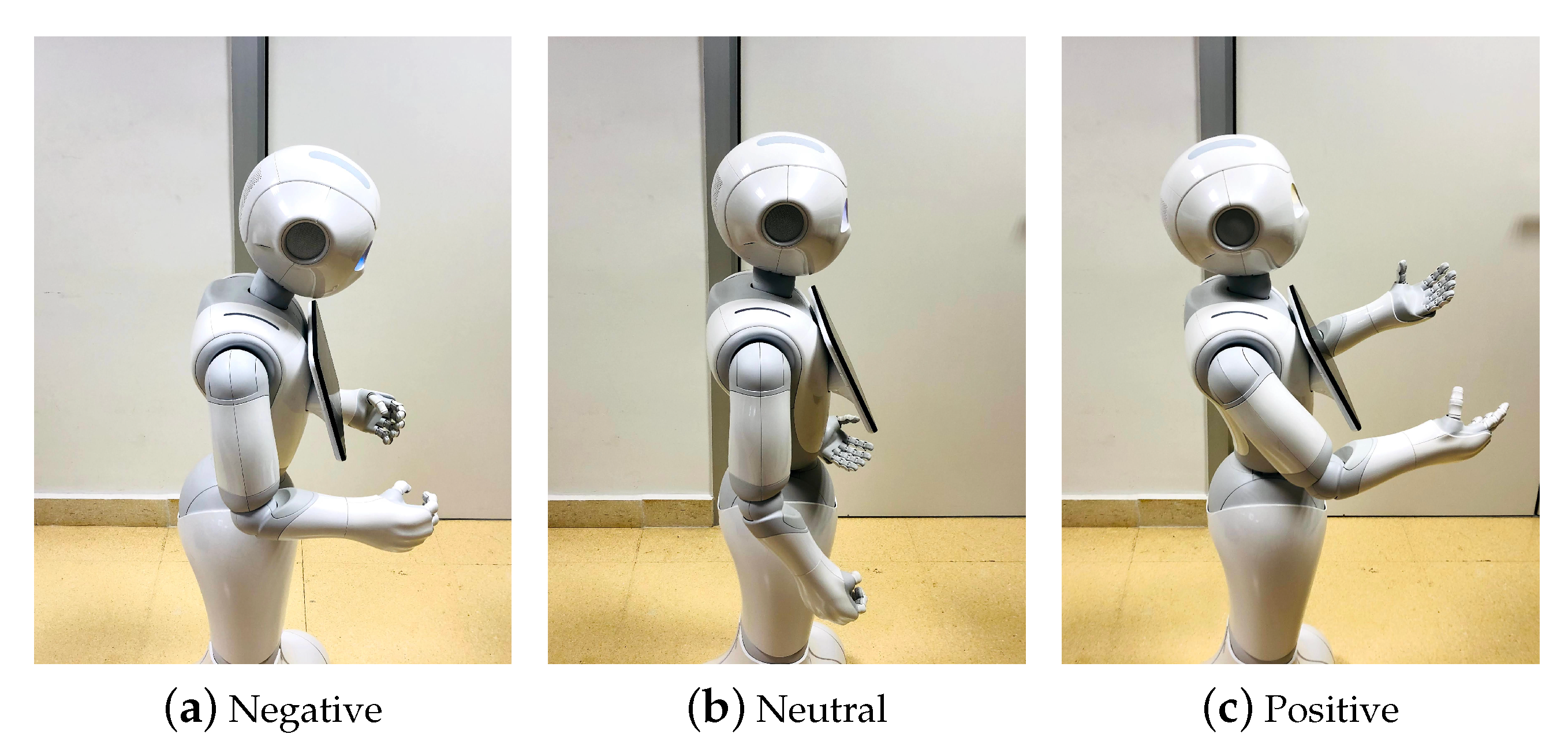

3.2. Gesticulation Behavior

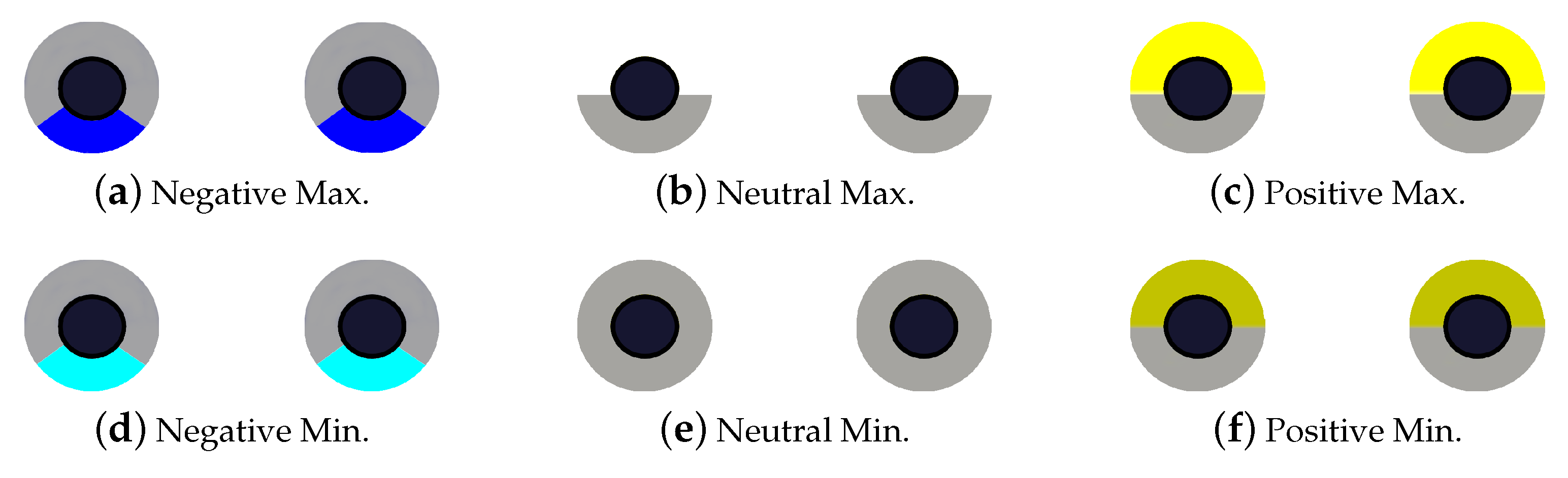

3.3. Affective Modulation

4. Adaptive Personality

5. Results

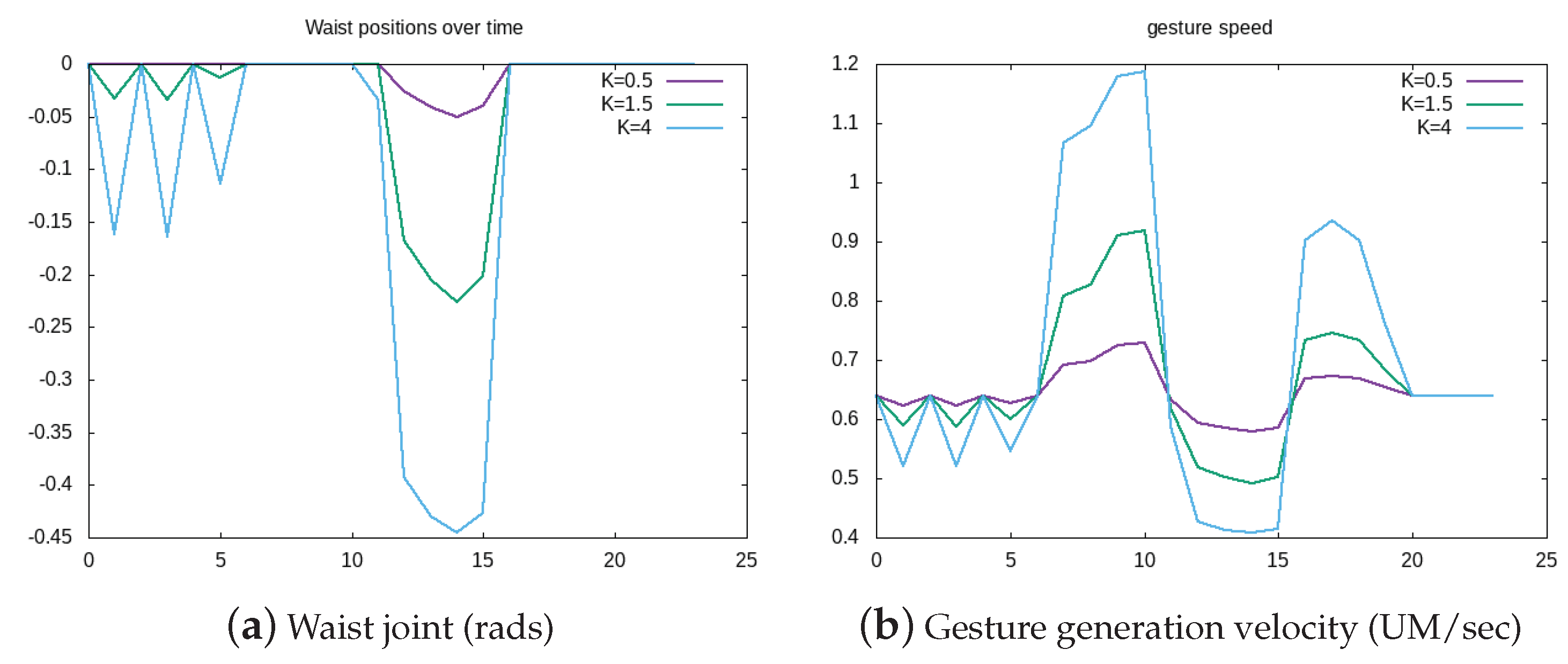

5.1. Scenario 1

5.2. Scenario 2

6. Conclusions and Further Work

Author Contributions

Funding

Conflicts of Interest

| Sentence | Flair | VADER | TextBlob |
|---|---|---|---|
| Do not you feel much better | −0.963 | 0.4404 | 0.5 |
| I see you are feeling something new | 0.999 | 0.128 | 0.136 |
| Tell me how do you feel now | 0.899 | 0.0 | 0.0 |
| When you are sad you hide and want to be alone | 0.998 | −0.6705 | −0.5 |
| You do not want to do anything except maybe cry | −0.654 | −0.5142 | 0.0 |
| When you are afraid, you feel tiny | 0.815 | 0.0 | −0.3 |
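The scores in the table can be compared by reducing each one to a coarse polarity label. The following is a minimal sketch, not the authors' procedure: it assumes all three columns are signed scores in [-1, 1], and it borrows the ±0.05 neutral band commonly used for VADER compound scores and applies it to Flair and TextBlob as well. The names `polarity`, `polarity_table` and `unanimous` are hypothetical helpers introduced only for illustration.

```python
# Scores copied from the table: (sentence, Flair, VADER, TextBlob).
SCORES = [
    ("Do not you feel much better", -0.963, 0.4404, 0.5),
    ("I see you are feeling something new", 0.999, 0.128, 0.136),
    ("Tell me how do you feel now", 0.899, 0.0, 0.0),
    ("When you are sad you hide and want to be alone", 0.998, -0.6705, -0.5),
    ("You do not want to do anything except maybe cry", -0.654, -0.5142, 0.0),
    ("When you are afraid, you feel tiny", 0.815, 0.0, -0.3),
]


def polarity(score, neutral_band=0.05):
    """Map a signed score to 'pos', 'neg' or 'neu'.

    neutral_band is an assumed threshold (the usual VADER convention),
    not a value taken from the article.
    """
    if score >= neutral_band:
        return "pos"
    if score <= -neutral_band:
        return "neg"
    return "neu"


def polarity_table(rows):
    """Return (sentence, flair_label, vader_label, textblob_label) tuples."""
    return [(s, polarity(f), polarity(v), polarity(t)) for s, f, v, t in rows]


def unanimous(rows):
    """Return the sentences on which all three tools give the same label."""
    return [s for s, *labels in polarity_table(rows) if len(set(labels)) == 1]


if __name__ == "__main__":
    for row in polarity_table(SCORES):
        print(row)
    print("Unanimous:", unanimous(SCORES))
```

Under these assumptions, only "I see you are feeling something new" receives the same label from all three tools; for every other sentence at least one tool disagrees on polarity.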

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zabala, U.; Rodriguez, I.; Martínez-Otzeta, J.M.; Lazkano, E. Expressing Robot Personality through Talking Body Language. Appl. Sci. 2021, 11, 4639. https://doi.org/10.3390/app11104639
