1. Introduction

A mobile mapping system (MMS) can be defined as an integrated sensing system for the collection of geospatial data from a moving platform. At its core, an MMS comprises an integrated GNSS/IMU system for determination of the platform state and one or more imaging sensors. It may include additional navigation sensors such as a wheel revolution counter. The trajectory estimates from the navigation system allow direct georeferencing of acquired image data. The two main types of imaging sensors found on board MMSs are passive cameras and laser scanners. Since the focus of this work is the latter, an MMS is henceforth understood to mean a laser scanning based system. Early MMSs were developed for land-vehicle platforms but have since evolved into backpack, hand cart, water vehicle and unmanned aerial vehicle (UAV) embodiments in support of a vast range of applications. The work described herein focuses on accuracy testing of a land-vehicle MMS, though the methods are expected to be broadly applicable, with suitable adaptation, to other platforms such as UAVs.

According to [1], MMSs may be classified according to the accuracy they can achieve, as either mapping grade or survey grade. Achieving the higher, survey-grade accuracy relies on accurate trajectory determination, which is particularly challenging in GNSS-denied and multipath environments such as urban canyons. Kersting and Friess [1] report a post-mission quality assurance procedure for survey-grade MMSs. They perform a fully automated self-calibration, based on planar features, to estimate corrections to the system parameters (boresight and lever arm) and to the trajectory. The plane-based system calibration method has been used for airborne [2], UAV [3] and mobile [4,5] laser scanner systems, and the modelling has been extended to include catenary curves [6] and poles [7]. The trajectory model reported by [1] includes second-order corrections as a function of time. The trajectory is segmented according to position accuracy to allow piecewise modelling with continuity and smoothness constraints.

Methods to assess and improve the accuracy of MMSs have been investigated by many authors. Signalized control points [8] and signalized tie points [9] observed in multiple MMS passes have been used to correct navigation system errors, while check points [10] can be employed to quantify the errors independently. Total station and GNSS surveys yield accurately determined point coordinates [11]. However, point-based assessment suffers from the great disparity in data density between the MMS point cloud and the independently surveyed points.

In recognition of this shortcoming, a number of researchers have developed outdoor test fields for MMS accuracy assessment. Generally speaking, they comprise a dense reference point cloud of a large urban area through which the MMS can be driven. The reference point cloud is collected by static terrestrial laser scanning (TLS) that is georeferenced with static or RTK GNSS methods. The accuracy assessment of MMS data can be performed by comparing the two point clouds, though the features and methods used for comparison vary.

Kaartinen et al. [12] detail the testing of several MMSs over a 1.7 km loop. They quantify system accuracy by comparing the coordinates of independently surveyed point features. The reference targets are poles, building corners and curb corners extracted from the point cloud. Xu et al. [13] report a mobile laser scanner test field of similar size (a 1000 m × 550 m area), where the assessment is conducted with 45 control points. Fryskowska and Wróblewski [14] also use TLS data as a reference to assess the accuracy of an MMS. Their assessment is performed using a large number of check points and the lengths of building features. In their manual for laser-based mobile mapping, Johnson et al. [15] report two test sites (urban and rural), each about 3 km long, established with control points for performance testing of MMSs. Accuracy is assessed with signalized control points and TLS reference data; the latter is used at a specific site to estimate bridge clearance. Toschi et al. [16] use TLS reference data of two buildings in a city center to evaluate MMS accuracy. Registration by the iterative closest point (ICP) method [17] is performed on segmented building data, and various statistical measures, including higher-order moments, are computed for the assessment. Rodríguez-Gonzálvez et al. [18] constructed a 2.5 km long TLS point cloud of a medieval wall for the accuracy assessment of their MMS. However, the assessment was performed over only a small section of the wall.

The accuracy assessment of indoor MMSs (IMMSs) is described by [19]. Three IMMSs were tested using a pre-surveyed cultural heritage site as a reference test field, with TLS data of the site serving as ground truth. IMMS data were collected on two pre-defined paths, one closed and one open. Subsets of the data were registered to the reference data by the ICP method, and a number of different data comparisons are reported to quantify the accuracy of each system.

The assessment of MMS accuracy is not, however, confined to the built environment. Rönnholm et al. [20] evaluate the quality of a backpack laser MMS in a forest relative to reference data collected with UAV and TLS. The evaluation is performed by comparing data patches, each comprising 10 s of data, using ICP. The patch duration is a tradeoff between having enough topographic variation in the data and limiting changes in system orientation. Performing independent ICPs creates discontinuities between patches, so the parameters of adjacent patches are modelled as (linearly) time dependent.

The past decade has seen tremendous growth in the use of unmanned aerial vehicles for airborne mapping. Though cameras are the ubiquitous sensors on board mapping UAVs, the deployment of lightweight laser scanner systems is becoming more commonplace. Accordingly, assessing the accuracy of point clouds derived from such systems is of great importance. Perhaps not surprisingly, a number of researchers have employed an independently observed reference point cloud for this purpose. Several different laser scanner systems appear in the literature, including the ibeo LUX LiDAR sensor [21], the SICK LMS511 PRO [22], the RIEGL miniVUX lidar [23], and the HDL-32E [24], VLP-16 Puck HI-RES [3] and VLP-16 [25] models from Velodyne.

Bakuła et al. [21] report on the assessment of UAV point cloud accuracy in support of levee monitoring using signalized points and cross sections. The same group used airborne laser scanner and ground survey data in a later assessment [25]. A multi-faceted assessment of UAV laser scanner data quality over a landslide area is described by [23]. Strip adjustment quality, overall digital elevation model accuracy, point density and change detection were assessed by comparing the UAV data with TLS, total station and GNSS data. The use of TLS point clouds of single buildings and their surroundings as reference data is reported by [22,24].

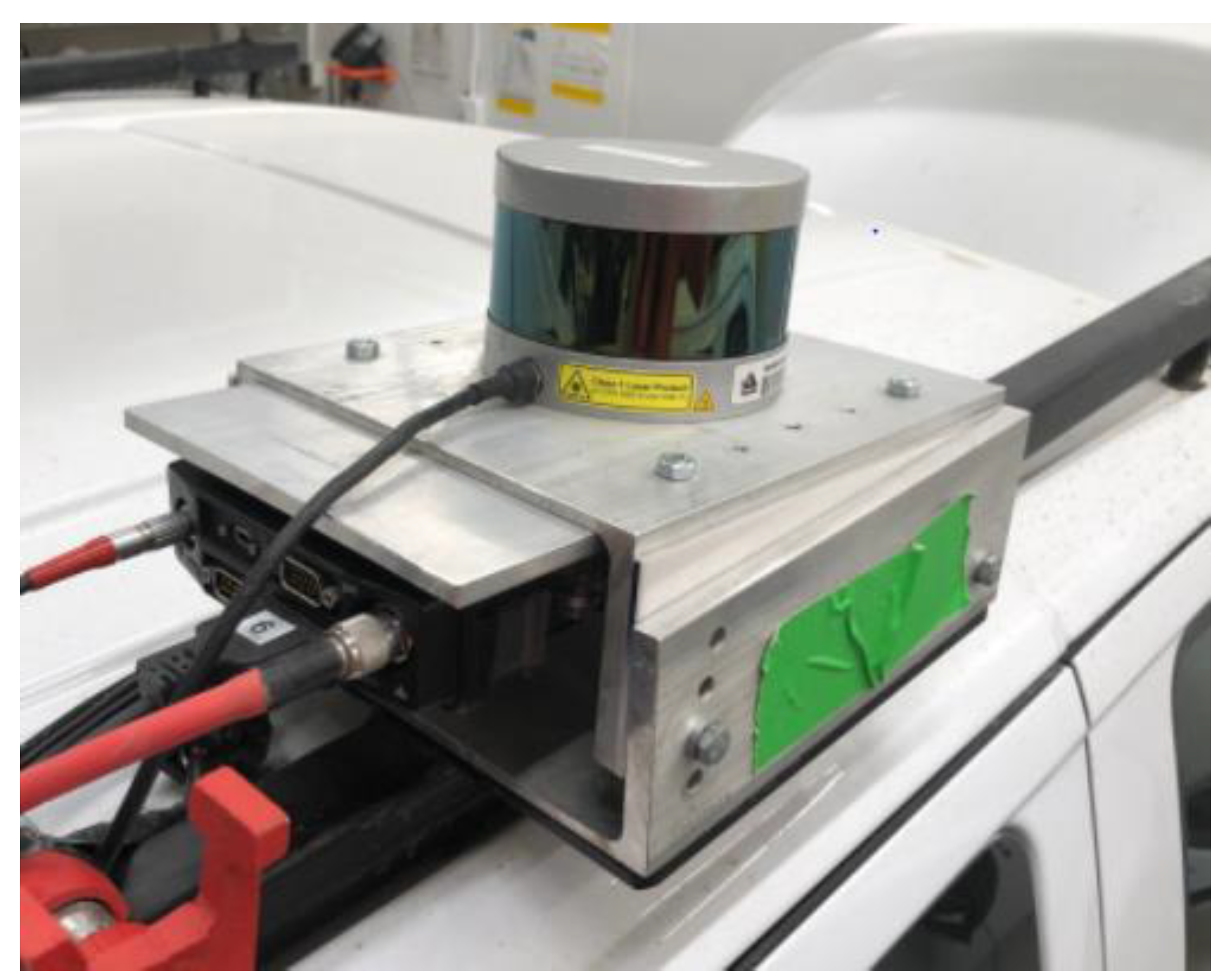

Clearly, recent activities found in the literature indicate the importance of evaluating the metric performance of lightweight laser scanning systems used in MMSs, regardless of whether they are deployed on a land vehicle or a UAV platform. This need is further emphasized by the increased use of low-cost microelectromechanical systems (MEMS) sensors in the navigation system. This work presents the design of a fully automated framework for land-vehicle MMS accuracy assessment that compares a mobile laser scanner point cloud with a reference point cloud of a dedicated testing facility. The establishment of the testing facility, which comprises several downtown city blocks (a challenging GNSS environment), is also detailed. Multiple datasets were captured with an MMS fitted with a Velodyne VLP-16 and three different MEMS sensors to demonstrate the effectiveness of the proposed method. A detailed evaluation of these sensors is presented following the experiment description.

2. Methodology

Before describing the proposed methodology for lightweight laser scanner performance assessment, it is important to review the kinematic MMS positioning equation. The mapping frame (m) coordinates of a point p, $\mathbf{r}_p^m(t)$, observed by an MMS can be expressed as follows:

$$\mathbf{r}_p^m(t) = \mathbf{r}_b^m(t) + \mathbf{R}_b^m(t)\left(\mathbf{a}^b + \mathbf{R}_s^b\,\mathbf{r}_p^s(t)\right) \tag{1}$$

where $\mathbf{r}_b^m(t)$ is the position of the body frame (b) origin; $\mathbf{R}_b^m(t)$ is the body frame attitude matrix, which is parameterized in terms of the time-varying roll, pitch and yaw angles and represents the rotational transformation from the body frame to the mapping frame; $\mathbf{a}^b$ is the lever arm offset vector between the sensor (laser scanner) frame (s) and the body frame (the IMU axes); $\mathbf{R}_s^b$ is the boresight matrix, parameterized in terms of three Cardan angles, which represents the rotation from the sensor frame to the body frame; and $\mathbf{r}_p^s(t)$ is the position vector of point p in the sensor frame. For a Velodyne laser scanner, the vector $\mathbf{r}_p^s(t)$ is a function of the observed range, the rotation angle and the vertical angle of each of its 16 lasers, as well as the scanner's interior calibration parameters. Additional details about the laser scanner are given later.
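To make the direct georeferencing of Equation (1) concrete, the following is a minimal Python/NumPy sketch for a single laser return. It is not the authors' implementation. It assumes a yaw-pitch-roll (z-y-x) rotation sequence for the attitude matrix, which the text does not specify, and it uses a simplified spherical-to-Cartesian conversion of the Velodyne observation (range, rotation angle, vertical angle) in the style of the VLP-16 user manual, ignoring the scanner's interior calibration parameters. All function names are hypothetical.

```python
import numpy as np


def rot_x(a):
    """Elementary rotation about the x axis (angle in radians)."""
    c, s = np.cos(a), np.sin(a)
    return np.array([[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]])


def rot_y(a):
    """Elementary rotation about the y axis (angle in radians)."""
    c, s = np.cos(a), np.sin(a)
    return np.array([[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]])


def rot_z(a):
    """Elementary rotation about the z axis (angle in radians)."""
    c, s = np.cos(a), np.sin(a)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])


def attitude_matrix(roll, pitch, yaw):
    """R_b^m under an ASSUMED yaw-pitch-roll (z-y-x) rotation sequence."""
    return rot_z(yaw) @ rot_y(pitch) @ rot_x(roll)


def velodyne_point(rng, azimuth, elevation):
    """Sensor-frame vector r_p^s from range, rotation (azimuth) and vertical angle.

    Simplified model: the scanner's interior calibration parameters are ignored.
    """
    return np.array([
        rng * np.cos(elevation) * np.sin(azimuth),
        rng * np.cos(elevation) * np.cos(azimuth),
        rng * np.sin(elevation),
    ])


def georeference(r_b_m, R_b_m, a_b, R_s_b, r_p_s):
    """Equation (1): r_p^m = r_b^m + R_b^m (a^b + R_s^b r_p^s)."""
    return r_b_m + R_b_m @ (a_b + R_s_b @ r_p_s)


# Example with made-up values for one return (identity boresight assumed).
r_p_s = velodyne_point(20.0, np.radians(30.0), np.radians(-15.0))
R_b_m = attitude_matrix(np.radians(0.5), np.radians(-1.0), np.radians(45.0))
r_p_m = georeference(np.array([500.0, 200.0, 1050.0]), R_b_m,
                     np.array([0.10, 0.00, 0.30]), np.eye(3), r_p_s)
print(r_p_m)
```

A production implementation would additionally have to reconcile the navigation-frame convention (for example NED versus ENU) with the 3TM mapping frame; that step is deliberately left out of this sketch.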

All quantities are considered to be time-varying except for the elements of the boresight matrix and the lever arm vector, which are pre-calibrated and treated as constants. The mapping frame in this project was the 3TM projection with a central meridian of 114° W. The point coordinates given by Equation (1) are prone to many error sources. If the mounting parameters (boresight and lever arm) have been accurately calibrated, the remaining errors can be attributed to the navigation and laser scanner systems. MMS position and orientation estimates can be severely biased in GNSS-denied and high-multipath environments such as urban canyons, and they can vary rapidly with time if a low-cost IMU is used.

The accuracy assessment methodology is based on spatial comparison of an MMS (kinematic) point cloud and a GNSS/IMU-independent (static) point cloud. The kinematic point cloud possesses variable spatial accuracy at different temporal points due to the nature of the MMS data collection. For instance, areas with sufficient GNSS coverage feature high spatial quality, while areas with a lack of GNSS coverage may feature lower spatial quality, depending on the aiding provided by the IMU for the navigation solution. On the other hand, the static point cloud possesses almost constant accuracy since it is based on a precise multi-station terrestrial laser scanning survey that does not rely on kinematic GNSS/IMU position and orientation estimates. A spatial comparison between the kinematic point cloud and the static one will indicate any discrepancies between the two datasets.

One well-established way to derive spatial discrepancies between point clouds is 3D registration [26]. The well-known iterative closest point method [17] can handle registration between point clouds from different sources and with variable point density. ICP registration yields six Helmert transformation parameters (three translations and three rotation angles), which are used as the measure of the spatial quality of the kinematic point cloud. The scale parameter is not estimated in the ICP since laser scanners preserve a unit scale factor. If the kinematic point cloud has high spatial quality, it will match the static point cloud, and the six transformation parameters will be close to zero following the ICP registration. Any discrepancies between the two point clouds will be indicated by large, non-zero values of the transformation parameters.
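To illustrate how the six parameters can be obtained, a minimal point-to-point ICP sketch in Python/NumPy follows. The source does not state which ICP variant or software was used; this sketch assumes the basic point-to-point formulation with a closed-form SVD (Kabsch) least-squares update and no scale. Nearest neighbours are found by brute force, which is practical only for small clouds (a k-d tree such as `scipy.spatial.cKDTree` would be used on real data), and the rotation angles are extracted under an assumed z-y-x convention. Function names are hypothetical.

```python
import numpy as np


def best_rigid_transform(src, dst):
    """Least-squares R, t minimizing sum ||dst_i - (R src_i + t)||^2 (Kabsch/SVD)."""
    c_src, c_dst = src.mean(axis=0), dst.mean(axis=0)
    H = (src - c_src).T @ (dst - c_dst)          # 3x3 cross-covariance matrix
    U, _, Vt = np.linalg.svd(H)
    d = np.sign(np.linalg.det(Vt.T @ U.T))       # guard against a reflection
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = c_dst - R @ c_src
    return R, t


def icp(src, ref, max_iter=50, tol=1e-10):
    """Rigidly align `src` (kinematic slice) to `ref` (static cloud), scale fixed at 1."""
    R_tot, t_tot = np.eye(3), np.zeros(3)
    cur = src.copy()
    prev_err = err = np.inf
    for _ in range(max_iter):
        # Brute-force closest points (O(N*M) memory): suitable for demos only.
        d2 = ((cur[:, None, :] - ref[None, :, :]) ** 2).sum(axis=2)
        matched = ref[d2.argmin(axis=1)]
        R, t = best_rigid_transform(cur, matched)
        cur = cur @ R.T + t
        R_tot, t_tot = R @ R_tot, R @ t_tot + t  # compose with previous increments
        err = np.mean(((cur - matched) ** 2).sum(axis=1))
        if abs(prev_err - err) < tol:
            break
        prev_err = err
    return R_tot, t_tot, err


def helmert_parameters(R, t):
    """Three translations plus roll, pitch, yaw (radians), assuming R = Rz Ry Rx."""
    roll = np.arctan2(R[2, 1], R[2, 2])
    pitch = -np.arcsin(np.clip(R[2, 0], -1.0, 1.0))
    yaw = np.arctan2(R[1, 0], R[0, 0])
    return (*t, roll, pitch, yaw)


# Synthetic check: a 1 m grid displaced by a 1 degree yaw and a small shift.
g = np.arange(6.0)
ref = np.stack(np.meshgrid(g, g, g, indexing="ij"), axis=-1).reshape(-1, 3)
a = np.radians(1.0)
R0 = np.array([[np.cos(a), -np.sin(a), 0.0],
               [np.sin(a), np.cos(a), 0.0],
               [0.0, 0.0, 1.0]])
src = ref @ R0.T + np.array([0.05, -0.03, 0.02])
R, t, rms = icp(src, ref)
print(helmert_parameters(R, t))
```

Because the displacement in this toy example is much smaller than the grid spacing, every closest point is the true correspondence and the SVD step recovers the transformation in one iteration; on real data, the quality of the initial direct georeferencing plays that role.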

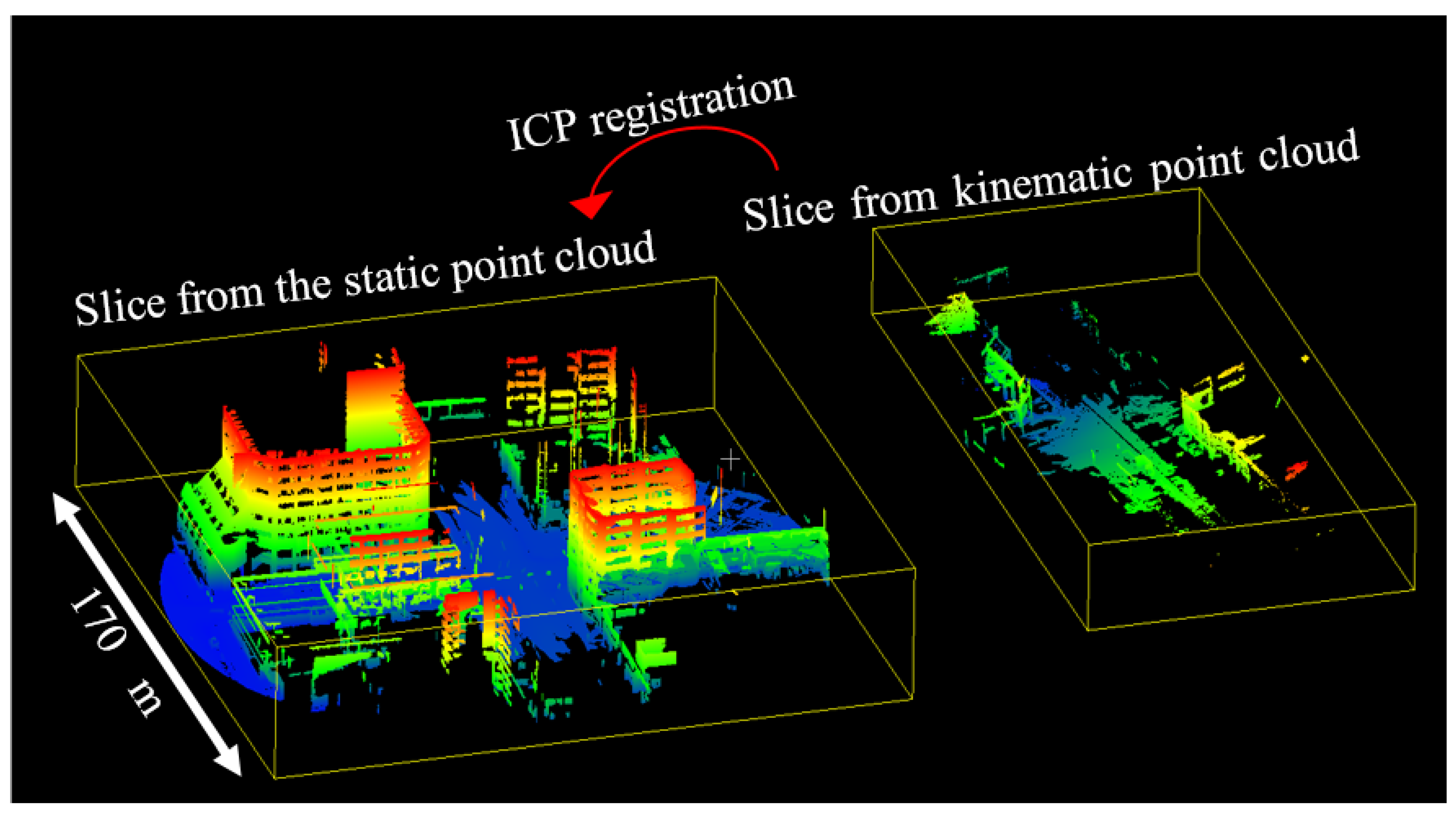

For a rigorous accuracy assessment, the kinematic point cloud is divided into temporal slices prior to the registration. Each temporal slice contains the points from a short time interval of data collection. The individual slices are sequentially registered to the static point cloud using the ICP (Figure 1). The functional relationship between a point in slice k of the MMS point cloud, $\mathbf{r}_{k,i}^{\mathrm{MMS}}$, and its closest point in the reference TLS point cloud, $\mathbf{r}_{i}^{\mathrm{TLS}}$, is thus given by:

$$\mathbf{r}_{i}^{\mathrm{TLS}} = \mathbf{t}_k + \mathbf{R}_k\,\mathbf{r}_{k,i}^{\mathrm{MMS}} \tag{2}$$

where $\mathbf{t}_k$ is the translation vector and $\mathbf{R}_k$ is the rotation matrix between the two point clouds, and i indexes the $n_k$ points of slice k. Note that the mapping frame superscript has been omitted for clarity. The objective of the ICP is to minimize the sum of squared differences between the two point clouds, $\varepsilon^2$, i.e.,

$$\varepsilon^2 = \sum_{i=1}^{n_k} \left\| \mathbf{r}_{i}^{\mathrm{TLS}} - \left(\mathbf{t}_k + \mathbf{R}_k\,\mathbf{r}_{k,i}^{\mathrm{MMS}}\right) \right\|^2 \;\rightarrow\; \min_{\mathbf{R}_k,\,\mathbf{t}_k} \tag{3}$$

It is assumed that the initial position and orientation of the kinematic point cloud provided by direct georeferencing are accurate enough to ensure convergence of the ICP. Applying this procedure to all slices yields a time series of transformation parameter sets that provides an accuracy measure of the kinematic data at different epochs and spatial locations.
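The slicing and sequential registration can be sketched as follows. This is a hedged illustration rather than the authors' implementation: the registration routine is passed in as a callable returning the slice-to-reference rotation and translation, the minimum number of points per slice is a hypothetical threshold, and the per-slice error is assumed here to be the 3D displacement of the slice centroid under the estimated transformation. Evaluating the displacement at the centroid, instead of reporting the translation vector alone, keeps a small rotation about the distant mapping-frame origin from inflating the error value.

```python
import numpy as np


def temporal_slices(times, slice_length):
    """Index arrays of consecutive, non-overlapping slices of `slice_length` seconds."""
    bins = np.floor((times - times.min()) / slice_length).astype(int)
    return [np.flatnonzero(bins == b) for b in np.unique(bins)]


def assess_slices(points, times, slice_length, register, min_points=1000):
    """Register each slice and return a time series of transformation results.

    `register(slice_points) -> (R, t)` is any rigid registration to the static cloud.
    `min_points` is a hypothetical threshold for a usable slice.
    """
    results = []
    for idx in temporal_slices(times, slice_length):
        if idx.size < min_points:          # too sparse for a stable ICP solution
            continue
        pts = points[idx]
        R, t = register(pts)
        c = pts.mean(axis=0)               # slice centroid (where results are plotted)
        shift = R @ c + t - c              # displacement of the centroid
        results.append({
            "t_mid": float(times[idx].mean()),
            "centroid": c,
            "R": R,
            "t": t,
            "error_3d": float(np.linalg.norm(shift)),
        })
    return results


def fake_register(pts):
    """Stand-in 'registration' that always reports a (3, 4, 0) m offset."""
    return np.eye(3), np.array([3.0, 4.0, 0.0])


# Toy usage: 10 s of data at 10 Hz, split into 5 s slices.
times = np.arange(100) * 0.1
points = np.random.default_rng(0).normal(size=(100, 3))
series = assess_slices(points, times, 5.0, fake_register, min_points=10)
print([round(s["error_3d"], 3) for s in series])  # [5.0, 5.0]
```

Passing the registration as a callable keeps the slicing logic independent of the ICP variant, so the same loop can be rerun with 5 s and 10 s slices for comparison.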

Note that navigation quality changes rapidly in urban areas and, accordingly, the spatial quality of the kinematic point cloud may vary from one epoch to the next. It is therefore hypothesized that a shorter temporal slice will result in a more realistic accuracy assessment, since point cloud data collected within a short time interval by a moving mobile mapping system should have homogeneous spatial accuracy. Nevertheless, a very short time interval may not contain a sufficient number of points, or enough objects and features, to perform the ICP registration. Conversely, a very long time interval will produce a large point cloud with inhomogeneous spatial accuracy and may lead to a false accuracy assessment: spatial errors at a few locations may go undetected through the registration of a large point cloud, because these errors are distributed throughout the larger dataset and do not contribute significantly to the estimated transformation parameters. Since the transformation parameters are used to quantify data quality, overly long time intervals must be avoided. Several factors should be taken into account when determining the time interval length, for instance: (1) the mobile mapping system speed, (2) the laser scanner range, (3) the scanning frequency, (4) the quality of the GNSS/IMU system, and (5) the structure of the scanning area.
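The first and third factors can be turned into a rough budget for a candidate slice length. The sketch below is illustrative only: it assumes a nominal VLP-16 rate of about 300,000 points per second (single-return mode) and a constant vehicle speed, and the 30 km/h speed in the example is an assumed value. It ignores scanner range, occlusion, scene structure and GNSS/IMU quality, which the text also lists as factors.

```python
def slice_budget(speed_kmh, slice_length_s, point_rate_hz=300_000):
    """Approximate along-track distance (m) and raw point count for one slice.

    `point_rate_hz` is an ASSUMED nominal scanner rate, not a measured value.
    """
    distance_m = speed_kmh / 3.6 * slice_length_s
    n_points = int(point_rate_hz * slice_length_s)
    return distance_m, n_points


for length in (1.0, 5.0, 10.0):
    d, n = slice_budget(30.0, length)   # assumed 30 km/h urban driving speed
    print(f"{length:4.1f} s slice: ~{d:5.1f} m of road, ~{n:,} points")
```

A longer slice covers more road, and therefore more features for the ICP, but it also spans more epochs over which the navigation error can drift.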

4. Experiment Results and Discussion

The assessment commences with an analysis of the trajectory accuracy as determined with respect to the μIRS reference. Statistics of the 3D position differences for each dataset were computed over short time intervals, and typical values from each dataset are presented in Table 2. Sub-meter accuracy (in terms of the mean value) was achieved with the Honeywell IMU, while the Epson provided roughly meter-level accuracy. The mean value for the InvenSense exceeded 2 m.
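Interval statistics of the 3D trajectory differences can be computed along the following lines. This minimal sketch assumes that the tested and reference (μIRS) trajectories have already been interpolated to common epochs in the same frame; the interval length and the chosen statistics (mean, standard deviation, maximum) are illustrative assumptions, not necessarily those reported in Table 2.

```python
import numpy as np


def interval_stats(times, pos_test, pos_ref, interval_s):
    """Per-interval statistics of 3D position differences (positions in metres).

    Assumes `times` is sorted and both trajectories share the same epochs.
    """
    d3 = np.linalg.norm(pos_test - pos_ref, axis=1)      # 3D difference per epoch
    bins = np.floor((times - times[0]) / interval_s).astype(int)
    stats = []
    for b in np.unique(bins):
        db = d3[bins == b]
        stats.append({
            "start_s": b * interval_s,
            "mean": float(db.mean()),
            "std": float(db.std(ddof=1)) if db.size > 1 else 0.0,
            "max": float(db.max()),
        })
    return stats


# Toy usage: a constant 1 m 3D offset over 60 s, summarized in 30 s intervals.
t = np.arange(0.0, 60.0, 1.0)
ref = np.zeros((t.size, 3))
test = ref + np.array([0.6, 0.8, 0.0])
for s in interval_stats(t, test, ref, 30.0):
    print(s)
```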

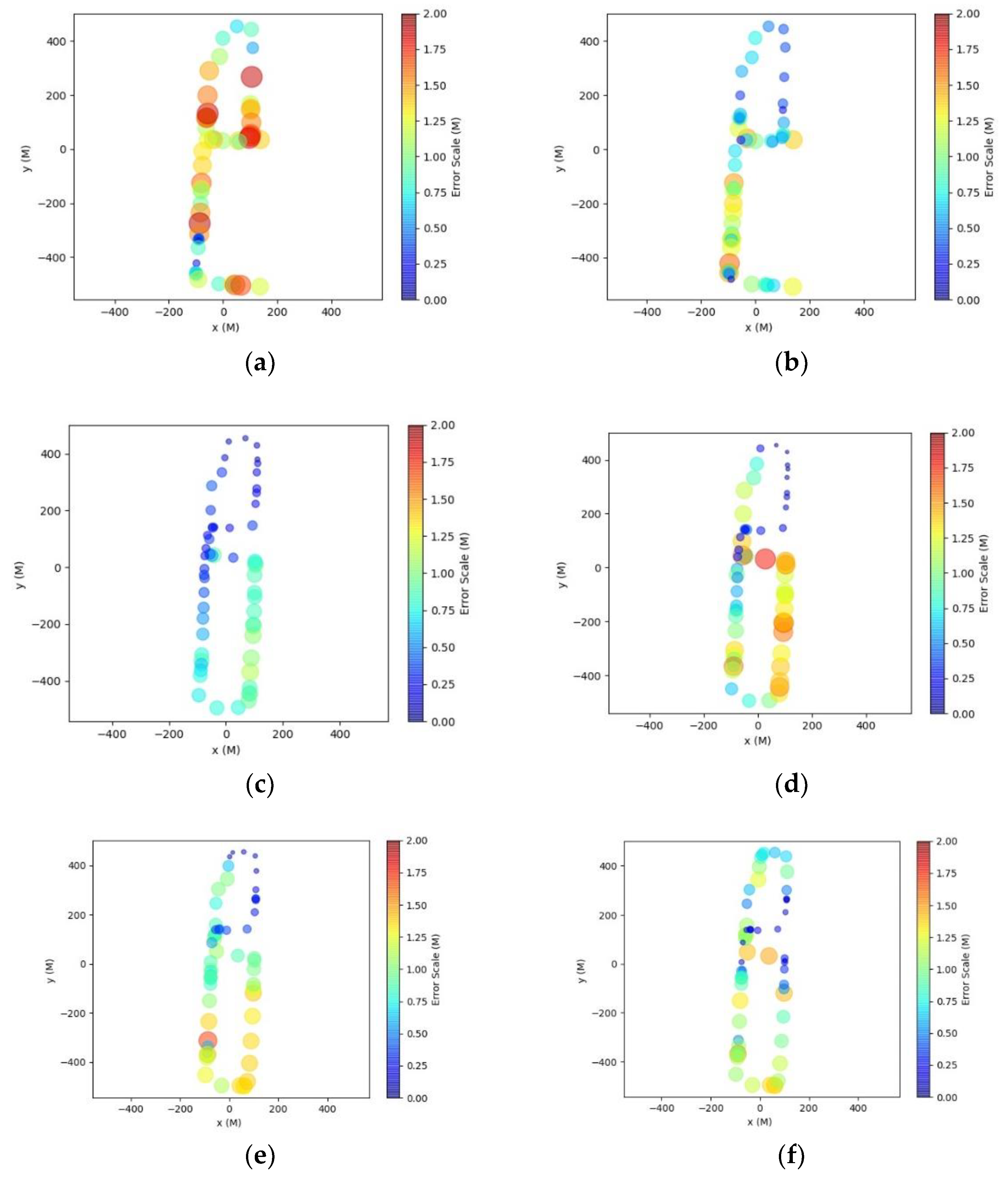

The accuracy assessment over the six kinematic point clouds resulted in a wide range of values for

depending on the MMS’s location within the testing facility. The results are statistically summarized in

Table 3 and

Table 4 and depicted graphically in

Figure 6 and

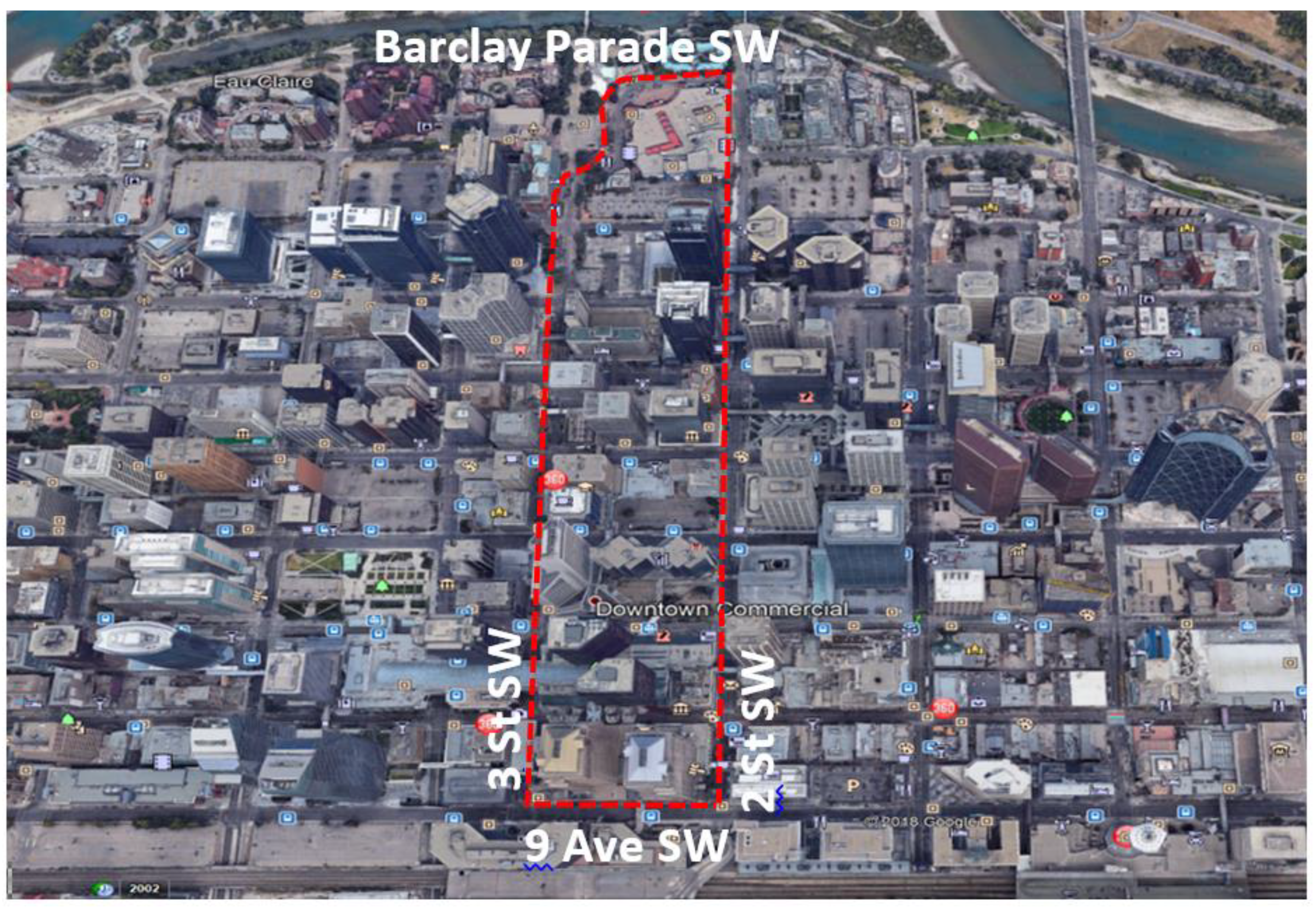

Figure 7. For the six datasets, high spatial accuracy values were found in areas with good navigation conditions (open sky, no sharp turns). These outcomes were found at the northern extent of the test field in the vicinity of Barclay Parade. The land uses in this region comprise a mixture of low-rise commercial and residential buildings (two to three stories). On the contrary, but not surprisingly, lower spatial accuracy values mainly occur in the southern part of the test field where the land use is predominantly high-rise buildings that occlude the GNSS signals and degrade the navigation solution.

As can be seen in Table 3, the six point clouds yielded a wide range of mean accuracy values, depending mainly on the sensor type and the scanning experiment. The Epson and CPT sensors showed error magnitudes in the range of 0.48 m to 0.85 m, while the InvenSense MEMS sensor produced a mean error of 1.13 m.

It is evident that the slice size did not significantly affect the mean values of the accuracy assessment. A comparison of the results in Table 3 and Table 4 reveals that the 5 s and 10 s slice lengths yield very similar mean error values. The differences are on the order of a few centimeters, which is not significant in relation to the overall magnitude of the mean errors themselves. The differences in mean error values can be attributed to differences in the point cloud shapes for different slice sizes, which slightly affect the quality of the registration for each slice.

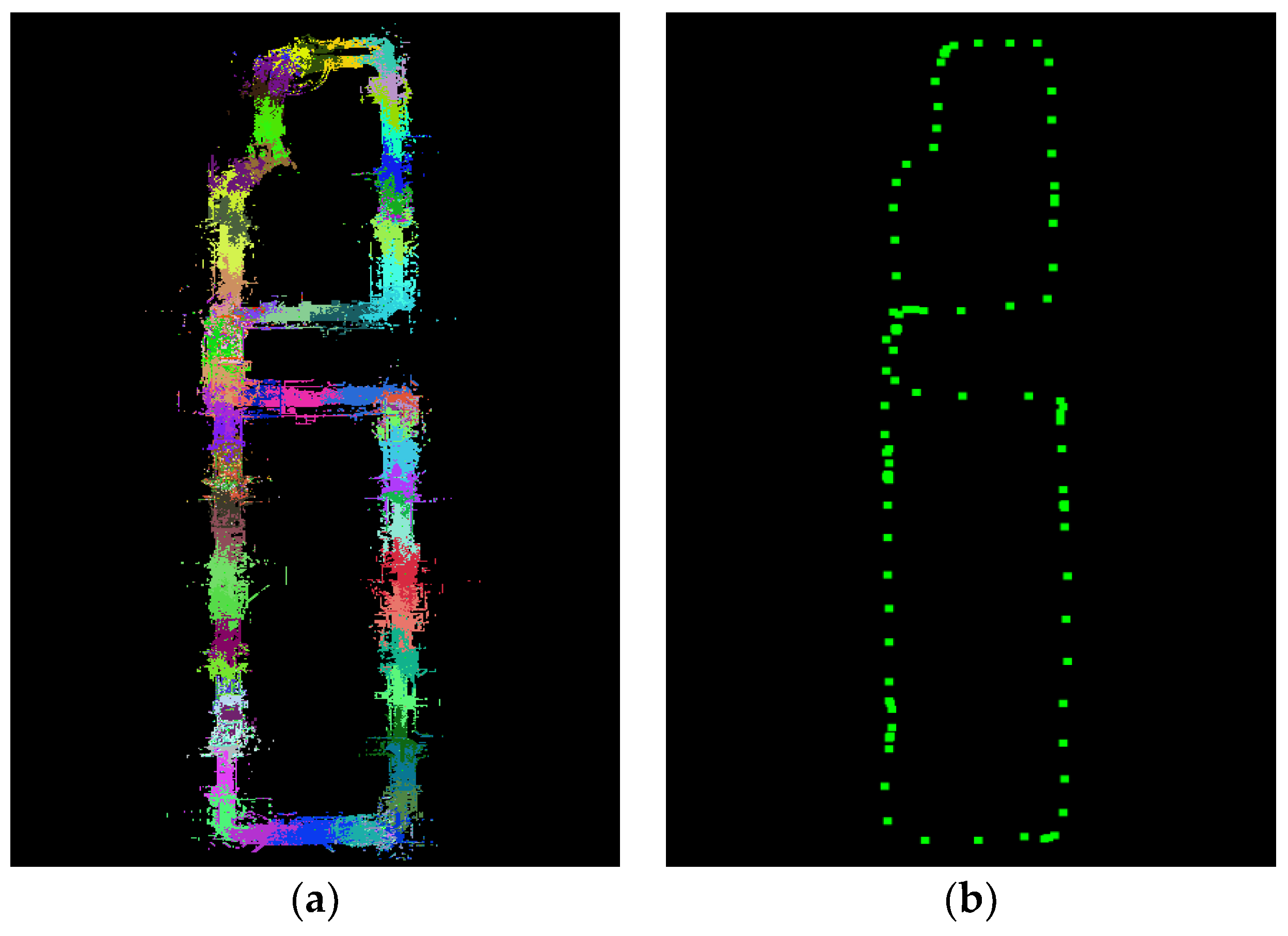

For a better visualization of the accuracy assessment results, Figure 6 shows color maps of the error values for the six point clouds with a 5 s slice length. Both the color and the size of the data markers indicate the error magnitude for each slice. The points are plotted at the centroid of each individual slice (cf. Figure 5b). In general, the navigation accuracy of a mobile mapping system changes slowly over time. Except for the InvenSense sensor, which is a lower-grade MEMS IMU, a smooth transition of error values from one slice to the next is generally evident in Figure 6. Similar observations hold for the experiments with a slice size of 10 s (Figure 7). However, it was observed during testing that smaller slice sizes produced better alignment following registration. Accordingly, the accuracy assessment results from the 5 s slices are taken to be more representative of the accuracy of the three sensors.

Multiple datasets were captured on separate dates with both the Epson and Honeywell sensors. Inspection of the results in common areas of the test field (Figure 7) reveals overall consistency of the results for both sensors. Some local differences in accuracy do exist, for example in the vicinity of x = 100 m and y = 0 m. These can be attributed to different satellite visibility conditions, as can other localized accuracy differences between different sensors. Comparison of Table 2 with the results in Table 3 and Table 4 reveals that the MMS errors determined by the proposed method are largely due to the low-cost IMUs rather than the Velodyne data. In fact, the new accuracy assessment methodology provides slightly more optimistic results than those indicated by the trajectory difference statistics.

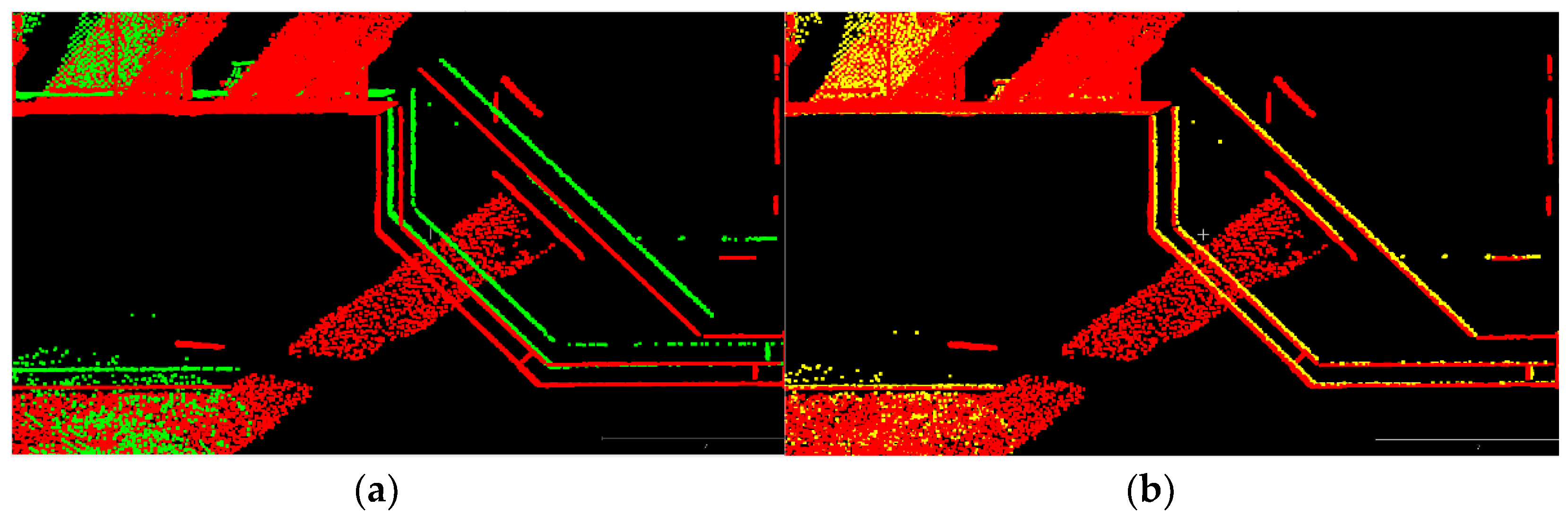

Further demonstration of the performance of the proposed method is given in Figure 8, which shows an example of point cloud cross-sections before and after registration. As can be seen, the ICP method provided precise alignment between the kinematic and static point clouds, even for point clouds that were initially separated by a significant amount (~1 m) in three dimensions. This provides high confidence in the results of the proposed accuracy assessment methodology.