An Approach Based on VR to Design Industrial Human-Robot Collaborative Workstations

Abstract

1. Introduction

1.1. Contributions from Literature

1.2. The Proposed Contribution

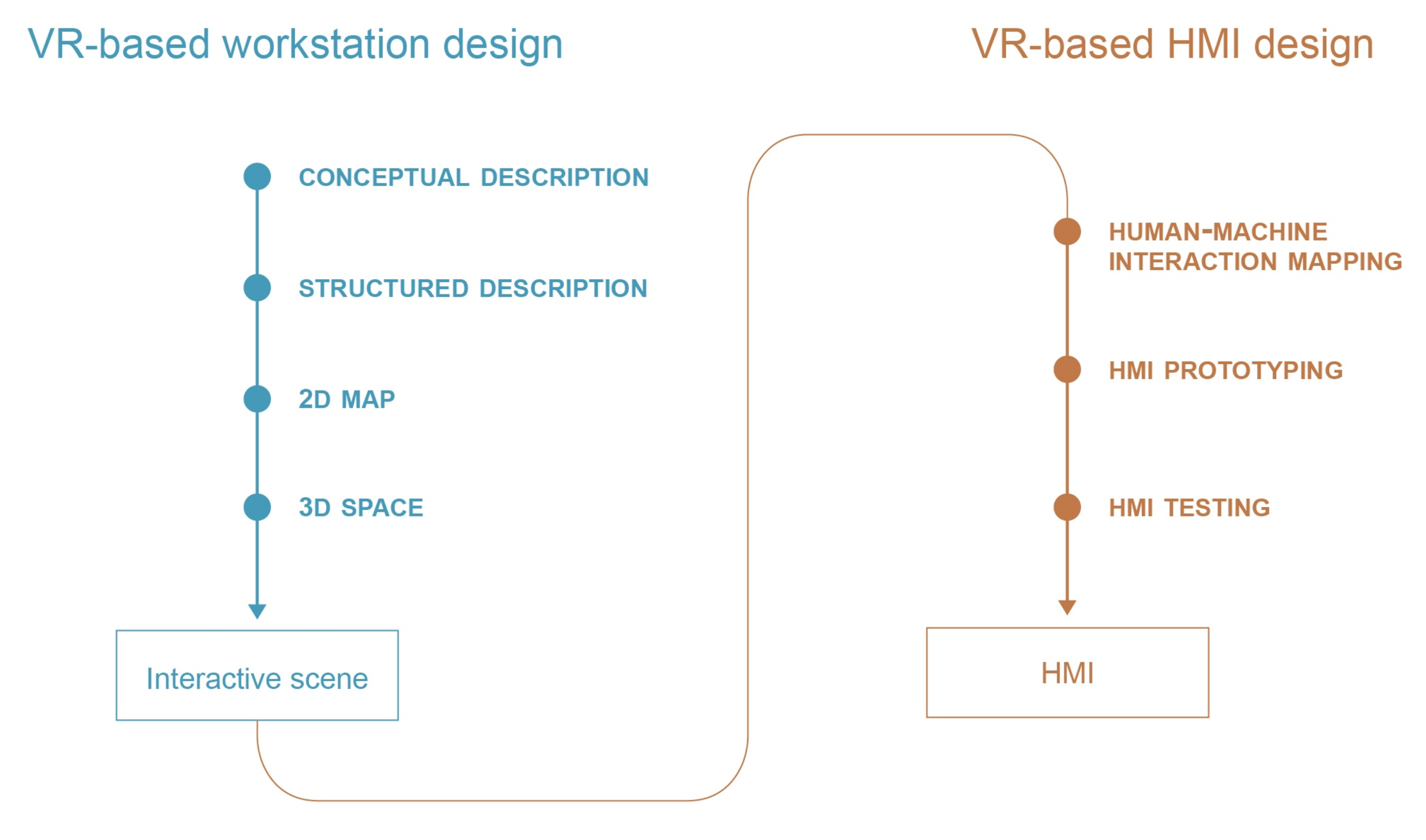

2. The Proposed Approach

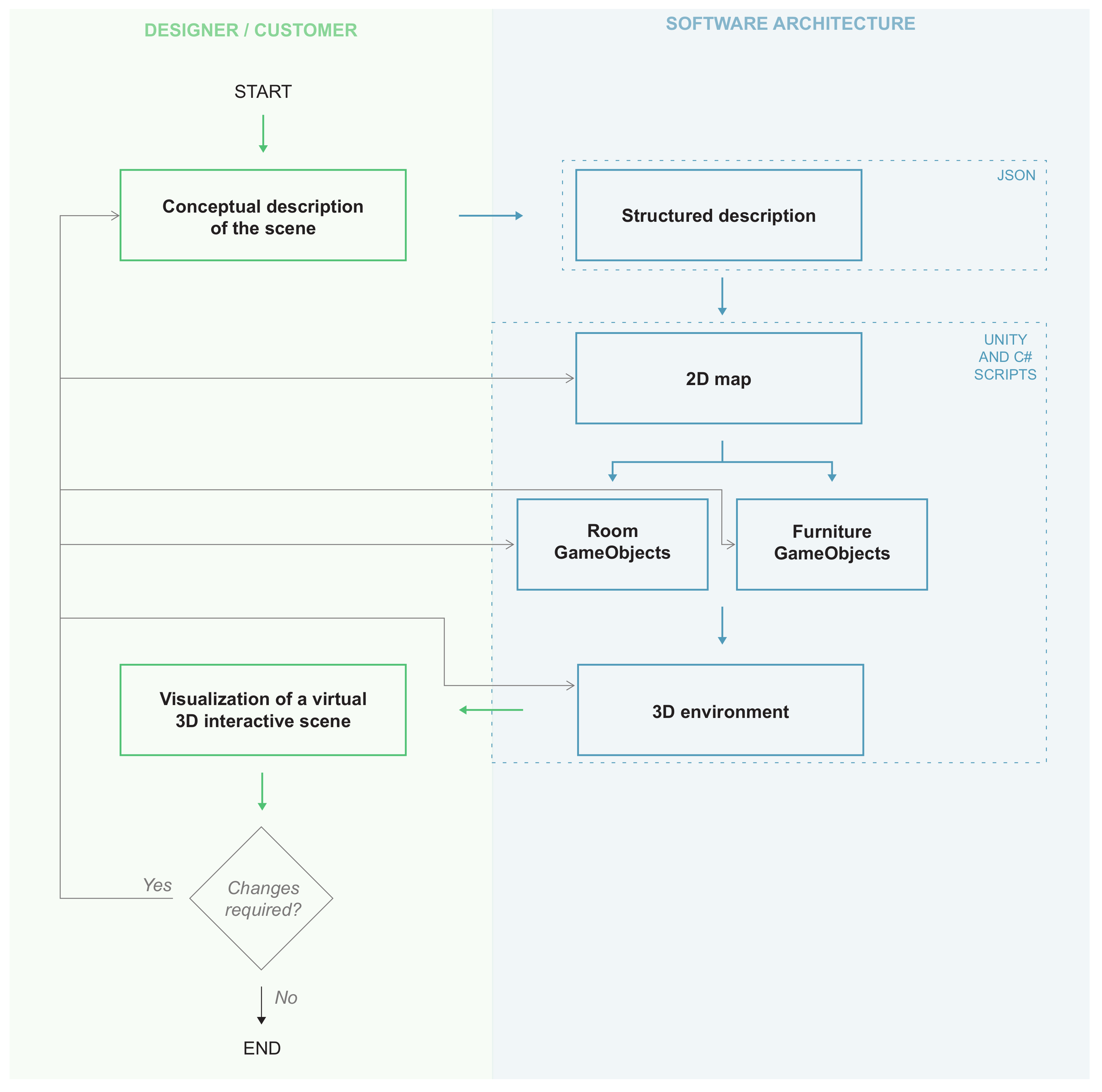

2.1. VR-Based Rapid Prototyping of the Workstation Layout

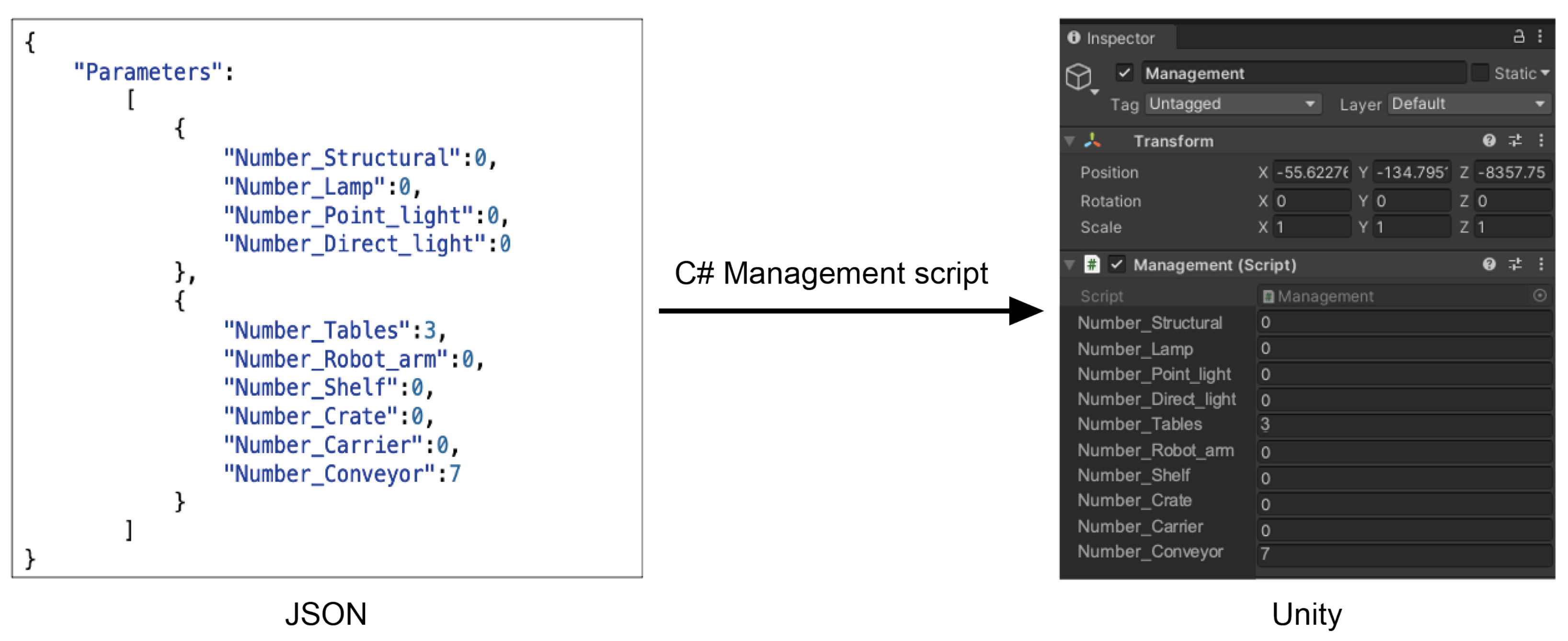

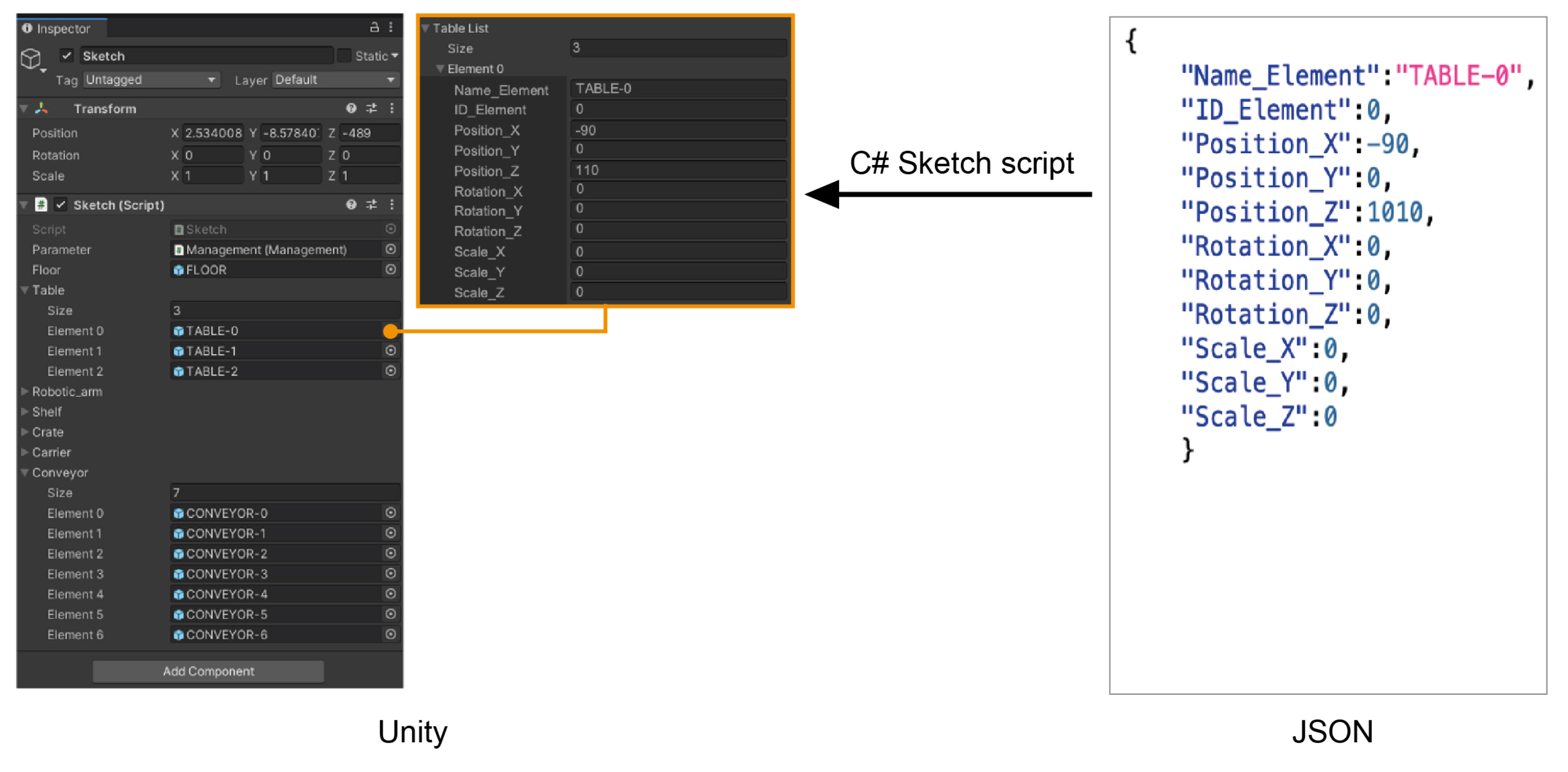

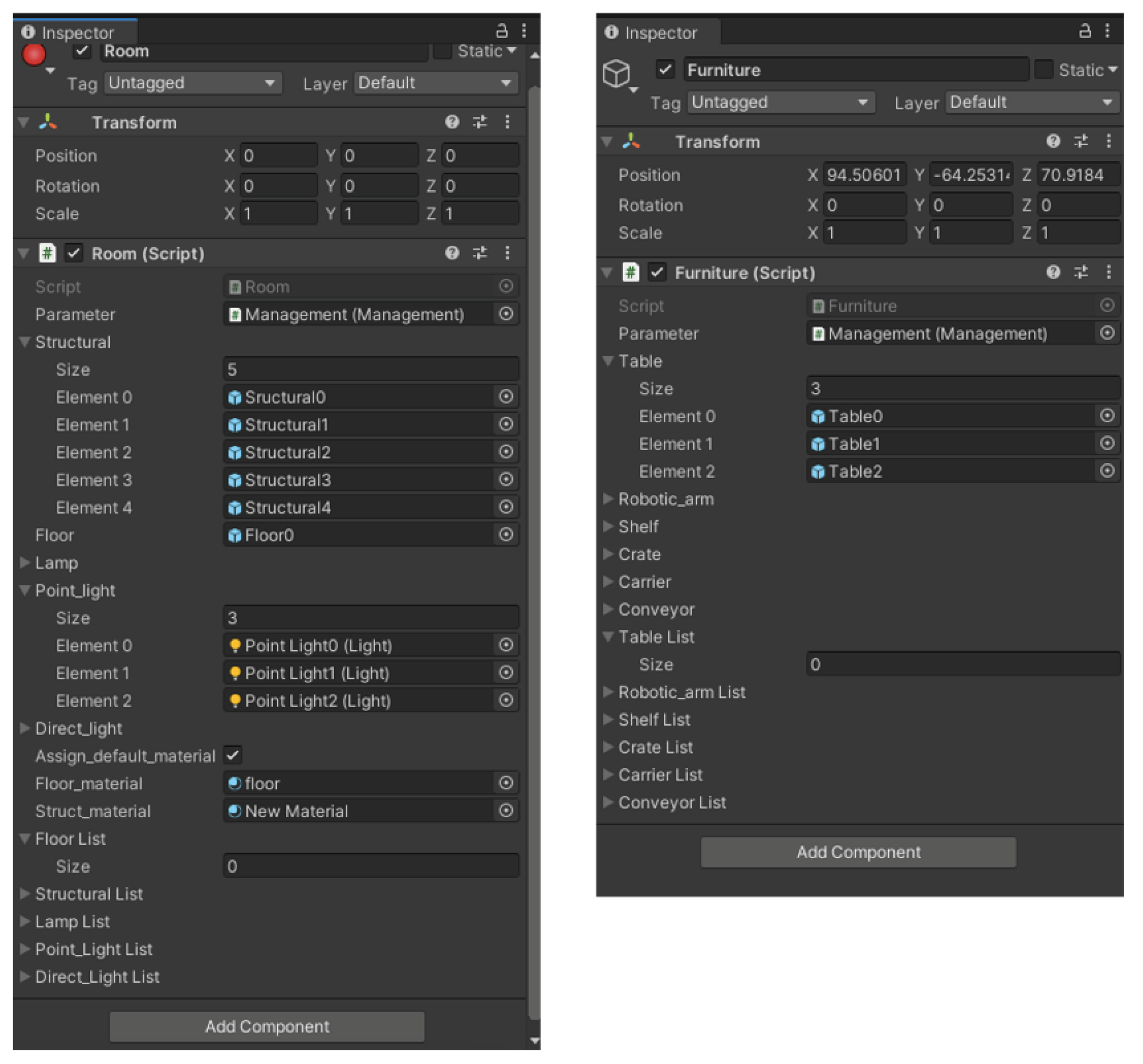

2.1.1. Creation of a Structured Description of the Scene

2.1.2. Creation of a 2D Map of the Workstation

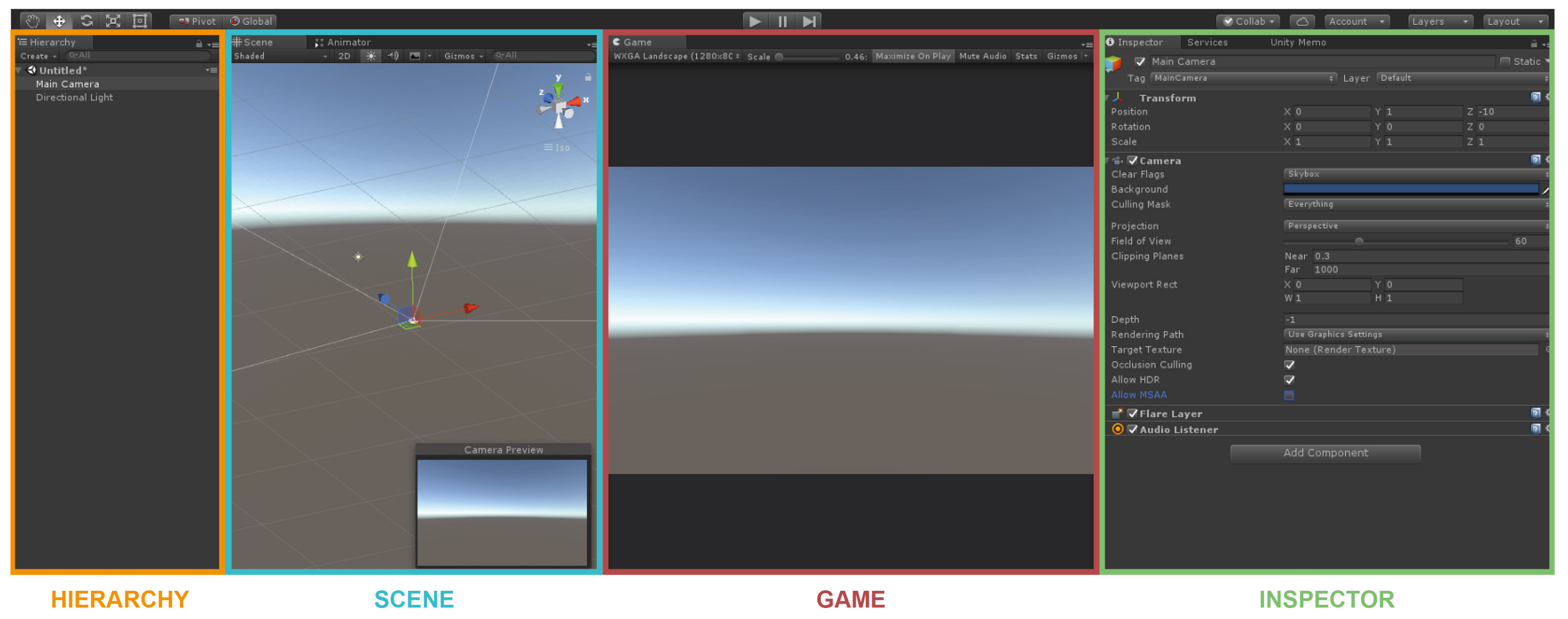

2.1.3. Creation of a 3D Environment for the Workstation

2.2. VR-Based Interaction Design

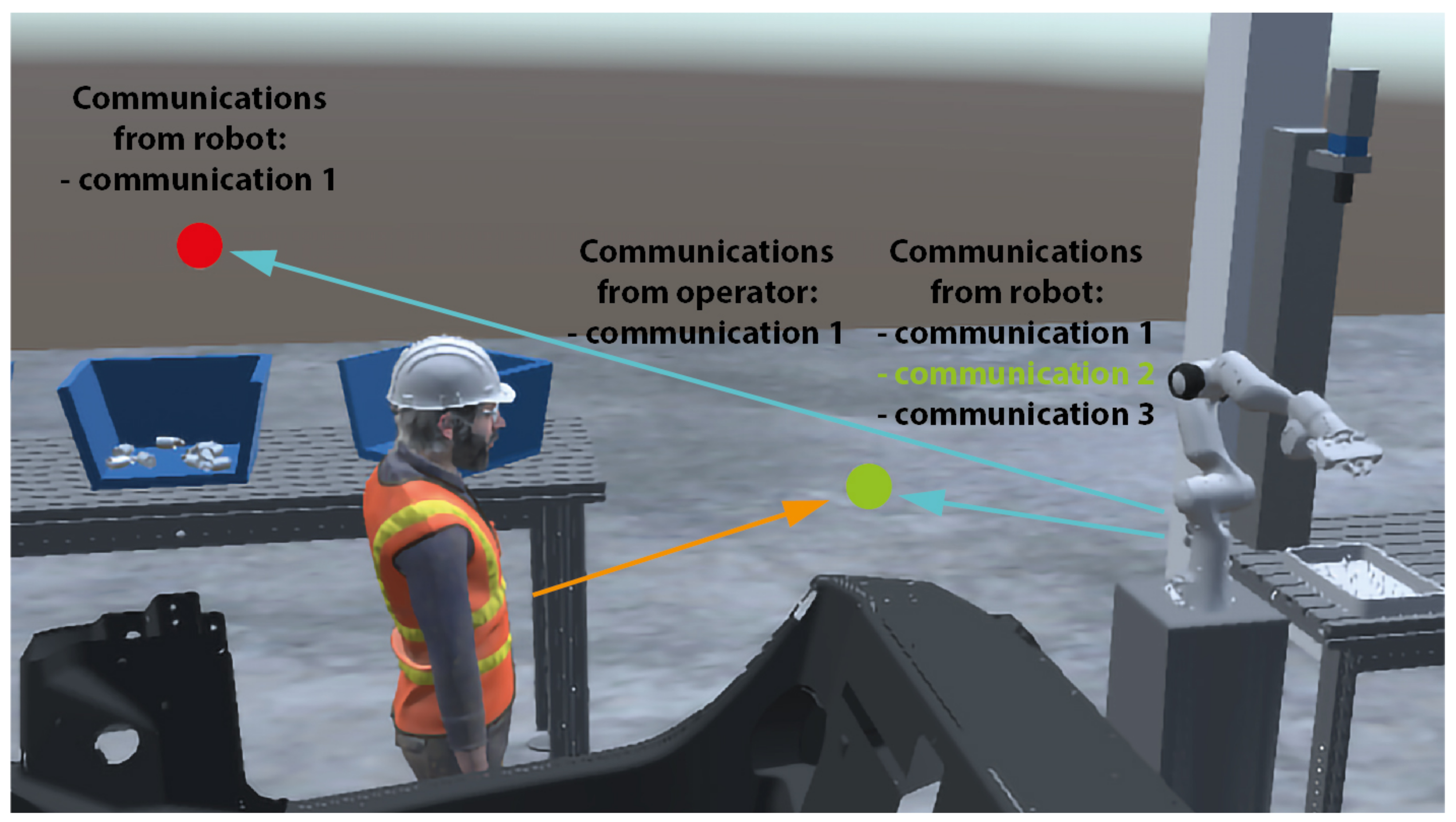

2.2.1. Human-Machine Interaction Mapping

The mapping considers four dimensions:

- where: where the interactions with the interface(s) take place;

- which: which information must be exchanged through the interface(s);

- direction: the direction of communication, from/to the user(s) and other actors (e.g., robot, system);

- when: when the interactions occur.

Each dimension is represented visually as follows:

- where: a balloon placed where the interaction with the interface takes place;

- which: a text label stating the information to be exchanged, distinguishing information sent from the user(s) to other actors from information sent in the opposite direction;

- direction: an arrow pointing to or from the identified interaction point;

- when: a colour change of the interaction balloon and of the label of the information exchanged at that specific moment.
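As an illustration only, the four mapping dimensions and their visual encoding can be captured in a small data model. This is not the authors' implementation: the `Interaction` record, the `Direction` enum, the integer task step used for `when`, and the strings returned by `render` (standing in for the balloon, label, arrow and colour change drawn in the scene) are all hypothetical.

```python
from dataclasses import dataclass
from enum import Enum


class Direction(Enum):
    """Hypothetical encoding of the communication direction."""
    FROM_USER = "from_user"  # user -> robot/system
    TO_USER = "to_user"      # robot/system -> user


@dataclass(frozen=True)
class Interaction:
    where: str            # interaction point (shown as a balloon)
    which: str            # information exchanged (shown as a text label)
    direction: Direction  # shown as an arrow to or from the balloon
    when: int             # task step at which the interaction occurs


def highlighted(mapping: list[Interaction], step: int) -> list[Interaction]:
    """Interactions whose balloon and label change colour at `step`."""
    return [i for i in mapping if i.when == step]


def render(i: Interaction, step: int) -> str:
    """Textual stand-in for the visual encoding of one interaction."""
    colour = "highlight" if i.when == step else "default"
    if i.direction is Direction.FROM_USER:
        arrow = f"user -> {i.where}"
    else:
        arrow = f"{i.where} -> user"
    return f"[{colour}] {arrow}: {i.which}"


# Illustrative mapping loosely inspired by the assembly case study tasks.
mapping = [
    Interaction("HMI panel", "monocoque positioned", Direction.FROM_USER, 1),
    Interaction("HMI panel", "automatic phase started", Direction.TO_USER, 2),
]
for item in mapping:
    print(render(item, step=1))
```

Keeping `when` as a step index lets one mapping drive the colour changes as the task sequence advances; a real prototype would replace the string output with the VR engine's own rendering calls.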

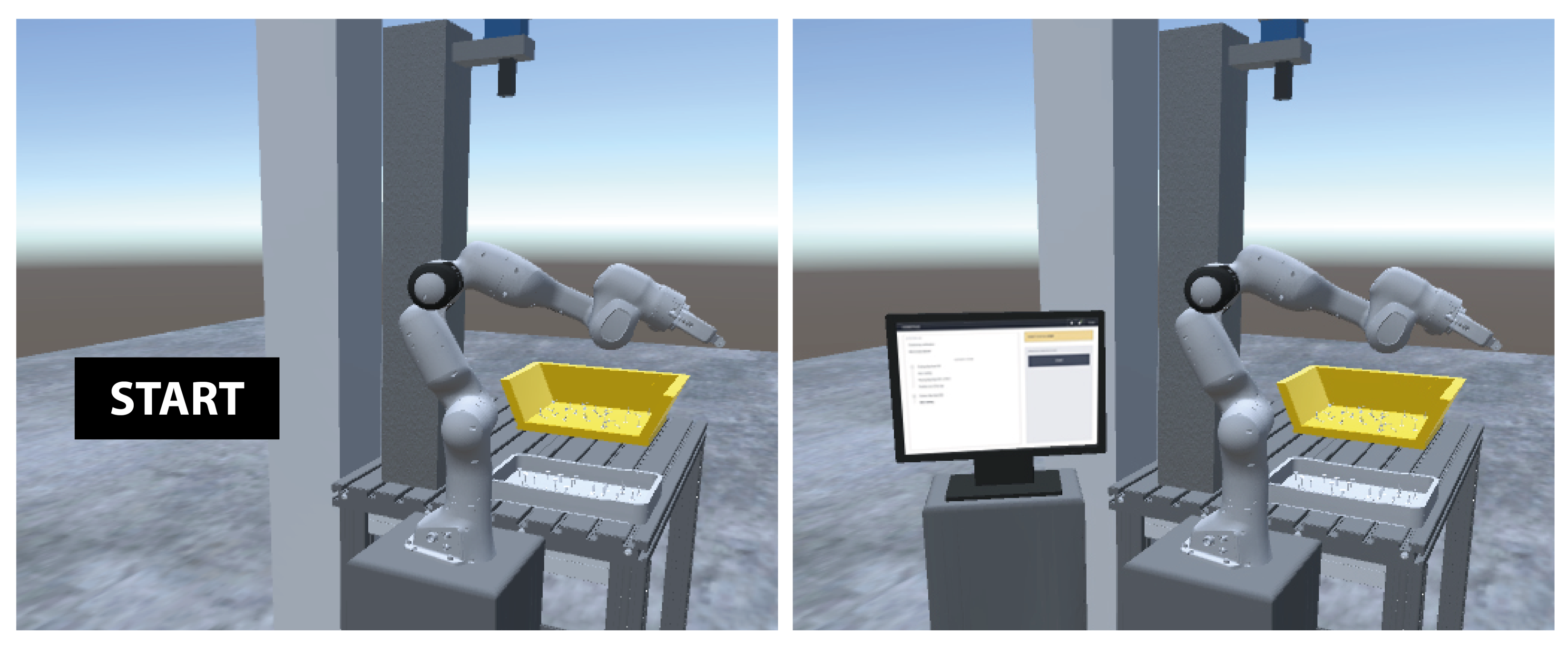

2.2.2. HMI Prototyping

2.2.3. HMI Testing

3. Application to Industrial Case Studies

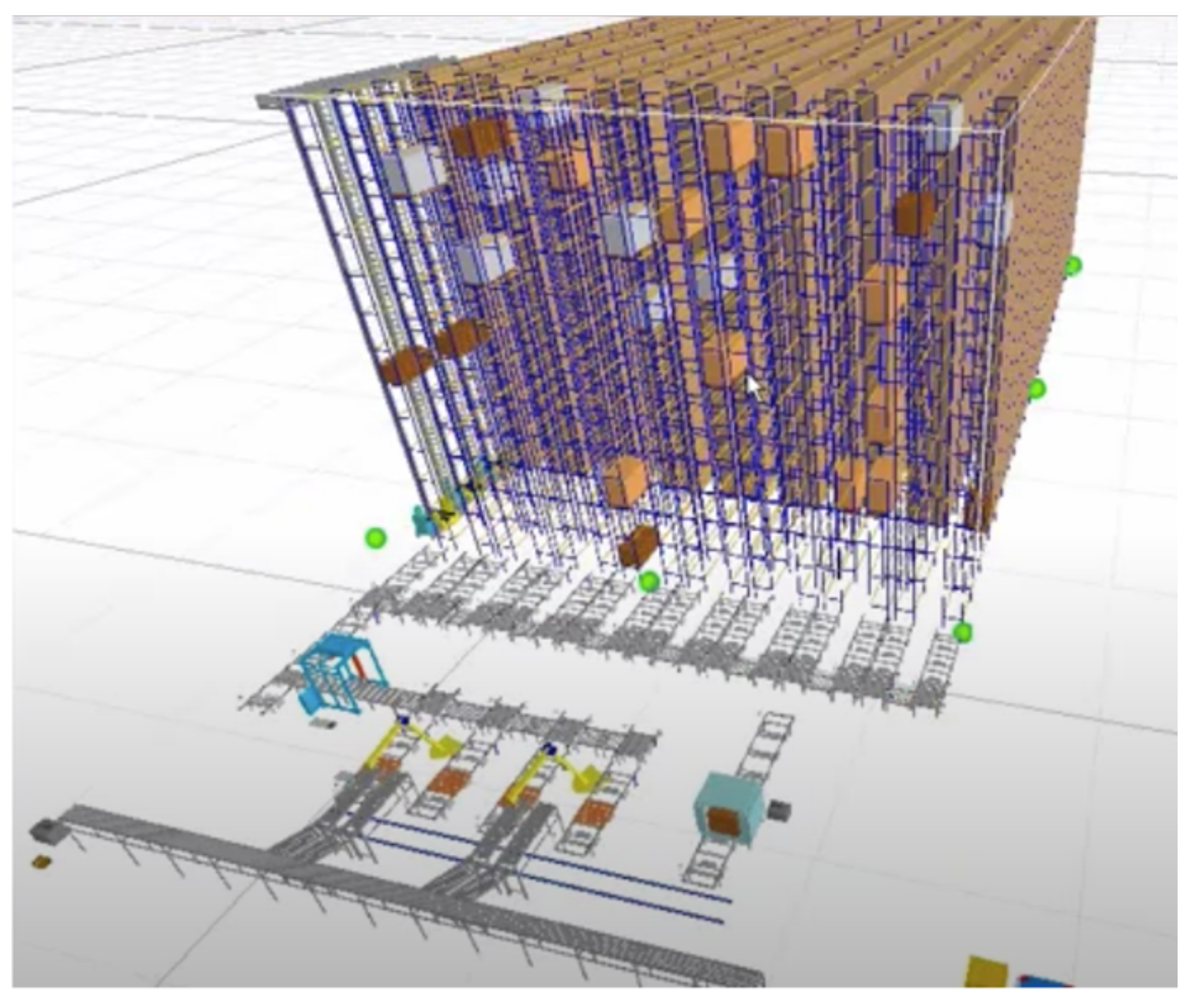

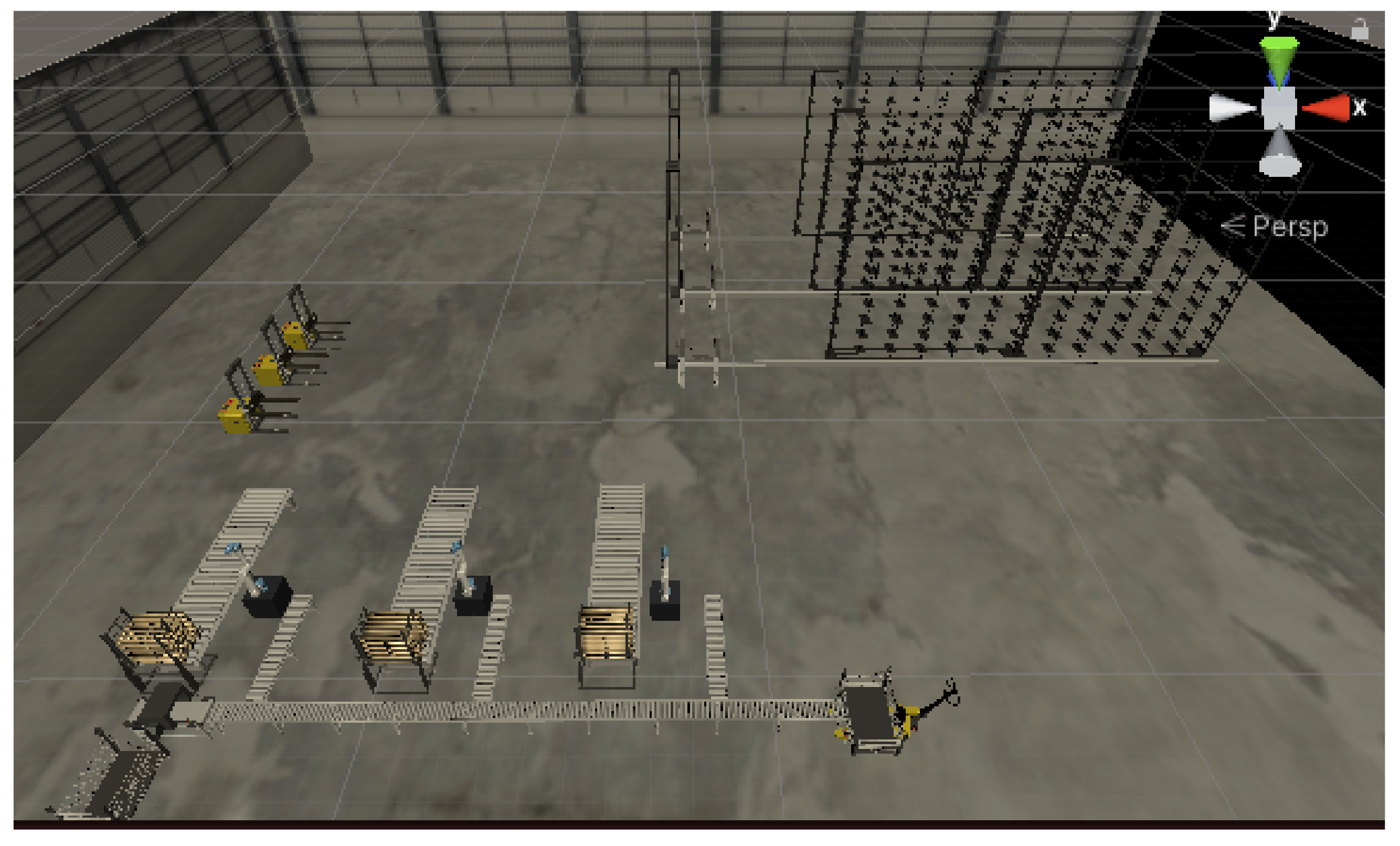

3.1. Case No.1: Complex Layout Prototyping for Intensive Warehouse

- Five structural elements (i.e., ceiling and four walls);

- Four lamps;

- Seven segments of conveyor belt;

- Three robot arms;

- Three AGVs;

- Three shelves for intensive warehouses (i.e., shelf and stacker elevator).
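The composition of this scene can be expressed with the element categories of the scene-element table (ceiling and walls as Structural, AGVs as Carrier, since that table lists automated guided vehicles among carriers). The following is a minimal sketch, assuming a simple count-per-category description; the instance-naming scheme in the hypothetical `instantiate` helper is not taken from the authors' tool.

```python
# Case No.1 scene as counts per element category. Category names follow the
# scene-element table; AGVs are mapped to "Carrier" as that table defines it.
CASE_1: dict[str, int] = {
    "Structural": 5,  # ceiling and four walls
    "Lamp": 4,
    "Conveyor": 7,    # conveyor belt segments
    "Robot arm": 3,
    "Carrier": 3,     # AGVs
    "Shelf": 3,       # shelf and stacker elevator
}


def instantiate(counts: dict[str, int]) -> list[str]:
    """Expand per-category counts into unique (hypothetical) instance names."""
    names = []
    for category, count in counts.items():
        prefix = category.lower().replace(" ", "_")
        names.extend(f"{prefix}_{n}" for n in range(1, count + 1))
    return names


elements = instantiate(CASE_1)
print(len(elements))  # 25
```

Describing the scene as categories plus counts keeps the description compact; each generated name can then be bound to a 3D asset and a position on the 2D map.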

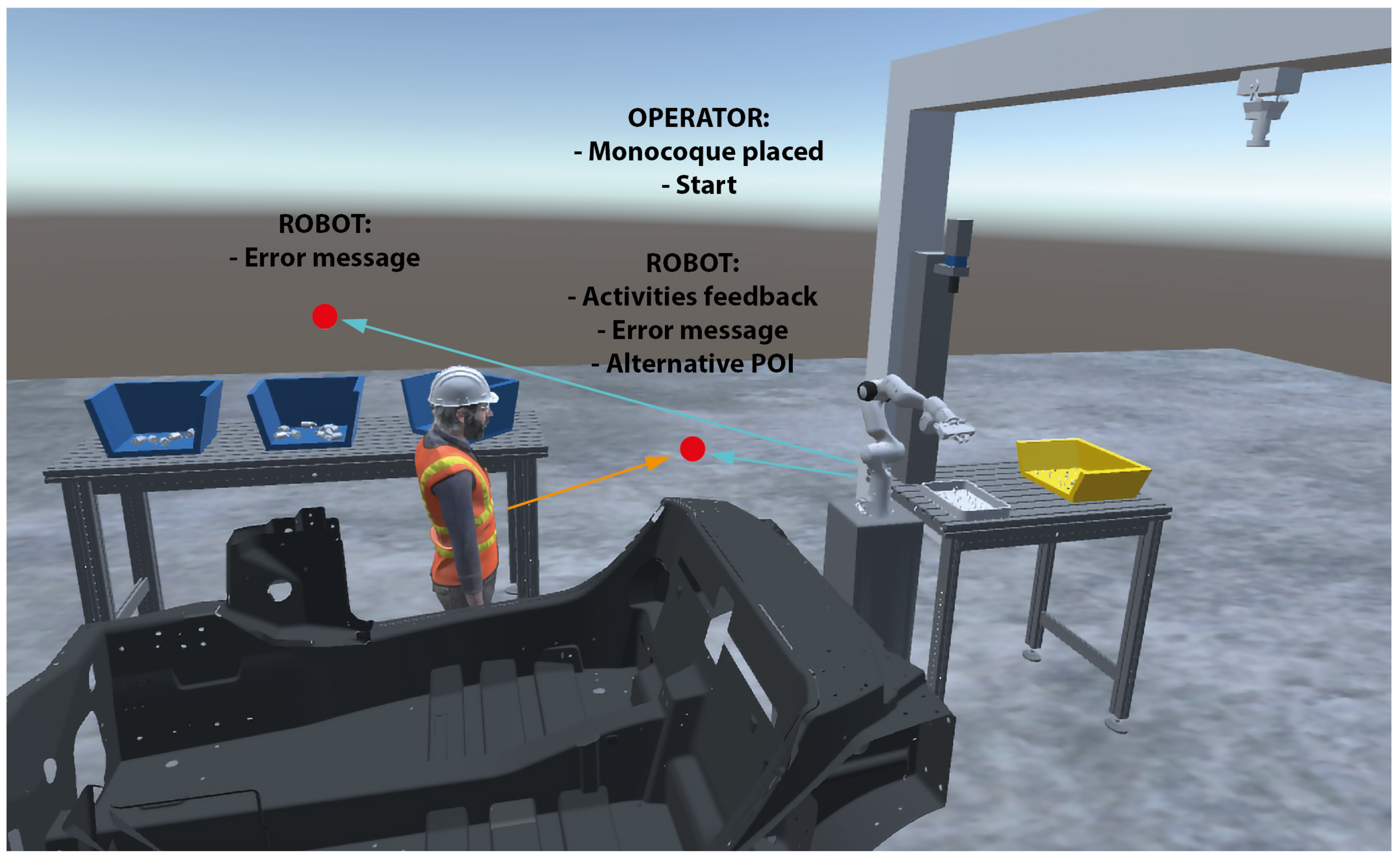

3.2. Case No.2: Interaction Prototyping for Human-Robot Assembly

- Two tables;

- A cobot with a specific end-effector for bigHeads insertion;

- A monocoque;

- A glue dispenser;

- A vision system.

- Place the trolley with the monocoque near the robot;

- Communicate the positioning on the HMI and verify that the automatic phase has started;

- Carry out parallel activities (for example, at the desk on the left);

- Resolve the reported problem;

- Communicate the resolution of the problem;

- Suspend the activity of the robot.
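One common way to summarise a scripted test like the task sequence above is a per-task completion (success) rate across participants, a standard usability metric. The sketch below assumes that each task outcome is recorded as simply completed or not completed; the source does not state how outcomes were scored, and the `success_rates` helper is hypothetical.

```python
# Task sequence of the Case No.2 test scenario, in order.
TASKS = [
    "Place the trolley with the monocoque near the robot",
    "Communicate the positioning on the HMI and verify that the automatic phase has started",
    "Carry out parallel activities",
    "Resolve the reported problem",
    "Communicate the resolution of the problem",
    "Suspend the activity of the robot",
]


def success_rates(outcomes: list[list[bool]]) -> dict[str, float]:
    """Share of participants completing each task.

    outcomes[p][t] is True if participant p completed task t
    (binary scoring is an assumption of this sketch).
    """
    if not outcomes:
        raise ValueError("at least one participant is required")
    if any(len(row) != len(TASKS) for row in outcomes):
        raise ValueError("each participant needs one outcome per task")
    n = len(outcomes)
    return {task: sum(row[t] for row in outcomes) / n for t, task in enumerate(TASKS)}


# Hypothetical outcomes for two participants (not data from the study).
rates = success_rates([[True] * 6, [True, True, True, False, True, True]])
print(rates["Resolve the reported problem"])  # 0.5
```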

- “I expected to receive an indication of the exact positioning of the monocoque”;

- “The interface was clear, but it has a rather limited appeal. I would like it more colorful”;

- “I was expecting feedback on the successful completion of the hand guidance phase”;

- “In the problem resolution screen, it was not clear that I had to do something like moving the robot in hand guidance”.

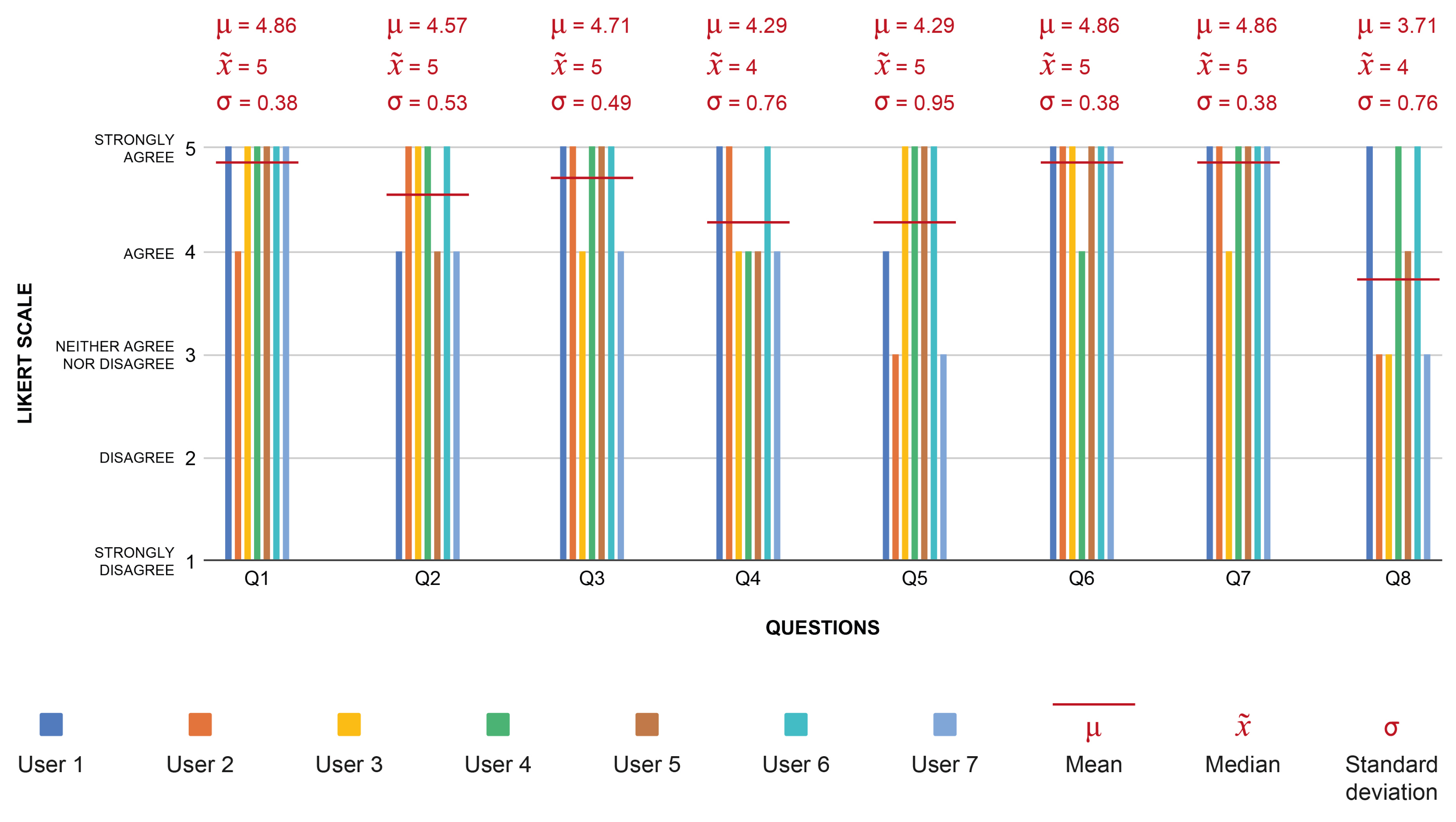

- Q1: I think I would like to use the interface;

- Q2: I found the interface very clear;

- Q3: I found the interface easy to use;

- Q4: I felt safe in interacting with the interface;

- Q5: I think the type of interface is appropriate for the type of work;

- Q6: I think the interface provides me with all the information I need to do my tasks;

- Q7: I think that the interaction with the interface is not hindered by the surrounding environment;

- Q8: I think that the HMI evaluation is not influenced by the VR scene characteristics.
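Responses to the eight statements above can be summarised per question. The response scale is not stated here, so the sketch below assumes a 5-point agreement scale (1 = strongly disagree, 5 = strongly agree) and reports the mean and median of each item. Because all eight statements are worded so that agreement is favourable (Q7 and Q8 included), no reverse scoring is applied. The `summarise` helper is hypothetical.

```python
from statistics import mean, median

QUESTIONS = [f"Q{i}" for i in range(1, 9)]  # Q1 ... Q8
SCALE = range(1, 6)  # assumed 5-point agreement scale


def summarise(responses: list[dict[str, int]]) -> dict[str, tuple[float, float]]:
    """Return (mean, median) score per question across participants."""
    if not responses:
        raise ValueError("no responses")
    summary = {}
    for q in QUESTIONS:
        scores = [r[q] for r in responses]
        if any(s not in SCALE for s in scores):
            raise ValueError(f"{q}: score outside the assumed 1-5 scale")
        summary[q] = (mean(scores), median(scores))
    return summary


# Hypothetical responses from two participants (not data from the study).
example = [{q: 4 for q in QUESTIONS}, {q: 5 for q in QUESTIONS}]
print(summarise(example)["Q1"])  # (4.5, 4.5)
```

The median is reported alongside the mean because Likert responses are ordinal; with the small samples typical of formative HMI tests, item-level results are usually read together with the qualitative comments.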

4. Discussion and Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Conflicts of Interest

References

- Matheson, E.; Minto, R.; Zampieri, E.G.; Faccio, M.; Rosati, G. Human–robot collaboration in manufacturing applications: A review. Robotics 2019, 8, 100.

- Goodrich, M.A.; Schultz, A.C. Human-Robot Interaction: A Survey; Now Publishers Inc.: Delft, The Netherlands, 2008.

- Thrun, S. Toward a framework for human-robot interaction. Hum.-Comput. Interact. 2004, 19, 9–24.

- Fraboni, F.; Gualtieri, L.; Millo, F.; De Marchi, M.; Pietrantoni, L.; Rauch, E. Human-Robot Collaboration During Assembly Tasks: The Cognitive Effects of Collaborative Assembly Workstation Features. In Proceedings of the Congress of the International Ergonomics Association, online, 13–18 June 2021; pp. 242–249.

- Harriott, C.E.; Buford, G.L.; Adams, J.A.; Zhang, T. Mental workload and task performance in peer-based human-robot teams. J. Hum.-Robot Interact. 2015, 4, 61–96.

- Krüger, J.; Lien, T.K.; Verl, A. Cooperation of human and machines in assembly lines. CIRP Ann. 2009, 58, 628–646.

- Raymond, L.; St-Pierre, J. Antecedents and performance outcomes of advanced manufacturing systems sophistication in SMEs. Int. J. Oper. Prod. Manag. 2005, 25, 514–533.

- Yin, Y.; Stecke, K.E.; Li, D. The evolution of production systems from Industry 2.0 through Industry 4.0. Int. J. Prod. Res. 2018, 56, 848–861.

- Lattanzi, L.; Raffaeli, R.; Peruzzini, M.; Pellicciari, M. Digital twin for smart manufacturing: A review of concepts towards a practical industrial implementation. Int. J. Comput. Integr. Manuf. 2021, 34, 567–597.

- Dianatfar, M.; Latokartano, J.; Lanz, M. Review on existing VR/AR solutions in human-robot collaboration. Procedia CIRP 2021, 97, 407–411.

- Villani, V.; Pini, F.; Leali, F.; Secchi, C. Survey on human–robot collaboration in industrial settings: Safety, intuitive interfaces and applications. Mechatronics 2018, 55, 248–266.

- Wonsick, M.; Padir, T. A Systematic Review of Virtual Reality Interfaces for Controlling and Interacting with Robots. Appl. Sci. 2020, 10, 9051.

- De Giorgio, A.; Romero, M.; Onori, M.; Wang, L. Human-machine collaboration in virtual reality for adaptive production engineering. Procedia Manuf. 2017, 11, 1279–1287.

- Gammieri, L.; Schumann, M.; Pelliccia, L.; Di Gironimo, G.; Klimant, P. Coupling of a redundant manipulator with a virtual reality environment to enhance human-robot cooperation. Procedia CIRP 2017, 62, 618–623.

- Matsas, E.; Vosniakos, G.C.; Batras, D. Modelling simple human-robot collaborative manufacturing tasks in interactive virtual environments. In Proceedings of the 2016 Virtual Reality International Conference, Laval, France, 23–25 March 2016; pp. 1–4.

- Wang, Q.; Cheng, Y.; Jiao, W.; Johnson, M.T.; Zhang, Y. Virtual reality human-robot collaborative welding: A case study of weaving gas tungsten arc welding. J. Manuf. Process. 2019, 48, 210–217.

- Dimitrokalli, A.; Vosniakos, G.C.; Nathanael, D.; Matsas, E. On the assessment of human-robot collaboration in mechanical product assembly by use of Virtual Reality. Procedia Manuf. 2020, 51, 627–634.

- Pérez, L.; Diez, E.; Usamentiaga, R.; García, D.F. Industrial robot control and operator training using virtual reality interfaces. Comput. Ind. 2019, 109, 114–120.

- Prati, E.; Peruzzini, M.; Pellicciari, M.; Raffaeli, R. How to include User eXperience in the design of Human-Robot Interaction. Robot. Comput.-Integr. Manuf. 2021, 68, 102072.

- Daria, B.; Martina, C.; Alessandro, P.; Fabio, S.; Valentina, V.; Zennaro, I. Integrating mocap system and immersive reality for efficient human-centred workstation design. IFAC-PapersOnLine 2018, 51, 188–193.

- Caputo, F.; Greco, A.; D’Amato, E.; Notaro, I.; Spada, S. On the use of Virtual Reality for a human-centered workplace design. Procedia Struct. Integr. 2018, 8, 297–308.

- Peruzzini, M.; Carassai, S.; Pellicciari, M. The benefits of human-centred design in industrial practices: Re-design of workstations in pipe industry. Procedia Manuf. 2017, 11, 1247–1254.

- Grajewski, D.; Górski, F.; Zawadzki, P.; Hamrol, A. Application of virtual reality techniques in design of ergonomic manufacturing workplaces. Procedia Comput. Sci. 2013, 25, 289–301.

- Menck, N.; Yang, X.; Weidig, C.; Winkes, P.; Lauer, C.; Hagen, H.; Hamann, B.; Aurich, J. Collaborative factory planning in virtual reality. Procedia CIRP 2012, 3, 317–322.

- Gavish, N.; Gutiérrez, T.; Webel, S.; Rodríguez, J.; Peveri, M.; Bockholt, U.; Tecchia, F. Evaluating virtual reality and augmented reality training for industrial maintenance and assembly tasks. Interact. Learn. Environ. 2015, 23, 778–798.

- Loch, F.; Vogel-Heuser, B. A virtual training system for aging employees in machine operation. In Proceedings of the 2017 IEEE 15th International Conference on Industrial Informatics (INDIN), Emden, Germany, 24–26 July 2017; pp. 279–284.

- Berg, L.P.; Vance, J.M. Industry use of virtual reality in product design and manufacturing: A survey. Virtual Real. 2017, 21, 1–17.

- Bruno, F.; Muzzupappa, M. Product interface design: A participatory approach based on virtual reality. Int. J. Hum.-Comput. Stud. 2010, 68, 254–269.

- Morra, L.; Lamberti, F.; Pratticó, F.G.; La Rosa, S.; Montuschi, P. Building trust in autonomous vehicles: Role of virtual reality driving simulators in HMI design. IEEE Trans. Veh. Technol. 2019, 68, 9438–9450.

- Shi, Y.; Azzolin, N.; Picardi, A.; Zhu, T.; Bordegoni, M.; Caruso, G. A Virtual Reality-based Platform to Validate HMI Design for Increasing User’s Trust in Autonomous Vehicle. Comput.-Aided Des. Appl. 2020, 18, 502–518.

- Stadler, S.; Cornet, H.; Huang, D.; Frenkler, F. Designing Tomorrow’s Human-Machine Interfaces in Autonomous Vehicles: An Exploratory Study in Virtual Reality. In Augmented Reality and Virtual Reality; Springer: Cham, Switzerland, 2020; pp. 151–160.

- Prati, E.; Villani, V.; Peruzzini, M.; Sabattini, L. Use of interaction design methodologies for human-robot collaboration in industrial scenarios. IEEE Trans. Autom. Sci. Eng. 2021.

- Prati, E.; Grandi, F.; Peruzzini, M. Usability Testing on Tractor’s HMI: A Study Protocol. In Proceedings of the International Conference on Human-Computer Interaction, Virtual Event, 24–29 July 2021; pp. 294–311.

- Villani, V.; Lotti, G.; Battilani, N.; Fantuzzi, C. Survey on usability assessment for industrial user interfaces. IFAC-PapersOnLine 2019, 52, 25–30.

- Villani, V.; Capelli, B.; Sabattini, L. Use of virtual reality for the evaluation of human-robot interaction systems in complex scenarios. In Proceedings of the 2018 27th IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN), Nanjing, China, 27–31 August 2018; pp. 422–427.

- Barnum, C.M. Usability Testing Essentials: Ready, Set... Test! Morgan Kaufmann: Burlington, MA, USA, 2020.

- Nielsen, J.; Landauer, T.K. A mathematical model of the finding of usability problems. In Proceedings of the INTERACT’93 and CHI’93 Conference on Human Factors in Computing Systems, Amsterdam, The Netherlands, 24–29 April 1993; pp. 206–213.

- Grandi, F.; Zanni, L.; Peruzzini, M.; Pellicciari, M.; Campanella, C.E. A Transdisciplinary digital approach for tractor’s human-centred design. Int. J. Comput. Integr. Manuf. 2020, 33, 377–397.

- Albert, W.; Tullis, T. Measuring the User Experience: Collecting, Analyzing, and Presenting Usability Metrics; Newnes: London, UK, 2013.

- Borsci, S.; Federici, S.; Bacci, S.; Gnaldi, M.; Bartolucci, F. Assessing user satisfaction in the era of user experience: Comparison of the SUS, UMUX, and UMUX-LITE as a function of product experience. Int. J. Hum.-Comput. Interact. 2015, 31, 484–495.

| Reference No. | Application Area | Main Results |
|---|---|---|
| [20] | Industrial workplaces | Ergonomic assessment of future workplace solutions |
| [21] | Automotive assembly lines | Biomechanical effort and ergonomics assessment |
| [22] | Pipe industry | Physical and cognitive ergonomics optimization |
| [23] | Industrial workplaces | Workplace design assessment |
| [24] | Industrial workplaces | Factory planning |
| [25] | Industrial maintenance and assembly | Training and support of operators |
| [26] | Industrial workplaces | Training of operators |
| [27] | Industrial workplaces | Product and process design |
| [28] | Product interface design | Participatory interface design |
| [29] | Autonomous Vehicles | User’s level of trust testing |
| [30] | Autonomous Vehicles | User’s level of trust testing |
| [31] | Autonomous Vehicles | HMI concept testing |

| Element | Description |
|---|---|
| Floor | Surface used as the floor; it defines the size of the area to create. It is mandatory and must be added to every scene. |
| Structural | Walls and ceiling. |
| Directional Light | Simulates outdoor light, made of parallel rays of infinite extent; it affects every element in the scene. |
| Lamp | Point light used to simulate lamps that illuminate a limited surrounding area. |
| Table | Any flat surface that can be used as a worktable. |
| Shelf | Any type of shelf. |
| Crate | Any box. |
| Carrier | Any equipment used to move items, such as pallet jacks, forklifts and automated guided vehicles. |
| Robot arm | Any type of robot arm. |
| Conveyor | Specific to conveyors; it can be used to build complex networks and arrangements of conveyor belts. |
| Situational factors | Environmental conditions such as noise or dust, and the presence of human agents, including any personal protective equipment they wear. |
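The element types above lend themselves to a structured scene description. As a minimal sketch, assuming that a scene is simply a list of typed elements, the following enforces the two rules stated in the table: every element belongs to one of the listed categories, and a Floor is mandatory. The class names and the optional 2D `position` field are hypothetical and do not reproduce the data format of the authors' tool.

```python
from dataclasses import dataclass, field

# Element categories from the scene-element table.
CATEGORIES = {
    "Floor", "Structural", "Directional Light", "Lamp", "Table", "Shelf",
    "Crate", "Carrier", "Robot arm", "Conveyor", "Situational factors",
}
MANDATORY = {"Floor"}  # the table states a Floor must be added to every scene


@dataclass
class SceneElement:
    category: str
    name: str
    position: tuple[float, float] = (0.0, 0.0)  # hypothetical placement on the 2D map


@dataclass
class Scene:
    elements: list[SceneElement] = field(default_factory=list)

    def add(self, element: SceneElement) -> None:
        """Add an element, rejecting categories not defined in the table."""
        if element.category not in CATEGORIES:
            raise ValueError(f"unknown element category: {element.category!r}")
        self.elements.append(element)

    def missing_mandatory(self) -> list[str]:
        """Mandatory categories not yet present (empty list if the scene is valid)."""
        present = {e.category for e in self.elements}
        return sorted(MANDATORY - present)


scene = Scene()
scene.add(SceneElement("Robot arm", "cobot", (2.0, 1.5)))
print(scene.missing_mandatory())  # ['Floor']
scene.add(SceneElement("Floor", "workstation floor"))
print(scene.missing_mandatory())  # []
```

Validating categories at insertion time keeps the structured description consistent with the element library, so the same list can later feed both the 2D map and the 3D environment.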

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Prati, E.; Villani, V.; Peruzzini, M.; Sabattini, L. An Approach Based on VR to Design Industrial Human-Robot Collaborative Workstations. Appl. Sci. 2021, 11, 11773. https://doi.org/10.3390/app112411773
