K-Means-Based Nature-Inspired Metaheuristic Algorithms for Automatic Data Clustering Problems: Recent Advances and Future Directions

Abstract

:1. Introduction

2. Scientific Background

2.1. Nature-Inspired Metaheuristics for Automatic Clustering Problems

2.2. Review Methodology

2.2.1. Research Questions

- RQ1: What are the various nature-inspired meta-heuristics techniques that have been hybridized with the K-means clustering algorithm?

- RQ2: Which of the reported hybridization of nature-inspired meta-heuristics techniques with K-means clustering algorithm handled automatic clustering problems?

- RQ3: What various automatic clustering approaches were adopted in the reported hybridization?

- RQ4: What contributions were made to improve the performance of the K-means clustering algorithm in handling automatic clustering problems?

- RQ5: What is the rate of publication of hybridization of K-means with nature-inspired meta-heuristic algorithms for automatic clustering?

2.3. Adopted Strategy for Article Selection

3. Data Synthesis and Analysis

3.1. RQ1. What Are the Various Nature-Inspired Meta-Heuristics Techniques That Have Been Hybridized with the K-Means Clustering Algorithm?

3.1.1. Genetic Algorithm

3.1.2. Particle Swarm Optimization

3.1.3. Firefly Algorithm

3.1.4. Bat Algorithm

3.1.5. Flower Pollination Algorithm

3.1.6. Artificial Bee Colony

3.1.7. Grey Wolf Optimizer

3.1.8. Sine–Cosine Algorithm

3.1.9. Cuckoo Search Algorithm

3.1.10. Differential Evolution

3.1.11. Invasive Weed Optimization

3.1.12. Imperialist Competitive Algorithm

3.1.13. Harmony Search

3.1.14. Blackhole Algorithm

3.1.15. Membrane Computing

3.1.16. Dragonfly Algorithm

3.1.17. Ant Lion Optimizer

3.1.18. Social Spider Algorithm

3.1.19. Fruit Fly Optimization

3.1.20. Bees Swarm Optimization

3.1.21. Bacterial Colony Optimization

3.1.22. Stochastic Diffusion Search

3.1.23. Honey Bee Mating Optimization

3.1.24. Cockroach Swarm Optimization

3.1.25. Glowworm Swarm Optimization

3.1.26. Bee Colony Optimization

3.1.27. Symbiotic Organism Search

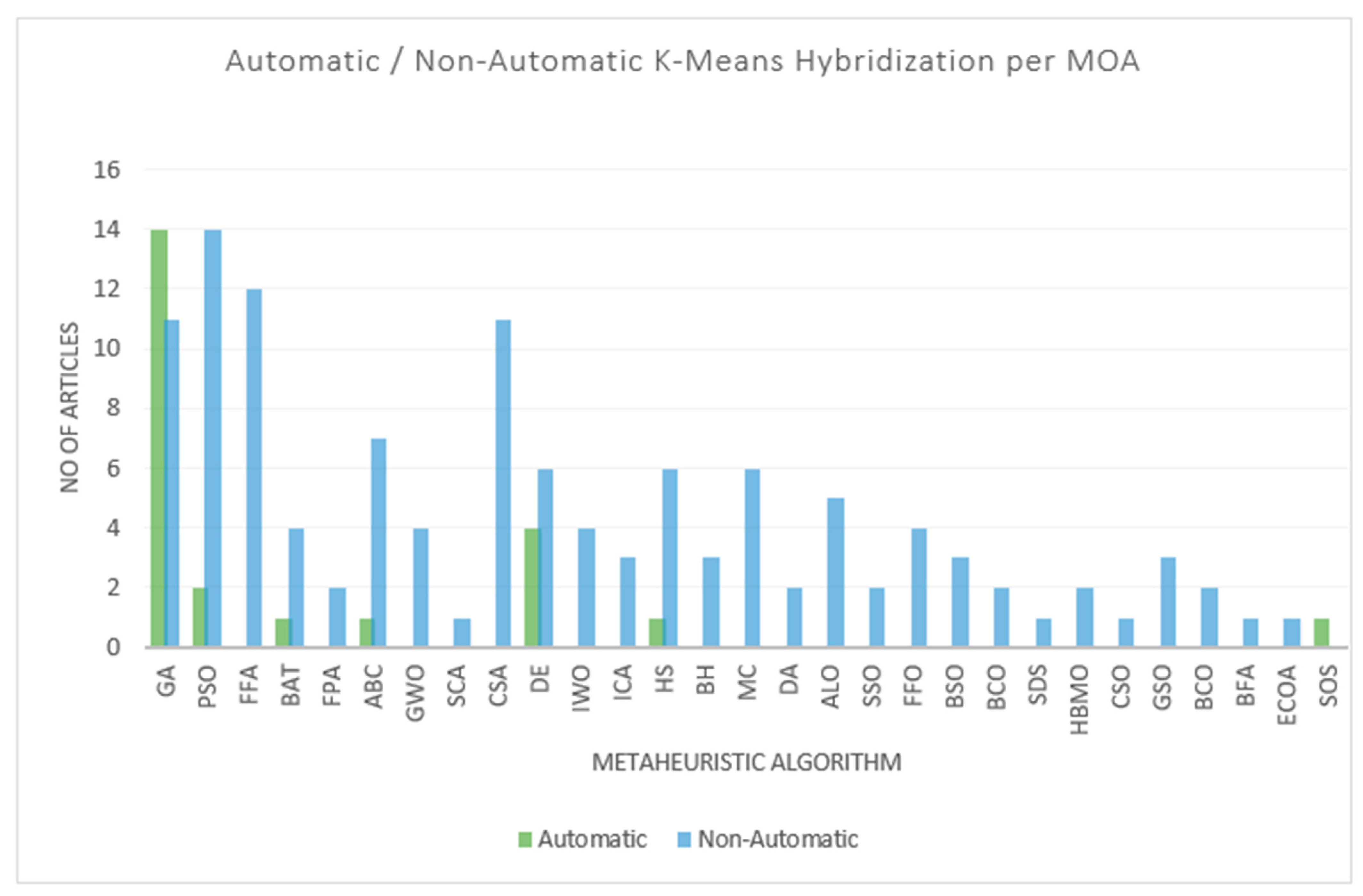

3.2. RQ2. Which of the Reported Hybridization of Nature-Inspired Meta-Heuristics Techniques with K-Means Clustering Algorithm Handled Automatic Clustering Problems?

3.3. RQ3. What Were the Various Automatic Clustering Approaches Adopted in the Reported Hybridization?

3.4. RQ4. What Were the Contributions Made to Improve the Performance of the K-Means Clustering Algorithm in Handling Automatic Clustering Problems?

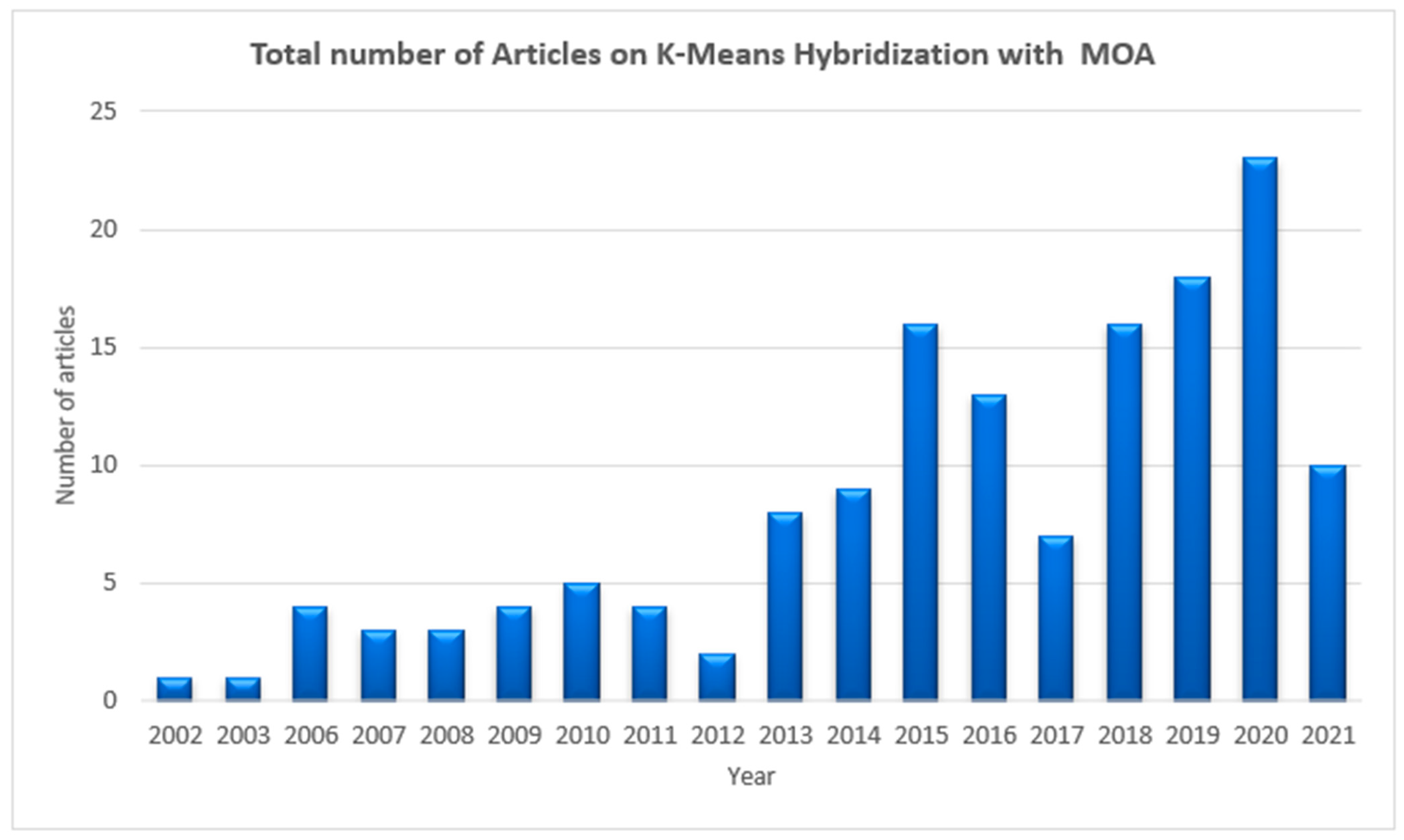

3.5. RQ5. What Is the Rate of Publication of Hybridization of K-Means with Nature-Inspired Meta-Heuristic Algorithms for Automatic Clustering?

Publications Trend of K-Means Hybridization with MOA

4. Results and Discussions

4.1. Metrics

4.2. Strength of This Study

4.3. Weakness of This Study

4.4. Hybridization of K-Means with MOA

4.5. Impact of Automatic Hybridized K-Means with MOA

4.6. Trending Areas of Application of Hybridized K-Means with MOA

4.7. Research Implication and Future Directions

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| ABC | Artificial Bee Colony |

| ABC-KM | Artificial Bee Colony K-Means |

| ABDWT-FCM | Artificial Bee Colony based discrete wavelet transform with fuzzy c-mean |

| AC | Accuracy of Clustering |

| ACA-AL | Agglomerative clustering algorithm with average link |

| ACA-CL | Agglomerative clustering algorithm with complete link |

| ACA-SL | Agglomerative clustering algorithm with single link |

| ACDE-K-means | Automatic Clustering-based differential Evolution algorithm with K-Means |

| ACN | Average Correct Number |

| ACO | Ant Colony Optimization |

| ACO-SA | Ant Colony Optimization with Simulated Annealing |

| AGCUK | Automatic Genetic Clustering for Unknown K |

| AGWDWT-FCM | Adaptive Grey Wolf-based Discrete Wavelet Transform with Fuzzy C-mean |

| ALO | Ant Lion Optimizer |

| ALO-K | Ant Lion Optimizer with K-Means |

| ALPSOC | Ant Lion Particle Swarm Optimization |

| ANFIS | Adaptive Network based Fuzzy Inference System |

| ANOVA | Analysis of Variance |

| AR | Accuracy Rate |

| ARI | Adjusted Rand Index |

| ARMIR | Association Rule Mining for Information Retrieval |

| BBBC | Big Bang Big Crunch |

| BCO | Bacterial Colony Optimization |

| BCO+KM | Bacterial Colony Optimization with K-Means |

| BFCA | Bacterial Foraging Clustering Algorithm |

| BFGSA | Bird Flock Gravitational Search Algorithm |

| BFO | Bacterial foraging Optimization |

| BGLL | A modularity-based algorithm by Blondel, Guillaume, Lambiotte, and Lefebvre |

| BH | Black Hole |

| BH-BK | Black Hole and Bisecting K-means |

| BKBA | K-Means Binary Bat Algorithm |

| BPN | Back Propagation Network |

| BPZ | Bavarian Postal Zones Data |

| BSO | Bees Swarm Optimization |

| BSO-CLARA | Bees Swarm Optimization Clustering Large Dataset |

| BSOGD1 | Bees Swarm Optimization Guided by Decomposition |

| BTD | British Town Data |

| C4.5 | Tree-induction algorithm for Classification problems |

| CAABC | Chaotic Adaptive Artificial Bee Colony Algorithm |

| CAABC-K | Chaotic Adaptive Artificial Bee Colony Algorithm with K-Means |

| CABC | Chaotic Artificial Bee Colony |

| CCI | Correctly Classified Instance |

| CCIA | Cluster Centre Initialization Algorithm |

| CDE | Clustering Based Differential Evolution |

| CFA | Chaos-based Firefly Algorithm |

| CGABC | Chaotic Gradient Artificial Bee Colony |

| CIEFA | Compound Inward Intensified Exploration Firefly Algorithm |

| CLARA | Clustering Large Applications |

| CLARANS | Clustering Algorithm based on Randomized Search |

| CMC | Contraceptive Method Choice |

| CMIWO K-Means | Cloud model-based Invasive weed Optimization |

| CMIWOKM | Combining Invasive weed optimization and K-means |

| COA | Cuckoo Optimization Algorithm |

| COFS | Cuckoo Optimization for Feature Selection |

| CPU | Central Processing Unit |

| CRC | Chinese Restaurant Clustering |

| CRPSO | Craziness based Particle Swarm Optimization |

| CS | Cuckoo Search |

| CSA | Cuckoo Search Algorithm |

| CS-K-means | Cuckoo Search K-Means |

| CSO | Cockroach Swarm Optimization |

| CSOAKM | Cockroach Swarm Optimization and K-Means |

| CSOS | Clustering based Symbiotic Organism Search |

| DA | Dragonfly Algorithm |

| DADWT-FCM | Dragonfly Algorithm based discrete wavelet transform with fuzzy c-mean |

| DBI | Davies-Bouldin Index |

| DBSCAN | Density-Based Spatial Clustering of Applications with Noise |

| DCPSO | Dynamic Clustering Particle Swarm Optimization |

| DDI | Dunn-Dunn Index |

| DE | Differential Evolution |

| DEA-based K-means | Data Envelopment Analysis based K-Means |

| DE-AKO | Differential Evolution with K-Means Operation |

| DE-ANS-AKO | Differential Evolution with adaptive niching and K-Means Operation |

| DEFOA-K-means | Differential Evolution Fruit Fly Optimization Algorithm with K-means |

| DE-KM | Differential Evolution and K-Means |

| DE-SVR | Differential Evolution -Support Vector Regression |

| DFBPKBA | Dynamic frequency-based parallel K-Bat Algorithm |

| DFSABCelite | ABC with depth-first search framework and elite-guided search equation |

| DMOZ | A dataset |

| DNA | Deoxyribonucleic Acid |

| DR | Detection Rate |

| DWT-FCM | Discrete wavelets transform with fuzzy c-mean |

| EABC | Enhanced Artificial Bee Colony |

| EABCK | Enhanced Artificial Bee Colony K-Means |

| EBA | Enhanced Bat Algorithm |

| ECOA | Extended Cuckoo Optimization Algorithm |

| ECOA-K | Extended Cuckoo Optimization Algorithm K-means |

| EFC | Entropy-based Fuzzy Clustering |

| EPSONS | PSO based on new neighborhood search strategy with diversity mechanism and Cauchy mutation operator |

| ER | Error Rate |

| ESA | Elephant Search Algorithm |

| EShBAT | Enhanced Shuffled Bat Algorithm |

| FA | Firefly Algorithm |

| FACTS | Flexible AC Transmission Systems |

| FA-K | Firefly-based K-Means Algorithm |

| FA-K-Means | Firefly K-Means |

| FAPSO-ACO-K | Fuzzy adaptive Particle Swarm Optimization with Ant Colony Optimization and K-Means |

| FA-SVR | Firefly Algorithm based Support Vector Regression |

| FBCO | Fuzzy Bacterial Colony Optimization |

| FBFO | Fractional Bacterial Foraging Optimization |

| FCM | Fuzzy C-Means |

| FCM-FA | Fuzzy C-Means Firefly Algorithm |

| FCMGWO | Fuzzy C-means Grey Wolf Optimization |

| FCSA | Fuzzy Cuckoo Search Algorithm |

| FFA-KELM | Firefly Algorithm based Kernel Extreme Learning Machine |

| FFO | Fruit Fly Optimization |

| FGKA | Fast Genetic K-means Algorithm |

| FI | F-Measure |

| FKM | Fuzzy K-Means |

| FM | F-Measure |

| FN | A modularity-based algorithm by Newman |

| FOAKFCM | Kernel-based Fuzzy C-Mean clustering based on Fruitfly Algorithm |

| FPA | Flower Pollination Algorithm |

| FPAGA | Flower Pollination Algorithm and Genetic Algorithm |

| FPAKM | Flower Pollination Algorithm K-Means |

| FPR | False Positive Rate |

| FPSO | Fuzzy Particle Swarm Optimization |

| FSDP | Fast Search for Density Peaks |

| GA | Genetic Algorithm |

| GABEEC | Genetic Algorithm Based Energy-efficient Clusters |

| GADWT | Genetic Algorithm Discrete Wavelength Transform |

| GAEEP | Genetic Algorithm Based Energy Efficient adaptive clustering hierarchy Protocol |

| GAGR | Genetic Algorithm with Gene Rearrangement |

| GAK | Genetic K-Means Algorithm |

| GAS3 | Genetic Algorithm with Species and Sexual Selection |

| GAS3KM | Modifying Genetic Algorithm with species and sexual selection using K-Means |

| GA-SVR | Genetic Algorithm based Support Vector Regression |

| GCUK | Genetic Clustering for unknown K |

| GENCLUST | Genetic Clustering |

| GENCLUST-F | Genetic Clustering variant |

| GENCLUST-H | Genetic Clustering variant |

| GGA | Genetically Guided Algorithm |

| GKA | Genetic K-Means Algorithm |

| GKM | Genetic K-Means Membranes |

| GKMC | Genetic K-Means Clustering |

| GM | Gaussian Mixture |

| GN | A modularity-based algorithm by Girvan and Newman |

| GP | Genetic Programming |

| GPS | Global Position System |

| GSI | Geological Survey of Iran |

| GSO | Glowworm Swarm Optimization |

| GSOKHM | Glowworm Swarm Optimization |

| GTD | Global Terrorist Dataset |

| GWDWT-FCM | Grey Wolf-based Discrete Wavelength Transform with Fuzzy C-Means |

| GWO | Grey wolf optimizer |

| GWO-K-Means | Grey wolf optimizer K-means |

| HABC | Hybrid Artificial Bee Colony |

| HBMO | Honeybees Mating Optimization |

| HCSPSO | Hybrid Cuckoo Search with Particle Swarm Optimization and K-Means |

| HESB | Hybrid Enhanced Shuffled Bat Algorithm |

| HFCA | Hybrid Fuzzy Clustering Algorithm |

| HHMA | Hybrid Heuristic Mathematics Algorithm |

| HKA | Harmony K-Means Algorithm |

| HS | Harmony Search |

| HSA | Harmony Search Algorithm |

| HSCDA | Hybrid Self-adaptive Community Detection algorithms |

| HSCLUST | Harmony Search clustering |

| HSKH | Harmony Search K-Means Hybrid |

| HS-K-means | Harmony Search K-Means |

| IABC | Improved Artificial Bee Colony |

| IBCOCLUST | Improved Bee Colony Optimization Clustering |

| ICA | Imperialist Competitive Algorithm |

| ICAFKM | Imperialist Competitive Algorithm with Fuzzy K Means |

| ICAKHM | Imperial Competitive Algorithm with K-Harmonic Mean |

| ICAKM | Imperial Competitive Algorithm with K-Mean |

| ICGSO | Image Clustering Glowworm Swarm Optimization |

| ICMPKHM | Improved Cuckoo Search with Modified Particle Swarm Optimization and K-Harmonic Mean |

| ICS | Improved Cuckoo Search |

| ICS-K-means | Improved Cuckoo Search K-Means |

| ICV | Intracluster Variation |

| IFCM | Interactive Fuzzy C-Means |

| IGBHSK | Global Best Harmony Search K-Means |

| IGNB | Information Gain-Naïve Bayes |

| IIEFA | Inward Intensified Exploration Firefly Algorithm |

| IPSO | Improved Particle Swarm Optimization |

| IPSO-K-Means | Improved Particle swarm Optimization with K-Means |

| IWO | Invasive weed optimization |

| IWO-K-Means | Invasive weed Optimization K-means |

| kABC | K-Means Artificial Bee Colony |

| KBat | Bat Algorithm with K-Means Clustering |

| KCPSO | K-Means and Combinatorial Particle Swarm Optimization |

| K-FA | K-Means Firefly Algorithm |

| KFCFA | K-member Fuzzy Clustering and Firefly Algorithm |

| KFCM | Kernel-based Fuzzy C-Mean Algorithm |

| KGA | K-Means Genetic Algorithm |

| K-GWO | Grey wolf optimizer with traditional K-Means |

| KHM | K-Harmonic Means |

| K-HS | Harmony K-Means Algorithm |

| KIBCLUST | K-Means with Improved bee colony |

| KMBA | K-Means Bat Algorithm |

| KMCLUST | K-Means Modified Bee Colony K-means |

| K-Means FFO | K-Means Fruit fly Optimization |

| KMeans-ALO | K-Means with Ant Lion Optimization |

| K-Means-FFA-KELM | Kernel Extreme Learning Machine Model coupled with K-means clustering and Firefly algorithm |

| KMGWO | K-Means Grey wolf optimizer |

| K-MICA | K-Means Modified Imperialist Competitive Algorithm |

| KMQGA | Quantum-inspired Genetic Algorithm for K-Means Algorithm |

| KMVGA | K-Means clustering algorithm based on Variable string length Genetic Algorithm |

| K-NM-PSO | K-Means Nelder–Mead Particle Swarm Optimization |

| KNNIR | K-Nearest Neighbors for Information Retrieval |

| KPA | K-means with Flower pollination algorithm |

| KPSO | K-means with Particle Swarm Optimization |

| KSRPSO | K-Means selective regeneration Particle Swarm Optimization |

| LEACH | Low-Energy Adaptive Clustering Hierarchy |

| MABC-K | Modified Artificial Bee Colony |

| MAE | Mean Absolute Error |

| MAX-SAT | Maximum satisfiability problem |

| MBCO | Modified Bee Colony K-means |

| MC | Membrane Computing |

| MCSO | Modified Cockroach Swarm Optimization |

| MEQPSO | Multi-Elitist Quantum-behaved Particle Swarm Optimization |

| MFA | Modified Firefly Algorithm |

| MFOA | Modified Fruit Fly Optimization Algorithm |

| MfPSO | Modified Particle Swarm Optimization |

| MICA | Modified Imperialist Competitive Algorithm |

| MKCLUST | Modified Bee Colony K-means Clustering |

| MKF-Cuckoo | Multiple Kernel-Based Fuzzy C-Means with Cuckoo Search |

| MN | Multimodal Nonseparable function |

| MOA | Meta-heuristic Optimization Algorithm |

| MPKM | Modified Point symmetry-based K-Means |

| MSE | Mean Square Error |

| MTSP | Multiple Traveling Salesman Problem |

| NaFA | Firefly Algorithm with neighborhood attraction |

| NGA | Niche Genetic Algorithm |

| NGKA | Niching Genetic K-means Algorithm |

| NM-PSO | Nelder–Mead simplex search with Particle Swarm Optimization |

| NNGA | Novel Niching Genetic Algorithm |

| Noiseclust | Noise clustering |

| NR-ELM | Neighborhood-based ratio (NR) and Extreme Learning Machine (ELM) |

| NSE | Nash-Sutcliffe Efficiency |

| NSL-KDD | NSL Knowledge Discovery and Data Mining |

| PAM | Partitioning Around Medoids |

| PCA | Principal component analysis |

| PCA-GAKM | Principal Component Analysis with Genetic Algorithm and K-means |

| PCAK | Principal Component Analysis K-means |

| PCA-SOM | Principal Component Analysis and Self-Organizing Map |

| PCAWK | Principal component analysis |

| PGAClust | Parallel Genetic Algorithm Clustering |

| PGKA | Prototypes-embedded Genetic K-means Algorithm |

| P-HS | Progressive Harmony Search |

| P-HS-K | Progressive Harmony Search with K-means |

| PIMA | Indian diabetic dataset |

| PNSR | Peak Signal to Noise Ratio |

| PR | Precision-Recall |

| PSC-RCE | Particle Swarm Clustering with Rapid Centroid Estimation |

| PSDWT-FCM | Particle Swarm based Discrete Wavelength Transform with Fuzzy C-Means |

| PSNR | Peak Signal-to-Noise Ratio |

| PSO | Particle Swarm Optimization |

| PSO-ACO | Particle Swarm Optimization and Ant Colony Optimization |

| PSO-FCM | Particle Swarm Optimization with Fuzzy C-Means |

| PSOFKM | Particle Swarm Optimization with Fuzzy K-means |

| PSOK | Particle Swarm Optimization with K-Means based clustering |

| PSOKHM | Particle Swarm Optimization with K-Harmonic Mean |

| PSO-KM | PSO-based K-Means clustering algorithm |

| PSOLF-KHM | Particle Swarm Optimization with Levy Flight and K-Harmonic Mean Algorithm |

| PSOM | Particle Swarm optimization with mutation operation |

| PSO-SA | Particle Swarm Optimization with Simulated Annealing |

| PSO-SVR | Particle Swarm Optimization based Support Vector Regression |

| PTM | Pattern Taxonomy Mining |

| QALO-K | Quantum Ant Lion Optimizer with K-Means |

| rCMA-ES | restart Covariance Matrix Adaptation Evolution Strategy |

| RMSE | Root Mean Square Error |

| ROC | Receive Operating Characteristics |

| RSC | Relevant Set Correlation clustering model |

| RVPSO-K | K-Means cluster algorithm based on Improved velocity of Particle Swarm Optimization cluster algorithm |

| RWFOA | Fruit Fly Optimization based on Stochastic Inertia Weight |

| SA | Simulated Annealing |

| SaNSDE | Self-adaptive Differential Evolution with Neighborhood Search |

| SAR | Synthetic Aperture Radar |

| SCA | Sine-Cosine Algorithm |

| SCAK-Means | Sine-Cosine Algorithm with K-means |

| SD | Standard Deviation |

| SDM | Sexual Determination Method |

| SDME | Second Derivative-like Measure of Enhancements |

| SDN | Software defined Network |

| SDS | Stochastic Diffusion Search |

| SFLA-CQ | Shuffled frog leaping algorithm for Color quantization |

| SHADE | Success-History based Adaptive Differential Evolution |

| SI | Scatter Index |

| SI | Silhouette Index |

| SIM dataset | Simulated dataset |

| SMEER | Secure multi-tier energy-efficient routing protocol |

| SOM | Self-Organizing Feature Maps |

| SOM+K | Self-Organizing Feature Maps neural networks with K-Means |

| SRPSO | Selective Regeneration Particle Swarm Optimization |

| SSB | Sum of Square Between |

| SSE | Sum of Square Error |

| SSIM | Structural Similarity |

| SS-KMeans | Scattering search K-Means |

| SSO | Social Spider Optimization |

| SSOKC | Social Spider Optimization with K-Means Clustering |

| SSW | Sum of Square within |

| SVC | Support Vector Clustering |

| SVM+GA | Support Vector Machine with Genetic Algorithm |

| SVMIR | Support Vector Machine for Information Retrieval |

| TCSC | Thyristor Controlled Series Compensator |

| TKMC | Traditional K-means Clustering |

| TP | True Positivity Rate |

| TPR | True Positivity Rate |

| TREC | Text Retrieval Conference dataset |

| TS | Tabu Search |

| TSMPSO | Two-Stage diversity mechanism in Multiobjective Particle Swarm Optimization |

| TSP-LIB-1600 | dataset for Travelling Salesman Problem |

| TSP-LIB-3038 | dataset for Travelling Salesman Problem |

| UCC | U-Control Chart |

| UCI | University of California Irvine |

| UN | Unimodal Nonseparable function |

| UPFC | Unified Power Flow Controller |

| US | Unimodal Separable function |

| VGA | Variable string length Genetic Algorithm |

| VSGSO-D K-means | Variable Step-size glowworm swarm optimization |

| VSSFA | Variable Step size firefly Algorithm |

| WDBC | Wisconsin Diagnostic Breast Cancer |

| WHDA-FCM | Wolf hunting based dragonfly with Fuzzy C-Means |

| WK-Means | Weight-based K-Means |

| WOA | Whale Optimization Algorithm |

| WOA-BAT | Whale Optimization Algorithm with Bat Algorithm |

| WSN | Wireless Sensor Networks |

References

- Ezugwu, A.E. Nature-inspired metaheuristic techniques for automatic clustering: A survey and performance study. SN Appl. Sci. 2020, 2, 273. [Google Scholar] [CrossRef] [Green Version]

- MacQueen, J. Some Methods for Classification and Analysis of Multivariate Observations. Am. J. Hum. Genet. 1969, 21, 407–408. [Google Scholar]

- Kapil, S.; Chawla, M.; Ansari, M.D. On K-Means Data Clustering Algorithm with Genetic Algorithm. In Proceedings of the 2016 Fourth International Conference on Parallel, Distributed and Grid Computing (PDGC), Solan, India, 22–24 December 2016; pp. 202–206. [Google Scholar]

- Ezugwu, A.E.-S.; Agbaje, M.B.; Aljojo, N.; Els, R.; Chiroma, H.; Elaziz, M.A. A Comparative Performance Study of Hybrid Firefly Algorithms for Automatic Data Clustering. IEEE Access 2020, 8, 121089–121118. [Google Scholar] [CrossRef]

- Ezugwu, A.E.; Shukla, A.K.; Agbaje, M.B.; Oyelade, O.N.; José-García, A.; Agushaka, J.O. Automatic clustering algorithms: A systematic review and bibliometric analysis of relevant literature. Neural Comput. Appl. 2020, 33, 6247–6306. [Google Scholar] [CrossRef]

- José-García, A.; Gómez-Flores, W. Automatic clustering using nature-inspired metaheuristics: A survey. Appl. Soft Comput. 2016, 41, 192–213. [Google Scholar] [CrossRef]

- Hruschka, E.; Campello, R.J.G.B.; Freitas, A.A.; de Carvalho, A. A Survey of Evolutionary Algorithms for Clustering. IEEE Trans. Syst. Man Cybern. Part C Appl. Rev. 2009, 39, 133–155. [Google Scholar] [CrossRef] [Green Version]

- Ezugwu, A.E.; Shukla, A.K.; Nath, R.; Akinyelu, A.A.; Agushaka, J.O.; Chiroma, H.; Muhuri, P.K. Metaheuristics: A comprehensive overview and classification along with bibliometric analysis. Artif. Intell. Rev. 2021, 54, 4237–4316. [Google Scholar] [CrossRef]

- Rana, S.; Jasola, S.; Kumar, R. A review on particle swarm optimization algorithms and their applications to data clustering. Artif. Intell. Rev. 2010, 35, 211–222. [Google Scholar] [CrossRef]

- Nanda, S.J.; Panda, G. A survey on nature inspired metaheuristic algorithms for partitional clustering. Swarm Evol. Comput. 2014, 16, 1–18. [Google Scholar] [CrossRef]

- Alam, S.; Dobbie, G.; Koh, Y.S.; Riddle, P.; Rehman, S.U. Research on particle swarm optimization based clustering: A systematic review of literature and techniques. Swarm Evol. Comput. 2014, 17, 1–13. [Google Scholar] [CrossRef]

- Mane, S.U.; Gaikwad, P.G. Nature Inspired Techniques for Data Clustering. In Proceedings of the 2014 International Conference on Circuits, Systems, Communication and Information Technology Applications (CSCITA), Mumbai, India, 4–5 April 2014; pp. 419–424. [Google Scholar]

- Falkenauer, E. Genetic Algorithms and Grouping Problems; John Wiley & Sons, Inc.: London, UK, 1998. [Google Scholar]

- Cowgill, M.; Harvey, R.; Watson, L. A genetic algorithm approach to cluster analysis. Comput. Math. Appl. 1999, 37, 99–108. [Google Scholar] [CrossRef] [Green Version]

- Okwu, M.O.; Tartibu, L.K. Metaheuristic Optimization: Nature-Inspired Algorithms Swarm and Computational Intelligence, Theory and Applications; Springer Nature: Berlin/Heidelberg, Germany, 2020; Volume 927. [Google Scholar]

- Malik, K.; Tayal, A. Comparison of Nature Inspired Metaheuristic Algorithms. Int. J. Electron. Electr. Eng. 2014, 7, 799–802. [Google Scholar]

- Engelbrecht, A.P. Computational Intelligence: An Introduction; John Wiley & Sons: London, UK, 2007. [Google Scholar]

- Agbaje, M.B.; Ezugwu, A.E.; Els, R. Automatic Data Clustering Using Hybrid Firefly Particle Swarm Optimization Algorithm. IEEE Access 2019, 7, 184963–184984. [Google Scholar] [CrossRef]

- Rajakumar, R.; Dhavachelvan, P.; Vengattaraman, T. A Survey on Nature Inspired Meta-Heuristic Algorithms with its Domain Specifications. In Proceedings of the 2016 International Conference on Communication and Electronics Systems (ICCES), Coimbatore, India, 21–26 October 2016; pp. 1–6. [Google Scholar]

- Ezugwu, A.E. Advanced discrete firefly algorithm with adaptive mutation–based neighborhood search for scheduling unrelated parallel machines with sequence–dependent setup times. Int. J. Intell. Syst. 2021, 1–42. [Google Scholar] [CrossRef]

- Holland, J.H. Genetic algorithms. Sci. Am. 1992, 267, 66–73. [Google Scholar] [CrossRef]

- Sivanandam, S.N.; Deepa, S.N. Genetic algorithms. In Introduction to Genetic Algorithms; Springer: Berlin/Heidelberg, Germany, 2008; pp. 15–37. [Google Scholar]

- Krishna, K.; Murty, M.N. Genetic K-means algorithm. IEEE Trans. Syst. Man Cybern. Part B 1999, 29, 433–439. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Bandyopadhyay, S.; Maulik, U. An evolutionary technique based on K-means algorithm for optimal clustering in RN. Inf. Sci. 2002, 146, 221–237. [Google Scholar] [CrossRef]

- Cheng, S.S.; Chao, Y.H.; Wang, H.M.; Fu, H.C. A prototypes-embedded genetic k-means algorithm. In Proceedings of the 18th International Conference on Pattern Recognition (ICPR’06), Hong Kong, China, 20–24 August 2006; Volume 2, pp. 724–727. [Google Scholar]

- Laszlo, M.; Mukherjee, S. A genetic algorithm using hyper-quadtrees for low-dimensional k-means clustering. IEEE Trans. Pattern Anal. Mach. Intell. 2006, 28, 533–543. [Google Scholar] [CrossRef] [PubMed]

- Laszlo, M.; Mukherjee, S. A genetic algorithm that exchanges neighboring centers for k-means clustering. Pattern Recognit. Lett. 2007, 28, 2359–2366. [Google Scholar] [CrossRef]

- Dai, W.; Jiao, C.; He, T. Research of K-Means Clustering Method based on Parallel Genetic Algorithm. In Proceedings of the Third International Conference on Intelligent Information Hiding and Multimedia Signal. Processing (IIH-MSP 2007), Kaohsiung, Taiwan, 26–28 November 2007; Volume 2, pp. 158–161. [Google Scholar]

- Chang, D.-X.; Zhang, X.-D.; Zheng, C.-W. A genetic algorithm with gene rearrangement for K-means clustering. Pattern Recognit. 2009, 42, 1210–1222. [Google Scholar] [CrossRef]

- Sheng, W.; Tucker, A.; Liu, X. A niching genetic k-means algorithm and its applications to gene expression data. Soft Comput. 2008, 14, 9–19. [Google Scholar] [CrossRef]

- Xiao, J.; Yan, Y.; Zhang, J.; Tang, Y. A quantum-inspired genetic algorithm for k-means clustering. Expert Syst. Appl. 2010, 37, 4966–4973. [Google Scholar] [CrossRef]

- Rahman, M.A.; Islam, M.Z. A hybrid clustering technique combining a novel genetic algorithm with K-Means. Knowl.-Based Syst. 2014, 71, 345–365. [Google Scholar] [CrossRef]

- Sinha, A.; Jana, P.K. A Hybrid MapReduce-based k-Means Clustering using Genetic Algorithm for Distributed Datasets. J. Supercomput. 2018, 74, 1562–1579. [Google Scholar] [CrossRef]

- Islam, M.Z.; Estivill-Castro, V.; Rahman, M.A.; Bossomaier, T. Combining K-Means and a genetic algorithm through a novel arrangement of genetic operators for high quality clustering. Expert Syst. Appl. 2018, 91, 402–417. [Google Scholar] [CrossRef]

- Zhang, H.; Zhou, X. A Novel Clustering Algorithm Combining Niche Genetic Algorithm with Canopy and K-Means. In Proceedings of the 2018 International Conference on Artificial Intelligence and Big Data (ICAIBD), Chengdu, China, 26–28 May 2018; pp. 26–32. [Google Scholar]

- Mustafi, D.; Sahoo, G. A hybrid approach using genetic algorithm and the differential evolution heuristic for enhanced initialization of the k-means algorithm with applications in text clustering. Soft Comput. 2019, 23, 6361–6378. [Google Scholar] [CrossRef]

- El-Shorbagy, M.A.; Ayoub, A.Y.; Mousa, A.A.; El-Desoky, I.M. An enhanced genetic algorithm with new mutation for cluster analysis. Comput. Stat. 2019, 34, 1355–1392. [Google Scholar] [CrossRef]

- Ghezelbash, R.; Maghsoudi, A.; Carranza, E.J.M. Optimization of geochemical anomaly detection using a novel genetic K-means clustering (GKMC) algorithm. Comput. Geosci. 2019, 134, 104335. [Google Scholar] [CrossRef]

- Kuo, R.; An, Y.; Wang, H.; Chung, W. Integration of self-organizing feature maps neural network and genetic K-means algorithm for market segmentation. Expert Syst. Appl. 2006, 30, 313–324. [Google Scholar] [CrossRef]

- Li, X.; Zhang, L.; Li, Y.; Wang, Z. An Improved k-Means Clustering Algorithm Combined with the Genetic Algorithm. In Proceedings of the 6th International Conference on Digital Content, Multimedia Technology and Its Applications, Seoul, Korea, 16–18 August 2010; pp. 121–124. [Google Scholar]

- Karegowda, A.G.; Vidya, T.; Jayaram, M.A.; Manjunath, A.S. Improving Performance of k-Means Clustering by Initializing Cluster Centers using Genetic Algorithm and Entropy based Fuzzy Clustering for Categorization of Diabetic Patients. In Proceedings of International Conference on Advances in Computing; Springer: New Delhi, India, 2013; pp. 899–904. [Google Scholar]

- Eshlaghy, A.T.; Razi, F.F. A hybrid grey-based k-means and genetic algorithm for project selection. Int. J. Bus. Inf. Syst. 2015, 18, 141. [Google Scholar] [CrossRef]

- Lu, Z.; Zhang, K.; He, J.; Niu, Y. Applying k-Means Clustering and Genetic Algorithm for Solving MTSP. In International Conference on Bio-Inspired Computing: Theories and Applications; Springer: Singapore, 2016; pp. 278–284. [Google Scholar]

- Barekatain, B.; Dehghani, S.; Pourzaferani, M. An Energy-Aware Routing Protocol for Wireless Sensor Networks Based on New Combination of Genetic Algorithm & k-means. Procedia Comput. Sci. 2015, 72, 552–560. [Google Scholar]

- Zhou, X.; Gu, J.; Shen, S.; Ma, H.; Miao, F.; Zhang, H.; Gong, H. An Automatic K-Means Clustering Algorithm of GPS Data Combining a Novel Niche Genetic Algorithm with Noise and Density. ISPRS Int. J. Geo-Inf. 2017, 6, 392. [Google Scholar] [CrossRef] [Green Version]

- Mohammadrezapour, O.; Kisi, O.; Pourahmad, F. Fuzzy c-means and K-means clustering with genetic algorithm for identification of homogeneous regions of groundwater quality. Neural Comput. Appl. 2018, 32, 3763–3775. [Google Scholar] [CrossRef]

- Esmin, A.A.A.; Coelho, R.A.; Matwin, S. A review on particle swarm optimization algorithm and its variants to clustering high-dimensional data. Artif. Intell. Rev. 2013, 44, 23–45. [Google Scholar] [CrossRef]

- Niu, B.; Duan, Q.; Liu, J.; Tan, L.; Liu, Y. A population-based clustering technique using particle swarm optimization and k-means. Nat. Comput. 2016, 16, 45–59. [Google Scholar] [CrossRef]

- Van der Merwe, D.W.; Engelbrecht, A.P. Data Clustering using Particle Swarm Optimization. In Proceedings of the 2003 Congress on Evolutionary Computation, CEC’03, Canberra, Australia, 8–12 December 2003; Volume 1, pp. 215–220. [Google Scholar]

- Omran, M.G.H.; Salman, A.; Engelbrecht, A.P. Dynamic clustering using particle swarm optimization with application in image segmentation. Pattern Anal. Appl. 2005, 8, 332–344. [Google Scholar] [CrossRef]

- Alam, S.; Dobbie, G.; Riddle, P. An Evolutionary Particle Swarm Optimization Algorithm for Data Clustering. In Proceedings of the 2008 IEEE Swarm Intelligence Symposium, St. Louis, MO, USA, 21–23 September 2008; pp. 1–7. [Google Scholar]

- Kao, I.W.; Tsai, C.Y.; Wang, Y.C. An effective particle swarm optimization method for data clustering. In Proceedings of the 2007 IEEE International Conference on Industrial Engineering and Engineering Management, Singapore, 2 December 2007; pp. 548–552. [Google Scholar]

- Niknam, T.; Amiri, B. An efficient hybrid approach based on PSO, ACO and k-means for cluster analysis. Appl. Soft Comput. 2010, 10, 183–197. [Google Scholar] [CrossRef]

- Thangaraj, R.; Pant, M.; Abraham, A.; Bouvry, P. Particle swarm optimization: Hybridization perspectives and experimental illustrations. Appl. Math. Comput. 2011, 217, 5208–5226. [Google Scholar] [CrossRef]

- Chuang, L.-Y.; Hsiao, C.-J.; Yang, C.-H. Chaotic particle swarm optimization for data clustering. Expert Syst. Appl. 2011, 38, 14555–14563. [Google Scholar] [CrossRef]

- Chen, C.-Y.; Ye, F. Particle Swarm Optimization Algorithm and its Application to Clustering Analysis. In Proceedings of the 17th Conference on Electrical Power Distribution, Tehran, Iran, 2–3 May 2012; pp. 789–794. [Google Scholar]

- Yuwono, M.; Su, S.W.; Moulton, B.D.; Nguyen, H.T. Data clustering using variants of rapid centroid estimation. IEEE Trans. Evol. Comput. 2013, 18, 366–377. [Google Scholar] [CrossRef]

- Omran, M.; Engelbrecht, A.P.; Salman, A. Particle swarm optimization method for image clustering. Int. J. Pattern Recognit. Artif. Intell. 2005, 19, 297–321. [Google Scholar] [CrossRef]

- Chen, J.; Zhang, H. Research on Application of Clustering Algorithm based on PSO for the Web Usage Pattern. In Proceedings of the 2007 International Conference on Wireless Communications, Networking and Mobile Computing, Honolulu, HI, USA, 21–25 September 2007; pp. 3705–3708. [Google Scholar]

- Kao, Y.-T.; Zahara, E.; Kao, I.-W. A hybridized approach to data clustering. Expert Syst. Appl. 2008, 34, 1754–1762. [Google Scholar] [CrossRef]

- Kao, Y.; Lee, S.Y. Combining K-Means and Particle Swarm Optimization for Dynamic Data Clustering Problems. In Proceedings of the 2009 IEEE International Conference on Intelligent Computing and Intelligent Systems, Shanghai, China, 20–22 November 2009; Volume 1, pp. 757–761. [Google Scholar]

- Yang, F.; Sun, T.; Zhang, C. An efficient hybrid data clustering method based on K-harmonic means and Particle Swarm Optimization. Expert Syst. Appl. 2009, 36, 9847–9852. [Google Scholar] [CrossRef]

- Tsai, C.-Y.; Kao, I.-W. Particle swarm optimization with selective particle regeneration for data clustering. Expert Syst. Appl. 2011, 38, 6565–6576. [Google Scholar] [CrossRef]

- Prabha, K.A.; Visalakshi, N.K. Improved Particle Swarm Optimization based k-Means Clustering. In Proceedings of the 2014 International Conference on Intelligent Computing Applications, Coimbatore, India, 6–7 March 2014; pp. 59–63. [Google Scholar]

- Emami, H.; Derakhshan, F. Integrating Fuzzy K-Means, Particle Swarm Optimization, and Imperialist Competitive Algorithm for Data Clustering. Arab. J. Sci. Eng. 2015, 40, 3545–3554. [Google Scholar] [CrossRef]

- Nayak, S.; Panda, C.; Xalxo, Z.; Behera, H.S. An Integrated Clustering Framework Using Optimized K-means with Firefly and Canopies. In Computational Intelligence in Data Mining-Volume 2; Springer: New Delhi, India, 2015; pp. 333–343. [Google Scholar]

- Lloyd, S. Least squares quantization in PCM. IEEE Trans. Inf. Theory 1982, 28, 129–137. [Google Scholar] [CrossRef]

- Ratanavilisagul, C. A Novel Modified Particle Swarm Optimization Algorithm with Mutation for Data Clustering Problem. In Proceedings of the 5th International Conference on Computational Intelligence and Applications (ICCIA), Beijing, China, 19–21 June 2020; pp. 55–59. [Google Scholar]

- Paul, S.; De, S.; Dey, S. A Novel Approach of Data Clustering Using An Improved Particle Swarm Optimization Based K–Means Clustering Algorithm. In Proceedings of the 2020 IEEE International Conference on Electronics, Computing and Communication Technologies (CONECCT), Virtual, 2–4 July 2020; pp. 1–6. [Google Scholar]

- Jie, Y.; Yibo, S. The Study for Data Mining of Distribution Network Based on Particle Swarm Optimization with Clustering Algorithm Method. In Proceedings of the 2019 4th International Conference on Power and Renewable Energy (ICPRE), Chengdu, China, 21–23 September 2019; pp. 81–85. [Google Scholar]

- Chen, X.; Miao, P.; Bu, Q. Image Segmentation Algorithm Based on Particle Swarm Optimization with K-means Optimization. In Proceedings of the 2019 IEEE International Conference on Power, Intelligent Computing and Systems (ICPICS), Shenyang, China, 12–14 July 2019; pp. 156–159. [Google Scholar]

- Yang, X.S. Firefly Algorithms for Multimodal Optimization. In Proceedings of the International Symposium on Stochastic Algorithms, Sapporo, Japan, 26–28 October 2009; pp. 169–178. [Google Scholar]

- Xie, H.; Zhang, L.; Lim, C.P.; Yu, Y.; Liu, C.; Liu, H.; Walters, J. Improving K-means clustering with enhanced Firefly Algorithms. Appl. Soft Comput. 2019, 84, 105763. [Google Scholar] [CrossRef]

- Hassanzadeh, T.; Meybodi, M.R. A New Hybrid Approach for Data Clustering using Firefly Algorithm and K-Means. In Proceedings of the 16th CSI c (AISP 2012), Fars, Iran, 2–3 May 2012; pp. 007–011. [Google Scholar]

- Mathew, J.; Vijayakumar, R. Scalable Parallel Clustering Approach for Large Data using Parallel K Means and Firefly Algorithms. In Proceedings of the 2014 International Conference on High. Performance Computing and Applications (ICHPCA), Bhubaneswar, India, 22–24 December 2014; pp. 1–8. [Google Scholar]

- Nayak, J.; Kanungo, D.P.; Naik, B.; Behera, H.S. Evolutionary Improved Swarm-based Hybrid K-Means Algorithm for Cluster Analysis. In Proceedings of the Second International Conference on Computer and Communication Technologies; Springer: New Delhi, India, 2017; Volume 556, pp. 343–352. [Google Scholar]

- Behera, H.S.; Nayak, J.; Nanda, M.; Nayak, K. A novel hybrid approach for real world data clustering algorithm based on fuzzy C-means and firefly algorithm. Int. J. Fuzzy Comput. Model. 2015, 1, 431. [Google Scholar] [CrossRef]

- Nayak, J.; Naik, B.; Behera, H.S. Cluster Analysis Using Firefly-Based K-means Algorithm: A Combined Approach. In Computational Intelligence in Data Mining. Advances in Intelligent Systems and Computing; Behera, H., Mohapatra, D., Eds.; Springer: Singapore, 2017; Volume 556. [Google Scholar]

- Jitpakdee, P.; Aimmanee, P.; Uyyanonvara, B. A hybrid approach for color image quantization using k-means and firefly algorithms. World Acad. Sci. Eng. Technol. 2013, 77, 138–145. [Google Scholar]

- Kuo, R.; Li, P. Taiwanese export trade forecasting using firefly algorithm based K-means algorithm and SVR with wavelet transform. Comput. Ind. Eng. 2016, 99, 153–161. [Google Scholar] [CrossRef]

- Kaur, A.; Pal, S.K.; Singh, A.P. Hybridization of K-Means and Firefly Algorithm for intrusion detection system. Int. J. Syst. Assur. Eng. Manag. 2018, 9, 901–910. [Google Scholar] [CrossRef]

- Langari, R.K.; Sardar, S.; Mousavi, S.A.A.; Radfar, R. Combined fuzzy clustering and firefly algorithm for privacy preserving in social networks. Expert Syst. Appl. 2019, 141, 112968. [Google Scholar] [CrossRef]

- HimaBindu, G.; Kumar, C.R.; Hemanand, C.; Krishna, N.R. Hybrid clustering algorithm to process big data using firefly optimization mechanism. Mater. Today Proc. 2020. [Google Scholar] [CrossRef]

- Wu, L.; Peng, Y.; Fan, J.; Wang, Y.; Huang, G. A novel kernel extreme learning machine model coupled with K-means clustering and firefly algorithm for estimating monthly reference evapotranspiration in parallel computation. Agric. Water Manag. 2020, 245, 106624. [Google Scholar] [CrossRef]

- Yang, X.S.; Gandomi, A.H. Bat algorithm: A novel approach for global engineering optimization. Eng. Comput. 2012, 29, 464–483. [Google Scholar] [CrossRef] [Green Version]

- Sood, M.; Bansal, S. K-medoids clustering technique using bat algorithm. Int. J. Appl. Inf. Syst. 2013, 5, 20–22. [Google Scholar] [CrossRef]

- Tripathi, A.; Sharma, K.; Bala, M. Dynamic frequency based parallel k-bat algorithm for massive data clustering (DFBPKBA). Int. J. Syst. Assur. Eng. Manag. 2017, 9, 866–874. [Google Scholar] [CrossRef]

- Pavez, L.; Altimiras, F.; Villavicencio, G. A K-means Bat Algorithm Applied to the Knapsack Problem. In Proceedings of the Computational Methods in Systems and Software; Springer: Cham, Switzerland, 2020; pp. 612–621. [Google Scholar]

- Gan, J.E.; Lai, W.K. Automated Grading of Edible Birds Nest Using Hybrid Bat Algorithm Clustering Based on K-Means. In Proceedings of the 2019 IEEE International Conference on Automatic Control. and Intelligent Systems (I2CACIS), Kuala Lumpur, Malaysia, 19 June 2019; pp. 73–78. [Google Scholar]

- Chaudhary, R.; Banati, H. Hybrid enhanced shuffled bat algorithm for data clustering. Int. J. Adv. Intell. Paradig. 2020, 17, 323–341. [Google Scholar] [CrossRef]

- Yang, X.S. Flower pollination algorithm for global optimization. In Proceedings of the International Conference on Unconventional Computing and Natural Computation; Springer: Berlin/Heidelberg, Germany, 2012; pp. 240–249. [Google Scholar]

- Jensi, R.; Jiji, G.W. Hybrid data clustering approach using k-means and flower pollination algorithm. arXiv 2015, arXiv:1505.03236. [Google Scholar]

- Kumari, G.V.; Rao, G.S.; Rao, B.P. Flower pollination-based K-means algorithm for medical image compression. Int. J. Adv. Intell. Paradig. 2021, 18, 171–192. [Google Scholar] [CrossRef]

- Karaboga, D. An Idea Based on Honey Bee Swarm for Numerical Optimization; Technical Report-tr06; Erciyes University, Engineering Faculty, Computer Engineering Department: Kayseri, Turcia, 2005. [Google Scholar]

- Armano, G.; Farmani, M.R. Clustering Analysis with Combination of Artificial Bee Colony Algorithm and k-Means Technique. Int. J. Comput. Theory Eng. 2014, 6, 141–145. [Google Scholar] [CrossRef] [Green Version]

- Tran, D.C.; Wu, Z.; Wang, Z.; Deng, C. A Novel Hybrid Data Clustering Algorithm Based on Artificial Bee Colony Algorithm and K-Means. Chin. J. Electron. 2015, 24, 694–701. [Google Scholar] [CrossRef]

- Bonab, M.B.; Hashim, S.Z.M.; Alsaedi, A.K.Z.; Hashim, U.R. Modified K-Means Combined with Artificial Bee Colony Algorithm and Differential Evolution for Color Image Segmentation. In Computational Intelligence in Information Systems; Springer: Cham, Switzerland, 2015; pp. 221–231. [Google Scholar]

- Jin, Q.; Lin, N.; Zhang, Y. K-Means Clustering Algorithm Based on Chaotic Adaptive Artificial Bee Colony. Algorithms 2021, 14, 53. [Google Scholar] [CrossRef]

- Dasu, M.V.; Reddy, P.V.N.; Reddy, S.C.M. Classification of Remote Sensing Images Based on K-Means Clustering and Artificial Bee Colony Optimization. In Advances in Cybernetics, Cognition, and Machine Learning for Communication Technologies; Springer: Singapore, 2020; pp. 57–65. [Google Scholar]

- Huang, S.C. Color Image Quantization Based on the Artificial Bee Colony and Accelerated K-means Algorithms. Symmetry 2020, 12, 1222. [Google Scholar] [CrossRef]

- Wang, X.; Yu, H.; Lin, Y.; Zhang, Z.; Gong, X. Dynamic Equivalent Modeling for Wind Farms with DFIGs Using the Artificial Bee Colony With K-Means Algorithm. IEEE Access 2020, 8, 173723–173731. [Google Scholar] [CrossRef]

- Cao, L.; Xue, D. Research on modified artificial bee colony clustering algorithm. In Proceedings of the 2015 International Conference on Network and Information Systems for Computers, Wuhan, China, 13–25 January 2015; pp. 231–235. [Google Scholar]

- Mirjalili, S.; Mirjalili, S.M.; Lewis, A. Grey wolf optimizer. Adv. Eng. Soft. 2014, 69, 46–61. [Google Scholar] [CrossRef] [Green Version]

- Katarya, R.; Verma, O.P. Recommender system with grey wolf optimizer and FCM. Neural Comput. Appl. 2016, 30, 1679–1687. [Google Scholar] [CrossRef]

- Korayem, L.; Khorsid, M.; Kassem, S. A Hybrid K-Means Metaheuristic Algorithm to Solve a Class of Vehicle Routing Problems. Adv. Sci. Lett. 2015, 21, 3720–3722. [Google Scholar] [CrossRef]

- Pambudi, E.A.; Badharudin, A.Y.; Wicaksono, A.P. Enhanced K-Means by Using Grey Wolf Optimizer for Brain MRI Segmentation. ICTACT J. Soft Comput. 2021, 11, 2353–2358. [Google Scholar]

- Mohammed, H.M.; Abdul, Z.K.; Rashid, T.A.; Alsadoon, A.; Bacanin, N. A new K-means gray wolf algorithm for engineering problems. World J. Eng. 2021. [Google Scholar] [CrossRef]

- Mirjalili, S. SCA: A Sine Cosine Algorithm for solving optimization problems. Knowl. Based Syst. 2016, 96, 120–133. [Google Scholar] [CrossRef]

- Moorthy, R.S.; Pabitha, P. A Novel Resource Discovery Mechanism using Sine Cosine Optimization Algorithm in Cloud. In Proceedings of the 4th International Conference on Intelligent Computing and Control. Systems (ICICCS), Madurai, India, 13–15 May 2020; pp. 742–746. [Google Scholar]

- Yang, X.S.; Deb, S. Cuckoo Search via Lévy Flights. In Proceedings of the 2009 World Congress on Nature & Biologically Inspired Computing (NaBIC), Coimbatore, India, 9–11 December 2009; pp. 210–214. [Google Scholar]

- Ye, S.; Huang, X.; Teng, Y.; Li, Y. K-Means Clustering Algorithm based on Improved Cuckoo Search Algorithm and its Application. In Proceedings of the 2018 IEEE 3rd International Conference on Big Data Analysis (ICBDA), Shanghai, China, 9–12 March 2018; pp. 422–426. [Google Scholar]

- Saida, I.B.; Kamel, N.; Omar, B. A New Hybrid Algorithm for Document Clustering based on Cuckoo Search and K-Means. In Recent Advances on Soft Computing and Data Mining; Springer: Cham, Swizterland, 2014; pp. 59–68. [Google Scholar]

- Girsang, A.S.; Yunanto, A.; Aslamiah, A.H. A Hybrid Cuckoo Search and K-Means for Clustering Problem. In Proceedings of the 2017 International Conference on Electrical Engineering and Computer Science (ICECOS), Palembang, Indonesia, 22–23 August 2017; pp. 120–124. [Google Scholar]

- Zeng, L.; Xie, X. Collaborative Filtering Recommendation Based On CS-Kmeans Optimization Clustering. In Proceedings of the 2019 4th International Conference on Intelligent Information Processing, Wuhan, China, 16–17 November 2019; pp. 334–340. [Google Scholar]

- Tarkhaneh, O.; Isazadeh, A.; Khamnei, H.J. A new hybrid strategy for data clustering using cuckoo search based on Mantegna levy distribution, PSO and k-means. Int. J. Comput. Appl. Technol. 2018, 58, 137–149. [Google Scholar] [CrossRef]

- Singh, S.P.; Solanki, S. A Movie Recommender System Using Modified Cuckoo Search. In Emerging Research in Electronics, Computer Science and Technology; Springer: Singapore, 2019; pp. 471–482. [Google Scholar]

- Arjmand, A.; Meshgini, S.; Afrouzian, R.; Farzamnia, A. Breast Tumor Segmentation Using K-Means Clustering and Cuckoo Search Optimization. In Proceedings of the 9th International Conference on Computer and Knowledge Engineering (ICCKE), Virtual, 24–25 October 2019; pp. 305–308. [Google Scholar]

- García, J.; Yepes, V.; Martí, J.V. A Hybrid k-Means Cuckoo Search Algorithm Applied to the Counterfort Retaining Walls Problem. Mathematics 2020, 8, 555. [Google Scholar] [CrossRef]

- Binu, D.; Selvi, M.; George, A. MKF-Cuckoo: Hybridization of Cuckoo Search and Multiple Kernel-based Fuzzy C-means Algorithm. AASRI Procedia 2013, 4, 243–249. [Google Scholar] [CrossRef]

- Manju, V.N.; Fred, A.L. An efficient multi balanced cuckoo search K-means technique for segmentation and compression of compound images. Multimed. Tools Appl. 2019, 78, 14897–14915. [Google Scholar] [CrossRef]

- Deepa, M.; Sumitra, P. Intrusion Detection System Using K-Means Based on Cuckoo Search Optimization. IOP Conf. Ser. Mater. Sci. Eng. 2020, 993, 012049. [Google Scholar] [CrossRef]

- Storn, R.; Price, K. Differential evolution–a simple and efficient heuristic for global optimization over continuous spaces. J. Glob. Optim. 1997, 11, 341–359. [Google Scholar] [CrossRef]

- Brest, J.; Maučec, M.S. Population size reduction for the differential evolution algorithm. Appl. Intell. 2007, 29, 228–247. [Google Scholar] [CrossRef]

- Kwedlo, W. A clustering method combining differential evolution with the K-means algorithm. Pattern Recognit. Lett. 2011, 32, 1613–1621. [Google Scholar] [CrossRef]

- Cai, Z.; Gong, W.; Ling, C.X.; Zhang, H. A clustering-based differential evolution for global optimization. Appl. Soft Comput. 2011, 11, 1363–1379. [Google Scholar] [CrossRef]

- Kuo, R.J.; Suryani, E.; Yasid, A. Automatic clustering combining differential evolution algorithm and k-means algorithm. In Proceedings of the Institute of Industrial Engineers Asian Conference 2013; Springer: Singapore, 2013; pp. 1207–1215. [Google Scholar]

- Sierra, L.M.; Cobos, C.; Corrales, J.C. Continuous Optimization based on a Hybridization of Differential Evolution with K-Means. In IBERO-American Conference on Artificial Intelligence; Springer: Cham, Switzerland, 2014; pp. 381–392. [Google Scholar]

- Hu, J.; Wang, C.; Liu, C.; Ye, Z. Improved K-Means Algorithm based on Hybrid Fruit Fly Optimization and Differential Evolution. In Proceedings of the 12th International Conference on Computer Science and Education (ICCSE), Houston, TX, USA, 22–25 August 2017; pp. 464–467. [Google Scholar]

- Wang, F. A Weighted K-Means Algorithm based on Differential Evolution. In Proceedings of the 2nd IEEE Advanced Information Management, Communicates, Electronic and Automation Control. Conference (IMCEC), Xi’an, China, 25–27 May 2018; pp. 1–2274. [Google Scholar]

- Silva, J.; Lezama, O.B.P.; Varela, N.; Guiliany, J.G.; Sanabria, E.S.; Otero, M.S.; Rojas, V. U-Control Chart Based Differential Evolution Clustering for Determining the Number of Cluster in k-Means. In International Conference on Green, Pervasive, and Cloud Computing; Springer: Cham, Switzerland, 2019; pp. 31–41. [Google Scholar]

- Sheng, W.; Wang, X.; Wang, Z.; Li, Q.; Zheng, Y.; Chen, S. A Differential Evolution Algorithm with Adaptive Niching and K-Means Operation for Data Clustering. IEEE Trans. Cybern. 2020, 1–15. [Google Scholar] [CrossRef]

- Mustafi, D.; Mustafi, A.; Sahoo, G. A novel approach to text clustering using genetic algorithm based on the nearest neighbour heuristic. Int. J. Comput. Appl. 2020, 1–13. [Google Scholar] [CrossRef]

- Mehrabian, A.; Lucas, C. A novel numerical optimization algorithm inspired from weed colonization. Ecol. Inform. 2006, 1, 355–366. [Google Scholar] [CrossRef]

- Fan, C.; Zhang, T.; Yang, Z.; Wang, L. A Text Clustering Algorithm Hybriding Invasive Weed Optimization with K-Means. In Proceedings of the 12th International Conference on Autonomic and Trusted Computing and 2015 IEEE 15th International Conference on Scalable Computing and Communications and Its Associated Workshops (UIC-ATC-ScalCom), Beijing, China, 10–14 August 2015; pp. 1333–1338. [Google Scholar] [CrossRef]

- Pan, G.; Li, K.; Ouyang, A.; Zhou, X.; Xu, Y. A hybrid clustering algorithm combining cloud model IWO and k-means. Int. J. Pattern Recogn. Artif. Intell. 2014, 28, 1450015. [Google Scholar] [CrossRef]

- Boobord, F.; Othman, Z.; Abubakar, A. PCAWK: A Hybridized Clustering Algorithm Based on PCA and WK-means for Large Size of Dataset. Int. J. Adv. Soft Comput. Appl. 2015, 7, 3. [Google Scholar]

- Razi, F.F. A hybrid DEA-based K-means and invasive weed optimization for facility location problem. J. Ind. Eng. Int. 2018, 15, 499–511. [Google Scholar] [CrossRef] [Green Version]

- Atashpaz-Gargari, E.; Lucas, C. Imperialist Competitive Algorithm: An Algorithm for Optimization Inspired by Imperialistic Competition. In Proceedings of the 2007 IEEE Congress on Evolutionary Computation, Singapore, 25–28 September 2007. [Google Scholar]

- Niknam, T.; Fard, E.T.; Pourjafarian, N.; Rousta, A. An efficient hybrid algorithm based on modified imperialist competitive algorithm and K-means for data clustering. Eng. Appl. Artif. Intell. 2011, 24, 306–317. [Google Scholar] [CrossRef]

- Abdeyazdan, M. Data clustering based on hybrid K-harmonic means and modifier imperialist competitive algorithm. J. Supercomput. 2014, 68, 574–598. [Google Scholar] [CrossRef]

- Forsati, R.; Meybodi, M.; Mahdavi, M.; Neiat, A. Hybridization of K-Means and Harmony Search Methods for Web Page Clustering. In Proceedings of the 2008 IEEE/WIC/ACM International Conference on Web Intelligence and Intelligent Agent Technology, Melbourne, Australia, 14–17 December 2020; Volume 1, pp. 329–335. [Google Scholar]

- Mahdavi, M.; Abolhassani, H. Harmony K-means algorithm for document clustering. Data Min. Knowl. Discov. 2008, 18, 370–391. [Google Scholar] [CrossRef]

- Cobos, C.; Andrade, J.; Constain, W.; Mendoza, M.; León, E. Web document clustering based on Global-Best Harmony Search, K-means, Frequent Term Sets and Bayesian Information Criterion. In Proceedings of the IEEE Congress on Evolutionary Computation, New Orleans, LA, USA, 5–8 June 2011; pp. 1–8. [Google Scholar]

- Chandran, L.P.; Nazeer, K.A.A. An improved clustering algorithm based on K-means and harmony search optimization. In Proceedings of the 2011 IEEE Recent Advances in Intelligent Computational Systems, Trivandrum, India, 22–24 September 2011; pp. 447–450. [Google Scholar]

- Nazeer, K.A.; Sebastian, M.; Kumar, S.M. A novel harmony search-K means hybrid algorithm for clustering gene expression data. Bioinformation 2013, 9, 84–88. [Google Scholar] [CrossRef] [PubMed]

- Raval, D.; Raval, G.; Valiveti, S. Optimization of Clustering Process for WSN with Hybrid Harmony Search and K-Means Algorithm. In Proceedings of the 2016 International Conference on Recent Trends in Information Technology (ICRTIT), Chennai, India, 8–9 April 2016; pp. 1–6. [Google Scholar]

- Kim, S.; Ebay, S.K.; Lee, B.; Kim, K.; Youn, H.Y. Load Balancing for Distributed SDN with Harmony Search. In Proceedings of the 2019 16th IEEE Annual Consumer Communications & Networking Conference (CCNC), Las Vegas, NV, USA, 11–14 January 2019; pp. 1–2. [Google Scholar]

- Hatamlou, A. Black hole: A new heuristic optimization approach for data clustering. Inf. Sci. 2013, 222, 175–184. [Google Scholar] [CrossRef]

- Tsai, C.W.; Hsieh, C.H.; Chiang, M.C. Parallel Black Hole Clustering based on MapReduce. In Proceedings of the 2015 IEEE International Conference on Systems, Man, and Cybernetics, Hong Kong, China, 9–12 October 2015; pp. 2543–2548. [Google Scholar]

- Abdulwahab, H.A.; Noraziah, A.; Alsewari, A.A.; Salih, S.Q. An Enhanced Version of Black Hole Algorithm via Levy Flight for Optimization and Data Clustering Problems. IEEE Access 2019, 7, 142085–142096. [Google Scholar] [CrossRef]

- Eskandarzadehalamdary, M.; Masoumi, B.; Sojodishijani, O. A New Hybrid Algorithm based on Black Hole Optimization and Bisecting K-Means for Cluster Analysis. In Proceedings of the 22nd Iranian Conference on Electrical Engineering (ICEE), Tehran, Iran, 20–22 May 2014; pp. 1075–1079. [Google Scholar]

- Pal, S.S.; Pal, S. Black Hole and k-Means Hybrid Clustering Algorithm. In Computational Intelligence in Data Mining; Springer: Singapore, 2020; pp. 403–413. [Google Scholar]

- Feng, L.; Wang, X.; Chen, D. Image Classification Based on Improved Spatial Pyramid Matching Model. In International Conference on Intelligent Computing; Springer: Cham, Switzerland, 2018; pp. 153–164. [Google Scholar]

- Jiang, Y.; Peng, H.; Huang, X.; Zhang, J.; Shi, P. A novel clustering algorithm based on P systems. Int. J. Innov. Comput. Inf. Control 2014, 10, 753–765. [Google Scholar]

- Jiang, Z.; Zang, W.; Liu, X. Research of K-Means Clustering Method based on DNA Genetic Algorithm and P System. In International Conference on Human Centered Computing; Springer: Cham, Switzerland, 2016; pp. 193–203. [Google Scholar]

- Zhao, D.; Liu, X. A Genetic K-means Membrane Algorithm for Multi-relational Data Clustering. In Proceedings of the International Conference on Human Centered Computing, Colombo, Sri Lanka, 7–9 January 2016; pp. 954–959. [Google Scholar]

- Xiang, W.; Liu, X. A New P System with Hybrid MDE-k-Means Algorithm for Data Clustering. 2016. Available online: http://www.wseas.us/journal/pdf/computers/2016/a145805-1077.pdf (accessed on 21 October 2021).

- Zhao, Y.; Liu, X.; Zhang, H. The K-Medoids Clustering Algorithm with Membrane Computing. TELKOMNIKA Indones. J. Electr. Eng. 2013, 11, 2050–2057. [Google Scholar] [CrossRef]

- Wang, S.; Xiang, L.; Liu, X. A Hybrid Approach Optimized by Tissue-Like P System for Clustering. In International Conference on Intelligent Science and Big Data Engineering; Springer: Cham, Switzerland, 2018; pp. 423–432. [Google Scholar]

- Wang, S.; Liu, X.; Xiang, L. An improved initialisation method for K-means algorithm optimised by Tissue-like P system. Int. J. Parallel Emergent Distrib. Syst. 2019, 36, 3–10. [Google Scholar] [CrossRef]

- Mirjalili, S. Dragonfly algorithm: A new meta-heuristic optimization technique for solving single-objective, discrete, and multi-objective problems. Neural Comput. Appl. 2016, 27, 1053–1073. [Google Scholar] [CrossRef]

- Angelin, B. A Roc Curve Based K-Means Clustering for Outlier Detection Using Dragon Fly Optimization. Turk. J. Compu. Math. Educ. 2021, 12, 467–476. [Google Scholar]

- Kumar, J.T.; Reddy, Y.M.; Rao, B.P. WHDA-FCM: Wolf Hunting-Based Dragonfly With Fuzzy C-Mean Clustering for Change Detection in SAR Images. Comput. J. 2019, 63, 308–321. [Google Scholar] [CrossRef]

- Majhi, S.K.; Biswal, S. Optimal cluster analysis using hybrid K-Means and Ant Lion Optimizer. Karbala Int. J. Mod. Sci. 2018, 4, 347–360. [Google Scholar] [CrossRef]

- Chen, J.; Qi, X.; Chen, L.; Chen, F.; Cheng, G. Quantum-inspired ant lion optimized hybrid k-means for cluster analysis and intrusion detection. Knowl.-Based Syst. 2020, 203, 106167. [Google Scholar] [CrossRef]

- Murugan, T.M.; Baburaj, E. Alpsoc Ant Lion*: Particle Swarm Optimized Hybrid K-Medoid Clustering. In Proceedings of the 2020 International Conference on Smart Technologies in Computing, Electrical and Electronics (ICSTCEE), Bengaluru, India, 9–10 October 2020; pp. 145–150. [Google Scholar]

- Naem, A.A.; Ghali, N.I. Optimizing community detection in social networks using antlion and K-median. Bull. Electr. Eng. Inform. 2019, 8, 1433–1440. [Google Scholar] [CrossRef]

- Dhand, G.; Sheoran, K. Protocols SMEER (Secure Multitier Energy Efficient Routing Protocol) and SCOR (Secure Elliptic curve based Chaotic key Galois Cryptography on Opportunistic Routing). Mater. Today Proc. 2020, 37, 1324–1327. [Google Scholar] [CrossRef]

- Cuevas, E.; Cienfuegos, M.; Zaldívar, D.; Pérez-Cisneros, M. A swarm optimization algorithm inspired in the behavior of the social-spider. Expert Syst. Appl. 2013, 40, 6374–6384. [Google Scholar] [CrossRef] [Green Version]

- Chandran, T.R.; Reddy, A.V.; Janet, B. Performance Comparison of Social Spider Optimization for Data Clustering with Other Clustering Methods. In Proceedings of the 2018 Second International Conference on Intelligent Computing and Control. Systems (ICICCS), Madurai, India, 14–15 June 2018; pp. 1119–1125. [Google Scholar]

- Thiruvenkatasuresh, M.P.; Venkatachalam, V. Analysis and evaluation of classification and segmentation of brain tumour images. Int. J. Biomed. Eng. Technol. 2019, 30, 153–178. [Google Scholar] [CrossRef]

- Xing, B.; Gao, W.J. Fruit fly optimization algorithm. In Innovative Computational Intelligence: A Rough Guide to 134 Clever Algorithms; Springer: Cham, Switzerland, 2014; pp. 167–170. [Google Scholar]

- Sharma, V.K.; Patel, R. Unstructured Data Clustering using Hybrid K-Means and Fruit Fly Optimization (KMeans-FFO) algorithm. Int. J. Comput. Sci. Inf. Secur. (IJCSIS) 2020, 18. [Google Scholar]

- Jiang, X.Y.; Pa, N.Y.; Wang, W.C.; Yang, T.T.; Pan, W.T. Site Selection and Layout of Earthquake Rescue Center Based on K-Means Clustering and Fruit Fly Optimization Algorithm. In Proceedings of the 2020 IEEE International Conference on Artificial Intelligence and Computer Applications (ICAICA), Dalian, China, 27–29 June 2020; pp. 1381–1389. [Google Scholar]

- Gowdham, D.; Thangavel, K.; Kumar, E.S. Fruit Fly K-Means Clustering Algorithm. Int. J. Scient. Res. Sci. Eng. Technol. 2016, 2, 156–159. [Google Scholar]

- Wang, Q.; Zhang, Y.; Xiao, Y.; Li, J. Kernel-based Fuzzy C-Means Clustering based on Fruit Fly Optimization Algorithm. In Proceedings of the 2017 International Conference on Grey Systems and Intelligent Services (GSIS), Stockholm, Sweden, 8–11 August 2017; pp. 251–256. [Google Scholar]

- Drias, H.; Sadeg, S.; Yahi, S. Cooperative Bees Swarm for Solving the Maximum Weighted Satisfiability Problem. In International Work-Conference on Artificial Neural Networks; Springer: Berlin/Heidelberg, Germany, 2005; pp. 318–325. [Google Scholar]

- Djenouri, Y.; Belhadi, A.; Belkebir, R. Bees swarm optimization guided by data mining techniques for document information retrieval. Expert Syst. Appl. 2018, 94, 126–136. [Google Scholar] [CrossRef]

- Aboubi, Y.; Drias, H.; Kamel, N. BSO-CLARA: Bees Swarm Optimization for Clustering Large Applications. In International Conference on Mining Intelligence and Knowledge Exploration; Springer: Cham, Switzerland, 2015; pp. 170–183. [Google Scholar]

- Djenouri, Y.; Habbas, Z.; Aggoune-Mtalaa, W. Bees Swarm Optimization Metaheuristic Guided by Decomposition for Solving MAX-SAT. ICAART 2016, 2, 472–479. [Google Scholar]

- Li, M.; Yang, C.W. Bacterial colony optimization algorithm. Control Theory Appl. 2011, 28, 223–228. [Google Scholar]

- Revathi, J.; Eswaramurthy, V.P.; Padmavathi, P. Hybrid data clustering approaches using bacterial colony optimization and k-means. IOP Conf. Ser. Mater. Sci. Eng. 2021, 1070, 012064. [Google Scholar] [CrossRef]

- Vijayakumari, K.; Deepa, V.B. Hybridization of Fuzzy C-Means with Bacterial Colony Optimization. 2019. Available online: http://infokara.com/gallery/118-dec-3416.pdf (accessed on 18 September 2021).

- Al-Rifaie, M.M.; Bishop, J.M. Stochastic Diffusion Search Review. Paladyn J. Behav. Robot. 2013, 4, 155–173. [Google Scholar] [CrossRef] [Green Version]

- Karthik, J.; Tamizhazhagan, V.; Narayana, S. Data leak identification using scattering search K Means in social networks. Mater. Today Proc. 2021. [Google Scholar] [CrossRef]

- Fathian, M.; Amiri, B.; Maroosi, A. Application of honey-bee mating optimization algorithm on clustering. Appl. Math. Comput. 2007, 190, 1502–1513. [Google Scholar] [CrossRef]

- Teimoury, E.; Gholamian, M.R.; Masoum, B.; Ghanavati, M. An optimized clustering algorithm based on K-means using Honey Bee Mating algorithm. Sensors 2016, 16, 1–19. [Google Scholar]

- Aghaebrahimi, M.R.; Golkhandan, R.K.; Ahmadnia, S. Localization and Sizing of FACTS Devices for Optimal Power Flow in a System Consisting Wind Power using HBMO. In Proceedings of the 18th Mediterranean Electrotechnical Conference (MELECON), Athens, Greece, 18–20 April 2016; pp. 1–7. [Google Scholar]

- Obagbuwa, I.C.; Adewumi, A. An Improved Cockroach Swarm Optimization. Sci. World J. 2014, 2014, 1–13. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Senthilkumar, G.; Chitra, M.P. A Novel Hybrid Heuristic-Metaheuristic Load Balancing Algorithm for Resource Allocationin IaaS-Cloud Computing. In Proceedings of the Third International Conference on Smart Systems and Inventive Technology, Tirunelveli, India, 20–22 August 2020; pp. 351–358. [Google Scholar]

- Aljarah, I.; Ludwig, S.A. A New Clustering Approach based on Glowworm Swarm Optimization. In Proceedings of the 2013 IEEE Congress on Evolutionary Computation, Cancun, Mexico, 20–23 June 2013; pp. 2642–2649. [Google Scholar]

- Zhou, Y.; Ouyang, Z.; Liu, J.; Sang, G. A novel K-means image clustering algorithm based on glowworm swarm optimization. Przegląd Elektrotechniczny 2012, 266–270. Available online: http://pe.org.pl/articles/2012/8/66.pdf (accessed on 11 July 2021).

- Onan, A.; Korukoglu, S. Improving Performance of Glowworm Swarm Optimization Algorithm for Cluster Analysis using K-Means. In International Symposium on Computing in Science & Engineering Proceedings; GEDIZ University, Engineering and Architecture Faculty: Ankara, Turkey, 2013; p. 291. [Google Scholar]

- Tang, Y.; Wang, N.; Lin, J.; Liu, X. Using Improved Glowworm Swarm Optimization Algorithm for Clustering Analysis. In Proceedings of the 18th International Symposium on Distributed Computing and Applications for Business Engineering and Science (DCABES), Wuhan, China, 8–10 November 2019; pp. 190–194. [Google Scholar]

- Teodorović, D. Bee colony optimization (BCO). In Innovations in Swarm Intelligence; Springer: Berlin/Heidelberg, Germany, 2009; pp. 39–60. [Google Scholar]

- Das, P.; Das, D.K.; Dey, S. A modified Bee Colony Optimization (MBCO) and its hybridization with k-means for an application to data clustering. Appl. Soft Comput. 2018, 70, 590–603. [Google Scholar] [CrossRef]

- Forsati, R.; Keikha, A.; Shamsfard, M. An improved bee colony optimization algorithm with an application to document clustering. Neurocomputing 2015, 159, 9–26. [Google Scholar] [CrossRef]

- Yang, C.-L.; Sutrisno, H. A clustering-based symbiotic organisms search algorithm for high-dimensional optimization problems. Appl. Soft Comput. 2020, 97, 106722. [Google Scholar] [CrossRef]

- Zhang, D.; Leung, S.C.; Ye, Z. A Decision Tree Scoring Model based on Genetic Algorithm and k-Means Algorithm. In Proceedings of the Third International Conference on Convergence and Hybrid Information Technology, Busan, Korea, 11–13 November 2008; Volume 1, pp. 1043–1047. [Google Scholar]

- Patel, R.; Raghuwanshi, M.M.; Jaiswal, A.N. Modifying Genetic Algorithm with Species and Sexual Selection by using K-Means Algorithm. In Proceedings of the 2009 IEEE International Advance Computing Conference, Patiala, India, 6–7 March 2009; pp. 114–119. [Google Scholar]

- Niu, B.; Duan, Q.; Liang, J. Hybrid Bacterial Foraging Algorithm for Data Clustering. In International Conference on Intelligent Data Engineering and Automated Learning; Springer: Berlin/Heidelberg, Germany, 2013; pp. 577–584. [Google Scholar]

- Karimkashi, S.; Kishk, A.A. Invasive Weed Optimization and its Features in Electromagnetics. IEEE Trans. Antennas Propag. 2010, 58, 1269–1278. [Google Scholar] [CrossRef]

- Charon, I.; Hudry, O. The noising method: A new method for combinatorial optimization. Oper. Res. Lett. 1993, 14, 133–137. [Google Scholar] [CrossRef]

- Arthur, D.; Vassilvitskii, S. K-means++: The advantages of careful seeding. In Proceedings of the 18th Annual ACM-SIAM Symposium on Discrete Algorithms, New Orleans, LA, USA, 7–9 January 2007. [Google Scholar]

- Pykett, C. Improving the efficiency of Sammon’s nonlinear mapping by using clustering archetypes. Electron. Lett. 1978, 14, 799–800. [Google Scholar] [CrossRef]

- Lee, R.C.T.; Slagle, J.R.; Blum, H. A triangulation method for the sequential mapping of points from N-space to two-space. IEEE Trans. Comput. 1977, 26, 288–292. [Google Scholar] [CrossRef]

- McCallum, A.; Nigam, K.; Ungar, L.H. Efficient clustering of high-dimensional data sets with application to reference matching. In Proceedings of the Sixth ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, Boston, MA, USA, 1 August 2000; pp. 169–178. [Google Scholar]

- Wikaisuksakul, S. A multi-objective genetic algorithm with fuzzy c-means for automatic data clustering. Appl. Soft Comput. 2014, 24, 679–691. [Google Scholar] [CrossRef]

- Neath, A.A.; Cavanaugh, J.E. The Bayesian information criterion: Background, derivation, and applications. Wiley Interdiscip. Rev. Comput. Stat. 2012, 4, 199–203. [Google Scholar] [CrossRef]

- Davies, D.L.; Bouldin, D.W. A cluster separation measure. IEEE Trans. Pattern Anal. Mach. Intell. 1979, 224–227. [Google Scholar] [CrossRef]

| Metaheuristic Algorithm | Objective | Application | Method for Automatic Clustering | MOA Role | K-Means Role | Dataset Used for Testing | Compared with | Performance Measure | |||

|---|---|---|---|---|---|---|---|---|---|---|---|

| Genetic Algorithm (GA) | |||||||||||

| 1 | Zhou et al., [45]-NoiseClust | Niche Genetic Algorithm (NGA) and K-means++ | Automatic | Global Positioning System | Density-Based Method | Adaptive probabilities of crossover and mutation | |||||

| 2 | Dai, Jiao & He, [28]-PGAClust | Parallel Genetic Algorithm (PGA) and K-means | Automatic | Dynamic mining of cluster number | |||||||

| 3 | Li et al. [40] | GA and K-means | Automatic | Adopting a k-value learning algorithm using GA | |||||||

| 4 | Kuo et al. [39]-SOM+GKA | SOM and Modified GKA | Automatic | Market Segmentation in Electronic Commerce | Self-Organizing Feature Maps (SOM) neural networks | SOM+K, K-means | Within Cluster Variations (SSW) and number of misclassifications | ||||

| 5 | Eshlaghy & Razi [42] | Grey-based K-means and GA | Non-Automatic | Research and Project selection and management | Project allocation selection | clustering of different projects | SSE | ||||

| 6 | Sheng, Tucker & Liu [30]-NGKA | Niche Genetic Algorithm (NGA) + one step of K-means | Improving GA optimization procedure for general clustering | Non-Automatic | Gene Expression | Gene Expression Data (Subcellcycle_384 subcellcycle_2945 data, Serum data Subcancer data | GGA and GKA | Sum of Square Error (SSE) | |||

| 7 | Karegowda et al. [41] | K-means + GA | Avoidance of random selection of cluster centers | Non-Automatic | Medical Data Mining | initial cluster center assignment | Clustering of dataset | PIMA Indian diabetic dataset | Classification error and execution time | ||

| 8 | Bandyopadhyay & Maulik [24]-KGA | GA + K-means | Escape from local optimum convergence | Non-Automatic | Satellite image classification | GA perturb the system to avoid local convergence | determining new cluster center for each generation | Artificial Data Real-life data sets (Vowel data, iris data, Crude oil data) | K-means and GA-Clustering | ||

| 9 | Cheng et al. [25]-PGKA | Prototype Embedded GA + K-Means | Encoding Cluster prototypes for general GA clustering | Non-Automatic | SKY testing data | K-Means, GKA and FGKA | Ga based criteria | ||||

| 10 | Zhang & Zhou [35]-Nclust | novel niching genetic algorithm (NNGA) + K-means | Finding better cluster number automatically | Automatic | General Cluster Analysis | Improved canopy and K-means ++ | UCI dataset | GAK and GenClust | SSE, DBIPBM, COSEC, ARI and SC | ||

| 11 | Ghezelbash, Maghsoudi & Carranza [38] -Hybrid GKMC | Genetic K-means clustering + Traditional K-means algorithm | General Improvement of K-Means | Non-Automatic | Geochemical Anomaly Detection from stream sediments | Determining cluster center locations | General Clustering | GSI Analytical Data | Traditional K-Means Clustering (TKMC) | Prediction rate curve based on a defined fitness function | |

| 12 | Mohammadrezapou, Kisi & Pourahmadm [46] | K-means + Genetic Algorithm and Fuzzy C-Means + Genetic Algorithm | Avoidance of random selection of cluster centers | Automatic | Homogeneous regions of groundwater quality identification | GA method | Determining the optimum number of clusters | General Clustering | Av. Silhouette width Index; Levene’s homogeneity test; Schuler & Wilcox classification; Piper’s diagram | ||

| 13 | El-Shorbagy et al. [37] | K-means + GA | Combining the advantages of K-means and GA in general clustering | Non-Automatic | Electrical Distribution system | GA Clustering with a new mutation | GA population Initialization for best cluster centers | UCI dataset | K-means Clustering and GA-Clustering | ||

| 14 | Barekatain, Dehghani & Pourzaferani [44] | K-means + Improved GA | New cluster-based routing protocols | Automatic | Energy Reduction and Extension of network Lifetime | Determine the optimum number of clusters | Dynamic clustering of the network | Ns2Network (Fedora10) | Other network routing protocols, i.e., LEACH, GABEEC and GAEEP. | ||

| 15 | Lu et al. [43] | K-means + GA | Combining the advantages of the two algorithms | Non-Automatic | Multiple Travelling Salesman Problem | ||||||

| 16 | Sinha & Jana [33] | GA with Mahalanobis distance + K-Means with K-means++ Initialization | clustering algorithm for distributed dataset | Automatic | GA method | Initial clustering using GA method | Fine-tuning the result obtained from GA clustering | Breast Cancer Iris Glass Yeast) | Map Reduced-based Algorithms, MRk-means, parallel K-means and scaling GA | Davies-Bouldin index, Fisher’s discriminant ratio, Sum of the squared differences | |

| 17 | Laszlo & Mukherjee [26] | GA + K-means | improving GA-based clustering method | Non-Automatic | Evolving initial cluster centers using hyper-quad tree | To return the fitness value of a chromosome | GTD, BTD, BPZ, TSP-LIB-1060 TSP-LIB-3038; Simulated dataset | GA-based clustering, J-Means | |||

| 18 | Zhang, Leung & Ye [199] | GA + K-means | Improving accuracy | Non-Automatic | Credit Scoring | Reduce data attribute’s redundancy | Removal of noise data | German credit dataset Australian credit dataset | C4.5, BPN, GP, SVM+GA and RSC | ||

| 19 | Kapil, Chawla & Ansari [3] | K-means + GA | Optimizing the K-means | Automatic | General Cluster Analysis | GA method | Generating initial cluster centers | Basic K-means clustering | Online User dataset | K-means | SSE |

| 20 | Rahman & Islam [32]-GENCLUST | GA + K-means | Finds cluster number with high-quality centers | Automatic | General Cluster Analysis | Deterministic selection of Initial Genes | Generating initial cluster centers | Basic K-means clustering | UCI dataset | RUDAW, AGCUK, GAGR, GFCM and SABC | Xie-Beni Index, SSE, COSEC, F-Measure, Entropy, and Purity |

| 21 | Islam et al. [34] GENCLUST++ | GA + K-means | Computational complexity reduction | Automatic | General Cluster Analysis | GA method | Generating initial cluster centers | Basic K-means clustering | UCI dataset | GENCLUST-H, GENCLUST-F, AGCUK, GAGR, K-Means | GA Performance Measure |

| 22 | Mustafi & Sahoo [36] | GA + DE + K-means | Choice of initial centroid | Automatic | Clustering Text Document | Using DE | Generating initial cluster centers | Basic K-means clustering | Corpus | K-Means | Standard clustering validity parameters |

| 23 | Laszlo & Mukherjee [27] | GA + K-means | Superior Partitioning | Non-Automatic | German credit dataset Australian credit dataset | ||||||

| 24 | Patel, Raghuwanshi & Jaiswal [200]-GAS3KM | GAS3 + K-means | Improving the performance of GAS3 | Automatic | GA method | Generating initial cluster centers | Basic K-means clustering | Unconstrained unimodal and multi-modal functions with or without epistasis among n-variables. | GAS3 | GA Performance Measure | |

| 25 | Xiao, Yan, Zhang, & Tang [31]-KMQGA | K-means + QGA | Quantum inspired GA for K-means | Automatic | GA method | Generating initial cluster centers | Basic K-means clustering | Simulated datasets Glass, Wine, SPECTF-Heart, Iris | KMVGA (Variable String Length Genetic Algorithm) | Davies–Bouldin rule index | |

| Particle Swarm Optimisation (PSO) | |||||||||||

| 26 | Jie and Yibo [70] | PSO + K-means | Outlier detection | Non-Automatic | Distribution Network Sorting | Optimizing the clustering center | Determining the optimal number of Clusters | Simulated datasets | K-Means | SSE | |

| 27 | Tsai & Kao [63]-KSRPSO | Selective Regeneration PSO + K-means | SRPSO Performance improvement | Non-Automatic | Global optimal convergence | Basic K-means clustering | Artificial datasets, Iris, Crude oil, Cancer, Vowel, CMC, Wine, and Glass | SRPSO, PSO and K-Means | Sum of intra-cluster distances and Error Rate (ER) | ||

| 28 | Paul, De & Dey, [69]-MfPSO based K-Means | MfPSO + K-Means | Improved multidimensional data clustering | Non-Automatic | Cluster center generation | Basic K-Means clustering | Iris, Wine, Seeds, and Abalone | K-Means and Chaotic Inertia weight PSO | DBI, SI, Means, SD and computational time, ANOVA test and a two-tailed t-test conducted at 5% significance | ||

| 29 | Prabha & Visalakshi [64]-PSO-K-Means | PSO + Normalisation + K-Means | Improving performance using normalization | Non-Automatic | Global optimal convergence | Basic K-means clustering | Australian, Wine, Bupa, Mammography, Sattelite Image, and Pima Indian Diabetes | PSO-KM, K-Means | Rand Index, FMeasure, Entropy, and Jacquard Index | ||

| 30 | Ratanavilisagul [69]-PSOM | PSO + K-Means + mutation operations applied with particles | Avoidance of getting entrapped in local optima | Non-Automatic | Global optimal convergence | Basic K-means clustering | (Iris, Wine, Glass, Heart, Cancer, E.coli, Credit, Yeast | Standard PSO, PSOFKM, PSOLF-KHM | F-Measure (FM), Average correct Number (ACN), and Standard Deviation (SD)of FM. | ||

| 31 | Nayak et al. [66] | Improved PSO + K-Means | optimal cluster centers for non-globular clusters | Non-Automatic | Global optimal convergence | Basic K-means clustering | K-Means, GA-K-Means, and PSO-K-Means | ||||

| 32 | Emami, & Derakhshan [65]-SOFKM | PSO + FKM | Escape from local optimum with increased convergence speed | Non-Automatic | Global optimal convergence | Fuzzy K-means clustering | FKM, ICA, PSO, PSOKHM, and HABC algorithms | Sample, Iris, Glass, Wine, and Contraceptive Method Choice (indicated as CMC) | F-Measure (FM) and Runtime Metrics | ||

| 33 | Chen, Miao & Bu [71] | PSO + K-Means | Solving initial center selection problem and escape from local optimal | Non-Automatic | Image Segmentation | Global optimal convergence | Dynamic clustering using k-means algorithm | Lena, Tree and Flower images from the Matlab Environment | K-Means PSOK | Sphere function and Griewank function | |

| 34 | Niu et al. [48] | Six different PSOs with different social communications + K-means | Escape from local optimum convergence with accelerated convergence speed | Non-Automatic | Global optimal convergence | Refining partitioning results for accelerating convergence | Iris, Wine, Coil2, Breast Cancer, German Credit, Optdigits, Musk, Magic 04, and Road Network with synthetic datasets | PSC-RCE, MacQueens K-Means, ACA-SL, ACA-CL, ACA-AL and Lloyd’s K-means | Mean squared error (MSE—sum of intra-cluster distances) | ||

| 35 | Yang, Sun & Zhang [62]-PSOKHM | PSO + KHM | Combining the merits of PSO and KHM | Non-Automatic | Global optimal convergence | Refining cluster center and KHM clustering | Artificial datasets, Wine, Glass, Iris, breast-cancer-Wisconsin, and Contraceptive Method Choice. | KHM, PSO | Objective function of KHM and F-Measure | ||

| 36 | Chen & Zhang [59]-RVPSO-K | PSO + K-means | Improved stability, precision, and convergence speed. | Non-Automatic | Web Usage Pattern Clustering | A parallel search for optimal clustering | Refining cluster center and K-means clustering | Two-day Web log of a university website | PSO-K | Fitness Measure and Run-time | |