1. Introduction

In the last decade, convolutional neural networks (ConvNets) have outclassed traditional machine learning algorithms in several tasks, from image classification [1,2] to audio [3,4] and natural language processing [5,6]. Many smart applications today make use of ConvNets to infer meaningful information from raw data. Those ConvNets, first trained off-line on a representative set of data and then deployed for on-line inference, can be processed either remotely, on high-performance servers, or locally, close to the source of data, on lightweight end-nodes. In both cases, achieving high energy efficiency is paramount: on the cloud side, it reduces the power consumption and the cost of the cooling systems, and hence improves maintenance and reliability [7]; on the edge side, it helps cope with lower energy budgets and maximize battery lifetime while still ensuring reasonable processing time [8]. Unfortunately, ConvNets are heavy and cumbersome by construction, and their algorithmic structure calls for high resource allocation, that is, large memories for storing weights and partial results and highly parallel data-paths for handling massive arithmetic workloads.

The need for practical optimization methods and training flows capable of lowering the hardware requirements has attracted large research interest in recent years, leading to many possible alternatives. Most of them are based on different forms of approximate computing enabled by the intrinsic error resilience of ConvNets. Approximations can be applied at different levels by means of different knobs: (i) the data format, with mini-floats [9,10] or fixed-point quantization [11,12,13]; (ii) the arithmetic precision, replacing exact multiplications with an approximate version [14,15]; (iii) the algorithmic structure, for instance simplifying standard convolutions with an alternative formulation, such as Winograd [16] or frequency-domain convolution [3]. These techniques share a common characteristic: the arithmetic adopted for carrying out matrix convolutions is still built upon the basic multiply and accumulate (MAC) operation.

A broader look at how ConvNets process data may suggest a radical and perhaps more efficient alternative. The convolutional layers are characterized by stencil loops that update array elements according to fixed patterns, thereby producing repetitive workloads with a high degree of temporal and spatial locality. This offers the opportunity to implement reuse mechanisms that alleviate the computational workload. To this end, associative memories that store recurrent results have been proposed as a viable option to replace the arithmetic MAC, and thus to skip redundant computations and improve the energy efficiency [17,18]. To further enhance the associative mechanism, additional approximations not strictly related to the arithmetic MAC approximation can offer an orthogonal dimension for optimization. Bit obfuscation and operand precision lowering have been used to relax the matching rules, further increasing the repetitiveness of certain patterns and the probability that the required data are available in the associative memory [17,18,19]. Note that the error introduced by approximate matching rules might call for auxiliary error-recovery policies through online hardware calibration and/or custom training procedures, which represents a substantial overhead. Furthermore, most of the proposed solutions rely on custom memory architectures conceived for Resistive-RAM technologies, which are more difficult to integrate within standard CMOS designs and processes [20].

This work focuses on these aspects, introducing a practical alternative to enhance data reuse via approximate associative matching and standard CMOS circuits. Specifically, we propose a hardware–software co-design pipeline consisting of a suite of automatic tools that implement a multi-stage approximation and generate ConvNet models able to maximize the reuse mechanism implemented through a memory-enhanced processing element (PE) borrowed from our preliminary work [21]. Similar input patterns are first merged via clustering, then a bit-wise approximate matching is implemented in such a way that the associative memory utilization is maximized. Along the pipeline, the error tolerance is regulated through a user-defined threshold, enabling an energy-accuracy tradeoff that satisfies different application-level specifications and/or custom hardware constraints. High prediction accuracy is achieved without resorting to additional training epochs for the weights or to any custom training procedure. This is an important feature, as it allows the use of pre-trained models and maximizes the integration with existing model architectures. The processing element consists of tiny CMOS content addressable memories (CAMs) coupled with a standard floating-point unit (FPU), which is easy to integrate with modern digital designs, e.g., existing GP-GPUs [17,22,23] or custom spatial accelerators [24,25,26].

The experiments conducted on computer vision tasks (image classification and object recognition) and keyword spotting reveal that our approach achieves up to 77% energy savings with a negligible accuracy loss (<1%). Moreover, with the possibility of controlling the accuracy-latency tradeoff at design time, ConvNets obtained with the proposed technique cover a larger set of operating points (up to 23% energy savings when the accuracy target is relaxed). To prove the scalability of our solution, we also repeated the experiments considering half-precision floating-point data-paths. The results reveal that it is possible to achieve a comparable energy efficiency (72%) with negligible accuracy loss (<1%). Finally, the comparison with the state of the art shows remarkable energy savings (up to 29.47%) against prior solutions.

3. Co-Design Pipeline: Concept and Methodology

As anticipated in the previous sections, the basic principle of our proposal is to accelerate the inference stage of a ConvNet by pre-computing and storing the most recurrent multiplications offline and then reusing them whenever the same input pattern occurs. We first introduce the processing element and its design parameters, then we provide a detailed description of the co-design pipeline.

3.1. Hardware Design

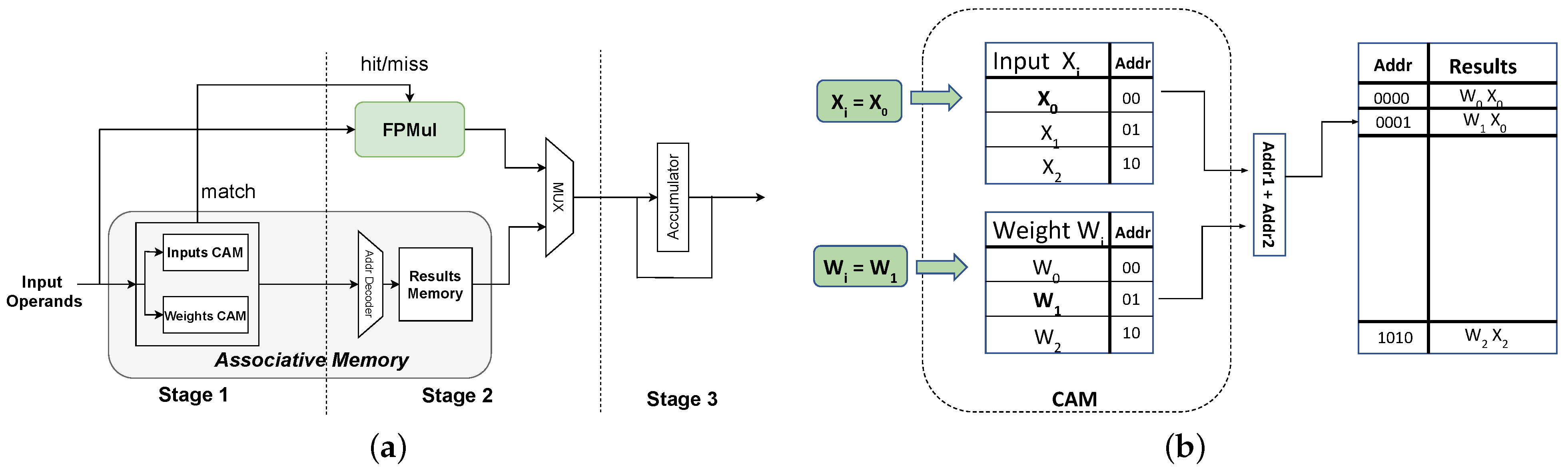

An overview of the PE architecture is depicted in Figure 2a. It consists of an FPU, composed of a multi-stage floating-point multiplier (FPMul) and a single-stage accumulator, coupled with an associative memory that stores the most representative input patterns and weight values. The PE functionality is simple, yet highly efficient. The CAMs process the input operands in parallel. If the operands (partially) match the stored patterns, the multiplication result is read from the associative memory and the FPMul is clock-gated; otherwise, the multiplication is computed normally using the FPMul. Thanks to the optimization stage done at design time, the FPMul is turned off for most of the cycles and gives a marginal contribution to the total power consumption.
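To make the hit/miss behavior concrete, the following is a minimal behavioral sketch in Python. It is not the authors' hardware description: the class name `AssociativePE`, its fields, and the use of exact matching are illustrative assumptions, and the two CAM searches, parallel in hardware, are modeled sequentially.

```python
from dataclasses import dataclass, field


@dataclass
class AssociativePE:
    """Toy behavioral model of a CAM-enhanced PE (all names are hypothetical)."""
    weight_cam: list   # stored weight patterns (search keys)
    act_cam: list      # stored activation patterns (search keys)
    products: dict = field(default_factory=dict)  # (w_row, a_row) -> product
    hits: int = 0
    misses: int = 0

    def __post_init__(self):
        # Offline step: pre-compute the product of every stored pair,
        # mimicking the content of the SRAM.
        for i, w in enumerate(self.weight_cam):
            for j, a in enumerate(self.act_cam):
                self.products[(i, j)] = w * a

    def multiply(self, w, a):
        # Search both CAMs; exact matching is assumed here for simplicity.
        try:
            i = self.weight_cam.index(w)
            j = self.act_cam.index(a)
        except ValueError:
            self.misses += 1   # miss: the multiplier computes the product
            return w * a
        self.hits += 1         # hit: the multiplier would be clock-gated
        return self.products[(i, j)]

    def mac(self, weights, activations):
        # Accumulate the products, whichever path produced them.
        acc = 0.0
        for w, a in zip(weights, activations):
            acc += self.multiply(w, a)
        return acc
```

For instance, `AssociativePE([0.5, -1.0], [0.0, 2.0]).mac([0.5, -1.0, 0.25], [2.0, 0.0, 2.0])` serves the first two products from the pre-computed table and falls back to the multiplier for the third one, whose weight is not stored.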

We implemented both single- and half-precision floating-point units. The architecture of the associative memory is depicted in Figure 2b. It is composed of two CAMs, one dedicated to the most recurrent weights, the other to activation values. The SRAM stores the results of the pre-computed approximate multiplications. The proposed architecture is inspired by [18], whose design is more efficient in terms of area and energy than a single-CAM solution with a double word bit-width (weight and input concatenation). Compared to a standard pipelined floating-point MAC, the proposed hybrid processing element (PE) has an additional pipeline stage (Stage 1 in Figure 2a). The latency of this additional stage is the search time of the two parallel CAMs and is a function of the CAM configuration and size. According to our experiments and simulations, the latency of the largest CAM configuration (512 rows) fits the design clock. Note that larger CAM configurations can be designed (at no clock-period penalty) by leveraging a modular architecture composed of multi-stage sub-CAMs, with a base module that replicates itself in cascading stages [18]. The design and assessment of circuit and/or architecture optimizations is out of the scope of this work. It is worth emphasizing that the second and last stage of our hybrid PE (Stage 2 in Figure 2a) has a shorter latency than the FPMul. This would enable further speed-up and optimization options, as most of the MAC operations could be faster. Nonetheless, we kept the same clock period as the FPMul, thus ensuring synchronization of the workload.

The size of the associative memory, and hence its performance, is characterized by three parameters:

the number of rows in the CAM dedicated to the relevant weight values;

the number of rows in the CAM dedicated to the most frequent inputs and activations;

the word size of the CAMs and of the SRAM.

Together, these three parameters describe the size of the two CAMs and hence of the SRAM. Different configurations of those parameters lead to distinct memory designs and different energy consumption. The co-design tool therefore explores those memory configurations to minimize the energy consumption for a given user-defined accuracy constraint. This process might return custom configurations, e.g., an uneven word size or row counts that are not powers of two. However, as depicted in Figure 3, we opted for a more regular memory structure, with an 8-bit word when considering a half-precision PE (FPMul 16) and a 16-bit word when considering a 32-bit data format (FPMul 32).

3.2. Energy Model

Given the three parameters above, the overall energy consumption for a look-up in the associative memory is calculated through Equation (1), which combines the energy contributions of the two CAMs, each depending on its own number of rows, with the contribution of the pattern memory (the SRAM), which depends on its size. The energy values come from a hardware characterization done offline.

The simulation framework integrates the energy model shown in Equation (2). Its terms are the hit rate, the energy consumption of the multiplier, the energy consumption of the two CAMs given in Equation (1), the energy consumed when the inputs match the CAM content (the pre-computed result is retrieved from the small-size SRAM), and the energy due to a missed search of the pattern (in this case the multiplication is effectively computed).

The energy saving is then obtained by comparing the energy consumed by the stand-alone (single- or half-precision) FPMul with that given by the energy model above.
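As an illustration, the energy model can be sketched in a few lines of Python. This is one plausible reading of the description, not the paper's Equations (1) and (2), which are not reproduced in this text: it assumes that a look-up costs the sum of the two CAM searches plus the SRAM read, and that a miss pays for both CAM searches plus the multiplier. All function and argument names are hypothetical.

```python
def lookup_energy(e_cam_w, e_cam_a, e_sram):
    """Energy of one successful look-up (assumed additive):
    weight-CAM search + activation-CAM search + SRAM read."""
    return e_cam_w + e_cam_a + e_sram


def average_energy(hit_rate, e_mul, e_cam_w, e_cam_a, e_sram):
    """Expected energy per multiplication for a given hit rate (assumed form)."""
    e_hit = lookup_energy(e_cam_w, e_cam_a, e_sram)  # result read from the SRAM
    e_miss = e_cam_w + e_cam_a + e_mul               # search fails, FPMul computes
    return hit_rate * e_hit + (1.0 - hit_rate) * e_miss


def energy_saving(hit_rate, e_mul, e_cam_w, e_cam_a, e_sram):
    """Relative saving w.r.t. a stand-alone multiplier (1.0 means 100%)."""
    avg = average_energy(hit_rate, e_mul, e_cam_w, e_cam_a, e_sram)
    return 1.0 - avg / e_mul
```

Under these assumptions, the saving grows linearly with the hit rate and becomes negative when the hit rate is too low to amortize the CAM searches, which is why the memory size and the hit rate have to be balanced.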

3.3. Software Design

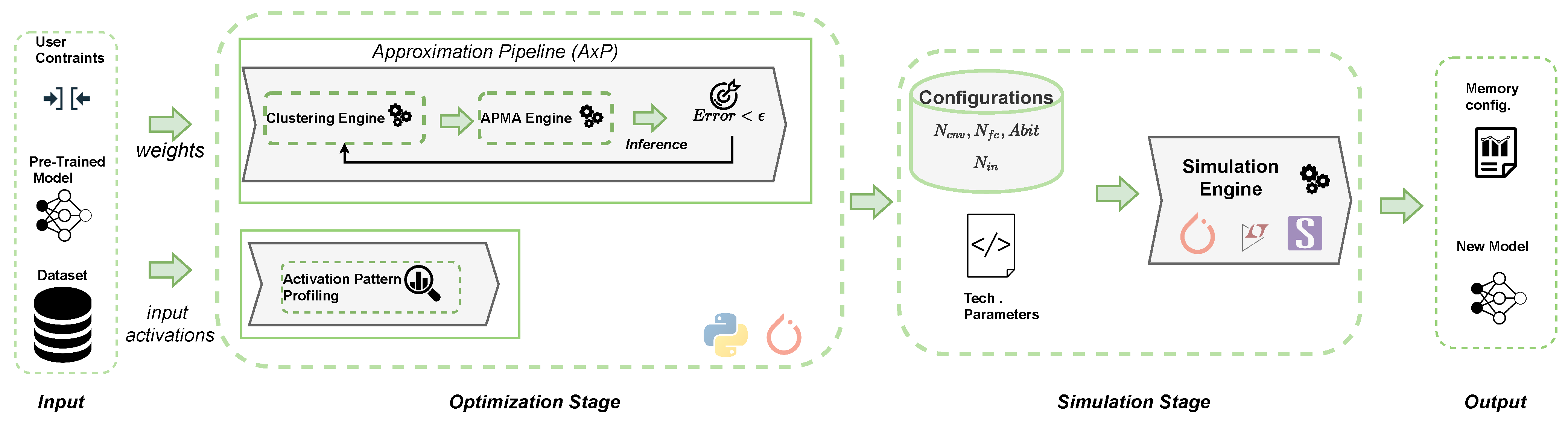

To enable such an associative-based implementation, we introduce the co-design framework depicted in Figure 4. It consists of two main stages: an Optimization Stage and a Simulation Stage. The former searches for candidate memory and model configurations that satisfy the user-defined constraints. The latter tests the relevant configurations elaborated in the previous stage by emulating the inference stage on the designed PE, providing the weight-set of the clustered ConvNet and the corresponding energy-efficient associative memory settings as main outcome. The Optimization Stage is the core of the framework. It has two principal components: (i) the Approximation Pipeline (AxP), which performs an iterative dual-step approximation based on a clustering and approximation flow applied to the ConvNet model; (ii) the Activation Pattern Profiling (APP) stage, which works in parallel, extracting relevant statistics on the activation maps produced by the inner processing layers of the ConvNet model. The following sections provide a more detailed description of these components.

3.4. Approximation Pipeline (AxP)

The approximation pipeline (AxP) is built to increase data repetitiveness across the ConvNet model. The rationale is that reducing the number of different parameters and activations minimizes the arithmetic unit utilization since most of the operations can be pre-computed and reused during the inference by exploiting the associative-based processing element. To achieve this, an iterative clustering and approximation procedure is implemented as described by Algorithm 1.

| Algorithm 1: Approximation Pipeline (AxP) Algorithm |

![Applsci 11 11164 i001]() |

The main inputs are (i) the pre-trained model, (ii) the validation set, (iii) a user-defined accuracy-drop target, and (iv) a stopping variable that ensures convergence of the iterative loop. The main outcome is the set of configurations that are fed as input to the last stage of the framework, namely the simulation engine. In more detail, the AxP is composed of two sub-stages run sequentially: clustering and Approximate Pattern Matching Analysis (APMA). The clustering step explores different solutions in a discrete space defined empirically, seeking the optimal number of weight clusters for the convolutional and the fully-connected layers (two separate parameters). The optimal number of bits used for the approximate pattern matching (the Abit parameter) is evaluated during the APMA sub-stage, which explores the viable configurations in an interval bounded by the parallelism of the current hardware data-path (32- or 16-bit in our study).

During the iterative loop shown in Algorithm 1, all the tuples (clusters for convolutional layers, clusters for fully-connected layers, Abit) that satisfy the accuracy constraint are kept as possible optimal solutions. The tool takes the solution found in the clustering stage, characterized by its two cluster counts, and iteratively performs the forward pass on the validation set. In each iteration, a given partial-matching configuration is evaluated on the validation set while the two cluster counts are kept fixed, and all the solutions that meet the user constraint are collected. This additional approximation phase introduces a new degree of freedom in the design phase and a finer-grained control in the accuracy vs. energy-efficiency space.
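The two-step search can be sketched as a pair of nested loops. The snippet below is a simplified illustration and not a transcription of Algorithm 1: the callback `evaluate`, the argument names, and the exhaustive sweep are assumptions, and the stopping variable is not modeled.

```python
def approximation_pipeline(evaluate, baseline_acc, max_drop, cluster_space, max_bits):
    """Collect every (k_conv, k_fc, abit, drop) whose accuracy drop is within max_drop.

    `evaluate(k_conv, k_fc, abit)` is a hypothetical callback returning the
    validation accuracy of the model clustered with k_conv / k_fc clusters
    and matched on `abit` bits.
    """
    solutions = []
    for k_conv in cluster_space:
        for k_fc in cluster_space:
            # Clustering sub-stage: check the clustered model with exact
            # matching, i.e., using the full data-path width.
            if baseline_acc - evaluate(k_conv, k_fc, max_bits) > max_drop:
                continue
            # APMA sub-stage: sweep the matching bits with the clusters fixed.
            for abit in range(max_bits, 0, -1):
                drop = baseline_acc - evaluate(k_conv, k_fc, abit)
                if drop <= max_drop:
                    solutions.append((k_conv, k_fc, abit, drop))
    return solutions
```

In a real flow, `evaluate` would run the forward pass on the validation set; keeping every feasible tuple, rather than only the best one, is what lets the later simulation stage pick the most energy-efficient memory configuration.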

The following subsections describe in detail the clustering and APMA sub-stages and the knobs involved.

3.4.1. Clustering Engine

During the clustering stage, the ConvNet’s weights are merged into the lowest possible number of clusters using a similarity distance metric. Once the engine returns the cluster centroids, all the weights in a certain range are mapped on the corresponding centroid values. This procedure affects the ConvNet model complexity, that is, the lower the number of clusters, the lower the cardinality of the weight-set. Indeed, only the values of the cluster centroids are the weight patterns to be stored.

The clustering procedure is built upon the Jenks natural breaks (JNB) algorithm [47]. It is an iterative method whose objective is to maximize the inter-class variance while minimizing the intra-class variance. The weight tensors are unrolled and treated as a 1D vector, then sorted by magnitude during a pre-processing step to speed up the procedure. We opted for a layer-wise clustering granularity with a differentiated strategy for convolutional and fully connected layers. Each filter in a CONV layer is clustered independently, while the entire weight matrix is considered for an FC layer. The number of clusters is therefore set separately for CONV and FC layers, giving two distinct clustering parameters. The differentiated clustering strategy adopted for the two types of layers is motivated by empirical evidence, as it decreases both the number of centroids and the accuracy drop.
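For illustration, the sketch below clusters a 1D weight vector by minimizing the within-class sum of squared deviations, the objective targeted by Jenks natural breaks. Note that it uses an exact dynamic-programming formulation (often called Fisher-Jenks) rather than the iterative procedure, so it is a stand-in and not necessarily the implementation used in the framework; the function names are hypothetical, and the cost is quadratic in the number of weights.

```python
def jenks_breaks_1d(values, k):
    """Split sorted 1-D values into k contiguous classes minimising within-class SSE."""
    xs = sorted(values)
    n = len(xs)
    if not 1 <= k <= n:
        raise ValueError("k must be in [1, len(values)]")
    # Prefix sums give the SSE of any slice xs[i:j] in O(1).
    s1 = [0.0] * (n + 1)
    s2 = [0.0] * (n + 1)
    for i, x in enumerate(xs):
        s1[i + 1] = s1[i] + x
        s2[i + 1] = s2[i] + x * x

    def sse(i, j):
        # Sum of squared deviations of xs[i:j] from its mean.
        s = s1[j] - s1[i]
        return (s2[j] - s2[i]) - s * s / (j - i)

    inf = float("inf")
    # cost[c][j]: best SSE for the first j values split into c classes.
    cost = [[inf] * (n + 1) for _ in range(k + 1)]
    cut = [[0] * (n + 1) for _ in range(k + 1)]
    cost[0][0] = 0.0
    for c in range(1, k + 1):
        for j in range(c, n + 1):
            for i in range(c - 1, j):
                cand = cost[c - 1][i] + sse(i, j)
                if cand < cost[c][j]:
                    cost[c][j], cut[c][j] = cand, i
    # Backtrack the class boundaries; each class mean is a centroid.
    bounds, j = [], n
    for c in range(k, 0, -1):
        i = cut[c][j]
        bounds.append((i, j))
        j = i
    bounds.reverse()
    centroids = [(s1[j] - s1[i]) / (j - i) for i, j in bounds]
    return xs, bounds, centroids


def quantize(values, k):
    """Map every value to the centroid of the class it falls in."""
    xs, bounds, centroids = jenks_breaks_1d(values, k)
    edges = [xs[j - 1] for _, j in bounds]  # largest value of each class
    out = []
    for v in values:
        idx = next(c for c, e in enumerate(edges) if v <= e)
        out.append(centroids[idx])
    return out
```

Following the strategy described above, `quantize` would be applied once per filter for a CONV layer and once to the whole unrolled weight matrix for an FC layer.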

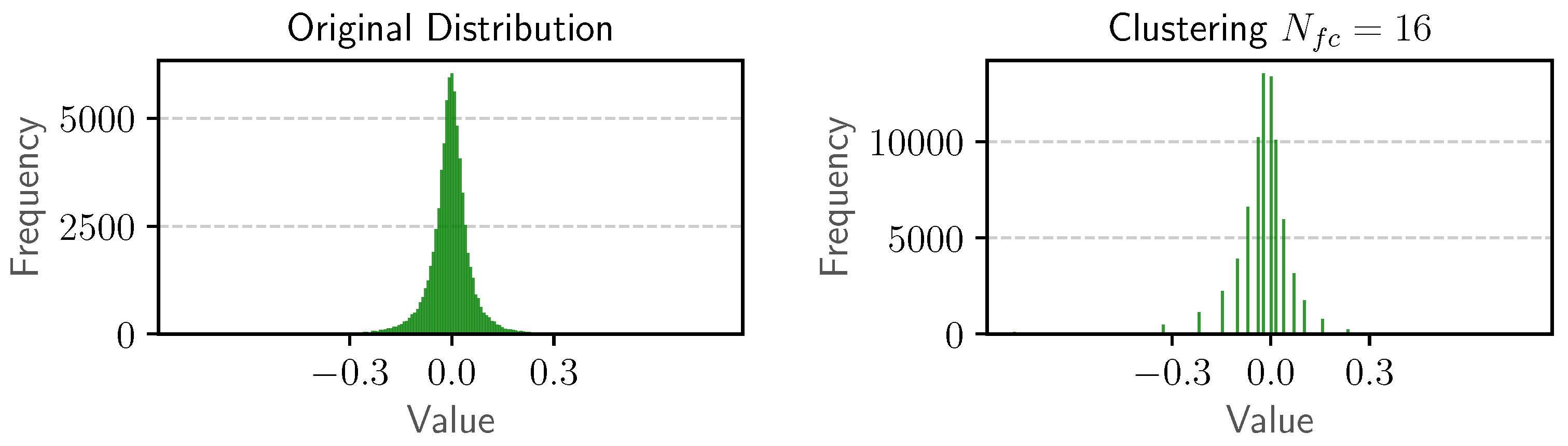

As a practical example, the histograms reported in Figure 5 show the effect of the clustering step on the weight set of an FC layer for a given number of clusters. The original peak frequency for near-zero values (≈5 k) is doubled after the clustering step (≈10 k), which suggests that the occurrence of zero patterns doubles at inference time, hence leading to a higher matching probability that an associative-memory-based processing element can exploit.

3.4.2. APMA Engine

Once the weight-set of the ConvNet model has been discretized across a lower number of clusters, the second stage performs the approximate pattern matching analysis (APMA) (see Figure 4). APMA takes as input the model processed by the clustering engine and returns the approximation bits (Abit) parameter, which is the number of bits used to implement the approximate matching function that retrieves the pre-computed results stored in the associative memory of the processing element. The main objective is to minimize Abit while ensuring an accuracy drop lower than or equal to the user-defined constraint.

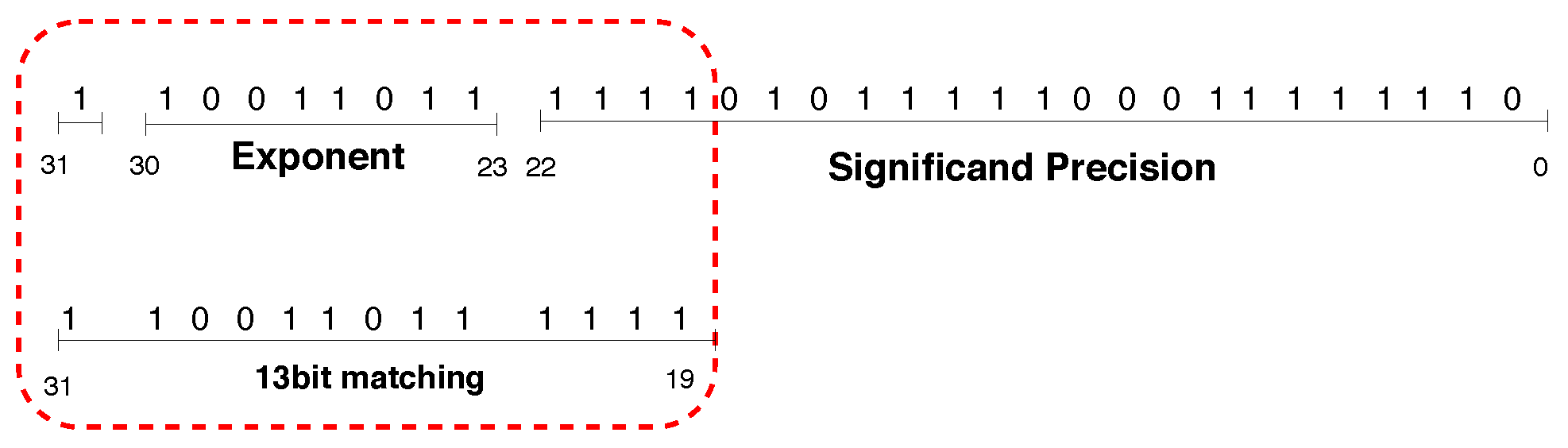

The target data formats are the single- and half-precision floating-point formats defined within the IEEE 754 standard. Figure 6 reports the single-precision floating-point format, composed of a sign bit, 8 exponent bits, and 23 fraction bits. During the approximate matching, the least significant bits (LSBs) are obfuscated, and the most significant bits (MSBs) are used as the search key. For instance, a possible approximate matching scheme makes use of 13 MSBs, pruning 19 fraction bits. This mechanism replaces the exact matching between the current input patterns (weight and activation) and the pre-computed patterns in the associative memory, increasing the hit rate at the cost of a certain accuracy drop. It is worth noticing that this mechanism can be regulated with the Abit knob, which affects the memory size, the energy efficiency, and the accuracy drop.
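The MSB-based search key can be illustrated with a short Python sketch for the single-precision case. The helper names `float32_key` and `approx_match` are hypothetical, and the snippet only shows the bit manipulation, not the CAM circuit.

```python
import struct


def float32_key(x, abit):
    """Return the `abit` most significant bits of the IEEE 754
    single-precision encoding of x (sign, exponent, leading fraction bits)."""
    if not 1 <= abit <= 32:
        raise ValueError("abit must be in [1, 32]")
    # Reinterpret the float32 bit pattern as an unsigned 32-bit integer.
    (bits,) = struct.unpack(">I", struct.pack(">f", x))
    # Dropping the low (32 - abit) bits obfuscates the LSBs.
    return bits >> (32 - abit)


def approx_match(x, stored, abit):
    """True if x and a stored pattern agree on their `abit` MSBs."""
    return float32_key(x, abit) == float32_key(stored, abit)
```

With 13 MSBs the key holds the sign, the 8 exponent bits, and the 4 leading fraction bits, so `approx_match(1.0, 1.03, 13)` holds while `approx_match(1.0, 1.07, 13)` does not: two values collide only when they fall in the same coarse interval of the same binade.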

3.4.3. Activation Pattern Profiling (APP)

We exploit the information stored in the training data to retrieve the most representative values for the possible inputs and activations. The tool performs the feed-forward pass on the training data to collect and profile the most frequent inputs and intermediate activation values. Those values feed the dedicated CAM (Inputs CAM in Figure 2a). Based on empirical evaluation, we adopted a layer-wise granularity, with a dedicated parameter indicating the number of input activations to store for each layer.
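A frequency-based profiling step of this kind can be sketched as follows. The snippet is an assumption-laden illustration: activations are supplied as plain Python lists per layer, the search key is the MSB prefix of the float32 encoding, and the names `profile_activations`, `hit_rate`, and `msb_key` are hypothetical.

```python
from collections import Counter
import struct


def msb_key(x, abit):
    """Illustrative search key: the `abit` MSBs of the float32 encoding of x."""
    (bits,) = struct.unpack(">I", struct.pack(">f", x))
    return bits >> (32 - abit)


def profile_activations(activations_per_layer, rows, abit=32):
    """For each layer, keep the `rows` most frequent activation keys."""
    table = {}
    for layer, values in activations_per_layer.items():
        counts = Counter(msb_key(v, abit) for v in values)
        table[layer] = [key for key, _ in counts.most_common(rows)]
    return table


def hit_rate(values, stored_keys, abit=32):
    """Fraction of values whose key is present among the stored keys."""
    stored = set(stored_keys)
    hits = sum(msb_key(v, abit) in stored for v in values)
    return hits / len(values)
```

Lowering `abit` merges nearby values into the same key, so the same number of stored rows covers a larger share of the observed activations.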

3.5. Understanding Co-Design Knobs

The optimization stage returns a set of optimal configurations, each a tuple of four knobs, together with the corresponding accuracy degradation. Each configuration reflects a different hardware setting, which in turn affects the energy efficiency and the accuracy drop as follows:

the number of weight clusters, taken as the maximum over the CONV and FC settings, affects the weight-CAM size and the overall accuracy;

the knobs governing the stored activation patterns impact the activation-CAM size and the overall hit rate in the associative memory;

together, these knobs determine the size of the static random access memory (SRAM) that collects the multiplication results.

Some solutions share the same accuracy degradation but differ in associative memory size, hence in energy savings.

3.6. Simulation Engine

Once all viable configurations have been computed and assessed against the accuracy target, a further iterative step emulates the inference stage on the customized hardware. This step encompasses the assessment of the CAM-enhanced FPU. The associative memory is initialized with the patterns provided by the optimization pipeline. The technological characterization of the PE (Tech. Parameters in Figure 4) and the hit rate in the associative memory are used for estimating the energy consumption. The best associative memory configuration, that is, the one that satisfies the user's constraints with minimum energy consumption, is finally returned as the main outcome of the framework. It is worth emphasizing that multiple accuracy constraints can be used by the end-user to trade energy efficiency for performance according to specific requirements (hardware equipment or application).
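The final selection step amounts to a constrained minimum. A minimal sketch, assuming each candidate is a dictionary with hypothetical `acc_drop` and `energy` fields filled in by the previous stages:

```python
def select_configuration(candidates, max_drop):
    """Return the lowest-energy candidate meeting the accuracy constraint,
    or None when no candidate is feasible."""
    feasible = [c for c in candidates if c["acc_drop"] <= max_drop]
    if not feasible:
        return None
    return min(feasible, key=lambda c: c["energy"])


def sweep_constraints(candidates, constraints):
    """Run the selection under several accuracy constraints."""
    return {d: select_configuration(candidates, d) for d in constraints}
```

Running `sweep_constraints` with a few accuracy-drop thresholds yields one operating point per threshold, which is how an energy-accuracy trade-off curve can be traced.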

4. Experimental Results

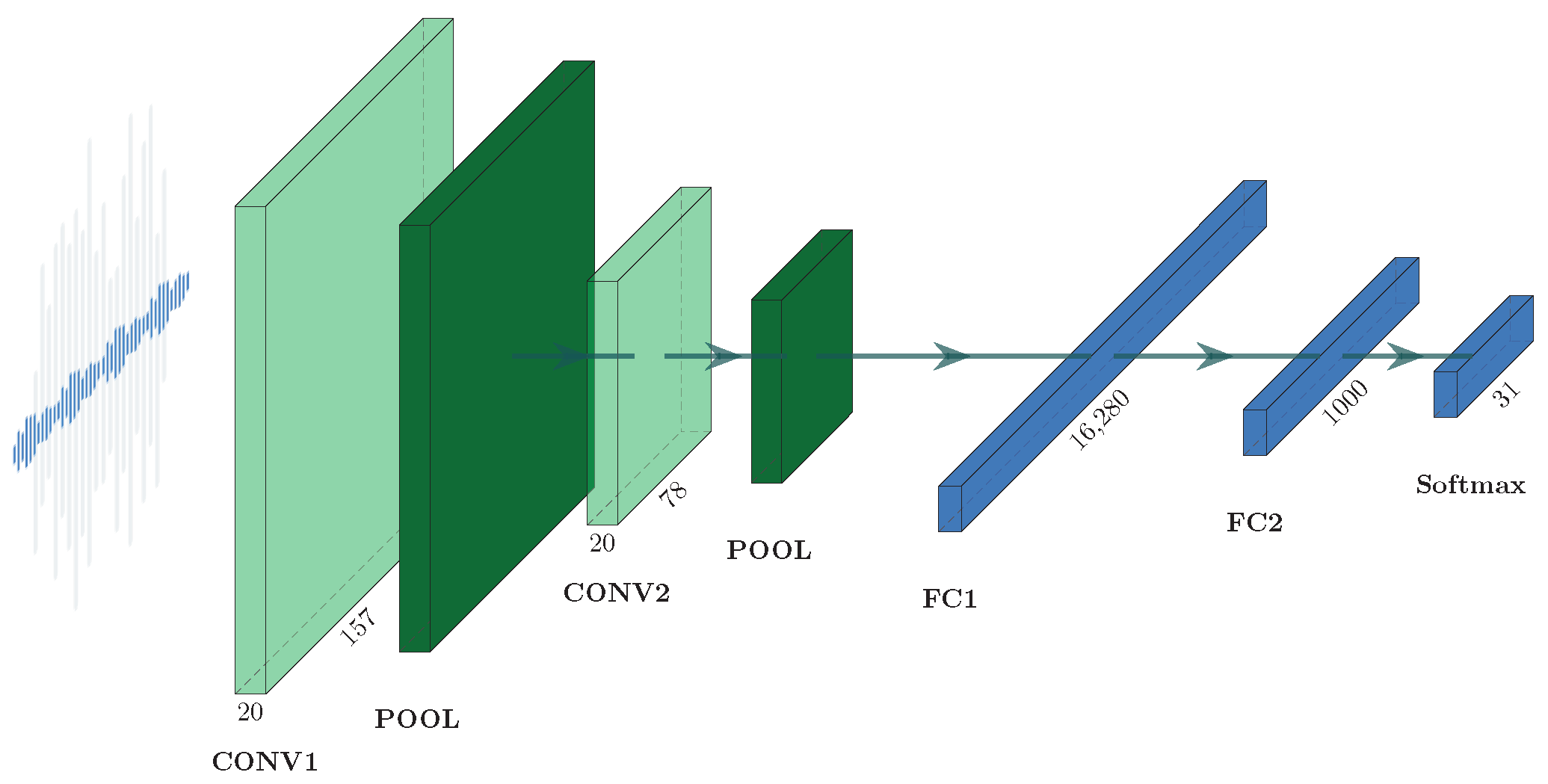

4.1. Benchmarks and Datasets

Table 1 reports the benchmarks adopted, specifically the dataset and ConvNet employed for each task, the input data size, and the detailed model topology. It also collects the baseline accuracy for the full-precision model (FP32) and the half-precision one (FP16). A detailed description of the tasks follows.

Image Classification (IC): the goal is to classify 10 different handwritten digits. The dataset employed is the popular MNIST [48], consisting of 60 k 32 × 32 gray-scale images, 50 k used for training and 10 k for testing. The model employed is inspired by the LeNet [50] architecture widely adopted in previous works.

Object Recognition (OR): the objective is to recognize 43 different traffic signals, a popular sub-task for autonomous driving. The dataset adopted is the German Traffic Sign Recognition Dataset (GTSRD) [49]. It collects 50 k 32 × 32 RGB samples of traffic signals found on German streets, 40 k used for training and 10 k for testing. For this task, the ConvNet architecture is the LeNet-5 [50] model.

Keyword Spotting (KS): the objective is to recognize 30 simple vocal commands. The adopted dataset comes from Google research, Google Speech Commands (GSC) [27], with 65 k one-second-long audio samples of 30 different keywords plus noise, labeled as “unknown”. The inputs are the 2D spectrograms of the recorded samples; 56,196 samples are used for training and 7518 for testing. The model adopted is the GSCNet taken from an open-source repository [51], which is inspired by the original work [27].

4.2. Hardware and Software Setup

We designed a PE with a single and half-precision floating-point multiplier using the open-source FloPoCo library [

52]. The energy performance is retrieved from Synopsys Design Compiler and PrimeTime leveraging a commercial CMOS 28 nm FDSOI technology library from STMicroelectronics. The CAMs are designed and mapped with a standard 6T cell that comes from the 28 nm technology library. The energy characterization was done in HSPICE by Synopsys. Experimental results show that the adopted design performance is aligned with state-of-the-art CAM memories [

53]. The SRAM bank is characterized using CACTI 7.0 [

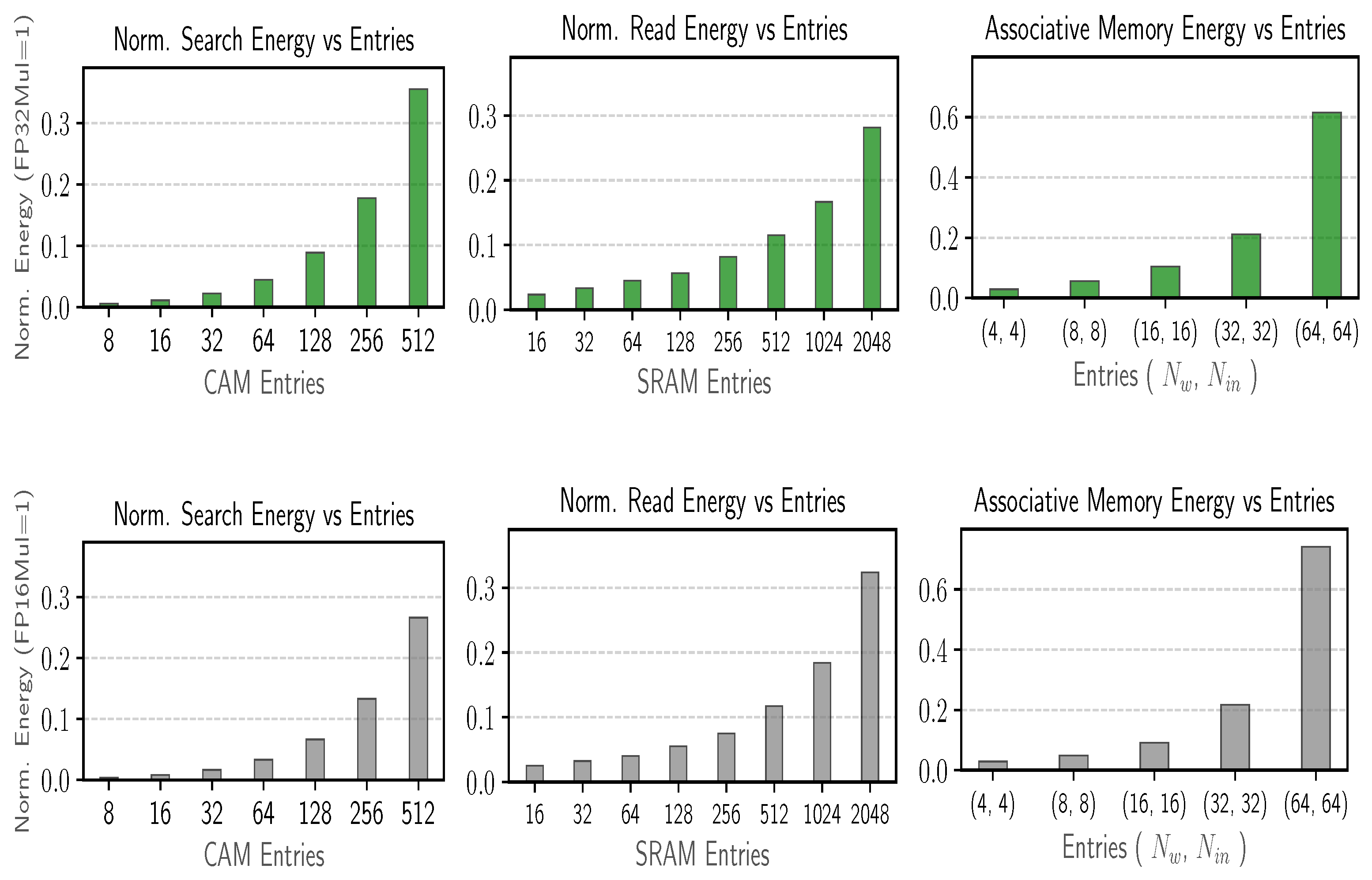

54]. A parametric characterization of the three memory components is depicted in

Figure 7. Here, the energy consumption increases with memory size (the number of rows) while fixing the word size as described in the previous section.

The entire framework is built upon the deep-learning framework PyTorch (v1.4). The baseline models were trained with the hyper-parameters from the original papers [27,50]. Both training and inference stages were run on a server powered by 40-core Intel Xeon CPUs and accelerated with an NVIDIA Titan Xp GPU (CUDA 10.0). The models were trained twice, first using FP32 and then FP16; however, Table 1 shows that there is no difference for the considered benchmarks.

4.3. Weight Approximation Pipeline

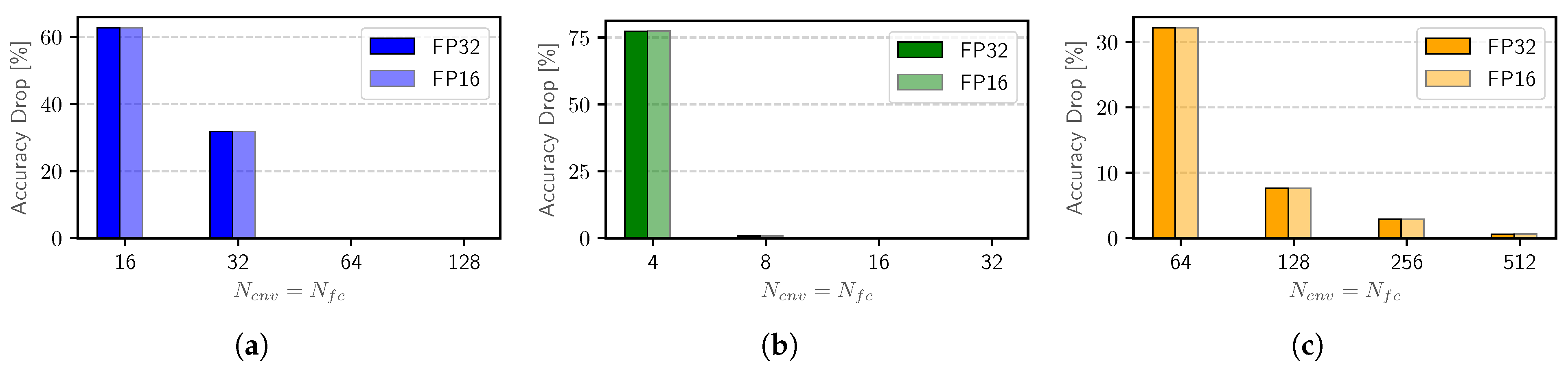

This section quantifies the relationship between the prediction accuracy of ConvNets and the design knobs explored in the approximation pipeline. A first analysis shows how clustering (i.e., the number of clusters for CONV and FC layers) affects the accuracy, along with the benefits of the proposed approximate pattern matching strategy.

The clustering effects are depicted in Figure 8, where the accuracy drop is linked to the number of clusters used in that stage. For graphical purposes, we plotted only the solutions where CONV and FC layers use the same number of clusters; however, the tool can also explore uneven working points. Intuitively, the accuracy degradation decreases for larger numbers of clusters. This behavior shows the hidden relationship between the number of different parameters, i.e., the model complexity, and the generalization ability of a ConvNet. The break-even (near-to-zero accuracy drop) configuration differs among benchmarks and is strictly related to the model topology and the task complexity. In particular, for ConvNets of similar size (e.g., for the OR and IC tasks), the number of clusters required is strictly related to the task difficulty (43 vs. 10 classes), whereas when the number of weights grows (wider architecture), more clusters are required to represent the entire weight space, as shown by the KS benchmark. The results are remarkable: it is possible to shrink the complex high-dimensional information of a ConvNet model with no substantial loss in prediction ability. Negligible accuracy degradation is obtained with a benchmark-specific number of clusters for OR, IC, and KS, as shown in Figure 8.

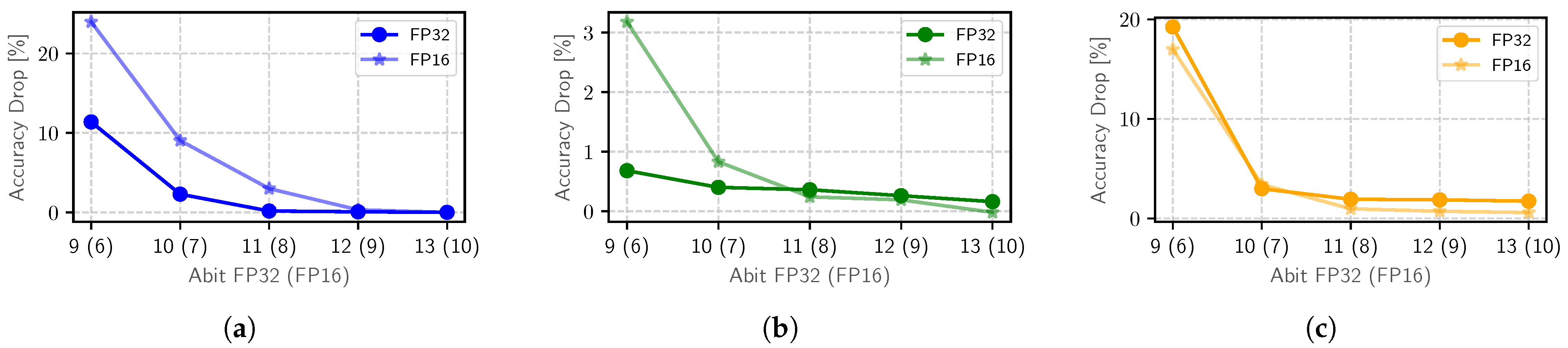

Abit is a key knob in our proposal, as it affects both the accuracy and the hit rate in the associative memory. Figure 9 shows the accuracy degradation trend for different numbers of bits used to implement the approximate matching. In order to assess the impact of Abit, we fixed the number of clusters for each benchmark (OR, IC, and KS) in such a way that the accuracy drop is negligible. Those points represent possible candidate solutions. The accuracy drop decreases as Abit gets larger. Although the initial error differs among benchmarks, the common ground is that it gets close to zero using 13 MSBs out of the 32 bits; for the FP16 format, 8 MSBs are enough to achieve negligible accuracy losses (<1%). From Figure 8 and Figure 9, it is also clear that Abit is a finer knob than the number of clusters, as it leads to smaller accuracy variations.

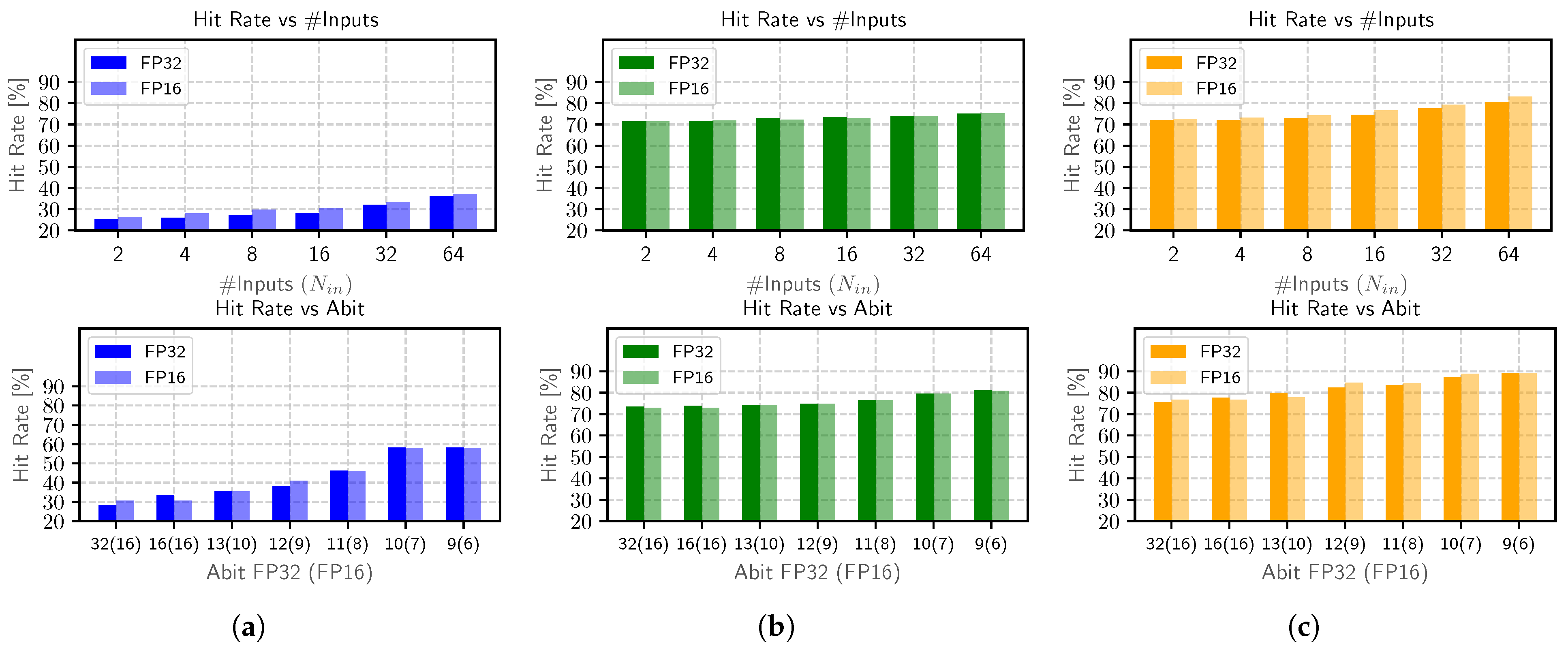

4.4. Input Activations Profiling

Figure 10 (top) shows the hit rate trends as a function of the number of stored input and activation patterns. As expected, the hit rate increases with the number of stored patterns, yet at different rates depending on the task. IC and KS reach the highest hit rates (71–81%): the best case for IC gets close to 75%, whereas KS goes even higher, to 81%. The direct implication of this observation is that the FPMul workload decreases by up to 80%, which means that a mere 20–30% of the overall input patterns are handled as classical arithmetic operations. The results are different for the OR task, where the hit rate ranges between 25% and 36%. This might be due to the higher variance in the input data. Similar behavior is observed when scaling the bit-width (FP16). Here, the hit rate for OR and KS slightly increases due to the natural reduction of the bit-width. The peak performance is recorded for the KS benchmark, where the hit rate ranges from 76% to 89%.

However, an increase in the hit rate obtained with a higher number of clusters has a side effect on energy consumption: larger values mean a larger associative memory, and hence more energy consumption. This behavior motivates the need to balance those contrasting metrics (hit rate and energy consumption) through extensive co-design exploration.

4.5. Approximate Pattern Matching on Input Activation

Figure 10 (bottom) shows the hit rate when varying Abit while fixing the other knobs. This configuration guarantees a moderate hit rate (as depicted in Figure 10 (top)) and is a good representative point to analyse the impact of the APMA stage on the hit rate.

As expected, the hit rate increases when resorting to a lower number of bits for approximate matching. Specifically, the hit rate increases up to 58% for OR, 83% for IC, and 89% for KS. More interesting is how the hit rate changes after the APMA step, which isolates the impact of that stage. This is depicted in Figure 10 (bottom), where the leftmost values represent the baseline values (equal to those in Figure 10 (top) for the same configuration). Comparing the trend with these post-clustering baseline values shows that the APMA phase leads to substantial hit rate improvements.

In particular, even on the benchmarks with a high hit rate after the clustering step, the APMA step leads to an additional improvement: up to 14% (13%) for KS and 9% (10%) for IC with a 32-bit (16-bit) data width. Moreover, the results are impressive on the OR benchmark, where this additional approximation step guarantees up to 30% (28% for FP16) of hit rate improvement. This suggests that the APMA phase is very effective, as it leads to new energy-efficient solutions that are unreachable with clustering alone.

4.6. Energy-Accuracy Trade-Off and Comparison with Previous Works

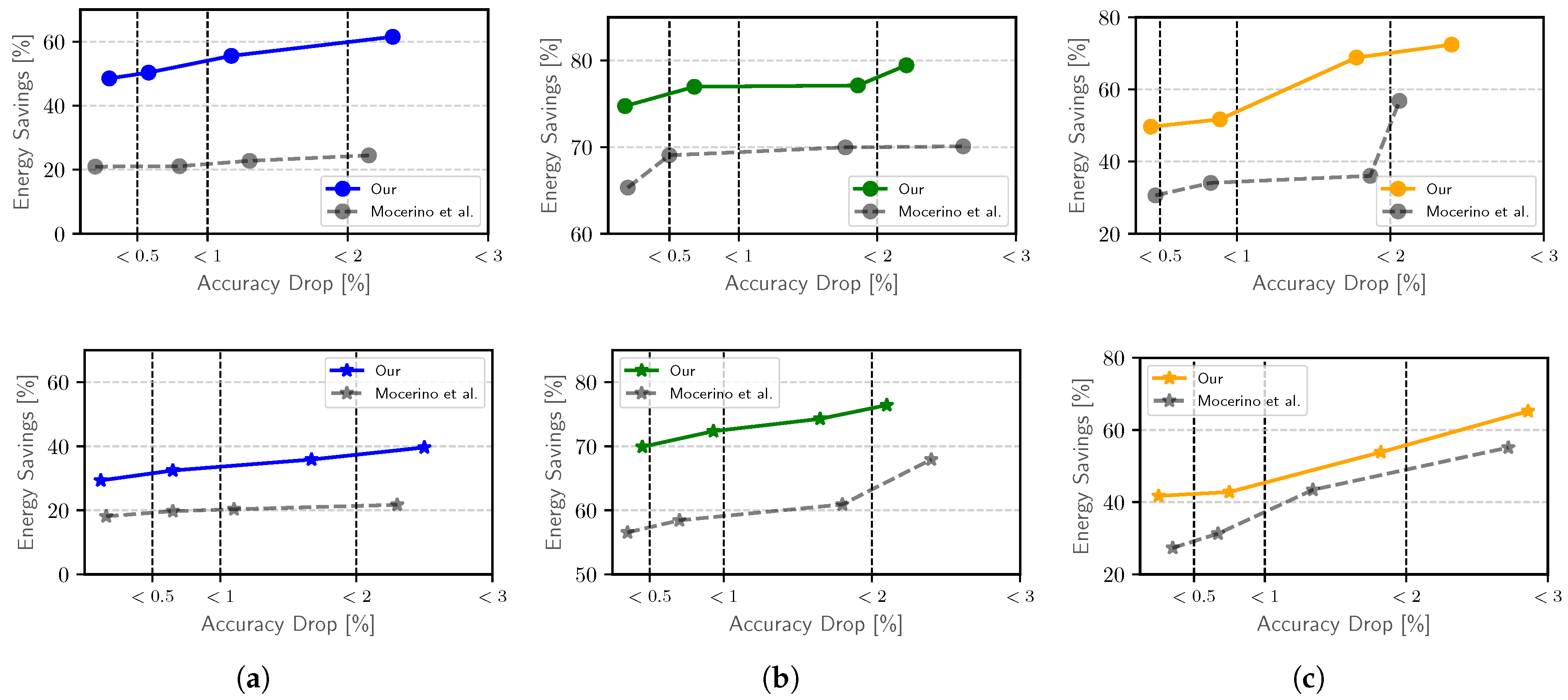

The plots collected in Figure 11 show the Pareto analysis obtained by running multiple instances of the framework under different user-defined accuracy constraints.

Those constraints, on the x-axis (dashed vertical lines), are represented as the accuracy drop w.r.t. the nominal accuracy (in Table 1). The y-axis reports the energy savings w.r.t. a standard convolution-as-GEMM implementation running on a fully arithmetic processing element. In this space, the plots depict our solutions (colored solid lines) and our preliminary work (grey dashed lines). Each color refers to a different benchmark: blue for object recognition, green for image classification, and yellow for keyword spotting. Moreover, the top row refers to the FP32 data-path (circle marker), whereas the bottom one refers to FP16 (star marker).

Intuitively, energy savings get lower for stricter accuracy constraints. The amount of energy savings varies across the benchmarks as a consequence of different configurations, hence different associative memory designs. Looking at the FP32 results, with a close-to-zero accuracy drop (<0.5%), energy savings are substantial: 48.50% (OR), 74.75% (IC), 49.65% (KS). Results get even more interesting when relaxing the constraints (<3%): 61.50% (OR), 79.45% (IC), 72.40% (KS).

Efficacy has been proven for FP16 too, yet with narrower margins. Specifically, the energy savings range from 29.32% to 39.5% (OR), from 69.91% to 76.42% (IC), and from 41.74% to 65.22% (KS) across the three accuracy constraints. For FP32, the best scaling ratio is shown by KS, with an improvement of 22.75% when relaxing the constraints, while IC shows a smoother scaling, with an improvement of 5.45%. Reducing the data-path bit-width leads to similar trends: KS presents a maximum scaling of 23.38%, while the minimum occurs for IC (6.51%).

Concerning prior art, the first analysis we conducted aims at showing the improvements brought by our new framework when compared to our preliminary work [21] (grey dashed line in Figure 11). The energy efficiency increases by up to 37.06% for OR, 9.42% for IC, and 32.79% for KS for the FP32 data path, while for the FP16 data path it is possible to save up to 13.37% for OR, 17.92% for IC, and 14.42% for KS when moving from a strict to a more relaxed accuracy constraint. Note that our solution heavily improves the energy efficiency on benchmarks where our preliminary work shows the poorest results (e.g., the OR benchmark). This provides empirical evidence that the additional approximation step introduced with this work enhances data-reuse opportunities. Even though the savings slightly decrease, the results on the FP16 data path confirm the dominance of the new approximate technique.

As a final remark, Table 2 reports a fair comparison with other prior works based on associative computing integrated with a floating-point unit [38,40] or on probabilistic data structures such as Bloom filters [45]. Table 2 also reports details on the memory technology adopted, the type of cell, the technology node, and the clock period of the processing element. The supply voltage for each solution is set to 1 V. The analysis involves a common benchmark (IC) under the same accuracy constraint (<1%).

The results reveal that the proposed framework outperforms prior art, with up to 29.47% of energy improvement. This achievement is the result of a joint hardware–software optimization process, in which a sophisticated data-reuse strategy fully exploits the compact and energy-efficient associative processing element.

Finally, we found that the search time for a feasible solution grows in the case of large ConvNets (i.e., with a high number of channels). The bottleneck is the clustering stage, where the number of clustering iterations in convolutional layers increases with the number of active channels. There are different ways to address this limitation, for instance adopting recently proposed highly parallel implementations [55,56].