Exploring Users’ Mental Models for Anthropomorphized Voice Assistants through Psychological Approaches

Abstract

1. Introduction

2. Theoretical Background

2.1. Anthropomorphized Personality

2.2. Perceived Emotion

2.3. Motivations and Values of Using Voice Assistants

2.4. The Usage of VA

2.5. Mental Model

2.6. Psychological Approach

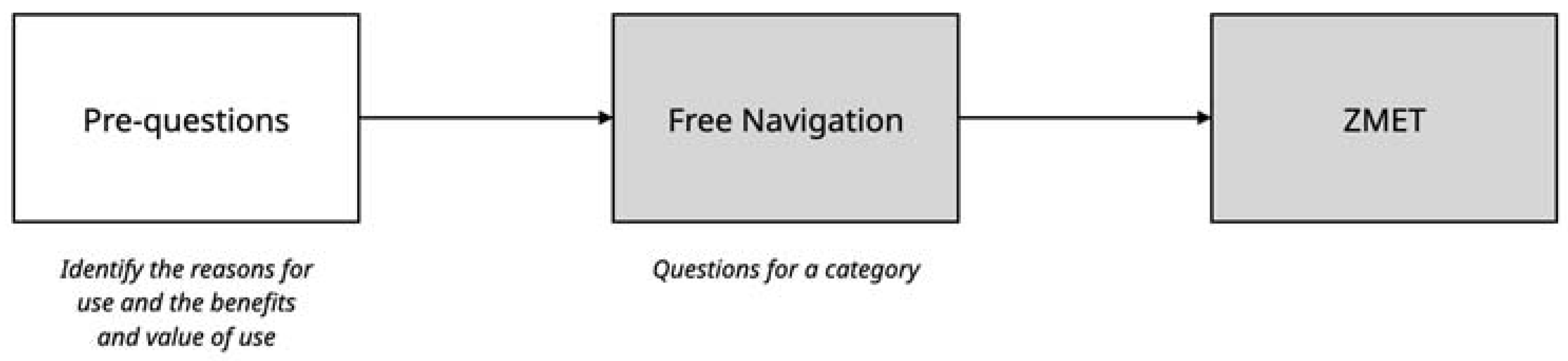

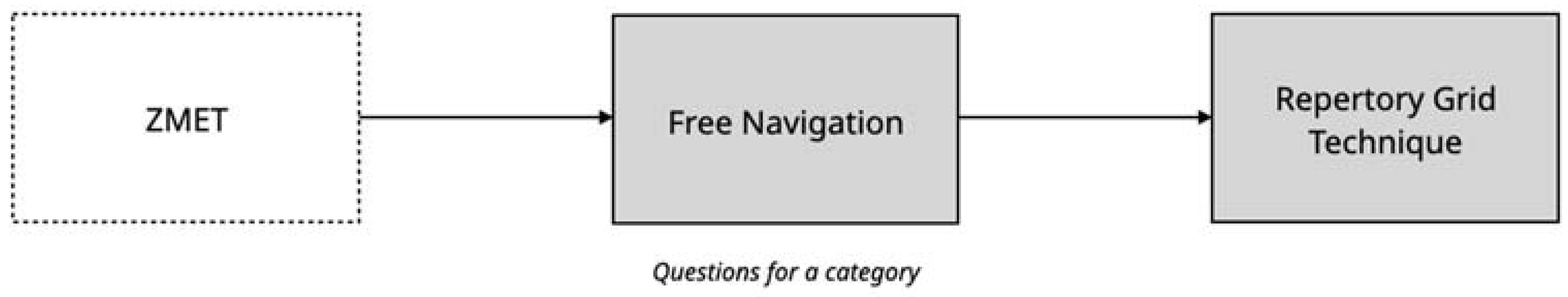

3. Method

3.1. Study 1: ZMET

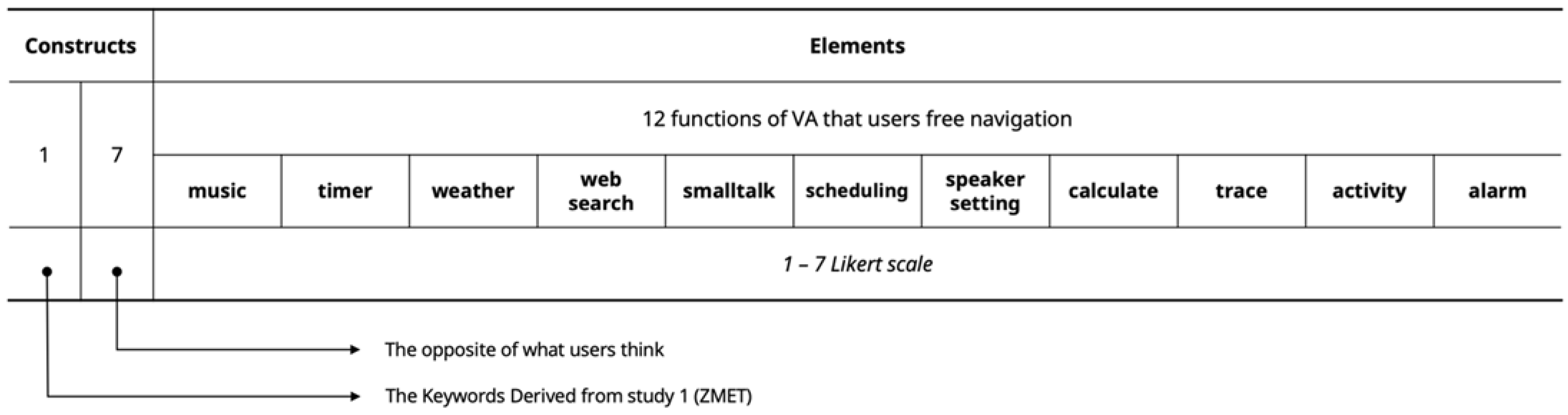

3.2. Study 2: Repertory Grid

4. Results

4.1. Results of Study 1

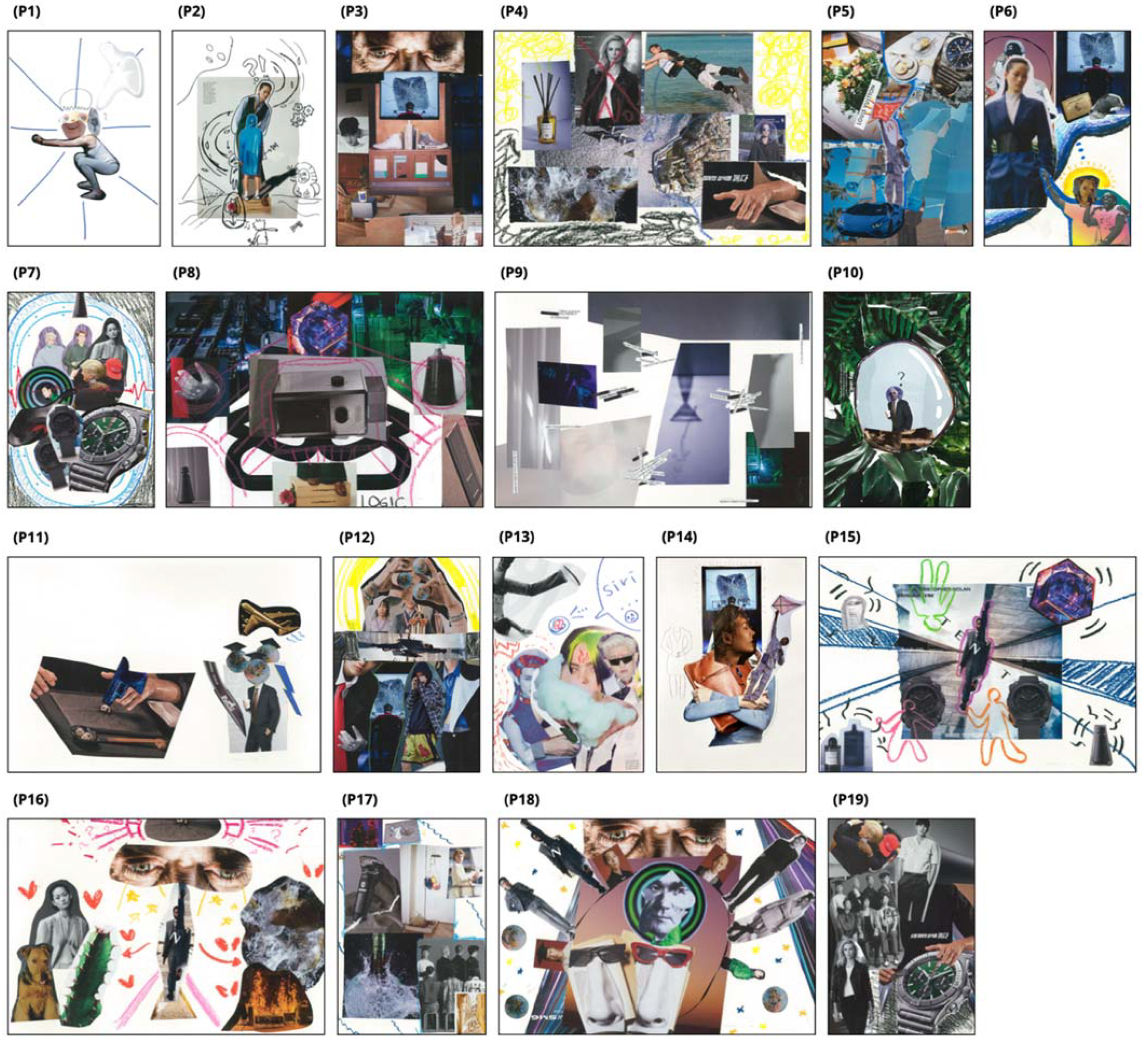

4.1.1. Collage Image

- (a)

- P6

“It is a very cute friend to me, but it is a tremendous analyst and has tremendous knowledge. Sometimes it’s stupid, but it’s not always like that. I think it’s piled up in a veil to some extent.”

- (b)

- P14

“It would be gentle and kind as it responds very kindly whenever and whatever I ask. Also, rather than expressing my feelings, I chose the image of a secretary because I thought that the speaker itself was an ‘artificial intelligence secretary’. (…) If the speaker is actually working as a human, wouldn’t it be like this?”

- (c)

- P18

“Speakers are intangible and very high-dimensional, so I think they will be smart. (…) Human life is finite, but speakers are infinite, so it seems that it will continue to develop in the future.”

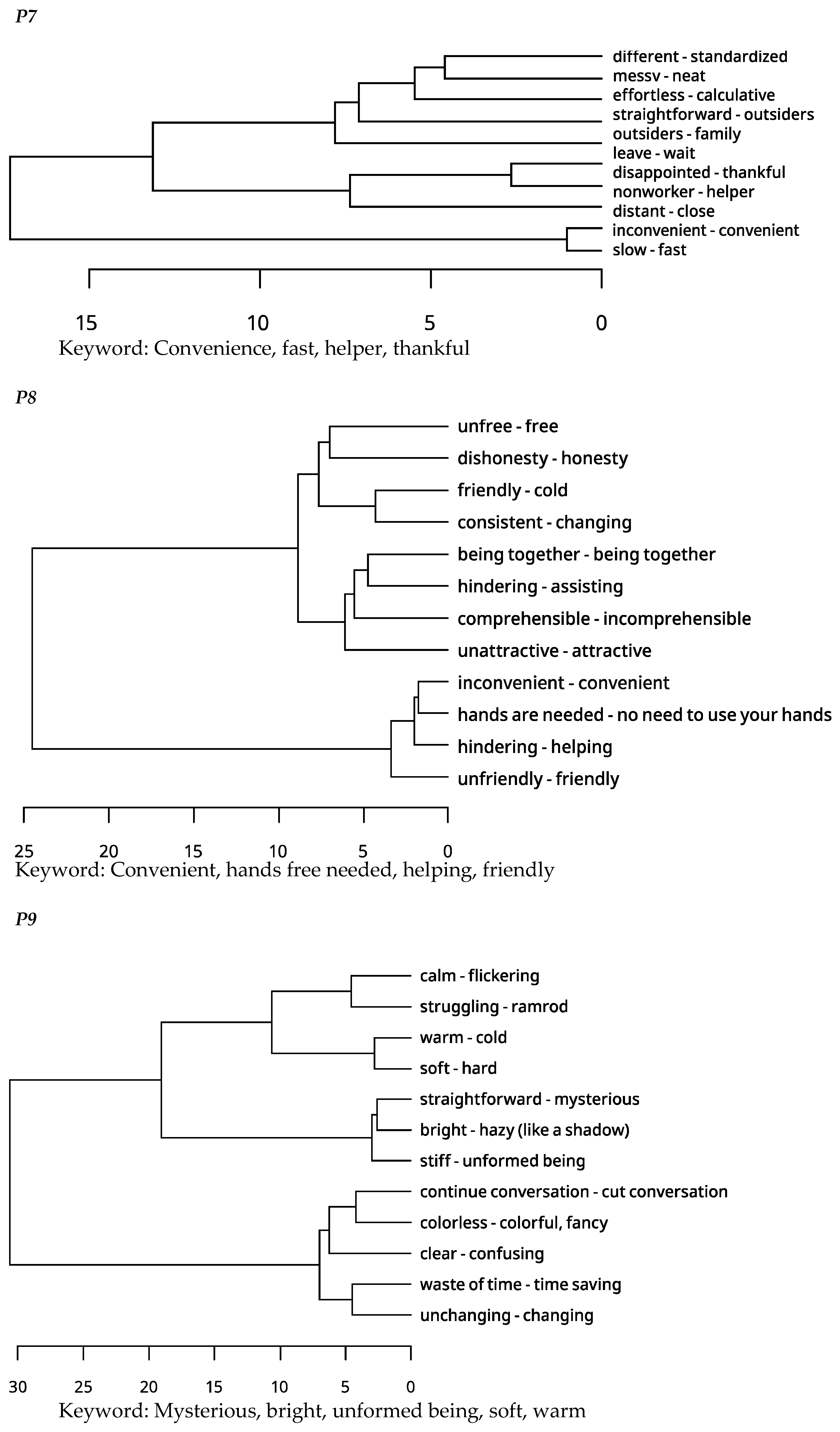

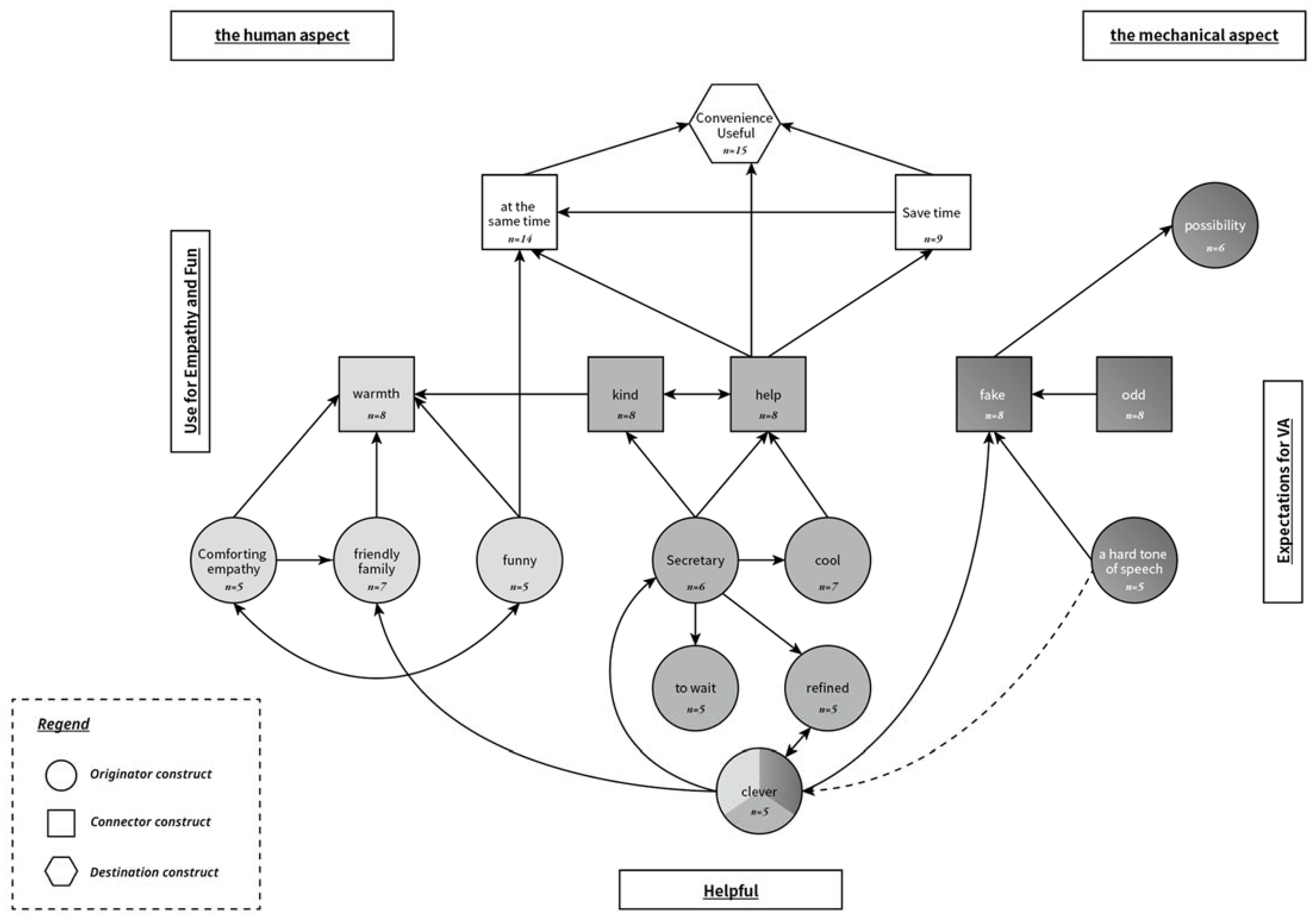

4.1.2. Consensus Map of Using VA

- (a)

- Theme 1: use for empathy and fun

“It feels like a friend A LOT.”(P6)

“It’s mechanical, but when I play words or say hello, I get the idea that I can be friends or family when I’m lonely.”(P7)

“Comfort and empathy, I would say. (…) I want to be comforted when I’m emotionally unstable. There are some things that are hard to tell my friends or family, that’s when I want to rely on voice assistants.”(P1)

- (b)

- Theme 2: helpful

“The voice assistant works for me. When I ask for a certain work, it performs that work and shows it to me, so the part seems like a top-down relationship to me.”(P17)

“As VAs search and inform information, I think of an image like a secretary from them.”(P14)

- (c)

- Theme 3: expectations for VA

“I wanted to express a speaker in an intangible space. Human life is finite, but speakers are infinite, so it seems that it will continue to develop in the future.”(P18)

“There are certainly more advantages than disadvantages, and I feel uncomfortable without it. I feel very good if the conversation continues smoothly, like a conversation with a real person. I expected it to continue to develop in the future.”(P16)

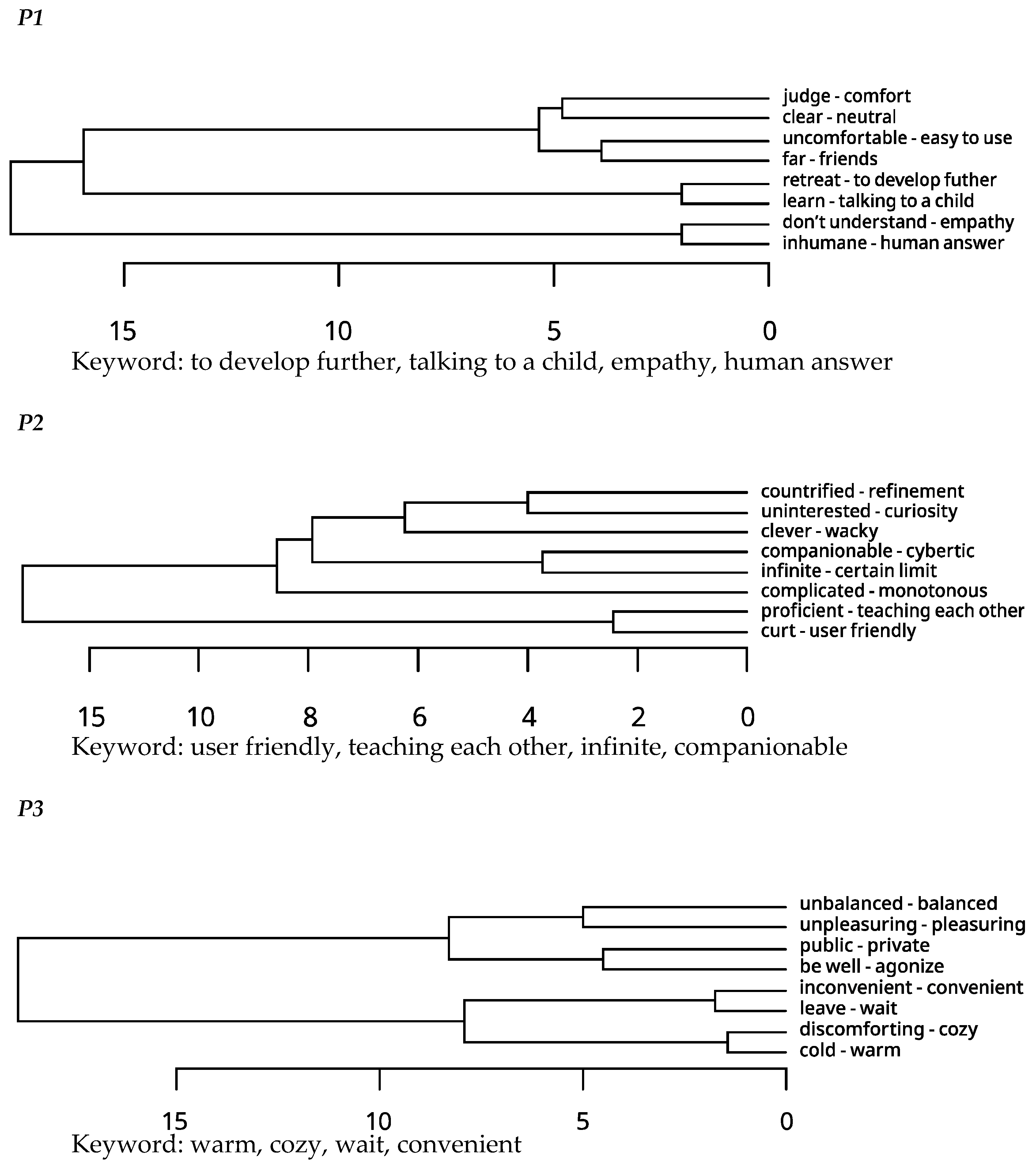

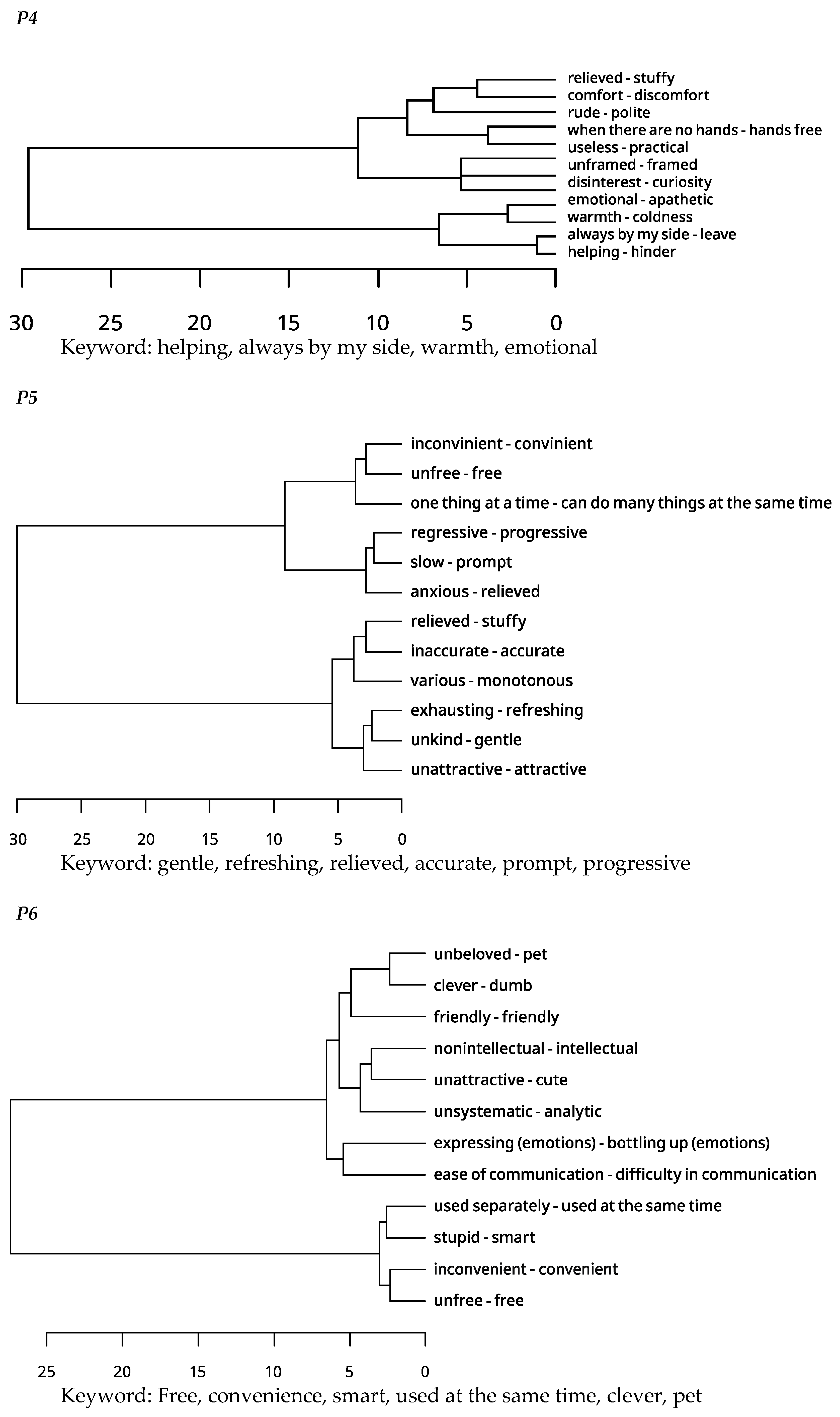

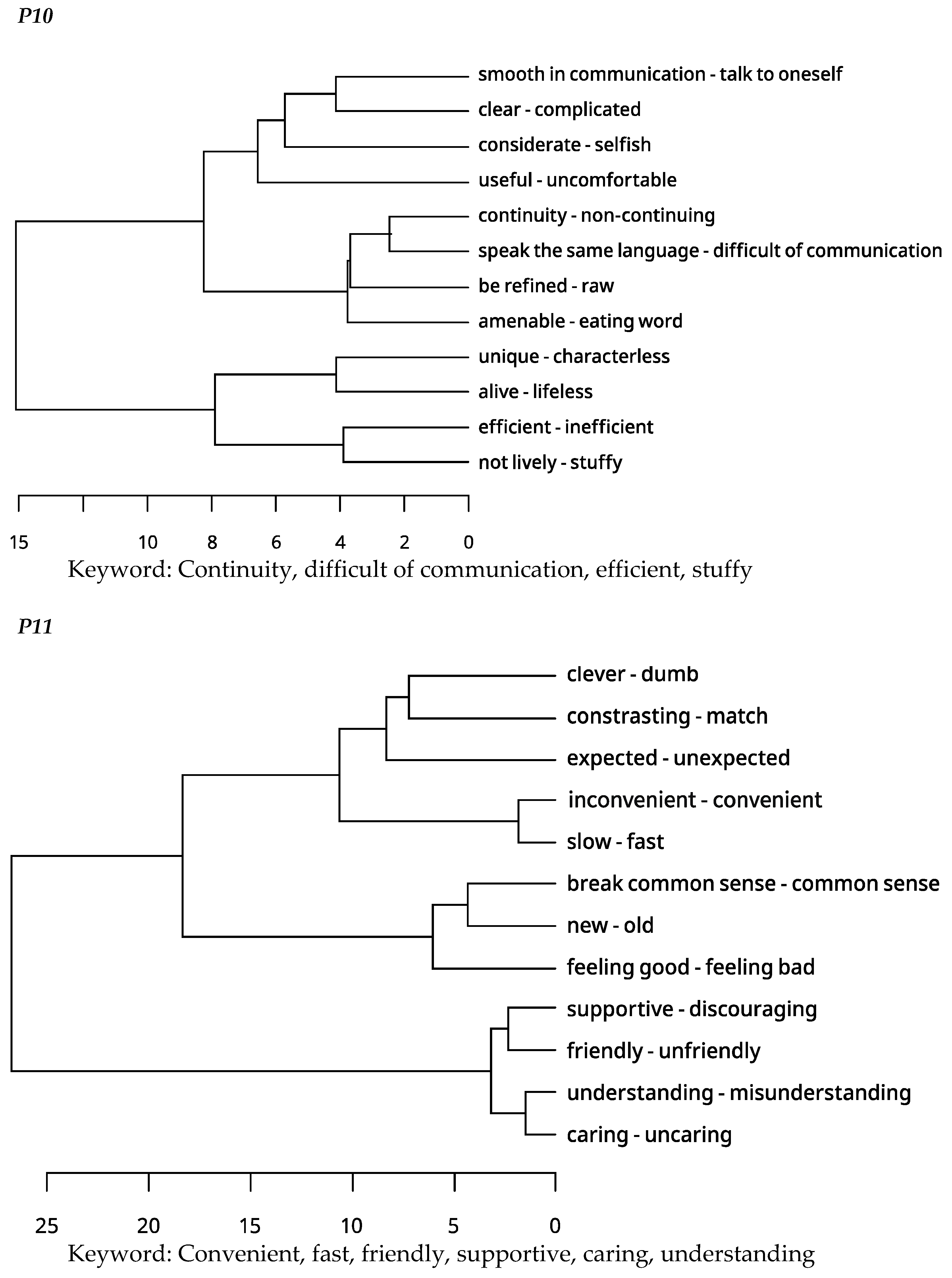

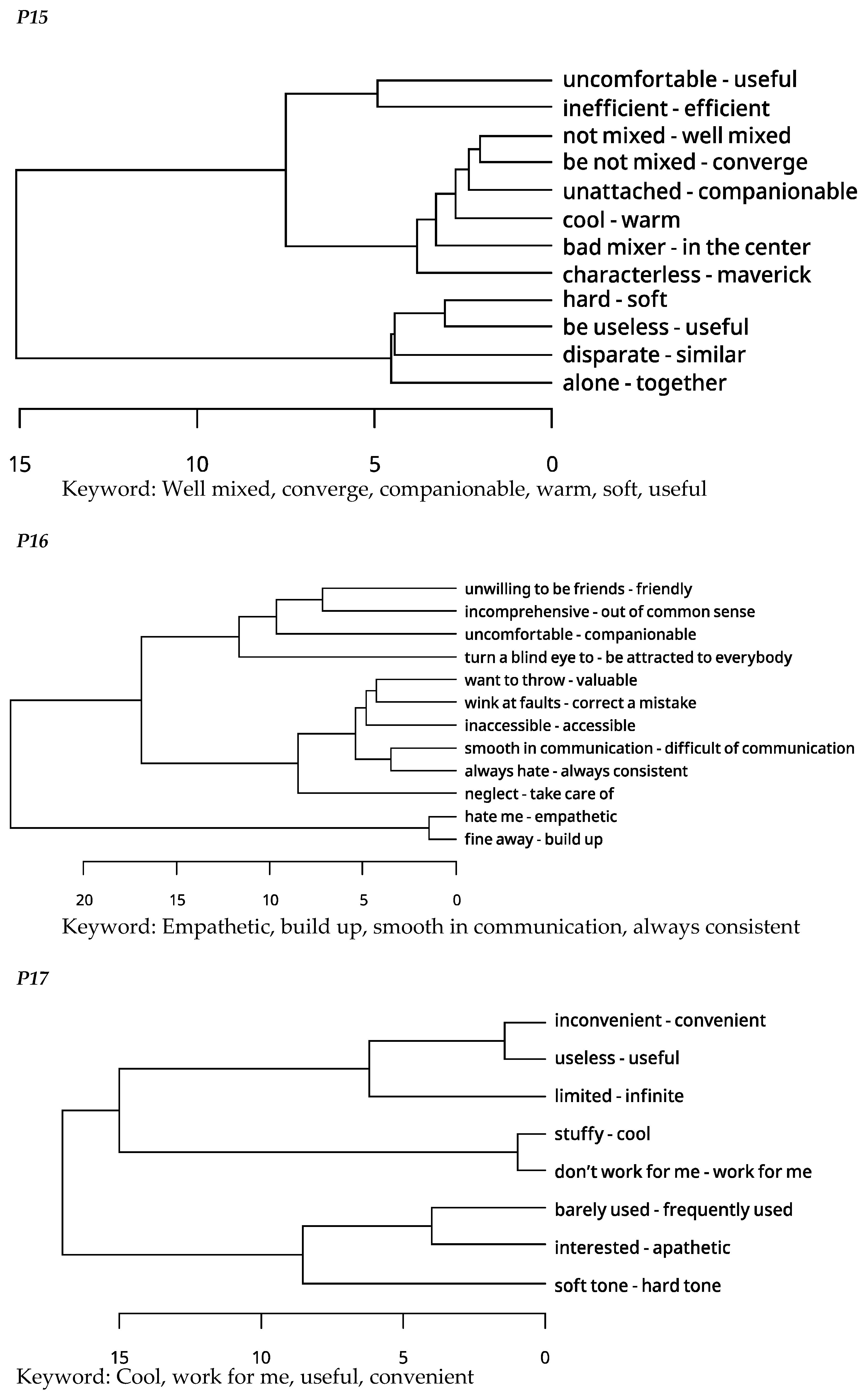

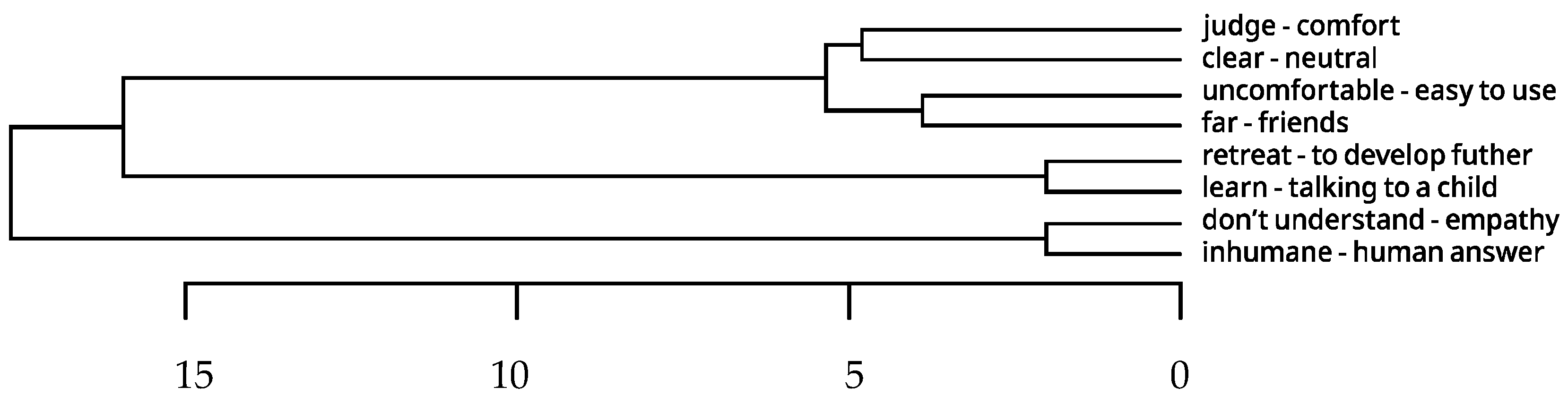

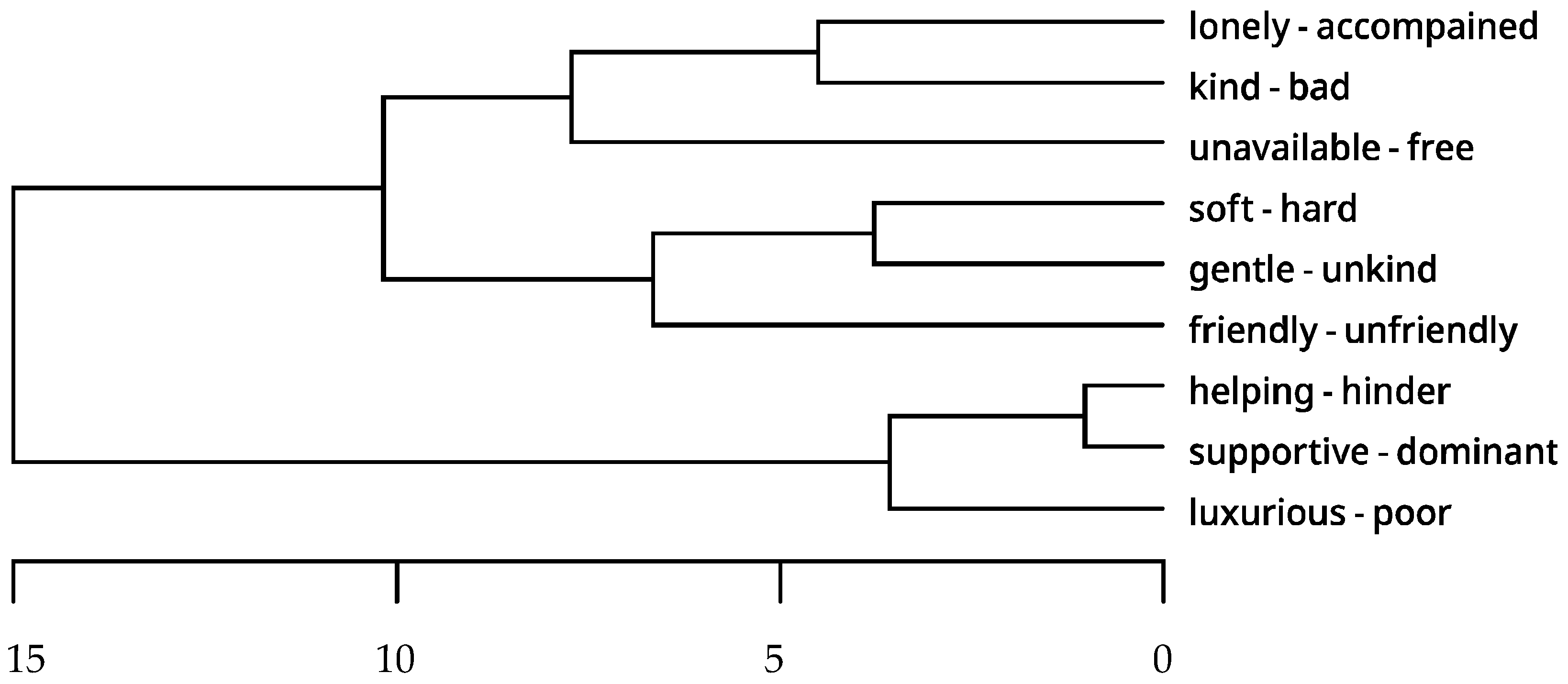

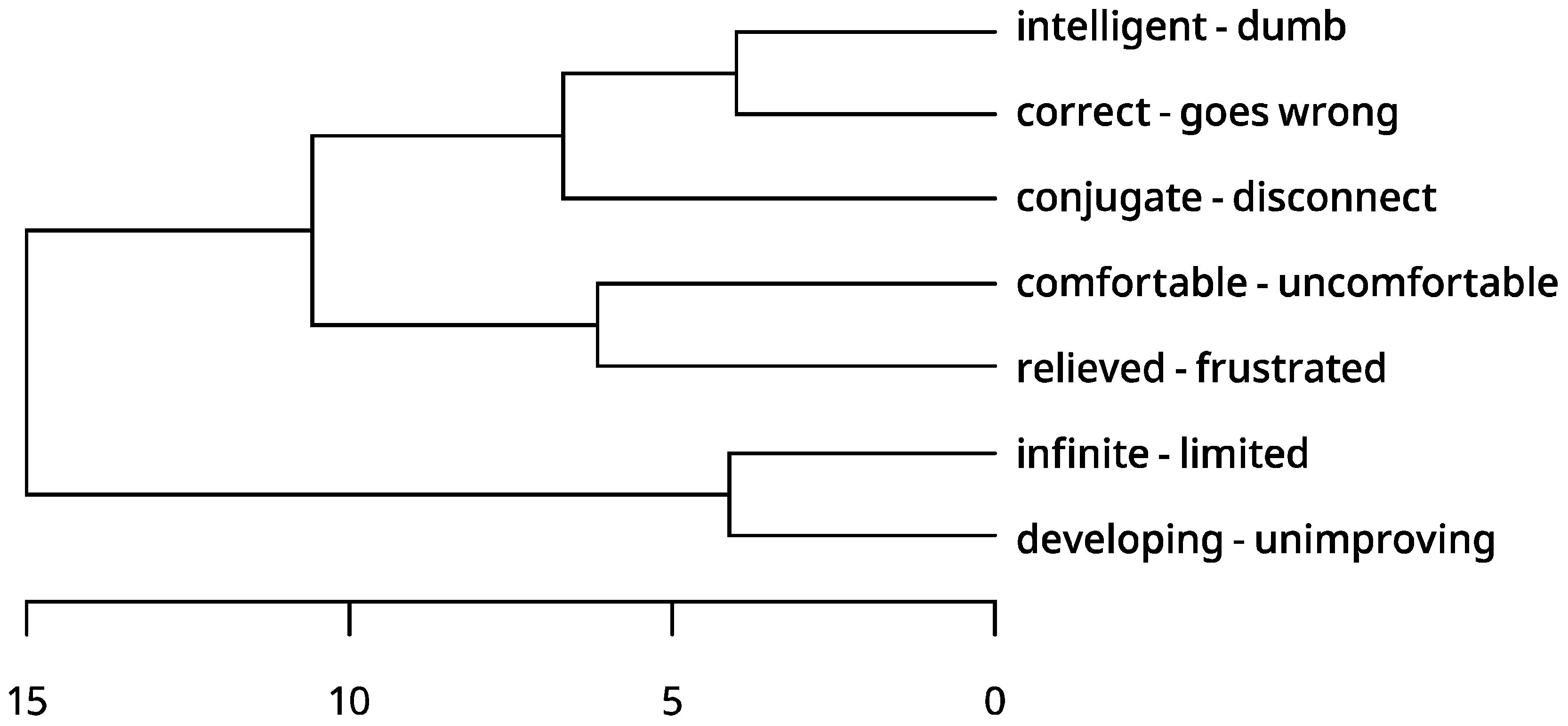

4.2. Results of Study 2

5. Discussion

6. Conclusions and Limitations

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Conflicts of Interest

Appendix A

| Participant | Collage Image | Summarized Explanation |
|---|---|---|
| P1 |  | I will keep my eyes on the voice assistants. I was a bit more negative on my first day using it, but the positive feelings have gotten bigger. I think it’ll get better as time passes. My biggest thought is to keep my eyes on them. The reaction the voice assistant comes up with is sometimes cute. |
| P2 |  | I feel like I’m the trainer of the voice assistant. Though the voice assistant should be able to learn by itself, I am the one who’s educating it one by one. Even though there are many inconveniences, I think it’s user friendly. I felt the kindness there. |
| P3 |  | Overall, I tried to express a lot of symmetry. In particular, the eyes monitor me (user), but there is also a sense of monitoring to do it properly. I wanted to express a neutral position that was not biased toward one side by expressing the images of positive and negative in the color of the space. |
| P4 |  | The seas show that I have to go to the sea to see if there are ships and what is. The parts under the sea represent the parts where I feel stuffy, where it is yet to be developed. The scent of the diffuser describes how the voice assistant is always by my side. |
| P5 |  | Because the voice assistant gives more comfort than frustration, my collage was created with images without frustration. |
| P6 |  | It is a very cute friend to me, but it is a tremendous analyst and has tremendous knowledge. Sometimes it’s stupid, but it’s not always like that. I think it’s piled up in a veil to some extent. |
| P7 |  | It’s mechanical, but when I play words or say hello, I get the idea that I can be friends or family when I’m lonely. |
| P8 |  | There seemed to be some constant logic when making a robot. Based on this logic, they create a complex feeling, wrap it finely to create a neat appearance, and we think that this system supports, and does things that express charm for us. |
| P9 |  | I wanted to reveal the image of VA that was covered with the veil, so I looked for images that have low transparency. VA’s personalities that are revealed briefly are expressed in small and overlapping ways to show images. |
| P10 |  | A situation in which you cannot read the context of the outside world, and you do not know the outside, trapped in your own world. |
| P11 |  | It’s smart, and the plane flies in order to show quickness. The stone broke instead of the egg, which shows the new side/aspect. |
| P12 |  | There is a figure of VA at the top, and there is a figure of me using VA at the bottom. I want to show a professional appearance, but in reality, it shows me using it while hiding. I wanted to emphasize that aspect through contrasting yellow. |
| P13 |  | I expressed my heart. I expressed such a confused mind that I don’t know what I can do while using the voice assistant. |
| P14 |  | It would be gentle and kind as it responds very kindly whenever and whatever I ask. Also, rather than expressing my feelings, I chose the image of a secretary because I thought that the speaker itself was an ‘artificial intelligence secretary’. If the speaker is actually working as a human, wouldn’t it be like this? |
| P15 |  | There are hard and cold objects in this, but they exist without being mixed in people. I expressed the voice assistant being unable to determine what value itself should provide and the image of it roaming around as itself is not clear about things. |
| P16 |  | I feel uncomfortable without it. I feel very good if the conversation continues smoothly, like a conversation with a real person. I expected it to continue to develop in the future. The response of the voice assistant and my feelings changed according to the question, so I expressed it in an endless road in this respect. |
| P17 |  | The voice assistant works for me. When I ask for a certain work, it performs that work and shows it to me, so the part seems like a top-down relationship to me. A speaker that is very frustrating but has infinite possibilities. |
| P18 |  | Speakers are intangible and very high-dimensional, so I think they will be smart. I wanted to express a speaker in an intangible space. Human life is finite, but speakers are infinite, so it seems that it will continue to develop in the future. |
| P19 |  | I wanted to express the achromatic, mechanical feeling. I wanted to show the convenience of using it without touching it and the voice assistant I think of through the use of a metal watch. Overall, I tried to emphasize the achromatic feel. People were static and not laughing on a gray background, indicating the rigidity of the voice assistant. |

Appendix B

References

- Alexa Devices Maintain 70% Market Share in U.S. According to Survey. Available online: https://marketingland.com/alexa-devices-maintain-70-market-share-in-u-s-according-to-survey-265180 (accessed on 30 August 2020).

- Business-Insider. Apple Says that 500 Million Customers Use Siri Business Insider. Available online: https://www.businessinsider.com/apple-says-siri-has-500-million-users-2018-1 (accessed on 24 July 2020).

- Global Smart Speaker Users 2019. Available online: https://www.emarketer.com/content/global-smart-speaker-users-2019 (accessed on 28 May 2019).

- Business-Insider. Smart Speakers Are Becoming so Popular, More People will Use Them than Wearable Tech Products This Year. Business-Insider. Available online: https://www.businessinsider.com/more-us-adults-will-use-smart-speakers-than-wearables-in-2018-2018-5 (accessed on 13 May 2020).

- McLean, G.; Osei-Frimpong, K. Hey Alexa… examine the variables influencing the use of artificial intelligent in-home voice assistants. Comput. Hum. Behav. 2019, 99, 28–37. [Google Scholar] [CrossRef]

- Nass, C.; Moon, Y. Machines and mindlessness: Social responses to computers. J. Soc. Issues 2000, 56, 81–103. [Google Scholar] [CrossRef]

- Epstein, R.; Roberts, G.; Beber, G. Parsing the Turing Test: Philosophical and Methodological Issues in the Quest for the Thinking Computer, 1st ed.; Epstein, R., Roberts, G., Beber, G., Eds.; Springer: Dordrecht, The Netherlands, 2009. [Google Scholar]

- Shank, D.B.; Graves, C.; Gott, A.; Gamez, P.; Rodriguez, S. Feeling our way to machine minds: People’s emotions when perceiving mind in artificial intelligence. Comput. Hum. Behav. 2019, 98, 104–220. [Google Scholar] [CrossRef]

- Nass, C.; Moon, Y.; Green, N. Are machines gender neutral? Gender-stereotypic responses to computers with voices. J. Appl. Soc. Psychol. 1997, 27, 864–876. [Google Scholar] [CrossRef]

- Nass, C.; Moon, Y.; Carney, P. Are people polite to computers? Responses to computer-based interviewing systems. J. Appl. Soc. Psychol. 1999, 29, 1093–1110. [Google Scholar] [CrossRef]

- Edwards, A.; Edwards, C.; Westerman, D.; Spence, P.R. Initial expectations, interactions, and beyond with social robots. Comput. Hum. Behav. 2019, 90, 308–314. [Google Scholar] [CrossRef]

- Schrills, T.; Franke, T. How to answer why-evaluating the explanations of ai through mental model analysis. arXiv 2002, arXiv:2002.02526. [Google Scholar]

- Wahl, M.; Krüger, J.; Frommer, J. Users’ Sense-Making of an Affective Intervention in Human-Computer Interaction. In Proceedings of the International Conference on Human-Computer Interaction, Toronto, ON, Canada, 17–22 July 2016; Springer: Cham, Switzerland, 2016; pp. 71–79. [Google Scholar]

- Krüger, J.; Wahl, M.; Frommer, J. Users’ relational ascriptions in user-companion interaction. In Proceedings of the International Conference on Human-Computer Interaction, Toronto, ON, Canada, 17–22 July 2016; Springer: Cham, Switzerland, 2016; pp. 128–137. [Google Scholar]

- Mithen, S.; Boyer, P. Anthropomorphism and the evolution of cognition. J. R. Anthropol. Inst. 1996, 2, 717–722. [Google Scholar]

- Li, X.; Sung, Y. Anthropomorphism brings us closer: The mediating role of psychological distance in User–AI assistant interactions. Comput. Hum. Behav. 2021, 118, 106680. [Google Scholar] [CrossRef]

- Epley, N.; Waytz, A.; Cacioppo, J.T. On seeing human: A three-factor theory of anthropomorphism. Psychol. Rev. 2007, 114, 864–886. [Google Scholar] [CrossRef]

- Cafaro, A.; Vilhjálmsson, H.H.; Bickmore, T. First impressions in human–Agent virtual encounters. ACM Trans. Comput.-Hum. Interact. 2016, 23, 24. [Google Scholar] [CrossRef]

- Braun, M.; Mainz, A.; Chadowitz, R.; Pfleging, B.; Alt, F. At your service: Designing voice assistant personalities to improve automotive user interfaces. In Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems, Glasgow Scotland, UK, 4–9 May 2019. [Google Scholar]

- André, E.; Klesen, M.; Gebhard, P.; Allen, S.; Rist, T. Integrating models of personality and emotions into lifelike characters. In International Workshop on Affective Interactions; Lecture Notes in Computer Science; Paiva, A., Ed.; Springer: Berlin/Heidelberg, Germany, 2000; Volume 1814, pp. 150–165. [Google Scholar]

- Jung, M.F. Affective Grounding in Human-Robot Interaction. In Proceedings of the 2017 ACM/IEEE International Conference on Human-Robot Interaction (HRI ’17), Vienna, Austria, 6–9 March 2017; ACM: New York, NY, USA, 2017; pp. 263–273. [Google Scholar]

- McCrae, R.R.; Costa, P.T. Personality, coping, and coping effectiveness in an adult sample. J. Personal. 1986, 54, 385–404. [Google Scholar] [CrossRef]

- Kammrath, L.K.; Ames, D.R.; Scholer, A.A. Keeping up impressions: Inferential rules for impression change across the Big Five. J. Exp. Soc. Psychol. 2007, 43, 450–457. [Google Scholar] [CrossRef]

- Aaker, J.L. Dimensions of brand personality. J. Mark. Res. 1997, 34, 347–356. [Google Scholar] [CrossRef]

- Chen, Q.; Rodgers, S. Development of an instrument to measure web site personality. J. Interact. Advert. 2006, 7, 4–46. [Google Scholar] [CrossRef]

- Eysenck, H.J. Four ways five factors are not basic. Personal. Individ. Differ. 1992, 13, 667–673. [Google Scholar] [CrossRef]

- Goldberg, L.R. The development of markers for the big-five factor structure. Psychol. Assess. 1992, 4, 26–42. [Google Scholar] [CrossRef]

- Poushneh, A. Humanizing voice assistant: The impact of voice assistant personality on consumers’ attitudes and behaviors. J. Retail. Consum. Serv. 2021, 58, 1–10. [Google Scholar] [CrossRef]

- Aggarwal, P.; McGill, A.L. Is that car smiling at me? Schema congruity as a basis for evaluating anthropomorphized products. J. Consum. Res. 2007, 34, 468–479. [Google Scholar] [CrossRef]

- Damasio, A. The Strange Order of Things: Life, Feeling, and the Making of Cultures; Pantheon: New York, NY, USA, 2019. [Google Scholar]

- Holzwarth, M.; Janiszewski, C.; Neumann, M.M. The influence of avatars on online consumer shopping behavior. J. Mark. 2006, 70, 19–36. [Google Scholar] [CrossRef]

- Ng, I.C.; Wakenshaw, S.Y. The internet-of-things: Review and research directions. Int. J. Res. Mark. 2017, 34, 3–21. [Google Scholar] [CrossRef]

- Dunkel-Schetter, C.; Folkman, S.; Lazarus, R.S. Correlates of social support receipt. J. Personal. Soc. Psychol. 1987, 53, 71–80. [Google Scholar] [CrossRef][Green Version]

- Menon, K.; Dubé, L. The effect of emotional provider support on angry versus anxious consumers. Int. J. Res. Mark. 2007, 24, 268–275. [Google Scholar] [CrossRef]

- Duhachek, A. Coping: A multidimensional, hierarchical framework of responses to stressful consumption episodes. J. Consum. Res. 2005, 32, 41–53. [Google Scholar] [CrossRef]

- Turner, J.W.; Robinson, J.D.; Tian, Y.; Neustadtl, A.; Angelus, P.; Russell, M.; Mun, S.K.; Levine, B. Can messages make a difference? The association between e-mail messages and health outcomes in diabetes patients. Hum. Commun. Res. 2013, 39, 252–268. [Google Scholar] [CrossRef]

- Hill, C.A. Seeking emotional support: The influence of affiliative need and partner warmth. J. Personal. Soc. Psychol. 1991, 60, 112–121. [Google Scholar] [CrossRef]

- Lee, E.; Lee, J.; Sung, Y. Effects of Users Characteristics and Perceived Value on VPA Satisfaction. Korean J. Consum. Advert. Psychol. 2019, 20, 31–53. [Google Scholar]

- Wada, K.; Shibata, T. Living with seal robots-its sociopsychological and physiological influences on the elderly at a care house. IEEE Trans. Robot. 2007, 23, 972–980. [Google Scholar] [CrossRef]

- Kim, A.; Cho, M.; Ahn, J.; Sung, Y. Effects of gender and relationship type on the response to artificial intelligence. Cyberpsychology Behav. Soc. Netw. 2019, 22, 249–253. [Google Scholar] [CrossRef]

- Gelbrich, K.; Hagel, J.; Orsingher, C. Emotional support from a digital assistant in technology-mediated services: Effects on customer satisfaction and behavioral persistence. Int. J. Res. Mark. 2021, 38, 176–193. [Google Scholar] [CrossRef]

- Fiske, S.T.; Cuddy, A.J.C.; Glick, P.; Xu, J. A model of (often mixed) stereotype content: Competence and warmth respectively follow from perceived status and competition. J. Personal. Soc. Psychol. 2002, 82, 878–902. [Google Scholar] [CrossRef]

- Gao, Y.L.; Mattila, A.S. Improving consumer satisfaction in green hotels: The roles of perceived warmth, perceived competence, and CSR motive. Int. J. Hosp. Manag. 2014, 42, 20–31. [Google Scholar] [CrossRef]

- McKnight, D.H.; Chervany, N.L. Trust and distrust definitions: One bite at a time. Trust. Cyber-Soc. 2001, 2245, 27–54. [Google Scholar]

- Rheua, M.; Shina, J.Y.; Penga, W.; Huh-Yoob, J. Systematic review: Trust-building factors and implications for conversational agent design. Int. J. Hum. -Comput. Interact. 2021, 37, 81–96. [Google Scholar] [CrossRef]

- Pierce, J.R.; Kilduff, G.J.; Galinsky, A.D.; Sivanathan, N. From glue to gasoline: How competition turns perspective takers unethical. Psychol. Sci. 2013, 24, 1986–1994. [Google Scholar] [CrossRef] [PubMed]

- Waytz, A.; Heafner, J.; Epley, N. The mind in the machine: Anthropomorphism increases trust in an autonomous vehicle. J. Exp. Soc. Psychol. 2014, 52, 113–117. [Google Scholar] [CrossRef]

- Bickmore, T.W.; Picard, R.W. Establishing and maintaining long-term human-computer relationships. ACM Trans. Comput.-Hum. Interact. 2005, 12, 293–327. [Google Scholar] [CrossRef]

- Broadbent, E.; Kumar, V.; Li, X.; Sollers, J., 3rd; Stafford, R.Q.; MacDonald, B.A.; Wegner, D.M. Robots with display screens: A robot with a more human-like face display is perceived to have more mind and a better personality. PLoS ONE 2013, 8, e72589. [Google Scholar]

- Yam, K.C.; Bigman, Y.E.; Tang, P.M.; Ilies, R.; De Cremer, D.; Soh, H.; Gray, K. Robots at work: People prefer—and forgive—service robots with perceived feelings. J. Appl. Psychol. 2020. Advance online publication. [Google Scholar] [CrossRef]

- Guzman, A.L. Voices in and of the machine: Source orientation toward mobile virtual assistants. Comput. Hum. Behav. 2018, 90, 343–350. [Google Scholar] [CrossRef]

- Cho, M.; Lee, S.; Lee, K.P. Once a Kind Friend is Now a Thing: Understanding How Conversational Agents at Home are Forgotten. In Proceedings of the 2019 on Designing Interactive Systems Conference (DIS ’19), San Diego, CA, USA, 23–28 June 2019; Association for Computing Machinery: New York, NY, USA, 2019; pp. 1557–1569. [Google Scholar]

- Cowan, B.R.; Pantidi, N.; Coyle, D.; Morrissey, K.; Clarke, P.; Al-Shehri, S.; Earley, D.; Bandeira, N. "What can i help you with?": Infrequent users’ experiences of intelligent personal assistants. In Proceedings of the 19th International Conference on Human-Computer Interaction with Mobile Devices and Services (MobileHCI’17), Vienna, Austria, 4–7 September 2017; Association for Computing Machinery: New York, NY, USA, 2017. Article 43. pp. 1–12. [Google Scholar]

- Marchick, A. The 2017 Voice Report by Alpine (fka VoiceLabs). Available online: https://medium.com/@marchick/the-2017-voice-report-by-alpine-fka-voicelabs-24c5075a070f (accessed on 29 August 2020).

- Rieh, S.Y.; Yang, J.Y.; Yakel, E.; Markey, K. Conceptualizing institutional repositories: Using co-discovery to uncover mental models. In Proceedings of the third symposium on Information interaction in context, New Brunswick, NJ, USA, 18–21 August 2010; pp. 165–174. [Google Scholar]

- Katz, E.; Blumler, J.G.; Gurevitch, M. Utilization of Mass Communication by the Individual. In The Uses of Mass Communications: Current Perspectives on Gratifications Research; Sage: Beverly Hills, CA, USA, 1974; pp. 19–32. [Google Scholar]

- Grellhesl, M.; Punyaunt-Carter, N.M. Using the uses and gratifications theory to understand gratifications sought through text messaging practices of male and female undergraduate students. Comput. Hum. Behav. 2012, 28, 2175–2181. [Google Scholar] [CrossRef]

- Ashfaq, M.; Yun, J.; Yu, S. My smart speaker is cool! perceived coolness, perceived values, and users’ attitude toward smart speakers. Int. J. Hum.-Comput. Interact. 2020, 37, 560–573. [Google Scholar] [CrossRef]

- Yoo, J.; Choi, S.; Choi, M.; Rho, J. Why people use Twitter: Social conformity and social value perspectives. Online Inf. Rev. 2014, 38, 265–283. [Google Scholar] [CrossRef]

- Rauschnabel, P.A.; He, J.; Ro, Y.K. Antecedents to the adoption of augmented reality smart glasses: A closer look at privacy risks. J. Bus. Res. 2018, 92, 374–384. [Google Scholar] [CrossRef]

- Park, K.; Kwak, C.; Lee, J.; Ahn, J.H. The effect of platform characteristics on the adoption of smart speakers: Empirical evidence in South Korea. Telemat. Inform. 2018, 35, 2118–2132. [Google Scholar] [CrossRef]

- Kowalczuk, P. Consumer acceptance of smart speakers: A mixed methods approach. J. Res. Interact. Mark. 2018, 12, 418–431. [Google Scholar] [CrossRef]

- Yang, H.; Lee, H. Understanding user behavior of virtual personal assistant devices. Inf. Syst. e-Bus. Manag. 2019, 17, 65–87. [Google Scholar] [CrossRef]

- Chattaramana, V.; Kwon, W.; Gilbert, J.E.; Ross, K. Should AI-Based, conversational digital assistants employ social- or task- oriented interaction style? A task-competency and reciprocity perspective for older adults. Comput. Hum. Behav. 2019, 90, 315–330. [Google Scholar] [CrossRef]

- Reeves, B.; Nass, C. The Media Equation: How People Treat Computers, Television, and New Media Like Real People and Places; CSLI Publications and Cambridge University Press: Cambridge, UK, 1996. [Google Scholar]

- Tamagawa, R.; Watson, C.I.; Kuo, I.H.; MacDonald, B.A.; Broadbent, E. The effects of synthesized voice accents on user perceptions of robots. Int. J. Soc. Robot. 2011, 3, 253–262. [Google Scholar] [CrossRef]

- Kwon, M.; Jung, M.F.; Knepper, R.A. Human Expectations of Social Robots. In Proceedings of the 2016 11th ACM/IEEE International Conference on Human-Robot Interaction (HRI), Christchurch, New Zealand, 7–10 March 2016, ISSN 2167-2148. [Google Scholar]

- Hoy, M.B. Alexa, Siri, Cortana, and More: An Introduction to Voice Assistants. Interactive home healthcare system with integrated voice assistant. In Proceedings of the 2019 42nd International Convention on Information and Communication Technology, Electronics and Microelectronics (MIPRO), Opatija, Croatia, 20–24 May 2019; pp. 284–288. [Google Scholar]

- Hergeth, S.; Lorenz, L.; Vilimek, R.; Krems, J.F. Keep your scanners peeled: Gaze behavior as a measure of automation trust during highly automated driving. Hum. Factors 2016, 58, 509–519. [Google Scholar] [CrossRef]

- Cardiocube Voice-Based Ai Software. Available online: https://www.cardiocube.com/ (accessed on 21 November 2021).

- The Intelligent Healthcare Companion for the Home. Available online: https://www.pillohealth.com/ (accessed on 21 November 2021).

- Wolters, M.K.; Kelly, F.; Kilgour, J. Designing a spoken dialogue interface to an intelligent cognitive assistant for people with dementia. Health Inform. J. 2016, 22, 854–866. [Google Scholar] [CrossRef] [PubMed]

- Ondáš, S.; Pleva, M.; Hládek, D. How chatbots can be involved in the education process. In Proceedings of the 2019 17th International Conference on Emerging eLearning Technologies and Applications (ICETA), Starý Smokovec, Slovakia, 21–22 November 2019; pp. 575–580. [Google Scholar]

- Terzopoulos, G.; Satratzemi, M. Voice assistants and artificial intelligence in education. In Proceedings of the 9th Balkan Conference on Informatics (BCI’19), Sofia, Bulgaria, 26–28 September 2019; Association for Computing Machinery: New York, NY, USA, 2019; Volume 34, pp. 1–6. [Google Scholar]

- Alonso, R.; Concas, E.; Reforgiato Recupero, D. An abstraction layer exploiting voice assistant technologies for effective human—Robot interaction. Appl. Sci. 2021, 11, 9165. [Google Scholar] [CrossRef]

- Teixeira, A.; Hämäläinen, A.; Avelar, J.; Almeida, N.; Németh, G.; Fegyó, T.; Zainkó, C.; Csapó, T.; Tóth, B.; Oliveira, A.; et al. Speech-centric Multimodal Interaction for Easy-to-access Online Services—A Personal Life Assistant for the Elderly. Procedia Comput. Sci. 2014, 27, 389–397. [Google Scholar] [CrossRef]

- Fernando, N.; Tan, F.T.C.; Vasa, R.; Mouzaki, K.; Aitken, I. Examining digital assisted living: Towards a case study of smart homes for the elderly. In Proceedings of the 24th European Conference on Information Systems ECIS, Istanbul, Turkey, 12–15 June 2016; pp. 12–15. [Google Scholar]

- Portet, F.; Vacher, M.; Golanski, C.; Roux, C.; Meillon, B. Design and evaluation of a smart home voice interface for the elderly: Acceptability and objection aspects. Pers. Ubiquitous Comput. 2013, 17, 127–144. [Google Scholar] [CrossRef]

- Johnson-Laird, P.N. Mental models and thought. In The Cambridge Handbook of Thinking and Reasoning; Cambridge University Press: Cambridge, UK, 2005; pp. 185–208. [Google Scholar]

- Volkamer, M.; Renaud, K. Mental models general introduction and review of their application to human-centred security. Number Theory Cryptogr. 2013, 8260, 255–280. [Google Scholar]

- Craik, K.J.W. The Nature of Explanation; CUP Archive: Cambridge, UK, 1967; p. 445. [Google Scholar]

- Zaltman, G. How Customers Think: Essential Insights into the Mind of the Market; Harvard Business School Press: Boston, MA, USA, 2003. [Google Scholar]

- Carey, S. Cognitive science and science education. Am. Psychol. 1986, 41, 1123–1130. [Google Scholar] [CrossRef]

- Devedzic, V.; Radovic, D. A Framework for Building Intelligent Manufacturing Systems. IEEE Trans. Syst. Man Cybern. Part C (Appl. Rev.) 1999, 29, 422–439. [Google Scholar] [CrossRef]

- Xie, B.; Zhou, J.; Wang, H. How influential are mental models on interaction performance? Exploring the gap between users’ and designers’ mental models through a new quantitative method. Adv. Hum. -Comput. Interact. 2017, 2017, 1–14. [Google Scholar] [CrossRef]

- Norman, D.A. The Psychology of Everyday Things (The Design of Everyday Things); Basic Books: New York, NY, USA, 1988. [Google Scholar]

- Helander, M.G.; Landauer, T.K.; Prabhu, P.V. Handbook of Human-Computer Interaction, 2nd ed.; North Holland/Elsevier: Amsterdam, The Netherlands, 1997. [Google Scholar]

- Van der veer, G.C.; Melguizo, M.C.P. Mental models. In The Human-Computer Interaction Handbook: Fundamentals, Evolving Technologies and Emerging Applications; CRC Press: Boca Raton, FL, USA, 2002; pp. 52–80. [Google Scholar]

- Powers, A.; Kiesler, S. The advisor robot: Tracing people’s mental model from a robot’s physical attributes. In Proceedings of the 1st ACM SIGCHI/SIGART conference on Human-robot interaction, Salt Lake City, UT, USA, 2–3 March 2006; pp. 218–225. [Google Scholar]

- Nass, C.; Brave, S. Wired for Speech: How Voice Activates and Advances the Human–Computer Relationship; MIT Press: Cambridge, MA, USA, 2005. [Google Scholar]

- Nass, C.; Lee, K.M. Does computer-synthesized speech manifest personality? Experimental tests of recognition, similarity-attraction, and consistency-attraction. J. Exp. Psychol. 2001, 7, 171–181. [Google Scholar] [CrossRef]

- Kiesler, S. Fostering common ground in human-robot interaction. In Proceedings of the IEEE International Workshop on Robots and Human Interactive Communication (RO-MAN 2005), Nashville, TN, USA, 13–15 August 2005; pp. 158–163. [Google Scholar]

- Rowe, A.L.; Cooke, N.J. Measuring mental models: Choosing the right tools for the job. Hum. Resour. Dev. Q. 1995, 6, 243–255. [Google Scholar] [CrossRef]

- Yamada, Y.; Ishihara, K.; Yamaoka, T. A study on an usability measurement based on the mental model. In International Conference on Universal Access in Human-Computer Interaction; Springer: Berlin/Heidelberg, Germany, 2011; pp. 168–173. [Google Scholar]

- Hsu, Y.C. The effects of metaphors on novice and expert learners’ performance and mental-model development. Interact. Comput. 2006, 18, 770–792. [Google Scholar] [CrossRef]

- Ziefle, M.; Bay, S. Mental models of a cellular phone menu. Comparing older and younger novice users. In Proceedings of the International Conference on Mobile Human-Computer Interaction, Glasgow, UK, 13 September 2004; Springer: Berlin/Heidelberg, Germany, 2004; pp. 25–37. [Google Scholar]

- Brooks, J.; Brooks, M. In Search of Understanding: The Case for the Constructivist Classrooms; ASCD: Alexandria, VA, USA, 1999. [Google Scholar]

- Wilson, B.; Lowry, M. Constructivist Learning on the Web. New Dir. Adult Contin. Educ. 2000, 2000, 79–88. [Google Scholar] [CrossRef]

- Kelly, G.A. A brief introduction to personal construct psychology. In Perspectives in Personal Construct Psychology; Bannister, D., Ed.; Academic Press: Cambridge, MA, USA, 1970; pp. 1–30. [Google Scholar]

- Botella, L. Personal Construct Psychology and social constructionism. In Proceedings of the XI International Congress on Personal Construct Psychology, Barcelona, Spain; 1995; pp. 1–22. [Google Scholar]

- Raskin, J.D. Constructivism in psychology: Personal construct psychology, radical constructivism, and social constructionism. Am. Commun. J. 2002, 5, 1–25. [Google Scholar]

- Glaserfeld, E. Radical Constructivism: A Way of Knowing and Learning; The Falmer Press: London, UK, 1995. [Google Scholar]

- Maturana, H.R.; Varela, F.J. The Tree of Knowledge: The Biological Roots of Human Understanding; Shambhala: Boston, MA, USA, 1987. [Google Scholar]

- Ziemke, T. The construction of ‘reality’ in the robot: Constructivist perspectives on situated artificial intelligence and adaptive robotics. Found. Sci. 2001, 6, 163–233. [Google Scholar] [CrossRef]

- Walker, B.M.; Winter, D.A. The elaboration of personal construct psychology. Annu. Rev. Psychol. 2007, 58, 453–477. [Google Scholar] [CrossRef]

- Botella, L.; Feixas, G. Teoría de los Constructos Personales: Aplicaciones a la Práctica Psicológica; Laertes: Barcelona, Spain, 1988. [Google Scholar]

- Tomico, O.; Pifarré, M.; Lloveras, J. Experience landscapes: A subjective approach to explore user-product interaction. Int. Des. Conf.-Des. 2006, 2006, 393–400. [Google Scholar]

- Burr, V.; McGrane, A.; King, N. Personal construct qualitative methods. In Handbook of Research Methods in Health Social Sciences; Liamputtong, P., Ed.; Springer: Singapore, 2017. [Google Scholar]

- Leach, C.; Freshwater, K.; Aldridge, J.; Sunderland, J. Analysis of repertory grids in clinical practice. Br. J. Clin. Psychol. 2001, 40, 225–248.

- Burr, V.; King, N.; Heckmann, M. The qualitative analysis of repertory grid data: Interpretive Clustering. Qual. Res. Psychol. 2020, 1–25.

- Gutman, J.; Reynolds, T.J. An investigation at the levels of cognitive abstraction utilized by the consumers in product differentiation. In Attitude Research under the Sun; Eighmey, J., Ed.; American Marketing Association: Chicago, IL, USA, 1979.

- Reynolds, T.J.; Gutman, J. Laddering: Extending the repertory grid methodology to construct attribute-consequence-value hierarchies. Pers. Values Consum. Psychol. 1984, 2, 155–167.

- Bech-Larsen, T.; Nielsen, N.A. A comparison of five elicitation techniques for elicitation of attributes of low involvement products. J. Econ. Psychol. 1999, 20, 315–341.

- Reynolds, T.J.; Gutman, J. Laddering theory, method, analysis, and interpretation. J. Advert. Res. 1988, 28, 11–31.

- Fransella, F. Some skills and tools for personal construct practitioners. In International Handbook of Personal Construct Psychology; John Wiley & Sons, Ltd.: Hoboken, NJ, USA, 2003; pp. 105–121.

- Subramony, D. Introducing a "Means-End" Approach to Human-Computer Interaction: Why Users Choose Particular Web Sites Over Others; Association for the Advancement of Computing in Education (AACE): Waynesville, NC, USA, 2002.

- Zaman, B. Introducing contextual laddering to evaluate the likeability of games with children. Cogn. Technol. Work. 2008, 10, 107–117.

- Jans, G.; Calvi, L. Using Laddering and Association Techniques to Develop a User-Friendly Mobile (City) Application. In On the Move to Meaningful Internet Systems 2006: OTM 2006 Workshops; Springer: Berlin/Heidelberg, Germany, 2006; pp. 1956–1965.

- Kagan, J. Surprise, Uncertainty, and Mental Structures; Harvard University Press: Cambridge, MA, USA, 2002.

- Zaltman, G.; Coulter, R.H. Seeing the voice of the customer: Metaphor-based advertising research. J. Advert. Res. 1995, 35, 35–51.

- Coulter, R.A.; Zaltman, G.; Coulter, K.S. Interpreting consumer perceptions of advertising: An application of the Zaltman metaphor elicitation technique. J. Advert. 2001, 30, 1–21.

- Ho, S.; Liao, C.; Sun, P. Explore Consumers’ Experience In Using Facebook Through Mobile Devices. PACIS 2012 Proceedings, 7 July 2012. Available online: http://aisel.aisnet.org/pacis2012/713 (accessed on 17 July 2020).

- Siergiej, E.; Mentor, F. Intimate Advertising: A Study of Female Emotional Responses Using the ZMET. Explorations 2009, IV, 108–119.

- Christensen, G.L.; Olson, J.C. Mapping consumers’ mental models with ZMET. Psychol. Mark. 2002, 19, 477–502.

- Zaltman, G.; Zaltman, L.H. Marketing Metaphoria: What Deep Metaphors Reveal about the Minds of Consumers; Harvard Business Press: Boston, MA, USA, 2008.

- Luntz, F. Words That Work: It’s Not What You Say, It’s What People Hear; Hachette UK: Paris, France, 2007.

- Hancock, C.C.; Foster, C. Exploring the ZMET methodology in services marketing. J. Serv. Mark. 2019, 8, 1–11.

- Joy, A.; Sherry, J., Jr.; Venkatesh, A.; Deschenes, J. Perceiving images and telling tales: A visual and verbal analysis of the meaning of the Internet. J. Consum. Psychol. 2009, 19, 556–566.

- Zaltman, G.; Coulter, R.H. Using the Zaltman metaphor elicitation technique to understand brand images. Assoc. Consum. Res. 1994, 21, 281–295.

- Lee, M.S.Y.; McGoldrick, P.J.; Keeling, K.A.; Doherty, J. Using ZMET to explore barriers to the adoption of 3G mobile banking services. Int. J. Retail. Distrib. Manag. 2003, 31, 340–348.

- Bias, R.G.; Moon, B.M.; Hoffman, R.R. Concept mapping usability evaluation: An exploratory study of a new usability inspection method. Int. J. Hum.–Comput. Interact. 2015, 31, 571–583.

- Eakin, E. Penetrating the Mind by Metaphor. New York Times, 23 February 2002.

| No. | Gender | Age | Total Duration of VA Use | Type of VA Used | Frequency of Use |
|---|---|---|---|---|---|
| P1 | Female | 30 | 5 years | Smartphone | once a week |
| P2 | Female | 29 | 3 years | Smartphone, AI Speaker | once a week |
| P3 | Female | 46 | 1 year | Smartphone, AI Speaker | 10–14 times a week |
| P4 | Male | 29 | 6 months | Navigation | 5 times a week |
| P5 | Male | 31 | 2 years | Navigation, AI Speaker | every day |
| P6 | Female | 28 | 1.5 years | Smartphone, AI Speaker | 1–2 times a week |
| P7 | Female | 37 | 6 years | Smartphone, AI Speaker | once a week |
| P8 | Male | 29 | 3 months | Smartphone, AI Speaker | once a week |
| P9 | Female | 25 | 2 months | Smartphone, AI Speaker | every day |
| P10 | Female | 43 | 1.5 years | Smartphone, AI Speaker | once a week |
| P11 | Male | 29 | 1 year | Navigation | once a week |
| P12 | Male | 27 | 1 month | Smartphone | once a week |
| P13 | Male | 39 | 1 month | Smartphone | once a week |
| P14 | Male | 30 | 2 years | Smartphone, AI Speaker | 5 times a week |
| P15 | Female | 34 | 2 years | Smartphone, AI Speaker | every day |
| P16 | Female | 29 | 4 years | Smartphone, AI Speaker | every day |
| P17 | Female | 29 | 3 years | Smartphone | 1–2 times a week |
| P18 | Female | 28 | 5 years | Smartphone | every day |
| P19 | Male | 35 | 2 years | Smartphone | 2–3 times a week |

| Category | Process | Question or Instruction |
|---|---|---|
| Pre-test Questionnaire | Laddering | Why do you use a voice assistant? If you have not used one, what would you use it for? |
| | | What are the consequences and/or values of using a voice assistant? |
| | | What role would you like the voice assistant to play? |
| | | What are your opinions or thoughts about voice assistants? |
| Free Navigation | | Use the provided voice assistant, asking questions in the categories below, until you feel satisfied. (music, timer, weather, web search, small talk, scheduling, translation, speaker settings, calculation, traffic navigation, finding a location, alarm) |
| ZMET | Step 1: Collect images | From the magazine provided, please clip images that express the emotions or impressions evoked while you used the voice assistant in the previous step. |
| | Step 2: Storytelling | Please explain the images you have collected. |
| | Step 3: Missing images | Was there an image that you were looking for in the magazine while you were clipping images? |
| | | Why were you looking for those images? |
| | | Is there any image that you used as a substitute because the one you needed was not in the magazine? |
| | Step 4: Construct elicitation | (If so,) why did you think that it could replace the image you were looking for? |
| | | Please classify the images you found according to your own criteria. |
| | | When you have finished sorting, please label each category. |
| | | Please explain the theme of each category. Explain your criteria for sorting the images and the way you sorted them. |
| | | Did an additional category come to mind while you were deciding on the categories? If so, describe some images that would fit that category. |
| | Step 5: Metaphor elaboration | Out of all the images, please choose the one that best describes your thoughts and emotions about voice assistants. |
| | | What should be added to the selected image (such as colors or images) to clarify your thoughts and emotions? Please use the sticky notes to explain. |
| | Step 6: Sensory images | Please describe an image that is the opposite of the images in the themes classified in Step 4. |
| | | Please describe the voice assistant using sensory images. |
| | | Why did you think so? |
| | Step 7: The vignette | Please create a collage that describes your thoughts and feelings about a voice assistant. |
| | | Please explain your collage. |

| Theme | Image | Meaning |
|---|---|---|
| Image (a) P6 | Shoulder contact with a person with a dog’s face. | I am happy that I am using the VA. VA, my cute friend, and me. |
| | The sun behind the dog’s face. | Emphasizes the cute and friendly image. |
| | A person watching a screen against a dark background. | VA is searching for the information I am asking about. |
| | A dim-faced person. | It’s hard to tell because it’s piled up in a veil; the VA has a double side, like a friend. |
| Image (b) P14 | A luxurious bag. | (1) A bag the secretary is likely to carry. (2) The luxurious feeling reminds me of a secretary. |
| | A kite-flying man. | A free-spreading seeker of information in the VA. |
| | A person watching a screen against a dark background. | VA searching for information for me reminds me of a secretary. |
| Image (c) P18 | Nose sculptures and sunglasses. | An intelligent, high-dimensional machine, unlike humans; expresses that the VA is both similar to and different from humans. |
| | Various colors. | Emphasis on VA moving forward. |
| | Earth and stars. | VA’s potential for development, like endless and infinite space. |
| | People standing on the black line. | The black line is the borderline between humans and VA, and those standing on it represent the present, in contrast to the forward-moving VA. |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Park, D.; Namkung, K. Exploring Users’ Mental Models for Anthropomorphized Voice Assistants through Psychological Approaches. Appl. Sci. 2021, 11, 11147. https://doi.org/10.3390/app112311147