Weed Detection in Rice Fields Using Remote Sensing Technique: A Review

Abstract

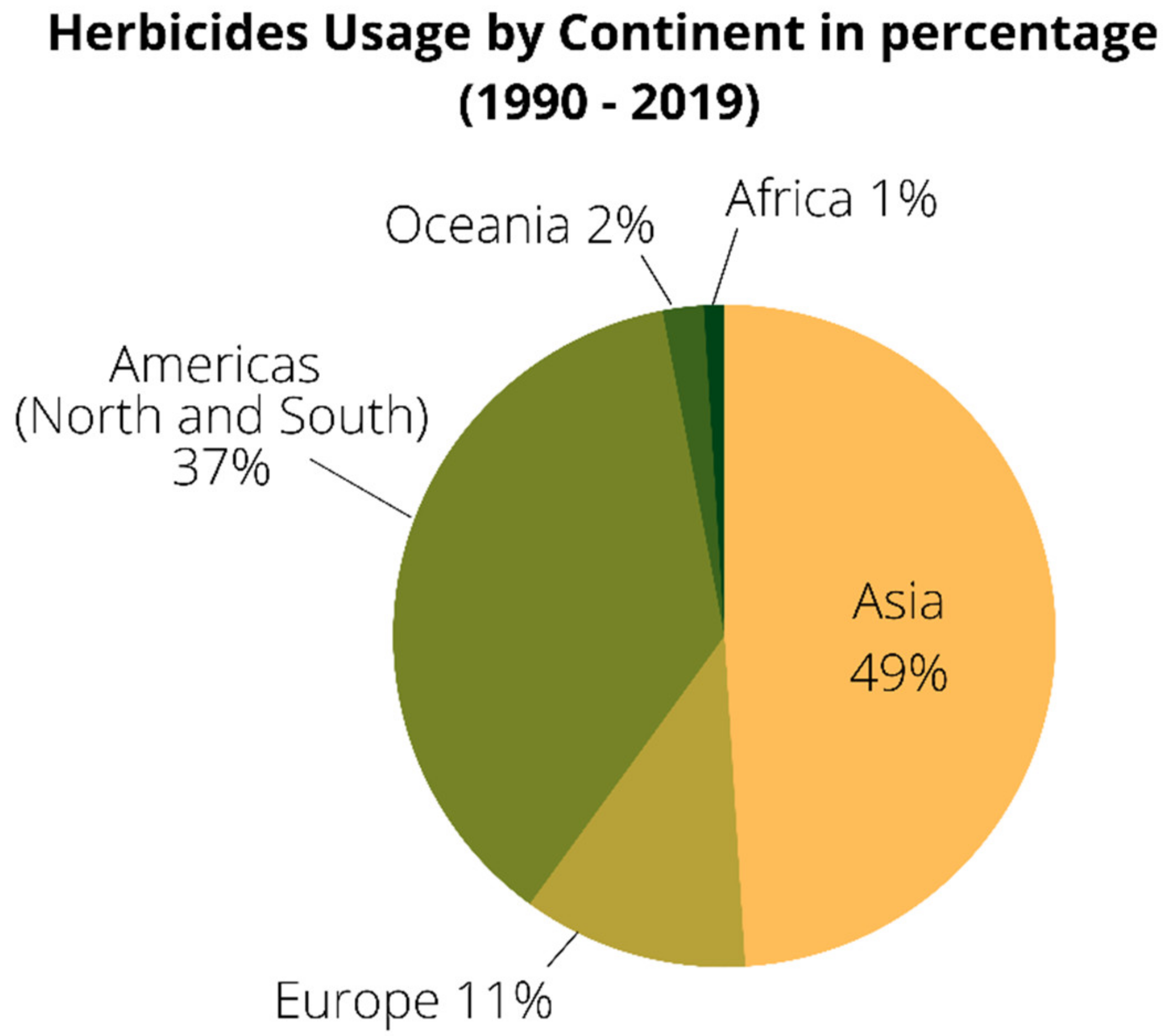

1. Introduction

2. Methodology

3. The Importance of Rice Productivity

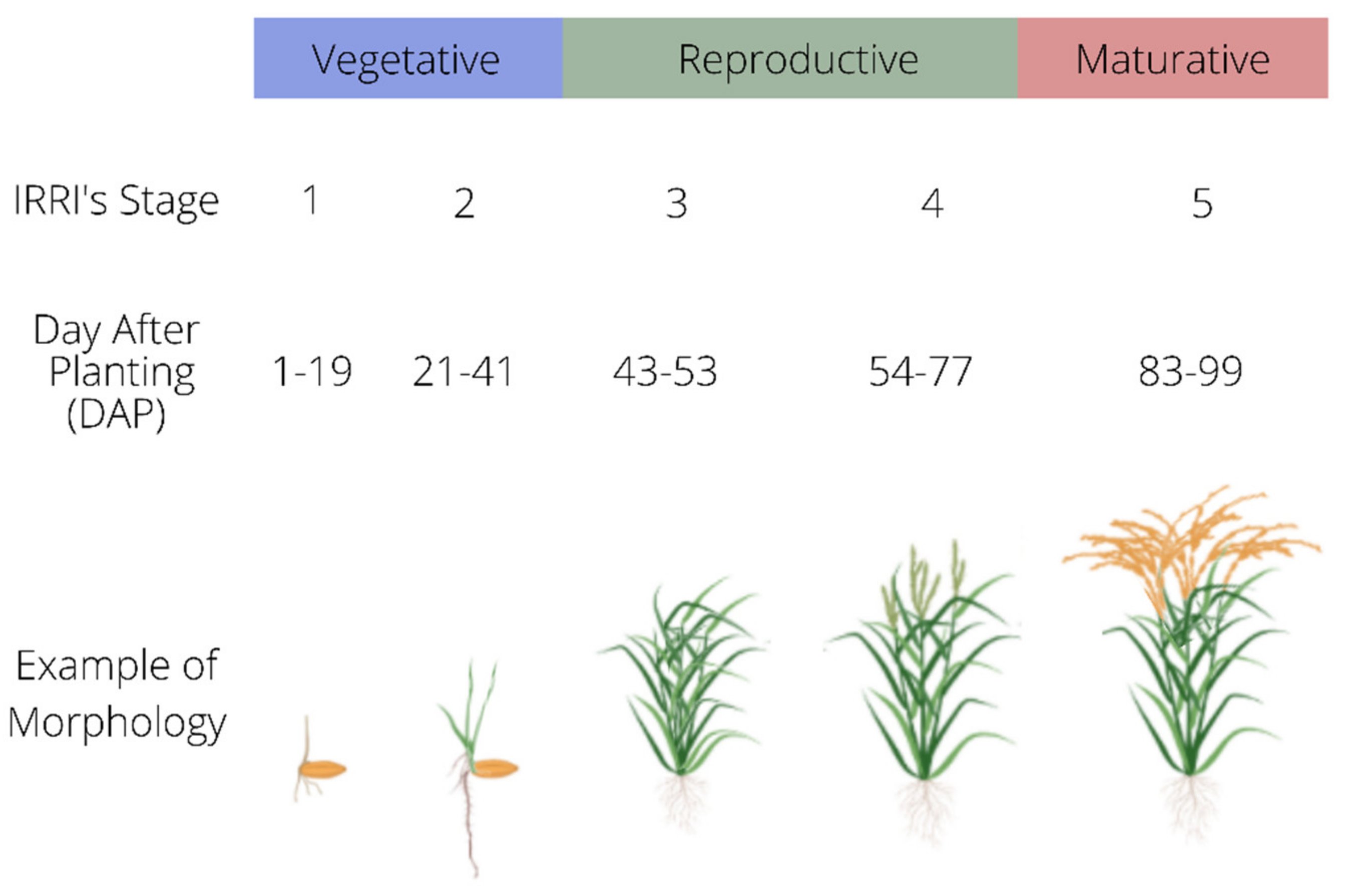

4. Controlling Weeds in Paddy Fields at Different Growth Stages

5. Weed Detection Using Remote Sensing Technique

5.1. Image Data Collection

5.1.1. RGB Sensor

5.1.2. Multispectral Sensor

5.1.3. Hyperspectral Sensor

5.2. Image Mosaicking and Calibration

5.3. Feature Extraction and Selection

5.4. Image Classification and Validation

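The kappa coefficient (K) is computed from the error matrix as:

$$K = \frac{Q\sum_{a=1}^{U} c_{aa} - \sum_{a=1}^{U} c_{a\cdot}\,c_{\cdot a}}{Q^{2} - \sum_{a=1}^{U} c_{a\cdot}\,c_{\cdot a}}$$

where:

- $c_{aa}$ = element at the ath row and ath column.
- $c_{\cdot a}$ = column sums.
- $Q$ = total number of pixels.
- $U$ = total number of classes.
- $c_{a\cdot}$ = row sums.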
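The kappa coefficient is built from the error-matrix terms defined above: the diagonal elements $c_{aa}$, the row and column sums, $Q$ and $U$. The following is a minimal Python sketch. It assumes the error matrix is supplied as a list of rows, with the same class order on both axes. The function name `kappa_coefficient` and the example counts are hypothetical and are not taken from the reviewed studies.

```python
from typing import Sequence


def kappa_coefficient(matrix: Sequence[Sequence[int]]) -> float:
    """Kappa coefficient of a U x U error (confusion) matrix.

    Rows and columns must index the same classes in the same order
    (for example, rows = classified map, columns = reference data).
    """
    u = len(matrix)  # U: total number of classes
    if any(len(row) != u for row in matrix):
        raise ValueError("error matrix must be square (U x U)")
    q = sum(sum(row) for row in matrix)  # Q: total number of pixels
    diagonal = sum(matrix[a][a] for a in range(u))  # sum of c_aa (correct pixels)
    row_sums = [sum(matrix[a]) for a in range(u)]  # c_a. for each class a
    col_sums = [sum(matrix[b][a] for b in range(u)) for a in range(u)]  # c_.a
    chance = sum(r * c for r, c in zip(row_sums, col_sums))  # sum of c_a. * c_.a
    return (q * diagonal - chance) / (q * q - chance)


# Hypothetical two-class example (weed vs. crop); counts are illustrative only.
error_matrix = [
    [45, 5],   # classified as weed: 45 reference weed, 5 reference crop
    [10, 40],  # classified as crop: 10 reference weed, 40 reference crop
]
print(round(kappa_coefficient(error_matrix), 3))  # 0.7
```

With these counts, 85 of the 100 pixels are classified correctly. Kappa discounts the agreement expected by chance from the row and column totals (0.5 here), which gives 0.7.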

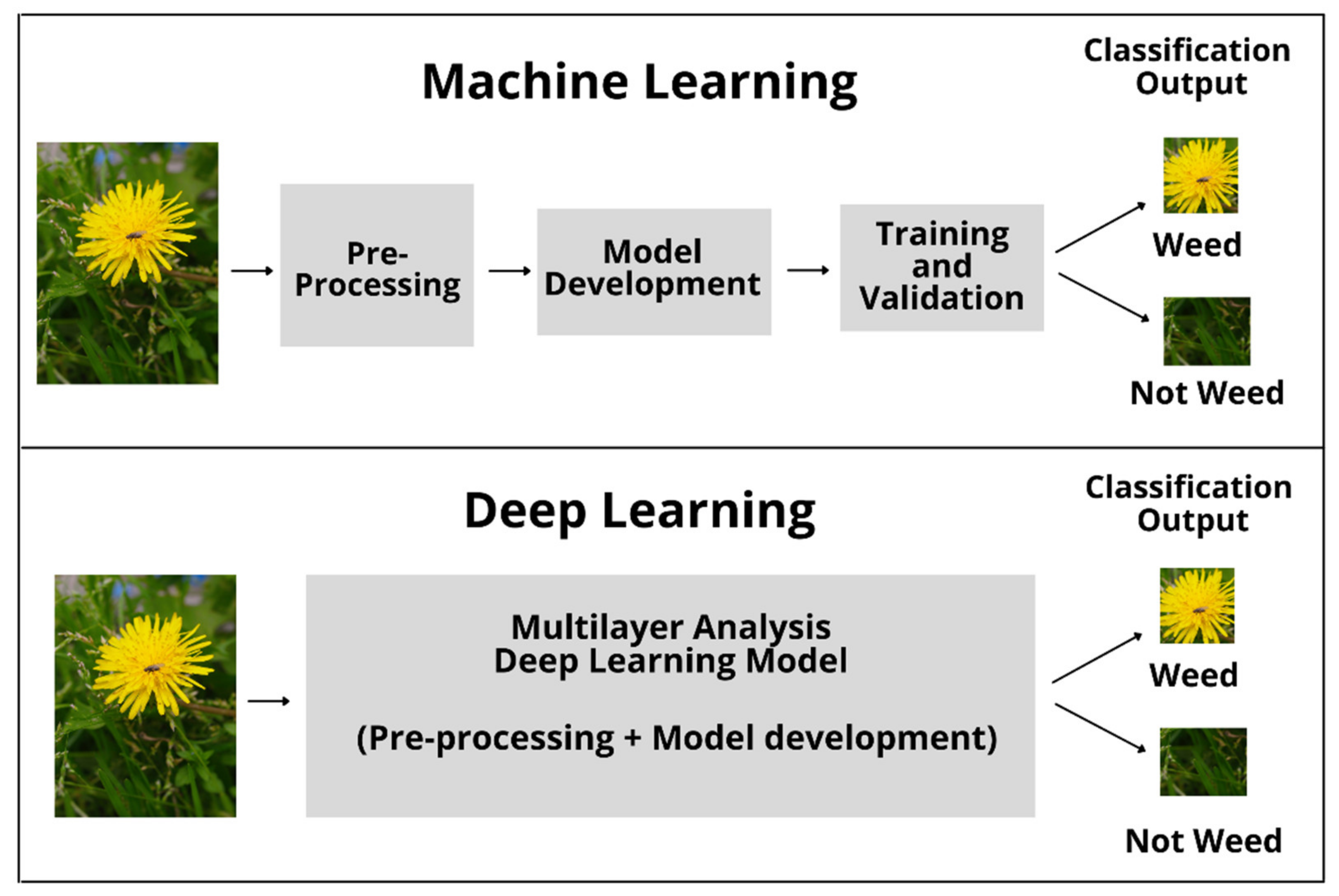

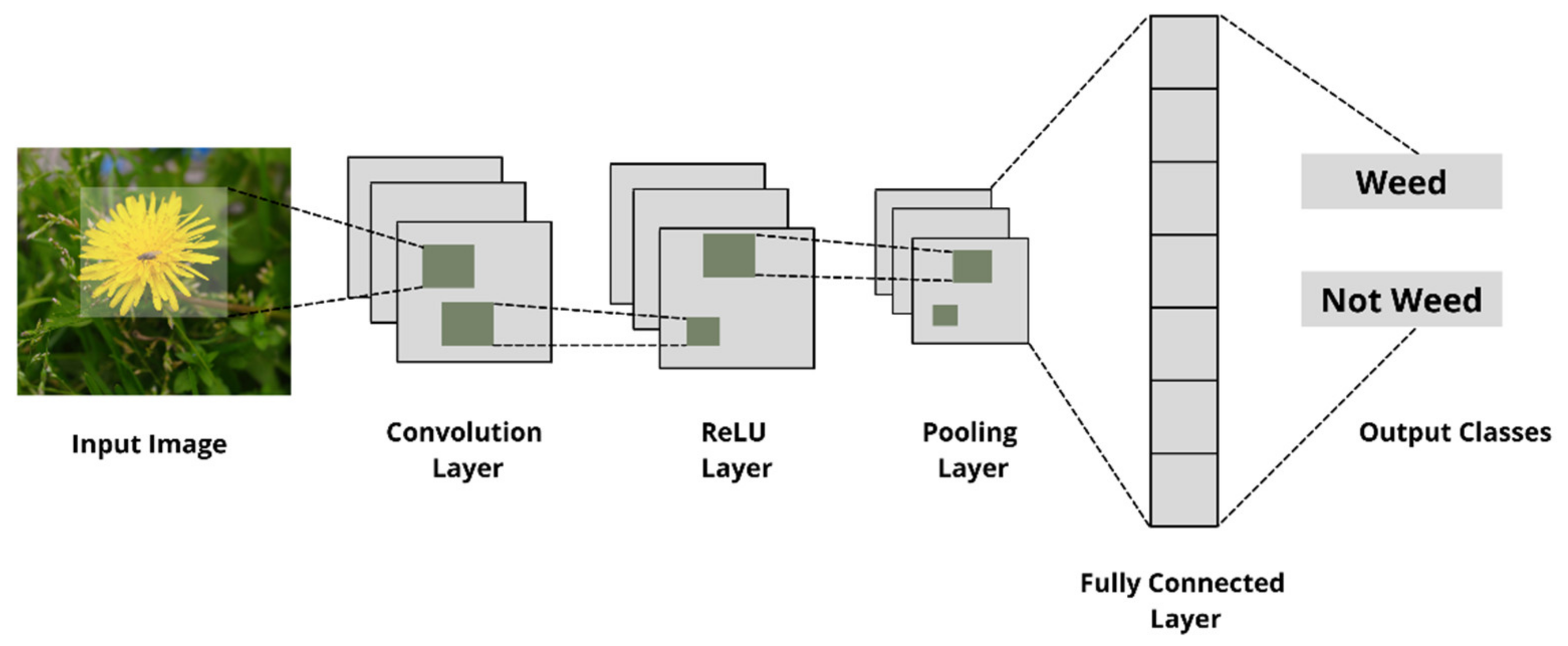

5.5. An Overview of Machine Learning in Agriculture

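$$x_{j}^{l} = f\left(\sum_{i} x_{i}^{l-1} * w_{ij}^{l} + b_{j}^{l}\right)$$

where:

- $x_{j}^{l}$ = feature maps of size $(m - n + 1) \times (m - n + 1)$, for an $m \times m$ input and an $n \times n$ kernel (stride 1, no padding).
- $w_{ij}^{l}$ = weightage (kernel weights).
- $b_{j}^{l}$ = bias.
- $f$ = activation function; $*$ denotes convolution.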
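The feature-map, weightage and bias terms listed above belong to a convolutional layer. Below is a minimal pure-Python sketch of one such layer. For illustration it assumes a single channel, a square input and kernel, stride 1, no padding and a ReLU activation. `conv2d_valid` and `relu` are hypothetical helper names. Like most CNN libraries, the sketch slides the kernel without flipping it (cross-correlation). It shows why an m × m input with an n × n kernel gives an (m − n + 1) × (m − n + 1) feature map.

```python
from typing import Callable, List

Matrix = List[List[float]]


def relu(v: float) -> float:
    """Rectified linear unit, used here as the activation function f."""
    return max(0.0, v)


def conv2d_valid(x: Matrix, w: Matrix, b: float,
                 f: Callable[[float], float] = relu) -> Matrix:
    """Feature map f(sum(x * w) + b) for an m x m input x and an n x n kernel w.

    Stride 1 and no padding, so the output is (m - n + 1) x (m - n + 1).
    """
    m, n = len(x), len(w)
    size = m - n + 1
    feature_map = []
    for i in range(size):
        row = []
        for j in range(size):
            # weighted sum over the n x n window whose top-left corner is (i, j)
            s = sum(x[i + p][j + q] * w[p][q] for p in range(n) for q in range(n))
            row.append(f(s + b))  # add the bias, then apply the activation
        feature_map.append(row)
    return feature_map


# 4 x 4 input with a 2 x 2 kernel -> 3 x 3 feature map (4 - 2 + 1 = 3).
image = [[4 * i + j for j in range(4)] for i in range(4)]
kernel = [[0, 1], [1, 0]]
print(conv2d_valid(image, kernel, b=-5.0))
# [[0.0, 2.0, 4.0], [8.0, 10.0, 12.0], [16.0, 18.0, 20.0]]
```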

5.6. The Application of Remote Sensing and Machine Learning Techniques to Weed Detection

5.6.1. Machine Learning (ML)

- Y = Percentage of crop yield loss.

- X = Percentage of weed coverage.

- M = Proportional percentage increase in grain moisture.

- X = Proportional percentage of weed coverage.

5.6.2. Deep Learning (DL)

5.7. Advantages of Implementing Remote Sensing for Weed Detection through Precision Agriculture (PA)

6. Impact of Weed Management on Crops, Yield and Economy

7. Future Direction

8. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Patel, M.; Jernigan, S.; Richardson, R.; Ferguson, S.; Buckner, G. Autonomous Robotics for Identification and Management of Invasive Aquatic Plant Species. Appl. Sci. 2019, 9, 2410.

- Dilipkumar, M.; Chuah, T.S.; Goh, S.S.; Sahid, I. Weed management issues, challenges, and opportunities in Malaysia. Crop Prot. 2020, 134, 104347.

- Food and Agriculture Organization of the United Nations (FAO). FAOSTAT 2021. Available online: http://www.fao.org/faostat/en/#data/RP/visualize (accessed on 4 June 2021).

- Jones, E.A.L.; Owen, M.D.K.; Leon, R.G. Influence of multiple herbicide resistance on growth in Amaranthus tuberculatus. Weed Res. 2019, 59, 235–244.

- Löbmann, A.; Christen, O.; Petersen, J. Development of herbicide resistance in weeds in a crop rotation with acetolactate synthase-tolerant sugar beets under varying selection pressure. Weed Res. 2019, 59, 479–489.

- Yuzugullu, O.; Erten, E.; Hajnsek, I. A multi-year study on rice morphological parameter estimation with X-band PolSAR data. Appl. Sci. 2017, 7, 602.

- Shiu, Y.S.; Chuang, Y.C. Yield Estimation of Paddy Rice Based on Satellite Imagery: Comparison of Global and Local Regression Models. Remote Sens. 2019, 11, 111.

- Xiang, K.; Ma, M.; Liu, W.; Dong, J.; Zhu, X.; Yuan, W. Mapping Irrigated Areas of Northeast China in Comparison to Natural Vegetation. Remote Sens. 2019, 11, 825.

- Papademetriou, M.K. Rice production in the Asia-Pacific region: Issues and perspectives. Bridg. Rice Yield Gap Asia-Pac. Reg. 2000, 16, 5.

- Pandey, S.; Byerlee, D.; Dawe, D.; Dobermann, A.; Mohanty, S.; Rozelle, S.; Hardy, B. Rice in the Global Economy; International Rice Research Institute: Los Banos, Philippines, 2010.

- United States Department of Agriculture, Foreign Agricultural Services. World Rice Production, Consumption and Stocks. PSD Reports. Available online: https://apps.fas.usda.gov/psdonline/app/index.html#/app/downloads (accessed on 10 November 2020).

- Masum, S.M.; Hossain, M.A.; Akamine, H.; Sakagami, J.; Ishii, T.; Nakamura, I.; Asaduzzaman, M.; Bhowmik, P.C. Performance of Bangladesh indigenous rice in a weed infested field and separation of allelopathy from resource competition. Weed Biol. Manag. 2019, 19, 39–50.

- Yamori, W.; Kondo, E.; Sugiura, D.; Terashima, I.; Suzuki, Y.; Makino, A. Enhanced leaf photosynthesis as a target to increase grain yield: Insights from transgenic rice lines with variable Rieske FeS protein content in the cytochrome b6/f complex. Plant Cell Environ. 2016, 39, 80–87.

- Simkin, A.J.; López-Calcagno, P.E.; Raines, C.A. Feeding the world: Improving photosynthetic efficiency for sustainable crop production. J. Exp. Bot. 2019, 70, 1119–1140.

- Maneepitak, S.; Ullah, H.; Paothong, K.; Kachenchart, B.; Datta, A.; Shrestha, R.P. Effect of water and rice straw management practices on yield and water productivity of irrigated lowland rice in the Central Plain of Thailand. Agric. Water Manag. 2019, 211, 89–97.

- LaHue, G.T.; Chaney, R.L.; Adviento-Borbe, M.A.; Linquist, B.A. Alternate wetting and drying in high yielding direct-seeded rice systems accomplishes multiple environmental and agronomic objectives. Agric. Ecosyst. Environ. 2016, 229, 30–39.

- Liang, K.; Zhong, X.; Huang, N.; Lampayan, R.M.; Pan, J.; Tian, K.; Liu, Y. Grain yield, water productivity and CH4 emission of irrigated rice in response to water management in south China. Agric. Water Manag. 2016, 163, 319–331.

- Van Oort, P.A.; Zwart, S.J. Impacts of climate change on rice production in Africa and causes of simulated yield changes. Glob. Chang. Biol. 2018, 24, 1029–1045.

- Van Oort, P.A.J. Mapping abiotic stresses for rice in Africa: Drought, cold, iron toxicity, salinity and sodicity. Field Crop. Res. 2018, 219, 55–75.

- Dossou-Yovo, E.; Zwart, S.; Kouyaté, A.; Ouédraogo, I.; Bakare, O. Predictors of Drought in Inland Valley Landscapes and Enabling Factors for Rice Farmers’ Mitigation Measures in the Sudan-Sahel Zone. Sustainability 2019, 11, 79.

- Ariza, A.A. Machine Learning and Big Data Techniques for Satellite-Based Rice Phenology Monitoring. PhD Thesis, The University of Manchester, Manchester, UK, 2019.

- Global Rice Science Partnership. Rice Almanac, 4th ed.; International Rice Research Institute: Los Banos, Philippines, 2013.

- Anwar, M.P.; Juraimi, A.S.; Samedani, B.; Puteh, A.; Man, A. Critical period of weed control in aerobic rice. Sci. World J. 2012, 2012, 603043.

- Kamath, R.; Mamatha, B.; Srikanth, P. Paddy Crop and Weed Discrimination: A Multiple Classifier System Approach. Int. J. Agron. 2020, 2020, 6474536.

- Chadhar, A.R.; Nadeem, M.A.; Tanveer, A.; Yaseen, M. Weed management boosts yield in fine rice under system of rice intensification. Planta Daninha 2014, 32, 291–299.

- Ahmed, Q.N.; Hussain, P.Z.; Othman, A.S. Comparative study on vegetative and reproductive development between weedy rice morphotypes and commercial rice varieties in Perak, Malaysia. Trop. Life Sci. Res. 2012, 23, 17.

- Halip, R.M.; Norasma, N.; Fadzli, W.F.I.; Roslee, R.; Roslin, N.A.; Ismail, M.R.; Berahim, Z.; Omar, M.H. Pemantauan Tanaman Padi Menggunakan Sistem Maklumat Geografi dan Imej Multispektral [Monitoring Paddy Crops Using Geographic Information Systems and Multispectral Imagery]. Adv. Agric. Food Res. J. 2020, 1, 1–18.

- Man, A.; Mohammad Saad, M.; Amzah, B.; Masarudin, M.F.; Jack, A.; Misman, S.N.; Ramachandran, K. Buku Poket Perosak, Penyakit dan Rumpai Padi di Malaysia [Pocket Book of Rice Pests, Diseases and Weeds in Malaysia]. In Cetakan Kelima [Fifth Printing]; Institut Penyelidikan dan Kemajuan Pertanian Malaysia (MARDI): Kuala Lumpur, Malaysia, 2018.

- Juraimi, A.S.; Uddin, M.K.; Anwar, M.P.; Mohamed, M.T.M.; Ismail, M.R.; Man, A. Sustainable weed management in direct seeded rice culture: A review. Aust. J. Crop Sci. 2013, 7, 989.

- Power, E.F.; Kelly, D.L.; Stout, J.C. The impacts of traditional and novel herbicide application methods on target plants, non-target plants and production in intensive grasslands. Weed Res. 2013, 53, 131–139.

- Brown, R.B.; Noble, S.D. Site-specific weed management: Sensing requirements—what do we need to see? Weed Sci. 2005, 53, 252–258.

- Oebel, H.; Gerhards, R. Site-specific weed control using digital image analysis and georeferenced application maps: On-farm experiences. In Precision Agriculture ’05. Papers presented at the 5th European Conference on Precision Agriculture, Uppsala, Sweden; Wageningen Academic Publishers: Wageningen, The Netherlands, 2005; pp. 131–137.

- Matloob, A.; Safdar, M.E.; Abbas, T.; Aslam, F.; Khaliq, A.; Tanveer, A.; Rehman, A.; Chadhar, A.R. Challenges and prospects for weed management in Pakistan: A review. Crop Prot. 2019, 134, 104724.

- Bajwa, A.A.; Chauhan, B.S.; Farooq, M.; Shabbir, A.; Adkins, S.W. What do we really know about alien plant invasion? A review of the invasion mechanism of one of the world’s worst weeds. Planta 2016, 244, 39–57.

- Shanmugapriya, P.; Rathika, S.; Ramesh, T.; Janaki, P. Applications of remote sensing in agriculture—A Review. Int. J. Curr. Microbiol. Appl. Sci. 2019, 8, 2270–2283.

- Alzubaidi, L.; Zhang, J.; Humaidi, A.J.; Al-Dujaili, A.; Duan, Y.; Al-Shamma, O.; Santamaría, J.; Fadhel, M.A.; Al-Amidie, M.; Farhan, L. Review of deep learning: Concepts, CNN architectures, challenges, applications, future directions. J. Big Data 2021, 8, 1–74.

- Bakhshipour, A.; Jafari, A. Evaluation of support vector machine and artificial neural networks in weed detection using shape features. Comput. Electron. Agric. 2018, 145, 153–160.

- Fletcher, R.S.; Reddy, K.N. Random forest and leaf multispectral reflectance data to differentiate three soybean varieties from two pigweeds. Comput. Electron. Agric. 2016, 128, 199–206.

- Fenfang, L.; Dongyan, Z.; Xiu, W.; Taixia, W.; Xinfu, C. Identification of corn and weeds on the leaf scale using polarization spectroscopy. Infrared Laser Eng. 2016, 45, 1223001.

- Matongera, T.N.; Mutanga, O.; Dube, T.; Sibanda, M. Detection and mapping the spatial distribution of bracken fern weeds using the Landsat 8 OLI new generation sensor. Int. J. Appl. Earth Obs. Geoinf. 2017, 57, 93–103.

- Esposito, M.; Crimaldi, M.; Cirillo, V.; Sarghini, F.; Maggio, A. Drone and sensor technology for sustainable weed management: A review. Chem. Biol. Technol. Agric. 2021, 8, 1–11.

- Huang, H.; Lan, Y.; Deng, J.; Yang, A.; Deng, X.; Zhang, L.; Wen, S. A semantic labeling approach for accurate weed mapping of high resolution UAV imagery. Sensors 2018, 18, 2113.

- Huang, H.; Deng, J.; Lan, Y.; Yang, A.; Deng, X.; Zhang, L. A fully convolutional network for weed mapping of unmanned aerial vehicle (UAV) imagery. PLoS ONE 2018, 13, e0196302.

- Manfreda, S.; McCabe, M.F.; Miller, P.E.; Lucas, R.; Madrigal, V.P.; Mallinis, G.; Ben Dor, E.; Helman, D.; Estes, L.; Ciraolo, G.; et al. On the use of unmanned aerial systems for environmental monitoring. Remote Sens. 2018, 10, 641.

- Barrero, O.; Rojas, D.; Gonzalez, C.; Perdomo, S. Weed detection in rice fields using aerial images and neural networks. In Proceedings of the 2016 XXI Symposium on Signal Processing, Images and Artificial Vision (STSIVA), Bucaramanga, Colombia, 31 August–2 September 2016; pp. 1–4.

- De Castro, A.; Torres-Sánchez, J.; Peña, J.; Jiménez-Brenes, F.; Csillik, O.; López-Granados, F. An automatic random forest-OBIA algorithm for early weed mapping between and within crop rows using UAV imagery. Remote Sens. 2018, 10, 285.

- Kawamura, K.; Asai, H.; Yasuda, T.; Soisouvanh, P.; Phongchanmixay, S. Discriminating crops/weeds in an upland rice field from UAV images with the SLIC-RF algorithm. Plant Prod. Sci. 2020, 24, 198–215.

- MicaSense Inc. Best Practices: Collecting Data with MicaSense Sensors. MicaSense Knowl. Base. 2020. Available online: https://support.micasense.com/hc/en-us/articles/224893167 (accessed on 23 June 2021).

- Pantazi, X.E.; Tamouridou, A.A.; Alexandridis, T.K.; Lagopodi, A.L.; Kashefi, J.; Moshou, D. Evaluation of hierarchical self-organising maps for weed mapping using UAS multispectral imagery. Comput. Electron. Agric. 2017, 139, 224–230.

- Stroppiana, D.; Villa, P.; Sona, G.; Ronchetti, G.; Candiani, G.; Pepe, M.; Busetto, L.; Migliazzi, M.; Boschetti, M. Early season weed mapping in rice crops using multispectral UAV data. Int. J. Remote Sens. 2019, 39, 5432–5452.

- Adão, T.; Hruška, J.; Pádua, L.; Bessa, J.; Peres, E.; Morais, R.; Sousa, J.J. Hyperspectral imaging: A review on UAV-based sensors, data processing and applications for agriculture and forestry. Remote Sens. 2017, 9, 1110.

- Brook, A.; Ben-Dor, E. Supervised vicarious calibration (SVC) of multi-source hyperspectral remote-sensing data. Remote Sens. 2015, 7, 6196–6223.

- Guo, Y.; Senthilnath, J.; Wu, W.; Zhang, X.; Zeng, Z.; Huang, H. Radiometric calibration for multispectral camera of different imaging conditions mounted on a UAV platform. Sustainability 2019, 11, 978.

- Kelcey, J.; Lucieer, A. Sensor correction and radiometric calibration of a 6-band multispectral imaging sensor for UAV remote sensing. In Proceedings of the 12th Congress of the International Society for Photogrammetry and Remote Sensing, Melbourne, Australia, 25 August–1 September 2019; pp. 393–398.

- Mafanya, M.; Tsele, P.; Botai, J.O.; Manyama, P.; Chirima, G.J.; Monate, T. Radiometric calibration framework for ultra-high-resolution UAV-derived orthomosaics for large-scale mapping of invasive alien plants in semi-arid woodlands: Harrisia pomanensis as a case study. Int. J. Remote Sens. 2018, 39, 5119–5140.

- Karpouzli, E.; Malthus, T. The empirical line method for the atmospheric correction of IKONOS imagery. Int. J. Remote Sens. 2003, 24, 1143–1150.

- Xu, K.; Gong, Y.; Fang, S.; Wang, K.; Lin, Z.; Wang, F. Radiometric calibration of UAV remote sensing image with spectral angle constraint. Remote Sens. 2019, 11, 1291.

- Parrot. Application Note: Pixel Value to Irradiance Using the Sensor Calibration Model; Parrot: Paris, France, 2017; Volume SEQ-AN-01.

- Tu, Y.H.; Phinn, S.; Johansen, K.; Robson, A. Assessing radiometric correction approaches for multispectral UAS imagery for horticultural applications. Remote Sens. 2018, 10, 1684.

- Kumar, G.; Bhatia, P.K. A detailed review of feature extraction in image processing systems. In Proceedings of the 2014 Fourth International Conference on Advanced Computing and Communication Technologies, Rohtak, India, 8–9 February 2014; pp. 5–12.

- Xue, B.; Zhang, M.; Browne, W.N. Particle swarm optimization for feature selection in classification: A multi-objective approach. IEEE Trans. Cybern. 2013, 43, 1656–1671.

- Che’Ya, N.N.; Dunwoody, E.; Gupta, M. Assessment of Weed Classification Using Hyperspectral Reflectance and Optimal Multispectral UAV Imagery. Agronomy 2021, 11, 1435.

- Woebbecke, D.M.; Meyer, G.E.; Von Bargen, K.; Mortensen, D.A. Color indices for weed identification under various soil, residue, and lighting conditions. Trans. ASAE 1995, 38, 259–269.

- Shapiro, L.; Stockman, G. Computer Vision; Prentice Hall Inc.: Upper Saddle River, NJ, USA, 2001.

- Stanković, R.S.; Falkowski, B.J. The Haar wavelet transform: Its status and achievements. Comput. Electr. Eng. 2003, 29, 25–44.

- Pearson, K. LIII. On lines and planes of closest fit to systems of points in space. Lond. Edinb. Dublin Philos. Mag. J. Sci. 1901, 2, 559–572.

- Taati, A.; Sarmadian, F.; Mousavi, A.; Pour, C.T.H.; Shahir, A.H.E. Land Use Classification using Support Vector Machine and Maximum Likelihood Algorithms by Landsat 5 TM Images. Walailak J. Sci. Technol. 2014, 12, 681–687.

- Whiteside, T.G.; Maier, S.F.; Boggs, G.S. Area-based and location-based validation of classified image objects. Int. J. Appl. Earth Obs. Geoinf. 2014, 28, 117–130.

- Bah, M.D.; Dericquebourg, E.; Hafiane, A.; Canals, R. Deep learning based classification system for identifying weeds using high-resolution UAV imagery. In Science and Information Conference; Springer: Cham, Switzerland, 2018; pp. 176–187.

- Bah, M.D.; Hafiane, A.; Canals, R. Deep learning with unsupervised data labeling for weed detection in line crops in UAV images. Remote Sens. 2018, 10, 1690.

- Dos Santos Ferreira, A.; Freitas, D.M.; da Silva, G.G.; Pistori, H.; Folhes, M.T. Unsupervised deep learning and semi-automatic data labeling in weed discrimination. Comput. Electron. Agric. 2019, 165, 104963.

- Liakos, K.G.; Busato, P.; Moshou, D.; Pearson, S.; Bochtis, D. Machine learning in agriculture: A review. Sensors 2018, 18, 2674.

- Zhang, J.; Huang, Y.; Pu, R.; Gonzalez-Moreno, P.; Yuan, L.; Wu, K.; Huang, W. Monitoring plant diseases and pests through remote sensing technology: A review. Comput. Electron. Agric. 2019, 165, 104943.

- Janiesch, C.; Zschech, P.; Heinrich, K. Machine learning and deep learning. Electron. Mark. 2021, 31, 689–695.

- Ongsulee, P. Artificial intelligence, machine learning and deep learning. In Proceedings of the 2017 15th International Conference on ICT and Knowledge Engineering (ICT&KE), Bangkok, Thailand, 22–24 November 2017; pp. 1–6.

- Kamilaris, A.; Prenafeta-Boldú, F.X. Deep learning in agriculture: A survey. Comput. Electron. Agric. 2018, 147, 70–90.

- Huang, H.; Deng, J.; Lan, Y.; Yang, A.; Deng, X.; Wen, S.; Zhang, H.; Zhang, Y. Accurate weed mapping and prescription map generation based on fully convolutional networks using UAV imagery. Sensors 2018, 18, 3299.

- Abirami, S.; Chitra, P. Energy-efficient edge based real-time healthcare support system. In Advances in Computers; Elsevier: Amsterdam, The Netherlands, 2020; Volume 117, pp. 339–368.

- Saha, S.; Nagaraj, N.; Mathur, A.; Yedida, R.; Sneha, H.R. Evolution of novel activation functions in neural network training for astronomy data: Habitability classification of exoplanets. Eur. Phys. J. Spec. Top. 2020, 229, 2629–2738.

- Voulodimos, A.; Doulamis, N.; Doulamis, A.; Protopapadakis, E. Deep learning for computer vision: A brief review. Comput. Intell. Neurosci. 2018, 2018, 7068349.

- Aitkenhead, M.J.; Dalgetty, I.A.; Mullins, C.E.; McDonald, A.J.S.; Strachan, N.J.C. Weed and crop discrimination using image analysis and artificial intelligence methods. Comput. Electron. Agric. 2003, 39, 157–171.

- Karimi, Y.; Prasher, S.O.; Patel, R.M.; Kim, S.H. Application of support vector machine technology for weed and nitrogen stress detection in corn. Comput. Electron. Agric. 2006, 51, 99–109.

- De Castro, A.I.; López-Granados, F.; Jurado-Expósito, M. Broad-scale cruciferous weed patch classification in winter wheat using QuickBird imagery for in-season site-specific control. Precis. Agric. 2013, 14, 392–413.

- Doi, R. Discriminating crop and other canopies by overlapping binary image layers. Opt. Eng. 2013, 52, 020502.

- Shapira, U.; Herrmann, I.; Karnieli, A.; Bonfil, D.J. Field spectroscopy for weed detection in wheat and chickpea fields. Int. J. Remote Sens. 2013, 34, 6094–6108.

- Eddy, P.R.; Smith, A.M.; Hill, B.D.; Peddle, D.R.; Coburn, C.A.; Blackshaw, R.E. Weed and crop discrimination using hyperspectral image data and reduced bandsets. Can. J. Remote Sens. 2014, 39, 481–490.

- López-Granados, F.; Torres-Sánchez, J.; Serrano-Pérez, A.; de Castro, A.I.; Mesas-Carrascosa, F.J.; Pena, J.M. Early season weed mapping in sunflower using UAV technology: Variability of herbicide treatment maps against weed thresholds. Precis. Agric. 2016, 17, 183–199.

- López-Granados, F.; Torres-Sánchez, J.; De Castro, A.I.; Serrano-Pérez, A.; Mesas-Carrascosa, F.J.; Peña, J.M. Object-based early monitoring of a grass weed in a grass crop using high resolution UAV imagery. Agron. Sustain. Dev. 2016, 36, 67.

- Tamouridou, A.A.; Alexandridis, T.K.; Pantazi, X.E.; Lagopodi, A.L.; Kashefi, J.; Moshou, D. Evaluation of UAV imagery for mapping Silybum marianum weed patches. Int. J. Remote Sens. 2017, 38, 2246–2259.

- Yano, I.H.; Santiago, W.E.; Alves, J.R.; Mota, L.T.M.; Teruel, B. Choosing classifier for weed identification in sugarcane fields through images taken by UAV. Bulg. J. Agric. Sci. 2017, 23, 491–497.

- Baron, J.; Hill, D.J.; Elmiligi, H. Combining image processing and machine learning to identify invasive plants in high-resolution images. Int. J. Remote Sens. 2018, 39, 5099–5118.

- Gao, J.; Nuyttens, D.; Lootens, P.; He, Y.; Pieters, J.G. Recognising weeds in a maize crop using a random forest machine-learning algorithm and near-infrared snapshot mosaic hyperspectral imagery. Biosyst. Eng. 2018, 170, 39–50.

- Mateen, A.; Zhu, Q. Weed detection in wheat crop using UAV for precision agriculture. Pak. J. Agric. Sci. 2019, 56, 809–817.

- Huang, H.; Lan, Y.; Yang, A.; Zhang, Y.; Wen, S.; Deng, J. Deep learning versus Object-based Image Analysis (OBIA) in weed mapping of UAV imagery. Int. J. Remote Sens. 2020, 41, 3446–3479.

- Rasmussen, J.; Nielsen, J. A novel approach to estimating the competitive ability of Cirsium arvense in cereals using unmanned aerial vehicle imagery. Weed Res. 2020, 60, 150–160.

- De Castro, A.I.; Peña, J.M.; Torres-Sánchez, J.; Jiménez-Brenes, F.M.; Valencia-Gredilla, F.; Recasens, J.; López-Granados, F. Mapping Cynodon dactylon infesting cover crops with an automatic decision tree-OBIA procedure and UAV imagery for precision viticulture. Remote Sens. 2020, 12, 56.

- Sapkota, B.; Singh, V.; Cope, D.; Valasek, J.; Bagavathiannan, M. Mapping and estimating weeds in cotton using unmanned aerial systems-borne imagery. AgriEngineering 2020, 2, 350–366.

- Boukabara, S.A.; Krasnopolsky, V.; Stewart, J.Q.; Maddy, E.S.; Shahroudi, N.; Hoffman, R.N. Leveraging modern artificial intelligence for remote sensing and NWP: Benefits and challenges. Bull. Am. Meteorol. Soc. 2019, 100, ES473–ES491.

- Arroyo, L.A.; Johansen, K.; Phinn, S. Mapping Land Cover Types from Very High Spatial Resolution Imagery: Automatic Application of an Object Based Classification Scheme. In Proceedings of the GEOBIA 2010: Geographic Object-Based Image Analysis, Ghent, Belgium, 29 June–2 July 2010; International Society for Photogrammetry and Remote Sensing: Ghent, Belgium, 2010.

- Mohamed, Z.; Terano, R.; Shamsudin, M.N.; Abd Latif, I. Paddy farmers’ sustainability practices in granary areas in Malaysia. Resources 2016, 5, 17.

- Jafari, Y.; Othman, J.; Kuhn, A. Market and welfare impacts of agri-environmental policy options in the Malaysian rice sector. Malays. J. Econ. Stud. 2017, 54, 179–201.

- Liu, B.; Bruch, R. Weed Detection for Selective Spraying: A Review. Curr. Robot. Rep. 2020, 1, 19–26.

- Hosoya, K.; Sugiyama, S.I. Weed communities and their negative impact on rice yield in no-input paddy fields in the northern part of Japan. Biol. Agric. Hortic. 2017, 33, 215–224.

- Sosa, A.J.; Cardo, M.V.; Julien, M.H. Predicting weed distribution at the regional scale in the native range: Environmental determinants and biocontrol implications of Phyla nodiflora (Verbenaceae). Weed Res. 2017, 57, 193–203.

- Kuan, C.Y.; Ann, L.S.; Ismail, A.A.; Leng, T.; Fee, C.G.; Hashim, K. Crop loss by weeds in Malaysia. In Proceedings of the Third Tropical Weed Science Conference, Kuala Lumpur, Malaysia, 4–6 December 1990; Malaysian Plant Protection Society: Kuala Lumpur, Malaysia, 1990; pp. 1–21.

- Wayayok, A.; Soom, M.A.M.; Abdan, K.; Mohammed, U. Impact of Mulch on Weed Infestation in System of Rice Intensification (SRI) Farming. Agric. Agric. Sci. Procedia 2014, 2, 353–360.

- Martin, R.J. Weed research issues, challenges, and opportunities in Cambodia. Crop Prot. 2017, 134, 104288.

- Abdulahi, A.; Nassab, D.M.A.; Nasrolahzadeh, S.; Salmasi, Z.S.; Pourdad, S.S. Evaluation of wheat-chickpea intercrops as influenced by nitrogen and weed management. Am. J. Agric. Biol. Sci. 2012, 7, 447–460.

- Zhu, J.; Wang, J.; DiTommaso, A.; Zhang, C.; Zheng, G.; Liang, W.; Islam, F.; Yang, C.; Chen, X.; Zhou, W. Weed research status, challenges, and opportunities in China. Crop Prot. 2018, 134, 104449.

- Varah, A.; Ahodo, K.; Coutts, S.R.; Hicks, H.L.; Comont, D.; Crook, L.; Hull, R.; Neve, P.; Childs, D.; Freckleton, R.P.; et al. The costs of human-induced evolution in an agricultural system. Nat. Sustain. 2020, 3, 63–71.

- Balafoutis, A.; Beck, B.; Fountas, S.; Vangeyte, J.; Van Der Wal, T.; Soto, I.; Gómez-Barbero, M.; Barnes, A.; Eory, V. Precision agriculture technologies positively contributing to GHG emissions mitigation, farm productivity and economics. Sustainability 2017, 9, 1339.

- Ruzmi, R.; Ahmad-Hamdani, M.S.; Bakar, B.B. Prevalence of herbicide-resistant weed species in Malaysian rice fields: A review. Weed Biol. Manag. 2017, 17, 3–16.

- Singh, P.K.; Gharde, Y. Adoption level and impact of weed management technologies in rice and wheat: Evidence from farmers of India. Indian J. Weed Sci. 2020, 52, 64–68.

- Matthews, G. Can drones reduce compaction and contamination? Int. Pest Control 2018, 60, 224–226.

- Herrera, P.J.; Dorado, J.; Ribeiro, Á. A novel approach for weed type classification based on shape descriptors and a fuzzy decision-making method. Sensors 2014, 14, 15304–15324.

- Gerhards, R.; Oebel, H. Practical experiences with a system for site specific weed control in arable crops using real time image analysis and GPS-controlled patch spraying. Weed Res. 2006, 46, 185–193.

| Family Name | Scientific Name | Common Name |
|---|---|---|
| Grass weeds | | |
| Poaceae | Oryza sativa complex | Weedy rice |
| | Leptochloa chinensis (L.) Nees | Chinese sprangletop |
| | Chloris barbata Sw. | Swollen fingergrass |
| | Echinochloa crus-galli (L.) Beauv. | Barnyardgrass |
| | Echinochloa colona (L.) Link | Jungle rice |
| | Ischaemum rugosum Salisb. | Ribbed murainagrass |
| | Brachiaria mutica (Forsk.) Stapf | Para grass, buffalo grass |
| | Cynodon dactylon (L.) Pers. | Bermuda grass |
| Sedge weeds | | |
| Cyperaceae | Fimbristylis miliacea (L.) Vahl | Fimbry |
| | Cyperus iria | Rice flat sedge |
| | Cyperus difformis | Small flower umbrella plant |
| | Cyperus rotundus | Nut grass, nut sedge |
| | Eleocharis dulcis (Burm.f.) Henschel | Chinese water chestnut |
| | Fimbristylis globulosa (Retz.) Kunth | Globe fimbry |
| | Fuirena umbellata Rottb. | Yefen, tropical umbrella sedge |
| | Scirpus grossus L.f. | Tukiu, giant bulrush |
| | Scirpus juncoides Roxb. | Club-rush, wood club-rush, bulrush |
| | Scirpus supinus L. | - |
| Broad-leaved weeds | | |
| Butomaceae | Limnocharis flava (L.) Buchenau | Yellow velvet-leaf, sawah lettuce, sawah flower rush |
| Pontederiaceae | Monochoria vaginalis (Burm.f.) C.Presl | Pickerel weed, heartshape false pickerel weed |
| | Eichhornia crassipes (Mart.) Solms | Floating water-hyacinth |
| Alismataceae | Sagittaria guayanensis Kunth | Arrowhead, swamp potato |
| Onagraceae | Ludwigia hyssopifolia (G.Don) Exell | Seedbox, linear leaf water primrose |
| Sphenocleaceae | Sphenoclea zeylanica Gaertn. | Goose weed, wedgewort |
| Convolvulaceae | Ipomoea aquatica Forsk. | Kangkong, swamp morning glory, water spinach, swamp cabbage |

| Sensors/Details | RGB | Multispectral | Hyperspectral |
|---|---|---|---|
| Resolution (Mpx) | 16–42 | 1.2–3.2 | 0.0025–2.2 |
| Spectral range (nm) | 400–700 | 400–900 | 300–2500 |
| Spectral bands | 3 | 3–10 | 40–660 |
| Weight (approx., kg) | 0.5–1.5 | 0.18–0.7 | 0.032–5 |
| Price (approx., USD) | 950–1780 | 3560–20,160 | 47,434–59,293 |
| Advantages | High-quality images; low operational cost; no radiometric or atmospheric calibration needed | More than three bands; can generate more vegetation indices than RGB | Hundreds of narrow radiometric bands; narrowband indices can be calculated to target specific concerns |
| Disadvantages | Only three bands; only a limited number of vegetation indices can be computed | Radiometric and atmospheric calibration is compulsory; lower image resolution than RGB | More expensive, heavier, and bulkier than the other sensors; complicated system; complex radiometric and atmospheric calibration; lower image resolution than RGB |

| Categories | Feature | Description/Formula | Reference |
|---|---|---|---|
| Vegetation indices | Normalized difference vegetation index (NDVI); Excess green index (ExG) | NDVI = (NIR − R)/(NIR + R); ExG = 2G − R − B | [62,63] |
| Color space transformed features | Hue; Saturation; Value | Hue: a gradation or variety of a color; Saturation: depth, purity, or shade of the color; Value: brightness intensity of the color tone | [64] |
| Wavelet transformed coefficients | Wavelet coefficient mean; Wavelet coefficient standard deviation | Mean value calculated for a pixel using the discrete wavelet transform; standard deviation calculated for a pixel using the discrete wavelet transform | [65] |
| Principal components | Principal component 1 | Component derived from principal component analysis that accounts for the maximum amount of variance | [66] |
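The vegetation-index and color-space features in the table above are computed pixel by pixel. Below is a minimal Python sketch with several assumptions. NDVI takes reflectance values. The color features take 8-bit RGB values. ExG uses the chromatic-coordinate form 2g − r − b, with each band divided by R + G + B, as in Woebbecke et al. [63]. The function names `ndvi`, `exg` and `hsv_features` are hypothetical. The HSV conversion relies on the standard-library `colorsys.rgb_to_hsv`, which returns hue, saturation and value in [0, 1].

```python
import colorsys
from typing import Tuple


def ndvi(nir: float, red: float) -> float:
    """Normalized difference vegetation index: (NIR - R) / (NIR + R)."""
    total = nir + red
    return 0.0 if total == 0 else (nir - red) / total


def exg(r: float, g: float, b: float) -> float:
    """Excess green index 2g - r - b on chromatic coordinates (band / (R + G + B))."""
    total = r + g + b
    if total == 0:
        return 0.0  # black pixel: no color information
    return (2 * g - r - b) / total  # same as normalizing each band first


def hsv_features(r: int, g: int, b: int) -> Tuple[float, float, float]:
    """Hue, saturation and value of an 8-bit RGB pixel, each in [0, 1]."""
    return colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)


print(round(ndvi(0.5, 0.1), 3))    # 0.667
print(round(exg(60, 120, 20), 3))  # 0.8
print(hsv_features(0, 255, 0))     # pure green: hue = 1/3, saturation = 1.0, value = 1.0
```

For a whole orthomosaic, the same functions would be applied to every pixel, or vectorized with an array library.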

| Sensors | Crops | Weed Type | Technique | Accuracy (%) | Implications | Year | Reference |
|---|---|---|---|---|---|---|---|
| RGB* | Carrots: Autumn King | Grass and broad-leaved | Auto-associative neural network | >75% | A neural network-based approach allows the system to learn and discriminate between species without predefined plant descriptions | 2003 | [81] |
| Hyperspectral (72 wavebands) | Corn | Grass and broad-leaved | Support vector machine (SVM) vs. artificial neural network (ANN) | 66–76% | The SVM technique outperformed the ANN method | 2006 | [82] |
| Multispectral | Winter wheat | Cruciferous weeds | Maximum likelihood classification (MLC) | 91.3% | MLC accurately discriminated weed patches in field-scale and broad-scale scenarios | 2013 | [83] |
| RGB* | Rice | Various types | Overlapping and merging of binary image layers | N/A | RGB images can be used to validate proper growth and to discover irregularities such as weeds in the paddy field | 2013 | [84] |
| Multispectral and hyperspectral | Cereals and broad-leaved crops | Grass and broad-leaved | General discriminant analysis (GDA) | 87 ± 5.57% | GDA makes it feasible to distinguish between crops and weeds | 2013 | [85] |
| Hyperspectral (61 bands, 400–1000 nm, 10 nm spectral resolution) | Field pea, spring wheat, canola | Sedge and broad-leaved | Artificial neural network (ANN) | 94% | ANN successfully discriminated weeds from crops | 2014 | [86] |
| Hyperspectral | Soybean | Broad-leaved | Random forest (RF) | >93.8% | Shortwave infrared was the best spectral region for differentiating pigweeds from soybean | 2016 | [38] |
| RGB* | Rice | N/A | Artificial neural network (ANN) | 99% | ANN can detect weeds in paddy fields with reasonable accuracy, but imagery taken 50 m above the ground is insufficient for weeds that resemble rice plants | 2016 | [45] |
| RGB* | Sunflower | Broad-leaved | Object-based image analysis (OBIA) | >85% | The OBIA procedure computed multiple data points, allowing herbicide requirements to be determined for timely, improved site-specific post-emergence management of weed seedlings | 2016 | [87] |
| RGB*, multispectral | Maize | Grass | Object-based image analysis (OBIA) | 86–92% | Successfully produced accurate weed maps, reducing herbicide spraying and costs | 2016 | [88] |
| Multispectral | Bracken fern | Broad-leaved | Discriminant analysis (DA) | 87.80% | WorldView-2 achieved a higher overall classification accuracy than Landsat 8 OLI, but Landsat 8 OLI* provides valuable information for long-term continuous monitoring | 2017 | [40] |
| Multispectral camera | Cereals | Broad-leaved | Supervised Kohonen network (SKN), counter-propagation artificial neural network (CP-ANN), and XY-fusion network | >98% | The results demonstrate the feasibility of weed mapping from multispectral images using hierarchical self-organizing maps | 2017 | [49] |
| Multispectral | Cereals | Broad-leaved | Maximum likelihood classification (MLC) | 87.04% | The results prove the feasibility of weed mapping using multispectral imaging | 2017 | [89] |
| RGB* | Sugarcane | Grass | Artificial neural network (ANN) and random forest (RF) | 91.67% | Although ANN and RF achieved nearly identical accuracy, ANN outperformed RF | 2017 | [90] |
| RGB* | Sugar beet | Broad-leaved | Support vector machine (SVM) vs. artificial neural network (ANN) | 95.00% | The SVM technique outperformed the ANN method in shape-based weed detection | 2018 | [37] |
| RGB* | Rice | Grass and sedge | Pre-trained CNN with a residual framework in FCN form, transferred to the dataset by fine-tuning | 94.45% | The proposed method produced accurate weed maps | 2018 | [42] |
| RGB* | Rice | Grass and sedge | Fully convolutional network (FCN) | 93.5% | The fully convolutional network (FCN) outperformed a convolutional neural network (CNN) | 2018 | [43] |
| RGB* | Sunflower and cotton | Grass and broad-leaved | Object-based image analysis (OBIA) and random forest (RF) | Sunflower (87.9%) and cotton (84%) | The proposed technique allowed short processing times at critical periods, which is essential for preventing yield loss | 2018 | [46] |
| Multispectral | Rice | Grass and broad-leaved | ISODATA classification and vegetation indices (VIs) | 96.5% | SAVI and GSAVI were the best inputs and improved weed classification | 2018 | [50] |
| RGB* | Spinach, beet, and bean | N/A | Convolutional neural network (CNN) | Spinach (81%), beet (93%), and bean (69%) | The proposed weed detection method was effective in different crop fields | 2018 | [69] |
| RGB* | Spinach and bean | N/A | Convolutional neural network (CNN) | 94.5% | Best option to replace supervised classification | 2018 | [70] |
| RGB* | Rice | Grass and sedge | Fully convolutional network (FCN) | >94% | The proposed methods successfully produced weed and prescription maps | 2018 | [77] |
| RGB* | N/A | Yellow flag iris | Random forest (RF) | 99% | Hybrid image processing achieved good weed classification | 2018 | [91] |
| Hyperspectral | Maize | Broad-leaved | Random forest (RF) | *C. arvensis* (95.9%), *Rumex* (70.3%), and *C. arvense* (65.9%) | The RF algorithm successfully discriminated weeds from crops, and combining it with VIs improved classification accuracy | 2018 | [92] |
| RGB* | Soybean | Grass and broad-leaved | Joint unsupervised learning of deep representations and image clusters (JULE) and deep clustering for unsupervised learning of visual features (DeepCluster) | 97% | Semi-automatic data labelling can reduce the cost of manual labelling and can easily be replicated on different datasets | 2019 | [71] |
| RGB* and multispectral | Wheat | Unwanted crop | Object-based image analysis (OBIA) and vegetation indices (VIs) | 87.48% | 30 m was the best altitude for detecting weed patches within and between crop rows in the wheat field, and VIs successfully extracted green channels and improved weed detection | 2019 | [93] |
| RGB* | Upland rice | Grass and broad-leaved | Object-based image analysis (OBIA) | 90.4% | Rice and weeds can be distinguished with acceptable accuracy in consumer-grade UAV images using the SLIC-RF algorithm developed in this study | 2020 | [47] |
| RGB* | Rice | Grass and sedge | Convolutional neural network (CNN) | 80.2% | A fully convolutional network (FCN) outperformed OBIA classification | 2020 | [94] |
| RGB* | Barley | Broad-leaved | Linear regression | N/A | Qualitative methods proved to give high-quality classification | 2020 | [95] |
| RGB* | Vineyard | Grass | OBIA and combined decision tree (DT–OBIA) | 84.03–89.82% | The proposed methods enable winegrowers to apply site-specific weed control while maintaining cover crop-based management systems and their benefits to the vineyard | 2020 | [96] |
| RGB* | Cotton | Sedge and broad-leaved | Object-based image analysis (OBIA) and random forest (RF) | 83.33% (low-density plot), 85.83% (medium-density plot), and 89.16% (high-density plot) | The findings demonstrated the value of RGB images for weed mapping and density estimation in cotton for precision weed management | 2020 | [97] |
| Multispectral and hyperspectral | Sorghum | Grass and broad-leaved | OBIA with artificial nearest neighbor (NN) algorithm | 92% | The combination of OBIA–ANN demonstrated the feasibility of weed mapping in the sorghum field | 2021 | [62] |
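
Two of the studies listed above use maximum likelihood classification (MLC), and most report overall accuracy. The sketch below assumes a Gaussian MLC with equal class priors. It computes overall accuracy as the sum of the confusion matrix diagonal divided by the total number of pixels. The two-band crop and weed samples are synthetic and illustrative only, and the classifier is not the pipeline of any cited study. The class and function names are hypothetical.

```python
import numpy as np


class GaussianMLC:
    """Maximum likelihood classifier: one multivariate Gaussian per class,
    with equal class priors assumed."""

    def fit(self, x, y):
        x = np.asarray(x, dtype=float)
        y = np.asarray(y)
        self.classes_ = np.unique(y)
        self.means_, self.inv_covs_, self.log_dets_ = [], [], []
        for c in self.classes_:
            xc = x[y == c]
            # Small ridge keeps the covariance invertible for tight clusters.
            cov = np.cov(xc, rowvar=False) + 1e-6 * np.eye(x.shape[1])
            self.means_.append(xc.mean(axis=0))
            self.inv_covs_.append(np.linalg.inv(cov))
            self.log_dets_.append(np.linalg.slogdet(cov)[1])
        return self

    def predict(self, x):
        x = np.asarray(x, dtype=float)
        scores = []
        for mu, inv_cov, log_det in zip(self.means_, self.inv_covs_, self.log_dets_):
            diff = x - mu
            mahal = np.einsum("ij,jk,ik->i", diff, inv_cov, diff)  # squared Mahalanobis distance
            scores.append(-0.5 * (log_det + mahal))  # Gaussian log-likelihood up to a constant
        return self.classes_[np.argmax(np.stack(scores, axis=1), axis=1)]


def confusion_matrix(y_true, y_pred, classes):
    """Rows are reference classes and columns are predicted classes."""
    index = {c: i for i, c in enumerate(classes)}
    m = np.zeros((len(classes), len(classes)), dtype=int)
    for t, p in zip(y_true, y_pred):
        m[index[t], index[p]] += 1
    return m


def overall_accuracy(cm):
    """Correctly classified pixels (diagonal) divided by all pixels."""
    return np.trace(cm) / cm.sum()


# Synthetic two-band (red, NIR) reflectance samples: illustrative only.
rng = np.random.default_rng(42)
crop = rng.normal([0.05, 0.45], 0.02, size=(50, 2))
weed = rng.normal([0.08, 0.30], 0.02, size=(50, 2))
x = np.vstack([crop, weed])
y = np.array(["crop"] * 50 + ["weed"] * 50)

model = GaussianMLC().fit(x, y)
cm = confusion_matrix(y, model.predict(x), model.classes_)
print(cm)
print(f"Overall accuracy: {overall_accuracy(cm):.1%}")
```

In this sketch, accuracy is measured on the training pixels themselves, which gives an optimistic estimate. In practice, the confusion matrix should be built from independent validation pixels.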

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Rosle, R.; Che’Ya, N.N.; Ang, Y.; Rahmat, F.; Wayayok, A.; Berahim, Z.; Fazlil Ilahi, W.F.; Ismail, M.R.; Omar, M.H. Weed Detection in Rice Fields Using Remote Sensing Technique: A Review. Appl. Sci. 2021, 11, 10701. https://doi.org/10.3390/app112210701
