Autonomous Driving Robot That Drives and Returns along a Planned Route in Underground Mines by Recognizing Road Signs

Abstract

1. Introduction

2. Materials and Methods

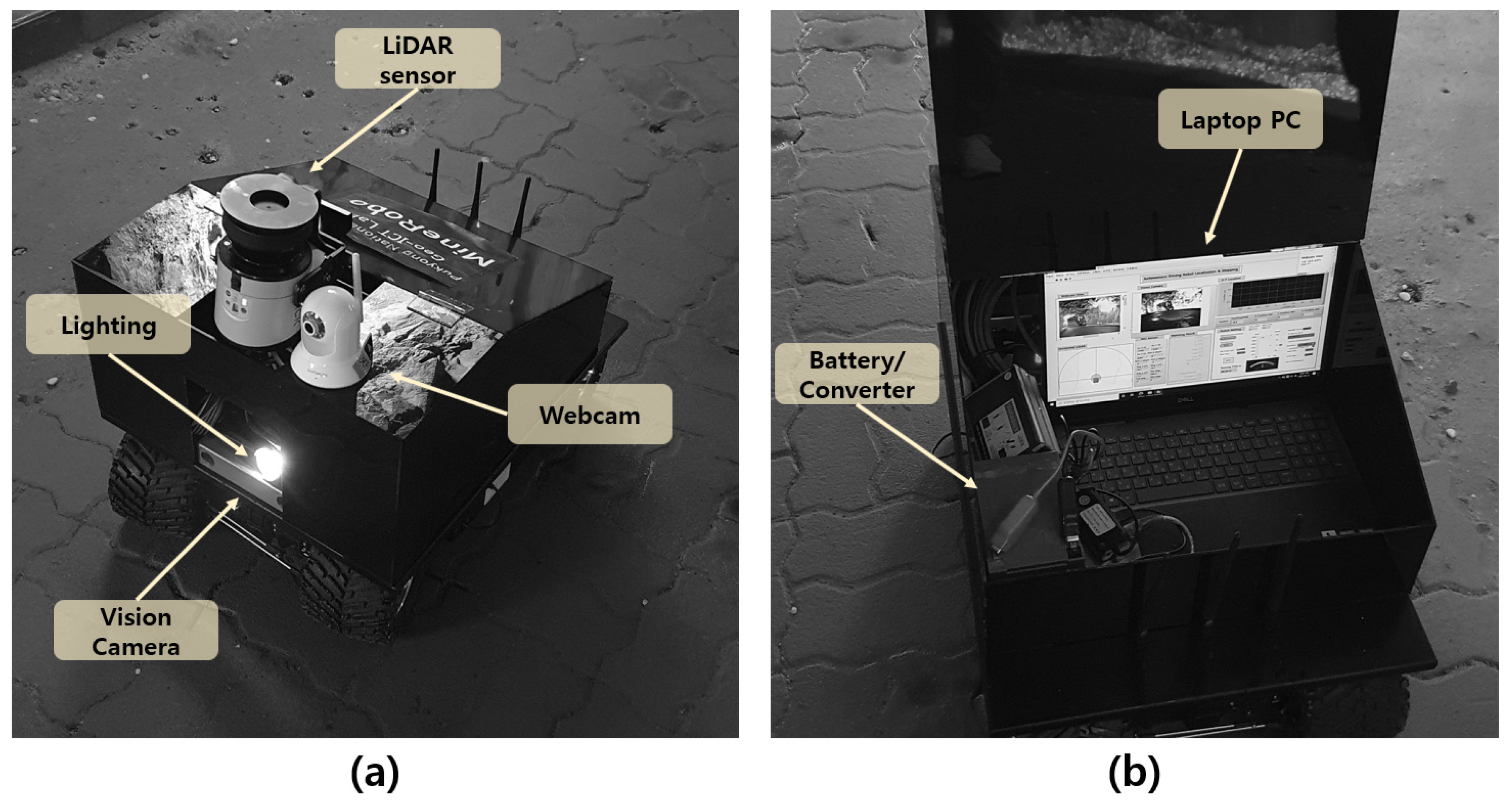

2.1. Autonomous Driving Robot

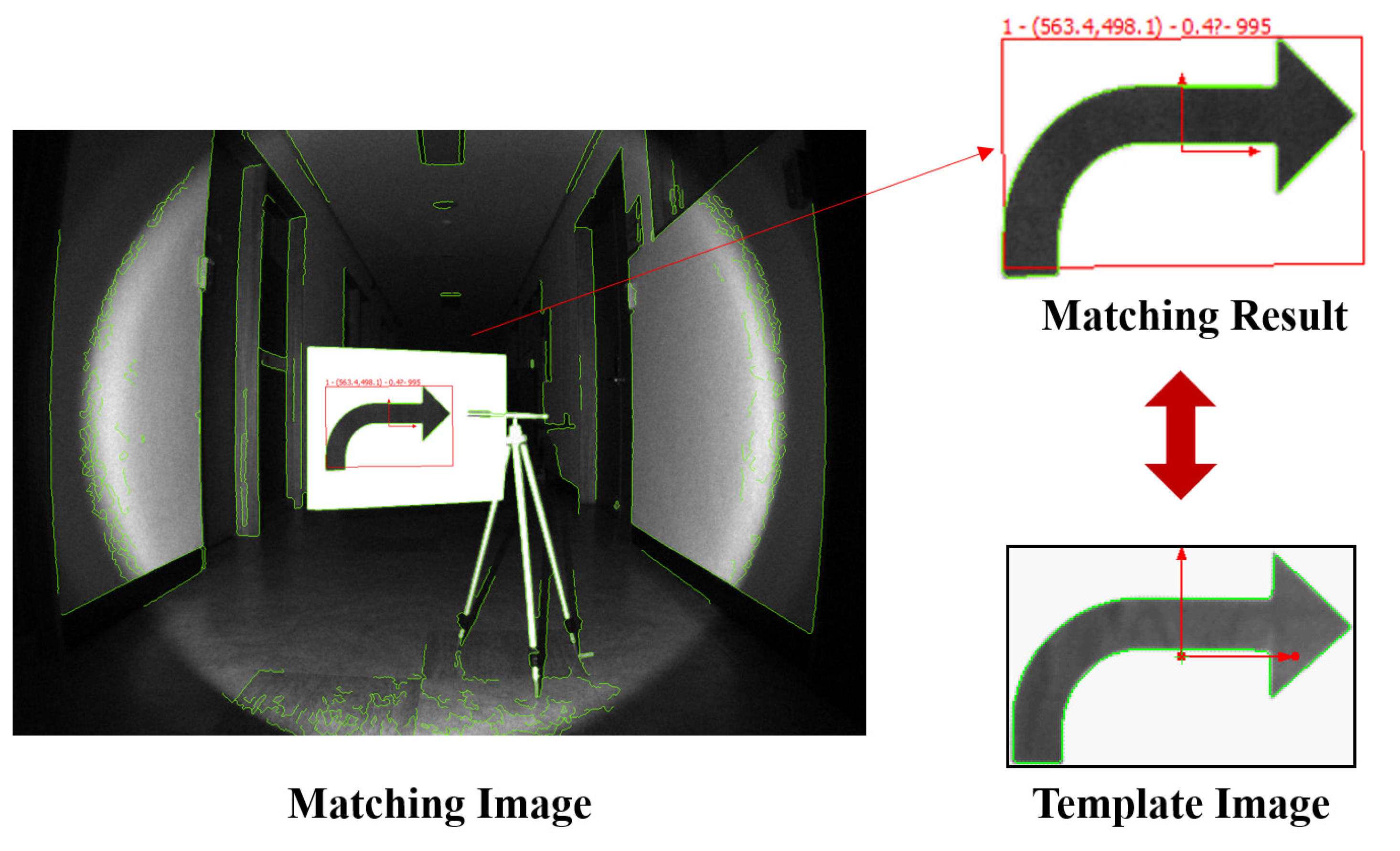

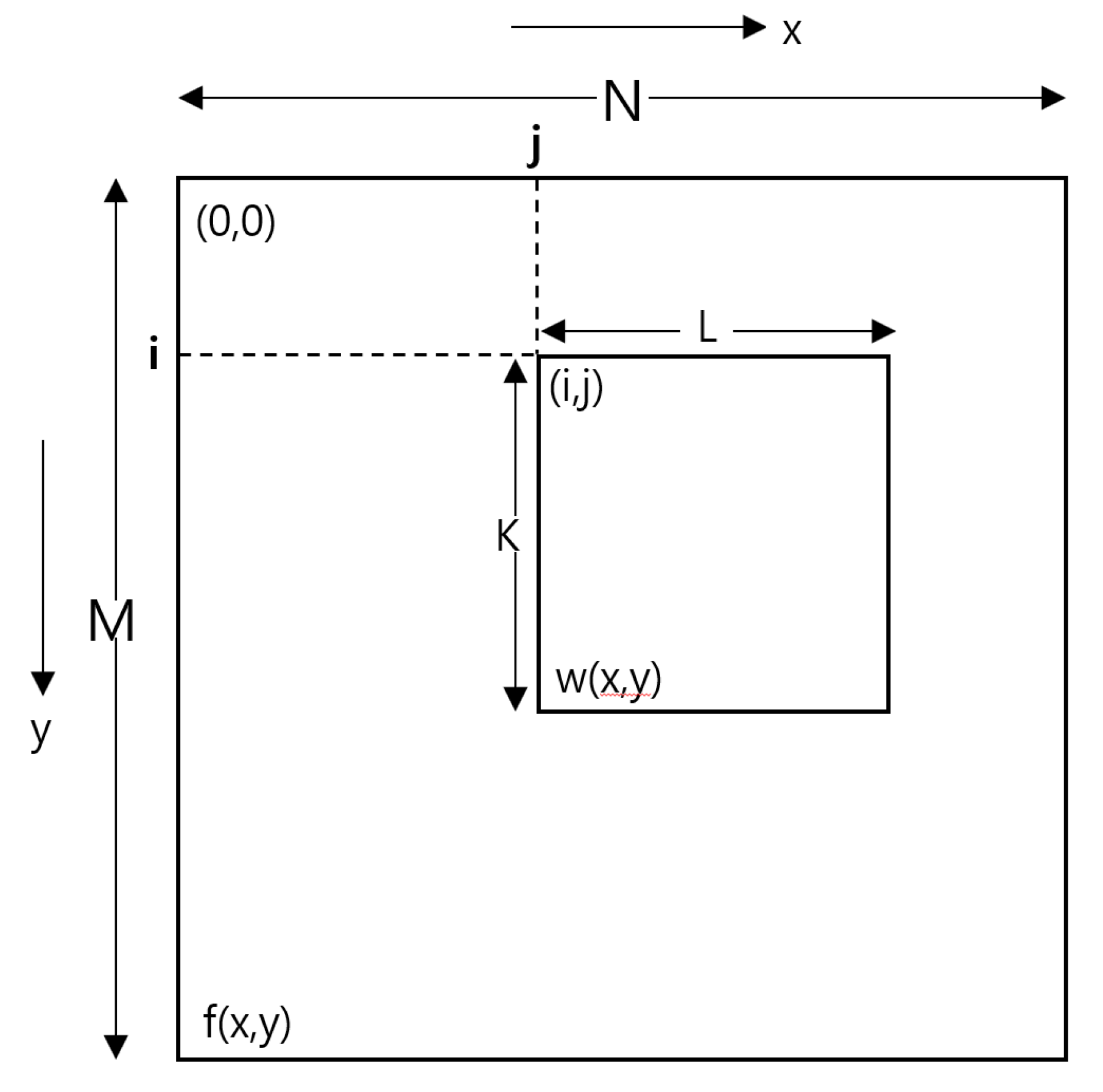

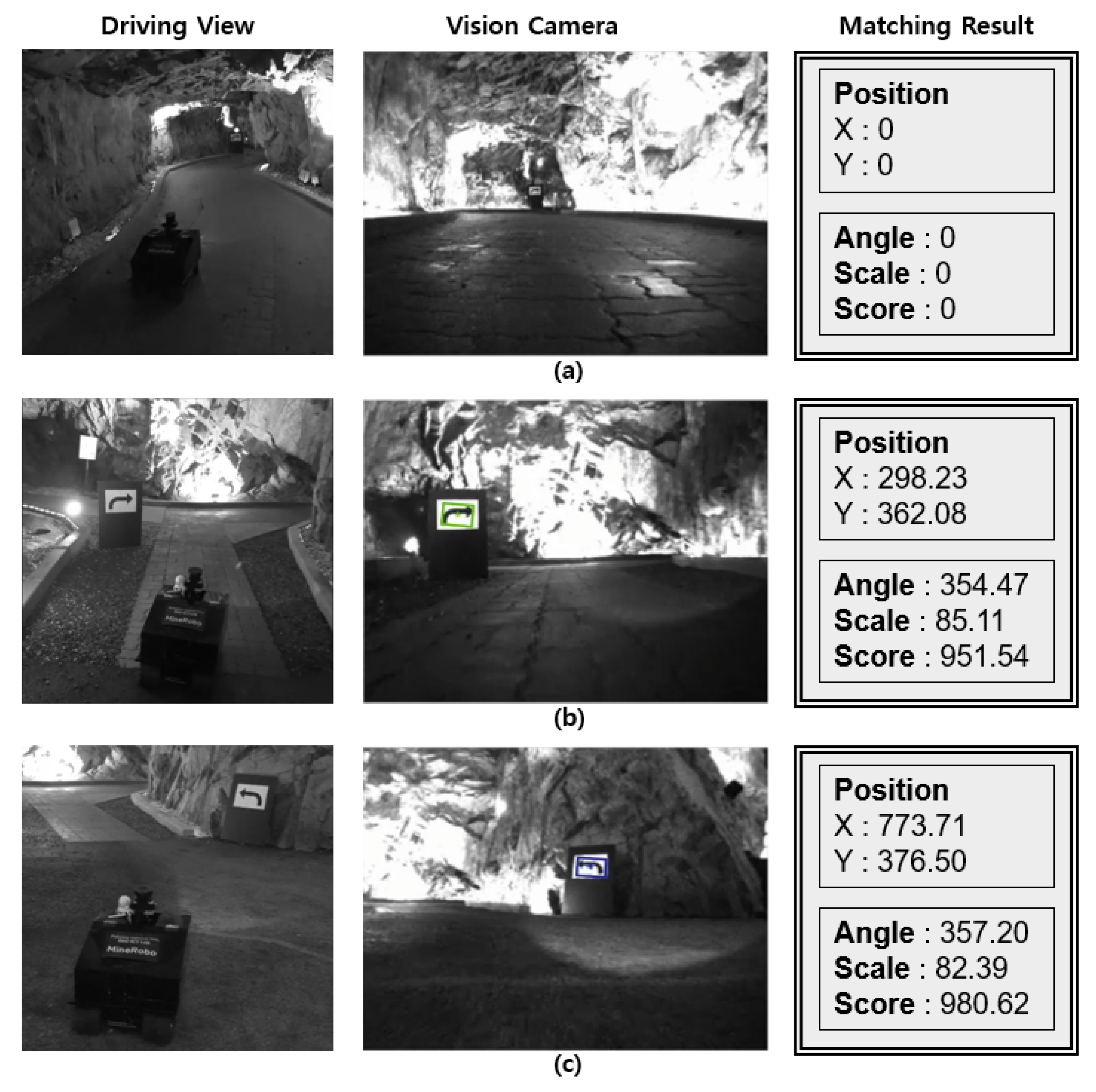

2.2. Machine Vision Algorithm

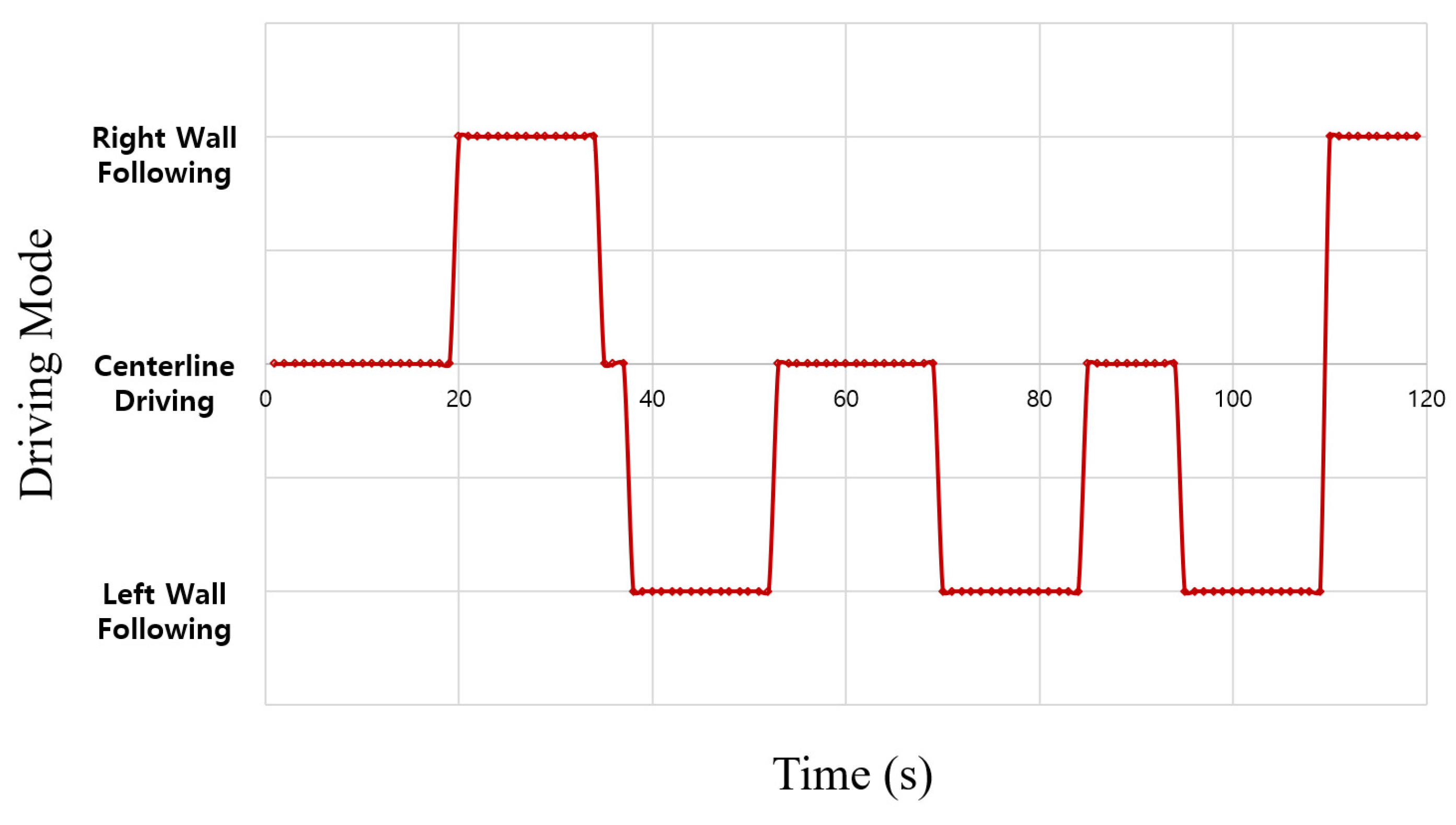

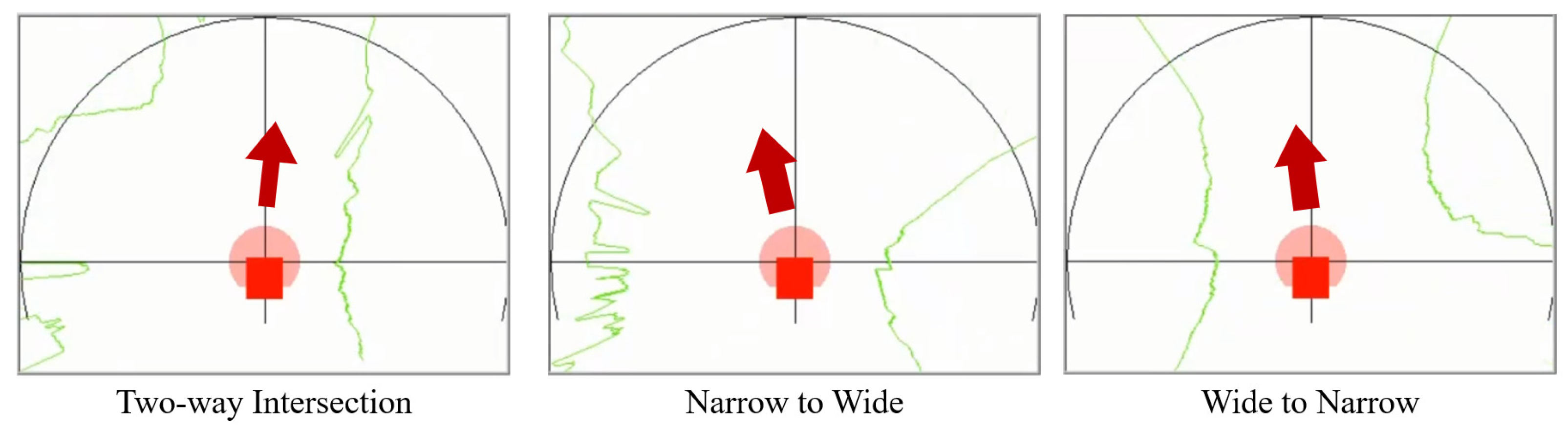

2.3. Autonomous Driving and Wall-Following Algorithm

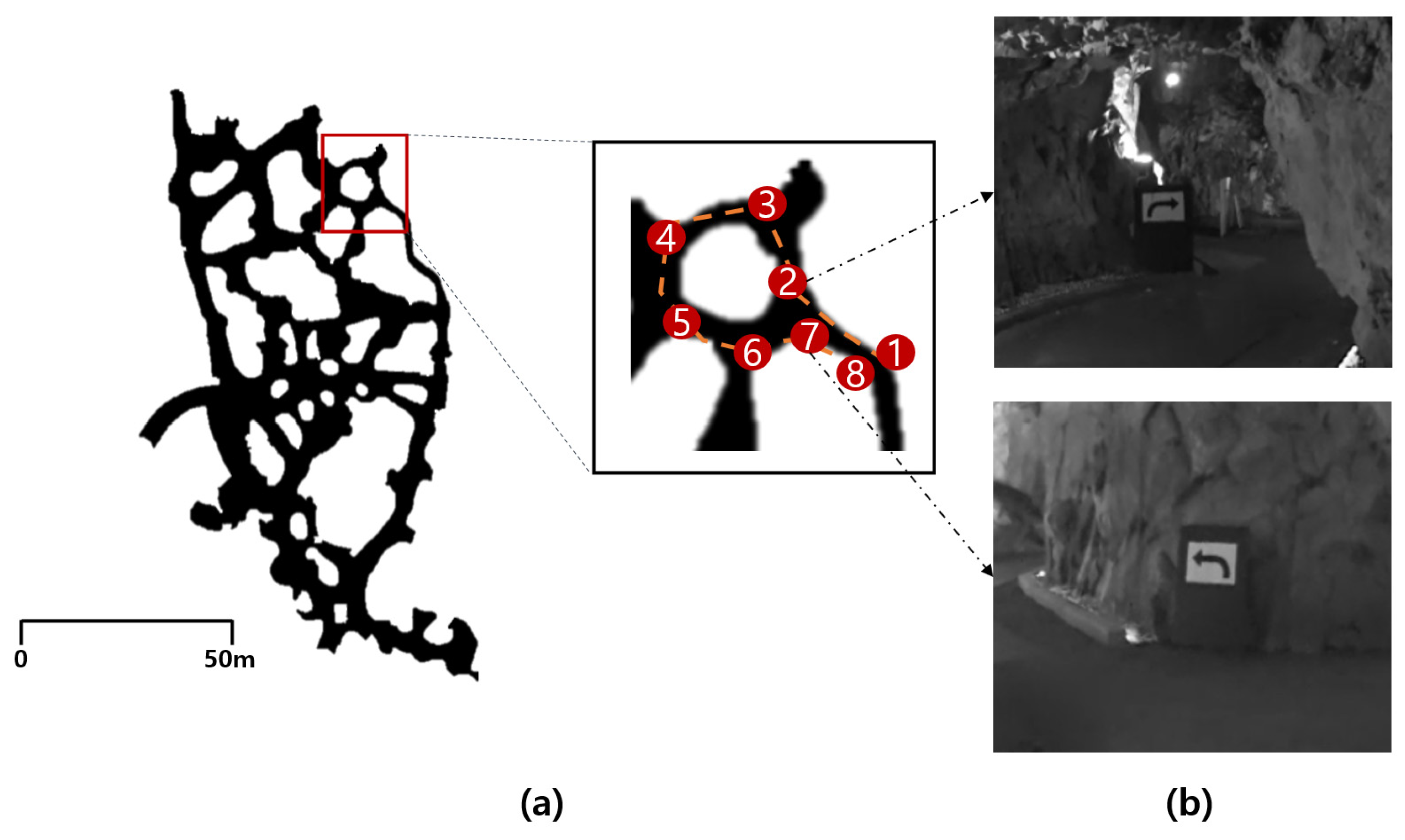

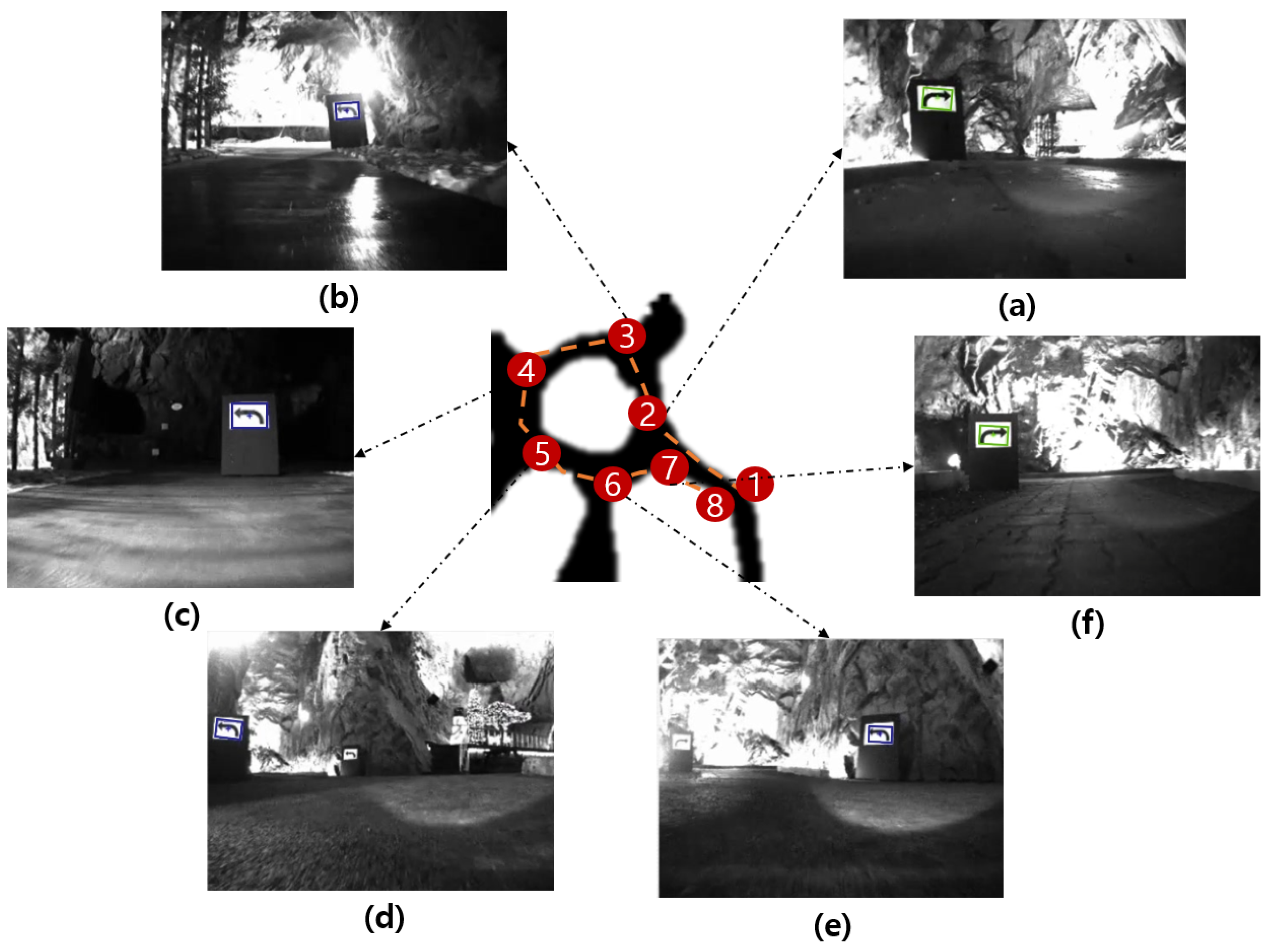

2.4. Field Experiment

3. Results

4. Discussion

4.1. Applications and Expected Effect

4.2. Limitations and Future Work

- Artificial intelligence object recognition: In underground mines, the overall shape of the tunnel changes frequently as excavation progresses, so the travel paths of vehicles and workers also change frequently. For this reason, road signs at actual underground mine sites are often marked temporarily on the wall. The road sign recognition system could therefore be applied more widely if images of each temporary marker were collected as a dataset and object recognition techniques trained on large numbers of images, such as machine learning and deep learning, were adopted. Such techniques could also recognize dynamic objects in the tunnel, such as workers and transport equipment, in addition to stationary road signs.

- Sensor: Because underground mines have no lane markings, the drivable area is poorly defined, and because the tunnel walls are irregular, collisions may occur in unpredictable places. Therefore, in addition to the 2D LiDAR sensor and vision camera used in this study, a 3D LiDAR that can cover a wide area, including the rear, sides, and upper part of the tunnel, should be considered. Furthermore, because lighting intensity differs from one underground mining site to another, and matching accuracy may drop if the lighting is too strong, an illuminance sensor that measures the ambient light level and adjusts the robot's lighting system accordingly should also be used.

- Specificity of the underground mining site: In addition to straight sections and left and right turns, underground mines contain sections such as U-turn areas, three-way junctions, and mineral loading areas. Further research on autonomous driving algorithms for complex routes is therefore needed to account for these environmental characteristics and their changes.

- Road sign visibility: In underground mines, blasting frequently generates dust, and stagnant water at the edge of the road can form puddles and mud. These factors can limit the visibility of road signs and prevent the robot from recognizing them accurately. Therefore, for a robot to drive along a planned route, visibility-reducing elements (dust, mud) must be removed from the signs periodically. In addition, in mines with large shafts, the minimum sign size required for reliable recognition while the robot drives along the centerline of the road should be considered, and road signs should be installed at locations that do not interfere with the robot's driving route [37].
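The minimum sign size mentioned in the last point can be estimated with a simple pinhole-camera calculation. The sketch below is an illustration, not the method used in this study. It takes the 3.75 μm pixel pitch and 1280-pixel image width of the Bumblebee XB3 from the equipment table, but the focal length (3.8 mm), the minimum number of pixels a sign must span for reliable matching (40 px), and the assumption that the sign roughly faces the camera are hypothetical values chosen only for illustration. The helper names `min_sign_width_m` and `sign_in_view` are likewise hypothetical.

```python
import math

# Values taken from the equipment table (Bumblebee XB3).
PIXEL_PITCH_UM = 3.75
SENSOR_WIDTH_PX = 1280

# Hypothetical values, NOT reported in the study.
FOCAL_LENGTH_MM = 3.8
MIN_SIGN_PIXELS = 40


def min_sign_width_m(depth_m, min_pixels=MIN_SIGN_PIXELS,
                     focal_length_mm=FOCAL_LENGTH_MM,
                     pixel_pitch_um=PIXEL_PITCH_UM):
    """Smallest sign width (m) that spans `min_pixels` at `depth_m` (pinhole model).

    Width on the sensor = min_pixels * pixel pitch; object width = sensor width * depth / f.
    """
    image_width_mm = min_pixels * pixel_pitch_um / 1000.0
    return image_width_mm * depth_m / focal_length_mm


def sign_in_view(forward_m, lateral_m, focal_length_mm=FOCAL_LENGTH_MM,
                 pixel_pitch_um=PIXEL_PITCH_UM, sensor_px=SENSOR_WIDTH_PX):
    """True if a sign at (forward_m, lateral_m) from the camera lies inside the horizontal FOV."""
    half_sensor_mm = sensor_px * pixel_pitch_um / 1000.0 / 2.0
    half_fov = math.atan(half_sensor_mm / focal_length_mm)
    bearing = math.atan2(lateral_m, forward_m)
    return abs(bearing) <= half_fov


if __name__ == "__main__":
    print(f"min width at 5 m: {min_sign_width_m(5.0):.3f} m")
    print("sign 5 m ahead, 2 m aside visible:", sign_in_view(5.0, 2.0))
    print("sign 2 m ahead, 3 m aside visible:", sign_in_view(2.0, 3.0))
```

Under these assumptions, a sign 5 m ahead must be roughly 0.20 m wide, while a sign only 2 m ahead but 3 m to the side (as when the robot follows the centerline of a wide drift) already lies outside the horizontal field of view, which is one reason sign size and installation location need to be chosen together.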

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Bavelos, A.C.; Kousi, N.; Gkournelos, C.; Lotsaris, K.; Aivaliotis, S.; Michalos, G.; Makris, S. Enabling Flexibility in Manufacturing by Integrating Shopfloor and Process Perception for Mobile Robot Workers. Appl. Sci. 2021, 11, 3985.

- Kassai, E.T.; Azmat, M.; Kummer, S. Scope of Using Autonomous Trucks and Lorries for Parcel Deliveries in Urban Settings. Logistics 2020, 4, 17.

- Reis, J.; Cohen, Y.; Melão, N.; Costa, J.; Jorge, D. High-Tech Defense Industries: Developing Autonomous Intelligent Systems. Appl. Sci. 2021, 11, 4920.

- Fox, D.; Burgard, W.; Thrun, S. The dynamic window approach to collision avoidance. IEEE Robot. Autom. Mag. 1997, 4, 23–33.

- Adams, M.; Zhang, S.; Xie, L. Particle filter based outdoor robot localization using natural features extracted from laser scanners. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA ’04), New Orleans, LA, USA, 26 April–1 May 2004; Volume 2, pp. 1493–1498.

- Thrun, S.; Burgard, W.; Fox, D. Probabilistic Robotics; MIT Press: Cambridge, MA, USA, 2005.

- Moreno, L.; Armingol, J.M.; Garrido, S.; De La Escalera, A.; Salichs, M.A. A genetic algorithm for mobile robot localization using ultrasonic sensors. J. Intell. Robot. Syst. Theory Appl. 2002, 34, 135–154.

- Liu, C.; Zhou, C.; Cao, W.; Li, F.; Jia, P. A Novel Design and Implementation of Autonomous Robotic Car Based on ROS in Indoor Scenario. Robotics 2020, 9, 19.

- Boston Dynamics. Available online: https://www.bostondynamics.com/ (accessed on 5 July 2021).

- DAIMLER’S PROMETHEUS Project. Available online: https://media.daimler.com/marsMediaSite/en/instance/ko/The-PROMETHEUS-project-launched-in-1986-Pioneering-autonomous-driving.xhtml?oid=13744534 (accessed on 5 July 2021).

- Masood, K.; Dauptain, X.; Zoppi, M.; Molfino, R. Hydraulic Pressure-Flow Rate Control of a Pallet Handling Robot for an Autonomous Freight Delivery Vehicle. Electronics 2020, 9, 1370.

- Mercorelli, P. Using Fuzzy PD Controllers for Soft Motions in a Car-like Robot. Adv. Sci. Technol. Eng. Syst. J. 2018, 3, 380–390.

- Datta, S.; Ray, R.; Banerji, D. Development of autonomous mobile robot with manipulator for manufacturing environment. Int. J. Adv. Manuf. Technol. 2008, 38, 536–542.

- Wang, C.; Du, D. Research on logistics autonomous mobile robot system. In Proceedings of the 2016 IEEE International Conference on Mechatronics and Automation, Harbin, China, 7–10 August 2016.

- Park, Y.; Jee, T.; Kang, S.; Ryu, C.; Ko, J. Implementation of Autonomous Navigation based on the Open Architecture. J. Inst. Electron. Eng. Korea 2007, 44, 34–38.

- Miller, I.D.; Fernando, C.; Anthony, C.; Shivakumar, S.S.; Lee, E.S.; Jarin-Lipschitz, L.; Akhilesh, B.; Rodrigues, N.; Zhou, A.; Cohen, A.; et al. Mine Tunnel Exploration Using Multiple Quadrupedal Robots. IEEE Robot. Autom. Lett. 2020, 5, 2840–2847.

- Berglund, T.; Brodnik, A.; Jonsson, H.; Staffanson, M.; Söderkvist, I. Planning Smooth and Obstacle-Avoiding B-Spline Paths for Autonomous Mining Vehicles. IEEE Trans. Autom. Sci. Eng. 2010, 7, 167–172.

- Bakambu, J.N.; Polotski, V. Autonomous system for navigation and surveying in underground mines. J. Field Robot. 2007, 24, 829–847.

- Shaffer, G.K.; Stentz, A.; Whittaker, W.L.; Fitzpatrick, K.W. Position Estimator for Underground Mine Equipment. IEEE Trans. Ind. Appl. 1992, 28, 1131–1140.

- MobileTronics’s VirtuRail. Available online: https://innovation.strabag.com/en/project/virturail-with-an-autonomous-train-into-the-heart-of-the-tunnel/ (accessed on 5 July 2021).

- Günther, F.; Mischo, H.; Lösch, R.; Grehl, S.; Güth, F. Increased safety in deep mining with iot and autonomous robots. In Proceedings of the 39th International Symposium ‘Application of Computers and Operations Research in the Mineral Industry’ (APCOM 2019), Wroclaw, Poland, 4–6 June 2019; Mueller, C., Assibey-Bonsu, W., Baafi, E., Dauber, C., Doran, C., Jaszczuk, M.J., Nagovitsyn, O., Eds.; CRC Press: London, UK, 2019; pp. 101–105.

- Kim, H.; Choi, Y. Development of a LiDAR Sensor-based Small Autonomous Driving Robot for Underground Mines and Indoor Driving Experiments. J. Korean Soc. Miner. Energy Resour. Eng. 2019, 56, 407–415.

- Kim, H.; Choi, Y. Field Experiment of a LiDAR Sensor-based Small Autonomous Driving Robot in an Underground Mine. Tunn. Undergr. Space 2020, 30, 76–86.

- Kim, H.; Choi, Y. Comparison of Three Location Estimation Methods of an Autonomous Driving Robot for Underground Mines. Appl. Sci. 2020, 10, 4831.

- Kim, H.; Choi, Y. Self-driving algorithm and location estimation method for small environmental monitoring robot in underground mines. Comput. Model. Eng. Sci. 2021, 127, 943–964.

- Kim, H.; Choi, Y. Location estimation of autonomous driving robot and 3D tunnel mapping in underground mines using pattern matched LiDAR sequential images. Int. J. Min. Sci. Technol. 2021, 31, 779–788.

- Baker, C.; Morris, A.; Ferguson, D.; Thayer, S.; Whittaker, C.; Omohundro, Z.; Reverte, C.; Whittaker, W.; Thrun, S. A Campaign in Autonomous Mine Mapping. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA ’04), New Orleans, LA, USA, 26 April–1 May 2004; IEEE: New York, NY, USA, 2004.

- Bakambu, J.N. Integrated autonomous system for exploration and navigation in underground mines. In Proceedings of the IEEE International Conference on Intelligent Robots and Systems, Beijing, China, 9–15 October 2006.

- Szrek, J.; Zimroz, R.; Wodecki, J.; Michalak, A.; Góralczyk, M.; Worsa-Kozak, M. Application of the Infrared Thermography and Unmanned Ground Vehicle for Rescue Action Support in Underground Mine—The AMICOS Project. Remote Sens. 2021, 13, 69.

- Szrek, J.; Wodecki, J.; Błażej, R.; Zimroz, R. An Inspection Robot for Belt Conveyor Maintenance in Underground Mine—Infrared Thermography for Overheated Idlers Detection. Appl. Sci. 2020, 10, 4984.

- Zhao, J.; Gao, J.; Zhao, F.; Liu, Y. A Search and Rescue Robot System for Remotely Sensing the Underground Coal Mine Environment. Sensors 2017, 17, 2426.

- Jing, N.; Ma, X.; Guo, W.; Wang, M. 3D Reconstruction of Underground Tunnel Using Depth-camera-based Inspection Robot. Sensors Mater. 2019, 31, 2719–2734.

- Zeng, F.; Jacobson, A.; Smith, D.; Boswell, N.; Peynot, T.; Milford, M. TIMTAM: Tunnel-Image Texturally Accorded Mosaic for Location Refinement of Underground Vehicles with a Single Camera. IEEE Robot. Autom. Lett. 2019, 4, 4362–4369.

- IMAQ Vision Concept Manual. Available online: https://www.ni.com/pdf/manuals/322916a.pdf (accessed on 5 July 2021).

- Sharma, G.; Sood, S.; Singh Gaba, G.; Gupta, N. Image Recognition System using Geometric Matching and Contour Detection. Int. J. Comput. Appl. 2012, 51, 48–53.

- National Instruments. Available online: https://www.ni.com/ (accessed on 5 July 2021).

- Civera, M.; Zanotti Fragonara, L.; Surace, C. Using Video Processing for the Full-Field Identification of Backbone Curves in Case of Large Vibrations. Sensors 2019, 19, 2345.

| Equipment | Model | Specification |
|---|---|---|
| Main controller | Laptop PC, Windows 10 (Microsoft Corporation, Redmond, WA, USA) | Intel Core i7-9750H CPU, 4.50 GHz (Intel, Santa Clara, CA, USA); 16 GB RAM; NVIDIA GeForce GTX 1650, 4 GB (NVIDIA, Santa Clara, CA, USA) |
| Mobile robot | ERP-42 (Unmanned Solution, Seoul, Korea) | Size: 650 mm (length) × 470 mm (width) × 158 mm (height); Weight: 8 kg; Drive: 4 differential gears; Max. speed: 8 km/h; Max. run time: 2.5 h; Battery: 14.8 V, 12 Ah; Lighting: 1050 lm |
| Vision camera | Bumblebee XB3 (FLIR, Wilsonville, OR, USA) | Image sensor: ICX445 (max. 1280 × 960 pixels), 3.75 μm square pixels; Interface: IEEE-1394; Frame rate: 16 FPS |
| LiDAR | LMS-111 (SICK, Waldkirch, Germany) | Field of view: 270°; Interface: TCP/IP; Operating range: 0.5–20 m; Scanning frequency: 25 Hz/50 Hz |
| IMU | EBIMU-9DOFV4 (E2BOX, Hanam, Korea) | Error: roll/pitch ±0.2°, yaw ±0.5°; Output range: −180° to +180° |
| Wheel encoder | IG-32PGM 01TYPE (YOUNGJIN B&B, Seoul, Korea) | Motor gear ratio: 13; Encoder gear ratio: 61 |
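A 2D LiDAR such as the LMS-111 above is commonly used to measure distances to the tunnel walls for wall following. The sketch below illustrates that step only; it is not the authors' wall-following algorithm and does not parse the sensor's actual data format. It converts one 270° scan into Cartesian points, discards readings outside the 0.5–20 m operating range given in the table, and reports the nearest wall on each side. The beam ordering (first beam at −135°, angles increasing counterclockwise, evenly spaced), the robot frame (x forward, y to the left), the ±0.5 m longitudinal window, and the function names are assumptions made for this sketch.

```python
import math

FOV_DEG = 270.0                       # field of view, from the equipment table
RANGE_MIN_M, RANGE_MAX_M = 0.5, 20.0  # operating range, from the equipment table


def scan_to_points(ranges, fov_deg=FOV_DEG):
    """Convert evenly spaced range readings (m) to (x, y) points in the robot frame.

    Assumed convention: beam 0 points at -fov/2 (right-rear), the last beam at +fov/2,
    x forward, y to the left. Readings outside the operating range are dropped.
    """
    n = len(ranges)
    if n < 2:
        raise ValueError("need at least two beams")
    start = -math.radians(fov_deg) / 2.0
    step = math.radians(fov_deg) / (n - 1)
    points = []
    for i, r in enumerate(ranges):
        if RANGE_MIN_M <= r <= RANGE_MAX_M:
            angle = start + i * step
            points.append((r * math.cos(angle), r * math.sin(angle)))
    return points


def side_wall_distances(points, x_window_m=0.5):
    """Nearest wall distance on the left and right within |x| <= x_window_m (None if no return)."""
    left = [y for x, y in points if abs(x) <= x_window_m and y > 0.0]
    right = [-y for x, y in points if abs(x) <= x_window_m and y < 0.0]
    return (min(left) if left else None, min(right) if right else None)
```

A wall-following controller could then steer to keep the difference between the left and right distances, or the distance to one chosen wall, close to a set point; restricting the search to a short window beside the robot keeps protrusions far ahead from dominating the estimate.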

| Item | Site 2 | Site 3 | Site 4 | Site 5 | Site 6 | Site 7 |
|---|---|---|---|---|---|---|
| Score | 985.08 | 974.02 | 984.66 | 998.94 | 980.62 | 951.54 |
| Direction | Right | Left | Left | Left | Left | Right |
| Scale (%) | 92.51 | 81.11 | 117.90 | 94.13 | 82.39 | 85.11 |
| Rotation (°) | 354.52 | 3.44 | 0.41 | 354.00 | 357.20 | 354.47 |
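The rotation values in the table that are close to 360° (for example, 354.52° at Site 2) appear to correspond to small negative tilts of the sign relative to its template rather than near-full turns. The sketch below, which is not part of the authors' software, stores the table values, maps each reported angle to the signed range (−180°, 180°], and checks each result against a minimum score. The scores appear to be on a 0–1000 scale, as in NI Vision pattern matching; the `MIN_SCORE` of 900 and the helper names are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MatchResult:
    site: int
    score: float         # matching score (appears to be on a 0-1000 scale)
    direction: str       # direction associated with the matched road sign
    scale_pct: float     # sign size relative to the template, in percent
    rotation_deg: float  # reported rotation, in [0, 360)


# Values copied from the table above.
RESULTS = [
    MatchResult(2, 985.08, "Right", 92.51, 354.52),
    MatchResult(3, 974.02, "Left", 81.11, 3.44),
    MatchResult(4, 984.66, "Left", 117.90, 0.41),
    MatchResult(5, 998.94, "Left", 94.13, 354.00),
    MatchResult(6, 980.62, "Left", 82.39, 357.20),
    MatchResult(7, 951.54, "Right", 85.11, 354.47),
]

MIN_SCORE = 900.0  # hypothetical acceptance threshold, not from the study


def signed_angle_deg(angle_deg):
    """Map any angle in degrees to the interval (-180, 180]."""
    a = angle_deg % 360.0
    return a - 360.0 if a > 180.0 else a


def accepted(result, min_score=MIN_SCORE):
    """Accept a match only if its score reaches the threshold."""
    return result.score >= min_score


if __name__ == "__main__":
    for r in RESULTS:
        print(f"Site {r.site}: {r.direction:5s} "
              f"rotation {signed_angle_deg(r.rotation_deg):+.2f} deg, accepted={accepted(r)}")
```

With the signed representation, all six matches fall within about ±6° of the template orientation, which is easier to compare against a tolerance than raw values near 360°.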

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Kim, H.; Choi, Y. Autonomous Driving Robot That Drives and Returns along a Planned Route in Underground Mines by Recognizing Road Signs. Appl. Sci. 2021, 11, 10235. https://doi.org/10.3390/app112110235

Kim H, Choi Y. Autonomous Driving Robot That Drives and Returns along a Planned Route in Underground Mines by Recognizing Road Signs. Applied Sciences. 2021; 11(21):10235. https://doi.org/10.3390/app112110235

Chicago/Turabian Style: Kim, Heonmoo, and Yosoon Choi. 2021. "Autonomous Driving Robot That Drives and Returns along a Planned Route in Underground Mines by Recognizing Road Signs" Applied Sciences 11, no. 21: 10235. https://doi.org/10.3390/app112110235

APA Style: Kim, H., & Choi, Y. (2021). Autonomous Driving Robot That Drives and Returns along a Planned Route in Underground Mines by Recognizing Road Signs. Applied Sciences, 11(21), 10235. https://doi.org/10.3390/app112110235