Abstract

Wireless sensor networks (WSNs) play an essential role in practical smart applications such as smart grids, smart factories, the Internet of Things, and smart homes. WSNs are composed of embedded wireless smart sensors. With advances in WSN research, sensors have been rapidly adopted in various fields. WSN performance depends on the coverage ratio of the sensors being used. However, the coverage of a sensor generally relates to its cost, and the available budget is usually limited. Hence, a new bi-tuning simplified swarm optimization (SSO), based on the SSO, is proposed to solve this budget-limited WSN sensing coverage problem by maximizing the number of covered areas and thereby improving WSN performance. The proposed bi-tuning SSO enhances SSO by tuning both the SSO parameters and the SSO update mechanism simultaneously. The performance and applicability of the proposed bi-tuning SSO using seven different parameter settings are demonstrated through an experiment involving nine WSN tests with 20, 100, and 300 sensors. The proposed bi-tuning SSO outperforms two state-of-the-art algorithms, genetic algorithm (GA) and particle swarm optimization (PSO), and can efficiently accomplish the goals of this work.

1. Introduction

Wireless sensor networks (WSNs), which consist of operation-driven sensors connected through wireless networks, form a major part of the wireless environment for many application systems in the modern world, such as solar systems [1], mobile systems [2], railway systems [3], agricultural systems [4], 3-D camera systems [5], traffic systems [6], Internet of Things (IoT) [7], smart cities [8], and body sensing systems [9].

Because of their greater flexibility and efficiency over wired networks [10,11,12,13,14], sensors are deployed, operated, and embedded widely in devices, buildings, vehicles, and other items to model, gather, sense, investigate, and exchange data; to interconnect objects; and to improve production efficiency and offer more efficient resource consumption [1,2,3,4,5,6,7,8,9,10,11,12,13,14].

The sensing coverage problem is one of the fundamental issues in wireless sensor networks, and coverage is commonly used as a measure of the quality of service (QoS). Coverage in wireless sensor networks refers to the extent of the area to which the wireless signals are transmitted. Thus, the sensing coverage problem has attracted much research attention in recent years. For example, Kim and Choi optimized the sensing coverage through the deployment of sensing nodes using a machine learning method in radio networks in 2019 [15]. Singh and Chen enhanced the sensing coverage by finding sensing coverage holes using a chord-based hole covering approach in 2020 [16]. Huang et al. addressed sensing coverage through the detection of sensing coverage using a reactive real-time control method for unmanned aerial vehicles in 2020 [17]. Chen et al. optimized the sensing coverage using a reciprocal decision approach for unmanned aerial vehicles in 2018 [18]. Wang et al. maximized the sensing coverage and minimized the distance between objective nodes and sensor nodes through the deployment of nodes using a non-dominated method in 2021 [19], and Zhou et al. targeted coverage through a routing design with minimized costs in WSNs [20]. Increasing the sensing coverage rate requires considerable investment. However, sensing coverage studies have seldom discussed the cost limitation. Therefore, how to maximize the sensing coverage rate within a budget limitation is an important research topic, which is the subject of this work.

Research on coverage enhancing, either comprehensive coverage-enhancing studies or the k-coverage over the years, shows that sensor deployment is a very effective method. Therefore, numerous studies have used the sensor deployment strategy to optimize the coverage in WSNs [21,22,23,24,25,26]. For example, Nguyen and Liu aimed to optimize sensor coverage by planning the sensor deployment in mobile WSNs [21]. Alia and Al-Ajouri investigated sensor deployment via planning of the optimal locations to place sensors to maximize the sensor coverage with consideration of cost by a harmony search approach in WSNs [22]. Dash deployed the minimum number of sensors to achieve optimal sensor coverage under cost limitation in a transport WSN [23]. Al-Karaki and Gawanmeh maximized the sensor coverage by planning an optimal strategy of sensor deployment in a WSN [24]. Yu et al. focused on optimizing a decided area of sensor coverage, i.e., k-coverage, by sensor deployment planning under limited energy in a WSN [25]. Manju et al. guaranteed a predefined range of coverage, such as Q-coverage, by the sensor deployment strategy with an energy constraint using the greedy heuristic method in a WSN [26]. To achieve enhanced coverage by the sensor deployment approach, perfect sensor planning is necessary. However, one of the challenges is planning sensor deployment to maximize coverage if the required number of sensors is known and fixed.

For some unstructured WSN types, such as battleground monitoring and plantation administration, it is impossible to plan the sensor deployment. Under the budget limitation, coverage of at least a certain value must still be maintained in these unstructured types of WSNs. In this situation, the sensors can be randomly deployed within the decided range of coverage. However, maximizing sensor coverage and minimizing the budget are conflicting objectives.

In our work, a mathematical optimization model for the proposed budget-limited WSN sensing coverage problem is derived to maximize the number of covered grids under the grid concept. The sensor coverage problem and the sensor deployment problem in WSNs are NP-Hard, which indicates that it is difficult to obtain the solution in polynomial time. Therefore, numerous studies in these fields have adopted various heuristic algorithms to overcome this difficulty, such as the harmony search method [22,27], the greedy heuristic method [26], GA [28,29], and PSO [30].

The swarm intelligence algorithm, which belongs to the family of heuristic algorithms, is efficient and simple as shown by countless studies solving various problems that are NP-Hard in many fields. Simplified swarm optimization (SSO), which is included in the swarm intelligence algorithm, was originally developed by Yeh [31] in 2009. The SSO algorithm has been indicated to be efficient, simple, and flexible by numerous studies for resolving different problems in various areas, such as intelligence microgrids [32], parameter identification for solar cells [33,34], power generator dispatch [35], cloud computing [36], the task assignment problem [37,38], Internet of Things (IoT) [39], supply chain networks [40], the disassembly sequencing problem [41], WSNs [42], and forecasting of the stock market [43].

In this study, a new swarm algorithm called bi-tuning SSO (bi-SSO) based on SSO is proposed. The proposed bi-SSO improves the SSO by tuning the parameter settings, which is always an important issue in all AI algorithms. At the same time, the proposed bi-SSO also tunes the update mechanism to enhance the quality of the solutions found by SSO. The proposed algorithm targets the proposed budget-limited WSN sensing coverage problem to maximize the number of covered grids under the grid concept.

The remainder of this paper is organized as follows. The related work of the sensing coverage problem is analyzed in Section 2. Section 3 introduces grids and WSNs. Section 4 presents the traditional SSO. The proposed novel bi-tuning SSO is shown in Section 5. Section 6 presents the numerical experiments. Conclusions and future research are discussed in Section 7.

2. Related Work

Efficient enhancement of sensor coverage is a very important topic, especially for the many modern systems that are modeled as WSNs. Therefore, the sensing coverage problem has been studied over the years with different methods and at different levels in order to reach the coverage target efficiently. Among the studies of the sensor coverage problem, some emphasize the maintenance of coverage with at least a certain value, such as k-coverage, and some focus on comprehensive coverage enhancement.

The comprehensive coverage-enhancing studies can be classified into the following strategies:

- Sensor (node) deployment method: coverage enhancing using a sensor deployment model in a mobile WSN [21,24], target coverage-enhancing with minimum cost using sensor deployment by a harmony search in a WSN [22], and sensor deployment to improve coverage with minimum cost in a transport WSN [23];

- Sensor energy strategy: evaluation of the effect of energy-depleted nodes to improve the energy efficiency for coverage-enhancing [44];

- Maximization of the perception range of a single sensor node: coverage-enhancing while minimizing the number of sensors in a 3-D WSN [45]; and

- Network connectivity: coverage-enhancing by a sensor-connected design with energy consideration [46].

For the maintenance of coverage with at least a certain value, sensor deployment and routing design are the two well-known methods to enhance coverage, such as sensor deployment considering the energy to improve coverage in WSNs [25], and sensor deployment using the greedy heuristic method to improve the coverage in WSNs [26].

3. Problem Description

The coverage problem is derived from real-life applications, and it is one of the essential topics in sensor networks. The coverage problem is used to measure the quality of the sensors that are able to monitor or track in WSNs. In this section, the mathematical optimization model for the budget-limited WSN sensing coverage problem is presented to maximize the coverage in WSNs under the budget constraint to balance various characteristics in evaluating WSNs, together with the terminologies used in this study.

3.1. Grids and WSNs

The grid is often used in geographic information systems (GIS) to manage assets and outages and to map the location of overhead and underground circuits [1,2]. A grid divides the area to be monitored by sensors into cells with uniformly spaced horizontal and vertical lines. For convenience, the grid is adopted in this study such that the whole WSN monitoring area is divided into XUB × YUB sensing grids, where XUB and YUB are the upper bounds of the x axis and y axis of the grid, respectively. Each grid is a location, object, city, etc., and each sensor is also located in a grid, say (x, y), where x = 0, 1, …, XUB − 1, and y = 0, 1, …, YUB − 1.

Let WSN(S, AREA, RADIUS, COST) be a WSN with a hybrid topology, where S = {1, 2, …, n} is a set of sensors; AREA = [0, XUB) × [0, YUB) is the area for WSN to cover, monitor, or track, etc.; RADIUS is the radius level for each sensor; and COST is the price corresponding to the RADIUS level for each sensor.

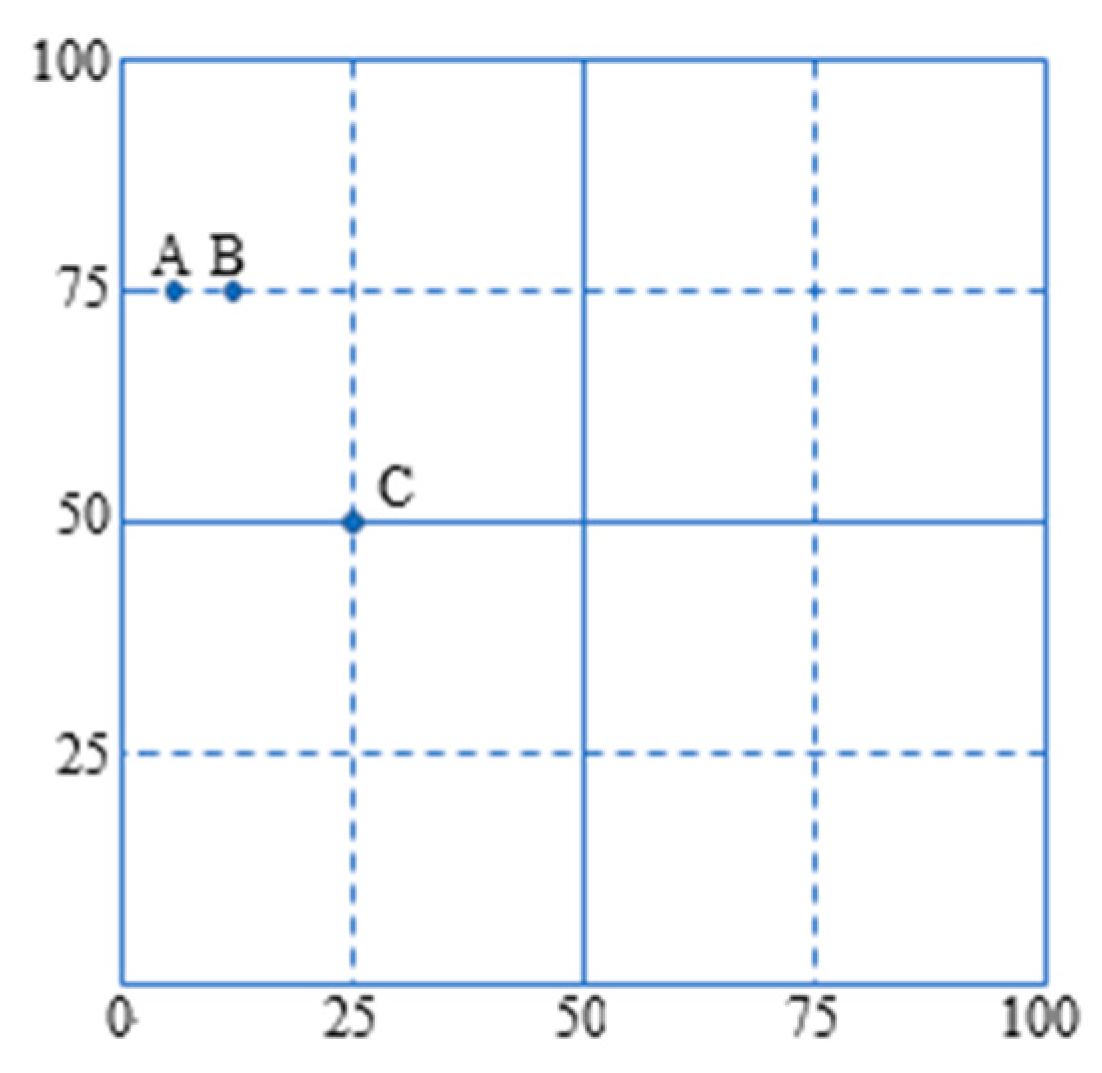

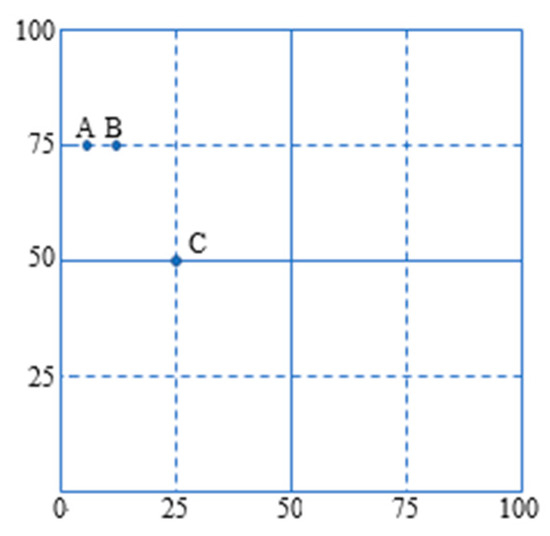

For instance, in Figure 1, the WSN has AREA = [0, 99) × [0, 99) and three sensors labeled A, B, and C located at (5, 75), (12, 75), and (25, 50), respectively. The RADIUS r(x, y) and COST(r(x, y)) for each sensor in the WSN in Figure 1 are provided in Table 1. From Table 1, the price needed for the sensor located at A to have RADIUS 10 is 4 units of cost, i.e., r(5, 75) = 10 and COST(r(5, 75)) = 4.

Figure 1.

Example WSN.

Table 1.

Information of the WSN in Figure 1.

3.2. Effective Covered Grids and COST

Let |●| be the number of elements in ● and the sensing radius of the sensor located at (x, y) be r(x, y). In WSN(S, AREA, RADIUS, COST), the effectively covered grids ECG of the sensor in S located at (x, y) ∈ AREA is the set of grids inside the circle under radius r(x, y), i.e.,

$$ECG(r(x, y)) = \{\, p \mid \text{grid } p \text{ is inside } CIRCLE(r(x, y)) \cap AREA \,\} \tag{1}$$

where CIRCLE(r(x, y)) is the circle with center (x, y) and radius r(x, y).

For example, in Figure 1, assume that sensor A located at (5, 75) has r(A) = r(5, 75) = 10. There are 305 grids inside the circle under radius 10, i.e., the number of grids in { p | grid p is inside the circle with center (5, 75) and radius r(5, 75) } is 305. However, the area we are interested in is only AREA = [0, 99) × [0, 99). Hence, the grids that are out of this range, e.g., (−1, 75), (−2, 75), (−3, 75), (−4, 75), …, should be removed. In total, 50 grids are removed for this reason, and only |ECG(r(5, 75) = 10)| = 255 grids are left.

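The total ECG of the whole WSN is obtained from Equation (1) by taking the union over all sensors, so that grids outside AREA are excluded and grids in the intersection ranges of sensors are counted only once:

$$\bigcup_{\text{sensor at } (x, y) \in S} ECG(r(x, y)) \tag{2}$$

The total number of grids covered by all sensors is:

$$\left| \bigcup_{\text{sensor at } (x, y) \in S} ECG(r(x, y)) \right| \tag{3}$$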

For example, |ECG(r(A) = 10)| = 255 as shown before, |ECG(r(B) = 8)| = 193, and |ECG(r(C) = 5)| = 69. There are 124 grids in ECG(r(A) = 10) ∩ ECG(r(B) = 8) and ECG(r(A) = 10) ∩ ECG(r(C) = 5) = ECG(r(B) = 8) ∩ ECG(r(C) = 5) = ∅, where sensors A, B, and C are located at (5, 75), (12, 75), and (25, 50), respectively. We have:

|ECG(r(A) = 10) ∪ ECG(r(B) = 8) ∪ ECG(r(C) = 5)|

= |ECG(r(A) = 10)| + |ECG(r(B) = 8)| + |ECG(r(C) = 5)| − |ECG(r(A) = 10) ∩ ECG(r(B) = 8)|

= 255 + 193 + 69 − 124 = 393.
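The counts in this example can be checked with a short script. The following is a minimal sketch, not the authors' implementation: the function name `ecg` is hypothetical, and it assumes that a grid is "inside" a circle when its distance to the center is strictly less than the radius, which is the reading that reproduces the numbers quoted above.

```python
from typing import Set, Tuple

Grid = Tuple[int, int]

X_UB, Y_UB = 99, 99  # AREA = [0, 99) x [0, 99), as in the Figure 1 example


def ecg(cx: int, cy: int, r: int) -> Set[Grid]:
    """Grids inside the circle of radius r centered at (cx, cy), clipped to AREA."""
    covered = set()
    for x in range(max(0, cx - r), min(X_UB, cx + r + 1)):
        for y in range(max(0, cy - r), min(Y_UB, cy + r + 1)):
            # Assumption: "inside" means strictly inside (distance < r).
            if (x - cx) ** 2 + (y - cy) ** 2 < r * r:
                covered.add((x, y))
    return covered


ecg_a = ecg(5, 75, 10)   # sensor A
ecg_b = ecg(12, 75, 8)   # sensor B
ecg_c = ecg(25, 50, 5)   # sensor C

# The set union counts overlapping grids once (inclusion-exclusion is implicit).
total = ecg_a | ecg_b | ecg_c
print(len(ecg_a), len(ecg_b), len(ecg_c), len(ecg_a & ecg_b), len(total))
# prints: 255 193 69 124 393
```

Using sets keeps the overlap handling trivial; for large numbers of sensors a boolean grid array would likely be faster.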

Moreover, from COST in WSN(S, AREA, RADIUS, COST), if the cost of the sensor located at (x, y) with radius r(x, y) is COST(r(x, y)), the total cost of the above deployment plan for all sensors in S is:

$$\sum_{\text{sensor at } (x, y) \in S} COST(r(x, y)) \tag{6}$$

3.3. Proposed Mathematical Model

It is assumed that WSN(S, AREA, RADIUS, COST) is the WSN under consideration, where the location, the radius levels, and the price of each radius level are provided in S, RADIUS, and COST for each sensor, respectively. The proposed budget-limited WSN sensing coverage problem needs to determine the radius level of each sensor so that the whole WSN has the maximal number of effectively covered grids under a limited budget, thereby improving the WSN service quality.

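A mathematical model for the problem is presented below, where COSTUB denotes the budget limit:

$$\text{Maximize } F = \left| \bigcup_{\text{sensor at } (x, y) \in S} ECG(r(x, y)) \right| \tag{7}$$

$$\text{subject to } \sum_{\text{sensor at } (x, y) \in S} COST(r(x, y)) \le COST_{UB} \tag{8}$$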

The objective function in Equation (7) maximizes the number of grids covered by sensors. The only constraint, Equation (8), limits the total cost of the sensors to the budget. Note that without Equation (8), each sensor could simply be set to its maximum radius level, which is impractical.

The proposed budget-limited WSN sensing coverage problem is a variant of the knapsack problem. Hence, the proposed problem is also NP-Hard, and no polynomial-time algorithm is known to solve it [22,23]. An efficient algorithm is therefore needed to solve this important and practical sensor problem. This study thus proposes a new algorithm that improves the SSO to overcome the NP-Hard obstacle and enhance the obtained WSN service quality.

4. Proposed Novel Bi-Tuning SSO

The proposed bi-tuning SSO is based on SSO and inherits all of its characteristics, i.e., it is population-based, uses fixed-length solutions, evolves from generation to generation like other algorithms in evolutionary computing, follows a leader like other algorithms in swarm intelligence, and applies the all-variable update such that each variable is updated based on the stepwise function update mechanism shown in Equation (9). The details, pseudocode, explanation, and example of the proposed bi-tuning SSO are presented in this section. Moreover, the proposed method is validated by numerical experiments, and the results obtained by the proposed bi-tuning SSO are compared with the state-of-the-art algorithms PSO, GA, and SSO in Section 6.

Solution Structure

As with most machine learning algorithms, the first step is to define the solution structure [1,2,3,4,5,6,7,8,9,37,38,39,40,41,42,43]. A solution in the proposed bi-tuning SSO for the proposed problem is defined as a vector, where the number of coordinates in each vector is the number of sensors, and the value, say k, of the ith coordinate of each vector is the radius level k of the ith sensor utilized in the WSN. For example, in Figure 1, let X5 = (4, 3, 4) be the 5th solution in the current generation. The radius levels of sensors A, B, and C are then 4, 3, and 4, i.e., r(5, 75) = 10, r(12, 75) = 8, and r(25, 50) = 5 in X5, respectively.
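The encoding can be illustrated with a small decoding routine. This is only a sketch: the level-to-radius tables below are hypothetical stand-ins for Table 1, and only the three entries used by X5 (level 4 → radius 10 for A, level 3 → radius 8 for B, level 4 → radius 5 for C) are taken from the example in the text.

```python
from typing import Dict, List, Sequence

SENSORS: List[str] = ["A", "B", "C"]

# Hypothetical level -> radius tables standing in for Table 1.
# Only the entries marked "from the text" come from the example above.
RADIUS_BY_LEVEL: Dict[str, Dict[int, int]] = {
    "A": {1: 4, 2: 6, 3: 8, 4: 10},   # level 4 -> 10 is from the text
    "B": {1: 4, 2: 6, 3: 8, 4: 10},   # level 3 -> 8 is from the text
    "C": {1: 2, 2: 3, 3: 4, 4: 5},    # level 4 -> 5 is from the text
}


def decode(solution: Sequence[int]) -> List[int]:
    """Map a solution vector of radius levels to the radius of each sensor."""
    if len(solution) != len(SENSORS):
        raise ValueError("one radius level is required per sensor")
    return [RADIUS_BY_LEVEL[name][level] for name, level in zip(SENSORS, solution)]


x5 = (4, 3, 4)
print(decode(x5))  # prints: [10, 8, 5]
```

Because every solution has one coordinate per sensor, the vector length stays fixed across generations, which is what the all-variable update relies on.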

5. Results

Proposed by Yeh in 2009 [31], the simplified swarm optimization (SSO) is said to be the simplest of all machine learning methods [13,14,21,22]. The SSO was initially called the discrete PSO (DPSO) to tackle the shortcomings of the PSO in discrete problems and is appealing due to its smooth and straightforward implementation, a fast convergence rate, and fewer parameters to tune, which has been shown by numerous related works of SSO, such as optimization of the vehicle routing in a supply chain [47], solving of reliability redundancy allocation problems [48,49], optimization of related problems in wireless sensor networks [42,50], resolving of redundancy allocation problems considering uncertainty [39,51], optimization of the capacitated facility location problems [52], improvement of the update mechanism of SSO [53], recognition of lesions in medical images [54], resolving of service in a traffic network [55], and optimization of numerous types of network research [56,57,58,59,60,61,62,63].

As a population-based stochastic optimization technique, the SSO belongs to the category of swarm intelligence methods with leaders to follow. The SSO is also an evolutionary computational method used to update the solution from generation to generation.

Moreover, SSO is a very influential tool in data mining for certain datasets [13,14,21] and is therefore implemented to solve the proposed budget-limited WSN sensing coverage problem.

5.1. Parameters

Each AI algorithm has its parameters in its update mechanism and/or the selection procedure, e.g., crossover rate cx and mutation rate cm in GA, c1 and c2 in PSO, and Cg, Cp, and Cw in the SSO, etc.

It is assumed that Xi, Pi, and PgBest are the ith solution, the best ith solution in its evolutionary history, and the best solution among all solutions, respectively. Let xi,j, pi,j, and pgBest,j be the jth variable of Xi, Pi, and PgBest, respectively. SSO adopts the all-variable update, i.e., all variables need to be updated, such that xi,j is obtained from pgBest,j, pi,j, xi,j, or a randomly generated feasible value x with probabilities cg, cp, cw, and cr, respectively.

Because cg + cp + cw + cr = 1, there are three parameters to tune in SSO: Cg = cg, Cp = Cg + cp, and Cw = Cp + cw. Additionally, in the proposed algorithm, Cg = 0.5, Cp = 0.95, and Cw = 0.95.

5.2. Update Mechanism

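Hence, the update procedure of each variable can be presented as a stepwise function:

$$x_{i,j}^{t+1} = \begin{cases} p_{gBest,j} & \text{if } \rho_{[0,1]} \in [0, C_g) \\ p_{i,j} & \text{if } \rho_{[0,1]} \in [C_g, C_p) \\ x_{i,j}^{t} & \text{if } \rho_{[0,1]} \in [C_p, C_w) \\ x & \text{if } \rho_{[0,1]} \in [C_w, 1] \end{cases} \tag{9}$$

where ρ[0,1] is a random number generated uniformly within [0,1].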

From Equation (9), the update in SSO is simple to code, runs efficiently, and is flexible and made-to-fit [20,23,24,25,26,27,28,46]. Each AI algorithm has its own update mechanism, e.g., crossover and mutation in GA, the vectorized update mechanism in PSO, etc. The stepwise update function is the update mechanism unique to SSO [23,24,37,38,39,40,41,42]. All SSO variants are based on their made-to-fit stepwise function to update solutions.

The stepwise-function update mechanism shown in Equation (9) is powerful, straightforward, and efficient with proven success, as evidenced through successful applications, e.g., the redundancy allocation problem [37,38], disassembly sequencing problem [39,40], artificial neural network [41], energy problems [42], etc. Moreover, the stepwise-function update mechanism allows for greater ease of customization to made-to-fit by replacing any item of its stepwise function with other algorithms [38,41], even hybrid algorithms [43] applied in sequence or parallel [41], to address different problems as opposed to tedious customization of other algorithms [23,24,37,38,39,40,41,42,43].

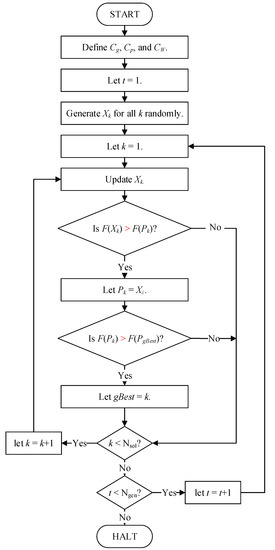

5.3. Pseudocode, Flowchart, and Example

The SSO is very easy to code using any computer language and its pseudocode is provided below [17,18,35,36,37,38,39]:

- STEP S0. Generate Pi = Xi randomly and calculate F(Pi) = F(Xi) for i = 1, 2, …, Nsol; find gBest; and let t = 1 and k = 1.

- STEP S1. Update Xk based on Equation (9).

- STEP S2. If F(Xk) > F(Pk), let Pk = Xk. Otherwise, go to STEP S4.

- STEP S3. If F(Pk) > F(PgBest), let gBest = k.

- STEP S4. If k < Nsol, let k = k + 1 and go to STEP S1.

- STEP S5. If t < Ngen, let t = t + 1, k = 1, and go to STEP S1. Otherwise, halt.

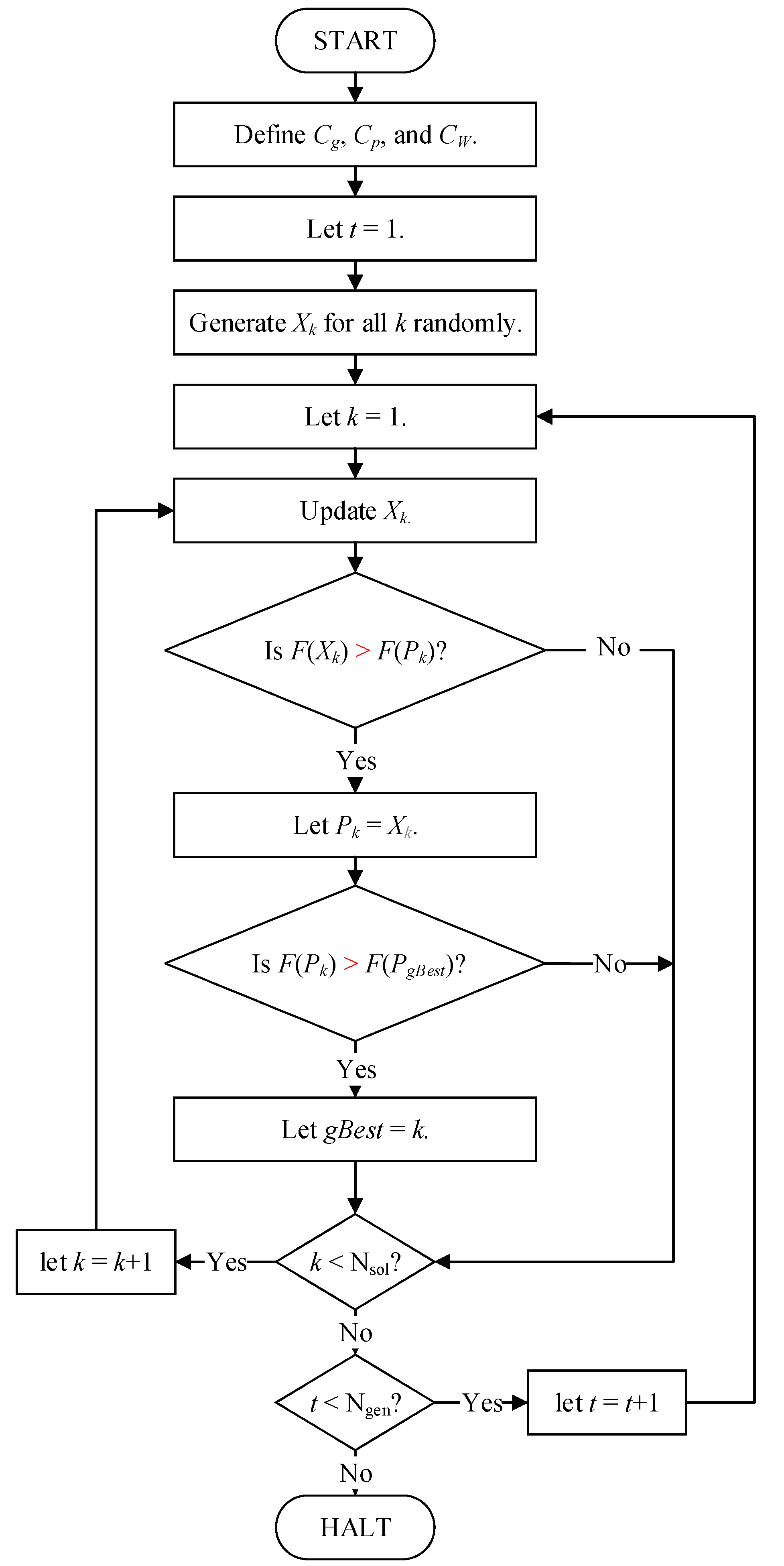

The flowchart of the above pseudocode is given in Figure 2:

Figure 2.

The flowchart of SSO.

STEP S0 initializes all solutions randomly because the SSO is a population-based algorithm. STEP S1 implements the SSO stepwise function shown in Equation (9) to update the solution. STEPs S2 and S3 test whether Pk is replaced with Xk and PgBest is replaced with Pk, respectively. STEP S4 moves to the next solution in the current generation. STEP S5 is the stopping criterion, which is the number of generations. Note that the stopping criterion is changed to the run time in this study, and the details are discussed in Section 6.1.
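The steps above can be sketched in Python as follows. This is an illustrative sketch rather than the authors' code (which was written in C++): the function name `sso`, the `levels` argument (the highest radius level allowed for each variable), the default parameter values, and the toy fitness function are all assumptions made for the example.

```python
import random
from typing import Callable, List, Sequence, Tuple


def sso(fitness: Callable[[Sequence[int]], float],
        levels: Sequence[int],
        n_sol: int = 20, n_gen: int = 50,
        Cg: float = 0.4, Cp: float = 0.7, Cw: float = 0.9,
        seed: int = 0) -> Tuple[List[int], float]:
    """Maximize `fitness` over vectors whose jth value lies in 1..levels[j]."""
    rng = random.Random(seed)
    n_var = len(levels)
    # STEP S0: random initial solutions; each pBest starts as the solution itself.
    X = [[rng.randint(1, levels[j]) for j in range(n_var)] for _ in range(n_sol)]
    P = [x[:] for x in X]
    FP = [fitness(p) for p in P]
    g = max(range(n_sol), key=FP.__getitem__)      # index of gBest
    for _ in range(n_gen):                         # STEP S5: generation loop
        for k in range(n_sol):                     # STEP S4: next solution
            for j in range(n_var):                 # STEP S1: stepwise update
                rho = rng.random()
                if rho < Cg:
                    X[k][j] = P[g][j]              # copy from gBest
                elif rho < Cp:
                    X[k][j] = P[k][j]              # copy from pBest
                elif rho >= Cw:
                    X[k][j] = rng.randint(1, levels[j])  # random feasible value
                # otherwise (Cp <= rho < Cw) the variable keeps its value
            fx = fitness(X[k])
            if fx > FP[k]:                         # STEP S2: update pBest
                P[k], FP[k] = X[k][:], fx
                if fx > FP[g]:                     # STEP S3: update gBest
                    g = k
    return P[g][:], FP[g]


# Toy usage: five variables with levels 1..4; the toy fitness is just the sum.
best, best_f = sso(lambda x: float(sum(x)), levels=[4] * 5)
print(best, best_f)
```

Replacing the generation loop with a time check yields the run-time stopping criterion used in the experiments.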

For example, let Cg = 0.4, Cp = 0.7, Cw = 0.9, and ρ = (ρ1, ρ2, ρ3, ρ4, ρ5) = (0.53, 0.78, 0.16, 0.97, 0.32). Assume that, in the second generation, we have X15 = (4, 3, 2, 1, 4), P15 = (1, 4, 2, 3, 2), and PgBest = (1, 4, 2, 3, 2), which are the 15th solution in the second generation, the best 15th solution found up to the second generation, and the best solution found up to the second generation, respectively. Now, we update X15 to obtain the new X15 of the third generation, which is presented in Table 2, based on the stepwise function of the SSO update mechanism provided in Equation (9).

Table 2.

An example of the SSO update process.

From the above pseudocode, flowchart, and example, we can find that the update mechanism of the SSO is simple, convenient, and efficient.
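The example update can be traced with a few lines of code. In this sketch the helper `stepwise_update` is a hypothetical name, the intervals are assumed to be half-open as [0, Cg), [Cg, Cp), [Cp, Cw), and [Cw, 1], and the random value drawn for the fourth variable (here 3) is invented purely for illustration, since it depends on the random generator.

```python
from typing import Callable, List, Sequence


def stepwise_update(x: Sequence[int], p: Sequence[int], g: Sequence[int],
                    rho: Sequence[float], Cg: float, Cp: float, Cw: float,
                    random_value: Callable[[], int]) -> List[int]:
    """Apply the SSO stepwise function to every variable of one solution."""
    new_x = []
    for xj, pj, gj, r in zip(x, p, g, rho):
        if r < Cg:
            new_x.append(gj)              # take the variable from gBest
        elif r < Cp:
            new_x.append(pj)              # take the variable from pBest
        elif r < Cw:
            new_x.append(xj)              # keep the current value
        else:
            new_x.append(random_value())  # draw a new feasible value
    return new_x


X15 = [4, 3, 2, 1, 4]
P15 = [1, 4, 2, 3, 2]
PgBest = [1, 4, 2, 3, 2]
rho = [0.53, 0.78, 0.16, 0.97, 0.32]

# random_value returns a fixed, hypothetical draw so the trace is reproducible.
new_X15 = stepwise_update(X15, P15, PgBest, rho, 0.4, 0.7, 0.9, lambda: 3)
print(new_X15)  # prints: [1, 3, 2, 3, 2]
```

Here ρ1 = 0.53 selects p15,1, ρ2 = 0.78 keeps x15,2, ρ3 = 0.16 and ρ5 = 0.32 select the gBest values, and ρ4 = 0.97 triggers a random value.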

5.4. Fitness Function and Penalty Fitness Function

The fitness function F(X) guides the search toward the optimum in artificial intelligence algorithms such as the SSO, GA, and PSO. Suitable fitness functions vary depending on the optimization problem defined by the corresponding application. In this study, Equation (7) is adopted as the fitness function, which is to be maximized in the proposed problem. For example, without considering the budget limit, F(X5) = 393, as discussed in Equation (4) for X5 = (4, 3, 4).

The penalty fitness function Fp(X) helps deal with problems in which the constraints make it difficult to generate feasible solutions, e.g., Equation (8). Penalty functions can force infeasible solutions near the feasible boundary back to the feasible region by subtracting a large positive value from the fitness in maximization problems or adding it to the fitness in minimization problems.

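If this positive value is not large enough, the final solution may not be feasible. Hence, a novel self-adaptive penalty function based on the budget and the deployment plan is provided below:

$$F_p(X) = F(X) - PENALTY(X) \tag{10}$$

where:

$$PENALTY(X) = \begin{cases} \left( COST_{UB} \times COST(X) \right)^2 & \text{if } COST(X) > COST_{UB} \\ 0 & \text{otherwise} \end{cases} \tag{11}$$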

For example, let COSTUB = 10 in Figure 1. The total cost for the fifth solution X5 = (4, 3, 4) is COST(X5) = COST(4, 3, 4) = 12 from Equation (6). Because COST(X5) =12 > 10, we have:

PENALTY(X5) = (10 × 12)² = 14,400.

The penalty fitness function of X5 is:

Fp(X5) = F(X5) − PENALTY(X5) = 393 − 14,400 = −14,007.

Here, the penalty fitness function subtracts the penalty from the fitness function if the cost exceeds COSTUB.
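The arithmetic of this example can be reproduced with a short sketch. The function names are hypothetical, and the general form of the penalty, (COSTUB × COST(X))² when the budget is exceeded and 0 otherwise, is an assumption inferred from the worked numbers above.

```python
def penalty(cost: float, cost_ub: float) -> float:
    """Penalty inferred from the worked example: (COSTUB * COST)^2 if over budget."""
    return (cost_ub * cost) ** 2 if cost > cost_ub else 0


def penalty_fitness(f: float, cost: float, cost_ub: float) -> float:
    """Fitness minus the penalty; unchanged for solutions within the budget."""
    return f - penalty(cost, cost_ub)


# X5 = (4, 3, 4): F(X5) = 393, COST(X5) = 12, COSTUB = 10
print(penalty(12, 10))               # prints: 14400
print(penalty_fitness(393, 12, 10))  # prints: -14007

# A solution within the budget keeps its original fitness.
print(penalty_fitness(393, 9, 10))   # prints: 393
```

Because the penalty grows with both the budget and the cost, an infeasible solution in this example always scores below any feasible one, whose fitness cannot be negative.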

5.5. The Bi-Tuning Method

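All machine learning algorithms have parameters in their update procedure and/or selection procedure. Thus, there is a need to tune these parameters for better results. In the SSO, there are two main ways to improve the solution quality: parameter-tuning, which tunes the parameters, and item-tuning, which removes an item from Equation (9), e.g., Equations (12) and (13) remove the second and the third items from Equation (9), respectively. However, neither of them can perform parameter-tuning and item-tuning at the same time:

$$x_{i,j}^{t+1} = \begin{cases} p_{gBest,j} & \text{if } \rho_{[0,1]} \in [0, C_g) \\ x_{i,j}^{t} & \text{if } \rho_{[0,1]} \in [C_g, C_w) \\ x & \text{if } \rho_{[0,1]} \in [C_w, 1] \end{cases} \tag{12}$$

$$x_{i,j}^{t+1} = \begin{cases} p_{gBest,j} & \text{if } \rho_{[0,1]} \in [0, C_g) \\ p_{i,j} & \text{if } \rho_{[0,1]} \in [C_g, C_p) \\ x & \text{if } \rho_{[0,1]} \in [C_p, 1] \end{cases} \tag{13}$$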

Hence, a new bi-tuning method is provided to achieve the above goal, i.e., to determine the best parameters Cg, Cp, and Cw and, at the same time, whether any item in Equation (9) should be removed to obtain a better result.

Seven different settings are adopted and named SSO1–SSO7, as shown in Table 3.

Table 3.

Seven parameter settings.

From Table 3, if Cg = Cp, as in SSO2, SSO3, and SSO5, item 2 is redundant, i.e., Pi is never used, as in Equation (12). If Cp = Cw, as in SSO4, SSO6, and SSO7, item 3 is redundant, i.e., xi,j must always be replaced with another value, as shown in Equation (13).

Additionally, SSO2 and SSO4 are used to test whether having a larger value of cr = 1 − Cw is useful in improving the efficiency and solution quality. Similarly, SSO5 and SSO7 are applied to determine whether having a larger value of cg = Cg improves the efficiency and solution quality.

5.6. Pseudocode of Bi-Tuning SSO

The bi-tuning SSO is a new SSO that can tune both the parameters, i.e., Cg, Cp, and Cw, and the update mechanism of the traditional SSO efficiently and easily. The pseudocode of the proposed bi-tuning SSO for the budget-limited WSN sensing coverage problem is as follows:

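- STEP 0. Let i = 1.

- STEP 1. Run the SSO with the ith parameter setting SSOi in Table 3, let Gi be the best solution obtained, and let i = i + 1.

- STEP 2. If i < 8, go to STEP 1.

- STEP 3. The best one among G1, G2, …, and G7 is the one we need.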
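The procedure can be sketched as a loop over the seven settings. This is an illustration only: the (Cg, Cp, Cw) values below are hypothetical placeholders and not the values of Table 3; they merely respect the structure described in the text (Cg = Cp for SSO2, SSO3, and SSO5; Cp = Cw for SSO4, SSO6, and SSO7). The names `run_sso` and `bi_tuning` and the toy fitness function are likewise assumptions.

```python
import random
from typing import Callable, Dict, List, Sequence, Tuple

# Hypothetical (Cg, Cp, Cw) settings; NOT the values of Table 3.
SETTINGS: Dict[str, Tuple[float, float, float]] = {
    "SSO1": (0.4, 0.7, 0.9),   # traditional SSO: all four items present
    "SSO2": (0.4, 0.4, 0.8),   # Cg = Cp: pBest item removed, larger cr
    "SSO3": (0.4, 0.4, 0.9),   # Cg = Cp
    "SSO4": (0.4, 0.8, 0.8),   # Cp = Cw: keep-current item removed, larger cr
    "SSO5": (0.6, 0.6, 0.9),   # Cg = Cp, larger cg
    "SSO6": (0.4, 0.9, 0.9),   # Cp = Cw
    "SSO7": (0.6, 0.9, 0.9),   # Cp = Cw, larger cg
}

Fitness = Callable[[Sequence[int]], float]


def run_sso(fitness: Fitness, levels: Sequence[int],
            Cg: float, Cp: float, Cw: float,
            n_sol: int = 20, n_gen: int = 30, seed: int = 0) -> Tuple[List[int], float]:
    """One SSO run; an empty interval (Cg = Cp or Cp = Cw) drops that item."""
    rng = random.Random(seed)
    X = [[rng.randint(1, ub) for ub in levels] for _ in range(n_sol)]
    P = [x[:] for x in X]
    FP = [fitness(p) for p in P]
    g = max(range(n_sol), key=FP.__getitem__)
    for _ in range(n_gen):
        for k in range(n_sol):
            for j, ub in enumerate(levels):
                rho = rng.random()
                if rho < Cg:
                    X[k][j] = P[g][j]
                elif rho < Cp:
                    X[k][j] = P[k][j]
                elif rho >= Cw:
                    X[k][j] = rng.randint(1, ub)
            fx = fitness(X[k])
            if fx > FP[k]:
                P[k], FP[k] = X[k][:], fx
                if fx > FP[g]:
                    g = k
    return P[g][:], FP[g]


def bi_tuning(fitness: Fitness, levels: Sequence[int]):
    """STEPs 0-2: run every setting; STEP 3: keep the best result."""
    results = {name: run_sso(fitness, levels, *params)
               for name, params in SETTINGS.items()}
    best_name = max(results, key=lambda name: results[name][1])
    return best_name, results


best_name, results = bi_tuning(lambda x: float(sum(x)), levels=[4] * 6)
print(best_name, results[best_name])
```

Because the seven runs are independent, they could also be executed in parallel; the sequential loop simply mirrors the pseudocode.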

Determining the time complexity of an algorithm is a critical task, and the time complexity is usually stated for the worst case in O-notation. The time complexity of the fitness calculation depends on the problem and was ignored in some studies. Hence, due to the simplicity of the SSO, the computational complexity of the proposed bi-tuning SSO is determined mainly by the update mechanism.

The proposed bi-tuning SSO implements the all-variable update mechanism, which updates Nvar variables for each solution in each generation, and it runs seven SSO settings. Hence, the time complexity of the proposed bi-tuning SSO is O(7 × Ngen × Nsol × Nvar). Furthermore, the practical performance of the proposed bi-tuning SSO was tested on nine problems, as described in Section 6.

6. Numerical Experiments

To demonstrate the performance of the proposed bi-tuning SSO, three numerical experiments with three different values of Nvar = 20, 100, and 300 were implemented based on the bi-tuning method described in Section 5.5. No published work on the same problem could be found, so the experimental results of the bi-tuning SSO could not be compared with other published results. Instead, they were compared with the state-of-the-art algorithms PSO and GA and with the traditional SSO, which is listed as SSO1 in Table 3.

6.1. Parameters and Experimental Environment Settings

The performance of each AI algorithm is always affected by the parameter setting and experimental environment setting. The parameter settings for both GA and PSO are listed below:

- GA: the crossover rate cx = 0.7, the mutation rate cm = 0.3, and elite selection.

- PSO: c1 = c2 = 2.0, w = 0.95, the lower and upper bounds of velocities VLB = −2 and VUB = 2, and the lower and upper bounds of positions XLB = 1 and XUB = the maximum radius.

Nine algorithms were tested, i.e., GA, PSO, and the seven SSO variants of the bi-tuning SSO based on Table 3. For a fair performance evaluation, each algorithm was run 30 times, i.e., Nrun = 30, with Nsol = 100, and the stopping criterion was the run time, which was set to Nvar/10 s.
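A run-time stopping criterion of this kind can be sketched as below. The helper names are hypothetical, and the sketch assumes that the clock is checked once per generation, so a run may slightly exceed its budget by the duration of the last generation.

```python
import time
from typing import Callable


def time_budget_seconds(n_var: int) -> float:
    """Run-time limit used as the stopping criterion: Nvar / 10 seconds."""
    return n_var / 10.0


def run_until(budget_s: float, one_generation: Callable[[], None]) -> int:
    """Repeat `one_generation` until the time budget is spent; return Ngen."""
    n_gen = 0
    start = time.perf_counter()
    while time.perf_counter() - start < budget_s:
        one_generation()   # e.g., update all Nsol solutions once
        n_gen += 1
    return n_gen


for n_var in (20, 100, 300):
    print(n_var, time_budget_seconds(n_var))   # budgets of 2.0, 10.0, and 30.0 s

# Tiny demonstration with a 0.05 s budget and a dummy generation.
generations = run_until(0.05, lambda: sum(range(1000)))
print(generations)
```

With a fixed time budget, faster update mechanisms complete more generations, which is why Ngen is reported alongside the fitness values.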

All algorithms were tested on three datasets, where the coordinate of X has a uniform distribution: 0 ~ (square of the number of vertices/32767)/(the number of vertices) and the coordinate of Y has a uniform distribution: 0 ~ (square of the number of vertices/32767)-the coordinate of X*(the number of vertices). Without loss of generality, each dataset has 1000 data of which the ith data in the dataset is a 2-tuple vector (x, y) representing the location of the ith sensor and it was generated randomly within [0,99] × [0,99] based on the grid concept. To verify the capacity of the proposed bi-tuning SSO, each dataset was separated into sub datasets based on the number of sensors Nvar = 20, 100, and 300 by choosing the first Nvar data to denote small-sized, middle-sized, and larger-sized problems.

All nine algorithms including the proposed bi-tuning SSO were coded in DEV C++ on a 64-bit Windows 10 PC, implemented on an Intel Core i7-6650U CPU @ 2.20 GHz notebook with 16 GB of memory.

6.2. Analysis of Results

Descriptive statistics were computed for the nine algorithms, i.e., GA, PSO, and the seven SSO variants of the proposed bi-tuning SSO (denoted by SSO1 to SSO7). The statistics are the maximum (denoted by MAX), i.e., the best solution, the minimum (denoted by MIN), the average (denoted by AVG), and the standard deviation (denoted by STD). They are reported for the run time (denoted by T, in seconds), the fitness function value (denoted by F), the number of generations needed to obtain the optimal solution (denoted by Best), the number of generations run during the provided time (denoted by Ngen), and the total cost (denoted by Cost). The best results among the nine algorithms are shown in bold. Table 4, Table 5 and Table 6 give the experimental results obtained by all algorithms for the first to the third dataset of the small-sized problem; Table 7, Table 8 and Table 9 give the results for the first to the third dataset of the middle-sized problem; and Table 10, Table 11 and Table 12 give the results for the first to the third dataset of the larger-sized problem.
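The four statistics can be computed per algorithm and per measure from the 30 run results. The sketch below uses invented fitness values (not taken from the tables) and assumes the sample standard deviation, since the text does not state whether the sample or population form was used.

```python
import statistics
from typing import Dict, Sequence


def describe(values: Sequence[float]) -> Dict[str, float]:
    """MAX, MIN, AVG, and STD of one measure over repeated runs."""
    return {
        "MAX": max(values),
        "MIN": min(values),
        "AVG": statistics.mean(values),
        # Assumption: sample standard deviation (n - 1 in the denominator).
        "STD": statistics.stdev(values),
    }


# Invented fitness values of four runs, for illustration only.
runs = [9978, 9980, 9982, 9980]
summary = describe(runs)
print(summary)
```

The same helper applies unchanged to T, Best, Ngen, and Cost.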

Table 4.

The experimental results obtained by all algorithms for the first dataset of the small-sized problem.

Table 5.

The experimental results obtained by all algorithms for the second dataset of the small-sized problem.

Table 6.

The experimental results obtained by all algorithms for the third dataset of the small-sized problem.

Table 7.

The experimental results obtained by all algorithms for the first dataset of the middle-sized problem.

Table 8.

The experimental results obtained by all algorithms for the second dataset of the middle-sized problem.

Table 9.

The experimental results obtained by all algorithms for the third dataset of the middle-sized problem.

Table 10.

The experimental results obtained by all algorithms for the first dataset of the larger-sized problem.

Table 11.

The experimental results obtained by all algorithms for the second dataset of the larger-sized problem.

Table 12.

The experimental results obtained by all algorithms for the third dataset of the larger-sized problem.

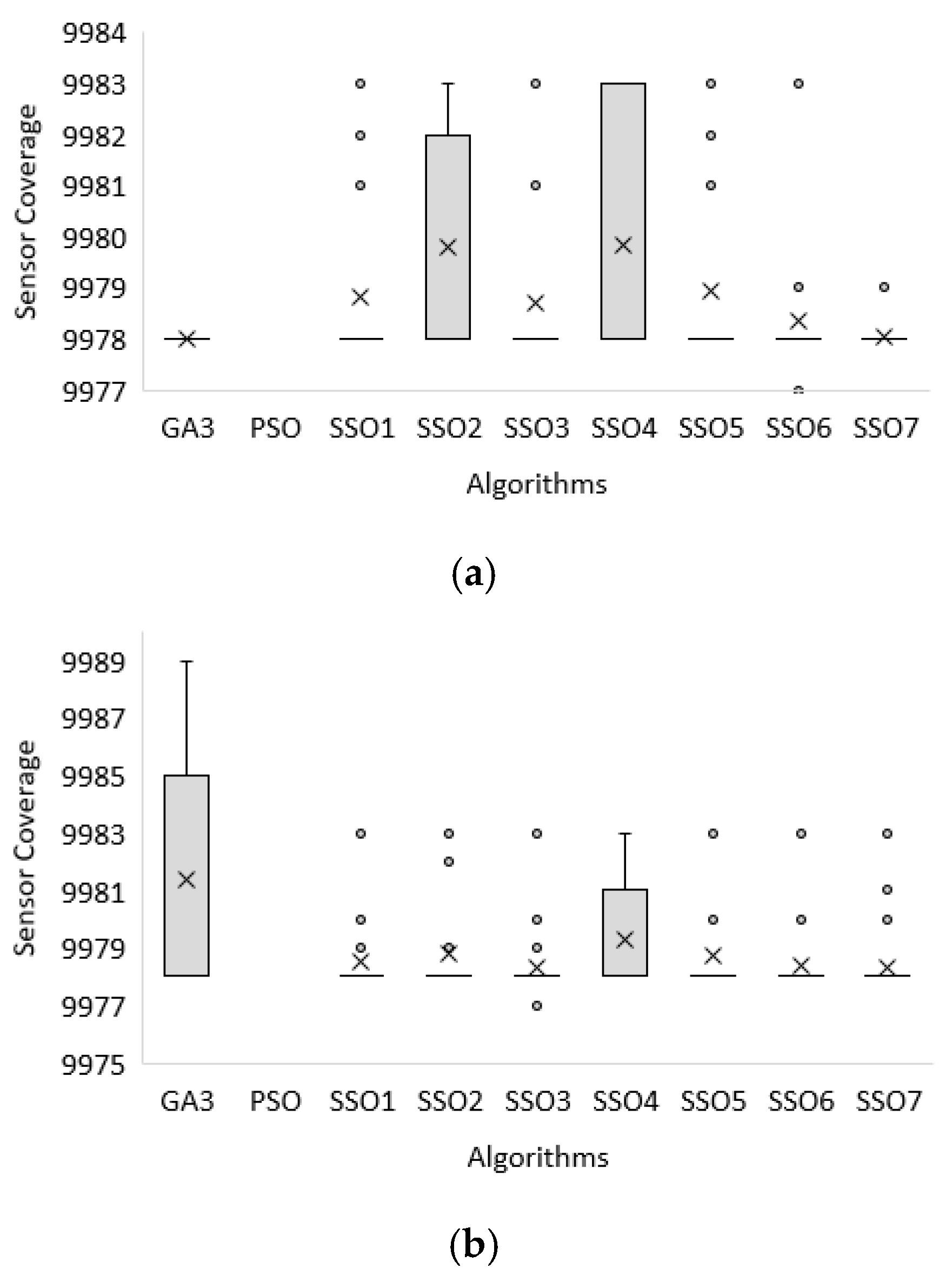

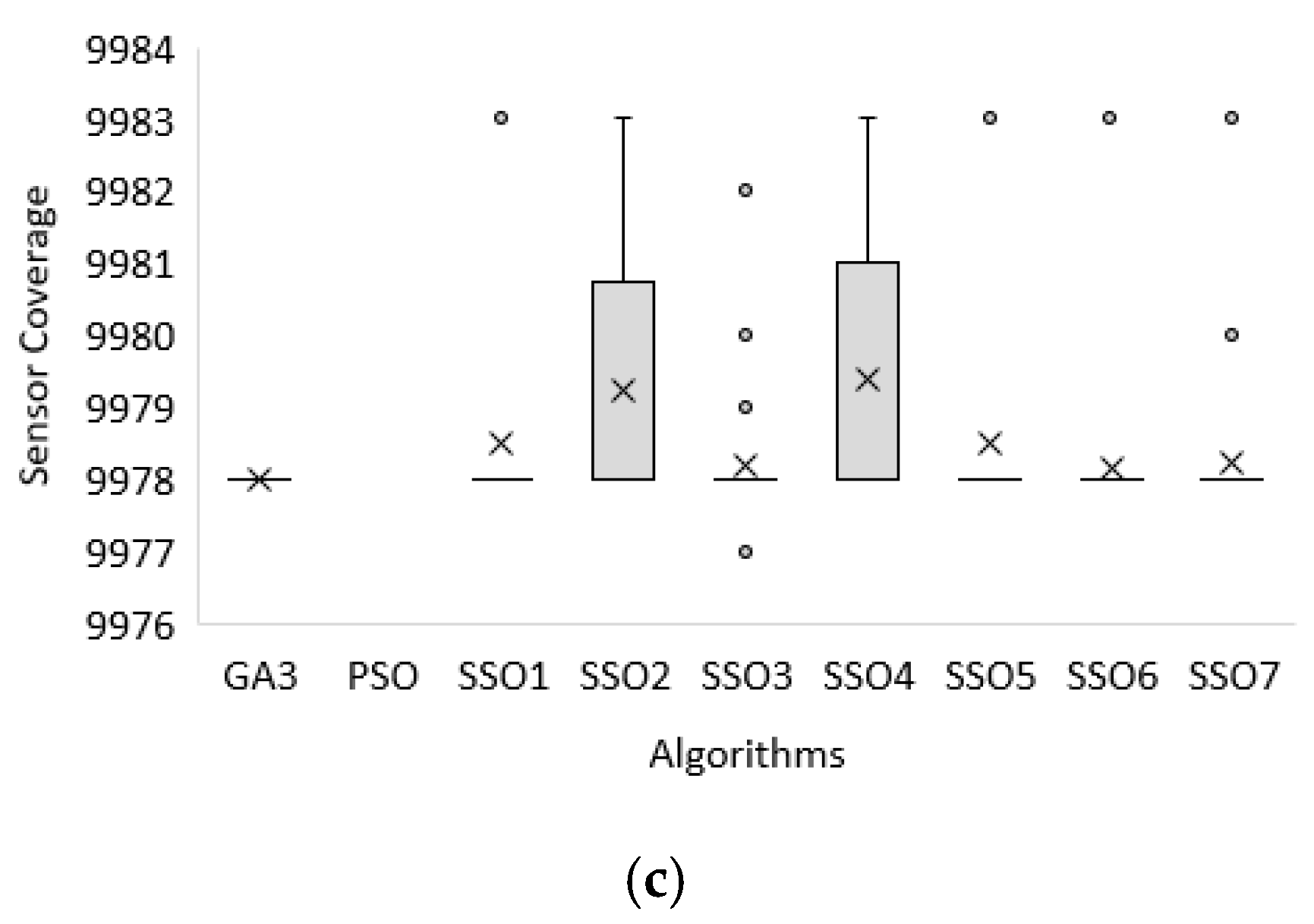

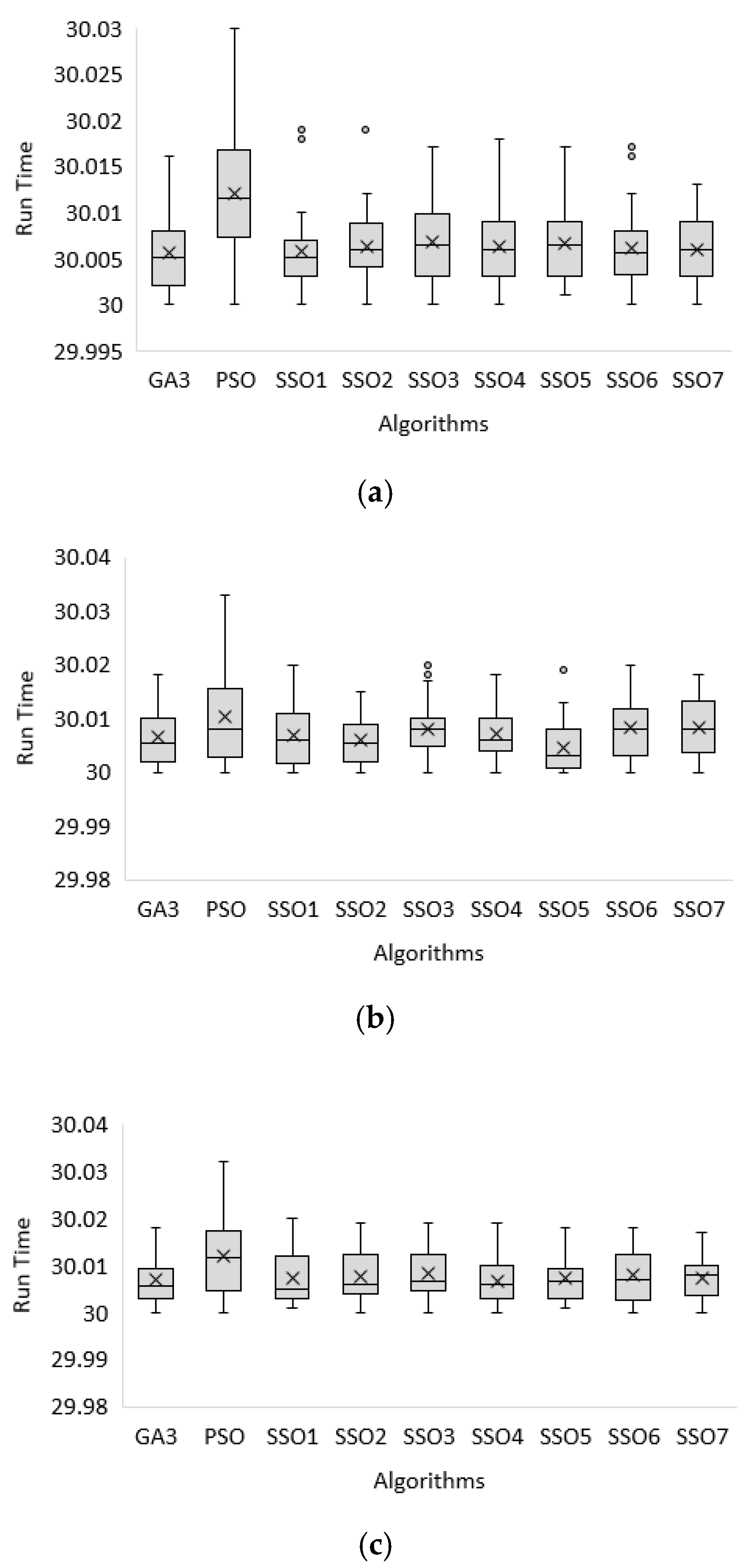

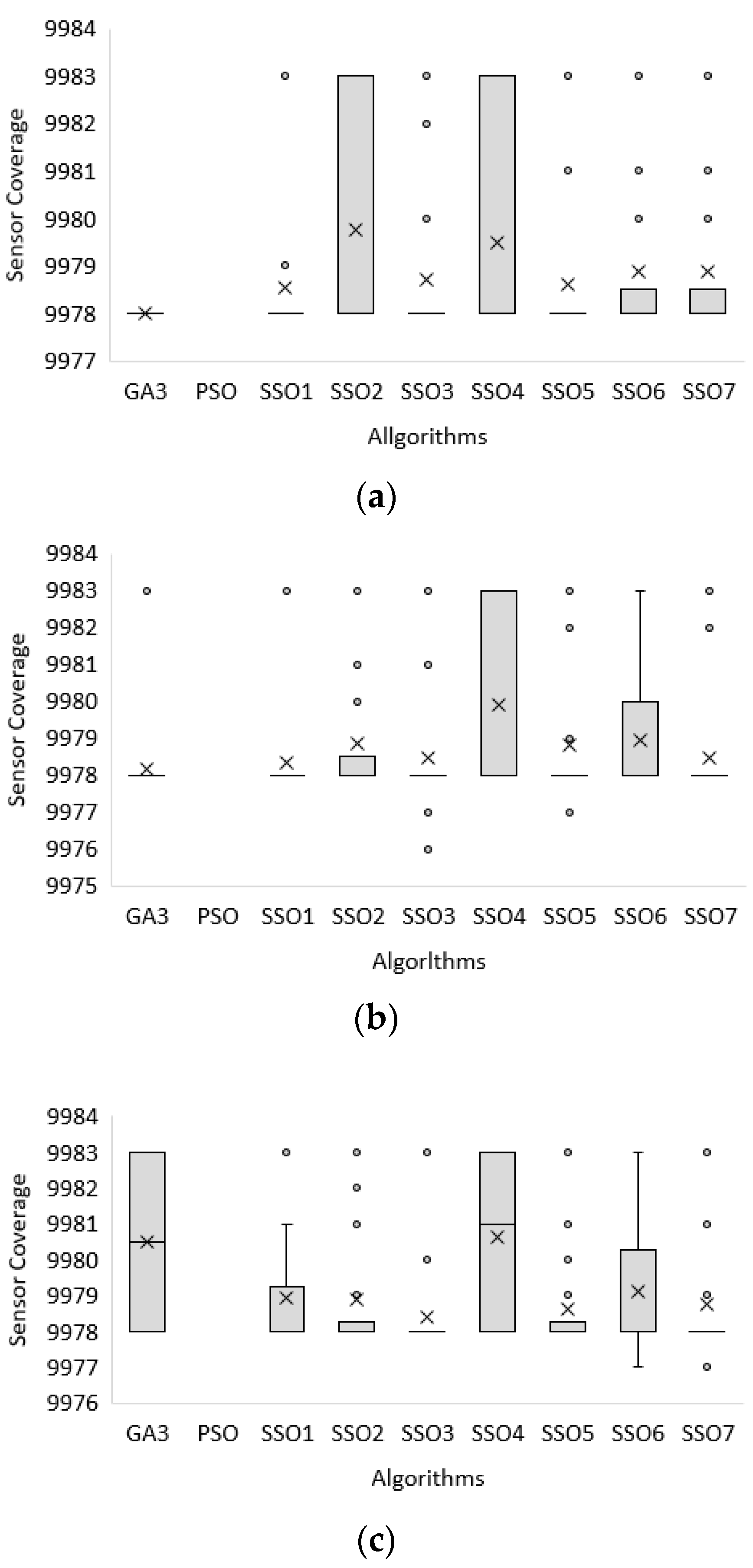

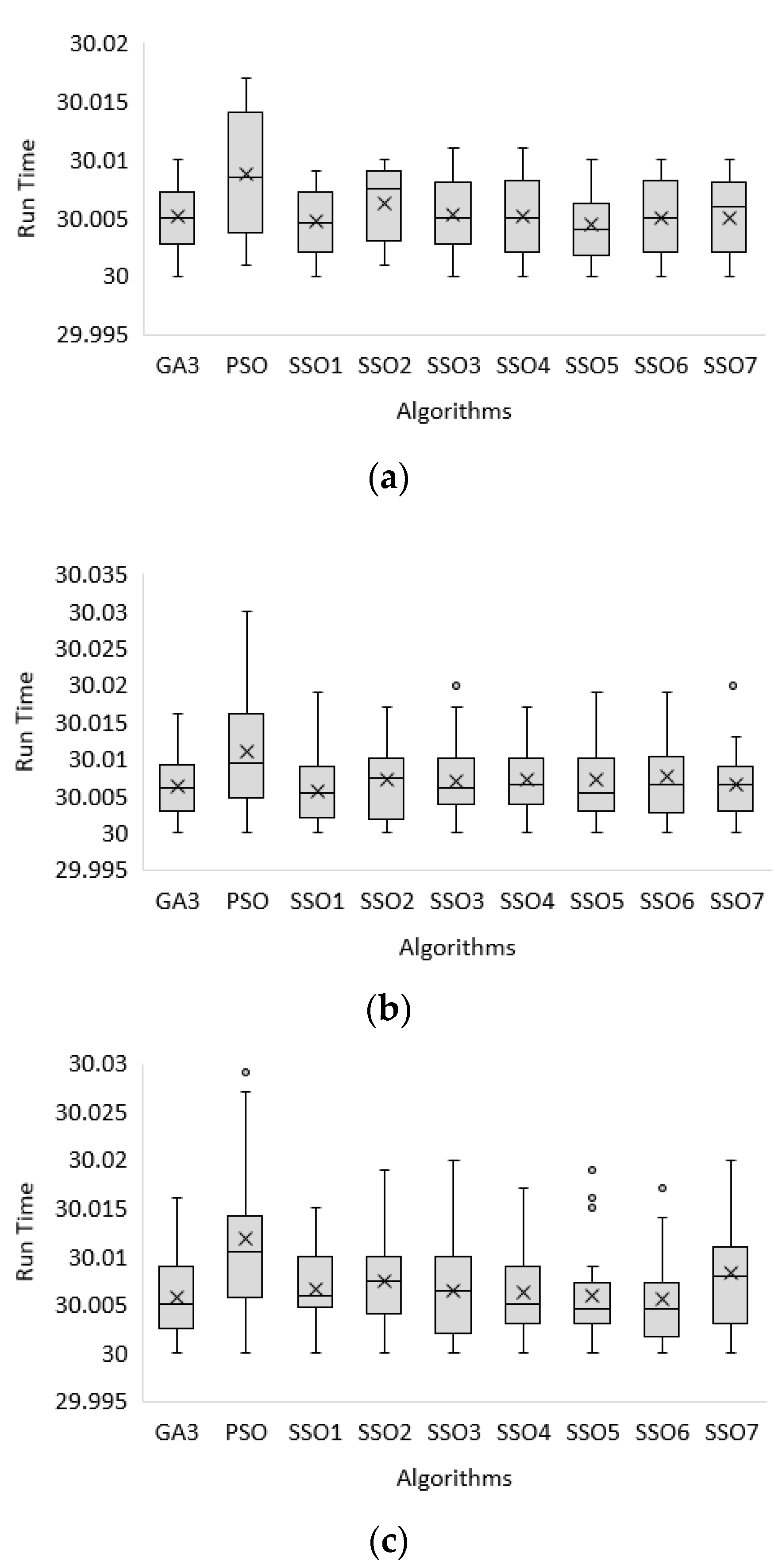

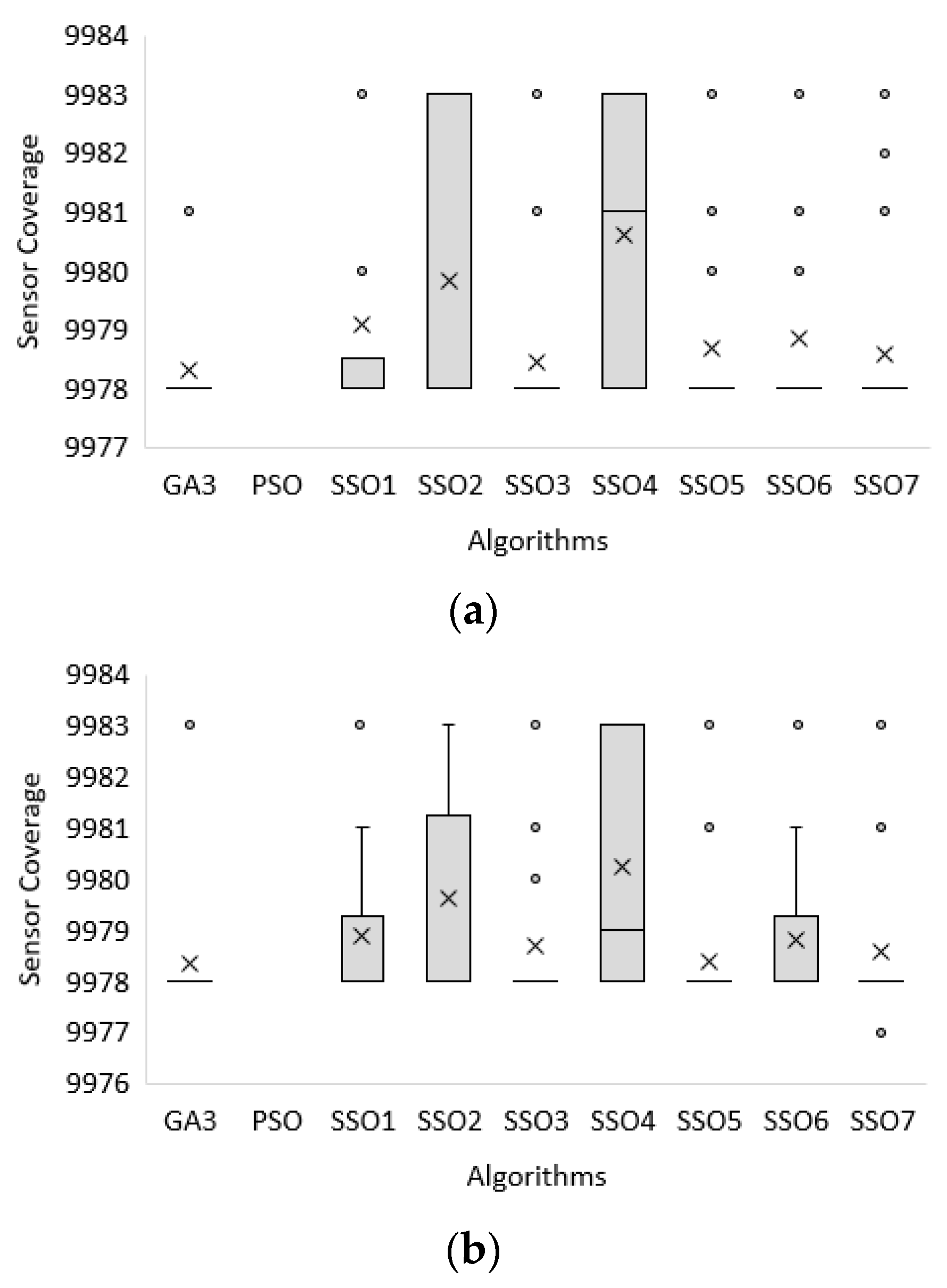

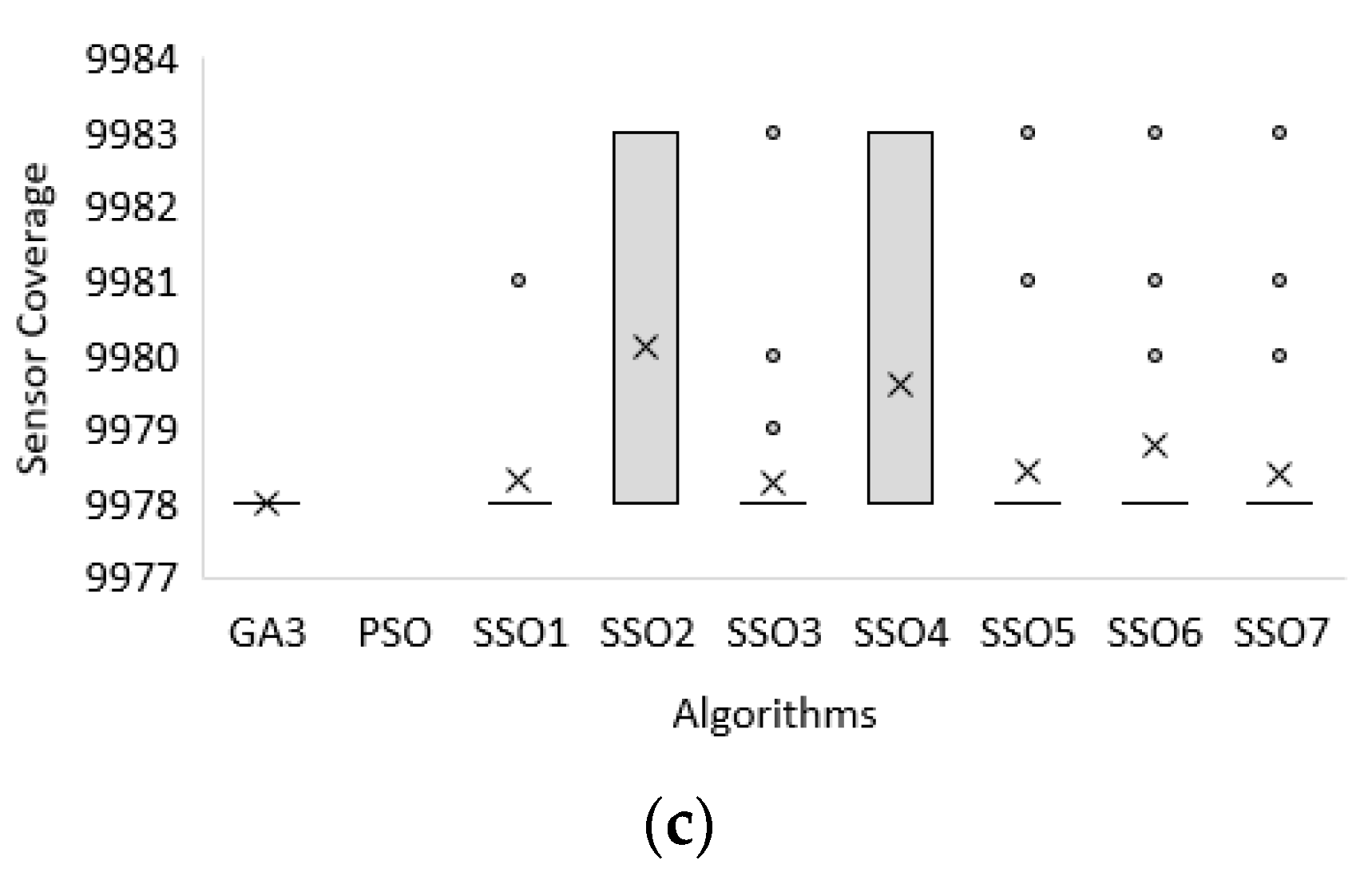

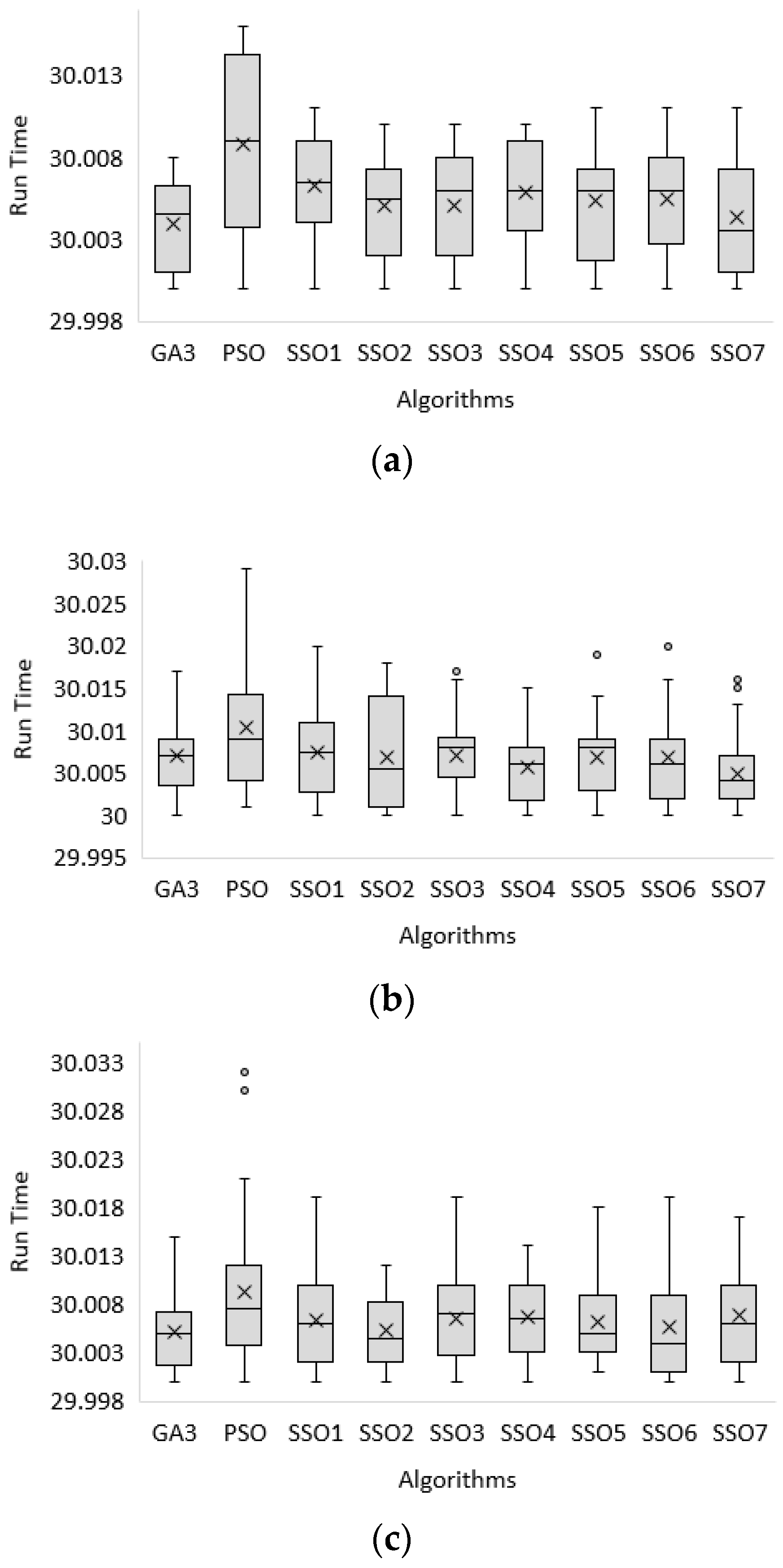

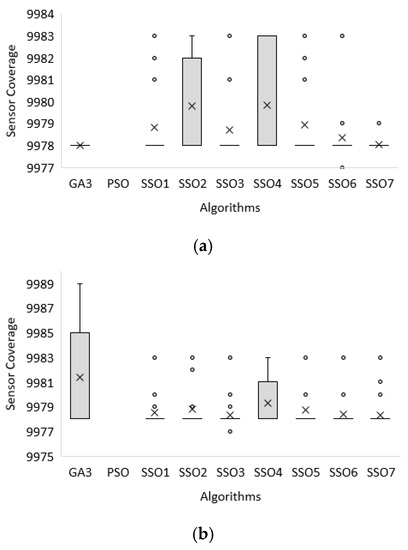

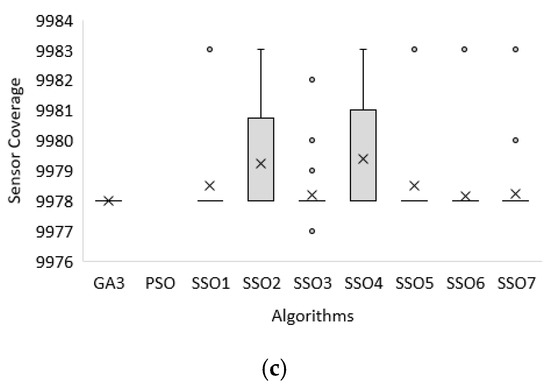

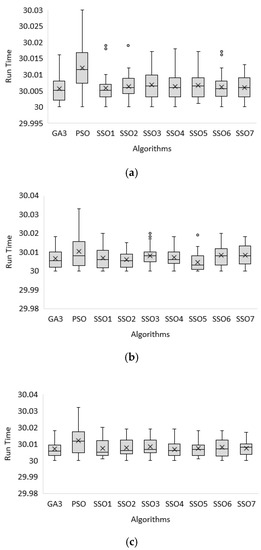

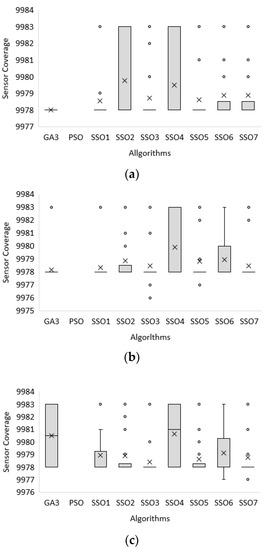

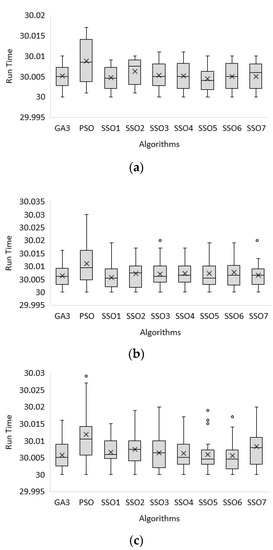

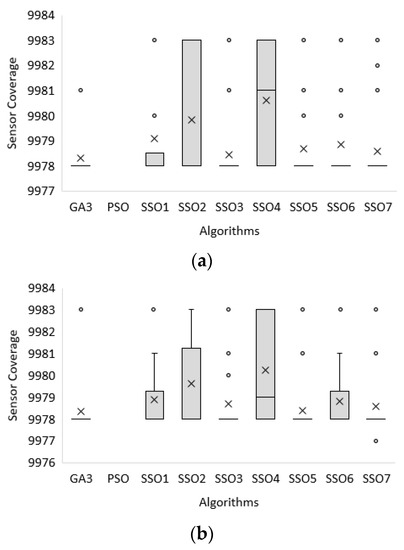

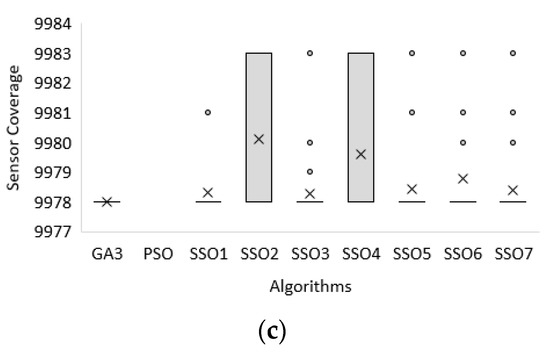

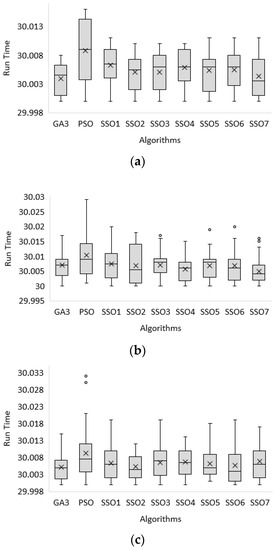

For a more detailed analysis of the experimental results, boxplots were adopted to show the performance, including the maximum, the interquartile range (75th percentile, median, and 25th percentile), and the minimum, in Figure 3, Figure 4, Figure 5, Figure 6, Figure 7 and Figure 8. Figure 3 and Figure 4 show the sensor coverage (fitness function value) and run time of all algorithms for the small-sized problem, Figure 5 and Figure 6 show them for the middle-sized problem, and Figure 7 and Figure 8 show them for the larger-sized problem, respectively.

Figure 3.

Boxplot of the sensor coverage (fitness function value) among all algorithms for the small-sized problem. (a) The first dataset. (b) The second dataset. (c) The third dataset.

Figure 4.

Boxplot of the run time among all algorithms for the small-sized problem. (a) The first dataset. (b) The second dataset. (c) The third dataset.

Figure 5.

Boxplot of the sensor coverage (fitness function value) among all algorithms for the middle-sized problem. (a) The first dataset. (b) The second dataset. (c) The third dataset.

Figure 6.

Boxplot of the run time among all algorithms for the middle-sized problem. (a) The first dataset. (b) The second dataset. (c) The third dataset.

Figure 7.

Boxplot of the sensor coverage (fitness function value) among all algorithms for the larger-sized problem. (a) The first dataset. (b) The second dataset. (c) The third dataset.

Figure 8.

Boxplot of the run time among all algorithms for the larger-sized problem. (a) The first dataset. (b) The second dataset. (c) The third dataset.

First, for the small-sized problem, the experimental results in terms of the fitness function of sensor coverage (F), the run time (T), the number of generations to obtain the optimal solution (Best), how many generations were run during the provided time (Ngen), and total cost (Cost) obtained by the GA, PSO, and the proposed bi-tuning SSO (SSO1–SSO7) are shown in Table 4, Table 5 and Table 6 and Figure 3 and Figure 4 and are analyzed as follows:

For the fitness function value (F):

- The best average (AVG) fitness function value (F) was obtained by SSO4, GA. The AVG values for each dataset are 9979.83333, 9981.4, and 9979.36667, which are the best among all algorithms for the first to the third datasets of the small-sized problem, respectively, as shown in Table 4, Table 5 and Table 6.

- The minimum (MIN) fitness function value (F) obtained by GA, SSO1–5, and SSO7 is 9978, which is the best among all algorithms for the first dataset of the small-sized problem, as shown in Table 4.

For the run time (T):

For the number of generations to obtain the optimal solution (Best):

- The average (AVG) number of generations to obtain the optimal solution (Best) obtained by PSO is around 1 for each of the three datasets of the small-sized problem, as shown in Table 4, Table 5 and Table 6. Within the same run time, PSO converges faster but does not reach a better solution, which indicates that it is trapped in a local optimum and cannot escape.

For how many generations were run during the provided time (Ngen):

- The proposed SSO1–SSO7 update all variables in every generation, so the average run time of each generation is long. In future work, the update will be changed to cover only some of the variables.
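The difference between updating all variables and updating only some of them can be sketched as follows. This is a minimal sketch of the basic SSO stepwise update as it is commonly described in the SSO literature, not the bi-tuning update proposed in this paper. The function name `sso_update`, the thresholds `cg`, `cp`, and `cw`, the integer encoding of each variable, and the `indices` argument are all assumptions made for illustration.

```python
import random


def sso_update(x, pbest, gbest, lower, upper,
               cg=0.4, cp=0.7, cw=0.9, rng=random, indices=None):
    """Apply one basic SSO update to solution x and return the new solution.

    indices=None updates every variable (the all-variable update);
    otherwise only the listed positions are updated.
    """
    new_x = list(x)
    positions = range(len(x)) if indices is None else indices
    for j in positions:
        rho = rng.random()
        if rho < cg:
            new_x[j] = gbest[j]                    # copy from the global best
        elif rho < cp:
            new_x[j] = pbest[j]                    # copy from the personal best
        elif rho < cw:
            pass                                   # keep the current value
        else:
            new_x[j] = rng.randint(lower, upper)   # random regeneration
    return new_x


# Hypothetical solution: one integer level per sensor, in the range 0..3.
rng = random.Random(0)
x = [0, 1, 2, 3, 0, 1]
pbest = [1, 1, 2, 2, 0, 0]
gbest = [3, 3, 3, 3, 3, 3]

full = sso_update(x, pbest, gbest, 0, 3, rng=rng)
subset = rng.sample(range(len(x)), 2)
partial = sso_update(x, pbest, gbest, 0, 3, rng=rng, indices=subset)
print(full, subset, partial)
```

With `indices` set, the loop touches only the chosen positions, so the cost of one update grows with the size of the subset rather than with the number of sensors.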

For the total cost (Cost):

Secondly, for the middle-sized problem, the experimental results in terms of the fitness function value (F), the run time (T), the number of generations to obtain the optimal solution (Best), how many generations were run during the provided time (Ngen), and total cost (Cost) obtained by the GA, PSO, and the proposed bi-tuning SSO (SSO1–SSO7) are shown in Table 7, Table 8 and Table 9 and Figure 5 and Figure 6 and were analyzed as follows:

For the fitness function value (F):

- The minimum (MIN) fitness function value (F) obtained by GA and SSO1–7 is 9978, which is the best among all algorithms for the first dataset of the middle-sized problem, as shown in Table 7.

- The minimum (MIN) fitness function value (F) obtained by GA, SSO1–2, SSO4, and SSO6–7 is 9978, which is the best among all algorithms for the second dataset of the middle-sized problem, as shown in Table 8.

- The minimum (MIN) fitness function value (F) obtained by GA and SSO1–5 is 9978, which is the best among all algorithms for the third dataset of the middle-sized problem, as shown in Table 9.

For the run time (T):

For the number of generations to obtain the optimal solution (Best):

- The average (AVG) number of generations to obtain the optimal solution (Best) obtained by PSO is around 1 for each of the three datasets of the middle-sized problem, as shown in Table 7, Table 8 and Table 9. Within the same run time, PSO converges faster but does not reach a better solution, which indicates that it is trapped in a local optimum and cannot escape.

For how many generations were run during the provided time (Ngen):

- The proposed SSO1–SSO7 update all variables in every generation, so the average run time of each generation is long. In future work, the update will be changed to cover only some of the variables.

For the total cost (Cost):

Finally, for the larger-sized problem, the experimental results in terms of the fitness function value (F), the run time (T), the number of generations to obtain the optimal solution (Best), how many generations were run during the provided time (Ngen), and total cost (Cost) obtained by the GA, PSO, and the proposed bi-tuning SSO (SSO1–SSO7) are shown in Table 10, Table 11 and Table 12 and Figure 7 and Figure 8 and were analyzed as follows:

For the fitness function value (F):

- The minimum (MIN) fitness function value (F) obtained by GA and SSO1–6 is 9978, which is the best among all algorithms for the second dataset of the larger-sized problem, as shown in Table 11.

- The standard deviation (STD) values of the fitness function value (F) obtained by GA, SSO5, and GA are 0.915386, 1.159171, and 0, which are the best among all algorithms for the first dataset to the third dataset of the larger-sized problem, respectively, as shown in Table 10, Table 11 and Table 12.

For the run time (T):

For the number of generations to obtain the optimal solution (Best):

- The average (AVG) number of generations to obtain the optimal solution (Best) obtained by PSO is around 1 for each of the three datasets of the larger-sized problem, as shown in Table 10, Table 11 and Table 12. Within the same run time, PSO converges faster but does not reach a better solution, which indicates that it is trapped in a local optimum and cannot escape.

For how many generations were run during the provided time (Ngen):

- The maximum (MAX) numbers of generations run during the provided time (Ngen) obtained by GA are 3140, 2864, and 2959, which are the best among all algorithms for the first to the third datasets of the larger-sized problem, respectively, as shown in Table 10, Table 11 and Table 12.

- The proposed SSO1–SSO7 update all variables in every generation, so the average run time of each generation is long. In future work, the update will be changed to cover only some of the variables.

For the total cost (Cost):

The above analysis can be summarized as follows.

For the small-sized problem, the experimental results obtained by the proposed bi-tuning SSO outperform those found by PSO, GA, and SSO in terms of the fitness function of the sensor coverage (F) for the first dataset and the third dataset and comply with the cost limit of 2000 for the first dataset to the third dataset.

For the middle-sized problem and larger-sized problem, the experimental results obtained by the proposed bi-tuning SSO outperform those found by PSO, GA, and SSO in terms of the fitness function of the sensor coverage (F) for the first dataset to the third dataset and comply with the cost limit of 2000 for the first dataset to the third dataset.

Thus, the experimental results obtained by the proposed bi-tuning SSO achieve an excellent performance in terms of the fitness function of the sensor coverage (F) and comply with the cost limit of 2000 for all size problems including the small-sized, middle-sized, and larger-sized problems.

7. Conclusions

WSNs are a major component of wireless environments for many application systems in the modern world. In this study, a budget-limited WSN sensing coverage problem was considered. To enhance the QoS in WSNs, the objective of the budget-limited WSN sensing coverage problem is to maximize the number of grids covered by the sensors under the assumption that the number of sensors, the coverage radius level, the related cost of each sensor, and the budget limit are known.
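The objective described above can be illustrated with a minimal sketch that counts the covered grid cells for a given assignment of radius levels and checks the total cost against the budget. The square grid, the cell-centre coverage test, the names `covered_cells` and `fitness`, the level-to-radius and level-to-cost tables, and the rule of returning 0 for over-budget solutions are all assumptions for illustration; they are not the model or penalty rule defined in this paper.

```python
def covered_cells(sensors, radii, grid_size):
    """Count grid cells whose centre lies within the radius of any sensor."""
    covered = 0
    for gx in range(grid_size):
        for gy in range(grid_size):
            cx, cy = gx + 0.5, gy + 0.5  # centre of the unit cell
            if any((cx - sx) ** 2 + (cy - sy) ** 2 <= r * r
                   for (sx, sy), r in zip(sensors, radii)):
                covered += 1
    return covered


def fitness(levels, sensors, radius_of_level, cost_of_level, budget, grid_size):
    """Return (coverage, total_cost); coverage is 0 if the budget is exceeded.

    The zero-coverage rule for infeasible solutions is an assumed penalty.
    """
    total_cost = sum(cost_of_level[level] for level in levels)
    if total_cost > budget:
        return 0, total_cost
    radii = [radius_of_level[level] for level in levels]
    return covered_cells(sensors, radii, grid_size), total_cost


# Hypothetical instance: two sensors on a 4 x 4 grid, three radius levels.
sensors = [(1.0, 1.0), (3.0, 3.0)]
radius_of_level = [0.0, 1.0, 2.0]
cost_of_level = [0, 10, 25]

print(fitness([1, 1], sensors, radius_of_level, cost_of_level, 30, 4))
print(fitness([2, 2], sensors, radius_of_level, cost_of_level, 30, 4))
```

In this toy instance, raising both sensors to the largest radius level exceeds the budget, which is the trade-off between coverage and cost that the problem is about.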

This paper presented a new multi-objective swarm algorithm called the bi-tuning SSO, comprising seven SSO variants (SSO1–SSO7), to solve the studied problem. The proposed bi-tuning SSO was found to improve the SSO by tuning both the parameter settings and the update mechanism; parameter tuning is an important issue in all AI algorithms.

A comparative experiment on the effectiveness and performance of the proposed bi-tuning SSO algorithm was performed against the state-of-the-art algorithms PSO and GA on three datasets for each setting of Nvar = 20, 100, and 300, representing small, middle, and larger WSNs. The solutions obtained by all considered algorithms indicate that the proposed bi-tuning SSO performs better than the compared algorithms in terms of the best, the worst, the average, and the standard deviation of the fitness function values obtained in all cases in this study. Given these outcomes, the proposed bi-tuning SSO should be extended, with future studies applying it to multi-class datasets with more attributes, classes, and instances.

Author Contributions

Conceptualization, W.Z., C.-L.H., W.-C.Y., Y.J. and S.-Y.T.; methodology, W.Z., C.-L.H. and W.-C.Y.; validation, W.Z., C.-L.H. and W.-C.Y.; formal analysis, W.Z., C.-L.H., W.-C.Y., Y.J. and S.-Y.T.; investigation, W.Z., C.-L.H. and W.-C.Y.; resources, W.Z., C.-L.H. and W.-C.Y.; data curation, W.Z., C.-L.H., W.-C.Y., Y.J. and S.-Y.T.; writing—original draft preparation, W.Z., C.-L.H. and W.-C.Y.; supervision, W.Z., C.-L.H. and W.-C.Y.; project administration, W.Z., C.-L.H. and W.-C.Y.; funding acquisition, W.Z. All authors have read and agreed to the published version of the manuscript.

Funding

I wish to thank the anonymous editor and the reviewers for their constructive comments and recommendations, which have significantly improved the presentation of this paper. This research was supported in part by the National Natural Science Foundation of China (No. 62106048), the Natural Science Foundation of Guangdong Province (No. 2019A1515110273), the Ministry of Science and Technology, R.O.C. (MOST 107-2221-E-007-072-MY3 and MOST 110-2221-E-007-107-MY3), and Open object No. RCS2019K010 from the Key Laboratory of Rail Transit Control and Safety.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Wang, C.; Li, J.; Yang, Y.; Ye, F. Combining Solar Energy Harvesting with Wireless Charging for Hybrid Wireless Sensor Networks. IEEE Trans. Mob. Comput. 2018, 17, 560–576.

- Yang, X.; Wang, L.; Su, J.; Gong, Y. Hybrid MAC Protocol Design for Mobile Wireless Sensors Networks. IEEE Sens. Lett. 2018, 2, 7500604.

- Tolani, M.; Singh, R.K.; Shubham, K.; Kumar, R. Two-Layer Optimized Railway Monitoring System Using Wi-Fi and ZigBee Interfaced Wireless Sensor Network. IEEE Sens. J. 2017, 17, 2241–2248.

- Daskalakis, S.N.; Goussetis, G.; Assimonis, S.D.; Tentzeris, M.M.; Georgiadis, A. A uW Backscatter-Morse-Leaf Sensor for Low-Power Agricultural Wireless Sensor Networks. IEEE Sens. J. 2018, 18, 7889–7898.

- Wang, J.; Zhang, X. Cooperative MIMO-OFDM-Based Exposure-Path Prevention Over 3D Clustered Wireless Camera Sensor Networks. IEEE Trans. Wirel. Commun. 2020, 19, 4–18.

- Sharma, D.; Bhondekar, A.P. Traffic and Energy Aware Routing for Heterogeneous Wireless Sensor Networks. IEEE Commun. Lett. 2018, 22, 1608–1611.

- Osamy, W.; Khedr, A.M.; Salim, A. ADSDA: Adaptive Distributed Service Discovery Algorithm for Internet of Things Based Mobile Wireless Sensor Networks. IEEE Sens. J. 2019, 19, 10869–10880.

- Chen, S.; Zhang, L.; Tang, Y.; Shen, C.; Kumar, R.; Yu, K.; Tariq, U.; KashifBashir, A. Indoor Temperature Monitoring using Wireless Sensor Networks: A SMAC Application in Smart Cities. Sustain. Cities Soc. 2020, 61, 102333.

- Kalaivaani, P.T.; Krishnamoorthi, R. Design and Implementation of Low Power Bio Signal Sensors for Wireless Body Sensing Network Applications. Microprocess. Microsyst. 2020, 79, 103271.

- Huang, T.Y.; Chang, C.J.; Lin, C.W.; Roy, S.; Ho, T.Y. Delay-Bounded Intravehicle Network Routing Algorithm for Minimization of Wiring Weight and Wireless Transmit Power. IEEE Trans. Comput.-Aided Des. Integr. Circuits Syst. 2017, 36, 551–561.

- Harbouche, A.; Djedi, N.; Erradi, M.; Ben-Othman, J.; Kobbane, A. Model Driven Flexible Design of a Wireless Body Sensor Network for Health Monitoring. Comput. Netw. 2017, 129, 548–571.

- Kim, K.B.; Cho, J.Y.; Jeon, D.H.; Ahn, J.H.; Hong, S.D.; Jeong, Y.H.; Nahm, S.; Sung, T.H. RETRACTED: Enhanced Flexible Piezoelectric Generating Performance via High Energy Composite for Wireless Sensor Network. Energy 2018, 159, 196–202.

- Munirathinam, K.; Park, J.; Jeong, Y.J.; Lee, D.W. Galinstan-Based Flexible Microfluidic Device for Wireless Human-Sensor Applications. Sens. Actuators A Phys. 2020, 315, 112344.

- Haque, I.; Nurujjaman, M.; Harms, J.; Abu-Ghazaleh, N. SDSense: An Agile and Flexible SDN-Based Framework for Wireless Sensor Networks. IEEE Trans. Veh. Technol. 2019, 68, 1866–1876.

- Kim, J.; Choi, J.P. Sensing Coverage-Based Cooperative Spectrum Detection in Cognitive Radio Networks. IEEE Sens. J. 2019, 19, 5325–5332.

- Singh, P.; Chen, Y.C. Sensing Coverage Hole Identification and Coverage Hole Healing Methods for Wireless Sensor Networks. Wirel. Netw. 2020, 26, 2223–2239.

- Huang, H.; Savkin, A.V.; Li, X. Reactive Autonomous Navigation of UAVs for Dynamic Sensing Coverage of Mobile Ground Targets. Sensors 2020, 20, 3720.

- Chen, R.; Xu, N.; Li, J. A Self-Organized Reciprocal Decision Approach for Sensing Coverage with Multi-UAV Swarms. Sensors 2018, 18, 1864.

- Wang, S.C.; Hsiao, H.C.W.; Lin, C.C.; Chin, H.H. Multi-Objective Wireless Sensor Network Deployment Problem with Cooperative Distance-Based Sensing Coverage. Mob. Netw. Appl. 2021, 134, 1–12.

- Zhou, P.; Wang, C.; Yang, Y. Static and Mobile Target k-Coverage in Wireless Rechargeable Sensor Networks. IEEE Trans. Mob. Comput. 2019, 18, 2430–2445.

- Nguyen, N.T.; Liu, B.H. The Mobile Sensor Deployment Problem and the Target Coverage Problem in Mobile Wireless Sensor Networks are NP-Hard. IEEE Syst. J. 2019, 13, 1312–1315.

- Alia, O.M.; Al-Ajouri, A. Maximizing Wireless Sensor Network Coverage with Minimum Cost Using Harmony Search Algorithm. IEEE Sens. J. 2017, 17, 882–896.

- Dash, D. Approximation Algorithms for Road Coverage Using Wireless Sensor Networks for Moving Objects Monitoring. IEEE Trans. Intell. Transp. Syst. 2020, 21, 4835–4844.

- Al-Karaki, J.N.; Gawanmeh, A. The Optimal Deployment, Coverage, and Connectivity Problems in Wireless Sensor Networks: Revisited. IEEE Access 2017, 5, 18051–18065.

- Yu, J.; Wan, S.; Cheng, X.; Yu, D. Coverage Contribution Area Based k-Coverage for Wireless Sensor Networks. IEEE Trans. Veh. Technol. 2017, 66, 8510–8523.

- Singh, S.; Kumar, S.; Nayyar, A.; Al-Turjman, F.; Mostarda, L. Proficient QoS-Based Target Coverage Problem in Wireless Sensor Networks. IEEE Access 2020, 8, 74315–74325.

- Yang, X.; Wen, Y.; Yuan, D.; Zhang, M.; Zhao, H.; Meng, Y. Coverage Degree-Coverage Model in Wireless Visual Sensor Networks. IEEE Wirel. Commun. Lett. 2019, 8, 817–820.

- Elhoseny, M.; Tharwat, A.; Farouk, A.; Hassanien, A.E. K-Coverage Model Based on Genetic Algorithm to Extend WSN Lifetime. IEEE Sens. Lett. 2017, 1, 7500404.

- Chakravarthi, S.S.; Kumar, G.H. Optimization of Network Coverage and Lifetime of the Wireless Sensor Network based on Pareto Optimization using Non-dominated Sorting Genetic Approach. Procedia Comput. Sci. 2020, 172, 225–228.

- Jiao, Z.; Zhang, L.; Xu, M.; Cai, C.; Xiong, J. Coverage Control Algorithm-Based Adaptive Particle Swarm Optimization and Node Sleeping in Wireless Multimedia Sensor Networks. IEEE Access 2019, 7, 170096–170105.

- Yeh, W.C. A two-stage discrete particle swarm optimization for the problem of multiple multi-level redundancy allocation in series systems. Expert Syst. Appl. 2009, 36, 9192–9200.

- Yeh, W.C.; He, M.F.; Huang, C.L.; Tan, S.Y.; Zhang, X.; Huang, Y.; Li, L. New genetic algorithm for economic dispatch of stand-alone three-modular microgrid in DongAo Island. Appl. Energy 2020, 263, 114508.

- Yeh, W.C.; Huang, C.L.; Lin, P.; Chen, Z.; Jiang, Y.; Sun, B. Simplex Simplified Swarm Optimization for the Efficient Optimization of Parameter Identification for Solar Cell Models. IET Renew. Power Gener. 2018, 12, 45–51.

- Lin, P.; Cheng, S.; Yeh, W.C.; Chen, Z.; Wu, L. Parameters Extraction of Solar Cell Models Using a Modified Simplified Swarm Optimization Algorithm. Sol. Energy 2017, 144, 594–603.

- Zhang, X.; Huang, Y.; Li, L.; Yeh, W.C. Power and Capacity Consensus Traking of Distributed Battery Storage Systems in Modular Microgrids. Energies 2018, 11, 1439.

- Huang, C.L.; Yin, Y.; Jiang, Y.; Yeh, W.C.; Chung, Y.Y.; Lai, C.M. Multi-objective Scheduling in Cloud Computing using MOSSO. In Proceedings of the 2018 IEEE Congress on Evolutionary Computation (CEC 2018), Rio de Janeiro, Brazil, 8–13 July 2018.

- Lai, C.M.; Yeh, W.C.; Huang, Y.C. Entropic Simplified Swarm Optimization for the Task Assignment Problem. Appl. Soft Comput. 2017, 58, 115–127.

- Yeh, W.C.; Lai, C.-M.; Huang, Y.-C.; Cheng, T.-W.; Huang, H.-P.; Jiang, Y. Simplified Swarm Optimization for Task Assignment Problem in Distributed Computing System. In Proceedings of the 2017 13th International Conference on Natural Computation, Fuzzy Systems and Knowledge Discovery (ICNC-FSKD 2017), Guilin, China, 29–31 July 2017.

- Yeh, W.C.; Lin, J.S. New parallel swarm algorithm for smart sensor systems redundancy allocation problems in the Internet of Things. J. Supercomput. 2018, 74, 4358–4384.

- Yeh, W.C. A Hybrid Heuristic Algorithm for the Multistage Supply Chain Network Problem. Int. J. Adv. Manuf. Technol. 2005, 26, 675–685.

- Yeh, W.C. Simplified Swarm Optimization in Disassembly Sequencing Problems with Learning Effects. Comput. Oper. Res. 2012, 39, 2168–2177.

- Yeh, W.C.; Jiang, Y.; Huang, C.L.; Xiong, N.N.; Hu, C.F.; Yeh, Y.H. Improve Energy Consumption and Signal Transmission Quality of Routings in Wireless Sensor Networks. IEEE Access 2020, 8, 198254–198264.

- Hsieh, J.; Hsiao, H.F.; Yeh, W.C. Forecasting Stock Markets Using Wavelet Transforms and Recurrent Neural Networks: An Integrated System Based on Artificial Bee Colony Algorithm. Appl. Soft Comput. 2011, 11, 2510–2525.

- Chakraborty, S.; Goyal, N.K.; Soh, S. On Area Coverage Reliability of Mobile Wireless Sensor Networks with Multistate Nodes. IEEE Sens. J. 2020, 20, 4992–5003.

- Zhang, L.; Liu, T.T.; Wen, F.Q.; Hu, L.; Hei, C.; Wang, K. Differential Evolution Based Regional Coverage-Enhancing Algorithm for Directional 3D Wireless Sensor Networks. IEEE Access 2019, 7, 93690–93700.

- Han, G.; Liu, L.; Jiang, J.; Shu, L.; Hancke, G. Analysis of Energy-Efficient Connected Target Coverage Algorithms for Industrial Wireless Sensor Networks. IEEE Trans. Ind. Inform. 2017, 13, 135–143.

- Yeh, W.C.; Tan, S.Y. Simplified Swarm Optimization for the Heterogeneous Fleet Vehicle Routing Problem with Time-Varying Continuous Speed Function. Electronics 2021, 10, 1775.

- Yeh, W.C.; Su, Y.Z.; Gao, X.Z.; Hu, C.F.; Wang, J.; Huang, C.L. Simplified Swarm Optimization for Bi-Objection Active Reliability Redundancy Allocation Problems. Appl. Soft Comput. J. 2021, 106, 107321.

- Yeh, W.C. Solving cold-standby reliability redundancy allocation problems using a new swarm intelligence algorithm. Appl. Soft Comput. 2019, 83, 105582.

- Wang, M.; Yeh, W.C.; Chu, T.C.; Zhang, X.; Huang, C.L.; Yang, J. Solving Multi-Objective Fuzzy Optimization in Wireless Smart Sensor Networks under Uncertainty Using a Hybrid of IFR and SSO Algorithm. Energies 2018, 11, 2385.

- Huang, C.L.; Jiang, Y.; Yeh, W.C. Developing Model of Fuzzy Constraints Based on Redundancy Allocation Problem by an Improved Swarm Algorithm. IEEE Access 2020, 8, 155235–155247.

- Lai, C.M.; Chiu, C.C.; Liu, W.C.; Yeh, W.C. A novel nondominated sorting simplified swarm optimization for multi-stage capacitated facility location problems with multiple quantitative and qualitative objectives. Appl. Soft Comput. 2019, 84, 105684.

- Yeh, W.C. A new harmonic continuous simplified swarm optimization. Appl. Soft Comput. 2019, 85, 105544.

- Zhu, W.; Yeh, W.C.; Cao, L.; Zhu, Z.; Chen, D.; Chen, J.; Li, A.; Lin, Y. Faster Evolutionary Convolutional Neural Networks Based on iSSO for Lesion Recognition in Medical Images. Basic Clin. Pharmacol. Toxicol. 2019, 124, 329.

- Huang, C.L.; Huang, S.Y.; Yeh, W.C.; Wang, J. Fuzzy System and Time Window Applied to Traffic Service Network Problem under Multi-demand Random Network. Electronics 2019, 8, 539.

- Sun, L.; Zhu, W.; Lu, Q.; Li, A.; Luo, L.; Chen, J.; Wang, J. CellNet: An Improved Neural Architecture Search Method for Coal and Gangue Classification. In Proceedings of the IJCNN 2021: International Joint Conference on Neural Networks, Shenzhen, China, 18–22 July 2021.

- Jin, H.; Zhu, W.; Duan, Z.; Chen, J.; Li, A. TDNN-LSTM Model and Application Based on Attention Mechanism. Acoust. Technol. 2021, 40, 508–514.

- Zhu, W.; Ma, H.; Cai, G.; Chen, J.; Wang, X.; Li, A. Research on PSO-ARMA-SVR Short-Term Electricity Consumption Forecast Based on the Particle Swarm Algorithm. Wirel. Commun. Mob. Comput. 2021, 2021, 6691537.

- Zhu, W.; Yeh, W.C.; Xiong, N.N.; Sun, B. A New Node-based Concept for Solving the Minimal Path Problem in General networks. IEEE Access 2019, 7, 173310–173319.

- Zhu, W.; Yeh, W.C.; Chen, J.; Chen, D.; Li, A.; Lin, Y. Evolutionary convolutional neural networks using ABC. In Proceedings of the 2019 11th International Conference on Machine Learning and Computing (ICMLC 2019), Zhuhai, China, 22–24 February 2019.

- Yeh, W.C. A new branch-and-bound approach for the n/2/flowshop/αF+ βCmax flowshop scheduling problem. Comput. Oper. Res. 1999, 26, 1293–1310.

- Zhang, Y.; Wang, G.G.; Li, K.; Yeh, W.C.; Jian, M.; Dong, J. Enhancing MOEA/D with information feedback models for large-scale many-objective optimization. Inf. Sci. 2020, 522, 1–16.

- Corley, H.W.; Rosenberger, J.; Yeh, W.C.; Sung, T.K. The cosine simplex algorithm. Int. J. Adv. Manuf. Technol. 2006, 27, 1047–1050.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).