Abstract

As more fields adopt deep learning, demand is growing for training data suited to each field. Existing interactive object segmentation models make it easy to create mask labels because they accurately segment the area of a target object through user interaction. However, these models struggle to accurately segment a target part within an object. We propose a method that increases the accuracy of part segmentation by using an interactive object segmentation model trained only with edge images instead of color images. Results on the PASCAL VOC Part dataset show that the proposed method segments the target part more accurately than both an existing interactive object segmentation model and a semantic part-segmentation model.

1. Introduction

Convolutional neural networks (CNNs) have produced many results across research fields, and various industries have attempted to apply these results to specific applications. Applying a CNN model properly to a specific application requires training data suited to that application.

One of the industries that often use images is augmented reality. Augmented reality provides useful information to users based on recognized real space or objects. For example, trainees learning to assemble a car can learn how to assemble it based on car parts recognized by an augmented reality device such as an HMD or smartphone. As another example, trainees learning machine maintenance can easily recognize the location or condition of a part with an augmented reality device and learn behaviors appropriate to the current machine condition to repair the machine.

To train a CNN model suitable for a specific application in augmented reality, training data suitable for a specific scenario is required. For example, to train a CNN model for recognizing the class of an object, the class labels corresponding to images are required. To train a CNN model for recognizing which part an object is composed of, the mask labels indicating the location and area of each part of the object and the class name of each part are required. It takes a large amount of time and manpower to make the mask labels from the suitable dataset for a specific application. Since each object is usually composed of several parts, making the mask labels of each part takes longer than making the mask labels of each object.

Interactive object segmentation methods enable a user to segment the area of a target object at the pixel level through user interaction. CNN-based methods have emerged that improve the accuracy of object segmentation over classical algorithms (e.g., GrabCut [1]). With CNN-based interactive object segmentation methods, mask labels can be made easily and accurately.

Interactive object segmentation models based on CNNs are generally trained by supervised learning with the images and ground truth mask labels in a dataset. Because the number of object classes in such a dataset is limited, the models use the backbone of classification models trained with the ImageNet dataset, which contains the largest number of object classes. However, it is difficult to accurately segment a target part within an object using models built for segmenting the target object. One reason is that the target part is smaller than the target object. Another reason is that the texture in the interaction area during part segmentation differs from the texture during object segmentation. For example, when a user segments a person in an image with an interactive object segmentation model, the model can accurately segment the target object (e.g., person) because it was trained using clicked areas that contain the different parts of the object. However, when a user segments a target part (e.g., nose) with the same model, the model cannot accurately segment the target part because it has never been trained with the texture around the nose that the user clicked on. Table 1 below shows the estimated masks when the interactive object segmentation models segment the motorcycle (object) and the motorcycle’s wheel (part). For the two models tested, the accuracy of part segmentation is much lower than the accuracy of object segmentation.

Table 1.

The results of estimated masks for segmenting motorcycle (object) and wheel (part).

An edge image can be expressed as a binary image, in which each pixel is true if its value exceeds a threshold and false otherwise, or as a grayscale image, in which each pixel reflects the amount of change in its local area. A dataset of edge images is smaller than the corresponding color image dataset because an edge image has a single channel, whereas a color image has three or four channels. A single-channel edge image has one disadvantage: because it carries no texture information, it is difficult to distinguish the class of the object in the selected area. Nevertheless, it can be suitable as training data for interactive (object) segmentation models. For an interactive segmentation model, accurately segmenting the target area matters more than recognizing what it is, so the ability to distinguish the target object from the background is what counts. Thus, edge images can be more suitable for an interactive segmentation model than color images, whose texture information mainly serves to recognize object classes. Edge images generated by classic edge detectors such as the Canny edge detector [4] are difficult to use in real applications because of a large amount of noise, but with the advent of CNN-based edge detectors, relatively accurate edge images can be obtained.
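The two representations described above, and the channel-count argument, can be illustrated with a minimal NumPy sketch. The function name `binarize_edges`, the threshold of 0.5, and the toy 4 × 4 edge map are assumptions made for illustration; they are not values taken from this paper.

```python
import numpy as np

def binarize_edges(edge_map: np.ndarray, threshold: float = 0.5) -> np.ndarray:
    """Turn a grayscale edge map with values in [0, 1] into a binary edge image.

    The default threshold of 0.5 is an illustrative assumption.
    """
    return edge_map > threshold

# A toy 4 x 4 grayscale edge map (edge strength per pixel).
gray_edges = np.array([
    [0.0, 0.1, 0.9, 0.2],
    [0.0, 0.2, 0.8, 0.1],
    [0.1, 0.7, 0.6, 0.0],
    [0.9, 0.3, 0.1, 0.0],
], dtype=np.float32)

binary_edges = binarize_edges(gray_edges)

# A color image of the same resolution and dtype needs three channels,
# so it occupies three times the memory of the single-channel edge map.
color_image = np.zeros((4, 4, 3), dtype=np.float32)
size_ratio = color_image.nbytes / gray_edges.nbytes
```

In this toy case the storage ratio between the three-channel image and the single-channel edge map is exactly three; with a four-channel image it would be four.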

Existing deep-learning models learn the color texture features of the images in the training dataset. These models perform well on the evaluation dataset because they are evaluated with color images obtained under similar environmental conditions, but when they are applied in industry, the desired performance is sometimes not achieved because the environment is different. An edge image, on the other hand, retains the same characteristics even when the color images are taken under different environments. In this paper, we examine whether similar performance can be achieved with only edge images instead of color images in interactive object or part segmentation, a task that is sensitive to color texture characteristics. One contribution of this paper is identifying, through experiments, the edge images best suited as input to an interactive segmentation model for accurately segmenting the target object. In a second experiment, we compared the proposed model, trained with the edge images selected in the first experiment, with an existing interactive object segmentation model. The proposed models were trained with an object segmentation dataset (e.g., PASCAL VOC 2012 [5] or SBD [6]) and evaluated with a part segmentation dataset, PASCAL VOC Part [7]. Experimental results showed that the proposed model trained with edge images could segment the target part more accurately than the existing segmentation models trained with color images.

2. Related Work

2.1. Semantic Part Segmentation

Segmenting the target part in an image is an important and widely studied problem. GMNet [8], one of the most recently published semantic part segmentation methods, performs part segmentation by combining CNN and graph methods. The model is trained and evaluated with the PASCAL VOC Part [7] dataset (based on 108 parts), and its average intersection over union (IOU) is about 50%.

Many studies have been conducted on part segmentation, but the IOU accuracy is still not high enough. One reason is that parts are smaller than objects, which makes segmentation difficult. Another reason is that the number of part classes is larger than the number of object classes, which makes them difficult to recognize. In addition, training a CNN-based part-segmentation model requires many mask labels for each part, yet there are far fewer part segmentation datasets than object segmentation datasets.

2.2. Interactive Object Segmentation

Unlike semantic segmentation methods, interactive segmentation methods only segment the area of the target object or part with user interaction. In addition, they cannot recognize the class of the selected object. However, they have the advantage of being able to segment an unlearned object or region through user input.

DeepGrabCut [9] is one method for segmenting target objects with user interaction. In DeepGrabCut [9], the object is segmented when the user draws a bounding box around the target object. Drawing a bounding box segments the target object easily with one operation, but it is difficult to segment it precisely.

Another interaction method uses only clicks. In this method, the user segments by clicking on the target object area and the non-target area. The user can see the object mask predicted by the deep-learning model and refine the segmentation through additional clicks. Forte et al. [10] addressed the problem of IOU accuracy decreasing as the number of clicks increases in existing models by adding the previously estimated mask as an input to their deep-learning model. Maninis et al. [11] proposed segmenting the target object by clicking some of the points on its boundary. Lin et al. [12] proposed a method for better segmentation of the target object by weighting the area the user first clicked on. Jang et al. [13] proposed a more accurate object segmentation method (BRS) that refines the information converted from user interaction by using backpropagation. Sofiiuk et al. [2] proposed a segmentation method (f-BRS) that is faster than BRS because it performs backpropagation in only part of the model. Zhang et al. [3] proposed a method (IOG) that segments target objects through an interaction combining the advantages of the bounding box and click methods. In IOG, a user first selects the area around the target object with a bounding box and can then segment the target object precisely by additionally clicking on object or non-object areas, based on the mask estimated from the previous interaction.

The above methods allow a user to accurately segment the target object with less interaction. However, in all of the existing methods, if the part of an object is segmented with these models trained using color images, the IOU accuracy is greatly reduced.

2.3. Color Texture

Geirhos et al. [14] showed that CNN models trained to recognize the object classes in the ImageNet dataset focus on the texture features rather than the shape features of an object. Since humans focus on shape features rather than texture features when recognizing an object, the authors changed the texture of the training images so that the model learns to focus on the shape features of the target objects.

Because CNN models learn from color texture, they cannot accurately recognize rotated objects. Instead of changing the texture of the input image, Wang et al. [15] proposed a method that better recognizes rotated objects (on the Modified National Institute of Standards and Technology (MNIST) dataset) by additionally inputting the grayscale image.

There have been many studies to solve the problem of CNN models trained by focusing on the color texture feature, but most of them focus on the classification problem, and relatively few studies deal with the segmentation problem.

2.4. Edge Detector

Because of the aforementioned characteristics, edge detection has been regarded as one of the most basic problems in computer vision. A typical classic edge detector is the Canny edge detector [4]. Most classic edge detectors perform reasonably, but their accuracy is not high enough for real-world applications.

With the advent of deep learning, especially CNNs, many CNN-based edge detectors have appeared. Holistically-nested edge detection (HED) [16], which is based on the VGG [17] CNN model, achieves higher edge detection accuracy than classical edge detectors. Many subsequent edge detection studies have been compared against HED. Richer convolutional features for edge detection (RCF) [18] performs edge detection with high accuracy in real time based on the VGG model. The dense extreme inception network for edge detection (DexiNed) [19], one of the most recently proposed edge detectors, accurately detects even small edges with less noise than other CNN-based edge detectors.

With the development of deep learning, many edge detectors have been studied, but there are not many applications or studies using edge images estimated from edge detector models.

The existing interactive segmentation models are trained to interactively segment an object from a color image. Just as adding or changing input images has been used to keep a CNN from focusing on texture features, an interactive segmentation model can be trained on shape features instead of texture features by changing its input images. We propose a method to segment the target object or part with a model trained with edge images instead of color images.

3. Method

3.1. The Proposed Models

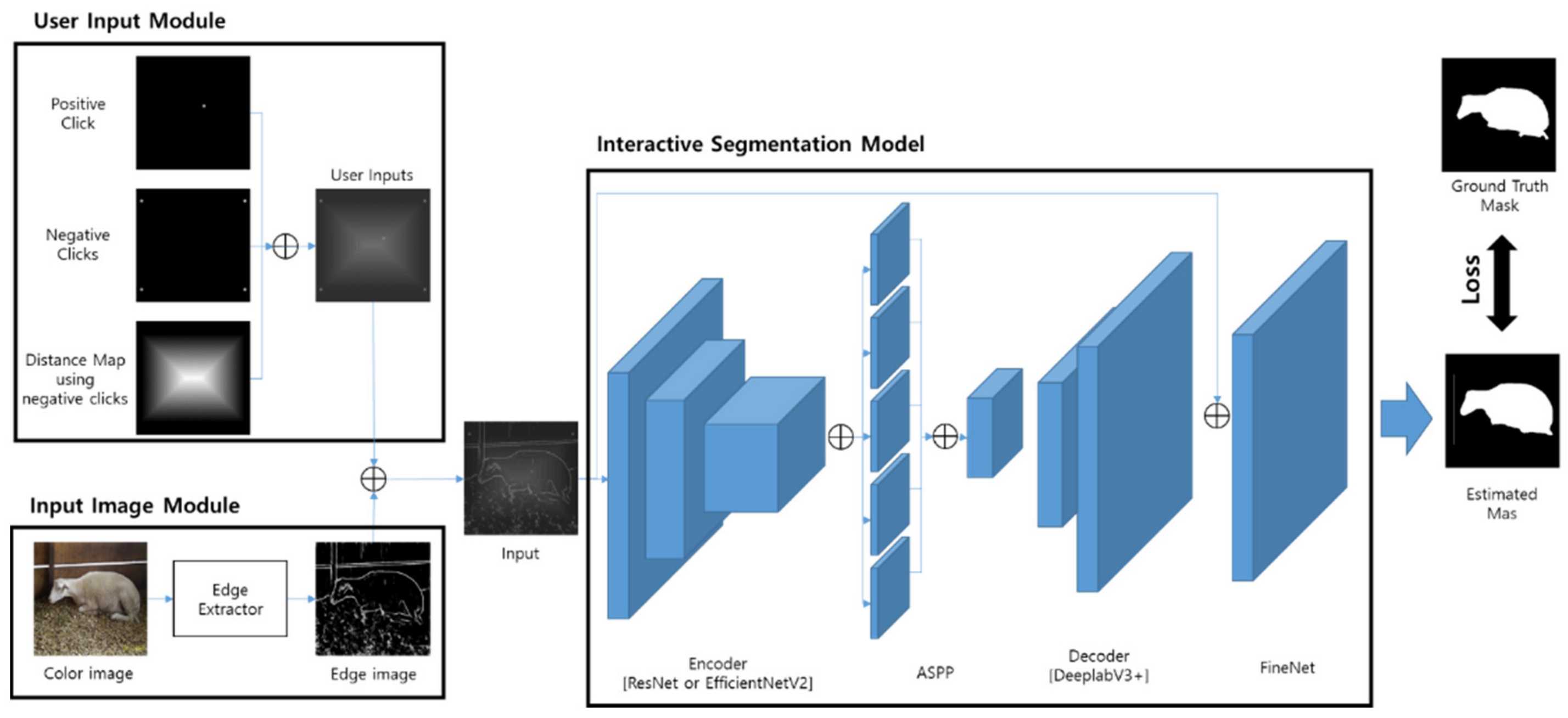

We propose two interactive segmentation models. Proposed model 1 is DeeplabV3+ [20] with ResNet-101 [21], trained with edge images. Since the input images are edge images, not color images, only the structure of ResNet-101 is used, without its pretrained parameters. In a preliminary experiment with only edge images as input, DeeplabV3+ with ResNet-101 was more accurate than DeeplabV3+ with ResNet-50. This suggests that the edge image is represented better as the number of layers and parameters of the CNN model increases.

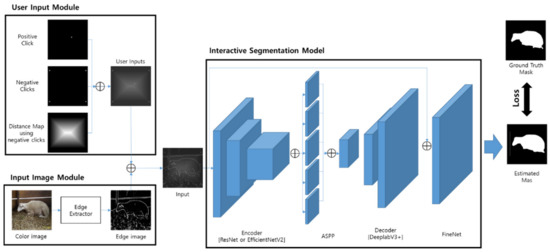

An interactive segmentation model is mainly composed of an encoder and a decoder. The encoder extracts features from the input images. The encoder of proposed model 1 is ResNet-101, the most commonly used one. For the second model, proposed model 2, we used EfficientNetV2 [22] as the encoder; among recently published models, it is one of the most accurate classifiers on the ImageNet [23] dataset, and it achieves this with fewer parameters and a shorter training time.

The decoder restores an image from the features. In the interactive segmentation model, the decoder predicts the mask of the target object or part based on the features generated by the encoder. The decoder of proposed model 1 uses the decoder structure of DeeplabV3+ [20] as it is, without its trained parameters. Proposed model 2, which uses EfficientNetV2 [22] as the encoder, improves the decoder as shown in Figure 1. Proposed model 1, which uses DeeplabV3+ [20], matches Figure 1 except that it has no FineNet. In proposed model 2, the output of the decoder is concatenated with the input data, and the concatenated value is passed through FineNet to obtain the final estimated mask. FineNet consists of two 3 × 3 convolutions followed by a final 1 × 1 convolution. The final estimated mask from FineNet has the same size as the input image, but a single channel. For the specific parameter values of proposed model 1 and proposed model 2, please refer to the Supplementary Materials.
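The data flow through FineNet described above (concatenate, two 3 × 3 convolutions, one 1 × 1 convolution, single-channel output at input resolution) can be sketched in plain NumPy. This is only a shape-level illustration under stated assumptions: the hidden width of 8, the three input channels, the ReLU activations, the final sigmoid, and the random weights are all hypothetical, and the actual layer parameters are given in the Supplementary Materials.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def conv2d_same(x: np.ndarray, w: np.ndarray) -> np.ndarray:
    """Stride-1 convolution with zero padding that preserves height and width.

    x has shape (C_in, H, W); w has shape (C_out, C_in, k, k) with odd k.
    """
    k = w.shape[-1]
    p = k // 2
    xp = np.pad(x, ((0, 0), (p, p), (p, p)))
    # windows has shape (C_in, H, W, k, k): one k x k patch per output pixel.
    windows = sliding_window_view(xp, (k, k), axis=(1, 2))
    return np.einsum("chwij,ocij->ohw", windows, w)

def fine_net(decoder_out: np.ndarray, net_input: np.ndarray, weights: list) -> np.ndarray:
    """Concatenate decoder output with the input, then apply 3x3, 3x3, 1x1 convolutions."""
    x = np.concatenate([decoder_out, net_input], axis=0)
    x = np.maximum(conv2d_same(x, weights[0]), 0.0)  # first 3 x 3 conv (ReLU is assumed)
    x = np.maximum(conv2d_same(x, weights[1]), 0.0)  # second 3 x 3 conv (ReLU is assumed)
    logits = conv2d_same(x, weights[2])              # final 1 x 1 conv to one channel
    return 1.0 / (1.0 + np.exp(-logits))             # sigmoid output is assumed

rng = np.random.default_rng(0)
H, W, C_IN, HIDDEN = 16, 16, 3, 8  # hypothetical sizes
weights = [
    rng.normal(scale=0.1, size=(HIDDEN, 1 + C_IN, 3, 3)),
    rng.normal(scale=0.1, size=(HIDDEN, HIDDEN, 3, 3)),
    rng.normal(scale=0.1, size=(1, HIDDEN, 1, 1)),
]
decoder_out = rng.random((1, H, W))   # stand-in for the decoder's mask estimate
net_input = rng.random((C_IN, H, W))  # stand-in for edge image plus interaction maps
mask = fine_net(decoder_out, net_input, weights)
```

The resulting `mask` keeps the spatial size of the input and has one channel, which matches the property stated in the text.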

Figure 1.

The diagram of the proposed model 2.

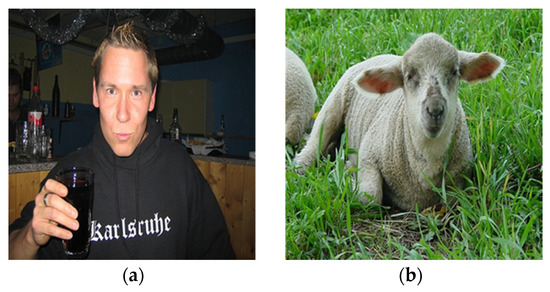

3.2. Input Image Module

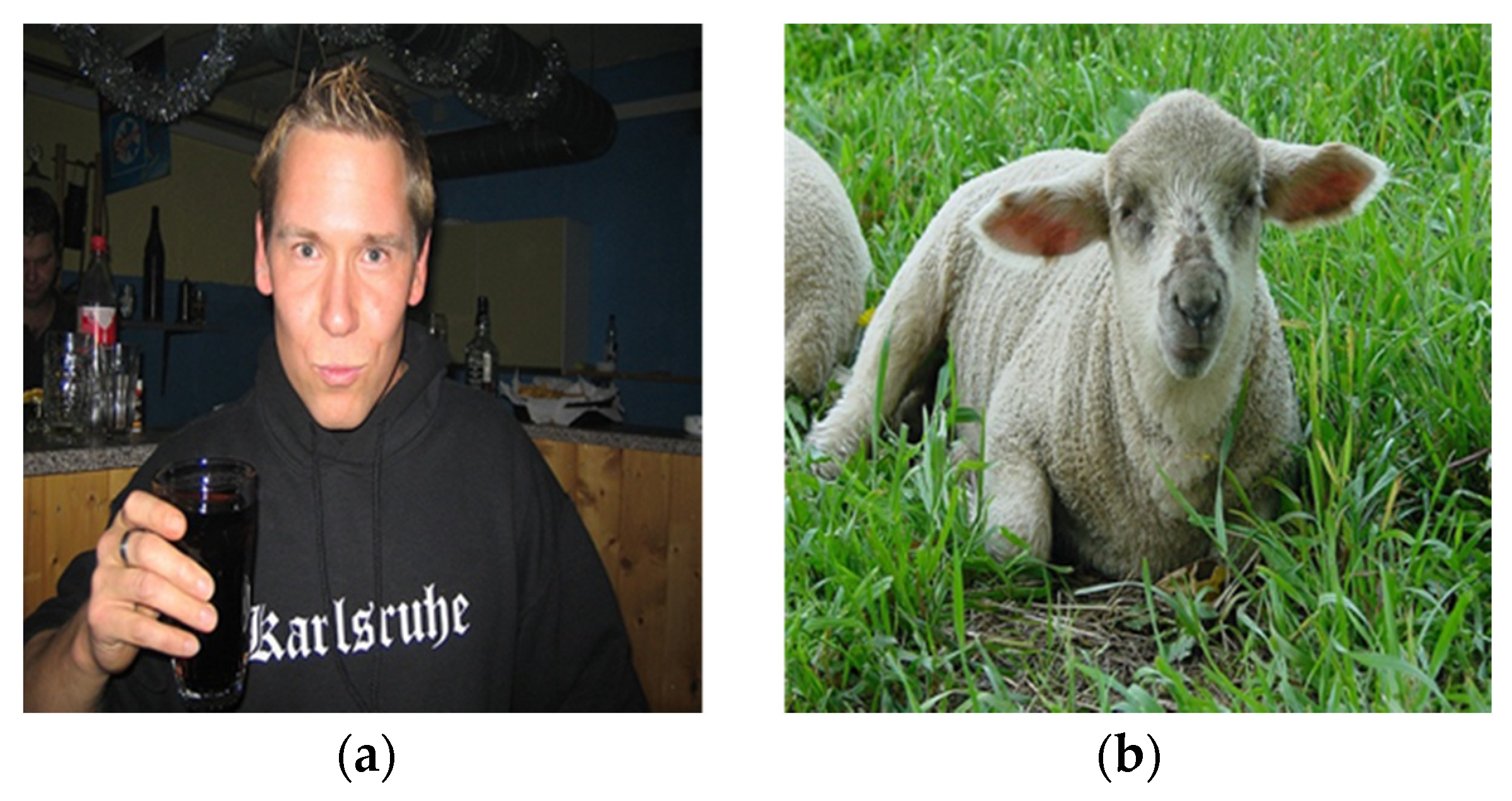

Since edge images lacking texture features are used as input instead of color images rich in texture features, segmentation accuracy differs greatly depending on which edge images are used. RCF [18] is one of the most cited CNN-based edge detection models, and DexiNed [19] is one of the most recently proposed. Both are CNN-based deep-learning models that can output an edge image for each layer. Table 2 and Table 3 show the edge images from each layer of each edge detector for the color images shown in Figure 2.

Table 2.

Edge images generated by each edge detector for color image in Figure 2a.

Table 3.

Edge images generated by each edge detector for color image in Figure 2b.

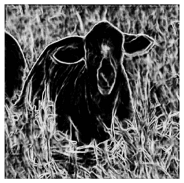

Figure 2.

Example of color images: (a) person (b) animal.

3.3. User Interaction Module

For interactive segmentation, the representative interaction methods are the click method (clicking on the area inside or outside the target object) and the bounding box method. Recently, interaction methods that combine the bounding box and clicks have emerged. In IOG [3], after the user sets the target area by drawing a bounding box, the target area can be segmented in detail by additionally clicking on the target area or the background, based on the mask estimated from the previous interaction. However, when the IOG interaction method is applied directly to the proposed model trained with edge images, the model may not train well because edge images contain few texture features. To supplement the missing texture information, the distance-transformed information of the bounding box area is added as an input, as shown in Table 4 below.

Table 4.

Transform–user interaction.
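One possible way to encode the bounding box as a distance map is sketched below. The exact transform used in this paper is not specified in the text, so the formulation here is an assumption: each pixel stores its Euclidean distance to the nearest of the four bounding box corners, normalized to [0, 1]. The function name `bbox_corner_distance_map` and the box coordinates are hypothetical.

```python
import numpy as np

def bbox_corner_distance_map(height: int, width: int, box: tuple) -> np.ndarray:
    """Distance from every pixel to the nearest bounding box corner, scaled to [0, 1].

    box is (top, left, bottom, right) in inclusive pixel coordinates.
    This encoding is an illustrative assumption, not the paper's exact transform.
    """
    top, left, bottom, right = box
    corners = np.array(
        [(top, left), (top, right), (bottom, left), (bottom, right)],
        dtype=np.float64,
    )
    rows, cols = np.mgrid[0:height, 0:width]
    pixels = np.stack([rows, cols], axis=-1).astype(np.float64)  # (H, W, 2)
    diff = pixels[:, :, None, :] - corners[None, None, :, :]     # (H, W, 4, 2)
    dist = np.sqrt((diff ** 2).sum(axis=-1)).min(axis=-1)        # (H, W)
    peak = dist.max()
    return dist / peak if peak > 0 else dist

# An 8 x 8 image with a box whose corners are (2, 2) and (5, 5).
dmap = bbox_corner_distance_map(8, 8, (2, 2, 5, 5))
```

A map like this can be stacked with the edge image as an extra input channel, so the model receives a dense spatial cue about the box even where the edge image itself is empty.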

4. Experiments

We trained the proposed models with only the training image sets of the PASCAL VOC 2012 [5] and SBD [6] datasets, excluding their evaluation image sets. The proposed models were trained only to segment the target object with user interaction, not to segment the target part. The PASCAL VOC Part [7] dataset was used only for evaluating the proposed models.

Unlike color images, edge images do not have texture information, so the user must set a segmentation area to interact with. In the IOG interaction method, a user sets the segmentable area by drawing a bounding box and distinguishes the object area from the non-object area by clicking. Many existing interactive segmentation models take color images as input, but because their interaction methods differ, they were excluded from the comparative evaluation, and only the IOG model was compared. Because there is no prior study on interactive part segmentation, for part segmentation we compared against the most recent model that can segment the parts of general objects.

4.1. Implementation Detail

4.1.1. User Interaction

We generated user input from the ground truth mask of each object in the training dataset. Following the click generation method of the IOG [3] paper, the ground truth mask is distance-transformed and normalized, and the positive points are randomly selected from the area where the normalized value is larger than 0.8. The distance map feature is generated from the four points of the bounding box.
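The positive-click sampling rule can be sketched as follows. The 0.8 threshold comes from the text; everything else is an assumption for illustration, including the brute-force distance transform (chosen only to keep the example dependency-free and suitable for small masks) and the helper names `distance_to_background` and `sample_positive_click`.

```python
import numpy as np

def distance_to_background(mask: np.ndarray) -> np.ndarray:
    """Euclidean distance from each foreground pixel to the nearest background pixel.

    Brute force, O(foreground x background); intended for small toy masks only.
    """
    fg = np.argwhere(mask)
    bg = np.argwhere(~mask)
    dist = np.zeros(mask.shape, dtype=np.float64)
    if len(fg) == 0 or len(bg) == 0:
        return dist
    pairwise = np.sqrt(((fg[:, None, :] - bg[None, :, :]) ** 2).sum(axis=-1))
    dist[tuple(fg.T)] = pairwise.min(axis=1)
    return dist

def sample_positive_click(mask: np.ndarray, rng: np.random.Generator,
                          threshold: float = 0.8) -> tuple:
    """Pick a random pixel whose normalized distance-transform value exceeds threshold."""
    dist = distance_to_background(mask)
    peak = dist.max()
    if peak == 0:
        raise ValueError("mask must contain both foreground and background pixels")
    candidates = np.argwhere(dist / peak > threshold)
    row, col = candidates[rng.integers(len(candidates))]
    return int(row), int(col)

# A 15 x 15 toy ground truth mask with a 9 x 9 square object.
gt_mask = np.zeros((15, 15), dtype=bool)
gt_mask[3:12, 3:12] = True
click = sample_positive_click(gt_mask, np.random.default_rng(0))
```

Restricting candidates to the high-value region of the distance transform keeps simulated clicks near the interior of the object rather than on its boundary.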

4.1.2. Edge Images

The edge image is generated by a CNN-based edge detection model. A 1 × 1 convolution is applied to the output of each layer, and the normalized edge images are then generated by applying the up-sampling function and the sigmoid function. The edge detection models used are RCF [18] and DexiNed [19]. For each edge detection model, we compare the edge images of the first, second, and third layers and the final edge image. We did not experiment with edge images from the fourth layer and above because they contain little information about the texture inside the selected area when segmenting a part. For training the proposed model, the color images are converted to edge images in advance. To use the trained model, the color image must likewise be converted to an edge image. In our PC environment, the average computation time is about 520 ms for RCF and about 430 ms for DexiNed.
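The per-layer pipeline described above (1 × 1 convolution, up-sampling, sigmoid) can be sketched in NumPy. This is a simplified illustration, not the RCF or DexiNed implementation: nearest-neighbor up-sampling is an assumption made for brevity, and the feature shapes, weights, and the name `side_output_to_edge_image` are hypothetical.

```python
import numpy as np

def side_output_to_edge_image(features: np.ndarray, weights: np.ndarray,
                              bias: float, scale: int) -> np.ndarray:
    """Convert one layer's feature maps into a normalized single-channel edge image.

    features: (C, h, w) feature maps from one layer of the edge detector.
    weights:  (C,) weights of a 1 x 1 convolution that outputs one channel.
    scale:    integer up-sampling factor back to the input resolution.
    """
    # A 1 x 1 convolution to one channel is a weighted sum over the channel axis.
    logits = np.tensordot(weights, features, axes=([0], [0])) + bias  # (h, w)
    # Nearest-neighbor up-sampling (an assumption; real detectors may interpolate).
    upsampled = np.repeat(np.repeat(logits, scale, axis=0), scale, axis=1)
    # The sigmoid maps the response to an edge strength in (0, 1).
    return 1.0 / (1.0 + np.exp(-upsampled))

rng = np.random.default_rng(0)
layer_features = rng.normal(size=(8, 4, 4))  # hypothetical layer output
conv_weights = rng.normal(size=8)
edge_image = side_output_to_edge_image(layer_features, conv_weights, bias=0.0, scale=4)
```

Deeper layers have a lower spatial resolution, so they need a larger `scale` to reach the input size, which is one reason their edge images carry less fine detail.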

4.1.3. Training and Evaluation

The proposed models are first trained for 1000 epochs and then trained for up to 1000 additional epochs with a reduced learning rate. The image size of the training dataset is fixed to 512 × 512 and the batch size is set to five. SGD is used as the optimizer. In the first training phase, the learning rate is 10⁻⁷; in the additional training, it is changed to 10⁻⁹, and cosine annealing is applied to reduce the learning rate at every epoch. The momentum is set to 0.9 and the weight decay to 10⁻³. After training, the proposed models are evaluated by their part segmentation accuracy on the PASCAL VOC Part [7] dataset.
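The two-phase learning-rate schedule can be written as a small function. The base rates and epoch counts come from the text; the rest is assumed for illustration: the first phase is taken to use a constant rate, the cosine period is taken to span the full 1000 additional epochs, and the minimum rate `eta_min` is taken to be zero.

```python
import math

BASE_LR_PHASE_1 = 1e-7   # initial training (from the text)
BASE_LR_PHASE_2 = 1e-9   # additional training (from the text)
EPOCHS_PER_PHASE = 1000  # from the text

def learning_rate(epoch: int, eta_min: float = 0.0) -> float:
    """Learning rate for a zero-based epoch index over both training phases.

    Phase 1 is assumed constant; phase 2 follows cosine annealing from
    BASE_LR_PHASE_2 toward eta_min (eta_min = 0 is an assumption).
    """
    if epoch < EPOCHS_PER_PHASE:
        return BASE_LR_PHASE_1
    t = epoch - EPOCHS_PER_PHASE
    cosine = 0.5 * (1.0 + math.cos(math.pi * t / EPOCHS_PER_PHASE))
    return eta_min + (BASE_LR_PHASE_2 - eta_min) * cosine

schedule = [learning_rate(e) for e in range(2 * EPOCHS_PER_PHASE)]
```

Under these assumptions the rate stays at 10⁻⁷ for the first 1000 epochs, drops to 10⁻⁹ at the start of the additional training, and then decays smoothly toward zero.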

4.2. Comparison to the State-of-the-Art Models

We evaluated the proposed models in two ways to compare them with the existing state-of-the-art models. The first experiment compared the IOU accuracy of object and part segmentation between the proposed models and the existing interactive object segmentation model with the PASCAL VOC [7] dataset. We used the IOG [3] model as the interactive object segmentation model for comparison. During the experiment, the proposed models and the IOG model were evaluated using the same interaction information (two clicks for the bounding box and one click for the object). The code and parameters of IOG were from https://github.com/shiyinzhang/Inside-Outside-Guidance (accessed on 21 August 2021). The second experiment was to compare the IOU accuracy of part segmentation with the model for semantic part segmentation trained with the PASCAL VOC Part [7] dataset and the proposed models. The interactive segmentation models use the input image and interaction information for each part or object, but the semantic part-segmentation model uses only the image as input without additional interaction information. Although the input information of the two types of models is different, a semantic part-segmentation model was added as one of the comparison models to compare the accuracy of part segmentation since, to date, there has been no study evaluating the accuracy of part segmentation with the interactive object segmentation model.

5. Results

5.1. The IOU Accuracy of the Proposed Models for Each Edge Image

Since our purpose was to train the proposed models with only the edge images instead of the color images, we conducted the first experiment to find out which edge image has the highest accuracy of object segmentation and how much the highest accuracy differs from the accuracy of the interactive segmentation model trained only with color images.

In the first experiment, proposed model 1 was trained with the training data in PASCAL VOC 2012 [5] dataset and the SBD [6] dataset and evaluated with the evaluation data in PASCAL VOC 2012 [5] and the SBD [6] datasets. As shown in Table 5 and Table 6, both RCF [18] and DexiNed [19] had the highest accuracy in the edge images of Layer 3, and the result edge images, calculated by finally combining the results of all layers from each edge detector, had the second-highest accuracy. Both edge detectors had the lowest accuracy in the edge image of the first layer. Comparing two edge detectors for each layer, DexiNed [19] had higher IOU accuracy than RCF [18] for all layers. The edge images of DexiNed [19] had less noise than the edge images of RCF [18] and the edge inside the object was more detailed than the edge of RCF [18], so the accuracy of object segmentation using DexiNed [19] was higher than when using RCF [18].

Table 5.

The object IOU accuracy of the proposed model 1 for each edge image.

Table 6.

The object and part IOU accuracy of the proposed model 1 for each edge image.

We then calculated the part segmentation IOU accuracy of proposed model 1 on the PASCAL VOC Part dataset, using the edge images of DexiNed [19], which have several advantages over those of RCF [18]. As shown in Table 6, the part segmentation experiment gave results similar to those of the object segmentation experiment.

In the part segmentation experiment, the IOU accuracy was the highest when the edge images from layer 3 were used, the IOU accuracy was the second-highest when the result edge images were used, and the IOU accuracy was the lowest when the edge images from the first layer were used. The mIOU is the average value of the IOU accuracy of each part.
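For reference, IOU between a predicted mask and a ground truth mask, and the mIOU as the mean over parts, can be computed as in the sketch below. The toy masks are hypothetical, and returning 1.0 when both masks are empty is a convention assumed here rather than something stated in this paper.

```python
import numpy as np

def iou(pred: np.ndarray, gt: np.ndarray) -> float:
    """Intersection over union of two binary masks of the same shape."""
    pred = pred.astype(bool)
    gt = gt.astype(bool)
    union = np.logical_or(pred, gt).sum()
    if union == 0:
        return 1.0  # both masks empty; treating this as a perfect match is an assumption
    return float(np.logical_and(pred, gt).sum() / union)

def mean_iou(pairs: list) -> float:
    """Average IOU over (prediction, ground truth) pairs, one pair per part."""
    return float(np.mean([iou(pred, gt) for pred, gt in pairs]))

# Toy example: the prediction covers the 4 ground truth pixels plus 2 extra pixels.
gt_a = np.zeros((4, 4), dtype=bool)
gt_a[0:2, 0:2] = True
pred_a = np.zeros((4, 4), dtype=bool)
pred_a[0:2, 0:3] = True

iou_a = iou(pred_a, gt_a)                          # 4 / 6
miou = mean_iou([(pred_a, gt_a), (gt_a, gt_a)])    # mean of 4/6 and 1.0
```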

5.2. The IOU Accuracy for the Proposed Models and the State-of-the-Art Interactive Segmentation Model

The IOU accuracy of IOG [3] on the target object is higher than that of the proposed models. According to the IOG [3] paper, the IOU accuracy of object segmentation with the DeeplabV3+ [20] model trained with color images is about 90%. The IOU accuracy of object segmentation with proposed model 1, which uses DeeplabV3+ [20], is about 88%. The experimental results showed that the model trained with color images segmented objects more accurately than the proposed models trained with edge images. However, for part segmentation, the proposed models trained with edge images were more accurate than IOG [3]. In particular, with proposed model 1 using DeeplabV3+ [20], the mIOU exceeded 60%. Compared to the proposed models trained with edge images, the interactive segmentation model trained with color images has lower segmentation accuracy when texture information is not abundant or when the texture seen at inference differs greatly from the texture seen during training. Proposed model 2, which uses EfficientNetV2 [22] as the encoder and adds FineNet, increases the IOU accuracy when segmenting objects, but when segmenting parts, its accuracy is not higher than that of proposed model 1. Table 7 summarizes the results for each model.

Table 7.

The IOU accuracy of object and part segmentation for each interactive segmentation model.

5.3. The IOU Accuracy of Part Segmentation for the Proposed Interactive Segmentation Models and the State-of-the-Art Semantic Part-Segmentation Model

We compared the proposed models with the interactive object segmentation model and semantic part segmentation. Table 8 shows the accuracy of part segmentation for each object class in the PASCAL VOC Part dataset. Avg. is the average of each object class mIOU. The interactive object segmentation model, which can segment the area of the target object or the part with user interaction and the input image, has higher accuracy than semantic part-segmentation models, which can segment the area of the part and recognize the class of part using only input image. In the interactive object segmentation models, proposed model 1 (using DeeplabV3+ [20]), with the highest mIOU accuracy, also had the highest average (64.8%).

Table 8.

The IOU accuracy of the part segmentation for each object class.
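The difference between the per-class mIOU and the Avg. value can be made concrete with a short sketch. All class names and IOU values below are hypothetical and chosen only to make the arithmetic easy to follow; they are not results from Table 8. The sketch assumes that each class mIOU is the mean over that class's parts, and that Avg. is the mean of the class mIOUs.

```python
from statistics import mean

# Hypothetical per-part IOU values in percent, for illustration only.
part_iou = {
    "bicycle": {"wheel": 70.0, "saddle": 50.0},
    "bird": {"head": 60.0, "wing": 40.0, "tail": 20.0},
}

# mIOU of each object class: mean over that class's parts.
class_miou = {name: mean(parts.values()) for name, parts in part_iou.items()}

# Avg.: mean of the per-class mIOU values, so every class counts equally.
avg = mean(class_miou.values())

# Pooling all parts instead weights classes by their number of parts.
pooled = mean(v for parts in part_iou.values() for v in parts.values())
```

With these toy numbers the class mIOUs are 60 and 40, so Avg. is 50, while pooling all five parts gives 48. The two averages differ whenever classes have different numbers of parts.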

Although proposed model 1 did not have the highest IOU accuracy for every object class, it had the highest IOU accuracy for more object classes than any of the comparison models. Even for the object classes where proposed model 1 did not have the highest IOU accuracy, the difference between the best model's accuracy and that of proposed model 1 was less than 1%. When segmenting the target part using user interaction, a model trained using edge images can segment more accurately than a model trained using color images.

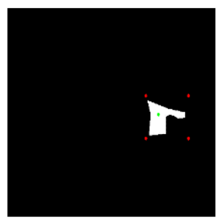

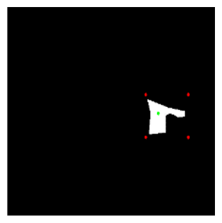

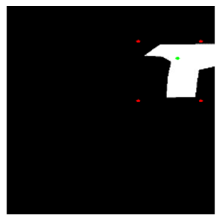

5.4. Comparison of Estimated Mask from IOG Model and the Proposed Model

When segmenting a part with the IOG model, the boundary of the estimated mask is similar to the boundary of the object; when segmenting a part with the proposed model, which was trained for object segmentation with edge images, the boundary of the estimated mask is similar to the boundary of the part. Table 9 compares the estimated masks obtained from the IOG model and the proposed model. The images in the table have large parts to segment. In the train results in Table 9, the boundary of the mask estimated by the IOG model is similar to the boundary of the train, whereas the boundary of the mask estimated by the proposed model follows the edges inside the train, which are similar to the boundary of the part. Although there is noise in the area of the part to segment in the car example of Table 9, the boundary of the mask estimated by the proposed model is similar to the boundary of the noisy edge. The boundary of the mask estimated by the proposed model, which was trained only for object segmentation and not for part segmentation, thus follows the boundary of the part relatively accurately.

Table 9.

The estimated masks of parts from the IOG model and proposed model 1.

6. Conclusions

We have shown how to improve the IOU accuracy of part segmentation using a proposed model trained with edge images and user interaction. Through the experiment, we found edge images generated by edge detectors suitable for part segmentation. In addition, the proposed model trained with edge images has higher IOU accuracy of part segmentation compared to the existing segmentation model trained with color images. In future research, we plan to study a deep-learning model suitable for edge images so that object or part segmentation can be performed more accurately. We hope that our research will be utilized in various fields as well as in the interactive segmentation model.

Supplementary Materials

The following are available online at https://www.mdpi.com/article/10.3390/app112110106/s1: the proposed model 1 detail.xlsx; the details of proposed model 1; the proposed model 2 detail.xlsx; and the details of proposed model 2.

Author Contributions

Conceptualization, J.-Y.O.; methodology, J.-Y.O.; software, J.-Y.O.; validation, J.-Y.O.; writing—original draft preparation, J.-Y.O.; writing—review and editing, J.-M.P.; supervision, J.-M.P.; project administration, J.-M.P.; funding acquisition, J.-M.P. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Korea Institute of Science and Technology (KIST) Institutional Program under Project 2E31081.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data is contained within the article.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Rother, C.; Kolmogorov, V.; Blake, A. “GrabCut”: Interactive foreground extraction using iterated graph cuts. ACM Trans. Graph. 2004, 23, 309–314.

- Sofiiuk, K.; Petrov, I.; Barinova, O.; Konushin, A. f-BRS: Rethinking backpropagating refinement for interactive segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition 2020, Seattle, WA, USA, 14–19 June 2020; pp. 8623–8632.

- Zhang, S.; Liew, J.H.; Wei, Y.; Wei, S.; Zhao, Y. Interactive object segmentation with inside-outside guidance. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition 2020, Seattle, WA, USA, 14–19 June 2020; pp. 12234–12244.

- Canny, J. A computational approach to edge detection. IEEE Trans. Pattern Anal. Mach. Intell. 1986, 6, 679–698.

- Everingham, M.; Eslami, S.A.; Van Gool, L.; Williams, C.K.; Winn, J.; Zisserman, A. The pascal visual object classes challenge: A retrospective. Int. J. Comput. Vis. 2015, 111, 98–136.

- Hariharan, B.; Arbeláez, P.; Bourdev, L.; Maji, S.; Malik, J. Semantic contours from inverse detectors. In Proceedings of the 2011 International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011; pp. 991–998.

- Chen, X.; Mottaghi, R.; Liu, X.; Fidler, S.; Urtasun, R.; Yuille, A. Detect what you can: Detecting and representing objects using holistic models and body parts. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 2014, Columbus, OH, USA, 23–28 June 2014; pp. 1971–1978.

- Michieli, U.; Borsato, E.; Rossi, L.; Zanuttigh, P. Gmnet: Graph matching network for large scale part semantic segmentation in the wild. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; pp. 397–414.

- Xu, N.; Price, B.; Cohen, S.; Yang, J.; Huang, T. Deep grabcut for object selection. arXiv 2017, arXiv:1707.00243.

- Forte, M.; Price, B.; Cohen, S.; Xu, N.; Pitié, F. Getting to 99% accuracy in interactive segmentation. arXiv 2020, arXiv:2003.07932.

- Maninis, K.-K.; Caelles, S.; Pont-Tuset, J.; Van Gool, L. Deep extreme cut: From extreme points to object segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 2018, Salt Lake City, UT, USA, 18–22 June 2018; pp. 616–625.

- Lin, Z.; Zhang, Z.; Chen, L.-Z.; Cheng, M.-M.; Lu, S.-P. Interactive image segmentation with first click attention. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition 2020, Seattle, WA, USA, 14–19 June 2020; pp. 13339–13348. [Google Scholar]

- Jang, W.-D.; Kim, C.-S. Interactive image segmentation via backpropagating refinement scheme. In Proceedings of the Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition 2019, Long Beach, CA, USA, 15–20 June 2019; pp. 5297–5306. [Google Scholar]

- Geirhos, R.; Rubisch, P.; Michaelis, C.; Bethge, M.; Wichmann, F.A.; Brendel, W. ImageNet-trained CNNs are biased towards texture; increasing shape bias improves accuracy and robustness. arXiv 2018, arXiv:1811.12231. [Google Scholar]

- Wang, H.; He, Z.; Lipton, Z.C.; Xing, E.P. Learning robust representations by projecting superficial statistics out. arXiv 2019, arXiv:1903.06256. [Google Scholar]

- Xie, S.; Tu, Z. Holistically-nested edge detection. In Proceedings of the IEEE International Conference on Computer Vision 2015, Las Condes, Chile, 11–18 December 2015; pp. 1395–1403. [Google Scholar]

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556. [Google Scholar]

- Liu, Y.; Cheng, M.-M.; Hu, X.; Wang, K.; Bai, X. Richer convolutional features for edge detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 2017, Honolulu, HI, USA, 21–26 June 2017; pp. 3000–3009. [Google Scholar]

- Poma, X.S.; Riba, E.; Sappa, A. Dense extreme inception network: Towards a robust cnn model for edge detection. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision 2020, Snowmass Village, CO, USA, 5–9 January 2020; pp. 1923–1932. [Google Scholar]

- Chen, L.-C.; Zhu, Y.; Papandreou, G.; Schroff, F.; Adam, H. Encoder-decoder with atrous separable convolution for semantic image segmentation. In Proceedings of the European Conference on Computer Vision (ECCV) 2018, Munich, Germany, 8–14 September 2018; pp. 801–818. [Google Scholar]

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 2016, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 770–778. [Google Scholar]

- Tan, M.; Le, Q.V. Efficientnetv2: Smaller models and faster training. arXiv 2021, arXiv:2104.00298. [Google Scholar]

- Deng, J.; Dong, W.; Socher, R.; Li, L.-J.; Li, K.; Fei-Fei, L. Imagenet: A large-scale hierarchical image database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 248–255. [Google Scholar]

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).