1. Introduction

In an era when our daily life has become penetrated by the applications of artificial intelligence (AI) and machine learning, data has become the “new dollar”. We generate massive amount of data every day in fields like computer vision and natural language process (NLP). Thus, one of the main challenges is how to find and define useful features for identifying objects or distinguishing fake news. Particularly in the domain of NLP, word selections, word relations, and semantic meanings are studied to extract hidden information behind words. For instance, Bidirectional Encoder Representations from Transformers (BERT) explores word sequence to gain contextual meanings of an article [

1]. Text classification using Convolutional Neural Network (CNN) extracts features from words themselves [

2]. For these approaches, we receive mountains of data as input and try our best to conduct dimensional reduction to acquire useful features.

However, data-hungry methods face legal and ethical constraints. More governments are imposing stringent requirements on the use of personal data. The European Commission issued the General Data Protection Regulation in 2016 to protect personal data and privacy [

3]. Similarly, the Chinese government announced the Data Security Law and Personal Data Privacy Law, which came into effect in 2021 [

4,

5]. Meanwhile, these black-box algorithms receive increasing criticism regarding potential biases and manipulation [

6,

7]. Therefore, there is an emerging trend that AI will rely less on big data and more on reasoning ability [

8]. This means training models to approach problems and tasks using more common sense and ready expertise.

Following the return of ownership of personal data to users’ hands, it is becoming harder to acquire detailed data without individuals’ consent. More often, what we can access is only names. However, certain demographic attributes like age and gender are crucial to make good recommendations or conduct marketing events. Under the scenario where only minimum data is available, one of the possible ways is to infer results by considering the contextual environment. In this paper, a method to estimate age and gender using names only is demonstrated.

The styles of character-based names from different countries or regions are varied. For example, Taiwanese names mostly consist of three words like “王大明” or “陳淑芬”; Two-character names are more common in China, such as “張浩” or “李燕”; Japanese names usually include more characters like “いとうひろぶみ” or “あべしんぞう”. When we study a name, there are not enough inherent features. It is possible to study the last name, first name, characters, or word pronunciation. However, it is insufficient to generate useful results based on these features only. Hence, creating additional features by considering contextual environments, such as culture, society, history, or belief, to extend our feature selections is worthy of study. The corresponding features could be more representative than those directly extracted from names. With the attempt to enlarge our feature basis, we explore various features, such as character combination, pronunciation, fortune-telling elements, and zodiac, to predict the age and gender of a given name.

This paper aims to predict age and gender based on a given name considering local cultural and social contexts. In Taiwan, the naming rules, anthroponymy, usually reflect parents’ blessing or expectations of their children. For example, a female name may contain characters or radicals with the meaning of beauty or elegance, such as “美“ or “妍”. In older generations, the parents may prefer the character “美“, which is directly translated as beauty, while modern parents may prefer the character “妍“, which implies beauty in a more implicit way. Different generations have their own preferences in choosing characters to represent the same meaning. Furthermore, fortune-telling elements may be considered during the naming process because of Chinese tradition. The five elements (Gold “金”, Wood “木”, Water “水”, Fire “火”, and Earth “土”), fortune map “三才五格”, and the 12 Chinese zodiacs are popular fortune-telling characteristics. For instance, if a person’s fortune map shows a lack of “Water” according to the fortune teller, the parents may give a name with the radical “Water” like “源”.

In addition to the above features, how to pronounce a name, the domain of phonology, is also discussed, as we believe it could be an important feature for age and gender prediction. In previous linguistic research, name–gender relations have demonstrated that phonetic characteristics provide evidence about identifying the gender of a name [

9]. The pronunciation of a name contains vowels, consonants, and tones (though there are some characters pronounced as soft tone, such as “們“ or “呢“, they are not applied to names). We further attempt to apply phonetic characteristics for age prediction. Finally, a cross-border experiment using ethnic-Chinese Malaysian names is conducted to examine the generalizability of the model. The major contributions of this paper are listed below.

Create additional features beyond a given name, such as fortune-telling elements, Chinese zodiac, and word radical, from the contextual environment.

Explore how the name features impact the prediction results of age and gender estimation.

Examine the generalizability of the model trained by Taiwanese names on bilingual names (Chinese/English names) and ethnic-Chinese Malaysian names.

Present the methods of preprocessing name data from multiple sources in Taiwan and Malaysia.

3. Materials and Methods

The goals of this paper are to predict the gender and age interval of an individual using Taiwanese names and to verify the generalizability of the proposed framework on bilingual names and ethnic-Chinese Malaysian names. Meanwhile, the names used in this research are focused on their given names. For the Taiwanese name dataset, the problem is addressed as:

Definition 1. Given a Taiwanese name, where C is a set of Chinese characters;can be presented aswhereandis a set of Taiwanese family names. We denote a model f to estimate the age interval ŷ ∈ {[y, y + 5) | y ∈ {1940, 1945, ..., 2010}} of an input name, such that ŷ = f().

The basic features of this problem are character-based features that can reflect the meanings of the name itself and then help with understanding the popular characters used in different generations. By precisely capturing these features, the results of age estimation can reach high accuracy.

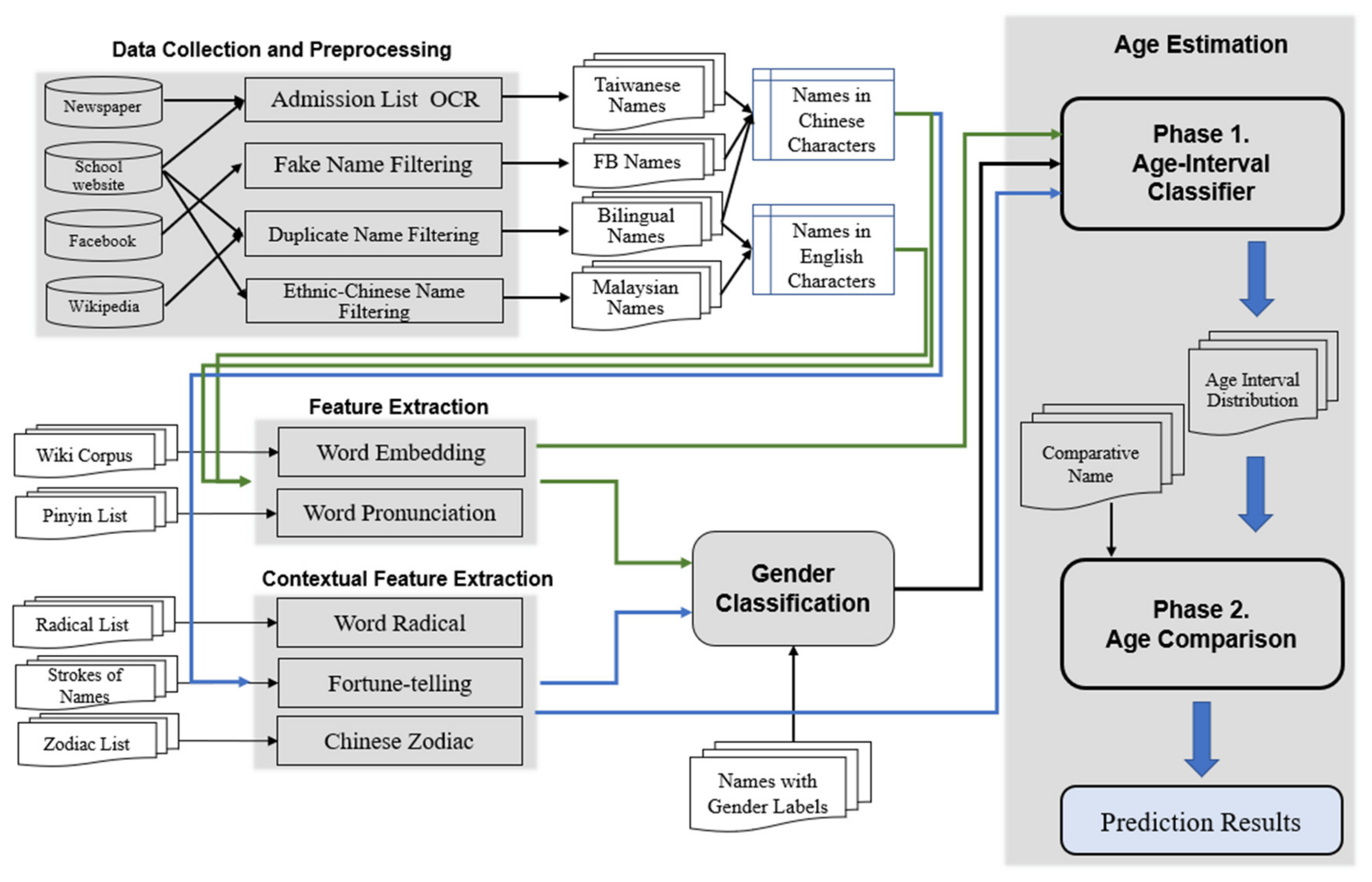

Naming customs vary among different ethnic groups. Therefore, the whole task was divided into two phases. In the first phase, we constructed features based on given names and then built an age-interval classifier. In the second phase, to examine the generalizability of the model, the Taiwanese Public Figure Name dataset and the Ethnic-Chinese Malaysian Name dataset were applied to conduct cross-border tests.

According to the statistics of the collected data, the range of birth year was from 1940 to 2010. Hence, the output age values ranged from 1940 to 2010. An overall framework is presented in

Figure 1.

3.1. Data Collection

Taiwanese names are based on Chinese characters. In this paper, four datasets were collected from multiple sources: the Taiwanese Real Name dataset, the Taiwanese Social Media Name dataset, the Taiwanese Public Figure Name dataset, and the Ethnic-Chinese Malaysian Name dataset. The former two datasets were collected from our previous work [

43]. The details of the collection process are elaborated below.

3.1.1. Taiwanese Real Name Dataset

Most Taiwanese people follow the same path for receiving education—sitting an entrance examination before each phase of school entrance. The examinations were held by the Taiwanese government Joint College Entrance Examination from 1954 to 2002. The names of students who passed the examinations were publicly released in various formats over time. More than 100,000 high school graduates took the examination each year. To collect as many names as possible, the real-world names were collected in three ways.

(A). Newspaper Optical Character Recognition (OCR)

: In the early stage, the Taiwanese government published the student names along with their qualified schools in newspapers. The scanned photos of the lists were downloaded, and the names were recognized by

ABBYY FineReader software, which performs best for old newspapers. (B). College Admission List: The electronic versions of the admission lists have been available since 1994. The files of Taiwanese names on the admission lists from 1994 to 2012 were acquired from the website of Professor Tsai [

44]. (C). Graduation Name List: To obtain extended coverage of the names collected, the graduation name lists from reunion websites were gathered. The data were manually collected using suitable keywords on the search engine.

3.1.2. Taiwanese Social Media Name Dataset

Additionally, Taiwanese names from the popular social media website, Facebook (FB), were collected. Although the registration process for FB does not request a real name, users tend to have a username close to their real one. Meanwhile, there are several fanpages that provide fortune-telling games that attract many Taiwanese users. Users provided their birth dates as input and received replies, such as “Excellent,” regarding their fortune of love. The data was included as the FB dataset.

3.1.3. Taiwanese Public Figure Name Dataset (Bilingual Names)

To explore the influence of name features on age-interval prediction regarding a person who has a Chinese name and an English name simultaneously, the bilingual names of Taiwanese public figures from Wikipedia and Taiwan local university faculty websites were collected. The process was manually collected using keywords, such as “Taiwanese actors,” “Taiwanese athletics,” and “Taiwanese,” and obtaining professors’ names from official websites. The data includes their Chinese name, English name, gender, and year of birth. As a result, a total of 1002 Taiwanese public figure names were collected for the years 1900–2009.

3.1.4. Ethnic-Chinese Malaysian Name Dataset

Due to the privacy policy, name data in Malaysia can only be collected from the university graduation lists. We downloaded the data from the Multimedia University graduation portal from 2000 to 2018, comprising 19 graduation sessions in total. After combining all the names and removing those with non-Chinese name formats, 30,715 names were collected. By looking at a person’s degree, their age can be easily estimated using Equation (1) and

Table 1, as shown below.

The overview of the datasets is shown in

Table 2. As the collected names were not evenly distributed among different datasets and age intervals, the data size was re-sampled in the following stages.

3.2. Data Preprocessing

As our datasets were collected from multiple sources in Taiwan and Malaysia, the regular name input was prepared for future manipulation. The proposed method is two-step data preprocessing for each dataset.

3.2.1. Name Filtering

Taiwanese names are based on Chinese characters, basically two to four characters. Thus, the names with non-Chinese characters and those that have more than four characters were dropped. For Taiwanese real names, duplicate names on school lists were excluded, as they may be the same person in different grades. For names collected by the OCR process, the simplified Chinese characters were switched into the traditional Chinese characters used in Taiwan. For Taiwanese social media names, duplicate names and IDs were excluded to avoid the same person playing fortune-telling games multiple times.

Malaysia is a multi-ethnic country, with people originating from China, Taiwan, Malay, India, etc. Based on the study on Malaysian naming culture, we manually labeled the Malaysian name dataset by classifying ethnic groups. The formats of names from different ethnic groups vary. For Malay, the way of naming consists of the word “BIN” or “BINT”; for Indian, “A/L” or “A/P” is shown in their names; as for Chinese, the names usually contain no more than four words. As our study is focused on ethnic Chinese, all the names with non-Chinese name formats were removed. An example of labeling ethnic groups is presented in

Table 3.

3.2.2. Family Name Segmentation

According to the latest statistics from the Ministry of Interior in Taiwan, there are 1832 family names. The top 100 family names account for 96.57% of the total population of Taiwan. We only retained the names in which the family name N (Last) belongs to the top 1500 family names. Most Taiwanese family names are a single Chinese character, such as “陳” (Chen). However, some of them combine two Chinese characters, such as “張簡” (Zhang Jian). Some people add another’s family name in front of theirs because of marriage. Although the probability of having a family name with two Chinese characters is low, our research took this scenario into account.

As different countries have different sequences of first and family names, the naming style should be learned first. For the Taiwanese style, the family name is arranged after the first name. Conversely, for the Malaysian style, the family name is arranged before the first name. By identifying the styles, family name segmentation can be conducted for the English names of Taiwanese and Malaysian names. An example of English family name segmentation is shown in

Table 4.

3.3. Feature Extraction

In Taiwan, when parents name their child, they may consider their expectations and blessings, fortune-telling elements, pronunciation, social factors, etc. The characters chosen for different genders might vary as well. The features below are extracted from the name itself or combined with contextual considerations when giving a name. To study names, knowledge of anthroponymy and phonology are applied.

3.3.1. Word Meaning (W)

The name characters represent different meanings, which usually link to parents’ wishes. With the different age generations and genders, parents will choose different words for their children. For example, parents in the early generations may give the name “添財”, which means “bringing fortune” under the background of living in a rural area. However, such a name is rare in recent years, as the standards of living have been lifted following economic growth.

Usually the meaning of a word depends on which key phrase it is linked to. Taking a word “圓” (circle) as an example, it can be used as “圓滿” referring to being perfect, or “圓潤” referring to being mellowed. However, what is critical for naming is capturing the meanings of the single words used in names. The similarity among words is more important. Therefore, the purpose of training is to find similar words of the same meaning. Different from the transformer-based models such as BERT and XLNET which explore multiple meanings of the same word used in different contexts [

17], the

Word2Vec model with the focus of capturing the word meanings was chosen here [

9].

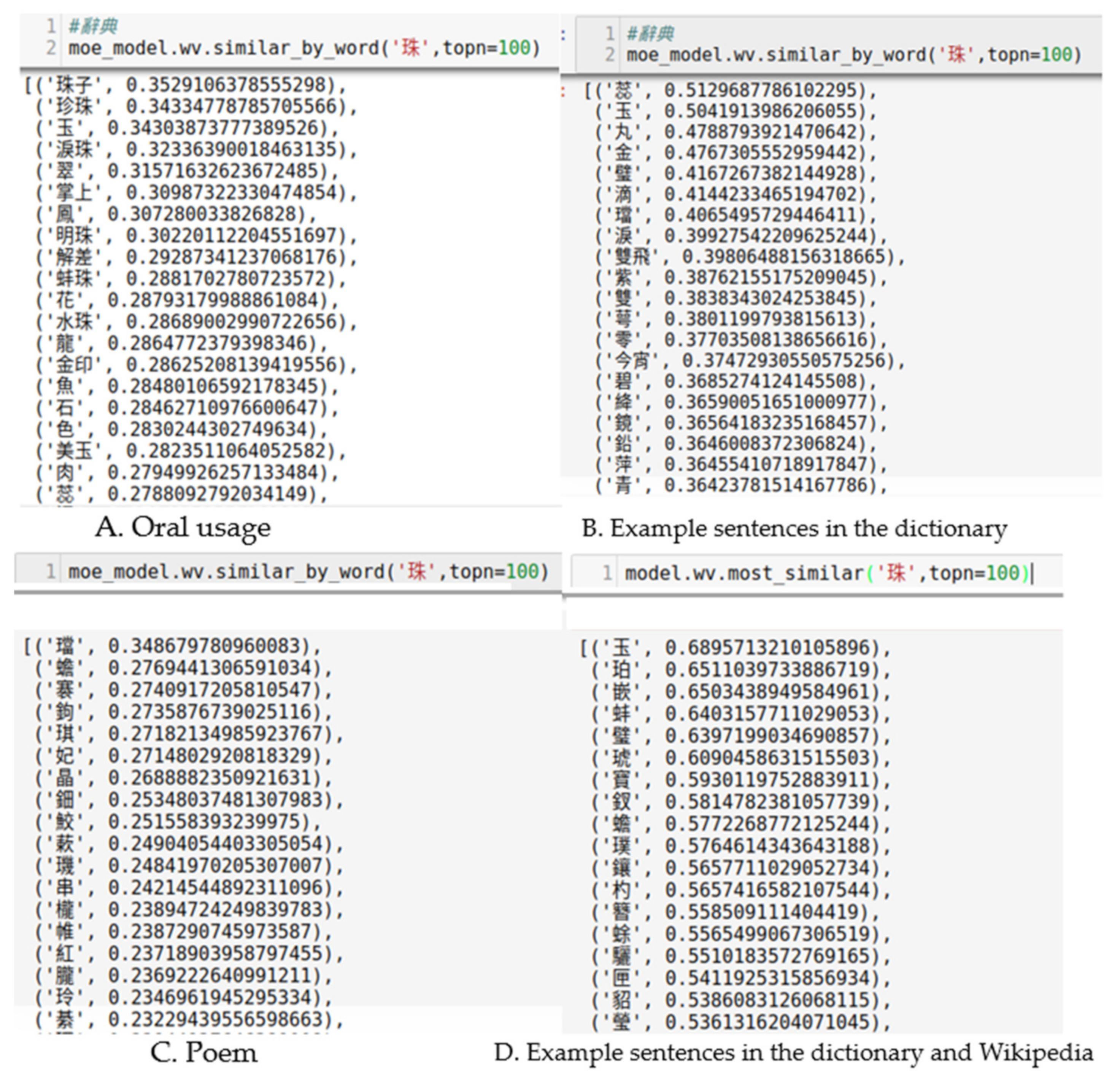

Then, the

Word2Vec model was trained with different settings, including oral usage, example sentences on the dictionary, poem, and Wikipedia sentences, shown as

Figure 2. The results demonstrated that the combination of example sentences on the dictionary and Wikipedia performed best and therefore was decided to be the word embedding model. The reason is because training on example sentences can do better in capturing the similarity of words than training on other data.

3.3.2. Fortune-Telling Feature (F)

In Taiwanese culture, fortune telling is the ancient wisdom to infer the future of individuals [

45]. There was a period when people might refer to the suggestions, in terms of lucky or unlucky, on the Chinese Fortune Calendar when choosing a day for moving or traveling [

46]. Still now, people may ask for help from a fortune teller to give a suitable name. People tend to believe that a good name might be helpful to the fortune of their children, and there is no harm to include fortune-telling during naming. Fortune tellers utilize varying techniques, such as palm and face reading “手相和面相”, the four pillars: hour, day, month, and year of birth “生辰八字”, or name strokes “姓名筆劃” to predict personality, marriage, occupations, and future incidents. Therefore, Taiwanese naming rules with fortune-telling elements, anthroponymy, have become part of the tradition [

47].

There are two primary rules used in Taiwanese anthroponymy: a fortune map “三才五格” and a Chinese zodiac “生肖”. In the fortune map, a name is originated from three basics (“三才”-天格, 地格, 人格) and extended to five elements (“五格”-天格, 地格, 人格, 外格, 總格). “天格” refers to the family name, which is related to fortune during childhood. “地格” refers to the first name, which is related to fortune during adolescence. “人格”, as the core of a name, refers to the first character of the name, which can affect the whole life. “外格” is related to fortune during middle age. “總格” is related to fortune during old age. As a total, the fortune of an individual is completely presented through a fortune map. The rule of a fortune map, which calculates the strokes of Chinese characters of a name, is illustrated in

Table 5. The Chinese zodiac will be discussed in the next section.

3.3.3. Chinese Zodiac (Z)

The Chinese zodiac is a classification method that assigns a representative animal, namely Rat, Ox, Tiger, Rabbit, Dragon, Snake, Horse, Goat, Monkey, Rooster, Dog, and Pig, for each year in 12 consecutive years. The 12-year cycle continuously repeats itself. Each animal has its own unique characteristics, which are important references for Taiwanese anthroponymy. Some parents may hope their child is born in a specific year of the zodiac, especially the year of the Dragon. According to Chinese culture, being born in this year is considered auspicious [

48].

As the Chinese zodiac repeats every 12 years, the order of the Chinese zodiac was utilized as a feature. Based on the age interval of five years, there are 12 combinations from 1945 to 2004, as shown in

Table 6. When the model predicts the age of a Taiwanese name, the feature of the Chinese zodiac can enhance the accuracy. For example, according to the model, an age estimation is possibly in either interval A (Rooster, Dog, Pig, Rat, and Ox) or interval B (Tiger, Rabbit, Dragon, Snake, and Horse) with equal probability. Under this circumstance, if a Chinese zodiac characteristic of Pig can be identified in the name, the model may classify the name into interval A.

3.3.4. Word Radical (R)

A Chinese radical is a graphical component of a Chinese character under which the character is listed in the dictionary. The radical, as an important component in Chinese fortune-telling, is counted as the number of strokes of a Chinese character. Additionally, some families require different radicals for the names of different generations. The most commonly accepted radicals for traditional Chinese characters consist of 214 entries.

Table 7 below is an example of a traditional Chinese radical table. One-hot encoding was used to express this categorical information.

3.3.5. Word Pronunciation (P)

The pronunciation of each word of the given name was utilized as a feature. Hanyu Pinyin, often abbreviated to Pinyin, is a system of phonetic transcriptions of standard Chinese in Taiwan. The system includes four diacritics denoting tones, as shown in

Table 8. Pinyin without tone marks is used to spell Chinese words in Latin alphabets. Unlike European languages, which consist of clusters of letters, the fundamental elements of Pinyin are initials and finals. There are 23 initials and 33 finals, as indicated in

Table 9. Each Chinese character has its corresponding Pinyin, exactly one initial followed by one final. Based on the rules of Pinyin, we converted Chinese characters into Pinyin and further separated Pinyin into initials, finals, and tones. An example of Pinyin rules utilized on Chinese characters is depicted in

Table 10.

When a Taiwanese name is given, usually there is a corresponding English name translated from the pronunciation of the Taiwanese name. The tones of Chinese characters are neglected during the translation process. For instance, the names “偉成” and “薇澄” are both pronounced as “Wei-Cheng” but in different characters. Therefore, it is common for different Chinese characters to have similar pronunciations in English. Meanwhile, the features, such as fortune-telling or Chinese zodiac, cannot be applied to English names for making predictions. However, even with such differences, it is interesting to learn whether the English name directly translated from the Taiwanese name can be used to estimate an individual’s age.

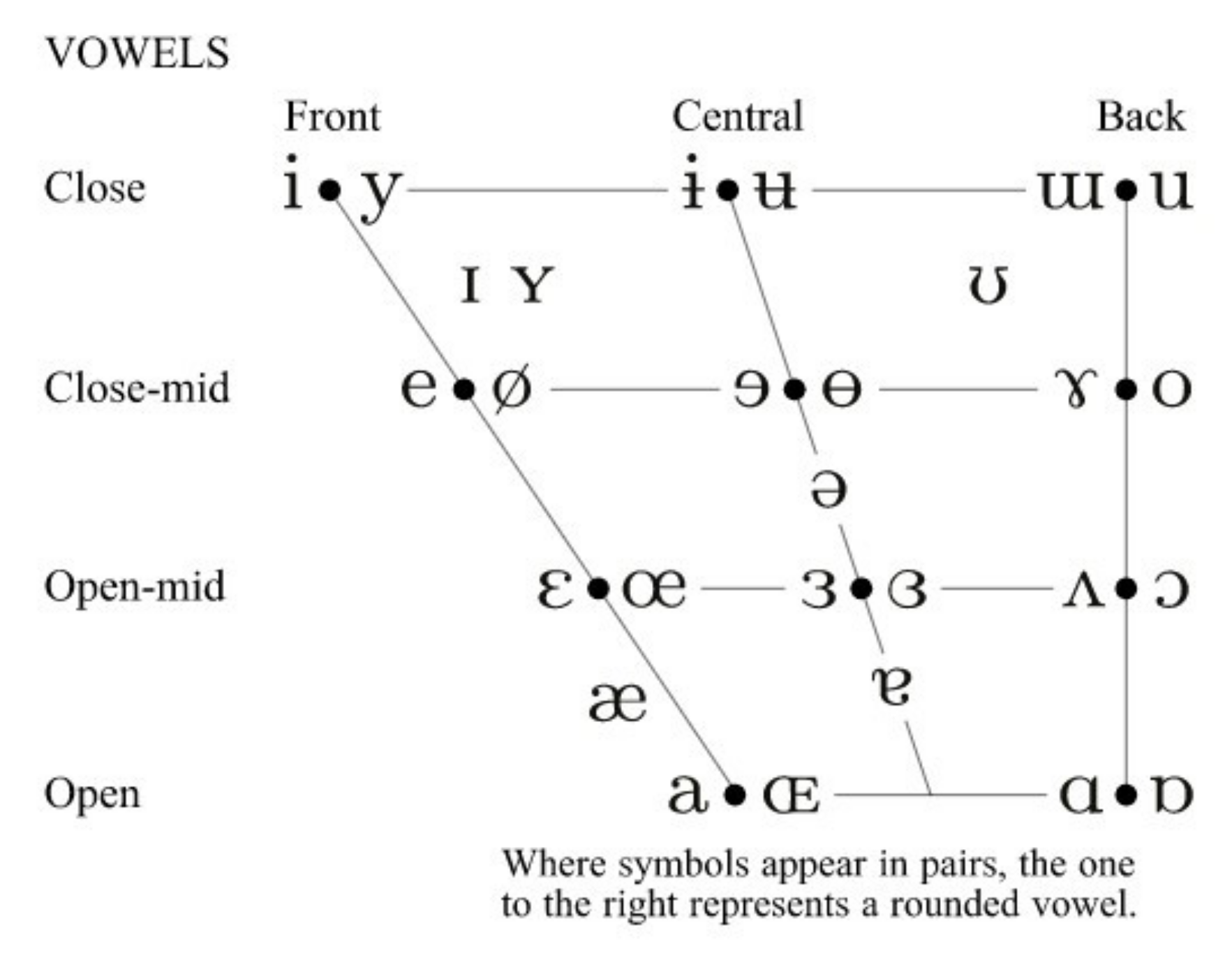

Like many languages, English has wide variation in pronunciation, both from historical development and from dialect to dialect. Here, we indicated the pronunciation of English words with the International Phonetic Alphabet (IPA). This is a system based on phonetic notation of Latin alphabets designed by the International Phonetic Association to be the standardized representation of the sound of spoken language. The main advantage of using the IPA instead of other forms is that we can apply it to multiple languages in the future without significantly changing the format.

Figure 3 and

Figure 4 show the IPA symbols for consonants and vowels separately [

49].

Although the IPA is commonly recognized to represent the pronunciation of English words, some names still failed to be converted when we were working on our datasets. This failure stemmed from the differences of various dialects, such as Mandarin, Hokkien, Cantonese, Teochew, etc. The IPA cannot recognize dialect, so those words are unconvertable. Considering this situation, we then looked at the consonants and vowels to get the pronunciation of names.

The modern English alphabet is a Latin alphabet consisting of 26 letters. The letters A, E, I, O, and U are considered vowel letters, while the remaining letters are consonant letters. According to this rule, the English names, directly translated from their Taiwanese names, were separated using Algorithm 1. An example of separating an English name is shown in

Table 11. According to the principle of Chinese pronunciation, a word “Lork” consists of only one consonant “L” and one vowel “ork”. Though “r, k” does not belong to vowels, there is no further separation for names.

| Algorithm 1. Syllable separation of English names |

| Given: V = [A, E, I, O, U, a, e, I, o, u] |

| con = [ ] |

| vow = [ ] |

| for wordlen in word and split into each letter do |

| length ← length of word |

| for i in the range of length do |

| current ← wordlen[i] |

| if current is not V then |

| add current into con |

| move current to next length |

| else if current is V then |

| split current |

| vow ← the rest of the wordlen |

| end if |

| end for |

| end for |

3.3.6. Gender Probability of Name (G)

A classifier was trained to discriminate the gender of a name with the word meaning, word radical, word pronunciation, fortune-telling feature, etc. An ensemble supervised machine-learning approach, the Random Forest Classifier (RFC), was empirically selected to be our training model. The labeled data was acquired in two ways: the names of single-gender education schools and FB names with gender labels. In Taiwan, there are several single-gender schools in which all the students are either male or female. FB names were collected through the fortune-telling games on the website, in which users need to input gender and birthday (such as M19850312, “M” is male, “19850312” is the birthday) and receive a reply of their love fortune. The model was trained using the combination of the two types of labeled data. The output of gender probability was used as a feature.

3.4. Model Design

The model adopted additional features and was trained based on the prior framework to improve the performance [

43]. Meanwhile, it was further applied to bilingual names and ethnic-Chinese Malaysian names. First, all the name features mentioned above—including word meaning, fortune-telling feature, Chinese zodiac, word radical, and word pronunciation—were utilized to classify the gender of a given name. Second, the gender classification result together with other features were used as input to generate probability distribution in each age interval.

In practice, humans have several judgments to estimate the age generation of a given name, but they may have difficulty in giving precise estimation without any assisted method or tool. For instance, we may judge the name “淑芬” as popular in early generations. However, it is difficult to ascertain whether the name belongs to the 50s, 60s, or even older age groups. If reference information with the known age is provided, people can compare and make judgments about the age prediction more precisely. Following this concept, a two-layer comparative estimation model is proposed, including an age-interval classifier and an age comparison mechanism, as illustrated in

Figure 5. In the first layer, an individual’s age is regarded as a multi-label question and corresponding multiple answers. In the second layer, inspired by Abousaleh et al.’s work, an estimated age interval is selected after multiple comparisons [

42].

To speed up the comparison time and minimize the comparison cycles, experiments were conducted to investigate the optimized comparative name size and initial point using the RFC. For each age interval, the best size was 100 names. Additionally, starting from the middle point can effectively reduce the required comparison cycles.

Figure 5 illustrates how the two-layer comparative estimation model works. In the first layer, with the designed features of name N, the neural network outputs the probability distribution of each age interval. The comparative mechanism is demonstrated in the second layer. The right side provides the details of the comparison process. The above example shows that, after the first layer, the two possible candidates for age estimation of the input N are 25 and 65 years old. To further help with precise prediction, three reference names with known ages were selected, namely “楊小琳” (A1) in 31 years old, “陳淑芬” (A2) in 59 years old, and “蔡阿滿” (A3) in 75 years old. Meanwhile, though there should be many age intervals in this example, a simple way that five labels were used to represent age intervals of 20~29, 30~39, 40~49, 50~59, and 60~69 is illustrated here. The comparison process follows the following steps: in Round 0, both Label 20~29 and 60~69 receive 1 point according to the initial result from probability distribution; next, in Round 1, N is estimated to be older than A1, so 1 point is added to Labels 30~39, 40~49, 50~59, and 60~69. Following in Round 2, N is again estimated to be older than A2, therefore, Labels 50~59 and 60~69 get 1 point. Finally, in Round 3, N is estimated to be younger than A3, all the labels receive an additional 1 point. As a result, Label 60~69 accumulates the highest 4 points. Hence, the age estimation of the name N is 65 years old.

As for the bilingual names, the model trained on Taiwanese names was applied. However, due to linguistic differences, the name features that can be extracted from English names are more limited compared to those from Chinese names. Only word embedding and word pronunciation can be utilized as the features for English names.

To further examine the cross-border learning generalizability of the model, the dataset from a different country was selected: ethnic-Chinese Malaysian names, expressed in English. In Malaysia, ethnic Chinese may speak in different dialects, such as Cantonese, Hokkien, Teochew, or Chinese. Each dialect has its own unique pronunciation. The naming rules might be affected by the dialect used by a family. In this experiment, Malaysian names were used as the testing dataset. Like the previous process, an age interval classifier was first established using the RFC, which generated the probability distribution of each age interval. Then, the target name was repeatedly compared with a reference name with the known age until the target name was in the same age interval as the reference name.

4. Experiment and Results

4.1. Experimental Setup

The model was trained on Taiwanese names, and then the generalizability was verified on bilingual names and ethnic-Chinese Malaysian names. For Taiwanese real names, OCR was used to scan the downloaded images from college admission lists in newspapers from 1958 to 1994. The newspapers from 1988 to 1992 were missing; therefore, a total of 31 years of Taiwanese names were collected. Meanwhile, the electronic versions of the college admission lists were available from 1994 to 2012, in which the file of 2009 was missing. Additionally, student names from over 50 school yearbooks were acquired. For Taiwanese social media names, FB names and all the posts of fortune-telling games were collected until September 2017. In total, there were 4.04 million messages from 48 fanpages. The statistics of the data are presented in

Table 12 and

Table 13.

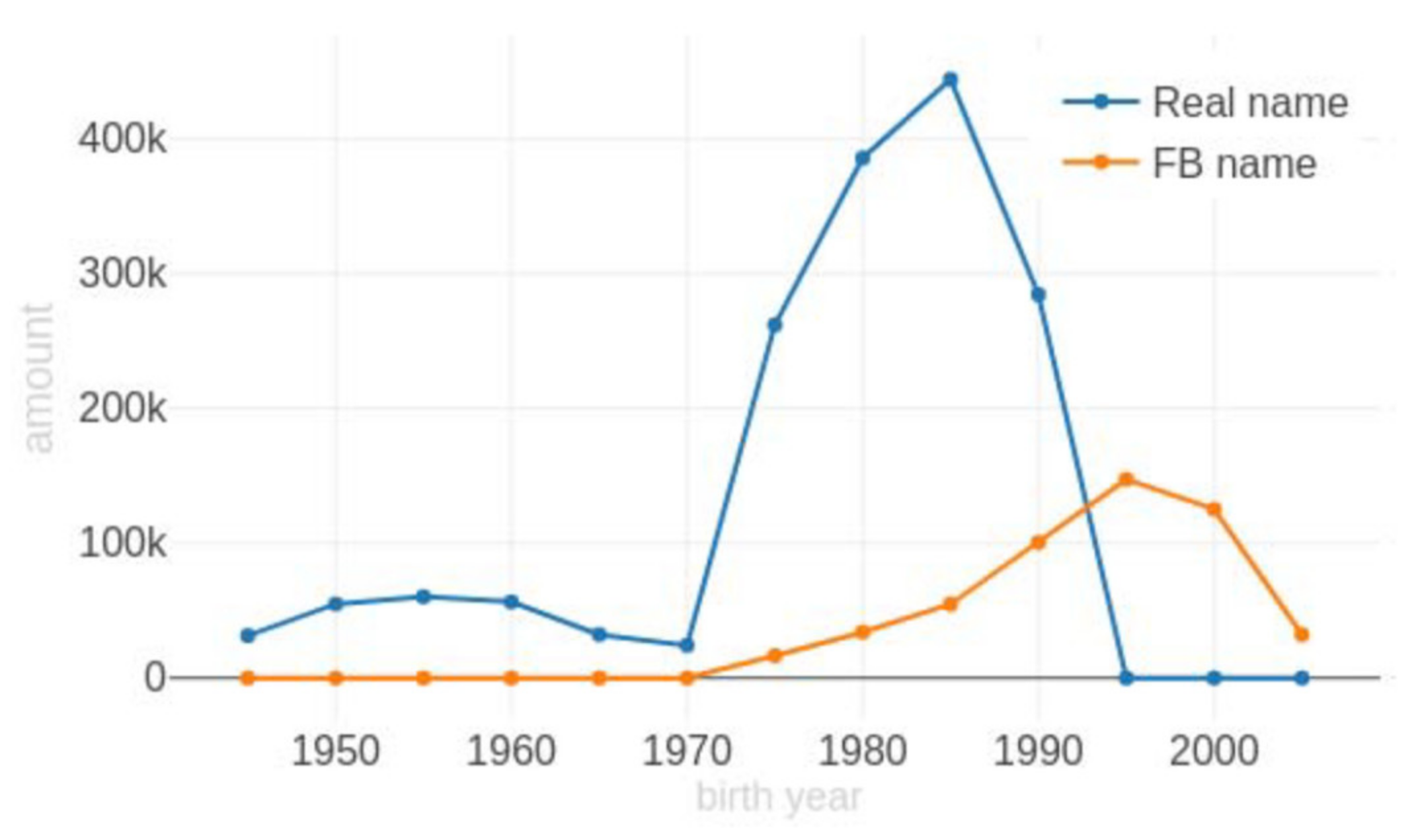

Here, two observations can be identified regarding the two datasets. First, people tend to use fake names or nicknames instead of real names on social media. Even with a fake-name filtering step on social media names, stacked first names (such as “莉莉”) and single-character first names (such as “建”) appear more often compared to real names. According to the statistics, the ratio of stacked first names on social media is 3.4%, compared to 0.27% on real names; while the ratio of single-character first names is 8.14%, compared to 1.4% on real names. Second, the age distributions of the two datasets are different, as indicated in

Figure 6, which refers to the unbalanced data issue.

In the following experiments, each age interval was set of five years, starting with 1945. To solve the data imbalance issue, the continuous years with sufficient data sizes were selected such that balanced data was reached for each age interval, shown as

Table 14.

For Malaysian names collected from the university graduation lists, the age may be varied for a person with a doctorate degree. Thus, the names with “Doctor of” were removed from the Malaysian name dataset. Additionally, the same sampling approach was adopted to generate a balanced dataset. Only experiments for age-interval prediction were conducted because the gender label is not available for Malaysian names.

4.2. Evaluation Method

To evaluate the performance of the proposed model, the experiments were designed in three directions. The first direction compared the performance using different features and feature combinations for gender prediction and age-interval prediction. Through a series of experiments, how these features impacted the prediction results can be elaborated. The second way examined the generalizability using bilingual names—Chinese names and their corresponding English names. Finally, a different country dataset, Malaysian names, was applied to understand how our proposed model and features support cross-border situations.

In this research, different methods were adopted to evaluate the performance of gender and age-interval prediction. Gender prediction was treated as a binary classification task; therefore, prediction accuracy and the F1 score were applied. The age-interval prediction was regarded as a multi-label classification task with multiple answers. Thus, the metrics of average year error and multi-answer accuracy were utilized to evaluate the performance. The year error calculates the gap between the predicted age interval and the exact birth year of the given name. For example, the model predicts that the age interval of “淑芬” is 1950–1954, and the birth year of this name is 1955. Then, the year error is considered as 1. The smaller the average year error, the better the performance of the proposed model. In this model, the minimum average year error is more important than higher multi-answer accuracy.

The data was divided into a training set and a testing set at 70% and 30%, respectively. The RFC and Multi-Layer Perceptron (MLP) were empirically selected as our classifiers during the model training. In this part, different settings used for uni-class classification of the age-interval classifier were checked, shown as

Table 15. The combination of RFC and MLP had the best performance. The two datasets (the Taiwanese Public Figure Name dataset and the Ethnic-Chinese Malaysian Name dataset) used to examine the generalizability of the model were excluded from the training and testing datasets.

4.3. Experimental Results

To demonstrate how the designed features can improve the prediction results and generalizability of the proposed model, the performance of the trained model will be discussed. Following this, the prediction results of the extended datasets will be examined.

4.3.1. Performance on Taiwanese Names

The overall performance of gender classification is demonstrated in

Table 16. It shows that even with the basic uni-gram character feature (U) or word embedding feature (W), the model can distinguish gender with high accuracy. Between these two, word embedding is more informative than uni-gram in terms of gender classification. To enhance the accuracy, features like first character (FC), second character (SC), word pronunciation (P), fortune-telling feature (F), zodiac (Z), and radical (R) were considered. All the additional features together can slightly improve the accuracy by 0.7%. Meanwhile, the second character was found to be better than the first character for identifying gender.

As for the age-interval prediction, similarly, word embedding (W) achieved better accuracy than uni-gram in both datasets, as shown in

Table 17. Unlike gender classification, the designed features (All denotes all the features except uni-gram) are more effective for age-interval prediction. The prediction accuracy was enhanced by 12.55% on Taiwanese real names and 17.44% on FB names. This implies that additional features are especially important for age-interval prediction.

Multi-label classification can be regarded as one of the performance indicators, in which the model only needs to match one of the correct labels.

Table 18 shows that the performance between the RFC and MLP is similar, but the performance of MLP dropped if more features were included.

4.3.2. Performance on Bilingual Names and Ethnic-Chinese Malaysian Names

Table 19 and

Table 20 below show that the features trained on Taiwanese names achieved slightly lower accuracy in gender prediction on Chinese names and age-interval prediction on bilingual names. Additionally, it follows a similar trend in which word embedding is more effective than uni-gram for gender and age-interval prediction. However, the performance of gender prediction on English names is poor. The possible reasons will be discussed in

Section 5.2.

There are two differences between ethnic-Chinese Malaysian names and standard English names. First, there are multiple Chinese dialects with different pronunciations used in Malaysia. Second, Malaysian names are usually disyllabic, written in two characters, but monosyllabic names, written in one character, are included as well. Therefore, the experiments were conducted based on the syllable: monosyllabic name denoted as

single name; while disyllabic name denoted as

without single name. The prediction results on Malaysian names were significantly worse than those on Taiwanese names and bilingual names, as shown in

Table 21. Additionally, word-embedding methods performed poorly compared to unigram-based approaches in terms of average year errors, as presented in

Table 21.

5. Discussion

5.1. Features’ Impacts on Prediction Accuracy

The model we proposed is based on six features: word meaning (W), fortune-telling feature (F), Chinese zodiac (Z), word radical (R), word pronunciation (P), and gender probability (G). These features can be categorized into two groups: direct features and contextual features. The Direct Features include word meaning and word pronunciation, which come directly from name characters. Taking the Chinese word “美” as an example, the word meaning is beauty; the word pronunciation is “Mei”. The Contextual Features include fortune-telling feature, Chinese zodiac, word radical, and gender probability, which originate from the Taiwanese cultural, social, and historic background. According to our experience, the Chinese word “美” is highly likely to belong to a female name.

The impact of these features on the prediction results can be discussed in three directions. First, considering how a single feature affects prediction results, the word meaning feature was found to be the most informative one. Using the word embedding feature alone, it can achieve 93.94% accuracy in gender prediction, as shown in

Table 22, and an average error year of 3.9785 (equal to 0.80 age-interval) for age-interval prediction, as indicated in

Table 23. However, the Chinese zodiac feature only provides 58.79% accuracy in gender prediction and generates an average error year of 8.8080 (equal to 1.76 age-interval) for age-interval prediction. The performance differences from different features are significant.

Second, if we utilize word-embedding with one-hot encoding as the basis, combining with only one other feature, the word pronunciation feature most successfully predicts gender, with 94.48% accuracy. This is very close to the highest level of 94.64% accuracy with multiple features. The combination of word embedding with other single features can achieve similar performance as well, as shown in

Table 24. As for the age-interval prediction, the word-embedding feature combined with Chinese zodiac reaches the lowest average error year of 3.6481 (equal to 0.73 age-interval), as shown in

Table 25. Therefore, word embedding with Chinese zodiac is the most effective feature pair for age estimation.

Finally, to achieve the best performance using all the available features, the feature combination of word embedding, Chinese zodiac, word radical, and unigram can reach the best accuracy—94.64% for gender prediction. However, interestingly, with only two features (word embedding and pronunciation), the model can also reach a similar accuracy of 94.48%. This also implies that, under the scenarios without Chinese contextual consideration, word pronunciation is quite informative for gender prediction, as shown in

Table 26. As for the age-interval prediction, the minimum average year error of 3.3878 (equal to 0.68 age-interval) can be reached using all the features, as indicated in

Table 27. Therefore, it can be concluded that, especially for complicated tasks such as age-interval prediction, contextual features effectively enhance the accuracy of prediction results besides the direct features. This finding further proves the importance of considering the contextual environment for prediction tasks when only minimal input is available.

5.2. The Model’s Generalizability on Bilingual Names

To examine the generalizability of our model trained on Taiwanese names, we utilized the Taiwanese Public Figure Names dataset, which contains bilingual names—Chinese and English names for an individual. The difference between the two different languages is that features like fortune-telling, Chinese zodiac, or word radical cannot be applied to English names. To make a fair comparison, only the features useful for both languages were selected.

Table 19 demonstrates the performance comparison for gender prediction using different features. First, it can be observed that by using fewer features, the prediction accuracy on Chinese/bilingual names (90.5%) is lower compared to that on the Taiwanese Real Names dataset. One possible reason is that the names collected from Taiwanese public figures are more unique, which cannot represent the normal distribution of Taiwanese names. Additionally, as a public figure, the name may be changed for various reasons. Therefore, their names are less inclined to follow the naming rules described above.

Second, the gender prediction accuracy on English/bilingual names is significantly lower compared to the results on Chinese/bilingual names. This is because when a Taiwanese name is translated into English, some features may be missing. For example, both “偉宏”and “薇虹” are pronounced “Wei Hong” in English. However, they have different word meanings and radicals. The former one is usually used for a male name, while the latter is often for a female. Due to the missing features, the gender prediction accuracy on English names is just slightly better than a random guess. This implies that additional research is required to acquire more useful features.

Table 20 shows the performance comparison for age-interval prediction based on two different methods: unigram-based and embedding-based with one-hot encoding methods. To explore more features, we further separated the word pronunciation feature (P) into consonant feature (S) and vowel feature (M), as elaborated in the Feature Extraction section. In general, embedding-based approaches have less average year errors than unigram-based methods. Among all the features, word embedding combined with pronunciation achieved the minimum average year errors of 4.6872 (equal to 0.94 age-interval) and 4.6915 (equal to 0.94 age-interval) for Chinese names and English names, respectively. The prediction errors for bilingual names were slightly higher than those for Taiwanese names. Unlike the results of gender prediction, the differences in age-interval prediction between bilingual names were negligible. It can be regarded as the same level of performance. Therefore, word pronunciation (P) is an informative feature for age prediction on bilingual names.

One interesting question here is as follows: for a name translated from Chinese to English, why is word embedding combined with the pronunciation feature informative for age-interval estimation but not for gender classification? A possible explanation is the language preferences in different generations. In early generations, parents may have preferred using Taiwanese. More recently, parents mostly speak in standard Chinese. In other words, parents in different generations have different preferences in terms of choosing characters and their corresponding pronunciations. Therefore, the model can still make useful age-interval prediction for English names after translation. However, the features useful for gender prediction were missing under the same scenario.

Therefore, it can be concluded that the generalizability of our model can be successfully extended to bilingual language for age-interval prediction. However, for gender prediction, we need additional features for English names.

5.3. The Model’s Cross-Border Generalizability on Ethnic-Chinese Malaysian Names

The model’s cross-border learning generalizability was further examined on a dataset collected from Malaysia: ethnic-Chinese Malaysian names, expressed in English. From

Table 21, it can be easily observed that all the average year errors from various single features or feature combinations are significantly larger than the results from previous experiments. The best performance of average year error of 7.2471 (equal to 1.45 age-interval) using uni-gram combined with the vowel feature is 2.14 times larger (average year error of 3.3878) than that on Taiwanese names. The worst performance can even reach an average year error of 18.3118 (equal to 3.66 age-interval).

The main reason is that multiple Chinese dialects used in Malaysia have different pronunciations. For instance, the surname “陳” is pronounced “Chen” in Chinese, but “Tan” in Hokkien, one of the Chinese dialects, as shown in

Table 28. Although the previous experiments on bilingual names received satisfactory results concerning age-interval prediction for Chinese and English names, the model did not present similar generalizability for the English names collected in Malaysia. In other words, the word pronunciation feature extracted from Taiwanese names using standard Chinese does not fit into Malaysian names pronounced in other Chinese dialects. Considering the large performance gap with the previous results, it is claimed that, unfortunately, the model trained on Taiwanese names cannot be directly applied to ethnic-Chinese Malaysian names. Thus, additional research exploring other features based on Malaysian names should be conducted in the future.

6. Conclusions

In this paper, making gender and age interval predictions from minimal data by considering the contextual environment of a given name was demonstrated. It showed that not only data itself but also its contextual factors could be the input to generate valuable outcomes. The designed features—such as Chinese zodiac, fortune-telling feature, and word radical—provide insights into how these factors improve prediction accuracy based on cultural, social, and historical understandings.

In contrast to previous studies, we presented a complete framework utilizing direct information and contextual consideration as input for making predictions. This framework provides insights into how AI can create value under the scenarios of limited available data. Furthermore, the generalizability of the model was examined using bilingual and cross-border datasets. The results show satisfactory prediction accuracy for Chinese names. However, the different pronunciations of multiple Chinese dialects used in Malaysia lowered the transferability of the model trained on standard Chinese. This implies that further studies exploring the additional features of different languages could be a worthwhile future research direction.