Energy-Efficient Cloud Service Selection and Recommendation Based on QoS for Sustainable Smart Cities

Abstract

Featured Application


1. Introduction

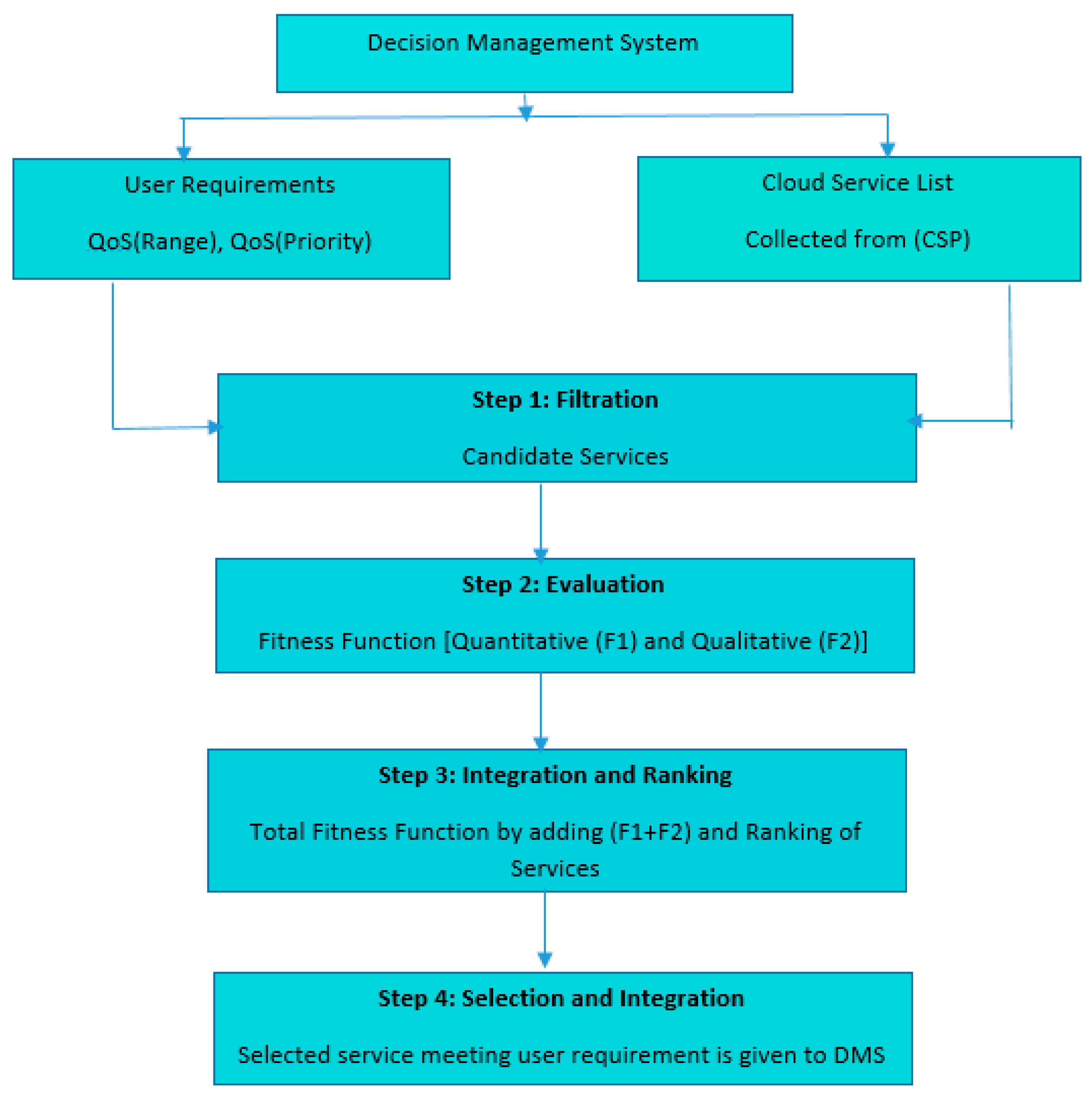

- A novel algorithm, cloud service selection and recommendation (CS-SR), is proposed. It comprises four phases: filtration, evaluation, integration, and selection and recommendation. The outcome of CS-SR is two-fold: (a) it offers QoS-based service selection, and (b) it reduces the overall execution time required to find the optimal service;

- The filtration phase reduces unnecessary comparisons by filtering out candidate services that do not meet the user's requirements;

- The proposed approach uses both quantitative and qualitative attributes, which improves the overall efficiency of the selection and recommendation approach;

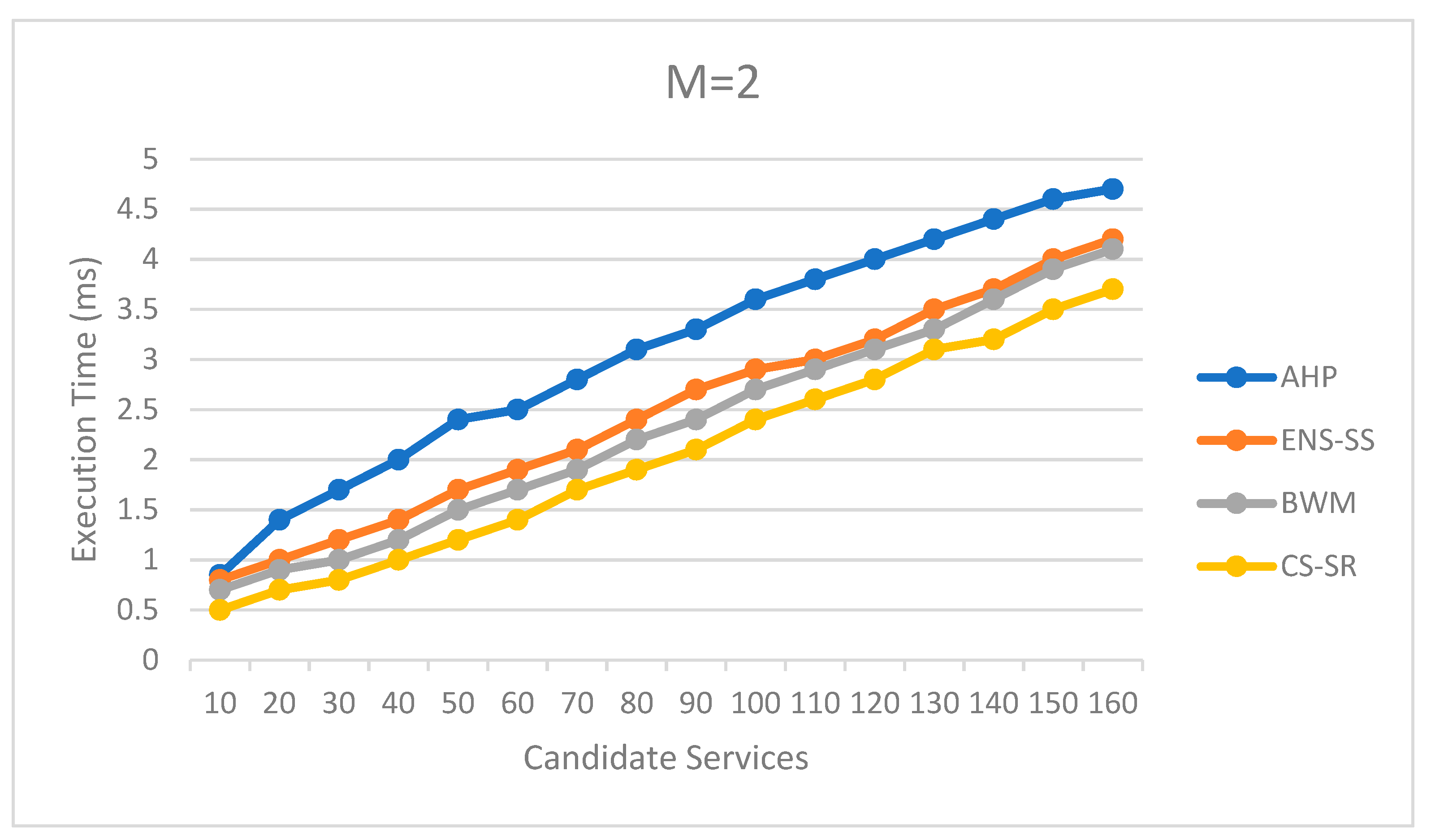

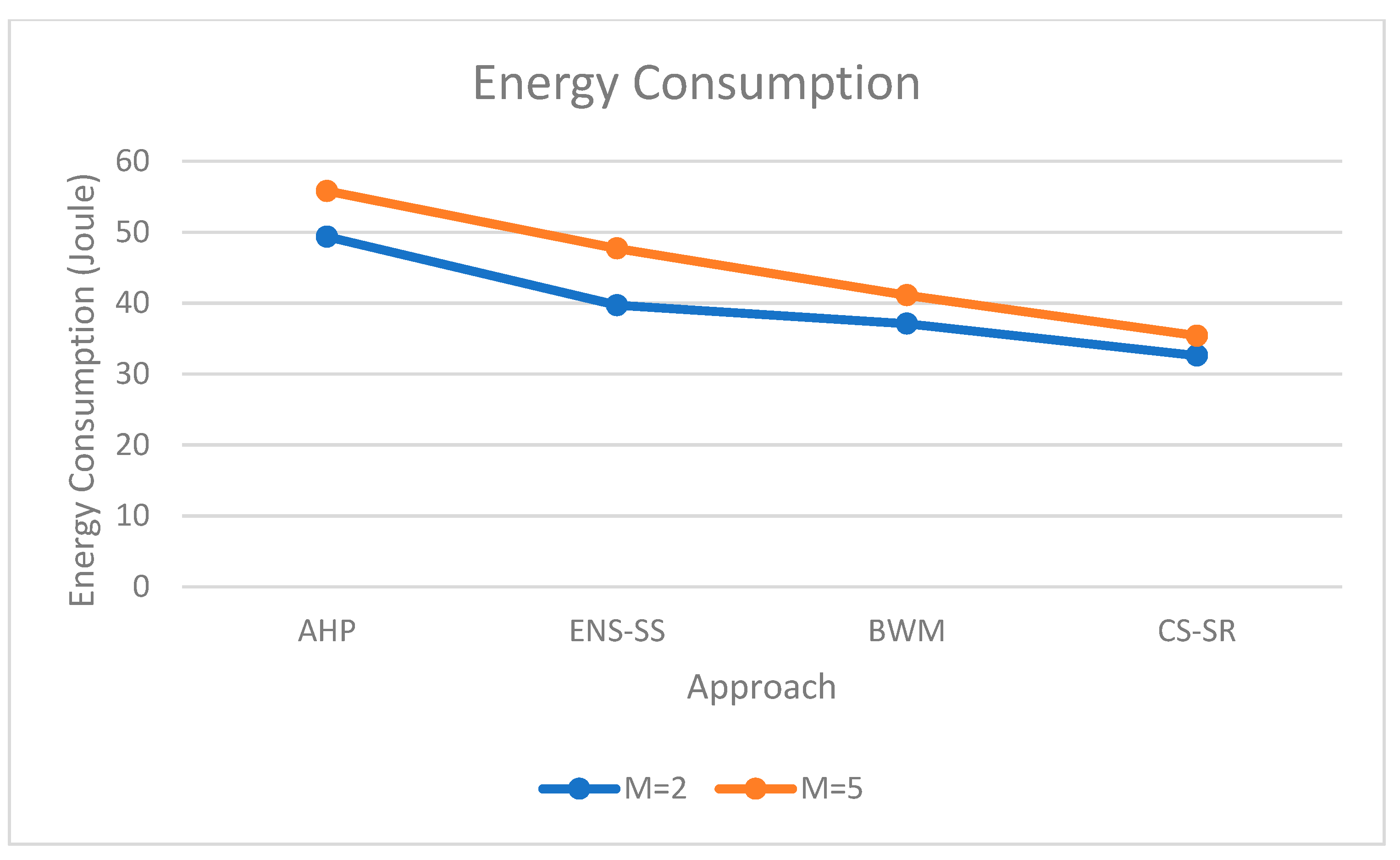

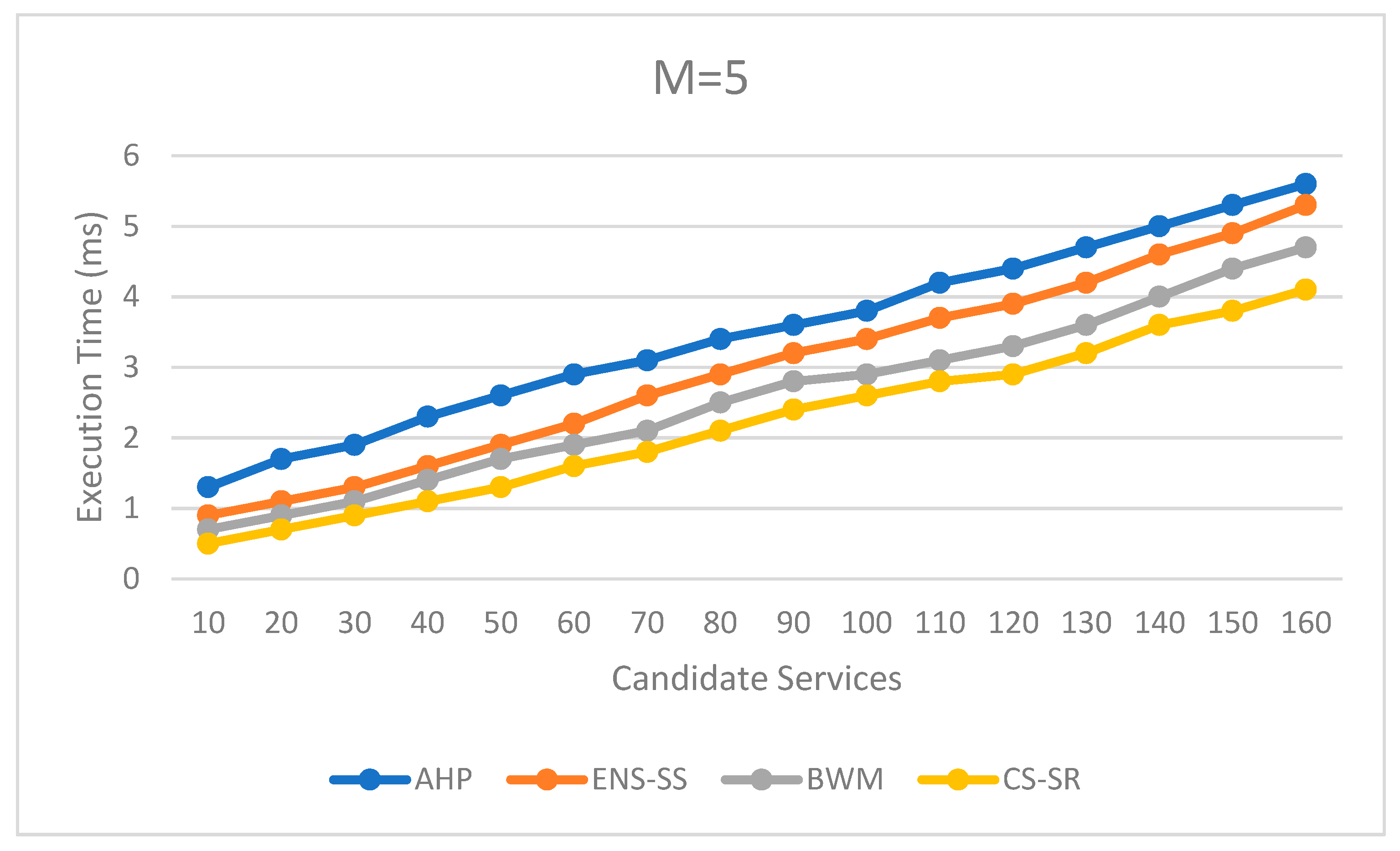

- The proposed CS-SR is compared with the analytic hierarchy process (AHP) [38], efficient non-dominated sorting-sequential search (ENS-SS) [39], and the best-worst method (BWM) [40] on two performance parameters: the total execution time and the energy consumed in selecting and recommending a cloud service. The results show that CS-SR outperforms all three methods. These algorithms were chosen because each deals with multi-criteria decision making and finds the optimal solution among a list of available alternatives.

2. Related Work

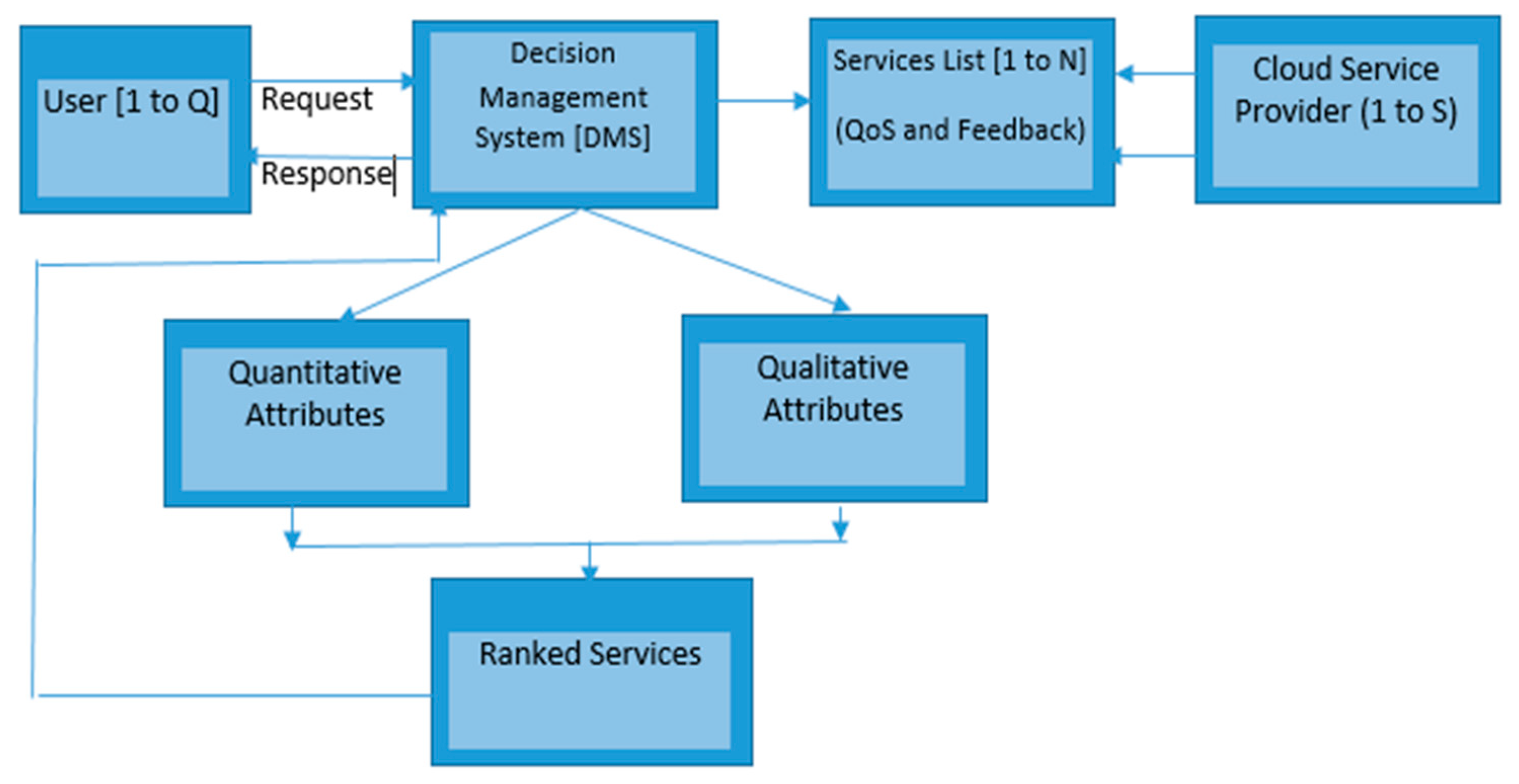

3. Proposed Architecture: CS-SR

3.1. Filtration Step

3.2. Evaluation of the Fitness Function

3.3. Integration and Ranking

3.4. Selection

4. Assumptions Involved in CS-SR

4.1. Modeling Variables Used in CS-SR

4.2. Filtration of Candidate Service in CS-SR

| Algorithm 1: Filtration of Candidate Services |
|---|
| …, feedback (fnSn); … to set of candidate services |
| 4: end if |
| 5: end for |
| 6: return candidate set and feedback to D.M.S. |
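The filtration phase can be sketched in Python. This is a minimal illustration, not the authors' implementation: it assumes the user's requirement is a closed range on Attribute 1, namely 100 ≤ Attribute 1 ≤ 110. That range is an inference from the worked example, where it reduces the ten services S1 to S10 to exactly S1, S2, S5, and S9. The names `Service`, `filter_candidates`, `lo`, and `hi` are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Service:
    name: str
    attr1: float   # Attribute 1 from the example table
    attr2: float   # Attribute 2 from the example table
    feedback: int  # user feedback on a 1-10 scale


# The ten services S1-S10 from the worked example.
SERVICES = [
    Service("S1", 100, 10, 7), Service("S2", 110, 6, 3),
    Service("S3", 90, 8, 5), Service("S4", 95, 7, 6),
    Service("S5", 105, 9, 4), Service("S6", 115, 12, 9),
    Service("S7", 80, 5, 8), Service("S8", 92, 7.5, 7),
    Service("S9", 102, 8, 5), Service("S10", 85, 6.5, 4),
]


def filter_candidates(services, lo, hi):
    """Keep services whose Attribute 1 lies in [lo, hi] (hypothetical requirement).

    Returns the candidate set and a name -> feedback map, mirroring
    'return candidate set and feedback to D.M.S.' in Algorithm 1.
    """
    candidates = [s for s in services if lo <= s.attr1 <= hi]
    feedback = {s.name: s.feedback for s in candidates}
    return candidates, feedback


if __name__ == "__main__":
    cands, fb = filter_candidates(SERVICES, lo=100, hi=110)
    print([s.name for s in cands])  # ['S1', 'S2', 'S5', 'S9']
    print(fb)
```

Filtering first means the later phases compare only four services instead of ten, which is where the reduction in unnecessary comparisons comes from.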

4.3. Evaluation of Candidate Service in CS-SR

4.4. Integration and Ranking of Candidate Service in CS-SR

| Algorithm 2: Integration and Ranking of Candidate Services by D.M.S. |
|---|
| … compared services |
| 5: set Service Rank to R1 and remove service from comparison |
| … |
| 7: repeat steps 3–6 for remaining services |
| 8: end if |
| 9: end for |
| 10: return the rank of candidate services |
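The loop in Algorithm 2 (compare the remaining services, give the best one the next rank, remove it from comparison, and repeat) can be sketched as follows. This is a minimal sketch: fitness values are passed in because the paper's fitness function is not recoverable from this text, and `rank_by_repeated_selection` is a hypothetical name. For illustration only, the filtered services' feedback scores stand in for fitness; with that stand-in the output happens to match the ranks reported in the example (S1, S9, S5, S2).

```python
def rank_by_repeated_selection(fitness):
    """Assign ranks 1..n by repeatedly picking the highest-fitness service.

    fitness: dict mapping service name -> fitness score (higher is better).
    Returns a dict mapping service name -> rank (1 = best).
    Ties are broken alphabetically by name so the result is deterministic.
    """
    remaining = dict(fitness)
    ranks = {}
    next_rank = 1
    while remaining:
        # Compare all remaining services and pick the best one.
        best = min(remaining, key=lambda name: (-remaining[name], name))
        ranks[best] = next_rank
        del remaining[best]  # remove the ranked service from further comparison
        next_rank += 1
    return ranks


if __name__ == "__main__":
    # Feedback of the filtered services, used here only as a stand-in fitness.
    feedback = {"S1": 7, "S2": 3, "S5": 4, "S9": 5}
    print(rank_by_repeated_selection(feedback))
    # {'S1': 1, 'S9': 2, 'S5': 3, 'S2': 4}
```

Repeated selection performs O(n²) comparisons; sorting once by the same key yields the identical ranking in O(n log n), which may matter for large candidate sets.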

4.5. Selection of Candidate Service in CS-SR

5. Illustration of CS-SR through Example

6. Experimental Work

6.1. Implementation Details

- (a) Execution Time: the time elapsed between the user's request for a service and the moment the system returns the optimal service to the user.

- (b) Energy Consumption: the total energy consumed in finding the service, accumulated over the execution time.
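The two metrics are linked: if the machine draws a roughly constant average power P while searching, the energy is E = P × t, where t is the execution time. The sketch below times any selection routine with `time.perf_counter` and converts the result to joules. The constant-power model and the `avg_power_watts` parameter are assumptions for illustration, not the measurement setup used in the experiments.

```python
import time


def energy_joules(execution_time_s, avg_power_watts):
    """Energy under a constant-average-power model: E = P * t."""
    if execution_time_s < 0 or avg_power_watts < 0:
        raise ValueError("time and power must be non-negative")
    return avg_power_watts * execution_time_s


def measure(select_fn, *args, avg_power_watts=50.0):
    """Run select_fn(*args); return (result, execution time in s, energy in J).

    avg_power_watts is a hypothetical average power draw, not a measured value.
    """
    start = time.perf_counter()
    result = select_fn(*args)
    elapsed = time.perf_counter() - start
    return result, elapsed, energy_joules(elapsed, avg_power_watts)


if __name__ == "__main__":
    result, t, e = measure(sorted, [3, 1, 2])
    print(result, f"{t:.6f} s", f"{e:.6f} J")
```

Under this model, any reduction in execution time translates proportionally into a reduction in energy, which is why the two analyses below move together.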

6.2. Analysis of CS-SR through Execution Time

6.3. Analysis of CS-SR through Energy Consumption

7. Conclusions and Future Scope

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Buyya, R.; Yeo, C.S.; Venugopal, S.; Broberg, J.; Brandic, I. Cloud computing and emerging IT platforms: Vision, hype, and reality for delivering computing as the 5th utility. Future Gener. Comput. Syst. 2009, 25, 599–616.

- Zhang, Q.; Cheng, L.; Boutaba, R. Cloud computing: State-of-the-art and research challenges. J. Internet Serv. Appl. 2010, 1, 7–18.

- Jahani, A.; Leyli, M.K. Cloud service ranking as a multiobjective optimization problem. J. Supercomput. 2016, 72, 1897–1926.

- Vecchiola, C.; Pandey, S.; Buyya, R. High-performance cloud computing: A view of scientific applications. In Proceedings of the 2009 10th International Symposium on Pervasive Systems, Algorithms, and Networks, Kaohsiung, Taiwan, 14–16 December 2009.

- Iordache, M.; Iordache, A.M.; Sandru, C.; Voica, C.; Zgavarogea, R.; Miricioiu, M.G.; Ionete, R.E. Assessment of heavy metals pollution in sediments from reservoirs of the Olt River as tool of environmental risk management. Rev. Chim. 2019, 70, 4153–4162.

- Botoran, O.R.; Ionete, R.E.; Miricioiu, M.G.; Costinel, D.; Radu, G.L.; Popescu, R. Amino acid profile of fruits as potential fingerprints of varietal origin. Molecules 2019, 24, 4500.

- Raboaca, M.S. Sustaining the Passive House with Hybrid Energy Photovoltaic Panels—Fuel Cell. Prog. Cryog. Isot. Sep. 2015, 18.

- Raboaca, M.S.; Felseghi, R.A. Energy Efficient Stationary Application Supplied with Solar-Wind Hybrid Energy. In Proceedings of the 2019 International Conference on Energy and Environment (CIEM), Timisoara, Romania, 17–18 October 2019; pp. 495–499.

- Dewangan, B.K.; Jain, A.; Choudhury, T. AP: Hybrid Task Scheduling Algorithm for Cloud. Rev. d’Intelligence Artif. 2020, 34, 479–485.

- Katchabaw, M.J.; Lutfiyya, H.L.; Bauer, M.A. Usage-based service differentiation for end-to-end quality of service management. Comput. Commun. 2005, 28, 2146–2159.

- Fan, H. An integrated personalization framework for SaaS-based cloud services. Future Gener. Comput. Syst. 2015, 53, 157–173.

- Triantaphyllou, E.; Mann, S.H. Using the analytic hierarchy process for decision making in engineering applications: Some challenges. Int. J. Ind. Eng. Appl. Pract. 1995, 2, 35–44.

- El-Gazzar, R.; Hustad, E.; Olsen, D.H. Understanding cloud computing adoption issues: A Delphi study approach. J. Syst. Softw. 2016, 118, 64–84.

- Basahel, A.; Yamin, M.; Drijan, A. Barriers to Cloud Computing Adoption for SMEs in Saudi Arabia. Bvicams Int. J. Inf. Technol. 2016, 8, 1044–1048.

- Ding, S.; Yang, S.; Zhang, Y.; Liang, C.; Xia, C. Combining QoS prediction and customer satisfaction estimation to solve cloud service trustworthiness evaluation problems. Knowl. Based Syst. 2014, 56, 216–225.

- Garg, S.K.; Versteeg, S.; Buyya, R. SMICloud: A framework for comparing and ranking cloud services. In Proceedings of the 2011 Fourth IEEE International Conference on Utility and Cloud Computing (UCC), Victoria, NSW, Australia, 5–8 December 2011.

- Liu, Y.; Moez, E.; Boulahia, L.M. Evaluation of Parameters Importance in Cloud Service Selection Using Rough Sets. Appl. Math. 2016, 7, 527.

- Stojanovic, M.D.; Rakas, S.V.B.; Acimovic-Raspopovic, V.S. End-to-end quality of service specification and mapping: The third party approach. Comput. Commun. 2010, 33, 1354–1368.

- Qu, L.; Wang, Y.; Orgun, M.A. Cloud service selection based on the aggregation of user feedback and quantitative performance assessment. In Proceedings of the 2013 IEEE International Conference on Services Computing, Santa Clara, CA, USA, 28 June–3 July 2013; IEEE Computer Society: Washington, DC, USA, 2013; pp. 152–159.

- Mao, C. Search-based QoS ranking prediction for web services in cloud environments. Future Gener. Comput. Syst. 2015, 50, 111–126.

- Ardagna, D.; Casale, G.; Ciavotta, M.; Pérez, J.F.; Wang, W. Quality-of-service in cloud computing: Modeling techniques and their applications. J. Internet Serv. Appl. 2014, 5, 11.

- Singh, P.K.; Paprzycki, M.; Bhargava, B.; Chhabra, J.K.; Kaushal, N.C.; Kumar, Y. (Eds.) Futuristic Trends in Network and Communication Technologies. In Proceedings of the First International Conference, FTNCT 2018, Solan, India, 9–10 February 2018.

- Garg, S.K.; Versteeg, S.; Buyya, R. A framework for ranking of cloud computing services. Future Gener. Comput. Syst. 2013, 29, 1012–1023.

- Chan, H.; Trieu, C. Ranking and mapping of applications to cloud computing services by SVD. In Proceedings of the Network Operations and Management Symposium Workshops (NOMS Wksps), Osaka, Japan, 19–23 April 2010; IEEE: Piscataway, NJ, USA, 2010.

- Fang, H.; Wang, Q.; Tu, Y.-C.; Horstemeyer, M.F. An efficient non-dominated sorting method for evolutionary algorithms. Evol. Comput. 2008, 16, 355–384.

- Baghel, S.K.; Dewangan, B.K. Defense in Depth for Data Storage in Cloud Computing. Int. J. Technol. 2012, 2, 58–61.

- Wooldridge, M. An Introduction to Multi-Agent Systems; Department of Computer Science, University of Liverpool: Liverpool, UK, 2009; pp. 200–450.

- Dewangan, B.K.; Agarwal, A.; Choudhury, T.; Pasricha, A. Cloud resource optimization system based on time and cost. Int. J. Math. Eng. Manage. Sci. 2020, 5, 758–768.

- Yau, S.; Yin, Y. QoS-based service ranking and selection for service-based systems. In Proceedings of the IEEE International Conference on Services Computing, Washington, DC, USA, 4 June–9 July 2011; pp. 56–63.

- Almulla, M.; Yahyaoui, H.; Al-Matori, K. A new fuzzy hybrid technique for ranking real-world Web services. Knowl. Based Syst. 2015, 77, 1–15.

- Skoutas, D.; Sacharidis, D.; Simitsis, A.; Sellis, T. Ranking and clustering web services using multi-criteria dominance relationships. IEEE Trans. Serv. Comput. 2010, 3, 163–177.

- Dikaiakos, M.D.; Yazti, D.Z. A distributed middleware infrastructure for personalized services. Comput. Commun. 2004, 27, 1464–1480.

- Dewangan, B.K.; Agarwal, A.; Venkatadri, M.; Pasricha, A. Design of self-management aware autonomic resource scheduling scheme in cloud. Int. J. Comput. Inf. Syst. Ind. Manag. Appl. 2019, 11, 170–177.

- Octavio, J.; Ramirez, A. Collaborative agents for distributed load management in cloud data centres using Live Migration of virtual machines. IEEE Trans. Serv. Comput. 2015, 8, 916–929.

- Octavio, J.; Ramirez, A. Agent-based load balancing in cloud data centres. Cluster Comput. 2015, 18, 1041–1062.

- Al-Masri, E.; Mahmoud, Q.H. Investigating web services on the world wide web. In Proceedings of the 17th International Conference on World Wide Web, Beijing, China, 21–25 April 2008; ACM Press: New York, NY, USA, 2008; pp. 795–804.

- Zheng, Z.; Wu, X.; Zhang, Y.; Lyu, M.; Wang, J. QoS ranking prediction for cloud services. IEEE Trans. Parallel Distrib. Syst. 2012, 24, 1213–1222.

- Khan, I.; Meena, A.; Richhariya, P.; Dewangan, B.K. Optimization in Autonomic Computing and Resource Management. In Autonomic Computing in Cloud Resource Management in Industry 4.0; Springer: Cham, Switzerland, 2021; pp. 159–175.

- Zhang, X.; Tian, Y.; Cheng, R.; Jin, Y. An efficient approach to nondominated sorting for evolutionary multiobjective optimization. IEEE Trans. Evol. Comput. 2014, 19, 201–213.

- Trueman, C. What Impact Are Data Centres Having on Climate Change? Available online: https://www.computerworld.com/article/3431148/why-data-centres-are-the-new-frontier-in-the-fight-against-climate-change.html (accessed on 9 August 2019).

- Holst, A. Number of Data Centers Worldwide 2015–2021. Available online: https://www.statista.com/statistics/500458/worldwide-datacenter-and-it-sites/ (accessed on 2 March 2020).

- Malhotra, R.; Dewangan, B.K.; Chakraborty, P.; Choudhury, T. Self-Protection Approach for Cloud Computing. In Autonomic Computing in Cloud Resource Management in Industry 4.0; Springer: Cham, Switzerland, 2021; pp. 213–228.

- Hao, Y.; Zhang, Y.; Cao, J. Web services discovery and Rank: An information retrieval approach. Future Gener. Comput. Syst. 2010, 26, 1053–1062.

- Dewangan, B.K.; Agarwal, A.; Choudhury, T.; Pasricha, A. Workload aware autonomic resource management scheme using grey wolf optimization in cloud environment. IET Commun. 2021, 15, 1869–1882.

- Ishizaka, A.; Labib, A. Analytic hierarchy process and expert choice: Benefits and limitations. OR Insight 2009, 22, 201–220.

- Dewangan, B.K.; Agarwal, A.; Choudhury, T.; Pasricha, A.; Chandra Satapathy, S. Extensive review of cloud resource management techniques in industry 4.0: Issue and challenges. Softw. Pract. Exp. 2020.

- Jahani, A.; Farnaz, D.; Leyli, M.K. ARank: A multi-agent-based approach for ranking of cloud computing services. Scalable Comput. Pract. Exp. 2017, 18, 105–116.

- Dewangan, B.K.; Shende, P. The Sliding Window Method: An Environment To Evaluate User Behavior Trust In Cloud Technology. Int. J. Adv. Res. Comput. Commun. Eng. 2013, 2, 1158–1162.

- McClymont, K.; Keedwell, E. Deductive sort and climbing sort: New methods for non-dominated sorting. Evol. Comput. 2012, 20, 1–6.

- Roy, P.C.; Islam, M.M.; Deb, K. Best order sort: A new algorithm to non-dominated sorting for evolutionary multiobjective optimization. In Proceedings of the 2016 Genetic and Evolutionary Computation Conference Companion, Denver, CO, USA, 20–24 July 2016; ACM Press: New York, NY, USA, 2016; pp. 1113–1120.

- Tang, S.; Cai, Z.; Zheng, J. A fast method of constructing the non-dominated set: Arena’s principle. In Proceedings of the ICNC’08, Fourth International Conference on Natural Computation, Jinan, China, 18–20 October 2008; IEEE: Piscataway, NJ, USA, 2008; Volume 1.

- Godse, M.; Mulik, S. An approach for selecting software-as-a-service (SaaS) product. In Proceedings of the IEEE International Conference on Cloud Computing, Bangalore, India, 21–25 September 2009; IEEE Computer Society: Washington, DC, USA, 2009; pp. 155–158.

- Limam, N.; Boutaba, R. Assessing software service quality and trustworthiness at selection time. IEEE Trans. Softw. Eng. 2010, 36, 559–574.

- Dewangan, B.K.; Agarwal, A.; Venkatadri, M.; Pasricha, A. Resource scheduling in cloud: A comparative study. Int. J. Comput. Sci. Eng. 2018, 6, 168–173.

- Rehman, Z.U.; Hussain, O.K.; Hussain, F.K. IaaS cloud selection using MCDM methods. In Proceedings of the 9th IEEE International Conference on E-Business Engineering, Hangzhou, China, 9–11 September 2012; pp. 246–251.

- Sun, L.; Dong, H.; Hussain, O.K.; Hussain, F.K.; Liu, A.X. A framework of cloud service selection with criteria interactions. Future Gener. Comput. Syst. 2019, 94, 749–764.

- Tomar, R.; Khanna, A.; Bansal, A.; Fore, V. An architectural view towards autonomic cloud computing. In Data Engineering and Intelligent Computing; Springer: Singapore, 2018; pp. 573–582.

- Kero, A.; Khanna, A.; Kumar, D.; Agarwal, A. An Adaptive Approach Towards Computation Offloading for Mobile Cloud Computing. Int. J. Inf. Technol. Web Eng. IJITWE 2019, 14, 52–73.

- Juarez, F.; Ejarque, J.; Badia, R.M. Dynamic energy-aware scheduling for parallel task-based application in cloud computing. Future Gener. Comput. Syst. 2018, 78, 257–271.

- Yaqoob, I.; Ahmed, E.; Gani, A.; Mokhtar, S.; Imran, M. Heterogeneity-aware task allocation in mobile ad hoc cloud. IEEE Access 2017, 5, 1779–1795.

- Hu, B.; Cao, Z.; Zhou, M. Scheduling Real-Time Parallel Applications in Cloud to Minimize Energy Consumption. IEEE Trans. Cloud Comput. 2019.

- Mishra, S.K.; Puthal, D.; Sahoo, B.; Jena, S.K.; Obaidat, M.S. An adaptive task allocation technique for green cloud computing. J. Supercomput. 2018, 74, 370–385.

- Xu, M.; Buyya, R. BrownoutCon: A software system based on brownout and containers for energy-efficient cloud computing. J. Syst. Softw. 2019, 155, 91–103.

- Raboaca, M.S.; Dumitrescu, C.; Manta, I. Aircraft Trajectory Tracking Using Radar Equipment with Fuzzy Logic Algorithm. Mathematics 2020, 8, 207.

- Dewangan, B.K.; Agarwal, A.; Venkatadri, M.; Pasricha, A. SLA-based autonomic cloud resource management framework by antlion optimization algorithm. Int. J. Innov. Technol. Explor. Eng. (IJITEE) 2019, 8, 119–123.

- Alabool, H.; Kamil, A.; Arshad, N.; Alarabiat, D. Cloud service evaluation method-based Multi-Criteria Decision-Making: A systematic literature review. J. Syst. Softw. 2018, 139, 161–188.

- Singh, P.; Sood, S.; Kumar, Y.; Paprzycki, M.; Pljonkin, A.; Hong, W.C. Futuristic Trends in Networks and Computing Technologies; FTNCT 2019 Communications in Computer and Information Science; Springer: Singapore, 2019; Volume 1206, pp. 3–707.

- Singh, P.K.; Bhargava, B.K.; Paprzycki, M.; Kaushal, N.C.; Hong, W.C. Handbook of Wireless Sensor Networks: Issues and Challenges in Current Scenario’s; Advances in Intelligent Systems and Computing; Springer: Cham, Switzerland, 2020; Volume 1132, pp. 155–437.

- Whaiduzzaman, M.; Gani, A.; Anuar, N.B.; Shiraz, M.; Haque, M.N.; Haque, I.T. Cloud service selection using multi-criteria decision analysis. Sci. World J. 2014, 2014, 459375.

- Sun, M.; Zang, T.; Xu, X.; Wang, R. Consumer-centered cloud services selection using AHP. In Proceedings of the 2013 International Conference on Service Sciences (ICSS), Shenzhen, China, 11–13 April 2013; IEEE: Piscataway, NJ, USA, 2013.

- Jatoth, C.; Gangadharan, G.R.; Fiore, U. Evaluating the efficiency of cloud services using modified data envelopment analysis and modified super-efficiency data envelopment analysis. Soft Comput. 2017, 21, 7221–7234.

- Jatoth, C.; Gangadharan, G.R.; Fiore, U.; Buyya, R. SELCLOUD: A hybrid multi-criteria decision-making model for selection of cloud services. Soft Comput. 2019, 23, 4701–4715.

| S. No. | Services | Attribute 1 | Attribute 2 | Feedback (1–10) |
|---|---|---|---|---|
| 1. | S1 | 100 | 10 | 7 |
| 2. | S2 | 110 | 6 | 3 |
| 3. | S3 | 90 | 8 | 5 |
| 4. | S4 | 95 | 7 | 6 |
| 5. | S5 | 105 | 9 | 4 |
| 6. | S6 | 115 | 12 | 9 |
| 7. | S7 | 80 | 5 | 8 |
| 8. | S8 | 92 | 7.5 | 7 |
| 9. | S9 | 102 | 8 | 5 |
| 10. | S10 | 85 | 6.5 | 4 |

| S. No. | Services | Attribute 1 | Attribute 2 | Feedback (1–10) |
|---|---|---|---|---|
| 1. | S1 | 100 | 10 | 7 |
| 2. | S2 | 110 | 6 | 3 |
| 3. | S5 | 105 | 9 | 4 |
| 4. | S9 | 102 | 8 | 5 |

| S. No. | Services | Attribute 1 | Attribute 2 | Fitness Function | Feedback (1–10) | Fitness Function |
|---|---|---|---|---|---|---|
| 1. | S1 | 100 | 10 | | 7 | |
| 2. | S2 | 110 | 6 | | 3 | |
| 3. | S5 | 105 | 9 | | 4 | |
| 4. | S9 | 102 | 8 | | 5 | |
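The fitness values are missing from this table, so the following is only one plausible way to score the filtered services, not the paper's fitness function: a weighted sum of min-max normalised criteria (simple additive weighting). It assumes, hypothetically, that Attribute 1 is a cost criterion (lower is better), that Attribute 2 and feedback are benefit criteria, and that all three carry equal weight. Under these assumptions the scores order the services S1, S9, S5, S2, the same order as the ranking reported in the example.

```python
def normalise(values, benefit):
    """Min-max normalise to [0, 1]; for cost criteria, lower raw values score higher."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [1.0] * len(values)
    if benefit:
        return [(v - lo) / (hi - lo) for v in values]
    return [(hi - v) / (hi - lo) for v in values]


def fitness_scores(rows, weights, benefit):
    """rows: name -> tuple of criterion values. Returns name -> weighted score."""
    names = list(rows)
    columns = list(zip(*(rows[n] for n in names)))  # one tuple per criterion
    norm_cols = [normalise(list(col), b) for col, b in zip(columns, benefit)]
    return {
        name: sum(w * col[i] for w, col in zip(weights, norm_cols))
        for i, name in enumerate(names)
    }


if __name__ == "__main__":
    # (Attribute 1, Attribute 2, feedback) for the filtered services.
    rows = {"S1": (100, 10, 7), "S2": (110, 6, 3), "S5": (105, 9, 4), "S9": (102, 8, 5)}
    scores = fitness_scores(rows, weights=(1/3, 1/3, 1/3), benefit=(False, True, True))
    for name in sorted(scores, key=scores.get, reverse=True):  # order: S1, S9, S5, S2
        print(name, round(scores[name], 3))
```

Changing the criterion directions or weights can change the ordering, so the agreement with the reported ranks should be read as a property of these assumptions only.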

| S. No. | Services | Attribute 1 | Attribute 2 | Rank by Fitness Function |
|---|---|---|---|---|
| 1. | S1 | 100 | 10 | 1 |
| 2. | S2 | 110 | 6 | 4 |
| 3. | S5 | 105 | 9 | 3 |
| 4. | S9 | 102 | 8 | 2 |
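The selection and recommendation phase can then read the result off the ranks. A minimal sketch, assuming (hypothetically) that the rank-1 service is selected and the next `k` ranked services are returned as recommendations; the function name and `k` are illustrative.

```python
def select_and_recommend(ranks, k=2):
    """ranks: name -> rank (1 = best). Returns (selected, recommendations)."""
    if not ranks:
        raise ValueError("no candidate services to select from")
    ordered = sorted(ranks, key=ranks.get)  # best rank first
    return ordered[0], ordered[1:1 + k]


if __name__ == "__main__":
    ranks = {"S1": 1, "S2": 4, "S5": 3, "S9": 2}  # ranks from the example table
    print(select_and_recommend(ranks))  # ('S1', ['S9', 'S5'])
```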

| Candidate Services | AHP | ENS-SS | BWM | CS-SR |
|---|---|---|---|---|
| 10 | 0.85 | 0.8 | 0.7 | 0.5 |
| 20 | 1.4 | 1 | 0.9 | 0.7 |
| 30 | 1.7 | 1.2 | 1 | 0.8 |
| 40 | 2 | 1.4 | 1.2 | 1 |
| 50 | 2.4 | 1.7 | 1.5 | 1.2 |
| 60 | 2.5 | 1.9 | 1.7 | 1.4 |
| 70 | 2.8 | 2.1 | 1.9 | 1.7 |
| 80 | 3.1 | 2.4 | 2.2 | 1.9 |
| 90 | 3.3 | 2.7 | 2.4 | 2.1 |
| 100 | 3.6 | 2.9 | 2.7 | 2.4 |
| 110 | 3.8 | 3 | 2.9 | 2.6 |
| 120 | 4 | 3.2 | 3.1 | 2.8 |
| 130 | 4.2 | 3.5 | 3.3 | 3.1 |
| 140 | 4.4 | 3.7 | 3.6 | 3.2 |
| 150 | 4.6 | 4 | 3.9 | 3.5 |
| 160 | 4.7 | 4.2 | 4.1 | 3.7 |

| Candidate Services | AHP | ENS-SS | BWM | CS-SR |
|---|---|---|---|---|
| 10 | 1.3 | 0.9 | 0.7 | 0.5 |
| 20 | 1.7 | 1.1 | 0.9 | 0.7 |
| 30 | 1.9 | 1.3 | 1.1 | 0.9 |
| 40 | 2.3 | 1.6 | 1.4 | 1.1 |
| 50 | 2.6 | 1.9 | 1.7 | 1.3 |
| 60 | 2.9 | 2.2 | 1.9 | 1.6 |
| 70 | 3.1 | 2.6 | 2.1 | 1.8 |
| 80 | 3.4 | 2.9 | 2.5 | 2.1 |
| 90 | 3.6 | 3.2 | 2.8 | 2.4 |
| 100 | 3.8 | 3.4 | 2.9 | 2.6 |
| 110 | 4.2 | 3.7 | 3.1 | 2.8 |
| 120 | 4.4 | 3.9 | 3.3 | 2.9 |
| 130 | 4.7 | 4.2 | 3.6 | 3.2 |
| 140 | 5 | 4.6 | 4 | 3.6 |
| 150 | 5.3 | 4.9 | 4.4 | 3.8 |
| 160 | 5.6 | 5.3 | 4.7 | 4.1 |

| Method | M = 2 | M = 5 |
|---|---|---|
| AHP | 49.35 | 55.8 |
| ENS-SS | 39.7 | 47.7 |
| BWM | 37.1 | 41.1 |
| CS-SR | 32.6 | 35.4 |
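The totals in this table are the column sums of the two preceding tables indexed by candidate services (10 to 160): the M = 2 column equals the sums of the first of those tables and the M = 5 column the sums of the second. The sketch below recomputes those sums and the relative reduction of CS-SR against each baseline. Pairing each per-size table with a value of M is an inference from this arithmetic, and the dictionary names are illustrative.

```python
import math

# Per-size values copied from the two tables indexed by candidate services (10..160).
M2 = {
    "AHP": [0.85, 1.4, 1.7, 2, 2.4, 2.5, 2.8, 3.1, 3.3, 3.6, 3.8, 4, 4.2, 4.4, 4.6, 4.7],
    "ENS-SS": [0.8, 1, 1.2, 1.4, 1.7, 1.9, 2.1, 2.4, 2.7, 2.9, 3, 3.2, 3.5, 3.7, 4, 4.2],
    "BWM": [0.7, 0.9, 1, 1.2, 1.5, 1.7, 1.9, 2.2, 2.4, 2.7, 2.9, 3.1, 3.3, 3.6, 3.9, 4.1],
    "CS-SR": [0.5, 0.7, 0.8, 1, 1.2, 1.4, 1.7, 1.9, 2.1, 2.4, 2.6, 2.8, 3.1, 3.2, 3.5, 3.7],
}
M5 = {
    "AHP": [1.3, 1.7, 1.9, 2.3, 2.6, 2.9, 3.1, 3.4, 3.6, 3.8, 4.2, 4.4, 4.7, 5, 5.3, 5.6],
    "ENS-SS": [0.9, 1.1, 1.3, 1.6, 1.9, 2.2, 2.6, 2.9, 3.2, 3.4, 3.7, 3.9, 4.2, 4.6, 4.9, 5.3],
    "BWM": [0.7, 0.9, 1.1, 1.4, 1.7, 1.9, 2.1, 2.5, 2.8, 2.9, 3.1, 3.3, 3.6, 4, 4.4, 4.7],
    "CS-SR": [0.5, 0.7, 0.9, 1.1, 1.3, 1.6, 1.8, 2.1, 2.4, 2.6, 2.8, 2.9, 3.2, 3.6, 3.8, 4.1],
}
# Totals as reported in the summary table: (M = 2, M = 5).
REPORTED = {
    "AHP": (49.35, 55.8), "ENS-SS": (39.7, 47.7),
    "BWM": (37.1, 41.1), "CS-SR": (32.6, 35.4),
}


def totals(table):
    # math.fsum avoids accumulating floating-point rounding error.
    return {method: math.fsum(values) for method, values in table.items()}


def reduction_pct(baseline, ours):
    """Percentage by which `ours` is lower than `baseline`."""
    return 100.0 * (baseline - ours) / baseline


if __name__ == "__main__":
    t2, t5 = totals(M2), totals(M5)
    for method, (r2, r5) in REPORTED.items():
        assert math.isclose(t2[method], r2) and math.isclose(t5[method], r5)
    for method in ("AHP", "ENS-SS", "BWM"):
        print(method, round(reduction_pct(t2[method], t2["CS-SR"]), 1),
              round(reduction_pct(t5[method], t5["CS-SR"]), 1))
```

On these totals, CS-SR is lower by about 34% and 37% than AHP, 18% and 26% than ENS-SS, and 12% and 14% than BWM, for M = 2 and M = 5 respectively.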

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Sirohi, P.; Al-Wesabi, F.N.; Alshahrani, H.M.; Maheshwari, P.; Agarwal, A.; Dewangan, B.K.; Hilal, A.M.; Choudhury, T. Energy-Efficient Cloud Service Selection and Recommendation Based on QoS for Sustainable Smart Cities. Appl. Sci. 2021, 11, 9394. https://doi.org/10.3390/app11209394
