Concrete Crack Detection Based on Well-Known Feature Extractor Model and the YOLO_v2 Network

Abstract

1. Introduction

2. Methods

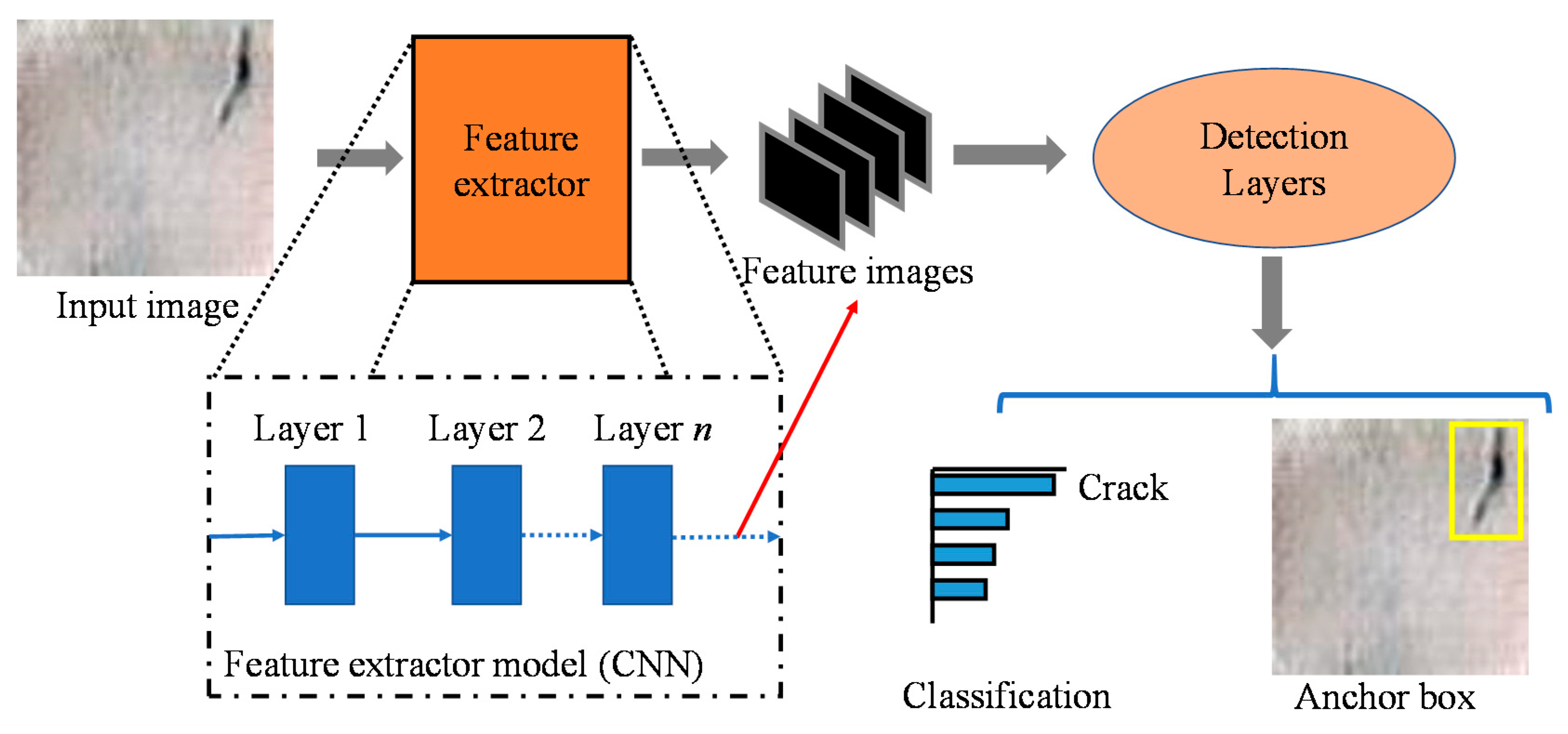

2.1. YOLO_v2

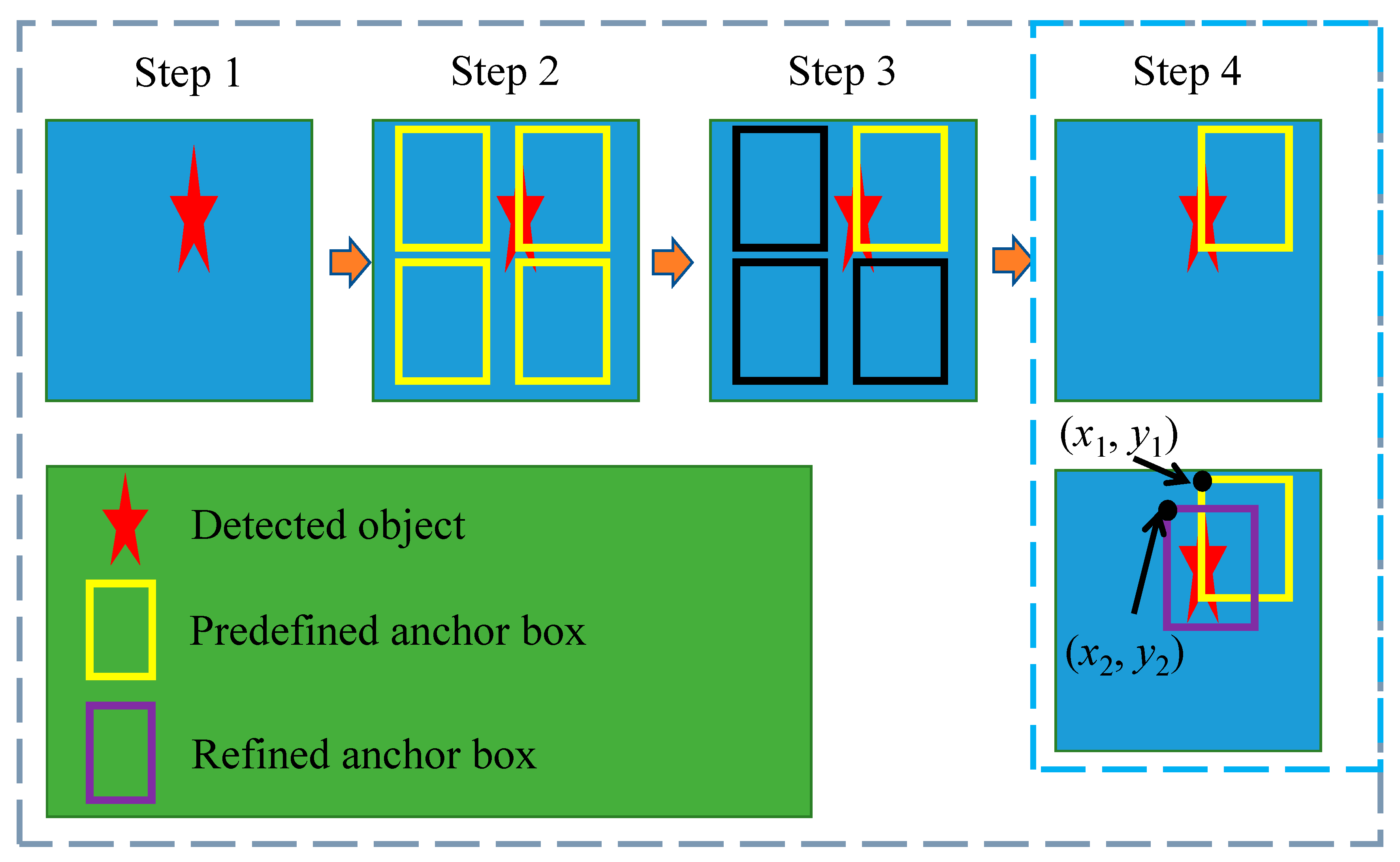

- Step 1: Obtained feature images from the feature extractor;

- Step 2: The predefined anchor boxes were tiled across the image;

- Step 3: To generate the final object detections, tiled anchor boxes that belonged to the background class were removed;

- Step 4: The location error (the distance between refined and predefined anchor box) was gradually reduced by minimizing the loss function. As shown in Figure 2, the upper left coordinates of the predefined anchor box were (x1, y1), and the refined anchor box was (x2, y2). During training, the precise location of the anchor box was obtained by minimizing the loss function (squared error loss, SEL):

2.2. Transfer Learning-Based Feature Extractors

2.3. Experimental Setup and Performance Evaluation

3. Results and Discussion

3.1. Crack Detection Results of the YOLO_v2

3.2. Parametric Study

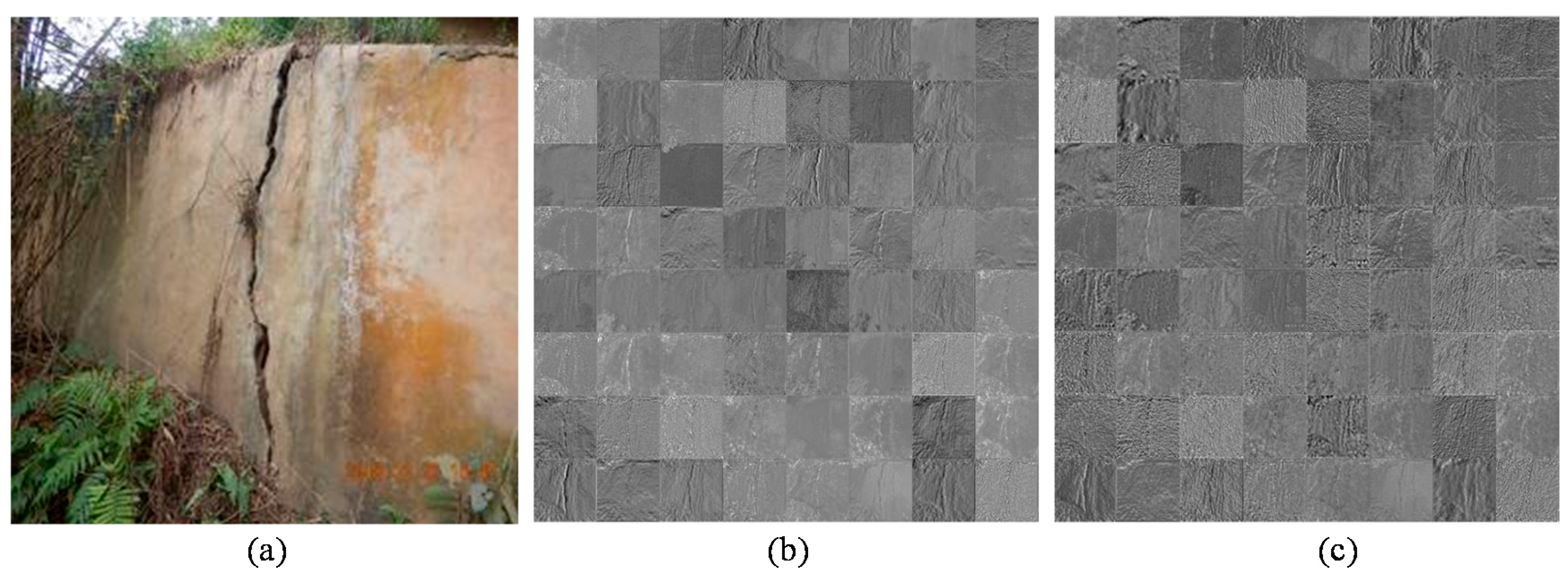

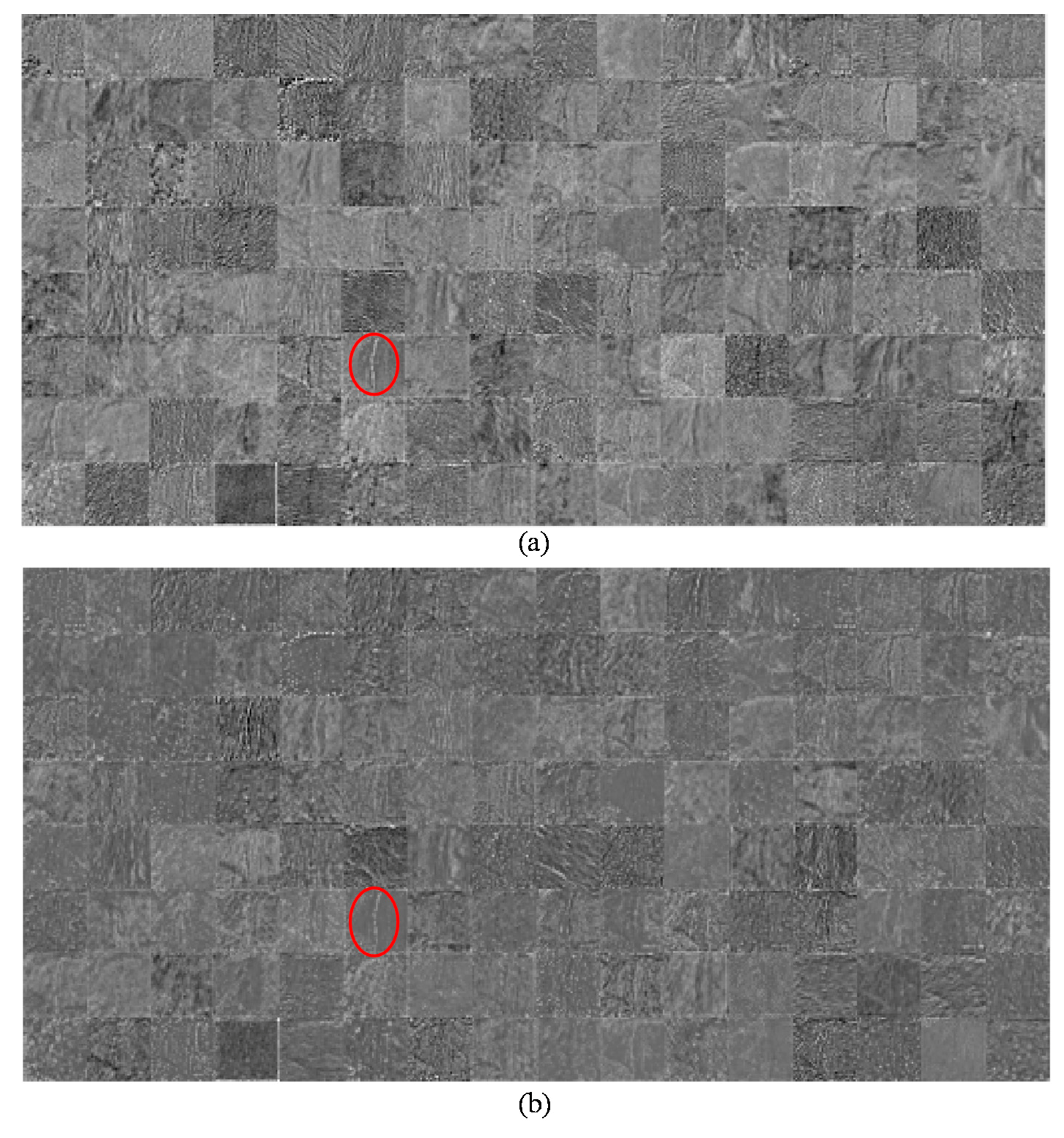

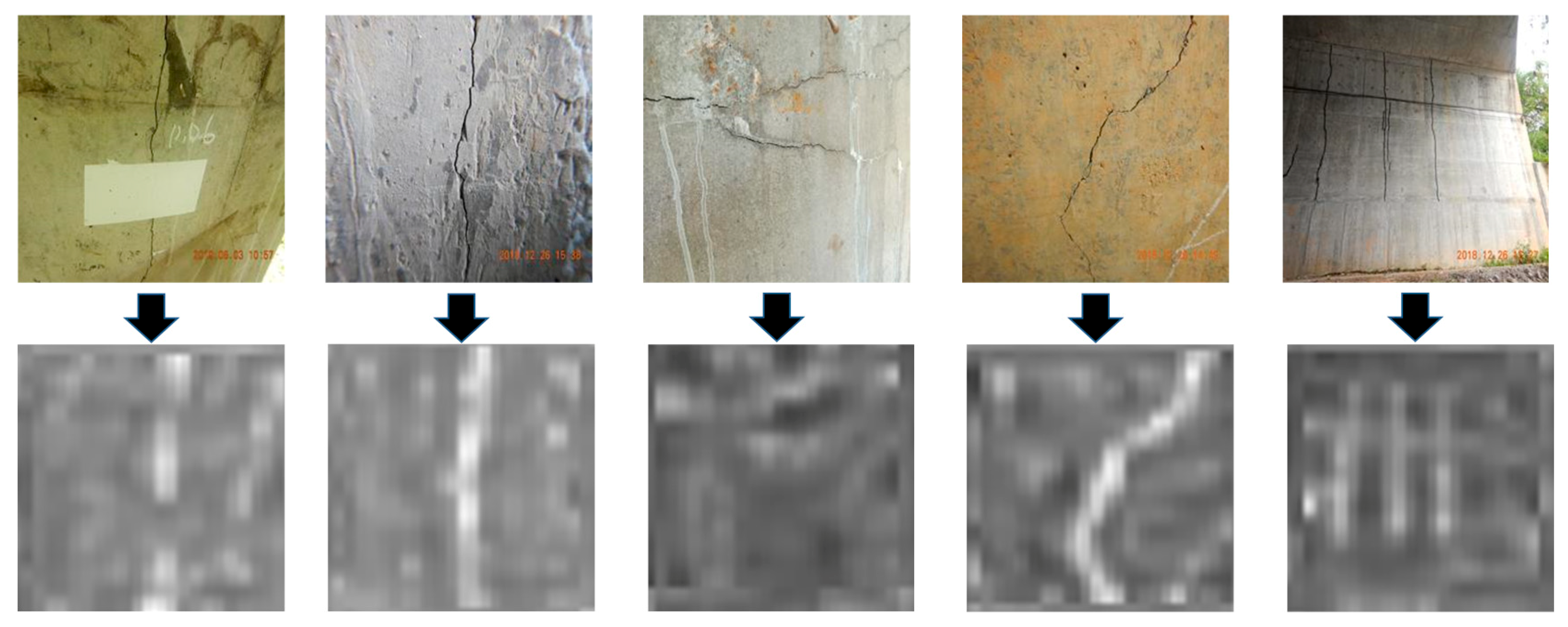

3.3. Visualization of Features

4. Conclusions

- The comparison of 11 well-known network models indicated that the ‘resnet18’ has a high precision (AP = 0.89) and fast computing speed.

- Influence of relevant parameters on detection results:

- (a)

- Once the detection precision is stable, there is no need to increase the epoch number, which will increase the computing cost.

- (b)

- An appropriate selection of the feature extraction layer can help to improve the detection results. Too shallow or too deep layers can also lead to unsatisfactory detection results.

- (c)

- The detection precision increases with the resolution of the images used; but once it reaches the optimal value, it is meaningless to further increase the image resolution, as it means more detection time.

- In the process of feature visualization, the feature extractor can extract effective crack features and confirms the conclusion of 2 (b).

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Koch, C.; Georgieva, K.; Kasireddy, V.; Akinci, B.; Fieguth, P. A review on computer vision based defect detection and condition assessment of concrete and asphalt civil infrastructure. Adv. Eng. Inform. 2015, 29, 196–210. [Google Scholar] [CrossRef]

- Prakash, G.; Narasimhan, S.; Al-Hammoud, R. A two-phase model to predict the remaining useful life of corroded reinforced concrete beams. J. Civil Struct. Health Monit. 2019, 9, 183–199. [Google Scholar] [CrossRef]

- Xu, J.; Gui, C.; Han, Q. Recognition of rust grade and rust ratio of steel structures based on ensembled convolutional neural network. Comput. Aided Civil Infrastruct. Eng. 2020, 35, 1160–1174. [Google Scholar] [CrossRef]

- Cha, Y.J.; Choi, W.; Suh, G.; Mahmoudkhani, S.; Buyukozturk, O. Autonomous Structural Visual Inspection Using Region-Based Deep Learning for Detecting Multiple Damage Types. Comput. Aided Civil Infrastruct. Eng. 2018, 33, 731–747. [Google Scholar] [CrossRef]

- Li, G.; Ren, X.; Qiao, W.; Ma, B.; Li, Y. Automatic bridge crack identification from concrete surface using ResNeXt with postprocessing. Struct. Control Health Monit. 2020, 27, e2620. [Google Scholar] [CrossRef]

- Zhang, C.; Chang, C.C.; Jamshidi, M. Concrete bridge surface damage detection using a single-stage detector. Comput. Aided Civil Infrastruct. Eng. 2019, 35, 389–409. [Google Scholar] [CrossRef]

- Shi, Y.; Cui, L.; Qi, Z.; Meng, F.; Chen, Z. Automatic Road Crack Detection Using Random Structured Forests. IEEE Trans. Intell. Transp. Syst. 2016, 17, 3434–3445. [Google Scholar] [CrossRef]

- Jain, A.K.; Mao, J.; Mohiuddin, K.M. Artificial Neural Networks: A Tutorial. Computer 2015, 29, 31–44. [Google Scholar] [CrossRef]

- Wang, L.; Zhuang, L.; Zhang, Z. Automatic Detection of Rail Surface Cracks with a Superpixel-Based Data-Driven Framework. J. Comput. Civil Eng. 2019, 33, 04018053. [Google Scholar] [CrossRef]

- Hoang, N.D. An Artificial Intelligence Method for Asphalt Pavement Pothole Detection Using Least Squares Support Vector Machine and Neural Network with Steerable Filter-Based Feature Extraction. Adv. Civil Eng. 2018, 4, 1–12. [Google Scholar] [CrossRef]

- Yao, X. Evolutionary Artificial Neural Networks. Int. J. Neural Syst. 1993, 4, 203–222. [Google Scholar] [CrossRef]

- Lin, Y.Z.; Nie, Z.H.; Ma, H.W. Structural Damage Detection with Automatic Feature extraction through Deep Learning. Comput. Aided Civil Infrastruct. Eng. 2017, 32, 1–22. [Google Scholar] [CrossRef]

- Zhong, K.; Teng, S.; Liu, G.; Chen, G.; Cui, F. Structural Damage Features Extracted by Convolutional Neural Networks from Mode Shapes. Appl. Sci. 2020, 10, 4247. [Google Scholar] [CrossRef]

- Lecun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436. [Google Scholar] [CrossRef] [PubMed]

- Tang, Z.; Chen, Z.; Bao, Y.; Li, H. Convolutional neural network-based data anomaly detection method using multiple information for structural health monitoring. Struct. Control Health Monit. 2019, 26, e2296. [Google Scholar] [CrossRef]

- Cha, Y.; Choi, W.; Büyüköztürk, O. Deep Learning-Based Crack Damage Detection Using Convolutional Neural Networks. Comput. Aided Civil Infrastruct. Eng. 2017, 32, 361–378. [Google Scholar] [CrossRef]

- Rafiei, M.H.; Adeli, H. A novel machine learning-based algorithm to detect damage in high-rise building structures. Struct. Des. Tall Spec. Build. 2017, 26, e1400. [Google Scholar] [CrossRef]

- Teng, S.; Chen, G.; Gong, P.; Liu, G.; Cui, F. Structural damage detection using convolutional neural networks combining strain energy and dynamic response. Meccanica 2019, 55, 945–959. [Google Scholar] [CrossRef]

- Teng, S.; Chen, G.; Liu, G.; Lv, J.; Cui, F. Modal Strain Energy-Based Structural Damage Detection Using Convolutional Neural Networks. Appl. Sci. 2019, 9, 3376. [Google Scholar] [CrossRef]

- Gao, Y.; Mosalam, K.M. Deep Transfer Learning for Image-Based Structural Damage Recognition. Comput. Aided Civil Infrastruct. Eng. 2018, 33, 748–768. [Google Scholar] [CrossRef]

- Kumar, S.S.; Abraham, D.M.; Jahanshahi, M.R.; Iseley, T.; Starr, J. Automated defect classification in sewer closed circuit television inspections using deep convolutional neural networks. Autom. Constr. 2018, 91, 273–283. [Google Scholar] [CrossRef]

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet Classification with Deep Convolutional Neural Networks. In Proceedings of the International Conference on Neural Information Processing Systems, Lake Tahoe, NV, USA, 3–6 December 2012; pp. 1097–1105. [Google Scholar]

- Zhang, A.; Wang, K.C.P.; Li, B.; Yang, E.; Dai, X.; Peng, Y.; Fei, Y.; Liu, Y.; Li, J.Q.; Chen, C. Automated Pixel-Level Pavement Crack Detection on 3D Asphalt Surfaces Using a Deep-Learning Network. Comput. Aided Civil Infrastruct. Eng. 2017, 32, 805–819. [Google Scholar] [CrossRef]

- Li, B.; Wang, K.C.P.; Zhang, A.; Yang, E.; Wang, G. Automatic classification of pavement crack using deep convolutional neural network. Int. J. Pavement Eng. 2020, 21, 457–463. [Google Scholar] [CrossRef]

- Hiroya, M.; Yoshihide, S.; Toshikazu, S.; Takehiro, K.; Hiroshi, O. Road Damage Detection and Classification Using Deep Neural Networks with Smartphone Images. Comput. Aided Civil Infrastruct. Eng. 2018, 33, 1127–1141. [Google Scholar]

- Kaur, T.; Gandhi, T.K. Automated Brain Image Classification Based on VGG-16 and Transfer Learning. In Proceedings of the 2019 International Conference on Information Technology (ICIT), Bhubaneswar, India, 19–21 December 2019. [Google Scholar]

- Liang, Y.; Bing, L.; Wei, L.; Liu, Z.; Xiao, J. Deep Concrete Inspection Using Unmanned Aerial Vehicle Towards CSSC Database. In Proceedings of the 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems, Vancouver, BC, Canada, 24–28 September 2017; pp. 24–28. [Google Scholar]

- Yeum, C.M.; Dyke, S.J.; Ramirez, J. Visual data classification in post-event building reconnaissance. Eng. Struct. 2018, 155, 16–24. [Google Scholar] [CrossRef]

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149. [Google Scholar] [CrossRef]

- Xu, Y.; Wei, S.; Bao, Y.; Li, H. Automatic seismic damage identification of reinforced concrete columns from images by a region-based deep convolutional neural network. Struct. Control Health Monit. 2019, 26, e2313. [Google Scholar] [CrossRef]

- Liang, X. Image-based post-disaster inspection of reinforced concrete bridge systems using deep learning with Bayesian optimization. Comput. Aided Civil Infrastruct. Eng. 2018, 3, 112–119. [Google Scholar] [CrossRef]

- Tran, V.P.; Tran, T.S.; Lee, H.J.; Kim, K.D.; Baek, J.; Nguyen, T.T. One stage detector (RetinaNet)-based crack detection for asphalt pavements considering pavement distresses and surface objects. J. Civil Struct. Health Monit. 2020. [Google Scholar] [CrossRef]

- Lattanzi, D.; Miller, G. Review of Robotic Infrastructure Inspection Systems. J. Infrastruct. Syst. 2017, 23, 04017004. [Google Scholar] [CrossRef]

- Yin, X.; Chen, Y.; Bouferguene, A.; Zaman, H.; Kurach, L. A deep learning-based framework for an automated defect detection system for sewer pipes. Autom. Constr. 2019, 109, 102967. [Google Scholar] [CrossRef]

- Liu, J.; Yang, X.; Lau, S.; Wang, X.; Luo, S.; Lee, V.C.-S.; Ding, L. Automated pavement crack detection and segmentation based on two-step convolutional neural network. Comput. Aided Civil Infrastruct. Eng. 2020, 35, 1291–1305. [Google Scholar] [CrossRef]

- Li, R.; Yuan, Y.; Zhang, W.; Yuan, Y. Unified Vision-Based Methodology for Simultaneous Concrete Defect Detection and Geolocalization. Comput. Aided Civil Infrastruct. Eng. 2018, 33, 527–544. [Google Scholar] [CrossRef]

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788. [Google Scholar]

- Russakovsky, O.; Deng, J.; Su, H.; Krause, J.; Satheesh, S.; Ma, S.; Huang, Z.; Karpathy, A.; Khosla, A.; Bernstein, M. ImageNet Large Scale Visual Recognition Challenge. Int. J. Comput. Vis. 2015, 115, 211–252. [Google Scholar] [CrossRef]

- Available online: http://www.mathworks.com/ (accessed on 14 December 2020).

- Mandt, S.; Hoffman, M.D.; Blei, D.M. Stochastic Gradient Descent as Approximate Bayesian Inference. J. Mach. Learn. Res. 2017, 18, 1–35. [Google Scholar]

| Network | TN1 | TN2 | TN3 | TN4 | TN5 | TN6 | TN7 | TN8 | TN9 | TN10 | TN11 |

|---|---|---|---|---|---|---|---|---|---|---|---|

| Depth (layers) | 8 | 22 | 53 | 48 | 18 | 18 | 50 | 101 | 16 | 19 | 164 |

| Model size (MB) | 227 | 27 | 13 | 89 | 4.6 | 44 | 96 | 167 | 515 | 535 | 209 |

| Network | Feature Extraction Layer | Network | Feature Extraction Layer |

|---|---|---|---|

| TN1 | ‘relu5’ | TN7 | ‘activation_40_relu’ |

| TN2 | ‘inception_4d-output’ | TN8 | ‘res4b22_relu’ |

| TN3 | ‘block_13_expand_relu’ | TN9 | ‘relu5_3’ |

| TN4 | ‘mixed7’ | TN10 | ‘relu5_4’ |

| TN5 | ‘fire5-concat’ | TN11 | ‘block17_20_ac’ |

| TN6 | ‘res4b_relu’ |

| Models | Detection Precision (AP) | Computational Cost (s) |

|---|---|---|

| alexnet | 0 | 2,266 |

| googlenet | 0.84 | 4,451 |

| mobilenetv2 | 0.87 | 8,763 |

| inceptionv3 | 0.16 | 9,720 |

| squeezenet | 0.60 | 2,873 |

| resnet18 | 0.89 | 3,299 |

| resnet50 | 0.02 | 10,321 |

| resnet101 | 0.73 | 17,457 |

| vgg16 | 0 | 13,580 |

| vgg19 | 0.23 | 15,719 |

| inceptionresnetv2 | 0.86 | 22,523 |

| Layers | Detection Precision (AP) | Computational Cost (s) |

|---|---|---|

| L1 | 0.18 | 2411 |

| L2 | 0.40 | 2558 |

| L3 | 0.82 | 2637 |

| L4 | 0.80 | 2767 |

| L5 | 0.86 | 2944 |

| L6 | 0.89 | 3299 |

| L7 | 0.87 | 3739 |

| L8 | 0 | 4155 |

| Image Size | Detection Precision (AP) | FPS |

|---|---|---|

| 32 × 32 | 0 | 20 |

| 64 × 64 | 0.15 | 17 |

| 128 × 128 | 0.81 | 14 |

| 256 × 256 | 0.88 | 13 |

| 512 × 512 | 0.88 | 11 |

| 1024 × 1024 | 0.89 | 10 |

| 2048 × 2048 | 0.88 | 5 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Teng, S.; Liu, Z.; Chen, G.; Cheng, L. Concrete Crack Detection Based on Well-Known Feature Extractor Model and the YOLO_v2 Network. Appl. Sci. 2021, 11, 813. https://doi.org/10.3390/app11020813

Teng S, Liu Z, Chen G, Cheng L. Concrete Crack Detection Based on Well-Known Feature Extractor Model and the YOLO_v2 Network. Applied Sciences. 2021; 11(2):813. https://doi.org/10.3390/app11020813

Chicago/Turabian StyleTeng, Shuai, Zongchao Liu, Gongfa Chen, and Li Cheng. 2021. "Concrete Crack Detection Based on Well-Known Feature Extractor Model and the YOLO_v2 Network" Applied Sciences 11, no. 2: 813. https://doi.org/10.3390/app11020813

APA StyleTeng, S., Liu, Z., Chen, G., & Cheng, L. (2021). Concrete Crack Detection Based on Well-Known Feature Extractor Model and the YOLO_v2 Network. Applied Sciences, 11(2), 813. https://doi.org/10.3390/app11020813