Equal Baseline Camera Array—Calibration, Testbed and Applications

Abstract

1. Introduction

2. Related Work

2.1. 3D Vision Technologies

- Structured-light 3D scanners scan a 3D shape by emitting precisely defined light patterns, such as stripes, and recording the distortions of the light on objects [14].

- Light Detection and Ranging (LIDAR) measures distances to a series of distant points by aiming a laser in different directions [15].

- Time-of-flight (TOF) cameras operate on a principle similar to LIDAR; however, instead of redirecting a measuring laser, they measure all distances using a single light beam [16].

- Stereo cameras perform 3D imaging in a way that resembles vision with a pair of eyes. They record the relative differences between the locations of objects in images taken by the two constituent cameras. The size of these disparities depends on the distance between the stereo camera and the objects in its field of view: the closer the object, the greater the disparity [3].

- A camera array can be used in highly illuminated areas. Structured-light 3D scanners cannot be used in intense natural light because external light sources interfere with the measurement performed by this kind of sensor.

- An array provides dense data on the distances to the parts of objects visible in 3D space, in contrast to LIDARs and TOF cameras, which can provide only sparse depth maps.

- Camera arrays can be flexibly used to measure both small and large distances, depending on the size of the cameras used and the types of their lenses. This flexibility is very limited in other kinds of 3D imaging devices.

- 3D imaging with an array does not require relocating the imaging device to different positions. Such relocation is necessary when structure from motion or multi-view stereo (MVS) is used.

- An array is a compact device which can be inexpensive if low-cost cameras are used.

- The weight of an array constructed from small cameras is low; therefore, it can be mounted on moving parts of an autonomous robot (e.g., robotic arms) without putting much load on the servos or other mechanisms driving the robot.
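The inverse relation between disparity and distance noted above for stereo cameras can be illustrated with a short sketch. It assumes an idealized, rectified pinhole stereo pair, for which the distance is Z = f · B / d. The function name, the focal length of 500 px and the baseline of 0.1 m are hypothetical values chosen for illustration only; they are not parameters of the array described in this paper.

```python
import math


def depth_from_disparity(disparity_px, focal_px, baseline_m):
    """Distance Z = f * B / d for a rectified stereo pair (pinhole model).

    disparity_px: disparity d in pixels (must be positive)
    focal_px:     focal length f in pixels (assumed, hypothetical)
    baseline_m:   baseline B in metres (assumed, hypothetical)
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


if __name__ == "__main__":
    # Hypothetical camera: 500 px focal length, 0.1 m baseline.
    for d in (5, 10, 20):
        z = depth_from_disparity(d, focal_px=500.0, baseline_m=0.1)
        print(f"disparity {d:2d} px -> distance {z:.2f} m")
```

Doubling the disparity halves the computed distance, which is the sense in which closer objects produce greater disparities.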

Camera Arrays

2.2. Testbeds

2.3. Stereo Matching Algorithms

2.4. Calibration Methods

2.4.1. The Math behind OpenCV’s Calibration

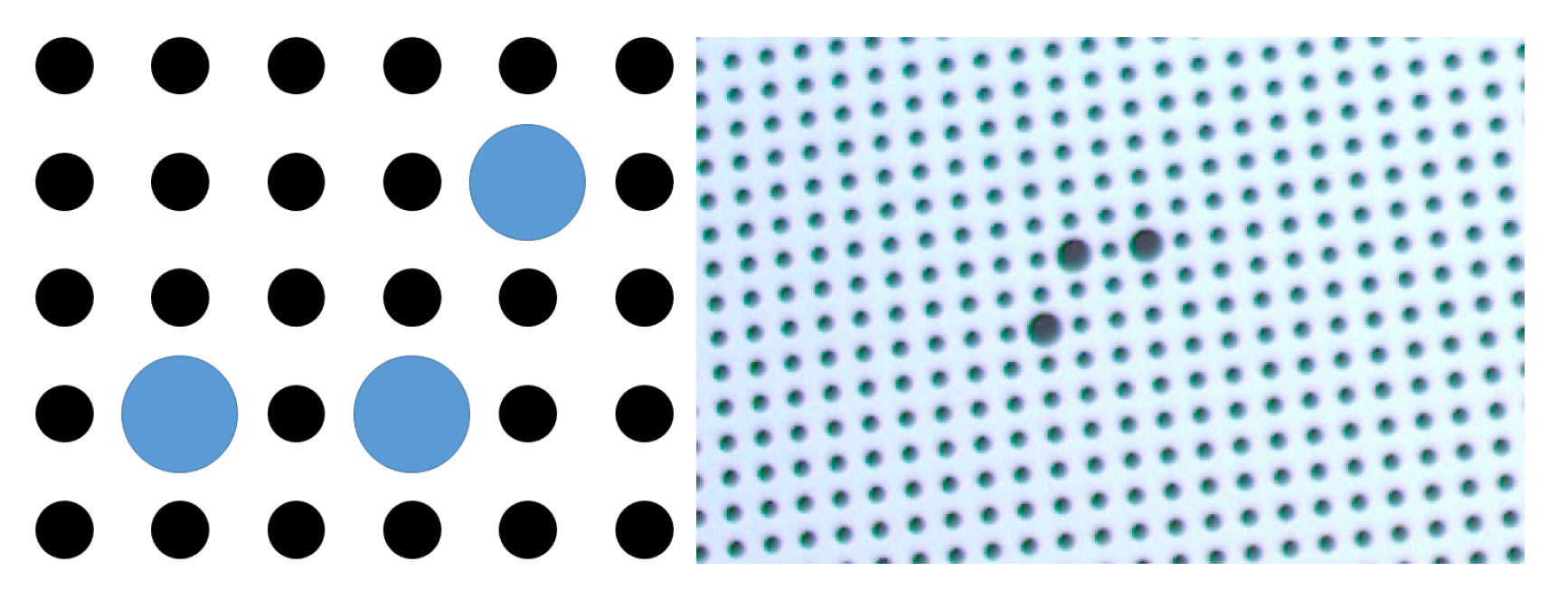

2.4.2. AIT Pattern vs. OpenCV’s Checkerboard

2.4.3. Other Patterns

2.4.4. Multi-Camera Calibration

2.5. Self-Calibration

3. Equal Baseline Camera Array

3.1. Exceptions Excluding Merging Method

4. Calibration of the Camera Array

4.1. Pairwise Calibration

- right camera

- The right camera and the central camera form a standard stereo camera; therefore, neither rotation nor flipping is needed. Thus, in this case the transformation is the identity.

- up camera

- Images from the pair consisting of the up camera and the central camera were first rotated counterclockwise and then flipped around the y-axis. The matrix defining the transformation for the pair formed with the up camera is given in Equation (5). The center of the image has to be taken into account in this matrix because the point (0,0) is not located in the middle of the image but in its top-left corner, which affects the transformation matrices. The matrix was also calculated under the convention that the coordinates of points in an image increase in the Y dimension from top to bottom, unlike in the commonly used Cartesian coordinate system.

- left camera

- Images from the left camera and the corresponding images from the central camera were flipped around the y-axis. Therefore, the transformation for this pair consists of the flip alone.

- bottom camera

- For the stereo camera consisting of the bottom camera and the central one, images were rotated counterclockwise.
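The four cases above can be sketched as 3 × 3 homogeneous matrices acting on pixel coordinates. This is only an illustration under stated assumptions, not a reproduction of Equation (5): the rotation angle is assumed to be 90°, both the rotation and the flip are assumed to be taken about the image center (cx, cy), and all function names are hypothetical. The origin is the top-left corner and Y grows downwards, as described in the text.

```python
def matmul3(a, b):
    """Product of two 3x3 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def identity():
    return [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]


def flip_about_y(cx):
    """Mirror about the vertical line through the center: x' = 2*cx - x."""
    return [[-1.0, 0.0, 2.0 * cx], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]


def rotate_ccw_90(cx, cy):
    """Rotate 90 degrees counterclockwise (as seen on screen) about the center.

    With Y growing downwards: x' = cx + (y - cy), y' = cy - (x - cx).
    The 90 degree angle is an assumption of this sketch.
    """
    return [[0.0, 1.0, cx - cy], [-1.0, 0.0, cx + cy], [0.0, 0.0, 1.0]]


def pair_transform(side, cx, cy):
    """Transformation for the pair formed by a side camera and the central one."""
    if side == "right":
        return identity()                 # standard stereo pair
    if side == "up":
        # rotate first, then flip: the flip matrix multiplies from the left
        return matmul3(flip_about_y(cx), rotate_ccw_90(cx, cy))
    if side == "left":
        return flip_about_y(cx)
    if side == "bottom":
        return rotate_ccw_90(cx, cy)
    raise ValueError(f"unknown side camera: {side}")


def apply(m, x, y):
    """Map pixel (x, y) through the affine part of matrix m."""
    return (m[0][0] * x + m[0][1] * y + m[0][2],
            m[1][0] * x + m[1][1] * y + m[1][2])


if __name__ == "__main__":
    cx, cy = 2.0, 2.0  # center of a hypothetical 5 x 5 image
    for side in ("right", "up", "left", "bottom"):
        print(side, apply(pair_transform(side, cx, cy), 3.0, 2.0))
```

Because the translation terms depend on cx and cy, the matrices differ from the textbook origin-centered forms, which is the effect of the top-left origin mentioned above. For non-square images a rotation about the center changes the frame dimensions, so a real implementation would also need to adjust the output size.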

4.2. Self-Calibrating Baselines

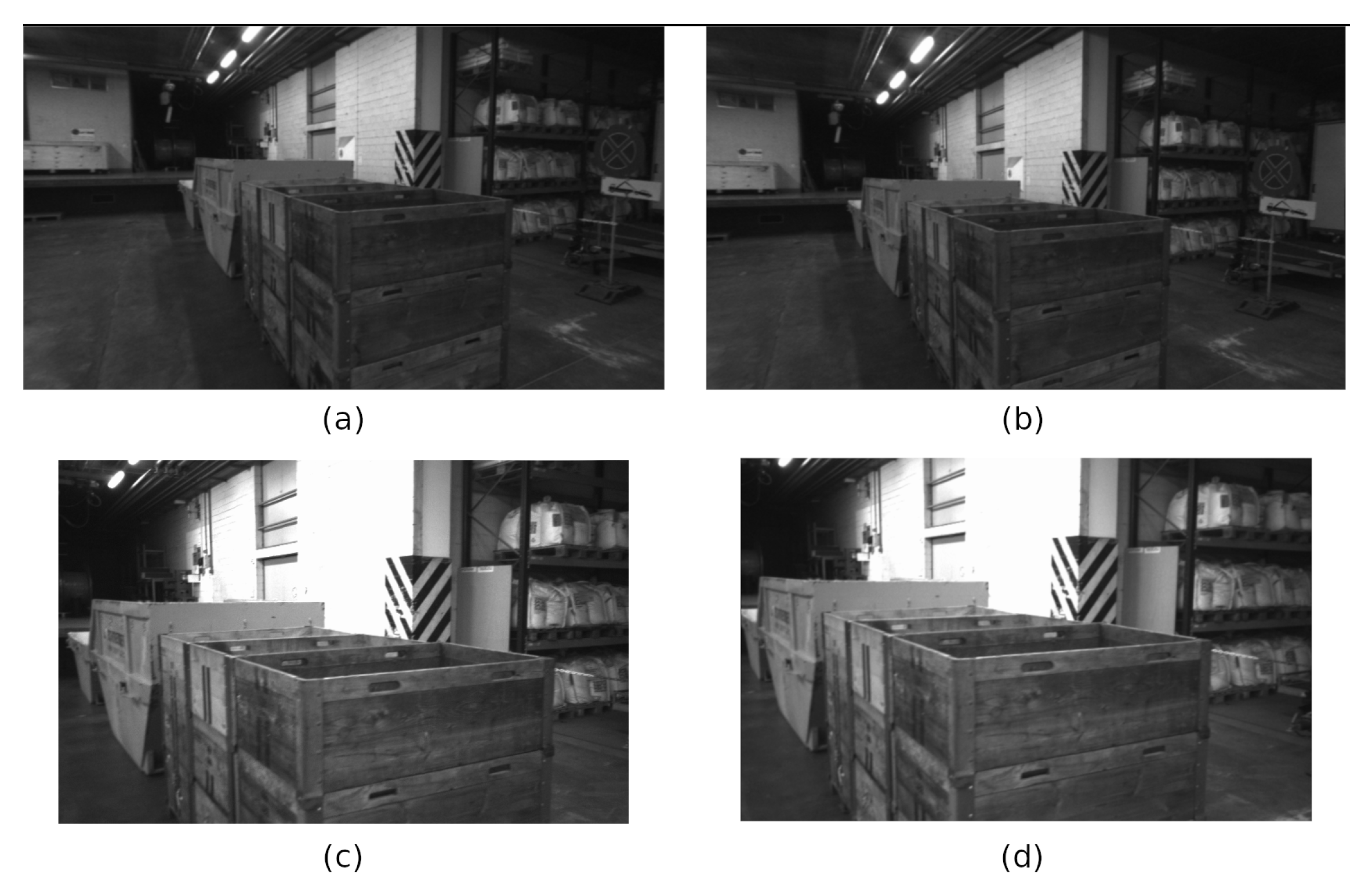

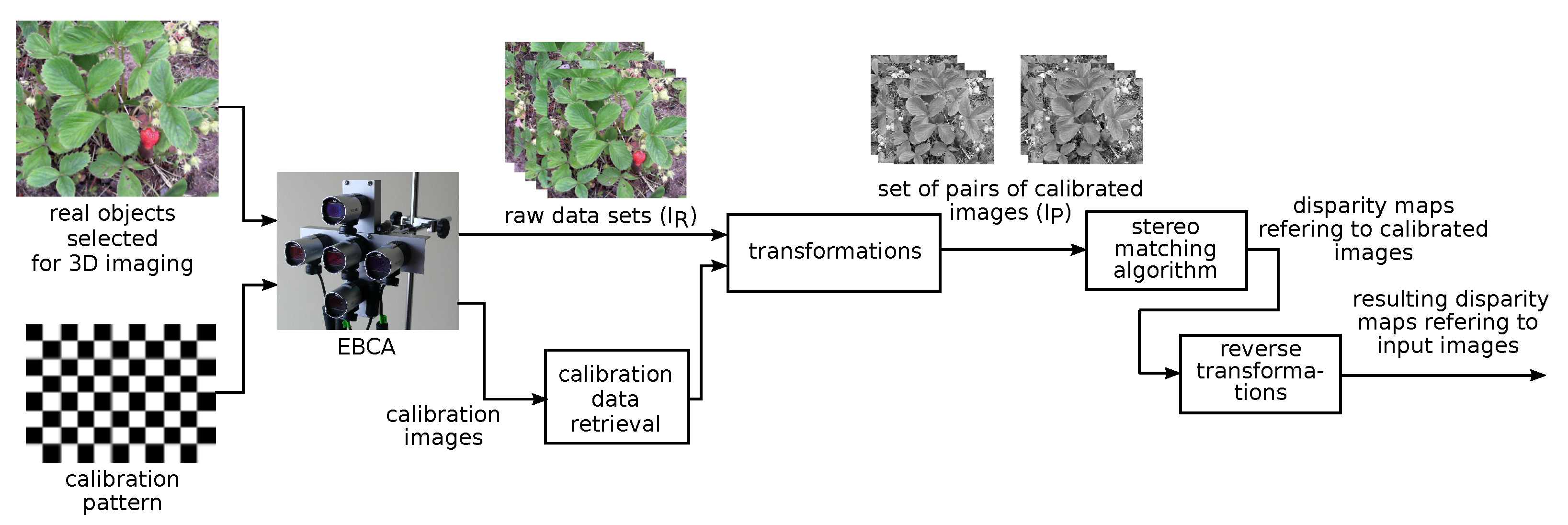

5. EBCA Testbed

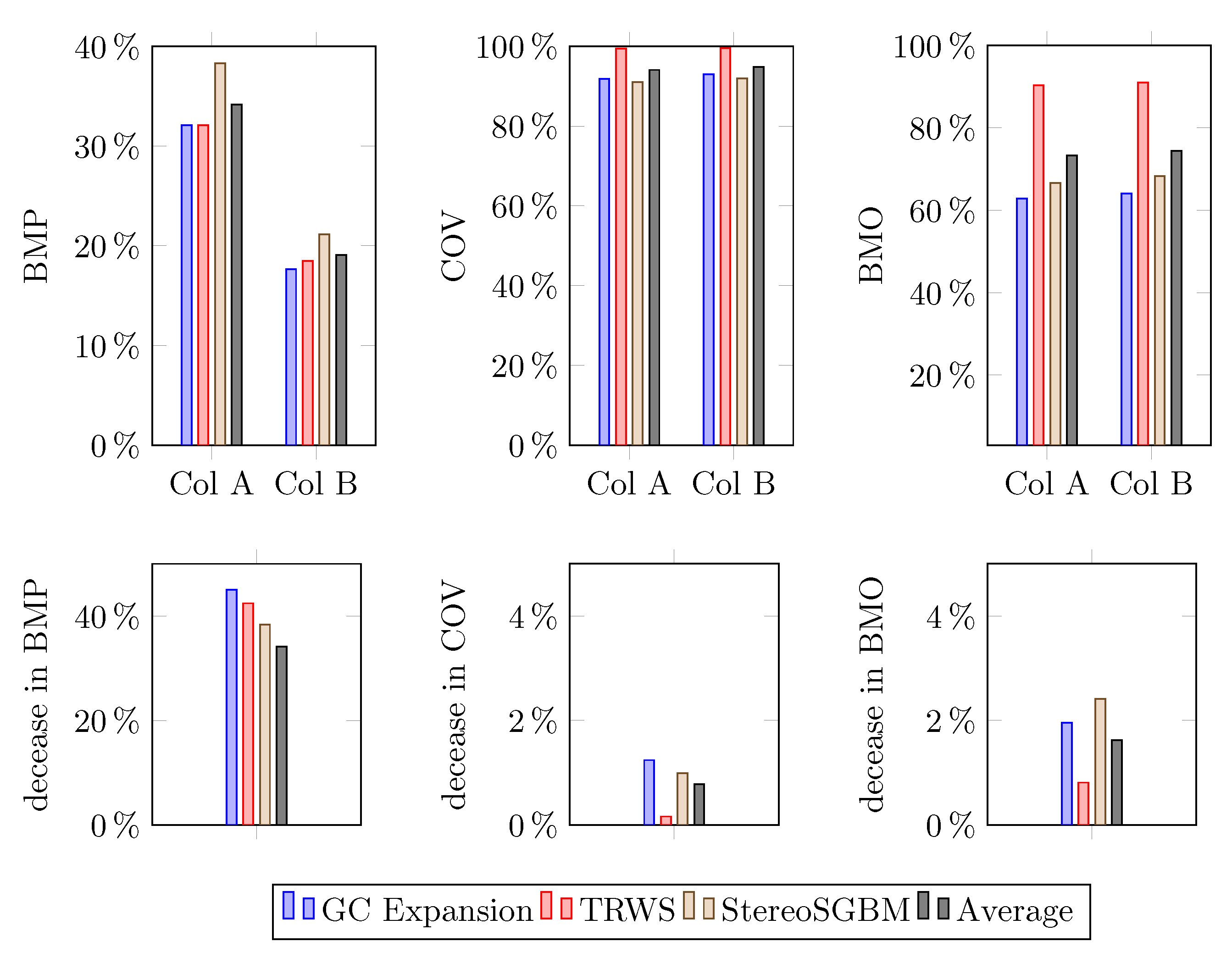

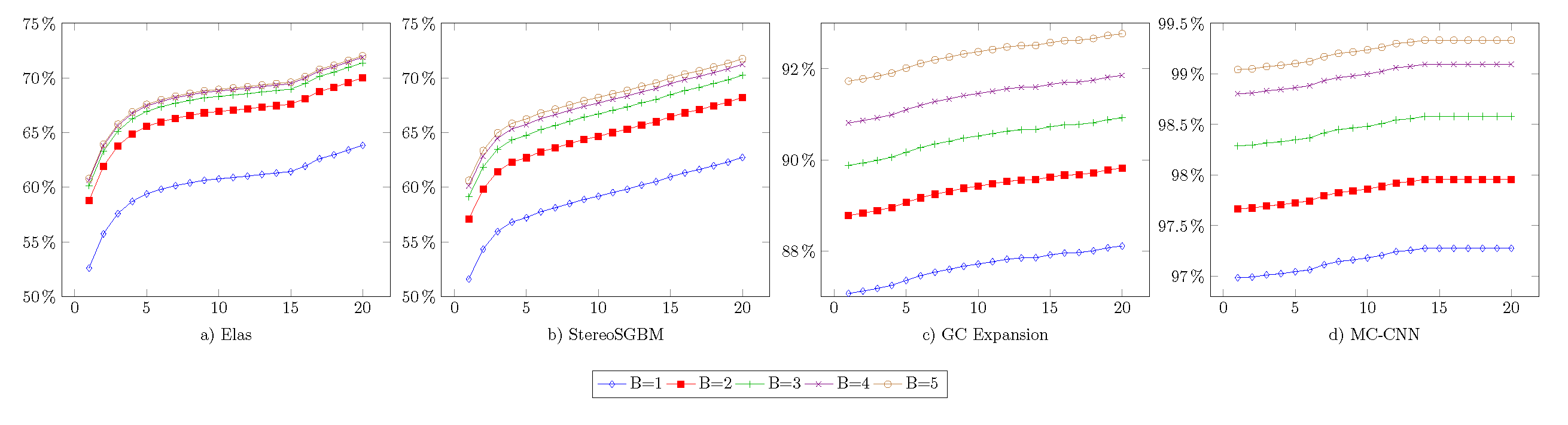

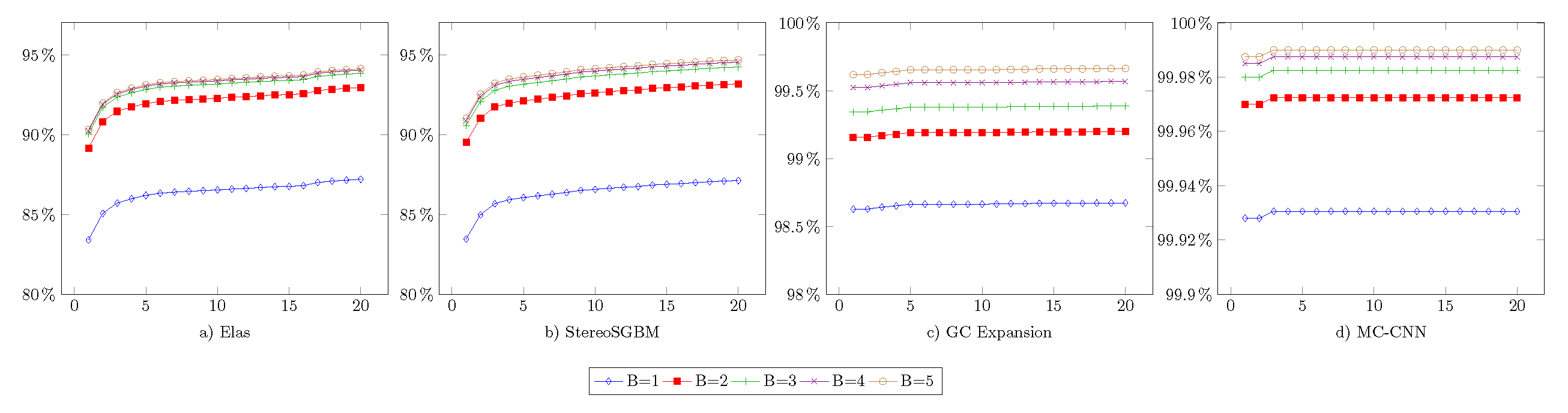

Evaluation Metrics

6. Experiments

6.1. Results of Self-Calibration

6.2. EEMM Parameters

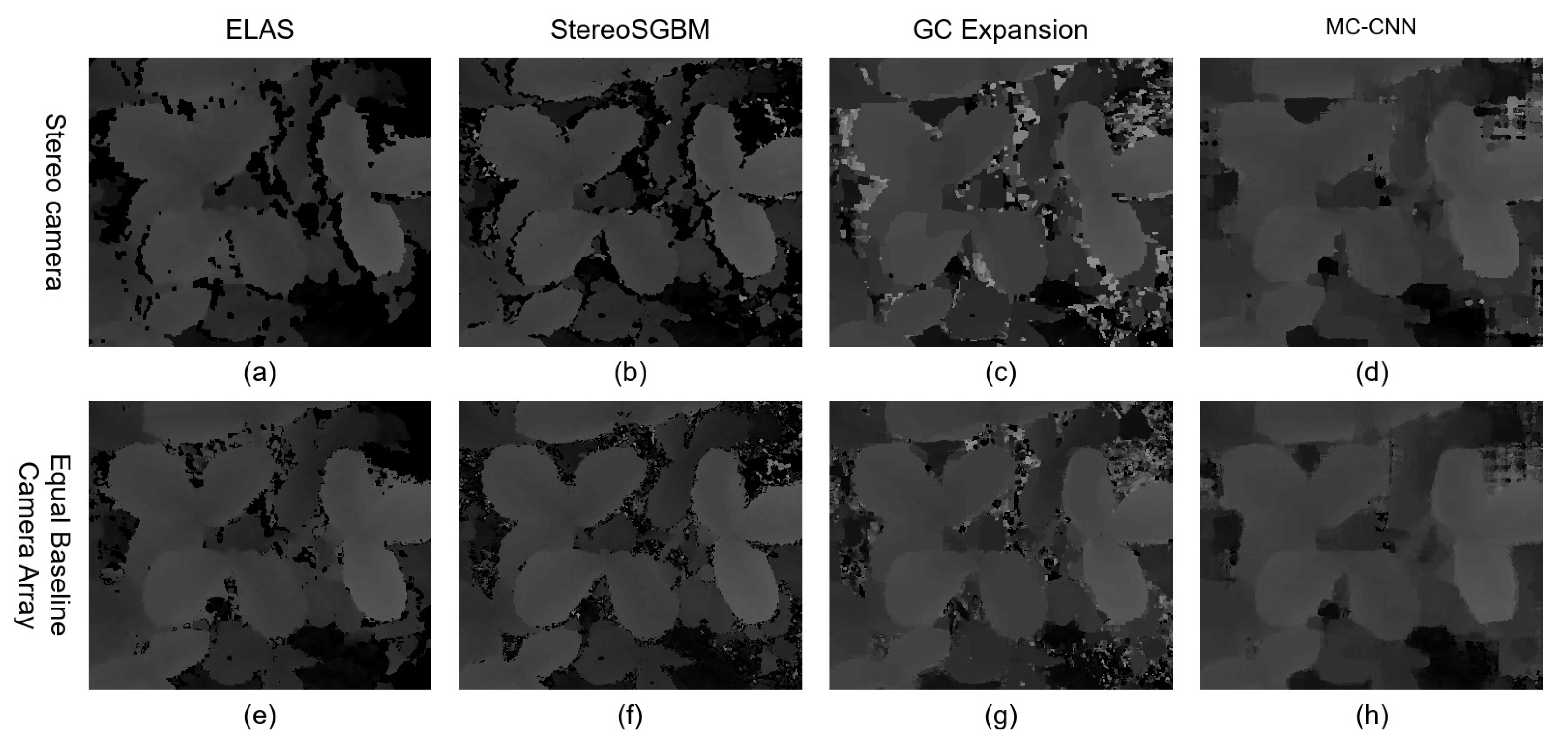

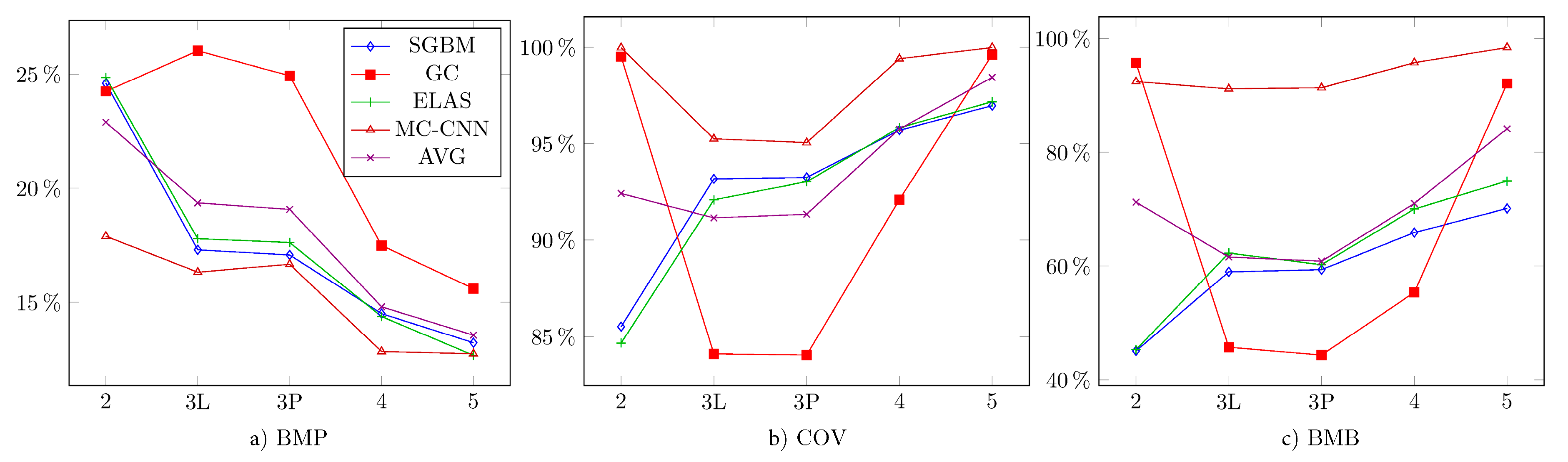

6.3. Different Number of Cameras

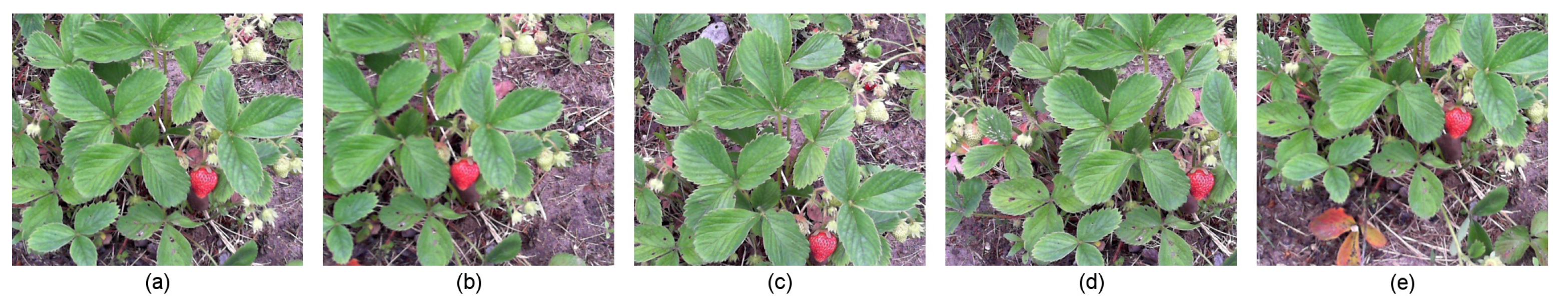

7. EBCA Applications

8. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| EBCA | Equal Baseline Camera Array |
| EEMM | Exceptions Excluding Merging Method |
| LIDAR | Light Detection and Ranging |
| TOF | Time-of-flight camera |
| ELAS | Efficient Large-scale Stereo Matching |
| StereoSGBM | Stereo Semi-Global Block Matching |
| GC Expansion | Graph Cut with Expansion Moves |
| BMP | percentage of bad matching pixels |
| BMB | percentage of bad matching pixels in background |
| COV | coverage |
| SIFT | scale-invariant feature transform |
| SURF | speeded up robust features |
| UUV | unmanned underwater vehicle |
| CNN | convolutional neural network |
| MA | matching area |
| MR | margin area |

References

1. Park, J.I.; Inoue, S. Acquisition of sharp depth map from multiple cameras. Signal Process. Image Commun. 1998, 14, 7–19.

2. Kaczmarek, A.L. 3D Vision System for a Robotic Arm Based on Equal Baseline Camera Array. J. Intell. Robot. Syst. 2019, 99, 13–28.

3. Kaczmarek, A.L. Stereo vision with Equal Baseline Multiple Camera Set (EBMCS) for obtaining depth maps of plants. Comput. Electron. Agric. 2017, 135, 23–37.

4. Kaczmarek, A.L. Influence of Aggregating Window Size on Disparity Maps Obtained from Equal Baseline Multiple Camera Set (EBMCS). In Image Processing and Communications Challenges 8; Choraś, R.S., Ed.; Springer International Publishing: Cham, Switzerland, 2017; pp. 187–194.

5. Kaczmarek, A.L. Stereo camera upgraded to equal baseline multiple camera set (EBMCS). In Proceedings of the 2017 3DTV Conference: The True Vision—Capture, Transmission and Display of 3D Video (3DTV-CON), Copenhagen, Denmark, 7–9 June 2017; pp. 1–4.

6. Ciurea, F.; Lelescu, D.; Chatterjee, P.; Venkataraman, K. Adaptive Geometric Calibration Correction for Camera Array. Electron. Imaging 2016, 2016, 1–6.

7. Furukawa, Y.; Ponce, J. Accurate camera calibration from multi-view stereo and bundle adjustment. Int. J. Comput. Vis. 2009, 84, 257–268.

8. Antensteiner, D.; Blaschitz, B. Multi-camera Array Calibration For Light Field Depth Estimation. In Proceedings of the Austrian Association for Pattern Recognition Workshop (OAGM), Hall in Tirol, Austria, 15–16 May 2018.

9. Scharstein, D.; Szeliski, R.; Zabih, R. A taxonomy and evaluation of dense two-frame stereo correspondence algorithms. In Proceedings of the IEEE Workshop on Stereo and Multi-Baseline Vision (SMBV 2001), Kauai, HI, USA, 9–10 December 2001; pp. 131–140.

10. Scharstein, D.; Szeliski, R. A Taxonomy and Evaluation of Dense Two-Frame Stereo Correspondence Algorithms. Int. J. Comput. Vis. 2002, 47, 7–42.

11. Geiger, A.; Lenz, P.; Urtasun, R. Are we ready for autonomous driving? The KITTI vision benchmark suite. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; pp. 3354–3361.

12. Zbontar, J.; LeCun, Y. Stereo matching by training a convolutional neural network to compare image patches. J. Mach. Learn. Res. 2016, 17, 1–32.

13. Traxler, L.; Ginner, L.; Breuss, S.; Blaschitz, B. Experimental Comparison of Optical Inline 3D Measurement and Inspection Systems. IEEE Access 2021, 9, 53952–53963.

14. Jang, W.; Je, C.; Seo, Y.; Lee, S.W. Structured-light stereo: Comparative analysis and integration of structured-light and active stereo for measuring dynamic shape. Opt. Lasers Eng. 2013, 51, 1255–1264.

15. Rasul, A.; Seo, J.; Khajepour, A. Development of Sensing Algorithms for Object Tracking and Predictive Safety Evaluation of Autonomous Excavators. Appl. Sci. 2021, 11, 6366.

16. Yim, J.H.; Kim, S.Y.; Kim, Y.; Cho, S.; Kim, J.; Ahn, Y.H. Rapid 3D-Imaging of Semiconductor Chips Using THz Time-of-Flight Technique. Appl. Sci. 2021, 11, 4770.

17. Seitz, S.M.; Curless, B.; Diebel, J.; Scharstein, D.; Szeliski, R. A Comparison and Evaluation of Multi-View Stereo Reconstruction Algorithms. In Proceedings of the 2006 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’06), New York, NY, USA, 17–22 June 2006; Volume 1, pp. 519–528.

18. Antensteiner, D.; Štolc, S.; Valentín, K.; Blaschitz, B.; Huber-Mörk, R.; Pock, T. High-precision 3d sensing with hybrid light field & photometric stereo approach in multi-line scan framework. Electron. Imaging 2017, 2017, 52–60.

19. Okutomi, M.; Kanade, T. A multiple-baseline stereo. IEEE Trans. Pattern Anal. Mach. Intell. 1993, 15, 353–363.

20. Wilburn, B.; Joshi, N.; Vaish, V.; Talvala, E.V.; Antunez, E.; Barth, A.; Adams, A.; Horowitz, M.; Levoy, M. High Performance Imaging Using Large Camera Arrays; ACM SIGGRAPH 2005 Papers; ACM: New York, NY, USA, 2005; pp. 765–776.

21. Wang, D.; Pan, Q.; Zhao, C.; Hu, J.; Xu, Z.; Yang, F.; Zhou, Y. A Study on Camera Array and Its Applications. IFAC-PapersOnLine 2017, 50, 10323–10328.

22. Manta, A. Multiview Imaging and 3D TV. A Survey; Delft University of Technology, Information and Communication Theory Group: Delft, The Netherlands, 2008.

23. Nalpantidis, L.; Gasteratos, A. Stereo Vision Depth Estimation Methods for Robotic Applications. In Depth Map and 3D Imaging Applications: Algorithms and Technologies; IGI Global: Hershey, PA, USA, 2011; Volume 3, pp. 397–417.

24. Ackermann, M.; Cox, D.; McGraw, J.; Zimmer, P. Lens and Camera Arrays for Sky Surveys and Space Surveillance. In Proceedings of the Advanced Maui Optical and Space Surveillance Technologies Conference, Wailea, HI, USA, 20–23 September 2016.

25. Venkataraman, K.; Lelescu, D.; Duparré, J.; McMahon, A.; Molina, G.; Chatterjee, P.; Mullis, R.; Nayar, S. PiCam: An Ultra-thin High Performance Monolithic Camera Array. ACM Trans. Graph. 2013, 32, 166:1–166:13.

26. Ge, K.; Hu, H.; Feng, J.; Zhou, J. Depth Estimation Using a Sliding Camera. IEEE Trans. Image Process. 2016, 25, 726–739.

27. Xiao, X.; Daneshpanah, M.; Javidi, B. Occlusion Removal Using Depth Mapping in Three-Dimensional Integral Imaging. J. Disp. Technol. 2012, 8, 483–490.

28. Hensler, J.; Denker, K.; Franz, M.; Umlauf, G. Hybrid Face Recognition Based on Real-Time Multi-camera Stereo-Matching. In Lecture Notes in Computer Science, Proceedings of the Advances in Visual Computing, Las Vegas, NV, USA, 26–28 September 2011; Springer: Berlin/Heidelberg, Germany, 2011; Volume 6939, pp. 158–167.

29. Ayache, N.; Lustman, F. Trinocular stereo vision for robotics. IEEE Trans. Pattern Anal. Mach. Intell. 1991, 13, 73–85.

30. Mulligan, J.; Daniilidis, K. Trinocular stereo for non-parallel configurations. In Proceedings of the 15th International Conference on Pattern Recognition, ICPR-2000, Barcelona, Spain, 3–7 September 2000; Volume 1, pp. 567–570.

31. Mulligan, J.; Isler, V.; Daniilidis, K. Trinocular Stereo: A Real-Time Algorithm and its Evaluation. Int. J. Comput. Vis. 2002, 47, 51–61.

32. Andersen, J.C.; Andersen, N.A.; Ravn, O. Trinocular stereo vision for intelligent robot navigation. In Proceedings of the IFAC/EURON Symposium on Intelligent Autonomous Vehicles, Lisbon, Portugal, 5–7 July 2004.

33. Williamson, T.; Thorpe, C. A trinocular stereo system for highway obstacle detection. In Proceedings of the 1999 IEEE International Conference on Robotics and Automation (Cat. No.99CH36288C), Detroit, MI, USA, 10–15 May 1999; Volume 3, pp. 2267–2273.

34. Wang, J.; Xiao, X.; Javidi, B. Three-dimensional integral imaging with flexible sensing. Opt. Lett. 2014, 39, 6855–6858.

35. Scharstein, D.; Hirschmüller, H.; Kitajima, Y.; Krathwohl, G.; Nešić, N.; Wang, X.; Westling, P. High-Resolution Stereo Datasets with Subpixel-Accurate Ground Truth. In Proceedings of the Pattern Recognition: 36th German Conference, GCPR 2014, Münster, Germany, 2–5 September 2014; Springer International Publishing: Cham, Switzerland, 2014; pp. 31–42.

36. Menze, M.; Heipke, C.; Geiger, A. Joint 3D Estimation of Vehicles and Scene Flow. In Proceedings of the ISPRS Annals of the Photogrammetry, Remote Sensing and Spatial Information Sciences, Volume II-3W5, La Grande Motte, France, 28 September–3 October 2015; ISPRS: Hannover, Germany, 2015; pp. 427–434.

37. Silberman, N.; Hoiem, D.; Kohli, P.; Fergus, R. Indoor Segmentation and Support Inference from RGBD Images. In Lecture Notes in Computer Science, Proceedings of the ECCV 2012, Florence, Italy, 7–13 October 2012; Springer: Berlin/Heidelberg, Germany, 2012; Volume 7576.

38. Schöps, T.; Schönberger, J.L.; Galliani, S.; Sattler, T.; Schindler, K.; Pollefeys, M.; Geiger, A. A Multi-View Stereo Benchmark with High-Resolution Images and Multi-Camera Videos. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 2538–2547.

39. Geiger, A.; Roser, M.; Urtasun, R. Efficient Large-Scale Stereo Matching. In Lecture Notes in Computer Science, Proceedings of the Computer Vision—ACCV 2010 10th Asian Conference on Computer Vision, Queenstown, New Zealand, 8–12 November 2010; Kimmel, R., Klette, R., Sugimoto, A., Eds.; Springer: Berlin/Heidelberg, Germany, 2011; Volume 6492, pp. 25–38.

40. Hirschmuller, H. Stereo Processing by Semiglobal Matching and Mutual Information. IEEE Trans. Pattern Anal. Mach. Intell. 2008, 30, 328–341.

41. Bradski, D.G.R.; Kaehler, A. Learning OpenCV, 1st ed.; O’Reilly Media, Inc.: Sebastopol, CA, USA, 2008.

42. Boykov, Y.; Veksler, O.; Zabih, R. Fast approximate energy minimization via graph cuts. IEEE Trans. Pattern Anal. Mach. Intell. 2001, 23, 1222–1239.

43. Besag, J. On the Statistical Analysis of Dirty Pictures. J. R. Stat. Soc. Ser. B (Methodol.) 1986, 48, 259–302.

44. Goodfellow, I.; Bengio, Y.; Courville, A. Deep Learning; MIT Press: Cambridge, MA, USA, 2016; Available online: http://www.deeplearningbook.org (accessed on 9 August 2021).

45. Hartley, R.; Zisserman, A. Multiple View Geometry in Computer Vision, 2nd ed.; Cambridge University Press: Cambridge, UK, 2004.

46. Blaschitz, B.; Štolc, S.; Antensteiner, D. Geometric calibration and image rectification of a multi-line scan camera for accurate 3d reconstruction. In Proceedings of the IS&T International Symposium on Electronic Imaging, Burlingame, CA, USA, 28 January–1 February 2018; IS&T: Springfield, VA, USA, 2018; pp. 240-1–240-6.

47. Tao, J.; Wang, Y.; Cai, B.; Wang, K. Camera Calibration with Phase-Shifting Wedge Grating Array. Appl. Sci. 2018, 8, 644.

48. Kang, Y.S.; Ho, Y.S. An efficient image rectification method for parallel multi-camera arrangement. IEEE Trans. Consum. Electron. 2011, 57, 1041–1048.

49. Yang, J.; Guo, F.; Wang, H.; Ding, Z. A multi-view image rectification algorithm for matrix camera arrangement. In Artificial Intelligence Research; Sciedu Press: Richmond Hill, ON, Canada, 2014; Volume 3, pp. 18–29.

50. Sun, C. Uncalibrated three-view image rectification. Image Vis. Comput. 2003, 21, 259–269.

51. Hartley, R.I. Theory and Practice of Projective Rectification. Int. J. Comput. Vis. 1999, 35, 115–127.

52. Hosseininaveh, A.; Serpico, M.; Robson, S.; Hess, M.; Boehm, J.; Pridden, I.; Amati, G. Automatic Image Selection in Photogrammetric Multi-view Stereo Methods. In Proceedings of the 13th International Symposium on Virtual Reality, Archaeology and Cultural Heritage VAST, Brighton, UK, 19–21 November 2012; Eurographics Association: Geneve, Switzerland, 2012; pp. 9–16.

53. Lowe, D. Object recognition from local scale-invariant features. In Proceedings of the Seventh IEEE International Conference on Computer Vision, Kerkyra, Greece, 20–27 September 1999; Volume 2, pp. 1150–1157.

54. Liu, R.; Zhang, H.; Liu, M.; Xia, X.; Hu, T. Stereo Cameras Self-Calibration Based on SIFT. In Proceedings of the 2009 International Conference on Measuring Technology and Mechatronics Automation, Zhangjiajie, China, 11–12 April 2009; Volume 1, pp. 352–355.

55. Bay, H.; Ess, A.; Tuytelaars, T.; Gool, L.V. Speeded-Up Robust Features (SURF). Comput. Vis. Image Underst. 2008, 110, 346–359.

56. Boukamcha, H.; Atri, M.; Smach, F. Robust auto calibration technique for stereo camera. In Proceedings of the 2017 International Conference on Engineering MIS (ICEMIS), Monastir, Tunisia, 8–10 May 2017; pp. 1–6.

57. Mentzer, N.; Mahr, J.; Payá-Vayá, G.; Blume, H. Online stereo camera calibration for automotive vision based on HW-accelerated A-KAZE-feature extraction. J. Syst. Archit. 2019, 97, 335–348.

58. Carrera, G.; Angeli, A.; Davison, A.J. SLAM-based automatic extrinsic calibration of a multi-camera rig. In Proceedings of the 2011 IEEE International Conference on Robotics and Automation, Shanghai, China, 9–13 May 2011; pp. 2652–2659.

59. Heng, L.; Bürki, M.; Lee, G.H.; Furgale, P.; Siegwart, R.; Pollefeys, M. Infrastructure-based calibration of a multi-camera rig. In Proceedings of the 2014 IEEE International Conference on Robotics and Automation (ICRA), Hong Kong, China, 31 May–5 June 2014; pp. 4912–4919.

60. Fehrman, B.; McGough, J. Depth mapping using a low-cost camera array. In Proceedings of the 2014 Southwest Symposium on Image Analysis and Interpretation, San Diego, CA, USA, 6–8 April 2014; pp. 101–104.

61. Fehrman, B.; McGough, J. Handling occlusion with an inexpensive array of cameras. In Proceedings of the 2014 Southwest Symposium on Image Analysis and Interpretation, San Diego, CA, USA, 6–8 April 2014; pp. 105–108.

62. Kaczmarek, A.L. Improving depth maps of plants by using a set of five cameras. J. Electron. Imaging 2015, 24, 023018.

63. Zhang, Z. A flexible new technique for camera calibration. IEEE Trans. Pattern Anal. Mach. Intell. 2000, 22, 1330–1334.

64. Tadic, V.; Odry, A.; Burkus, E.; Kecskes, I.; Kiraly, Z.; Klincsik, M.; Sari, Z.; Vizvari, Z.; Toth, A.; Odry, P. Painting Path Planning for a Painting Robot with a RealSense Depth Sensor. Appl. Sci. 2021, 11, 1467.

65. Zaarane, A.; Slimani, I.; Al Okaishi, W.; Atouf, I.; Hamdoun, A. Distance measurement system for autonomous vehicles using stereo camera. Array 2020, 5, 100016.

66. Kopf, C.; Pock, T.; Blaschitz, B.; Štolc, S. Inline Double Layer Depth Estimation with Transparent Materials. In Lecture Notes in Computer Science, Proceedings of the Pattern Recognition: 42nd DAGM German Conference, DAGM GCPR 2020, Tübingen, Germany, 28 September–1 October 2020; Springer International Publishing: Cham, Switzerland, 2021; pp. 418–431.

67. Ihrke, I.; Kutulakos, K.N.; Lensch, H.P.A.; Magnor, M.; Heidrich, W. Transparent and Specular Object Reconstruction. Comput. Graph. Forum 2010, 29, 2400–2426.

68. Kaczmarek, A.L.; Lebiedź, J.; Jaroszewicz, J.; Świȩszkowski, W. 3D Scanning of Semitransparent Amber with and without Inclusions. In Proceedings of the International Conference in Central Europe on Computer Graphics, Visualization and Computer Vision WSCG 2021, Pilsen, Czech Republic, 17–20 May 2021; pp. 145–154.

69. Watson, S.; Duecker, D.A.; Groves, K. Localisation of Unmanned Underwater Vehicles (UUVs) in Complex and Confined Environments: A Review. Sensors 2020, 20, 6203.

70. Fanta-Jende, P.; Steininger, D.; Bruckmüller, F.; Sulzbachner, C. A Versatile UAV near real-time mapping solution for disaster response—Concept, ideas and implementation. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2020, 43, 429–435.

| Set ID | Matching Size | Disparity Range |
|---|---|---|
| | 440 × 380 | 1–28 |
| | 340 × 325 | 19–45 |
| | 420 × 370 | 31–79 |
| | 320 × 295 | 11–35 |
| | 470 × 380 | 40–69 |
| | 330 × 310 | 27–58 |


© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Kaczmarek, A.L.; Blaschitz, B. Equal Baseline Camera Array—Calibration, Testbed and Applications. Appl. Sci. 2021, 11, 8464. https://doi.org/10.3390/app11188464
