Leaf Spot Attention Networks Based on Spot Feature Encoding for Leaf Disease Identification and Detection

Abstract

1. Introduction

1.1. Related Works

1.1.1. Leaf Disease Identification

1.1.2. Leaf Disease Detection

1.2. Motivation

1.3. Proposed Approach

1.4. Contributions

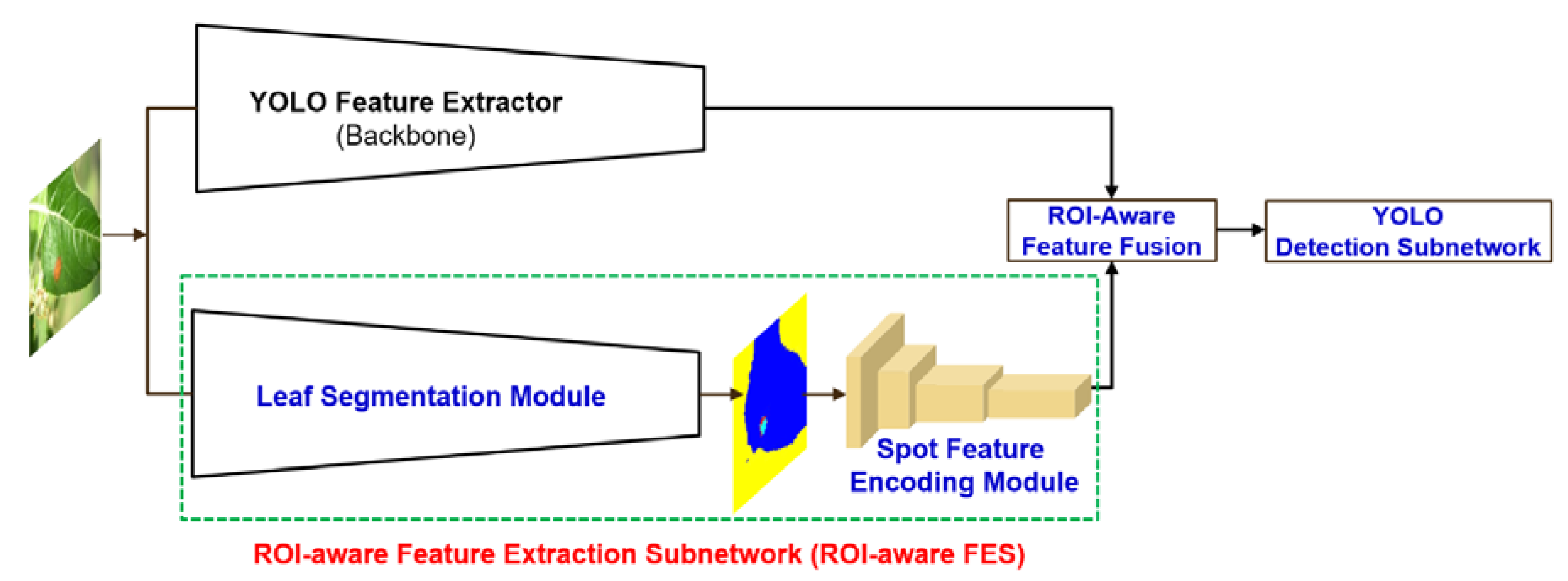

- This paper extends our previous work published in an IEEE CVPR workshop [40], which showed that the proposed ROI-aware FES is very effective in improving leaf disease identification performance. Continuing this line of research, we show that the ROI-aware FES can also be applied to leaf disease detection. Specifically, we illustrate how to incorporate the ROI-aware FES, that is, a new spot feature extractor, into the conventional YOLO framework. The advanced YOLO model that incorporates the ROI-aware FES and feature fusion is referred to as AE-YOLO in this study. The experiments show that the proposed AE-YOLO improves leaf disease detection and surpasses state-of-the-art object detection models. AE-YOLO is also expected to be applicable not only to the detection of apple leaf diseases, but also to the detection of pests and diseases in other crops.

- To the best of our knowledge, this study is the first attempt to introduce a novel deep learning architecture that considers the leaf spot attention mechanism and is applicable for both leaf disease identification and detection. Until now, existing models such as VGG, ResNet, and YOLO have been adopted for leaf disease identification and detection [15,16,17,18,19,20,21,37,38]. However, in the proposed architecture, a new ROI-aware FES and feature fusion are introduced to find spot areas and encode spot information. The major contribution is to show a novel deep learning architecture that can incorporate the leaf spot attention mechanism based on the ROI-aware FES into existing classification and detection models.

- Previous studies have targeted leaf images with a single background color and a single leaf [17,21]. These images are simple, and good results can be obtained by applying only transfer learning (TL). In this study, however, more complicated images are tested: the background contains a few branches and leaves, which makes the problem much more challenging. The leaf disease dataset adopted in this study will be open to the public for research purposes.

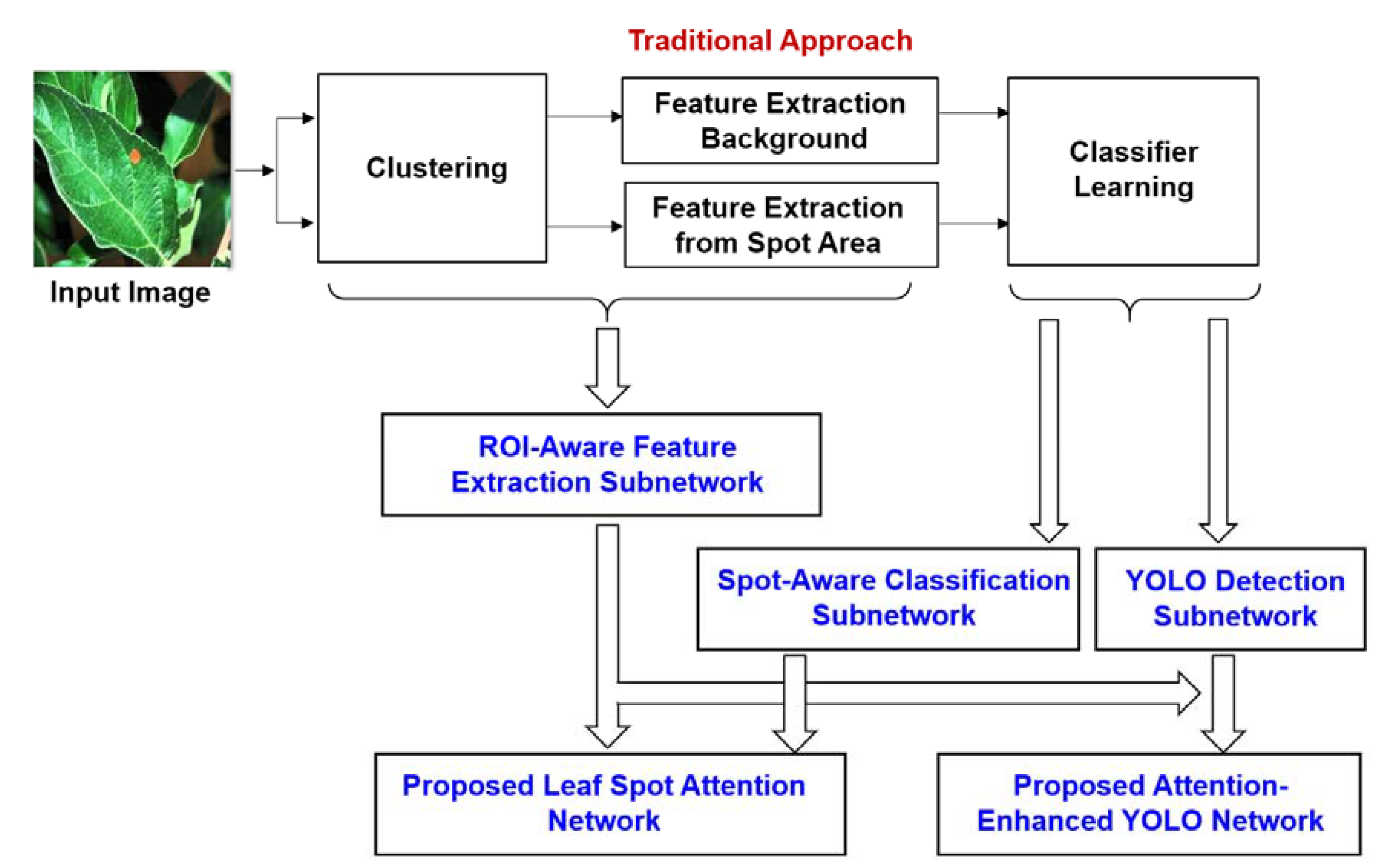

- This study shows how to incorporate the conventional approach [5,9,39], which consists of three steps (clustering, feature extraction, and classifier learning), into a single deep learning architecture. In the proposed networks, the clustering and feature extraction steps are replaced by the proposed ROI-aware FES, and the classifier learning is replaced by the SAC-SubNet or the YOLO detection subnetwork. The major difference between the proposed method and the conventional approach is that the proposed method performs the three steps simultaneously. In addition, conventional clustering [39] might fail to extract spot colors from leaf images because the background and spot colors are similar; by incorporating the clustering algorithm into the deep learning architecture, the proposed method obtains more accurate feature clustering.
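To make the three-step conventional pipeline mentioned in the last contribution concrete, the following is a minimal sketch, not the method of [5,9,39] and not the proposed network. It assumes plain k-means on pixel colors for clustering, a four-value descriptor (mean spot color and spot area fraction) for feature extraction, and a nearest-centroid rule for classifier learning. The synthetic leaf images, the "lowest greenness" rule for picking the spot cluster, and all names (`kmeans`, `spot_features`, `NearestCentroid`, `make_leaf`, `spot_type_a`, `spot_type_b`) are hypothetical and chosen only for illustration.

```python
import numpy as np

def kmeans(pixels, k=2, iters=10):
    """Step 1 (clustering): plain k-means on an (N, 3) array of colors."""
    # Deterministic farthest-point initialisation: start from the first
    # pixel, then repeatedly add the pixel farthest from all chosen centers.
    centers = [pixels[0]]
    for _ in range(k - 1):
        d = np.min([np.linalg.norm(pixels - c, axis=1) for c in centers], axis=0)
        centers.append(pixels[d.argmax()])
    centers = np.array(centers, dtype=float)
    for _ in range(iters):
        dists = np.linalg.norm(pixels[:, None, :] - centers[None, :, :], axis=2)
        labels = dists.argmin(axis=1)
        for j in range(k):
            if np.any(labels == j):
                centers[j] = pixels[labels == j].mean(axis=0)
    return labels, centers

def spot_features(image, k=2):
    """Step 2 (feature extraction): describe the cluster assumed to be spots."""
    pixels = image.reshape(-1, 3).astype(float)
    labels, centers = kmeans(pixels, k)
    # Assumption: the spot cluster is the least "green" one (G minus mean of R, B).
    greenness = centers[:, 1] - 0.5 * (centers[:, 0] + centers[:, 2])
    spot = greenness.argmin()
    area_fraction = (labels == spot).mean()
    return np.concatenate([centers[spot] / 255.0, [area_fraction]])

class NearestCentroid:
    """Step 3 (classifier learning): one mean feature vector per class."""
    def fit(self, X, y):
        self.classes_ = np.unique(y)
        self.centroids_ = np.stack([X[y == c].mean(axis=0) for c in self.classes_])
        return self

    def predict(self, X):
        d = np.linalg.norm(X[:, None, :] - self.centroids_[None, :, :], axis=2)
        return self.classes_[d.argmin(axis=1)]

def make_leaf(spot_color, spot_frac, rng, size=16):
    """Synthetic stand-in for a leaf image: noisy green with colored spots."""
    img = np.full((size, size, 3), (40.0, 160.0, 40.0))
    img += rng.normal(0, 5, img.shape)
    n_spot = int(spot_frac * size * size)
    idx = rng.choice(size * size, n_spot, replace=False)
    flat = img.reshape(-1, 3)  # view on img, so the assignment below edits img
    flat[idx] = np.asarray(spot_color) + rng.normal(0, 5, (n_spot, 3))
    return np.clip(img, 0, 255)

# Hypothetical spot colors for two made-up classes.
SPOT_COLORS = {"spot_type_a": (120, 70, 20), "spot_type_b": (60, 60, 60)}
rng = np.random.default_rng(0)

def make_dataset(n_per_class):
    X, y = [], []
    for name, color in SPOT_COLORS.items():
        for _ in range(n_per_class):
            img = make_leaf(color, rng.uniform(0.05, 0.2), rng)
            X.append(spot_features(img))
            y.append(name)
    return np.array(X), np.array(y)

X_train, y_train = make_dataset(5)
X_test, y_test = make_dataset(3)
clf = NearestCentroid().fit(X_train, y_train)
accuracy = (clf.predict(X_test) == y_test).mean()
print(f"accuracy on synthetic leaves: {accuracy:.2f}")
```

In this sketch the three steps run one after another, and an error in the clustering step (for example, when spot and background colors are similar) propagates unchanged to the classifier. That sequential dependence is the limitation the contribution above contrasts with performing the three steps simultaneously inside one network.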

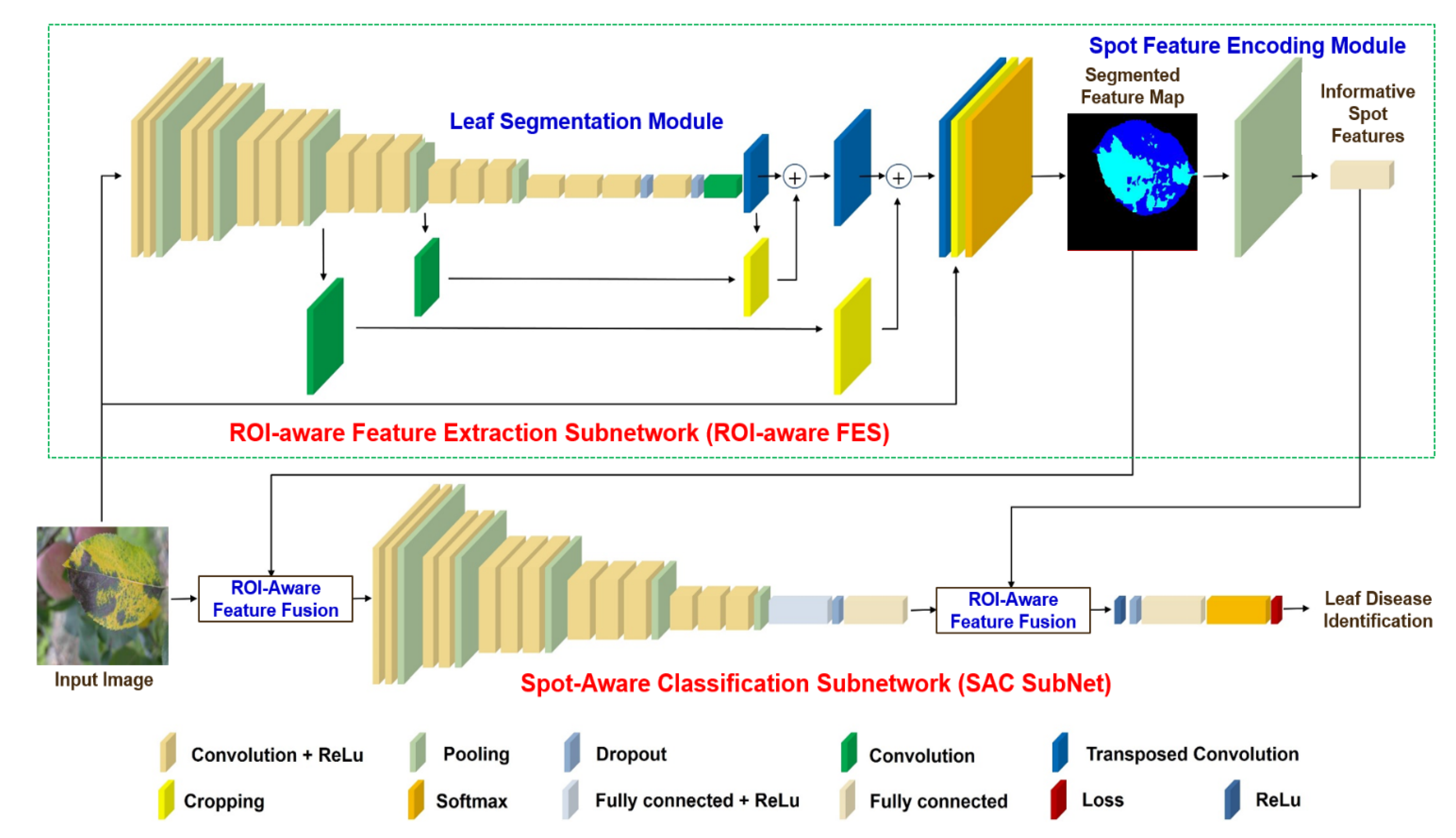

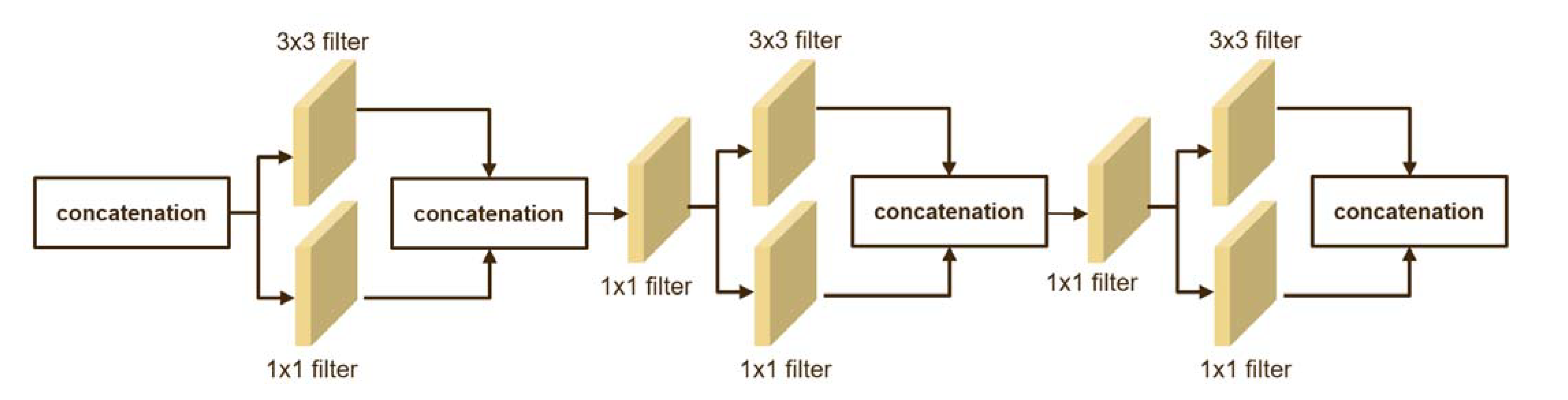

2. Proposed Networks

2.1. Proposed LSA-Net for Leaf Disease Identification

2.2. Proposed Attention-Enhanced YOLO Network for Leaf Disease Detection

3. Experiments

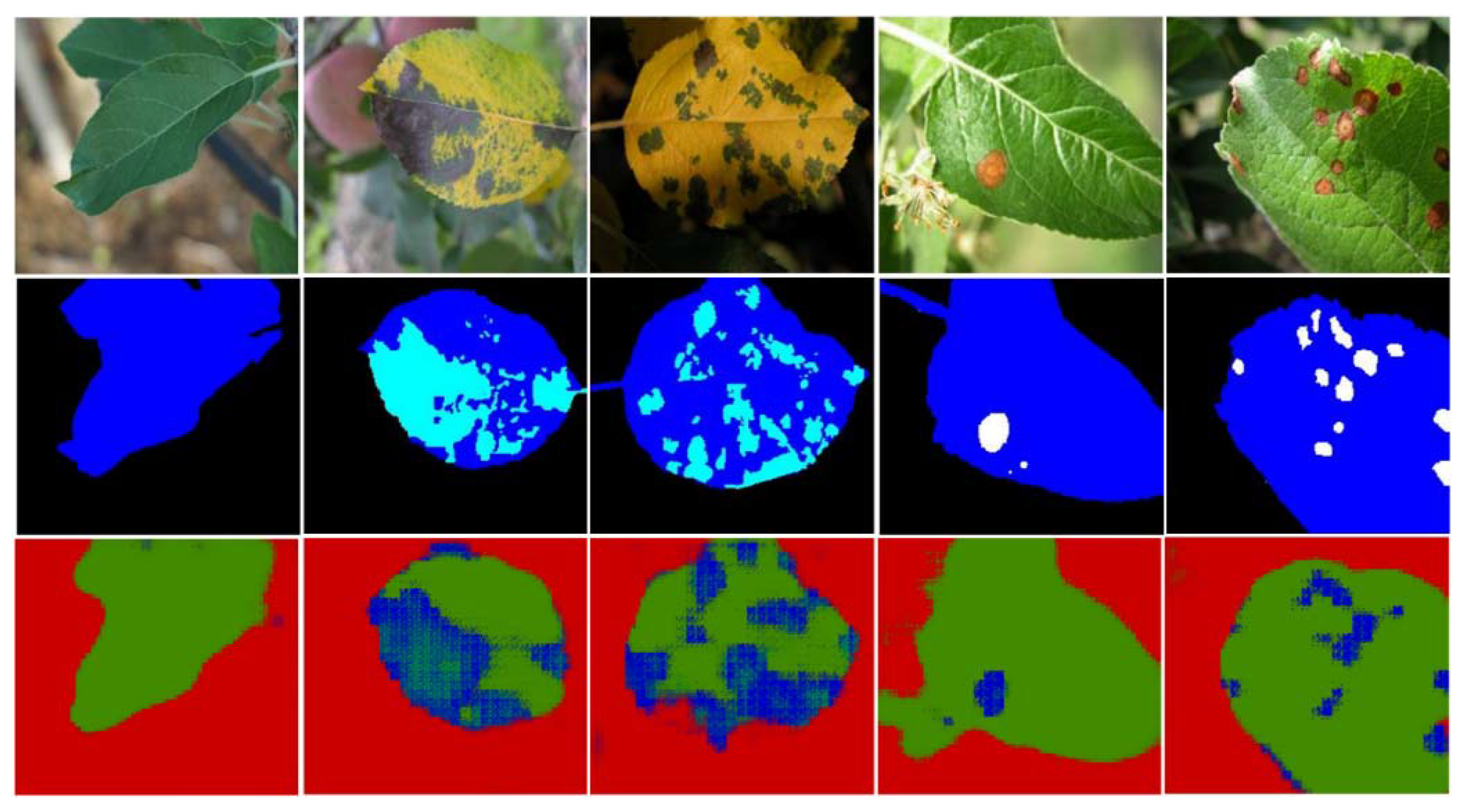

3.1. Image Collection

3.2. Leaf Segmentation Module Training

3.3. Entire Network Training

3.4. Performance Comparison

3.4.1. Leaf Disease Identification

3.4.2. Leaf Disease Detection

4. Conclusions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet Classification with Deep Convolutional Neural Networks. In Proceedings of the Neural Information Processing Systems, Harrah’s Lake Tahoe, NV, USA, 3–6 December 2012; pp. 1–9.

- Lowe, D.G. Distinctive image features from scale-invariant keypoints. Int. J. Comput. Vis. 2004, 60, 91–110.

- Guo, Z.; Zhang, L.; Zhang, D. A completed modeling of local binary pattern operator for texture classification. IEEE Trans. Image Process. 2010, 19, 1657–1663.

- Yang, J.; Yu, K.; Gong, Y.; Huang, T. Linear Spatial Pyramid Matching Using Sparse Coding for Image Classification. In Proceedings of the IEEE International Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 1794–1801.

- Singh, V.; Misra, A.K. Detection of plant leaf diseases using image segmentation and soft computing techniques. Inf. Process. Agric. 2017, 4, 41–49.

- Lazebnik, S.; Schmid, C.; Ponce, J. Beyond Bags of Features: Spatial Pyramid Matching for Recognizing Natural Scene Categories. In Proceedings of the IEEE International Conference on Computer Vision and Pattern Recognition, New York, NY, USA, 17–22 June 2006; pp. 2169–2178.

- Perronnin, F.; Dance, C. Fisher Kernels on Visual Vocabularies for Image Categorization. In Proceedings of the IEEE International Conference on Computer Vision and Pattern Recognition, Minneapolis, MN, USA, 18–23 June 2007; pp. 1–8.

- Cortes, C.; Vapnik, V. Support-vector networks. Mach. Learn. 1995, 20, 273–297.

- Islam, M.; Dinh, A.; Wahid, K. Detection of potato diseases using image segmentation and multiclass support vector machine. In Proceedings of the IEEE Canadian Conference on Electrical and Computer Engineering, Windsor, ON, Canada, 30 April–3 May 2017; pp. 1–4.

- Quinlan, J.R. Induction of decision trees. Mach. Learn. 1986, 1, 81–106.

- Chuanlei, Z.; Shanwen, Z.; Jucheng, Y.; Yancui, S.; Jia, C. Apple leaf disease identification using genetic algorithm and correlation based feature selection method. Int. J. Agric. Biol. Eng. 2017, 1, 74–83.

- Aharon, M.; Elad, M.; Bruckstein, A. K-SVD: An algorithm for designing overcomplete dictionaries for sparse representation. IEEE Trans. Signal Process. 2006, 54, 4311–4322.

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Ferentinos, K.P. Deep learning models for plant disease detection and diagnosis. Comput. Electron. Agric. 2018, 145, 311–318.

- Fuentes, A.; Yoon, S.; Kim, S.C.; Park, D.S. A robust deep-learning-based detector for real-time tomato plant diseases and pests recognition. Sensors 2017, 17, 2022.

- Mohanty, S.P.; Hughes, D.; Salathe, M. Using deep learning for image-based plant disease detection. Front. Plant Sci. 2016, 7, 1419.

- Lu, Y.; Yi, S.; Zeng, N.; Liu, Y.; Zhang, Y. Identification of rice diseases using deep convolutional neural networks. Neurocomputing 2017, 267, 378–384.

- Tetila, E.C.; Machado, B.B.; Menezes, G.K.; Oliveira, A.; Alvares, M.; Amorim, W.P.; Belete, N.; Silva, G.; Pistori, H. Automatic recognition of soybean leaf diseases using UAV images and deep convolutional neural networks. IEEE Geosci. Remote. Sens. Lett. 2020, 17, 903–907.

- Bjerge, K.; Nielsen, J.B.; Sepstrup, M.V.; Helsing-Nielsen, F.; Hoye, T.T. An automated light trap to monitor moths (Lepidoptera) using computer vision-based tracking and deep learning. Sensors 2021, 21, 343.

- Wspanialy, P.; Moussa, M. A detection and severity estimation system for generic diseases of tomato greenhouse plants. Comput. Electron. Agric. 2020, 178, 105701.

- Schlemper, J.; Oktay, O.; Schaap, M.; Heinrich, M.; Kainz, B.; Glocker, B.; Rueckert, D. Attention gated networks: Learning to leverage salient regions in medical images. Med. Image Anal. 2019, 53, 197–207.

- Lin, T.-Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature Pyramid Networks for Object Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 22–25 July 2017; pp. 2117–2125.

- Wang, W.; Xie, E.; Li, X.; Fan, D.-P.; Song, K.; Liang, D.; Lu, T.; Luo, P.; Shao, L. Pyramid vision transformer: A versatile backbone for dense prediction without convolutions. arXiv 2021, arXiv:2102.12122.

- Kumar, N.; Belhumeur, P.N.; Biswas, A.; Jacobs, D.W.; Kress, W.J.; Lopez, I.; Soares, J. Leafsnap: A Computer Vision System for Automatic Plant Species Identification. In Proceedings of the European Conference on Computer Vision, Florence, Italy, 7–13 October 2012; pp. 502–516.

- Dalal, N.; Triggs, B. Histograms of Oriented Gradients for Human Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, San Diego, CA, USA, 20–25 June 2005; pp. 886–893.

- Uijlings, J.R.; van de Sande, K.E.; Gevers, T.; Smeulders, A.W. Selective search for object recognition. Int. J. Comput. Vis. 2013, 104, 154–171.

- Achanta, R.; Shaji, A.; Smith, K.; Lucchi, A.; Fua, P.; Süsstrunk, S. SLIC superpixels compared to state-of-the-art superpixel methods. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 34, 2274–2282.

- Zitnick, C.L.; Dollár, P. Edge Boxes: Locating Object Proposals from Edges. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 8–11 September 2014; pp. 391–405.

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich Feature Hierarchies for Accurate Object Detection and Semantic Segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 580–587.

- Girshick, R. Fast R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1440–1448.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.-Y.; Berg, A.C. SSD: Single Shot Multibox Detector. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 8–16 October 2016; pp. 21–37.

- He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. In Proceedings of the International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2961–2969.

- Cai, Z.; Vasconcelos, N. Cascade R-CNN: Delving into High Quality Object Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 6154–6162.

- Jiang, E.; Chen, Y.; Liu, B.; He, D.; Liang, C. Real-time detection of apple leaf diseases using deep learning approach based on improved convolutional neural networks. IEEE Access 2019, 7, 59069–59080.

- Tian, Y.; Yang, G.; Wang, Z.; Wang, H.; Li, E.; Liang, Z. Apple detection during different growth stages in orchards using improved YOLO-V3 model. Comput. Electron. Agric. 2019, 157, 417–426.

- Ali, H.; Lali, M.I.; Nawaz, M.Z.; Sharif, M.; Saleem, B.A. Symptom based automated detection of citrus diseases using color histogram and textural descriptors. Comput. Electron. Agric. 2017, 138, 92–104.

- Yu, H.-J.; Son, C.-H. Leaf Spot Attention Network for Apple Leaf Disease Identification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Virtual, 14–19 June 2020; pp. 52–53.

- Long, J.; Shelhamer, E.; Darrell, T. Fully Convolutional Networks for Semantic Segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3431–3440.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention Is All You Need. In Proceedings of the International Conference on Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017.

- Iandola, F.N.; Han, S.; Moskewicz, M.W.; Ashraf, K.; Dally, W.J.; Keutzer, K. SqueezeNet: AlexNet-level accuracy with 50× fewer parameters and <0.5MB model size. arXiv 2016, arXiv:1602.07360.

- Lin, T.-Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal Loss for Dense Object Detection. In Proceedings of the International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2980–2988.

- Ruder, S. An overview of gradient descent optimization algorithms. arXiv 2016, arXiv:1609.04747.

- Csurka, G.; Larlus, D.; Perronnin, F. What Is a Good Evaluation Measure for Semantic Segmentation? In Proceedings of the British Machine Vision Conference, Bristol, UK, 9–13 September 2013; pp. 32.1–32.11.

| Methods | CRC (%) |
|---|---|
| VGG16 [13] | 90.19 |
| ResNet50 [14] | 87.87 |
| Attention Gated Network (AGN) [22] | 87.69 |
| Feature Pyramid Network (FPN) [23] | 88.58 |
| SqueezeNet [43] | 92.24 |
| Pyramid Vision Transformer (PVT) [24] | 93.70 |
| Proposed LSA-Net | 96.07 |

| Methods | AP (%) (Marssonina) | AP (%) (Alternaria) | mAP (%) |
|---|---|---|---|
| RCNN [30] | 17.66 | 23.70 | 20.70 |
| Fast RCNN [31] | 46.10 | 38.00 | 42.10 |
| Faster RCNN [32] | 50.01 | 44.90 | 47.50 |
| RetinaNet [44] | 54.60 | 40.20 | 47.40 |
| YOLO [33] | 38.70 | 31.60 | 35.10 |
| Proposed attention-enhanced YOLO (ResNet50) | 54.30 | 47.40 | 50.80 |
| Proposed attention-enhanced YOLO (VGG16) | 55.00 | 42.40 | 48.70 |
| Proposed attention-enhanced YOLO (SqueezeNet) | 51.10 | 47.60 | 49.40 |
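The mAP column of the detection table can be cross-checked against the two per-class AP columns. The short sketch below assumes that mAP is the unweighted mean of the Marssonina and Alternaria AP values, which is the usual convention but is not stated in the table itself. The numbers are copied from the table; the names `results`, `mean_ap`, `max_gap`, and `best` are illustrative.

```python
# (AP Marssonina, AP Alternaria, reported mAP), all in percent,
# copied from the detection table above.
results = {
    "RCNN [30]": (17.66, 23.70, 20.70),
    "Fast RCNN [31]": (46.10, 38.00, 42.10),
    "Faster RCNN [32]": (50.01, 44.90, 47.50),
    "RetinaNet [44]": (54.60, 40.20, 47.40),
    "YOLO [33]": (38.70, 31.60, 35.10),
    "Proposed attention-enhanced YOLO (ResNet50)": (54.30, 47.40, 50.80),
    "Proposed attention-enhanced YOLO (VGG16)": (55.00, 42.40, 48.70),
    "Proposed attention-enhanced YOLO (SqueezeNet)": (51.10, 47.60, 49.40),
}

def mean_ap(per_class_ap):
    """Assumed definition: unweighted mean of the per-class AP values."""
    return sum(per_class_ap) / len(per_class_ap)

for name, (ap_m, ap_a, reported) in results.items():
    computed = mean_ap([ap_m, ap_a])
    print(f"{name:46s} computed={computed:6.2f}  reported={reported:6.2f}")

# Largest disagreement between the recomputed and the reported mAP.
max_gap = max(abs(mean_ap([m, a]) - r) for m, a, r in results.values())

# Method with the highest reported mAP.
best = max(results, key=lambda name: results[name][2])
```

Under this assumption the recomputed means differ from the reported mAP values by at most about 0.05 percentage points, which is consistent with rounding to one decimal place, and the ResNet50 variant of the proposed detector has the highest reported mAP.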

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Son, C.-H. Leaf Spot Attention Networks Based on Spot Feature Encoding for Leaf Disease Identification and Detection. Appl. Sci. 2021, 11, 7960. https://doi.org/10.3390/app11177960
