Motion Classification and Features Recognition of a Traditional Chinese Sport (Baduanjin) Using Sampled-Based Methods

Abstract

1. Introduction

2. Materials and Methods

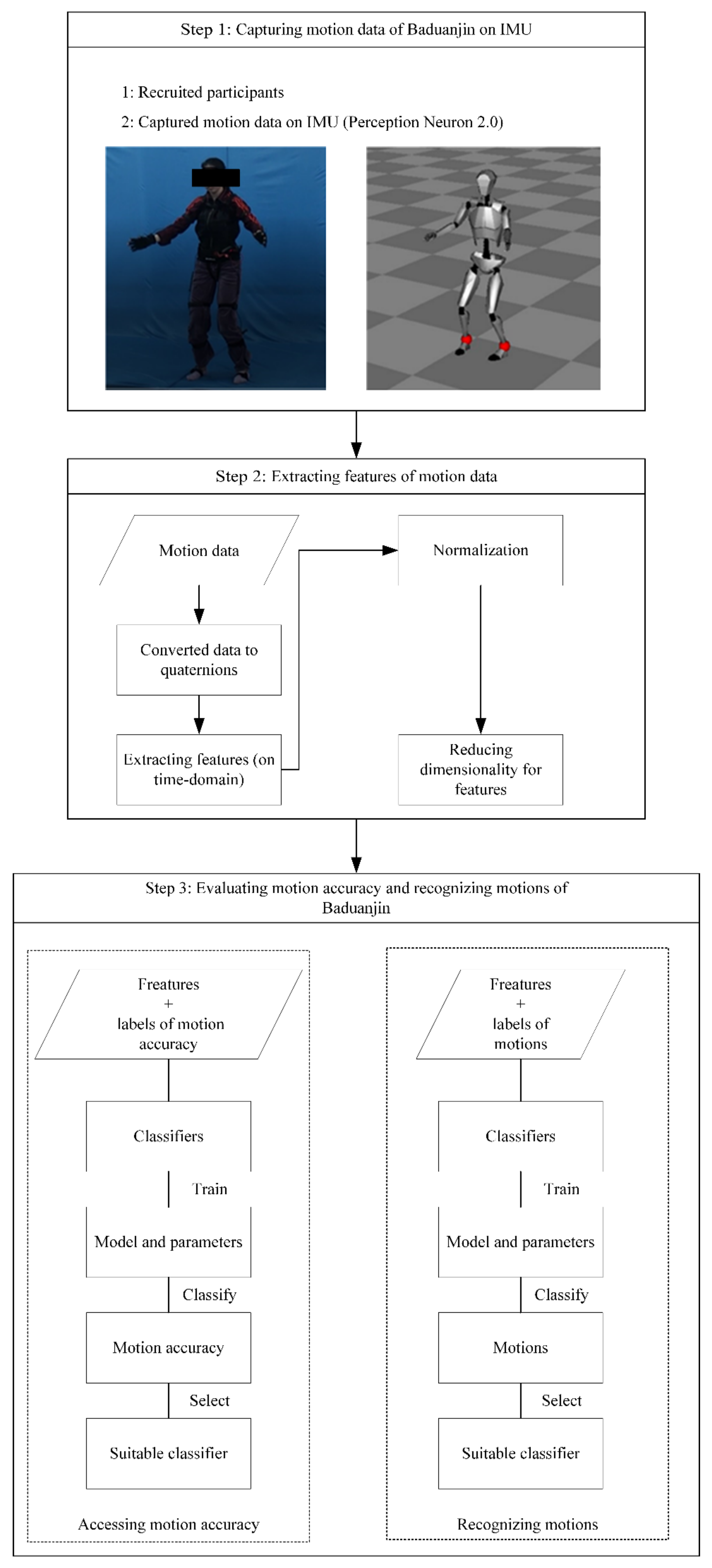

2.1. Overview

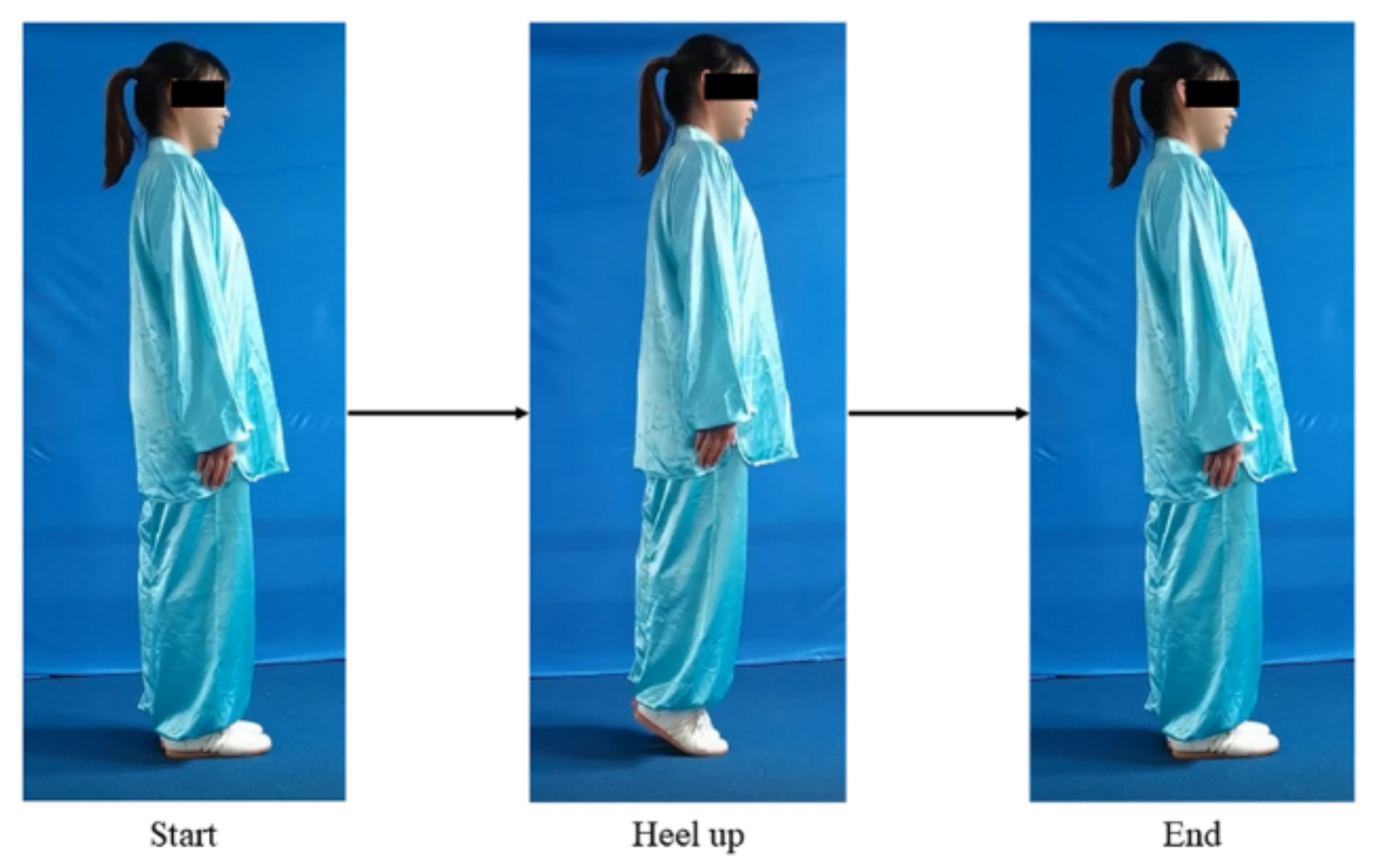

2.2. Capturing Motion Data of Baduanjin Using IMU

2.3. Extracting Features of Motion Data

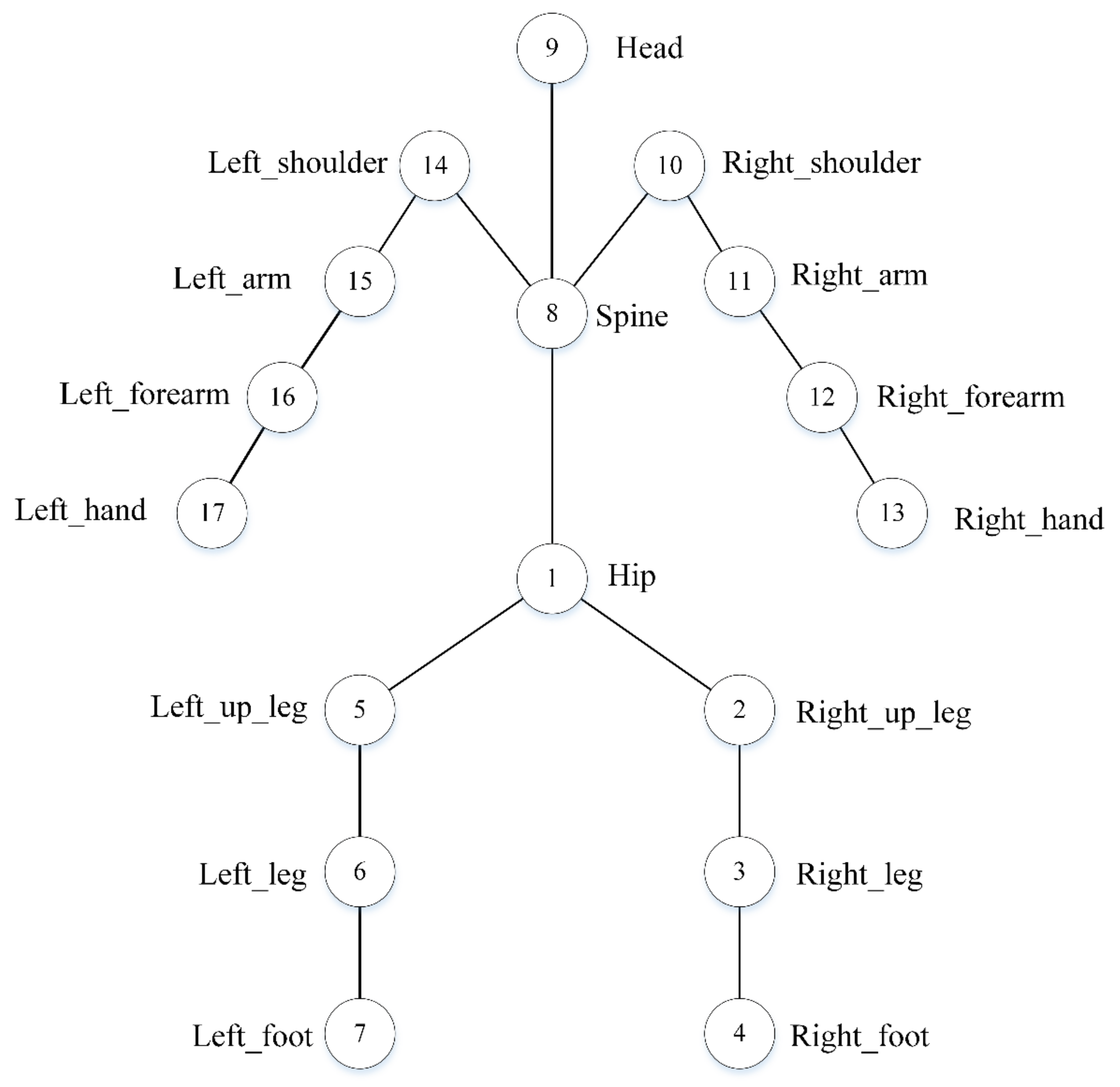

2.3.1. Extracting and Converting Raw Data

2.3.2. Extracting Features

1. Extracting time-domain features

2. Normalization

3. Dimensionality reduction of feature vectors

   - Let the original data consist of m samples, each an n-dimensional feature vector;

   - Arrange the original data into a matrix X with n rows and m columns;

   - Zero-center each row of X, that is, subtract the mean of that row from each of its entries;

   - Calculate the covariance matrix;

   - Calculate the eigenvalues of the covariance matrix and the corresponding eigenvectors r.
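The normalization and PCA steps above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the authors' MATLAB code: the text does not specify the normalization scheme, so min-max scaling of each feature to [0, 1] is assumed; the covariance matrix is taken as C = (1/m) X Xᵀ for the zero-centred n × m matrix X; and the eigendecomposition uses the cyclic Jacobi method for symmetric matrices. Projecting onto the k leading eigenvectors is the usual final step of PCA and is included as an assumption, since the list above stops at the eigenvectors.

```python
import math


def min_max_normalize(rows):
    """Scale each feature row of an n x m matrix to [0, 1] (assumed scheme)."""
    scaled = []
    for row in rows:
        lo, hi = min(row), max(row)
        span = hi - lo
        # A constant feature carries no information; map it to zeros.
        scaled.append([(v - lo) / span if span else 0.0 for v in row])
    return scaled


def centre_and_covariance(x):
    """Zero-centre each row of the n x m matrix x; return (X, C = X X^T / m)."""
    m = len(x[0])
    centred = []
    for row in x:
        mean = sum(row) / m
        centred.append([v - mean for v in row])
    cov = [[sum(a * b for a, b in zip(ri, rj)) / m for rj in centred] for ri in centred]
    return centred, cov


def jacobi_eigh(a, tol=1e-20, max_sweeps=100):
    """Eigenvalues and eigenvectors (as rows) of a symmetric matrix via Jacobi rotations."""
    n = len(a)
    a = [row[:] for row in a]
    v = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    for _ in range(max_sweeps):
        off = sum(a[i][j] ** 2 for i in range(n) for j in range(n) if i != j)
        if off < tol:
            break
        for p in range(n - 1):
            for q in range(p + 1, n):
                if a[p][q] == 0.0:
                    continue
                # Rotation angle chosen so that the (p, q) entry becomes zero.
                theta = (a[q][q] - a[p][p]) / (2.0 * a[p][q])
                t = math.copysign(1.0, theta) / (abs(theta) + math.hypot(theta, 1.0))
                c = 1.0 / math.hypot(t, 1.0)
                s = t * c
                for k in range(n):  # A <- A J: update columns p and q
                    akp, akq = a[k][p], a[k][q]
                    a[k][p], a[k][q] = c * akp - s * akq, s * akp + c * akq
                for k in range(n):  # A <- J^T A: update rows p and q
                    apk, aqk = a[p][k], a[q][k]
                    a[p][k], a[q][k] = c * apk - s * aqk, s * apk + c * aqk
                for k in range(n):  # V <- V J accumulates the eigenvectors
                    vkp, vkq = v[k][p], v[k][q]
                    v[k][p], v[k][q] = c * vkp - s * vkq, s * vkp + c * vkq
    values = [a[i][i] for i in range(n)]
    vectors = [[v[k][i] for k in range(n)] for i in range(n)]  # column i -> row i
    return values, vectors


def pca(x, k):
    """Return all eigenvalues (descending) and the k x m projection Y = P X."""
    centred, cov = centre_and_covariance(x)
    values, vectors = jacobi_eigh(cov)
    order = sorted(range(len(values)), key=lambda i: values[i], reverse=True)
    components = [vectors[i] for i in order[:k]]  # k x n projection matrix P
    projected = [[sum(p * xi for p, xi in zip(comp, col)) for col in zip(*centred)]
                 for comp in components]
    return [values[i] for i in order], projected


# Two perfectly correlated features over four samples (n = 2, m = 4);
# after normalization both rows coincide, so one component carries all the variance.
X = [[1.0, 2.0, 3.0, 4.0], [2.0, 4.0, 6.0, 8.0]]
eigenvalues, Y = pca(min_max_normalize(X), k=1)
print(eigenvalues, Y)
```

The Jacobi method was chosen only to keep the sketch dependency-free; in practice a library routine for symmetric eigenproblems would be used instead.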

2.4. Assessing Motion Accuracy of Baduanjin

2.4.1. Classifiers

1. k-NN

2. SVM

3. NB

4. Logistic Regression

5. DT

6. ANN

- BPNN is a multilayer feedforward network trained with the error backpropagation algorithm. It can approximate any continuous function and has a strong nonlinear mapping capability [14]. In this study, the BPNN is constructed with three layers. The first layer is the input layer, whose number of neurons equals the dimension of the feature vectors; the second layer is the hidden layer, which uses the tangent sigmoid function as its activation function:

  $$f(x) = \frac{2}{1 + e^{-2x}} - 1$$

- Training a BPNN requires a large amount of computation and processing time [45]. The RBFNN is also a feedforward network trained with a supervised algorithm, but it requires less computation and processing time than the BPNN. The main advantage of the RBFNN is that it has only one hidden layer and uses a radial basis function as the activation function [46]. Its basic architecture is the same as that of the BPNN, with three layers (input, hidden and output), as shown in Figure 4. Unlike the BPNN, the RBFNN uses the Gaussian function as its basis function; the general output of the RBFNN is therefore

  $$y_j(\mathbf{x}) = \sum_{i=1}^{h} w_{ij} \exp\left(-\frac{\lVert \mathbf{x} - \mathbf{c}_i \rVert^2}{2\sigma_i^2}\right),$$

  where $h$ is the number of hidden neurons, $\mathbf{c}_i$ and $\sigma_i$ are the centre and width of the $i$-th Gaussian, and $w_{ij}$ is the weight from hidden neuron $i$ to output neuron $j$.

- 7.

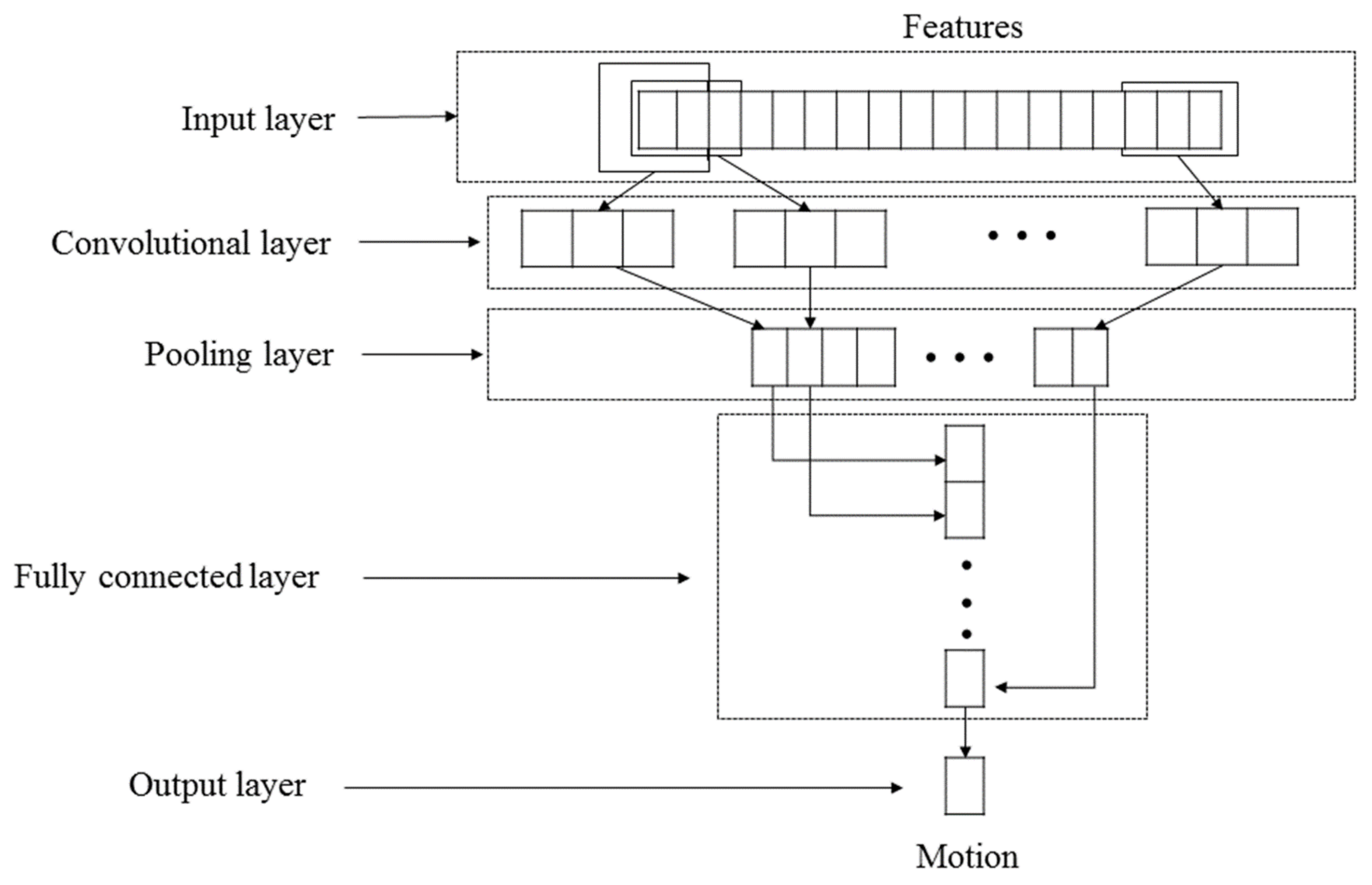

- 1D-CNN
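To make the difference between the two ANN variants described above concrete, the forward passes below compute one prediction each. This is a sketch, not the trained models from the study: the weights, centres and widths are hypothetical placeholders, training (backpropagation for the BPNN, centre and weight fitting for the RBFNN) is omitted, and the BPNN output layer is assumed to be linear because its activation is not described here. The tangent sigmoid 2/(1 + e^(−2x)) − 1 is mathematically identical to tanh(x), so `math.tanh` is used to avoid overflow for large negative inputs.

```python
import math


def tansig(x):
    """Tangent sigmoid 2 / (1 + exp(-2x)) - 1, which equals tanh(x)."""
    return math.tanh(x)


def bpnn_forward(x, w_hidden, b_hidden, w_out, b_out):
    """Input -> tansig hidden layer -> output layer (assumed linear here)."""
    hidden = [tansig(sum(w * xi for w, xi in zip(row, x)) + b)
              for row, b in zip(w_hidden, b_hidden)]
    return [sum(w * h for w, h in zip(row, hidden)) + b
            for row, b in zip(w_out, b_out)]


def rbfnn_forward(x, centres, widths, w_out):
    """Input -> Gaussian hidden units -> weighted sum at each output neuron."""
    phi = [math.exp(-sum((xi - ci) ** 2 for xi, ci in zip(x, c)) / (2.0 * s ** 2))
           for c, s in zip(centres, widths)]
    return [sum(w * p for w, p in zip(row, phi)) for row in w_out]


# Hypothetical 2-input, 2-hidden, 1-output networks with hand-picked parameters.
x = [0.5, -1.0]
print(bpnn_forward(x, [[1.0, 0.0], [0.0, 1.0]], [0.0, 0.0], [[1.0, 1.0]], [0.0]))
print(rbfnn_forward(x, [[0.5, -1.0], [0.0, 0.0]], [1.0, 1.0], [[2.0, -1.0]]))
```

The structural contrast is visible in the hidden layer: the BPNN applies tansig to a weighted sum of the inputs, while each RBFNN hidden unit responds to the distance between the input and its centre.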

2.4.2. Evaluation

2.5. Recognizing Motions of Baduanjin

3. Results

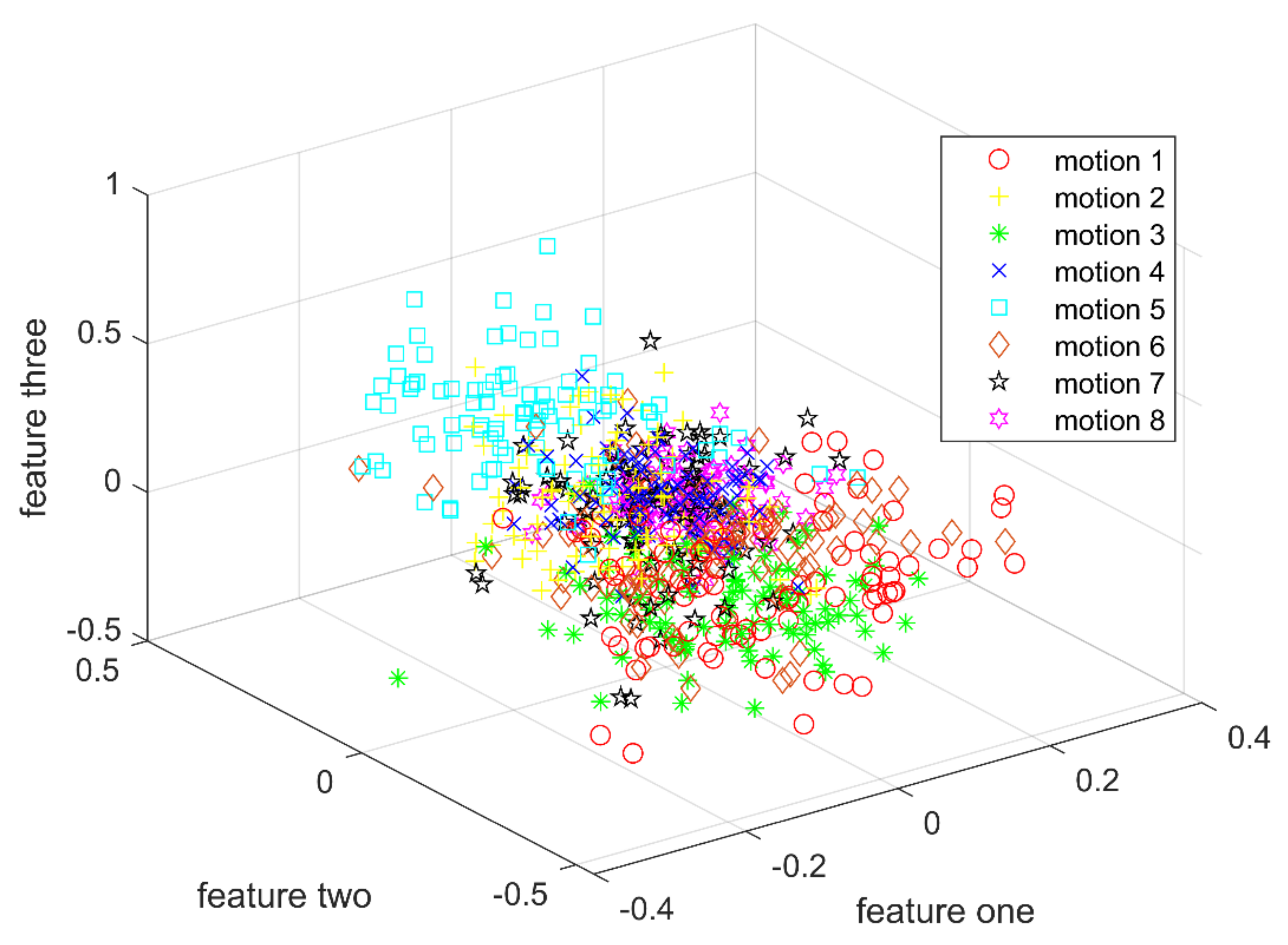

3.1. Extracting Features and Dimensionality Reduction

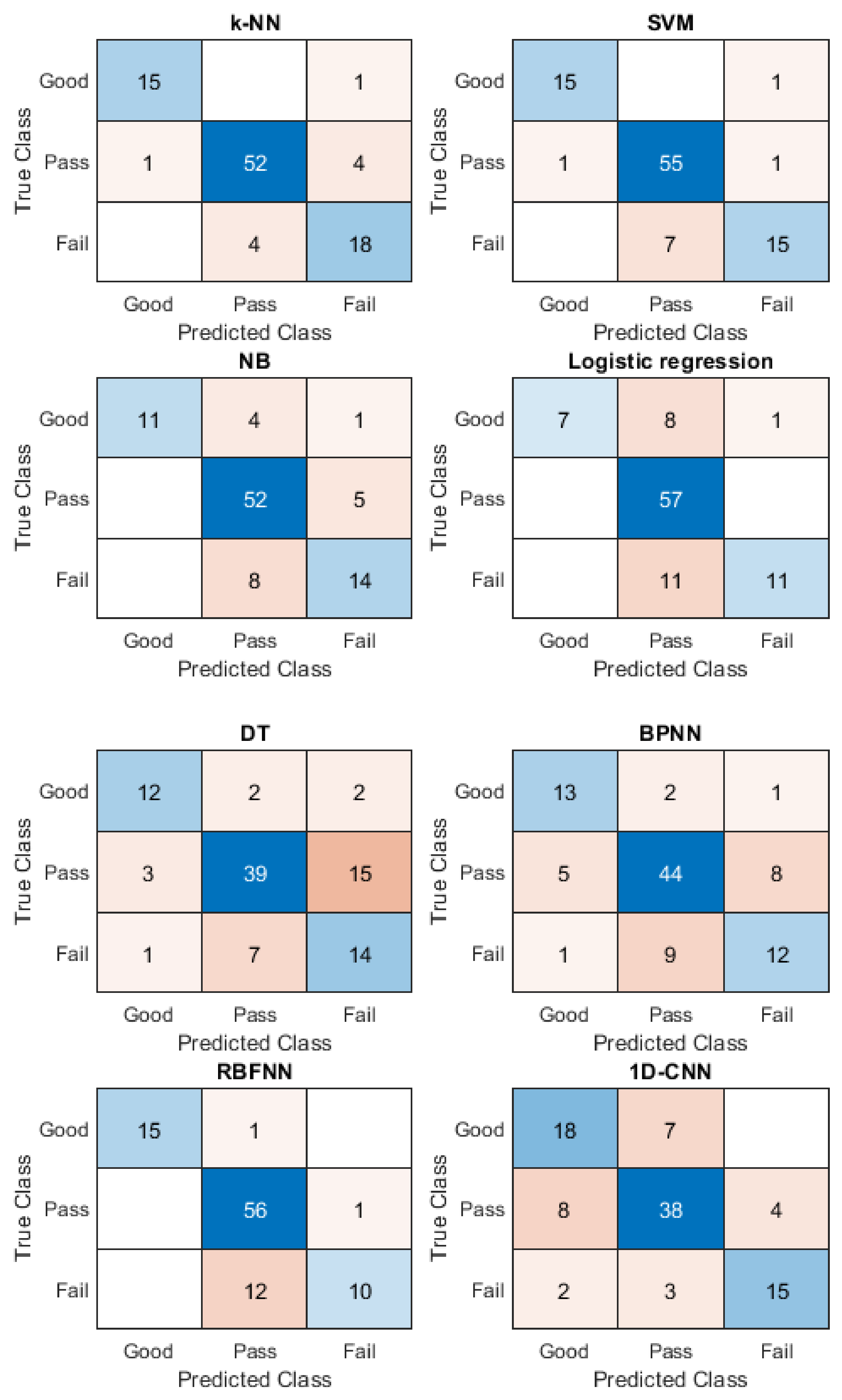

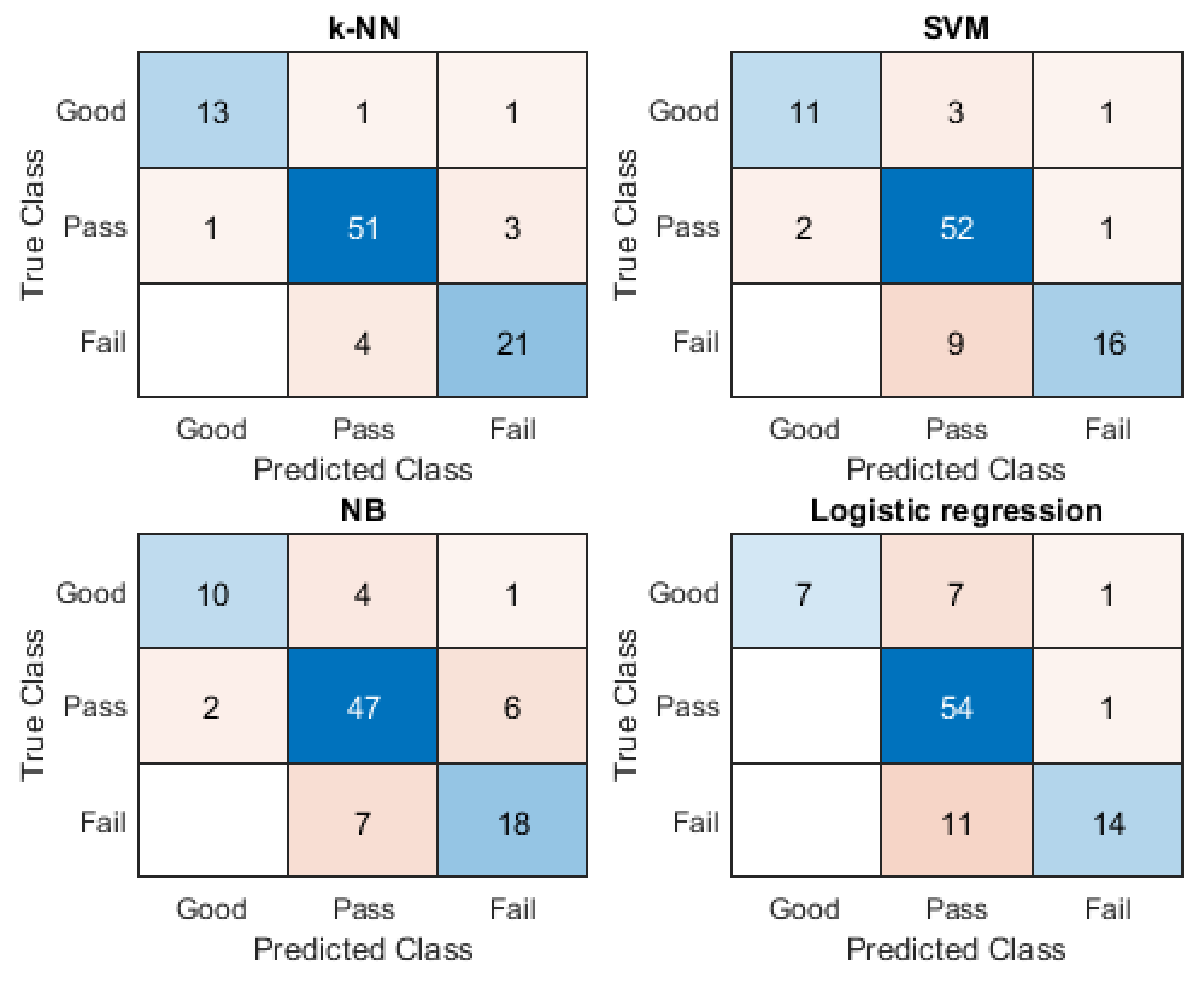

3.2. Assessing Motion Accuracy of Baduanjin

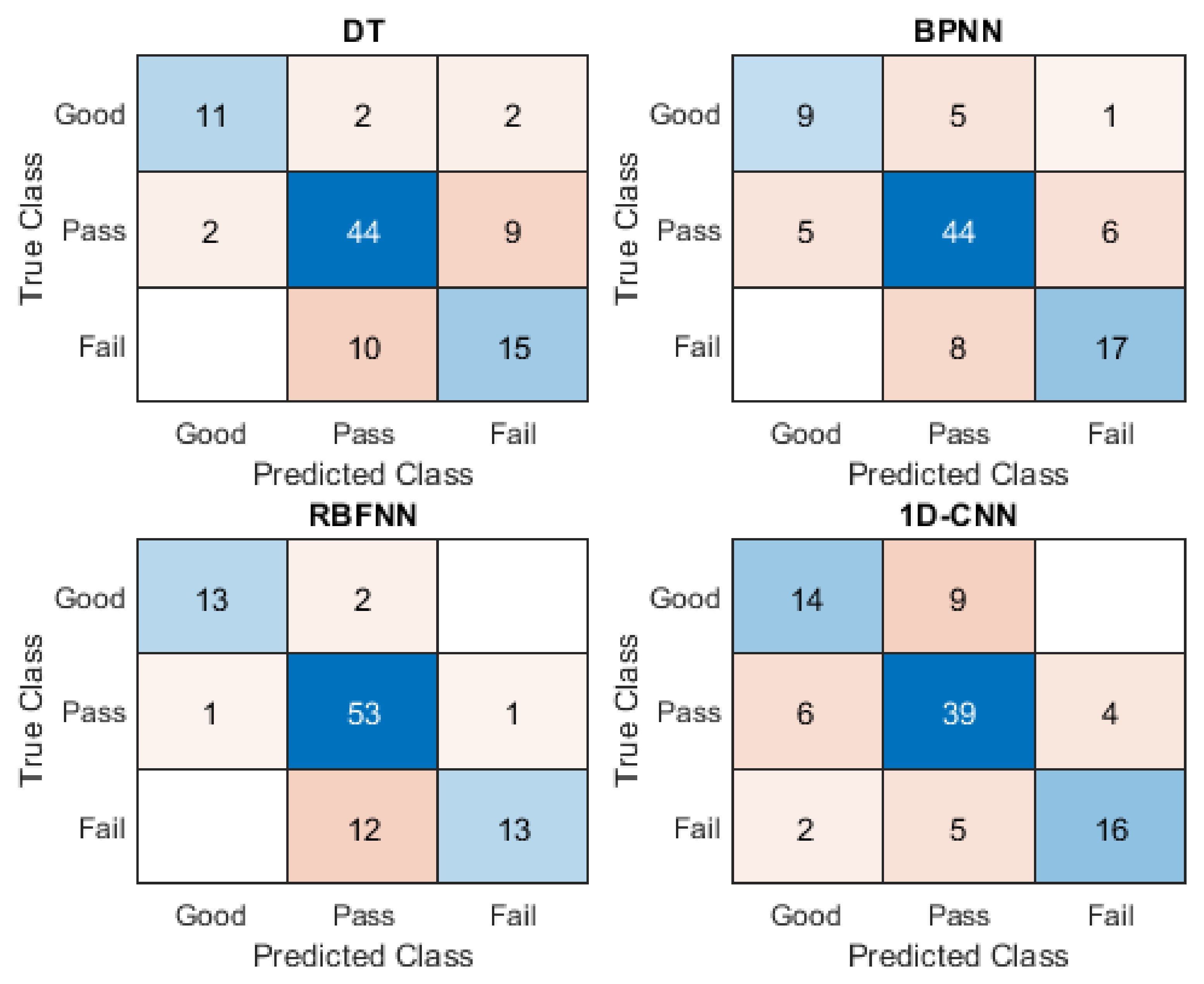

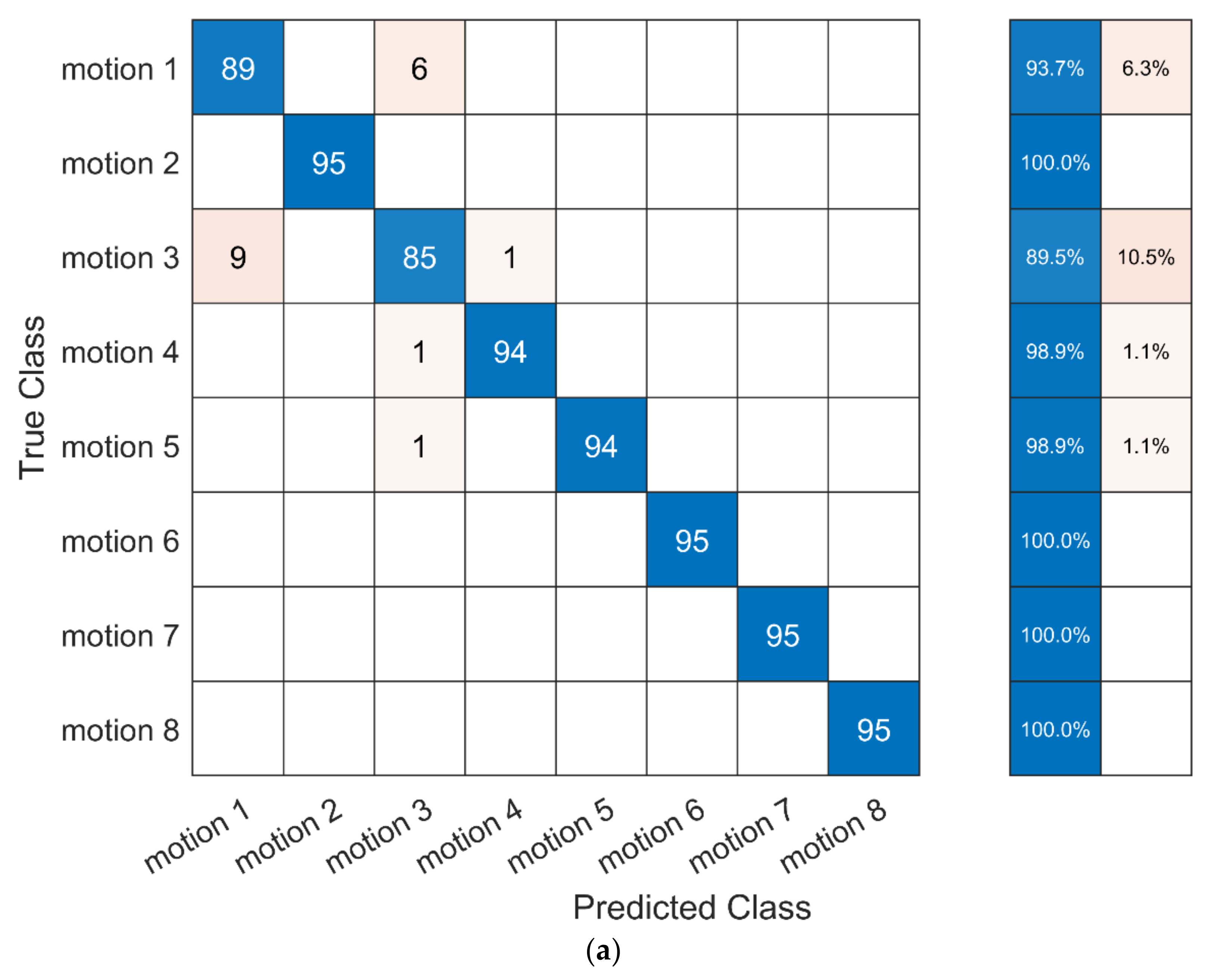

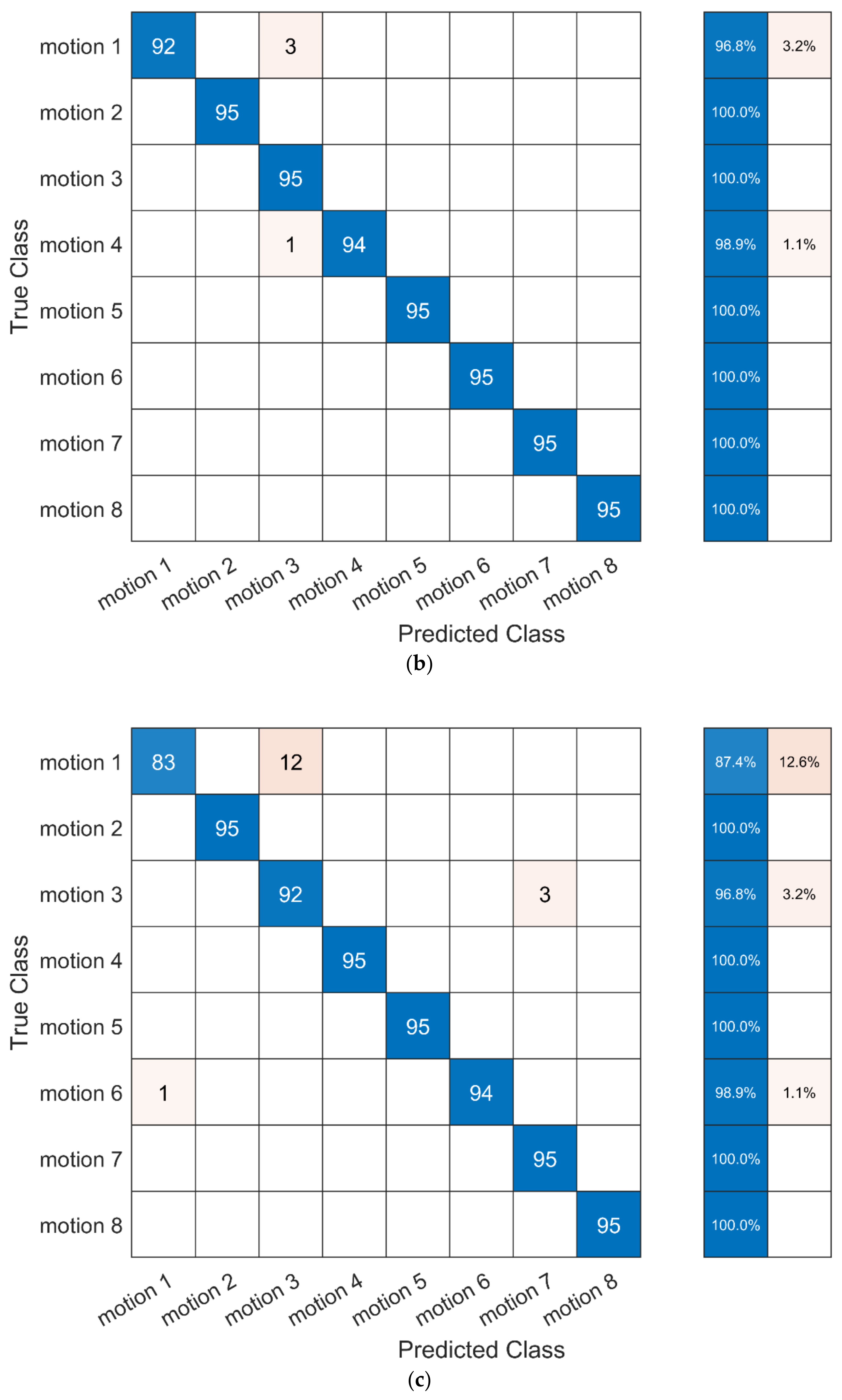

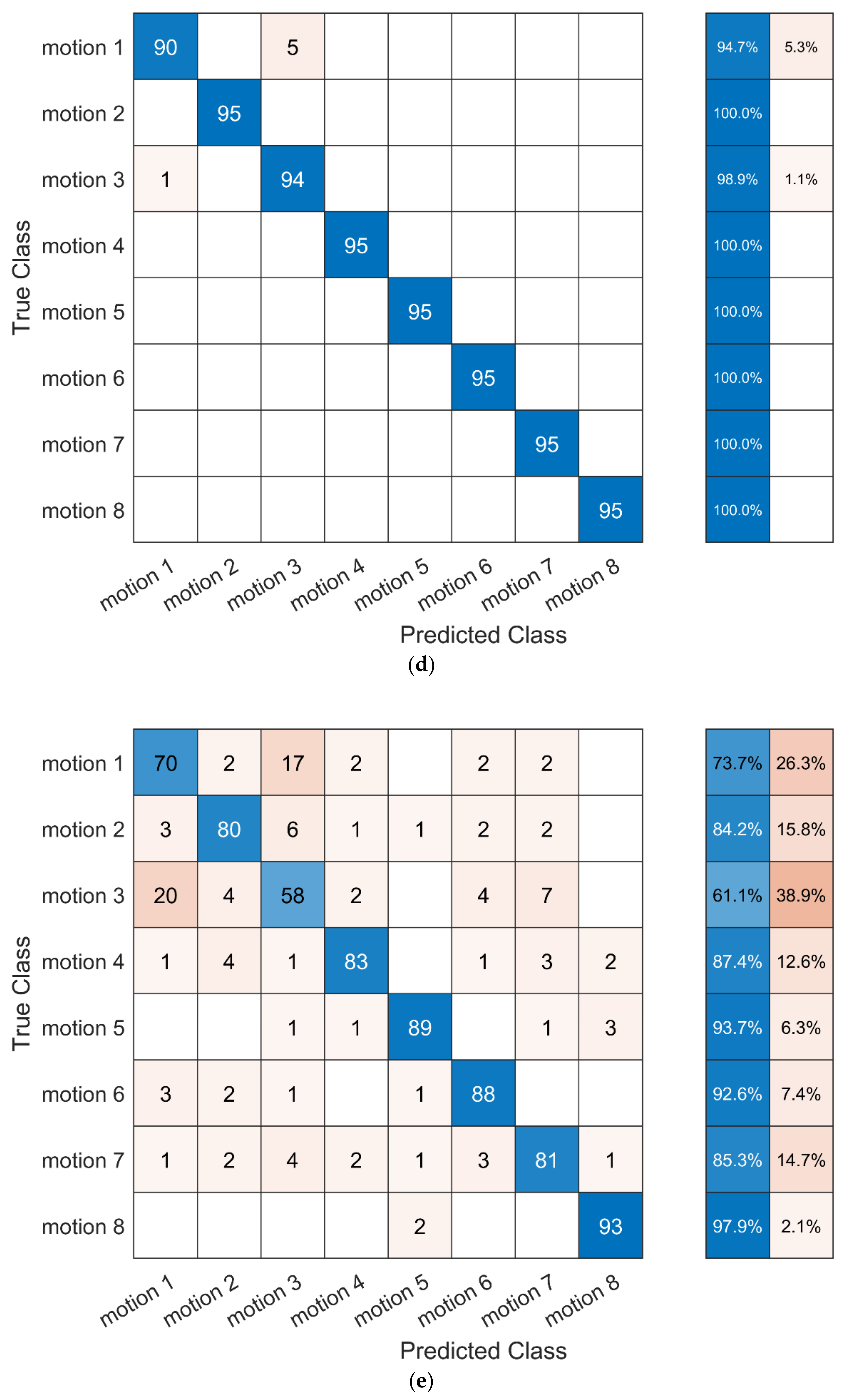

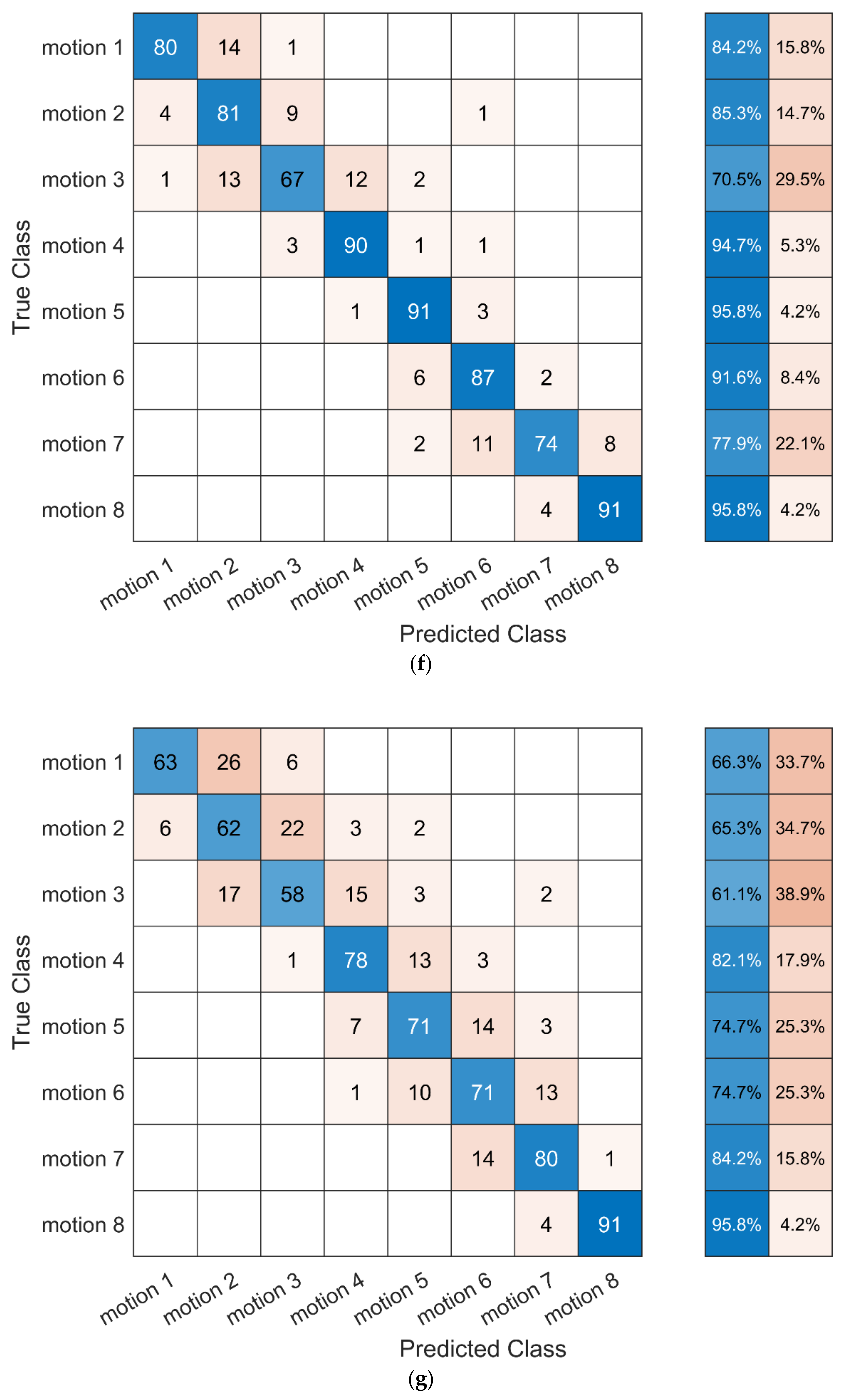

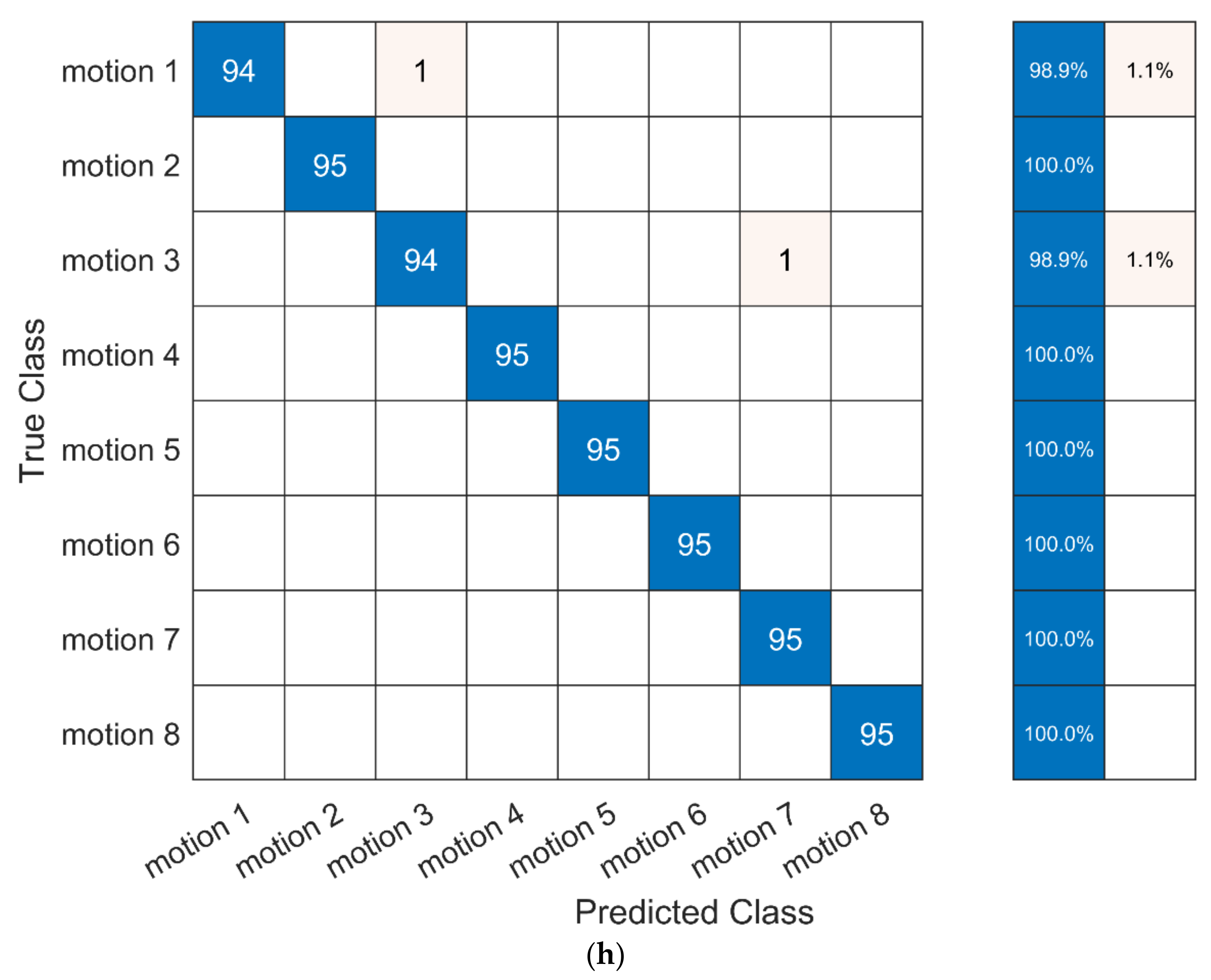

3.3. Recognizing Motions of Baduanjin

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Li, H.; Khoo, S.; Yap, H.J. Differences in Motion Accuracy of Baduanjin between Novice and Senior Students on Inertial Sensor Measurement Systems. Sensors 2020, 20, 6258.

2. The Central People’s Government of the People’s Republic of China. Healthy China 2030. Available online: http://www.gov.cn/zhengce/2016-10/25/content_5124174.htm (accessed on 21 July 2021).

3. Ministry of Education of People’s Republic of China. Several Opinions on Comprehensively Improving the Quality of Higher Education. Available online: http://www.moe.gov.cn/srcsite/A08/s7056/201203/t20120316_146673.html (accessed on 7 August 2021).

4. Li, F.Y.; Chen, X.H.; Liu, Y.C.; Zhang, Z.; Guo, L.; Huang, Z.L. Research progress on the teaching status of fitness Qigong Ba Duan Jin. China Med. Her. 2018, 15, 63–66.

5. Zhan, Y.Y. Exploring a New System of Martial Arts Teaching Content in Common Universities in Shanghai. Master’s Thesis, East China Normal University, Shanghai, China, 2015.

6. Yamaoka, K.; Uehara, M.; Shima, T.; Tamura, Y. Feedback of flying disc throw with Kinect and its evaluation. Procedia Comput. Sci. 2013, 22, 912–920.

7. Elaoud, A.; Barhoumi, W.; Zagrouba, E.; Agrebi, B. Skeleton-based comparison of throwing motion for handball players. J. Ambient Intell. Humaniz. Comput. 2019, 11, 419–431.

8. Chen, X.M.; Chen, Z.B.; Li, Y.; He, T.Y.; Hou, J.H.; Liu, S.; He, Y. ImmerTai: Immersive motion learning in VR environments. J. Vis. Commun. Image Represent. 2019, 58, 416–427.

9. Thomsen, A.S.S.; Bach-Holm, D.; Kjærbo, H.; Højgaard-Olsen, K.; Subhi, Y.; Saleh, G.M.; Park, Y.S.; la Cour, M.; Konge, L. Operating room performance improves after proficiency-based virtual reality cataract surgery training. Ophthalmology 2017, 124, 524–531.

10. Van der Kruk, E.; Reijne, M.M. Accuracy of human motion capture systems for sport applications; state-of-the-art review. Eur. J. Sport Sci. 2018, 18, 806–819.

11. Spörri, J.; Schiefermüller, C.; Müller, E. Collecting kinematic data on a ski track with optoelectronic stereophotogrammetry: A methodological study assessing the feasibility of bringing the biomechanics lab to the field. PLoS ONE 2016, 11, e0161757.

12. Panjkota, A.; Stancic, I.; Supuk, T. Outline of a qualitative analysis for the human motion in case of ergometer rowing. In Proceedings of the 9th WSEAS International Conference on Simulation, Modelling and Optimization, Iwate Prefectural University, Iwate, Japan, 23–25 March 2010; pp. 182–186.

13. Schuler, N.; Bey, M.; Shearn, J.; Butler, D. Evaluation of an electromagnetic position tracking device for measuring in vivo, dynamic joint kinematics. J. Biomech. 2005, 38, 2113–2117.

14. Noitom Technology Ltd. Perception Neuron 2.0. Available online: https://www.noitom.com.cn/perception-neuron-2-0.html (accessed on 20 August 2020).

15. McGinnis, R.S. Advancing Applications of IMUs in Sports Training and Biomechanics. Ph.D. Thesis, University of Michigan, Ann Arbor, MI, USA, 2013.

16. Rokoko Company. Tech Specs of Smartgloves. Available online: https://www.rokoko.com/products/smartgloves/tech-specs (accessed on 12 August 2021).

17. Mannini, A.; Sabatini, A.M. Machine Learning Methods for Classifying Human Physical Activity from On-Body Accelerometers. Sensors 2010, 10, 1154–1175.

18. Sers, R.; Forrester, S.E.; Moss, E.; Ward, S.; Zecca, M. Validity of the Perception Neuron inertial motion capture system for upper body motion analysis. Measurement 2019, 149, 107024.

19. Dai, H.; Cai, B.; Song, J.; Zhang, D.Y. Skeletal animation based on BVH motion data. In Proceedings of the 2nd International Conference on Information Engineering and Computer Science, Wuhan, China, 25–26 December 2010; pp. 1–4.

20. Yap, H.J.; Taha, Z.; Dawal, S.Z.M. A Generic Approach of Integrating 3D Models into Virtual Manufacturing. J. Zhejiang Univ. SCIENCE C (Comput. Electron.) 2012, 13, 20–28.

21. Gao, L.; Bourke, A.K.; Nelson, J. Evaluation of accelerometer based multi-sensor versus single-sensor activity recognition systems. Med. Eng. Phys. 2014, 36, 779–785.

22. Altun, K.; Barshan, B.; Tunçel, O. Comparative study on classifying human activities with miniature inertial and magnetic sensors. Pattern Recognit. 2010, 43, 3605–3620.

23. Zhang, M.; Sawchuk, A.A. Human Daily Activity Recognition With Sparse Representation Using Wearable Sensors. IEEE J. Biomed. Health Inform. 2013, 17, 553–560.

24. Bao, L.; Intille, S.S. Activity Recognition from User-Annotated Acceleration Data. In Proceedings of the Pervasive Computing, Pervasive 2004, Vienna, Austria, 21–23 April 2004.

25. Altun, K.; Barshan, B. Human Activity Recognition Using Inertial/Magnetic Sensor Units. In Proceedings of the First International Workshop on Human Behavior Understanding (HBU 2010), in conjunction with the 20th International Conference on Pattern Recognition, Istanbul, Turkey, 20 August 2010; pp. 38–51.

26. Khan, A.M.; Lee, Y.K.; Lee, S.Y.; Kim, T.S. A Triaxial Accelerometer-Based Physical-Activity Recognition via Augmented-Signal Features and a Hierarchical Recognizer. IEEE Trans. Inf. Technol. Biomed. 2010, 14, 1166–1172.

27. Subasi, A.; Gursoy, M.I. EEG signal classification using PCA, ICA, LDA and support vector machines. Expert Syst. Appl. 2010, 37, 8659–8666.

28. Minhas, R.; Mohammed, A.A.; Wu, Q. A fast recognition framework based on extreme learning machine using hybrid object information. Neurocomputing 2010, 73, 1831–1839.

29. Guo, Z.B.; Zhang, Y. A Similar Distribution Discriminant Analysis with Orthogonal and Nearly Statistically Uncorrelated Characteristics. Math. Probl. Eng. 2019, 2019, 3145973.

30. Kenyhercz, M.W.; Passalacqua, N.V. Missing Data Imputation Methods and Their Performance with Biodistance Analyses. In Biological Distance Analysis; Pilloud, M.A., Hefner, J.T., Eds.; Elsevier Inc.: Amsterdam, The Netherlands, 2016; pp. 181–194.

31. MathWorks. Fitcknn. Available online: https://www.mathworks.com/help/stats/fitcknn.html?s_tid=srchtitle (accessed on 2 February 2021).

32. Boubou, S.; Suzuki, E. Classifying actions based on histogram of oriented velocity vectors. J. Intell. Inf. Syst. 2015, 44, 49–65.

33. Jegham, I.; Khalifa, A.B.; Alouani, I.; Mahjoub, M.A. Vision-based human action recognition: An overview and real world challenges. Digit. Investig. 2020, 32, 200901.

34. Morris, C.W.; Autret, A.; Boddy, L. Support vector machines for identifying organisms—A comparison with strongly partitioned radial basis function networks. Ecol. Model. 2001, 146, 57–67.

35. Erfani, S.M.; Rajasegarar, S.; Karunasekera, S.; Leckie, C. High-dimensional and large-scale anomaly detection using a linear one-class SVM with deep learning. Pattern Recognit. 2016, 58, 121–134.

36. MathWorks. Fitcecoc. Available online: https://www.mathworks.com/help/stats/fitcecoc.html?s_tid=srchtitle (accessed on 10 February 2021).

37. Iglesias, J.A. Creating Evolving User Behavior Profiles Automatically. IEEE Trans. Knowl. Data Eng. 2012, 24, 854–867.

38. MathWorks. Fitcnb. Available online: https://www.mathworks.com/help/stats/fitcnb.html?searchHighlight=fitcnb&s_tid=srchtitle (accessed on 2 February 2021).

39. Edgar, T.W.; Manz, D.O. Research Methods for Cyber Security; Syngress: Cambridge, MA, USA, 2017.

40. MathWorks. Fitclinear. Available online: https://www.mathworks.com/help/stats/fitclinear.html#namevaluepairarguments (accessed on 3 February 2021).

41. Lin, T. Code Comment Analysis for Improving Software Quality. In The Art and Science of Analyzing Software Data; Bird, C., Menzies, T., Zimmermann, T., Eds.; Morgan Kaufmann: San Mateo, CA, USA, 2015; pp. 493–517.

42. Dev, V.A.; Eden, M.R. Gradient Boosted Decision Trees for Lithology Classification. Comput. Aided Chem. Eng. 2019, 47, 113–118.

43. MathWorks. Fitctree. Available online: https://www.mathworks.com/help/stats/fitctree.html?searchHighlight=fitctree&s_tid=srchtitle (accessed on 3 February 2021).

44. Oyewale, A.M.; Kasali, A.O.; Phazamile, K.; Abiodun, M.V.; Adeyinka, A.I. Forecasting Inflation Rates Using Artificial Neural Networks. J. Comput. Math. 2019, 9, 201–207.

45. Wang, G.; Cheng, G.; Carr, T.R. The application of improved NeuroEvolution of Augmenting Topologies neural network in Marcellus Shale lithofacies prediction. Comput. Geosci. 2013, 54, 50–65.

46. Satapathy, S.K.; Dehuri, S.; Jagadev, A.K.; Mishra, S. EEG Signal Classification Using RBF Neural Network Trained with Improved PSO Algorithm for Epilepsy Identification. In EEG Brain Signal Classification for Epileptic Seizure Disorder Detection; Satapathy, S.K., Dehuri, S., Jagadev, A.K., Mishra, S., Eds.; Academic Press: Cambridge, MA, USA, 2019; pp. 67–89.

47. Goodfellow, I.; Bengio, Y.; Courville, A. Deep Learning; MIT Press: Cambridge, MA, USA, 2016; Volume 1.

48. MathWorks. Convolutional Neural Network. Available online: https://www.mathworks.com/discovery/convolutional-neural-network-matlab.html?s_tid=srchtitle_convolutional%2520neural%2520network%2520_1 (accessed on 12 August 2021).

49. Alexiadis, D.S.; Daras, P. Quaternionic Signal Processing Techniques for Automatic Evaluation of Dance Performances From MoCap Data. IEEE Trans. Multimed. 2014, 16, 1391–1406.

| Type of Feature | Feature ID | Description of the Features |
|---|---|---|
| Time-domain features | 1–3 | Mean value of the x, y and z components |
| | 4–6 | Variance of the x, y and z components |
| | 7–9 | Standard deviation of the x, y and z components |
| | 10–12 | Skewness of the x, y and z components |
| | 13–15 | Kurtosis of the x, y and z components |
| | 16–18 | Quartile deviation of the x, y and z components |
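The 18 time-domain features listed in the table above can be computed per signal with Python's `statistics` module. This sketch assumes MATLAB-style defaults, which the MATLAB toolchain cited in the references suggests but the text does not confirm: variance and standard deviation normalize by N − 1, skewness and kurtosis are the biased moment ratios, and kurtosis is not excess kurtosis (a normal distribution gives 3). Quartiles come from `statistics.quantiles` with its default "exclusive" method, which may differ slightly from other quartile conventions. Which physical signal supplies the x, y and z components is not specified here.

```python
import math
import statistics


def skewness(values):
    """Biased skewness m3 / m2**1.5 (NaN for a constant signal)."""
    mu = statistics.fmean(values)
    m2 = statistics.fmean([(v - mu) ** 2 for v in values])
    m3 = statistics.fmean([(v - mu) ** 3 for v in values])
    return m3 / m2 ** 1.5 if m2 else math.nan


def kurtosis(values):
    """Biased, non-excess kurtosis m4 / m2**2 (NaN for a constant signal)."""
    mu = statistics.fmean(values)
    m2 = statistics.fmean([(v - mu) ** 2 for v in values])
    m4 = statistics.fmean([(v - mu) ** 4 for v in values])
    return m4 / m2 ** 2 if m2 else math.nan


def quartile_deviation(values):
    """Semi-interquartile range (Q3 - Q1) / 2."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    return (q3 - q1) / 2


# Order follows the table: each statistic is applied to x, then y, then z.
STATISTICS = (statistics.fmean, statistics.variance, statistics.stdev,
              skewness, kurtosis, quartile_deviation)


def time_domain_features(xs, ys, zs):
    """Return the 18-element feature vector (IDs 1-18) for one x/y/z signal."""
    return [stat(axis) for stat in STATISTICS for axis in (xs, ys, zs)]


# Hypothetical five-sample signal; real recordings would be much longer.
signal = [1.0, 2.0, 3.0, 4.0, 5.0]
features = time_domain_features(signal, signal, [2.0 * v for v in signal])
print(len(features), features[:3])
```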

| Motion | Teacher A: Good | Teacher A: Pass | Teacher A: Fail | Teacher B: Good | Teacher B: Pass | Teacher B: Fail | Kendall Value |
|---|---|---|---|---|---|---|---|
| Motion-1 | 16 | 57 | 22 | 15 | 55 | 25 | 0.941 |
| Motion-2 | 21 | 53 | 21 | 24 | 50 | 21 | 0.882 |
| Motion-3 | 26 | 58 | 11 | 23 | 49 | 23 | 0.831 |
| Motion-4 | 22 | 58 | 15 | 19 | 49 | 27 | 0.824 |
| Motion-5 | 20 | 57 | 18 | 20 | 56 | 19 | 0.838 |
| Motion-6 | 23 | 55 | 17 | 20 | 54 | 21 | 0.907 |
| Motion-7 | 29 | 59 | 7 | 26 | 62 | 7 | 0.944 |
| Motion-8 | 61 | 34 | 0 | 61 | 34 | 0 | 0.862 |
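Agreement between Teacher A and Teacher B can be quantified with a rank-based concordance statistic. Assuming the "Kendall Value" in the table above is Kendall's coefficient of concordance W with a correction for tied ranks (the text does not name the exact variant), it can be computed from per-student grades as sketched below. The table reports only grade counts, so W cannot be recomputed from it; the ratings in the usage lines are hypothetical, and the Fail < Pass < Good encoding is an assumption.

```python
def _ranks_with_ties(values):
    """Average (1-based) ranks plus the size of each group of tied values."""
    order = sorted(range(len(values)), key=values.__getitem__)
    ranks, tie_sizes = [0.0] * len(values), []
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1  # tied values share the mean rank
        tie_sizes.append(j - i + 1)
        i = j + 1
    return ranks, tie_sizes


def kendalls_w(ratings):
    """Tie-corrected Kendall's W for m raters (rows) scoring n subjects (columns)."""
    m, n = len(ratings), len(ratings[0])
    rank_rows, tie_term = [], 0.0
    for row in ratings:
        ranks, ties = _ranks_with_ties(row)
        rank_rows.append(ranks)
        tie_term += sum(t ** 3 - t for t in ties)
    totals = [sum(r[i] for r in rank_rows) for i in range(n)]
    mean_total = sum(totals) / n
    s = sum((t - mean_total) ** 2 for t in totals)
    # W = 12 S / (m^2 (n^3 - n) - m * sum of tie corrections)
    return 12.0 * s / (m ** 2 * (n ** 3 - n) - m * tie_term)


GRADE = {"Fail": 0, "Pass": 1, "Good": 2}  # assumed ordinal encoding
teacher_a = [GRADE[g] for g in ("Good", "Pass", "Pass", "Fail", "Good")]
teacher_b = [GRADE[g] for g in ("Good", "Pass", "Fail", "Fail", "Good")]
print(round(kendalls_w([teacher_a, teacher_b]), 3))
```

W is 1 when the raters rank all students identically and 0 when, with two raters, their rankings are exact reverses; the formula is undefined if every rater gives every student the same grade.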

| Classifier | Motion-1 (%) | Motion-2 (%) | Motion-3 (%) | Motion-4 (%) | Motion-5 (%) | Motion-6 (%) | Motion-7 (%) | Motion-8 (%) |
|---|---|---|---|---|---|---|---|---|
| k-NN | 89.47¹ | 92.63¹ | 91.58¹ | 92.63¹ | 89.47¹ | 92.63¹ | 87.37 | 88.42¹ |
| SVM | 89.47¹ | 84.21 | 80.00 | 92.63¹ | 80.00 | 75.79 | 95.79¹ | 80.00 |
| NB | 81.05 | 83.16 | 74.74 | 90.53 | 77.89 | 80.00 | 82.11 | 76.84 |
| Logistic Regression | 78.95 | 71.58 | 62.11 | 81.05 | 84.21 | 77.89 | 81.05 | 76.84 |
| DT | 73.68 | 65.26 | 65.26 | 61.05 | 65.26 | 62.11 | 73.68 | 65.26 |
| BPNN | 73.68 | 61.05 | 63.16 | 70.53 | 78.95 | 66.32 | 81.05 | 73.68 |
| RBFNN | 83.16 | 67.37 | 75.79 | 75.79 | 78.95 | 75.79 | 84.21 | 70.53 |
| 1D-CNN | 74.74 | 71.58 | 76.84 | 69.47 | 76.84 | 88.42 | 91.58 | 78.95 |
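Each row of the teacher-grading table sums to 95 graded performances per motion (for example, 16 + 57 + 22 = 95 for Motion-1), and the accuracies above are consistent with that denominator: 85 correct predictions out of 95 gives 85/95 ≈ 89.47%. The helpers below compute accuracy and a confusion matrix from label lists. The label lists in the usage example are hypothetical, and which teacher's grades served as the reference labels is not stated here.

```python
def accuracy_percent(y_true, y_pred):
    """Share of samples whose predicted grade equals the reference grade, in %."""
    if not y_true or len(y_true) != len(y_pred):
        raise ValueError("expected two non-empty label lists of equal length")
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return 100.0 * correct / len(y_true)


def confusion_matrix(y_true, y_pred, labels):
    """Counts with rows = reference grade and columns = predicted grade."""
    index = {label: i for i, label in enumerate(labels)}
    matrix = [[0] * len(labels) for _ in labels]
    for t, p in zip(y_true, y_pred):
        matrix[index[t]][index[p]] += 1
    return matrix


# Hypothetical labels shaped like Teacher A's Motion-1 grades (16 Good, 57 Pass, 22 Fail).
y_true = ["Good"] * 16 + ["Pass"] * 57 + ["Fail"] * 22
# Hypothetical predictions: the last 10 "Fail" samples are misclassified as "Pass".
y_pred = y_true[:85] + ["Pass"] * 10
print(f"{accuracy_percent(y_true, y_pred):.2f}")  # 85 / 95 correct -> 89.47
print(confusion_matrix(y_true, y_pred, ["Good", "Pass", "Fail"]))
```

The confusion matrix shows where errors fall (here, Fail graded as Pass), which a single accuracy figure hides.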

| Classifier | Motion-1 (%) | Motion-2 (%) | Motion-3 (%) | Motion-4 (%) | Motion-5 (%) | Motion-6 (%) | Motion-7 (%) | Motion-8 (%) |
|---|---|---|---|---|---|---|---|---|
| k-NN | 89.47¹ | 86.32¹ | 88.42¹ | 91.58¹ | 91.58¹ | 86.32¹ | 85.26 | 86.32¹ |
| SVM | 83.16 | 72.63 | 74.74 | 86.32 | 83.16 | 84.21 | 87.37¹ | 73.68 |
| NB | 78.95 | 78.95 | 68.42 | 88.42 | 80.00 | 81.05 | 84.21 | 75.79 |
| Logistic Regression | 78.95 | 71.58 | 62.11 | 81.05 | 84.21 | 77.89 | 81.05 | 76.84 |
| DT | 73.68 | 65.26 | 65.26 | 61.05 | 65.26 | 62.11 | 73.68 | 65.26 |
| BPNN | 73.68 | 61.05 | 63.16 | 70.53 | 78.95 | 66.32 | 81.05 | 73.68 |
| RBFNN | 83.16 | 67.37 | 75.79 | 75.79 | 78.95 | 75.79 | 84.21 | 70.53 |
| 1D-CNN | 72.63 | 72.63 | 62.11 | 76.84 | 80.00 | 87.37 | 81.05 | 78.95 |

| Classifier | Processing Time (s) |
|---|---|
| k-NN | 0.008¹ |
| SVM | 4.751 |
| NB | 0.021 |
| Logistic Regression | 0.020 |
| DT | 0.010 |
| BPNN | 7.709 |
| RBFNN | 0.063 |
| 1D-CNN | 9.179 |

| Classifier | Accuracy (%) | Processing Time (s) |
|---|---|---|
| k-NN | 97.63 | 0.055² |
| SVM | 99.47 | 0.914 |
| NB | 97.89 | 0.174 |
| Logistic Regression | 99.21 | 0.407 |
| DT | 84.47 | 0.087 |
| BPNN | 86.97 | 13.270 |
| RBFNN | 75.53 | 0.295 |
| 1D-CNN | 99.74¹ | 80.958 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Li, H.; Yap, H.J.; Khoo, S. Motion Classification and Features Recognition of a Traditional Chinese Sport (Baduanjin) Using Sampled-Based Methods. Appl. Sci. 2021, 11, 7630. https://doi.org/10.3390/app11167630

Li H, Yap HJ, Khoo S. Motion Classification and Features Recognition of a Traditional Chinese Sport (Baduanjin) Using Sampled-Based Methods. Applied Sciences. 2021; 11(16):7630. https://doi.org/10.3390/app11167630

Chicago/Turabian Style: Li, Hai, Hwa Jen Yap, and Selina Khoo. 2021. "Motion Classification and Features Recognition of a Traditional Chinese Sport (Baduanjin) Using Sampled-Based Methods" Applied Sciences 11, no. 16: 7630. https://doi.org/10.3390/app11167630

APA Style: Li, H., Yap, H. J., & Khoo, S. (2021). Motion Classification and Features Recognition of a Traditional Chinese Sport (Baduanjin) Using Sampled-Based Methods. Applied Sciences, 11(16), 7630. https://doi.org/10.3390/app11167630