A Hybrid Microstructure Piezoresistive Sensor with Machine Learning Approach for Gesture Recognition

Abstract

1. Introduction

2. System Design and Method

2.1. Sensor Manufacturing

2.2. Design and Measurements

2.3. Gesture Recognition Procedure

3. Application in Interventional Surgical Robot Training

3.1. Master Interface

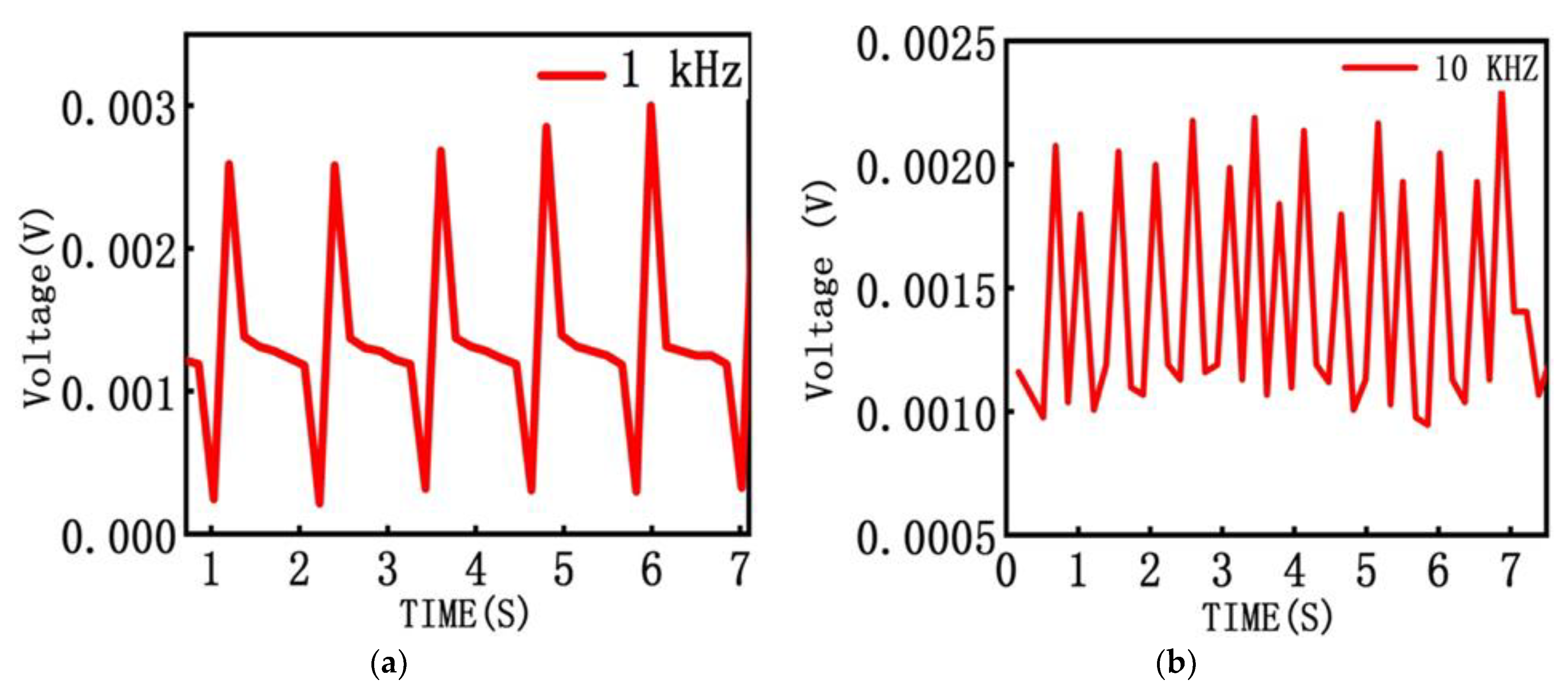

3.2. Sensing Performance

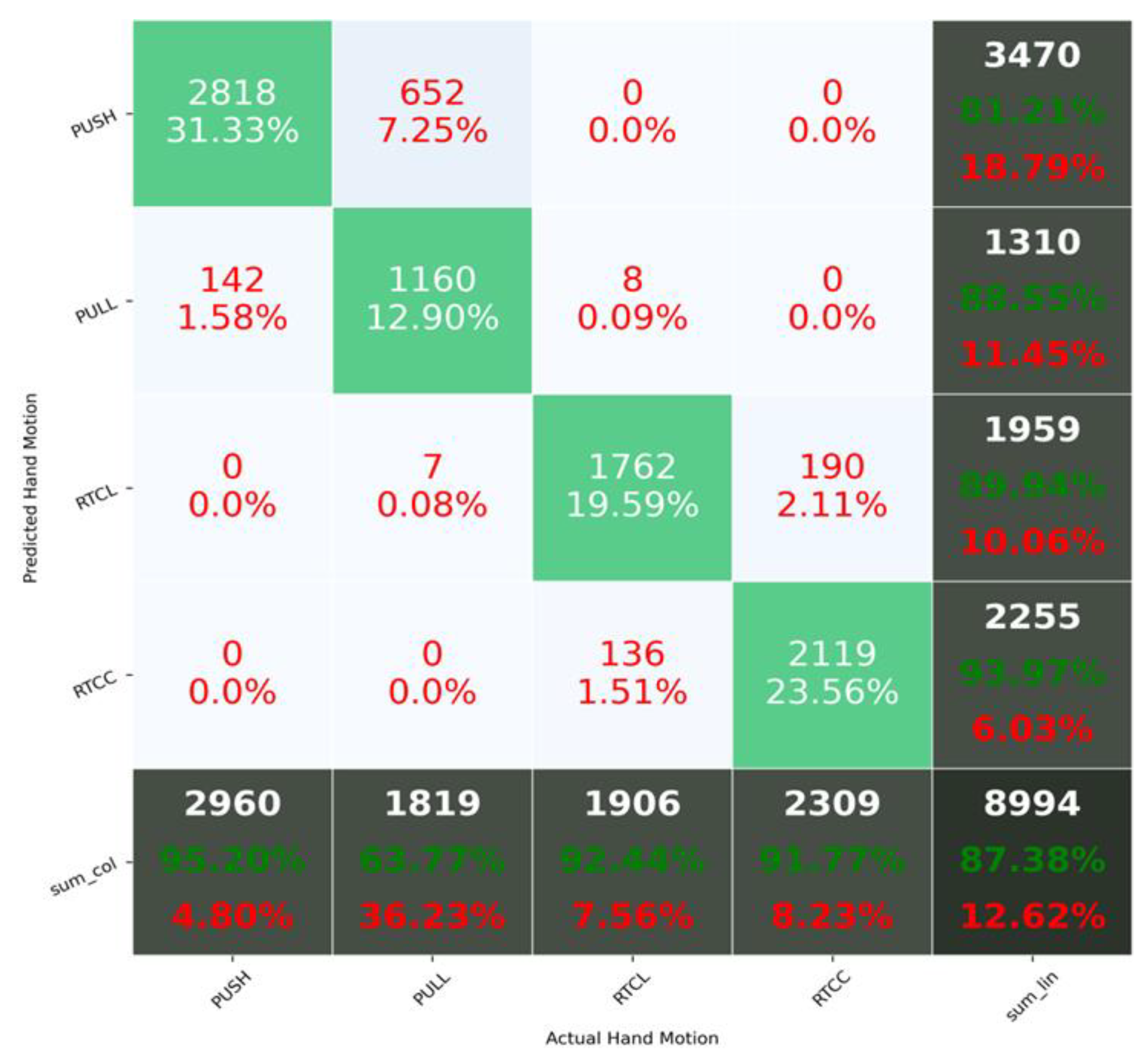

3.3. Motion Analysis

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


Recognition accuracy on test data (%), by classification method:

| Classification Method | PUSH | PULL | RTCL | RTCC | Aggregate |
|---|---|---|---|---|---|
| LSTM + Dense | 81.21 | 88.55 | 89.94 | 93.97 | 87.38 |
| SVM | 78.66 | 83.81 | 89.21 | 91.13 | 84.82 |
| MLP | 82.78 | 83.81 | 90.19 | 94.23 | 87.37 |
| DT | 84.04 | 75.41 | 87.18 | 89.88 | 84.52 |
| KNN | 85.33 | 80.70 | 90.58 | 92.08 | 87.31 |
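The per-gesture comparison in the table above can be read off programmatically. The following is a minimal sketch, not part of the original study: the accuracy values are transcribed from the table, while the names `GESTURES`, `ACCURACY`, and `best_per_column` are hypothetical and introduced only for illustration.

```python
# Accuracy values (%) transcribed from the table above.
# All identifiers here are illustrative assumptions, not from the paper.
GESTURES = ["PUSH", "PULL", "RTCL", "RTCC", "Aggregate"]

ACCURACY = {
    "LSTM + Dense": [81.21, 88.55, 89.94, 93.97, 87.38],
    "SVM": [78.66, 83.81, 89.21, 91.13, 84.82],
    "MLP": [82.78, 83.81, 90.19, 94.23, 87.37],
    "DT": [84.04, 75.41, 87.18, 89.88, 84.52],
    "KNN": [85.33, 80.70, 90.58, 92.08, 87.31],
}


def best_per_column(accuracy, columns):
    """Return {column: (method, accuracy)} for the top-scoring method per column."""
    best = {}
    for i, col in enumerate(columns):
        # Pick the method whose i-th accuracy value is largest.
        method = max(accuracy, key=lambda m: accuracy[m][i])
        best[col] = (method, accuracy[method][i])
    return best


if __name__ == "__main__":
    for col, (method, acc) in best_per_column(ACCURACY, GESTURES).items():
        print(f"{col}: {method} ({acc:.2f}%)")
```

Under these transcribed values, no single method leads on every gesture, and the aggregate scores of LSTM + Dense, MLP, and KNN differ by less than 0.1 percentage points.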

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Al-Handarish, Y.; Omisore, O.M.; Chen, J.; Cao, X.; Akinyemi, T.O.; Yan, Y.; Wang, L. A Hybrid Microstructure Piezoresistive Sensor with Machine Learning Approach for Gesture Recognition. Appl. Sci. 2021, 11, 7264. https://doi.org/10.3390/app11167264
