Abstract

Random outbreaks of infectious diseases in the past have left a persistent impact on societies. Currently, COVID-19 is spreading worldwide and consequently risking human lives. In this regard, maintaining physical distance has turned into an essential precautionary measure to curb the spread of the virus. In this paper, we propose an autonomous monitoring system that is able to enforce physical distancing rules in large areas round the clock without human intervention. We present a novel system to automatically detect groups of individuals who do not comply with physical distancing constraints, i.e., maintaining a distance of 1 m, by tracking them within large areas to re-identify them in case of repetitive non-compliance and enforcing physical distancing. We used a distributed network of multiple CCTV cameras mounted to the walls of buildings for the detection, tracking and re-identification of non-compliant groups. Furthermore, we used multiple self-docking autonomous robots with collision-free navigation to enforce physical distancing constraints by sending alert messages to those persons who are not adhering to physical distancing constraints. We conducted 28 experiments that included 15 participants in different scenarios to evaluate and highlight the performance and significance of the present system. The presented system is capable of re-identifying repetitive violations of physical distancing constraints by a non-compliant group, with high accuracy in terms of detection, tracking and localization through a set of coordinated CCTV cameras. Autonomous robots in the present system are capable of attending to non-compliant groups in multiple regions of a large area and encouraging them to comply with the constraints.

1. Introduction

In the past few years, infectious diseases have proven very challenging to control because they are easily transmitted, resulting in a large impact on society. They can spread across different geographical levels through human-to-human transmission. According to the Centers for Disease Control and Prevention (CDC), the national public health institute of the United States, an infectious disease can be declared a ‘pandemic’ when a sudden and rapid increase in its cases is seen in the global population. In recent history, various pandemics have been reported. A report by Nicholas [1] describes the history of pandemics and their impact in terms of death toll, which is summarized in Table 1.

Table 1.

The history of pandemics with respect to the death toll.

Based on the history of pandemics presented in this study [1], it is likely that more such pandemics will arise in the future. Therefore, nations should be prepared for them. It is imperative to understand the reasons behind the transmission of such infectious diseases. A large number of epidemiological studies have pointed out that the main path of the spread of such infectious diseases has been human-to-human transmission [2]. This indicates that infectious diseases spread when people maintain direct physical contact with each other. Physical distancing has always been recommended as the most effective safety measure to avoid the spread of such pandemics [3] and is currently being implemented by governments worldwide to slow the spread of the COVID-19 virus [4]. Despite the implementation of such measures, the span of the virus spread has been increasing with time due to the violation of set constraints because of lack of knowledge and carelessness. In this case, continuous monitoring is required to enforce the constraints to control the spread of the virus. It is difficult to manually monitor all areas; therefore, intelligent and autonomous systems are required for efficient and persistent monitoring of set constraints such as the use of facemasks, physical distancing, body temperature checks, etc. Moreover, modern interactive technology platforms such as robots hold potential to be used for the enforcement of those constraints through social interaction with people. Such approaches can be beneficial in reducing human-to-human interaction to potentially curb the spread of the virus. Robotics has a huge potential to play a vital role in the current fight against the COVID-19 virus [5]. Robots can be deployed for various purposes to help curb the spread of the virus. For instance, they can be utilized for mobile surveillance, disinfection, delivery, interactive awareness systems, companion robots, vital signs detection, etc. 
In past research, a wide range of multi-agent robot systems (MARS) based on heterogeneous distributed sensor networks have been proposed for effective and efficient surveillance in multiple scenarios [6,7]. MARS is a system that comprises fixed agents, i.e., sensors fixed at some location, and single or multiple mobile agents, i.e., robots.

During the current pandemic situation, performing operational tasks, such as surveillance, digital interaction, help desks and medical service provision, using robots has gained huge popularity [8]. Fan et al. [9] presented an autonomous quadruped robot to ensure physical distancing to combat COVID-19. The designed robot was supposed to roam around the place for persistent surveillance to detect violations of physical distancing constraints. In case of any violation, the robot informed the people through verbal cues to maintain a safe distance. Moreover, social robots are playing a vital role in combating COVID-19 by minimizing person-to-person interactions, especially in healthcare services [10,11,12]. Recently, Sathyamoorthy et al. [13] presented a robot system to monitor physical distancing constraints in crowds and enforce them through robots by displaying alert messages on the robot’s mounted display. In the case of persistent non-compliance by a group of persons wandering from one place to another, the robot pursued that group and kept displaying the message. One of the limitations of this system is that while pursuing that group, there is a high possibility that the robot would not be able to attend to other non-compliant groups. Moreover, this study is missing the mechanism to track groups that remained unattended by the robot. Furthermore, there was no long-term tracking of non-compliant groups to further monitor their behavior after receiving the alert message from the robot. Consequently, it was not possible to track repetitive violations using this system. Due to these issues, this system may not be able to effectively enforce physical distancing constraints in large areas such as shopping malls, airports, etc. In this regard, we present an autonomous and interactive monitoring system with large-scale area coverage for effective and efficient monitoring to combat COVID-19 and future pandemics.

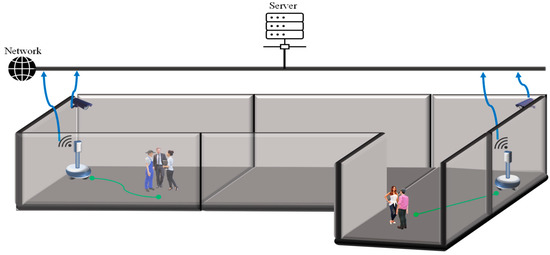

In the present study, we present a cooperative MARS for monitoring and enforcing physical distancing constraints in large areas through human–robot interaction (HRI) to combat COVID-19 and future pandemics. In the present study, a group of persons who violate physical distancing constraints is referred to as a non-compliant group. The aims of the proposed system are as follows: (1) persistent monitoring of large indoor areas using multiple CCTV cameras to detect the violation of physical distancing constraints; (2) interactive encouragement of non-compliant groups to adhere to physical distancing constraints by giving them an alert message through speech-based HRI; (3) long-term tracking and re-identification of non-compliant groups through a multi-camera system to alert them on highest priority and report to the control room in the case of repetitive violations of physical distancing constraints. As shown in Figure 1, the design of the proposed system is based on two types of agents: (1) fixed agents, i.e., calibrated CCTV cameras, and (2) mobile agents, i.e., self-docking autonomous robots with collision-free navigation. Both agents work cooperatively by mutually sharing useful information between each other.

Figure 1.

Multi-agent robot system.

In the present system, the persistent monitoring of physical distancing constraints is performed based on the visual information received from the distributed network of multiple CCTV cameras mounted within the building. The team of robots stays at the docking stations until a violation of physical distancing constraints is detected by the cameras. When a violation is detected, the system shares the location of the non-compliant group with the robot that is located closest to the area of the building where the violation was detected. After receiving the location, the robot navigates to it to convey an alert message to the target non-compliant group. The system architecture with a detailed description of each functional module is presented in Section 3. The main contributions of the present study are summarized as follows:

- We propose an intelligent and cooperative MARS for the efficient monitoring of physical distancing constraints and interactively enforcing them through HRI to combat COVID-19 and future pandemics. To the best of our knowledge, we are the first to propose such a monitoring system, which is based on a distributed network of multiple cameras and a multi-robot system (MRS) to combat ongoing and future pandemics.

- We develop a pipeline for group re-identification through person re-identification using a deep learning-based technique to track and re-identify non-compliant groups through the multi-camera system. This method ensures the long-term tracking of non-compliant groups that are wandering from one place to another in large areas, attending to them at highest priority through a robot and notifying the security control room in a timely manner in case of repetitive violations.

- Based on our proposed system, we ensured that all non-compliant groups were inclusively tracked and received the alert message about a breach of physical distancing constraints through HRI.

The rest of the paper is organized as follows. Section 2 provides an overview of the existing work related to multi-agent systems (MAS) with regard to surveillance, the effectiveness of physical distancing and the potential of robotics to combat COVID-19 and future pandemics. The proposed system with detailed descriptions of its modules is presented in Section 3. Evaluation metrics and experimental results are described in Section 4. Finally, we conclude the paper in Section 5 with a discussion about limitations and future directions.

2. Related Work

In this section, we review the previous literature on robotic systems specifically categorized into MAS for surveillance, the potential of robotics to combat COVID-19, the effectiveness of physical distancing and emerging technologies to monitor physical distancing.

2.1. Multi-Agent Systems for Intelligent Surveillance

Intelligent surveillance includes various tasks such as detection, tracking and understanding different behaviors in various environments [14]. MAS has gained huge attention in recent years due to its broad range of applications such as cooperative surveillance, distributed tracking of objects and intrusion detection [15,16,17]. Various advancements and methods have been proposed and implemented based on MAS to increase efficiency in surveillance. Milella et al. [6] implemented a MARS that is based on fixed and mobile agents for the active surveillance of places such as museums, airports, warehouses, etc. The system was able to detect the intrusion of persons within forbidden areas and send the location to the mobile agent, i.e., a robot, for further exploration of that area. Furthermore, Pennisi et al. [7] proposed an MRS for surveillance through a network of distributed sensors to detect a person through fixed sensors and send a robot to the location of the detected person for inspection and stopping the target person in case of any anomaly by blocking their way. Du et al. [18] presented a strategy for MAS-based surveillance to track an evader through cooperation between mobile agents. In another work, Mostafa et al. [19] proposed an autonomy model based on fuzzy logic to manage the autonomy of a MAS in complex environments. The aim of this model was to assist the autonomy management of the agents by helping them in making competent autonomous decisions. The application of this model was presented in the monitoring of movements of elderly people. In another work, Kariotoglou et al. [20] developed a framework based on stochastic reachability and hierarchical task allocation to solve the dimensionality problem faced by state-space gridding solutions based on dynamic programming for Markov decision processes in autonomous surveillance with a collection of pan-tilt cameras. 
The authors conducted the experiment with the proposed framework on a setup targeting industrial pan-tilt cameras and mobile robots. A MAS that includes robots as mobile agents is referred to as a MARS. During persistent surveillance through MARS, robots sequentially visit regions of interest (ROIs) based on applied constraints known as temporal logic (TL). Aksaray et al. [21] presented a method to minimize the time between visits of robots to ROIs by sharing the times of visits among them while considering their TLs to enhance efficiency and reduce redundant visits to those regions. Wu et al. [22] proposed an optimal method to sense robots based on less energy consumption for efficiently adjusting the position of mobile relay for maintaining the quality of the wireless link while the robots are moving. In another work, Jahn et al. [23] proposed a distributed technique for a team of robots to plan deformation while they are moving around a region to create a fence for perimeter surveillance and need to take this fence to another region. Scherer et al. [24] introduced multiple heuristics with various planning perspectives for convex-grid graphs and combined them with the tree traversal approach for better communication in the MRS for persistent surveillance with connectivity constraints.

2.2. Role of Robotics during COVID-19

The outbreak of the COVID-19 pandemic has negatively impacted our society. This situation is inevitable and requires modern solutions. COVID-19 has interrupted our usual face-to-face interactions and frustrated us because of the possibility of spreading the virus through physical interaction. The fourth industrial revolution, known as Industry 4.0, should fulfil the requirements to effectively control and manage the COVID-19 pandemic [25]. The presence of intelligent robots surged in various fields, e.g., autonomous driving, medical, rehabilitation, education, companionship, surveillance, information guide, telepresence, etc., to minimize the potential spread of this virus [26]. Robot-assisted surgeries are also being taken into positive consideration in surgical environments [8,27]. Furthermore, Mahdi Tavakoli et al. [28] presented an analysis of the robotics and autonomous systems for healthcare during COVID-19. Based on their analysis, they recommended immediate investment in robotics technology as a good step toward making healthcare services safe for both patients and healthcare workers. Moreover, the ongoing pandemic is affecting the social well-being of people and triggering feelings of loneliness in them. Social and companion robots have been considered as a potential solution to mitigate these feelings of loneliness through continuous social interaction with less fear of spreading the infectious disease [29,30,31,32,33]. Rovenso recently developed a UV disinfectant robot targeting offices and commercial spaces [34]. Moreover, there is another autonomous robot named AIMBOT developed by UBTECH Robotics that performs disinfection tasks at Shenzhen Third Hospital [35].

2.3. Effectiveness of Physical Distancing

Multiple works have simulated the spread of the virus [36,37,38] to show the effectiveness of different social distancing measures. The ratio of the total number of infections during the entire course of the outbreak to the size of the population is termed the attack rate [39]. According to Mao [36], the attack rate can be decreased by up to 82% if three consecutive days are removed from the working days in a workplace setting. Within the same setting, the attack rate can be reduced by up to 39.22% [37] or by 11–20% by maintaining a physical distance of 6 feet between individuals at the workplace, depending on the frequency of contact between them [38].

2.4. Emerging Technologies to Monitor Physical Distancing

Recently, different methods have been proposed to monitor the physical distancing between people. Workers in the warehouses of Amazon are monitored through CCTV cameras to detect physical distancing breaches [40]. Other techniques are based on the use of wearable devices [41,42]. These devices use the technologies of Bluetooth or ultra-wide band (UWB). Moreover, different companies such as Google and Apple are developing applications to trace the contacts of people so that alert messages can be delivered to the users if they come in close contact with an infected person [43]. In an extensive survey by Nguyen et al. [39], the technologies that can be used to track people to detect if they are following social distancing rules properly are discussed. The pros and cons of these technologies, such as WiFi, RFID, Bluetooth, artificial intelligence and computer vision, are also discussed in this comprehensive survey.

3. Proposed System

In this section, we first describe the hardware architecture used to build the proposed system. Then, we present our method with a detailed description about each functional module.

3.1. Hardware Architecture

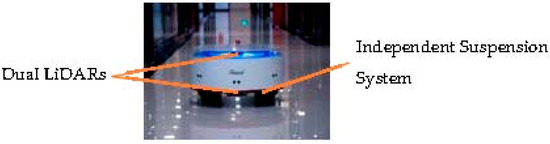

We developed the robot on top of the smart base named ‘EAIBOT SMART’ [44], which is shown in Figure 2. The smart base has two LiDAR sensors of type YDLIDAR G4 to map the surrounding area. One of the LiDAR sensors was mounted on top of the smart base while the second was mounted beneath it. Mounting the two LiDAR sensors at different heights made the collision avoidance and mapping system more robust. Collision avoidance was aided by a gyroscope and five ultrasonic sensors mounted in different directions to provide 360° coverage of the surroundings. We used the built-in collision avoidance, mapping and navigation of this robot base. Moreover, the smart base had a 10-h battery life, which was sufficient for long periods of operation. It also had a docking station and was able to navigate to it autonomously to recharge itself.

Figure 2.

EAIBOT SMART.

We equipped our robot with a laptop mounted on top of it to use its RGB camera, speaker and microphone for HRI. This laptop can be replaced with a tablet or any other setup that has the sensors mentioned above for HRI. Furthermore, we set up CCTV RGB cameras with a resolution of 1080p, mounted at heights that provide angled views of different locations of the building so as to monitor different areas. We used a machine with an Intel i7 10th generation CPU and an Nvidia RTX 2070 Super GPU to process the video streams received from the CCTV cameras.

3.2. Our Method

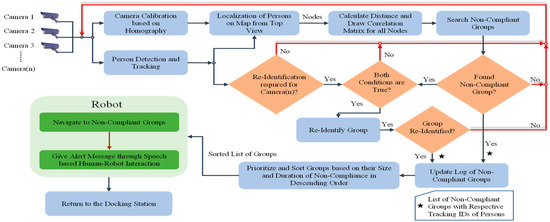

We used the Robot Operating System (ROS) [45], with the distribution named ‘Kinetic’, to build our system. ROS is well suited to structuring and managing robot applications. Moreover, it supports a modular and expandable system design. The overall system architecture is shown in Figure 3.

Figure 3.

Overall system architecture.

In this section, we describe the main components of our method to detect the violation of physical distancing constraints and enforce them using HRI. The main components of our method are as follows: (1) Detection, localization and tracking of the persons; (2) Search for non-compliant groups based on the violation of physical distancing constraints; (3) Re-identification of the moving non-compliant groups through a multi-camera system; (4) Prioritization of the non-compliant groups; (5) Delivery of alert message to non-compliant groups through speech-based HRI.

3.2.1. Monitoring Physical Distancing Constraints

The criterion used for detecting the violation of physical distancing constraints by non-compliant groups in our method was to detect the physical distance of less than 1 m. All CCTV cameras continuously monitored the environment within their respective fields of view (FoV) to detect non-compliant groups. The main components of this functional module are as follows.

Person Detection and Tracking

Object detection [46,47], localization and tracking have been active areas of research. For person detection and tracking, we used a pipeline based on the tracking algorithm proposed in [48] and the object detection method named ‘You Only Look Once (YOLO)’ version four, i.e., YOLOv4 [49]. According to the results presented in [49], it outperformed state-of-the-art object detection methods such as ‘EfficientDet’ [46] and its own previous version named ‘YOLOv3’ [47] in terms of the average precision and frames per second (FPS) speed. The experimental results showed that the pipeline achieved very good performance in terms of accuracy and speed. The input to this pipeline was the RGB images received from the CCTV camera, and the output was the set of bounding boxes, i.e., top left corner coordinates, width and height, for the persons detected in the given image. It also generated a unique identity for each detected person that remained the same while the person remained in the current FoV of the camera. To run this pipeline on the video streams from each CCTV camera within the multi-camera system, we used multi-threading and asynchronous calls. Each video stream was handled in an independent thread.
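The one-thread-per-stream arrangement described above can be illustrated with a minimal sketch. It assumes that each stream is an iterable of frames and that `process_frame` is a hypothetical stand-in for the detection and tracking pipeline; neither name comes from the original system, and the actual implementation may differ.

```python
import queue
import threading
from typing import Any, Callable, Dict, Iterable, List, Tuple


def run_streams(
    streams: Dict[str, Iterable[Any]],
    process_frame: Callable[[Any], Any],
) -> Dict[str, List[Tuple[int, Any]]]:
    """Process every camera stream in its own thread and collect the outputs.

    `streams` maps a camera identifier to an iterable of frames.
    `process_frame` is a hypothetical placeholder for detection and tracking.
    """
    results: "queue.Queue[Tuple[str, int, Any]]" = queue.Queue()

    def worker(camera_id: str, frames: Iterable[Any]) -> None:
        # Each stream is consumed independently of the others.
        for index, frame in enumerate(frames):
            results.put((camera_id, index, process_frame(frame)))

    threads = [
        threading.Thread(target=worker, args=(camera_id, frames), daemon=True)
        for camera_id, frames in streams.items()
    ]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()

    # Group the outputs per camera, ordered by frame index.
    collected: Dict[str, List[Tuple[int, Any]]] = {cid: [] for cid in streams}
    while not results.empty():
        camera_id, index, output = results.get()
        collected[camera_id].append((index, output))
    for outputs in collected.values():
        outputs.sort(key=lambda item: item[0])
    return collected
```

In a live deployment the worker would read frames continuously rather than from a finite iterable, and the results would be consumed as they arrive instead of after all threads finish.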

Localization of Detected Persons

All CCTV cameras were mounted in such a way that they provided angled views of the ground plane. In order to accurately calculate the distance between the persons, we preferred the top view of the ground plane, which represents the exact location of persons’ feet on ground. For this purpose, we converted the angled view to the top view by applying the homography matrix to the four reference points on the angled view from CCTV. These reference points were manually selected during camera calibration so that they could cover the maximum area of the FoV of the CCTV camera, as shown in Figure 4.

Figure 4.

CCTV camera calibration: Yellow markers represent (a) pixel coordinates in angled view; (b) Cartesian coordinates in top view.

The conversions of these points were performed by using Equation (1).

$$
\begin{bmatrix} x_{topView} \\ y_{topView} \\ 1 \end{bmatrix}
= H \begin{bmatrix} x_{angledView} \\ y_{angledView} \\ 1 \end{bmatrix}
\tag{1}
$$

In Equation (1), $x_{angledView}$ and $y_{angledView}$ indicate the pixel coordinates of one of the four reference points in the angled image view from the CCTV; $x_{topView}$ and $y_{topView}$ represent the same point after conversion to top view; and ‘H’ represents the scaled 3 × 3 homography transformation matrix. To transform the angled view to the top view of any detected person, as shown in Equation (2), we used the middle point of the bottom corners’ points of the bounding box yielded by the detection and tracking pipeline (Section ‘Person Detection and Tracking’) against that detected person. This middle point represented the feet of the detected person.

$$
\begin{bmatrix} x^{P(k)}_{topView} \\ y^{P(k)}_{topView} \\ 1 \end{bmatrix}
= H \begin{bmatrix} x^{P(k)}_{feet} \\ y^{P(k)}_{feet} \\ 1 \end{bmatrix}
\tag{2}
$$

where $(x^{P(k)}_{feet}, y^{P(k)}_{feet})$ represents the pixel coordinates of the feet of the detected person P(k) with a unique ID ‘k’ in the angled image view from the CCTV, and $(x^{P(k)}_{topView}, y^{P(k)}_{topView})$ represents the same point after conversion into top view.
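The projection of a person's feet point into the top view can be sketched as follows. This is an illustrative example only: the function names and the matrix `H_EXAMPLE` are hypothetical, the bounding box is assumed to be given as (left, top, width, height), and the result is divided by the third homogeneous coordinate, which is the standard way to apply a homography.

```python
from typing import Sequence, Tuple

Matrix3 = Sequence[Sequence[float]]
Box = Tuple[float, float, float, float]  # (left, top, width, height) in pixels


def feet_point(box: Box) -> Tuple[float, float]:
    """Return the midpoint of the bottom edge of a bounding box."""
    left, top, width, height = box
    return (left + width / 2.0, top + height)


def to_top_view(H: Matrix3, point: Tuple[float, float]) -> Tuple[float, float]:
    """Apply a 3 x 3 homography to a pixel coordinate."""
    x, y = point
    u = H[0][0] * x + H[0][1] * y + H[0][2]
    v = H[1][0] * x + H[1][1] * y + H[1][2]
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    if abs(w) < 1e-12:
        raise ValueError("point is mapped to infinity by this homography")
    # Normalize the homogeneous coordinates to obtain top-view coordinates.
    return (u / w, v / w)


# Hypothetical matrix for illustration: 1 px = 0.01 m in x and 0.02 m in y.
H_EXAMPLE = [
    [0.01, 0.0, 0.0],
    [0.0, 0.02, 0.0],
    [0.0, 0.0, 1.0],
]

feet = feet_point((100.0, 50.0, 40.0, 150.0))
top_view_position = to_top_view(H_EXAMPLE, feet)
```

In practice, the matrix would be estimated once per camera from the four manually selected reference points during calibration.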

Distance Estimation and Search for Non-Compliant Groups

After transforming the pixel coordinates of the detected persons into top view, we estimated the distance between each person. Here, we treated each transformed position as a node. The distance between two nodes was calculated using the formula of Euclidean distance. The pair-wise Euclidean distances between multiple persons are shown in Table 2.

Table 2.

Euclidean distances between persons.

Then, a truth table was created based on this correlation matrix of Euclidean distances. Any connection or Euclidean distance less than one meter between the two nodes was denoted as True (Table 3).

Table 3.

Truth table based on the correlation matrix.
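The construction of the distance table and the truth table can be sketched as follows, assuming that top-view positions are expressed in meters and keyed by person identity. The function names and the dictionary layout are illustrative choices and are not taken from the original implementation.

```python
import math
from typing import Dict, Tuple

Position = Tuple[float, float]
Pair = Tuple[int, int]


def distance_table(positions: Dict[int, Position]) -> Dict[Pair, float]:
    """Compute the Euclidean distance for every unordered pair of persons."""
    ids = sorted(positions)
    table: Dict[Pair, float] = {}
    for i, a in enumerate(ids):
        for b in ids[i + 1:]:
            dx = positions[a][0] - positions[b][0]
            dy = positions[a][1] - positions[b][1]
            table[(a, b)] = math.hypot(dx, dy)
    return table


def truth_table(distances: Dict[Pair, float], threshold_m: float = 1.0) -> Dict[Pair, bool]:
    """Mark a pair as True when its distance is below the threshold."""
    return {pair: d < threshold_m for pair, d in distances.items()}


# Example positions in meters (hypothetical values).
example_positions = {1: (0.0, 0.0), 2: (0.6, 0.0), 3: (3.0, 4.0)}
example_truth = truth_table(distance_table(example_positions))
```

Only the pair (1, 2) is closer than one meter in this example, so it is the only pair marked True.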

A modified depth-first search algorithm [50,51] was used to find all paths between the nodes in the environment, with no repeated nodes. In an environment with only one path, the algorithm can find this path in ‘O(V + E)’ time, where ‘V’ and ‘E’ represent the number of vertices and edges in the graph, respectively. However, the number of paths in a graph can be very large, for instance, ‘O(n!)’ in a graph of order ‘n’. To deal with this problem, the search on each path was terminated when it reached the threshold of group size ‘xc’, where ‘c’ represents the number of nodes, i.e., persons, in the group. In our case, we considered ‘xc = 10’ as the threshold value to stop searching. For each returned path, the average position of all the nodes in a group was considered as the position of that group in the map. This position of a non-compliant group was used to navigate the robot. The representation of the list of non-compliant groups of detected persons is shown in Equation (3).

where ‘l’ is the unique identity assigned to the group ‘Gl’, ‘k’ is the unique identity of the person ‘Pk’, ‘c’ is the total number of nodes and ‘tl’ is the duration of non-compliance by the lth non-compliant group ‘Gl’. ‘tl’ was computed by the tracking of non-compliant groups based on unique identities given to the tracked persons.
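The grouping step can be illustrated with a simplified sketch. Instead of enumerating all simple paths as in the modified algorithm described above, this example uses an iterative depth-first traversal that collects connected persons and stops at a size cap; this simplification, the function name and the data layout are assumptions made for illustration.

```python
from typing import Dict, Iterable, List, Set, Tuple

Position = Tuple[float, float]


def find_groups(
    positions: Dict[int, Position],
    close_pairs: Iterable[Tuple[int, int]],
    max_group_size: int = 10,
) -> List[Tuple[List[int], Position]]:
    """Group persons linked by True entries of the truth table.

    Returns a list of (member identities, average position) tuples.
    The traversal of a group stops once `max_group_size` members are found.
    """
    adjacency: Dict[int, Set[int]] = {pid: set() for pid in positions}
    for a, b in close_pairs:
        adjacency[a].add(b)
        adjacency[b].add(a)

    visited: Set[int] = set()
    groups: List[Tuple[List[int], Position]] = []
    for start in sorted(positions):
        if start in visited or not adjacency[start]:
            continue
        members: List[int] = []
        stack = [start]
        while stack and len(members) < max_group_size:
            node = stack.pop()
            if node in visited:
                continue
            visited.add(node)
            members.append(node)
            stack.extend(n for n in adjacency[node] if n not in visited)
        if len(members) < 2:
            continue  # a single leftover person does not form a group
        cx = sum(positions[m][0] for m in members) / len(members)
        cy = sum(positions[m][1] for m in members) / len(members)
        groups.append((sorted(members), (cx, cy)))
    return groups
```

For example, with persons 1, 2 and 3 standing 0.5 m apart in a line and person 4 far away, the sketch returns a single group containing persons 1 to 3, positioned at their average location.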

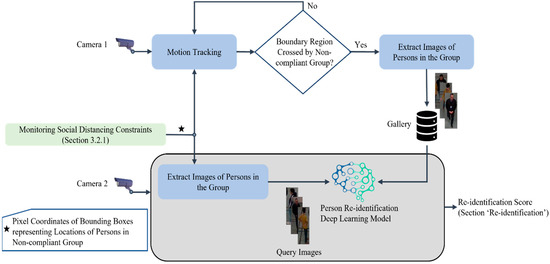

3.2.2. Re-Identification of Non-Compliant Groups

This module of the system ensured the persistent monitoring and tracking of the non-compliant groups that were moving within the environment through a multi-camera setup. It was performed using the motion tracking and the re-identification modules based on the information communication between multiple CCTV cameras through the server. Re-identification was performed in parallel to the detection, tracking and localization of the non-compliant groups, as shown in Figure 3. The duration of non-compliance (tl) by a moving non-compliant group kept incrementing until that group was re-identified while again violating the physical distancing constraints. The flow diagram in Figure 5 shows the overall process of group re-identification.

Figure 5.

Re-identification of non-compliant groups.

Motion Tracking

In the process of the motion tracking of each non-compliant group, we first selected bounding boxes based on the identities of the detected persons belonging to a non-compliant group, yielded by the section ‘Distance Estimation and Search for Non-Compliant Groups’. After selecting the bounding boxes, we found the center position of the non-compliant group in an image received from the CCTV camera by taking the average of the pixel coordinates representing the top leftmost and bottom rightmost corners among all corners of the bounding boxes selected in the previous step. We repeated the previous two steps with the skip of five frames in the video stream to find the position of that group in the next frame. After finding the positions of the group in two different frames, we calculated the Euclidean distance (Equation (3)) between pixel coordinates at both positions of the group. It was considered as a change in position if the calculated distance reached a set threshold value. We divided the FoV of the CCTV camera into boundary regions, which is shown in Figure 6.

Figure 6.

Division of the FoV of a CCTV camera into regions.
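The motion check described above can be sketched as follows. The sketch interprets the group center as the center of the rectangle enclosing all member bounding boxes, and the threshold value is a hypothetical placeholder since the value used in the experiments is not stated; boxes are assumed to be (left, top, width, height) in pixels.

```python
import math
from typing import Sequence, Tuple

Box = Tuple[float, float, float, float]  # (left, top, width, height) in pixels


def group_center(boxes: Sequence[Box]) -> Tuple[float, float]:
    """Average the top-leftmost and bottom-rightmost corners of a group."""
    left = min(b[0] for b in boxes)
    top = min(b[1] for b in boxes)
    right = max(b[0] + b[2] for b in boxes)
    bottom = max(b[1] + b[3] for b in boxes)
    return ((left + right) / 2.0, (top + bottom) / 2.0)


def has_moved(
    previous_boxes: Sequence[Box],
    current_boxes: Sequence[Box],
    threshold_px: float = 20.0,  # hypothetical value, not from the paper
) -> bool:
    """Compare group centers from two frames sampled five frames apart."""
    x0, y0 = group_center(previous_boxes)
    x1, y1 = group_center(current_boxes)
    return math.hypot(x1 - x0, y1 - y0) >= threshold_px
```

A group whose center shifts by less than the threshold between the two sampled frames is treated as stationary, which is the condition later used to select groups for prioritization.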

If the new position of the moving non-compliant group was located within the boundary regions, images of the persons of that group were extracted based on the bounding boxes yielded by the person detection pipeline, which is discussed in the section ‘Person Detection and Tracking’. Later, these images were stored in the database to re-identify that non-compliant group in the case of being detected through other CCTV cameras while again violating the physical distancing constraints. Information kept in the name of each stored image of a person was person identity and group identity as ‘Plk’.

Re-Identification

We developed a pipeline for group re-identification based on the existing algorithm for person re-identification. In order to perform the re-identification of non-compliant groups, we used a lightweight and state-of-the-art person re-identification deep learning model termed as Omni-Scale Network (OSNet) [52]. We used a pre-trained model, which was trained on six widely used person re-identification image datasets: Market1501 [53], CUHK03 [54], MSMT17 [55], DukeMTMC-reID (Duke) [56,57], GRID [58] and VIPeR [59]. In this person re-identification technique, the database of existing images of the persons, from which the model has to re-identify the target person, is called ‘Gallery’, and the image of the target person is called the ‘Query’ image. For implementation, we used a library for deep learning person re-identification named Torchreid [60], which is based on a well-known machine learning library named PyTorch.

Group re-identification was performed whenever a non-compliant group was detected through any CCTV camera, to determine whether it had previously been detected by another camera while violating the physical distancing constraints. We cropped and extracted images of the persons in non-compliant groups detected by the person detection and tracking pipeline (Section ‘Person Detection and Tracking’) through the current CCTV camera to use them as query images in the re-identification module. The Gallery was based on the images of the persons present in moving non-compliant groups, which were tracked and extracted by the motion tracking module (Section ‘Motion Tracking’). We used the term re-identification score ‘Sl’ to determine if the target non-compliant group was re-identified, where ‘l’ represents the unique identity of that group. It was based on how many persons were re-identified from the group based on the query images. ‘Sl = 1’ meant that one person from the query images was re-identified. The target non-compliant group was considered as re-identified in case of ‘Sl ≥ 2’, which means that at least two persons were re-identified from the query images. The steps included in the pipeline developed for performing group re-identification are shown in Algorithm 1.

| Algorithm 1 Re-identification of non-compliant group |

| Input: = Extracted images of persons in target non-compliant group as |

| Output: Re-identification Status ( or ), = List of identities of re-identified persons as |

| Steps: |

| 1: |

| 2: |

| 3: Compute Sl |

| 4: If Sl ≥ 2 then return , IDPi |

| 5: Else return , IDPi |

Where ‘l’ represents the identity of the group, ‘k’ represents the identity of the person and ‘D’ represents the Gallery database of the persons belonging to the moving non-compliant groups. Whenever a non-compliant group was re-identified, the corresponding identities of the persons from that group were updated in the database to keep track of them.
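The decision rule based on the re-identification score can be illustrated with a minimal sketch. It assumes that feature vectors have already been extracted for the query and Gallery images by a person re-identification model, and it matches them by cosine similarity; the similarity threshold, the function names and the Gallery layout keyed by (group identity, person identity) are assumptions, not details of the original pipeline.

```python
import math
from typing import Dict, List, Optional, Sequence, Set, Tuple

Feature = Sequence[float]
GalleryKey = Tuple[int, int]  # (group identity l, person identity k)


def cosine_similarity(a: Feature, b: Feature) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm > 0 else 0.0


def reidentify_group(
    queries: Sequence[Feature],
    gallery: Dict[GalleryKey, Feature],
    match_threshold: float = 0.7,  # hypothetical value
    min_matches: int = 2,          # the group is re-identified when S_l >= 2
) -> Tuple[bool, Optional[int], List[int]]:
    """Return (status, matched group identity, matched person identities)."""
    used: Set[GalleryKey] = set()
    per_group: Dict[int, List[int]] = {}
    for query in queries:
        best_key: Optional[GalleryKey] = None
        best_sim = match_threshold
        for key, feature in gallery.items():
            if key in used:
                continue  # each Gallery person is matched at most once
            sim = cosine_similarity(query, feature)
            if sim >= best_sim:
                best_key, best_sim = key, sim
        if best_key is not None:
            used.add(best_key)
            per_group.setdefault(best_key[0], []).append(best_key[1])
    if not per_group:
        return False, None, []
    best_group = max(per_group, key=lambda g: len(per_group[g]))
    matched = per_group[best_group]
    return len(matched) >= min_matches, best_group, matched
```

Requiring at least two matched persons makes the decision less sensitive to a single false match, at the cost of missing a group when only one member is visible.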

3.2.3. Prioritization of Non-Compliant Groups

Prioritization determines which non-compliant group should be addressed first by the robot. Only those non-compliant groups that were locked at a location and not moving, as decided by the section ‘Motion Tracking’, were considered in this step. Prioritization was performed hierarchically based on two factors, namely, the size of the non-compliant group (xc) and the duration of non-compliance (tl). ‘tl’ was considered first because the continuous violation of physical distancing constraints over a longer period can be more dangerous. ‘tl’ was divided into ranges of constant width, e.g., ‘0 min ≤ tl < 5 min’, ‘5 min ≤ tl < 10 min’, etc. Groups in a higher range of ‘tl’ were given higher priority, and groups within the same range were ordered by ‘xc’, with a larger ‘xc’ receiving higher priority. On top of these two factors, a non-compliant group that was re-identified while again violating physical distancing constraints was given the highest priority due to its repeated violation.
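The hierarchical ordering can be expressed as a compound sort key, as in the following sketch. The class and field names are hypothetical, and the duration ranges are assumed to be half-open intervals of five minutes.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class NonCompliantGroup:
    group_id: int
    size: int                  # number of persons in the group
    duration_s: float          # duration of non-compliance in seconds
    reidentified: bool = False


def prioritize(
    groups: Sequence[NonCompliantGroup],
    range_width_s: float = 300.0,  # assumed five-minute ranges
) -> List[NonCompliantGroup]:
    """Order groups: re-identified first, then duration range, then size."""

    def key(group: NonCompliantGroup):
        duration_range = int(group.duration_s // range_width_s)
        return (group.reidentified, duration_range, group.size)

    return sorted(groups, key=key, reverse=True)


example = [
    NonCompliantGroup(group_id=1, size=2, duration_s=400.0),
    NonCompliantGroup(group_id=2, size=5, duration_s=100.0),
    NonCompliantGroup(group_id=3, size=3, duration_s=320.0),
    NonCompliantGroup(group_id=4, size=2, duration_s=10.0, reidentified=True),
]
ordered_ids = [g.group_id for g in prioritize(example)]
```

In this example, group 4 is served first because it was re-identified, groups 3 and 1 follow because they fall in the higher duration range (ordered by size), and group 2 comes last despite being the largest.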

3.2.4. Enforcement of Physical Distancing Constraints

After the locked non-compliant groups were prioritized, a prioritized list of their locations was transmitted to the social robot, which visited these locations one by one and delivered alert messages to the groups through speech-based HRI. The robot received an updated list of locations at a fixed interval (i.e., every 5 s), following each search for non-compliant groups. Once the robot arrived in the vicinity of a group, it played an audio message to alert the persons in the group about the violation and encourage them to maintain a physical distance of at least one meter. The robot also explained that it had approached them because they were not abiding by the rule of maintaining a safe physical distance.

4. Experimental Results

In this section, we explain the experimental setup and the metrics used to evaluate the proposed system and provide an analysis of the results obtained during the experiments. We tested our system in the main building of our university (Norwegian University of Science and Technology (NTNU)) with a total of 15 participants divided into four different groups. All groups were given demonstrations of the test scenarios, which are discussed in Section 4.1 and Section 4.2. Three cameras were mounted in different locations of the building to monitor three different areas. Overall, 28 experiments were conducted to test the proposed system. The number of violations detected and the successful enforcements performed under different configurations are shown in Table 4.

Table 4.

Overall performance of proposed system with respect to different configurations.

Across all experiments, the system failed to properly detect the violation on three occasions. In two of them, in a two-person group, one individual was fully occluded by the other person standing in front of them, in line with the camera’s angled view. The third failure was a failure of group re-identification caused by a large variation in lighting conditions; in that case, however, the violation was still detected and enforcement was performed correctly. The details of these experiments, the metrics used to evaluate the proposed system and the results are discussed below.

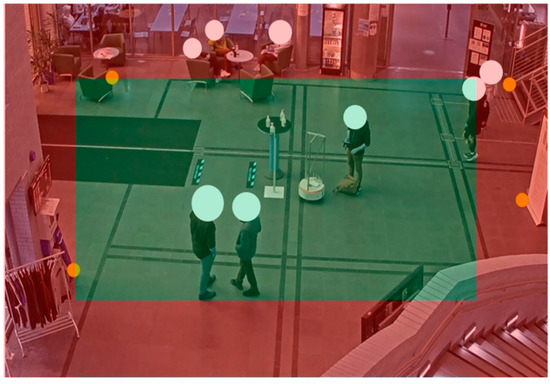

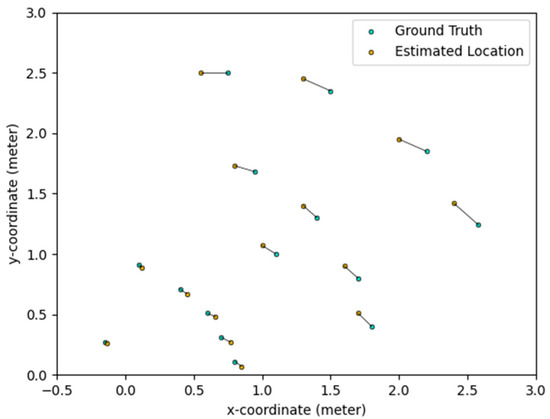

4.1. Accuracy of Non-Compliant Group Localization

This evaluation metric measures the accuracy of localization by comparing the ground truth location of the non-compliant group with the location estimated using our method. Prior to the experiments, ground truth locations were manually marked on the 2D map of the ground plane based on the Cartesian coordinate system used by the robot for navigation and localization. Participants were positioned at those ground truth locations in order to test the accuracy of our system with respect to the localization of non-compliant groups. Figure 7 plots the ground truth locations as green circles and the estimated locations of non-compliant groups as orange circles.

Figure 7.

Localization of non-compliant groups.

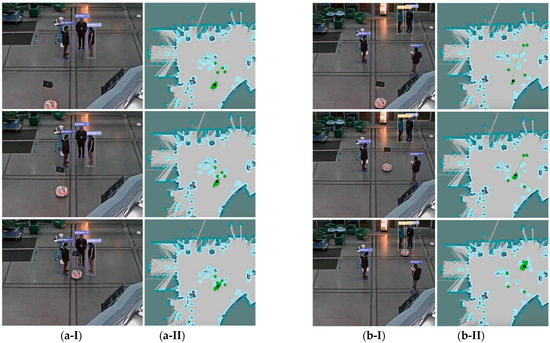

The above plot shows the non-compliant groups being localized with respect to the Cartesian coordinate system fixed to the robot. The maximum error observed between the ground truth and the estimated location was 0.24 m, and the average error was approximately 0.12 m. The detection and localization of the non-compliant groups during two different experiments performed with the proposed system, and the navigation of the robot to these groups, are shown in Figure 8.

Figure 8.

Localization of non-compliant groups and navigation of the robot to give the alert message. (a-I,a-II) represent the experiment based on three persons, and (b-I,b-II) represent the experiment based on two persons in a non-compliant group. (a-II,b-II) show the navigation and localization of the robot and the persons belonging to the non-compliant group on the map. Green and black markers on the map represent the persons and the robot, respectively.
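The maximum and average localization errors reported for Figure 7 can be computed as Euclidean distances on the 2D map. The following sketch assumes that each location is an (x, y) pair in meters in the map frame and that ground truth and estimated locations are supplied in matching order; the function name is hypothetical, and the text does not state the exact distance measure used, so Euclidean distance is an assumption.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]  # (x, y) in meters on the 2D map


def localization_errors(ground_truth: Sequence[Point], estimated: Sequence[Point]) -> Tuple[float, float]:
    """Return (maximum error, mean error) over paired locations."""
    if not ground_truth or len(ground_truth) != len(estimated):
        raise ValueError("need two non-empty sequences of equal length")
    # Euclidean distance between each ground truth point and its estimate.
    errors = [math.dist(gt, est) for gt, est in zip(ground_truth, estimated)]
    return max(errors), sum(errors) / len(errors)
```

Reporting both values is useful because the mean alone can hide a single badly localized group, which matters when the robot must stop within speaking distance of the persons.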

4.2. Accuracy of Re-Identification of Non-Compliant Groups

We designed multiple scenarios to test the accuracy of the proposed system in the re-identification of non-compliant groups and the enforcement of physical distancing constraints. As shown in Table 4, eight experiments were based on group re-identification. Groups of participants were asked to violate physical distancing constraints within the FoV of one of the three mounted CCTV cameras and then walk to the other side and perform the same action within the FoV of another CCTV camera. The purpose was to re-identify the non-compliant groups when they repeated the violation of physical distancing constraints. We stored the video streams captured by all the mounted CCTV cameras during the experiments to create our own dataset and to test the overall accuracy of the re-identification deep learning model under the environmental conditions of our test scenarios. We used YOLOv4 [49] to crop the images of the persons from all video frames and then annotated each image with a person identity and a camera identity. The resulting dataset consisted of 7687 images of 15 different persons captured through three different CCTV cameras. After annotation, we divided the dataset into two categories: Gallery and Query images. Ten percent of the total images were categorized as Query images and the rest were used as Gallery images. The results of the re-identification module based on the deep learning model on our dataset are shown in Table 5.

Table 5.

Accuracy of re-identification module on our dataset.

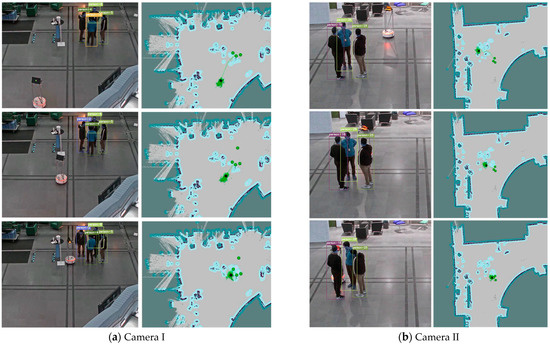

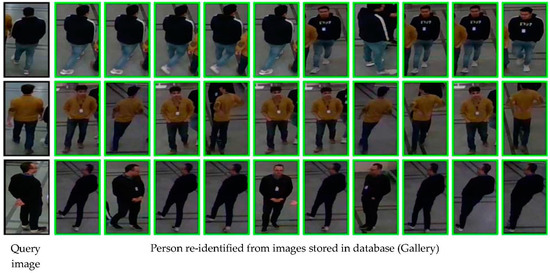
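The division of the annotated dataset into Query and Gallery images can be sketched as follows. The text does not say how the ten percent of Query images were selected, so the seeded random shuffle here is an assumption, and the function name and default values are hypothetical.

```python
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def split_query_gallery(
    samples: Sequence[T], query_fraction: float = 0.10, seed: int = 0
) -> Tuple[List[T], List[T]]:
    """Split annotated samples into (query, gallery) lists.

    Assumption: the Query images are drawn uniformly at random.
    """
    if not 0.0 < query_fraction < 1.0:
        raise ValueError("query_fraction must be between 0 and 1")
    shuffled = list(samples)
    random.Random(seed).shuffle(shuffled)   # seeded for reproducibility
    n_query = round(len(shuffled) * query_fraction)
    return shuffled[:n_query], shuffled[n_query:]
```

With this rounding, a dataset of 7687 images gives 769 Query images and 6918 Gallery images; the exact counts used in the experiments are not stated in the text.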

Figure 9 shows the enforcement of physical distancing constraints based on the re-identification of a non-compliant group, and Figure 10 presents some of the results that were generated while testing group re-identification.

Figure 9.

Re-identification of a non-compliant group and enforcement of physical distancing constraints through the robot. (a) A non-compliant group consisting of four persons detected by Camera I. (b) The same group re-identified by Camera II with re-identification score, while again violating the physical distancing constraints.

Figure 10.

Images of persons re-identified by re-identification module based on images stored in database (Gallery) and given query images.

5. Conclusions, Limitations and Future Work

A novel system for monitoring and enforcing physical distancing constraints in large areas is proposed in this paper, which consists of multiple CCTV cameras and an MRS. Monitoring was conducted using a multi-camera system to detect and track groups of persons who did not comply with physical distancing constraints. We proposed a pipeline for group re-identification to detect repetitive violations of physical distancing constraints by a non-compliant group of individuals. We used an autonomous, collision-free mobile robot for the enforcement of physical distancing constraints by attending to non-compliant groups through HRI and encouraging them to comply with the set constraints. The effectiveness and accuracy of our system were demonstrated in terms of the detection and localization of non-compliant groups, group re-identification in the case of repetitive non-compliance and the enforcement of physical distancing constraints through HRI. We conclude that the monitoring of physical distancing constraints with group re-identification is effective in the long-term tracking of non-compliant groups to detect repetitive violations and notify the security control room in a timely manner to stop them. We also considered the ethical concerns in our system through efficient and secure data gathering and data handling mechanisms.

A limitation of our system is that re-identification of non-compliant groups is not performed by the robot itself. Performing re-identification through the robot would increase the overall accuracy of the system with regard to the enforcement of physical distancing constraints. Due to COVID-19 restrictions, we could only test the system in controlled settings with a low crowd density. For the same reason, we could not evaluate the social impact of our system.

In the future, testing our system in environments with high crowd densities is required to make it more robust. Furthermore, usability tests with security officials need to be conducted to demonstrate the effectiveness of the proposed system. In future studies, we will develop a mechanism based on our MARS to predict the future location from the past motion trajectory of a non-compliant group that is wandering within the environment. This can help to attend to non-compliant groups that are wandering within the area in a timely manner.

Author Contributions

Conceptualization, S.H.H.S. and I.A.H.; methodology, S.H.H.S., O.-M.H.S. and E.G.G.; software, S.H.H.S., O.-M.H.S. and E.G.G.; validation, S.H.H.S., O.-M.H.S. and E.G.G.; formal analysis, S.H.H.S. and I.A.H.; investigation, S.H.H.S., I.A.H., O.-M.H.S. and E.G.G.; writing—original draft preparation, S.H.H.S., O.-M.H.S. and E.G.G.; writing—review and editing, S.H.H.S. and I.A.H.; supervision, S.H.H.S. and I.A.H. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Acknowledgments

The authors are grateful to the Norwegian University of Science and Technology (NTNU) for their support.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Le Pan, N. The History of Pandemics. Available online: https://www.visualcapitalist.com/history-of-pandemics-deadliest/ (accessed on 19 January 2021).

2. Xu, B.; Gutierrez, B.; Mekaru, S.; Sewalk, K.; Goodwin, L.; Loskill, A.; Cohn, E.L.; Hswen, Y.; Hill, S.C.; Cobo, M.M. Epidemiological data from the COVID-19 outbreak, real-time case information. Sci. Data 2020, 7, 1–6.

3. Ahmed, F.; Zviedrite, N.; Uzicanin, A. Effectiveness of workplace social distancing measures in reducing influenza transmission: A systematic review. BMC Public Health 2018, 18, 518.

4. Tuzovic, S.; Kabadayi, S. The influence of social distancing on employee well-being: A conceptual framework and research agenda. J. Serv. Manag. 2018, 32, 145–160.

5. Yang, G.-Z.; Nelson, B.J.; Murphy, R.R.; Choset, H.; Christensen, H.; Collins, S.H.; Dario, P.; Goldberg, K.; Ikuta, K.; Jacobstein, N. Combating COVID-19—The role of robotics in managing public health and infectious diseases. Sci. Robot. 2020, 5, eabb5589.

6. Milella, A.; Di Paola, D.; Mazzeo, P.L.; Spagnolo, P.; Leo, M.; Cicirelli, G.; D’Orazio, T. Active surveillance of dynamic environments using a multi-agent system. IFAC Proc. Vol. 2010, 43, 13–18.

7. Pennisi, A.; Previtali, F.; Gennari, C.; Bloisi, D.D.; Iocchi, L.; Ficarola, F.; Vitaletti, A.; Nardi, D. Multi-robot surveillance through a distributed sensor network. In Cooperative Robots and Sensor Networks 2015; Springer International Publishing: Cham, Switzerland, 2015; pp. 77–98.

8. Zemmar, A.; Lozano, A.M.; Nelson, B.J. The rise of robots in surgical environments during COVID-19. Nat. Mach. Intell. 2020, 2, 566–572.

9. Fan, T.; Chen, Z.; Zhao, X.; Liang, J.; Shen, C.; Manocha, D.; Pan, J.; Zhang, W. Autonomous Social Distancing in Urban Environments using a Quadruped Robot. arXiv 2020, arXiv:2008.08889.

10. Aymerich-Franch, L.; Ferrer, I. The implementation of social robots during the COVID-19 pandemic. arXiv 2020, arXiv:2007.03941.

11. Khan, Z.H.; Siddique, A.; Lee, C.W. Robotics Utilization for Healthcare Digitization in Global COVID-19 Management. Int. J. Environ. Res. Public Health 2020, 17, 3819.

12. Lanza, F.; Seidita, V.; Chella, A. Agents and robots for collaborating and supporting physicians in healthcare scenarios. J. Biomed. Inf. 2020, 108, 103483.

13. Sathyamoorthy, A.J.; Patel, U.; Savle, Y.A.; Paul, M.; Manocha, D. COVID-robot: Monitoring social distancing constraints in crowded scenarios. arXiv 2020, arXiv:2008.06585.

14. Kim, I.S.; Choi, H.S.; Yi, K.M.; Choi, J.Y.; Kong, S.G. Intelligent visual surveillance—A survey. Int. J. Control Autom. Syst. 2010, 8, 926–939.

15. Cao, M.; Morse, A.S.; Anderson, B.D. Reaching a consensus in a dynamically changing environment: A graphical approach. SIAM J. Control Optim. 2008, 47, 575–600.

16. Castanedo, F.; García, J.; Patricio, M.A.; Molina, J.M. Data fusion to improve trajectory tracking in a Cooperative Surveillance Multi-Agent Architecture. Inf. Fusion 2010, 11, 243–255.

17. Van der Walle, D.; Fidan, B.; Sutton, A.; Yu, C.; Anderson, B.D. Non-hierarchical UAV formation control for surveillance tasks. In Proceedings of the 2008 American Control Conference, Seattle, WA, USA, 11–13 June 2008; pp. 777–782.

18. Du, S.-L.; Sun, X.-M.; Cao, M.; Wang, W. Pursuing an evader through cooperative relaying in multi-agent surveillance networks. Automatica 2017, 83, 155–161.

19. Mostafa, S.A.; Mustapha, A.; Mohammed, M.A.; Ahmad, M.S.; Mahmoud, M.A. A fuzzy logic control in adjustable autonomy of a multi-agent system for an automated elderly movement monitoring application. Int. J. Med. Inform. 2018, 112, 173–184.

20. Kariotoglou, N.; Raimondo, D.M.; Summers, S.J.; Lygeros, J. Multi-agent autonomous surveillance: A framework based on stochastic reachability and hierarchical task allocation. J. Dyn. Syst. Meas. Control 2015, 137, 031008.

21. Aksaray, D.; Leahy, K.; Belta, C. Distributed multi-agent persistent surveillance under temporal logic constraints. IFAC-PapersOnLine 2015, 48, 174–179.

22. Wu, Y.; Zhang, B.; Yi, X.; Tang, Y. Communication-motion planning for wireless relay-assisted multi-robot system. IEEE Wirel. Commun. Lett. 2016, 5, 568–571.

23. Jahn, A.; Alitappeh, R.J.; Saldaña, D.; Pimenta, L.C.; Santos, A.G.; Campos, M.F. Distributed multi-robot coordination for dynamic perimeter surveillance in uncertain environments. In Proceedings of the 2017 IEEE International Conference on Robotics and Automation (ICRA), Marina Bay Sands Convention Centre, Singapore, 29 May–3 June 2017; pp. 273–278.

24. Scherer, J.; Rinner, B. Multi-robot persistent surveillance with connectivity constraints. IEEE Access 2020, 8, 15093–15109.

25. Javaid, M.; Haleem, A.; Vaishya, R.; Bahl, S.; Suman, R.; Vaish, A. Industry 4.0 technologies and their applications in fighting COVID-19 pandemic. Diabetes Metab. Syndr. Clin. Res. Rev. 2020, 14, 419–422.

26. Zeng, Z.; Chen, P.-J.; Lew, A.A. From high-touch to high-tech: COVID-19 drives robotics adoption. Tour. Geogr. 2020, 22, 724–734.

27. Kimmig, R.; Verheijen, R.H.; Rudnicki, M. Robot assisted surgery during the COVID-19 pandemic, especially for gynecological cancer: A statement of the Society of European Robotic Gynaecological Surgery (SERGS). J. Gynecol. Oncol. 2020, 31, e59.

28. Tavakoli, M.; Carriere, J.; Torabi, A. Robotics, smart wearable technologies, and autonomous intelligent systems for healthcare during the COVID-19 pandemic: An analysis of the state of the art and future vision. Adv. Intell. Syst. 2020, 2, 2000071.

29. Odekerken-Schröder, G.; Mele, C.; Russo-Spena, T.; Mahr, D.; Ruggiero, A. Mitigating loneliness with companion robots in the COVID-19 pandemic and beyond: An integrative framework and research agenda. J. Serv. Manag. 2020, 31, 1149–1162.

30. Marchetti, A.; Di Dio, C.; Massaro, D.; Manzi, F. The psychosocial fuzziness of fear in the coronavirus (COVID-19) era and the role of robots. Front. Psychol. 2020, 11, 2245.

31. Henkel, A.P.; Čaić, M.; Blaurock, M.; Okan, M. Robotic transformative service research: Deploying social robots for consumer well-being during COVID-19 and beyond. J. Serv. Manag. 2020, 31, 1131–1148.

32. Ghafurian, M.; Ellard, C.; Dautenhahn, K. Social Companion Robots to Reduce Isolation: A Perception Change Due to COVID-19. arXiv 2020, arXiv:2008.05382.

33. Joshi, S.; Collins, S.; Kamino, W.; Gomez, R.; Šabanović, S. Social Robots for Socio-Physical Distancing. In Proceedings of the International Conference on Social Robotics, Golden, CO, USA, 14–18 November 2020; pp. 440–452.

34. Ackerman, E. Swiss Startup Developing UV Disinfection Robot for Offices and Commercial Spaces. IEEE Spectrum 2020. Available online: https://ieeeusa.org/swiss-startup-developing-uv-disinfection-robot/ (accessed on 5 December 2020).

35. Reuter, J.Y.a.T. Aerial Spray and Disinfection. Available online: https://www.weforum.org/agenda/2020/03/three-ways-china-is-using-drones-to-fight-coronavirus/ (accessed on 5 December 2020).

36. Mao, L. Agent-based simulation for weekend-extension strategies to mitigate influenza outbreaks. BMC Public Health 2011, 11, 522.

37. Kumar, S.; Grefenstette, J.J.; Galloway, D.; Albert, S.M.; Burke, D.S. Policies to reduce influenza in the workplace: Impact assessments using an agent-based model. Am. J. Public Health 2013, 103, 1406–1411.

38. Timpka, T.; Eriksson, H.; Holm, E.; Strömgren, M.; Ekberg, J.; Spreco, A.; Dahlström, Ö. Relevance of workplace social mixing during influenza pandemics: An experimental modelling study of workplace cultures. Epidemiol. Infect. 2016, 144, 2031–2042.

39. Nguyen, C.T.; Saputra, Y.M.; Van Huynh, N.; Nguyen, N.-T.; Khoa, T.V.; Tuan, B.M.; Nguyen, D.N.; Hoang, D.T.; Vu, T.X.; Dutkiewicz, E. Enabling and Emerging Technologies for Social Distancing: A Comprehensive Survey. arXiv 2020, arXiv:2005.02816.

40. Palmer, A. Amazon Is Rolling out Cameras That Can Detect If Warehouse Workers are Following Social Distancing Rules. Available online: https://www.cnbc.com/2020/06/16/amazon-using-cameras-to-enforce-social-distancing-rules-at-warehouses.html (accessed on 14 December 2020).

41. Spacer, S. Keep People Safe and Workplaces Open. Available online: https://www.safespacer.net/ (accessed on 14 December 2020).

42. Waltz, E. Back to Work: Wearables Track Social Distancing and Sick Employees in the Workplace. IEEE Spectrum 2020. Available online: https://spectrum.ieee.org/wearables-track-social-distancing-sick-employees-workplace (accessed on 3 July 2020).

43. Exposure Notifications: Using Technology to Help Public Health Authorities Fight COVID-19. Available online: https://www.google.com/covid19/exposurenotifications/ (accessed on 14 December 2020).

44. Technology, E. EAIBOT SMART. Available online: http://www.eaibot.com/product/Smart (accessed on 10 June 2020).

45. Robotics, O. Robot Operating System (ROS). Available online: https://www.ros.org/ (accessed on 9 April 2020).

46. Tan, M.; Pang, R.; Le, Q.V. Efficientdet: Scalable and efficient object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Virtual, 13 June 2020; pp. 10781–10790.

47. Redmon, J.; Farhadi, A. Yolov3: An incremental improvement. arXiv 2018, arXiv:1804.02767.

48. Wojke, N.; Bewley, A.; Paulus, D. Simple online and realtime tracking with a deep association metric. In Proceedings of the 2017 IEEE International Conference on Image Processing (ICIP), Beijing, China, 17–20 September 2017; pp. 3645–3649.

49. Bochkovskiy, A.; Wang, C.-Y.; Liao, H.-Y.M. YOLOv4: Optimal Speed and Accuracy of Object Detection. arXiv 2020, arXiv:2004.10934.

50. Developers, N. All Simple Paths—NetworkX 1.9.1 Documentation. Available online: https://networkx.org/documentation/networkx-1.9.1/reference/generated/networkx.algorithms.simple_paths.all_simple_paths.html#r277 (accessed on 14 December 2020).

51. Sedgewick, R. Algorithms in C, Part 5: Graph Algorithms; Pearson Education: London, UK, 2001.

52. Zhou, K.; Yang, Y.; Cavallaro, A.; Xiang, T. Omni-scale feature learning for person re-identification. In Proceedings of the IEEE International Conference on Computer Vision, Seoul, Korea, 27 October–2 November 2019; pp. 3702–3712.

53. Zheng, L.; Shen, L.; Tian, L.; Wang, S.; Wang, J.; Tian, Q. Scalable person re-identification: A benchmark. In Proceedings of the IEEE International Conference on Computer Vision, Washington, DC, USA, 7–13 December; pp. 1116–1124.

54. Li, W.; Zhao, R.; Xiao, T.; Wang, X. Deepreid: Deep filter pairing neural network for person re-identification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 152–159.

55. Wei, L.; Zhang, S.; Gao, W.; Tian, Q. Person transfer gan to bridge domain gap for person re-identification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 79–88.

56. Ristani, E.; Solera, F.; Zou, R.; Cucchiara, R.; Tomasi, C. Performance measures and a data set for multi-target, multi-camera tracking. In European Conference on Computer Vision; Springer: Amsterdam, The Netherlands, 2016; pp. 17–35.

57. Zheng, Z.; Zheng, L.; Yang, Y. Unlabeled samples generated by gan improve the person re-identification baseline in vitro. In Proceedings of the IEEE International Conference on Computer Vision, Cambridge, MA, USA, 20–23 June 1995; pp. 3754–3762.

58. Loy, C.C.; Xiang, T.; Gong, S. Multi-camera activity correlation analysis. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 1988–1995.

59. Gray, D.; Brennan, S.; Tao, H. Evaluating appearance models for recognition, reacquisition, and tracking. In Proceedings of the IEEE International Workshop on Performance Evaluation for Tracking and Surveillance (PETS), Rio de Janeiro, Brazil, 14 October 2007; pp. 1–7.

60. Zhou, K.; Xiang, T. Torchreid: A library for deep learning person re-identification in pytorch. arXiv 2019, arXiv:1910.10093.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).