Abstract

Many NHPP software reliability growth models (SRGMs) have been proposed to assess software reliability during the past 40 years, but most of them focus on the fault detection process (FDP) and treat the fault correction process (FCP) in one of two ways. The first is to ignore the FCP, i.e., faults are assumed to be removed instantaneously once the failure they cause is detected. In real software development this assumption is not always realistic, because fault removal usually takes time: the faults causing failures cannot always be removed at once, and detected faults become more and more difficult to correct as testing progresses. The second is to model the FCP through the time delay between fault detection and fault correction, where the delay is assumed to be a constant, a function of time, or a random variable following some distribution. In this paper, some useful approaches to the modeling of dual fault detection and correction processes are discussed. The dependencies between the fault amounts of the two processes are considered instead of the fault correction time delay. A model is proposed that integrates the fault-detection and fault-correction processes and incorporates a fault introduction rate and a testing coverage rate into the software reliability evaluation. The model parameters are estimated using the Least Squares Estimation (LSE) method. The descriptive and predictive performance of the proposed model and of other existing NHPP SRGMs is investigated on three real data sets using four criteria. The results show that the new model yields better reliability estimation and prediction.

1. Introduction

Software reliability is widely viewed as a key factor in the overall reliability of safety-critical systems. Many time-dependent SRGMs have been studied to determine reliability measures for software over the past four decades [1,2,3,4]. Researchers have developed different models based on different assumptions. Some models assume that once a failure is detected, the errors that cause the failure are immediately corrected and no new errors are introduced (i.e., perfect debugging) [5]. Other models take imperfect debugging into account [6,7], i.e., faults are not always perfectly removed, and new ones can be introduced as a by-product of the fault repair process. However, most of the existing models assume that faults are repaired instantaneously after being detected. This is not realistic; in fact, detected faults become more and more difficult to correct as testing progresses. Therefore, it is of great importance to build software reliability models from the viewpoint of the fault correction process, i.e., to give the same priority to modeling the fault correction process as to the fault detection process.

Schneidewind first modeled the software correction process along with the software detection process by proposing a fault-correction model using a constant time delay in the fault-detection process [8]. Then Xie and Zhao extended Schneidewind’s idea from a constant time delay to a time-dependent delay function [9]. Later, Schneidewind provided an extension of his original model by using a random variable for the time delay following an exponential distribution [10]. Xie et al. further proposed another distributed correction time model to provide a more flexible modeling of correction processes [11,12], and Peng et al. incorporated a testing effort function and imperfect debugging into the time delay function [13]. Lo and Huang proposed a general framework for modeling software fault detection and correction processes and showed that many existing SRGMs based on NHPP could be covered by the proposed approaches [14]. Shu proposed a model from the viewpoint of the fault amount relationship between the two processes [15]. Additionally, some other attempts have been made to model these two processes from different viewpoints, such as Markov chains [16,17,18], finite and infinite server queuing models [19], and a quasi-renewal time-delay fault removal model [20].

Research also suggests that the estimation accuracy of SRGMs could be further improved by considering the influence of real issues that arise during the testing process [21,22], such as testing coverage. Testing coverage is a promising indicator of testing completeness and effectiveness, which can help developers evaluate how much test effort has been spent and help customers estimate the confidence of accepting the software product. Many time-dependent test coverage functions (TCFs) have been proposed by using different distributions, such as logarithmic-exponential [23], S-shaped [24], Rayleigh [25], Weibull and logistic [26] and lognormal [27]. Many TCF-based reliability models have been developed to formulate the relationship between the testing coverage and the number of detected faults, such as the Rayleigh model [25], logarithmic-exponential model [23], beta model, hyper-exponential model [22] and so on [22,24,26].

Therefore, it is of great importance to model dual fault detection and correction processes. In contrast to the existing research that considers the time dependency between fault detection and fault correction processes, in this paper, we will propose a new software reliability model considering both fault detection and correction processes from the viewpoint of fault content, that is, the quantitative dependence between the number of faults detected by the fault detection process and the number of faults corrected by the fault correction process. The fault introduction rate and test coverage are also considered to improve the accuracy of the final derived model.

The remainder of this paper is organized as follows. In Section 2, we first give a brief overview of the fault-detection models’ assumptions and fault-correction process, then build a relationship between the numbers of detected faults and corrected faults, after which we present the proposed model incorporating the fault introduction rate and testing coverage rate, and several existing SRGMs are also presented. In Section 3, we examine the fitting and prediction performance of this model on three sets of software failure data compared with other existing SRGMs. Finally, Section 4 gives the conclusions.

2. Modeling Fault Detection and Fault Correction Processes

2.1. Basic Assumptions of Existing NHPP SRGMs

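An NHPP is used to describe the failure phenomenon during the testing process. The counting process $\{N(t), t \ge 0\}$ of an NHPP is given as follows.

$$\Pr\{N(t) = n\} = \frac{[m(t)]^{n}}{n!}\, e^{-m(t)}, \quad n = 0, 1, 2, \ldots \tag{1}$$

The mean value function (MVF) $m(t)$ can be expressed as follows.

$$m(t) = \int_{0}^{t} \lambda(s)\, ds \tag{2}$$

where $\lambda(t)$ is the fault intensity function.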
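The two NHPP relations above, the Poisson probability of observing n failures by time t and the mean value function as the integral of the intensity, can be illustrated numerically. The following is a minimal sketch; the function names `nhpp_pmf` and `mean_value`, and the exponentially decaying intensity with a = 100 and b = 0.1, are assumptions chosen for illustration and do not come from the paper.

```python
import math

def nhpp_pmf(n, m_t):
    """P{N(t) = n} for an NHPP whose mean value function equals m_t at time t."""
    if n < 0:
        return 0.0
    if m_t == 0.0:
        return 1.0 if n == 0 else 0.0
    # Work in log space so large counts do not overflow the factorial.
    return math.exp(n * math.log(m_t) - m_t - math.lgamma(n + 1))

def mean_value(intensity, t, steps=10_000):
    """Trapezoidal approximation of m(t), the integral of intensity(s) over [0, t]."""
    h = t / steps
    total = 0.5 * (intensity(0.0) + intensity(t))
    for k in range(1, steps):
        total += intensity(k * h)
    return total * h

# Hypothetical intensity lambda(s) = a * b * exp(-b * s); a and b are made-up values.
a, b = 100.0, 0.1
m10 = mean_value(lambda s: a * b * math.exp(-b * s), 10.0)

# Probability of having observed at most 60 failures by t = 10 under this intensity.
p_at_most_60 = sum(nhpp_pmf(n, m10) for n in range(61))
```

For this particular intensity the integral has the closed form a(1 − e^{−bt}), which gives a quick sanity check on the numerical value of `m10`.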

Most existing SRGMs based on NHPP have the following basic assumptions concerning the software fault detection process:

- The software failures’ occurrence and faults’ removal follow NHPP;

- The software failure intensity at any time is proportional to the number of remaining faults present at that time;

- The detected faults are immediately removed with certainty and correction of faults takes only negligible time.

According to the above assumptions, the general NHPP model can be obtained by solving the following equation:

$$\frac{d m_d(t)}{dt} = b(t)\left[a(t) - m_d(t)\right] \tag{3}$$

where $a(t)$ is the total fault content function, $m_d(t)$ is the mean number of detected faults and $b(t)$ represents the fault detection rate.
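The general model is a first-order differential equation: the growth rate of the mean number of detected faults equals the detection rate times the number of faults still undetected. When no closed form is available it can be integrated numerically. The sketch below uses a classical fourth-order Runge-Kutta step; the function name `solve_mvf`, the choice to pass the fault content as a function of both time and the current mean, and all parameter values are assumptions made for illustration.

```python
import math

def solve_mvf(a_of, b_of, t_end, steps=2000):
    """Integrate dm/dt = b(t) * (a(t, m) - m) from m(0) = 0 with classical RK4.

    a_of(t, m): total fault content (may depend on m for imperfect debugging).
    b_of(t):    fault detection rate.
    """
    def f(t, m):
        return b_of(t) * (a_of(t, m) - m)

    h = t_end / steps
    t, m = 0.0, 0.0
    for _ in range(steps):
        k1 = f(t, m)
        k2 = f(t + h / 2, m + h * k1 / 2)
        k3 = f(t + h / 2, m + h * k2 / 2)
        k4 = f(t + h, m + h * k3)
        m += h * (k1 + 2 * k2 + 2 * k3 + k4) / 6
        t += h
    return m

# Constant fault content and constant detection rate (hypothetical values).
m_perfect = solve_mvf(lambda t, m: 100.0, lambda t: 0.1, 10.0)

# Fault content growing linearly with detected faults (hypothetical rate 0.05).
m_imperfect = solve_mvf(lambda t, m: 100.0 + 0.05 * m, lambda t: 0.1, 10.0)
```

With constant a and b the result should match a(1 − e^{−bt}); with a fault content that grows with the detected faults, more faults are expected by the same time.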

2.2. Considering the Fault-Detection Process and Fault-Correction Process Together

Most existing SRGMs focus only on describing the behavior of the fault detection process and assume that faults are fixed instantaneously upon detection. In practice, most latent software faults may remain uncorrected for a long time even after they are detected, owing to the complexity of software systems, the testers’ incomplete comprehension of the software, and the learning process.

Suppose $m_c(t)$ denotes the mean value of corrected faults by time $t$, and assume that the mean number of faults corrected in the time interval $(t, t + \Delta t)$ is proportional to the mean number of detected but not yet corrected faults remaining in the software system. The MVF can be expressed in terms of the following equation:

$$\frac{d m_c(t)}{dt} = b_c(t)\left[m_d(t) - m_c(t)\right] \tag{4}$$

where $b_c(t)$ is the fault correction rate, and $m_d(t)$, the mean number of detected faults, is shown in Equation (3).
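The correction process is driven by the backlog of detected but not yet corrected faults, so the detection and correction equations form a coupled system. A minimal sketch of that coupling follows, assuming constant fault content, a constant detection rate and a constant correction rate (a simplification made here for illustration, not the paper's final model). The names `detect_and_correct` and `corrected_closed_form` and the parameter values are hypothetical.

```python
import math

def detect_and_correct(a, b, b_c, t_end, steps=4000):
    """Integrate the coupled detection/correction ODEs with Heun's method.

    d(m_d)/dt = b   * (a   - m_d)   detection
    d(m_c)/dt = b_c * (m_d - m_c)   correction of the detected backlog
    """
    def rhs(m_d, m_c):
        return b * (a - m_d), b_c * (m_d - m_c)

    h = t_end / steps
    m_d = m_c = 0.0
    for _ in range(steps):
        d1, c1 = rhs(m_d, m_c)
        d2, c2 = rhs(m_d + h * d1, m_c + h * c1)
        m_d += 0.5 * h * (d1 + d2)
        m_c += 0.5 * h * (c1 + c2)
    return m_d, m_c

def corrected_closed_form(a, b, b_c, t):
    """Closed form of m_c(t) for constant rates with b != b_c."""
    return a * (1.0 - (b_c * math.exp(-b * t) - b * math.exp(-b_c * t)) / (b_c - b))

# Hypothetical parameter values.
m_d10, m_c10 = detect_and_correct(100.0, 0.1, 0.3, 10.0)
```

In this constant-rate case the corrected count always lags the detected count, and the lag shrinks as the correction rate grows relative to the detection rate.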

2.3. The Relationship between $m_d(t)$ and $m_c(t)$

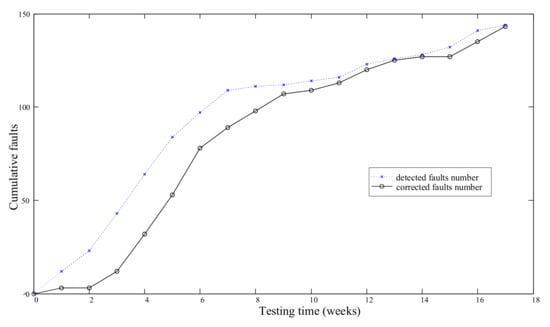

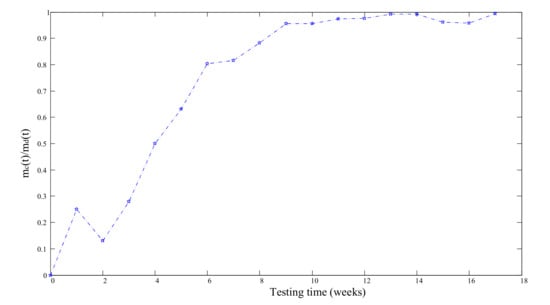

Here we use data collected from testing a software program (Data Set 1, DS-1) [28] to study the relationship between $m_d(t)$ and $m_c(t)$. Let $r(t) = m_c(t)/m_d(t)$; the cumulative detected faults and corrected faults are shown in Figure 1 and the value of $r(t)$ is shown in Figure 2. At the beginning of the testing phase, many faults are detected and most of them are simple and easy to remove, so the difference between the number of corrected faults and detected faults is small. As the detected faults become more complicated and difficult to remove, the difference becomes larger, and then it becomes smaller again. Thus, a concave or S-shaped function can be used to model the ratio of the number of corrected faults to the number of detected faults.
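The empirical ratio of corrected to detected faults can be computed directly from the two cumulative series. The sketch below uses made-up weekly counts, not the DS-1 data, and the helper name `correction_ratio` is hypothetical.

```python
def correction_ratio(detected, corrected):
    """Return corrected/detected for each period, skipping periods with no detections."""
    if len(detected) != len(corrected):
        raise ValueError("series must have the same length")
    return [c / d for d, c in zip(detected, corrected) if d > 0]

# Hypothetical cumulative counts per week (illustrative only, not DS-1).
detected = [5, 12, 20, 27, 33, 38, 42, 45]
corrected = [4, 9, 14, 20, 26, 32, 37, 41]

ratios = correction_ratio(detected, corrected)
backlog = [d - c for d, c in zip(detected, corrected)]
```

Plotting `ratios` against the week index gives the kind of curve to which a concave or S-shaped ratio function would then be fitted.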

Figure 1.

The number of cumulative detected faults and corrected faults for DS-1.

Figure 2.

The ratio of the number of corrected faults to the number of detected faults for DS-1.

Here we use two S-shaped functions to model $r(t)$. From Table 1, we can see that provides a better descriptive power.

Table 1.

Comparison of two S-shaped functions as $r(t)$ for DS-1.

2.4. A New Model with Imperfect Debugging and Testing Coverage

Here we incorporate testing coverage and the fault introduction rate into the software reliability model.

Suppose $c(t)$ denotes the proportion of the code covered by time $t$ relative to the whole code. Obviously, $c(t)$ increases with testing time $t$. When testing starts, $c(t)$ grows quickly as more test cases are executed to examine the software; after a certain point in time, the software becomes stable and little additional coverage is gained while the residual faults are being detected, so $c(t)$ flattens as testing comes to an end. Thus, a concave or S-shaped function is suitable for modeling the testing coverage function. Obviously, $1 - c(t)$ is the percentage of the software code that has not yet been covered by test cases up to time $t$. The derivative of the testing coverage function, $c'(t)$, represents the coverage rate. Therefore, $c'(t)/(1 - c(t))$ can be used to measure the fault detection rate $b(t)$, which is shown in Equation (3).

To build a model incorporating fault-detection process and fault-correction process as well as fault introduction rate and testing coverage, the following assumptions are proposed for this model:

- The software failures’ occurrence follows an NHPP process.

- The software failure rate at any time depends on both the fault detection rate and the number of remaining faults in the software at that time.

- The fault detection rate can be expressed by $c'(t)/(1 - c(t))$; $c(t)$ is the percentage of the code that has been examined up to time $t$, and $c'(t)$ is the derivative of the testing coverage function and represents the coverage rate.

- Faults can be introduced during the debugging phase with a constant fault introduction rate $\alpha$, and the overall fault content function is linear time-dependent.

- $m_c(t)$ denotes the mean value of corrected faults by time $t$, which is proportional to the mean number of detected but not yet corrected faults remaining in the software system, and $r(t)$ represents the relationship between $m_c(t)$ and $m_d(t)$ expressed by $m_c(t) = r(t)\, m_d(t)$; $m_d(t)$ is the cumulative detected faults.

From Assumption 4, the total fault number function $a(t)$ is a linear function of the expected number of faults detected up to time $t$. That is,

$$a(t) = a + \alpha\, m_d(t) \tag{5}$$

where $a$ denotes the initial number of faults present in the software system before testing starts and $\alpha$ is the constant fault introduction rate.

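Substituting $a(t)$ from Equation (5) into Equation (3), with the fault detection rate $b(t) = c'(t)/(1 - c(t))$, and solving it under the initial condition that at $t = 0$, $m_d(0) = 0$, we can obtain

$$m_d(t) = \frac{a}{1 - \alpha}\left[1 - \left(\frac{1 - c(t)}{1 - c(0)}\right)^{1 - \alpha}\right] \tag{6}$$

where $c(0)$ refers to the testing coverage function when $t = 0$.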
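The step from the differential equation to the closed-form mean number of detected faults can be sketched as follows, assuming the coverage-based detection rate $b(t) = c'(t)/(1 - c(t))$, the linear fault content $a(t) = a + \alpha\, m_d(t)$, and $\alpha \neq 1$. The auxiliary variable $u(t)$ is introduced here only for the derivation.

$$
\begin{aligned}
\frac{d m_d(t)}{dt} &= \frac{c'(t)}{1 - c(t)}\left[a - (1 - \alpha)\, m_d(t)\right], \\
u(t) &:= a - (1 - \alpha)\, m_d(t) \;\Rightarrow\; \frac{du}{dt} = -(1 - \alpha)\,\frac{c'(t)}{1 - c(t)}\, u(t), \quad u(0) = a, \\
\ln\frac{u(t)}{a} &= -(1 - \alpha)\int_{0}^{t} \frac{c'(s)}{1 - c(s)}\, ds = (1 - \alpha)\,\ln\frac{1 - c(t)}{1 - c(0)}, \\
m_d(t) &= \frac{a - u(t)}{1 - \alpha} = \frac{a}{1 - \alpha}\left[1 - \left(\frac{1 - c(t)}{1 - c(0)}\right)^{1 - \alpha}\right].
\end{aligned}
$$

With $\alpha = 0$ this reduces to $a\,[c(t) - c(0)]/[1 - c(0)]$, i.e., the expected number of detected faults grows in proportion to the newly covered fraction of the code.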

From Assumption 5, once $m_d(t)$ is determined, $m_c(t)$ can be obtained. That is,

$$m_c(t) = r(t)\, m_d(t) \tag{7}$$

Substituting different types of testing coverage functions for $c(t)$ and ratio functions of corrected faults number to detected faults number for $r(t)$ in Equation (7), we can obtain different MVFs corresponding to them. As mentioned above, the testing coverage function $c(t)$ should be a non-negative and non-decreasing function of testing time $t$. So the following function can be used to model the testing coverage function, that is:

where denotes the maximum percentage of testing coverage, is the shape parameter and is the scale parameter. Clearly, when , .

According to the results of Section 2.3, here the following function is used to describe $r(t)$:

Substituting Equations (8) and (9) into Equation (7), we can get the MVF of corrected faults correspondingly:

It can be seen that the fault detection process and the fault correction process, as well as the fault introduction rate and testing coverage, are all integrated into the proposed model.
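The way the pieces combine (a coverage function drives detection, a fault introduction rate inflates the fault content, and a ratio function maps detected faults to corrected faults) can be sketched in code. The coverage function and ratio function below are hypothetical stand-ins chosen only because they are concave and S-shaped respectively; they are not the specific functions of this paper, and all parameter values are made up. The closed form used in `m_detected` follows from solving the detection equation with a coverage-based detection rate and linear fault content, and `m_detected_numeric` checks it by direct integration.

```python
import math

def coverage(t, A=0.95, k=0.2):
    """Hypothetical concave coverage function c(t) = A * (1 - exp(-k t))."""
    return A * (1.0 - math.exp(-k * t))

def coverage_rate(t, A=0.95, k=0.2):
    """Derivative c'(t) of the hypothetical coverage function."""
    return A * k * math.exp(-k * t)

def m_detected(t, a=100.0, alpha=0.05):
    """Closed-form mean detected faults for detection rate c'(t) / (1 - c(t))."""
    uncovered = (1.0 - coverage(t)) / (1.0 - coverage(0.0))
    return a / (1.0 - alpha) * (1.0 - uncovered ** (1.0 - alpha))

def ratio_r(t, beta=0.5, gamma=0.3):
    """Hypothetical S-shaped ratio of corrected to detected faults."""
    return 1.0 / (1.0 + beta * math.exp(-gamma * t))

def m_corrected(t):
    """Mean corrected faults as ratio times mean detected faults."""
    return ratio_r(t) * m_detected(t)

def m_detected_numeric(t_end, a=100.0, alpha=0.05, steps=20_000):
    """Midpoint-rule integration of dm/dt = c'/(1 - c) * (a + alpha*m - m)."""
    def f(t, m):
        return coverage_rate(t) / (1.0 - coverage(t)) * (a + alpha * m - m)

    h = t_end / steps
    t, m = 0.0, 0.0
    for _ in range(steps):
        k1 = f(t, m)
        m += h * f(t + h / 2, m + h * k1 / 2)
        t += h
    return m

gap = abs(m_detected(10.0) - m_detected_numeric(10.0))
```

Swapping in a different coverage or ratio function only requires replacing `coverage`, `coverage_rate` and `ratio_r`; the composition in `m_corrected` stays the same.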

Table 2 lists the existing NHPP models to depict the MVF of fault correction process [14] and fault detection process [24] as well as the proposed new model. All models together will be used for the following comparisons.

Table 2.

Software reliability models and their MVFs.

3. Model Comparisons

3.1. Comparison Criteria and Parameter Estimation Method

3.1.1. Criteria for Models’ Descriptive Power Comparison

Here we use three criteria to judge the descriptive performance of the models. The first criterion is the mean value of squared error (Mean Square Error, MSE), which can be calculated as follows:

$$MSE = \frac{\sum_{i=1}^{n}\left[\hat{m}(t_i) - y_i\right]^{2}}{n - N} \tag{11}$$

where $n$ represents the number of observations, $y_i$ represents the total number of faults observed by time $t_i$, $\hat{m}(t_i)$ denotes the estimated cumulative number of faults up to time $t_i$, and $N$ represents the number of parameters in the model. The lower the value of MSE, the better the model performs.

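The second criterion is the correlation index of the regression curve equation ($R^2$), which can be expressed as follows:

$$R^{2} = 1 - \frac{\sum_{i=1}^{n}\left[y_i - \hat{m}(t_i)\right]^{2}}{\sum_{i=1}^{n}\left(y_i - \bar{y}\right)^{2}} \tag{12}$$

where $\bar{y} = \frac{1}{n}\sum_{i=1}^{n} y_i$. The larger $R^2$, the better the model explains the variation in the data.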

The last criterion is adjusted $R^2$ (Adjusted $R^2$), which can be expressed as follows:

$$\text{Adjusted } R^{2} = 1 - \frac{\left(1 - R^{2}\right)(n - 1)}{n - P - 1} \tag{13}$$

where $R^2$ denotes the value of $R^2$ and $P$ represents the model’s predictor number. The larger the value of Adjusted $R^2$, the better the model’s performance.
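The three goodness-of-fit criteria can be computed from the observed and fitted cumulative fault counts in a few lines. This is a minimal sketch using the standard definitions (MSE with the number of observations minus the number of parameters in the denominator, the usual coefficient of determination, and its adjusted form); the function names and the small data set are illustrative assumptions.

```python
def mse(actual, fitted, n_params):
    """Sum of squared errors divided by (observations - model parameters)."""
    n = len(actual)
    return sum((f - y) ** 2 for y, f in zip(actual, fitted)) / (n - n_params)

def r_squared(actual, fitted):
    """1 - residual sum of squares / total sum of squares about the mean."""
    mean_y = sum(actual) / len(actual)
    ss_res = sum((y - f) ** 2 for y, f in zip(actual, fitted))
    ss_tot = sum((y - mean_y) ** 2 for y in actual)
    return 1.0 - ss_res / ss_tot

def adjusted_r_squared(actual, fitted, n_predictors):
    """R^2 penalized for the number of predictors in the model."""
    n = len(actual)
    r2 = r_squared(actual, fitted)
    return 1.0 - (1.0 - r2) * (n - 1) / (n - n_predictors - 1)

# Hypothetical observed and fitted cumulative fault counts.
actual = [2, 4, 6, 8, 10]
fitted = [2, 4, 6, 8, 11]

fit_mse = mse(actual, fitted, n_params=2)
fit_r2 = r_squared(actual, fitted)
fit_adj_r2 = adjusted_r_squared(actual, fitted, n_predictors=2)
```

Because MSE and Adjusted R² both penalize the parameter count, a model with more parameters has to reduce the residuals noticeably to score better on them.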

3.1.2. Criteria for Models’ Predictive Power Comparison

Here we use the SSE criterion to examine the predictive power of SRGMs. SSE is the sum of squared errors, which is expressed as follows:

$$SSE = \sum_{i=e+1}^{n}\left[\hat{m}(t_i) - y_i\right]^{2} \tag{14}$$

Assume that by the end of testing time $t_n$, a total of $y_n$ faults have been detected. First we use the data points up to time $t_e$ ($t_e < t_n$) to estimate the parameters of $m(t)$; substituting the estimated parameters into the mean value function yields the predicted cumulative fault number $\hat{m}(t_i)$ by time $t_i$ ($t_e < t_i \le t_n$), and $y_i$ is the actual number of faults detected by $t_i$. The procedure is repeated for several values of $t_i$ ($i = e+1, e+2, \ldots$) until $t_n$.

The smaller the SSE, the better the model’s predictive performance.
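The prediction procedure (fit on an initial portion of the data, then score the fitted curve on the held-out remainder) can be sketched generically. In the sketch below, `predictive_sse` accepts any fitting routine that returns a predictor; `fit_line_through_origin` is a deliberately trivial placeholder model used only to make the example self-contained, and the data are made up. Both names are hypothetical.

```python
def predictive_sse(times, counts, fit, train_fraction=0.8):
    """Fit on the first part of the data and return SSE on the held-out rest.

    fit(train_times, train_counts) must return a function t -> predicted count.
    """
    n = len(times)
    split = int(round(train_fraction * n))
    predictor = fit(times[:split], counts[:split])
    return sum((predictor(t) - y) ** 2
               for t, y in zip(times[split:], counts[split:]))

def fit_line_through_origin(times, counts):
    """Placeholder model: least-squares line through the origin (not an SRGM)."""
    slope = sum(t * y for t, y in zip(times, counts)) / sum(t * t for t in times)
    return lambda t: slope * t

# Hypothetical cumulative counts; the last observation deviates from the trend.
times = list(range(1, 11))
counts = [2, 4, 6, 8, 10, 12, 14, 16, 18, 21]

holdout_sse = predictive_sse(times, counts, fit_line_through_origin)
```

Passing a fitting routine for an actual SRGM in place of the placeholder gives the predictive SSE for that model under the same split.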

3.1.3. Parameter Estimation Method

Once the analytical expression for $m(t)$ is derived, the parameters in $m(t)$ can be estimated by using the maximum likelihood estimation (MLE) method or the least squares estimation (LSE) method. Though estimates from MLE are consistent and asymptotically normally distributed as the sample size increases, sometimes the estimates cannot be obtained, especially when $m(t)$ is too complex. Here we therefore use the LSE method to estimate the models’ parameters.

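The sum of the squared distance is given as follows:

$$S = \sum_{k=1}^{n}\left[y_k - m(t_k)\right]^{2} \tag{15}$$

where $y_k$ is the cumulative number of faults detected or corrected in time $(0, t_k)$, and all failure data are denoted in the form of pairs $(t_k, y_k)$ ($k = 1, 2, \ldots, n$; $0 < t_1 < t_2 < \cdots < t_n$).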

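By taking derivatives of (15) with respect to each parameter, and setting the results equal to zero, we can obtain several equations for the proposed model as follows:

$$\frac{\partial S}{\partial \theta_j} = -2\sum_{k=1}^{n}\left[y_k - m(t_k)\right]\frac{\partial m(t_k)}{\partial \theta_j} = 0 \tag{16}$$

where $\theta_j$ ranges over the parameters of the proposed model. After solving the above equations simultaneously, we can obtain the least squares estimates of all parameters for the proposed model.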

As noted, the equations in (16) are extremely difficult to solve analytically and require either graphical or numerical methods. With the help of MATLAB, the calculation of the parameters is not a critical problem, although adding parameters makes the software reliability model more complex and the work of parameter estimation more difficult.
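The numerical side of least squares estimation can be illustrated on a small model. The sketch below fits the two-parameter exponential mean value function a(1 − e^{−bt}) rather than the proposed model, and uses plain Python instead of MATLAB; both are substitutions made for illustration. For a fixed b the optimal a has a closed form because the model is linear in a, so only a one-dimensional golden-section search over b is needed. The function names, search bounds and synthetic data are assumptions, and the search presumes the profiled sum of squares has a single minimum on the search interval.

```python
import math

def go_mvf(t, a, b):
    """Exponential mean value function a * (1 - exp(-b t))."""
    return a * (1.0 - math.exp(-b * t))

def profile_sse(b, times, counts):
    """For fixed b, solve for the best a in closed form; return (SSE, a)."""
    g = [1.0 - math.exp(-b * t) for t in times]
    a = sum(y * gi for y, gi in zip(counts, g)) / sum(gi * gi for gi in g)
    sse = sum((y - a * gi) ** 2 for y, gi in zip(counts, g))
    return sse, a

def fit_go(times, counts, b_lo=1e-4, b_hi=2.0, iters=100):
    """Golden-section search over b, assuming a single minimum on [b_lo, b_hi]."""
    inv_phi = (math.sqrt(5.0) - 1.0) / 2.0
    lo, hi = b_lo, b_hi
    for _ in range(iters):
        x1 = hi - inv_phi * (hi - lo)
        x2 = lo + inv_phi * (hi - lo)
        if profile_sse(x1, times, counts)[0] < profile_sse(x2, times, counts)[0]:
            hi = x2
        else:
            lo = x1
    b = 0.5 * (lo + hi)
    return profile_sse(b, times, counts)[1], b

# Synthetic, noise-free data generated from known parameters (a = 100, b = 0.1).
times = list(range(1, 21))
counts = [go_mvf(t, 100.0, 0.1) for t in times]

a_hat, b_hat = fit_go(times, counts)
```

Models with more parameters generally need a multi-dimensional optimizer and sensible starting values, which is where the estimation becomes harder, as noted above.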

3.2. Data Analysis and Model Comparison with Real Application

3.2.1. A Middle-Size Software System Data

Here we examine the performance of the proposed model and compare it with several traditional models using data collected from testing a middle-size software system (Data Set 1, DS-1) [11]. The failure data are recorded by week and are shown in Table 3. In contrast to a traditional software reliability data set, this data set includes not only fault-detection data but also fault-correction data. We use all data points to fit the models and obtain the parameter estimates of all models. The model parameters, MSE values, $R^2$ values and Adjusted $R^2$ values are listed in Table 4.

Table 3.

A middle-size software system data (DS-1).

Table 4.

Comparison of goodness-of-fit power of SRGMs for of DS-1.

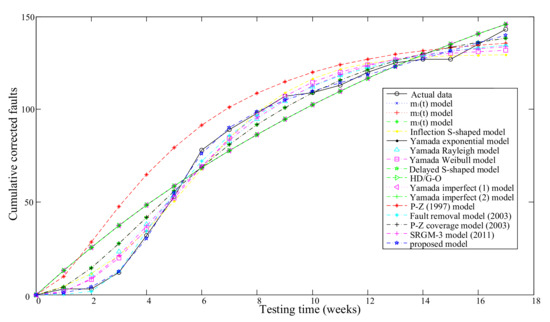

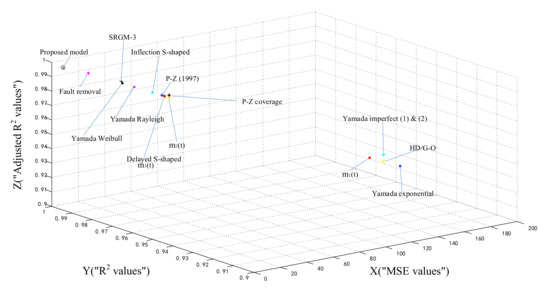

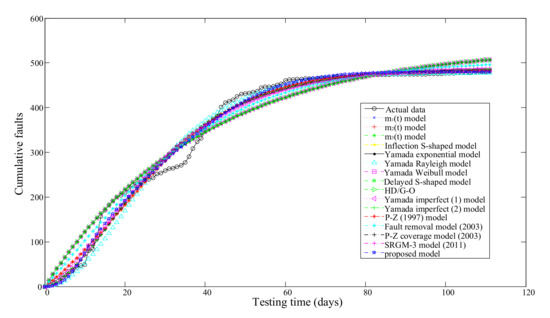

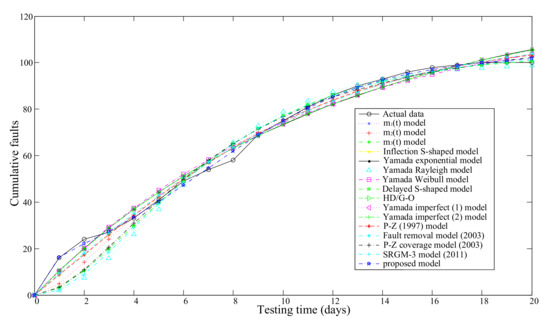

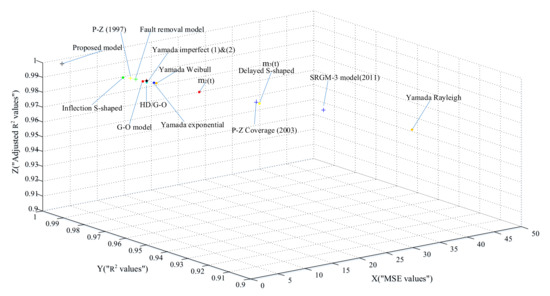

All models’ fitting results for DS-1 are graphically illustrated in Figure 3. Figure 4 is three dimensional and the coordinates X, Y and Z illustrate the values of MSE, $R^2$ and Adjusted $R^2$, respectively. We can see that the proposed model has the best values of MSE, $R^2$ and Adjusted $R^2$, i.e., the new model fits the real data set better than all other models.

Figure 3.

The comparison fitting results of SRGMs for DS-1.

Figure 4.

(X, Y, Z) represents (MSE, $R^2$, Adjusted $R^2$) values for DS-1.

3.2.2. Monitor and Control System Data

Here we examine the models using testing data collected from a software program developed for a real-time monitor and control system (Data Set 2, DS-2) [35]. The failure data are recorded by day and are shown in Table 5. We use all data points to fit the models and estimate the parameters in the models. This data set contains only the number of detected faults. The model parameters, MSE values, $R^2$ values and Adjusted $R^2$ values are listed in Table 6.

Table 5.

Failures per day and cumulative failures for DS-2.

Table 6.

Comparison of goodness-of-fit power of SRGMs for DS-2.

From Table 6, we can see that, comparing all models on all three criteria, the new model yields the best criteria values and provides the best descriptive power. All models’ fitting results for DS-2 are graphically illustrated in Figure 5. Figure 6 is three dimensional and the coordinates X, Y and Z illustrate the values of MSE, $R^2$ and Adjusted $R^2$, respectively.

Figure 5.

The comparison fitting results of SRGMs for DS-2.

Figure 6.

(X, Y, Z) represents (MSE, $R^2$, Adjusted $R^2$) values for DS-2.

3.2.3. Tandem Computer Data

Here we examine the models using testing data collected from Tandem Computers Release #1 (Data Set 3, DS-3) [5]. The failure data are recorded by week and are shown in Table 7. We use all data points to fit the models and estimate the parameters in the models. This data set also contains only the number of detected faults. The model parameters, MSE values, $R^2$ values and Adjusted $R^2$ values for goodness-of-fit are listed in Table 8.

Table 7.

Failures per week and cumulative failures for DS-3.

Table 8.

Comparison of goodness-of-fit power of SRGMs for DS-3.

From Table 8, we can see that the new model provides the best descriptive power. The fitting of the models (existing and proposed) to DS-3 is graphically illustrated in Figure 7. Figure 8 is three dimensional and the coordinates X, Y and Z illustrate the values of MSE, $R^2$ and Adjusted $R^2$, respectively.

Figure 7.

The fitting results of comparison SRGMs compared with actual data for DS-3.

Figure 8.

(X, Y, Z) represents (MSE, $R^2$, Adjusted $R^2$) values for DS-3.

3.2.4. Comparison of Models’ Predictive Power

For the predictive power comparison, we divide each data set into two parts: the first 80% of the data points and the remaining 20%. We use the first 80% of the data points to estimate the models’ parameters, then use the remaining data points to compare the models’ predictive power. The SSE values of the proposed model for DS-1, DS-2 and DS-3 are 37.0169, 236.0253 and 23.0348, respectively, as shown in Table 9. For DS-1, the new model has the smallest SSE value (37.0169) of all models; e.g., the SSE values of other models can be 1.87 times (P-Z coverage model, 69.0496) and even 22.24 times (Yamada imperfect (1) model, 823.0770) the value of the proposed model. For DS-2, the new model again has the smallest SSE value (236.0253); e.g., the SSE values of other models can be 11.75 times (Inflection S-shaped model, 2.7742 × 10^3) and even 1468.78 times (Yamada Rayleigh model, 3.4667 × 10^5) the value of the proposed model. For DS-3, the proposed model’s SSE of 23.0348 is not the best result; the smallest SSE value is 6.9655 (given by the delayed S-shaped model), which is of the same order of magnitude. Compared with the remaining models, however, the new model’s SSE value is much smaller; e.g., the SSE values of other models can be 3.16 times (Yamada Rayleigh model, 73.4246) and even 555.33 times (SRGM-3 model, 1.2792 × 10^4) the value of the proposed model. Additionally, the delayed S-shaped model provides the best result only for DS-3, not for DS-1 and DS-2. This may indicate that the proposed model gives better predictive power overall.

Table 9.

Comparison of SRGMs’ predictive power for DS-1, DS-2 and DS-3.

4. Conclusions

In this paper, the problem of modeling software fault-detection processes and fault-correction processes together with imperfect debugging and testing coverage has been investigated. From the viewpoint of fault amount instead of fault correction time delay, a new SRGM addressing fault re-introduction and testing coverage is put forward by introducing the relationship function between the MVFs of detected faults and corrected faults. The proposed model is applied to two kinds of data sets: one that contains not only detected fault numbers but also corrected fault numbers, and another that contains only detected fault numbers. For both kinds of data sets, the proposed model gives better goodness-of-fit results than the other existing NHPP models on all three real data sets, and the best or close to the best prediction results, according to the four criteria. It should be noted that, though adding more parameters makes the software reliability model more complicated and the task of parameter estimation more difficult, this is not a critical problem when the calculations are automated with software tools.

Author Contributions

Conceptualization, Q.L. and H.P.; Data Curation, Q.L.; Formal Analysis, Q.L.; Funding Acquisition, Q.L.; Investigation, Q.L.; Methodology, Q.L. and H.P.; Project Administration, Q.L.; Resources, Q.L. and H.P.; Supervision, H.P.; Writing—Review and Editing, H.P. All authors have read and agreed to the published version of the manuscript.

Funding

This research was partly funded by “National Key Laboratory of Science and Technology on Reliability and Environmental Engineering of China”, grant number “WDZC2019601A303”.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Erto, P.; Giorgio, M.; Lepore, A. The Generalized Inflection S-Shaped Software Reliability Growth Model. IEEE Trans. Reliab. 2018, 69, 228–244.
2. Utkin, L.V.; Coolen, F. A robust weighted SVR-based software reliability growth model. Reliab. Eng. Syst. Saf. 2018, 176, 93–101.
3. Saraf, I.; Iqbal, J. Generalized multi-release modelling of software reliability growth models from the perspective of two types of imperfect debugging and change point. Qual. Reliab. Eng. Int. 2019, 35, 2358–2370.
4. Jin, C.; Jin, S.W. Parameter optimization of software reliability growth model with S-shaped testing-effort function using improved swarm intelligent optimization. Appl. Soft Comput. 2016, 40, 283–291.
5. Pham, H. System Software Reliability; Springer Science and Business Media LLC: Berlin/Heidelberg, Germany, 2006.
6. Yamada, S.; Tokuno, K.; Osaki, S. Software reliability measurement in imperfect debugging environment and its application. Reliab. Eng. Syst. Saf. 1993, 40, 139–147.
7. Huang, C.Y.; Lyu, M.R. Estimation and analysis of some generalized multiple change-point software reliability models. IEEE Trans. Reliab. 2011, 60, 498–514.
8. Schneidewind, N.F. Analysis of error processes in computer software. Sigplan Not. 1975, 10, 337–346.
9. Xie, M.; Zhao, M. The Schneidewind software reliability model revisited. In Proceedings of the Third International Symposium on Software Reliability Engineering, Research Triangle Park, NC, USA, 7–10 October 1992.
10. Schneidewind, N.F. Modelling the fault correction process. In Proceedings of the 12th International Symposium on Software Reliability Engineering, Los Alamitos, CA, USA, 27–30 November 2001; pp. 185–190.
11. Xie, M.; Hu, Q.P.; Wu, Y.P.; Ng, S.H. A study of the modeling and analysis of software fault-detection and fault-correction processes. Qual. Reliab. Eng. Int. 2007, 23, 459–470.
12. Wu, Y.P.; Hu, Q.P.; Xie, M.; Ng, S.H. Modeling and analysis of software fault detection and correction process by considering time dependency. IEEE Trans. Reliab. 2007, 56, 629–642.
13. Peng, R.; Li, Y.F.; Zhang, W.J.; Hu, Q.P. Testing effort dependent software reliability model for imperfect debugging process considering both detection and correction. Reliab. Eng. Syst. Saf. 2014, 126, 37–43.
14. Lo, J.H.; Huang, C.Y. An integration of fault detection and correction processes in software reliability analysis. J. Syst. Softw. 2006, 79, 1312–1323.
15. Shuy, J.; Liu, H.W.; Wu, Z.B.; Yang, X.Z. A software reliability growth model integrating fault detection and fault correction processes. Chin. High Technol. Lett. 2010, 20, 386–391. (In Chinese)
16. Gokhale, S.S.; Lyu, M.R.; Trivedi, K.S. Analysis of Software Fault Removal Policies Using a Non-Homogeneous Continuous Time Markov Chain. Softw. Qual. J. 2004, 12, 211–230.
17. Gokhale, S.S.; Lyu, M.R.; Trivedi, K.S. Incorporating fault debugging activities into software reliability models: A simulation approach. IEEE Trans. Reliab. 2006, 55, 281–292.
18. Jia, L.; Yang, B.; Guo, S.; Park, D.H. Software reliability modeling considering fault correction process. IEICE Trans. Inf. Syst. 2010, 93, 185–188.
19. Huang, C.Y.; Huang, W.C. Software reliability analysis and measurement using finite and infinite server queueing models. IEEE Trans. Reliab. 2008, 57, 192–203.
20. Hwang, S.; Pham, H. Quasi-renewal time-delay fault-removal consideration in software reliability modeling. IEEE Trans. Syst. Man Cybern. Part A Syst. Hum. 2009, 39, 200–209.
21. Huang, C.Y.; Kuo, S.Y.; Lyu, M.R. An assessment of testing-effort dependent software reliability growth models. IEEE Trans. Reliab. 2007, 56, 198–211.
22. Cai, X.; Lyu, M.R. Software Reliability Modeling with Test Coverage: Experimentation and Measurement with A Fault-Tolerant Software Project. 18th IEEE Int. Symp. Softw. Reliab. 2007, 2007, 17–26.
23. Malaiya, Y.K.; Li, M.N.; Bieman, J.M.; Karcich, R. Software reliability growth with test coverage. IEEE Trans. Reliab. 2002, 51, 420–426.
24. Pham, H.; Zhang, X. NHPP software reliability and cost models with testing coverage. Eur. J. Oper. Res. 2003, 145, 443–454.
25. Vouk, M.A. Using Reliability Models during Testing with Non-Operational Profiles. Available online: https://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.47.8863&rep=rep1&type=pdf (accessed on 29 July 2021).
26. Gokhale, S.; Trivedi, K.S. A time/structure based software reliability model. Ann. Softw. Eng. 1999, 8, 85–121.
27. Park, J.-Y.; Lee, G.; Park, J.H. A class of coverage growth functions and its practical application. J. Korean Stat. Soc. 2008, 37, 241–247.
28. Zhang, X.; Teng, X.; Pham, H. Considering fault removal efficiency in software reliability assessment. IEEE Trans. Syst. Man Cybern. Part A Syst. Hum. 2003, 33, 114–120.
29. Ohba, M. Inflection S-Shaped Software Reliability Growth Model; Springer Science and Business Media LLC: Berlin/Heidelberg, Germany, 1984; pp. 144–162.
30. Yamada, S.; Ohba, M.; Osaki, S. S-shaped reliability growth modeling for software fault detection. IEEE Trans. Reliab. 1983, 12, 475–484.
31. Hossain, S.A.; Ram, C.D. Estimating the parameters of a non-homogeneous Poisson process model for software reliability. IEEE Trans. Reliab. 1993, 42, 604–612.
32. Yamada, S.; Tokuno, K.; Osaki, S. Imperfect debugging models with fault introduction rate for software reliability assessment. Int. J. Syst. Sci. 1992, 23, 2241–2252.
33. Pham, H.; Zhang, X. An NHPP Software Reliability Model and Its Comparison. Int. J. Reliab. Qual. Saf. Eng. 1997, 4, 269–282.
34. Kapur, P.K.; Pham, H.; Anand, S.; Yadav, K. A Unified Approach for Developing Software Reliability Growth Models in the Presence of Imperfect Debugging and Error Generation. IEEE Trans. Reliab. 2011, 60, 331–340.
35. Tohma, Y.; Yamano, H.; Ohba, M.; Jacoby, R. The estimation of parameters of the hypergeometric distribution and its application to the software reliability growth model. IEEE Trans. Softw. Eng. 1991, 17, 483–489.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).