Respite for SMEs: A Systematic Review of Socio-Technical Cybersecurity Metrics

Featured Application

Abstract

1. Introduction

- RQ1: How are cybersecurity metrics aggregated in socio-technical cybersecurity measurement solutions?

- RQ2: How do aggregation strategies differ in cybersecurity measurement solutions relevant to SMEs and all other solutions?

  - RQ2.1: What are the reasons for these differences?

  - RQ2.2: Which aggregation strategies can be used in SME cybersecurity measurement solutions, but currently are not?

- RQ3: How do cybersecurity measurement solutions deal with the need for adaptability?

2. Related Work

2.1. Socio-Technical Cybersecurity

2.2. Cybersecurity Metric Reviews

2.3. Aggregation

2.4. Adaptability

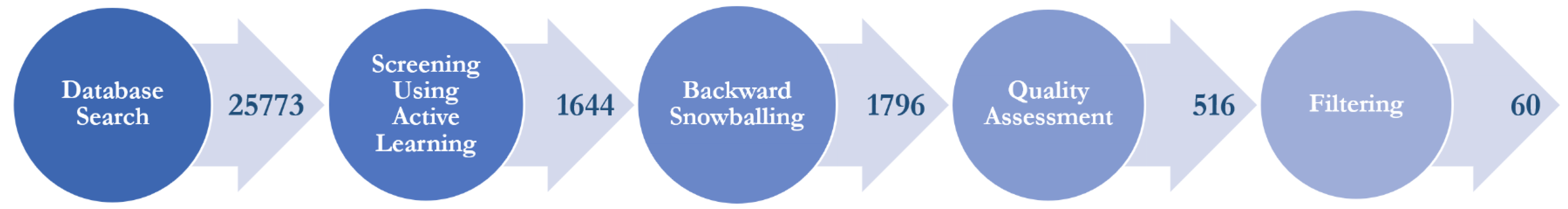

3. Systematic Review Methodology

- AUTHKEY((security* OR cyber*) AND (assess* OR evaluat* OR measur* OR metric* OR model* OR risk* OR scor*)) AND LANGUAGE(english) AND DOCTYPE(ar OR bk OR ch OR cp OR cr OR re)
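The search query above can be assembled programmatically, which keeps the term lists easy to audit and reuse. The following is a minimal sketch: the helper names (`any_of`, `build_query`) and the constant names are hypothetical, and only the search terms, language filter, and document types are taken from the query itself.

```python
# Term lists copied from the search query; all names below are illustrative.
TOPIC_TERMS = ["security*", "cyber*"]
MEASUREMENT_TERMS = [
    "assess*", "evaluat*", "measur*", "metric*", "model*", "risk*", "scor*",
]
DOC_TYPES = ["ar", "bk", "ch", "cp", "cr", "re"]


def any_of(terms):
    """Join terms into a parenthesised OR group, e.g. (a OR b)."""
    return "(" + " OR ".join(terms) + ")"


def build_query():
    """Compose the author-keyword, language, and document-type filters."""
    keywords = f"AUTHKEY({any_of(TOPIC_TERMS)} AND {any_of(MEASUREMENT_TERMS)})"
    return f"{keywords} AND LANGUAGE(english) AND DOCTYPE{any_of(DOC_TYPES)}"


QUERY = build_query()
print(QUERY)
```

The resulting string could then be handed to a Scopus client such as pybliometrics, which appears in the reference list; that step is omitted here because it requires API credentials.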

- Inclusion criteria:

  - I1: The research concerns cybersecurity metrics and discusses how these metrics can be used to assess the security of a (hypothetical) cyber-system.

  - I2: The research is a review of relevant papers.

- Exclusion criteria:

  - E1: The research does not concern cyber-systems.

  - E2: The research does not describe a concrete path towards calculating cybersecurity metrics (only applied if I2 is not applicable).

  - E3: The research has been retracted.

  - E4: There is a more relevant version of the research that is included.

  - E5: The research was automatically excluded due to its assessed irrelevance by the ASReview tool.

  - E6: The research does not satisfy the database query criteria on language and document type.

  - E7: No full-text version of the research can be obtained.

  - E8: The research is of insufficient quality.

  - E9: The research does not contain at least one socio-technical cybersecurity metric.
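The criteria above can be read as a screening rule. The sketch below is one possible encoding, under stated assumptions: a paper is included when it meets at least one inclusion criterion and triggers no exclusion criterion, and the exclusion criteria are checked in the order E1 to E9. The `Paper` fields and the `screen` function are hypothetical names introduced for illustration; only the criteria codes and the E2 exception for reviews come from the list.

```python
from dataclasses import dataclass


@dataclass
class Paper:
    """Screening judgements for one paper (field names are illustrative)."""
    concerns_cyber_systems: bool            # negation triggers E1
    discusses_metrics_for_assessment: bool  # I1
    is_review: bool                         # I2
    has_concrete_calculation_path: bool     # negation triggers E2
    retracted: bool = False                 # E3
    superseded_by_included_version: bool = False  # E4
    excluded_by_asreview: bool = False      # E5
    matches_language_and_doctype: bool = True     # negation triggers E6
    full_text_available: bool = True        # negation triggers E7
    sufficient_quality: bool = True         # negation triggers E8
    has_socio_technical_metric: bool = True # negation triggers E9


def screen(p: Paper) -> str:
    """Return 'include' or the first criterion that rules the paper out."""
    exclusions = [
        ("E1", not p.concerns_cyber_systems),
        # E2 is only applied when I2 (review) is not applicable.
        ("E2", not p.is_review and not p.has_concrete_calculation_path),
        ("E3", p.retracted),
        ("E4", p.superseded_by_included_version),
        ("E5", p.excluded_by_asreview),
        ("E6", not p.matches_language_and_doctype),
        ("E7", not p.full_text_available),
        ("E8", not p.sufficient_quality),
        ("E9", not p.has_socio_technical_metric),
    ]
    for code, fires in exclusions:
        if fires:
            return code
    if not (p.discusses_metrics_for_assessment or p.is_review):
        return "no inclusion criterion met"
    return "include"
```

Returning the code of the first criterion that fires, rather than a plain boolean, makes it straightforward to report how many papers were removed at each step.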

4. Results

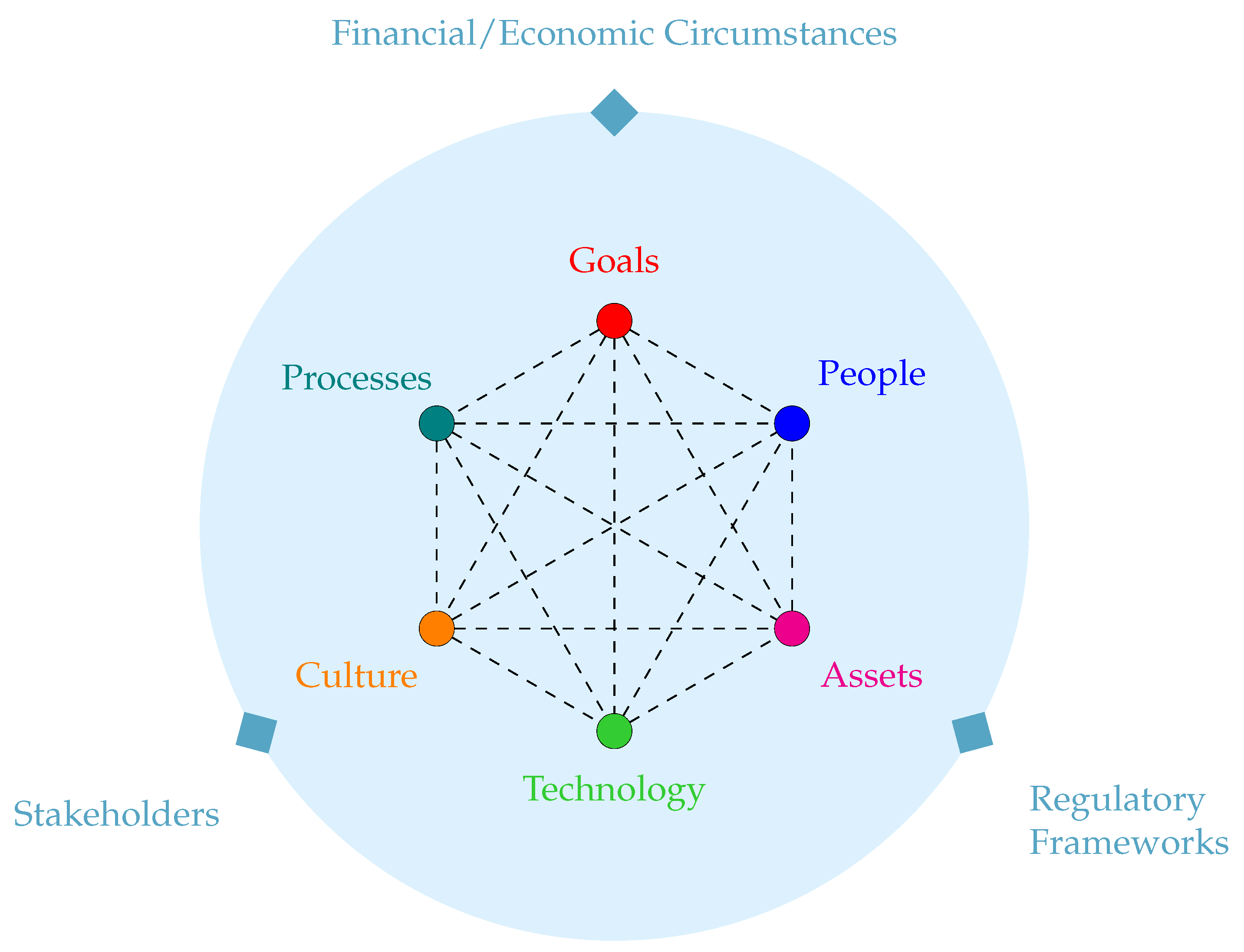

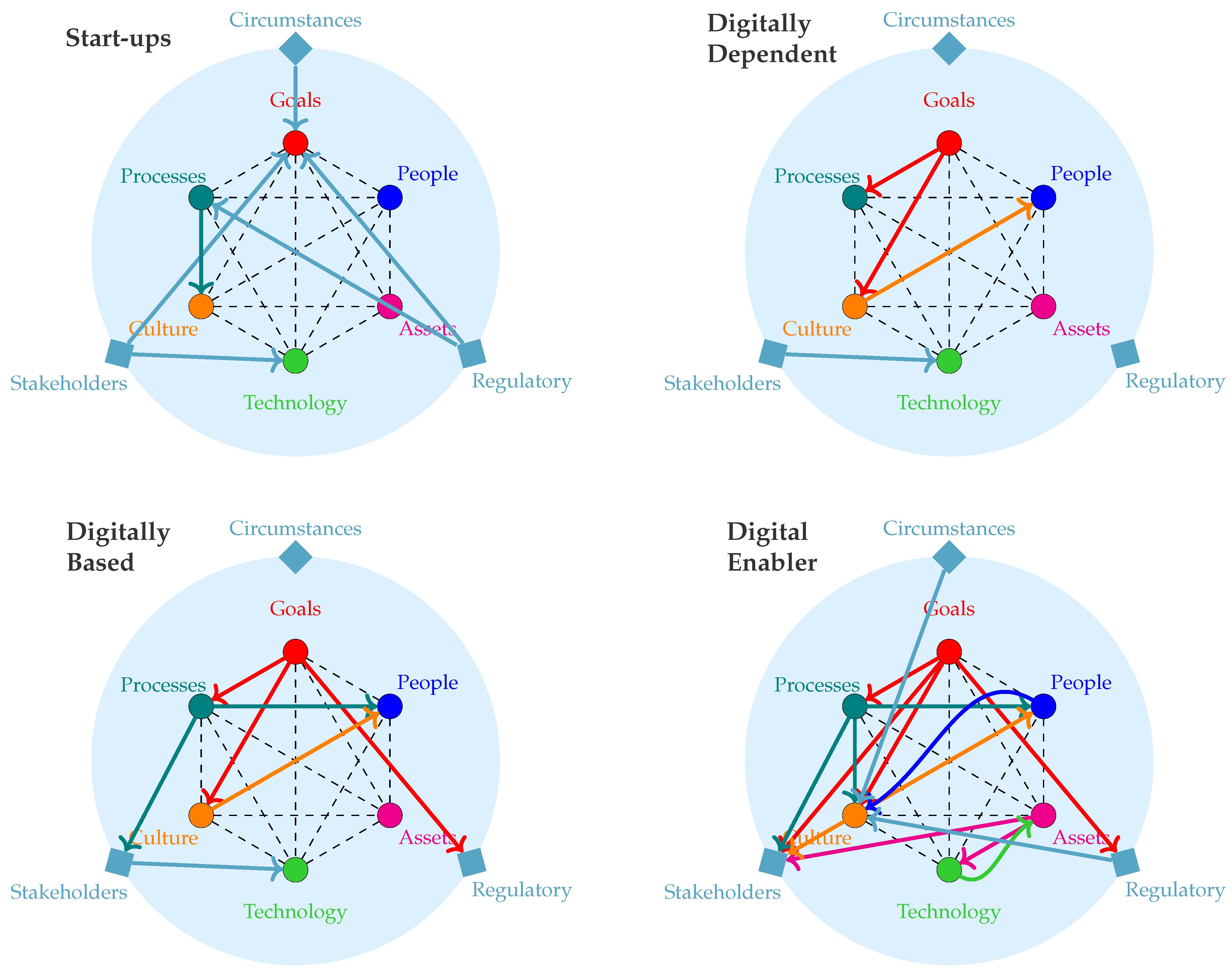

5. Socio-Technical Cybersecurity Framework for SMEs

6. Discussion

Limitations and Threats to Validity

7. Conclusions and Future Research

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AB | Ability |
| ADKAR | Awareness, Desire, Knowledge, Ability, Reinforcement |
| ANP | Analytic Network Process |
| AW | Awareness |
| BN | Bayesian Network |
| DE | Desire |
| KN | Knowledge |
| PMT | Protection Motivation Theory |
| RE | Reinforcement |
| RQ | Research Question |
| SDT | Self-Determination Theory |
| SME | Small- and Medium-Sized Enterprises |
| STS | Socio-Technical Systems |
| SYMBALS | SYstematic review Methodology Blending Active Learning and Snowballing |
| WCP | Weighted Complementary Product |
| WLC | Weighted Linear Combination |
| WM | Weighted Maximum |
| WP | Weighted Product |

Appendix A. Data Extraction Items

| Name | Description | Values |

|---|---|---|

| Security assessment concept | The security concept assessed in the paper. | Awareness, maturity, resilience, risk, security, vulnerability |

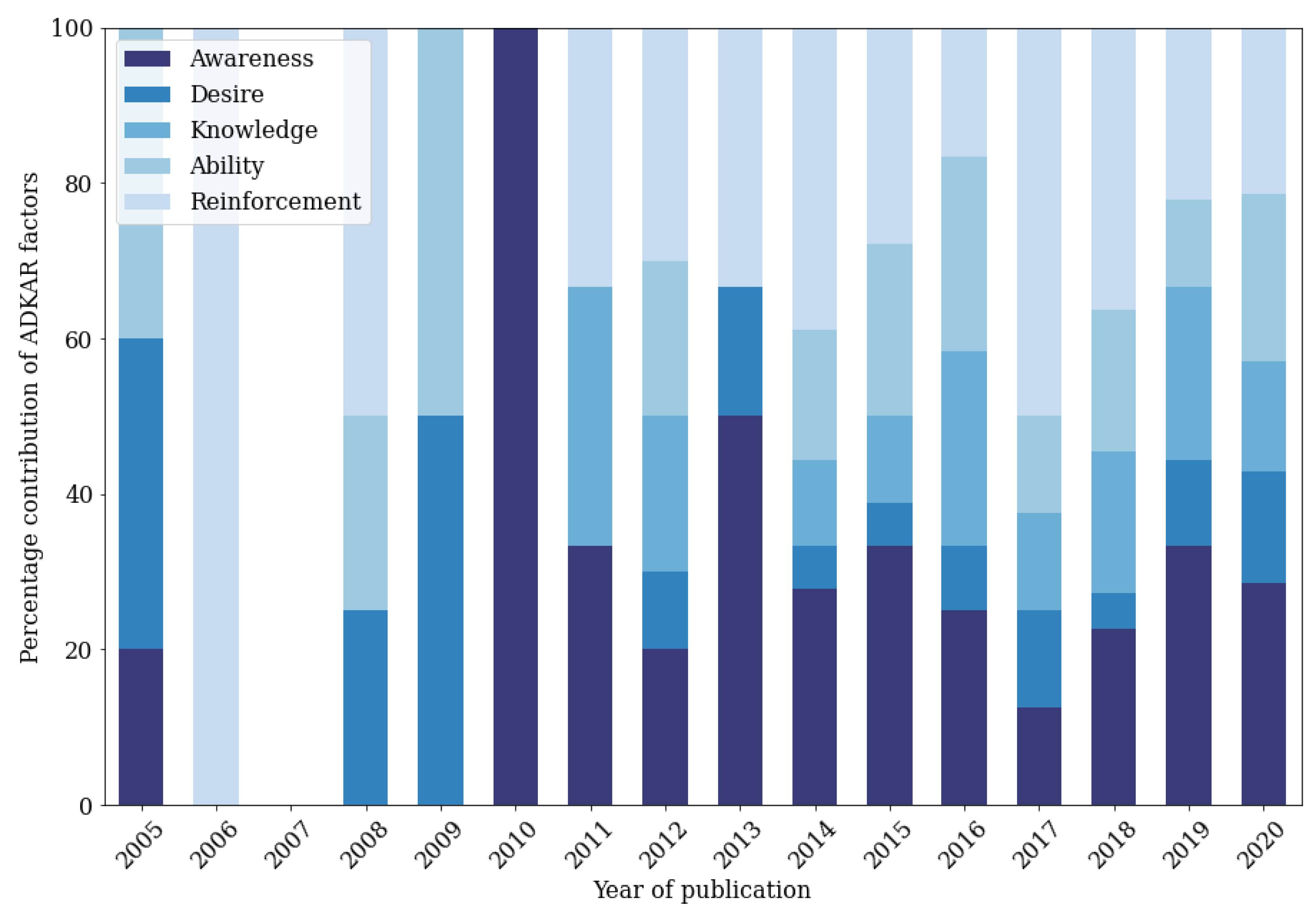

| Human cybersecurity aspects | The human cybersecurity aspect(s) of the ADKAR framework that were considered. | Awareness, desire, knowledge, ability, reinforcement |

| Metric aggregation strategies | The metric aggregation strategy or strategies employed. | Weighted linear combination, weighted product, weighted maximum, weighted complementary product, Bayesian network |

| Metric aggregation description | A description of the employed metric aggregation strategy. | Description text |

| Adaptability action missing data | The suggested response to deal with missing data. | Substitute, communicate, ignore, impossible, not considered |

| Missing data description | Description of the suggested response to deal with missing data. | Description text |

| Adaptability action dirty data | The suggested response to deal with dirty data. | Clean, substitute, communicate, ignore, impossible, not considered |

| Dirty data description | Description of the suggested response to deal with dirty data. | Description text |

| Adaptability action security event | The suggested response to deal with a security event. | No action, parameter tuning, model reformulation, impossible, not considered |

| Security event description | Description of the suggested response to deal with a security event. | Description text |

| Adaptability action concept drift | The suggested response to deal with concept drift. | No action, parameter tuning, model reformulation, impossible, not considered |

| Concept drift description | Description of the suggested response to deal with concept drift. | Description text |

| Concept drift consideration | Description of the author consideration of concept drift. | Description text |

| Research classification | A classification of the research type. | Theoretical, implementation, review |

| Research application area | Application area of the research. | Enterprise, other |

| Enterprise size(s) | For enterprise research, an indication of the enterprise size(s) the solution is applicable for. | Small, medium, large, any |

| Social viewpoint | An indication of which actors were considered from the social viewpoint. | Attacker, defender, both, unclear |

| Real-life threat | An indication of whether the paper considers the real-life threat environment [22]. | Yes, no, unclear |

| Physical dimension | An indication of whether the paper considers the physical dimension of security. | Yes, no, unclear |

| Validation method | The validation method employed in the research [29]. | Hypothetical, empirical, simulation, theoretical |

| Validation method description | A description of the validation method. | Description text |
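The data extraction items name four weighted aggregation strategies without giving formulas. The Python sketch below shows one common formulation of each, for metric values `x_i` in [0, 1] with weights `w_i`. These formulas are an assumption made for illustration, not definitions taken from the table, and the function names are hypothetical. Bayesian networks are omitted because they require a model structure rather than a single formula.

```python
import math


def wlc(values, weights):
    """Weighted linear combination: sum of w_i * x_i."""
    return sum(w * x for x, w in zip(values, weights))


def wp(values, weights):
    """Weighted product: product of x_i ** w_i."""
    return math.prod(x ** w for x, w in zip(values, weights))


def wm(values, weights):
    """Weighted maximum: largest single w_i * x_i."""
    return max(w * x for x, w in zip(values, weights))


def wcp(values, weights):
    """Weighted complementary product: 1 - product of (1 - w_i * x_i)."""
    return 1 - math.prod(1 - w * x for x, w in zip(values, weights))


if __name__ == "__main__":
    scores = [0.5, 0.8]    # two illustrative metric values
    weights = [0.5, 0.5]   # illustrative equal weights
    for name, fn in [("WLC", wlc), ("WP", wp), ("WM", wm), ("WCP", wcp)]:
        print(name, round(fn(scores, weights), 4))
```

Under these formulations the strategies behave differently on the same inputs: the weighted maximum is driven by the single largest weighted value, while the weighted product drops to zero as soon as any positively weighted metric is zero.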

Appendix B. Detailed Results

| Research | Year | Assessment Concept | Classification | Social Viewpoint |
|---|---|---|---|---|
| Dantu and Kolan [83] | 2005 | Risk | Theoretical | Attacker |
| Depoy et al. [105] | 2005 | Risk | Theoretical | Attacker |
| Hasle et al. [106] | 2005 | Resilience | Theoretical | Defender |
| Villarrubia et al. [107] | 2006 | Maturity | Theoretical | Defender |
| Bhilare et al. [74] | 2008 | Security | Theoretical | Defender |
| Grunske and Joyce [108] | 2008 | Risk | Theoretical | Attacker |
| Sahinoglu [84] | 2008 | Risk | Theoretical | Defender |
| Dantu et al. [85] | 2009 | Risk | Theoretical | Attacker |
| Chen and Wang [87] | 2010 | Risk | Theoretical | Defender |
| Chan [88] | 2011 | Risk | Theoretical | Defender |
| Shin et al. [78] | 2011 | Vulnerability | Theoretical | Defender |
| Bojanc et al. [109] | 2012 | Risk | Theoretical | Defender |
| Lo and Chen [50] | 2012 | Risk | Theoretical | Defender |
| Rantos et al. [110] | 2012 | Awareness | Theoretical | Defender |
| Shameli-Sendi et al. [80] | 2012 | Risk | Theoretical | Defender |
| Bojanc and Jerman-Blažič [111] | 2013 | Risk | Theoretical | Defender |
| Marconato et al. [79] | 2013 | Risk | Theoretical | Defender |
| Taubenberger et al. [112] | 2013 | Risk | Implementation | Defender |
| Alencar Rigon et al. [102] | 2014 | Maturity | Theoretical | Defender |
| Boggs et al. [113] | 2014 | Resilience | Theoretical | Defender |
| Chen et al. [114] | 2014 | Risk | Theoretical | Defender |
| Cheng et al. [115] | 2014 | Awareness | Theoretical | Defender |
| Feng et al. [86] | 2014 | Risk | Theoretical | Defender |
| Manifavas et al. [77] | 2014 | Awareness | Implementation | Defender |
| Silva et al. [81] | 2014 | Risk | Theoretical | Defender |
| Suhartana et al. [116] | 2014 | Risk | Theoretical | Defender |
| Yadav and Dong [117] | 2014 | Risk | Theoretical | Defender |
| Dehghanimohammadabadi and Bamakan [118] | 2015 | Risk | Theoretical | Defender |
| Juliadotter and Choo [119] | 2015 | Risk | Theoretical | Both |
| Otero [120] | 2015 | Risk | Theoretical | Defender |
| Solic et al. [121] | 2015 | Risk | Theoretical | Defender |
| Sugiura et al. [122] | 2015 | Risk | Theoretical | Defender |
| Wei et al. [123] | 2015 | Risk | Theoretical | Defender |
| You et al. [75] | 2015 | Security | Theoretical | Defender |
| Brožová et al. [51] | 2016 | Risk | Theoretical | Defender |
| Brynielsson et al. [124] | 2016 | Awareness | Theoretical | Defender |
| Granåsen and Andersson [125] | 2016 | Resilience | Theoretical | Defender |
| Orojloo and Azgomi [126] | 2016 | Risk | Theoretical | Attacker |
| Aiba and Hiromatsu [127] | 2017 | Risk | Theoretical | Defender |
| Damenu and Beaumont [103] | 2017 | Risk | Implementation | Defender |
| Ramos et al. [41] | 2017 | Review | - | Defender |
| Rass et al. [52] | 2017 | Risk | Theoretical | Defender |
| Alohali et al. [128] | 2018 | Risk | Theoretical | Defender |
| Li et al. [82] | 2018 | Risk | Theoretical | Defender |
| Morrison et al. [43] | 2018 | Review | - | Both |
| Pramod and Bharathi [129] | 2018 | Risk | Theoretical | Defender |
| Proença and Borbinha [89] | 2018 | Maturity | Implementation | Defender |
| Rueda and Avila [130] | 2018 | Risk | Theoretical | Defender |
| Shokouhyar et al. [104] | 2018 | Risk | Theoretical | Defender |
| Stergiopoulos et al. [131] | 2018 | Risk | Theoretical | Defender |
| You et al. [132] | 2018 | Maturity | Theoretical | Defender |
| Akinsanya et al. [133] | 2019 | Maturity | Theoretical | Defender |
| Bharathi [134] | 2019 | Risk | Theoretical | Defender |
| Fertig et al. [135] | 2019 | Awareness | Theoretical | Defender |
| Husák et al. [31] | 2019 | Review | - | Defender |
| Salih et al. [136] | 2019 | Risk | Theoretical | Defender |
| Cadena et al. [34] | 2020 | Review | - | Defender |
| Wirtz and Heisel [137] | 2020 | Risk | Theoretical | Defender |
| Ganin et al. [138] | 2020 | Risk | Theoretical | Defender |
| Luh et al. [90] | 2020 | Risk | Theoretical | Both |

| Research | Application Area | ADKAR Elements | Aggregation Strategies | Real-Life Threat |
|---|---|---|---|---|
| Dantu and Kolan [83] | Other | DE, AB | BN | Yes |
| Depoy et al. [105] | Other | DE, AB | WP, WCP | Yes |
| Hasle et al. [106] | Enterprise (A) | AW | WLC | No |
| Villarrubia et al. [107] | Enterprise (A) | RE | WLC | No |
| Bhilare et al. [74] | Enterprise (M/L) | RE | None | Yes |
| Grunske and Joyce [108] | Other | DE, AB | WP, WM | Yes |
| Sahinoglu [84] | Other | RE | WLC, BN | Yes |
| Dantu et al. [85] | Other | DE, AB | BN | Yes |
| Chen and Wang [87] | Other | AW | WP | No |
| Chan [88] | Enterprise (M/L) | RE | WLC, WP | No |
| Shin et al. [78] | Other | AW, KN | WLC | No |
| Bojanc et al. [109] | Enterprise (A) | RE | WLC, WM | Yes |
| Lo and Chen [50] | Enterprise (M/L) | AW, RE | WLC | No |
| Rantos et al. [110] | Enterprise (A) | AW, DE, KN, AB, RE | WLC | Yes |
| Shameli-Sendi et al. [80] | Enterprise (A) | KN, AB | WC | No |
| Bojanc and Jerman-Blažič [111] | Enterprise (A) | AW, RE | WLC | Yes |
| Marconato et al. [79] | Other | AW, DE | None | No |
| Taubenberger et al. [112] | Enterprise (M/L) | AW, RE | None | No |
| Alencar Rigon et al. [102] | Enterprise (M/L) | RE | WLC | No |
| Boggs et al. [113] | Other | AW | WLC | Yes |
| Chen et al. [114] | Enterprise (M/L) | RE | WLC | No |
| Cheng et al. [115] | Other | AW | WLC, WP, WM | Yes |
| Feng et al. [86] | Enterprise (M/L) | AW, RE | BN | Yes |
| Manifavas et al. [77] | Enterprise (A) | AW, DE, KN, AB, RE | WLC | Yes |
| Silva et al. [81] | Other | RE | WLC, WP | No |
| Suhartana et al. [116] | Enterprise (A) | AB, RE | WLC | Yes |
| Yadav and Dong [117] | Other | AW, KN, AB, RE | None | Yes |
| Dehghanimohammadabadi and Bamakan [118] | Enterprise (M/L) | RE | WLC, WP | Yes |
| Juliadotter and Choo [119] | Other | AW, DE, KN, AB, RE | WLC | Yes |
| Otero [120] | Enterprise (M/L) | AW, AB, RE | None | Yes |
| Solic et al. [121] | Enterprise (A) | AW, KN, AB | WLC | No |
| Sugiura et al. [122] | Enterprise (A) | AW, RE | None | No |
| Wei et al. [123] | Other | AW, AB | WLC | No |
| You et al. [75] | Other | AW, RE | WLC | No |
| Brožová et al. [51] | Enterprise (A) | AW, KN, RE | WLC | No |
| Brynielsson et al. [124] | Other | AW, KN, AB | None | No |
| Granåsen and Andersson [125] | Other | AW, DE, AB, RE | WLC | No |
| Orojloo and Azgomi [126] | Other | KN, AB | WLC, WM, WP | Yes |
| Aiba and Hiromatsu [127] | Enterprise (A) | RE | WLC | Yes |
| Damenu and Beaumont [103] | Enterprise (M/L) | AW, DE, KN, AB, RE | None | No |
| Ramos et al. [41] | Other | RE | WLC | No |
| Rass et al. [52] | Other | RE | WLC | Yes |
| Alohali et al. [128] | Other | AW, KN, AB | WLC, WM | No |
| Li et al. [82] | Other | AW, RE | WP | No |
| Morrison et al. [43] | Other | DE, AB, RE | WLC | No |
| Pramod and Bharathi [129] | Enterprise (A) | AW, KN, RE | WLC, WM | No |
| Proença and Borbinha [89] | Enterprise (M/L) | RE | WM | No |
| Rueda and Avila [130] | Enterprise (M/L) | AW, KN, AB, RE | WLC, WP | No |
| Shokouhyar et al. [104] | Enterprise (M/L) | RE | WLC, WM | No |
| Stergiopoulos et al. [131] | Other | AW, KN, AB, RE | WLC, WP | Yes |
| You et al. [132] | Other | RE | WLC | No |
| Akinsanya et al. [133] | Other | RE | WLC | No |
| Bharathi [134] | Other | AW | WLC | Yes |
| Fertig et al. [135] | Other | AW, DE, KN | None | No |
| Husák et al. [31] | Other | AB | WLC | Yes |
| Salih et al. [136] | Other | AW, KN, RE | WLC | No |
| Cadena et al. [34] | Other | AW | None | No |
| Wirtz and Heisel [137] | Other | AW, KN, AB, RE | WLC, WM | No |
| Ganin et al. [138] | Enterprise (A) | AW, DE, KN, AB, RE | WLC | No |
| Luh et al. [90] | Other | AW, DE, AB, RE | WLC | Yes |
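Rows in this format can be tallied by application area to compare aggregation strategies between enterprise and other solutions. The sketch below parses a five-row excerpt copied from the table above; the function names and the enterprise/other grouping rule are hypothetical, and counts over the excerpt only illustrate the mechanics and are not results of the review.

```python
from collections import Counter

# Five rows copied verbatim from the table above (excerpt only).
ROWS = """\
| Dantu and Kolan [83] | Other | DE, AB | BN | Yes |
| Depoy et al. [105] | Other | DE, AB | WP, WCP | Yes |
| Hasle et al. [106] | Enterprise (A) | AW | WLC | No |
| Villarrubia et al. [107] | Enterprise (A) | RE | WLC | No |
| Bhilare et al. [74] | Enterprise (M/L) | RE | None | Yes |
"""


def parse_row(line):
    """Split one table row into its five cells."""
    cells = [c.strip() for c in line.strip().strip("|").split("|")]
    research, area, adkar, strategies, threat = cells
    return {
        "research": research,
        "area": area,
        "adkar": adkar.split(", "),
        # "None" in the table means no aggregation strategy was used.
        "strategies": [] if strategies == "None" else strategies.split(", "),
        "real_life_threat": threat == "Yes",
    }


def tally_strategies(rows_text):
    """Count aggregation strategies per group ('Enterprise' or 'Other')."""
    counts = {}
    for line in rows_text.splitlines():
        if not line.strip():
            continue
        row = parse_row(line)
        group = "Enterprise" if row["area"].startswith("Enterprise") else "Other"
        counts.setdefault(group, Counter()).update(row["strategies"])
    return counts


if __name__ == "__main__":
    for group, counter in tally_strategies(ROWS).items():
        print(group, dict(counter))
```

Grouping on the `Enterprise` prefix ignores the size suffix in parentheses; a finer comparison would keep that suffix as a separate field.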

References

- Bassett, G.; Hylender, C.D.; Langlois, P.; Pinto, A.; Widup, S. Data Breach Investigations Report; Technical Report; Verizon: New York, NY, USA, 2021.

- Bissell, K.; Lasalle, R.M. Cost of Cybercrime Study; Technical Report; Accenture and Ponemon Institute: Dublin, Ireland, 2019.

- Heidt, M.; Gerlach, J.P.; Buxmann, P. Investigating the Security Divide between SME and Large Companies: How SME Characteristics Influence Organizational IT Security Investments. Inf. Syst. Front. 2019, 21, 1285–1305.

- Ponemon Institute. Global State of Cybersecurity in Small and Medium-Sized Businesses; Technical Report, Keeper Security; Ponemon Institute: Traverse City, MI, USA, 2019.

- Malatji, M.; Von Solms, S.; Marnewick, A. Socio-Technical Systems Cybersecurity Framework. Inf. Comput. Secur. 2019, 27, 233–272.

- Carías, J.F.; Arrizabalaga, S.; Labaka, L.; Hernantes, J. Cyber Resilience Progression Model. Appl. Sci. 2020, 10, 7393.

- Davis, M.C.; Challenger, R.; Jayewardene, D.N.W.; Clegg, C.W. Advancing Socio-Technical Systems Thinking: A Call for Bravery. Appl. Ergon. 2014, 45, 171–180.

- Gratian, M.; Bandi, S.; Cukier, M.; Dykstra, J.; Ginther, A. Correlating Human Traits and Cyber Security Behavior Intentions. Comput. Secur. 2018, 73, 345–358.

- Shojaifar, A.; Fricker, S.A.; Gwerder, M. Automating the Communication of Cybersecurity Knowledge: Multi-Case Study. In Proceedings of the IFIP Advances in Information and Communication Technology, Maribor, Slovenia, 21–23 September 2020; Drevin, L., Von Solms, S., Theocharidou, M., Eds.; Springer International Publishing: Cham, Switzerland, 2020; pp. 110–124.

- Benz, M.; Chatterjee, D. Calculated Risk? A Cybersecurity Evaluation Tool for SMEs. Bus. Horizons 2020, 63, 531–540.

- Jaquith, A. Security Metrics: Replacing Fear, Uncertainty, and Doubt; Pearson Education: London, UK, 2007.

- Slayton, R. Measuring Risk: Computer Security Metrics, Automation, and Learning. IEEE Ann. Hist. Comput. 2015, 37, 32–45.

- Yigit Ozkan, B.; Spruit, M.; Wondolleck, R.; Burriel Coll, V. Modelling Adaptive Information Security for SMEs in a Cluster. J. Intellect. Cap. 2019, 21, 235–256.

- European DIGITAL SME Alliance. The EU Cybersecurity Act and the Role of Standards for SMEs—Position Paper; Technical Report; European DIGITAL SME Alliance: Brussels, Belgium, 2020.

- Cholez, H.; Girard, F. Maturity Assessment and Process Improvement for Information Security Management in Small and Medium Enterprises. J. Softw. Evol. Process 2014, 26, 496–503.

- Mijnhardt, F.; Baars, T.; Spruit, M. Organizational Characteristics Influencing SME Information Security Maturity. J. Comput. Inf. Syst. 2016, 56, 106–115.

- Pendleton, M.; Garcia-Lebron, R.; Cho, J.H.; Xu, S. A Survey on Systems Security Metrics. ACM Comput. Surv. 2016, 49, 62:1–62:35.

- Cho, J.H.; Xu, S.; Hurley, P.M.; Mackay, M.; Benjamin, T.; Beaumont, M. STRAM: Measuring the Trustworthiness of Computer-Based Systems. ACM Comput. Surv. 2019, 51, 1–47.

- Refsdal, A.; Solhaug, B.; Stolen, K. Cyber-Risk Management; SpringerBriefs in Computer Science; Springer International Publishing: Cham, Switzerland, 2015.

- Böhme, R.; Freiling, F.C. On Metrics and Measurements. In Dependability Metrics: Advanced Lectures; Eusgeld, I., Freiling, F.C., Reussner, R., Eds.; Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2008; pp. 7–13.

- Martens, M.; De Wolf, R.; De Marez, L. Investigating and Comparing the Predictors of the Intention towards Taking Security Measures against Malware, Scams and Cybercrime in General. Comput. Hum. Behav. 2019, 92, 139–150.

- Gollmann, D.; Herley, C.; Koenig, V.; Pieters, W.; Sasse, M.A. Socio-Technical Security Metrics. Dagstuhl Rep. 2015, 2015.

- Selbst, A.D.; Boyd, D.; Friedler, S.A.; Venkatasubramanian, S.; Vertesi, J. Fairness and Abstraction in Sociotechnical Systems. In Proceedings of the Conference on Fairness, Accountability, and Transparency, Atlanta, GA, USA, 29–31 January 2019; Association for Computing Machinery: New York, NY, USA, 2019; pp. 59–68.

- Padayachee, K. Taxonomy of Compliant Information Security Behavior. Comput. Secur. 2012, 31, 673–680.

- Menard, P.; Bott, G.J.; Crossler, R.E. User Motivations in Protecting Information Security: Protection Motivation Theory Versus Self-Determination Theory. J. Manag. Inf. Syst. 2017, 34, 1203–1230.

- Herath, T.; Rao, H.R. Encouraging Information Security Behaviors in Organizations: Role of Penalties, Pressures and Perceived Effectiveness. Decis. Support Syst. 2009, 47, 154–165.

- Hiatt, J. ADKAR: A Model for Change in Business, Government, and Our Community; Prosci: Fort Collins, CO, USA, 2006.

- Da Veiga, A. An Approach to Information Security Culture Change Combining ADKAR and the ISCA Questionnaire to Aid Transition to the Desired Culture. Inf. Comput. Secur. 2018, 26, 584–612.

- Verendel, V. Quantified Security Is a Weak Hypothesis: A Critical Survey of Results and Assumptions. In Proceedings of the 2009 Workshop on New Security Paradigms Workshop, Oxford, UK, 8–11 September 2009; Association for Computing Machinery: Oxford, UK, 2009; pp. 37–50.

- Rudolph, M.; Schwarz, R. A Critical Survey of Security Indicator Approaches. In Proceedings of the 2012 Seventh International Conference on Availability, Reliability and Security, Prague, Czech Republic, 20–24 August 2012; pp. 291–300.

- Husák, M.; Komárková, J.; Bou-Harb, E.; Čeleda, P. Survey of Attack Projection, Prediction, and Forecasting in Cyber Security. IEEE Commun. Surv. Tutor. 2019, 21, 640–660.

- Iannacone, M.D.; Bridges, R.A. Quantifiable & Comparable Evaluations of Cyber Defensive Capabilities: A Survey & Novel, Unified Approach. Comput. Secur. 2020, 96, 101907.

- Kordy, B.; Piètre-Cambacédès, L.; Schweitzer, P. DAG-Based Attack and Defense Modeling: Don’t Miss the Forest for the Attack Trees. Comput. Sci. Rev. 2014, 13–14, 1–38.

- Cadena, A.; Gualoto, F.; Fuertes, W.; Tello-Oquendo, L.; Andrade, R.; Tapia, F.; Torres, J. Metrics and Indicators of Information Security Incident Management: A Systematic Mapping Study. In Developments and Advances in Defense and Security; Rocha, Á., Pereira, R.P., Eds.; Springer: Singapore, 2020; pp. 507–519.

- Knowles, W.; Prince, D.; Hutchison, D.; Disso, J.F.P.; Jones, K. A Survey of Cyber Security Management in Industrial Control Systems. Int. J. Crit. Infrastruct. Prot. 2015, 9, 52–80.

- Asghar, M.R.; Hu, Q.; Zeadally, S. Cybersecurity in Industrial Control Systems: Issues, Technologies, and Challenges. Comput. Netw. 2019, 165, 106946.

- Eckhart, M.; Brenner, B.; Ekelhart, A.; Weippl, E. Quantitative Security Risk Assessment for Industrial Control Systems: Research Opportunities and Challenges. J. Internet Serv. Inf. Secur. 2019.

- Jing, X.; Yan, Z.; Pedrycz, W. Security Data Collection and Data Analytics in the Internet: A Survey. IEEE Commun. Surv. Tutor. 2019, 21, 586–618.

- Sengupta, S.; Chowdhary, A.; Sabur, A.; Alshamrani, A.; Huang, D.; Kambhampati, S. A Survey of Moving Target Defenses for Network Security. IEEE Commun. Surv. Tutor. 2020.

- Liang, X.; Xiao, Y. Game Theory for Network Security. IEEE Commun. Surv. Tutor. 2013, 15, 472–486.

- Ramos, A.; Lazar, M.; Filho, R.H.; Rodrigues, J.J.P.C. Model-Based Quantitative Network Security Metrics: A Survey. IEEE Commun. Surv. Tutor. 2017, 19, 2704–2734.

- Cherdantseva, Y.; Burnap, P.; Blyth, A.; Eden, P.; Jones, K.; Soulsby, H.; Stoddart, K. A Review of Cyber Security Risk Assessment Methods for SCADA Systems. Comput. Secur. 2016, 56, 1–27.

- Morrison, P.; Moye, D.; Pandita, R.; Williams, L. Mapping the Field of Software Life Cycle Security Metrics. Inf. Softw. Technol. 2018, 102, 146–159.

- He, W.; Li, H.; Li, J. Unknown Vulnerability Risk Assessment Based on Directed Graph Models: A Survey. IEEE Access 2019, 7, 168201–168225.

- Xie, H.; Yan, Z.; Yao, Z.; Atiquzzaman, M. Data Collection for Security Measurement in Wireless Sensor Networks: A Survey. IEEE Internet Things J. 2019, 6, 2205–2224.

- Ray, J.; Marshall, H.; De Sousa, V.; Jean, J.; Warren, S.; Bachand, S. Cyber Threatscape Report. 2020. Available online: https://www.accenture.com/us-en/insights/security/cyber-threatscape-report (accessed on 16 November 2020).

- Riordan, J.; Lippmann, R.P. Threat-Based Risk Assessment for Enterprise Networks. Linc. Lab. J. 2016, 22, 33–45.

- Ferguson, N.; Schneier, B. Practical Cryptography; Wiley: Hoboken, NJ, USA, 2003.

- Lippmann, R.P.; Riordan, J.F.; Yu, T.; Watson, K.K. Continuous Security Metrics for Prevalent Network Threats: Introduction and First Four Metrics; Technical Report; Massachusetts Institute of Technology Lexington Lincoln Lab: Lexington, MA, USA, 2012.

- Lo, C.C.; Chen, W.J. A Hybrid Information Security Risk Assessment Procedure Considering Interdependences between Controls. Expert Syst. Appl. 2012, 39, 247–257.

- Brožová, H.; Šup, L.; Rydval, J.; Sadok, M.; Bednar, P. Information Security Management: ANP Based Approach for Risk Analysis and Decision Making. AGRIS On-Line Pap. Econ. Inform. 2016, 8, 1–11.

- Rass, S.; Alshawish, A.; Abid, M.A.; Schauer, S.; Zhu, Q.; Meer, H.D. Physical Intrusion Games—Optimizing Surveillance by Simulation and Game Theory. IEEE Access 2017, 5, 8394–8407.

- Evesti, A.; Ovaska, E. Comparison of Adaptive Information Security Approaches. ISRN Artif. Intell. 2013, 2013.

- Baars, T.; Mijnhardt, F.; Vlaanderen, K.; Spruit, M. An Analytics Approach to Adaptive Maturity Models Using Organizational Characteristics. Decis. Anal. 2016, 3, 5.

- de las Cuevas, P.; Mora, A.M.; Merelo, J.J.; Castillo, P.A.; García-Sánchez, P.; Fernández-Ares, A. Corporate Security Solutions for BYOD: A Novel User-Centric and Self-Adaptive System. Comput. Commun. 2015, 68, 83–95.

- Kim, W.; Choi, B.J.; Hong, E.K.; Kim, S.K.; Lee, D. A Taxonomy of Dirty Data. Data Min. Knowl. Discov. 2003, 7, 81–99.

- Widmer, G.; Kubat, M. Learning in the Presence of Concept Drift and Hidden Contexts. Mach. Learn. 1996, 23, 69–101.

- van Haastrecht, M.; Sarhan, I.; Yigit Ozkan, B.; Brinkhuis, M.; Spruit, M. SYMBALS: A Systematic Review Methodology Blending Active Learning and Snowballing. Front. Res. Metrics Anal. 2021, 6.

- Kitchenham, B.; Charters, S. Guidelines for Performing Systematic Literature Reviews in Software Engineering; Technical Report; Keele University and Durham University Joint Report: Keele, UK, 2007.

- Liberati, A.; Altman, D.G.; Tetzlaff, J.; Mulrow, C.; Gøtzsche, P.C.; Ioannidis, J.P.A.; Clarke, M.; Devereaux, P.J.; Kleijnen, J.; Moher, D. The PRISMA Statement for Reporting Systematic Reviews and Meta-Analyses of Studies That Evaluate Health Care Interventions: Explanation and Elaboration. J. Clin. Epidemiol. 2009, 62, e1–e34.

- Moher, D.; Shamseer, L.; Clarke, M.; Ghersi, D.; Liberati, A.; Petticrew, M.; Shekelle, P.; Stewart, L.A.; PRISMA-P Group. Preferred Reporting Items for Systematic Review and Meta-Analysis Protocols (PRISMA-P) 2015 Statement. Syst. Rev. 2015, 4, 1.

- van de Schoot, R.; de Bruin, J.; Schram, R.; Zahedi, P.; de Boer, J.; Weijdema, F.; Kramer, B.; Huijts, M.; Hoogerwerf, M.; Ferdinands, G.; et al. An Open Source Machine Learning Framework for Efficient and Transparent Systematic Reviews. Nat. Mach. Intell. 2021, 3, 125–133.

- Wohlin, C. Guidelines for Snowballing in Systematic Literature Studies and a Replication in Software Engineering. In Proceedings of the 18th International Conference on Evaluation and Assessment in Software Engineering, London, UK, 13–14 May 2014; Association for Computing Machinery: London, UK, 2014; pp. 1–10.

- Mourão, E.; Pimentel, J.F.; Murta, L.; Kalinowski, M.; Mendes, E.; Wohlin, C. On the Performance of Hybrid Search Strategies for Systematic Literature Reviews in Software Engineering. Inf. Softw. Technol. 2020, 123, 106294.

- Rose, M.E.; Kitchin, J.R. Pybliometrics: Scriptable Bibliometrics Using a Python Interface to Scopus. SoftwareX 2019, 10, 100263.

- Harrison, H.; Griffin, S.J.; Kuhn, I.; Usher-Smith, J.A. Software Tools to Support Title and Abstract Screening for Systematic Reviews in Healthcare: An Evaluation. BMC Med Res. Methodol. 2020, 20, 7.

- Brereton, P.; Kitchenham, B.A.; Budgen, D.; Turner, M.; Khalil, M. Lessons from Applying the Systematic Literature Review Process within the Software Engineering Domain. J. Syst. Softw. 2007, 80, 571–583.

- Stolfo, S.; Bellovin, S.M.; Evans, D. Measuring Security. IEEE Secur. Priv. 2011, 9, 60–65.

- Noel, S.; Jajodia, S. Metrics Suite for Network Attack Graph Analytics. In Proceedings of the 9th Annual Cyber and Information Security Research Conference, Oak Ridge, TN, USA, 8–10 April 2014; Association for Computing Machinery: New York, NY, USA, 2014; pp. 5–8.

- Spruit, M.; Roeling, M. ISFAM: The Information Security Focus Area Maturity Model. In Proceedings of the ECIS 2014, Atlanta, GA, USA, 9–11 June 2014.

- Allodi, L.; Massacci, F. Security Events and Vulnerability Data for Cybersecurity Risk Estimation. Risk Anal. 2017, 37, 1606–1627.

- Cormack, G.V.; Grossman, M.R. Engineering Quality and Reliability in Technology-Assisted Review. In Proceedings of the 39th International ACM SIGIR Conference on Research and Development in Information Retrieval, Pisa, Italy, 17–21 July 2016; Association for Computing Machinery: New York, NY, USA, 2016; pp. 75–84.

- Zhou, Y.; Zhang, H.; Huang, X.; Yang, S.; Babar, M.A.; Tang, H. Quality Assessment of Systematic Reviews in Software Engineering: A Tertiary Study. In Proceedings of the 19th International Conference on Evaluation and Assessment in Software Engineering, Nanjing, China, 27–29 April 2015; Association for Computing Machinery: New York, NY, USA, 2015; pp. 1–14.

- Bhilare, D.S.; Ramani, A.; Tanwani, S. Information Security Assessment and Reporting: Distributed Defense. J. Comput. Sci. 2008, 4, 864–872.

- You, Y.; Oh, S.; Lee, K. Advanced Security Assessment for Control Effectiveness. In Information Security Applications; Lecture Notes in Computer Science; Rhee, K.H., Yi, J.H., Eds.; Springer International Publishing: Cham, Switzerland, 2015; pp. 383–393.

- Yang, N.; Singh, T.; Johnston, A. A Replication Study of User Motivation in Protecting Information Security Using Protection Motivation Theory and Self Determination Theory. AIS Trans. Replication Res. 2020, 6.

- Manifavas, C.; Fysarakis, K.; Rantos, K.; Hatzivasilis, G. DSAPE—Dynamic Security Awareness Program Evaluation. In Human Aspects of Information Security, Privacy, and Trust; Lecture Notes in Computer Science; Tryfonas, T., Askoxylakis, I., Eds.; Springer International Publishing: Cham, Switzerland, 2014; pp. 258–269.

- Shin, Y.; Meneely, A.; Williams, L.; Osborne, J.A. Evaluating Complexity, Code Churn, and Developer Activity Metrics as Indicators of Software Vulnerabilities. IEEE Trans. Softw. Eng. 2011, 37, 772–787.

- Marconato, G.V.; Kaâniche, M.; Nicomette, V. A Vulnerability Life Cycle-Based Security Modeling and Evaluation Approach. Comput. J. 2013, 56, 422–439.

- Shameli-Sendi, A.; Shajari, M.; Hassanabadi, M.; Jabbarifar, M.; Dagenais, M. Fuzzy Multi-Criteria Decision-Making for Information Security Risk Assessment. Open Cybern. Syst. J. 2012, 6, 26–37.

- Silva, M.M.; de Gusmão, A.P.H.; Poleto, T.; e Silva, L.C.; Costa, A.P.C.S. A Multidimensional Approach to Information Security Risk Management Using FMEA and Fuzzy Theory. Int. J. Inf. Manag. 2014, 34, 733–740.

- Li, X.; Li, H.; Sun, B.; Wang, F. Assessing Information Security Risk for an Evolving Smart City Based on Fuzzy and Grey FMEA. J. Intell. Fuzzy Syst. 2018, 34, 2491–2501.

- Dantu, R.; Kolan, P. Risk Management Using Behavior Based Bayesian Networks. In Intelligence and Security Informatics; Lecture Notes in Computer Science; Kantor, P., Muresan, G., Roberts, F., Zeng, D.D., Wang, F.Y., Chen, H., Merkle, R.C., Eds.; Springer: Berlin/Heidelberg, Germany, 2005; pp. 115–126.

- Sahinoglu, M. An Input–Output Measurable Design for the Security Meter Model to Quantify and Manage Software Security Risk. IEEE Trans. Instrum. Meas. 2008, 57, 1251–1260.

- Dantu, R.; Kolan, P.; Cangussu, J. Network Risk Management Using Attacker Profiling. Secur. Commun. Netw. 2009, 2, 83–96.

- Feng, N.; Wang, H.J.; Li, M. A Security Risk Analysis Model for Information Systems: Causal Relationships of Risk Factors and Vulnerability Propagation Analysis. Inf. Sci. 2014, 256, 57–73.

- Chen, M.K.; Wang, S.C. A Hybrid Delphi-Bayesian Method to Establish Business Data Integrity Policy: A Benchmark Data Center Case Study. Kybernetes 2010, 39, 800–824.

- Chan, C.L. Information Security Risk Modeling Using Bayesian Index. Comput. J. 2011, 54, 628–638.

- Proença, D.; Borbinha, J. Information Security Management Systems—A Maturity Model Based on ISO/IEC 27001. In Business Information Systems; Lecture Notes in Business Information Processing; Abramowicz, W., Paschke, A., Eds.; Springer International Publishing: Cham, Switzerland, 2018; pp. 102–114.

- Luh, R.; Temper, M.; Tjoa, S.; Schrittwieser, S.; Janicke, H. PenQuest: A Gamified Attacker/Defender Meta Model for Cyber Security Assessment and Education. J. Comput. Virol. Hacking Tech. 2020, 16, 19–61.

- Malatji, M.; Marnewick, A.; von Solms, S. Validation of a Socio-Technical Management Process for Optimising Cybersecurity Practices. Comput. Secur. 2020, 95, 101846.

- Carías, J.F.; Borges, M.R.S.; Labaka, L.; Arrizabalaga, S.; Hernantes, J. Systematic Approach to Cyber Resilience Operationalization in SMEs. IEEE Access 2020, 8, 174200–174221.

- AlHogail, A. Design and Validation of Information Security Culture Framework. Comput. Hum. Behav. 2015, 49, 567–575.

- da Veiga, A.; Astakhova, L.V.; Botha, A.; Herselman, M. Defining Organisational Information Security Culture—Perspectives from Academia and Industry. Comput. Secur. 2020, 92, 101713.

- Sittig, D.F.; Singh, H. A Socio-Technical Approach to Preventing, Mitigating, and Recovering from Ransomware Attacks. Appl. Clin. Inform. 2016, 7, 624–632.

- Yigit Ozkan, B.; Spruit, M. Addressing SME Characteristics for Designing Information Security Maturity Models. In Proceedings of the IFIP Advances in Information and Communication Technology, Mytilene, Greece, 8–10 July 2020; Clarke, N., Furnell, S., Eds.; Springer International Publishing: Cham, Switzerland, 2020; pp. 161–174. [Google Scholar]

- Kam, H.J.; Menard, P.; Ormond, D.; Crossler, R.E. Cultivating Cybersecurity Learning: An Integration of Self-Determination and Flow. Comput. Secur. 2020, 96, 101875. [Google Scholar] [CrossRef]

- Zimmermann, V.; Renaud, K. Moving from a “human-as-Problem” to a “human-as-Solution” Cybersecurity Mindset. Int. J. Hum. Comput. Stud. 2019, 131, 169–187. [Google Scholar] [CrossRef]

- Liu, Y.; Sarabi, A.; Zhang, J.; Naghizadeh, P.; Karir, M.; Bailey, M.; Liu, M. Cloudy with a Chance of Breach: Forecasting Cyber Security Incidents. In Proceedings of the 24th USENIX Security Symposium (USENIX Security 15), Washington, DC, USA, 12–14 August 2015; pp. 1009–1024. [Google Scholar]

- Casola, V.; De Benedictis, A.; Rak, M.; Villano, U. Toward the Automation of Threat Modeling and Risk Assessment in IoT Systems. Internet Things 2019, 7, 100056. [Google Scholar] [CrossRef]

- Manadhata, P.K.; Wing, J.M. An Attack Surface Metric. IEEE Trans. Softw. Eng. 2011, 37, 371–386. [Google Scholar] [CrossRef]

- Alencar Rigon, E.; Merkle Westphall, C.; Ricardo dos Santos, D.; Becker Westphall, C. A Cyclical Evaluation Model of Information Security Maturity. Inf. Manag. Comput. Secur. 2014, 22, 265–278. [Google Scholar] [CrossRef]

- Damenu, T.K.; Beaumont, C. Analysing Information Security in a Bank Using Soft Systems Methodology. Inf. Comput. Secur. 2017, 25, 240–258. [Google Scholar] [CrossRef]

- Shokouhyar, S.; Panahifar, F.; Karimisefat, A.; Nezafatbakhsh, M. An Information System Risk Assessment Model: A Case Study in Online Banking System. Int. J. Electron. Secur. Digit. Forensics 2018, 10, 39–60. [Google Scholar] [CrossRef]

- Depoy, J.; Phelan, J.; Sholander, P.; Smith, B.; Varnado, G.B.; Wyss, G. Risk Assessment for Physical and Cyber Attacks on Critical Infrastructures. In Proceedings of the MILCOM 2005—2005 IEEE Military Communications Conference, Atlantic City, NJ, USA, 17–20 October 2005; Volume 3, pp. 1961–1969. [Google Scholar] [CrossRef]

- Hasle, H.; Kristiansen, Y.; Kintel, K.; Snekkenes, E. Measuring Resistance to Social Engineering. In Information Security Practice and Experience; Lecture Notes in Computer Science; Deng, R.H., Bao, F., Pang, H., Zhou, J., Eds.; Springer: Berlin/Heidelberg, Germany, 2005; pp. 132–143. [Google Scholar]

- Villarrubia, C.; Fernández-Medina, E.; Piattini, M. Metrics of Password Management Policy. In Proceedings of the Computational Science and Its Applications—ICCSA 2006, Glasgow, UK, 8–11 May 2006; Lecture Notes in Computer Science. Gavrilova, M., Gervasi, O., Kumar, V., Tan, C.J.K., Taniar, D., Laganá, A., Mun, Y., Choo, H., Eds.; Springer: Berlin/Heidelberg, Germany, 2006; pp. 1013–1023. [Google Scholar] [CrossRef]

- Grunske, L.; Joyce, D. Quantitative Risk-Based Security Prediction for Component-Based Systems with Explicitly Modeled Attack Profiles. J. Syst. Softw. 2008, 81, 1327–1345. [Google Scholar] [CrossRef]

- Bojanc, R.; Jerman-Blažič, B.; Tekavčič, M. Managing the Investment in Information Security Technology by Use of a Quantitative Modeling. Inf. Process. Manag. 2012, 48, 1031–1052. [Google Scholar] [CrossRef]

- Rantos, K.; Fysarakis, K.; Manifavas, C. How Effective Is Your Security Awareness Program? An Evaluation Methodology. Inf. Secur. J. Glob. Perspect. 2012, 21, 328–345. [Google Scholar] [CrossRef]

- Bojanc, R.; Jerman-Blažič, B. A Quantitative Model for Information-Security Risk Management. Eng. Manag. J. 2013, 25, 25–37. [Google Scholar] [CrossRef]

- Taubenberger, S.; Jürjens, J.; Yu, Y.; Nuseibeh, B. Resolving Vulnerability Identification Errors Using Security Requirements on Business Process Models. Inf. Manag. Comput. Secur. 2013, 21, 202–223. [Google Scholar] [CrossRef]

- Boggs, N.; Du, S.; Stolfo, S.J. Measuring Drive-by Download Defense in Depth. In Proceedings of the International Workshop on Recent Advances in Intrusion Detection, Gothenburg, Sweden, 17–19 September 2014; Lecture Notes in Computer Science. Stavrou, A., Bos, H., Portokalidis, G., Eds.; Springer International Publishing: Cham, Switzerland, 2014; pp. 172–191. [Google Scholar] [CrossRef]

- Chen, J.; Pedrycz, W.; Ma, L.; Wang, C. A New Information Security Risk Analysis Method Based on Membership Degree. Kybernetes 2014, 43, 686–698. [Google Scholar] [CrossRef]

- Cheng, Y.; Deng, J.; Li, J.; DeLoach, S.A.; Singhal, A.; Ou, X. Metrics of Security. In Cyber Defense and Situational Awareness; Kott, A., Wang, C., Erbacher, R.F., Eds.; Advances in Information Security; Springer International Publishing: Cham, Switzerland, 2014; pp. 263–295. [Google Scholar]

- Suhartana, M.; Pardamean, B.; Soewito, B. Modeling of Risk Factors in Determining Network Security Level. J. Secur. Appl. 2014. [Google Scholar] [CrossRef]

- Yadav, S.; Dong, T. A Comprehensive Method to Assess Work System Security Risk. Commun. Assoc. Inf. Syst. 2014, 34. [Google Scholar] [CrossRef]

- Dehghanimohammadabadi, M.; Bamakan, S.M.H. A Weighted Monte Carlo Simulation Approach to Risk Assessment of Information Security Management System. Int. J. Enterp. Inf. Syst. 2015, 11, 63–78. [Google Scholar]

- Juliadotter, N.V.; Choo, K.K.R. CATRA: Conceptual Cloud Attack Taxonomy and Risk Assessment Framework. In The Cloud Security Ecosystem; Syngress: Rockland, ME, USA, 2015. [Google Scholar] [CrossRef]

- Otero, A.R. An Information Security Control Assessment Methodology for Organizations’ Financial Information. Int. J. Account. Inf. Syst. 2015, 18, 26–45. [Google Scholar] [CrossRef]

- Solic, K.; Ocevcic, H.; Golub, M. The Information Systems’ Security Level Assessment Model Based on an Ontology and Evidential Reasoning Approach. Comput. Secur. 2015, 55, 100–112. [Google Scholar] [CrossRef]

- Sugiura, M.; Suwa, H.; Ohta, T. Improving IT Security Through Security Measures: Using Our Game-Theory-Based Model of IT Security Implementation. In Proceedings of the International Conference on Human-Computer Interaction: Design and Evaluation, Los Angeles, CA, USA, 2–7 August 2015; Lecture Notes in Computer Science. Kurosu, M., Ed.; Springer International Publishing: Cham, Switzerland, 2015; pp. 82–95. [Google Scholar] [CrossRef]

- Wei, L.; Yong-feng, C.; Ya, L. Information Systems Security Assessment Based on System Dynamics. J. Secur. Appl. 2015. [Google Scholar] [CrossRef]

- Brynielsson, J.; Franke, U.; Varga, S. Cyber Situational Awareness Testing. In Combatting Cybercrime and Cyberterrorism: Challenges, Trends and Priorities; Akhgar, B., Brewster, B., Eds.; Advanced Sciences and Technologies for Security Applications; Springer International Publishing: Cham, Switzerland, 2016; pp. 209–233. [Google Scholar]

- Granåsen, M.; Andersson, D. Measuring Team Effectiveness in Cyber-Defense Exercises: A Cross-Disciplinary Case Study. Cogn. Technol. Work 2016, 18, 121–143. [Google Scholar] [CrossRef]

- Orojloo, H.; Azgomi, M.A. Predicting the Behavior of Attackers and the Consequences of Attacks against Cyber-Physical Systems. Secur. Commun. Netw. 2016, 9, 6111–6136. [Google Scholar] [CrossRef]

- Aiba, R.; Hiromatsu, T. Improvement of Verification of a Model Supporting Decision-Making on Information Security Risk Treatment by Using Statistical Data. J. Disaster Res. 2017, 12, 1060–1072. [Google Scholar] [CrossRef]

- Alohali, M.; Clarke, N.; Furnell, S. The Design and Evaluation of a User-Centric Information Security Risk Assessment and Response Framework. Int. J. Adv. Comput. Sci. Appl. 2018. [Google Scholar] [CrossRef]

- Pramod, D.; Bharathi, S.V. Developing an Information Security Risk Taxonomy and an Assessment Model Using Fuzzy Petri Nets. J. Cases Inf. Technol. 2018, 20. [Google Scholar] [CrossRef]

- Rueda, S.; Avila, O. Automating Information Security Risk Assessment for IT Services. In Proceedings of the International Conference on Applied Informatics, Bogota, Colombia, 1–3 November 2018; Communications in Computer and Information Science. Florez, H., Diaz, C., Chavarriaga, J., Eds.; Springer International Publishing: Cham, Switzerland, 2018; pp. 183–197. [Google Scholar] [CrossRef]

- Stergiopoulos, G.; Gritzalis, D.; Kouktzoglou, V. Using Formal Distributions for Threat Likelihood Estimation in Cloud-Enabled IT Risk Assessment. Comput. Netw. 2018, 134, 23–45. [Google Scholar] [CrossRef]

- You, Y.; Oh, J.; Kim, S.; Lee, K. Advanced Approach to Information Security Management System Utilizing Maturity Models in Critical Infrastructure. KSII Trans. Internet Inf. Syst. 2018, 12, 4995–5014. [Google Scholar]

- Akinsanya, O.O.; Papadaki, M.; Sun, L. Towards a Maturity Model for Health-Care Cloud Security (M2HCS). Inf. Comput. Secur. 2019, 28, 321–345. [Google Scholar] [CrossRef]

- Bharathi, S.V. Forewarned Is Forearmed: Assessment of IoT Information Security Risks Using Analytic Hierarchy Process. Benchmarking Int. J. 2019, 26, 2443–2467. [Google Scholar] [CrossRef]

- Fertig, T.; Schütz, A.E.; Weber, K.; Müller, N.H. Measuring the Impact of E-Learning Platforms on Information Security Awareness. In Proceedings of the International Conference on Learning and Collaboration Technologies, Designing Learning Experiences, Orlando, FL, USA, 26–31 July 2019; Lecture Notes in Computer Science. Zaphiris, P., Ioannou, A., Eds.; Springer International Publishing: Cham, Switzerland, 2019; pp. 26–37. [Google Scholar] [CrossRef]

- Salih, F.I.; Bakar, N.A.A.; Hassan, N.H.; Yahya, F.; Kama, N.; Shah, J. IOT Security Risk Management Model for Healthcare Industry. Malays. J. Comput. Sci. 2019, 131–144. [Google Scholar] [CrossRef]

- Wirtz, R.; Heisel, M. Model-Based Risk Analysis and Evaluation Using CORAS and CVSS. In Proceedings of the International Conference on Evaluation of Novel Approaches to Software Engineering, Prague, Czech Republic, 5–6 May 2020; Communications in Computer and Information Science. Damiani, E., Spanoudakis, G., Maciaszek, L.A., Eds.; Springer International Publishing: Cham, Switzerland, 2020; pp. 108–134. [Google Scholar] [CrossRef]

- Ganin, A.A.; Quach, P.; Panwar, M.; Collier, Z.A.; Keisler, J.M.; Marchese, D.; Linkov, I. Multicriteria Decision Framework for Cybersecurity Risk Assessment and Management. Risk Anal. 2020, 40, 183–199. [Google Scholar] [CrossRef]

| Research | Year | Focus Area | Social Factors Evaluated |
|---|---|---|---|
| Current paper | 2021 | Generic | ✓ |
| Verendel [29] | 2009 | Generic | × |
| Rudolph and Schwarz [30] | 2012 | Generic | × |
| Pendleton et al. [17] | 2016 | Generic | ✓ |
| Cho et al. [18] | 2019 | Generic | ✓ |
| Husák et al. [31] | 2019 | Attack Prediction | ✓ |
| Iannacone and Bridges [32] | 2020 | Cyber Defense | × |
| Kordy et al. [33] | 2014 | Directed Acyclic Graphs | × |
| Cadena et al. [34] | 2020 | Incident Management | ✓ |
| Knowles et al. [35] | 2015 | Industrial Control Systems | ✓ |
| Asghar et al. [36] | 2019 | Industrial Control Systems | ✓ |
| Eckhart et al. [37] | 2019 | Industrial Control Systems | × |
| Jing et al. [38] | 2019 | Internet Security | × |
| Sengupta et al. [39] | 2020 | Moving Target Defense | × |
| Liang and Xiao [40] | 2013 | Network Security | × |
| Ramos et al. [41] | 2017 | Network Security | ✓ |
| Cherdantseva et al. [42] | 2016 | SCADA Systems | ✓ |
| Morrison et al. [43] | 2018 | Software Security | × |
| He et al. [44] | 2019 | Unknown Vulnerabilities | × |
| Xie et al. [45] | 2019 | Wireless Networks | × |

| Aggregation | Injective | Idempotent | Prioritisation | Dependence | Weakest link |
|---|---|---|---|---|---|
| Weighted linear combination | ✓ | ✓ | ✓ | × | × |
| Weighted product | ✓ | × | ✓ | × | × |
| Weighted maximum | × | ✓ | ✓ * | × | ✓ * |
| Weighted complementary product | ✓ | × | ✓ * | × | ✓ * |
| Bayesian network | ✓ | × | ✓ | ✓ | × |
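
The first four aggregation strategies in the table above can be illustrated with a short sketch. The formulas used here are common textbook forms and are an assumption on our part; they are not necessarily the exact definitions used in the reviewed papers. The function names, the example scores, and the example weights are all hypothetical. Bayesian networks are omitted because they require a full dependency model rather than a single formula.

```python
from math import prod


def weighted_linear_combination(scores, weights):
    """Assumed form: sum of w_i * x_i."""
    return sum(w * x for x, w in zip(scores, weights))


def weighted_product(scores, weights):
    """Assumed form: product of x_i raised to the power w_i."""
    return prod(x ** w for x, w in zip(scores, weights))


def weighted_maximum(scores, weights):
    """Assumed form: largest single weighted score, max of w_i * x_i."""
    return max(w * x for x, w in zip(scores, weights))


def weighted_complementary_product(scores, weights):
    """Assumed form: 1 minus the product of (1 - w_i * x_i)."""
    return 1 - prod(1 - w * x for x, w in zip(scores, weights))


# Hypothetical metric scores in [0, 1] and weights that sum to 1.
scores = [0.2, 0.9, 0.4]
weights = [0.5, 0.3, 0.2]

for aggregate in (
    weighted_linear_combination,
    weighted_product,
    weighted_maximum,
    weighted_complementary_product,
):
    print(f"{aggregate.__name__}: {aggregate(scores, weights):.4f}")
```

In this sketch the weighted maximum depends only on the largest weighted score, which is one way to read the weakest-link column: a single poorly scoring component can determine the aggregate on its own.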

| Source | Results | Unique |
|---|---|---|
| Scopus | 21,964 | 21,964 |
| Web of Science | 7889 | 1782 |
| ACM Digital Library | 2000 | 660 |
| IEEE Xplore | 2000 | 1256 |
| PubMed Central | 660 | 111 |
| Total | 34,513 | 25,773 |
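
As a quick consistency check on the table above, the per-source counts can be summed to reproduce the totals and to estimate how much of the raw search output was duplicated across databases. This is a minimal sketch. The variable names are hypothetical, and reading the Unique column as "records not already found in a previously listed source" is our assumption.

```python
# Counts copied from the table above: (results returned, unique records).
search_results = {
    "Scopus": (21964, 21964),
    "Web of Science": (7889, 1782),
    "ACM Digital Library": (2000, 660),
    "IEEE Xplore": (2000, 1256),
    "PubMed Central": (660, 111),
}

total_results = sum(results for results, _ in search_results.values())
total_unique = sum(unique for _, unique in search_results.values())

# Share of all returned records that were dropped as duplicates.
duplicate_share = 1 - total_unique / total_results

print(f"Total results: {total_results}")
print(f"Total unique:  {total_unique}")
print(f"Duplicate share: {duplicate_share:.1%}")
```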

Rating scale: SD = strongly disagree, D = disagree, N = neutral, A = agree, SA = strongly agree.

| Aspect | Criterion | SD | D | N | A | SA |
|---|---|---|---|---|---|---|
| Reporting | There is a clear statement of the research aims. | 0 | 4 | 7 | 28 | 21 |
| Reporting | There is an adequate description of the research context. | 0 | 6 | 11 | 17 | 26 |
| Reporting | The paper is based on research. | 0 | 3 | 3 | 16 | 38 |
| Rigour | Metrics used in the study are clearly defined. | 0 | 10 | 19 | 16 | 15 |
| Rigour | Metrics are adequately measured and validated. | 1 | 24 | 22 | 8 | 5 |
| Rigour | The data analysis is sufficiently rigorous. | 0 | 21 | 17 | 14 | 8 |
| Credibility | Findings are clearly stated and related to research aims. | 0 | 8 | 19 | 25 | 8 |
| Credibility | Limitations and threats to validity are adequately discussed. | 30 | 18 | 8 | 2 | 2 |
| Relevance | The study is of value to research and/or practice. | 0 | 9 | 12 | 28 | 11 |
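
One way to compare the criteria in the table above is to turn each row of rating counts into a mean score. The sketch below assumes the five columns form a Likert scale coded 1 (SD) to 5 (SA); that coding, the shortened criterion labels, and the function name are our assumptions, not something the table states.

```python
# Assumed numeric coding of the five rating columns, from SD (1) to SA (5).
LIKERT_VALUES = (1, 2, 3, 4, 5)

# Rating counts copied from the table above; labels are shortened by us.
rating_counts = {
    "clear research aims": (0, 4, 7, 28, 21),
    "research context described": (0, 6, 11, 17, 26),
    "based on research": (0, 3, 3, 16, 38),
    "metrics clearly defined": (0, 10, 19, 16, 15),
    "metrics measured and validated": (1, 24, 22, 8, 5),
    "rigorous data analysis": (0, 21, 17, 14, 8),
    "findings clearly stated": (0, 8, 19, 25, 8),
    "limitations discussed": (30, 18, 8, 2, 2),
    "value to research or practice": (0, 9, 12, 28, 11),
}


def mean_score(counts):
    """Weighted mean of the assumed Likert values for one criterion."""
    return sum(v * n for v, n in zip(LIKERT_VALUES, counts)) / sum(counts)


# Print criteria from lowest to highest mean score.
for label, counts in sorted(rating_counts.items(), key=lambda kv: mean_score(kv[1])):
    print(f"{label}: {mean_score(counts):.2f}")
```

Under this coding, the criterion on limitations and threats to validity scores lowest, which matches the visual impression from the table that most ratings for it fall in the two disagree columns.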

| ADKAR | Abbreviation | Related Concepts |
|---|---|---|
| Awareness | AW | Consciousness |
| Desire | DE | Motivation, loyalty, attendance |
| Knowledge | KN | Understanding |
| Ability | AB | Behaviour, capability, capacity, experience, skill |
| Reinforcement | RE | Culture, education, evaluation, policy, training |

Columns AW to RE are ADKAR elements; columns WLC to None are aggregation strategy classes.

| Assessment Concept | Total | AW | DE | KN | AB | RE | WLC | WP | WM | WCP | BN | None |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Risk | 40 | 24 | 9 | 14 | 19 | 28 | 27 | 10 | 7 | 1 | 4 | 4 |
| Awareness | 5 | 5 | 3 | 4 | 3 | 2 | 3 | 1 | 1 | 0 | 0 | 2 |
| Maturity | 5 | 0 | 0 | 0 | 0 | 5 | 4 | 0 | 1 | 0 | 0 | 0 |
| Resilience | 3 | 3 | 1 | 0 | 1 | 1 | 3 | 0 | 0 | 0 | 0 | 0 |
| Security | 2 | 1 | 0 | 0 | 0 | 2 | 1 | 0 | 0 | 0 | 0 | 1 |
| Vulnerability | 1 | 1 | 0 | 1 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 |

Columns AW to RE are ADKAR elements.

| Social Viewpoint | Total | AW | DE | KN | AB | RE | Real-Life Threat |
|---|---|---|---|---|---|---|---|
| Defender | 52 | 33 | 7 | 17 | 17 | 37 | 18 |
| Attacker | 5 | 0 | 4 | 1 | 5 | 0 | 5 |
| Both | 3 | 2 | 3 | 1 | 3 | 3 | 2 |

Columns Theoretical, Implementation, and Review are classification categories.

| Aggregation Strategy | Theoretical | Implementation | Review |
|---|---|---|---|
| WLC | 38 | 1 | 3 |
| WP | 11 | 0 | 0 |
| WM | 8 | 1 | 0 |
| WCP | 1 | 0 | 0 |
| BN | 4 | 0 | 0 |
| None | 7 | 2 | 1 |

Columns Any Enterprise, M/L Enterprise, and Other are application areas.

| Property | Values | Any Enterprise | M/L Enterprise | Other |
|---|---|---|---|---|
| ADKAR | AW | 9 | 6 | 20 |
| ADKAR | DE | 3 | 1 | 10 |
| ADKAR | KN | 7 | 2 | 10 |
| ADKAR | AB | 6 | 3 | 16 |
| ADKAR | RE | 11 | 13 | 15 |
| Aggregation | WLC | 13 | 7 | 22 |
| Aggregation | WP | 0 | 3 | 8 |
| Aggregation | WM | 2 | 2 | 5 |
| Aggregation | WCP | 0 | 0 | 1 |
| Aggregation | BN | 0 | 1 | 3 |
| Aggregation | None | 1 | 4 | 5 |

Columns Goals to Assets are socio-technical aspects.

| SME Category | Goals | People | Culture | Processes | Technology | Assets |
|---|---|---|---|---|---|---|
| Start-ups | Realise cybersecurity necessity [5] due to external environment factors. Move from a non-existent cybersecurity culture to initial, informal cybersecurity measures [5,10,91]. | Define training plans and start creating cybersecurity awareness [92]. | Initial cybersecurity policies and procedures show management commitment, ensuring employee support [93,94]. | No standardised processes yet [5]. SME gains awareness on cybersecurity policies, processes, procedures, standards and regulation. | Employ a threat-based risk assessment tool requiring no knowledge of SME assets, using no/intuitive aggregation. External support needed to understand and implement countermeasures. | Understand relevant and critical cybersecurity asset types [92]. |
| Digitally-dependent | Start formalising cybersecurity processes. Define, manage, and communicate cybersecurity strategy [5,10,91,92]. | Continue building awareness [94]. Stimulate desire through knowledge acquisition [93]. Evaluate gaps in ability [92]. | Management support and cybersecurity trainings stimulate employees [94] and change their perception [93]. | Formulate basic (reactive) cybersecurity policies, processes, and procedures [5,94]; likely not yet universally applied across business units [5]. | Employ a threat-based risk assessment tool using no/intuitive aggregation. External support needed to implement countermeasures. | Systematically identify and document relevant assets and their baseline configurations [92]. |
| Digitally-based | Establish a formal cybersecurity programme that facilitates continuous improvement and compliance with regulation [5,10,91,92]. | Advance cybersecurity knowledge and ability through clearly communicated and documented trainings [5,92,94]. | Regular communication and education [94], backed by rewards and deterrents [93], ensures secure employee behaviour [93,94]. | Processes defined and documented proactively, communicated via awareness and training sessions [5,94]. Information sharing agreements defined [92]. | Use a risk assessment framework or maturity model with adequately motivated aggregation. Implement basic countermeasures [92], external support needed for complex countermeasures. | Manage asset changes and periodically maintain assets [92]. |
| Digital enablers | Embed and automate cybersecurity processes [5,10,15,91], which, combined with collaborative stakeholder relationships [92], promote internal and external trust in the SME cybersecurity posture [94]. | Employees mutually reinforce their cybersecurity abilities, possibly captured in official cybersecurity roles [94]. | Regular evaluations [5,15] stimulate naturally secure behaviour [94], where national culture and regulations are recognised [93]. An environment of trust with stakeholders exists [92,94]. | Successive comparisons of assessment results facilitate continuous process improvement [5,15]. Business continuity plan defined and communicated to external stakeholders [92]. | Use a risk assessment framework or maturity model with advanced aggregation. Independently implement countermeasures [92] and actively detect anomalies [92], with the help of automated tools [5]. | Identify and document internal and external dependencies of assets, to help in determining the SME attack surface. Actively monitor assets [92]. |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

van Haastrecht, M.; Yigit Ozkan, B.; Brinkhuis, M.; Spruit, M. Respite for SMEs: A Systematic Review of Socio-Technical Cybersecurity Metrics. Appl. Sci. 2021, 11, 6909. https://doi.org/10.3390/app11156909
