A Particle PHD Filter for Dynamic Grid Map Building towards Indoor Environment

Abstract

1. Introduction

2. Particle Realization of PHD Filter under Grid Map

2.1. Multi-Object Bayesian Filter and PHD Filter Based on Random Finite Set

2.2. Measurement-Driven Particle PHD Filter Realization Based on Grid Map

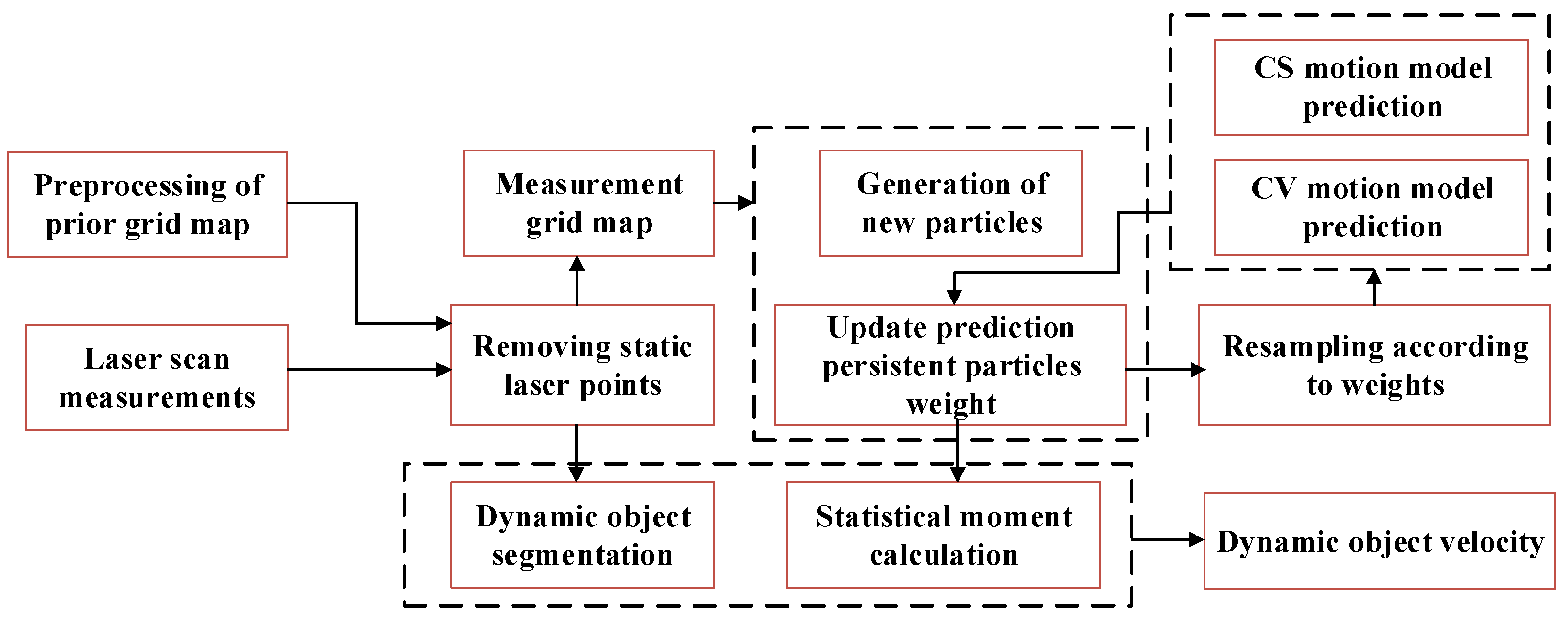

3. The Proposed Framework for Building Dynamic Grid Map

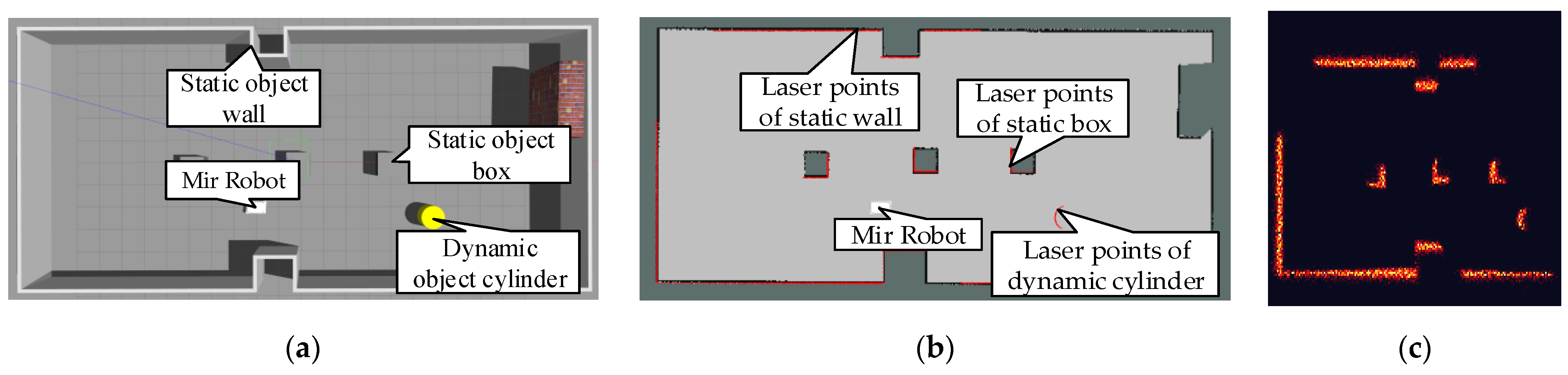

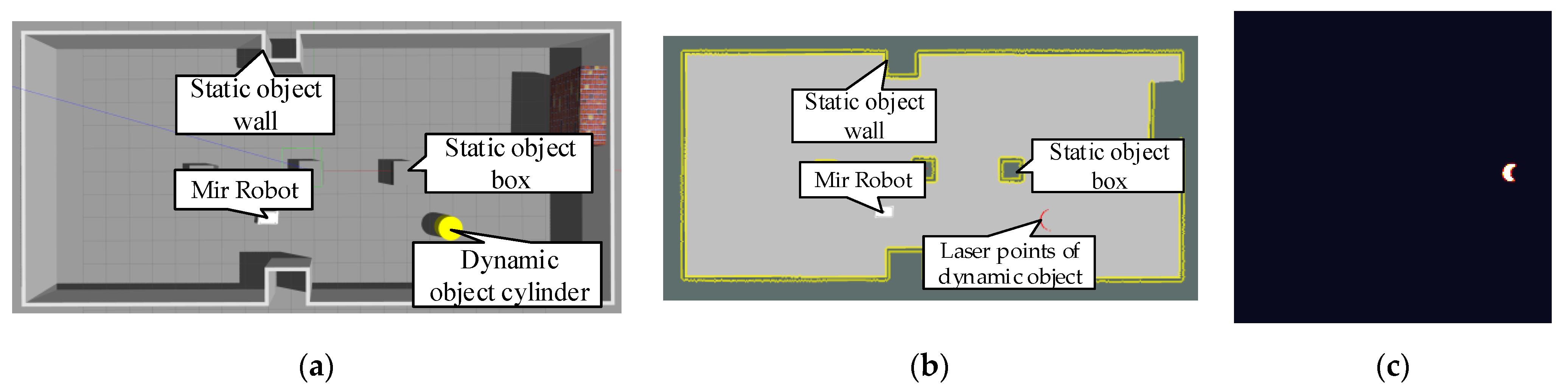

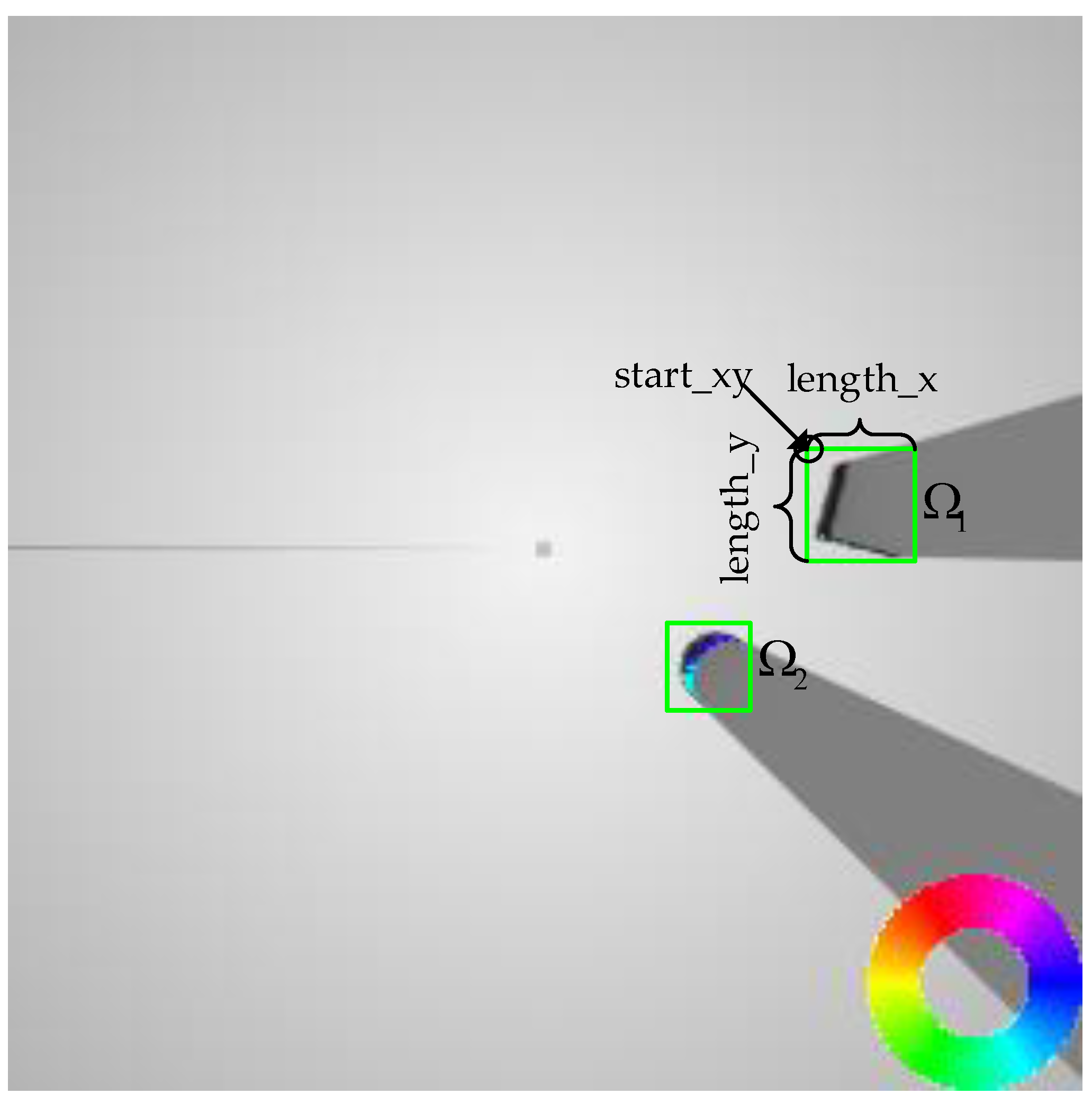

3.1. Preprocessing of LIDAR Measurements

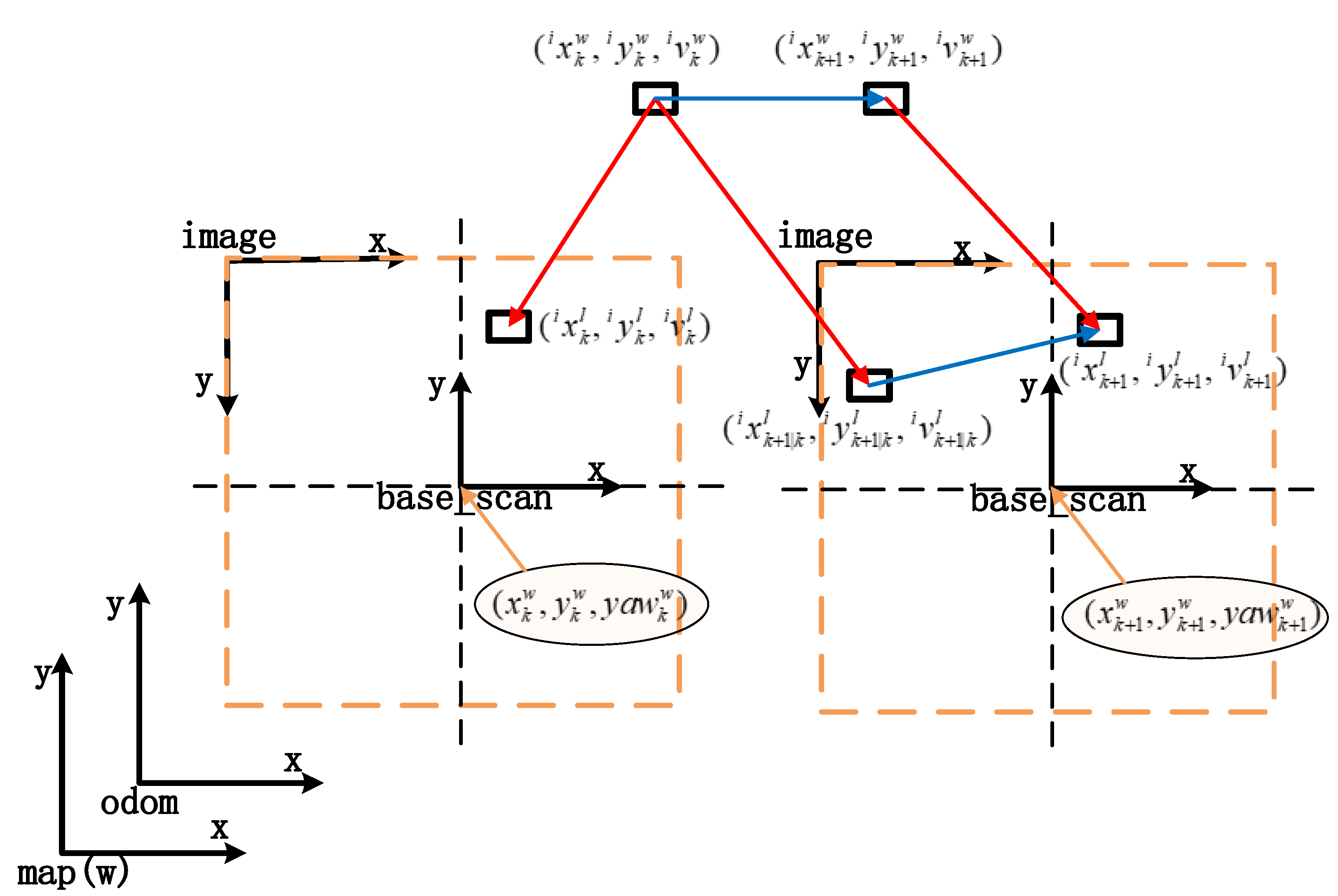

3.2. Particle State Updating with Robot Motion

- (1) Obtaining the position of the robot

- The transformation from the base_scan (robot) coordinate system to the odom coordinate system can be obtained from the odometry topic published by Gazebo or with TF_Listener, and the transformation between the odom and map coordinate systems can be predefined in a roslaunch file in the simulation environment. On a real robot, the pose can be obtained from SLAM localization or from Monte Carlo localization in the navigation stack.

- (2) Update of particle position information

- The scale coordinates of the particle in a local dynamic grid map system of time are .

- The scale translational coordinates of the base_scan coordinate system relative to the local dynamic grid map coordinate system are .

- The scale coordinates of the particle in the base_scan coordinate system of time are .

- Coordinates of the particle in the world system:

- Coordinate transformation from the world coordinate system to the coordinate system:

- (3) Update of particle velocity

- Assume that the particle velocities at time in the dynamic grid map coordinate system are .

- The particle velocities at time in the base coordinate system are

- When converting the velocity of the particle to the local dynamic grid coordinate system of time , we need to multiply by minus one.
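The frame changes listed above can be illustrated with plain 2D rigid transforms. The following is a minimal sketch, not the authors' implementation. It assumes the local grid is centred on the robot and axis-aligned with the base_scan frame, that the robot pose `(x, y, yaw)` in the world frame is already available, and that velocities are stored in m/s. The function names and the parameters `resolution` and `origin_offset` are hypothetical. The sign flip mentioned in the last step depends on the axis convention of the grid and is not modelled here.

```python
import numpy as np


def rot2d(yaw):
    """Return the 2D rotation matrix for a yaw angle in radians."""
    c, s = np.cos(yaw), np.sin(yaw)
    return np.array([[c, -s], [s, c]])


def shift_particles(pos_grid, vel_grid, pose_prev, pose_curr,
                    resolution, origin_offset):
    """Re-express particles of the previous local grid in the current one.

    pos_grid      : (N, 2) positions in cells, previous grid frame
    vel_grid      : (N, 2) velocities in m/s, previous grid axes
    pose_prev     : (x, y, yaw) of the robot in the world frame, previous step
    pose_curr     : (x, y, yaw) of the robot in the world frame, current step
    resolution    : metres per cell (hypothetical parameter)
    origin_offset : (2,) cell index of the robot inside the grid (hypothetical)
    """
    pos_grid = np.asarray(pos_grid, dtype=float)
    vel_grid = np.asarray(vel_grid, dtype=float)
    offset = np.asarray(origin_offset, dtype=float)
    r_prev, r_curr = rot2d(pose_prev[2]), rot2d(pose_curr[2])
    t_prev = np.asarray(pose_prev[:2], dtype=float)
    t_curr = np.asarray(pose_curr[:2], dtype=float)

    # Cells -> metres in the previous robot frame.
    pos_base_prev = (pos_grid - offset) * resolution
    # Previous robot frame -> world frame (row-vector form of R p + t).
    pos_world = pos_base_prev @ r_prev.T + t_prev
    # World frame -> current robot frame (inverse rigid transform).
    pos_base_curr = (pos_world - t_curr) @ r_curr
    # Metres -> cells in the current grid.
    pos_grid_curr = pos_base_curr / resolution + offset

    # Velocities are free vectors, so only the rotations apply.
    vel_curr = (vel_grid @ r_prev.T) @ r_curr
    return pos_grid_curr, vel_curr
```

For example, if the robot drives 1 m straight ahead, a static particle that was 1 m in front of it ends up at the robot's own cell in the new grid, and its velocity vector is unchanged because no rotation occurred.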

3.3. Motion Model Design

3.4. Particle Weights Update

3.5. Dynamic Object Segmentation and State Estimation

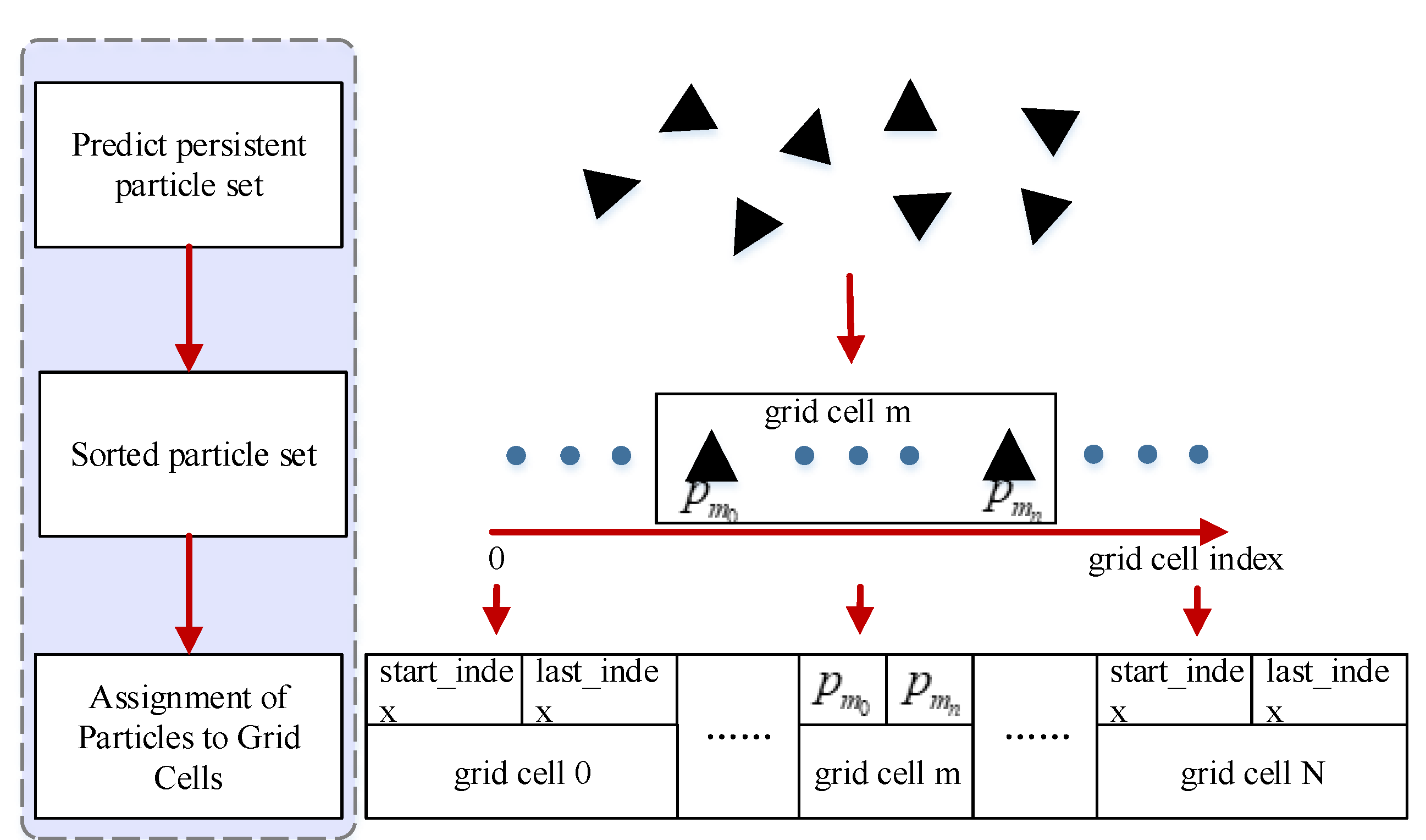

3.6. Parallel Implementation

- Use the GPU to calculate the measurement grid map in polar coordinates in parallel. For each grid cell in the polar coordinate system and its corresponding laser measurement, the inverse sensor model gives the occupancy probability of the cell. The same measurement must also be used to estimate the free mass value of the cell; for its calculation, see [26].

- After the measurement grid map has been calculated in polar coordinates, it must be converted into a measurement grid map in Cartesian coordinates. Each grid cell in polar coordinates must be mapped to the corresponding grid cell in Cartesian coordinates, and the occupancy and free mass values stored in the cell must be interpolated correctly. In the paper, different conversion methods are compared, including the exact algorithm and the sampling approach. Here, we use the better-performing texture mapping method, implemented with the OpenGL library, to convert the measurement grid map from the polar coordinate system to the Cartesian coordinate system.
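The two steps above can be sketched on the CPU with vectorised NumPy operations, where every cell is computed independently, as a GPU kernel would do. This is an illustrative sketch under several assumptions. The inverse sensor model is a simple piecewise one (free before the hit, occupied at the hit, unknown behind it). The probabilities `p_free`, `p_occ` and `p_unknown` are hypothetical values. The conversion uses nearest-neighbour sampling rather than the OpenGL texture mapping described in the text. The free mass value is not computed.

```python
import numpy as np


def polar_measurement_grid(ranges, max_range, n_range_cells,
                           p_free=0.2, p_occ=0.9, p_unknown=0.5, hit_width=1):
    """Occupancy probability per (beam, range cell) for one laser scan.

    Assumes ranges are finite or +inf (no NaN); values are clipped to
    [0, max_range], so a beam without a return marks its whole ray as free.
    """
    ranges = np.clip(np.asarray(ranges, dtype=float), 0.0, max_range)
    cell_size = max_range / n_range_cells
    cell_idx = np.arange(n_range_cells)[None, :]                  # (1, R)
    hit_idx = np.floor(ranges / cell_size).astype(int)[:, None]   # (B, 1)
    grid = np.full((ranges.size, n_range_cells), p_unknown)
    grid[cell_idx < hit_idx] = p_free                   # in front of the hit
    grid[np.abs(cell_idx - hit_idx) < hit_width] = p_occ  # at the hit
    return grid


def polar_to_cartesian(polar_grid, angle_min, angle_inc, max_range,
                       size, resolution, p_unknown=0.5):
    """Resample a polar grid into a size x size Cartesian grid.

    The sensor sits at the grid centre. Each Cartesian cell centre looks up
    its nearest polar cell; cells outside the scan's angular or radial
    coverage stay unknown. No angular wrap-around is handled.
    """
    n_beams, n_range_cells = polar_grid.shape
    cell_size = max_range / n_range_cells
    coords = (np.arange(size) + 0.5 - size / 2.0) * resolution
    x, y = np.meshgrid(coords, coords, indexing="xy")  # rows = y, cols = x
    r = np.hypot(x, y)
    theta = np.arctan2(y, x)
    beam = np.rint((theta - angle_min) / angle_inc).astype(int)
    rng_cell = np.floor(r / cell_size).astype(int)
    valid = (beam >= 0) & (beam < n_beams) & (rng_cell < n_range_cells)
    cart = np.full((size, size), p_unknown)
    cart[valid] = polar_grid[beam[valid], rng_cell[valid]]
    return cart
```

Nearest-neighbour lookup is the simplest form of the sampling approach. Texture mapping on the GPU additionally interpolates between neighbouring polar cells, which this sketch does not attempt.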

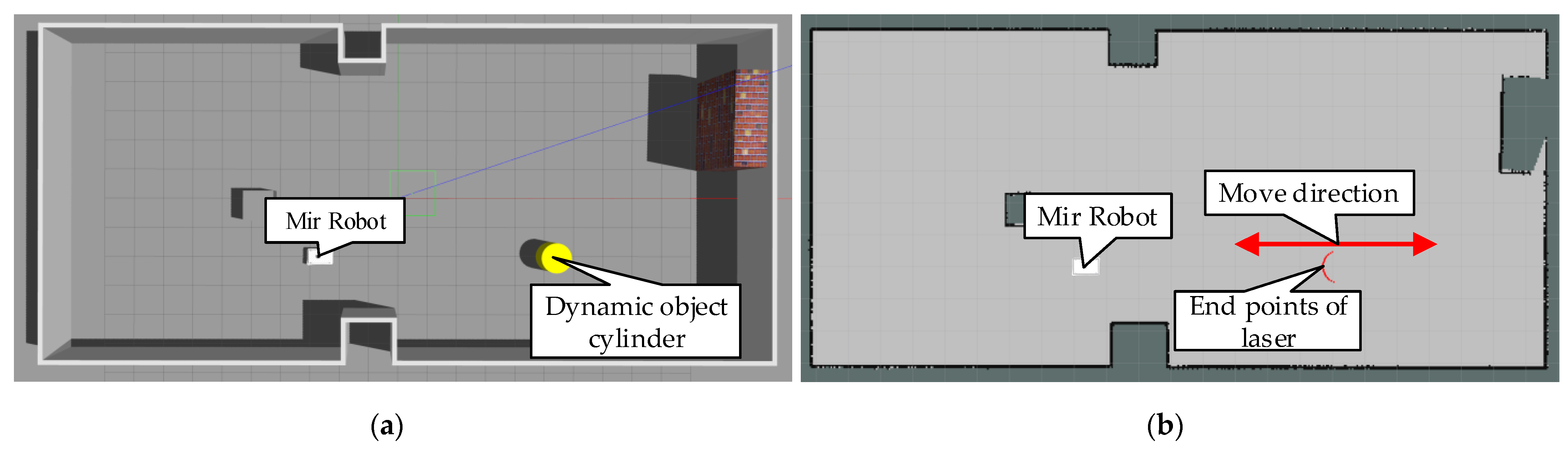

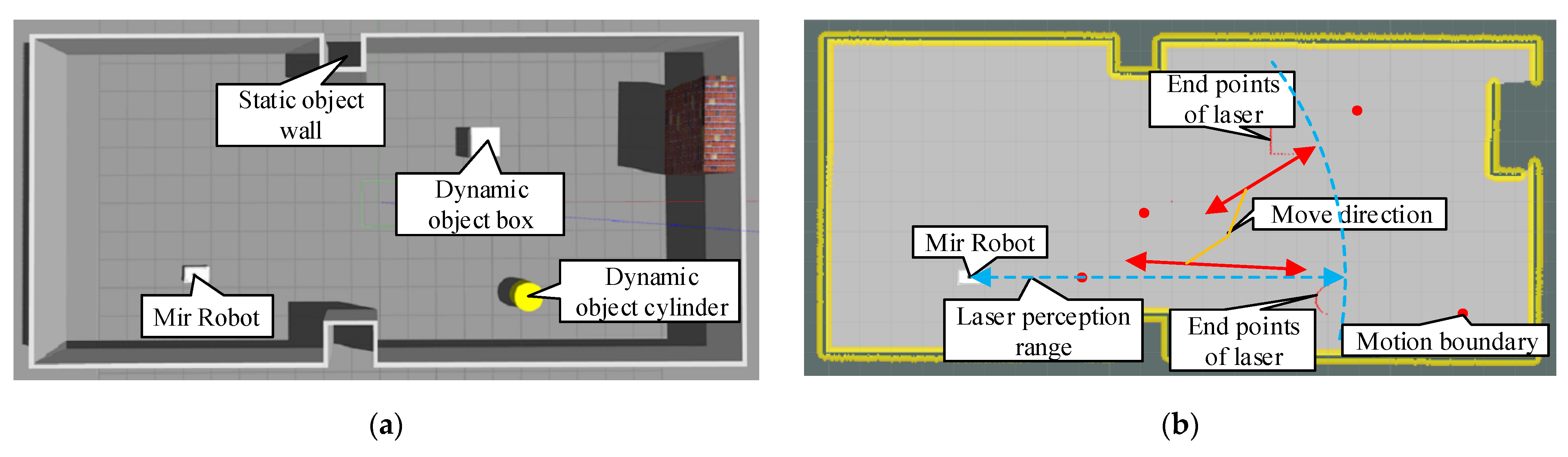

4. Simulation

4.1. Simulation Settings

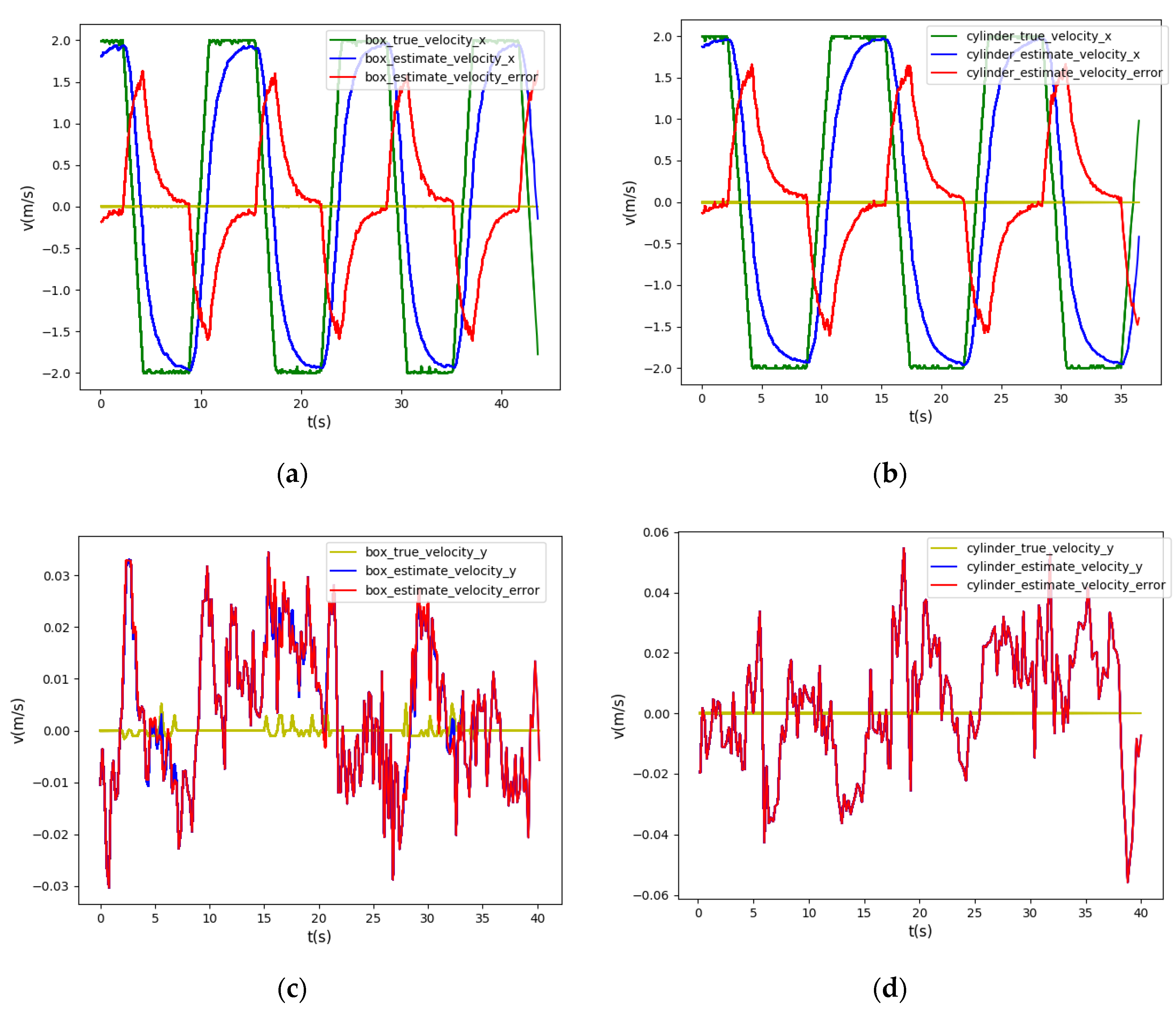

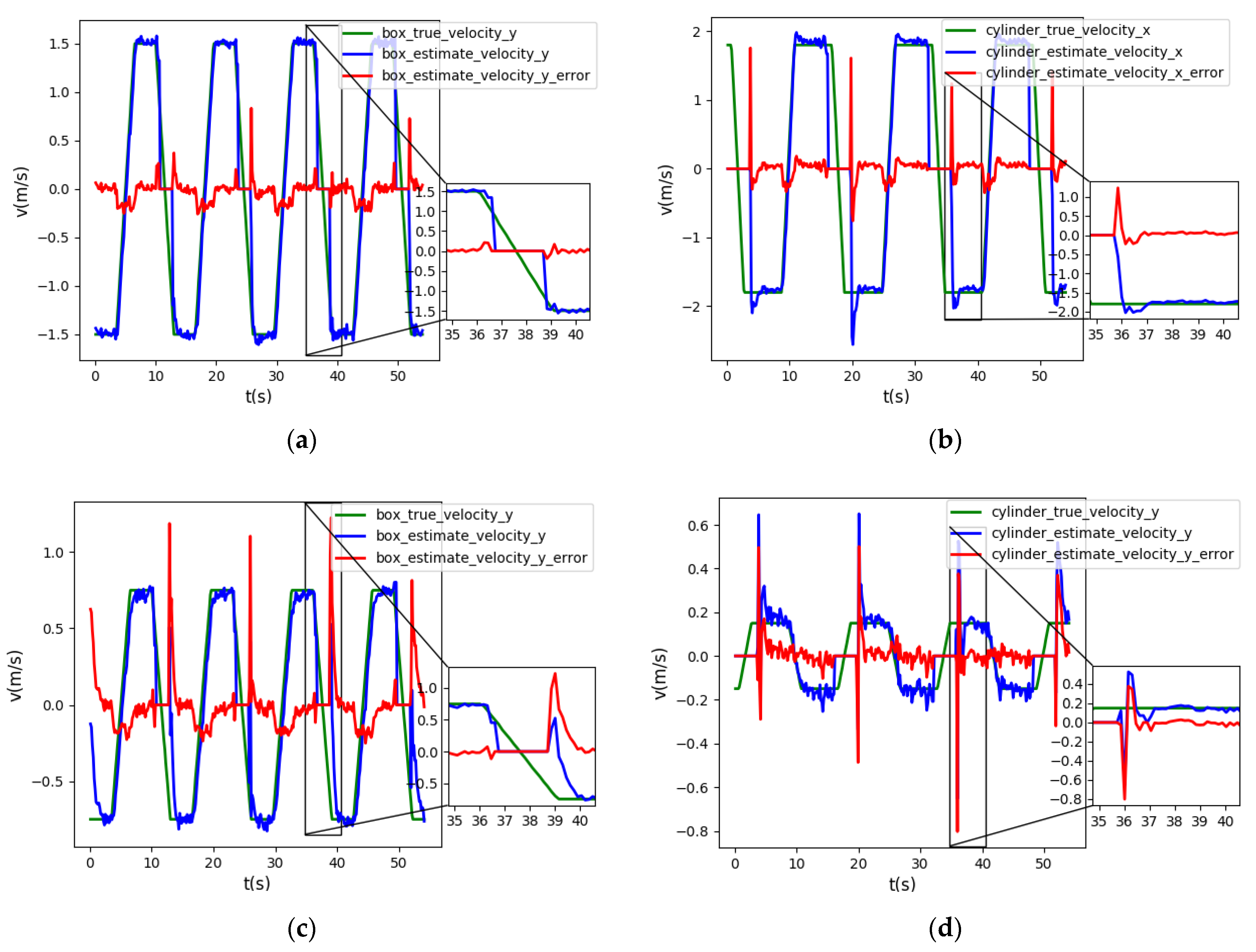

4.2. Accuracy Comparison of Speed Estimation

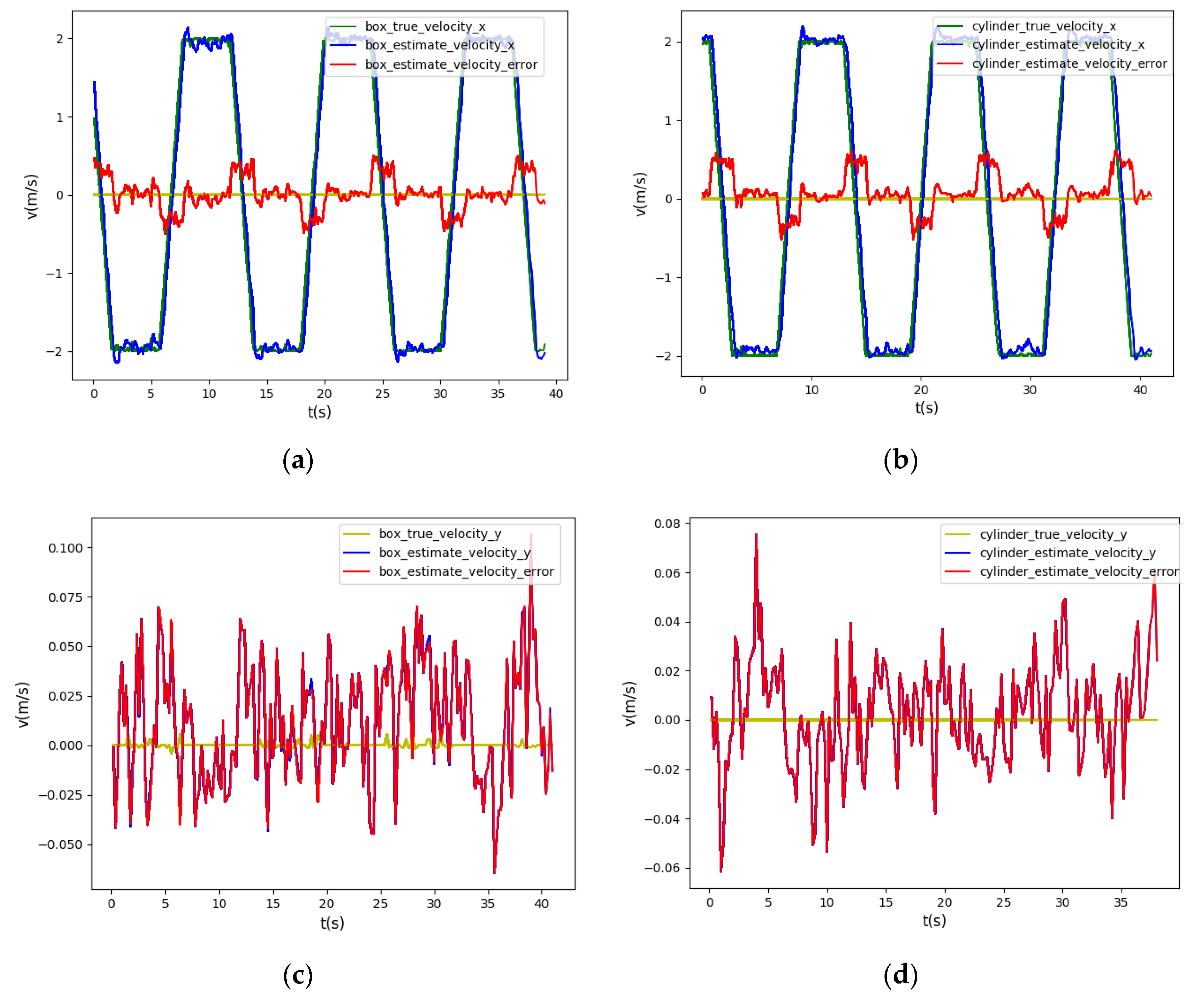

4.2.1. The Robot Is Stationary

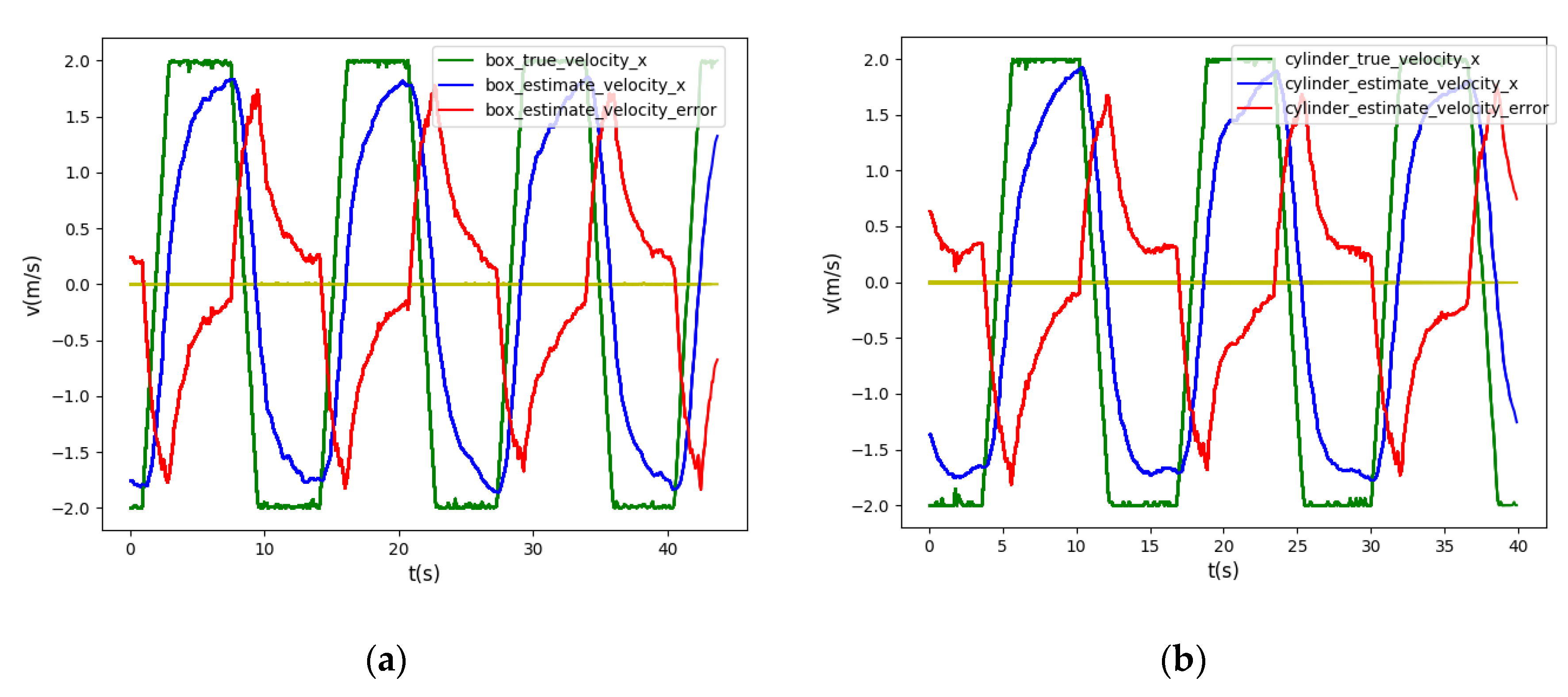

4.2.2. The Robot Is in Motion

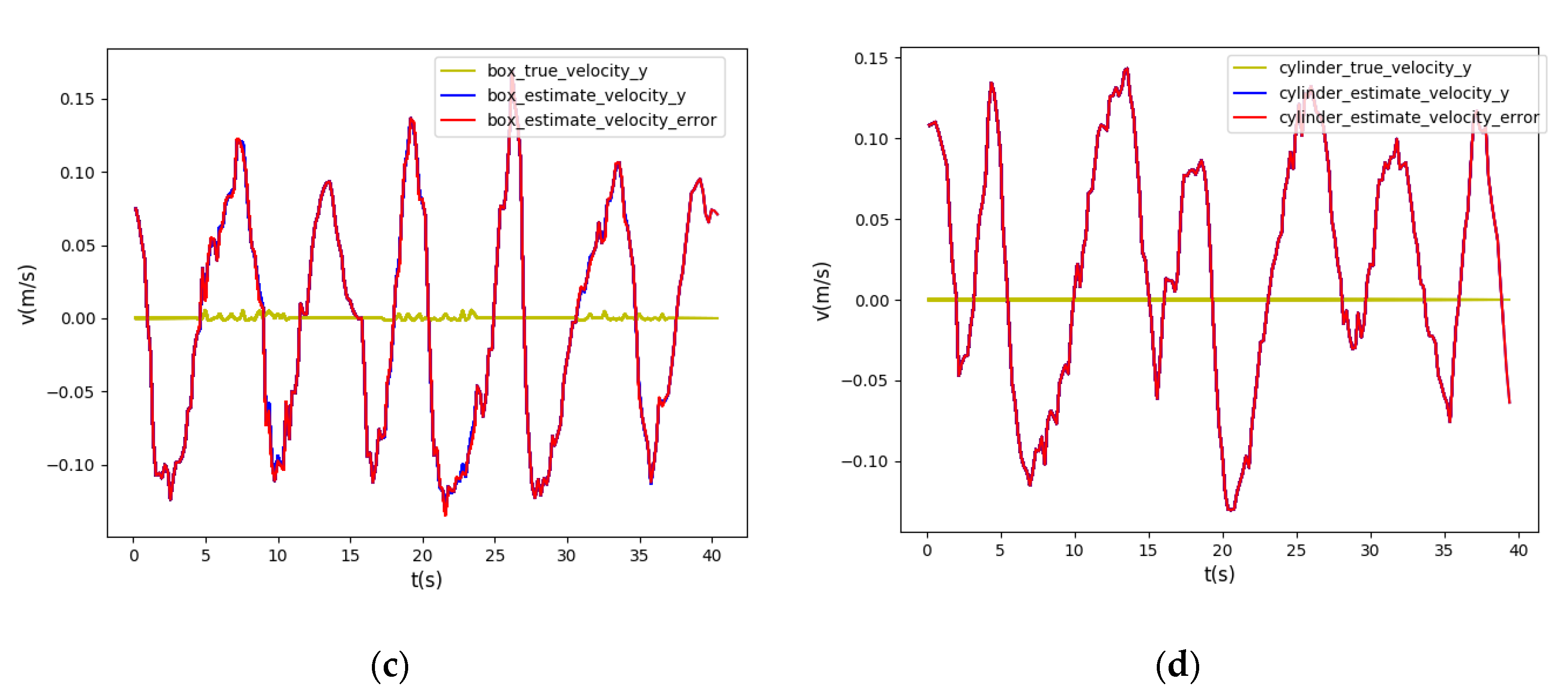

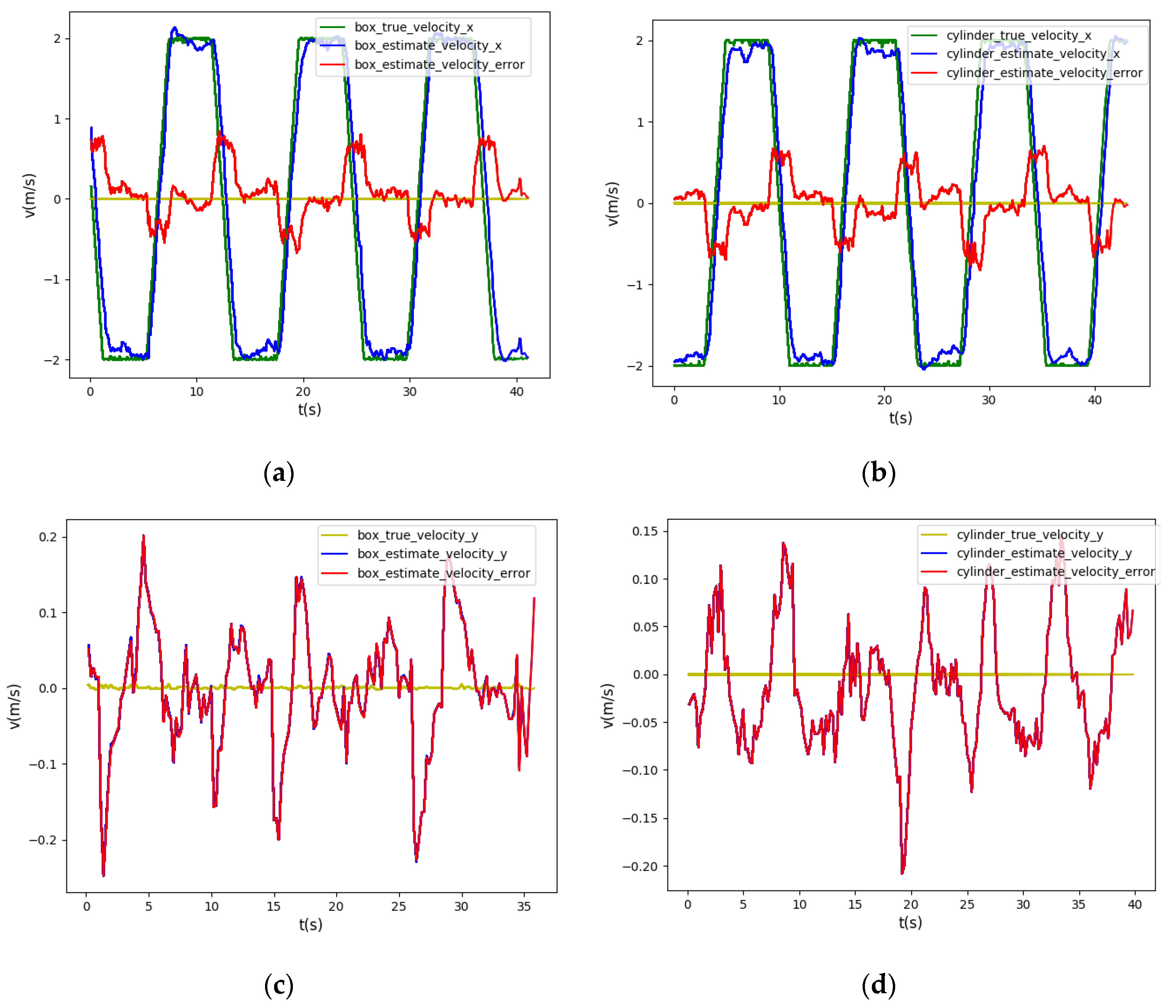

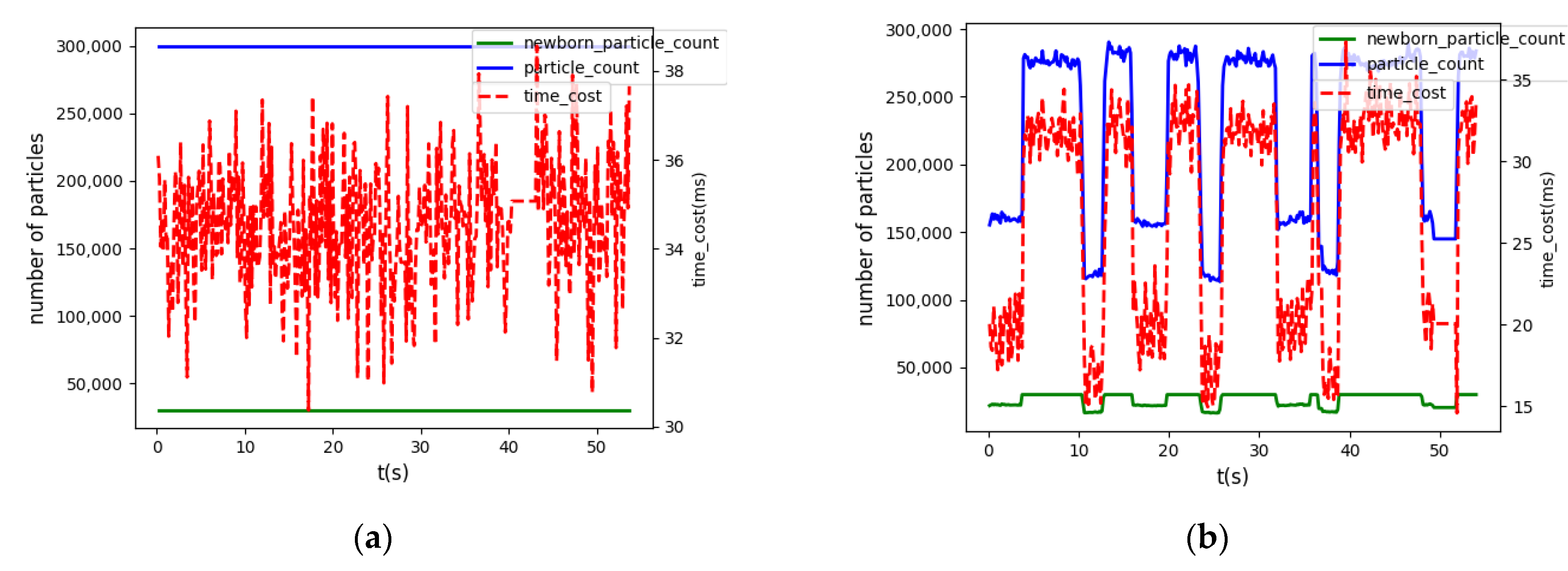

4.3. Speed Estimation of the Dynamic Objects Entering and Leaving the Robot’s Sensing Area

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Zeng, L.; Bone, G.M. Mobile robot collision avoidance in human environments. Int. J. Adv. Robot. Syst. 2013, 10, 41.

- Zhu, J.; Zhao, S.; Zhao, R. Path Planning for Autonomous Underwater Vehicle Based on Artificial Potential Field and Modified RRT. In Proceedings of the 2021 International Conference on Computer, Control and Robotics (ICCCR), Shanghai, China, 8–10 January 2021; pp. 21–25.

- Liu, Y.; Chen, J.; Bai, X. An Approach for Multi-Objective Obstacle Avoidance Using Dynamic Occupancy Grid Map. In Proceedings of the 2020 IEEE International Conference on Mechatronics and Automation (ICMA), Beijing, China, 13–16 October 2020; pp. 1209–1215.

- Goodman, I.R.; Mahler, R.P.S.; Nguyen, H.T. Mathematics of Data Fusion; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2013; Volume 37, ISBN 9788578110796.

- Alexander, L.; Allen, S.; Bindoff, N.L. Statistical Multisource-Multitarget Information Fusion; Artech House, Inc.: Norwood, MA, USA, 2013; Volume 1, ISBN 9788578110796.

- Ristic, B. Particle Filters for Random Set Models; Springer: New York, NY, USA, 2013.

- Vo, B.N.; Singh, S.; Doucet, A. Sequential Monte Carlo methods for multi-target filtering with random finite sets. IEEE Trans. Aerosp. Electron. Syst. 2005, 41, 1224–1245.

- Reuter, S.; Wilking, B.; Wiest, J.; Munz, M.; Dietmayer, K. Real-time multi-object tracking using random finite sets. IEEE Trans. Aerosp. Electron. Syst. 2013, 49, 2666–2678.

- Whiteley, N.; Singh, S.; Godsill, S. Auxiliary particle implementation of probability hypothesis density filter. IEEE Trans. Aerosp. Electron. Syst. 2010, 46, 1437–1454.

- Mahler, R.P.S. Multitarget Bayes Filtering via First-Order Multitarget Moments. IEEE Trans. Aerosp. Electron. Syst. 2003, 39, 1152–1178.

- Mahler, R. PHD filters of higher order in target number. IEEE Trans. Aerosp. Electron. Syst. 2007, 43, 1523–1543.

- Vo, B.T.; Vo, B.N.; Cantoni, A. Analytic implementations of the cardinalized probability hypothesis density filter. IEEE Trans. Signal Process. 2007, 55, 3553–3567.

- Vo, B.T.; Vo, B.N.; Cantoni, A. The cardinality balanced multi-target multi-Bernoulli filter and its implementations. IEEE Trans. Signal Process. 2009, 57, 409–423.

- Deusch, H.; Reuter, S.; Dietmayer, K. The Labeled Multi-Bernoulli SLAM Filter. IEEE Signal Process. Lett. 2015, 22, 1561–1565.

- Vo, B.N.; Vo, B.T.; Beard, M. Multi-Sensor Multi-Object Tracking with the Generalized Labeled Multi-Bernoulli Filter. IEEE Trans. Signal Process. 2019, 67, 5952–5967.

- Danescu, R.; Oniga, F.; Nedevschi, S. Modeling and tracking the driving environment with a particle-based occupancy grid. IEEE Trans. Intell. Transp. Syst. 2011, 12, 1331–1342.

- Danescu, R.; Oniga, F.; Nedevschi, S. Particle grid tracking system for stereovision based environment perception. In Proceedings of the 2010 IEEE Intelligent Vehicles Symposium, La Jolla, CA, USA, 21–24 June 2010; pp. 987–992.

- Negre, A.; Rummelhard, L.; Laugier, C. Hybrid sampling Bayesian Occupancy Filter. In Proceedings of the 2014 IEEE Intelligent Vehicles Symposium Proceedings, Dearborn, MI, USA, 8–11 June 2014; pp. 1307–1312.

- Tanzmeister, G.; Thomas, J.; Wollherr, D.; Buss, M. Grid-based mapping and Tracking in dynamic environments using a uniform evidential environment representation. In Proceedings of the 2014 IEEE International Conference on Robotics and Automation (ICRA), Hong Kong, China, 31 May–7 June 2014; pp. 6090–6095.

- Luo, X.; Yang, C.; Chen, R.; Shi, Z. Improved SMC-PHD Filter for Multi-target. In Proceedings of the 2016 IEEE 84th Vehicular Technology Conference (VTC-Fall), Montreal, QC, Canada, 18–21 September 2016.

- Bao, Z.; Jiang, Q.; Liu, F. A PHD-Based Particle Filter for Detecting and Tracking Multiple Weak Targets. IEEE Access 2019, 7, 145843–145850.

- Steyer, S.; Tanzmeister, G.; Wollherr, D. Object tracking based on evidential dynamic occupancy grids in urban environments. In Proceedings of the 2017 IEEE Intelligent Vehicles Symposium (IV), Los Angeles, CA, USA, 11–14 June 2017; pp. 1064–1070.

- Zheng, J.; Gao, M.; Yu, H. Road-map aided VSIMM-GMPHD filter for ground moving target tracking. In Proceedings of the 2018 IEEE 4th International Conference on Computer and Communications (ICCC), Chengdu, China, 7–10 December 2018; pp. 190–195.

- Nuss, D.; Yuan, T.; Krehl, G.; Stuebler, M.; Reuter, S.; Dietmayer, K. Fusion of laser and radar sensor data with a sequential Monte Carlo Bayesian occupancy filter. In Proceedings of the 2015 IEEE Intelligent Vehicles Symposium (IV), Seoul, Korea, 28 June–1 July 2015; pp. 1074–1081.

- Nuss, D.; Reuter, S.; Thom, M.; Yuan, T.; Krehl, G.; Maile, M.; Gern, A.; Dietmayer, K. A random finite set approach for dynamic occupancy grid maps with real-time application. Int. J. Robot. Res. 2018, 37, 841–866.

- Mahler, R.P. An Introduction to Multisource-Multitarget Statistics and its Applications; Lockheed Martin: Bethesda, MA, USA, 2000.

- Mahler, R. Statistical Multisource-Multitarget Information Fusion; Artech House, Inc.: Norwood, MA, USA, 2007.

- Vo, B.-N.; Singh, S.; Doucet, A. Sequential monte carlo implementation of the phd filter for multi-target tracking. In Proceedings of the Sixth International Conference of Information Fusion, Cairns, QLD, Australia, 8–11 July 2003; pp. 792–799.

- Mahler, R.P.S. A Theoretical Foundation for the Stein-Winter “Probability Hypothesis Density (PHD)” Multitarget Tracking Approach; Army Research Office: Alexandria, VA, USA, 2000.

- Ristic, B.; Clark, D.; Vo, B.N. Improved SMC implementation of the PHD filter. In Proceedings of the 2010 13th International Conference on Information Fusion, Edinburgh, UK, 26–29 July 2010.

- Zhan, R.H.; Gao, Y.Z.; Hu, J.M.; Zhang, J. SMC-PHD based multi-target track-before-detect with nonstandard point observations model. J. Cent. South Univ. 2015, 22, 232–240.

- Nuss, D.; Wilking, B.; Wiest, J.; Deusch, H.; Reuter, S.; Dietmayer, K. Decision-free true positive estimation with grid maps for multi-object tracking. In Proceedings of the 16th International IEEE Conference on Intelligent Transportation Systems (ITSC 2013), Hague, The Netherlands, 6–9 October 2013; pp. 28–34.

- Yguel, M.; Aycard, O.; Laugier, C. Efficient GPU-based construction of occupancy grids using several laser range-finders. Int. J. Veh. Auton. Syst. 2008, 6, 48–83.

- Homm, F.; Kaempchen, N.; Ota, J.; Burschka, D. Efficient Occupancy Grid Computation on the GPU with Lidar and Radar for Road Boundary Detection. In Proceedings of the 2010 IEEE Intelligent Vehicles Symposium, La Jolla, CA, USA, 21–24 June 2010; pp. 1006–1013.

- Kim, S.; Cho, J.; Park, D. Moving-target position estimation using GPU-based particle filter for IoT sensing applications. Appl. Sci. 2017, 7, 1152.

- Murray, L.M.; Lee, A.; Jacob, P.E. Parallel Resampling in the Particle Filter. J. Comput. Graph. Stat. 2016, 25, 789–805.

| Parameter | Description | Value |
|---|---|---|
| M_p | Persistent number per point | 2500 |
| M_b | Birth number per point | 500 |
| P_b | Birth probability per point | 0.01 |
| | Mean velocity of newborn particles | 0 |
| | Variance of newborn particles velocity | 30 |
| P_s | Persistence probability | 0.95 |
| | Normally distributed variance of noise position | 0.1 |
| | Normally distributed variance of noise velocity | 2.0 |
| | Maximum positive accelerations | 25 |
| | Maximum negative accelerations | 25 |
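To show how such parameters typically enter a particle filter, here is a minimal sketch of a prediction step and of sampling velocities for newborn particles. It uses a plain constant-velocity prediction as a stand-in, not the motion model designed in Section 3.3, and it leaves out the acceleration limits. The dictionary keys and function names are hypothetical, the table gives no units, and adding the noise once per step regardless of `dt` is a simplification.

```python
import numpy as np

# Values copied from the parameter table; the key names are illustrative.
PARAMS = {
    "p_survive": 0.95,       # persistence probability P_s
    "birth_vel_mean": 0.0,   # mean velocity of newborn particles
    "birth_vel_var": 30.0,   # variance of newborn particle velocity
    "noise_pos_var": 0.1,    # variance of the position noise
    "noise_vel_var": 2.0,    # variance of the velocity noise
}


def predict_cv(pos, vel, weights, dt, rng, params=PARAMS):
    """Constant-velocity prediction with Gaussian process noise.

    pos, vel : (N, 2) arrays; weights : (N,) array; dt : time step.
    Weights are scaled by the persistence probability, as is usual in
    the prediction step of a particle PHD filter.
    """
    pos_noise = rng.normal(0.0, np.sqrt(params["noise_pos_var"]), pos.shape)
    vel_noise = rng.normal(0.0, np.sqrt(params["noise_vel_var"]), vel.shape)
    new_pos = pos + vel * dt + pos_noise
    new_vel = vel + vel_noise
    new_weights = params["p_survive"] * weights
    return new_pos, new_vel, new_weights


def sample_birth_velocities(n, rng, params=PARAMS):
    """Draw 2D velocities for n newborn particles from the birth prior."""
    std = np.sqrt(params["birth_vel_var"])
    return rng.normal(params["birth_vel_mean"], std, size=(n, 2))
```

Note that `rng.normal` takes a standard deviation, so the variances from the table are passed through a square root.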

| Object | Direction | CV Model RMSE (m/s) | CV Model MAE (m/s) | CV Model S.D. (m/s) | The System RMSE (m/s) | The System MAE (m/s) | The System S.D. (m/s) | Improvement RMSE | Improvement MAE | Improvement S.D. |
|---|---|---|---|---|---|---|---|---|---|---|
| cylinder | x | 0.7098 | 0.5012 | 0.7069 | 0.2148 | 0.1518 | 0.2052 | 69.73% | 69.71% | 70.97% |
| cylinder | y | 0.0207 | 0.0170 | 0.0205 | 0.0262 | 0.0208 | 0.0263 | −26.57% | −22.35% | −28.29% |
| box | x | 0.7029 | 0.4983 | 0.7016 | 0.2036 | 0.1424 | 0.2029 | 71.03% | 71.42% | 71.08% |
| box | y | 0.0142 | 0.0114 | 0.0138 | 0.0179 | 0.0120 | 0.0159 | −26.05% | −5.26% | −15.27% |

| Object | Direction | CV Model RMSE (m/s) | CV Model MAE (m/s) | CV Model S.D. (m/s) | The System RMSE (m/s) | The System MAE (m/s) | The System S.D. (m/s) | Improvement RMSE | Improvement MAE | Improvement S.D. |
|---|---|---|---|---|---|---|---|---|---|---|
| cylinder | x | 0.7596 | 0.6089 | 0.7218 | 0.2577 | 0.2124 | 0.2576 | 66.73% | 65.71% | 64.97% |
| cylinder | y | 0.0761 | 0.0652 | 0.0727 | 0.0694 | 0.0538 | 0.0667 | 8.80% | 17.48% | 8.25% |
| box | x | 0.8210 | 0.6763 | 0.8167 | 0.2910 | 0.2044 | 0.2827 | 64.55% | 69.77% | 65.38% |
| box | y | 0.0774 | 0.0682 | 0.0774 | 0.0856 | 0.0622 | 0.0838 | −10.59% | 8.79% | −8.26% |
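The Improvement columns in the two tables above are consistent with the relative reduction (CV Model − The System) / CV Model, so a negative value means the proposed system has the larger error. The sketch below shows that calculation together with the usual definitions of the three error statistics. Treating S.D. as the population standard deviation of the velocity error is an assumption, and the function names are illustrative.

```python
import numpy as np


def error_stats(estimated, truth):
    """Return (RMSE, MAE, S.D.) of the estimation error."""
    err = np.asarray(estimated, dtype=float) - np.asarray(truth, dtype=float)
    rmse = float(np.sqrt(np.mean(err ** 2)))
    mae = float(np.mean(np.abs(err)))
    sd = float(np.std(err))  # population standard deviation (assumption)
    return rmse, mae, sd


def improvement_percent(baseline, proposed):
    """Relative error reduction of `proposed` over `baseline`, in percent."""
    return (baseline - proposed) / baseline * 100.0


# Cylinder, y direction, RMSE from the second table above.
print(f"{improvement_percent(0.0761, 0.0694):.2f}%")  # prints 8.80%
```

Small differences in the last digit, such as 69.74% computed from the rounded table values against the reported 69.73%, are to be expected if the published percentages were derived from unrounded errors.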

| Dynamic Object | Velocity Direction | Mean of Peak Error (m/s), Nuss’s Method | Mean of Peak Error (m/s), The Proposed Method | Convergence Time (s), Nuss’s Method | Convergence Time (s), The Proposed Method |
|---|---|---|---|---|---|
| box | x-direction | 1.1899 | 0.4654 | 2.9625 | 0.312 |
| box | y-direction | 0.7971 | 1.0833 | 3.1125 | 0.825 |
| cylinder | x-direction | 1.7644 | 1.5356 | 2.925 | 0.751 |
| cylinder | y-direction | 0.2788 | 0.6005 | 2.55 | 1.275 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Liu, Y.; Zhao, C.; Wei, Y. A Particle PHD Filter for Dynamic Grid Map Building towards Indoor Environment. Appl. Sci. 2021, 11, 6891. https://doi.org/10.3390/app11156891
