Comparative Analysis of Current Approaches to Quality Estimation for Neural Machine Translation

Abstract

:1. Introduction

- Which mPLM is best for QE sub-tasks?

- Does the input order of the source sentence and the MT output sentence affect the performance of the model?

- We conduct comparative experiments on finetuning mPLMs for a QE task, which is different from research concerning the performance improvement of the WMT shared-task competition. This quantitative analysis allows us to revisit the pure performance of mPLMs for the QE task. To the best of our knowledge, we are the first to conduct such research;

- Through a comparative analysis concerning how to construct an appropriate input structure for QE, we reveal that the performance can be improved by simply changing the input order of the source sentence and the MT output;

- In the process of finetuning mPLMs, we only use data officially distributed in WMT20 (without external knowledge or data augmentation) and use the official test set to ensure objectivity for all experiments.

2. Related Work and Background

3. Multilingual Pretrained Language Models for QE

3.1. Multilingual BERT

3.2. Cross-Lingual Language Model

3.3. XLM-RoBERTa

3.4. Multilingual BART

4. Brief Introduction of the WMT20 QE Sub-Tasks

4.1. Sub-Task 1

4.2. Sub-Task 2

5. Question 1: Which mPLM Is Best for QE Tasks?

5.1. Dataset Details

5.2. Model Details

- XLM-R-base: Pretraining was performed with 220M parameters, 12 layers, 8 heads, and 768 hidden states.

- XLM-R-large: Pretraining was performed using 550M parameters. The hidden states were expanded to 1024, and 24 layers, and 16 heads were used, which is twice the scale of the base model.

- mBERT: The model parameters of mBERT were 110M, 12 layers, 768 hidden states, and 12 heads.

- mBART: mBART was pretrained with 610M parameters, 24 layers, 1024 hidden states, and 16 heads.

- XLM-CLM: A pretrained CLM for English and German. In total, 6 layers, 1024 hidden states, and 8 heads were used.

- XLM-MLM: A pretrained MLM for English and German. In total, 6 layers, 1024 hidden states, and 8 heads were used.

- XLM-MLM-17: Pretraining was conducted by expanding the MLM into 17 languages. It was trained using 570M parameters, 16 layers, 1280 hidden states, and 16 heads.

- XLM-MLM-100: Pretraining was conducted by expanding the MLM into 100 languages. It was trained using 570M parameters, 16 layers, 1280 hidden states, and 16 heads.

- XLM-TLM: TLM was performed for 15 languages. In total, 12 layers, 1024 hidden states, and 8 heads were used.

5.3. Experimental Results for Question 1

5.3.1. Sub-Task 1

5.3.2. Sub-Task 2

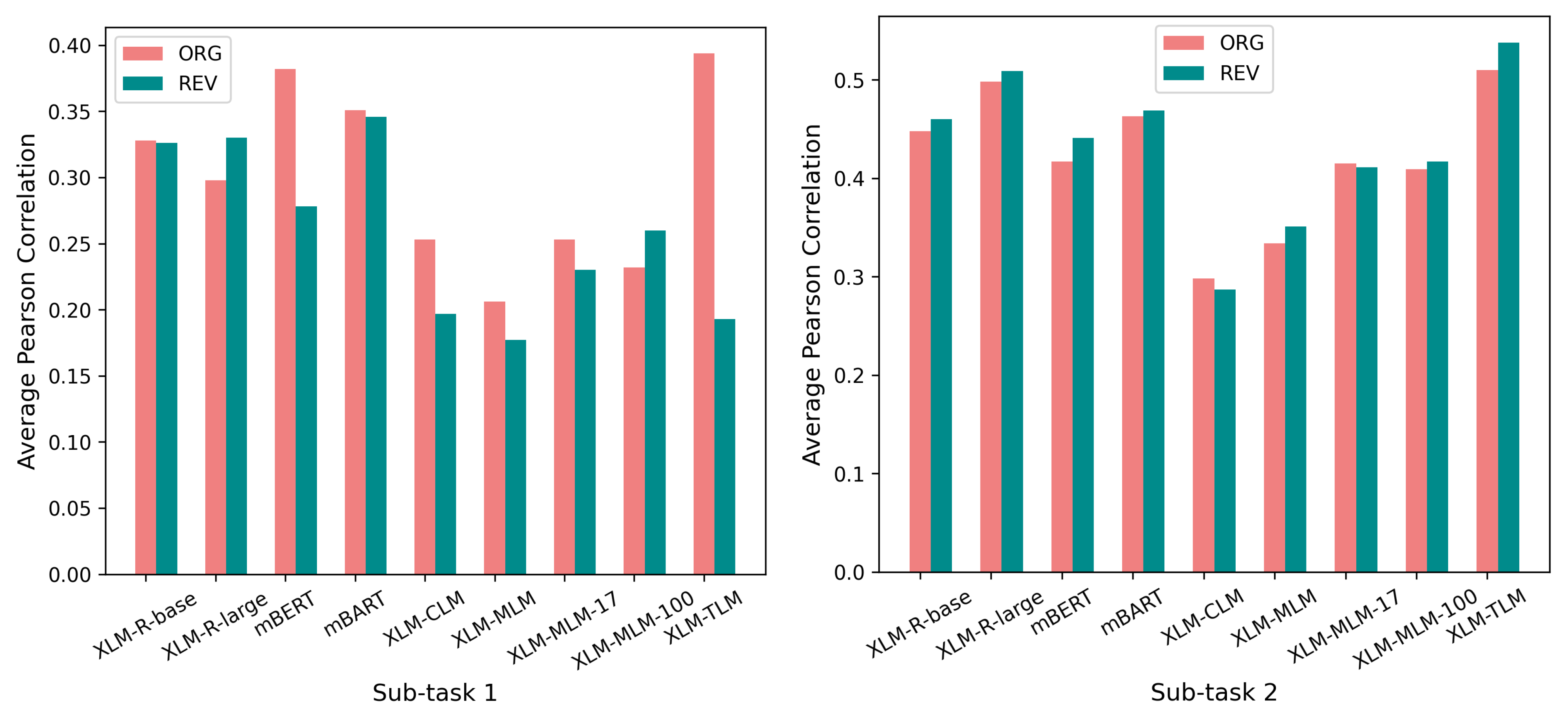

6. Question 2: Does the Input Order of the Source Sentence and the MT Output Sentence Affect the Performance of the Model?

6.1. Revisiting the QE Input Structure

6.2. Experimental Results for Question 2

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Specia, L.; Shah, K.; De Souza, J.G.; Cohn, T. QuEst-A translation quality estimation framework. In Proceedings of the 51st Annual Meeting of the Association for Computational Linguistics: System Demonstrations, Sofia, Bulgaria, 4–9 August 2013; pp. 79–84. [Google Scholar]

- Specia, L.; Raj, D.; Turchi, M. Machine translation evaluation versus quality estimation. Mach. Transl. 2010, 24, 39–50. [Google Scholar] [CrossRef]

- do Carmo, F.; Shterionov, D.; Moorkens, J.; Wagner, J.; Hossari, M.; Paquin, E.; Schmidtke, D.; Groves, D.; Way, A. A review of the state-of-the-art in automatic post-editing. Mach. Transl. 2020, 1–43. [Google Scholar] [CrossRef]

- Moura, J.; Vera, M.; van Stigt, D.; Kepler, F.; Martins, A.F. Ist-unbabel participation in the wmt20 quality estimation shared task. In Proceedings of the Fifth Conference on Machine Translation, Online, 19–20 November 2020; pp. 1029–1036. [Google Scholar]

- Nakamachi, A.; Shimanaka, H.; Kajiwara, T.; Komachi, M. Tmuou submission for wmt20 quality estimation shared task. In Proceedings of the Fifth Conference on Machine Translation, Online, 19–20 November 2020; pp. 1037–1041. [Google Scholar]

- Rubino, R. Nict kyoto submission for the wmt’20 quality estimation task: Intermediate training for domain and task adaptation. In Proceedings of the Fifth Conference on Machine Translation, Online, 19–20 November 2020; pp. 1042–1048. [Google Scholar]

- Pires, T.; Schlinger, E.; Garrette, D. How multilingual is multilingual bert? arXiv 2019, arXiv:1906.01502. [Google Scholar]

- Lample, G.; Conneau, A. Cross-lingual language model pretraining. arXiv 2019, arXiv:1901.07291. [Google Scholar]

- Conneau, A.; Khandelwal, K.; Goyal, N.; Chaudhary, V.; Wenzek, G.; Guzmán, F.; Grave, E.; Ott, M.; Zettlemoyer, L.; Stoyanov, V. Unsupervised cross-lingual representation learning at scale. arXiv 2019, arXiv:1911.02116. [Google Scholar]

- Conneau, A.; Lample, G.; Rinott, R.; Williams, A.; Bowman, S.R.; Schwenk, H.; Stoyanov, V. XNLI: Evaluating cross-lingual sentence representations. arXiv 2018, arXiv:1809.05053. [Google Scholar]

- Lewis, P.; Oğuz, B.; Rinott, R.; Riedel, S.; Schwenk, H. Mlqa: Evaluating cross-lingual extractive question answering. arXiv 2019, arXiv:1910.07475. [Google Scholar]

- Lee, D. Two-phase cross-lingual language model fine-tuning for machine translation quality estimation. In Proceedings of the Fifth Conference on Machine Translation, Online, 19–20 November 2020; pp. 1024–1028. [Google Scholar]

- Baek, Y.; Kim, Z.M.; Moon, J.; Kim, H.; Park, E. Patquest: Papago translation quality estimation. In Proceedings of the Fifth Conference on Machine Translation, Online, 19–20 November 2020; pp. 991–998. [Google Scholar]

- Fomicheva, M.; Sun, S.; Yankovskaya, L.; Blain, F.; Chaudhary, V.; Fishel, M.; Guzmán, F.; Specia, L. Bergamot-latte submissions for the wmt20 quality estimation shared task. In Proceedings of the Fifth Conference on Machine Translation, Online, 19–20 November 2020. [Google Scholar]

- Specia, L.; Blain, F.; Fomicheva, M.; Fonseca, E.; Chaudhary, V.; Guzmán, F.; Martins, A.F.T. Findings of the WMT 2020 Shared Task on Quality Estimation. In Proceedings of the Fifth Conference on Machine Translation, Online, 19–20 November 2020; pp. 743–764. [Google Scholar]

- Zhou, L.; Ding, L.; Takeda, K. Zero-shot translation quality estimation with explicit cross-lingual patterns. arXiv 2020, arXiv:2010.04989. [Google Scholar]

- Ranasinghe, T.; Orasan, C.; Mitkov, R. TransQuest: Translation Quality Estimation with Cross-lingual Transformers. In Proceedings of the 28th International Conference on Computational Linguistics, Barcelona, Spain, 12 December 2020; pp. 5070–5081. [Google Scholar] [CrossRef]

- Liu, Y.; Gu, J.; Goyal, N.; Li, X.; Edunov, S.; Ghazvininejad, M.; Lewis, M.; Zettlemoyer, L. Multilingual denoising pre-training for neural machine translation. Trans. Assoc. Comput. Linguist. 2020, 8, 726–742. [Google Scholar] [CrossRef]

- Papineni, K.; Roukos, S.; Ward, T.; Zhu, W.J. BLEU: A method for automatic evaluation of machine translation. In Proceedings of the 40th Annual Meeting on Association for Computational Linguistics, Philadelphia, PA, USA, 7–12 July 2002; pp. 311–318. [Google Scholar]

- Banerjee, S.; Lavie, A. METEOR: An automatic metric for MT evaluation with improved correlation with human judgments. In Proceedings of the Acl Workshop on Intrinsic and Extrinsic Evaluation Measures for Machine Translation and/or Summarization, Ann Arbor, MI, USA, 29 June 2005; pp. 65–72. [Google Scholar]

- Shah, K.; Cohn, T.; Specia, L. A bayesian non-linear method for feature selection in machine translation quality estimation. Mach. Transl. 2015, 29, 101–125. [Google Scholar] [CrossRef] [Green Version]

- Cohn, T.; Specia, L. Modelling annotator bias with multi-task gaussian processes: An application to machine translation quality estimation. In Proceedings of the 51st Annual Meeting of the Association for Computational Linguistics, Sofia, Bulgaria, 4–9 August 2013; Volume 1, pp. 32–42. [Google Scholar]

- Hardmeier, C.; Nivre, J.; Tiedemann, J. Tree kernels for machine translation quality estimation. In Proceedings of the Seventh Workshop on Statistical Machine Translation, Montréal, QC, Canada, 7–8 June 2012; pp. 109–113. [Google Scholar]

- Soricut, R.; Bach, N.; Wang, Z. The SDL language weaver systems in the WMT12 quality estimation shared task. In Proceedings of the Seventh Workshop on Statistical Machine Translation, Montreal, QC, Canada, 7–8 June 2012; pp. 145–151. [Google Scholar]

- Moreau, E.; Vogel, C. Quality estimation: An experimental study using unsupervised similarity measures. In Proceedings of the Seventh Workshop on Statistical Machine Translation, Montréal, QC, Canada, 7–8 June 2012; pp. 120–126. [Google Scholar]

- Felice, M.; Specia, L. Linguistic features for quality estimation. In Proceedings of the Seventh Workshop on Statistical Machine Translation, Montréal, QC, Canada, 7–8 June 2012; pp. 96–103. [Google Scholar]

- Scarton, C.; Specia, L. Exploring consensus in machine translation for quality estimation. In Proceedings of the Ninth Workshop on Statistical Machine Translation, Baltimore, MD, USA, 26–27 June 2014; pp. 342–347. [Google Scholar]

- Luong, N.Q.; Lecouteux, B.; Besacier, L. LIG system for WMT13 QE task: Investigating the usefulness of features in word confidence estimation for MT. In Proceedings of the 8th Workshop on Statistical Machine Translation, Sofia, Bulgaria, 8–9 August 2013; pp. 386–391. [Google Scholar]

- Kim, H.; Lee, J.H. Recurrent neural network based translation quality estimation. In Proceedings of the First Conference on Machine Translation, Berlin, Germany, 11–12 August 2016; Volume 2, pp. 787–792. [Google Scholar]

- Patel, R.N. Translation quality estimation using recurrent neural network. arXiv 2016, arXiv:1610.04841. [Google Scholar]

- Kim, H.; Lee, J.H.; Na, S.H. Predictor-estimator using multilevel task learning with stack propagation for neural quality estimation. In Proceedings of the Second Conference on Machine Translation, Copenhagen, Denmarkm, 7–8 September 2017; pp. 562–568. [Google Scholar]

- Wang, J.; Fan, K.; Li, B.; Zhou, F.; Chen, B.; Shi, Y.; Si, L. Alibaba submission for WMT18 quality estimation task. In Proceedings of the Third Conference on Machine Translation: Shared Task Papers, Brussels, Belgium, 31 October–1 November 2018; pp. 809–815. [Google Scholar]

- Kepler, F.; Trénous, J.; Treviso, M.; Vera, M.; Góis, A.; Farajian, M.A.; Lopes, A.V.; Martins, A.F. Unbabel’s Participation in the WMT19 Translation Quality Estimation Shared Task. arXiv 2019, arXiv:1907.10352. [Google Scholar]

- Kim, H.; Lim, J.H.; Kim, H.K.; Na, S.H. QE BERT: bilingual BERT using multi-task learning for neural quality estimation. In Proceedings of the Fourth Conference on Machine Translation, Florence, Italy, 1–2 August 2019; Volume 3, pp. 85–89. [Google Scholar]

- Ranasinghe, T.; Orasan, C.; Mitkov, R. TransQuest at WMT2020: Sentence-Level Direct Assessment. arXiv 2020, arXiv:2010.05318. [Google Scholar]

- Wang, M.; Yang, H.; Shang, H.; Wei, D.; Guo, J.; Lei, L.; Qin, Y.; Tao, S.; Sun, S.; Chen, Y.; et al. Hw-tsc’s participation at wmt 2020 quality estimation shared task. In Proceedings of the Fifth Conference on Machine Translation, Online, 19–20 November 2020; pp. 1056–1061. [Google Scholar]

- Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. Bert: Pre-training of deep bidirectional transformers for language understanding. arXiv 2018, arXiv:1810.04805. [Google Scholar]

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. In Proceedings of the Advances in Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; pp. 5998–6008. [Google Scholar]

- Wenzek, G.; Lachaux, M.A.; Conneau, A.; Chaudhary, V.; Guzman, F.; Joulin, A.; Grave, E. Ccnet: Extracting high quality monolingual datasets from web crawl data. arXiv 2019, arXiv:1911.00359. [Google Scholar]

- Lewis, M.; Liu, Y.; Goyal, N.; Ghazvininejad, M.; Mohamed, A.; Levy, O.; Stoyanov, V.; Zettlemoyer, L. Bart: Denoising sequence-to-sequence pre-training for natural language generation, translation, and comprehension. arXiv 2019, arXiv:1910.13461. [Google Scholar]

- Snover, M.; Dorr, B.; Schwartz, R.; Micciulla, L.; Makhoul, J. A study of translation edit rate with targeted human annotation. In Proceedings of the Association for Machine Translation in the Americas, Cambridge, MA, USA, 8–12 August 2006; Volume 200. [Google Scholar]

- Wolf, T.; Debut, L.; Sanh, V.; Chaumond, J.; Delangue, C.; Moi, A.; Cistac, P.; Rault, T.; Louf, R.; Funtowicz, M.; et al. HuggingFace’s Transformers: State-of-the-art natural language processing. arXiv 2019, arXiv:1910.03771. [Google Scholar]

- Park, C.; Yang, Y.; Park, K.; Lim, H. Decoding strategies for improving low-resource machine translation. Electronics 2020, 9, 1562. [Google Scholar] [CrossRef]

- Lee, C.; Yang, K.; Whang, T.; Park, C.; Matteson, A.; Lim, H. Exploring the Data Efficiency of Cross-Lingual Post-Training in Pretrained Language Models. Appl. Sci. 2021, 11, 1974. [Google Scholar] [CrossRef]

| Sub-Task 1 | Sub-Task 2 | |||||

|---|---|---|---|---|---|---|

| Train | Dev | Test | Train | Dev | Test | |

| # Instance | 7000 | 1000 | 1000 | 7000 | 1000 | 1000 |

| # SRC Token | 98,127 | 14,102 | 14,043 | 114,980 | 16,519 | 16,371 |

| # MT Token | 97,453 | 14,003 | 14,019 | 112,342 | 16,160 | 16,154 |

| Average Score | −0.008 | −0.049 | 0.040 | 0.318 | 0.312 | 0.312 |

| Median Score | 0.162 | 0.211 | 0.319 | 0.3 | 0.295 | 0.286 |

| Pearson | MAE | RMSE | |||||||

|---|---|---|---|---|---|---|---|---|---|

| Max | Min | Average | Min | Max | Average | Min | Max | Average | |

| XLM-R-base | 0.380 | 0.280 | 0.328 | 0.459 | 0.479 | 0.473 | 0.648 | 0.679 | 0.665 |

| XLM-R-large | 0.338 | 0.242 | 0.298 | 0.480 | 0.520 | 0.495 | 0.685 | 0.713 | 0.698 |

| mBERT | 0.407 | 0.322 | 0.382 | 0.452 | 0.468 | 0.458 | 0.642 | 0.672 | 0.655 |

| mBART | 0.402 | 0.306 | 0.351 | 0.465 | 0.534 | 0.490 | 0.642 | 0.729 | 0.677 |

| XLM-CLM | 0.296 | 0.168 | 0.253 | 0.474 | 0.516 | 0.489 | 0.683 | 0.703 | 0.691 |

| XLM-MLM | 0.219 | 0.192 | 0.206 | 0.493 | 0.526 | 0.503 | 0.693 | 0.728 | 0.708 |

| XLM-MLM-17 | 0.318 | 0.143 | 0.253 | 0.465 | 0.525 | 0.490 | 0.670 | 0.731 | 0.696 |

| XLM-MLM-100 | 0.256 | 0.191 | 0.232 | 0.482 | 0.536 | 0.498 | 0.690 | 0.702 | 0.695 |

| XLM-TLM | 0.442 | 0.336 | 0.394 | 0.451 | 0.683 | 0.517 | 0.631 | 0.805 | 0.681 |

| Pearson | MAE | RMSE | |||||||

|---|---|---|---|---|---|---|---|---|---|

| Max | Min | Average | Min | Max | Average | Min | Max | Average | |

| XLM-R-base | 0.456 | 0.438 | 0.448 | 0.146 | 0.156 | 0.150 | 0.189 | 0.204 | 0.195 |

| XLM-R-large | 0.507 | 0.489 | 0.498 | 0.141 | 0.155 | 0.145 | 0.178 | 0.204 | 0.186 |

| mBERT | 0.435 | 0.389 | 0.417 | 0.149 | 0.182 | 0.160 | 0.189 | 0.230 | 0.204 |

| mBART | 0.475 | 0.452 | 0.463 | 0.142 | 0.148 | 0.144 | 0.179 | 0.195 | 0.184 |

| XLM-CLM | 0.309 | 0.275 | 0.298 | 0.158 | 0.161 | 0.159 | 0.196 | 0.200 | 0.198 |

| XLM-MLM | 0.358 | 0.303 | 0.334 | 0.156 | 0.160 | 0.158 | 0.194 | 0.199 | 0.197 |

| XLM-MLM-17 | 0.433 | 0.408 | 0.415 | 0.149 | 0.157 | 0.154 | 0.188 | 0.192 | 0.190 |

| XLM-MLM-100 | 0.421 | 0.381 | 0.409 | 0.152 | 0.164 | 0.158 | 0.190 | 0.207 | 0.198 |

| XLM-TLM | 0.522 | 0.498 | 0.510 | 0.152 | 0.222 | 0.177 | 0.199 | 0.273 | 0.227 |

| Pearson | MAE | RMSE | ||||||||

|---|---|---|---|---|---|---|---|---|---|---|

| Max | Min | Average | Avg Diff | Min | Max | Average | Min | Max | Average | |

| XLM-R-base | 0.365 | 0.272 | 0.326 | −0.002 | 0.462 | 0.495 | 0.481 | 0.653 | 0.698 | 0.670 |

| XLM-R-large | 0.394 | 0.260 | 0.330 | +0.032 | 0.447 | 0.508 | 0.479 | 0.644 | 0.729 | 0.681 |

| mBERT | 0.402 | 0.106 | 0.278 | −0.104 | 0.453 | 0.553 | 0.498 | 0.648 | 0.762 | 0.700 |

| mBART | 0.388 | 0.277 | 0.346 | −0.005 | 0.436 | 0.543 | 0.478 | 0.664 | 0.693 | 0.674 |

| XLM-CLM | 0.268 | 0.147 | 0.197 | −0.056 | 0.483 | 0.515 | 0.502 | 0.688 | 0.714 | 0.698 |

| XLM-MLM | 0.250 | 0.128 | 0.177 | −0.029 | 0.517 | 0.557 | 0.540 | 0.694 | 0.751 | 0.727 |

| XLM-MLM-17 | 0.267 | 0.172 | 0.230 | −0.023 | 0.482 | 0.502 | 0.491 | 0.682 | 0.713 | 0.693 |

| XLM-MLM-100 | 0.314 | 0.189 | 0.260 | +0.028 | 0.503 | 0.587 | 0.544 | 0.666 | 0.748 | 0.709 |

| XLM-TLM | 0.234 | 0.141 | 0.193 | −0.201 | 0.563 | 1.115 | 0.896 | 0.739 | 1.237 | 1.061 |

| Pearson | MAE | RMSE | ||||||||

|---|---|---|---|---|---|---|---|---|---|---|

| Max | Min | Average | Avg Diff | Min | Max | Average | Min | Max | Average | |

| XLM-R-base | 0.464 | 0.453 | 0.460 | +0.012 | 0.144 | 0.153 | 0.148 | 0.184 | 0.199 | 0.191 |

| XLM-R-large | 0.523 | 0.501 | 0.509 | +0.011 | 0.140 | 0.144 | 0.142 | 0.178 | 0.188 | 0.183 |

| mBERT | 0.449 | 0.434 | 0.441 | +0.024 | 0.147 | 0.179 | 0.162 | 0.185 | 0.229 | 0.207 |

| mBART | 0.478 | 0.463 | 0.469 | +0.006 | 0.141 | 0.151 | 0.145 | 0.179 | 0.196 | 0.187 |

| XLM-CLM | 0.297 | 0.283 | 0.287 | −0.011 | 0.159 | 0.162 | 0.160 | 0.197 | 0.205 | 0.199 |

| XLM-MLM | 0.364 | 0.333 | 0.351 | +0.017 | 0.153 | 0.159 | 0.156 | 0.193 | 0.200 | 0.196 |

| XLM-MLM-17 | 0.420 | 0.405 | 0.411 | −0.004 | 0.154 | 0.218 | 0.172 | 0.190 | 0.273 | 0.217 |

| XLM-MLM-100 | 0.442 | 0.405 | 0.417 | +0.008 | 0.151 | 0.183 | 0.161 | 0.187 | 0.220 | 0.196 |

| XLM-TLM | 0.552 | 0.526 | 0.538 | +0.028 | 0.156 | 0.168 | 0.163 | 0.204 | 0.218 | 0.212 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Eo, S.; Park, C.; Moon, H.; Seo, J.; Lim, H. Comparative Analysis of Current Approaches to Quality Estimation for Neural Machine Translation. Appl. Sci. 2021, 11, 6584. https://doi.org/10.3390/app11146584

Eo S, Park C, Moon H, Seo J, Lim H. Comparative Analysis of Current Approaches to Quality Estimation for Neural Machine Translation. Applied Sciences. 2021; 11(14):6584. https://doi.org/10.3390/app11146584

Chicago/Turabian StyleEo, Sugyeong, Chanjun Park, Hyeonseok Moon, Jaehyung Seo, and Heuiseok Lim. 2021. "Comparative Analysis of Current Approaches to Quality Estimation for Neural Machine Translation" Applied Sciences 11, no. 14: 6584. https://doi.org/10.3390/app11146584

APA StyleEo, S., Park, C., Moon, H., Seo, J., & Lim, H. (2021). Comparative Analysis of Current Approaches to Quality Estimation for Neural Machine Translation. Applied Sciences, 11(14), 6584. https://doi.org/10.3390/app11146584