English–Welsh Cross-Lingual Embeddings

Abstract

1. Introduction

- Cross-lingual embeddings: we train, evaluate, and release a wealth of cross-lingual English–Welsh word embeddings.

- Train and test dictionary data: we release to the community a bilingual English–Welsh dictionary with a fixed train/test split, to foster reproducible research in Welsh NLP development.

- Sentiment analysis: we train, evaluate, and release a Welsh sentiment analysis system, fine-tuned on the domain of movie reviews.

- Qualitative analysis: we analyze some of the properties (in terms of nearest neighbors) of the cross-lingual spaces, and discuss them in the context of avenues for future work.
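
The fixed train/test split mentioned in the second contribution can be illustrated with a minimal sketch. The function name `split_dictionary`, the 20% test fraction, the seed, and the grouping by English source word are all assumptions made for illustration and are not taken from the released resource; the example pairs reuse words that appear in the qualitative table.

```python
import random


def split_dictionary(pairs, test_fraction=0.2, seed=13):
    """Deterministically split (english, welsh) pairs into train and test lists.

    Hypothetical helper: the ratio, the seed, and the grouping strategy are
    illustrative assumptions, not the procedure used for the released data.
    """
    # Group translations by English source word so that every translation
    # of a given word ends up on the same side of the split.
    by_source = {}
    for en, cy in pairs:
        by_source.setdefault(en, []).append(cy)

    # Sort before shuffling so the result does not depend on input order,
    # then shuffle with a fixed seed so every run yields the same split.
    sources = sorted(by_source)
    random.Random(seed).shuffle(sources)

    n_test = max(1, round(len(sources) * test_fraction))
    test = [(en, cy) for en in sources[:n_test] for cy in sorted(by_source[en])]
    train = [(en, cy) for en in sources[n_test:] for cy in sorted(by_source[en])]
    return train, test


pairs = [
    ("swim", "nofio"),
    ("rain", "glaw"),
    ("happy", "hapus"),
    ("software", "meddalwedd"),
    ("software", "feddalwedd"),
    ("longing", "hiraeth"),
]
train, test = split_dictionary(pairs)
print(len(train), "training pairs;", len(test), "test pairs")
```

Grouping by source word is one way to keep mutated forms such as meddalwedd and feddalwedd from straddling the split; other designs are equally plausible.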

2. Background

2.1. Welsh Language NLP

2.2. Cross-Lingual Embeddings

3. Materials

3.1. Corpora

3.2. Text Corpus Creation

3.3. Word Embeddings

3.4. Bilingual Dictionary

4. Methods

5. Results

5.1. Quantitative Evaluation

5.2. Qualitative Evaluation

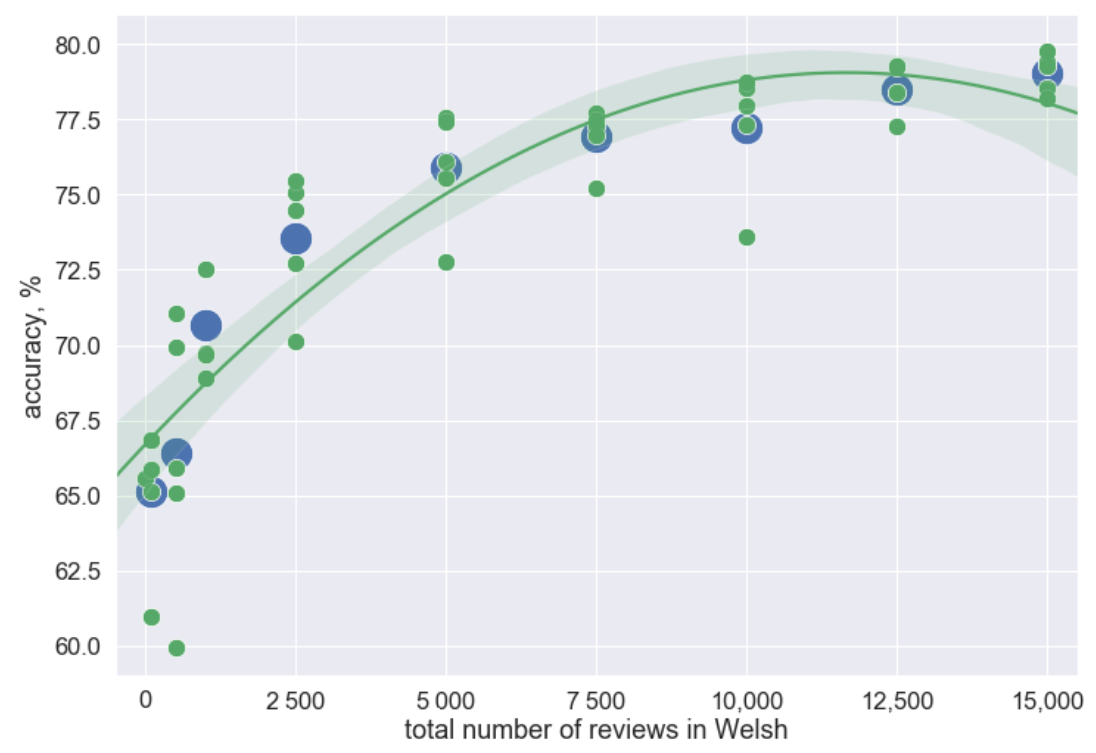

5.3. Extrinsic Evaluation

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Corpus | Number of Words |
|---|---|
| Welsh Wikipedia | 21,233,177 |
| Proceedings of the Welsh Assembly 1999–2006 | 11,527,963 |
| Proceedings of the Welsh Assembly 2007–2011 | 8,883,870 |
| The Bible | 749,573 |
| OPUS translated texts | 1,224,956 |
| Welsh Government translation memories | 1,857,267 |
| Proceedings of the Welsh Assembly 2016–2020 | 17,117,715 |
| Cronfa Electroneg o Gymraeg | 1,046,800 |
| An Crúbadán | 22,572,066 |
| DECHE | 2,126,153 |
| BBC Cymru Fyw | 14,791,835 |
| Gwerddon | 732,175 |
| Welsh-medium websites | 7,388,917 |
| CorCenCC | 10,630,657 |
| S4C subtitles | 26,931,013 |

| Model | Dimensions | Min. frequency (MF) | Context window (CW) | Retrieval | Accuracy |
|---|---|---|---|---|---|
| fastText | 500 | 6 | 6 | CSLS | 22.92 |
| fastText | 500 | 6 | 4 | CSLS | 21.85 |
| fastText | 500 | 6 | 8 | CSLS | 21.75 |
| word2vec | 300 | 6 | 4 | CSLS | 21.75 |
| word2vec | 500 | 6 | 8 | CSLS | 21.46 |
| word2vec | 300 | 6 | 6 | CSLS | 21.46 |
| word2vec | 500 | 6 | 4 | CSLS | 21.46 |
| word2vec | 300 | 6 | 8 | CSLS | 21.36 |
| word2vec | 500 | 6 | 6 | CSLS | 21.36 |
| fastText | 500 | 3 | 4 | CSLS | 20.46 |
| fastText | 500 | 3 | 8 | CSLS | 19.75 |
| fastText | 500 | 3 | 6 | CSLS | 19.36 |
| word2vec | 300 | 3 | 8 | CSLS | 19.22 |
| word2vec | 500 | 3 | 6 | CSLS | 19.22 |
| word2vec | 500 | 3 | 8 | CSLS | 19.18 |
| word2vec | 300 | 3 | 6 | CSLS | 18.83 |
| fastText | 300 | 6 | 4 | CSLS | 18.57 |
| word2vec | 300 | 6 | 4 | invsoftmax | 18.48 |
| word2vec | 300 | 6 | 8 | invsoftmax | 18.48 |
| word2vec | 300 | 6 | 8 | NN | 18.43 |
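
The Retrieval column distinguishes plain nearest-neighbour search (NN) from cross-domain similarity local scaling (CSLS), which penalises "hub" vectors that sit close to many queries. The sketch below shows how the two rules can disagree. It assumes both embedding sets are already mapped into a shared space; the function names, the neighbourhood size `k`, and the 2-d toy vectors are invented for illustration and do not reproduce the evaluation behind the table. Inverted softmax (invsoftmax) is not covered.

```python
import numpy as np


def normalize(m):
    """Scale every row to unit length so dot products are cosine similarities."""
    return m / np.linalg.norm(m, axis=1, keepdims=True)


def nn_retrieve(src, tgt):
    """For each source row, the index of the most cosine-similar target row."""
    sims = normalize(src) @ normalize(tgt).T
    return sims.argmax(axis=1)


def csls_retrieve(src, tgt, k=10):
    """CSLS retrieval: 2*cos(x, y) - r_tgt(x) - r_src(y)."""
    sims = normalize(src) @ normalize(tgt).T  # shape (n_src, n_tgt)
    k_tgt = min(k, sims.shape[1])
    k_src = min(k, sims.shape[0])
    # Mean similarity of each source word to its k nearest target words.
    r_src = np.sort(sims, axis=1)[:, -k_tgt:].mean(axis=1)
    # Mean similarity of each target word to its k nearest source words;
    # this term is large for hubs and pushes their scores down.
    r_tgt = np.sort(sims, axis=0)[-k_src:, :].mean(axis=0)
    csls = 2 * sims - r_src[:, None] - r_tgt[None, :]
    return csls.argmax(axis=1)


def accuracy(predicted, gold):
    """Percentage of source words whose retrieved index matches the gold index."""
    return 100.0 * float(np.mean(predicted == gold))


def unit(deg):
    """2-d unit vector at the given angle (toy data only)."""
    rad = np.deg2rad(deg)
    return [np.cos(rad), np.sin(rad)]


# Two source words, their two gold translations, and one hub (index 2)
# that lies between both source words.
src = np.array([unit(0), unit(60)])
tgt = np.array([unit(-35), unit(95), unit(30)])
gold = np.array([0, 1])

nn_pred = nn_retrieve(src, tgt)
csls_pred = csls_retrieve(src, tgt, k=2)
print("NN accuracy:", accuracy(nn_pred, gold))
print("CSLS accuracy:", accuracy(csls_pred, gold))
```

In this toy setup both source words pick the hub under NN, while CSLS recovers both gold translations because the hub's high average similarity to the source words is subtracted from its score.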

| word_cy | Closest English Words to word_cy | word_en | Closest Welsh Words to word_en |
|---|---|---|---|
| nofio (swim) | swim, swimming, kayak, paddling, rowing, waterski, swam, watersport, iceskating, canoe | swim | nofio (swim), deifio (diving), cerdded (walking), blymio (diving), padlo (paddling), arnofio (floating), sblasio (splashing), troelli (spinning), neidio (jumping) |
| glaw (rain) | rain, snow, fog, heavyrain, downpour, rainstorm, heavyrains, snowfall, rainy, mist | rain | glaw (rain), eira (snow), cenllysg (hail), wlith (dew), cawodydd (showers), rhew (frost), taranau (thunder), barrug (frost), genllysg (hail), dafnau (drops) |
| hapus (happy) | happy, pleased, glad, grateful, delighted, thankful, anxious, eager, fortunate, confident | happy | hapus (happy), bodlon (satisfied), ffeind (kind), llawen (joyful), rhyfedd (strange), trist (sad), llon (cheerful), cysurus (comfortable), hoenus (cheerful), nerfus (nervous) |
| meddalwedd (software) | software, application, computer, system, tool, ibm, hardware, technology, database, device | software | meddalwedd (software), feddalwedd (software), caledwedd (hardware), dyfeisiau (devices), amgryptio (encryption), dyfeisiadau (inventions), cymwysiadau (applications), algorithm (algorithm), dyfais (device), ategyn (plugin) |
| ffrangeg (French language) | Arabic, Hebrew, Hindi, Arabiclanguage, language, urdu, sanskrit, haitiancreole, English | French | ffrengig (French), sbaenaidd (Spanish), almaeneg (German language), archentaidd (Argentinian), gwyddelig (Irish), twrcaidd (Turkish), llydewig (Breton), almaenaidd (German), danaidd (Danish), imperialaidd (imperial) |
| croissant (croissant) | frenchfries, yogurt, applesauce, currysauce, mulledwine, mozzarellacheese, noodlesoup, buñuelo, chilisauce, misosoup | croissant | bisgedi (biscuits), twmplenni (dumplings), byns (buns), teisennau (cakes), bacwn (bacon), caramel (caramel), melysfwyd (confectionery), cwstard (custard), marmaled (marmalade) |
| gwario (spend money) | expend, invest, reinvest, pay, allot, allocate, disburse, economize, retrench, accrue | spend | treulio (spend time), dreulio (spend time), gwario (spend money), aros (wait), threulio (spend time), gwastraffu (wasting), dychwelyd (returning), nychu (languishing), hala (spend money), byw (live) |
| hiraeth (longing) | longing, sadness, yearning, sorrow, anguish, loneliness, grief, feeling, ennui, heartache | longing | hiraeth (longing), galar (grief), anwyldeb (dearness), tristwch (sadness), nwyd (passion), gorfoledd (exultation), tosturi (compassion), tynerwch (tenderness), nwyf (vivacity), hyfrydwch (loveliness) |
| caerdydd (Cardiff) | Docklands, Southbank, Brisbane, Frankston, downtown, Thessaloniki, Coquitlam, Melbourne, Bayside, Glasgow | Cardiff | Abertawe (Swansea), nantporth (Nantporth), aberystwyth (Aberystwyth), llanelli (Llanelli), glynebwy (Ebbw Vale), caerdydd (Cardiff), porthcawl (Porthcawl), llandudno (Llandudno), awyr (sky), wrecsam (Wrexham) |
| Tonypandy (Tonypandy) | Edgewareroad, Blakelaw, Upperdicker, Ainleytop, Bilsthorpe, Romanby, Killay, Llanwonno, Penllergaer, Greenrigg | Tonypandy | aberdâr (Aberdare), Senghennydd (Senghennydd), aberpennar (Mountain Ash), brynaman (Brynamman), aberdar (Aberdare), coedpoeth (Coedpoeth), llanidloes (Llanidloes), brynbuga (Usk), penycae (Penycae), tymbl (Tumble) |
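
Neighbour lists like those in the table above can be produced by ranking the other language's vocabulary by cosine similarity to a query vector in the shared space. The following is a minimal sketch under that assumption; `top_k_neighbours` is a hypothetical helper, and the 3-d vectors are invented toy values, not entries from the released embeddings.

```python
import numpy as np


def top_k_neighbours(query_vec, vocab, matrix, k=3):
    """Return the k (word, cosine) pairs from `vocab` closest to `query_vec`.

    `matrix` holds one embedding per row, aligned with `vocab`.
    """
    rows = matrix / np.linalg.norm(matrix, axis=1, keepdims=True)
    query = query_vec / np.linalg.norm(query_vec)
    sims = rows @ query
    order = np.argsort(-sims)[:k]  # indices sorted by descending similarity
    return [(vocab[i], float(sims[i])) for i in order]


# Toy vectors, invented for illustration only.
cy_vocab = ["nofio", "glaw", "eira"]
cy_vecs = np.array([[0.9, 0.1, 0.0], [0.1, 0.9, 0.2], [0.0, 0.8, 0.5]])
en_vocab = ["swim", "rain", "snow"]
en_vecs = np.array([[1.0, 0.0, 0.1], [0.0, 1.0, 0.1], [0.1, 0.7, 0.6]])

# Welsh-to-English: neighbours of "glaw" among the English words.
cy_to_en = top_k_neighbours(cy_vecs[cy_vocab.index("glaw")], en_vocab, en_vecs)
# English-to-Welsh: neighbours of "rain" among the Welsh words.
en_to_cy = top_k_neighbours(en_vecs[en_vocab.index("rain")], cy_vocab, cy_vecs)
print([word for word, _ in cy_to_en])
print([word for word, _ in en_to_cy])
```

Running the query in both directions matters because the two neighbour lists need not mirror each other, as the caerdydd and Cardiff rows show.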

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Espinosa-Anke, L.; Palmer, G.; Corcoran, P.; Filimonov, M.; Spasić, I.; Knight, D. English–Welsh Cross-Lingual Embeddings. Appl. Sci. 2021, 11, 6541. https://doi.org/10.3390/app11146541
