Abstract

In the past few decades, metaheuristics have demonstrated their suitability for addressing complex problems in different domains. This success drives the scientific community towards the definition of new and better-performing heuristics and has increased interest in this research field. Nevertheless, new studies have focused on developing new algorithms without consolidating the existing knowledge. Furthermore, the lack of rigor and formalism in classifying, designing, and developing combinatorial optimization problems and metaheuristics is a challenge to the field’s progress. This study discusses the main concepts and challenges in this area and proposes a formalism to classify, design, and code combinatorial optimization problems and metaheuristics. We believe these contributions may support the progress of the field and increase the maturity of metaheuristics as problem solvers analogous to other machine learning algorithms.

1. Introduction

Combinatorial optimization problems (COPs), especially real-world COPs, are challenging because they are difficult to formulate and are generally hard to solve [1,2,3]. Additionally, choosing the proper “solver algorithm” and defining its best configuration is also a difficult task due to the existence of several solvers characterized by different parametrizations. Metaheuristics are widely recognized as powerful solvers for COPs, even for hard optimization problems [2,4,5,6,7,8]. In some situations, they are the only feasible approach due to the dimensionality of the search space that characterizes the COP at hand.

Metaheuristics (MH) are general heuristics that work at a meta-level, and they can be applied to a wide variety of optimization problems. They are problem-agnostic techniques [4,9,10,11,12,13] that establish an iterative search process to find optimal or near-optimal solutions for a given problem [6,14,15]. Metaheuristics are extensively used in areas such as finance, production management, healthcare, telecommunications, and computing, where many of the problems are NP-hard [2,10,16].

Nevertheless, no single metaheuristic performs well on all categories of optimization problems: an approach that works well on some problems may perform poorly on others, as shown by the “No Free Lunch” theorems [14,15,17,18,19]. This has motivated the research community to develop a vast number of metaheuristics [6,15,19].

Theoretically, metaheuristics are not tied to a specific problem, which allows the same heuristic to be used to solve different types of COPs, and they can be adapted to incorporate problem-specific knowledge [4]. In practice, however, applying them always requires some level of customization to fit the peculiarities of a COP. For instance, they are not plug-and-play in the way scikit-learn classifiers are, where data and parameters are simply passed as input.

This customization requires effort to ensure that metaheuristics can properly manipulate the representation of COP solutions, for example, through exploration and exploitation methods compatible with the solution encoding. Moreover, the COP formulation must also provide the features (data and behaviors) that metaheuristics need for the search process to work properly and find an optimal solution.
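To make the idea of problem-side “data and behaviors” concrete, the following is a minimal Python sketch, not the ToGO API: a hypothetical `Problem` interface exposing `random_solution`, `neighbor`, and `fitness`, a toy `OneMax` problem, and a `local_search` routine that talks only to the interface. All names are illustrative assumptions.

```python
import random
from abc import ABC, abstractmethod
from typing import Generic, List, Tuple, TypeVar

S = TypeVar("S")  # solution type; each problem chooses its own encoding


class Problem(ABC, Generic[S]):
    """Hypothetical interface: the behaviors a metaheuristic needs from a COP."""

    @abstractmethod
    def random_solution(self, rng: random.Random) -> S:
        """Create a solution that respects the problem's encoding rules."""

    @abstractmethod
    def neighbor(self, solution: S, rng: random.Random) -> S:
        """Return a modified copy that still respects the encoding rules."""

    @abstractmethod
    def fitness(self, solution: S) -> float:
        """Quality of a solution; lower is better in this sketch."""


class OneMax(Problem[List[int]]):
    """Toy bit-string problem: minimize the number of zeros."""

    def __init__(self, n: int) -> None:
        self.n = n

    def random_solution(self, rng: random.Random) -> List[int]:
        return [rng.randint(0, 1) for _ in range(self.n)]

    def neighbor(self, solution: List[int], rng: random.Random) -> List[int]:
        copy = solution[:]
        i = rng.randrange(self.n)
        copy[i] = 1 - copy[i]  # flip one bit
        return copy

    def fitness(self, solution: List[int]) -> float:
        return float(self.n - sum(solution))


def local_search(problem: Problem[S], iterations: int, seed: int = 0) -> Tuple[S, float]:
    """Problem-agnostic hill climber: it uses only the interface methods."""
    rng = random.Random(seed)
    best = problem.random_solution(rng)
    best_fit = problem.fitness(best)
    for _ in range(iterations):
        candidate = problem.neighbor(best, rng)
        cand_fit = problem.fitness(candidate)
        if cand_fit <= best_fit:  # accept equal or better moves
            best, best_fit = candidate, cand_fit
    return best, best_fit


solution, fit = local_search(OneMax(20), iterations=2000)
```

Any other problem implementing the same three methods (for example, a permutation-encoded TSP with a swap neighbor) could be passed to `local_search` unchanged, which is the kind of decoupling the paragraph argues for.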

The lack of rules and formalisms to standardize the formulation of COPs, and of a software design pattern to develop metaheuristics, makes this activity more challenging and hinders the progress and consolidation of the field. The absence of standardization and of guidelines to organize COPs and metaheuristics [5,7,9,12,17,18] also makes it difficult to classify and compare the behavior and performance of metaheuristics.

As a result, researchers often start “almost” from scratch [3,5,9,16,18] by building their own version of the algorithm, which reduces the potential to reuse COPs (as “pieces of software”) and metaheuristics. Because COPs and metaheuristics are usually not designed as independent software units, making them interoperate without code changes is, most of the time, impossible. Extra effort is therefore needed to develop or adjust metaheuristics for each specific problem [3,4,7,20], reducing the time available for analyzing and tuning the results.

This study aims to provide a thorough review of COPs and MHs by presenting the main concepts, algorithms, and challenges. Using the insights of this review as a basis, this research proposes a standardization and a framework for COPs and MHs, named ToGO (Towards Global Optimal). Therefore, this is a review paper accompanied by a framework proposal that addresses open questions and well-known issues raised by the research community. To guide this research, we formulated five research questions, conceived as guidelines to conduct the research and organize its concepts, definitions, discussions, decisions, and conclusions.

1.1. Research Objective and Questions

This review paper aims to organize a body of knowledge by arranging the fundamental concepts and building blocks necessary to design and implement COPs and MHs. Consequently, this paper has two main objectives: Section 1 provides a review of the field, and Section 2 proposes a standardization. The review investigates two central points: the most important concepts and definitions of COPs and MHs (Section 1.1) and the main challenges of the field (Section 1.2). For the second objective, this work must consider at least three classes of behavior: the combinatorial optimization problem and its building blocks (Section 2.1), the metaheuristics interface and interactions (Section 2.2), and the typical operations that a framework structure should consider (Section 2.3). To address these objectives, we formulated five research questions. Before describing each research question, it is important to highlight that RQ-1 and RQ-2 address the review objective of this paper. Moreover, they were designed, especially RQ-1, to provide essential inputs to the other research questions (RQ-3, RQ-4, and RQ-5).

RQ-1: What are the fundamental concepts of COP (combinatorial optimization problem) and metaheuristics?

Rationale: The answer to this question aims to offer the knowledge necessary to comprehend this research and its fundamental concepts. These concepts are vital for the reader and provide a broad perspective as a starting point in the COP and metaheuristics field. They should also support understanding of the standard and the definitions proposed in this study.

RQ-2: What are the main challenges of the COP and metaheuristics research field?

Rationale: This question intends to draw attention to fundamental issues of the COP and metaheuristics research field by investigating several papers and other sources of knowledge. Consequently, it can also point to future studies that require the community’s attention. Additionally, some of these open questions can provide valuable contributions to RQ-3, RQ-4, and RQ-5, considering that some issues can be addressed through guidelines and formalism.

RQ-3: What are the features of COP and metaheuristics that could be developed as independent methods in a framework structure?

Rationale: By answering this question, the main idea is to identify the elements that should be part of a framework structure because any COP or heuristic can reuse them in other situations (without coding effort in future problems). This avoids re-developing components that could be reused. It also reduces the effort needed to formulate a problem or develop a metaheuristic, making them leaner and simpler to define.

RQ-4: How can we develop a body of knowledge to formulate combinatorial optimization problems in a standard way?

Rationale: This question has many facets, encompassing the conceptual and practical perspectives needed to design and implement COPs in a generic way. Therefore, a problem standard must consider a wide range of problem specificities and several aspects of communication with heuristics and framework methods. The objective is to provide guidelines to formulate COPs in a standard way so that they can be manipulated by any method that knows the standard.

RQ-5: How can we define a standard design for metaheuristics with no hard-coded links to specific problems?

Rationale: The answer to this question should provide a software pattern for metaheuristics, defining a set of behaviors and standard API (Application Programming Interface). Furthermore, a metaheuristics standard must follow a problem standard that also provides its own API. Therefore, it must know how to manipulate the COP by being aware of its behaviors. Consequently, the answer to RQ-4 will support the answer to this question.

1.2. Out of Scope (Delimitations)

This review paper does not consider publication intensity. Consequently, it does not list the main sources of research such as conferences, proceedings, journals, etc. The list of references provides several papers that may guide the reader through this research area.

2. Materials and Methods

This section provides a comprehensive review of the fundamental definitions of combinatorial optimization problems and metaheuristics, supplying the knowledge the reader needs to understand these subjects and the standard proposed in this work. Therefore, this section also aims to answer RQ-1: What are the fundamental concepts of COP (combinatorial optimization problem) and metaheuristics?

2.1. Optimization

Optimization can be summarized as a collection of techniques and algorithms [6,8,16,21,22] to find the best possible solution (optimal or near-optimal) iteratively. From a searching approach perspective, they can be organized into three classes of methods: (1) enumerative, (2) deterministic, and (3) stochastic [10,23,24,25,26].

Enumerative methods follow a brute-force approach: they inspect all possible solutions in the search space. Consequently, they always find the global optimum. Computationally speaking, however, this approach only works for small search spaces. The most challenging problems, with vast search spaces, may require an enormous amount of time to list all solutions, which is infeasible most of the time. In these cases, different approaches are required.
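As a small illustration of why enumeration only scales to tiny instances, the sketch below solves a 4-city TSP by inspecting every tour; the distance matrix is invented for the example. With the start city fixed, it evaluates (n - 1)! tours.

```python
from itertools import permutations
from math import factorial


def tour_length(tour, dist):
    """Sum of edge lengths, including the edge back to the start city."""
    return sum(dist[tour[i]][tour[(i + 1) % len(tour)]] for i in range(len(tour)))


def brute_force_tsp(dist):
    """Enumerative method: inspect every tour and keep the shortest."""
    n = len(dist)
    best_tour, best_len = None, float("inf")
    # City 0 is fixed as the start so that rotations of a tour are not counted twice.
    for rest in permutations(range(1, n)):
        tour = (0,) + rest
        length = tour_length(tour, dist)
        if length < best_len:
            best_tour, best_len = tour, length
    return best_tour, best_len


dist = [
    [0, 1, 4, 2],
    [1, 0, 2, 5],
    [4, 2, 0, 3],
    [2, 5, 3, 0],
]
print(brute_force_tsp(dist))  # ((0, 1, 2, 3), 8)
print(factorial(len(dist) - 1), "tours evaluated")  # 6 tours evaluated
```

For 20 cities, the same loop would have to evaluate 19! (about 1.2 × 10^17) tours, which is why exhaustive search is rarely feasible.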

Deterministic methods can be seen as an extension of the enumerative approach: they use knowledge of the problem to find alternative ways to navigate the search space. They are excellent problem-solvers for a wide variety of situations, but they are problem-dependent and therefore struggle when knowledge of the problem cannot be applied effectively. They are called deterministic because their parameter values and initialization determine their result: for a given configuration and input, the output solution is always the same.
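Dynamic programming for the 0/1 knapsack problem is one example of a deterministic, problem-dependent method: it exploits the problem’s optimal-substructure property instead of enumerating all 2^n subsets, and the same input always yields the same output. The sketch below is a standard textbook formulation chosen here for illustration; the item data are invented.

```python
def knapsack_dp(values, weights, capacity):
    """0/1 knapsack by dynamic programming (deterministic, problem-specific)."""
    n = len(values)
    # best[i][c] = best value using only the first i items with capacity c
    best = [[0] * (capacity + 1) for _ in range(n + 1)]
    for i in range(1, n + 1):
        v, w = values[i - 1], weights[i - 1]
        for c in range(capacity + 1):
            best[i][c] = best[i - 1][c]  # option 1: skip item i-1
            if w <= c:  # option 2: take item i-1 if it fits
                best[i][c] = max(best[i][c], best[i - 1][c - w] + v)
    # Walk back through the table to recover which items were taken.
    chosen, c = [], capacity
    for i in range(n, 0, -1):
        if best[i][c] != best[i - 1][c]:
            chosen.append(i - 1)
            c -= weights[i - 1]
    return best[n][capacity], sorted(chosen)


value, items = knapsack_dp([60, 100, 120], [10, 20, 30], capacity=50)
print(value, items)  # 220 [1, 2]
```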

Stochastic methods are problem-agnostic and include uncertainty. They move through the search space using a probabilistic navigation model, random but guided by probabilities [27,28]. Because they rely on randomness, the solution returned may differ from run to run, so it is not possible to determine in advance the final solution or its performance [29]. They are an excellent alternative when the data are unpredictable, problem knowledge cannot be used, and the other approaches (enumerative and deterministic) perform poorly or are infeasible.
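A minimal sketch of a stochastic method is pure random search: it samples solutions at random and keeps the best one. The toy permutation objective below is an assumption made for illustration. Fixing the seed makes a run reproducible, while different seeds can return different solutions.

```python
import random


def random_search(fitness, sample, iterations, seed=None):
    """Pure random search (minimization): draw random solutions, keep the best."""
    rng = random.Random(seed)
    best = sample(rng)
    best_fit = fitness(best)
    for _ in range(iterations - 1):
        candidate = sample(rng)
        cand_fit = fitness(candidate)
        if cand_fit < best_fit:
            best, best_fit = candidate, cand_fit
    return best, best_fit


def misplaced(perm):
    """Toy objective: number of positions i that do not hold the value i."""
    return sum(1 for i, value in enumerate(perm) if i != value)


def sample_perm(rng):
    return rng.sample(range(8), 8)  # a random permutation of 0..7


run_a = random_search(misplaced, sample_perm, 50, seed=1)
run_b = random_search(misplaced, sample_perm, 50, seed=1)  # same seed: same result
run_c = random_search(misplaced, sample_perm, 50, seed=2)  # other seed: may differ
```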

2.2. Optimization Problem

Human beings face difficulties daily, challenging or contradictory situations that need to be resolved to reach a desired purpose. In practical terms, these situations can be called problems. From a psychological perspective, a problem can be summed up as a difficulty that triggers an analytical attitude in a subject, who acquires knowledge to overcome it [30,31,32]. From a computer science perspective, a problem is a task that an algorithm can solve or answer. In practice, computational problems can be broadly classified into six main categories: ordering, counting, searching, decision, function, and optimization. For ordering, counting, and searching problems, the names themselves describe their objectives. A decision problem analyzes a situation and returns a judgment with only two possible outputs, {“Yes”, “No”} or {1, 0} [30,31]. A function problem extends the decision problem: instead of a yes/no answer, it can return any data type.

An optimization problem (OP) is a class of problems resolved by optimization methods that aim to find an optimal solution in a vast set of possible solutions [2,23,33,34,35]. Consequently, the output of an optimization problem is the solution itself.
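The difference in outputs can be sketched with the subset-sum problem (an example chosen here, not taken from the text): the decision version answers yes/no, the function version returns data, and the optimization version returns the best solution itself. Brute-force enumeration is used only to keep the sketch short.

```python
from itertools import combinations


def subsets(items):
    """Every subset of the item list (exponential; fine for tiny inputs)."""
    for r in range(len(items) + 1):
        yield from combinations(items, r)


def subset_sum_decision(items, target):
    """Decision problem: answers only "Yes" (True) or "No" (False)."""
    return any(sum(s) == target for s in subsets(items))


def subset_sum_function(items, target):
    """Function problem: returns data, here one subset reaching the target (or None)."""
    return next((s for s in subsets(items) if sum(s) == target), None)


def subset_sum_optimization(items, limit):
    """Optimization problem: returns the solution itself, the subset with the
    largest sum that does not exceed the limit."""
    return max((s for s in subsets(items) if sum(s) <= limit), key=sum)


items = [3, 34, 4, 12, 5, 2]
print(subset_sum_decision(items, 9))       # True
print(subset_sum_function(items, 9))       # (4, 5)
print(subset_sum_optimization(items, 30))  # (3, 4, 12, 5, 2)
```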

Definition 1.

An optimization problem can be formalized as the triple

$$P = (S, f, \Omega)$$

where:

- P represents the optimization problem;

- S symbolizes the search space of the problem domain;

- f represents the objective function (OF); and

- Ω corresponds to the set of problem’s constraints.

The search space S is defined through a set of variables $X = \{x_1, \ldots, x_n\}$, generally called decision variables or design variables. They can be seen as the data or the dimensions explored during the search for a solution. The search space may be subject to a set of constraints Ω (restrictions), which specify whether a solution is feasible, that is, whether it can be accepted according to the rules of the problem domain. The fitness function (or objective function) f assesses the quality of the solutions and guides the search process.

Definition 2.

A fitness function maps each solution to a real number, $f : S \rightarrow \mathbb{R}$, often called its fitness.

This number makes it possible to compare the quality of all possible solutions of a given optimization problem [6,23,34,36].
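A minimal Python sketch of Definition 1, assuming a search space small enough to enumerate: the hypothetical `OptimizationProblem` class bundles S as a generator of candidates, f as a real-valued function (minimized here), and Ω as a list of feasibility predicates. The cost data and the constraint are invented.

```python
from dataclasses import dataclass
from itertools import combinations
from typing import Callable, Iterable, Sequence, Tuple

Solution = Tuple[int, ...]


@dataclass
class OptimizationProblem:
    """Hypothetical encoding of P = (S, f, Omega) for a small, enumerable S."""
    search_space: Callable[[], Iterable[Solution]]     # S: yields candidate solutions
    objective: Callable[[Solution], float]             # f: S -> R (minimized here)
    constraints: Sequence[Callable[[Solution], bool]]  # Omega: feasibility predicates

    def is_feasible(self, solution: Solution) -> bool:
        return all(check(solution) for check in self.constraints)

    def best(self) -> Solution:
        feasible = [s for s in self.search_space() if self.is_feasible(s)]
        return min(feasible, key=self.objective)


costs = [4, 2, 7, 3]  # invented data: cost of each of four items
problem = OptimizationProblem(
    search_space=lambda: combinations(range(4), 2),   # choose 2 of the 4 items
    objective=lambda s: float(sum(costs[i] for i in s)),
    constraints=[lambda s: not (1 in s and 3 in s)],  # items 1 and 3 exclude each other
)
print(problem.best())  # (0, 1)
```

Without the constraint, (1, 3) with cost 5 would win; Ω makes it infeasible, so the best feasible solution is (0, 1) with cost 6.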

Combinatorial Optimization Problems

Optimization problems are divided into two categories: (1) continuous and (2) combinatorial problems [35]. Continuous optimization problems are composed of continuous decision variables and can have an infinite number of valid solutions. Combinatorial optimization problems (COPs), also known as discrete optimization problems, are defined by discrete decision variables and their elements. The solution of a COP is an arrangement of these elements. Since a solution is a combination (or permutation) of elements, a COP has a finite number of solutions. Whether the encoding follows combination or permutation rules depends on the needs of the problem and its solution representation.

Example 1.

Consider the Traveling Salesman Problem (TSP): a solution is a route formed by an ordered arrangement of cities. The order of the cities is essential, and repetition is not allowed. Therefore, the solution encoding must follow permutation rules, in which elements cannot be repeated.
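The permutation rule in Example 1 can be checked directly, and search operators must respect it. The sketch below (function names are illustrative) validates a tour and applies a swap move, which exchanges two cities and therefore always yields another valid permutation.

```python
import random


def is_valid_tour(tour, n_cities):
    """Permutation rule: each of the n cities appears exactly once."""
    return len(tour) == n_cities and sorted(tour) == list(range(n_cities))


def swap_move(tour, rng):
    """Exchange the positions of two cities; the result is still a permutation."""
    i, j = rng.sample(range(len(tour)), 2)
    new_tour = list(tour)
    new_tour[i], new_tour[j] = new_tour[j], new_tour[i]
    return new_tour


rng = random.Random(0)
tour = [2, 0, 3, 1]
print(is_valid_tour(tour, 4))                  # True
print(is_valid_tour([2, 0, 2, 1], 4))          # False: city 2 repeated, city 3 missing
print(is_valid_tour(swap_move(tour, rng), 4))  # True
```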

Continuous optimization can use derivatives and gradients to describe the slope of a function [35]. Combinatorial optimization, however, lacks this support, which is why combinatorial problems are generally more challenging to solve. They are often NP-hard, and no deterministic algorithm is known that solves them in polynomial time [30,37,38]. In real problems, the search space is often vast, and the landscape is commonly unknown. Moreover, COP methods require good strategies to balance exploration and exploitation while navigating the search space to find optimal solutions. These characteristics make solving combinatorial optimization problems a challenging activity [38,39].

2.3. Combinatorial Optimization Problem Classification System

After an extensive review of combinatorial optimization problems (COPs), capturing different aspects explored in various studies, this paper organizes some critical definitions jointly. The objective is to consolidate several dimensions of COP classification, providing a broader view of a COP. However, it is noteworthy that this work does not aim to create a classification system for COPs. Still, it can provide a preliminary view that future studies can use as an input to develop a classification system for COPs that the community can widely adopt.

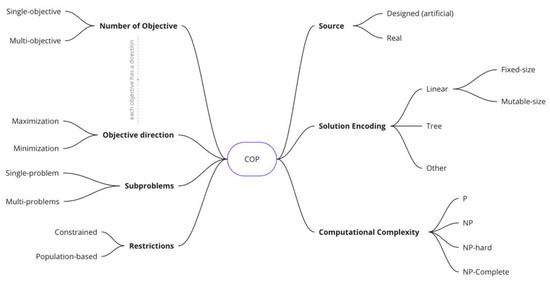

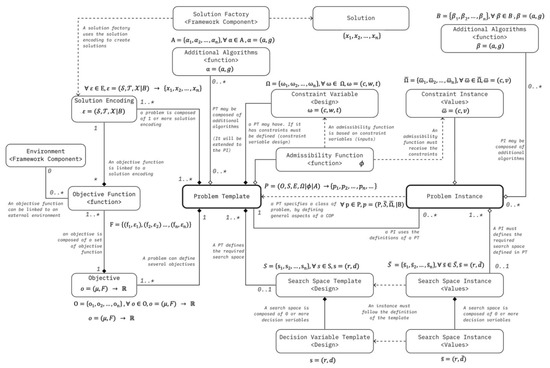

In this paper, we intend to offer a complementary perspective for the formal description of optimization problems. We consider this activity essential for the study’s objective: to propose a standard and formalism to support the formulation, design, and implementation of COPs in a standard way. With this aim in mind, the organization provided by this work is synthesized in Figure 1, and a brief description of each dimension is given in the subsequent sections.

Figure 1.

Combinatorial optimization problem—building blocks.

2.3.1. Number of Objectives: Single Objective Versus Multi-Objective

In terms of the number of objectives, a COP can be (1) single-objective or (2) a multi-objective combinatorial optimization problem (MOCOP) [40,41,42,43,44,45]. In a single-objective problem, the fittest solution is easily determined by the best value returned by the fitness function. In multi-objective problems, in contrast, the optimization process must consider all objectives simultaneously [38]. The performance of a solution on each criterion alone is not enough; the assessment must consider all criteria together. Moreover, the objectives may conflict and compete with each other [19,24,40,41,42,46,47]. Thus, MOCOPs require additional effort to assess, compare, and determine which solutions perform better than others while balancing multiple criteria.

In practical terms, assessing the solutions’ quality is performed by a function that supports the selection process. Essentially, this function receives a set of solutions and the problem’s objectives. As a result, it returns a set of “best” solutions that are chosen by a specific assessment approach. Several approaches can be used to perform this evaluation. They can be split into two categories of methods: (1) Pareto and (2) non-Pareto [45].
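For the Pareto category, the core test is dominance. A minimal sketch, assuming all objectives are minimized: a solution dominates another if it is no worse in every objective and strictly better in at least one, and the non-dominated solutions form the Pareto front. The objective vectors below are invented.

```python
def dominates(a, b):
    """True if objective vector a Pareto-dominates b (every objective minimized)."""
    no_worse = all(x <= y for x, y in zip(a, b))
    strictly_better = any(x < y for x, y in zip(a, b))
    return no_worse and strictly_better


def pareto_front(points):
    """Keep the points that no other point dominates."""
    return [p for p in points if not any(dominates(q, p) for q in points)]


points = [(1, 5), (2, 3), (3, 4), (4, 1), (2, 2)]
print(pareto_front(points))  # [(1, 5), (4, 1), (2, 2)]
```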

Remark 1.

These functions used to assess multi-objective problems are generic, so other optimization problems can reuse them because they are often problem-agnostic. Occasionally, however, a particular problem may provide its own multi-objective assessment, for instance, a method that combines the individual fitness values in a weighted formula.

2.3.2. Optimization Objective Direction

Definition 3.

Each objective has an objective function and an objective direction. A fitness function has two main purposes: (1) it determines the quality of the solutions, and (2) it guides the search by indicating promising solutions [9,40]. The objective direction falls into one of two categories: (1) minimization or (2) maximization.

In general terms, when the lowest fitness defines the best performance, it is a (1) minimization problem, and the fitness function can also be called a “cost function”. When the highest fitness defines the best performance, it is a (2) maximization problem, and the fitness function can also be called a “value function” or “utility function”.
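Because the direction changes what “better” means, a framework can keep the comparison in one place. The sketch below shows one possible approach (the `Direction` enum and the helper names are hypothetical): it compares fitness values according to the direction and converts maximization into minimization by negation.

```python
from enum import Enum


class Direction(Enum):
    MINIMIZE = "min"  # lowest fitness is best ("cost function")
    MAXIMIZE = "max"  # highest fitness is best ("value"/"utility function")


def is_better(f_a, f_b, direction):
    """True if fitness f_a is strictly better than f_b under the given direction."""
    return f_a < f_b if direction is Direction.MINIMIZE else f_a > f_b


def as_cost(fitness, direction):
    """Map any fitness to a 'lower is better' cost by negating maximization values."""
    return fitness if direction is Direction.MINIMIZE else -fitness


fitness_values = [12.0, 7.5, 9.0]
best_for_max = min(fitness_values, key=lambda f: as_cost(f, Direction.MAXIMIZE))
print(best_for_max)  # 12.0
```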

2.3.3. Subproblems (Single-Problem Versus Multi-Problem)

Some problems, due to their complexity, need to be split into subproblems to be adequately resolved (a “divide-and-conquer” strategy). Considering the number of subproblems, problems can be classified into two classes: (1) single optimization problems (single-problems), composed of only one problem, and (2) multiple optimization problems (multi-problems) [48,49,50], which are non-trivial arrangements of two or more problems. Note that these dimensions can be combined: a multi-problem may be composed of two subproblems, where the first has one objective (single-objective) and the second has two objectives (multi-objective).

Multi-objective problems require extra effort to select the optimal solutions (Pareto optimal); multi-problems also demand additional work because the results of the subproblems must be balanced to serve the global perspective of the higher-level problem. The higher-level problem may have global objectives that must also be achieved and that cannot be entirely perceived from the parts separately.

A typical example is the artificial Traveling Thief Problem (TTP) proposed by Bonyadi et al. (2013) [48], an optimization problem composed of two well-known problems, the TSP (Traveling Salesman Problem) and the KP (Knapsack Problem), organized into a higher-level problem. In a shipping scenario, for example, the best ship loading and the best logistics do not necessarily yield the best profitability. In other words, “the fittest” profitability may not be guaranteed by combining “the fittest” ship loading solution (equivalent to the KP part) with “the fittest” ship scheduling (equivalent to the TSP part).

2.3.4. Unconstrained Versus Constrained

In general terms, constraints are a set of prerequisites, sometimes necessary, that determine whether a solution is valid according to the criteria of a specific problem. Consequently, an optimization problem can be (1) constrained or (2) unconstrained [6]. In an unconstrained problem, all generated solutions are feasible. Conversely, in a constrained problem, a solution must satisfy certain criteria to be feasible.

Constraints are typically divided into two types: (1) “hard” and (2) “soft”. Hard constraints are mandatory prerequisites: they must be satisfied, and if a constraint violation happens, the algorithm must reject the solution. Soft constraints are more flexible; they are desirable but not mandatory. If a soft constraint is violated, the algorithm should not discard the solution; instead, the violation can be addressed in the fitness function, worsening the solution’s performance and making it less suitable. For this reason, soft constraints are sometimes called cost components.
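A minimal sketch of the two constraint types, assuming a minimization problem and a fixed penalty weight (both assumptions): hard constraints reject a solution outright, while each violated soft constraint adds a penalty to the cost. The daily-hours example is invented for illustration.

```python
def evaluate(solution, base_cost, hard_constraints, soft_constraints, penalty=10.0):
    """Minimization. Returns None if any hard constraint is violated (reject);
    otherwise the base cost plus `penalty` per violated soft constraint."""
    if not all(check(solution) for check in hard_constraints):
        return None  # hard violation: the solution is discarded
    violations = sum(1 for check in soft_constraints if not check(solution))
    return base_cost(solution) + penalty * violations


# Invented example: working hours over five days, cost = total hours.
hard = [lambda hours: all(h >= 4 for h in hours)]  # at least 4 hours per day
soft = [lambda hours: all(h <= 8 for h in hours)]  # preferably no day above 8 hours

print(evaluate([4, 4, 4, 4, 4], sum, hard, soft))  # 20.0
print(evaluate([4, 9, 4, 4, 4], sum, hard, soft))  # 35.0 (25 + one penalty)
print(evaluate([3, 4, 4, 4, 4], sum, hard, soft))  # None
```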

2.3.5. Artificial Versus Real-World Problem

Considering their origin, optimization problems can be (1) “artificially” created by someone or (2) identified in the real world, requiring effort to understand and specify them [16,49,50]. Artificial (designed) optimization problems are proposed by someone, generally as an abstract and reduced view of reality. In this situation, the author defines a synthetic and controlled environment in which all variables and criteria are mapped and specified in advance.

For example, the required decision variables are explicitly defined for the specific objective of the artificial problem. Consequently, defining the solution encoding is easier, and the encoding covers all required dimensions. The objective functions are precise because they were designed to determine which solution is best. Since the problem is proposed by its author, there is no doubt about the concepts and criteria behind its definition. A good example of a designed problem is the Traveling Salesman Problem (TSP) proposed by Hamilton and Kirkman.

In contrast, real-world optimization problems are not artificially proposed by anyone. They are challenging and contradictory situations that arise daily. They must first be identified, and only when a solution is demanded can the effort to understand and design them start. However, reality is more difficult to capture: real-world problems generally must be investigated considering many dimensions of the subject (a systemic view).

Moreover, there is no direct feedback to inform if the problem is defined correctly or not. Therefore, their representation will demand a certain level of knowledge about the problem. In other words, the people who will formalize and develop the problem must know its context and peculiarities.

2.3.6. Solution Encoding

In combinatorial optimization problems, any solution is an arrangement of elements (a combination or permutation). It follows a type of representation (or shape) and encoding rules that arrange the values of the search space’s dimensions (variables) to form a solution. The most common types of representation are (1) a linear sequence of elements (such as an array) and (2) a tree that organizes the elements recursively. However, any other data structure can be used as an encoding, depending on what the problem needs to represent a solution. Arrays and trees are the most frequent because they are easy to manipulate and transform, but other structures, such as (3) a graph representation that links nodes (e.g., a linked list), can also be found. Other representation formats require heuristics that can handle their data structure and transform it properly.

Each type of representation may be subject to encoding rules that regulate how solutions are created and how they can be manipulated. Solutions with linear shapes are based on a set of values (symbols); their rules must specify the size (fixed or mutable), the type of arrangement of the elements, whether elements can be repeated, and whether their order matters. Solutions with tree shapes generally follow a language composed of functions, terminals, and constants.
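For tree-shaped solutions, the function/terminal/constant language can be sketched as follows. Here a tree is encoded as nested tuples `(function, left, right)`, the function set contains `+`, `-`, and `*`, and `x` is the only terminal; this encoding is an illustrative choice, not one prescribed by the text.

```python
import operator

FUNCTIONS = {"+": operator.add, "-": operator.sub, "*": operator.mul}  # function set
TERMINALS = {"x"}                                                       # input variables


def evaluate_tree(node, env):
    """Evaluate a tree: a tuple (func, left, right), a terminal name, or a constant."""
    if isinstance(node, tuple):
        func, left, right = node
        return FUNCTIONS[func](evaluate_tree(left, env), evaluate_tree(right, env))
    if node in TERMINALS:
        return env[node]  # terminal: look up the variable's value
    return node  # constant: return the number itself


tree = ("+", ("*", "x", "x"), 1)  # encodes x * x + 1
print(evaluate_tree(tree, {"x": 3}))  # 10
```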

2.3.7. Problem Data Environment

As mentioned before, objective functions (OFs) are responsible for determining the quality of the solutions. By default, the OF interacts directly with the decision variables (data) to determine a solution’s quality. However, the OF can also interact with a simulated environment. In this scenario, the simulated environment receives a solution and returns feedback, which the OF interprets to determine the solution’s quality [10]. This is analogous to reinforcement learning (RL), where agents interact with an environment and receive feedback (states and rewards).

The use of simulation in optimization is an essential tool for particular problem scenarios [10,11,27,51] where a simulation is required. Consequently, COPs can be divided into three classes: (1) decision variable-based, (2) simulation environment-based, and (3) both. The challenge of using simulation in COPs is to properly design and implement the simulated environment for each type of problem.
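A minimal sketch of a simulation environment-based objective, under stated assumptions: the environment is a hypothetical toy queue simulator (exponential arrivals at rate 2, unit service time), the solution is the number of servers, and the objective function interprets the simulator’s feedback (average waiting time over several replications) plus an assumed cost of 0.5 per server.

```python
import random


def simulate_queue(n_servers, arrival_rate, n_customers, rng):
    """Hypothetical toy environment: customers arrive with exponential gaps,
    each service takes 1 time unit on the earliest-free server.
    Returns the feedback: average waiting time per customer."""
    free_at = [0.0] * n_servers  # time at which each server becomes free
    t, total_wait = 0.0, 0.0
    for _ in range(n_customers):
        t += rng.expovariate(arrival_rate)
        k = min(range(n_servers), key=lambda i: free_at[i])
        start = max(t, free_at[k])
        total_wait += start - t
        free_at[k] = start + 1.0
    return total_wait / n_customers


def simulation_fitness(n_servers, replications=20, seed=0):
    """Objective function (minimization): interprets the simulator's feedback
    as mean waiting time over replications plus an assumed per-server cost."""
    rng = random.Random(seed)
    waits = [simulate_queue(n_servers, 2.0, 200, rng) for _ in range(replications)]
    return sum(waits) / replications + 0.5 * n_servers


for servers in (1, 2, 3):
    print(servers, round(simulation_fitness(servers), 2))
```

The optimizer never sees the queue directly; it only receives the number returned by `simulation_fitness`, which mirrors the environment-feedback loop described above.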

2.3.8. Problem’s Computational Complexity

The search space, which is the set of admissible solutions for an optimization problem, is usually exponential with respect to the size of the input. This happens for the vast majority of real-world combinatorial problems [35]. The size of the input is determined by the specific problem. For instance, solving an instance of the minimum spanning tree problem requires as input a weighted graph, and the input size corresponds to the number of nodes and edges of that graph. This example shows that different instances of the same optimization problem can have different input sizes. For NP-hard problems, no algorithm is known that provides an optimal solution for every possible instance within a time that is polynomial in the input size [52]. For this reason, it is fundamental to take into account the time an algorithm needs to provide the final solution. This time also depends on the available hardware: the same algorithm executed on the same instance but on different computers may have significantly different running times. Therefore, to perform a fair comparison, it is common to consider the computational complexity of an algorithm, which expresses the time the algorithm needs to return its final answer or solution as a function of the input size. Computational complexity is the commonly used method for comparing the running times of different algorithms because it is independent of the hardware on which they are executed. It counts the number of basic operations performed during the execution of the algorithm and uses this count to indicate the running time as a function of the input size. For instance, an algorithm that must visit all the vertices V of an input graph has a complexity of O(V); in other words, its complexity is linear with respect to the input size (i.e., the number of nodes of the graph).

To distinguish between easy and difficult decision problems, one should consider the class of problems solvable by a deterministic Turing machine in polynomial time and the class solvable by a nondeterministic Turing machine in polynomial time. Without going into the formal details of Turing machines, it is possible to give an intuitive definition of these two classes, known as (1) P and (2) NP [35]. A decision problem is in P if and only if it can be solved by an algorithm in polynomial time. A problem is in NP (nondeterministic polynomial) if a candidate solution can be guessed and then verified in polynomial time; nondeterministic means that no particular rule is followed to make the guess. Thus, an algorithm for a problem in NP consists of two steps: the first produces a guess about the solution (generated in a nondeterministic way), and the second is a deterministic algorithm that verifies whether the guess is a solution to the problem [48,53]. Finally, if a problem is in NP and all other NP problems are polynomial-time reducible to it, the problem is NP-complete. In other words, finding an efficient algorithm for any NP-complete problem would imply that an efficient algorithm exists for all NP problems.
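The “verify” step of NP can be illustrated with the decision version of the TSP (“is there a tour of length at most k?”). Given a guessed tour as a certificate, the deterministic check below runs in polynomial time (a sort plus one pass over the tour), even though finding such a tour is hard. The distance matrix is invented.

```python
def verify_tour_certificate(dist, k, tour):
    """Deterministic polynomial-time verifier for the TSP decision problem:
    accept the guessed tour if it visits every city once and has length <= k."""
    n = len(dist)
    if sorted(tour) != list(range(n)):  # O(n log n): each city exactly once
        return False
    length = sum(dist[tour[i]][tour[(i + 1) % n]] for i in range(n))  # O(n)
    return length <= k


dist = [
    [0, 1, 4, 2],
    [1, 0, 2, 5],
    [4, 2, 0, 3],
    [2, 5, 3, 0],
]
print(verify_tour_certificate(dist, 8, [0, 1, 2, 3]))  # True (length 8)
print(verify_tour_certificate(dist, 7, [0, 1, 2, 3]))  # False
print(verify_tour_certificate(dist, 8, [0, 1, 1, 3]))  # False (not a permutation)
```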

This distinction is important in the context of metaheuristics: NP-hard problems, for which no polynomial-time exact algorithm is known, are commonly addressed using metaheuristics. In this case, the algorithms run within a bounded (typically polynomial) time budget and return a solution that approximates the optimal one, without a guarantee of optimality.

2.4. Heuristics

Before describing metaheuristics in more depth, it is necessary to briefly present what a heuristic is. According to Judea Pearl (Pearl, 1984), heuristics are criteria, methods, or principles for deciding which among several alternative courses of action promises to be the most effective in order to achieve some goal. They represent compromises between two requirements: (1) the need to make such criteria simple and, at the same time, (2) the desire to see them discriminate correctly between good and bad choices. In general terms, heuristics in computer science may be summed up as the use of useful principles and search methods designed to solve problems in an exploratory way, especially when the problem’s complexity makes it infeasible to find an exact solution in a reasonable amount of time. Therefore, heuristics tend to involve a certain degree of uncertainty, and their result is often not deterministic.

Depending on how they create solutions, heuristics are often divided into three categories: (1) constructive, (2) improvement, and (3) hybrid heuristics [54]. The constructive approach generates a solution element by element by analyzing the decision variables; for instance, it greedily adds the best next piece, without considering the entire solution, until a complete solution is formed. In the improvement approach, the method generally starts with a random initial solution and improves it iteratively, altering some of its parts to search for a better solution. Hybrid heuristics, as the name suggests, use both approaches, aiming to take advantage of each.
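The categories can be sketched on the TSP (an illustrative choice): nearest neighbor is a constructive heuristic that appends the closest unvisited city, and 2-opt is an improvement heuristic that reverses tour segments while this shortens the tour. Chaining them, as below, is a simple hybrid. The distance matrix is invented so that the greedy construction is clearly suboptimal.

```python
def tour_length(tour, dist):
    """Sum of edge lengths, including the edge back to the start city."""
    return sum(dist[tour[i]][tour[(i + 1) % len(tour)]] for i in range(len(tour)))


def nearest_neighbor(dist, start=0):
    """Constructive heuristic: repeatedly append the closest unvisited city."""
    tour, unvisited = [start], set(range(len(dist))) - {start}
    while unvisited:
        last = tour[-1]
        nxt = min(sorted(unvisited), key=lambda c: dist[last][c])  # ties -> lower index
        tour.append(nxt)
        unvisited.remove(nxt)
    return tour


def two_opt(tour, dist):
    """Improvement heuristic: reverse segments while doing so shortens the tour."""
    best = tour[:]
    improved = True
    while improved:
        improved = False
        for i in range(1, len(best) - 1):
            for j in range(i + 1, len(best)):
                candidate = best[:i] + best[i:j + 1][::-1] + best[j + 1:]
                if tour_length(candidate, dist) < tour_length(best, dist):
                    best, improved = candidate, True
    return best


dist = [
    [0, 1, 2, 3, 10],
    [1, 0, 1, 2, 2],
    [2, 1, 0, 1, 3],
    [3, 2, 1, 0, 1],
    [10, 2, 3, 1, 0],
]
start = nearest_neighbor(dist)
improved = two_opt(start, dist)
print(start, tour_length(start, dist))        # [0, 1, 2, 3, 4] 14
print(improved, tour_length(improved, dist))  # [0, 1, 4, 3, 2] 7
```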

2.5. Metaheuristics

Glover introduced the term “metaheuristics” (Glover, 1986) by combining two words: meta (Greek prefix metá, “beyond” in the sense of “higher-level”) and heuristic (Greek heuriskein or euriskein, “find”, “discover”, or “search”). Metaheuristics are commonly described as (1) problem-independent algorithms that can be (2) adapted to incorporate problem-specific knowledge and definitions [4,27,33]. Consequently, because they are not “strongly” tied to any specific problem, they can be applied flexibly and efficiently to several categories of optimization problems.

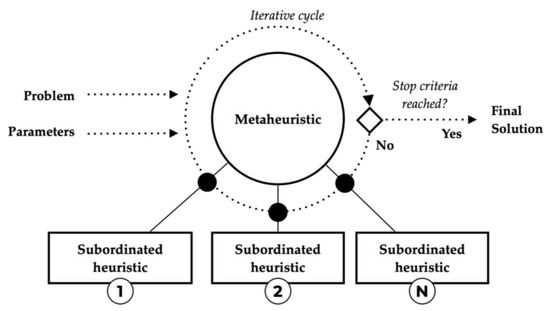

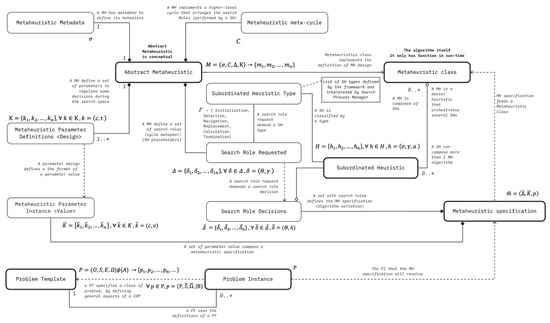

A metaheuristic (MH) can be seen as a higher-level heuristic composed of a master heuristic (the metaheuristic cycle) that drives and coordinates a set of subordinated heuristics (SH) in an iterative cycle to explore the search space [2,4,6,14,55,56,57]. This relationship between the master heuristic and the subordinated heuristics is illustrated in Figure 2. Each subordinated heuristic plays a different role in the metaheuristic's search strategy. Thereby, the MH cycle arranges the SH to work together, at a higher level of abstraction, to search in a coordinated way for an optimal solution to a given problem.

Figure 2.

Metaheuristics and subordinated heuristics relationship.
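The master/subordinate relationship can be sketched as a generic loop in which the master cycle only orchestrates and delegates every encoding- or problem-specific decision to subordinated heuristics passed in as functions. The three roles chosen here (initialization, solution creation, acceptance) and all names are illustrative assumptions, not the formalism proposed in this study.

```python
import random
from typing import Callable, List, Tuple, TypeVar

S = TypeVar("S")  # solution type; the master cycle never inspects it


def metaheuristic_cycle(
    initialize: Callable[[random.Random], S],
    create: Callable[[S, random.Random], S],
    accept: Callable[[float, float], bool],
    fitness: Callable[[S], float],
    iterations: int,
    seed: int = 0,
) -> Tuple[S, float]:
    """Master heuristic (minimization): coordinates subordinated heuristics (SH) iteratively."""
    rng = random.Random(seed)
    current = initialize(rng)                    # SH: build an initial solution
    current_fit = fitness(current)
    best, best_fit = current, current_fit
    for _ in range(iterations):
        candidate = create(current, rng)         # SH: create a new solution from the current one
        cand_fit = fitness(candidate)
        if accept(current_fit, cand_fit):        # SH: decide whether to move
            current, current_fit = candidate, cand_fit
            if current_fit < best_fit:
                best, best_fit = current, current_fit
    return best, best_fit


# Hypothetical subordinated heuristics for bit strings (fitness = number of zeros).
def random_bits(rng: random.Random) -> List[int]:
    return [rng.randint(0, 1) for _ in range(20)]


def flip_one_bit(bits: List[int], rng: random.Random) -> List[int]:
    neighbor = list(bits)
    neighbor[rng.randrange(len(neighbor))] ^= 1
    return neighbor


def not_worse(current_fit: float, cand_fit: float) -> bool:
    return cand_fit <= current_fit


best, best_fit = metaheuristic_cycle(random_bits, flip_one_bit, not_worse,
                                     lambda b: float(b.count(0)), iterations=2000)
print(best_fit)  # 0.0 (all ones)
```

Swapping `flip_one_bit` or `not_worse` for other functions changes the search strategy without touching the master cycle.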

Informally, metaheuristics can be said to perform a “blind exploration” of a vast search space that they do not know in advance. Consequently, they cannot “see” the whole landscape to identify its “hills” and “valleys” (optimal solutions). For that reason, the strategies that the heuristics (master and subordinated) use to navigate the search space are crucial for investigating and mapping its promising spots. Everything a metaheuristic knows about the landscape is the set of “visited places” (solutions) and the quality of these solutions (fitness). Therefore, after spending considerable time exploring, the algorithm has information about only some parts of the search space, and it returns the best solution found.

A metaheuristic's success in discovering optimal solutions for a given optimization problem depends on its ability to strike a good balance between exploration and exploitation [2,16,34,58,59]. Exploration and exploitation are related concepts, because both are employed to navigate and examine the search space. However, there is a subtle difference between them. Exploration can be seen as diversification, because it aims to identify promising areas of the search space that contain high-quality solutions. In contrast, exploitation can be understood as intensification, because it concentrates the search in a good region of the search space (one with high-quality solutions) to find a better solution [2,4,34,44,58].
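One common way to balance the two forces is Iterated Local Search, named here as an illustration (it is not discussed in the text): a local search intensifies around the current solution (exploitation), and a random perturbation moves the search to another region (exploration). The minimal sketch below uses a hypothetical one-dimensional integer landscape with several peaks (maximization); `strength` controls how far a perturbation can jump.

```python
import random
from typing import Callable


def landscape(x: int) -> int:
    """Hypothetical landscape on 0..99 (maximize): local peaks of value 19 at
    x = 19, 39, 59, 79 and the global peak of value 38 at x = 99."""
    return 2 * (x - 80) if x >= 80 else x % 20


def hill_climb(x: int, f: Callable[[int], int], lo: int = 0, hi: int = 99) -> int:
    """Exploitation: move to the better neighbor (x - 1 or x + 1) until none improves."""
    while True:
        best_neighbor = max((n for n in (x - 1, x + 1) if lo <= n <= hi), key=f)
        if f(best_neighbor) <= f(x):
            return x
        x = best_neighbor


def iterated_local_search(f: Callable[[int], int], start: int, strength: int,
                          iterations: int, seed: int = 0, lo: int = 0, hi: int = 99) -> int:
    rng = random.Random(seed)
    current = hill_climb(start, f, lo, hi)
    for _ in range(iterations):
        jump = min(max(current + rng.randint(-strength, strength), lo), hi)  # exploration
        candidate = hill_climb(jump, f, lo, hi)                              # exploitation
        if f(candidate) >= f(current):  # keep the better (or equally good) local optimum
            current = candidate
    return current


print(hill_climb(5, landscape))                        # 19: stuck on a local peak
print(iterated_local_search(landscape, 5, 40, 500))   # expected to reach 99 (global peak)
```

With `strength` close to 0 the search degenerates into pure exploitation; with a very large `strength` it approaches random restarts, i.e., pure exploration.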

2.6. Metaheuristic System of Classifications

The classification of metaheuristics is a fuzzy and debatable topic. There are various ways and distinct dimensions along which metaheuristics can be classified [12,15,19,53,57,60,61,62], and researchers generally choose the classification criteria most appropriate to their point of view or work [12,19,62]. This issue is discussed further in Section 3.1, Lack of Guidelines to Organize the Field.

It is essential to highlight that this article does not offer an exhaustive analysis and does not intend to propose a classification system for metaheuristics. Even so, after conducting an extensive review, we observed some frequent classifications and distinctive perspectives on how to classify metaheuristics. For this reason, this study attempted to unify and accommodate all of them in a single view. We believe that this organization can serve as an input to a future study focused on creating a classification system for metaheuristics. Moreover, the main purpose of this analysis is to understand the possible perspectives on metaheuristics and to use this information to support the creation of a formalism to design and develop metaheuristics.

Moreover, after examining a significant number of classification methods, we believe that the most appropriate way to classify metaheuristics is to simplify their organization. Classification should rely on several complementary, orthogonal dimensions; in other words, on a system analogous to tag-based classification, in which the dimensions are independent of one another. We also believe that hierarchical relationships among dimensions should be avoided, to prevent potential incompatibilities when multiple dimensions are combined.

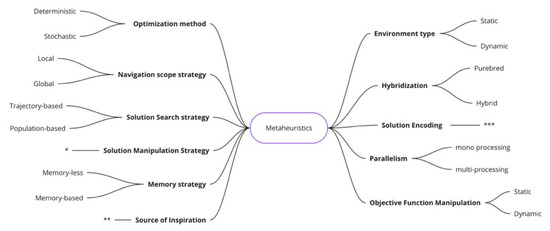

Figure 3 synthesizes our effort to unify and organize the most important and distinctive dimensions found in this review. The next sections briefly explain each of them.

Figure 3.

The most common dimensions of metaheuristics classifications found in the literature. * The Solution Manipulation Strategy requires a deeper explanation of how the metaheuristics can be subdivided. ** The source of inspiration has several different views in the literature. *** The same division found in the COP system of classification.

2.6.1. Optimization Method

This dimension organizes the metaheuristic according to its optimization method. They are divided into (1) deterministic and (2) stochastic [23,24,63]. An explanation of these methods was already provided in Section 2.1, Optimization.

2.6.2. Navigation Scope Strategy

This dimension aims to point out the differences in a fundamental aspect of metaheuristics’ navigation system by analyzing its meta-strategy to cover the search space. Essentially, the methods in this perspective can be distributed into two groups of navigation scope: (1) local and (2) global searchers [6,34,64].

Remark 2.

The term “navigation scope strategy” is defined in this study because it was considered the most suitable term to group the definitions of (1) local and (2) global searchers.

(1) Local searchers move toward the nearest optimum and stop once they reach the peak of that region (e.g., Hill Climbing). In contrast, (2) global searchers attempt to examine other areas of the search space, even if this involves moving to worse solutions during the search (e.g., Genetic Algorithms).
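The difference often comes down to the acceptance rule. In the sketch below, a local searcher never accepts a worse solution, while the Metropolis rule used by simulated annealing (chosen here as an illustrative global searcher; the text's example is Genetic Algorithms, which achieve global search through a population instead) accepts a worse candidate with probability exp(-delta / T). Function names and parameter values are assumptions for illustration.

```python
import math
import random


def accept_improving_only(current: float, candidate: float) -> bool:
    """Local-searcher rule (e.g., hill climbing, minimization): never accept a worse solution."""
    return candidate <= current


def accept_metropolis(current: float, candidate: float, temperature: float,
                      rng: random.Random) -> bool:
    """Metropolis rule used by simulated annealing (minimization, temperature > 0):
    improvements are always accepted; a worse candidate is accepted with
    probability exp(-delta / temperature)."""
    delta = candidate - current
    if delta <= 0:
        return True
    return rng.random() < math.exp(-delta / temperature)


rng = random.Random(42)
print(accept_improving_only(1.0, 2.0))                                   # False
hot = sum(accept_metropolis(1.0, 2.0, 10.0, rng) for _ in range(1000))   # roughly 905 of 1000
cold = sum(accept_metropolis(1.0, 2.0, 0.01, rng) for _ in range(1000))  # practically 0
print(hot, cold)
```

At high temperature the rule behaves like a global searcher that readily leaves the current region; as the temperature approaches zero it behaves like a local searcher.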

2.6.3. Solution Search Strategy

The solution search strategy dimension also reveals another vital aspect of the metaheuristics navigation system. It is also related to the way a metaheuristic covers the search space. However, in this case, it is associated with the number of solutions that are optimized in each iteration. Regarding this characteristic, the methods can be split into (1) trajectory-based (single solution) or (2) population-based (set of solutions) [2,16,34,65,66,67].

Remark 3.

The term “solution search strategy” is defined in this study because it was considered the most appropriate term to group the definitions of (1) trajectory-based (single solution) and (2) population-based methods.

Trajectory-based methods work by improving a single solution iteratively, moving from one solution to another and forming a trajectory (track). In contrast, population-based metaheuristics operate by improving a set of solutions (population) simultaneously: in each iteration, some operations are applied, and the current population is replaced by a new one (with some improved solutions). Population-based metaheuristics can be further divided into single-population (2.1) and multi-population (2.2) methods. Multi-population approaches are generally used to increase the diversity of the solutions [68] and to establish a parallel search through subpopulations that evolve together. Moreover, a multi-population search may require additional mechanisms to exchange individuals among populations.
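The structural difference can be seen in a single iteration of each style. In the sketch below (OneMax, i.e., maximizing the number of ones, is an assumed toy problem), the trajectory step moves one solution to a neighbor, whereas the population step replaces the whole population with a new one built from tournament winners, keeping the best individual unchanged (elitism). Names and parameters are hypothetical.

```python
import random
from typing import List

Bits = List[int]


def onemax(s: Bits) -> int:
    """Toy objective (maximize): number of ones."""
    return sum(s)


def flip_random_bit(s: Bits, rng: random.Random) -> Bits:
    t = list(s)
    t[rng.randrange(len(t))] ^= 1
    return t


def trajectory_step(current: Bits, rng: random.Random) -> Bits:
    """Trajectory-based: a single solution moves to a neighbor if it is not worse."""
    neighbor = flip_random_bit(current, rng)
    return neighbor if onemax(neighbor) >= onemax(current) else current


def population_step(population: List[Bits], rng: random.Random) -> List[Bits]:
    """Population-based: the whole population is replaced by a new one.
    Offspring are mutated copies of binary-tournament winners; the best
    individual is copied unchanged (elitism), so the best fitness never drops."""
    def tournament() -> Bits:
        a, b = rng.sample(population, 2)
        return a if onemax(a) >= onemax(b) else b

    new_population = [list(max(population, key=onemax))]
    while len(new_population) < len(population):
        new_population.append(flip_random_bit(tournament(), rng))
    return new_population


rng = random.Random(1)
solution = [0] * 16
for _ in range(300):
    solution = trajectory_step(solution, rng)

population = [[rng.randint(0, 1) for _ in range(16)] for _ in range(10)]
start_best = max(map(onemax, population))
for _ in range(100):
    population = population_step(population, rng)
print(onemax(solution), start_best, max(map(onemax, population)))
```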

2.6.4. Solution Creation Strategy

This dimension reveals another crucial aspect of the metaheuristics' navigation system: it defines the strategy used to generate and transform solutions during the search process. It can also be considered one of the most important behaviors of a metaheuristic. The solution creation and manipulation strategy can be divided into two main classes of algorithm behavior: (1) combination and (2) movement [12].

The (1) combination strategy, as the name suggests, groups the algorithms based on operations that mix the content of some solutions to form other solutions. Additionally, these algorithms do not elect a reference solution to be followed; therefore, any solution in a set of solutions can be used to form new ones. This group can be further subdivided into two subgroups: mixture (1.1) and stigmergy (1.2).

Methods based on “mixture” combine existing solutions directly to form a new one; the Genetic Algorithm (GA) is the best-known metaheuristic in this subgroup. Algorithms based on stigmergy, on the other hand, use an indirect combination strategy: the various solutions contribute to an intermediate structure, which is then used to produce new solutions. Ant Colony Optimization (ACO) is the best-known algorithm in this category.
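The two subgroups differ in where new solutions come from. In the sketch below, one-point crossover (mixture) builds a child directly from two parents, while the pheromone update and construction steps (stigmergy, in a simplified Ant System style) route all information through a shared matrix `tau`. The evaporation rate `rho`, the deposit rule 1/length, and all names are illustrative assumptions; real ACO variants also weight choices by a heuristic term such as 1/distance.

```python
import random
from typing import List

Matrix = List[List[float]]


def one_point_crossover(p1: List[int], p2: List[int], rng: random.Random) -> List[int]:
    """Mixture: the child directly copies a prefix of one parent and the suffix of the other."""
    cut = rng.randrange(1, len(p1))
    return p1[:cut] + p2[cut:]


def update_pheromone(tau: Matrix, tours: List[List[int]], lengths: List[float],
                     rho: float = 0.1) -> None:
    """Stigmergy (in place): every trail evaporates by a factor (1 - rho);
    then each tour deposits 1 / length on the edges it uses."""
    n = len(tau)
    for i in range(n):
        for j in range(n):
            tau[i][j] *= 1.0 - rho
    for tour, length in zip(tours, lengths):
        for k in range(len(tour)):
            a, b = tour[k], tour[(k + 1) % len(tour)]
            tau[a][b] += 1.0 / length
            tau[b][a] += 1.0 / length


def construct_from_pheromone(tau: Matrix, start: int, rng: random.Random) -> List[int]:
    """New tours are sampled from the shared structure, not from parent solutions
    (simplification: pheromone only, no heuristic visibility term)."""
    tour = [start]
    unvisited = [j for j in range(len(tau)) if j != start]
    while unvisited:
        weights = [tau[tour[-1]][j] for j in unvisited]
        nxt = rng.choices(unvisited, weights=weights, k=1)[0]
        tour.append(nxt)
        unvisited.remove(nxt)
    return tour


rng = random.Random(7)
child = one_point_crossover([0] * 6, [1] * 6, rng)
tau = [[1.0] * 3 for _ in range(3)]
update_pheromone(tau, tours=[[0, 1, 2]], lengths=[2.0])
print(child, tau[0][1], construct_from_pheromone(tau, 0, rng))
```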

On the other hand, algorithms that employ the (2) movement strategy use a pattern found in the current set of solutions (a differential vector that determines the intensity and direction of the movement) to generate a new solution. In addition, these algorithms define a reference solution that is followed by the others. The newly generated solutions can replace the reference solution or compete with other followers and replace them. Particle Swarm Optimization (PSO) is the most emblematic algorithm of this category.

Methods based on movement can be further split into three subdivisions: all population (2.1), representative (2.2), and group (2.3). As the name suggests, in the all-population subdivision (2.1), all members of the population define the movement, although each member's influence depends on its distance from the reference solution. In the representative subdivision (2.2), each solution is influenced by a small group of representative solutions (PSO is classified in this subdivision). Group-based algorithms (2.3) consider only a subset of solutions to form the new solution. These groups are not chosen by representativeness criteria; instead, they are defined by neighborhood or subpopulation strategies that support the calculation of new solutions.
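In PSO, the differential vectors are the differences between a particle's position and two representative solutions: its own personal best and the swarm's global best. The sketch below is a minimal global-best PSO on the sphere function (an assumed test problem). The inertia weight `w = 0.729` and coefficients `c1 = c2 = 1.494` are frequently used values, taken here as illustrative defaults rather than recommendations.

```python
import random
from typing import Callable, List, Tuple

Vector = List[float]


def pso(f: Callable[[Vector], float], dim: int, n_particles: int, iterations: int,
        bounds: Tuple[float, float] = (-5.0, 5.0), w: float = 0.729,
        c1: float = 1.494, c2: float = 1.494, seed: int = 0) -> Tuple[Vector, float]:
    """Minimal global-best PSO (minimization)."""
    rng = random.Random(seed)
    lo, hi = bounds
    x = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(n_particles)]
    v = [[0.0] * dim for _ in range(n_particles)]
    pbest = [list(p) for p in x]                      # personal best positions
    pbest_val = [f(p) for p in x]
    g = min(range(n_particles), key=lambda i: pbest_val[i])
    gbest, gbest_val = list(pbest[g]), pbest_val[g]   # global best: the reference solution
    for _ in range(iterations):
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                v[i][d] = (w * v[i][d]
                           + c1 * r1 * (pbest[i][d] - x[i][d])   # differential vector to personal best
                           + c2 * r2 * (gbest[d] - x[i][d]))     # differential vector to global best
                x[i][d] += v[i][d]
            val = f(x[i])
            if val < pbest_val[i]:
                pbest[i], pbest_val[i] = list(x[i]), val
                if val < gbest_val:
                    gbest, gbest_val = list(x[i]), val
    return gbest, gbest_val


def sphere(p: Vector) -> float:
    return sum(c * c for c in p)


best, best_val = pso(sphere, dim=2, n_particles=20, iterations=200)
print(best_val)  # a value close to 0, the optimum of the sphere function
```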

Remark 4.

It is worth emphasizing that, to organize this dimension, we used the work of Molina et al., 2020 [12] as the main reference. They offered an outstanding critical analysis of, and recommendations about, metaheuristic classification. In particular, we used their Chapter 4 (Taxonomy as Behavior), where they analyzed several metaheuristics and organized them according to the mechanism employed to build and modify solutions. It should be noted that this study adapted their nomenclature to accommodate these inputs alongside other perspectives. For instance, “Solution Creation” was renamed “Combination” (1), and “Differential Vector movement” was renamed “Movement” (2). Moreover, the term “Solution Creation and Manipulation Strategy” was created to name this dimension, because metaheuristics can exhibit many other types of behavior.

2.6.5. Source of Inspiration

In the past few decades, many new metaheuristic algorithms have been created [6,12,15,69,70,71,72] owing to their success in solving hard problems. These algorithms usually draw their inspiration from characteristics found in nature [13,70,73,74,75,76,77,78,79]. Nevertheless, some researchers have criticized the excess of new metaheuristics that focus more on their metaphor than on their quality as optimization methods [12,15,80]. This issue is discussed in depth in Section 3, which covers research challenges and gaps.

Furthermore, the classification of algorithms by source of inspiration is a fuzzy topic in the literature [6,12,15,19,20]. It is common to find divergent points of view on how to classify them according to the inspiration applied. This confusion is generally related to the absence of ground rules or to partial views that do not consider a broader classification system [12].

Moreover, some “subfields” are more mature or successful than others; consequently, some families of algorithms are easier to recognize than others (e.g., Evolutionary Algorithms and Swarm Intelligence). Even so, these well-known families are often organized in different ways, especially in broader systems that consider other types of inspiration. In other words, the same family can be found at different levels of the hierarchy in the literature. For instance, some classifications place swarm intelligence inside the bio-inspired family, while others place it at the same level as bio-inspired algorithms.

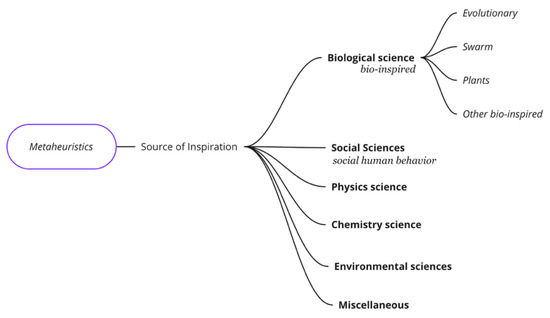

As a ground rule, we believe that science already has an organization to classify its areas of study, and metaheuristics do not need to reinvent the wheel. Since metaheuristics draw on these areas, the taxonomy of inspiration sources should follow a similar structure, but in a more straightforward way with fewer hierarchy levels. Based on this assumption, this study arranged the sources of inspiration most frequently found in the literature. Again, it is not an exhaustive list but rather a unified view of the most relevant sources of inspiration, and it is illustrated in Figure 4.

Figure 4.

Families of metaheuristics by source inspiration or metaphor used.

Remark 5.

This paper does not aim to go deeper into this subject, since many studies have already analyzed the sources of inspiration in depth. For further information, please consult the list of references, which includes recommended readings and several excellent reviews of the “source of inspiration” and its implications.

2.6.6. Memory Strategy

This dimension classifies metaheuristics according to whether they use memory: they can be (1) memory-less or (2) memory-based [36]. Memory-less metaheuristics have no way to store information during the search process and consider only the current state of the solution(s); Simulated Annealing (SA) is an example. In contrast, memory-based metaheuristics use a mechanism to accumulate information during the iterative process and use it to guide the search; Tabu Search (TS) is an example.
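The memory of Tabu Search can be as simple as a short list of recently performed moves. In the minimal sketch below (bit strings, single-bit-flip neighborhood, maximization; all names and the tenure value are assumptions), flipping a recently flipped bit is forbidden for `tenure` iterations unless it would produce a new best solution (aspiration criterion). This memory is what lets the search accept worse moves without immediately cycling back.

```python
from collections import deque
from typing import Callable, List, Tuple


def tabu_search(f: Callable[[List[int]], float], start: List[int],
                iterations: int, tenure: int = 3) -> Tuple[List[int], float]:
    """Minimal tabu search over bit strings (maximization)."""
    current = list(start)
    best, best_val = list(current), f(current)
    tabu = deque(maxlen=tenure)  # memory: indices flipped in the last `tenure` moves
    for _ in range(iterations):
        candidates = []
        for i in range(len(current)):
            neighbor = list(current)
            neighbor[i] ^= 1
            val = f(neighbor)
            if i not in tabu or val > best_val:  # aspiration: a new best overrides tabu status
                candidates.append((val, i, neighbor))
        if not candidates:
            break
        val, i, current = max(candidates, key=lambda c: c[0])  # best admissible move, even if worse
        tabu.append(i)
        if val > best_val:
            best, best_val = list(current), val
    return best, best_val


best, best_val = tabu_search(sum, [0] * 8, iterations=20)
print(best_val)  # 8
```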

2.6.7. Purebred Versus Hybrid

A metaheuristic can be (1) purebred or (2) hybrid [34,81,82,83,84,85]. A purebred metaheuristic relies only on its own methods. Meanwhile, a hybrid metaheuristic generally uses one method as its basis and incorporates pieces of other metaheuristics or other auxiliary methods [15] to take advantage of other approaches.

2.6.8. Data Environment

A metaheuristic can interact directly with (1) the decision variables or with (2) a simulated environment. Metaheuristics that take advantage of simulation are also known as simheuristics; they extend standard metaheuristics by combining optimization and simulation [11,51,86].

In fact, the interaction with decision variables or with a simulated environment is performed by the Objective Function. Therefore, this dimension is linked to the behavior of the Objective Function (covered in Section 2.3.7, Problem Data Environment). Consequently, like the decision variables, the simulated environment must be provided by the problem, since it is problem-related, and it should therefore be transparent to the metaheuristic. Nevertheless, simheuristics may require some framework components to support the use of simulated environments and other components (e.g., a fuzzy component [51]).
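To illustrate how a simulated environment can stay inside the objective function, the sketch below estimates the expected duration of a route by Monte Carlo simulation. The routing setting, the normally distributed leg times (coefficient of variation `cv`), and all names are hypothetical; the point is that a metaheuristic would simply call `simulated_objective(route, ...)` as it would any other objective, without knowing that a simulation runs underneath.

```python
import random
import statistics
from typing import Dict, List, Tuple

Edge = Tuple[int, int]


def simulated_objective(route: List[int], nominal: Dict[Edge, float],
                        runs: int = 200, cv: float = 0.2, seed: int = 0) -> float:
    """Estimate the expected duration of `route` by Monte Carlo simulation.
    Assumption: each leg's travel time is Normal(nominal, cv * nominal), truncated at 0."""
    rng = random.Random(seed)  # same seed for every route, so comparisons share random numbers
    totals = []
    for _ in range(runs):
        total = 0.0
        for a, b in zip(route, route[1:]):
            mean = nominal[(a, b)]
            total += max(0.0, rng.gauss(mean, cv * mean))
        totals.append(total)
    return statistics.mean(totals)


nominal = {(0, 1): 10.0, (1, 2): 5.0, (0, 2): 12.0, (2, 1): 9.0}
print(simulated_objective([0, 1, 2], nominal))  # close to 15
print(simulated_objective([0, 2, 1], nominal))  # close to 21
```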

2.6.9. Solution’s Encoding

Each metaheuristic method is prepared to deal with a specific type of solution encoding. Consequently, metaheuristics can only solve problems whose encoding they can manipulate. Likewise, a particular solution encoding can only be handled by the restricted number of metaheuristics that know how to manipulate it. Thus, it is possible to classify metaheuristics according to the encodings they can manage. The differences between solution encodings are covered in Section 2.3.6, Solution Encoding.

By way of illustration, Genetic Algorithms (GA) and the variations of their subordinated heuristics are designed to manipulate a linear sequence of characters (an array of elements). In comparison, Genetic Programming (GP) is designed to deal with elements organized recursively in a tree.
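The sketch below shows why subordinated heuristics are tied to an encoding. A bit-flip mutation makes sense for a GA-style linear array but not for a GP-style expression tree, which needs its own operators, such as the recursive evaluator shown here. Representing trees as nested `(operator, left, right)` tuples is an assumption made for brevity.

```python
import operator
import random
from typing import List, Union


# Linear encoding (GA style): a fixed-length array of genes.
def bit_flip_mutation(genes: List[int], rng: random.Random, rate: float = 0.1) -> List[int]:
    """Flip each gene independently with probability `rate`."""
    return [g ^ 1 if rng.random() < rate else g for g in genes]


# Tree encoding (GP style): a leaf ("x" or a number) or a tuple (operator, left, right).
Tree = Union[str, float, tuple]
OPS = {"+": operator.add, "-": operator.sub, "*": operator.mul}


def evaluate(tree: Tree, x: float) -> float:
    """Recursively evaluate an expression tree at x."""
    if isinstance(tree, str):
        return x  # the only string leaf is the variable "x"
    if isinstance(tree, (int, float)):
        return float(tree)
    op, left, right = tree
    return OPS[op](evaluate(left, x), evaluate(right, x))


rng = random.Random(0)
print(bit_flip_mutation([0, 1, 0, 1], rng, rate=1.0))  # [1, 0, 1, 0]
print(evaluate(("+", ("*", "x", "x"), 1.0), 3.0))     # 10.0
```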

2.6.10. Parallel Processing

The capacity to perform parallel processing is another characteristic of metaheuristics, and it can be seen as a way to explore the search space more efficiently. Metaheuristics can be classified into (1) mono-processing and (2) multi-processing. Mono-processing metaheuristics are not prepared to perform parallel processing. On the other hand, multi-processing metaheuristics have an architecture prepared to run parallel searches and consolidate their results [2,6,16]. Parallelism is an option to improve any metaheuristic, because this capability can be incorporated and activated when needed. The literature offers several parallelization strategies to reduce search time and explore more areas of the search space simultaneously.
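One of the simplest parallelization strategies is to run several independent searches concurrently and consolidate them by keeping the best result. The sketch below does this with Python's `concurrent.futures`; the toy search and all names are hypothetical. Threads keep the example self-contained, but for CPU-bound pure-Python searches a `ProcessPoolExecutor` is usually needed for a real speed-up, because of the global interpreter lock.

```python
import random
from concurrent.futures import ThreadPoolExecutor
from typing import List, Tuple


def independent_run(seed: int, length: int = 30, iterations: int = 500) -> Tuple[int, List[int]]:
    """One independent search (greedy bit flips on OneMax) with its own random stream."""
    rng = random.Random(seed)
    s = [rng.randint(0, 1) for _ in range(length)]
    for _ in range(iterations):
        t = list(s)
        t[rng.randrange(length)] ^= 1
        if sum(t) >= sum(s):
            s = t
    return sum(s), s


def parallel_multistart(seeds: List[int], workers: int = 4) -> Tuple[int, List[int]]:
    """Run the searches concurrently, then consolidate by keeping the best result."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        results = list(pool.map(independent_run, seeds))
    return max(results, key=lambda r: r[0])


best_val, best = parallel_multistart([1, 2, 3, 4])
print(best_val)
```

Because each run owns its `random.Random(seed)`, the consolidated result is reproducible regardless of how the executor schedules the runs.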

2.6.11. Capacity to Alter the Fitness Function

Considering their capacity to manipulate the fitness function, MHs can be subdivided into two categories: (1) static and (2) dynamic [9,11,82]. The static category is the standard one, in which the MH uses the objective function (OF) as defined by the problem. In contrast, some algorithms may change the OF's behavior by incorporating knowledge obtained during the search process, mainly to escape local optima [87]. Guided Local Search (GLS) is an example of an MH with this capacity.
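In GLS, the local search optimizes an augmented objective h(s) = g(s) + λ Σ_i p_i I_i(s), where g is the original objective, I_i(s) indicates whether feature i is present in s, p_i is that feature's penalty, and λ is a weight. At each local optimum, the penalties of the present features with maximum utility c_i / (1 + p_i), where c_i is the feature's cost, are incremented. The minimal sketch below implements only these two pieces; the item-set example and all names are hypothetical.

```python
from typing import Callable, Dict, Hashable, Iterable

Features = Callable[[object], Iterable[Hashable]]


def augmented_cost(solution: object, g: Callable[[object], float], features: Features,
                   penalties: Dict[Hashable, int], lam: float) -> float:
    """h(s) = g(s) + lam * sum of the penalties of the features present in s."""
    return g(solution) + lam * sum(penalties.get(ft, 0) for ft in features(solution))


def penalize(solution: object, features: Features, feature_cost: Dict[Hashable, float],
             penalties: Dict[Hashable, int]) -> None:
    """Called at a local optimum: increment the penalty of the present feature(s)
    with maximum utility c_i / (1 + p_i)."""
    utility = {ft: feature_cost[ft] / (1 + penalties.get(ft, 0)) for ft in features(solution)}
    top = max(utility.values())
    for ft, u in utility.items():
        if u == top:
            penalties[ft] = penalties.get(ft, 0) + 1


# Hypothetical example: a solution is a set of items, and each item is a costed feature.
cost = {"a": 4.0, "b": 2.0}


def g(s: set) -> float:
    return sum(cost[i] for i in s)


def features(s: set) -> set:
    return s


penalties: Dict[Hashable, int] = {}
solution = {"a", "b"}
penalize(solution, features, cost, penalties)                      # utility of a (4) > b (2)
print(penalties, augmented_cost(solution, g, features, penalties, lam=0.5))  # {'a': 1} 6.5
```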

2.7. Metaheuristics Require Experiments to Evaluate Algorithms’ Performance

Performance evaluation is an essential criterion for metaheuristics. Experiments help determine how well a metaheuristic (MH) explores the search space. Additionally, as a consequence of the “No Free Lunch” theorems, it is hard to know in advance which MH will perform better than others. Moreover, since the most successful MHs are stochastic, their results tend to vary because of their probabilistic nature: on the same problem instance, the same algorithm may perform differently from run to run. Consequently, to compare the performance of a set of metaheuristics and determine which is best, it is necessary to design and execute an experiment that supports an appropriate evaluation.

An experiment for metaheuristics evaluation involves a set of coordinated steps in a controlled environment, and an experiment should guarantee its reproducibility and comparability. In a summarized way, an experiment considers the following steps: (1) objectives definition, (2) design, (3) execution, and (4) conclusions [5,58,87,88,89].

The first step, (1) objectives definition, aims to define the experiment's drivers, goals, and research questions. The design step (2) specifies, plans, and prepares the experiment. It commonly includes the following activities: defining the measures and terminology, selecting the metaheuristics, defining the parameter tuning strategy, determining the report format, etc. Each algorithm may have several possible parameter configurations, and the parameter tuning strategy defines how these configurations will be tested. It is essential to highlight that the parameter setting directly influences an algorithm's performance [90] and that the best setting varies with the problem. Generally speaking, tuning can be done manually, by defining a set of candidate configurations, or automatically, supported by an optimization method. Furthermore, when the experiment does not target a real-world problem, it is necessary to choose benchmark problems and their instances (“data”) on which to compare performance.
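A manual tuning strategy can be as simple as enumerating a full factorial grid of candidate configurations. The parameter names and values below are hypothetical (typical of a GA). Note that the number of configurations grows multiplicatively with each added parameter, which is one motivation for automatic tuning.

```python
from itertools import product
from typing import Any, Dict, Iterator, List


def grid(space: Dict[str, List[Any]]) -> Iterator[Dict[str, Any]]:
    """Enumerate every combination of candidate parameter values (full factorial design)."""
    keys = list(space)
    for values in product(*(space[k] for k in keys)):
        yield dict(zip(keys, values))


# Hypothetical GA parameters and candidate values.
space = {
    "population_size": [50, 100],
    "crossover_rate": [0.7, 0.9],
    "mutation_rate": [0.01, 0.05],
}
configs = list(grid(space))
print(len(configs))  # 8 = 2 * 2 * 2
print(configs[0])    # {'population_size': 50, 'crossover_rate': 0.7, 'mutation_rate': 0.01}
```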

The experiment execution step (3) is dedicated to performing the experiment, running each configuration, and collecting its data. Each configuration must be executed enough times to conclude whether it is effectively better than the others (e.g., thirty runs may be sufficient to draw statistical conclusions). Consequently, the data analysis must take a statistical approach that accounts for the variance, in order to determine whether the best performances are statistically significant. It typically relies on descriptive statistics (e.g., mean, median, standard deviation, and outlier analysis) organized in visualizations or reports to support the decision. The final step, (4) conclusions, aims to analyze the results and organize the conclusions of the experiment.
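The execution and analysis steps can be sketched as follows. A hypothetical toy search stands in for a real metaheuristic, two configurations are each run thirty times with distinct seeds, descriptive statistics are computed, and a Monte Carlo permutation test estimates whether the difference in mean performance is significant. The permutation test is one choice among many; rank-based tests such as Mann-Whitney U or Wilcoxon are common alternatives.

```python
import random
import statistics
from typing import Dict, List


def toy_run(iterations: int, seed: int, length: int = 40) -> float:
    """Stand-in for one run of a stochastic MH: random bit flips on OneMax where only
    0 -> 1 flips are accepted; returns the final number of ones."""
    rng = random.Random(seed)
    s = [0] * length
    for _ in range(iterations):
        s[rng.randrange(length)] = 1
    return float(sum(s))


def describe(samples: List[float]) -> Dict[str, float]:
    return {"mean": statistics.mean(samples), "median": statistics.median(samples),
            "stdev": statistics.stdev(samples)}


def permutation_test(a: List[float], b: List[float], n_resamples: int = 5000,
                     seed: int = 0) -> float:
    """Two-sided Monte Carlo permutation test on the difference of means (approximate p-value)."""
    rng = random.Random(seed)
    observed = abs(sum(a) / len(a) - sum(b) / len(b))
    pooled = list(a) + list(b)
    hits = 0
    for _ in range(n_resamples):
        rng.shuffle(pooled)
        left, right = pooled[:len(a)], pooled[len(a):]
        if abs(sum(left) / len(left) - sum(right) / len(right)) >= observed:
            hits += 1
    return (hits + 1) / (n_resamples + 1)


runs_a = [toy_run(40, seed) for seed in range(30)]         # configuration A: 30 runs
runs_b = [toy_run(120, seed) for seed in range(100, 130)]  # configuration B: 30 runs
print(describe(runs_a), describe(runs_b))
print(permutation_test(runs_a, runs_b))
```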

3. Challenges, Research Gaps, and Issues

This section aims to synthesize the main challenges, research gaps, and issues found in this research. Several papers provided valuable inputs for this topic in their conclusions. At the same time, we also decided to consider our experience and difficulties in developing COP and metaheuristics. While it is not an exhaustive review, by covering these points, this section offers a satisfactory answer for RQ-2: What are the main challenges of the COP and metaheuristics research field? This section is organized by arranging the main findings into groups according to the subject. A brief description of each one of them is available in the following subsections.

3.1. Lack of Guidelines to Organize the Field

As mentioned in Section 2.6, the classification of metaheuristics is a fuzzy and debatable topic [3,12,19,57,60,61,62]. Because of this, it is common to find several different ways to classify metaheuristics in the literature [91]. Additionally, researchers tend to choose the most appropriate classification criteria according to their point of view [12,19] or their subfield. Moreover, some researchers have mixed up some concepts and definitions, and it is common to see contradictory classifications in the literature.

These divergent perspectives are one of the challenges to the organization and progress of the metaheuristics field. Many researchers point out that the root cause of this phenomenon is the absence of a consistent definition and of guidelines to organize the field [3,12,15,19,57]. Considering all the issues mentioned, and many others not covered in this study, we emphasize the need for an in-depth review to develop guidelines to classify and organize metaheuristics.

Nevertheless, we also understand that defining a classification system requires consensus and community adoption. Consequently, we argue that it is an open question that requires a dedicated study, and we believe it is a promising line of research for future work.

Furthermore, a classification system could be supported by a database that classifies metaheuristics and organizes their metadata. Such a database could record each metaheuristic along all the orthogonal dimensions. It could also be extended to an open-source pool of metaheuristics supported by a framework.
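A tag-style record with one independent field per dimension is one way such a database entry could look. The sketch below covers only three of the dimensions from Section 2.6, and all type and field names are hypothetical. Values are assigned only where Section 2.6 or standard usage makes them uncontroversial; `None` means "not assigned". Because no hierarchy links the dimensions, any combination of tags can be queried.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List, Optional


class Navigation(Enum):
    LOCAL = "local"
    GLOBAL = "global"


class SolutionSearch(Enum):
    TRAJECTORY = "trajectory-based"
    POPULATION = "population-based"


class Memory(Enum):
    MEMORY_LESS = "memory-less"
    MEMORY_BASED = "memory-based"


@dataclass(frozen=True)
class MetaheuristicRecord:
    """One entry per algorithm; each dimension is an independent tag (None = not assigned)."""
    name: str
    navigation: Optional[Navigation] = None
    solution_search: Optional[SolutionSearch] = None
    memory: Optional[Memory] = None


def query(records: List[MetaheuristicRecord], **tags) -> List[str]:
    """Filter by any combination of dimensions; no hierarchy between them is assumed."""
    return [r.name for r in records if all(getattr(r, k) == v for k, v in tags.items())]


records = [
    MetaheuristicRecord("Hill Climbing", Navigation.LOCAL, SolutionSearch.TRAJECTORY),
    MetaheuristicRecord("Simulated Annealing", solution_search=SolutionSearch.TRAJECTORY,
                        memory=Memory.MEMORY_LESS),
    MetaheuristicRecord("Tabu Search", solution_search=SolutionSearch.TRAJECTORY,
                        memory=Memory.MEMORY_BASED),
    MetaheuristicRecord("Genetic Algorithm", Navigation.GLOBAL, SolutionSearch.POPULATION),
]
print(query(records, solution_search=SolutionSearch.TRAJECTORY))
print(query(records, memory=Memory.MEMORY_BASED))  # ['Tabu Search']
```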

3.2. Focus in Developing New Algorithms Versus “No Focus in Consolidation”

Given the large number of algorithms, it gradually became clear that some of them are not necessarily suitable optimization methods [15]. Thereby, some researchers have started to criticize the excessive number of publications proposing new metaheuristic algorithms [12,13,15,19,57]. The main argument concerns the overvaluation of the source of inspiration: some researchers tend to be more concerned with an algorithm's metaphor and novelty than with its quality as an optimization method.

Sörensen [15,57] called this phenomenon the “metaphor fallacy” and expects this mindset to change quickly. He argued that most “novel” metaheuristics based on a new metaphor take the field of metaheuristics a step backward rather than forward. Therefore, the community must focus on developing more efficient methods and avoid the “metaphor fallacy” trap.

Many researchers also argue that the behavior of a metaheuristic is more important than its source of inspiration [12,19,92]. The metaphor is often more playful than explanatory and generally does not explain how the algorithm actually works. As a result, the metaphor can make the algorithm's proposal harder to understand, because it may overshadow the algorithm's actual functioning.

Furthermore, these new algorithms (the novelty) are often extensions of existing algorithms with few functional alterations [16,91,92]; they can be seen as variants in which the metaphor is highlighted as the novelty. Molina et al., 2020 [12], also analyzed the most influential algorithms in their review and state that five algorithms (in their standard implementations) influenced the majority of the others. According to them, the most influential algorithms are (1) Genetic Algorithms (GA), (2) Particle Swarm Optimization (PSO), (3) Ant Colony Optimization (ACO), (4) Differential Evolution (DE), and (5) Artificial Bee Colony (ABC).

Two aspects of this issue must be highlighted. Firstly, this research production trend may be related to the existing publication mindset, in which the chances of publishing a new algorithm are high. Weinand et al., 2021 [91], pointed out that research intensity in combinatorial optimization has grown exponentially since 1989. Secondly, the new algorithms were undoubtedly crucial to the development of the metaheuristics field. The proposed metaphors helped bring different ways to explore the search space, such as anarchic societies, ants, bees, black holes, cats, cuckoos, consultants, clouds, dolphins, fishes, flower pollination, gravity, water drops, and so forth. The criticism concerns the overestimation of the source of inspiration as a primary driver, with little concern for whether the algorithm effectively presents novelty as an optimization method (i.e., innovation in the search strategy).

This issue has been an open question for the past few years, at least since 2013, when Sörensen published “Metaheuristics—the metaphor exposed” [57], and attention to it has increased since then. This research mindset needs to change because, in some sense, it makes the progress and consolidation of the metaheuristics field difficult. Addressing this challenge may require a methodology to define COPs and MHs and a fair way to compare the features, strategies, and performance of metaheuristics.

3.3. Experiments and Performance Evaluation Issues

Conventionally, the field of optimization problems has focused on metaheuristic performance, where an algorithm is considered good if it has a good performance compared to some benchmark [15,47]. Additionally, the studies that proved superiority through a comparison have higher chances of being published (also known as the “up-the-wall game”).

Nevertheless, in recent years, some researchers have identified problems in studies that evaluate the performance of new metaheuristics [6,18,92,93]. The main cause is that some experiments do not promote fair performance comparisons among metaheuristics, which may lead to biased or inaccurate analyses. For example, the set of benchmark problems could be selected because the new algorithm performs well on them. Another example is the use of standard versions of successful algorithms, such as genetic algorithms, in the comparison, even though the field has evolved since their creation and many GA variants have added features that improve on the canonical version.

Another aspect of the performance issues is that experiments generally use only descriptive statistics (e.g., mean, median, standard deviation, and outlier analysis) or basic statistical tests. Some researchers, such as Boussaïd and Hussain [2,6], have pointed out the need for other statistical analyses to enable more sophisticated comparisons and to evaluate other aspects of metaheuristic performance.

3.4. Absence of Formalism to Design and Develop COP and Metaheuristics

In the literature review, no evidence was found that a standard or formalism to design and implement COPs and metaheuristics exists. There are some clues or definitions of how to implement them, but there is no standard that could be used as a reference and followed to build COPs, metaheuristics, or subordinated heuristics in an integrated way. This absence of standardization leads to different ways of representing COPs and makes their reuse difficult. Generally, researchers understand the basic functioning of an algorithm, implement their own version of it, and add the problem definition.

Although metaheuristics are problem-agnostic, in practice (in code) they are often coupled to the problem to some degree. Additionally, they are coupled to their subordinated heuristics, and vice versa. In other words, in most implementations, it is not easy to separate the code of the subordinated heuristics, the metaheuristics, and the problems. Therefore, in practical terms, considerable effort is necessary to adapt the algorithms to the needs of a specific problem before starting any search for a solution.

Consequently, this absence of standardization leads researchers to start “almost” from scratch: creating their own version of the algorithm, adjusting the metaheuristic to deal with the specific problem, or developing the required subordinated heuristics (related to the manipulation of the solution encoding). After this, several tests are necessary to check whether they return the expected results and to avoid errors and bugs. These tasks are time-consuming, which reduces the time available for analyzing and tuning the results [4,6,15,20].

An analogy with more mature machine learning methods (e.g., neural networks, clustering, decision trees) helps complete the view of this issue. For instance, a decision tree is an algorithm that solves classification tasks; it classifies data into classes. Informally, it receives as input (1) data, (2) a set of features to analyze, and (3) parameter settings, and it returns (4) a solution (a classification model). Evaluation functions then assess the returned solution and determine its quality as a classifier. All these steps occur without any change to the structure of the algorithm. In other words, no code changes are required; only new data (analogous to a problem instance) need to be provided.

Therefore, the idea is not to eliminate the flexibility that makes metaheuristics and COPs extensible. The goal is to define a standard behavior that removes the dependencies between the building blocks of metaheuristics and COPs, increasing reuse and reducing or eliminating the need for code changes. Ideally, the user would only need to provide a problem instance and analyze the solutions, while keeping the possibility of adding new components whenever necessary. Consequently, a standard to design and develop COPs and MHs is vital to avoid starting from scratch, and it is the basis for the next issue.
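A minimal sketch of the decoupling idea follows, assuming an abstract-base-class design. It is not the formalism proposed in this study, and the class and method names are hypothetical. The problem owns its encoding and objective, and the metaheuristic talks to it only through a small interface. Supplying a different `Problem` subclass, much like supplying new data to a decision tree, requires no change to `HillClimbing`.

```python
import random
from abc import ABC, abstractmethod
from typing import Generic, List, Tuple, TypeVar

S = TypeVar("S")


class Problem(ABC, Generic[S]):
    """COP building block: owns the encoding and the objective; knows nothing about solvers."""

    @abstractmethod
    def random_solution(self, rng: random.Random) -> S: ...

    @abstractmethod
    def neighbor(self, solution: S, rng: random.Random) -> S: ...

    @abstractmethod
    def evaluate(self, solution: S) -> float: ...  # lower is better


class Metaheuristic(ABC):
    """Solver building block: interacts with any Problem only through the interface above."""

    @abstractmethod
    def solve(self, problem: Problem, seed: int = 0) -> Tuple[object, float]: ...


class HillClimbing(Metaheuristic):
    def __init__(self, iterations: int = 1000):
        self.iterations = iterations  # parameter settings, analogous to a model's hyperparameters

    def solve(self, problem: Problem, seed: int = 0) -> Tuple[object, float]:
        rng = random.Random(seed)
        best = problem.random_solution(rng)
        best_val = problem.evaluate(best)
        for _ in range(self.iterations):
            candidate = problem.neighbor(best, rng)
            value = problem.evaluate(candidate)
            if value <= best_val:
                best, best_val = candidate, value
        return best, best_val


class CountZeros(Problem[List[int]]):
    """Hypothetical toy COP: minimize the number of zeros in a bit string."""

    def __init__(self, length: int):
        self.length = length

    def random_solution(self, rng: random.Random) -> List[int]:
        return [rng.randint(0, 1) for _ in range(self.length)]

    def neighbor(self, solution: List[int], rng: random.Random) -> List[int]:
        flipped = list(solution)
        flipped[rng.randrange(self.length)] ^= 1
        return flipped

    def evaluate(self, solution: List[int]) -> float:
        return float(solution.count(0))


best, best_val = HillClimbing(iterations=2000).solve(CountZeros(20))
print(best_val)  # 0.0
```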

3.5. Support Structure to Not Start from Scratch

This issue highlights the need for a software framework to solve COPs with metaheuristics without building algorithms from scratch [9,20], by reusing a set of built-in components and functions [4,15,20]. Such a framework must also provide facilities that support its functioning and help its users achieve their objectives.

Generally speaking, the foundational premise of a software framework is the common behavior of its components and their API (Application Programming Interface). These elements define how the components communicate with each other while preserving their independence and generality, which maximizes their reuse.

For a COP and MH framework, two levels of standards are required. The first level concerns the core components and defines the standard for designing and implementing COPs and MHs (the need explained in the previous section). The second level defines the standard for the auxiliary components that support MHs in their search activities and in other supplemental tasks. The challenge is to identify these auxiliary components and implement them as stand-alone software structures that any other component can reuse. A further challenge is to preserve the framework's extensibility, so that existing algorithms can be extended and new ones added.
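Stopping criteria are one plausible example of an auxiliary component: they are needed by almost every metaheuristic yet are independent of any of them. The sketch below assumes a hypothetical `should_stop(iteration, best_cost)` contract; the names `MaxIterations`, `NoImprovement`, and `AnyOf` are illustrative, not taken from the study.

```python
from dataclasses import dataclass, field
from typing import List, Protocol


class StoppingCriterion(Protocol):
    """Hypothetical auxiliary-component contract usable by any metaheuristic."""

    def should_stop(self, iteration: int, best_cost: float) -> bool: ...


@dataclass
class MaxIterations:
    limit: int

    def should_stop(self, iteration, best_cost):
        return iteration >= self.limit


@dataclass
class NoImprovement:
    patience: int
    _best: float = field(default=float("inf"), init=False)
    _stale: int = field(default=0, init=False)

    def should_stop(self, iteration, best_cost):
        # Count consecutive calls without a strict improvement.
        if best_cost < self._best:
            self._best, self._stale = best_cost, 0
        else:
            self._stale += 1
        return self._stale >= self.patience


@dataclass
class AnyOf:
    criteria: List[StoppingCriterion]

    def should_stop(self, iteration, best_cost):
        # Query every criterion so stateful ones keep updating.
        decisions = [c.should_stop(iteration, best_cost) for c in self.criteria]
        return any(decisions)
```

Since each criterion is a stand-alone object, any metaheuristic that calls `should_stop` in its loop can reuse them, and composites such as `AnyOf` add new behavior without modifying either side.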

Again, an analogy completes the view of this issue, in this case with a more mature machine learning framework. For instance, scikit-learn provides several machine learning algorithms and many auxiliary functions required to build and evaluate machine learning models. It does not require any changes to the structure of the algorithms; only the data and the algorithm configuration must be provided. Furthermore, scikit-learn is open source and widely used in academia and industry. We argue that the same level of maturity should be expected of a metaheuristic framework.
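In scikit-learn, an estimator's hyperparameters are its constructor arguments, exposed through `get_params()` and `set_params()`. The sketch below imitates that convention for a metaheuristic using only the Python standard library; it is not scikit-learn code, and `ParamsMixin`, `SimulatedAnnealing`, and `solve` are hypothetical names chosen for illustration.

```python
import inspect
import math
import random


class ParamsMixin:
    """Imitates scikit-learn's convention: hyperparameters are constructor
    arguments, read with get_params() and changed with set_params()."""

    def get_params(self):
        names = [p for p in inspect.signature(type(self).__init__).parameters if p != "self"]
        return {name: getattr(self, name) for name in names}

    def set_params(self, **params):
        valid = self.get_params()
        for key, value in params.items():
            if key not in valid:
                raise ValueError(f"invalid parameter {key!r} for {type(self).__name__}")
            setattr(self, key, value)
        return self


class SimulatedAnnealing(ParamsMixin):
    def __init__(self, t0=10.0, alpha=0.95, iterations=1000, seed=0):
        self.t0 = t0
        self.alpha = alpha
        self.iterations = iterations
        self.seed = seed

    def solve(self, cost, neighbor, x0):
        """Minimize ``cost`` from ``x0``; only data and configuration are supplied."""
        rng = random.Random(self.seed)
        x, fx = x0, cost(x0)
        best, fbest = x, fx
        t = self.t0
        for _ in range(self.iterations):
            y = neighbor(x, rng)
            fy = cost(y)
            # Metropolis rule: always accept improvements, sometimes accept worse moves.
            if fy <= fx or rng.random() < math.exp((fx - fy) / t):
                x, fx = y, fy
                if fx < fbest:
                    best, fbest = x, fx
            t = max(t * self.alpha, 1e-12)  # geometric cooling, kept positive
        return best, fbest
```

For example, `SimulatedAnnealing().set_params(iterations=200).solve(lambda x: (x - 3) ** 2, lambda x, rng: x + rng.choice((-1, 1)), 0)` reconfigures and runs the algorithm without editing its code, which is the property the scikit-learn analogy highlights.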

Therefore, this issue emphasizes the need for a COP and MH Methodological and Software Framework to consolidate and promote the progress of this field. A framework with these characteristics must be an open-source project supported by the community. Moreover, it should promote cooperation among different metaheuristic approaches rather than only competition or hybridization, which also opens space for, e.g., metaheuristic ensembles.

4. Results: Standardization, Methodology, and Framework for COP and Metaheuristics

This section, building on the answers to RQ-1 and RQ-2, presents a proposal for combinatorial optimization problem (COP) and metaheuristic standards. It covers a formalism to design and implement COPs and metaheuristics, a methodology to design problems, and a conceptual framework. Moreover, this section addresses RQ-3, RQ-4, and RQ-5.

4.1. Framework Structure

This section answers RQ-3: What are the features of COP and metaheuristics that could be developed as independent methods in a framework structure? Answering it requires identifying the vital elements that can be part of a software framework and support COP and MH experiments. Identifying these elements avoids rework and the time spent developing components that should already be available; in other words, it avoids “reinventing the wheel” every time. It also reduces the likelihood of bugs and errors, because these general components have already been tested and checked. The idea is therefore to reuse these components (without code changes) and focus on defining the problem or developing a new metaheuristic (or subordinated heuristics), increasing productivity and efficiency.

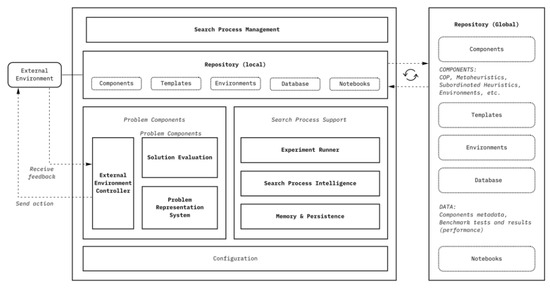

The following sections briefly describe the essential components identified in this study. Figure 5 shows a high-level view of the proposed architecture with these support components.

Figure 5.

Proposed framework architecture—high-level view of the main components.

Before describing the components, we highlight the main high-level features expected of a framework for COP and MH:

- Extensibility of software components;

- Flexibility to exchange behavior of the components;

- Componentization driven (component-based development); and

- Cooperation among algorithms.

Given the unbounded variety of problems and metaheuristic algorithms, a framework for COP and MH must be conceived with extensibility in mind. In other words, it must be possible to add “pieces of software” (POS) without affecting the structure or core code of the framework. This applies specifically to problem templates (since they are also “programs”) and to metaheuristics or subordinated heuristic methods that extend the framework, ultimately increasing its capability to solve combinatorial problems. Supporting this extensibility requires a core level of components and a software standard that new pieces of software must follow.
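A common way to obtain this kind of extensibility is a plug-in registry: new problem templates or metaheuristics register themselves under a name, and the core instantiates them by name without being edited. The sketch below is one hypothetical realization; `REGISTRY`, `register`, `create`, and `RandomSearch` are illustrative names.

```python
from typing import Callable, Dict

# Hypothetical plug-in registry: the core only knows these two functions.
REGISTRY: Dict[str, Dict[str, type]] = {"problem": {}, "metaheuristic": {}}


def register(kind: str, name: str) -> Callable[[type], type]:
    """Class decorator that adds a new piece of software without touching core code."""
    def decorator(cls: type) -> type:
        if name in REGISTRY[kind]:
            raise KeyError(f"{kind} {name!r} is already registered")
        REGISTRY[kind][name] = cls
        return cls
    return decorator


def create(kind: str, name: str, **settings):
    """Instantiate a registered component by name, passing its settings."""
    return REGISTRY[kind][name](**settings)


@register("metaheuristic", "random_search")
class RandomSearch:
    def __init__(self, samples=100):
        self.samples = samples
```

A third party can later add a problem template with `@register("problem", "onemax")` in its own module, and `create("problem", "onemax", ...)` will find it, while the registry code itself stays unchanged.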

Extensibility, together with the flexibility to change components’ behavior, is essential to make algorithms more effective at searching. An algorithm's behavior can be modified through configuration or by exchanging its software pieces (subcomponents). Ideally, such behavior changes should be possible both at design time and at run time, without code changes to the basic structure. The design should also avoid hierarchical inheritance, in which classes inherit from a master class, because such hierarchies are hard to maintain: a few changes in the base class can easily break the subclasses that reuse it. Instead, it should adopt a software design pattern that avoids this condition and favors exchanging subcomponents to incorporate or modify behaviors.
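The strategy pattern is one design pattern that fits this description: the neighborhood operator is held as a subcomponent and can be swapped at run time, so no subclass of the search algorithm is needed. In this sketch, `LocalSearch`, `swap`, and `reverse_segment` are hypothetical names used only for illustration.

```python
import random
from typing import Callable, List

Operator = Callable[[List[int], random.Random], List[int]]


def swap(perm, rng):
    """Exchange two positions."""
    i, j = rng.sample(range(len(perm)), 2)
    out = perm.copy()
    out[i], out[j] = out[j], out[i]
    return out


def reverse_segment(perm, rng):
    """Reverse the slice between two positions."""
    i, j = sorted(rng.sample(range(len(perm)), 2))
    return perm[:i] + perm[i:j + 1][::-1] + perm[j + 1:]


class LocalSearch:
    """Behavior is held as an exchangeable subcomponent, not inherited."""

    def __init__(self, operator: Operator):
        self.operator = operator

    def step(self, perm, cost, rng):
        candidate = self.operator(perm, rng)
        return candidate if cost(candidate) <= cost(perm) else perm
```

Changing behavior during a run is then a single assignment, e.g. `search.operator = reverse_segment`, and adding a new operator never touches `LocalSearch`.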

Moreover, this flexibility must cover at least two levels: (1) operational and (2) strategic. The operational level must provide the functionality for exchanging behaviors, that is, it must make concrete exchanges of parameters or subcomponents possible at any moment. This requires a well-designed API, a software design pattern, and standards. The strategic level must follow the search, recognize situations (e.g., when the MH is trapped in a local optimum), recommend changes when needed, and interpret the results of and feedback on its recommendations.
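As a rough illustration of the strategic level, the monitor below watches the best cost of a minimization search, recommends a change when the improvement over a sliding window falls below a threshold, and restarts its window once the change is applied so that later advice reflects the change's effect. The class name, the window-based stagnation test, and the `"increase_perturbation"` label are all assumptions; applying the recommendation would be the operational level's job.

```python
from collections import deque


class StagnationAdvisor:
    """Hypothetical strategic-level monitor for a minimization search."""

    def __init__(self, window=20, min_gain=1e-9):
        self.window = window
        self.min_gain = min_gain
        self.history = deque(maxlen=window + 1)  # best costs seen, oldest first

    def observe(self, best_cost):
        self.history.append(best_cost)

    def recommendation(self):
        if len(self.history) <= self.window:
            return None  # not enough evidence yet
        gain = self.history[0] - self.history[-1]  # improvement over the window
        return "increase_perturbation" if gain < self.min_gain else None

    def acknowledge(self):
        """Call after a recommended change is applied: restart the window so the
        next recommendation reflects the effect of that change."""
        self.history.clear()
```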

A componentization-driven framework is particularly powerful because it makes it possible to write and deploy new pieces of software independently. These pieces take advantage of the framework's existing services and are developed with a focus solely on their own objectives. Therefore, these new “pieces of software” (e.g., COP templates and instances, metaheuristics, or subordinated heuristics) can run on top of the existing framework environment. To increase their reuse potential, a repository is required where authors can share and keep these “pieces of software” and the community can easily use them.

Cooperation among metaheuristics is also a crucial high-level feature. A well-designed API and a software design pattern can provide an environment in which metaheuristics cooperate to solve a particular problem, either simultaneously or asynchronously.
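One simple cooperation scheme is a shared elite pool: each metaheuristic starts from the best solution found so far by any of them and offers its own result back. The sketch below uses a synchronous round-robin for clarity (an asynchronous version would share the pool across threads or processes); the toy OneMax cost and the names `ElitePool`, `random_restart`, `bit_flip_climb`, and `cooperate` are hypothetical.

```python
import random


def cost(bits):
    return bits.count(0)  # toy OneMax: minimize the number of zeros


class ElitePool:
    """Shared memory through which metaheuristics exchange solutions."""

    def __init__(self, size=5):
        self.size = size
        self.items = []  # sorted (cost, solution) pairs, best first

    def offer(self, solution, solution_cost):
        entry = (solution_cost, tuple(solution))
        if entry not in self.items:
            self.items.append(entry)
            self.items.sort()
            del self.items[self.size:]

    def best(self):
        return self.items[0]


def random_restart(start, rng):
    candidate = [rng.randint(0, 1) for _ in range(len(start))]
    return candidate if cost(candidate) < cost(start) else list(start)


def bit_flip_climb(start, rng, steps=5):
    x = list(start)
    for _ in range(steps):
        y = x.copy()
        y[rng.randrange(len(y))] ^= 1
        if cost(y) <= cost(x):
            x = y
    return x


def cooperate(agents, rounds, n, seed=0):
    """Synchronous round-robin: each agent starts from the pool's best solution."""
    rng = random.Random(seed)
    pool = ElitePool()
    start = [0] * n
    pool.offer(start, cost(start))
    for _ in range(rounds):
        for agent in agents:
            _, seed_solution = pool.best()
            result = agent(list(seed_solution), rng)
            pool.offer(result, cost(result))
    return pool.best()
```

Calling `cooperate([random_restart, bit_flip_climb], rounds=20, n=12)` lets a global sampler and a local climber build on each other's progress instead of competing.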

4.1.1. Repository (Local and Global)

The repository is designed to maintain custom components, widgets, and templates developed to take advantage of the framework's preexisting services. These components must follow the software design pattern (standards) defined by the framework. For example, these components can be (1) combinatorial optimization problem templates, (2) metaheuristics, (3) subordinated heuristics, (4) external environments, and (5) benchmark tests (problem instances and a particular search configuration).

The repository is designed with two levels: (1) global and (2) local. The global repository is accessible to everyone and maintained by the community, whose members can download or upload components. The local repository is local storage where a person working on a COP experiment keeps components downloaded from the global repository as well as his/her newly developed “pieces of software”.
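The two-level arrangement can be sketched as a local store that fetches a component from the global level once, keeps the user's own components, and can publish them back. In this hypothetical sketch the global repository is simulated with an in-memory dictionary; a real one would be a remote, community-maintained service, and all class and method names are assumptions.

```python
class GlobalRepository:
    """Stand-in for the community-maintained remote store (simulated with a dict)."""

    def __init__(self, components=None):
        self._components = dict(components or {})
        self.downloads = 0

    def download(self, name):
        self.downloads += 1
        return self._components[name]

    def upload(self, name, component):
        self._components[name] = component


class LocalRepository:
    """Local storage: cached downloads plus the user's own new components."""

    def __init__(self, remote):
        self.remote = remote
        self._store = {}

    def get(self, name):
        if name not in self._store:  # fetch from the global level only once
            self._store[name] = self.remote.download(name)
        return self._store[name]

    def add(self, name, component):
        self._store[name] = component

    def publish(self, name):
        self.remote.upload(name, self._store[name])
```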

Furthermore, the repository must be supported by a database that maintains information about the components. Each concrete component in the repository must have metadata that describes it, and this metadata should be stored in the database. For COPs and metaheuristics, the preliminary classification systems presented in Section 2.3 and Section 2.6 can serve as the primary reference for forming this metadata. Consequently, components should be split into categories, and each type of component defines its own metadata. The metadata should also record the component's author/owner (of the code), the creator of the algorithm, and the paper that proposed the metaheuristic (if copyright permits sharing), among others. In this way, components can be tagged properly according to their type. This metadata system can also support a search engine that filters components by given criteria. Additionally, the database could provide the main concepts of metaheuristics and COPs (analogous to a wiki).
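A metadata record and a tag-based filter might look like the sketch below. The field names mirror the items listed above (type, author/owner, algorithm creator, proposing paper), but the record layout, the `search` function, and the example tag values are hypothetical; real tags would come from the classification systems of Sections 2.3 and 2.6.

```python
from dataclasses import dataclass
from typing import Iterable, List, Optional


@dataclass(frozen=True)
class ComponentMetadata:
    name: str
    kind: str                        # e.g. "problem_template", "metaheuristic"
    author: str                      # author/owner of the code
    creator: Optional[str] = None    # creator of the algorithm
    reference: Optional[str] = None  # paper that proposed it, if shareable
    tags: frozenset = frozenset()    # classification labels


def search(catalog: Iterable[ComponentMetadata], kind: Optional[str] = None,
           tags: Iterable[str] = ()) -> List[ComponentMetadata]:
    """Filter components by type and by required tags."""
    required = set(tags)
    return [m for m in catalog
            if (kind is None or m.kind == kind) and required <= m.tags]
```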

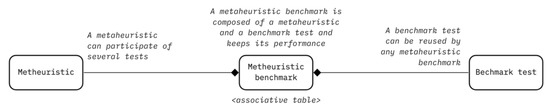

Moreover, the repository must provide a benchmarking process to compare metaheuristic performance, so the database should also store benchmarks of metaheuristic performance. In summary, this part of the database needs to keep two types of information. The first is the benchmark test, which can be seen as a template that the community will reuse. A benchmark test template comprises several problem instances (data) designed to evaluate how well metaheuristics explore the corresponding search spaces. The second is the performance of a metaheuristic after performing a specific benchmark test (template). These tests must follow an experimental design and provide statistical information about performance. Figure 6 shows the basic relationship between metaheuristics and benchmark tests.
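The two kinds of stored information can be sketched as two records: a reusable `BenchmarkTest` template (instances plus a number of independent runs, a very simple experimental design) and a `BenchmarkResult` holding per-instance statistics for one metaheuristic. These names and the choice of mean, sample standard deviation, and best cost as summary statistics are assumptions for illustration.

```python
import statistics
from dataclasses import dataclass
from typing import Callable, Dict, Tuple


@dataclass(frozen=True)
class BenchmarkTest:
    """Reusable template: a fixed set of instances plus an experimental design."""
    name: str
    instances: Tuple[object, ...]
    runs: int = 10  # independent runs (seeds 0..runs-1) per instance


@dataclass
class BenchmarkResult:
    metaheuristic: str
    test: str
    per_instance: Dict[int, Tuple[float, float, float]]  # index -> (mean, stdev, best)


def run_benchmark(name: str, solve: Callable[[object, int], float],
                  test: BenchmarkTest) -> BenchmarkResult:
    """Run ``solve(instance, seed)`` on every instance and summarize the costs."""
    if test.runs < 2:
        raise ValueError("at least two runs are needed to estimate a standard deviation")
    summary = {}
    for idx, instance in enumerate(test.instances):
        costs = [solve(instance, seed) for seed in range(test.runs)]
        summary[idx] = (statistics.mean(costs), statistics.stdev(costs), min(costs))
    return BenchmarkResult(name, test.name, summary)
```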

Figure 6.

Basic relationship between metaheuristic and benchmark test.

The critical success factor is to develop broad and challenging tests that cover several aspects and stretch the capacity of metaheuristics. A good test suite could therefore include different levels of difficulty and search-space landscapes. It could also include different types of problems, such as routing, planning, and scheduling, as well as problems with a very large number of dimensions. The objective is to produce reference tests that can serve as scientific standards to appraise and certify the performance of metaheuristics.

From a community perspective, this calls for proposals of challenging benchmark tests that probe several aspects of metaheuristic capacity. We believe this is an open question and a promising research line for developing the field. Research questions such as the following could be addressed: (1) Which tests can evaluate the capacity of a metaheuristic as a search method? (2) Which dimensions should be explored and considered in these tests?

Thus, several metaheuristics can be subjected to the same batch of tests and their performance compared. Tests that have already been performed do not need to be executed again, because their results are already stored in the database. For a new metaheuristic, only that algorithm needs to perform the batch of tests, after which its results can be compared with the entire database.
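The reuse of stored results, together with a simple benchmarking view, can be sketched as a result store keyed by (metaheuristic, test): a run is executed only if its key is missing, rankings are computed per test, and an average rank summarizes all tests. The `ResultStore` class, storing a single mean cost per pair, and average rank as the cross-test summary are all illustrative assumptions.

```python
from typing import Callable, Dict, List, Tuple


class ResultStore:
    """Hypothetical benchmark store: (metaheuristic, test) -> mean cost."""

    def __init__(self):
        self._scores: Dict[Tuple[str, str], float] = {}
        self.executions = 0

    def evaluate(self, mh: str, test: str, run: Callable[[], float]) -> float:
        key = (mh, test)
        if key not in self._scores:  # stored results are reused, never re-run
            self._scores[key] = run()
            self.executions += 1
        return self._scores[key]

    def ranking(self, test: str) -> List[str]:
        """Metaheuristics on one test, best (lowest cost) first."""
        entries = sorted((score, mh) for (mh, t), score in self._scores.items() if t == test)
        return [mh for _, mh in entries]

    def average_rank(self) -> List[Tuple[float, str]]:
        """Mean rank over the tests each metaheuristic has performed."""
        ranks: Dict[str, List[int]] = {}
        for test in {t for _, t in self._scores}:
            for position, mh in enumerate(self.ranking(test), start=1):
                ranks.setdefault(mh, []).append(position)
        return sorted((sum(r) / len(r), mh) for mh, r in ranks.items())
```

When a new metaheuristic is added, only its own `evaluate` calls trigger executions; every previously stored pair is read from the store.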

Furthermore, a new metaheuristic added to the global repository should provide its metadata and must be checked by a set of standard tests whose results are stored in the database. Consequently, anyone interested in using the algorithm can learn its characteristics and general performance. The database could also provide a benchmarking view that compares all tested metaheuristics per test or across all available tests.

The more the community feeds this database, the more complete the knowledge becomes and the more conclusions can be drawn about metaheuristics. For example, the general performance of a new metaheuristic can be understood in advance; if it is not performing well, the researcher can try other ideas and test again. It is also possible to infer in which scenarios (kinds of problems) a metaheuristic may work well. More general inferences can also be made, such as which behaviors are more suitable for a given class of problems.

4.1.2. Search Process Management

The Search Process Management (SPM) component encapsulates and orchestrates the search process in a standard way. It acts as a “wrapper” and an “adapter”, and it is responsible for preparing and automating the search process by interpreting all specifications and instantiating all the objects needed to perform a specific search (e.g., concrete versions of the COP and MH). Regardless of the metaheuristic used, it provides the same mechanism, that is, the same API. It interacts directly with the concrete metaheuristics, and this interaction must be transparent to the author of the experiment, who only needs to pass some parameters to the SPM: (1) the problem (template and instance), (2) the metaheuristic (or a list of metaheuristics), (3) the metaheuristic settings, and (4) the search settings. The only specification whose form varies is the metaheuristic settings (3), because it depends on each metaheuristic's parameter definitions.
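A minimal sketch of this behavior, under stated assumptions: problem templates and metaheuristics are looked up by name, instantiated from the four inputs listed above, and every metaheuristic is driven through the same `search(problem, seed)` call. The `SearchProcessManager`, `SearchSettings`, `OneMax`, and `RandomSearch` names, and the seed scheme, are hypothetical.

```python
import random
from dataclasses import dataclass
from typing import Any, Dict, List


@dataclass
class SearchSettings:
    runs: int = 3       # independent runs per metaheuristic
    base_seed: int = 0  # run r uses seed base_seed + r


class OneMax:
    """Toy problem template; the instance is the bit-string length."""

    def __init__(self, n):
        self.n = n

    def random_solution(self, rng):
        return [rng.randint(0, 1) for _ in range(self.n)]

    def evaluate(self, x):
        return x.count(0)


class RandomSearch:
    def __init__(self, samples=50):
        self.samples = samples

    def search(self, problem, seed):
        rng = random.Random(seed)
        return min(problem.evaluate(problem.random_solution(rng)) for _ in range(self.samples))


class SearchProcessManager:
    """Hypothetical SPM: one API regardless of the metaheuristic plugged in."""

    def __init__(self, problems: Dict[str, type], metaheuristics: Dict[str, type]):
        self.problems = problems
        self.metaheuristics = metaheuristics

    def run(self, problem: str, instance: Any, mh_names: List[str],
            mh_settings: Dict[str, Dict[str, Any]], search: SearchSettings):
        prob = self.problems[problem](instance)  # (1) problem template + instance
        report = {}
        for name in mh_names:  # (2) one or more metaheuristics
            mh = self.metaheuristics[name](**mh_settings.get(name, {}))  # (3) MH settings
            # (4) search settings: number of runs and their seeds
            report[name] = [mh.search(prob, search.base_seed + r) for r in range(search.runs)]
        return report
```

Only the per-metaheuristic settings dictionary changes shape from one algorithm to another; the call to `run` is identical for every metaheuristic, which is the uniform API the SPM is meant to offer.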