Predicting the Compressive Strength of Concrete Using an RBF-ANN Model

Abstract

1. Introduction

2. The Concrete Mix Proportioning

3. The RBF Artificial Neural Network

4. The Algorithm for Determining the Centers of RBFs and Their Shape Parameters

- Step 1: Randomly set initial values for the RBF centers and their shape parameters.

- Step 2: Use Equation (12) to obtain the threshold and the weights.

- Step 3: Calculate the error for each piece of the training data, then sum the squared errors to obtain the total square error.

- Step 4: If the total square error is the smallest so far, save the current parameter values in a file.

- Step 5: If the smallest total square error has not been updated for 500,000 training iterations, reset the centers and shape parameters with random values and go back to Step 2.

- Step 6: Use Equation (19) to set the stepping parameter.

- Step 7: Update all the values of the centers and shape parameters by using Equations (17) and (18).

- Step 8: Repeat Steps 2 to 7 and stop when the required number of iterations is reached.
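The loop in Steps 1 to 8 can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the paper's implementation: Equations (12) and (17) to (19) are not reproduced here, so the sketch assumes a Gaussian RBF, substitutes an ordinary linear least-squares solve for Equation (12), plain gradient descent on the total square error for Equations (17) and (18), and a normalized step size for Equation (19). The names `rbf_design`, `train_rbf`, `predict`, `max_move`, and `patience` are hypothetical, and the best parameters are kept in memory rather than written to a file.

```python
import numpy as np

def rbf_design(X, centers, shapes):
    """Design matrix [1, phi_1, ..., phi_m] for an assumed Gaussian RBF."""
    diff = X[:, None, :] - centers[None, :, :]           # (n, m, d)
    d2 = (diff ** 2).sum(axis=2)                         # squared distances (n, m)
    phi = np.exp(-d2 / (2.0 * shapes[None, :] ** 2))     # RBF values (n, m)
    A = np.hstack([np.ones((X.shape[0], 1)), phi])       # ones column = threshold
    return A, diff, d2, phi

def train_rbf(X, y, n_centers=5, n_iter=1000, patience=500_000,
              max_move=0.01, seed=0):
    rng = np.random.default_rng(seed)
    lo, hi = X.min(axis=0), X.max(axis=0)

    def random_init():
        # Assumed initial ranges: centers inside the data bounds, shapes in [0.3, 1].
        centers = rng.uniform(lo, hi, size=(n_centers, X.shape[1]))
        shapes = rng.uniform(0.3, 1.0, size=n_centers)
        return centers, shapes

    centers, shapes = random_init()                      # Step 1
    best = {"sse": np.inf}
    stale = 0
    for _ in range(n_iter):                              # Step 8
        A, diff, d2, phi = rbf_design(X, centers, shapes)
        # Step 2: threshold and weights (least squares stands in for Eq. (12)).
        coef, *_ = np.linalg.lstsq(A, y, rcond=None)
        err = A @ coef - y                               # Step 3
        sse = float(err @ err)
        if sse < best["sse"]:                            # Step 4 (kept in memory)
            best = {"sse": sse, "centers": centers.copy(),
                    "shapes": shapes.copy(), "coef": coef.copy()}
            stale = 0
        else:
            stale += 1
        if stale >= patience:                            # Step 5
            centers, shapes = random_init()
            stale = 0
            continue                                     # back to Step 2
        # Gradients of the total square error with the weights held fixed.
        g = 2.0 * err[:, None] * coef[1:][None, :] * phi             # (n, m)
        grad_c = (g[:, :, None] * diff).sum(axis=0) / shapes[:, None] ** 2
        grad_s = (g * d2).sum(axis=0) / shapes ** 3
        # Step 6: assumed step size, so that no parameter moves more than max_move.
        eta = max_move / (max(np.abs(grad_c).max(), np.abs(grad_s).max()) + 1e-12)
        # Step 7: gradient-descent update (stand-in for Eqs. (17) and (18)).
        centers = centers - eta * grad_c
        shapes = np.maximum(shapes - eta * grad_s, 0.05)  # keep shapes positive
    return best

def predict(X, model):
    """Evaluate the saved model on new inputs."""
    A = rbf_design(X, model["centers"], model["shapes"])[0]
    return A @ model["coef"]
```

Calling `train_rbf(X, y)` on an input array of shape `(n_samples, n_features)` returns the best parameter set found, which `predict` can then evaluate. The default `patience` mirrors the 500,000-iteration threshold in Step 5; a much smaller value is more practical when experimenting with short runs.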

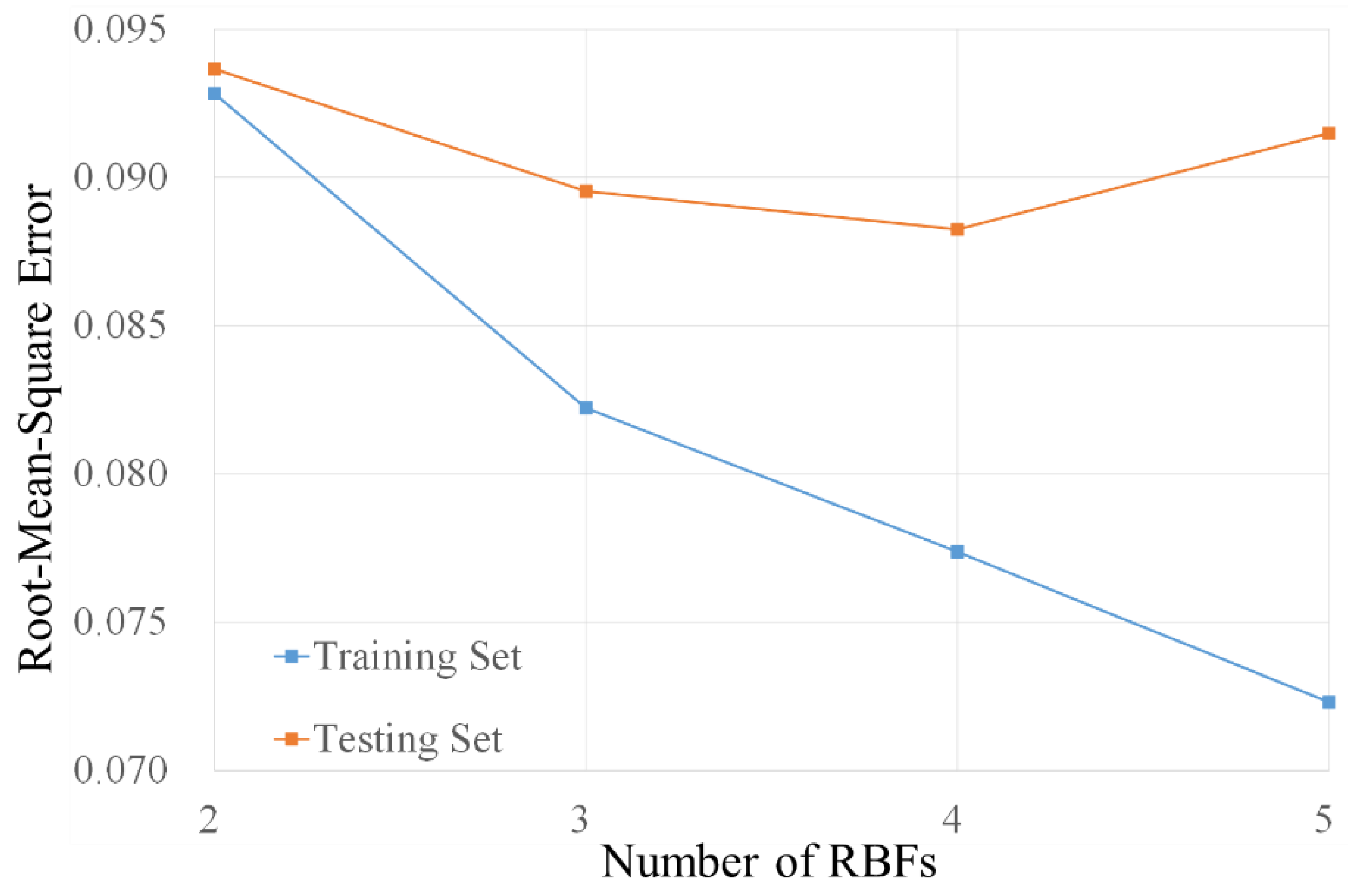

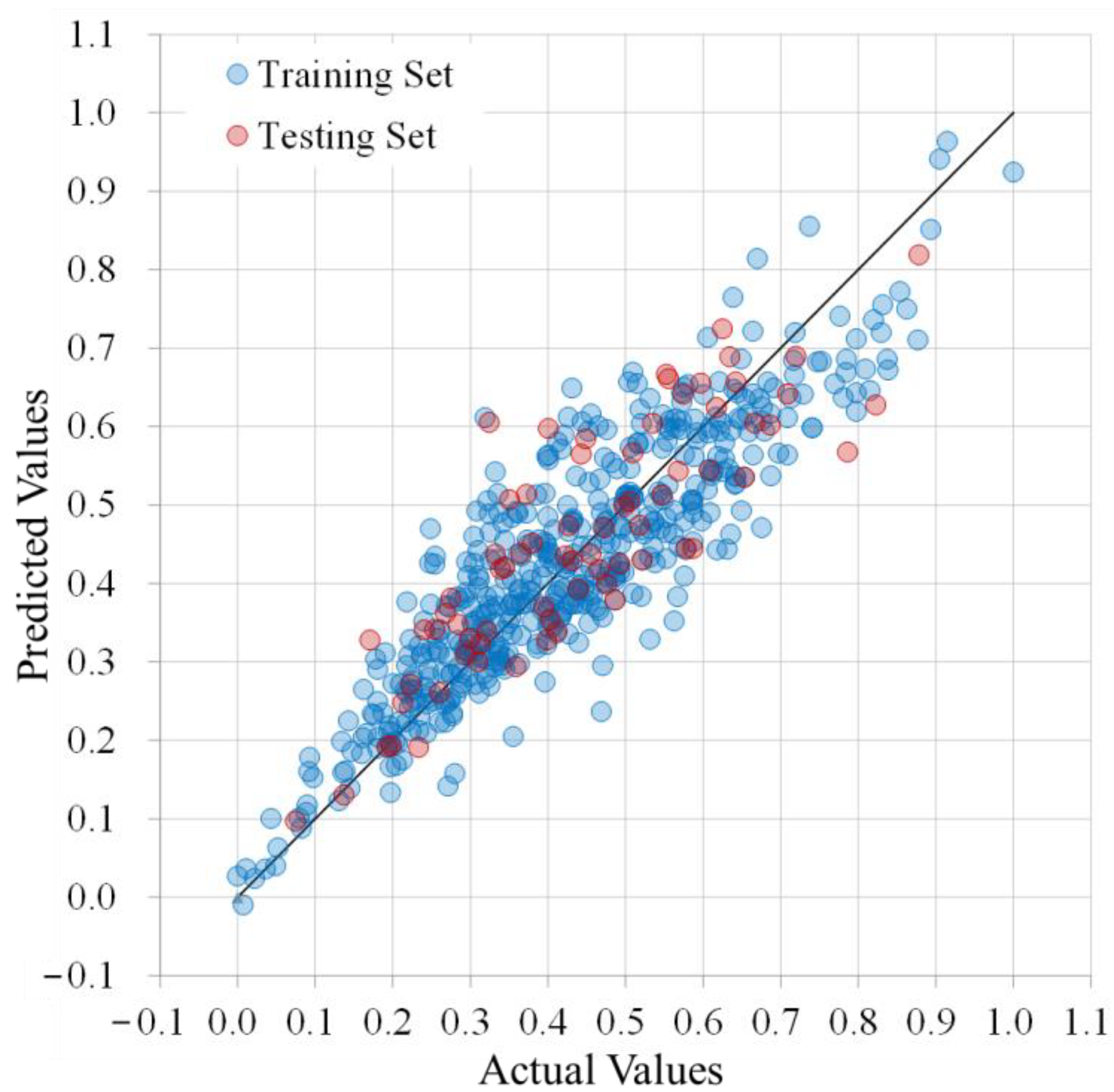

5. The Results of Training and Testing

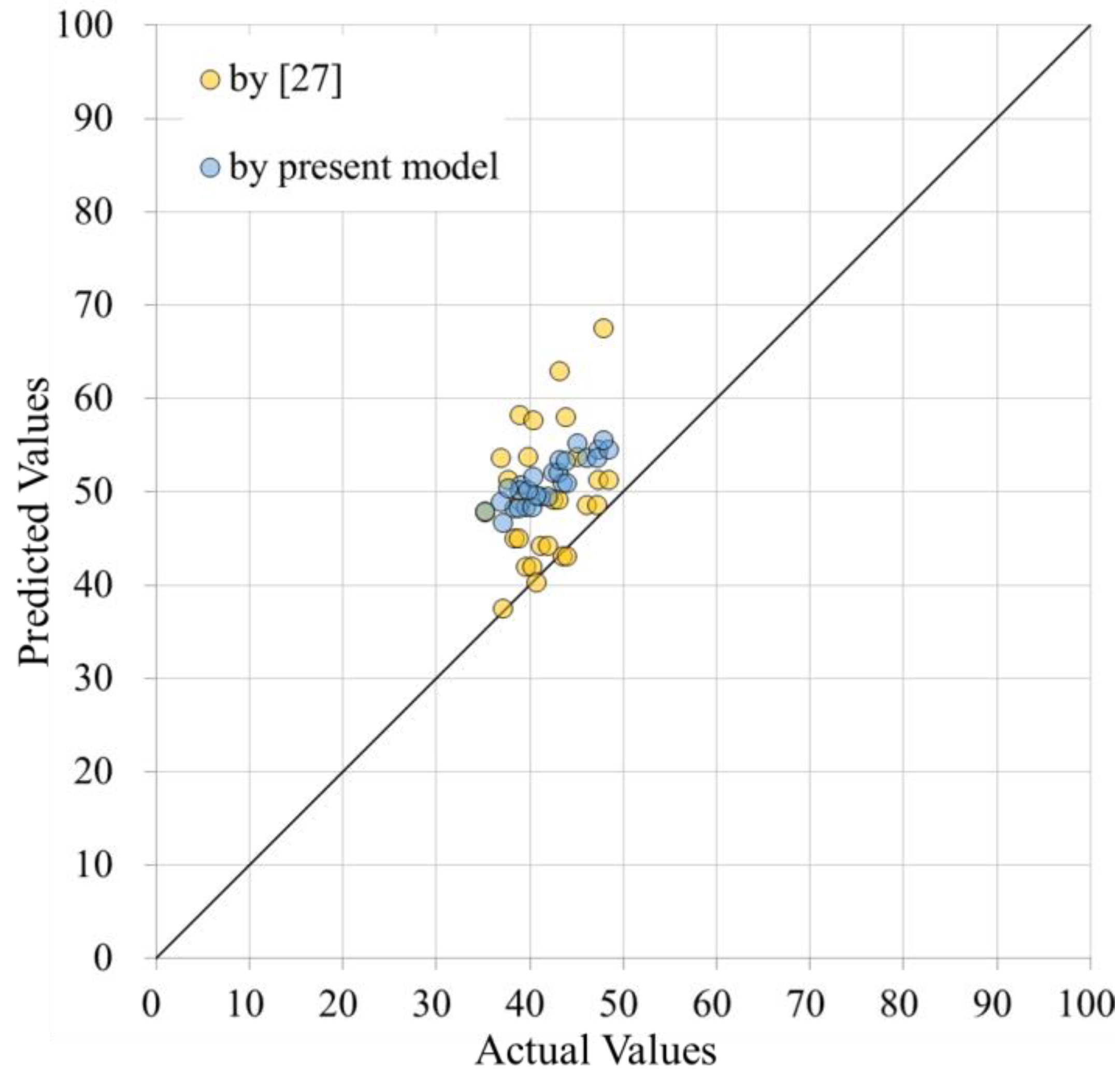

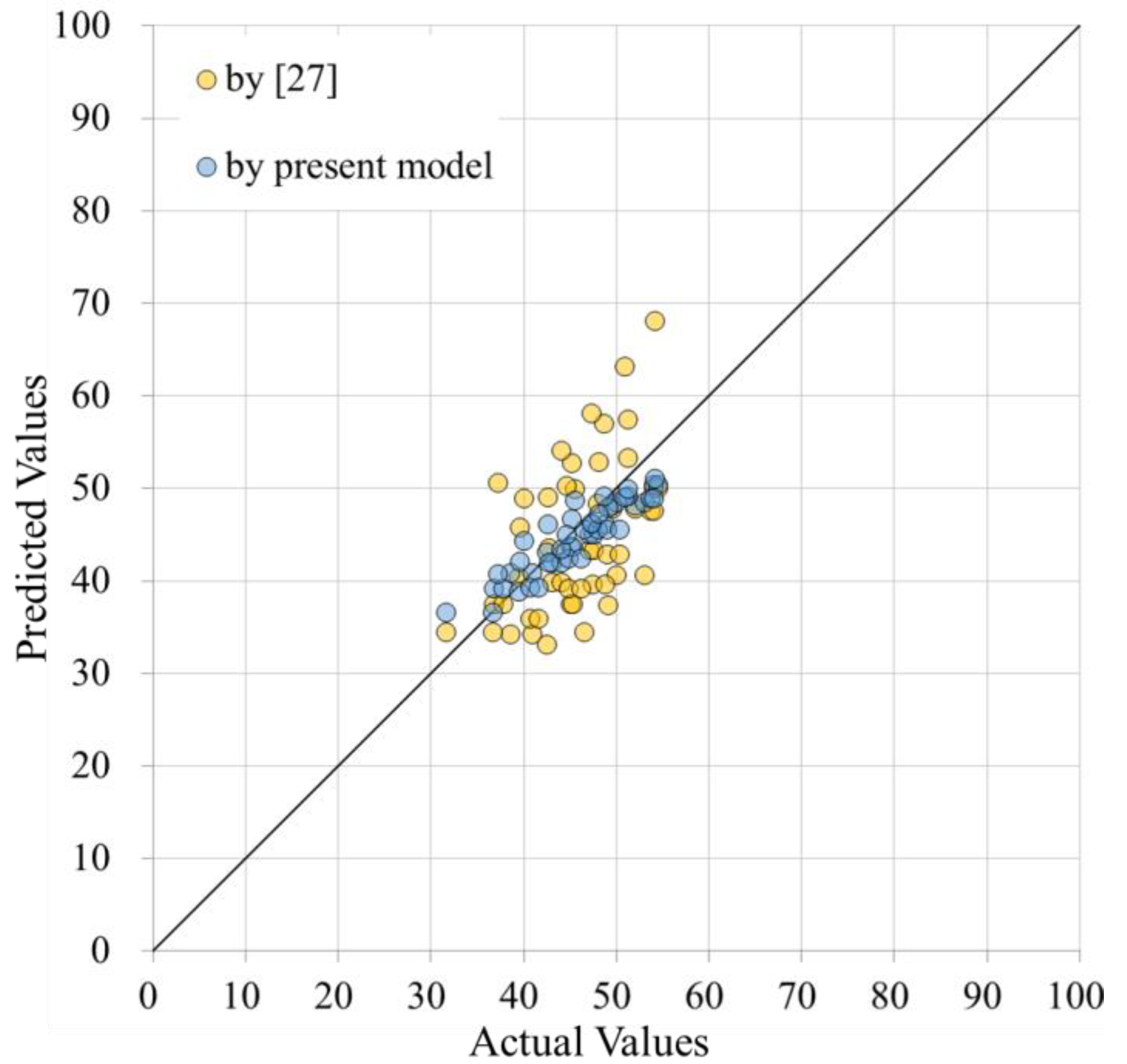

6. The Verification

7. Conclusions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Factors | | Symbol | Maximum | Minimum | Unit |
|---|---|---|---|---|---|
| Inputs | water | x1 | 295 | 116.5 | kg/m³ |
| | cement | x2 | 643 | 74 | kg/m³ |
| | fine aggregate (sand) | x3 | 1293 | 30 | kg/m³ |
| | coarse aggregate (gravel) | x4 | 1226 | 436 | kg/m³ |
| | blast furnace slag | x5 | 440 | 0 | kg/m³ |
| | fly ash | x6 | 330 | 0 | kg/m³ |
| | superplasticizer | x7 | 27.17 | 0 | kg/m³ |
| Output | 28-day compressive strength | y | 95.3 | 5 | MPa |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wu, N.-J. Predicting the Compressive Strength of Concrete Using an RBF-ANN Model. Appl. Sci. 2021, 11, 6382. https://doi.org/10.3390/app11146382