Use Learnable Knowledge Graph in Dialogue System for Visually Impaired Macro Navigation

Abstract

Featured Application


1. Introduction

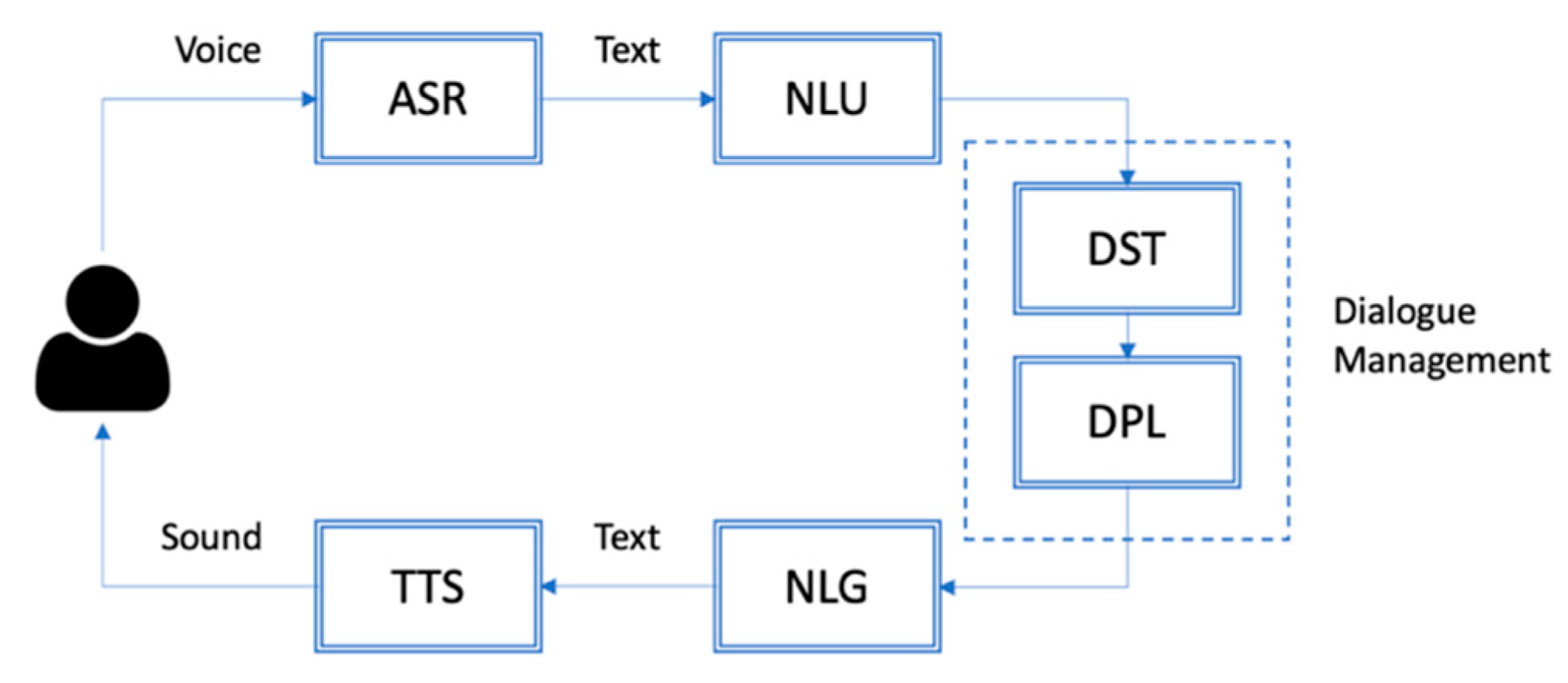

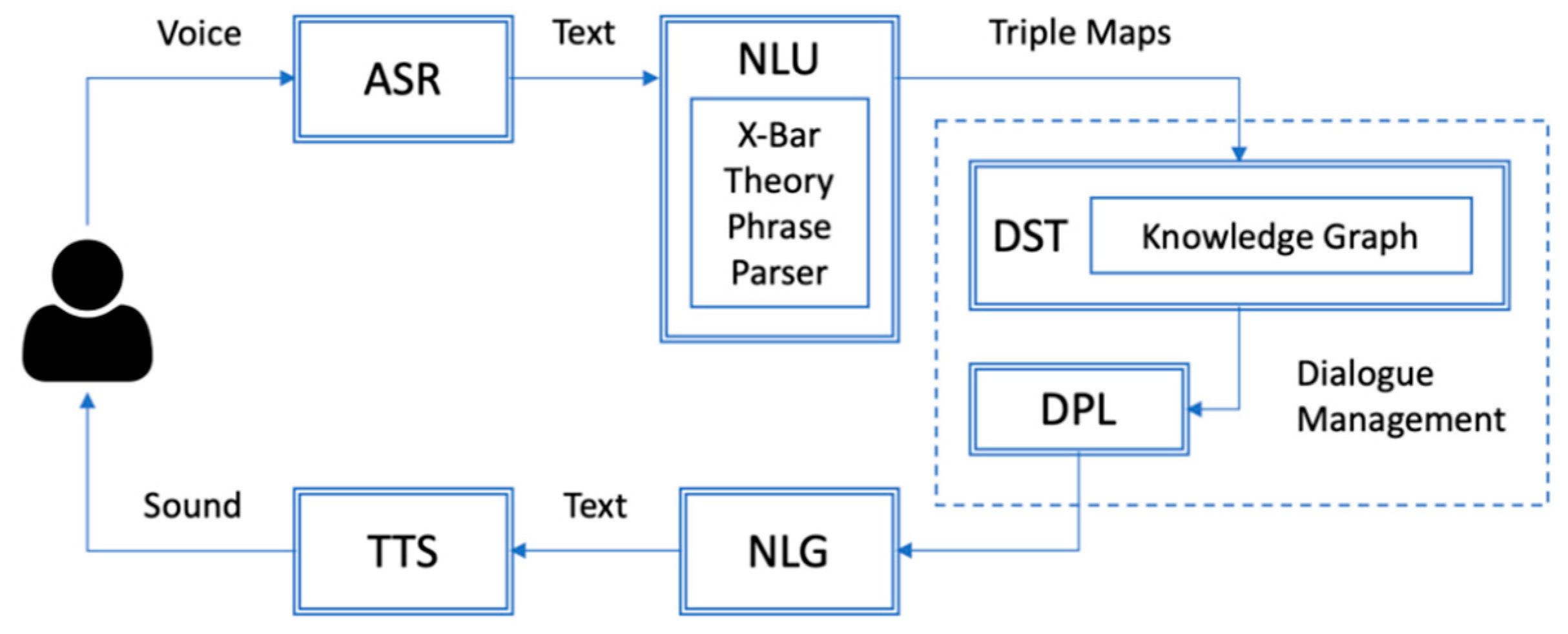

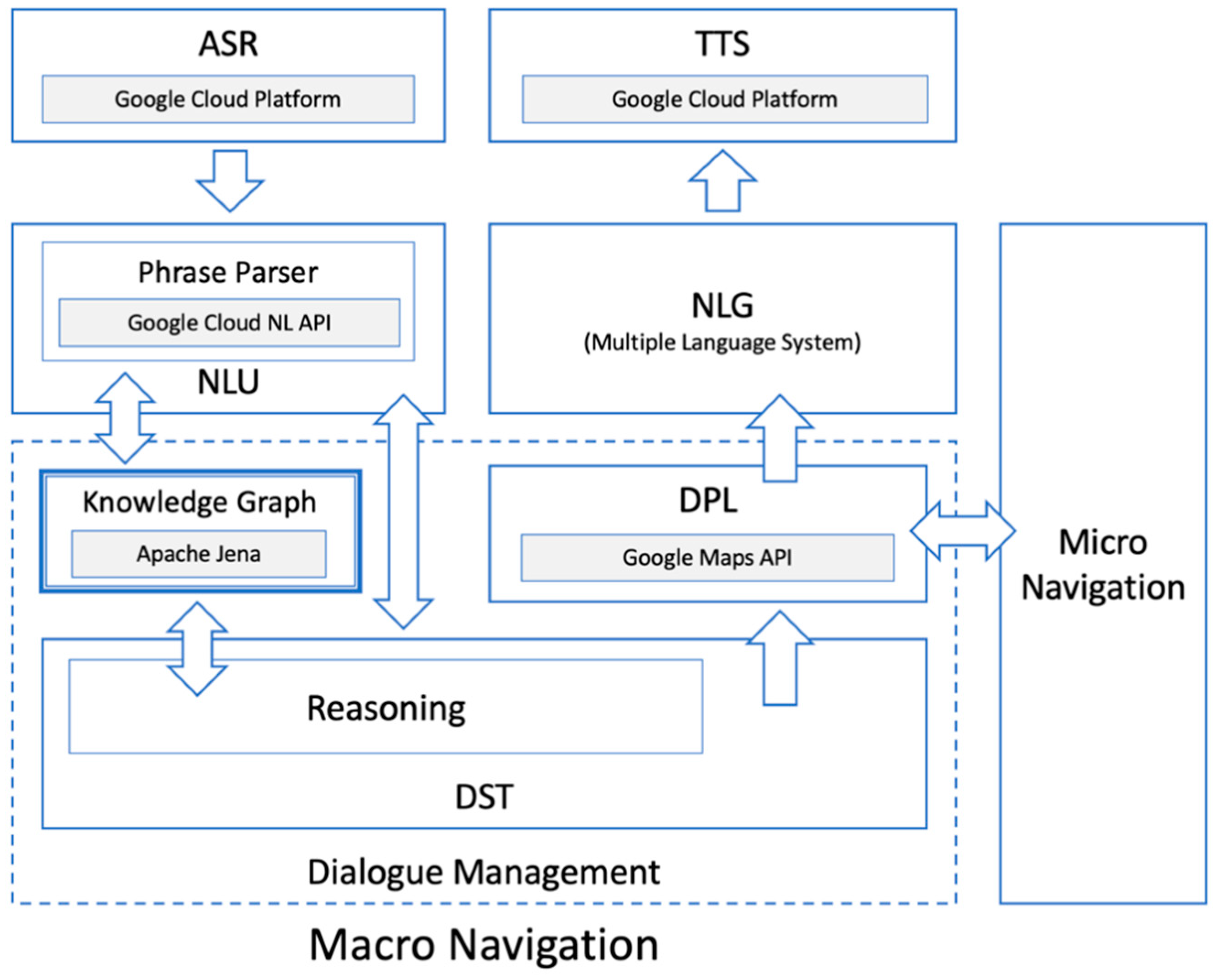

2. Methods

- (1) NLU (natural language understanding): Maps the natural-language sentences spoken by the user into machine-readable, structured semantic representations.

- (2) DST (dialogue state tracking): Tracks the user's needs and determines the current dialogue state. It combines the user's current input with the entire conversation history so that the meaning of each utterance can be inferred from context. This is the most important module in the dialogue system.

- (3) DPL (dialogue policy learning): Determines the system's next action from the current dialogue state; this step is also known as strategy optimization. The chosen action must match the user's intention.

- (4) NLG (natural language generation): Converts the action chosen by the DPL into a natural-language response, which the text-to-speech (TTS) module then speaks to the user.
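The four modules form a chain in which each module's output is the next module's input. The Python sketch below illustrates only that data flow under simplifying assumptions. The keyword rules, the `SemanticFrame` and `DialogueState` fields, the action names, and the response templates are all hypothetical stand-ins for trained components. This is not the authors' implementation, and no TTS engine is called.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class SemanticFrame:
    """Structured meaning produced by the NLU step (hypothetical schema)."""
    intent: str                        # "greet", "navigate", "confirm" or "unknown"
    destination: Optional[str] = None  # slot filled only for "navigate"


@dataclass
class DialogueState:
    """What the DST remembers across turns."""
    history: List[SemanticFrame] = field(default_factory=list)
    destination: Optional[str] = None


NAVIGATE_PREFIXES = ("i would like to go to ", "please take me to ", "take me to ")


def nlu(utterance: str) -> SemanticFrame:
    """NLU: map a raw sentence to a frame using toy keyword rules."""
    cleaned = utterance.strip().rstrip(".?!")
    lowered = cleaned.lower()
    if lowered.startswith("hi"):
        return SemanticFrame("greet")
    if lowered == "yes" or lowered.startswith("okay"):
        return SemanticFrame("confirm")
    for prefix in NAVIGATE_PREFIXES:
        if lowered.startswith(prefix):
            # Slice the original text so the place name keeps its capitalization.
            return SemanticFrame("navigate", cleaned[len(prefix):])
    return SemanticFrame("unknown")


def dst(state: DialogueState, frame: SemanticFrame) -> DialogueState:
    """DST: merge the new frame into the accumulated dialogue state."""
    state.history.append(frame)
    if frame.intent == "navigate":
        state.destination = frame.destination
    return state


def dpl(state: DialogueState, frame: SemanticFrame) -> str:
    """DPL: choose the next system action from the current state."""
    if frame.intent == "greet":
        return "offer_help"
    if frame.intent in ("navigate", "confirm") and state.destination:
        return "start_navigation"
    return "ask_again"


TEMPLATES = {
    "offer_help": "Hi, is there anything I can help you with?",
    "start_navigation": "Let's go now.",
    "ask_again": "Sorry, could you say that again?",
}


def nlg(action: str) -> str:
    """NLG: turn the chosen action into a sentence (a real system would send it to TTS)."""
    return TEMPLATES[action]


def turn(state: DialogueState, utterance: str) -> str:
    """Run one user turn through NLU -> DST -> DPL -> NLG."""
    frame = nlu(utterance)
    state = dst(state, frame)
    return nlg(dpl(state, frame))


if __name__ == "__main__":
    state = DialogueState()
    print(turn(state, "Hi, partner."))
    print(turn(state, "I would like to go to Seven-Eleven Xue Cheng Store."))
    print(state.destination)
```

Keeping the whole history in `DialogueState`, not just the last frame, is what lets a follow-up such as "Okay, please take me there" trigger navigation even though the destination was named in an earlier turn.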

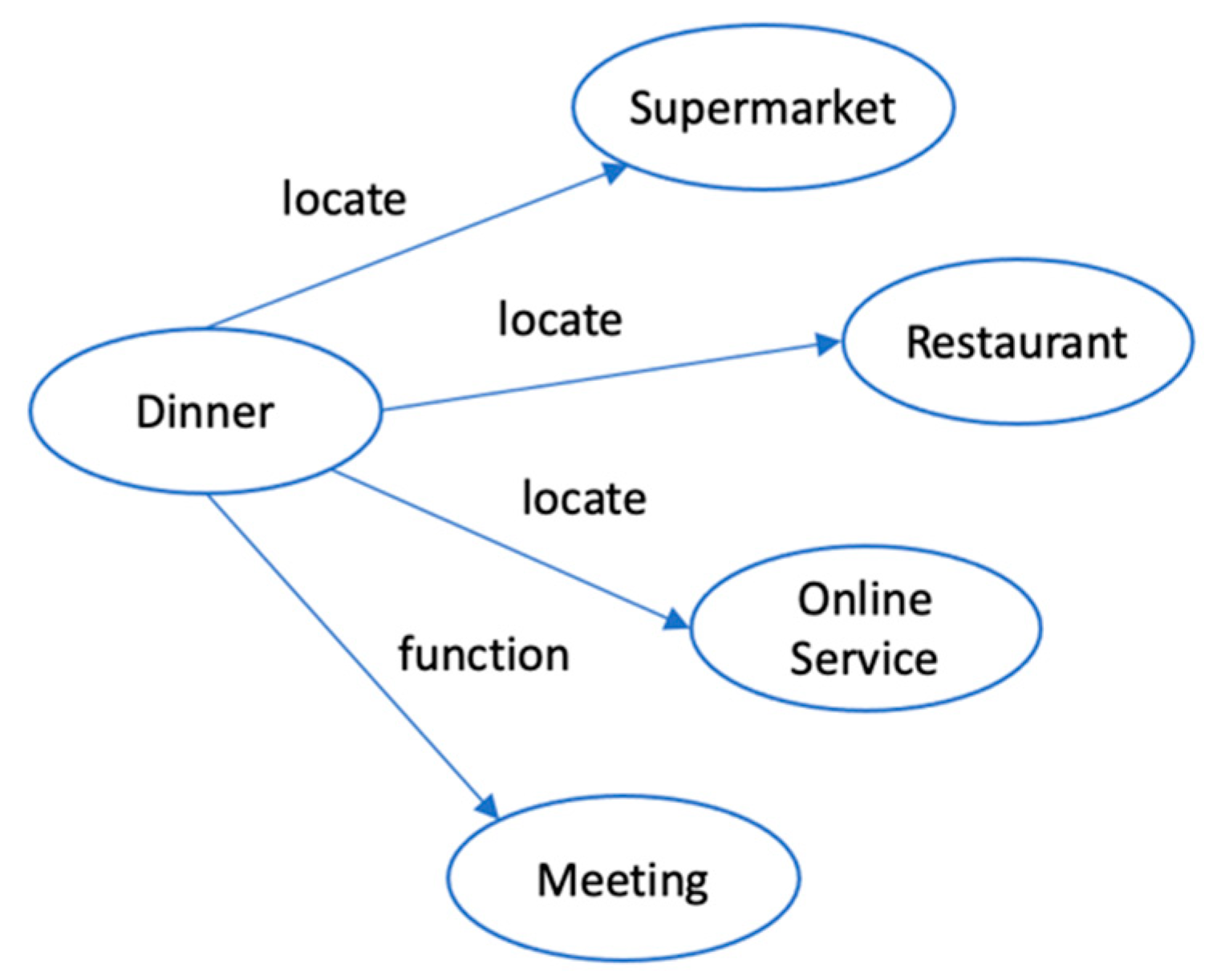

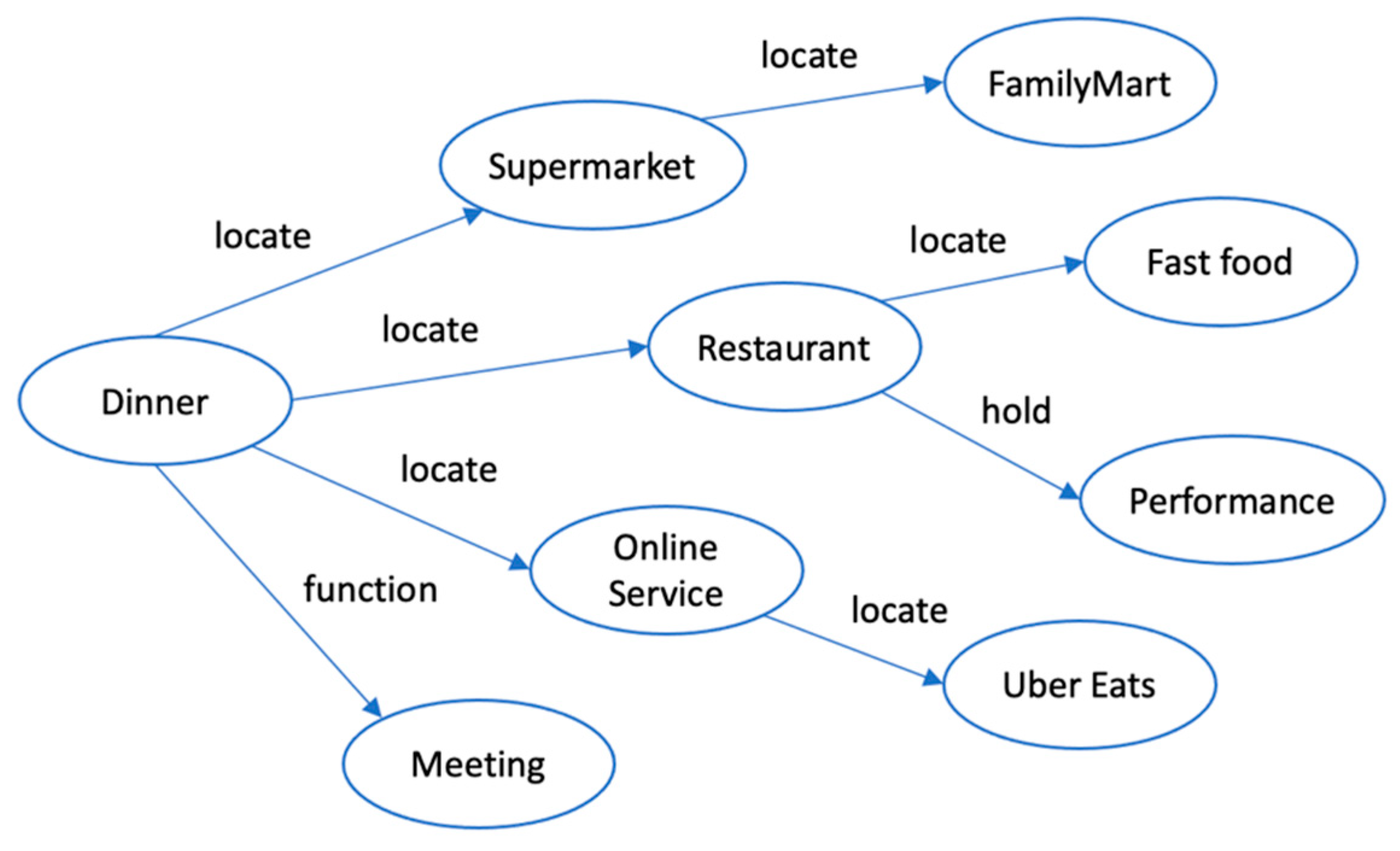

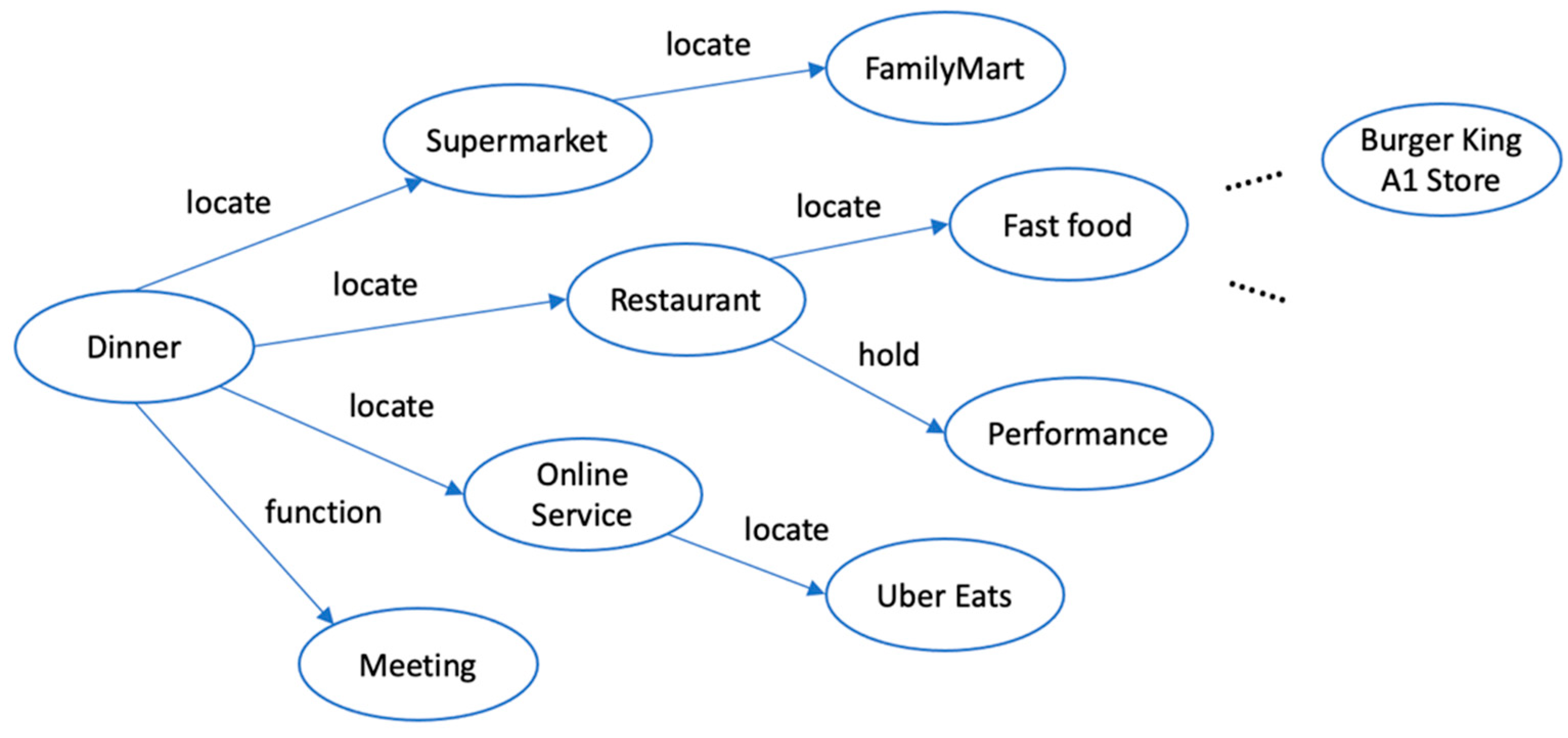

2.1. Knowledge Graph Integration

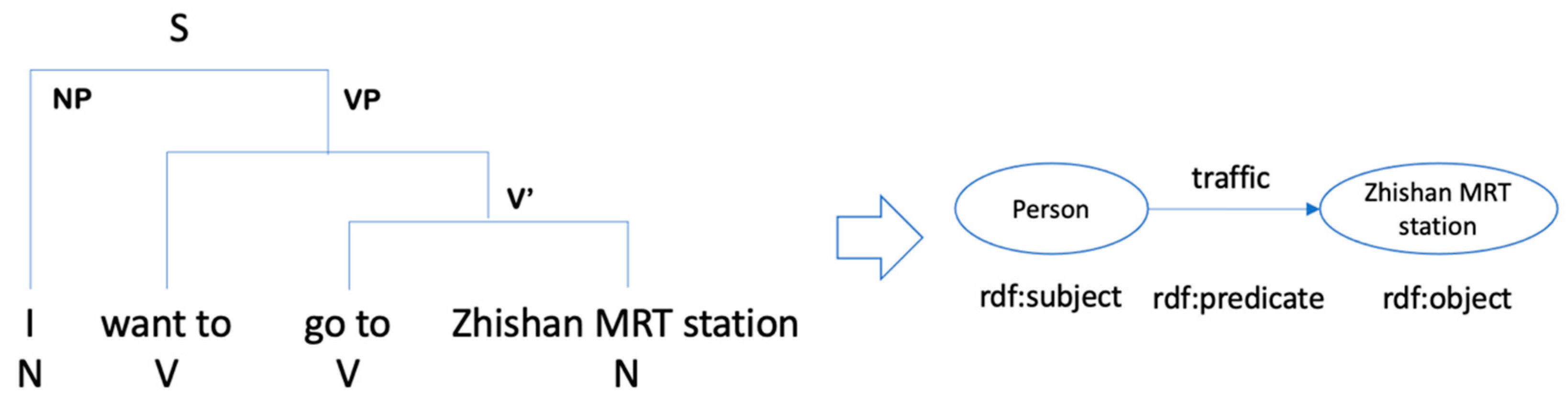

2.2. Syntax Analysis

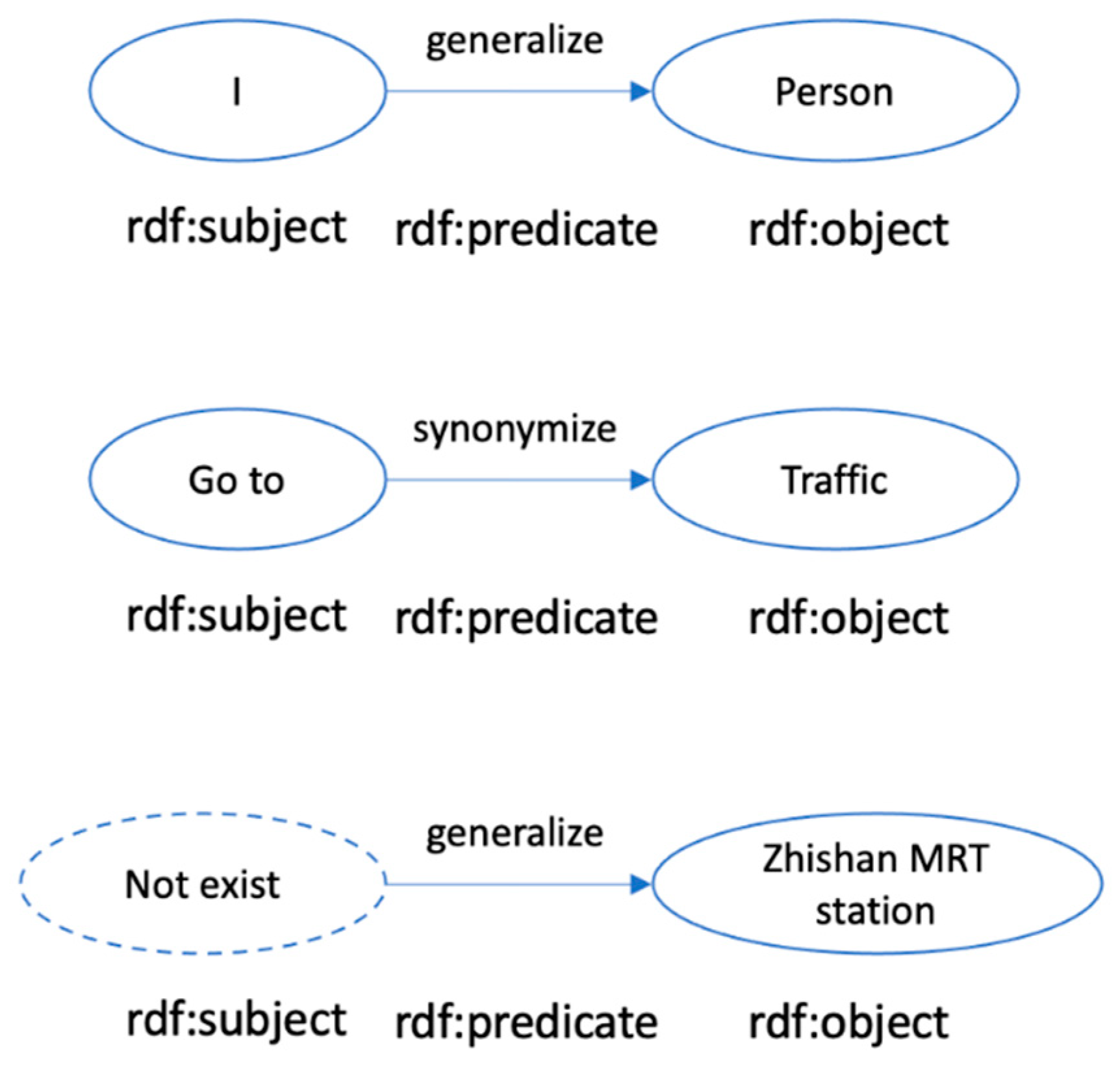

2.3. Reasoning with Knowledge Graph

3. Results

3.1. Dialogue Experiment

3.2. Outdoor Test

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Acknowledgments

Conflicts of Interest


| Speaker | Words | Action |
|---|---|---|
| User | Hi, partner. | System activated |
| Navigator | Hi, is there anything I can help? | |
| User | I would like to go to Seven-Eleven Xue Cheng Store. | Reasoning |
| Navigator | Let’s go now. | Start micro-navigation |

| Speaker | Words | Action |
|---|---|---|
| User | Hi, partner. | System activated |
| Navigator | Hi, is there anything I can help? | |
| User | I am going to work. | |
| Navigator | Your most frequent working place is Engineering Building 5. Are you going there? | Reasoning |
| User | Okay, please take me there. | |
| Navigator | Let’s go now. | Start micro-navigation |
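In this dialogue the navigator turns the vague goal "I am going to work" into a concrete suggestion. Below is a minimal sketch of how a learnable graph could support that inference. It assumes each completed trip is recorded as a counted (subject, relation, object) edge and that the most frequently observed object is proposed. The class `LearnableKG`, the relation names `worksAt` and `eatsAt`, and the trip log in the usage lines are hypothetical. A plain Python dictionary stands in for whatever graph store the system actually uses.

```python
from collections import Counter, defaultdict
from typing import Optional


class LearnableKG:
    """Toy knowledge graph whose (subject, relation, object) edges carry counts.

    Every completed trip is recorded as one observation, so the graph
    "learns" which object is most typical for a subject and relation.
    """

    def __init__(self) -> None:
        # (subject, relation) -> Counter mapping object -> times observed
        self._edges = defaultdict(Counter)

    def observe(self, subject: str, relation: str, obj: str) -> None:
        """Record one observed fact, e.g. the user travelled to a workplace."""
        self._edges[(subject, relation)][obj] += 1

    def most_frequent(self, subject: str, relation: str) -> Optional[str]:
        """Return the object seen most often for this subject and relation."""
        counts = self._edges.get((subject, relation))  # .get avoids creating empty entries
        if not counts:
            return None
        obj, _ = counts.most_common(1)[0]
        return obj


# Hypothetical mapping from a vague user goal to (relation, spoken label).
GOAL_TO_RELATION = {
    "work": ("worksAt", "working place"),
    "dinner": ("eatsAt", "dinner place"),
}


def propose(kg: LearnableKG, user: str, goal: str) -> Optional[str]:
    """Turn a vague goal such as "going to work" into a confirmation question."""
    relation, label = GOAL_TO_RELATION[goal]
    place = kg.most_frequent(user, relation)
    if place is None:
        return None  # nothing learned yet: the DPL would have to ask the user
    return f"Your most frequent {label} is {place}. Are you going there?"


if __name__ == "__main__":
    kg = LearnableKG()
    for place in ["Engineering Building 5", "Engineering Building 5", "Library"]:
        kg.observe("user", "worksAt", place)
    print(propose(kg, "user", "work"))
```

Because the counts keep accumulating, the suggestion follows the user's habits: after enough new trips to a different building, `most_frequent` returns that building instead.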

| Speaker | Words | Action |
|---|---|---|
| User | Hi, partner. | System activated |
| Navigator | Hi, is there anything I can help? | |
| User | I’m hungry, can you take me to dinner? | |
| Navigator | Would you like to find a restaurant for dinner? | Reasoning |
| User | Yes. | |
| Navigator | Okay, I will look for nearby restaurants for you now. | Search maps |
| Navigator | There is a Burger King A1 store nearby that you visited last week. Do you want to go? | Found target |
| User | No, can I go to a Chinese restaurant? | |
| Navigator | Okay, I will look for a nearby Chinese restaurant for you now. | Search maps |
| Navigator | There is a 4-star Manfulou restaurant nearby. Are you going to go? | Found target |
| User | Okay, please take me there. | |
| Navigator | Let’s go now. | Start micro-navigation |
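Here the navigator first suggests a restaurant the user already knows, then searches again with a cuisine constraint after the user declines. The sketch below shows one possible filter-and-rank step for that exchange. It is an assumption, not the authors' method: an in-memory list stands in for the map search results (no maps API is called), and the `Place` fields and the ranking rule (familiar places first, then map rating) are hypothetical. The Burger King rating and the third restaurant in the usage lines are illustrative values, not data from the experiment.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass(frozen=True)
class Place:
    name: str
    cuisine: str
    rating: float          # map rating, e.g. 4.0 for a 4-star place
    visited_before: bool   # taken from the user's trip history


def pick_restaurant(candidates: Iterable[Place],
                    cuisine: Optional[str] = None,
                    rejected: frozenset = frozenset()) -> Optional[Place]:
    """Pick one nearby restaurant to suggest.

    Places the user has already rejected are skipped, an optional cuisine
    filter is applied, and the remainder is ranked by familiarity first
    (visited before) and map rating second.
    """
    pool = [p for p in candidates
            if p.name not in rejected and (cuisine is None or p.cuisine == cuisine)]
    if not pool:
        return None
    # Tuples compare element-wise and True > False, so familiar places win.
    return max(pool, key=lambda p: (p.visited_before, p.rating))


if __name__ == "__main__":
    nearby = [
        Place("Burger King A1", "fast food", 3.8, True),       # illustrative rating
        Place("Manfulou", "Chinese", 4.0, False),
        Place("Chinese restaurant B", "Chinese", 3.5, False),  # hypothetical place
    ]
    first = pick_restaurant(nearby)
    print(first.name)   # Burger King A1
    second = pick_restaurant(nearby, cuisine="Chinese",
                             rejected=frozenset({first.name}))
    print(second.name)  # Manfulou
```

Passing the rejected names back in on each new search keeps the navigator from re-proposing a place the user has just turned down.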

| Speaker | Words | Location |
|---|---|---|
| User | Hi, partner. | |
| Navigator | Is there anything I can help? | |
| User | Please take me to the MRT station. | |
| Navigator | Okay, let me check, please wait. | |
| Navigator | There is Dayuan Pilot Station 200 m nearby. May I take you there? | |
| User | Yes, that’s right. | |
| Navigator | (DPL drives the micro-navigation) Please walk straight along the sidewalk. | |
| User | Hi, partner. | |
| Navigator | Hello, how can I help you? | |
| User | I want to go to the convenience store first. | |
| Navigator | Okay, let me check, please wait. | |
| Navigator | There is a FamilyMart convenience store 50 m ahead. You are on your way to the MRT station. Do you want to go to that convenience store first? | |
| User | Okay, please take me there. | |
| Navigator | You have arrived at FamilyMart convenience store, please let me know if you want to continue to the MRT station. | |
| (Later) | | |
| User | Hi, partner. | |
| Navigator | Hello, how can I help you? | |
| User | I am going to the MRT station. | |
| Navigator | Okay, now I will continue to take you to Dayuan Pilot Station. | |
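In this dialogue the user interrupts a trip to the MRT station for a convenience-store stop and later resumes it by saying only "I am going to the MRT station". One way a DST could keep both goals is a stack: the main destination sits at the bottom and detours are pushed on top. The sketch below is written under that assumption and is not the authors' implementation. The class `NavigationGoals`, its method names, and the category strings are hypothetical.

```python
from typing import List, Optional, Tuple


class NavigationGoals:
    """Stack of (place, category) goals kept by the dialogue state tracker.

    The bottom entry is the main destination; detours are pushed on top so
    that reaching a detour automatically exposes the trip that was paused.
    """

    def __init__(self) -> None:
        self._stack: List[Tuple[str, str]] = []

    @property
    def current(self) -> Optional[str]:
        """Name of the goal currently being navigated to, if any."""
        return self._stack[-1][0] if self._stack else None

    def set_destination(self, place: str, category: str) -> None:
        """Start a new trip, discarding any earlier plan."""
        self._stack = [(place, category)]

    def add_detour(self, place: str, category: str) -> None:
        """Insert an intermediate stop before the current goal."""
        self._stack.append((place, category))

    def arrive(self) -> Optional[str]:
        """Mark the current goal as reached; return the paused goal, if any."""
        if self._stack:
            self._stack.pop()
        return self.current

    def find_pending(self, category: str) -> Optional[str]:
        """Resolve a generic request ("the MRT station") to a pending goal."""
        for place, cat in reversed(self._stack):
            if cat == category:
                return place
        return None


if __name__ == "__main__":
    goals = NavigationGoals()
    goals.set_destination("Dayuan Pilot Station", "MRT station")
    goals.add_detour("FamilyMart", "convenience store")
    print(goals.current)                      # FamilyMart
    print(goals.arrive())                     # Dayuan Pilot Station
    print(goals.find_pending("MRT station"))  # Dayuan Pilot Station
```

A stack fits because detours nest in last-in, first-out order: the stop added most recently is the first one reached, after which the earlier goal becomes current again without the user having to repeat its name.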

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Chen, C.-H.; Shiu, M.-F.; Chen, S.-H. Use Learnable Knowledge Graph in Dialogue System for Visually Impaired Macro Navigation. Appl. Sci. 2021, 11, 6057. https://doi.org/10.3390/app11136057
