Autonomous Mobile Robot Navigation in Sparse LiDAR Feature Environments

Abstract

1. Introduction

1.1. Path Planning and Trajectory-Tracking Algorithms

1.2. AGV Localization Algorithms

1.3. Contributions

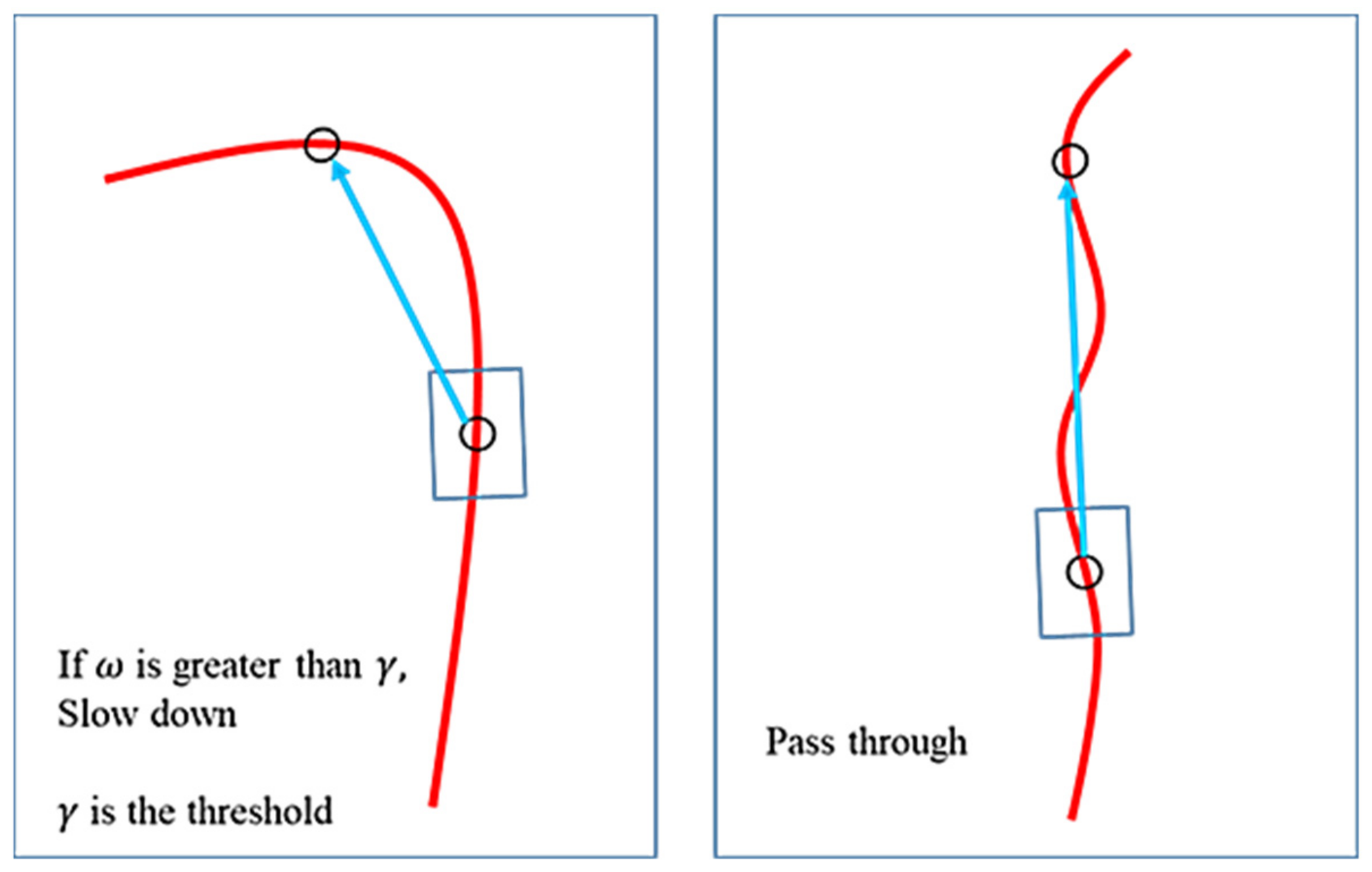

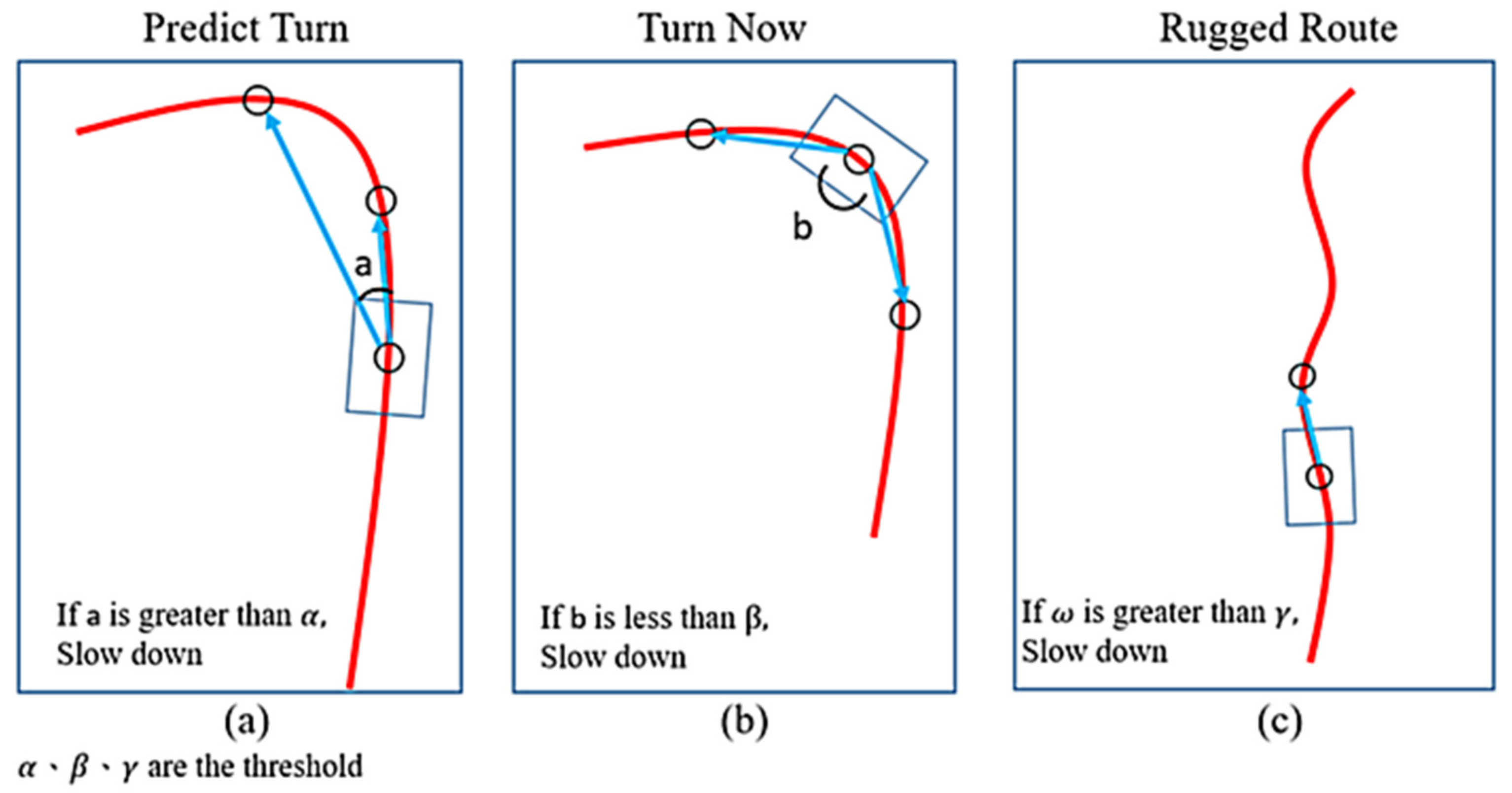

- For trajectory tracking, we adopt the Pure Pursuit (PP) algorithm and improve it. The traditional PP algorithm often produces tracking errors at turns because it decelerates too late. We therefore propose an improved PP algorithm that incorporates turning prediction-based deceleration to reduce the impact of late deceleration.

- To solve the kidnapped-robot problem, we combine 2D LiDAR point-cloud features with a deep convolutional network-based classifier that identifies the current situation and selects either the SLAM or the odometry localization system. Thus, if the AGV is in a situation where SLAM fails to determine the robot position, the task can still continue.

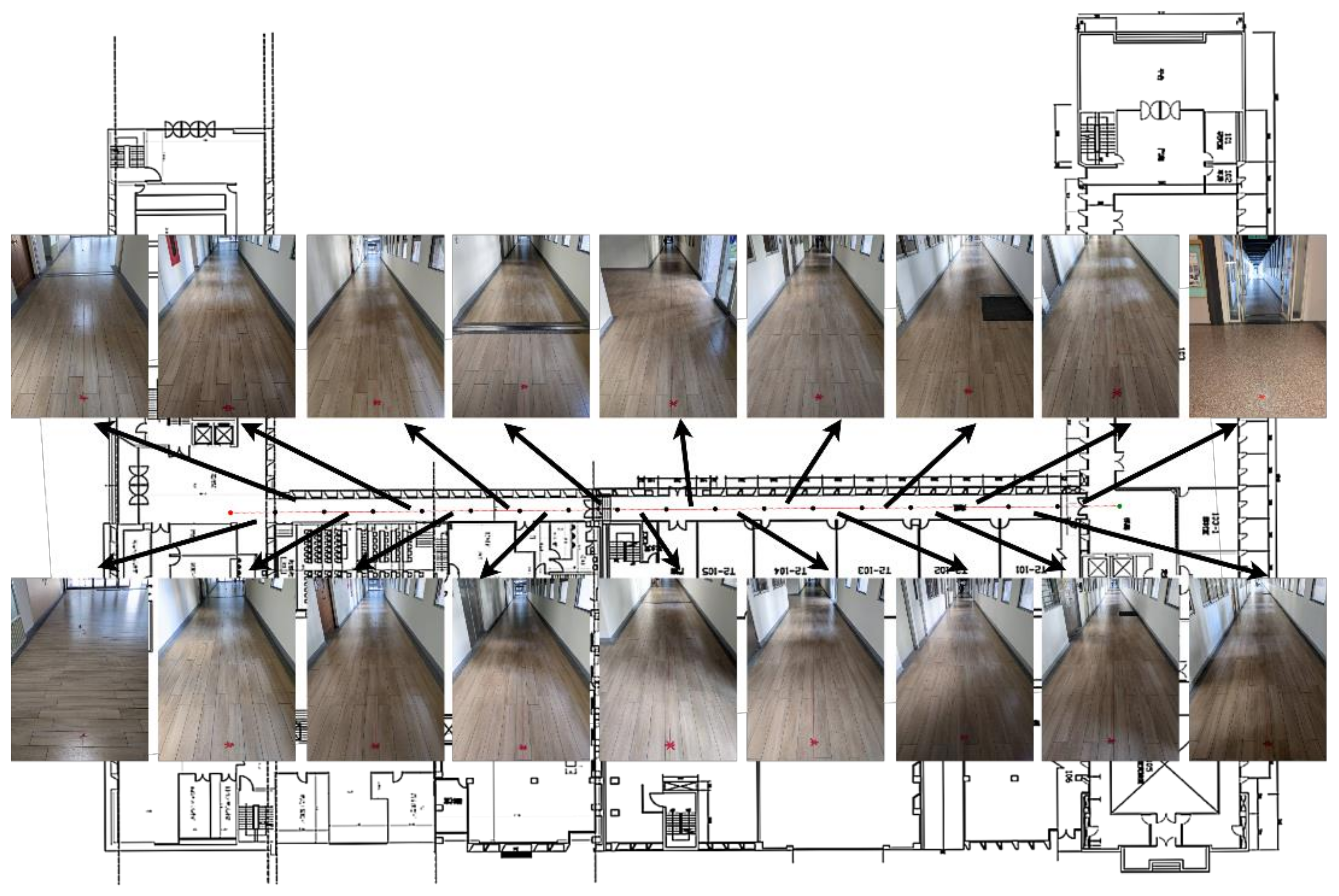

- In addition, practical experiments in long-corridor terrain are carried out to verify the feasibility of the proposed system.

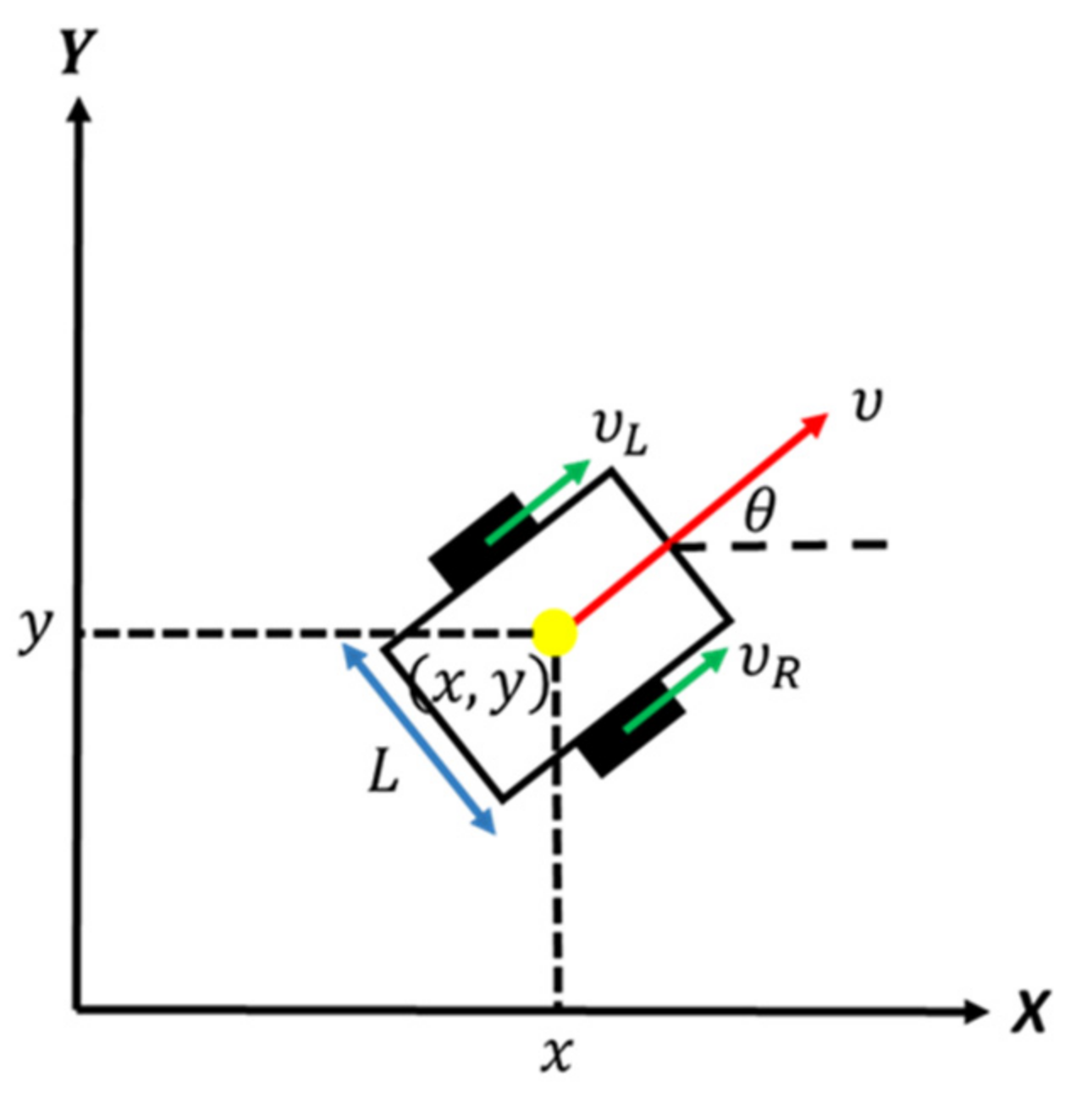

2. Robot System

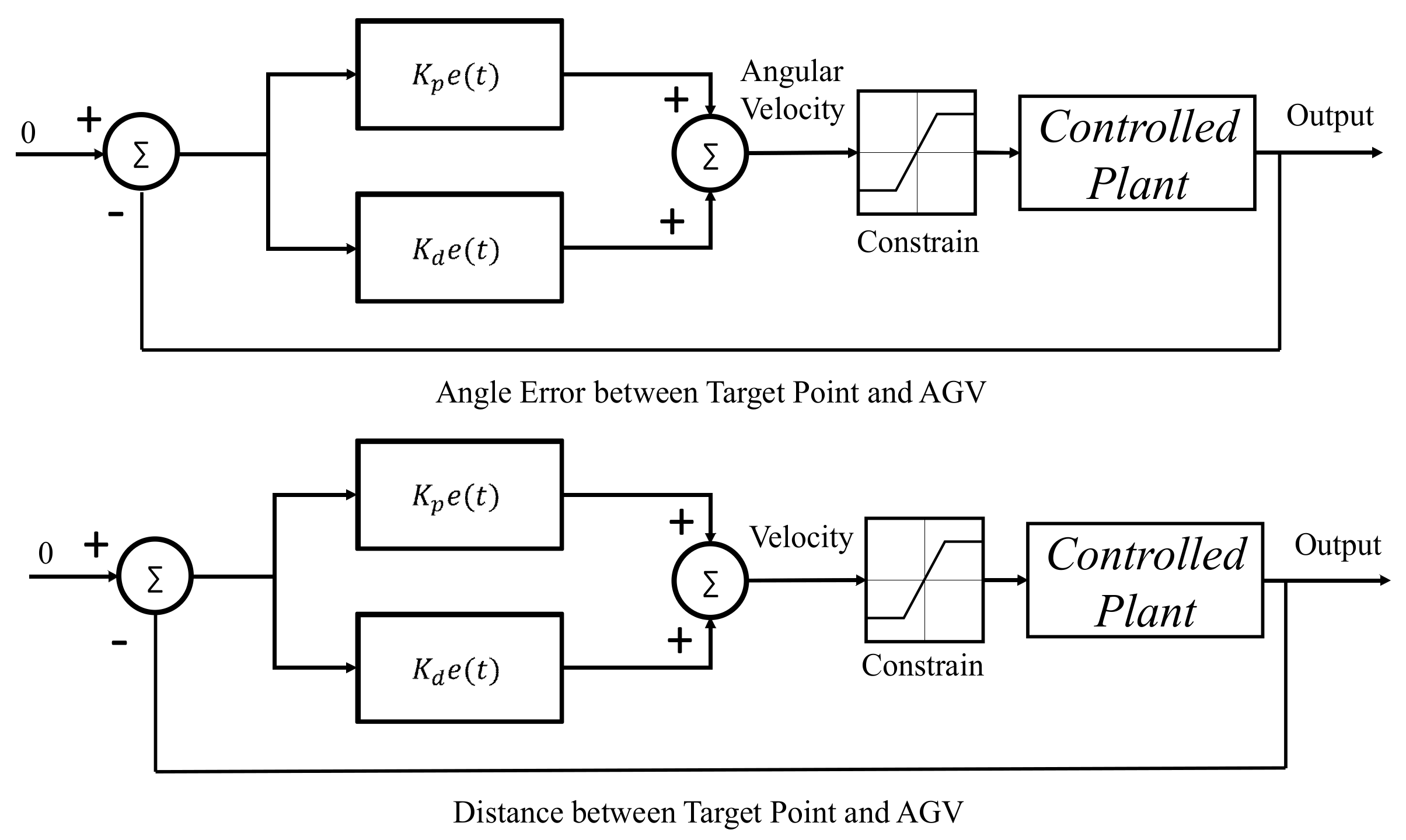

3. Design of Trajectory-Tracking System

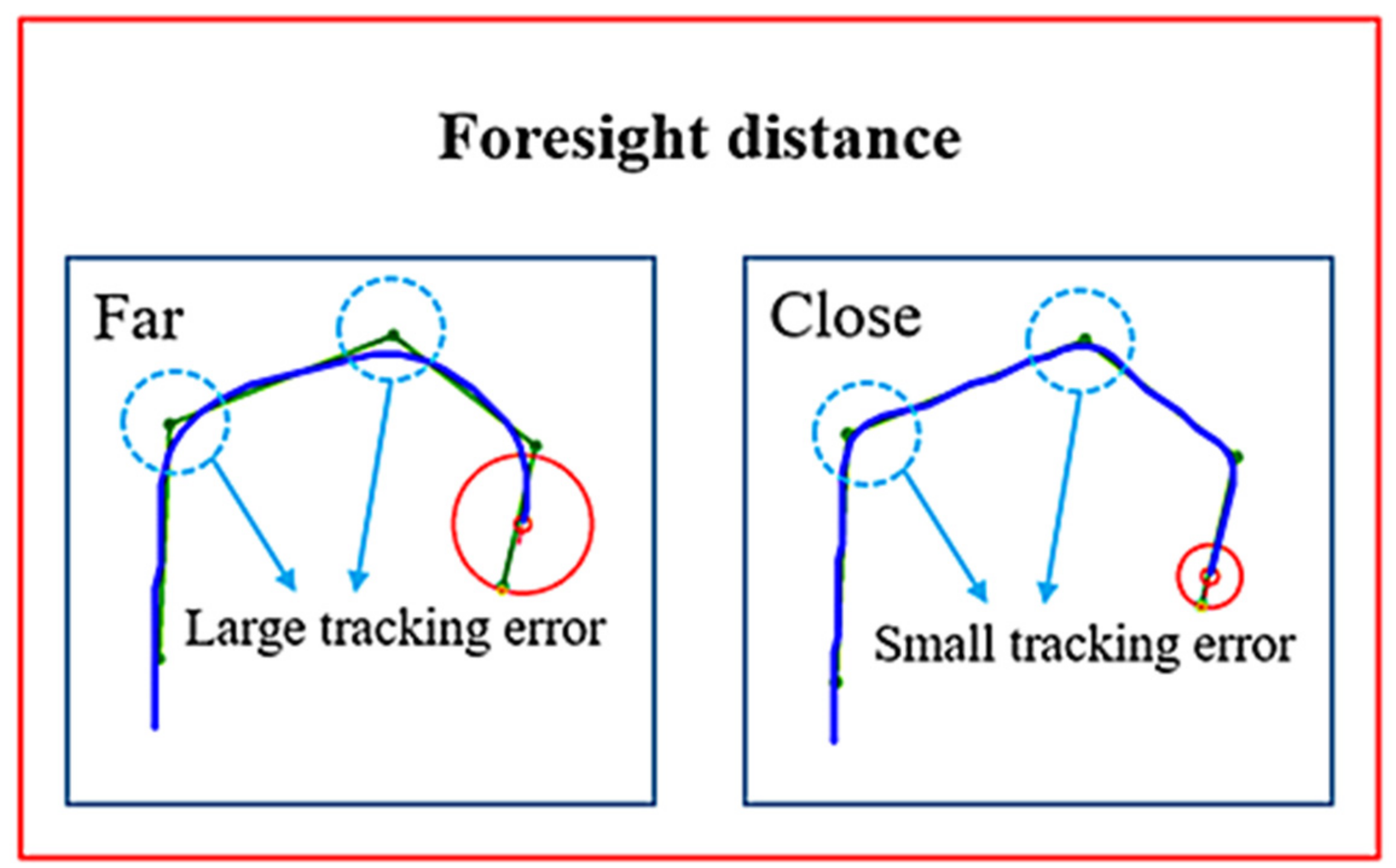

3.1. Introduction of Pure Pursuit (PP) Algorithm

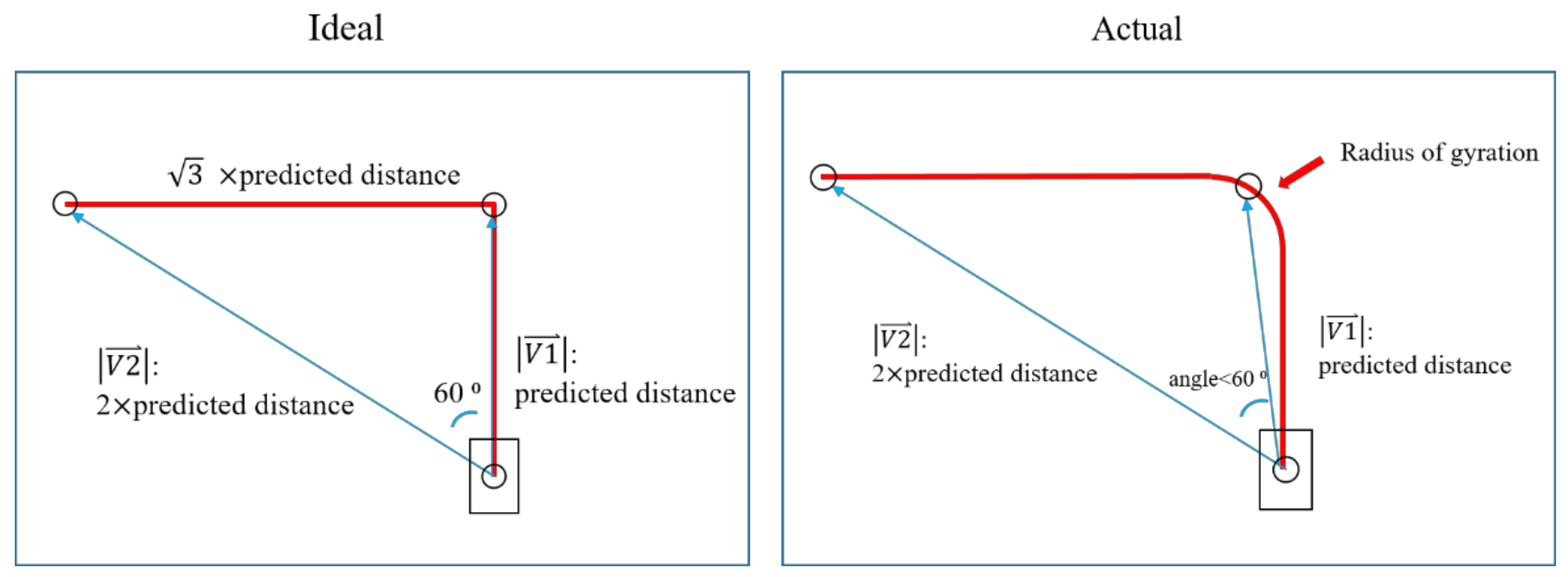

3.2. Improved Pure Pursuit Algorithm
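
The turning prediction-based deceleration named in the contributions can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the curvature formula is the standard pure pursuit relation (curvature = 2 sin(alpha) / look-ahead distance), while the function names, the preview distance, the heading-change threshold, and the two speed levels are hypothetical values chosen only for illustration.

```python
import math


def pure_pursuit_curvature(pose, target):
    """Standard pure pursuit: curvature of the arc joining the robot to the look-ahead point.

    pose is (x, y, yaw) in the world frame; target is the (x, y) look-ahead point.
    """
    x, y, yaw = pose
    dx, dy = target[0] - x, target[1] - y
    lookahead = math.hypot(dx, dy)
    if lookahead < 1e-9:
        return 0.0
    alpha = math.atan2(dy, dx) - yaw  # bearing of the target relative to the heading
    return 2.0 * math.sin(alpha) / lookahead


def upcoming_turn_angle(path, start_idx, preview_dist):
    """Largest heading change (rad) between consecutive path segments, scanning
    forward from start_idx until roughly preview_dist of path has been covered."""
    travelled = 0.0
    max_turn = 0.0
    i = start_idx
    while i + 2 < len(path) and travelled < preview_dist:
        h1 = math.atan2(path[i + 1][1] - path[i][1], path[i + 1][0] - path[i][0])
        h2 = math.atan2(path[i + 2][1] - path[i + 1][1], path[i + 2][0] - path[i + 1][0])
        # Wrap the difference to [-pi, pi] before taking its magnitude.
        turn = abs(math.atan2(math.sin(h2 - h1), math.cos(h2 - h1)))
        max_turn = max(max_turn, turn)
        travelled += math.hypot(path[i + 1][0] - path[i][0], path[i + 1][1] - path[i][1])
        i += 1
    return max_turn


def target_speed(path, idx, v_max=0.5, v_turn=0.2, preview_dist=1.0,
                 turn_threshold=math.radians(20)):
    """Assumed two-level speed profile: slow down as soon as a turn is predicted ahead."""
    if upcoming_turn_angle(path, idx, preview_dist) > turn_threshold:
        return v_turn
    return v_max


# L-shaped path with 1 m spacing: straight along x, then a 90-degree left turn.
path = [(0, 0), (1, 0), (2, 0), (3, 0), (3, 1), (3, 2)]
print(target_speed(path, 0), target_speed(path, 2))
```

With a larger `preview_dist` the speed drops earlier before the corner, which is the behavior the deceleration prediction is meant to provide; the traditional algorithm corresponds to always returning `v_max` until the turn has already started.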

4. The Localization Switching Method in the Structure-Less Environment

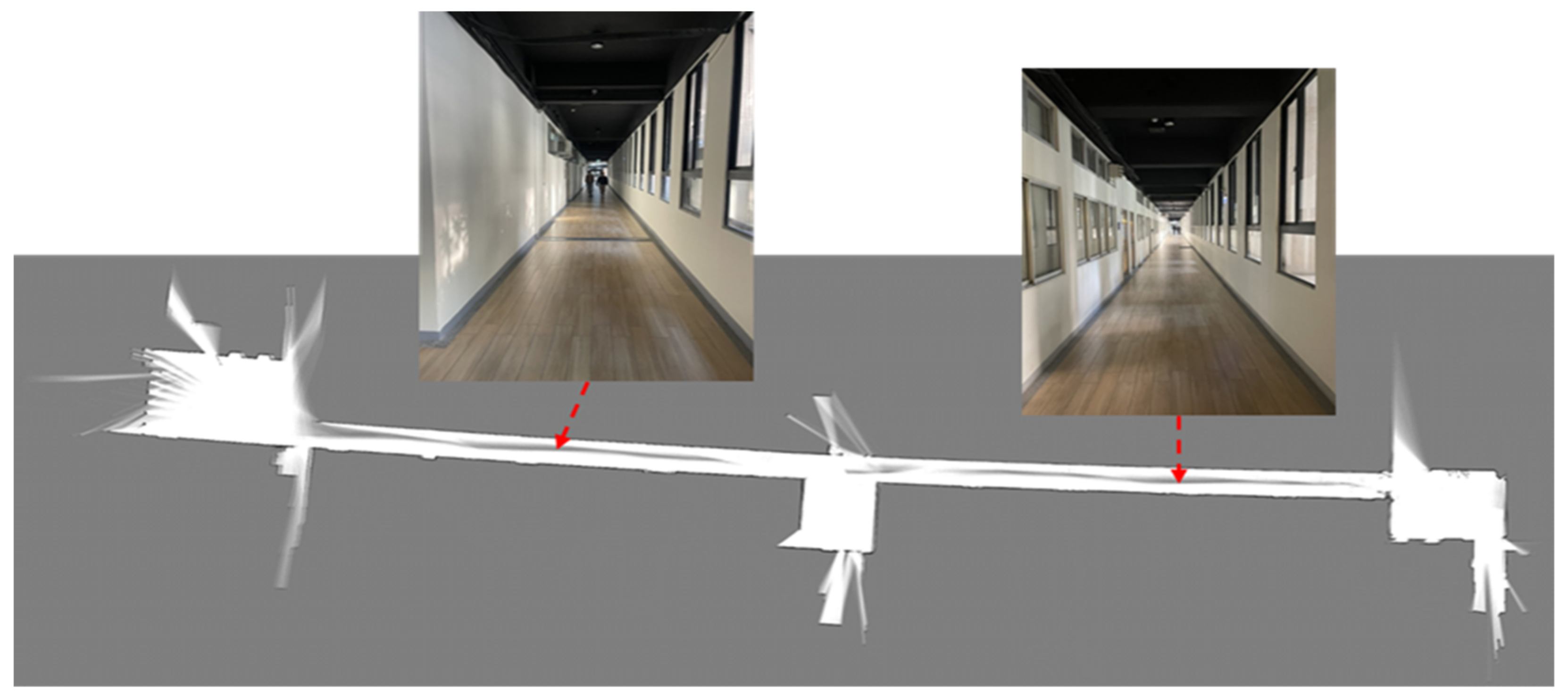

4.1. Two-Dimensional (2D) LiDAR SLAM

4.2. Deep Learning-Based Corridor Recognition for Switching Localization Systems

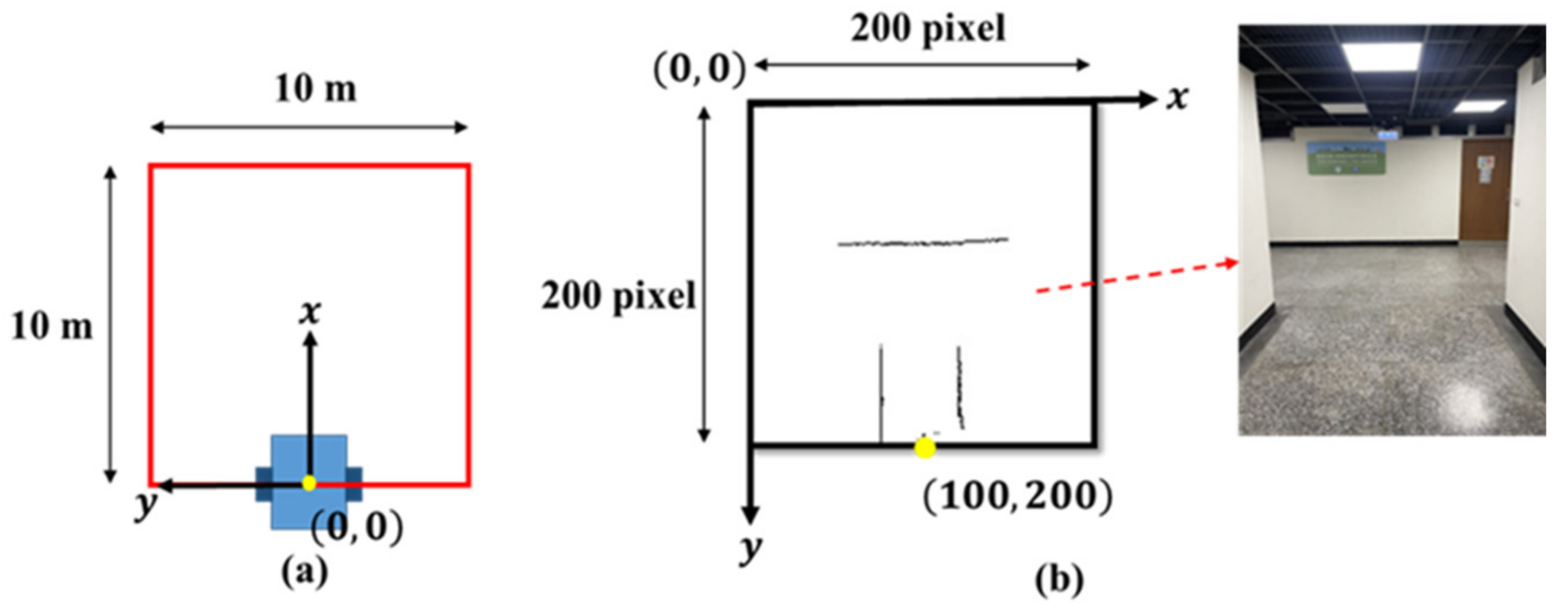

- First, to collect images that represent the current area, we convert the LiDAR point-cloud data into 2D images by the following formula:

  $$\mathbf{p}_{\mathrm{img}} = \mathbf{T}\,\mathbf{p}_{\mathrm{world}} + \mathbf{t}$$

  where $\mathbf{p}_{\mathrm{img}}$ is the position of a point on the picture, $\mathbf{p}_{\mathrm{world}}$ is the position of the same point in the real world, $\mathbf{T}$ is the transfer matrix from the LiDAR point-cloud position to the image point-cloud position, and $\mathbf{t}$ is the offset of the LiDAR point-cloud position from the image point-cloud position. To convert the real scale to image pixels, each pixel is set to a fixed real-world length given by the image resolution. The point-cloud range is limited to a square centered on the mobile robot, as shown in Figure 10a. Finally, the points are drawn on the two-dimensional picture through the conversion matrix, with the mobile robot at the center of the map coordinates, as shown in Figure 10b.

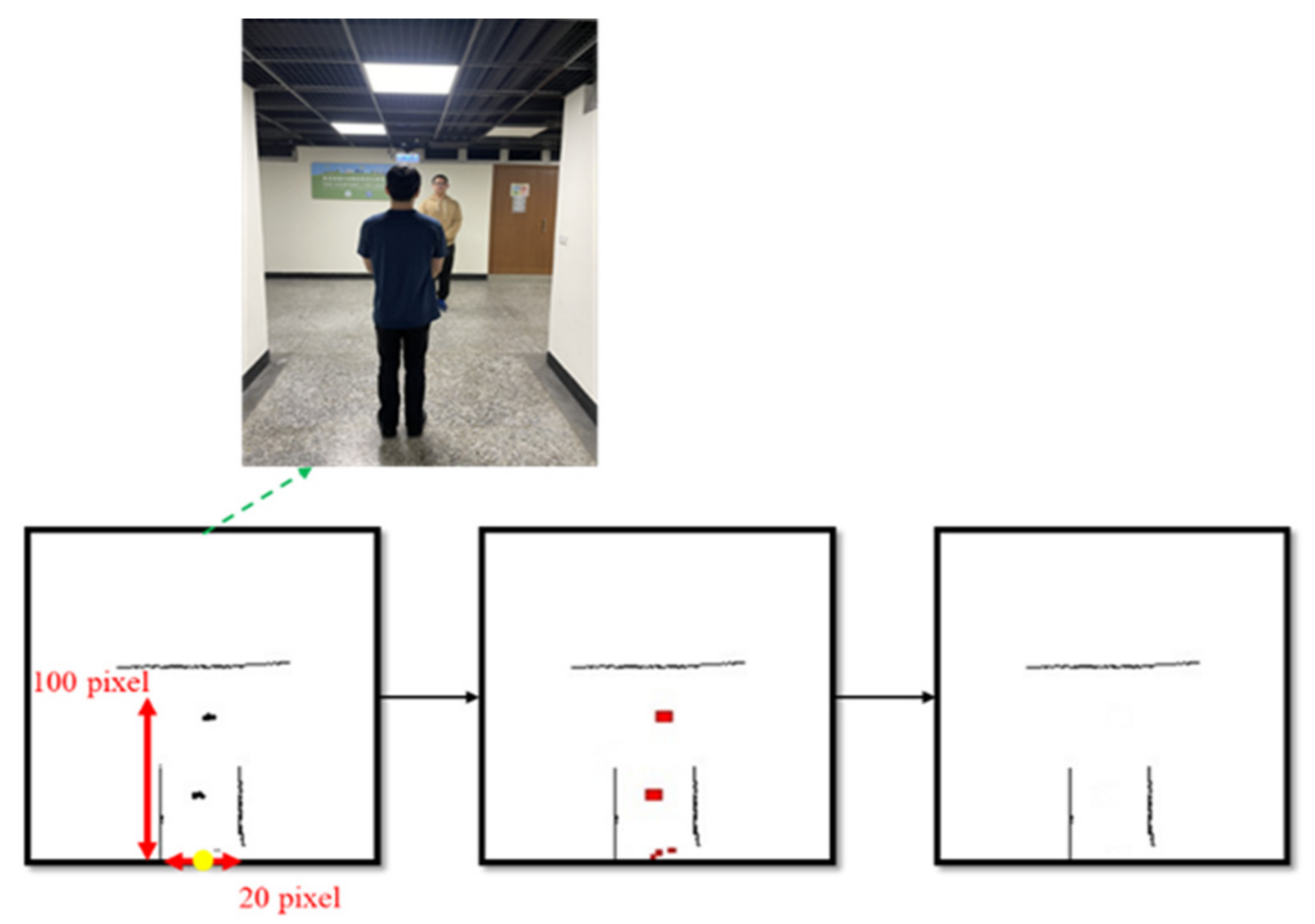
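
The conversion from LiDAR points to an image described above can be sketched as an affine map followed by rasterization. This is a minimal sketch, not the authors' code: the function name, the window side length, the image size, and the choice to flip the y axis so that +y points up in the picture are all assumptions made for illustration.

```python
import math


def lidar_to_image(points, side_m=8.0, size_px=64):
    """Rasterize robot-frame LiDAR points (x, y in metres) into a binary image.

    Assumed values (not taken from the paper): a side_m x side_m window centred
    on the robot, rendered as a size_px x size_px grid of 0/255 pixels.
    """
    res = side_m / size_px   # metres per pixel
    half = size_px / 2.0     # offset that places the robot at the image centre
    image = [[0] * size_px for _ in range(size_px)]
    for x, y in points:
        # Affine map: scale by 1/res, flip y, then shift by the centre offset.
        col = math.floor(x / res + half)
        row = math.floor(-y / res + half)
        # Points outside the square window are discarded.
        if 0 <= row < size_px and 0 <= col < size_px:
            image[row][col] = 255
    return image


# One point at the robot, one inside the window, one outside it.
img = lidar_to_image([(0.0, 0.0), (1.0, 2.0), (5.0, 0.0)])
print(sum(v == 255 for row in img for v in row))
```

Here the scaling and flip play the role of the transfer matrix and `half` plays the role of the offset; a different axis convention would only change the signs in the two index computations.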

- When the 2D point-cloud image is fed into the deep neural network for recognition, people in the image introduce noise and degrade corridor recognition performance. Therefore, image edge detection is used to empirically determine the Region of Interest (ROI) within the image. Noise in the ROI is then filtered out, as shown in Figure 11. After this preprocessing, the image is passed to a deep neural network to determine whether the area is a corridor.

- For the corridor recognition network, we use two different architectures, InceptionV3 [18] and LeNet-5 [24]. Despite their impressive performance in classification tasks, most deep neural networks require powerful hardware for their heavy computation, which makes deep learning methods difficult to deploy on edge devices such as AGVs. In this paper, we choose lightweight deep neural networks, which have few parameters but still perform well, for our system. For the InceptionV3-based corridor classification model, we fine-tune ImageNet-pretrained weights to speed up the training phase and improve model accuracy. Moreover, we define a lightweight model inspired by LeNet. The proposed LeNet-inspired model, shown in Table 1, has fewer parameters than the InceptionV3-based model while maintaining good classification performance.

- When the trained deep neural network detects a long corridor area, the AGV avoids the mislocalization problem by switching from the SLAM localization system to the IMU-based odometry localization system.
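
The switching step in the last item can be sketched as a small state machine driven by the per-frame classifier output. This is an illustrative sketch only: the class name and the debounce of several consecutive frames before switching are assumptions added here to avoid toggling on a single misclassified frame; the text itself only states that the system switches to odometry when a corridor is detected.

```python
class LocalizationSwitch:
    """Chooses the pose source ("slam" or "odometry") from corridor classifications."""

    def __init__(self, min_consecutive=3):
        # Assumed debounce length: frames that must disagree with the current
        # mode, in a row, before the mode changes.
        self.min_consecutive = min_consecutive
        self.mode = "slam"
        self._streak = 0

    def update(self, corridor_detected):
        """Feed one classifier decision and return the localization source to use."""
        wanted = "odometry" if corridor_detected else "slam"
        if wanted == self.mode:
            self._streak = 0
        else:
            self._streak += 1
            if self._streak >= self.min_consecutive:
                self.mode = wanted
                self._streak = 0
        return self.mode


switch = LocalizationSwitch(min_consecutive=2)
frames = [False, True, False, True, True, True, False, False]
modes = [switch.update(f) for f in frames]
print(modes)
```

Setting `min_consecutive=1` reduces this to switching immediately on every classifier decision.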

5. Experimental Results

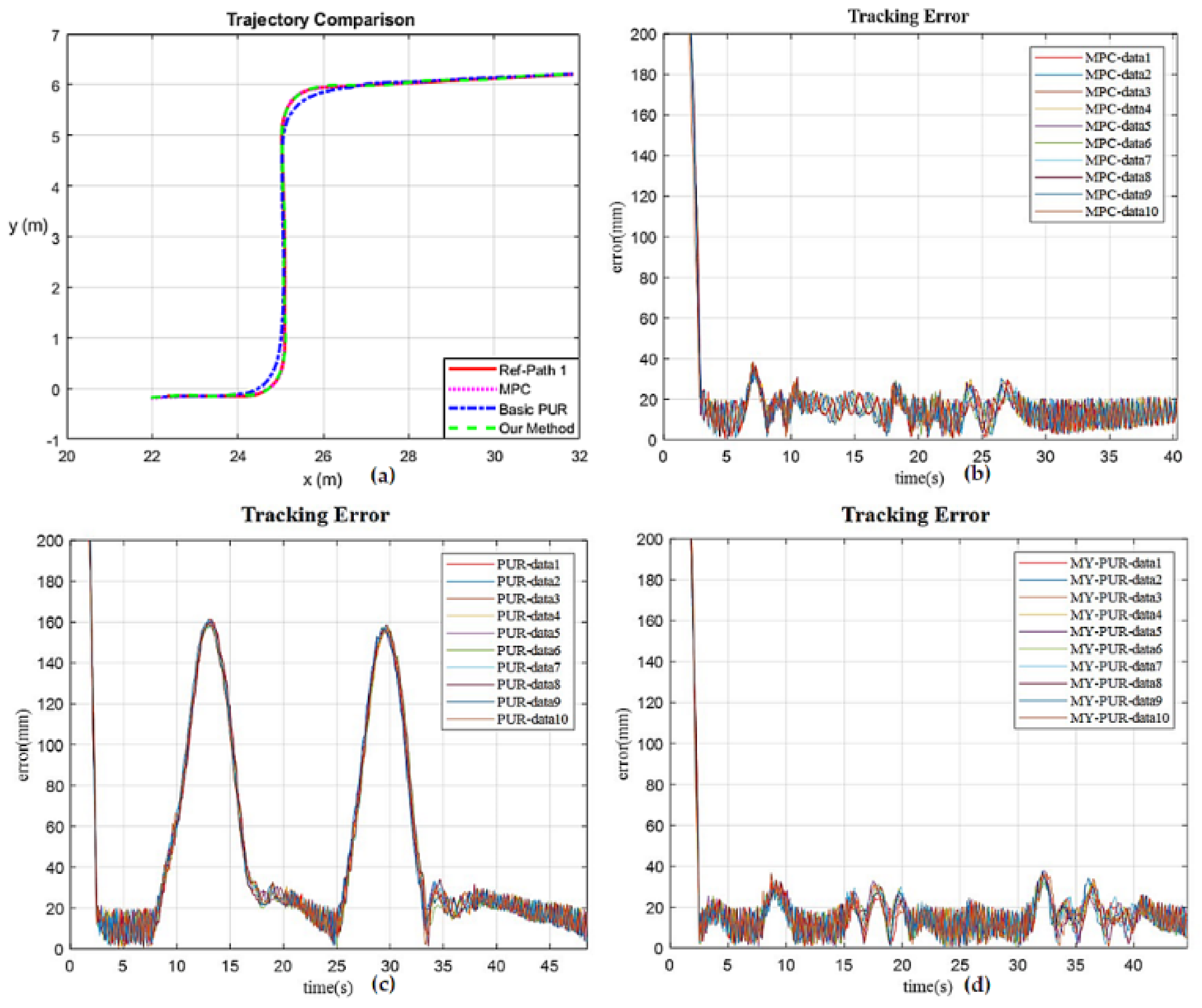

5.1. Trajectory-Tracking Accuracy Experiment

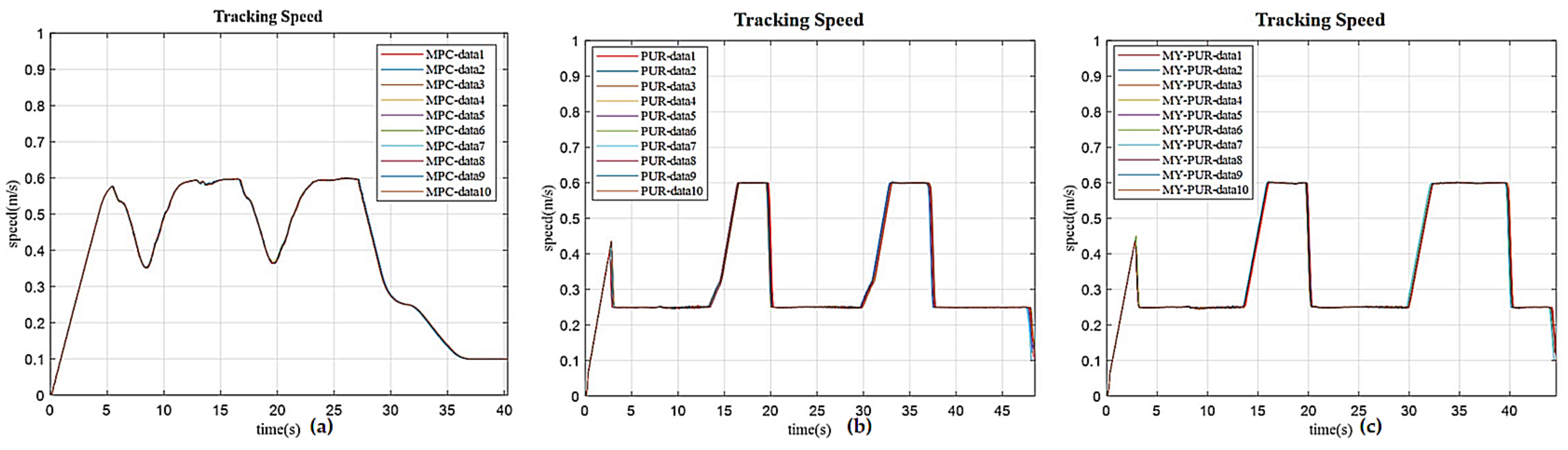

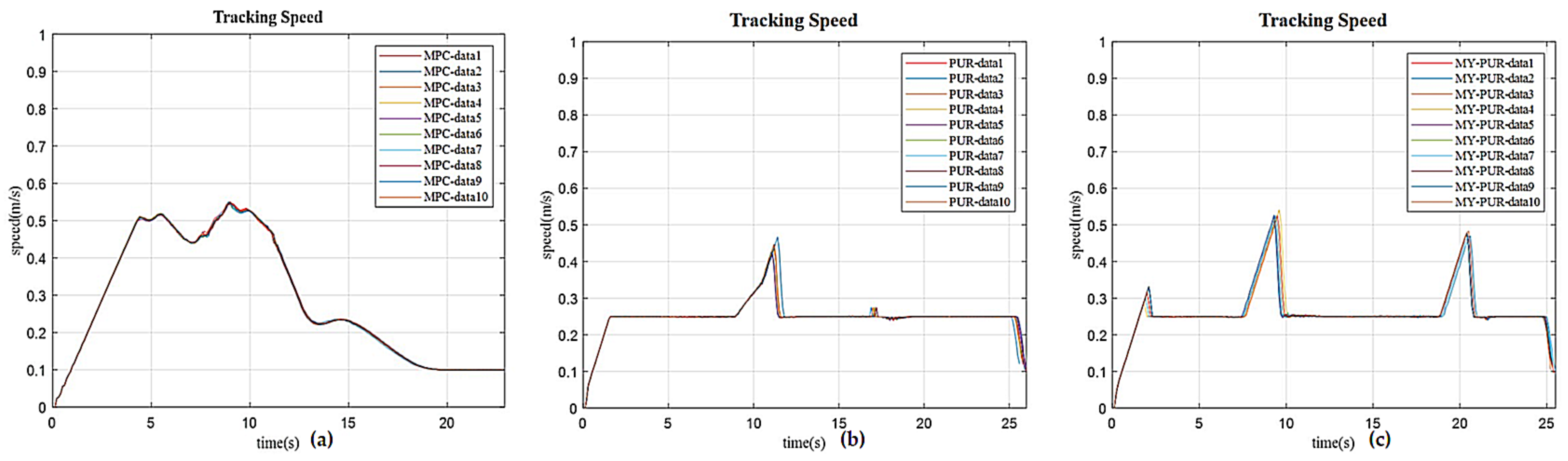

5.2. Trajectory-Tracking Speed Experiment

5.3. Verifying the Deep Learning-Based Localization Switching Method to Solve Corridor Effect

5.3.1. Evaluation of the Deep Learning-Based Corridor Recognition Method

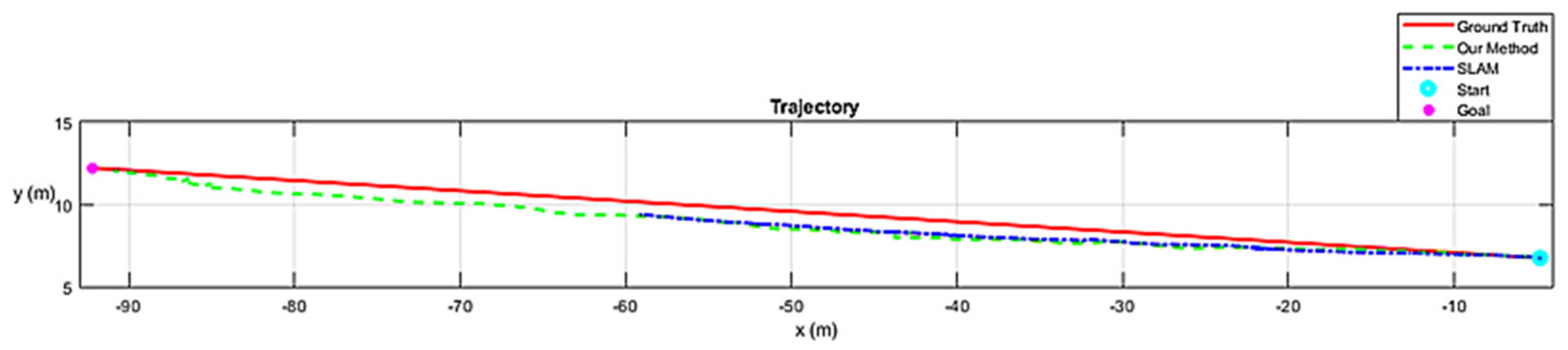

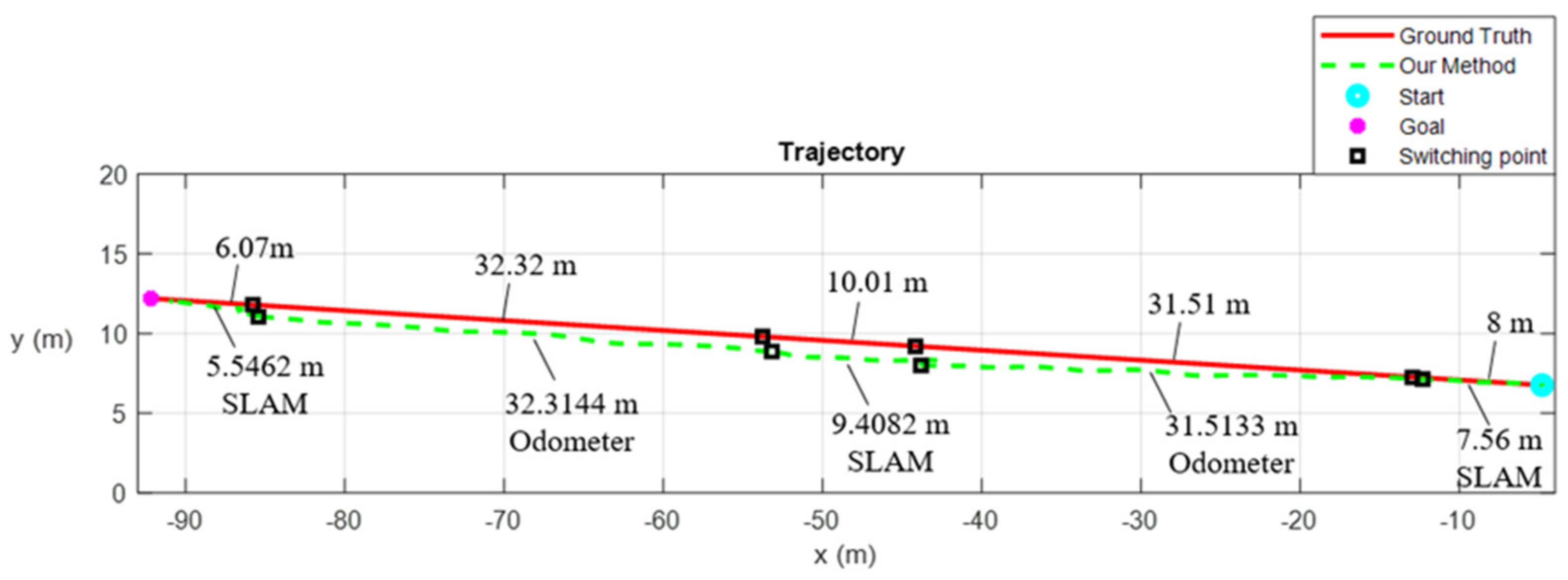

5.3.2. Verification of the Localization Switching Method in Practice

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Chang, L.; Shan, L.; Li, J.; Dai, Y. The Path Planning of Mobile Robots Based on an Improved A* Algorithm. In Proceedings of the 2019 IEEE 16th International Conference on Networking, Sensing and Control (ICNSC), Banff, AB, Canada, 9–11 May 2019; pp. 257–262.
2. Zeng, Z.; Sun, W.; Wu, W.; Xue, M.; Qian, L. An Efficient Path Planning Algorithm for Mobile Robots. In Proceedings of the 2019 IEEE 15th International Conference on Control and Automation (ICCA), Edinburgh, UK, 16–19 July 2019; pp. 487–493.
3. Li, Y.; Huang, Z.; Xie, Y. Path planning of mobile robot based on improved genetic algorithm. In Proceedings of the 2020 3rd International Conference on Electron Device and Mechanical Engineering (ICEDME), Suzhou, China, 1–3 May 2020; pp. 691–695.
4. Zhang, L.; Zhang, Y.; Li, Y. Mobile Robot Path Planning Based on Improved Localized Particle Swarm Optimization. IEEE Sens. J. 2020, 21, 6962–6972.
5. Wu, H.; Si, Z.; Li, Z. Trajectory Tracking Control for Four-Wheel Independent Drive Intelligent Vehicle Based on Model Predictive Control. IEEE Access 2020, 8, 73071–73081.
6. Li, S.; Li, Z.; Yu, Z.; Zhang, B.; Zhang, N. Dynamic trajectory planning and tracking for autonomous vehicle with obstacle avoidance based on model predictive control. IEEE Access 2019, 7, 132074–132086.
7. Yang, T.; Li, Z.; Yu, J. Trajectory Tracking Control of Surface Vehicles: A Prescribed Performance Fixed-Time Control Approach. IEEE Access 2020, 8, 209441–209451.
8. Amer, N.H.; Hudha, K.; Zamzuri, H.; Aparow, V.R.; Abidin, A.F.Z.; Kadir, Z.A.; Murrad, M. Adaptive Trajectory Tracking Controller for an Armoured Vehicle: Hardware-in-the-loop Simulation. In Proceedings of the 2018 57th Annual Conference of the Society of Instrument and Control Engineers of Japan (SICE), Nara, Japan, 11–14 September 2018; pp. 462–467.
9. Yan, F.; Li, B.; Shi, W.; Wang, D. Hybrid visual servo trajectory tracking of wheeled mobile robots. IEEE Access 2018, 6, 24291–24298.
10. Chen, Y.; Shan, Y.; Chen, L.; Huang, K.; Cao, D. Optimization of Pure Pursuit Controller based on PID Controller and Low-pass Filter. In Proceedings of the 2018 21st International Conference on Intelligent Transportation Systems (ITSC), Maui, HI, USA, 4–7 November 2018; pp. 3294–3299.
11. Wang, W.-J.; Hsu, T.-M.; Wu, T.-S. The improved pure pursuit algorithm for autonomous driving advanced system. In Proceedings of the 2017 IEEE 10th International Workshop on Computational Intelligence and Applications (IWCIA), Hiroshima, Japan, 11–12 November 2017; pp. 33–38.
12. Wang, R.; Li, Y.; Fan, J.; Wang, T.; Chen, X. A Novel Pure Pursuit Algorithm for Autonomous Vehicles Based on Salp Swarm Algorithm and Velocity Controller. IEEE Access 2020, 8, 166525–166540.
13. Yousif, K.; Bab-Hadiashar, A.; Hoseinnezhad, R. An Overview to Visual Odometry and Visual SLAM: Applications to Mobile Robotics. Intell. Ind. Syst. 2015, 1, 289–311.
14. Rozenberszki, D.; Majdik, A.L. LOL: Lidar-only Odometry and Localization in 3D point cloud maps. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May–31 August 2020; pp. 4379–4385.
15. Zhao, S.; Zhang, H.; Wang, P.; Nogueira, L.; Scherer, S. Super Odometry: IMU-centric LiDAR-Visual-Inertial Estimator for Challenging Environments. arXiv 2021, arXiv:2104.14938.
16. Cho, H.; Kim, E.K.; Kim, S. Indoor SLAM application using geometric and ICP matching methods based on line features. Robot. Auton. Syst. 2018, 100, 206–224.
17. Millane, A.; Oleynikova, H.; Nieto, J.; Siegwart, R.; Cadena, C. Free-Space Features: Global Localization in 2D Laser SLAM Using Distance Function Maps. In Proceedings of the 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Macau, China, 3–8 November 2019; pp. 1271–1277.
18. Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J.; Wojna, Z. Rethinking the Inception Architecture for Computer Vision. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 2818–2826.
19. Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767.
20. Chen, J.; Ye, P.; Sun, Z. Pedestrian Detection and Tracking Based on 2D Lidar. In Proceedings of the 2019 6th International Conference on Systems and Informatics (ICSAI), Shanghai, China, 2–4 November 2019; pp. 421–426.
21. Luo, R.C.; Hsiao, T.J. Kidnapping and Re-Localizing Solutions for Autonomous Service Robotics. In Proceedings of the IECON 2018 - 44th Annual Conference of the IEEE Industrial Electronics Society, Washington, DC, USA, 21–23 October 2018; pp. 2552–2557.
22. Luo, R.C.; Yeh, K.C.; Huang, K.H. Resume navigation and re-localization of an autonomous mobile robot after being kidnapped. In Proceedings of the 2013 IEEE International Symposium on Robotic and Sensors Environments (ROSE), Washington, DC, USA, 21–23 October 2013; pp. 7–12.
23. Hess, W.; Kohler, D.; Rapp, H.; Andor, D. Real-time loop closure in 2D LIDAR SLAM. In Proceedings of the 2016 IEEE International Conference on Robotics and Automation (ICRA), Stockholm, Sweden, 16–21 May 2016; pp. 1271–1278.
24. LeCun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 1998, 86, 2278–2324.
25. Cortes, C.; Vapnik, V. Support-vector networks. Mach. Learn. 1995, 20, 273–297.

Table 1. Proposed LeNet-inspired corridor classification model.

| Layer | Kernel Size | Input Size |
|---|---|---|
| Conv | | |
| Batch norm | - | |
| Avg Pooling | | |
| Conv | | |
| Batch norm | - | |
| Avg Pooling | | |
| Conv | | |
| Batch norm | - | |
| Avg Pooling | | |
| Linear | - | |
| Linear | - | 256 |

| Trajectory-Tracking Algorithm | Maximum Error (mm) | Average Error (mm) | Standard Deviation of Error (mm) |
|---|---|---|---|
| Double-L-shaped path (14.9 m) | | | |
| MPC | 35.959 | 14.644 | ±0.131 |
| PP | 160.215 | 48.158 | ±0.289 |
| Our improved PP | 35.967 | 14.892 | ±0.223 |
| S-shaped path (8.2 m) | | | |
| MPC | 34.282 | 19.329 | ±0.449 |
| PP | 202.026 | 91.625 | ±0.885 |
| Our improved PP | 44.609 | 15.742 | ±0.330 |
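
To make the gain over the traditional PP algorithm concrete, the relative error reduction can be computed directly from the tracking-error values in the table above. The short script below is only a worked calculation on those reported numbers; the helper name `reduction_percent` is introduced here for illustration.

```python
# (maximum error, average error) in mm, copied from the tracking-error table.
results = {
    "Double-L-shaped path": {"PP": (160.215, 48.158), "Improved PP": (35.967, 14.892)},
    "S-shaped path": {"PP": (202.026, 91.625), "Improved PP": (44.609, 15.742)},
}


def reduction_percent(baseline, improved):
    """Relative reduction of `improved` with respect to `baseline`, in percent."""
    return 100.0 * (baseline - improved) / baseline


reductions = {}
for path_name, rows in results.items():
    (pp_max, pp_avg), (ipp_max, ipp_avg) = rows["PP"], rows["Improved PP"]
    reductions[path_name] = (
        reduction_percent(pp_max, ipp_max),
        reduction_percent(pp_avg, ipp_avg),
    )
    max_red, avg_red = reductions[path_name]
    print(f"{path_name}: max error -{max_red:.1f}%, average error -{avg_red:.1f}%")
```

On both paths the maximum error falls by roughly 78%; the average error falls by about 69% on the double-L-shaped path and about 83% on the S-shaped path.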

| Trajectory-Tracking Algorithm | Average Speed (m/s) | Task Time (s) | Standard Deviation of Speed (m/s) |
|---|---|---|---|
| Double-L-shaped path (14.9 m) | | | |
| MPC | 0.396 | 40.31 | ±0.070 |
| PP | 0.322 | 48.42 | ±0.125 |
| Our improved PP | 0.358 | 44.63 | ±0.078 |
| S-shaped path (8.2 m) | | | |
| MPC | 0.287 | 22.92 | ±0.060 |
| PP | 0.248 | 25.93 | ±0.118 |
| Our improved PP | 0.262 | 25.43 | ±0.064 |

| Models | Accuracy (%) | Number of Parameters |
|---|---|---|
| SVM [25] | 80 | - |
| InceptionV3-based model | 100 | ~22 million |
| LeNet-based model | 100 | ~1.1 million |

| Experiment | Track Length (m) |
|---|---|
| Ground Truth | 88 |
| SLAM | 54.4 |
| Our Method | 86.3 |
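
Reading the table above as the track length reported by each localization approach against the measured ground truth, the relative error of each can be worked out as follows. This is only arithmetic on the reported values; the helper name `relative_error_percent` is introduced here for illustration.

```python
ground_truth_m = 88.0
estimates_m = {"SLAM": 54.4, "Our Method": 86.3}


def relative_error_percent(estimate, truth):
    """Absolute deviation from the ground truth, as a percentage of the ground truth."""
    return 100.0 * abs(truth - estimate) / truth


errors = {name: relative_error_percent(v, ground_truth_m) for name, v in estimates_m.items()}
for name, err in errors.items():
    print(f"{name}: {err:.1f}% track-length error")
```

SLAM alone falls about 38% short of the ground-truth track length, while the switching method stays within about 2% of it.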

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Nguyen, P.T.-T.; Yan, S.-W.; Liao, J.-F.; Kuo, C.-H. Autonomous Mobile Robot Navigation in Sparse LiDAR Feature Environments. Appl. Sci. 2021, 11, 5963. https://doi.org/10.3390/app11135963
