Deep-Feature-Based Approach to Marine Debris Classification

Abstract

1. Introduction

2. Materials and Methods

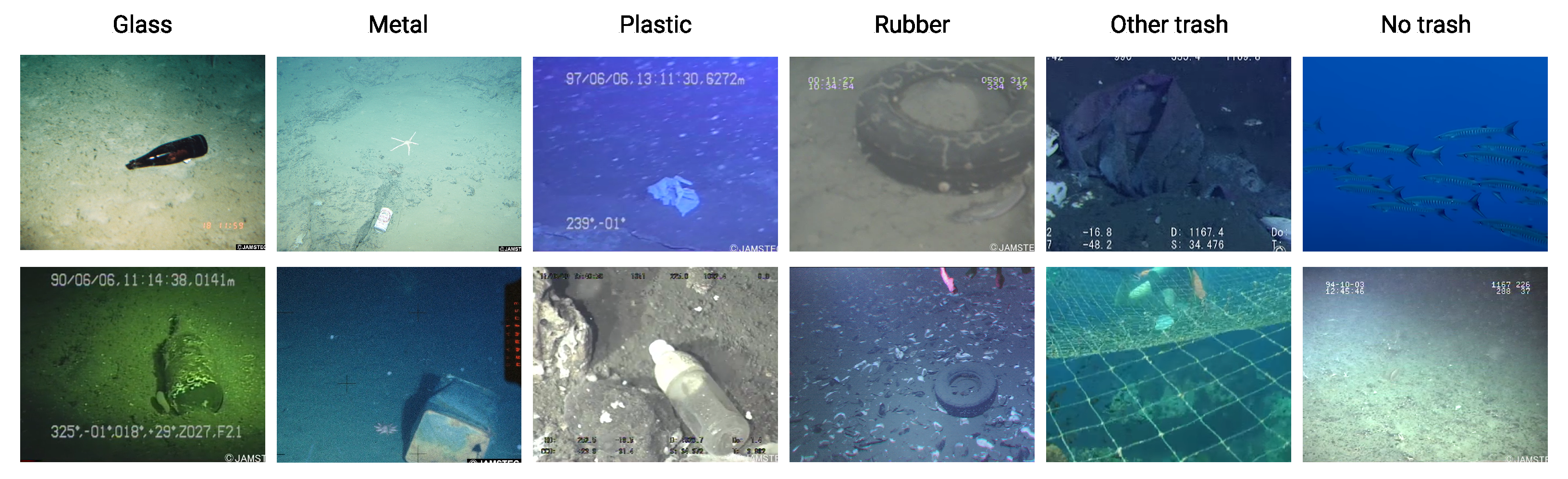

2.1. Dataset

2.2. Deep Convolutional Architectures

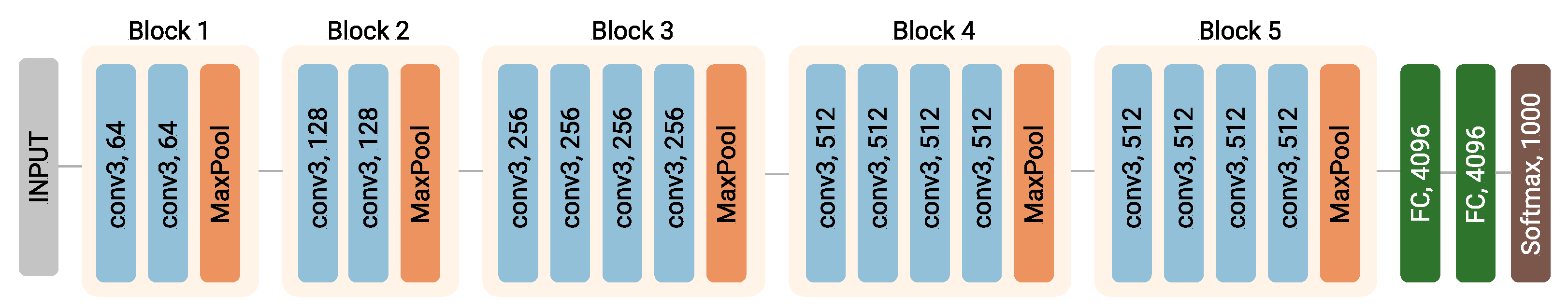

2.2.1. VGG19

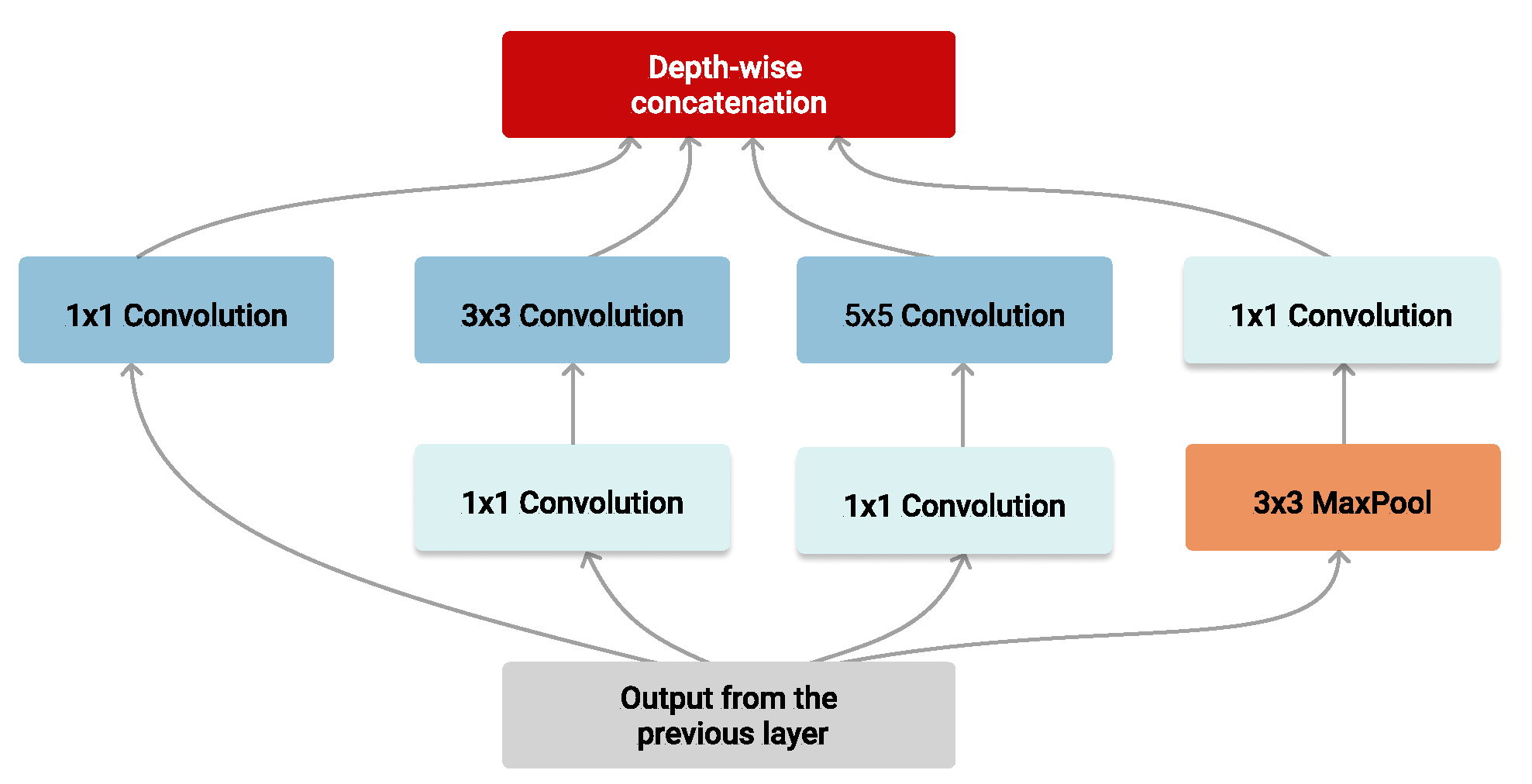

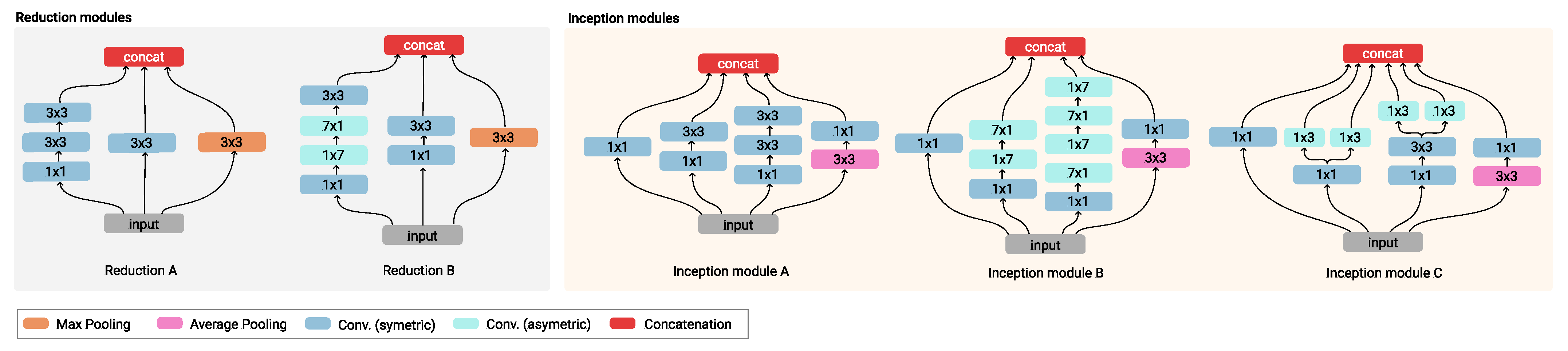

2.2.2. InceptionV3

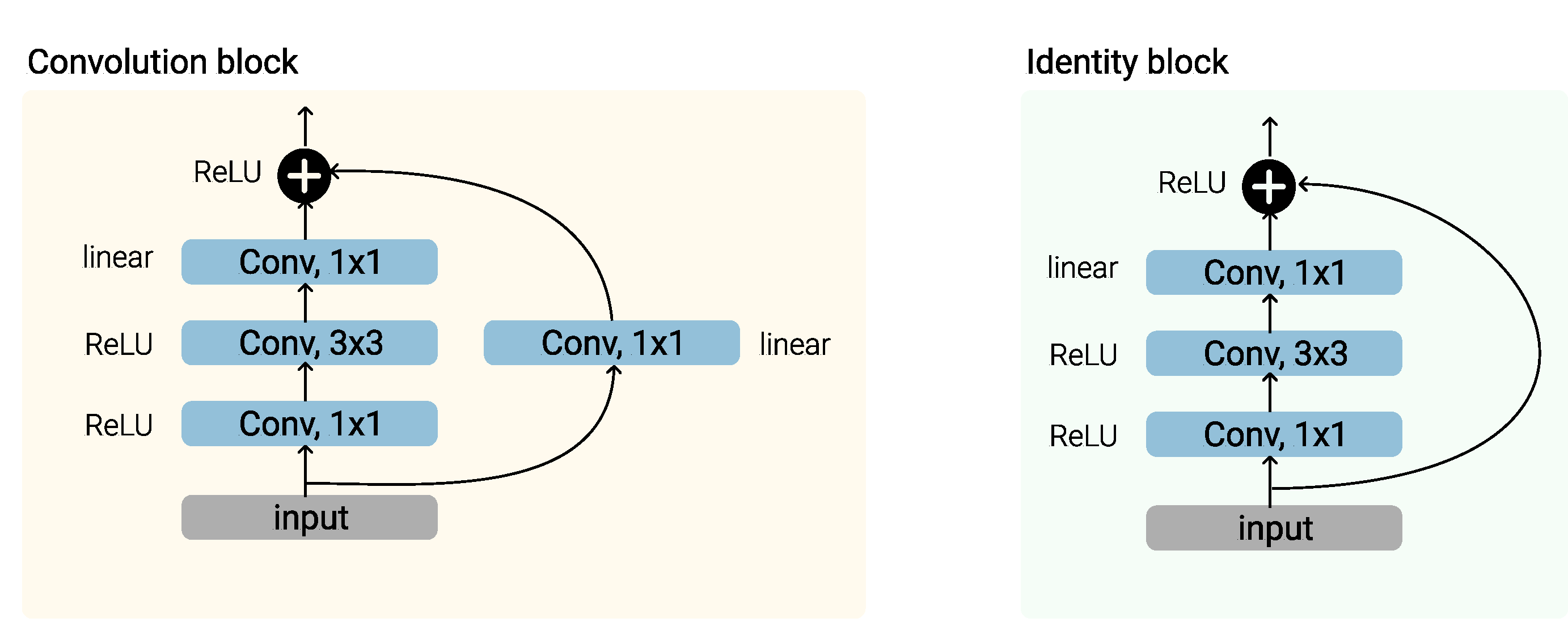

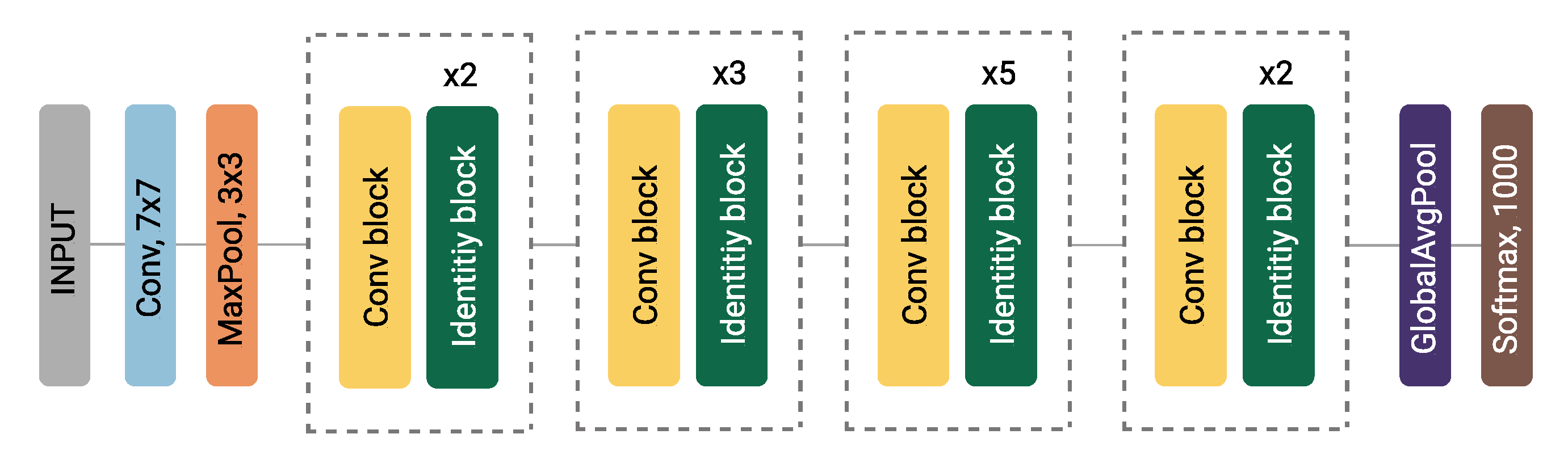

2.2.3. ResNet50

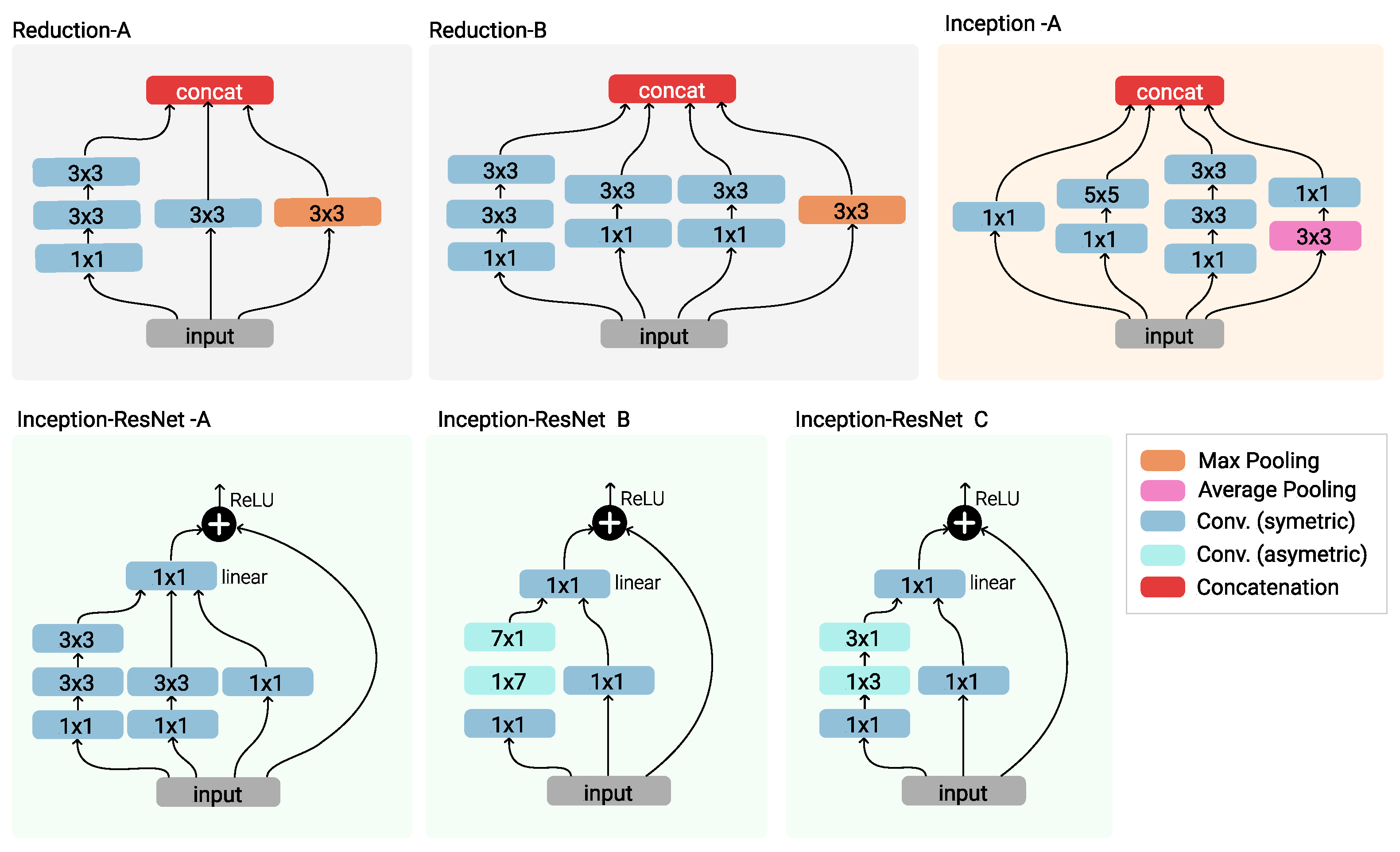

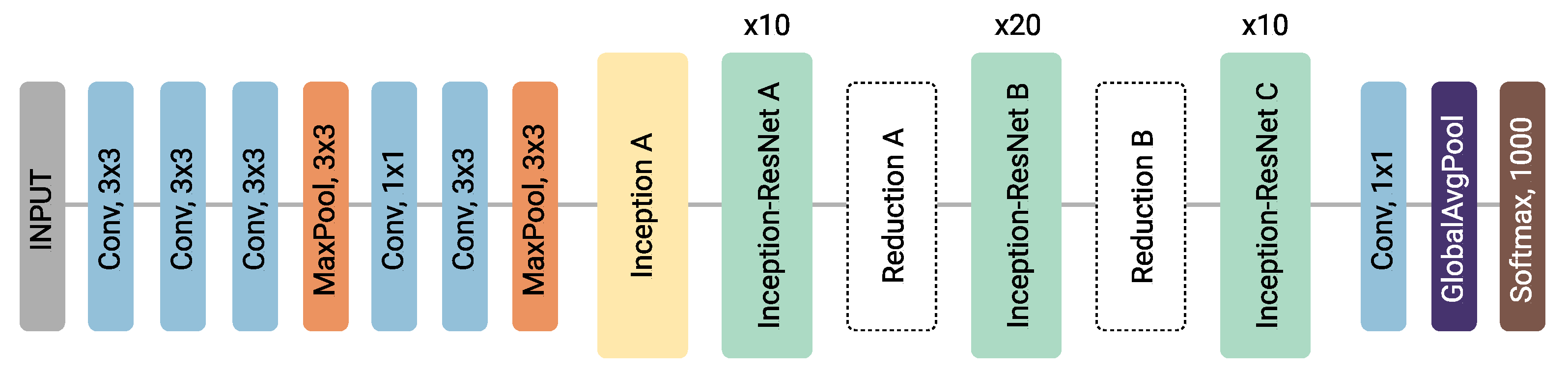

2.2.4. Inception-ResNetV2

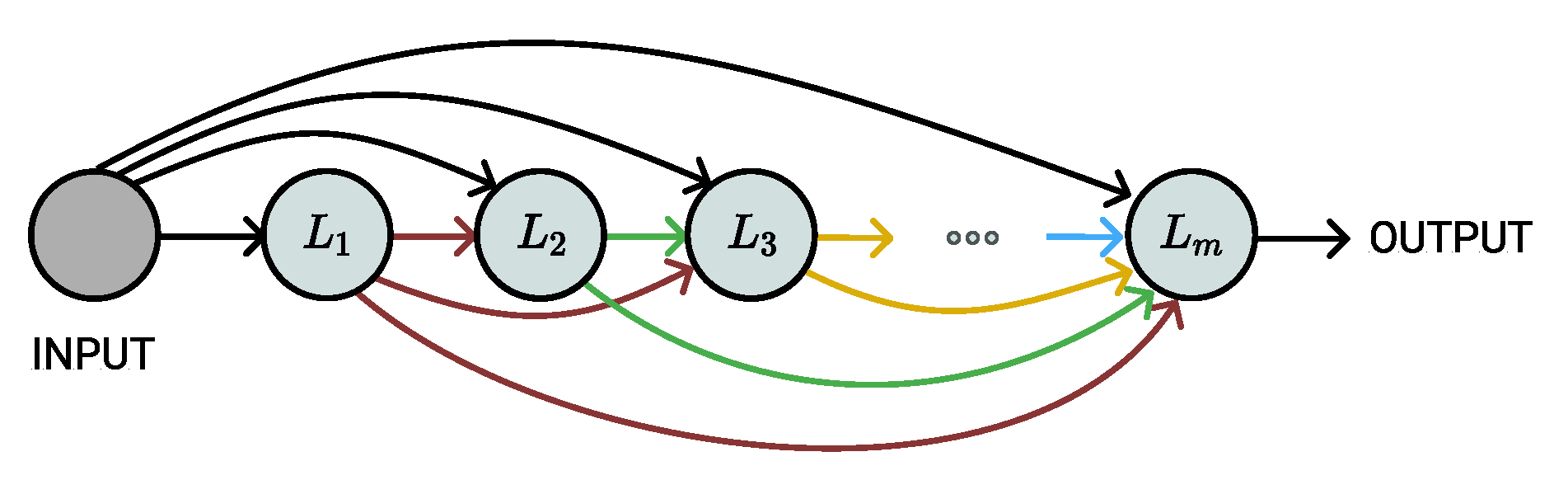

2.2.5. DenseNet121

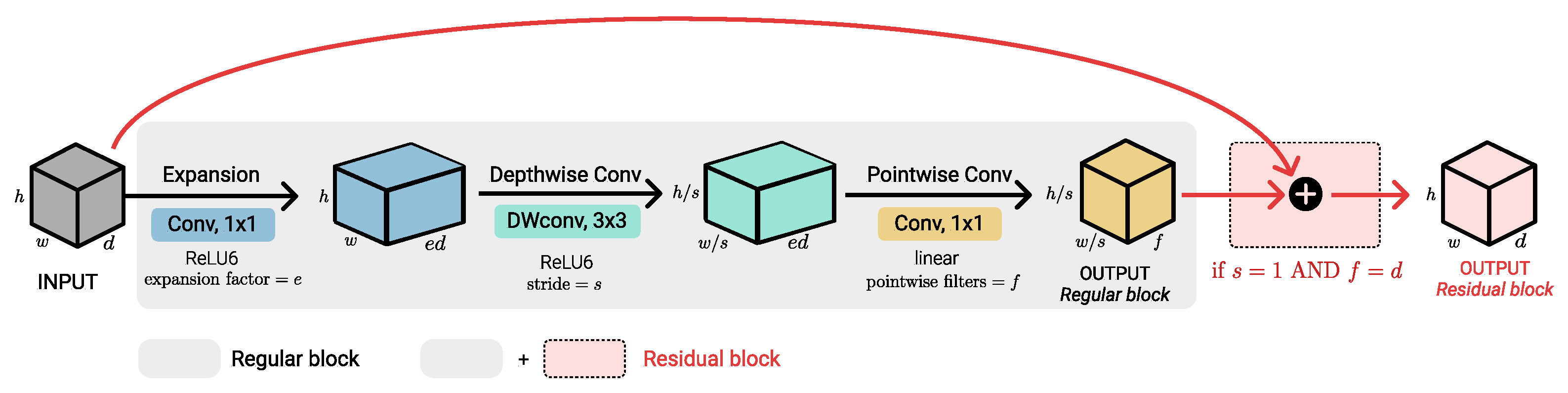

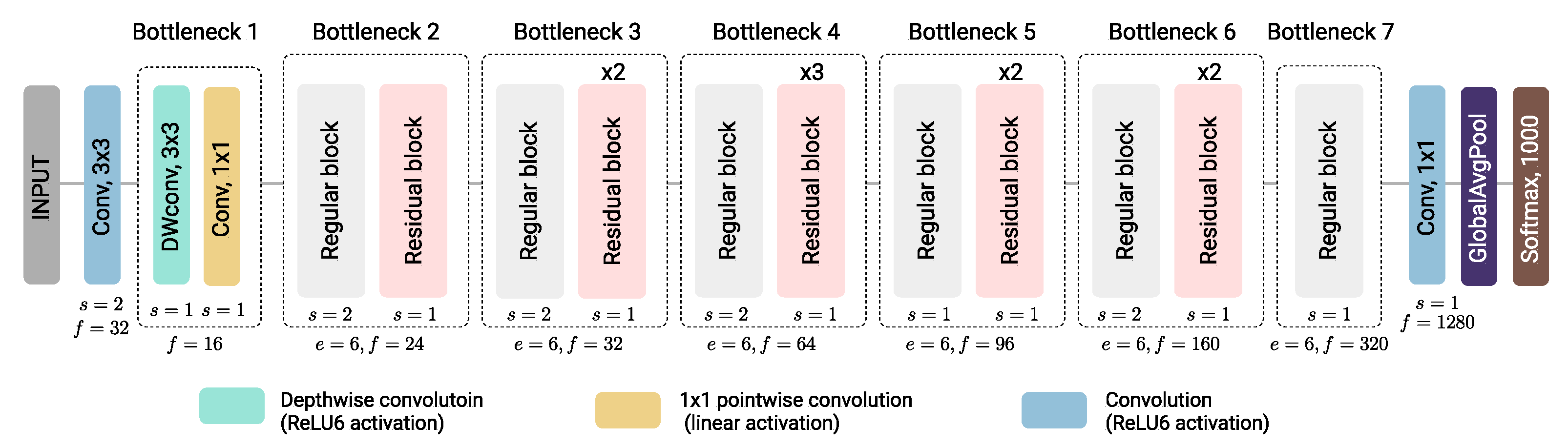

2.2.6. MobileNetV2

2.3. Machine Learning Classifiers

2.3.1. Random Forests

2.3.2. k-Nearest Neighbors

2.3.3. Support Vector Machines

2.3.4. Naive Bayes

2.3.5. Logistic Regression

3. Experiments

3.1. Experimental Setup

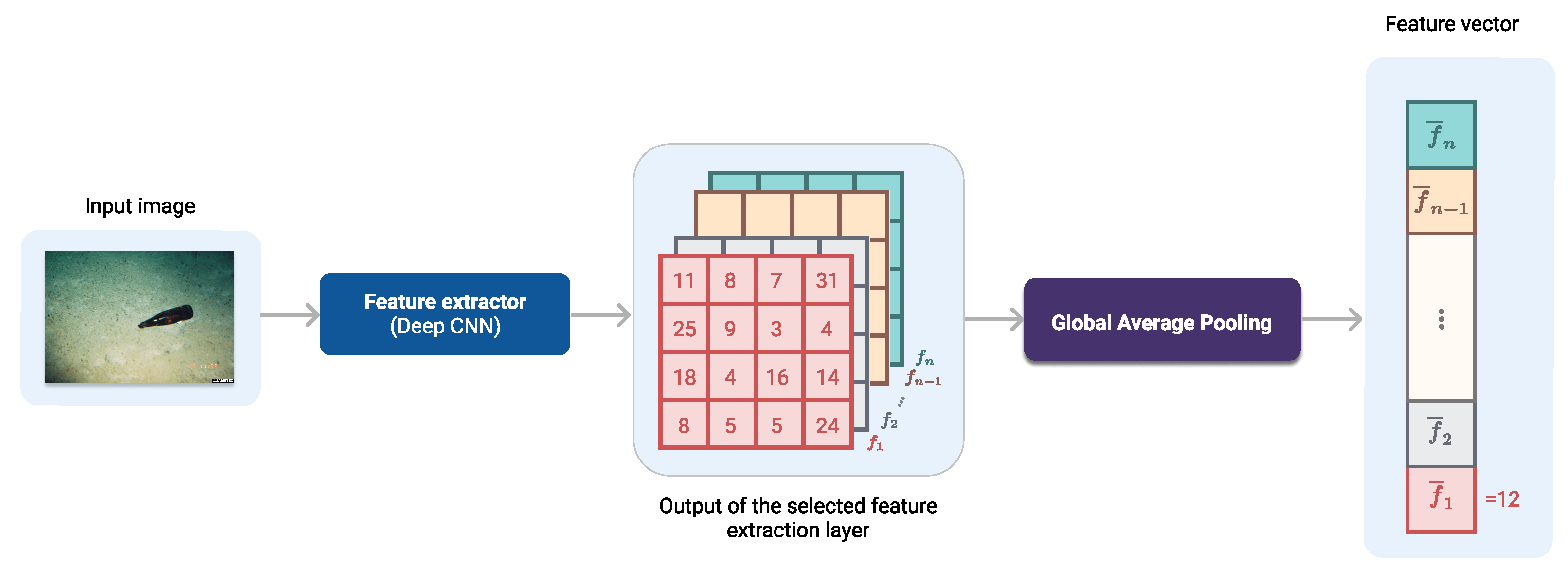

3.2. Extraction of Features

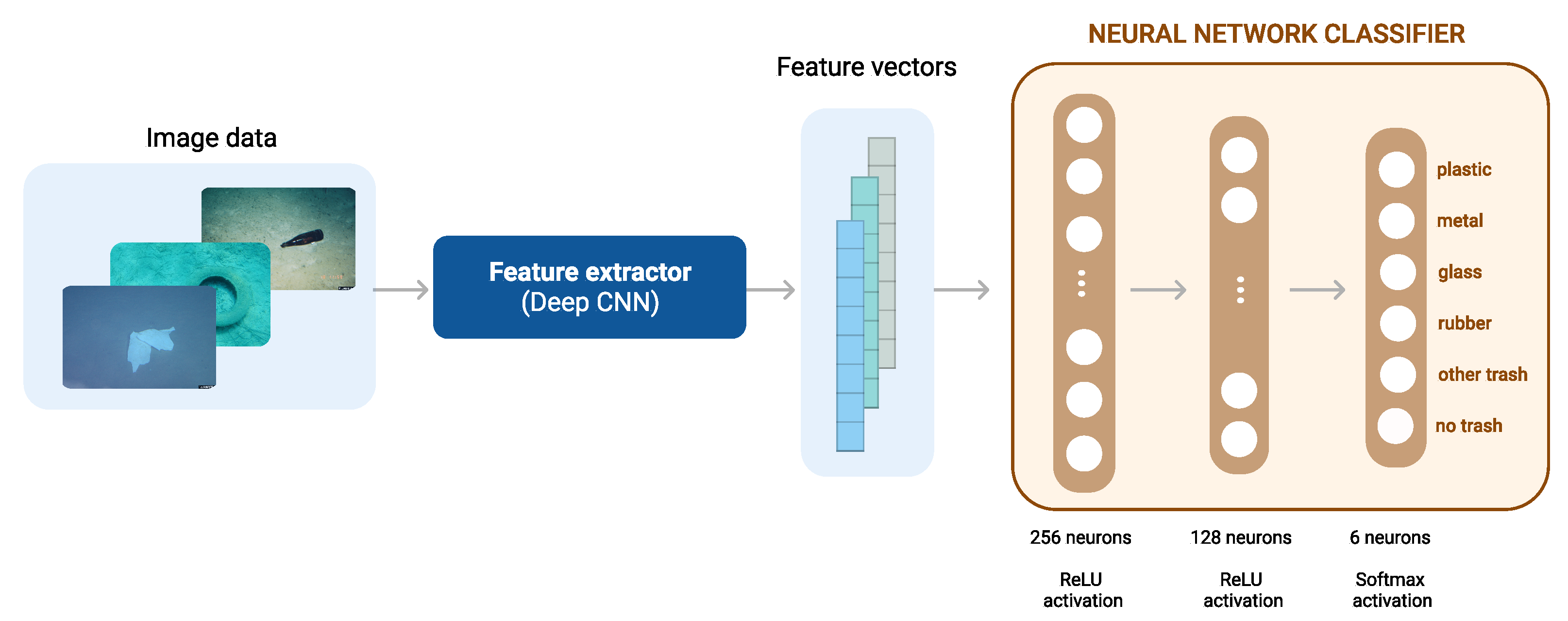

3.3. Simple Neural Network Architecture

3.4. Evaluation Metrics

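Let $c_{ij}$ denote the number of test instances of true class $i$ that are predicted as class $j$. For each class $i$:

- $TP_i$ (True Positive): number of correctly classified instances of class $i$, i.e., $TP_i = c_{ii}$;

- $FP_i$ (False Positive): number of instances falsely classified as class $i$, $FP_i = \sum_{j \neq i} c_{ji}$;

- $FN_i$ (False Negative): number of instances actually belonging to class $i$ but classified as another class, $FN_i = \sum_{j \neq i} c_{ij}$.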
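The per-class counts lead directly to the accuracy, precision, recall, F1-score and Cohen's kappa values reported in the results tables. The NumPy sketch below computes them from a confusion matrix. It assumes that rows index the true class and columns the predicted class. The function name `classification_metrics` and the toy matrix are illustrative and are not the authors' code.

Macro averages give every class equal weight. Weighted averages weight class $i$ by its share of the test set, $(TP_i + FN_i)/N$. Kappa compares the observed agreement $p_o$ with the agreement $p_e$ expected by chance.

```python
import numpy as np


def classification_metrics(cm):
    """Accuracy, macro/weighted precision, recall, F1 and Cohen's kappa.

    Assumes cm[i, j] counts instances of true class i predicted as class j.
    """
    cm = np.asarray(cm, dtype=float)
    tp = np.diag(cm)             # TP_i = c_ii
    fp = cm.sum(axis=0) - tp     # FP_i: column i minus its diagonal entry
    fn = cm.sum(axis=1) - tp     # FN_i: row i minus its diagonal entry
    support = tp + fn            # true instances of each class
    n = cm.sum()

    # Return 0 instead of dividing by zero for never-predicted or absent classes.
    precision = np.divide(tp, tp + fp, out=np.zeros_like(tp), where=(tp + fp) > 0)
    recall = np.divide(tp, tp + fn, out=np.zeros_like(tp), where=(tp + fn) > 0)
    denom = precision + recall
    f1 = np.divide(2 * precision * recall, denom, out=np.zeros_like(tp), where=denom > 0)

    weights = support / n
    accuracy = tp.sum() / n

    # Cohen's kappa: observed agreement versus agreement expected by chance.
    p_o = accuracy
    p_e = np.sum(cm.sum(axis=0) * cm.sum(axis=1)) / n**2
    kappa = (p_o - p_e) / (1 - p_e)

    return {
        "accuracy": accuracy,
        "precision_macro": precision.mean(),
        "precision_weighted": np.sum(weights * precision),
        "recall_macro": recall.mean(),
        "f1_macro": f1.mean(),
        "f1_weighted": np.sum(weights * f1),
        "kappa": kappa,
    }


# Toy 3-class confusion matrix (illustrative numbers only).
toy_cm = [[8, 1, 1],
          [2, 6, 2],
          [0, 1, 9]]
for name, value in classification_metrics(toy_cm).items():
    print(f"{name}: {value:.4f}")
```

For this toy matrix the accuracy is 23/30 and kappa is 0.65.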

4. Results

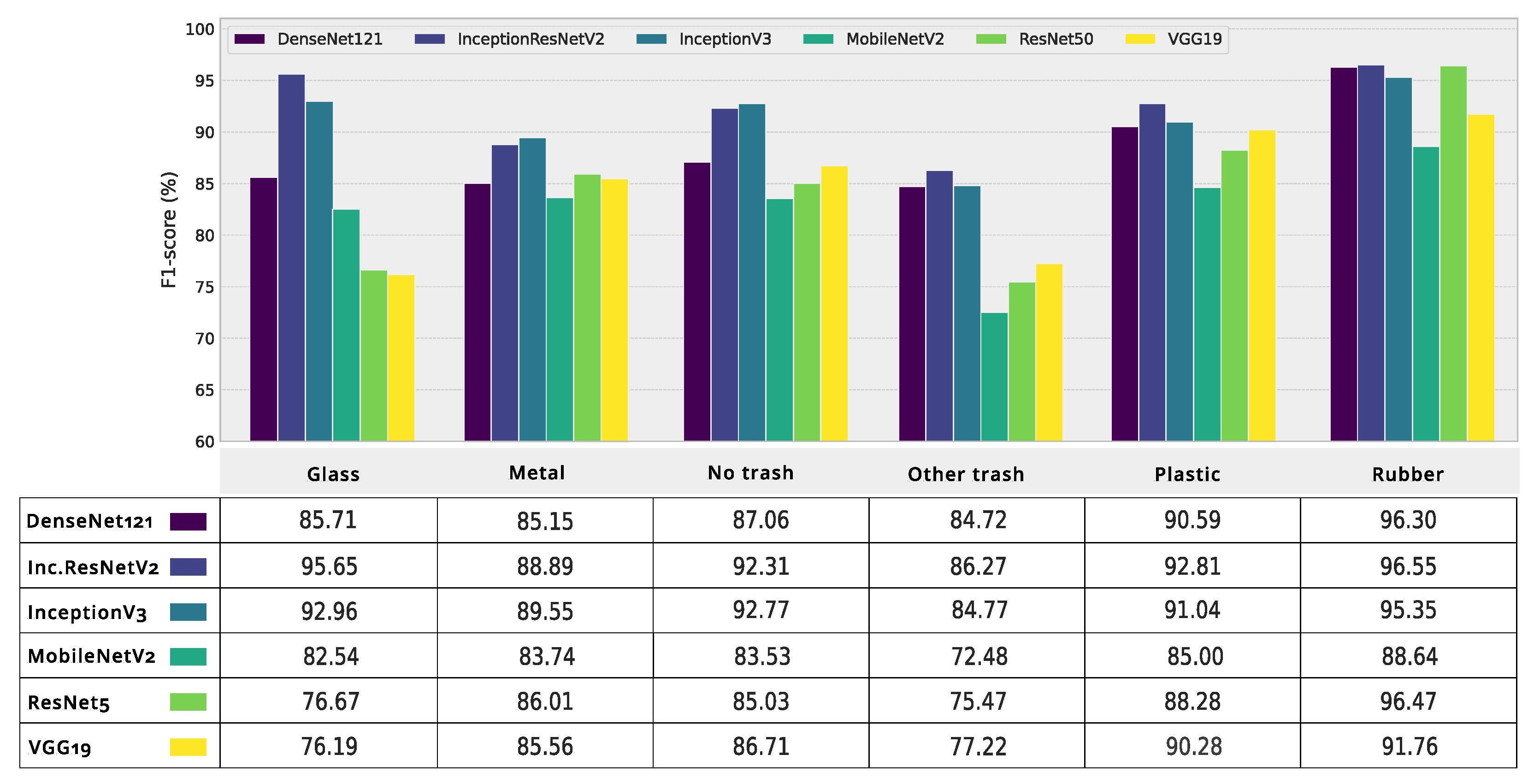

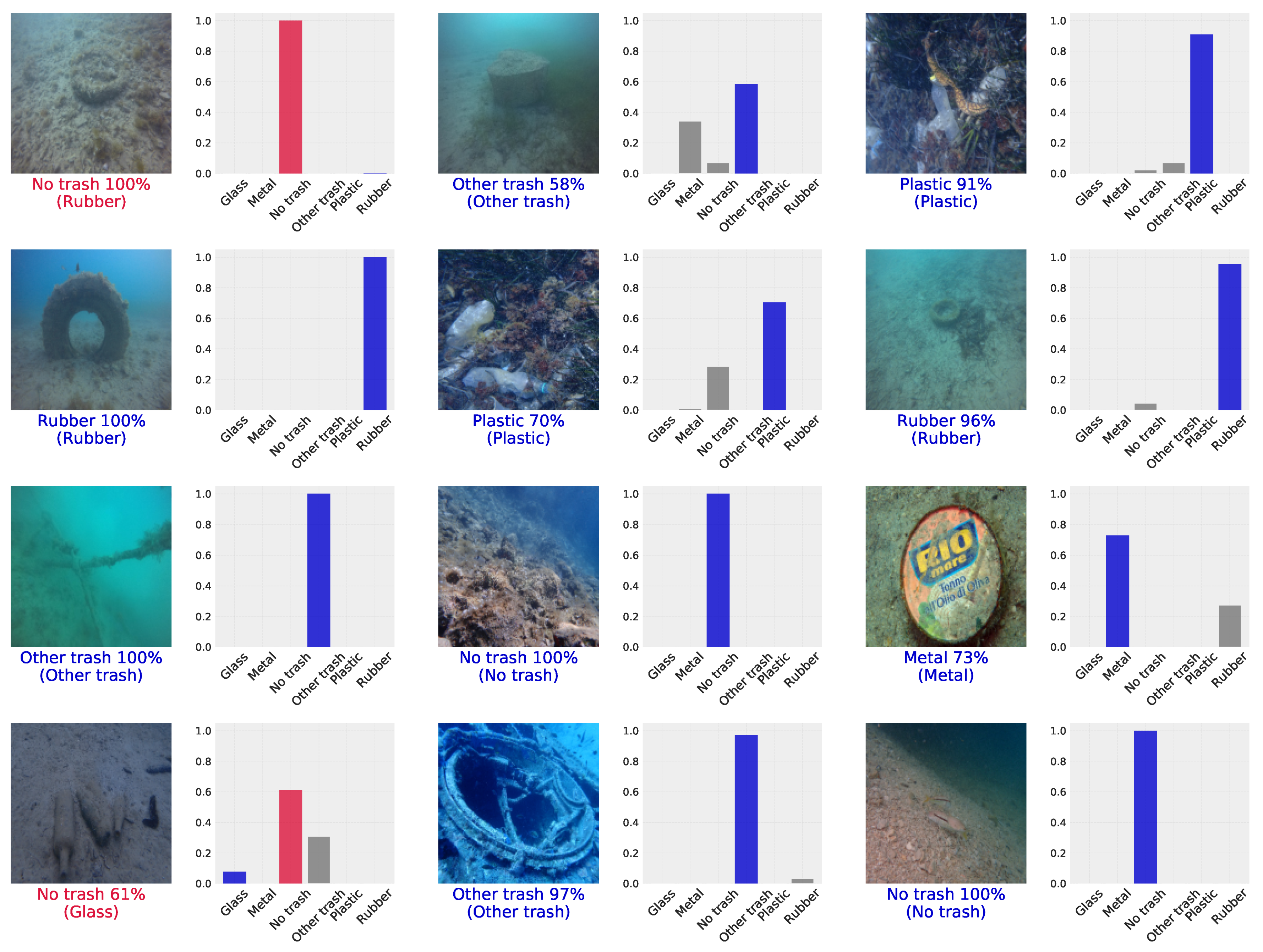

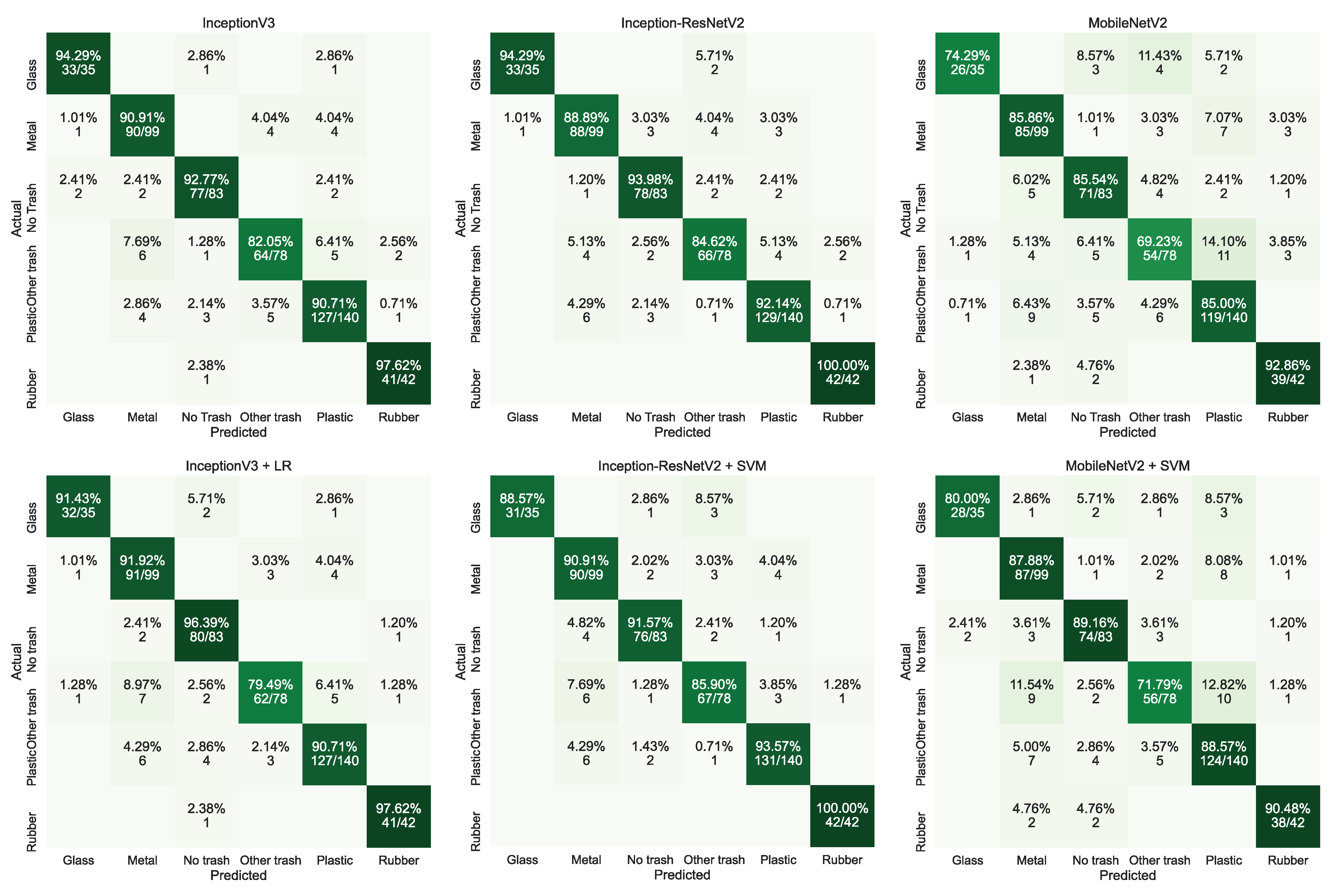

4.1. Deep CNN Architectures

4.2. Deep Feature Classification with Conventional ML Classifiers

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| CNN | Convolutional neural network |
| DL | Deep learning |
| FC | Fully connected |
| FFE | Fixed feature extractor |
| FLS | Forward looking sonar |
| FT | Fine-tuning |
| KNN | K-nearest neighbor |
| LR | Logistic regression |
| ML | Machine learning |
| NB | Naive Bayes |
| NN | Neural network |
| RF | Random forest |
| SSD | Single-shot detector |
| SVM | Support vector machine |

References

- Sheavly, S.; Register, K. Marine debris & plastics: Environmental concerns, sources, impacts and solutions. J. Polym. Environ. 2007, 15, 301–305.

- Savoca, M.S.; Wohlfeil, M.E.; Ebeler, S.E.; Nevitt, G.A. Marine plastic debris emits a keystone infochemical for olfactory foraging seabirds. Sci. Adv. 2016, 2, e1600395.

- Pfaller, J.B.; Goforth, K.M.; Gil, M.A.; Savoca, M.S.; Lohmann, K.J. Odors from marine plastic debris elicit foraging behavior in sea turtles. Curr. Biol. 2020, 30, R213–R214.

- Lusher, A.; Hollman, P.; Mendoza-Hill, J. Microplastics in Fisheries and Aquaculture: Status of Knowledge on Their Occurrence and Implications for Aquatic Organisms and Food Safety; FAO: Rome, Italy, 2017.

- Smith, M.; Love, D.C.; Rochman, C.M.; Neff, R.A. Microplastics in seafood and the implications for human health. Curr. Environ. Health Rep. 2018, 5, 375–386.

- Meeker, J.D.; Sathyanarayana, S.; Swan, S.H. Phthalates and other additives in plastics: Human exposure and associated health outcomes. Philos. Trans. R. Soc. B: Biol. Sci. 2009, 364, 2097–2113.

- Newman, S.; Watkins, E.; Farmer, A.; Ten Brink, P.; Schweitzer, J.P. The economics of marine litter. In Marine Anthropogenic Litter; Springer: Cham, Switzerland, 2015; pp. 367–394.

- Williams, A.; Rangel-Buitrago, N. Marine Litter: Solutions for a Major Environmental Problem. J. Coast. Res. 2019, 35, 648–663.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. arXiv 2015, arXiv:1506.01497.

- Bochkovskiy, A.; Wang, C.Y.; Liao, H.Y.M. YOLOv4: Optimal speed and accuracy of object detection. arXiv 2020, arXiv:2004.10934.

- Tan, M.; Le, Q. EfficientNet: Rethinking model scaling for convolutional neural networks. In Proceedings of the International Conference on Machine Learning (ICML), Long Beach, CA, USA, 10–15 June 2019; pp. 6105–6114.

- Brock, A.; De, S.; Smith, S.L.; Simonyan, K. High-Performance Large-Scale Image Recognition Without Normalization. arXiv 2021, arXiv:2102.06171.

- Tao, A.; Sapra, K.; Catanzaro, B. Hierarchical multi-scale attention for semantic segmentation. arXiv 2020, arXiv:2005.10821.

- Zhu, Y.; Sapra, K.; Reda, F.A.; Shih, K.J.; Newsam, S.; Tao, A.; Catanzaro, B. Improving semantic segmentation via video propagation and label relaxation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 8856–8865.

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

- LeCun, Y.; Boser, B.; Denker, J.S.; Henderson, D.; Howard, R.E.; Hubbard, W.; Jackel, L.D. Backpropagation applied to handwritten zip code recognition. Neural Comput. 1989, 1, 541–551.

- Hubel, D.H.; Wiesel, T.N. Receptive fields and functional architecture of monkey striate cortex. J. Physiol. 1968, 195, 215–243.

- Fukushima, K. Neocognitron. Scholarpedia 2007, 2, 1717.

- Nair, V.; Hinton, G.E. Rectified Linear Units Improve Restricted Boltzmann Machines. In Proceedings of the 27th International Conference on Machine Learning (ICML-10), Haifa, Israel, 21–24 June 2010; pp. 807–814.

- Gu, J.; Wang, Z.; Kuen, J.; Ma, L.; Shahroudy, A.; Shuai, B.; Liu, T.; Wang, X.; Wang, G.; Cai, J.; et al. Recent advances in convolutional neural networks. Pattern Recognit. 2018, 77, 354–377.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. Adv. Neural Inf. Process. Syst. 2012, 25, 1097–1105.

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

- Valdenegro-Toro, M. Submerged marine debris detection with autonomous underwater vehicles. In Proceedings of the 2016 International Conference on Robotics and Automation for Humanitarian Applications (RAHA), Amritapuri, India, 18–20 December 2016; pp. 1–7.

- Panwar, H.; Gupta, P.; Siddiqui, M.K.; Morales-Menendez, R.; Bhardwaj, P.; Sharma, S.; Sarker, I.H. AquaVision: Automating the detection of waste in water bodies using deep transfer learning. Case Stud. Chem. Environ. Eng. 2020, 2, 100026.

- Kylili, K.; Kyriakides, I.; Artusi, A.; Hadjistassou, C. Identifying floating plastic marine debris using a deep learning approach. Environ. Sci. Pollut. Res. 2019, 26, 17091–17099.

- Kylili, K.; Hadjistassou, C.; Artusi, A. An intelligent way for discerning plastics at the shorelines and the seas. Environ. Sci. Pollut. Res. 2020, 27, 42631–42643.

- Fulton, M.; Hong, J.; Islam, M.J.; Sattar, J. Robotic detection of marine litter using deep visual detection models. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; pp. 5752–5758.

- Politikos, D.V.; Fakiris, E.; Davvetas, A.; Klampanos, I.A.; Papatheodorou, G. Automatic detection of seafloor marine litter using towed camera images and deep learning. Mar. Pollut. Bull. 2021, 164, 111974.

- Musić, J.; Kružić, S.; Stančić, I.; Alexandrou, F. Detecting Underwater Sea Litter Using Deep Neural Networks: An Initial Study. In Proceedings of the 2020 5th International Conference on Smart and Sustainable Technologies (SpliTech), Split, Croatia, 23–26 September 2020; pp. 1–6.

- Lorenzo-Navarro, J.; Castrillón-Santana, M.; Santesarti, E.; De Marsico, M.; Martínez, I.; Raymond, E.; Gómez, M.; Herrera, A. SMACC: A System for Microplastics Automatic Counting and Classification. IEEE Access 2020, 8, 25249–25261.

- Fallati, L.; Polidori, A.; Salvatore, C.; Saponari, L.; Savini, A.; Galli, P. Anthropogenic Marine Debris assessment with Unmanned Aerial Vehicle imagery and deep learning: A case study along the beaches of the Republic of Maldives. Sci. Total Environ. 2019, 693, 133581.

- Kako, S.; Morita, S.; Taneda, T. Estimation of plastic marine debris volumes on beaches using unmanned aerial vehicles and image processing based on deep learning. Mar. Pollut. Bull. 2020, 155, 111127.

- Pan, S.J.; Yang, Q. A Survey on Transfer Learning. IEEE Trans. Knowl. Data Eng. 2010, 22, 1345–1359.

- Deng, J.; Dong, W.; Socher, R.; Li, L.J.; Li, K.; Fei-Fei, L. ImageNet: A Large-Scale Hierarchical Image Database. CVPR09, 2009. Available online: http://www.image-net.org/ (accessed on 2 May 2021).

- Sharif Razavian, A.; Azizpour, H.; Sullivan, J.; Carlsson, S. CNN features off-the-shelf: An astounding baseline for recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Columbus, OH, USA, 23–28 June 2014; pp. 806–813.

- Donahue, J.; Jia, Y.; Vinyals, O.; Hoffman, J.; Zhang, N.; Tzeng, E.; Darrell, T. DeCAF: A deep convolutional activation feature for generic visual recognition. In Proceedings of the International Conference on Machine Learning, PMLR, Beijing, China, 22–24 June 2014; pp. 647–655.

- Yosinski, J.; Clune, J.; Bengio, Y.; Lipson, H. How transferable are features in deep neural networks? arXiv 2014, arXiv:1411.1792.

- Azizpour, H.; Sharif Razavian, A.; Sullivan, J.; Maki, A.; Carlsson, S. From generic to specific deep representations for visual recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Boston, MA, USA, 7–12 June 2015; pp. 36–45.

- Ben Jabra, M.; Koubaa, A.; Benjdira, B.; Ammar, A.; Hamam, H. COVID-19 Diagnosis in Chest X-rays Using Deep Learning and Majority Voting. Appl. Sci. 2021, 11, 2884.

- Esteva, A.; Kuprel, B.; Novoa, R.A.; Ko, J.; Swetter, S.M.; Blau, H.M.; Thrun, S. Dermatologist-level classification of skin cancer with deep neural networks. Nature 2017, 542, 115–118.

- Wang, X.; Peng, Y.; Lu, L.; Lu, Z.; Bagheri, M.; Summers, R.M. ChestX-ray8: Hospital-scale chest X-ray database and benchmarks on weakly-supervised classification and localization of common thorax diseases. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2097–2106.

- Karri, S.P.K.; Chakraborty, D.; Chatterjee, J. Transfer learning based classification of optical coherence tomography images with diabetic macular edema and dry age-related macular degeneration. Biomed. Opt. Express 2017, 8, 579–592.

- Chen, J.; Chen, J.; Zhang, D.; Sun, Y.; Nanehkaran, Y. Using deep transfer learning for image-based plant disease identification. Comput. Electron. Agric. 2020, 173, 105393.

- Qi, H.; Liang, Y.; Ding, Q.; Zou, J. Automatic Identification of Peanut-Leaf Diseases Based on Stack Ensemble. Appl. Sci. 2021, 11, 1950.

- Jeon, H.K.; Kim, S.; Edwin, J.; Yang, C.S. Sea Fog Identification from GOCI Images Using CNN Transfer Learning Models. Electronics 2020, 9, 311.

- Mahdianpari, M.; Salehi, B.; Rezaee, M.; Mohammadimanesh, F.; Zhang, Y. Very Deep Convolutional Neural Networks for Complex Land Cover Mapping Using Multispectral Remote Sensing Imagery. Remote Sens. 2018, 10, 1119.

- Iqbal Hussain, M.A.; Khan, B.; Wang, Z.; Ding, S. Woven Fabric Pattern Recognition and Classification Based on Deep Convolutional Neural Networks. Electronics 2020, 9, 1048.

- Japan Agency for Marine Earth Science and Technology, Deep-sea Debris Database. Available online: http://www.godac.jamstec.go.jp/catalog/dsdebris/metadataList?lang=en (accessed on 14 March 2021).

- O’Mahony, N.; Campbell, S.; Carvalho, A.; Harapanahalli, S.; Hernandez, G.V.; Krpalkova, L.; Riordan, D.; Walsh, J. Deep Learning vs. Traditional Computer Vision. In Advances in Computer Vision; Arai, K., Kapoor, S., Eds.; Springer International Publishing: Cham, Switzerland, 2020; pp. 128–144.

- Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1–9.

- Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J.; Wojna, Z. Rethinking the inception architecture for computer vision. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 2818–2826.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Szegedy, C.; Ioffe, S.; Vanhoucke, V.; Alemi, A. Inception-v4, Inception-ResNet and the impact of residual connections on learning. In Proceedings of the AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017; Volume 31.

- Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely connected convolutional networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4700–4708.

- Howard, A.G.; Zhu, M.; Chen, B.; Kalenichenko, D.; Wang, W.; Weyand, T.; Andreetto, M.; Adam, H. MobileNets: Efficient convolutional neural networks for mobile vision applications. arXiv 2017, arXiv:1704.04861.

- Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.C. MobileNetV2: Inverted residuals and linear bottlenecks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4510–4520.

- Breiman, L. Random forests. Mach. Learn. 2001, 45, 5–32.

- Vapnik, V.N. The Nature of Statistical Learning Theory; Springer: New York, NY, USA, 1995.

- Abadi, M.; Agarwal, A.; Barham, P.; Brevdo, E.; Chen, Z.; Citro, C.; Corrado, G.S.; Davis, A.; Dean, J.; Devin, M.; et al. TensorFlow: Large-Scale Machine Learning on Heterogeneous Systems. 2015. Available online: https://www.tensorflow.org/ (accessed on 17 June 2021).

- Chollet, F. Keras. 2015. Available online: https://github.com/fchollet/keras (accessed on 2 May 2021).

- Pedregosa, F.; Varoquaux, G.; Gramfort, A.; Michel, V.; Thirion, B.; Grisel, O.; Blondel, M.; Prettenhofer, P.; Weiss, R.; Dubourg, V.; et al. Scikit-learn: Machine Learning in Python. J. Mach. Learn. Res. 2011, 12, 2825–2830.

- Lin, M.; Chen, Q.; Yan, S. Network in network. arXiv 2013, arXiv:1312.4400.

- Ioffe, S.; Szegedy, C. Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift. In Proceedings of the 32nd International Conference on International Conference on Machine Learning, Lille, France, 7–9 July 2015; Volume 37, pp. 448–456.

- Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

- Wilson, D.R.; Martinez, T.R. The general inefficiency of batch training for gradient descent learning. Neural Netw. 2003, 16, 1429–1451.

- Masters, D.; Luschi, C. Revisiting small batch training for deep neural networks. arXiv 2018, arXiv:1804.07612.

- Sokolova, M.; Lapalme, G. A systematic analysis of performance measures for classification tasks. Inf. Process. Manag. 2009, 45, 427–437.

- Opitz, J.; Burst, S. Macro F1 and Macro F1. arXiv 2019, arXiv:1911.03347.

- Cohen, J. A coefficient of agreement for nominal scales. Educ. Psychol. Meas. 1960, 20, 37–46.

- Landis, J.R.; Koch, G.G. The measurement of observer agreement for categorical data. Biometrics 1977, 33, 159–174.

- Anscombe, F.J. Graphs in Statistical Analysis. Am. Stat. 1973, 27, 17–21.

- Van der Maaten, L.; Hinton, G. Visualizing data using t-SNE. J. Mach. Learn. Res. 2008, 9, 2580–2605.

- Zhu, L.; Spachos, P. Towards Image Classification with Machine Learning Methodologies for Smartphones. Mach. Learn. Knowl. Extr. 2019, 1, 59.

- Sykora, P.; Kamencay, P.; Hudec, R.; Benco, M.; Sinko, M. Comparison of Feature Extraction Methods and Deep Learning Framework for Depth Map Recognition. In Proceedings of the 2018 New Trends in Signal Processing (NTSP), Liptovsky Mikulas, Slovakia, 10–12 October 2018; pp. 1–7.

- Postorino, M.N.; Versaci, M. A geometric fuzzy-based approach for airport clustering. Adv. Fuzzy Syst. 2014, 2014.

- Mahmoudi, M.R.; Baleanu, D.; Qasem, S.N.; Mosavi, A.; Band, S.S. Fuzzy clustering to classify several time series models with fractional Brownian motion errors. Alex. Eng. J. 2021, 60, 1137–1145.

- Xu, K.; Pedrycz, W.; Li, Z.; Nie, W. Optimizing the prototypes with a novel data weighting algorithm for enhancing the classification performance of fuzzy clustering. Fuzzy Sets Syst. 2021, 413, 29–41.

| Class | Images | Training Set | Test Set |
|---|---|---|---|
| Glass | 178 | 143 | 35 |
| Metal | 497 | 398 | 99 |
| Plastic | 700 | 560 | 140 |
| Rubber | 211 | 169 | 42 |
| Other trash | 390 | 312 | 78 |
| No trash | 419 | 336 | 83 |
| ∑ | 2395 | 1918 | 477 |
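Every class in the table above is split close to 80/20. A per-class split that puts ⌊0.2·n⌋ images of each class into the test set reproduces every count in the table, including the totals 1918 and 477. The snippet below only checks this consistency. The splitting procedure it encodes is an assumption, not a description of how the authors divided the data, and the helper name `split_counts` is hypothetical. The class counts are copied from the table.

```python
# Per-class image counts from the dataset table.
CLASS_COUNTS = {
    "Glass": 178,
    "Metal": 497,
    "Plastic": 700,
    "Rubber": 211,
    "Other trash": 390,
    "No trash": 419,
}


def split_counts(counts, test_percent=20):
    """Hypothetical per-class split: floor(test_percent% of n) test images per class.

    Integer arithmetic avoids floating-point rounding surprises.
    """
    result = {}
    for name, n in counts.items():
        n_test = (n * test_percent) // 100
        result[name] = (n - n_test, n_test)  # (training, test)
    return result


splits = split_counts(CLASS_COUNTS)
for name, (n_train, n_test) in splits.items():
    print(f"{name:12s} train={n_train:4d} test={n_test:3d}")
print("total", sum(t for t, _ in splits.values()), sum(s for _, s in splits.values()))
```

A stratified random split with an overall test fraction (for example one that rounds 20% of 2395 up) could yield slightly different per-class counts. Rounding down within each class is simply the rule that matches the published numbers.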

| Model | Input Shape | Total Parameters | Feature Vector Size |
|---|---|---|---|
| VGG19 [22] | | 20.02 M | 512 |
| InceptionV3 [51] | | 21.80 M | 2048 |
| ResNet50 [52] | | 23.59 M | 2048 |
| Inception-ResNetV2 [53] | | 54.34 M | 1536 |
| DenseNet121 [54] | | 7.04 M | 1024 |
| MobileNetV2 [56] | | 2.26 M | 1280 |
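Each feature vector size in the table above equals the number of channels in the backbone's last convolutional block, for example 512 for VGG19 and 2048 for ResNet50. That is exactly what a global pooling layer produces. In Keras, such vectors are typically obtained by building an application model with `include_top=False` and `pooling="avg"`. Whether the authors used average or max pooling is an assumption here.

The NumPy sketch below shows only the global average pooling step, applied to a random stand-in feature map. The function name `global_average_pool` is hypothetical, and the 7×7 spatial grid assumes a 224×224 input to VGG19.

```python
import numpy as np


def global_average_pool(feature_maps):
    """Collapse (batch, height, width, channels) maps to (batch, channels) vectors."""
    feature_maps = np.asarray(feature_maps)
    return feature_maps.mean(axis=(1, 2))  # average over the two spatial axes only


# Random stand-in for VGG19's last convolutional block on a batch of 4 images:
# a 7x7 spatial grid (assumes 224x224 inputs) with 512 channels.
rng = np.random.default_rng(0)
maps = rng.random((4, 7, 7, 512))
features = global_average_pool(maps)
print(features.shape)  # (4, 512): one 512-dimensional deep feature per image
```

Because pooling removes the spatial axes, the vector length depends only on the channel count, not on the input resolution.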

| Model | Fixed Feature Extractor | Fine-Tuning |
|---|---|---|
| VGG19 | | |
| InceptionV3 | | |
| ResNet50 | | |
| Inception-ResNetV2 | | |
| DenseNet121 | | |
| MobileNetV2 | | |

| Layer | Kernel Size | Filters | Stride | Input Size |
|---|---|---|---|---|
| Conv | | 64 | 1 | |
| Conv | | 64 | 1 | |
| MaxPool | | - | 2 | |
| Conv | | 128 | 1 | |
| Conv | | 128 | 1 | |
| MaxPool | | - | 2 | |
| Conv | | 512 | 1 | |
| GlobalAvgPool | - | - | - | |
| Softmax | - | - | - | |

| Model | Scheme | Accuracy (%) | Precision, Macro (%) | Precision, Weighted (%) | Recall, Macro (%) | F1-Score, Macro (%) | F1-Score, Weighted (%) | Kappa |
|---|---|---|---|---|---|---|---|---|
| VGG19 | FFE | 77.15 | 80.22 | 77.87 | 77.23 | 78.15 | 76.87 | 0.71 |
| VGG19 | FT | 85.74 | 85.79 | 85.89 | 83.94 | 84.62 | 85.64 | 0.82 |
| VGG19 | FFE + FT | 86.16 | 86.55 | 86.16 | 85.80 | 86.15 | 86.13 | 0.83 |
| InceptionV3 | FFE | 81.34 | 82.62 | 82.14 | 81.45 | 81.75 | 81.46 | 0.77 |
| InceptionV3 | FT | 90.57 | 90.82 | 90.54 | 91.39 | 91.07 | 90.53 | 0.88 |
| InceptionV3 | FFE + FT | 89.94 | 91.14 | 90.12 | 89.46 | 90.13 | 89.91 | 0.87 |
| ResNet50 | FFE | 79.04 | 82.15 | 79.68 | 79.07 | 80.15 | 79.02 | 0.74 |
| ResNet50 | FT | 85.12 | 86.60 | 85.34 | 83.51 | 84.65 | 85.02 | 0.81 |
| ResNet50 | FFE + FT | 85.53 | 86.31 | 85.59 | 86.82 | 86.51 | 85.51 | 0.82 |
| Inception-ResNetV2 | FFE | 81.97 | 84.63 | 82.44 | 81.02 | 82.54 | 81.99 | 0.77 |
| Inception-ResNetV2 | FT | 91.40 | 91.91 | 91.40 | 92.32 | 92.08 | 91.38 | 0.89 |
| Inception-ResNetV2 | FFE + FT | 90.78 | 91.97 | 90.81 | 91.20 | 91.46 | 90.66 | 0.88 |
| DenseNet121 | FFE | 83.02 | 85.07 | 83.72 | 83.81 | 84.19 | 83.14 | 0.79 |
| DenseNet121 | FT | 88.05 | 89.19 | 88.29 | 87.61 | 88.26 | 88.03 | 0.85 |
| DenseNet121 | FFE + FT | 87.21 | 87.90 | 87.38 | 86.54 | 87.05 | 87.16 | 0.84 |
| MobileNetV2 | FFE | 79.04 | 79.83 | 78.99 | 77.91 | 78.56 | 78.76 | 0.74 |
| MobileNetV2 | FT | 82.60 | 83.57 | 82.65 | 82.13 | 82.61 | 82.49 | 0.78 |
| MobileNetV2 | FFE + FT | 81.76 | 81.55 | 82.32 | 79.84 | 80.52 | 81.87 | 0.77 |
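The table reports recall only as a macro average. A support-weighted recall would add no information. With class weights $n_i/N$, where $n_i = TP_i + FN_i$ is the number of test instances of class $i$ and $N$ is the test-set size, the weighted recall reduces to accuracy:

$$
\text{Recall}_{\text{weighted}}
  = \sum_{i} \frac{n_i}{N}\,\frac{TP_i}{TP_i + FN_i}
  = \sum_{i} \frac{n_i}{N}\,\frac{TP_i}{n_i}
  = \frac{1}{N}\sum_{i} TP_i
  = \text{Accuracy}.
$$

The macro average carries different information. It weights all six classes equally, so it is more sensitive to errors on small classes such as Glass and Rubber.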

| Model | Classifier | Accuracy (%) | F1-Score, Macro (%) | Kappa |
|---|---|---|---|---|
| VGG19 (FFE + FT) | NN | 86.16 | 86.15 | 0.83 |
| VGG19 (FFE + FT) | RF | 80.08 | 78.84 | 0.75 |
| VGG19 (FFE + FT) | SVM | 84.28 | 83.57 | 0.80 |
| VGG19 (FFE + FT) | NB | 68.97 | 67.04 | 0.61 |
| VGG19 (FFE + FT) | LR | 80.71 | 79.95 | 0.76 |
| VGG19 (FFE + FT) | KNN | 73.79 | 72.53 | 0.67 |
| InceptionV3 (FT) | NN | 90.57 | 91.07 | 0.88 |
| InceptionV3 (FT) | RF | 88.47 | 88.42 | 0.86 |
| InceptionV3 (FT) | SVM | 90.57 | 90.89 | 0.88 |
| InceptionV3 (FT) | NB | 88.47 | 88.60 | 0.86 |
| InceptionV3 (FT) | LR | 90.78 | 91.28 | 0.88 |
| InceptionV3 (FT) | KNN | 89.52 | 90.13 | 0.87 |
| ResNet50 (FFE + FT) | NN | 85.53 | 86.51 | 0.82 |
| ResNet50 (FFE + FT) | RF | 83.02 | 82.83 | 0.79 |
| ResNet50 (FFE + FT) | SVM | 86.16 | 87.07 | 0.83 |
| ResNet50 (FFE + FT) | NB | 81.55 | 82.03 | 0.77 |
| ResNet50 (FFE + FT) | LR | 85.95 | 86.97 | 0.82 |
| ResNet50 (FFE + FT) | KNN | 79.66 | 79.47 | 0.74 |
| Inception-ResNetV2 (FT) | NN | 91.40 | 92.08 | 0.89 |
| Inception-ResNetV2 (FT) | RF | 90.99 | 91.80 | 0.89 |
| Inception-ResNetV2 (FT) | SVM | 91.61 | 92.27 | 0.90 |
| Inception-ResNetV2 (FT) | NB | 90.99 | 91.75 | 0.89 |
| Inception-ResNetV2 (FT) | LR | 90.99 | 91.60 | 0.89 |
| Inception-ResNetV2 (FT) | KNN | 90.15 | 90.94 | 0.88 |
| DenseNet121 (FT) | NN | 88.05 | 88.26 | 0.85 |
| DenseNet121 (FT) | RF | 85.74 | 85.52 | 0.82 |
| DenseNet121 (FT) | SVM | 88.05 | 88.53 | 0.85 |
| DenseNet121 (FT) | NB | 83.02 | 83.52 | 0.79 |
| DenseNet121 (FT) | LR | 86.79 | 86.58 | 0.83 |
| DenseNet121 (FT) | KNN | 84.28 | 84.91 | 0.80 |
| MobileNetV2 (FT) | NN | 82.60 | 82.61 | 0.78 |
| MobileNetV2 (FT) | RF | 84.07 | 83.56 | 0.80 |
| MobileNetV2 (FT) | SVM | 85.32 | 85.62 | 0.82 |
| MobileNetV2 (FT) | NB | 84.49 | 84.40 | 0.81 |
| MobileNetV2 (FT) | LR | 83.02 | 83.47 | 0.79 |
| MobileNetV2 (FT) | KNN | 82.39 | 83.14 | 0.78 |
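The comparison in the table above feeds the same deep feature vectors to five conventional classifiers. A minimal scikit-learn sketch of that step follows. It assumes the features have already been extracted into a matrix `X` with one row per image. Here, synthetic Gaussian clusters stand in for real deep features.

All settings below are illustrative choices, not the hyperparameters used in the paper: the Gaussian naive Bayes variant, an RBF-kernel SVM, k = 5 neighbors, 100 trees, and standardized inputs. The helper `compare_classifiers` is hypothetical. The NN rows of the table come from the networks' own classification heads and are not reproduced here.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score, cohen_kappa_score, f1_score
from sklearn.model_selection import train_test_split
from sklearn.naive_bayes import GaussianNB
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC


def compare_classifiers(X, y, seed=0):
    """Fit each classifier on deep features; report accuracy, macro F1 and kappa."""
    X_train, X_test, y_train, y_test = train_test_split(
        X, y, test_size=0.2, stratify=y, random_state=seed
    )
    # Illustrative settings only; not the paper's hyperparameters.
    classifiers = {
        "RF": RandomForestClassifier(n_estimators=100, random_state=seed),
        "SVM": SVC(kernel="rbf"),
        "NB": GaussianNB(),
        "LR": LogisticRegression(max_iter=1000),
        "KNN": KNeighborsClassifier(n_neighbors=5),
    }
    results = {}
    for name, clf in classifiers.items():
        # Standardization matters for SVM, LR and KNN; it is harmless for RF and NB.
        model = make_pipeline(StandardScaler(), clf)
        model.fit(X_train, y_train)
        y_pred = model.predict(X_test)
        results[name] = (
            accuracy_score(y_test, y_pred),
            f1_score(y_test, y_pred, average="macro"),
            cohen_kappa_score(y_test, y_pred),
        )
    return results


# Synthetic stand-in for deep features: 6 classes, 64-dimensional vectors.
rng = np.random.default_rng(0)
n_classes, dim, per_class = 6, 64, 60
centers = rng.normal(0.0, 3.0, size=(n_classes, dim))
X = np.vstack([c + rng.normal(0.0, 1.0, size=(per_class, dim)) for c in centers])
y = np.repeat(np.arange(n_classes), per_class)

for name, (acc, f1, kappa) in compare_classifiers(X, y).items():
    print(f"{name:4s} accuracy={acc:.3f} macro-F1={f1:.3f} kappa={kappa:.3f}")
```

On real deep features, the vectors for `X` would come from the pooled backbone outputs, and the hyperparameters would normally be tuned on the training split only.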

| Dataset | Classes | Network | Problem | Reported Result |
|---|---|---|---|---|
| J-EDI dataset (Fulton et al. [27]) | 3 classes: Plastic, ROV, Bio | YOLOv2 FT | object detection | mAP = 47.9 |
| | | Tiny-YOLO | object detection | mAP = 31.6 |
| | | Faster R-CNN (with InceptionV2) | object detection | mAP = 81.0 |
| | | SSD MultiBox (with MobileNetV2) | object detection | mAP = 67.4 |
| ARIS Explorer 3000 FLS household marine debris dataset (Valdenegro-Toro [23]) | 6 classes: Metal, Glass, Cardboard, Rubber, Plastic, Background | CNN (four layers) | classification | accuracy = 97.1 |
| | | | object detection | correct detection = 70.8 |
| Underwater Sea Litter dataset, natural and synthetic data (Musić et al. [29]) | 5 classes: Cardboard, Glass, Paper, Metal, Plastic | VGG16 | classification | accuracy = 85.0 |
| | | custom CNN | classification | accuracy = 81.5 |
| | | YOLOv3 (threshold 0.75) | object detection | recall = 43.0, precision = 71.0, accuracy = 36.0 |
| LIFE DEBAG Seafloor Marine Litter dataset (Politikos et al. [28]) | 11 classes: plastic bags, plastic bottles, plastic sheets, plastic cups, tires, big object, plastic caps, small plastic sheets, cans, fishing nets, unspecified | Mask R-CNN (IoU threshold 25%) | object detection | mAP = 66.0 |
| | | Mask R-CNN (IoU threshold 50%) | object detection | mAP = 62.0 |
| | | Mask R-CNN (IoU threshold 75%) | object detection | mAP = 45.0 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Marin, I.; Mladenović, S.; Gotovac, S.; Zaharija, G. Deep-Feature-Based Approach to Marine Debris Classification. Appl. Sci. 2021, 11, 5644. https://doi.org/10.3390/app11125644

Marin I, Mladenović S, Gotovac S, Zaharija G. Deep-Feature-Based Approach to Marine Debris Classification. Applied Sciences. 2021; 11(12):5644. https://doi.org/10.3390/app11125644

Chicago/Turabian Style: Marin, Ivana, Saša Mladenović, Sven Gotovac, and Goran Zaharija. 2021. "Deep-Feature-Based Approach to Marine Debris Classification" Applied Sciences 11, no. 12: 5644. https://doi.org/10.3390/app11125644

APA Style: Marin, I., Mladenović, S., Gotovac, S., & Zaharija, G. (2021). Deep-Feature-Based Approach to Marine Debris Classification. Applied Sciences, 11(12), 5644. https://doi.org/10.3390/app11125644