1. Introduction

Action recognition is an important task in various video analytic fields, such as real-time intelligent monitoring, human–computer interaction, and autonomous driving systems [1,2,3]. Because of the nature of video processing, high-speed or real-time processing is an essential requirement for action recognition. To recognize an action, machine-learning- or deep-learning-based classifiers generally use motion features in a video sequence. Since an action may or may not continue throughout the entire video, the video is treated as a sequence of image frames.

Since action recognition can be considered as an extended classification task over a set of multiple, temporally related image frames, many image-classification-based approaches using a convolutional neural network (CNN) have been proposed. A two-dimensional (2D) CNN using 2D features can effectively recognize the characteristics of an object. AlexNet, one of the earliest and simplest CNN-based image classification networks, consists of eight learned layers (five 2D convolutional layers and three fully connected layers) together with max-pooling and dropout layers [4]. Since AlexNet, various CNN-based models with deeper layers have been proposed to improve the classification performance. VGG16 consists of 16 weight layers, namely 13 convolutional layers with 3 × 3 filters and three fully connected layers, interleaved with pooling layers [5]. Similarly, various high-performance CNN-based classification models have been proposed, such as GoogLeNet and DenseNet [6,7]. However, since 2D CNN features capture only the spatial context, they cannot incorporate temporal features in a systematic way. Furthermore, it is difficult to recognize an action in a video composed of multiple frames using a single-image classification algorithm.

To process a video clip with multiple frames, Karpathy et al. used a 2D CNN structure to learn 2D features for action recognition, and fused parts of the architecture to exploit additional information [8]. However, Karpathy's model learns only spatial features, and temporal features are applied only at the final classification layer. If the frames are processed individually in the network, the temporal relationship between frames cannot be fully incorporated.

A 3D convolutional neural network (C3D) was proposed to overcome the structural limitation of the 2D CNN for action recognition. Since the input, convolution filters, and feature maps of the C3D are all three-dimensional, it can learn both spatial and temporal information with a relatively small amount of computation. Ji et al. extracted features using 3D convolution and obtained the motion information contained in temporally adjacent frames for action recognition [9]. Tran et al. proposed a C3D that keeps the number of parameters small by using the minimum kernel size [10]. Tran's C3D takes 16 frames as input, which is not sufficient to analyze a long-term video. Although existing C3D-based approaches capture some spatio-temporal information, they cannot completely represent the temporal relationship between frames. To incorporate the inter-frame relationship, both spatial and temporal information need to be taken into account at the same time.

In this context, optical-flow-based methods estimate the motion of corresponding pixels between temporally adjacent video frames. Lucas and Kanade's optical flow has been used to recognize actions [11]. Specifically, the method sets up a window around each pixel in a frame and matches the window in the next frame. However, this method is not suitable for real-time action recognition, since computing the flow for every pixel is very expensive.
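The cost argument above can be made concrete with a small sketch. The Lucas–Kanade method itself solves a least-squares problem built from image gradients inside each window; the code below instead performs a simpler exhaustive window search (block matching), so it only illustrates why per-pixel matching is expensive and is not the original algorithm. The function names, the sum-of-squared-differences cost, and the window and search sizes are assumptions chosen for illustration.

```python
from typing import List, Tuple

Frame = List[List[float]]  # grayscale frame indexed as frame[y][x]


def ssd(prev: Frame, nxt: Frame, y: int, x: int, dy: int, dx: int, half: int) -> float:
    """Sum of squared differences between the window around (y, x) in `prev`
    and the window around (y + dy, x + dx) in `nxt`."""
    total = 0.0
    for a in range(-half, half + 1):
        for b in range(-half, half + 1):
            d = prev[y + a][x + b] - nxt[y + dy + a][x + dx + b]
            total += d * d
    return total


def match_pixel(prev: Frame, nxt: Frame, y: int, x: int,
                half: int, radius: int) -> Tuple[int, int]:
    """Exhaustively try every displacement within `radius` and return the
    (dy, dx) with the lowest window cost. The window around (y, x) is assumed
    to lie inside `prev`."""
    h, w = len(nxt), len(nxt[0])
    best, best_cost = (0, 0), float("inf")
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            # Skip candidates whose window would fall outside the next frame.
            if not (half <= y + dy < h - half and half <= x + dx < w - half):
                continue
            cost = ssd(prev, nxt, y, x, dy, dx, half)
            if cost < best_cost:
                best, best_cost = (dy, dx), cost
    return best


def dense_matching_ops(height: int, width: int, half: int, radius: int) -> int:
    """Pixel comparisons needed to match every pixel of one frame pair."""
    return height * width * (2 * radius + 1) ** 2 * (2 * half + 1) ** 2


# Toy example: the next frame is the previous frame shifted by (1, 2).
prev = [[float(10 * i + j) for j in range(10)] for i in range(10)]
nxt = [[prev[i - 1][j - 2] if i >= 1 and j >= 2 else 0.0 for j in range(10)]
       for i in range(10)]
print(match_pixel(prev, nxt, 4, 4, half=1, radius=2))  # (1, 2)
```

With a hypothetical 5 × 5 window (`half=2`) and a ±7 pixel search range, `dense_matching_ops(112, 112, 2, 7)` gives 70,560,000 pixel comparisons for a single 112 × 112 frame pair, which is why dense pixel-wise matching struggles to run in real time.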

To solve these problems, this paper presents a novel deep learning model that can estimate spatio-temporal features in the input video. The proposed model recognizes actions by combining spatial information with short-term pixel-difference information between adjacent frames. Since a 2D CNN module is used to estimate deep spatial features, the proposed method can recognize actions in real time. In addition, by fusing the proposed model with the C3D, we improve the recognition accuracy by analyzing the temporal relationship between frames. The major contributions of the proposed work include:

By creating a deep feature map from a differential image, the network can analyze the temporal relationship between frames, so that a 2D CNN can recognize actions using temporal features;

Since a human action is a series of action elements, it can be considered as a sequence. In this context, we propose a deep learning model that increases the accuracy of action recognition by assigning a high weight to an important action element;

Using late fusion with the C3D, we improve the recognition accuracy and show that the temporal relationship is important.

The paper is organized as follows. Section 2 describes related works for action recognition. Section 3 defines the short-term pixel-difference image and presents the moving average network for action recognition. After Section 4 presents experimental results, Section 5 concludes the paper.

2. Related Works

In the action recognition research field, various methods have been proposed to represent the temporal information of a video. Before deep learning approaches, feature-engineering-based methods that express the movement of an object in a video frame using hand-crafted features were the mainstream. For example, a histogram of oriented optical flow (HOOF) was used to express motions [12]. The optical flow is computed pixel-wise between temporally adjacent frames; it describes the direction and speed of motion at each pixel as a vector, representing moving objects while excluding the stationary regions of the video. HOOF accumulates these flow vectors into a histogram over flow directions, and each action is classified by its corresponding histogram.
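A simplified sketch of the orientation histogram behind HOOF is given below, assuming the optical flow field has already been computed by some estimator and is supplied as a list of (u, v) vectors. Each flow vector votes into an orientation bin with a weight equal to its magnitude, near-zero vectors from stationary regions are ignored, and the histogram is normalized to sum to one. The published HOOF descriptor also bins angles symmetrically about the vertical axis so that left and right motions are treated alike; that folding step is omitted here, and the bin count and magnitude threshold are illustrative choices.

```python
import math
from typing import List, Sequence, Tuple


def orientation_histogram(flow: Sequence[Tuple[float, float]],
                          num_bins: int = 8,
                          min_magnitude: float = 1e-6) -> List[float]:
    """Magnitude-weighted histogram of flow directions, normalized to sum to 1."""
    hist = [0.0] * num_bins
    for u, v in flow:
        magnitude = math.hypot(u, v)
        if magnitude < min_magnitude:
            continue  # stationary pixel: contributes nothing
        angle = math.atan2(v, u) % (2 * math.pi)  # direction in [0, 2*pi)
        # Map the angle to a bin; the modulo guards against rounding up to num_bins.
        b = int(angle / (2 * math.pi) * num_bins) % num_bins
        hist[b] += magnitude
    total = sum(hist)
    return [h / total for h in hist] if total > 0 else hist


# Two rightward vectors, one static pixel, and one vector along +v.
print(orientation_histogram([(1.0, 0.0), (0.0, 0.0), (3.0, 0.0), (0.0, 2.0)], num_bins=4))
```

Classification would then compare such per-video histograms, for example with a nearest-neighbour rule or an SVM; that stage is not shown.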

Recently, various deep learning approaches using CNNs have been proposed for action recognition. The proposed network uses VGG16 as a baseline image classification model, as shown in Figure 1. All convolutional layers in VGG16 use 3 × 3 kernels, and the first two fully connected layers have 4096 units each; stacking small 3 × 3 kernels increases the nonlinearity of the network and, in turn, the recognition accuracy.
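The benefit of stacking small kernels can be checked with simple arithmetic. The sketch below is a back-of-the-envelope calculation that ignores biases and assumes stride-1 layers with equal input and output channel counts (64 channels is an arbitrary example); it compares three stacked 3 × 3 layers with a single 7 × 7 layer that has the same receptive field.

```python
def stacked_receptive_field(num_layers: int, kernel: int = 3) -> int:
    """Receptive field of `num_layers` stacked stride-1 convolutions."""
    return 1 + num_layers * (kernel - 1)


def conv_weights(kernel: int, channels: int, num_layers: int = 1) -> int:
    """Weights (biases ignored) of `num_layers` kernel x kernel layers,
    each mapping `channels` input channels to `channels` output channels."""
    return num_layers * kernel * kernel * channels * channels


rf = stacked_receptive_field(3)              # 7: same region as one 7 x 7 kernel
stacked = conv_weights(3, 64, num_layers=3)  # 110592 weights, three ReLUs
single = conv_weights(rf, 64)                # 200704 weights, one ReLU
print(rf, stacked, single)
```

The stacked version covers the same 7 × 7 region with about 45% fewer weights and applies three nonlinearities instead of one.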

Recognizing actions in a video requires more computation than classifying a single image, since it requires temporal features as well as spatial features. In this context, various studies have addressed the relationship between spatial and temporal information. A 3D convolutional neural network (C3D) was proposed to learn the temporal features of the input video, using both temporal and spatial features. Features in the C3D layers form blocks that span both spatial and temporal coordinates, so each C3D block promotes spatio-temporal learning over a group of consecutive frames.

Figure 2 shows the structure of 2D and 3D convolution features.
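The difference between the two kinds of convolution in Figure 2 shows up directly in the output shapes. The helper below applies the usual output-size formula (n + 2p - k) // s + 1 along each axis; the 16-frame, 112 × 112 clip and the 3 × 3 (× 3) kernels are example values. A 2D convolution treats stacked frames as input channels and sums over them, so each filter yields a single 2D map with no temporal axis, whereas a 3D convolution also slides along time and keeps a temporal axis in its output.

```python
from typing import Tuple


def conv_out(n: int, k: int, stride: int = 1, pad: int = 0) -> int:
    """Output length along one axis for kernel size k, stride and zero padding."""
    return (n + 2 * pad - k) // stride + 1


def conv2d_shape(h: int, w: int, k: int, stride: int = 1, pad: int = 0) -> Tuple[int, int]:
    # Frames stacked as channels are summed inside each filter: no time axis remains.
    return conv_out(h, k, stride, pad), conv_out(w, k, stride, pad)


def conv3d_shape(t: int, h: int, w: int, k: int,
                 stride: int = 1, pad: int = 0) -> Tuple[int, int, int]:
    # The kernel also slides along time, so the output keeps a temporal axis.
    return conv_out(t, k, stride, pad), conv_out(h, k, stride, pad), conv_out(w, k, stride, pad)


print(conv2d_shape(112, 112, k=3, pad=1))      # (112, 112)
print(conv3d_shape(16, 112, 112, k=3, pad=1))  # (16, 112, 112)
print(conv3d_shape(16, 112, 112, k=3))         # (14, 110, 110)
```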

3. Proposed Action Recognition Network

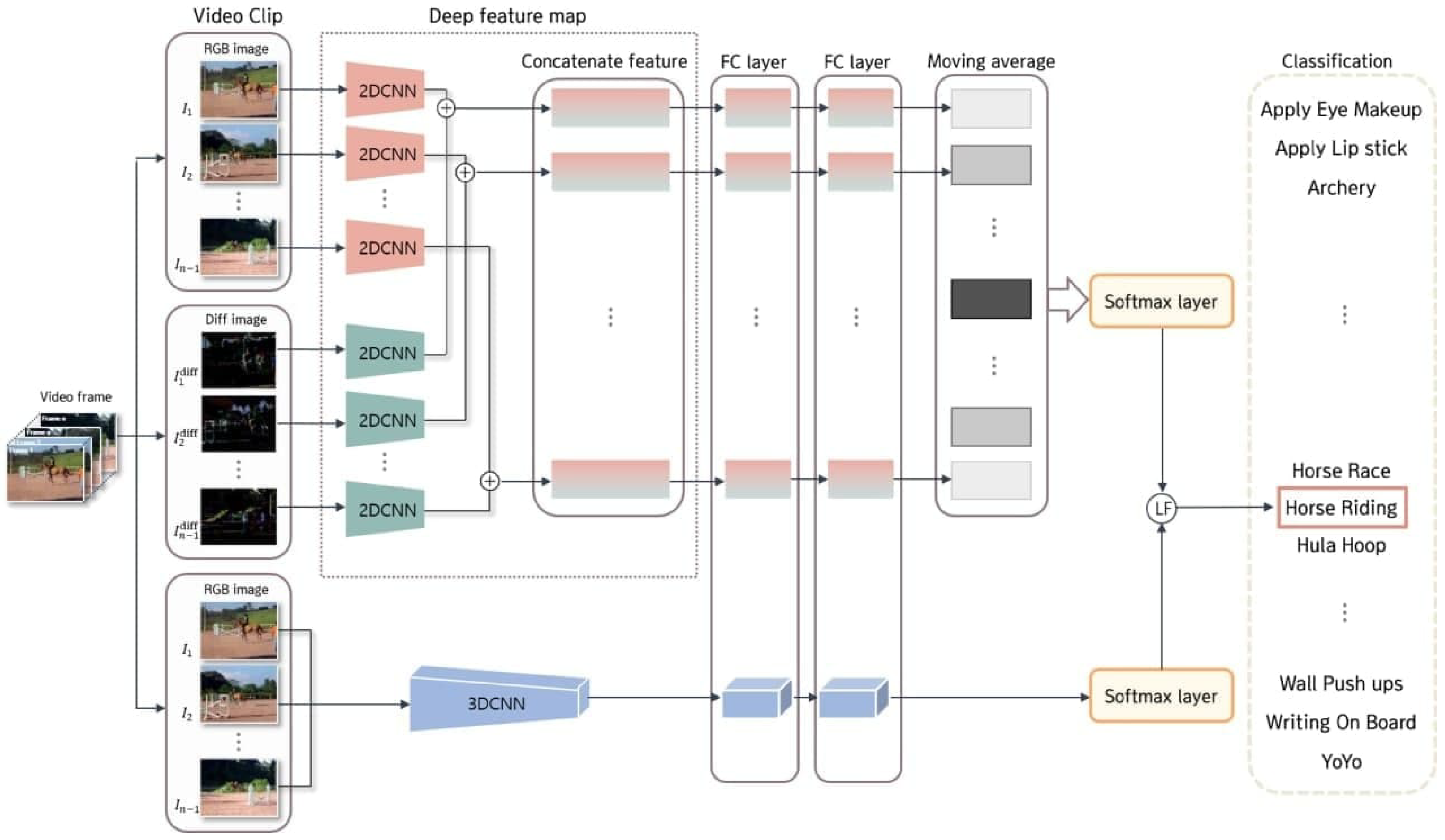

In this section, we present a novel action recognition network (ARN) using deep feature maps and a bidirectional exponential moving average, as shown in Figure 3. ARN consists of four sequential steps: (i) video frame sampling, (ii) the generation of deep feature maps that include temporal features, (iii) a bidirectional exponential moving average network that assigns a high weight to an important action, and (iv) the calculation of the action class loss and classification using late fusion with the C3D. These four steps are described in the following subsections.

3.1. Video Frame Sampling

To implement the proposed method efficiently, we sample the video frames. For example, if a single action lasts ten seconds, a video captured at 30 frames per second (fps) contains 300 frames. Because of the temporal redundancy between video frames, we sample the video to obtain a shorter clip that preserves the action information.

Given the set of initial video frames, the set of sampled video frames is obtained by selecting a subset of these frames.

The sampled video frames have a uniform interval and preserve the temporal order of the original frames. In this work, we used and resized each frame to , which gave the best performance and computational efficiency in our experiments.
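Uniform sampling can be sketched as follows. The number of sampled frames is not given in this section, so `num_samples` is a free parameter here, and the floor-based index rule is one simple choice among several (taking the centre of each interval would work as well). Resizing is left to an image library and is omitted.

```python
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def uniform_sample_indices(num_frames: int, num_samples: int) -> List[int]:
    """Indices of `num_samples` frames spaced at a uniform interval."""
    if not 0 < num_samples <= num_frames:
        raise ValueError("need 0 < num_samples <= num_frames")
    step = num_frames / num_samples  # step >= 1, so indices strictly increase
    return [int(i * step) for i in range(num_samples)]


def sample_clip(frames: Sequence[T], num_samples: int) -> List[T]:
    """Shorter clip that keeps the temporal order of the original frames."""
    return [frames[i] for i in uniform_sample_indices(len(frames), num_samples)]


# A ten-second action at 30 fps has 300 frames; keep a hypothetical 10 of them.
print(uniform_sample_indices(300, 10))  # [0, 30, 60, ..., 270]
```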

3.2. Generation of Deep Feature Maps

In this subsection, we present a novel method to generate deep feature maps for accurate and efficient action recognition. Since action recognition requires both spatial and temporal information, we combine two types of feature maps, computed from RGB images and from differential images. In addition, the generated feature maps include not only spatio-temporal information but also the temporal continuity between adjacent frames.

The RGB image feature map, which exploits the spatial information of the input video clip, is generated by feeding the n-th sampled video frame, defined over its pixel coordinates, into the VGG16 backbone network and taking the resulting bottleneck feature map.

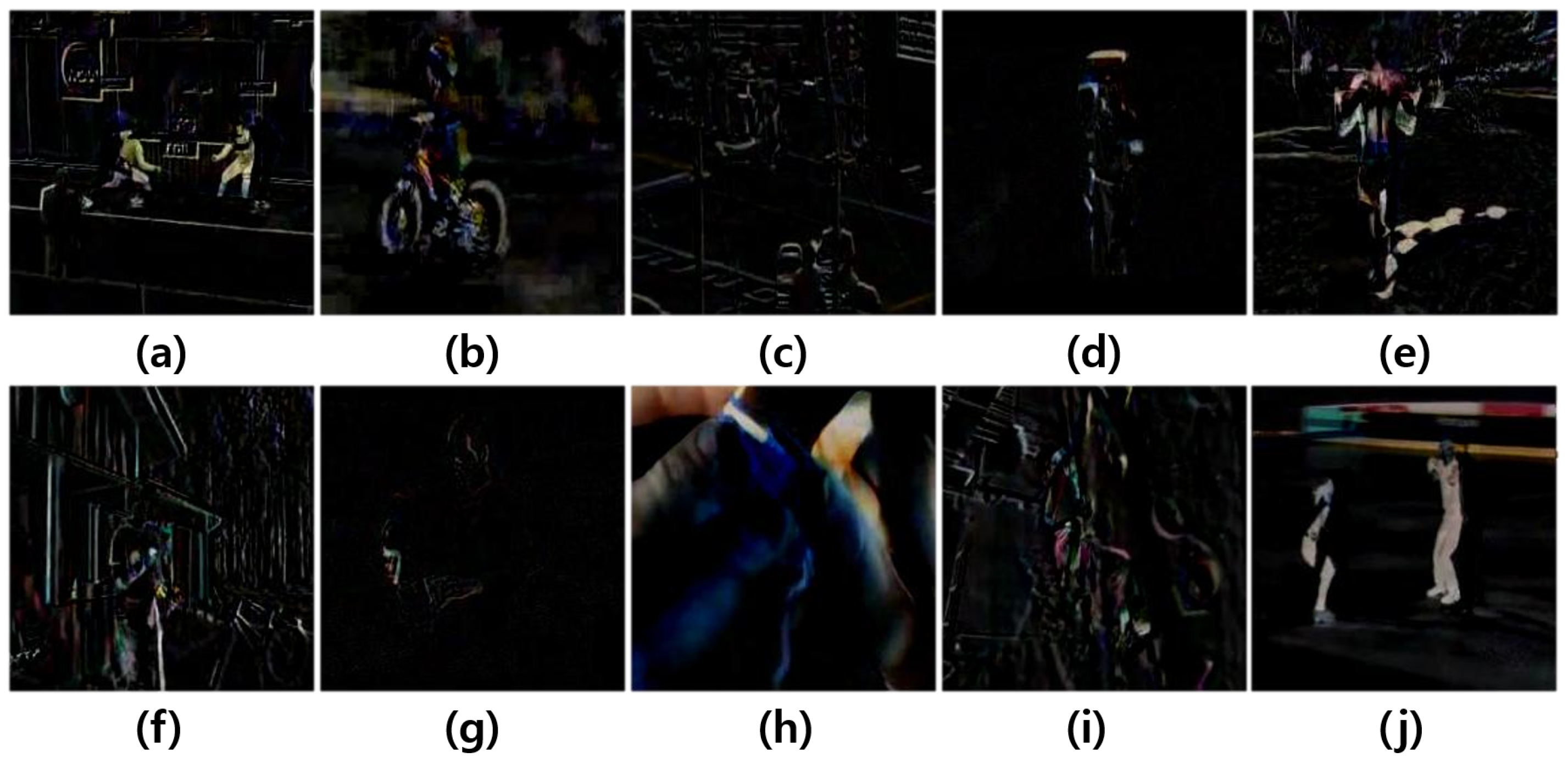

The differential feature map is generated from the RGB frames. Specifically, each sampled RGB frame contains spatial information, and the sequence of frames contains temporal information. To exploit the temporal relationship between temporally adjacent frames, we compute the pixel-wise difference between adjacent sampled frames and generate a deep feature map from it.
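A minimal sketch of the pixel-wise difference between adjacent sampled frames follows. Frames are plain nested lists of (R, G, B) tuples, and the absolute difference is taken per channel; whether the method keeps the sign of the difference is not stated here, so taking the absolute value is an assumption. Pixels of a static background cancel to zero, which matches the behaviour discussed for Figure 4.

```python
from typing import List, Sequence, Tuple

Pixel = Tuple[int, int, int]
Frame = List[List[Pixel]]  # frame[y][x] = (R, G, B)


def differential_image(prev: Frame, curr: Frame) -> Frame:
    """Per-pixel, per-channel |curr - prev| (absolute value is an assumption)."""
    return [
        [
            (abs(c[0] - p[0]), abs(c[1] - p[1]), abs(c[2] - p[2]))
            for c, p in zip(row_curr, row_prev)
        ]
        for row_curr, row_prev in zip(curr, prev)
    ]


def differential_sequence(frames: Sequence[Frame]) -> List[Frame]:
    """N sampled frames -> N - 1 differential images between adjacent frames."""
    return [differential_image(frames[n - 1], frames[n]) for n in range(1, len(frames))]


# The first pixel is static background; the second pixel changes.
f0 = [[(10, 10, 10), (20, 20, 20)]]
f1 = [[(10, 10, 10), (50, 25, 20)]]
print(differential_image(f0, f1))  # [[(0, 0, 0), (30, 5, 0)]]
```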

Figure 4 shows differential images computed from a selected set of videos in the UCF101 dataset [13]. A differential image suppresses the static background and retains the moving objects. The classes shown in Figure 4 involve human hands. When a differential image is generated from a video taken by a stationary camera, only the moving hand region, together with part of the upper body, is detected. In Figure 4a,f, some background regions remain because the camera moves, but the pixels of the person performing the major movement can still be distinguished from the background.

The differential image feature map is generated from the differential image in the same manner as the RGB image feature map: the differential image is passed through the backbone VGG16 network, which returns a bottleneck feature map.

When learning actions from a video, it is important to learn the spatio-temporal information of the action. Therefore, we generate a deep feature map by concatenating the RGB image and differential image feature maps. Using the concatenated feature maps, we compute the feature vector of the n-th frame by applying multiple fully connected layers to the n-th concatenated feature map. Consequently, the generated deep feature map combines two feature maps carrying spatio-temporal information and enables spatio-temporal learning in the entire network.
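The concatenation followed by fully connected layers can be sketched in plain Python. Each feature map is flattened, the RGB map is placed first, and each layer is a dense layer with ReLU; the layer sizes, weights, ReLU activation, and concatenation order are hypothetical, since this section does not give them. Because a fully connected layer follows, concatenating along the channel axis before flattening would only permute the inputs, which the weights can absorb.

```python
from typing import List, Sequence, Tuple

Layer = Tuple[List[List[float]], List[float]]  # (weight matrix, bias vector)


def flatten(x) -> List[float]:
    """Flatten an arbitrarily nested list of numbers (e.g. a C x H x W map)."""
    if isinstance(x, (int, float)):
        return [float(x)]
    out: List[float] = []
    for item in x:
        out.extend(flatten(item))
    return out


def dense_relu(x: Sequence[float], weights: List[List[float]],
               bias: List[float]) -> List[float]:
    """One fully connected layer y = max(0, W x + b)."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x)) + b)
            for row, b in zip(weights, bias)]


def deep_feature_vector(rgb_map, diff_map, layers: Sequence[Layer]) -> List[float]:
    """Concatenate the two feature maps and pass them through the FC layers."""
    x = flatten(rgb_map) + flatten(diff_map)
    for weights, bias in layers:
        x = dense_relu(x, weights, bias)
    return x


# Toy maps and hand-picked weights: [1, 2] ++ [3] -> [1, 3] -> [4.5].
layers = [([[1.0, 0.0, 0.0], [0.0, 0.0, 1.0]], [0.0, 0.0]),
          ([[1.0, 1.0]], [0.5])]
print(deep_feature_vector([[1.0, 2.0]], [[3.0]], layers))  # [4.5]
```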

3.3. Bidirectional Exponential Moving Average

In this subsection, we present a bidirectional exponential moving average method that assigns a high weight to an important action. In general, a single action in a video consists of a sequence of action elements, as shown in Figure 5. More specifically, an action consists of preparation, implementation, and completion. In this paper, we assume that the most important information is contained in the middle element, that is, the implementation.

Based on that assumption, we assign a higher weight to the middle frames using the bidirectional exponential moving average, which is computed recursively over the sequence of feature vectors. A smoothing factor adjusts the weight of the bidirectional exponential moving average; in this work, its value was selected experimentally.

The final value is determined as the mean of the first and last values of the bidirectional exponential moving average, where these two values are weighted around the middle of the feature map sequence in the form of a fully connected layer.
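The recursion itself is not reproduced in this section, so the following is only a sketch of one reading that is consistent with the stated goal of emphasizing the middle frames: a forward exponential moving average runs from the first frame to the middle frame, a backward one runs from the last frame back to the middle frame, and the output is the mean of the two end values. The smoothing factor `alpha`, the function names, and the split at the middle frame are all assumptions. Feeding one-hot impulses through the function exposes the effective weight of each frame.

```python
from typing import List, Sequence

Vector = List[float]


def ema(seq: Sequence[Vector], alpha: float) -> Vector:
    """Standard EMA s_t = alpha * x_t + (1 - alpha) * s_{t-1}; returns the last s."""
    s = list(seq[0])
    for x in seq[1:]:
        s = [alpha * xi + (1.0 - alpha) * si for xi, si in zip(x, s)]
    return s


def bidirectional_ema(seq: Sequence[Vector], alpha: float) -> Vector:
    """Hypothetical bidirectional EMA: frames 0..mid forward, frames n-1..mid
    backward, then the mean of the two results, so both passes end at the middle."""
    mid = len(seq) // 2
    forward = ema(seq[: mid + 1], alpha)
    backward = ema(list(seq[mid:])[::-1], alpha)
    return [0.5 * (f + b) for f, b in zip(forward, backward)]


def frame_weights(num_frames: int, alpha: float) -> List[float]:
    """Effective weight of each frame, measured with one-hot impulses."""
    weights = []
    for k in range(num_frames):
        impulse = [[1.0 if i == k else 0.0] for i in range(num_frames)]
        weights.append(bidirectional_ema(impulse, alpha)[0])
    return weights


print(frame_weights(5, alpha=0.5))  # [0.125, 0.125, 0.5, 0.125, 0.125]
```

Under this reading, the weights always sum to one and peak at the middle frame. In a real network, each vector would be a per-frame feature or class-score vector rather than a scalar.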

3.4. Late Fusion and Combined Loss

In this subsection, we present a method to improve the accuracy of the proposed algorithm and show that the temporal relationship matters. The late fusion operation combines the previously generated information with the result of the C3D, whose input is the same sampled video clip described in Section 3.1 that is used for the deep feature map, and the input has a size of .

In this paper, the late fusion operation uses the softmax value of the bidirectional exponential moving average network based on the deep feature map, together with the softmax value computed from the last fully connected layer of the C3D network. To combine the temporal information of the C3D network with the temporal relationship information of the proposed network, the two softmax values are fused with the same weight.
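Equal-weight late fusion of the two softmax outputs can be sketched as below. `logits_arn` and `logits_c3d` are hypothetical names for the class scores of the proposed network and of the last fully connected layer of the C3D. The fused vector is still a probability distribution because it is the average of two distributions.

```python
import math
from typing import List, Sequence


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax (the max is subtracted before exponentiating)."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def late_fusion(logits_arn: Sequence[float], logits_c3d: Sequence[float]) -> List[float]:
    """Average the two softmax outputs with the same weight (0.5 each)."""
    p, q = softmax(logits_arn), softmax(logits_c3d)
    return [0.5 * a + 0.5 * b for a, b in zip(p, q)]


fused = late_fusion([2.0, 1.0, 0.0], [0.0, 3.0, 0.0])
print(fused.index(max(fused)))  # 1: the confident C3D vote outweighs the ARN vote
```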

The proposed method classifies an action by using the bidirectional exponential moving average network based on the deep feature map, together with late fusion with the C3D. The loss function is therefore computed as a least-squares error over the class labels. To minimize the loss, the Adam optimizer was used as the optimization algorithm [14].
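A least-squares loss against a one-hot label can be written as follows. Summing over classes and averaging over the batch is an assumption, since this section does not say how the per-label terms are reduced. The Adam update itself would normally come from a deep learning framework and is not reproduced.

```python
from typing import List, Sequence


def one_hot(label: int, num_classes: int) -> List[float]:
    return [1.0 if c == label else 0.0 for c in range(num_classes)]


def least_squares_loss(probs: Sequence[float], label: int) -> float:
    """Sum over classes of (predicted probability - one-hot target)^2."""
    target = one_hot(label, len(probs))
    return sum((p - t) ** 2 for p, t in zip(probs, target))


def batch_loss(batch_probs: Sequence[Sequence[float]], labels: Sequence[int]) -> float:
    """Mean per-sample loss over a batch (the reduction is an assumption)."""
    losses = [least_squares_loss(p, y) for p, y in zip(batch_probs, labels)]
    return sum(losses) / len(losses)


print(least_squares_loss([0.5, 0.5, 0.0], 0))  # 0.5
```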

5. Conclusions

We proposed a novel action recognition network (ARN) using a short-term pixel difference and a bidirectional exponential moving average. The proposed ARN generates deep features from the short-term pixel-difference image to combine spatio-temporal information with the temporal relationship between adjacent frames. The proposed network gives a higher weight to the middle frames to learn important action elements. Finally, the previously generated information and the result of the C3D are fused to improve the performance of the proposed network. The late fusion result shows that the temporal relationship is important for improving recognition performance.

Experimental results showed that ARN succeeded in action recognition with a small dataset. A combination of short-term pixel-difference-based deep features and bidirectional moving average significantly improved the performance of the baseline network. Although the ARN additionally takes temporal information into account, it does not require additional computation compared with 2D CNN. As a result, the ARN is suitable for real-time action recognition in terms of both recognition accuracy and computational efficiency.