Abstract

Epilepsy is a common neurological disease characterized by spontaneous recurrent seizures. Because anti-epileptic drugs are ineffective in approximately 25% of cases, resection of the epileptogenic tissue may be needed. Surgical intervention depends on the correct detection of the epileptogenic zone, which relies on invasive diagnostic techniques such as Stereotactic Electroencephalography (SEEG). SEEG uses multi-modal fusion of a pre-implantation magnetic resonance image and a post-implantation computed tomography image to localize the electrodes. It is therefore essential to know how to measure the performance of fusion methods in the presence of external objects, such as electrodes. This paper presents a literature review, following the methodology proposed by Kitchenham, to determine the main techniques of multi-modal brain image fusion, the most relevant performance metrics, and the main fusion tools. The search was conducted in the Scopus, IEEE, PubMed, Springer, and Google Scholar databases and search engines, resulting in 15 primary source articles. The review found that rigid registration, proposed in nine of the articles, was the most used technique when electrode localization in SEEG is required. However, there is a lack of standard validation metrics, which makes performance measurement difficult when external objects are present; this is caused primarily by the absence of a gold-standard dataset for comparison.

1. Introduction

Epilepsy is a neurological disease affecting approximately 50 million people worldwide [1]. Between 25% and 30% of cases are untreatable with anti-epileptic drugs [2,3]. In those cases, resection of the seizure focus area may be necessary [4].

The resection surgery for pharmacoresistant epilepsy relies on the correct detection of the epileptogenic tissue [5,6], which depends on invasive diagnostic techniques such as Stereotactic Electroencephalography (SEEG) [7]. SEEG measures electric signals using depth electrodes implanted in the brain. The implantation is guided by a Magnetic Resonance Image (MRI) acquired with a stereotactic frame affixed to the head prior to the implantation. After the implantation, a Computed Tomography (CT) image is acquired to localize the electrodes, and finally, the pre-implantation MRI and the post-implantation CT are fused. Image fusion is a powerful technique because it combines the electrode locations and the structural anatomical information in a single image [8,9]. However, the presence of external objects may affect the performance of fusion techniques. This literature review therefore focuses on fusion techniques between MRI and CT images, with special attention to software tools, evaluation metrics, and the presence of external objects.

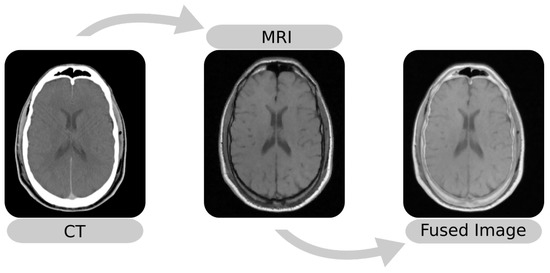

Image fusion (Figure 1) maps images into the same coordinate system and then blends the aligned result into an output image. The performance of a fusion method depends on characteristics such as the imaging modalities involved. For example, methods that use the Mean-Squared Difference (MSD) as the optimization metric perform better in single-modality fusion [10,11]. It is therefore essential to know the performance of these techniques, especially in applications such as SEEG that involve external objects.

Figure 1.

Example of multi-modal image fusion between an MRI and a CT. The images were taken from Patient 001 of the RIRE dataset [12].

This paper aims to present an overview of the main methods, tools, metrics, and databases used in multi-modal image fusion. This review contributes to the literature by summarizing the main techniques of image fusion, distinguishing between the two main steps of registration and merging.

2. Materials and Methods

The literature review followed the methodology proposed by Kitchenham [13], which was originally designed for systematic reviews in software engineering and aligns with the PRISMA methodology [14]. Table 1 maps the sections defined by Kitchenham for systematic literature reviews to their equivalents in the PRISMA checklist.

Table 1.

The Kitchenham methodology vs. the PRISMA methodology.

The review comprised three stages. First, the review was planned by defining the research questions. Second, the review was conducted by applying the search strategy. Third, the collected information was synthesized to answer the research questions. The following subsections detail these stages.

2.1. Planning

Given the need for multi-modal image fusion in diagnostic exams with external objects, such as SEEG, where fusion errors could lead to inaccurate detection of the epileptogenic tissue, the following research questions were proposed, focusing mainly on multi-modal fusion between CT and MRI:

- (i) What are the existing methods of brain image fusion using CT and MRI?

- (ii) What are the tools used to fuse multi-modal brain images?

- (iii) What are the metrics used to validate and compare image fusion methods?

2.2. Conducting the Review

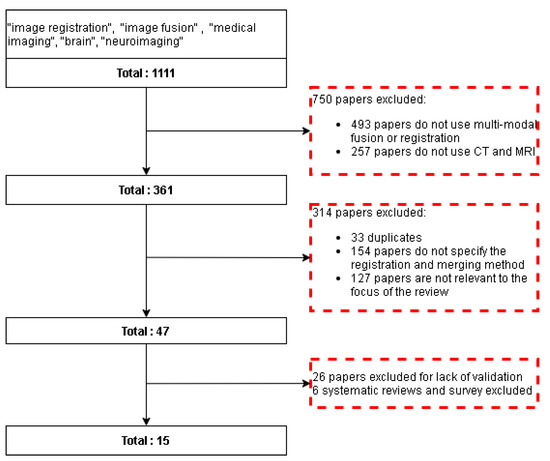

The Scopus, IEEE, PubMed, Springer, and Google Scholar databases and search engines were used to conduct the review because they index articles from both the medical and engineering areas. The following keywords were used to select articles about multi-modal brain image fusion: “image registration”, “image fusion”, “medical imaging”, “brain”, “neuroimaging”, “computer tomography”, “CT”, “magnetic resonance imaging”, and “MRI”. The search was restricted to articles published between January 2010 and April 2021, which yielded a total of 1111 papers in the first stage. Afterwards, the following inclusion criteria were applied: (i) studies about multi-modal image fusion or registration; (ii) studies that used brain images; and (iii) studies that specified the registration and merging method. This stage resulted in a total of 361 papers. Finally, two exclusion criteria were applied: (i) studies without validation of the procedure; and (ii) studies that did not use CT or MRI. The final stage returned a total of 15 papers (Table 2 and Figure 2).

Table 2.

The fifteen original research articles selected.

Figure 2.

Flow diagram for the literature review. Red dotted squares represent the excluded papers.

Three criteria were used to evaluate the quality of the papers: (i) complete description of the image fusion or registration method; (ii) complete description of the validation; and (iii) complete description of the databases used for validation.

3. Results

This section is divided into three parts according to the research questions (Section 2.1): methods of image fusion (Section 3.1), performance metrics (Section 3.2), and image fusion tools (Section 3.3).

3.1. Methods of Image Fusion

Image fusion composes a more informative image from multiple inputs in two steps: registration and merging. The first step transforms all input images into a common coordinate system so that they represent the same object or phenomenon, while the second step merges the aligned images [30]. Accordingly, image registration and image merging are treated separately below.

3.1.1. Image Registration

Image registration consists of finding a transformation $T_{\mu}$ that aligns a moving image $I_M$ with a fixed image $I_F$ by minimizing a cost function $C$, where the vector $\mu$ contains the parameters of the transform (Equation (1)) [31,32]:

$$\hat{\mu} = \arg\min_{\mu} C\left(T_{\mu}; I_F, I_M\right) \quad (1)$$

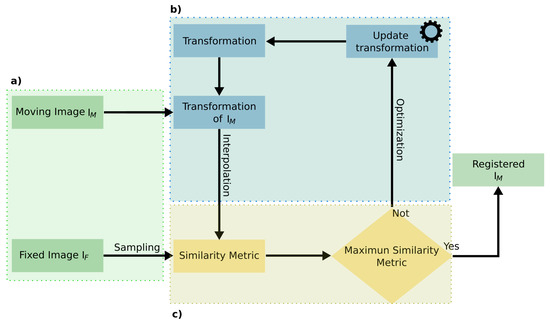

Figure 3 summarizes the procedure: a transformation $T_{\mu}$ is applied to $I_M$, and a similarity metric between the transformed $I_M$ and $I_F$ is optimized by iteratively updating the transform parameters $\mu$.

Figure 3.

Registration procedure. (a) Input images for the registration. (b) Optimization of the transformation for $I_M$. (c) Measurement of the similarity metric to compare the transformed $I_M$ with $I_F$.
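Equation (1) can be made concrete with a deliberately small sketch, assuming 2D images, a transform restricted to integer translations, the MSD as the cost function $C$, and exhaustive search as the optimizer. The function names are illustrative only; registration toolkits such as ITK use continuous transform parameters, interpolation, and gradient-based optimizers instead.

```python
import itertools

import numpy as np


def shift_image(img, dy, dx):
    """Translate a 2D image by integer offsets (dy, dx).

    Pixels with no source value after the shift are set to NaN so that the
    cost function can ignore them. Assumes |dy| < height and |dx| < width.
    """
    h, w = img.shape
    out = np.full((h, w), np.nan)
    out[max(0, dy):min(h, h + dy), max(0, dx):min(w, w + dx)] = \
        img[max(0, -dy):min(h, h - dy), max(0, -dx):min(w, w - dx)]
    return out


def msd(fixed, moved):
    """Mean-squared difference over the pixels where both images overlap."""
    valid = ~np.isnan(moved)
    return float(np.mean((fixed[valid] - moved[valid]) ** 2))


def register_translation(fixed, moving, max_shift=5):
    """Return the integer translation (dy, dx) minimizing the MSD, and its cost."""
    best_cost, best_shift = np.inf, (0, 0)
    # Exhaustive search over the parameter vector mu = (dy, dx).
    for dy, dx in itertools.product(range(-max_shift, max_shift + 1), repeat=2):
        cost = msd(fixed, shift_image(moving, dy, dx))
        if cost < best_cost:
            best_cost, best_shift = cost, (dy, dx)
    return best_shift, best_cost
```

Exhaustive search is only feasible here because the parameter space is tiny; with six or more continuous parameters, an iterative optimizer is required.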

The registration procedure can be classified according to two criteria: (i) the transformation (Figure 3b), which divides methods into rigid and non-rigid; and (ii) the similarity metric being optimized (Figure 3c). The concepts of transformation and similarity metric are presented first, followed by a summary of the results.

Registration: Transformation

The first type of method is rigid registration, which applies a single global transformation to the entire $I_M$. Depending on the transformation type and degrees of freedom, this method can be categorized into [31]:

- (i) translation: translation only (three degrees of freedom in 3D);

- (ii) Euler: rotation and translation (six degrees of freedom in 3D);

- (iii) similarity: rotation, isotropic scaling, and translation (seven degrees of freedom in 3D);

- (iv) affine: translation, rotation, scaling, and shearing (12 degrees of freedom in 3D).

These transformations define the degrees of freedom of the deformation model; of the four, the affine transformation has the most.
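The transformation types above can be written as 4 × 4 homogeneous matrices. The following sketch assumes NumPy and an Euler-angle convention ($R = R_z R_y R_x$) chosen only for illustration, and notes the parameter count of each type. A similarity transform with a single isotropic scale has seven parameters; allowing one scale per axis gives nine.

```python
import numpy as np


def rotation(rx, ry, rz):
    """3D rotation from Euler angles in radians, composed as Rz @ Ry @ Rx."""
    cx, sx = np.cos(rx), np.sin(rx)
    cy, sy = np.cos(ry), np.sin(ry)
    cz, sz = np.cos(rz), np.sin(rz)
    r_x = np.array([[1, 0, 0], [0, cx, -sx], [0, sx, cx]])
    r_y = np.array([[cy, 0, sy], [0, 1, 0], [-sy, 0, cy]])
    r_z = np.array([[cz, -sz, 0], [sz, cz, 0], [0, 0, 1]])
    return r_z @ r_y @ r_x


def homogeneous(linear, t):
    """Pack a 3x3 linear part and a translation vector into a 4x4 matrix."""
    m = np.eye(4)
    m[:3, :3] = linear
    m[:3, 3] = t
    return m


def translation(t):                  # 3 parameters
    return homogeneous(np.eye(3), t)


def euler(angles, t):                # 3 rotations + 3 translations = 6
    return homogeneous(rotation(*angles), t)


def similarity(angles, scale, t):    # 3 + 1 isotropic scale + 3 = 7
    return homogeneous(scale * rotation(*angles), t)


def affine(linear, t):               # 9 linear entries + 3 translations = 12
    return homogeneous(np.asarray(linear, dtype=float), t)


def apply(m, points):
    """Apply a 4x4 homogeneous transform to an (n, 3) array of points."""
    pts = np.asarray(points, dtype=float)
    ones = np.ones((pts.shape[0], 1))
    return (np.hstack([pts, ones]) @ m.T)[:, :3]
```

Euler transforms preserve distances between points, a similarity transform scales all distances by the same factor, and an affine transform can additionally shear and scale each axis differently.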

The second type of method is non-rigid registration, which is computationally more complex than rigid registration because it requires estimating local deformations of the moving image. Non-rigid methods are sub-classified into two main categories based on the deformation model: transformations derived from physical models and transformations derived from interpolation and approximation models [33].
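Non-rigid registration replaces the single global matrix with a spatially varying mapping. As a minimal sketch, assuming a dense 2D displacement field given per pixel (how that field is parameterized and optimized, for example with physical or interpolation-based models, is outside its scope), the moving image can be resampled with bilinear interpolation:

```python
import numpy as np


def bilinear_sample(img, ys, xs):
    """Sample img at real-valued coordinates, clamping to the image border."""
    h, w = img.shape
    ys = np.clip(ys, 0, h - 1)
    xs = np.clip(xs, 0, w - 1)
    y0 = np.floor(ys).astype(int)
    x0 = np.floor(xs).astype(int)
    y1 = np.minimum(y0 + 1, h - 1)
    x1 = np.minimum(x0 + 1, w - 1)
    wy, wx = ys - y0, xs - x0
    top = (1 - wx) * img[y0, x0] + wx * img[y0, x1]
    bottom = (1 - wx) * img[y1, x0] + wx * img[y1, x1]
    return (1 - wy) * top + wy * bottom


def warp(moving, uy, ux):
    """Backward warp: output(y, x) = moving(y + uy[y, x], x + ux[y, x])."""
    h, w = moving.shape
    yy, xx = np.meshgrid(np.arange(h), np.arange(w), indexing="ij")
    return bilinear_sample(moving.astype(float), yy + uy, xx + ux)
```

A field that varies smoothly across the image (for example, a Gaussian bump in `ux`) produces a local deformation that no single global matrix can represent.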

Registration: Metrics

The metric or cost function is an essential part of the registration procedure, which also determines the type of transformation and optimization [32,34]. The most common metrics are:

- (i) Mean-Squared Difference (MSD): measures the average squared intensity difference between the $I_F$ and $I_M$ voxels;

- (ii) Correlation Coefficient (CC): measures the linear similarity between the gray levels of the $I_F$ and $I_M$ voxels [35];

- (iii) Normalized Correlation Coefficient (NCC): the CC computed after normalizing the images;

- (iv) Mutual Information (MI): measures the statistical dependency between two variables (the moving and fixed images) and is calculated from entropies estimated with the joint intensity histogram and Parzen-window estimation [35];

- (v) Normalized Mutual Information (NMI): an alternative to the MI that addresses drawbacks such as misregistration when the overlapping region is small [10].

The most common metrics in multi-modal brain registration are the MI and the NMI, which rely on the probability distribution between the images [10,11].
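The metrics above can be sketched in a few lines. This example assumes NumPy, estimates the probability distributions with a plain joint histogram rather than Parzen-window estimation, and uses the NMI formulation $(H(A) + H(B))/H(A,B)$, one of several NMI definitions in use. It illustrates why MI-based metrics suit multi-modal data: an intensity-inverted copy of an image has a large MSD and a negative NCC, yet nearly the same MI as the image itself.

```python
import numpy as np


def msd(a, b):
    """Mean-squared difference of intensities."""
    return float(np.mean((np.asarray(a, float) - np.asarray(b, float)) ** 2))


def ncc(a, b):
    """Normalized correlation coefficient of two images (range -1 to 1)."""
    a = (a - a.mean()) / a.std()
    b = (b - b.mean()) / b.std()
    return float(np.mean(a * b))


def _entropy(p):
    """Shannon entropy in bits of a probability array (zeros ignored)."""
    p = p[p > 0]
    return float(-np.sum(p * np.log2(p)))


def entropies(a, b, bins=32):
    """Marginal entropies H(A), H(B) and joint entropy H(A,B) from a joint histogram."""
    joint, _, _ = np.histogram2d(a.ravel(), b.ravel(), bins=bins)
    p_ab = joint / joint.sum()
    return _entropy(p_ab.sum(axis=1)), _entropy(p_ab.sum(axis=0)), _entropy(p_ab)


def mutual_information(a, b, bins=32):
    h_a, h_b, h_ab = entropies(a, b, bins)
    return h_a + h_b - h_ab


def normalized_mutual_information(a, b, bins=32):
    h_a, h_b, h_ab = entropies(a, b, bins)
    return (h_a + h_b) / h_ab
```

The number of histogram bins is a free choice that trades estimation noise against intensity resolution.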

Registration Methods Literature

Among the 15 selected original research articles, seven proposed methods for rigid registration (Table 3). Taimouri's method is an example of a non-rigid registration method that uses the MI to localize depth electrodes in SEEG [17]. This method uses the Insight ToolKit (ITK), an open-source toolkit for medical image registration and segmentation [36]. The method was validated using images of ten patients, five males and five females, aged between eight and seventeen years. To measure accuracy, the electrodes were photographed with a digital camera, and the electrodes localized in the resulting MRI were projected onto the 2D photograph. Accuracy was defined as the error between the electrode positions in the photograph and those from the 3D MRI. Although the method yielded a low average localization error of 1.31 ± 0.69 mm for 385 electrodes, the accuracy measurement did not account for the projection error, so it is uncertain whether the error comes from the registration or from the projection procedure.

Table 3.

Articles about multi-modal image registration methods.

Stieglitz et al. [18] used non-rigid transformation with MRI and CT to evaluate whether the registration improved the localization of SEEG electrodes. For rigid registration, Stieglitz et al. used BrainLab iPlan, a radiosurgery planning software, and for non-rigid registration, they used the Automated Elastic image Fusion (AEF) and the Guided Elastic image Fusion (GEF) algorithms. The non-rigid registration improved the fusion, but the results for electrode localization were unclear, mainly because of the evaluation method, which measured localization performance using the distance between the electrodes and the brain cortex rather than the real position of the electrodes in the brain. Another cause of the unclear results was the lack of images for validation, a limitation also present in other studies that used intracranial electrodes, such as the methods proposed by Dykstra et al. [21] and Hermes et al. [20], which used the MI and the NMI, respectively, for the registration.

The MI and the NMI also have drawbacks derived from Shannon's entropy, which assumes that voxel values are independent, an assumption that does not hold for images. Changing the sampling strategy mitigates this problem by selecting less dependent voxels. On this premise, Freiman et al. [15] proposed in 2011 a sampling method based on the curvelet transform, an extension of the wavelet transform that is more suitable for two- and three-dimensional signals. Freiman et al. combined this sampling method with rigid registration and validated it on the Retrospective Image Registration Evaluation (RIRE) database, a public database for validating registration methods. The method yielded a lower Target Registration Error (TRE) than methods based on uniform and gradient-based sampling.

Some authors have used metrics other than those above, as in the multi-modal registration procedure proposed by Panda et al. [16]. Their method uses Evolutionary Rigid-Body Docking (ERBD), a docking technique that predicts the optimal configuration between two molecules. This registration treats the input images as molecules and minimizes the energy between them using evolutionary algorithms. The ERBD composes the energy from two metrics: the interaction energy and the MI. Panda et al. implemented this method in MATLAB, and it only works with 2D images, which limited its validation to a few slices of one patient from the RIRE dataset. For clinical use, the method requires further development and validation for 3D images.

Another example of a registration procedure using a metric other than the MI is the method proposed by Rühaak et al. [19]. This method uses the Normalized Gradient Field (NGF) distance, which assumes that two images are similar if their intensity changes (edges) occur at the same locations. Rühaak et al. implemented this method in C++ and validated it with images from 20 Deep Brain Stimulation (DBS) studies, measuring the error against manual registrations performed by three experts, and obtained an average registration error of 0.95 ± 0.29 mm. The method of Rühaak et al. could be applied to other problems involving external objects, such as SEEG, and its validation procedure could be used to evaluate registration performance. However, this validation could introduce subjective error because it relies on manual registration.
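The NGF idea can be sketched as follows, assuming NumPy, central-difference gradients from `np.gradient`, and an edge parameter `eps` that damps gradients caused by noise (its value here is an arbitrary choice). Following the common formulation, the distance is one minus the squared cosine of the angle between normalized gradients, so edges count as aligned regardless of whether intensity rises or falls across them. This matters for CT and MRI, where the same tissue boundary can have opposite contrast.

```python
import numpy as np


def normalized_gradient_field(img, eps=1e-2):
    """Gradient vectors divided by a regularized norm; eps damps weak (noisy) gradients."""
    gy, gx = np.gradient(np.asarray(img, dtype=float))
    norm = np.sqrt(gx ** 2 + gy ** 2 + eps ** 2)
    return gy / norm, gx / norm


def ngf_distance(fixed, moving, eps=1e-2):
    """Mean of 1 - cos^2 of the angle between normalized gradients (smaller is better aligned)."""
    fy, fx = normalized_gradient_field(fixed, eps)
    my, mx = normalized_gradient_field(moving, eps)
    cos_like = fy * my + fx * mx
    return float(np.mean(1.0 - cos_like ** 2))
```

Because the distance uses only local gradients, it avoids the joint histogram that MI-based metrics must estimate.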

The disadvantages of the MI are its susceptibility to convergence to local minima and the complexity of computing the joint density. Smoothing the cost function by using different kernels in the Parzen-window probability estimation reduces the first problem [10]. The second problem can be reduced by using a different similarity metric, such as the NGF, which measures the angle between the gradients of the two images at each location. The NGF is faster to compute and performs similarly to MI-based registration methods [37,38].

3.1.2. Image Merging Methods

Merging is the process of combining the aligned images. Merging methods are classified into three categories: Multi-Scale Decomposition (MSD), non-Multi-Scale Decomposition (non-MSD), and hybrid methods [39]. This section presents an overview of these methods. Table 4 lists the articles found on merging methods.

Table 4.

Articles about multi-modal image merging.

Multi-Scale Decomposition Methods

These methods transform an input image into a multi-scale representation [30]. The most common techniques are the pyramidal and transform domain methods [39]. Pyramidal methods decompose the image into a set of images at different scales and then combine the decomposed images using fusion rules; the Laplacian Pyramid Transform (LPT) and the Local Laplacian Filter (LLF) are examples. In contrast, transform domain methods decompose an image into lower-resolution approximations and fuse the resulting coefficients into a single image. Examples of these techniques are: (i) the Discrete Wavelet Transform (DWT); (ii) the Discrete Ripple Transform (DRT); and (iii) the Non-Subsampled Shearlet Transform (NSST).
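A pyramidal fusion rule can be illustrated with a simplified Laplacian pyramid. This sketch is a stand-in for the LPT under stated assumptions: it uses 2 × 2 block averaging and pixel replication instead of Gaussian filtering, requires image sides divisible by $2^{\text{levels}}$, keeps the detail coefficient with the larger magnitude at each level, and averages the coarsest level.

```python
import numpy as np


def downsample(img):
    """2x2 block average (image sides must be even)."""
    h, w = img.shape
    return img.reshape(h // 2, 2, w // 2, 2).mean(axis=(1, 3))


def upsample(img):
    """Pixel replication back to twice the size."""
    return np.repeat(np.repeat(img, 2, axis=0), 2, axis=1)


def laplacian_pyramid(img, levels):
    """Detail (band-pass) images from fine to coarse, then the coarsest approximation."""
    pyramid, current = [], np.asarray(img, dtype=float)
    for _ in range(levels):
        smaller = downsample(current)
        pyramid.append(current - upsample(smaller))
        current = smaller
    pyramid.append(current)
    return pyramid


def reconstruct(pyramid):
    """Invert laplacian_pyramid by upsampling and adding details back."""
    current = pyramid[-1]
    for detail in reversed(pyramid[:-1]):
        current = upsample(current) + detail
    return current


def fuse_laplacian(a, b, levels=3):
    """Choose-max-magnitude rule for details, averaging for the coarsest level."""
    pa, pb = laplacian_pyramid(a, levels), laplacian_pyramid(b, levels)
    fused = [np.where(np.abs(da) >= np.abs(db), da, db)
             for da, db in zip(pa[:-1], pb[:-1])]
    fused.append(0.5 * (pa[-1] + pb[-1]))
    return reconstruct(fused)
```

Keeping the larger-magnitude detail at each scale is what lets edges from both modalities survive in the fused image.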

An example of an MSD method is the technique proposed by Patel et al. [24], which combines the DWT and the DRT and is robust to discontinuities in edges and contours. This method consists of the following steps:

- Taking input images, specifically CT and MRI, and aligning them to the same magnitude;

- Applying the DWT to the aligned images;

- Obtaining the wavelet coefficient map from the aligned images;

- Applying the DRT to the wavelet coefficient maps and obtaining an initial fused image;

- Applying the inverse DWT and obtaining the fused image.

Patel et al. validated the algorithm against DWT methods using two pairs of MRI and CT images. The validation used the Root-Mean-Squared Error (RMSE) and the Peak-Signal-to-Noise Ratio (PSNR), and Patel et al.'s method exhibited the best performance, with a PSNR of 20.56 and an RMSE of 572.23, whereas the DWT obtained a PSNR and an RMSE of 17.27 and 1219.30, respectively [24]. The method requires further validation with more images, for example from a public multi-modal dataset.
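For reference, a generic DWT fusion baseline can be sketched as a single-level 2D Haar transform, with an averaging rule for the approximation band and a choose-max rule for the detail bands. The wavelet, number of levels, and fusion rules here are assumptions; this is not the DWT and DRT method of Patel et al.

```python
import numpy as np

SQRT2 = np.sqrt(2.0)


def haar_dwt2(img):
    """Single-level orthonormal 2D Haar transform (even image sides assumed)."""
    a = np.asarray(img, dtype=float)
    lo = (a[:, 0::2] + a[:, 1::2]) / SQRT2      # horizontal low-pass
    hi = (a[:, 0::2] - a[:, 1::2]) / SQRT2      # horizontal high-pass
    ll = (lo[0::2] + lo[1::2]) / SQRT2
    lh = (lo[0::2] - lo[1::2]) / SQRT2
    hl = (hi[0::2] + hi[1::2]) / SQRT2
    hh = (hi[0::2] - hi[1::2]) / SQRT2
    return ll, (lh, hl, hh)


def haar_idwt2(ll, details):
    """Inverse of haar_dwt2."""
    lh, hl, hh = details
    lo = np.empty((ll.shape[0] * 2, ll.shape[1]))
    hi = np.empty_like(lo)
    lo[0::2], lo[1::2] = (ll + lh) / SQRT2, (ll - lh) / SQRT2
    hi[0::2], hi[1::2] = (hl + hh) / SQRT2, (hl - hh) / SQRT2
    out = np.empty((lo.shape[0], lo.shape[1] * 2))
    out[:, 0::2], out[:, 1::2] = (lo + hi) / SQRT2, (lo - hi) / SQRT2
    return out


def fuse_dwt(a, b):
    """Average the approximation bands; keep the larger-magnitude detail coefficient."""
    ll_a, det_a = haar_dwt2(a)
    ll_b, det_b = haar_dwt2(b)
    details = tuple(np.where(np.abs(x) >= np.abs(y), x, y) for x, y in zip(det_a, det_b))
    return haar_idwt2(0.5 * (ll_a + ll_b), details)
```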

Extensions of the DWT are used to reduce some of its problems, such as discontinuities in the edges and contours of two-dimensional signals [40]. One of these extensions is the NSST, which better preserves multi-dimensional signal features [40]. Building on this, Padma Ganasala and Vinod Kumar developed a framework for multi-modal medical image fusion in 2014 [25]. This framework uses the NSST to obtain the high- and low-frequency components of an image, combines them separately, and then reconstructs the fused image using the inverse NSST.

Ganasala and Kumar tested their method using nine pairs of MRI and CT images from patients with a severe cerebrovascular accident. The method was compared against four fusion algorithms: (i) image fusion in the Intensity-Hue-Saturation (IHS) space; (ii) Non-Subsampled Contourlet Transform (NSCT) fusion; (iii) image fusion using the NSST in the IHS color space; and (iv) image fusion based on the NSCT in the IHS color space. The results showed that the method of Ganasala and Kumar achieved the best performance in the MI, with a score of 2.99. In comparison, the closest method in performance was the NSCT technique in the IHS color space, with an MI of 84.28.

Another example of the NSST for image fusion is the method proposed by Nair et al. in 2021 [29]. Nair et al. used a non-rigid registration with B-splines to align the input images and implemented the algorithm in MATLAB. The method, which includes a denoising step, was validated with objective and subjective performance metrics against a fusion method without denoising, using ten pairs of grayscale images and four pairs of color images. The study demonstrated that denoising the images improves the fusion at the cost of increased execution time.

Another MSD example that addresses some drawbacks of the DWT is the method proposed by Na et al. [28], which uses a Guided Filter (GF). The GF takes two inputs, an input image and a guidance image, and produces an output image that keeps the information of the input image while adopting some characteristics of the guidance image. Na et al. used the DWT to decompose the images and the GF to refine the weight maps, using the approximation coefficients of the inputs as the guidance image. They validated the procedure with 15 pairs of CT and MRI images against two DWT methods, one using choose-max fusion and the other using intuitionistic fuzzy inference fusion, with the standard deviation, average gradient, and edge strength as metrics. The method of Na et al. performed better on all image pairs. However, it requires further validation, because the comparison was performed individually for every image pair without any statistical analysis of the image sample.
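The guided filter itself can be sketched with the widely used box-filter formulation, in which the output is locally a linear function of the guidance image. This assumes NumPy, grayscale float images, and illustrative values for the window radius `r` and regularization `eps`; how Na et al. built and combined their weight maps is not reproduced.

```python
import numpy as np


def box_filter(img, r):
    """Mean over a (2r+1) x (2r+1) window via a summed-area table, edge-padded."""
    k = 2 * r + 1
    padded = np.pad(np.asarray(img, dtype=float), r, mode="edge")
    sat = np.pad(padded.cumsum(axis=0).cumsum(axis=1), ((1, 0), (1, 0)))
    window_sum = sat[k:, k:] - sat[:-k, k:] - sat[k:, :-k] + sat[:-k, :-k]
    return window_sum / (k * k)


def guided_filter(guide, src, r=2, eps=1e-3):
    """Filter src so that it follows the edges of guide (local linear model)."""
    g = np.asarray(guide, dtype=float)
    p = np.asarray(src, dtype=float)
    mean_g, mean_p = box_filter(g, r), box_filter(p, r)
    cov_gp = box_filter(g * p, r) - mean_g * mean_p
    var_g = box_filter(g * g, r) - mean_g ** 2
    a = cov_gp / (var_g + eps)          # local slope
    b = mean_p - a * mean_g             # local offset
    return box_filter(a, r) * g + box_filter(b, r)
```

A larger `eps` flattens the local slopes and smooths more, while a smaller `eps` makes the output follow the guidance image's edges more closely.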

Non-Multi-Scale Decomposition Methods

The non-MSDs are the methods outside the MSD category. Some examples of these are the sub-space and the artificial neural network methods [30,39].

Sub-space methods project a high-dimensional input image into a lower-dimensional space, which improves efficiency because lower-dimensional data require less memory to manipulate. A well-known sub-space technique is Principal Component Analysis (PCA) [30].
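One common PCA-based fusion rule, sketched here for two registered grayscale images of equal size, weights each image by the normalized components of the principal eigenvector of their 2 × 2 intensity covariance matrix, so the image contributing more variance receives the larger weight. This particular rule is an illustrative assumption rather than a method from the reviewed articles.

```python
import numpy as np


def pca_fusion(a, b):
    """Fuse two registered images with weights from the principal eigenvector."""
    data = np.stack([np.ravel(a), np.ravel(b)]).astype(float)   # 2 x n samples
    eigvals, eigvecs = np.linalg.eigh(np.cov(data))             # ascending order
    v = np.abs(eigvecs[:, np.argmax(eigvals)])                  # sign is arbitrary
    weights = v / v.sum()
    return weights[0] * a + weights[1] * b, weights
```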

Artificial Neural Network (ANN) methods process information with mathematical models inspired by biological neural networks; their main advantage is the capability to predict, analyze, and infer information from a dataset [39].

The Pulse-Coupled Neural Network (PCNN), developed by Eckhorn based on the cat visual cortex, is one of the most widely used ANNs [41,42].

One example of the PCNN in image fusion is the technique developed by Xu et al., who combined the PCNN with quantum-behaved particle swarm optimization [26]. Xu et al. validated the method against five fusion techniques using five pairs of MRI and CT images: (i) the Laplacian pyramid; (ii) the PCNN; (iii) the dual-channel PCNN; (iv) the PCNN with differential evolution algorithms; and (v) the dual-channel PCNN with particle swarm optimization (PSO) evolutionary learning. In the evaluation, Xu et al.'s technique performed better in the MI and the Structural Similarity Index (SSIM), scoring 1.72 and 0.77, respectively.
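The firing behavior that PCNN-based fusion exploits can be sketched with a commonly used simplified PCNN model. Each pixel is a neuron whose feeding input is its intensity and whose linking input comes from neighboring firings; it fires when its internal activity exceeds a decaying dynamic threshold. The parameter values, the 3 × 3 linking weights, and the rule of keeping, at each pixel, the source image that fired more often are illustrative assumptions; the quantum-behaved PSO that Xu et al. used to tune the network is not reproduced.

```python
import numpy as np

# 3 x 3 linking weights (inverse-distance style); an illustrative choice.
LINK_WEIGHTS = np.array([[0.707, 1.0, 0.707],
                         [1.0,   0.0, 1.0],
                         [0.707, 1.0, 0.707]])


def linking_input(fired, weights=LINK_WEIGHTS):
    """Weighted sum of the previous firings of each pixel's 8 neighbors."""
    h, w = fired.shape
    padded = np.pad(fired, 1)
    total = np.zeros((h, w))
    for dy in range(3):
        for dx in range(3):
            total += weights[dy, dx] * padded[dy:dy + h, dx:dx + w]
    return total


def pcnn_fire_counts(stimulus, iterations=30, beta=0.2,
                     alpha_theta=0.2, v_theta=20.0, v_link=1.0):
    """Number of times each pixel fires in a simplified PCNN."""
    s = np.asarray(stimulus, dtype=float)
    fired = np.zeros_like(s)
    theta = np.ones_like(s)
    counts = np.zeros_like(s)
    for _ in range(iterations):
        link = v_link * linking_input(fired)
        activity = s * (1.0 + beta * link)                       # U = F (1 + beta L)
        theta = np.exp(-alpha_theta) * theta + v_theta * fired   # decay, recharge after firing
        fired = (activity > theta).astype(float)
        counts += fired
    return counts


def pcnn_fuse(a, b, **params):
    """Per pixel, keep the source whose neuron fired more often."""
    counts_a = pcnn_fire_counts(a, **params)
    counts_b = pcnn_fire_counts(b, **params)
    return np.where(counts_a >= counts_b, a, b)
```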

Hybrid Methods

Lastly, hybrid methods combine MSD and non-MSD techniques. They usually preserve features better but require longer computing times. An example is the approach of Kong et al., who used the NSST with the PCNN to fuse MRI and CT images [43]. This method was compared against the DWT, NSST, PCNN, and NSCT techniques using the RMSE, PSNR, MI, and SSIM. Kong et al.'s method obtained the best performance, scoring 1.64, 43.84, 0.91, and 0.99 in the RMSE, PSNR, MI, and SSIM, respectively. However, its execution time of 6.279 seconds was higher than that of the fastest method, the DWT, at 0.317 seconds.

Kavitha proposed a hybrid method in 2010 that uses the Integer Wavelet Transform (IWT) and neuro-fuzzy algorithms to combine the wavelet coefficient maps [27]. The technique was tested against DWT methods using two pairs of MRI and CT images. Kavitha's method performed better, scoring 13.005 and 0.051 in the entropy (EN) and MI, respectively, compared with DWT scores of 5.514 and 0.046, respectively.

3.2. Performance Metrics

No technique is perfect, and performance depends on properties such as the imaging modality. For this reason, techniques must be compared and validated in specific scenarios. This validation can be subjective or objective: subjective validation depends on human visual evaluation, while objective validation uses quantitative performance metrics. Objective metrics are classified into two types: metrics based on the features of the fused image and metrics based on signal distortion [44]. The first category measures the transfer of feature information into the fused image; examples are the MI, the SSIM, and the image quality index of Wang and Bovik (Q) [45]. The second category measures the distortion in the fused image, for instance, the RMSE, the Standard Deviation (STD), the PSNR, and the Entropy (EN).

3.2.1. Entropy

The EN estimates the amount of information present in an image and is calculated with Equation (2), where $p(x)$ is the probability of intensity value $x$ in the image and $N$ is the number of possible intensity values (256 for an eight-bit image depth) [39,44]:

$$EN = -\sum_{x=0}^{N-1} p(x)\log_2 p(x) \quad (2)$$
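Equation (2) can be computed directly from an image histogram. This sketch assumes NumPy and an integer-valued image with `levels` possible intensity values (256 for eight-bit data).

```python
import numpy as np


def image_entropy(img, levels=256):
    """Shannon entropy in bits of an integer image with `levels` gray values."""
    counts = np.bincount(np.asarray(img, dtype=np.int64).ravel(), minlength=levels)
    p = counts / counts.sum()
    p = p[p > 0]                 # terms with p(x) = 0 contribute nothing
    return float(-np.sum(p * np.log2(p)))
```

A constant image has zero entropy, and an eight-bit image in which every gray value is equally frequent reaches the maximum of 8 bits.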

3.2.2. Mutual Information

This metric estimates the amount of information transferred from a source image into the fused image. The MI is computed with Equation (3) [39]:

$$MI_{AF} = H_A + H_F - H_{AF} \quad (3)$$

where $A$ is the input image, $F$ is the fused image, $H_{AF}$ is the joint entropy between $A$ and $F$, and $H_A$ and $H_F$ are the marginal entropies of $A$ and $F$, respectively.

3.2.3. Structural Similarity Index

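This metric measures the preservation of structural information by separating the comparison into three components: luminance $l$, contrast $c$, and structure $s$. It is calculated using Equation (4) [44]:

$$SSIM(A,F) = \frac{(2\mu_A\mu_F + C_1)(2\sigma_{AF} + C_2)}{(\mu_A^2 + \mu_F^2 + C_1)(\sigma_A^2 + \sigma_F^2 + C_2)} \quad (4)$$

where $A$ is the input image, $F$ is the fused image, $\mu_A$ and $\mu_F$ are the means of $A$ and $F$, respectively, $\sigma_A$ and $\sigma_F$ are the standard deviations of $A$ and $F$, respectively, $\sigma_{AF}$ is the covariance between $A$ and $F$, and $C_1$ and $C_2$ are constants that avoid instability when $\mu_A^2 + \mu_F^2$ or $\sigma_A^2 + \sigma_F^2$ is close to zero [46].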

3.2.4. Universal Image Quality Index

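The Universal Image Quality Index (UIQI) measures the structural similarity between a source image and the fused image [45]. It is the special case of the SSIM with $C_1 = C_2 = 0$ and is computed with Equation (5) [46], with the symbols defined as for Equation (4):

$$Q(A,F) = \frac{4\sigma_{AF}\,\mu_A\mu_F}{(\sigma_A^2 + \sigma_F^2)(\mu_A^2 + \mu_F^2)} \quad (5)$$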
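Equations (4) and (5) can be sketched as follows, assuming NumPy and computing the statistics once over the whole image. The widely used SSIM implementation instead evaluates Equation (4) in local windows and averages the results. The default constants $C_1 = (0.01L)^2$ and $C_2 = (0.03L)^2$, with $L$ the dynamic range of the pixel values, follow the usual convention rather than anything stated in this review.

```python
import numpy as np


def ssim_global(a, f, c1=None, c2=None, data_range=255.0):
    """Single-window SSIM between a source image a and a fused image f (Eq. (4))."""
    a = np.asarray(a, dtype=float)
    f = np.asarray(f, dtype=float)
    if c1 is None:
        c1 = (0.01 * data_range) ** 2
    if c2 is None:
        c2 = (0.03 * data_range) ** 2
    mu_a, mu_f = a.mean(), f.mean()
    var_a, var_f = a.var(), f.var()
    cov_af = np.mean((a - mu_a) * (f - mu_f))
    num = (2 * mu_a * mu_f + c1) * (2 * cov_af + c2)
    den = (mu_a ** 2 + mu_f ** 2 + c1) * (var_a + var_f + c2)
    return float(num / den)


def uiqi_global(a, f):
    """Universal Image Quality Index: SSIM with C1 = C2 = 0 (Eq. (5))."""
    return ssim_global(a, f, c1=0.0, c2=0.0)
```

Doubling every pixel of an image leaves its structure intact but changes luminance and contrast, so the UIQI drops from 1 to 16/25 = 0.64.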

3.2.5. Root-Mean-Squared Error

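This metric is the square root of the mean squared difference between the input and fused images [44]. For 2D images, the RMSE is computed with Equation (6):

$$RMSE = \sqrt{\frac{1}{MN}\sum_{x=1}^{M}\sum_{y=1}^{N}\left[A(x,y) - F(x,y)\right]^2} \quad (6)$$

where $A(x,y)$ and $F(x,y)$ are the pixel values of the input and fused images, respectively, at position $(x,y)$, and $M$ and $N$ are the width and height of the image, respectively.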

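3.2.6. Standard Deviation

The STD measures the spread of the fused image's pixel values around their mean $\mu_F$ and is computed with Equation (7):

$$STD = \sqrt{\frac{1}{MN}\sum_{x=1}^{M}\sum_{y=1}^{N}\left[F(x,y) - \mu_F\right]^2} \quad (7)$$

where $F(x,y)$ is the pixel value of the fused image at position $(x,y)$, and $M$ and $N$ are the width and height of the image, respectively.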

3.2.7. Peak-Signal-to-Noise Ratio

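The PSNR is computed from the RMSE with Equation (8) [44]:

$$PSNR = 10\log_{10}\left(\frac{L^2}{RMSE^2}\right) \quad (8)$$

where $L$ is the maximum possible pixel value (255 for an eight-bit image) and the RMSE is computed over the $M \times N$ pixels of the image with Equation (6), $M$ and $N$ being the width and height of the image, respectively.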

The method suggested by Maurer et al. is also a powerful tool for validating fusion algorithms [47]. It requires fiducial markers to calculate the Fiducial Registration Error (FRE) and the Target Registration Error (TRE). The FRE measures the distance between corresponding fiducial markers after the registration, while the TRE measures the distance between corresponding points other than the fiducials used for the registration. Measuring these metrics requires databases with fiducial points, such as the RIRE project [12] or the Non-rigid Image Registration Evaluation Project (NIREP) [48]. Some authors have created automatic methods to quantify registration accuracy that do not rely on such databases. An example is the method proposed by Hauler et al. [22], who used feature detectors to obtain matching points in the images and computed the Euclidean distances between those points. Hauler et al.'s method with the Harris detector yielded results comparable to those obtained with the RIRE dataset, with the drawback of being insensitive to large misregistrations.
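The FRE and TRE can be illustrated with a sketch that fits a rigid transform to corresponding fiducials with the standard SVD-based least-squares solution and then measures residual distances. It assumes known point correspondences and coordinates in millimeters; reporting the FRE as a root-mean-square value over the fiducials is a common convention assumed here. Target points must not be used in the fit, otherwise the TRE would be biased downward.

```python
import numpy as np


def fit_rigid(src, dst):
    """Least-squares rotation r and translation t with dst ~= src @ r.T + t."""
    src, dst = np.asarray(src, dtype=float), np.asarray(dst, dtype=float)
    c_src, c_dst = src.mean(axis=0), dst.mean(axis=0)
    u, _, vt = np.linalg.svd((src - c_src).T @ (dst - c_dst))
    d = np.eye(src.shape[1])
    d[-1, -1] = np.sign(np.linalg.det(vt.T @ u.T))   # avoid reflections
    r = vt.T @ d @ u.T
    return r, c_dst - r @ c_src


def transform(r, t, points):
    return np.asarray(points, dtype=float) @ r.T + t


def fre(r, t, src_fiducials, dst_fiducials):
    """Root-mean-square distance between corresponding fiducials after registration."""
    d = transform(r, t, src_fiducials) - np.asarray(dst_fiducials, dtype=float)
    return float(np.sqrt(np.mean(np.sum(d ** 2, axis=1))))


def tre(r, t, src_targets, dst_targets):
    """Distance at each target point that was not used to fit the transform."""
    d = transform(r, t, src_targets) - np.asarray(dst_targets, dtype=float)
    return np.linalg.norm(d, axis=1)
```

With noisy fiducial localizations the FRE becomes nonzero, and a small FRE does not by itself guarantee a small TRE, which is why separate target points are needed.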

3.3. Image Fusion Tools

Image fusion is a complex procedure that requires implementing both the registration and merging steps. For this reason, software tools have been created to facilitate its implementation.

Table 5 shows the main tools in image fusion based on the investigation performed by Keszei et al. [35].

Table 5.

Image fusion tools.

4. Discussion

This study provided a literature review on multi-modal image fusion, covering articles published between January 2010 and April 2021. The main techniques in image fusion and registration were described, and an overview of the main validation metrics and tools used for image fusion was given.

Regarding the first research question, “What are the existing methods of brain image fusion using CT and MRI?”, the procedure was divided into two steps: registration and merging. This review found developments in the use of registration to localize electrodes in SEEG. However, the lack of validation data with external objects was a common problem [17,18,20,21]. This problem led to the use of manual registration as a gold-standard for validation, which can produce unclear results because of possible subjective errors. Using datasets such as the RIRE or NIREP for validation can also lead to unclear results, because their images contain no external objects. Regarding image merging, some methods were based on machine learning algorithms; however, no developments were found that specifically address multi-modal imaging with external objects.

Concerning the second research question, “What are the tools used to fuse multi-modal brain images?”, twenty-two tools were found for the registration and fusion of medical images, sixteen of which were open source. The most-used platforms for developing these tools were ITK and MATLAB, which were used by seven of the tools found (Table 5).

As regards the third research question, “What are the metrics used to validate and compare image fusion methods?”, the two main validation approaches were the use of target/fiducial points and the use of specific quantitative metrics. The first approach evaluates the registration by using target points to compute the error between the registered moving image and a gold-standard. The need for target points makes this error difficult to compute, especially in problems such as SEEG that lack public gold-standard databases.

Among the articles found (Table 2), the image merging methods were validated using metrics based on the resulting features and on signal distortion. These metrics measure how information is transferred to the fused images. In contrast, registration validation measures the spatial error of the registered image, which commonly requires a gold-standard. To the authors' knowledge, the only gold-standard datasets for multi-modal brain images are the RIRE and NIREP datasets, which cannot be used in specific conditions such as Intracranial Electroencephalography (iEEG) because they contain no implanted electrodes. Some authors have used manually registered images [19] or digital photographs taken during electrode implantation in iEEG to obtain a 3D model of the electrode positions and used it as the gold-standard [17,20].

Finally, this review documented the main techniques and tools for the multi-modal fusion of brain images, specifically in SEEG exams. There is a lack of merging methods for this specific scenario, and there are problems in the validation methodology for the registration procedure, caused by the lack of a gold-standard dataset with external objects.

5. Conclusions

Image fusion is a powerful tool used in the localization of epileptogenic tissue in SEEG [17,18,20,21]. The methods for image fusion in SEEG focus mainly on the registration procedure, while implementations of the merging step are lacking. This review also revealed that the most common platforms were MATLAB and ITK, which were used in nine of the articles found.

The literature search did not reveal a standard validation method when external objects were present. This lack of standardization complicates the comparison of different fusion techniques, which also causes unclear accuracy results related to electrode localization. Based on this, it is necessary to create a standard validation method for medical images that requires the localization of external objects, including a gold-standard dataset, similar to the RIRE or NIREP.

Author Contributions

Conceptualization, J.P., C.M. and M.T.; methodology, J.P., C.M. and M.T.; validation, J.P., C.M. and M.T.; formal analysis, J.P.; investigation, J.P.; resources, J.P.; data curation, J.P.; writing—original draft preparation, J.P.; writing—review and editing, J.P., C.M., M.T. and A.H.; visualization, J.P.; supervision, C.M., M.T. and A.H.; project administration, C.M. and M.T.; funding acquisition, M.T. All authors read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Acknowledgments

Claudia Mazo holds a grant from Enterprise Ireland (EI) and from the European Union's Horizon 2020 research and innovation program under the Marie Skłodowska-Curie Grant Agreement No. 713654.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| SEEG | Stereotactic Electroencephalography |
| MRI | Magnetic Resonance Imaging |
| CT | Computed Tomography |
| AED | Anti-Epileptic Drugs |
| MSD | Mean Squared Difference |
| DBS | Deep Brain Stimulation |
| CC | Correlation Coefficient |
| NCC | Normalized Correlation Coefficient |
| MI | Mutual Information |
| NMI | Normalized Mutual Information |
| ITK | Insight ToolKit |
| RIRE | Retrospective Image Registration Evaluation Project |
| TRE | Target Registration Error |
| ERBD | Evolutionary Rigid-Body Docking |
| NGF | Normalized Gradient Field distance |
| MSD | Multi-scale Decomposition Methods |
| LPT | Laplacian Pyramid Transform |
| DWT | Discrete Wavelet Transform |
| DRT | Discrete Ripplet Transform |
| NSST | Non-Subsampled Shearlet Transform |
| RMSE | Root Mean Square Error |
| PSNR | Peak Signal-to-Noise Ratio |
| HUE | Intensity-Hue-Saturation |
| NSCT | Non-Subsampled Contourlet Transform |
| Non-MSD | Non-Multi-Scale Decomposition |
| PCA | Principal Component Analysis |
| ANN | Artificial Neural Network |
| PCNN | Pulse-Coupled Neural Network |
| SSIM | Structural Similarity Index |
| IWT | Integer Wavelet Transform |
| EN | Entropy |
| STD | Standard Deviation |
| NIREP | Non-rigid Image Registration Evaluation Project |

References

1. Fiest, K.M.; Sauro, K.M.; Wiebe, S.; Patten, S.B.; Kwon, C.S.; Dykeman, J.; Pringsheim, T.; Lorenzetti, D.L.; Jetté, N. Prevalence and incidence of epilepsy: A systematic review and meta-analysis of international studies. Neurology 2017, 88, 296–303.

2. Brodie, M.; Barry, S.; Bamagous, G.; Norrie, J.; Kwan, P. Patterns of treatment response in newly diagnosed epilepsy. Neurology 2012, 78.

3. World Health Organization. Epilepsy: A Public Health Imperative; World Health Organization: Geneva, Switzerland, 2019.

4. Baumgartner, C.; Koren, J.P.; Britto-Arias, M.; Zoche, L.; Pirker, S. Presurgical epilepsy evaluation and epilepsy surgery. F1000Research 2019, 8.

5. Minotti, L.; Montavont, A.; Scholly, J.; Tyvaert, L.; Taussig, D. Indications and limits of stereoelectroencephalography (SEEG). Neurophysiol. Clin. 2018, 48.

6. Jayakar, P.; Gotman, J.; Harvey, A.S.; Palmini, A.; Tassi, L.; Schomer, D.; Dubeau, F.; Bartolomei, F.; Yu, A.; Kršek, P.; et al. Diagnostic utility of invasive EEG for epilepsy surgery: Indications, modalities, and techniques. Epilepsia 2016, 57.

7. Iida, K.; Otsubo, H. Stereoelectroencephalography: Indication and Efficacy. Neurol. Med. Chir. 2017, 57, 375–385.

8. Gross, R.E.; Boulis, N.M. (Eds.) Neurosurgical Operative Atlas: Functional Neurosurgery, 3rd ed.; Thieme/AANS: New York, NY, USA, 2018.

9. Perry, M.S.; Bailey, L.; Freedman, D.; Donahue, D.; Malik, S.; Head, H.; Keator, C.; Hernandez, A. Coregistration of multimodal imaging is associated with favourable two-year seizure outcome after paediatric epilepsy surgery. Epileptic Disord. 2017, 19, 40–48.

10. Xu, R.; Chen, Y.W.; Tang, S.; Morikawa, S.; Kurumi, Y. Parzen-Window Based Normalized Mutual Information for Medical Image Registration. IEICE Trans. 2008, 91-D.

11. Oliveira, F.P.M.; Tavares, J.M.R.S. Medical image registration: A review. Comput. Methods Biomech. Biomed. Eng. 2014, 17.

12. West, J.; Fitzpatrick, J.M.; Wang, M.Y.; Dawant, B.M.; Maurer, C.R.J.; Kessler, R.M.; Maciunas, R.J.; Barillot, C.; Lemoine, D.; Collignon, A.; et al. Comparison and Evaluation of Retrospective Intermodality Brain Image Registration Techniques. J. Comput. Assist. Tomogr. 1997, 21, 554–568.

13. Kitchenham, B.; Charters, S. Guidelines for Performing Systematic Literature Reviews in Software Engineering. 2007. Available online: https://userpages.uni-koblenz.de/~laemmel/esecourse/slides/slr.pdf (accessed on 1 June 2021).

14. Page, M.J.; Moher, D.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. PRISMA 2020 explanation and elaboration: Updated guidance and exemplars for reporting systematic reviews. BMJ 2021, 372.

15. Freiman, M.; Werman, M.; Joskowicz, L. A curvelet-based patient-specific prior for accurate multi-modal brain image rigid registration. Med. Image Anal. 2011, 15, 125–132.

16. Panda, R.; Agrawal, S.; Sahoo, M.; Nayak, R. A novel evolutionary rigid body docking algorithm for medical image registration. Swarm Evol. Comput. 2017, 33.

17. Taimouri, V.; Akhondi-Asl, A.; Tomas-Fernandez, X.; Peters, J.M.; Prabhu, S.P.; Poduri, A.; Takeoka, M.; Loddenkemper, T.; Bergin, A.M.R.; Harini, C.; et al. Electrode localization for planning surgical resection of the epileptogenic zone in pediatric epilepsy. Int. J. Comput. Assist. Radiol. Surg. 2014, 9, 91–105.

18. Stieglitz, L.H.; Ayer, C.; Schindler, K.; Oertel, M.F.; Wiest, R.; Pollo, C. Improved Localization of Implanted Subdural Electrode Contacts on Magnetic Resonance Imaging With an Elastic Image Fusion Algorithm in an Invasive Electroencephalography Recording. Oper. Neurosurg. 2014, 10, 506–513.

19. Rühaak, J.; Derksen, A.; Heldmann, S.; Hallmann, M.; Meine, H. Accurate CT-MR image registration for deep brain stimulation: A multi-observer evaluation study. In Proceedings of the International Society for Optics and Photonics, Orlando, FL, USA, 20 March 2015.

20. Hermes, D.; Miller, K.J.; Noordmans, H.J.; Vansteensel, M.J.; Ramsey, N.F. Automated electrocorticographic electrode localization on individually rendered brain surfaces. J. Neurosci. Methods 2010, 185, 293–298.

21. Dykstra, A.R.; Chan, A.M.; Quinn, B.T.; Zepeda, R.; Keller, C.J.; Cormier, J.; Madsen, J.R.; Eskandar, E.N.; Cash, S.S. Individualized localization and cortical surface-based registration of intracranial electrodes. NeuroImage 2012, 59, 3563–3570.

22. Hauler, F.; Furtado, H.; Jurisic, M.; Polanec, S.H.; Spick, C.; Laprie, A.; Nestle, U.; Sabatini, U.; Birkfellner, W. Automatic quantification of multi-modal rigid registration accuracy using feature detectors. Phys. Med. Biol. 2016, 61, 5198.

23. van Rooijen, B.D.; Backes, W.H.; Schijns, O.E.; Colon, A.; Hofman, P.A. Brain Imaging in Chronic Epilepsy Patients After Depth Electrode (Stereoelectroencephalography) Implantation: Magnetic Resonance Imaging or Computed Tomography? Neurosurgery 2013, 73.

24. Patel, J.M.; Parikh, M.C. Medical image fusion based on Multi-Scaling (DRT) and Multi-Resolution (DWT) technique. In Proceedings of the 2016 International Conference on Communication and Signal Processing (ICCSP), Melmaruvathur, Tamilnadu, India, 6–8 April 2016.

25. Ganasala, P.; Kumar, V. Multimodality medical image fusion based on new features in NSST domain. Biomed. Eng. Lett. 2014, 4.

26. Xu, X.; Shan, D.; Wang, G.; Jiang, X. Multimodal medical image fusion using PCNN optimized by the QPSO algorithm. Appl. Soft Comput. 2016, 46.

27. Kavitha, C.T.; Chellamuthu, C. Multimodal medical image fusion based on Integer Wavelet Transform and Neuro-Fuzzy. In Proceedings of the 2010 International Conference on Signal and Image Processing, Chennai, India, 15–17 December 2010; pp. 296–300.

28. Na, Y.; Zhao, L.; Yang, Y.; Ren, M. Guided filter-based images fusion algorithm for CT and MRI medical images. IET Image Process. 2018, 12, 138–148.

29. Nair, R.R.; Singh, T. MAMIF: Multimodal adaptive medical image fusion based on B-spline registration and non-subsampled shearlet transform. Multimed. Tools Appl. 2021, 80.

30. Mitchell, H.B. Image Fusion: Theories, Techniques and Applications; Springer: Berlin/Heidelberg, Germany, 2010.

31. Klein, S.; Staring, M.; Murphy, K.; Viergever, M.A.; Pluim, J.P.W. elastix: A Toolbox for Intensity-Based Medical Image Registration. IEEE Trans. Med. Imaging 2010, 29.

32. El-Gamal, F.E.Z.A.; Elmogy, M.; Atwan, A. Current trends in medical image registration and fusion. Egypt. Inform. J. 2016, 17, 99–124.

33. Sotiras, A.; Davatzikos, C.; Paragios, N. Deformable medical image registration: A survey. IEEE Trans. Med. Imaging 2013, 32, 1153–1190.

34. Andrade, N.; Faria, F.A.; Cappabianco, F.A.M. A Practical Review on Medical Image Registration: From Rigid to Deep Learning Based Approaches. In Proceedings of the 2018 31st SIBGRAPI Conference on Graphics, Patterns and Images (SIBGRAPI), Parana, Brazil, 29 October–1 November 2018.

35. Keszei, A.P.; Berkels, B.; Deserno, T.M. Survey of Non-Rigid Registration Tools in Medicine. J. Digit. Imaging 2017, 30.

36. Yoo, T.; Ackerman, M.; Lorensen, W.; Schroeder, W.; Chalana, V.; Aylward, S.; Metaxas, D.; Whitaker, R. Engineering and Algorithm Design for an Image Processing API: A Technical Report on ITK—The Insight Toolkit. Stud. Health Technol. Inform. 2002, 85.

37. Rühaak, J.; König, L.; Hallmann, M.; Papenberg, N.; Heldmann, S.; Schumacher, H.; Fischer, B. A fully parallel algorithm for multimodal image registration using normalized gradient fields. In Proceedings of the 2013 IEEE 10th International Symposium on Biomedical Imaging, San Francisco, CA, USA, 7–11 April 2013.

38. Haber, E.; Modersitzki, J. Intensity Gradient Based Registration and Fusion of Multi-modal Images. In Medical Image Computing and Computer-Assisted Intervention—MICCAI 2006; Larsen, R., Nielsen, M., Sporring, J., Eds.; Springer: Berlin/Heidelberg, Germany, 2006.

39. Dogra, A.; Goyal, B.; Agrawal, S. From Multi-Scale Decomposition to Non-Multi-Scale Decomposition Methods: A Comprehensive Survey of Image Fusion Techniques and Its Applications. IEEE Access 2017, 5, 16040–16067.

40. Easley, G.; Labate, D.; Lim, W.Q. Sparse directional image representations using the discrete shearlet transform. Appl. Comput. Harmon. Anal. 2008, 25.

41. Eckhorn, R. Neural mechanisms of scene segmentation: Recordings from the visual cortex suggest basic circuits for linking field models. IEEE Trans. Neural Netw. 1999, 10, 464–479.

42. Wang, Z.; Wang, S.; Zhu, Y.; Ma, Y. Review of Image Fusion Based on Pulse-Coupled Neural Network. Arch. Comput. Methods Eng. 2016, 23.

43. Kong, W.; Liu, J. Technique for image fusion based on nonsubsampled shearlet transform and improved pulse-coupled neural network. Opt. Eng. 2013, 52.

44. Du, J.; Li, W.; Lu, K.; Xiao, B. An overview of multi-modal medical image fusion. Neurocomputing 2016, 215, 3–20.

45. Wang, Z.; Bovik, A.C. A universal image quality index. IEEE Signal Process. Lett. 2002, 9.

46. Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

47. Maurer, C.R., Jr.; McCrory, J.J.; Fitzpatrick, J.M. Estimation of accuracy in localizing externally attached markers in multimodal volume head images. In Medical Imaging 1993: Image Processing; Loew, M.H., Ed.; SPIE: Bellingham, WA, USA, 1993; Volume 1898.

48. Christensen, G.E.; Geng, X.; Kuhl, J.G.; Bruss, J.; Grabowski, T.J.; Pirwani, I.A.; Vannier, M.W.; Allen, J.S.; Damasio, H. Introduction to the Non-rigid Image Registration Evaluation Project (NIREP). In Biomedical Image Registration; Pluim, J.P.W., Likar, B., Gerritsen, F.A., Eds.; Springer: Berlin/Heidelberg, Germany, 2006.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).