HP-SFC: Hybrid Protection Mechanism Using Source Routing for Service Function Chaining

Abstract

1. Introduction

- A novel SF labeling technique for traffic steering and rerouting in SFC that reduces the flow table occupancy in the software switches and Service Function Forwarders (SFFs) and improves network capacity;

- A new, simplified flow-entry update process for traffic rerouting that sends update messages in parallel and requires fewer flow-entry updates, thereby reducing recovery delay and control overhead;

- A hybrid protection approach that combines the merits of local and global protection to balance end-to-end transmission delay against the cost of protection, measured as the additional resources consumed in network entities;

- A comprehensive evaluation and analysis of HP-SFC in Mininet using two distinct topologies, representing a data center network and an enterprise network.
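To make the flow-table claim in the first contribution concrete, the Python sketch below uses a deliberately simplified counting model that is an assumption of this illustration, not the paper's analysis: per-chain steering is assumed to need one SFF entry for every (chain, SF hop) pair, while SF labeling needs one steering entry per distinct SF label that all chains reuse. The chain definitions are taken from the SFC table in this document; `per_chain_entries` and `per_label_entries` are hypothetical helpers.

```python
# Simplified, hypothetical counting model (not the paper's evaluation):
# - per-chain steering: one SFF steering entry per (chain, SF hop) pair
# - SF labeling: one steering entry per distinct SF label, shared by all chains

# SF order of the eight chains listed in the SFC table of this document.
CHAINS = {
    "SFC1": ["SF1"],
    "SFC2": ["SF1", "SF2", "SF3"],
    "SFC3": ["SF1", "SF2"],
    "SFC4": ["SF2", "SF4"],
    "SFC5": ["SF3"],
    "SFC6": ["SF2", "SF3", "SF4"],
    "SFC7": ["SF2", "SF3"],
    "SFC8": ["SF3", "SF1"],
}


def per_chain_entries(chains: dict[str, list[str]]) -> int:
    """Every SF hop of every chain needs its own steering entry."""
    return sum(len(sfs) for sfs in chains.values())


def per_label_entries(chains: dict[str, list[str]]) -> int:
    """Each SF label needs one steering entry, reused by all chains."""
    return len({sf for sfs in chains.values() for sf in sfs})


if __name__ == "__main__":
    print("per-chain steering entries:", per_chain_entries(CHAINS))
    print("per-label steering entries:", per_label_entries(CHAINS))
```

For these eight chains the model gives 16 per-chain entries against 4 per-label entries. The gap widens as more chains reuse the same SFs, which is the intuition behind the occupancy reduction, although real entry counts also depend on forwarding and label-removal entries that this model ignores.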

2. Failure Recovery in SFC and Challenges

2.1. SFC Creation and Operation

2.2. Segment Routing

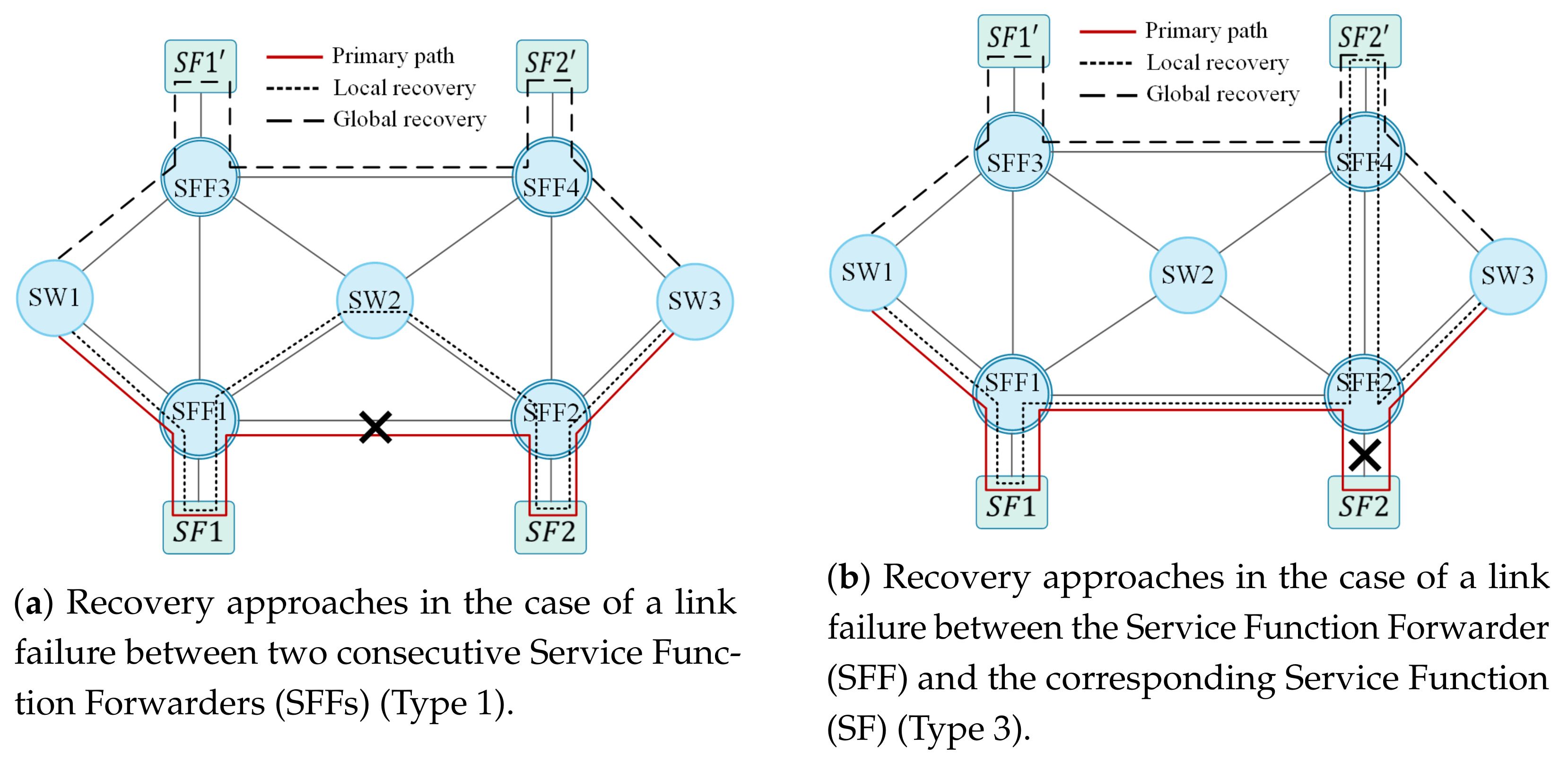

2.3. Limitations of Conventional Failure Recovery Mechanisms in SFC

2.4. Review of Software-Defined Failure Recovery Studies

3. Hybrid Protection Mechanism for SFC

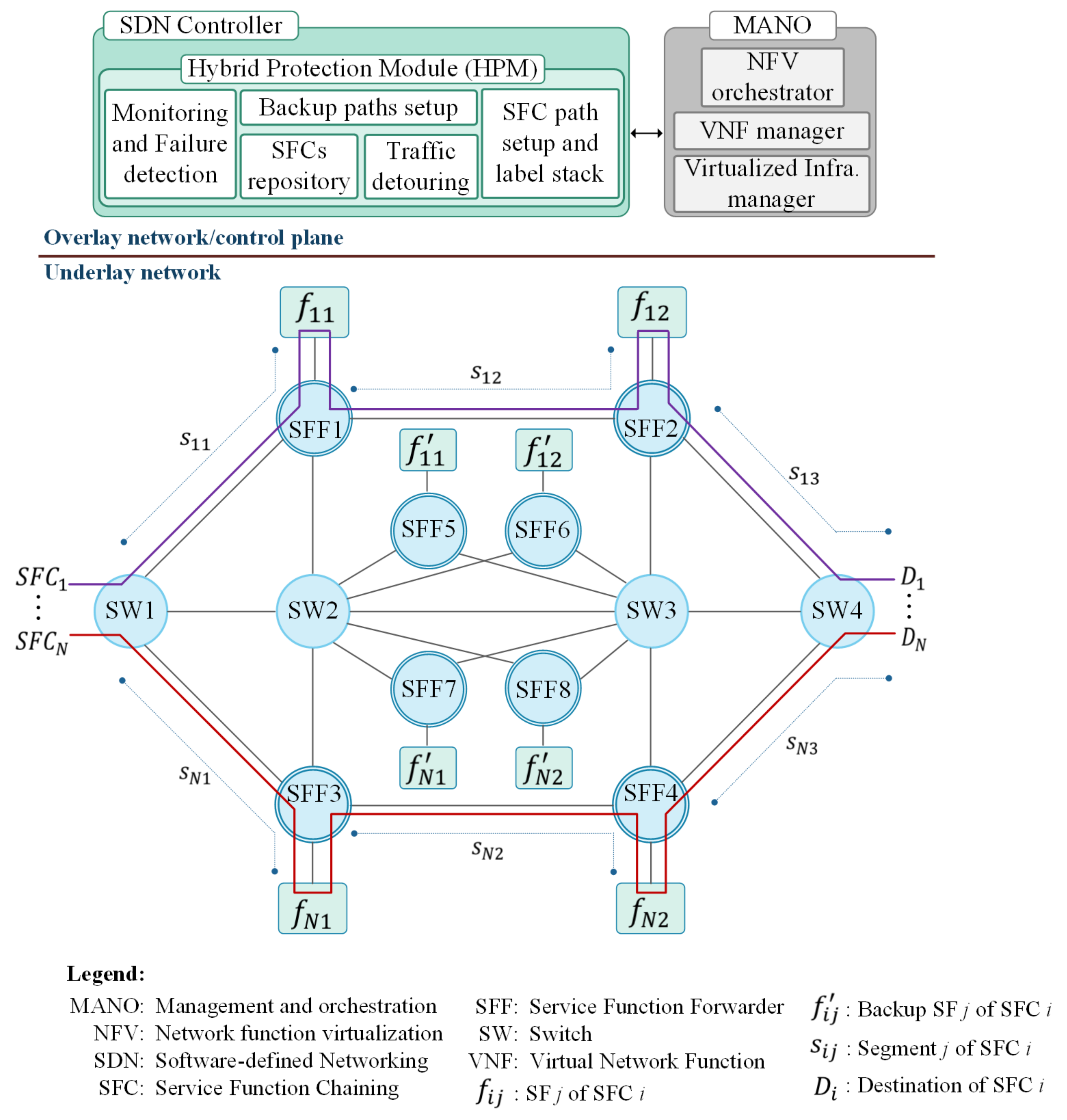

3.1. System Model and Architecture

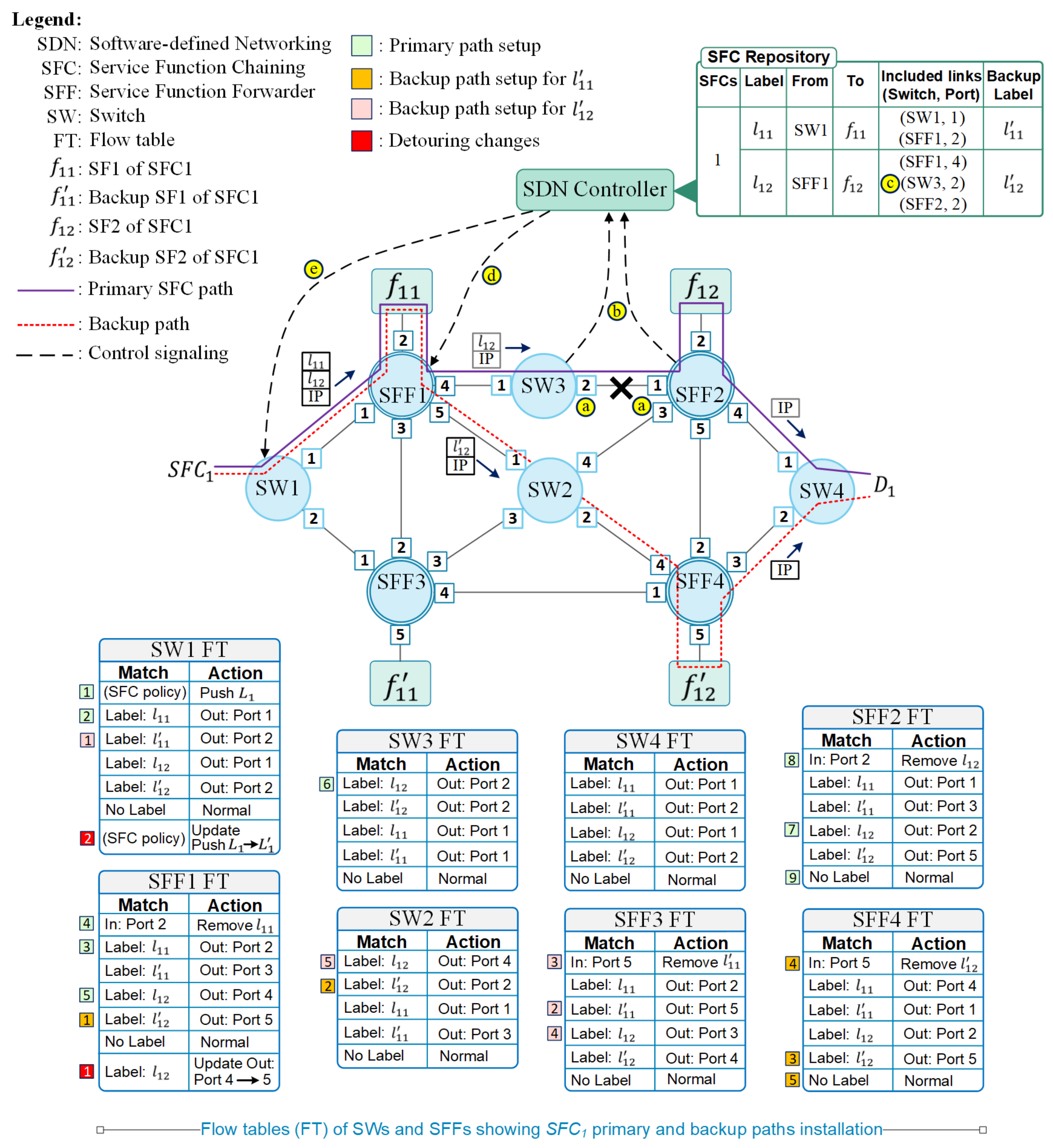

3.2. SFC Paths Installation

3.3. Traffic Detouring in the Case of Failure

1. SW1 flow entry to add an MPLS header carrying the label stack to the incoming packet;

2. SW1 flow entry to match the top label in the stack and forward the packet to SFF1;

3. SFF1 flow entry to match the top label in the stack and forward the packet to the corresponding SF;

4. SFF1 flow entry to remove the top label from the stack of the packet that is received back from that SF;

5. SFF1 flow entry to match the new top label in the stack and forward the packet towards SFF2;

6. SFF2 flow entry to match the top label in the stack and forward the packet to the corresponding SF;

7. SFF2 flow entry to remove the top label from the stack of the packet that is received back from that SF;

8. SFF2 flow entry to match the destination IP address of the packet and forward the packet to SW4, as no label remains in the stack.
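The eight entries above can be traced hop by hop with the Python sketch below. The label values (`101`, `102`), the SF names `SF_A` and `SF_B`, and the destination address `10.0.0.4` are hypothetical placeholders, since the original symbols are not recoverable here; intermediate switches between SFF1 and SFF2 are omitted, and the stack is modelled as a list whose first element is the top label.

```python
from dataclasses import dataclass, field

# Hypothetical values: the document's label and SF symbols were lost in extraction.
LABEL_SF_A = 101  # label of the SF attached to SFF1 (called SF_A here)
LABEL_SF_B = 102  # label of the SF attached to SFF2 (called SF_B here)

ATTACHED_SF = {"SFF1": ("SF_A", LABEL_SF_A), "SFF2": ("SF_B", LABEL_SF_B)}
NEXT_SFF = {LABEL_SF_B: "SFF2"}   # entry 5: top label -> SFF hosting that SF
IP_ROUTES = {"10.0.0.4": "SW4"}   # entry 8: hypothetical destination address


@dataclass
class Packet:
    dst_ip: str
    labels: list[int] = field(default_factory=list)  # labels[0] is the top label


def run_primary_path(dst_ip: str) -> tuple[list[str], Packet]:
    pkt = Packet(dst_ip)
    path = ["SW1"]
    pkt.labels = [LABEL_SF_A, LABEL_SF_B]  # entry 1: SW1 pushes the label stack
    node = "SFF1"                          # entry 2: SW1 matches top label -> SFF1
    while True:
        path.append(node)
        sf, label = ATTACHED_SF[node]
        if pkt.labels[0] != label:
            raise ValueError(f"{node} has no entry for label {pkt.labels[0]}")
        path += [sf, node]                 # entries 3/6: to the SF and back
        pkt.labels.pop(0)                  # entries 4/7: pop the top label on return
        if not pkt.labels:                 # entry 8: stack empty, route on dst IP
            path.append(IP_ROUTES[pkt.dst_ip])
            return path, pkt
        node = NEXT_SFF[pkt.labels[0]]     # entry 5: new top label -> next SFF


if __name__ == "__main__":
    path, pkt = run_primary_path("10.0.0.4")
    print(" -> ".join(path))
```

Running it prints `SW1 -> SFF1 -> SF_A -> SFF1 -> SFF2 -> SF_B -> SFF2 -> SW4`. The stack is empty when the packet leaves SFF2, which is why the last hop falls back to destination-IP matching.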

1. SFF1 flow entry to match the label and forward the packet to SW2;

2. SW2 flow entry to match the top label in the stack and forward the packet to SFF4;

3. SFF4 flow entry to match the top label in the stack and forward the packet to the corresponding SF;

4. SFF4 flow entry to remove the top label from the stack of the packet that is received back from that SF;

5. SFF4 flow entry to match the destination IP address of the packet and forward the packet to SW4, as no label remains in the stack.
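The five backup entries can be combined with the first primary-path entries in a small table-driven walk, sketched below in Python. This sketch assumes that the detoured packet still carries the label of the SF it was originally heading to (`LABEL_SF_B`) and that SFF4 maps that label to a backup instance named `SF_B_backup`; these names, the label values, and the `walk` helper are hypothetical.

```python
# Hypothetical labels and node names; the document's own symbols were lost.
LABEL_SF_A, LABEL_SF_B = 101, 102
SF_NODES = {"SF_A", "SF_B_backup"}  # SFs hand packets back to the SFF they came from

# node -> {top label: next hop}; the SFF1 entry for LABEL_SF_B is the detour.
FLOW_TABLES = {
    "SW1": {LABEL_SF_A: "SFF1"},
    "SFF1": {LABEL_SF_A: "SF_A", LABEL_SF_B: "SW2"},  # backup entry 1
    "SW2": {LABEL_SF_B: "SFF4"},                      # backup entry 2
    "SFF4": {LABEL_SF_B: "SF_B_backup"},              # backup entry 3
}
IP_NEXT_HOP = {"SFF4": "SW4"}                         # backup entry 5


def walk(stack: list[int], start: str = "SW1") -> list[str]:
    labels = list(stack)  # copy, so the caller's stack is left untouched
    node, path = start, [start]
    while labels:
        nxt = FLOW_TABLES[node][labels[0]]  # every node matches on the top label only
        path.append(nxt)
        if nxt in SF_NODES:
            labels.pop(0)      # pop when the SF returns the packet (entry 4 at SFF4)
            path.append(node)  # the packet is back at the same SFF
        else:
            node = nxt
    path.append(IP_NEXT_HOP[node])  # backup entry 5: no label left, route on dst IP
    return path


if __name__ == "__main__":
    print(" -> ".join(walk([LABEL_SF_A, LABEL_SF_B])))
```

With the detour entry in place the walk yields `SW1 -> SFF1 -> SF_A -> SFF1 -> SW2 -> SFF4 -> SF_B_backup -> SFF4 -> SW4`. Because every node in this model matches only the top label, SW2 and SFF4 need no per-chain state to carry the rerouted traffic.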

1. The action field of the SFF1 flow entry that matches the label is updated to forward the packets to SW2;

2. The SW1 flow entry that adds the MPLS header is updated with a new label stack, in which the label of the originally assigned SF is replaced by the label of the SF on the backup path.
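The two updates above, together with the parallel sending of update messages mentioned among the contributions, can be sketched in Python as follows. `send_update` is a hypothetical stand-in for a flow-modify message to one switch (a real controller would use its OpenFlow library), the label values are placeholders, and label `202` is assumed to identify the backup SF instance; downstream entries that match the new label are outside this sketch.

```python
import threading
from concurrent.futures import ThreadPoolExecutor

# Hypothetical labels: 102 was reached via SFF2; 202 identifies a backup instance.
LABEL_SF_A, LABEL_SF_B, LABEL_SF_B_BACKUP = 101, 102, 202

# Controller-side view of the relevant entries before the failure.
flow_tables = {
    "SW1": {"push_stack": [LABEL_SF_A, LABEL_SF_B]},   # entry that adds the MPLS header
    "SFF1": {LABEL_SF_A: "SF_A", LABEL_SF_B: "SFF2"},  # entry that matches LABEL_SF_B
}
_lock = threading.Lock()


def send_update(switch: str, match, action) -> str:
    """Hypothetical stand-in for one flow-modify message to a single switch."""
    with _lock:
        flow_tables[switch][match] = action
    return switch


def on_failure() -> list[str]:
    updates = [
        ("SFF1", LABEL_SF_B, "SW2"),                             # update 1: local detour
        ("SW1", "push_stack", [LABEL_SF_A, LABEL_SF_B_BACKUP]),  # update 2: new stack
    ]
    # The two messages target different switches, so they are sent concurrently.
    with ThreadPoolExecutor(max_workers=len(updates)) as pool:
        return list(pool.map(lambda u: send_update(*u), updates))


if __name__ == "__main__":
    print("updated switches:", on_failure())
    print(flow_tables)
```

The function returns `['SFF1', 'SW1']`, that is, only two entries change, and since they belong to different switches neither message has to wait for the other.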

4. HP-SFC Performance Analysis

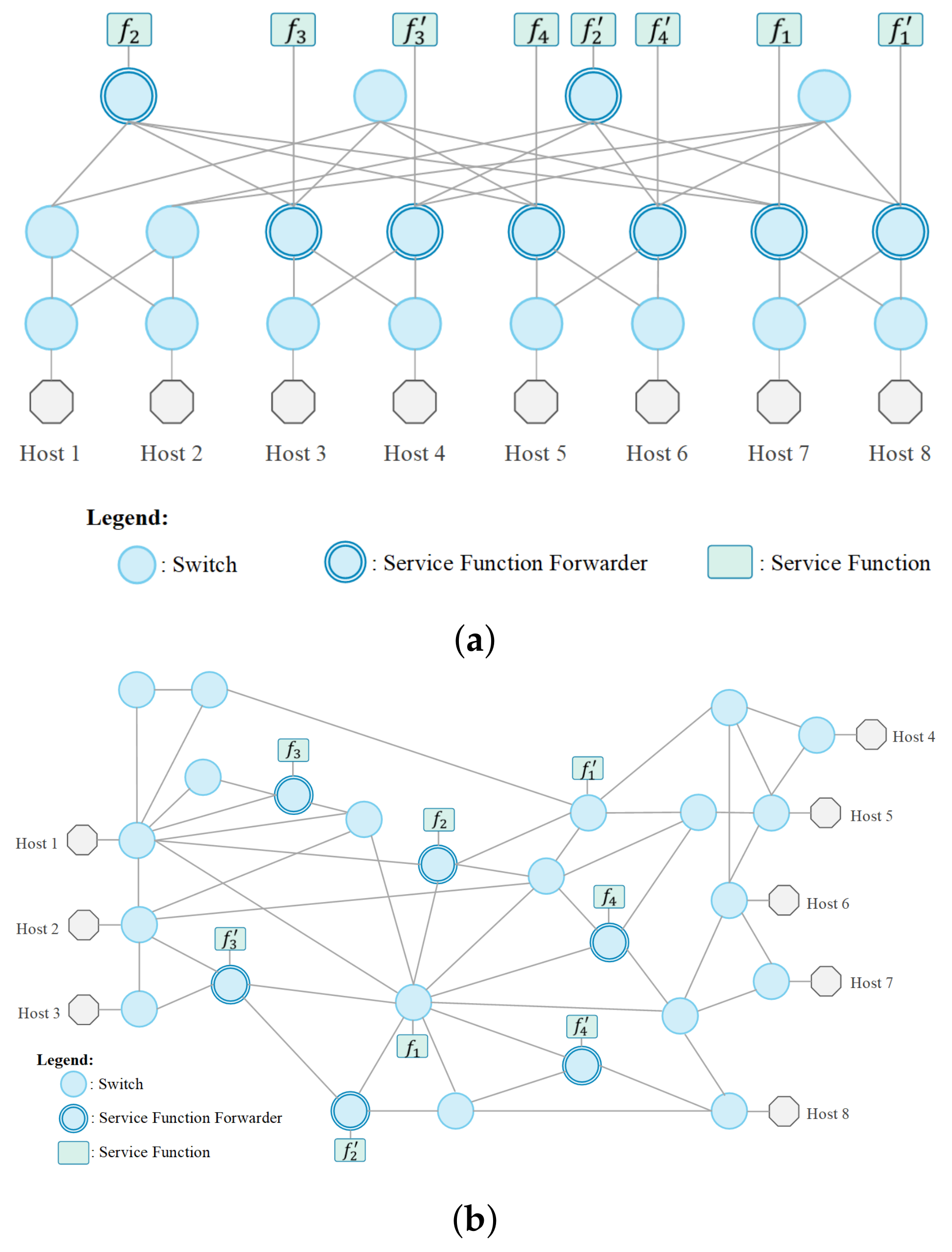

4.1. Implementation

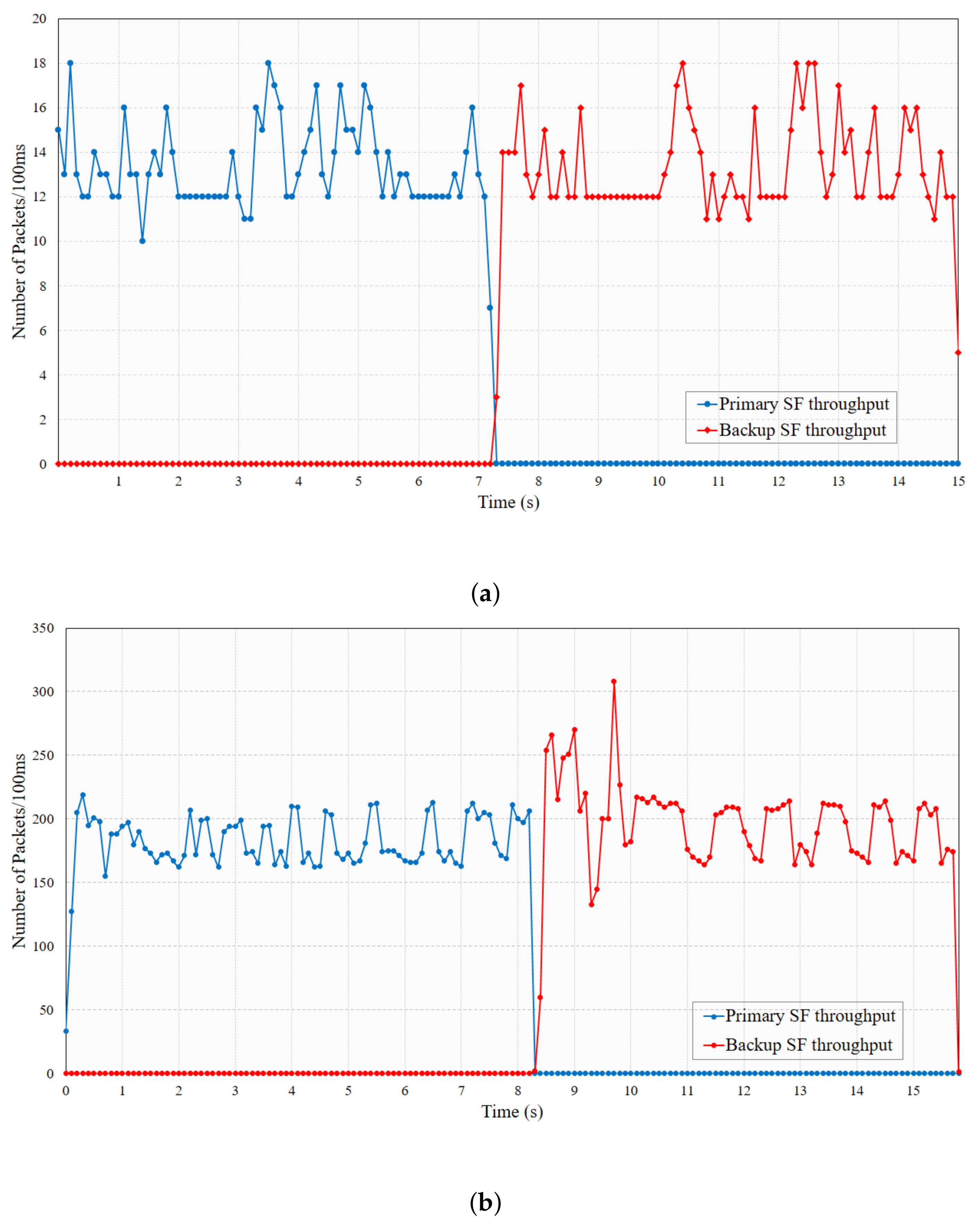

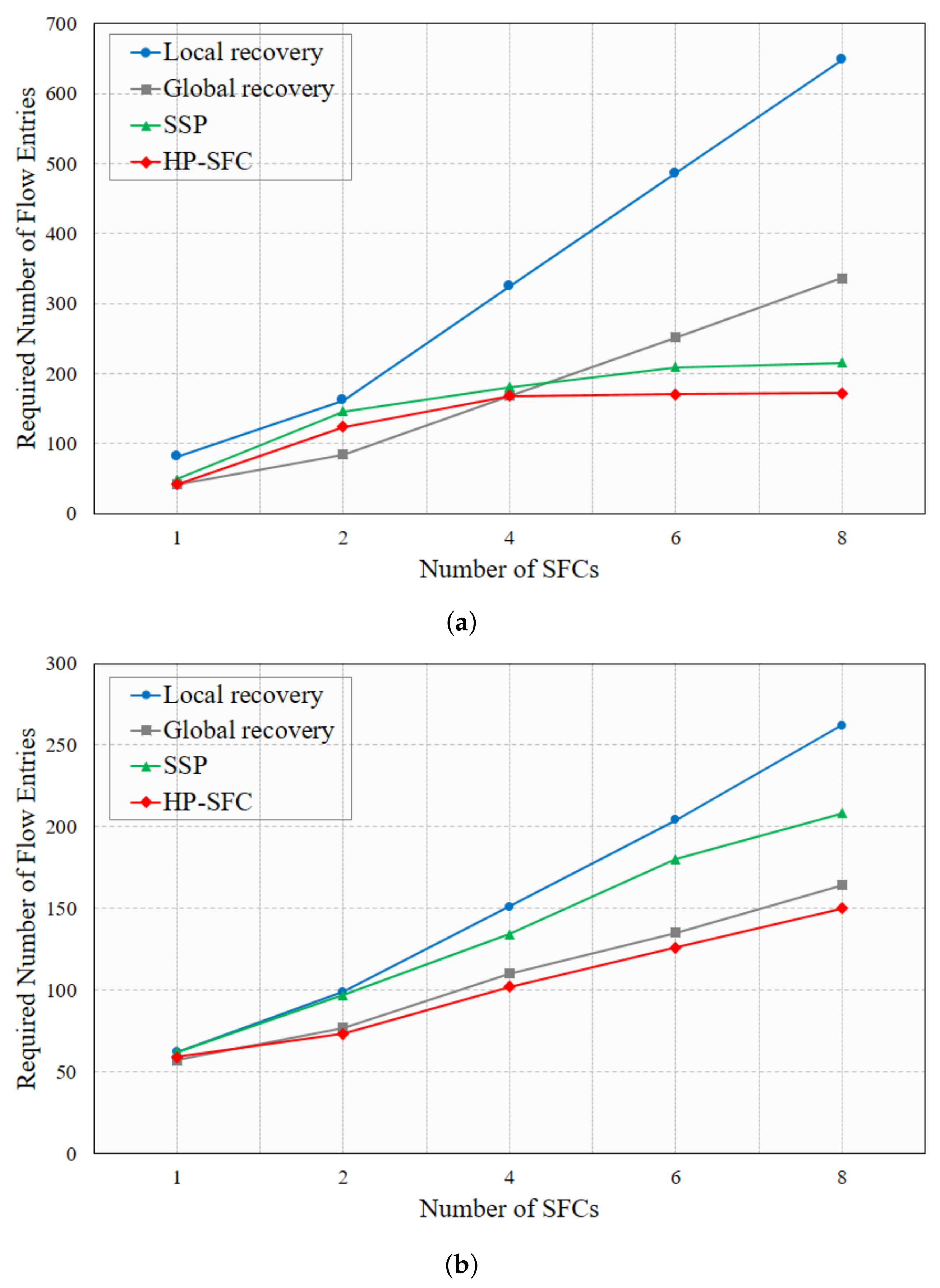

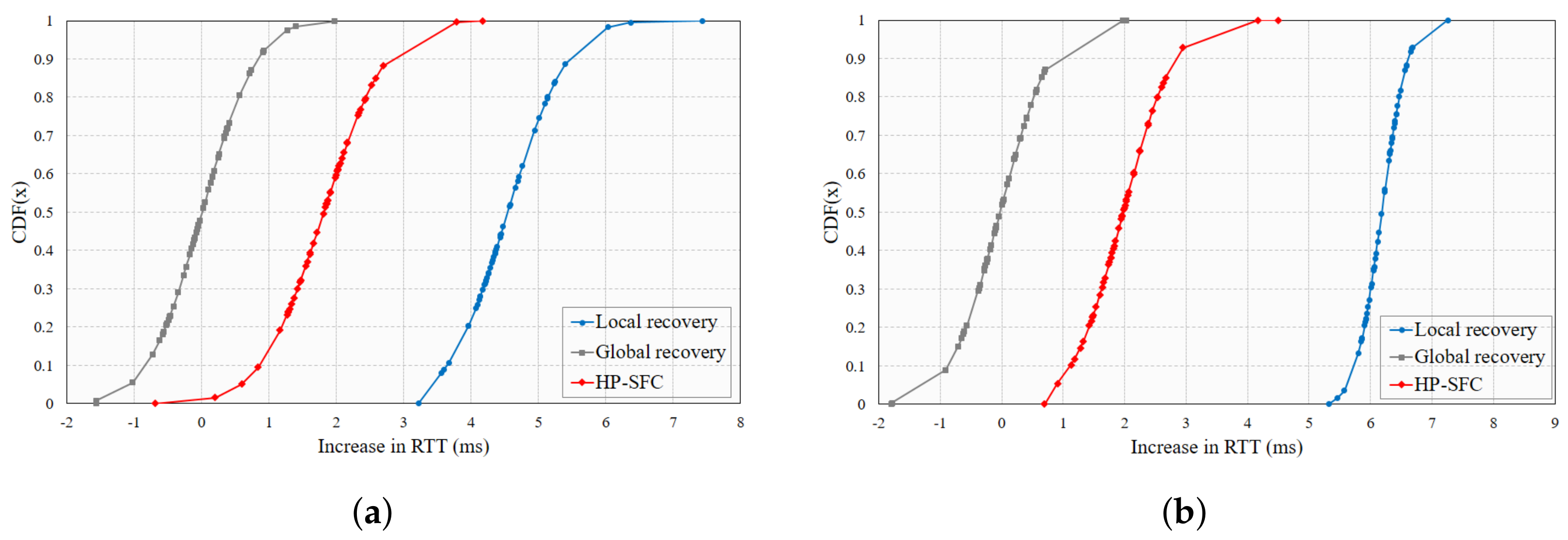

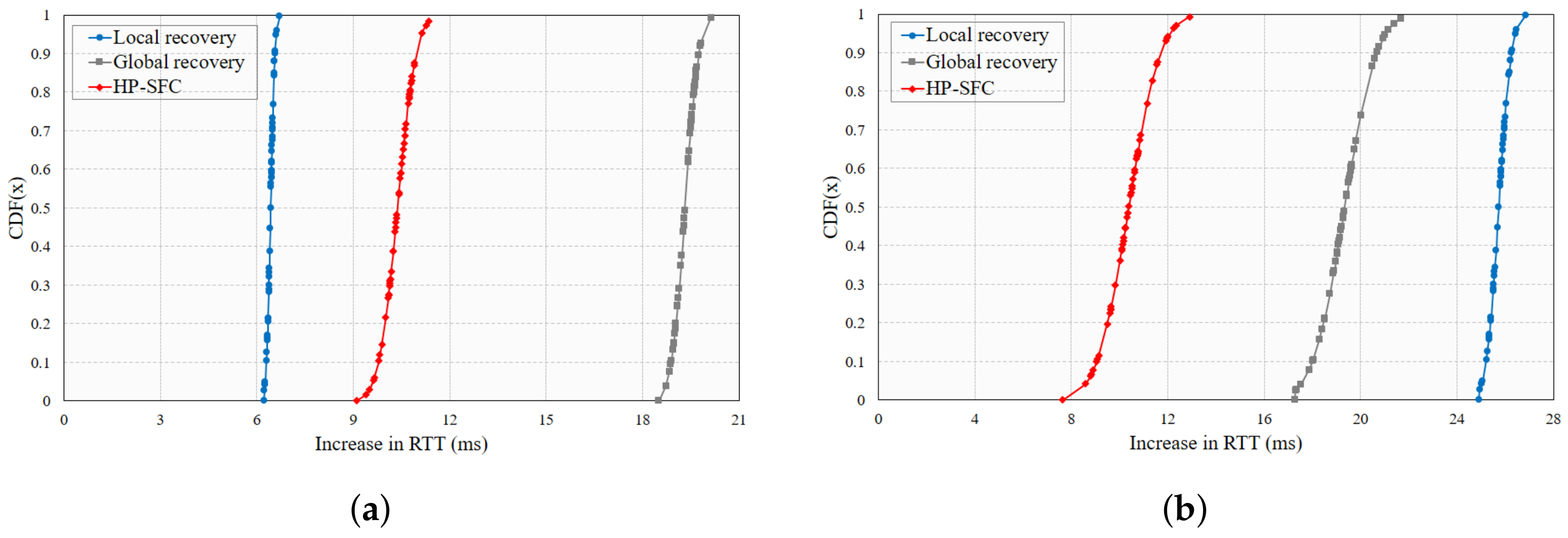

4.2. Results and Evaluation

5. Conclusions and Future Improvements

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| SFC | Source Host | Ordered Service Functions | Destination Host |
|---|---|---|---|
| SFC1 | Host 1 | SF1 | Host 8 |
| SFC2 | Host 8 | SF1 → SF2 → SF3 | Host 3 |
| SFC3 | Host 7 | SF1 → SF2 | Host 1 |
| SFC4 | Host 4 | SF2 → SF4 | Host 6 |
| SFC5 | Host 3 | SF3 | Host 2 |
| SFC6 | Host 2 | SF2 → SF3 → SF4 | Host 5 |
| SFC7 | Host 2 | SF2 → SF3 | Host 4 |
| SFC8 | Host 3 | SF3 → SF1 | Host 7 |
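Under the SF labeling scheme, each chain in the table can be encoded as a label stack pushed at its ingress switch. The Python sketch below assumes a hypothetical one-label-per-SF assignment (`101` to `104`) and derives each chain's stack, plus the list of chains that share each label.

```python
# Chains transcribed from the SFC table above.
SFC_TABLE = [
    ("SFC1", "Host 1", ["SF1"], "Host 8"),
    ("SFC2", "Host 8", ["SF1", "SF2", "SF3"], "Host 3"),
    ("SFC3", "Host 7", ["SF1", "SF2"], "Host 1"),
    ("SFC4", "Host 4", ["SF2", "SF4"], "Host 6"),
    ("SFC5", "Host 3", ["SF3"], "Host 2"),
    ("SFC6", "Host 2", ["SF2", "SF3", "SF4"], "Host 5"),
    ("SFC7", "Host 2", ["SF2", "SF3"], "Host 4"),
    ("SFC8", "Host 3", ["SF3", "SF1"], "Host 7"),
]

# Hypothetical label assignment: one MPLS label per SF.
SF_LABELS = {"SF1": 101, "SF2": 102, "SF3": 103, "SF4": 104}


def label_stack(sfs: list[str]) -> list[int]:
    """Stack pushed at the ingress switch; index 0 is the top (first SF)."""
    return [SF_LABELS[sf] for sf in sfs]


def chains_per_label(table) -> dict[int, list[str]]:
    """Which chains share each SF label under this labeling model."""
    usage: dict[int, list[str]] = {}
    for name, _src, sfs, _dst in table:
        for sf in sfs:
            usage.setdefault(SF_LABELS[sf], []).append(name)
    return usage


if __name__ == "__main__":
    for name, src, sfs, dst in SFC_TABLE:
        print(f"{name}: {src} -> {dst}, push {label_stack(sfs)}")
    print(chains_per_label(SFC_TABLE))
```

For example, SFC2 (SF1 → SF2 → SF3) receives the stack `[101, 102, 103]`, and label `103` is shared by SFC2, SFC5, SFC6, SFC7 and SFC8, so in this model a single steering entry per label serves all of them.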

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Raza, S.M.; Jeong, H.; Kim, M.; Choo, H. HP-SFC: Hybrid Protection Mechanism Using Source Routing for Service Function Chaining. Appl. Sci. 2021, 11, 5245. https://doi.org/10.3390/app11115245
