Decision Making with STPA through Markov Decision Process, a Theoretic Framework for Safe Human-Robot Collaboration

Abstract

1. Introduction

- The introduction of a partitioning of the system’s state and action spaces into groups of safe, unsafe and recovery states, derived from their association with the respective actions, which outlines the system’s current safety level.

- The interpretation of the STPA control structure as a decision-making tool (based on POMDP models) that is used in the operational phase to decide on the most appropriate next action, i.e., the one that will bring the system into a safer state.

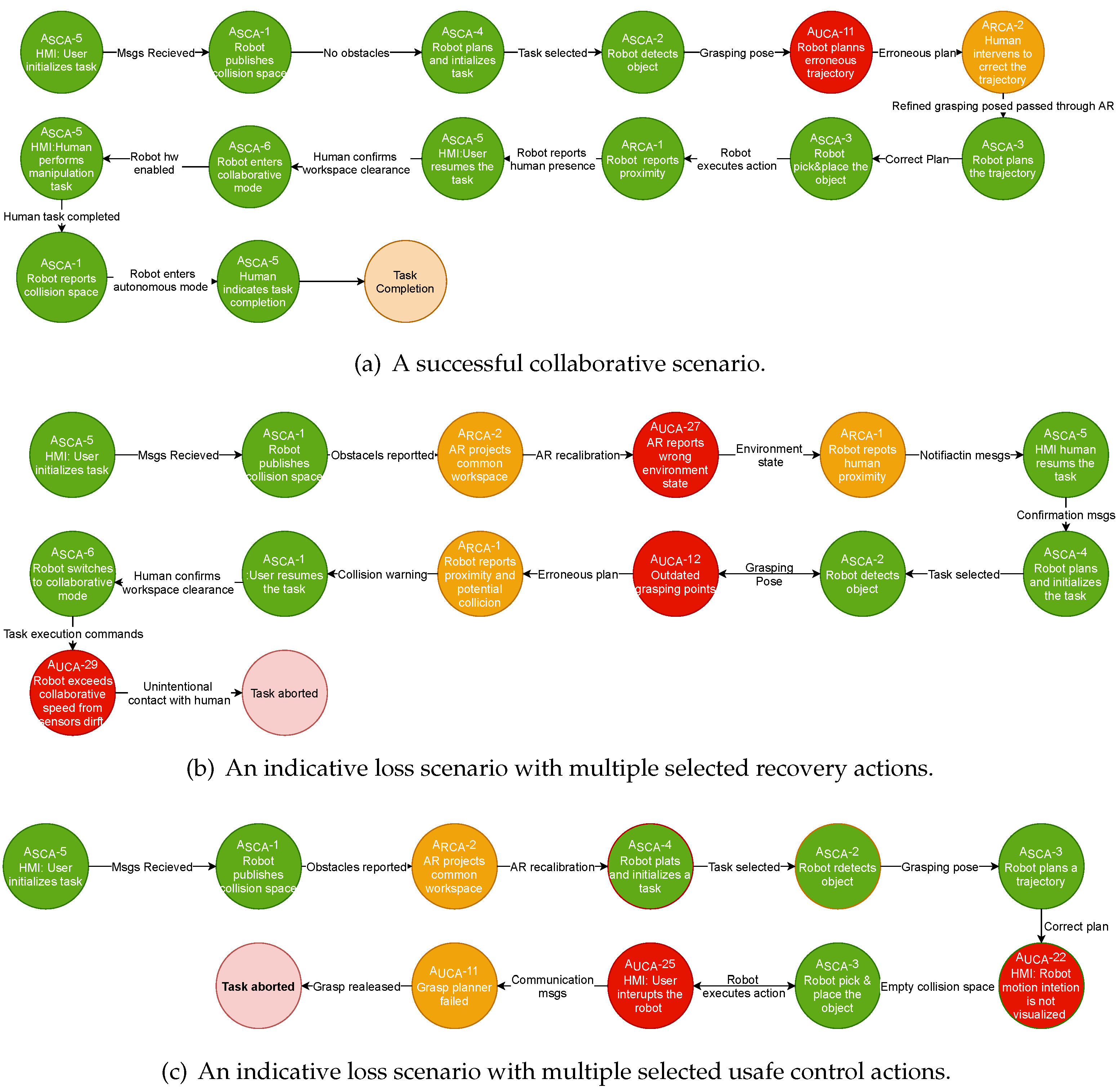

- The application of STPA to a classic human-robot collaboration (HRC) topology and the evaluation of our decision-making method with the formulated policy graph.

2. Related Work

3. Theoretical Background and Problem Formulation

3.1. STPA Principles

- Identify the losses considering all the stakeholders of the system;

- Identify system level hazards and link them to losses;

- Define system level constraints;

- Model the general control structure;

- Identify unsafe control actions;

- Identify causes of unsafe control.

3.2. STPA as Component of a Decision Making Tool

3.2.1. Markov Decision Processes

3.2.2. State Uncertainty in Markov Decision Processes

4. Methodology

4.1. STPA-Related POMDP Formulation

- S denotes the state space, which determines the condition of the environment, the operator, the robot and the peripheral monitoring components at each time t.

- A denotes the action space, which comprises all the system actions (including nominal control actions (CAs) and unsafe control actions (UCAs)) that the ecosystem agents (i.e., robot and operator) are able to perform in order to complete the collaborative task.

- The observation space comprises the outcomes of each of the above-mentioned actions, under the assumption that an observation only partially describes the state of the previous entities.

- The reward function determines the restrictions imposed, by penalizing or favoring specific selected actions (A) during the HRC (S).

- The probability distribution of the initial state expresses the likelihood that the environment, the robot, the operator and the subordinate components are in a specific state s at the initial time.

- The probability distribution of the state transition expresses the probability of moving to a next state, given that the domain is in state s and the system selects an action a.

- The probability distribution of the observations expresses the uncertainty of the model about receiving a specific observation (e.g., feedback), given that the ecosystem is in state s and the prompting model has selected to perform the action a.

- The belief is the probability distribution over the current state s of the ecosystem, which is only partially observable through observations. Since the current state cannot be determined with complete certainty, a belief distribution is maintained that summarizes the history of the selected actions and state transitions of the domain: it gives the probability that, at time t, the operator, the robot, the environment and the subordinate monitoring components are in state s, given the sequence of past actions and observations.
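The belief described in the last item is commonly maintained with a Bayes-filter update: the new belief in a state is proportional to the probability of the received observation in that state, multiplied by the probability of reaching that state from the previous belief under the selected action. The following is a minimal sketch of that standard update, not the authors' implementation. The three states follow the safe/recovery/unsafe partitioning named in the introduction; the action names (`continue`, `slow_down`), the observation names (`clear`, `human_close`) and all probability values are hypothetical, and the observation is assumed to be conditioned on the state reached after the action.

```python
from typing import Dict, Tuple

Belief = Dict[str, float]
# T[(s, a)][s_next]: probability of moving from s to s_next under action a
Transition = Dict[Tuple[str, str], Dict[str, float]]
# O[(s_next, a)][o]: probability of observing o in s_next after action a
Observation = Dict[Tuple[str, str], Dict[str, float]]


def belief_update(belief: Belief, action: str, observation: str,
                  T: Transition, O: Observation) -> Belief:
    """Standard POMDP belief update (Bayes filter) over a discrete state set."""
    states = list(belief)
    unnormalized = {}
    for s_next in states:
        # Prediction step: push the old belief through the transition model.
        predicted = sum(T[(s, action)][s_next] * belief[s] for s in states)
        # Correction step: weight by the likelihood of the observation.
        unnormalized[s_next] = O[(s_next, action)][observation] * predicted
    total = sum(unnormalized.values())
    if total == 0.0:
        raise ValueError("observation has zero probability under this belief")
    return {s: p / total for s, p in unnormalized.items()}


# Hypothetical toy model; all numbers are illustrative assumptions.
STATES = ("safe", "recovery", "unsafe")
ACTIONS = ("continue", "slow_down")

T: Transition = {
    ("safe", "continue"): {"safe": 0.9, "recovery": 0.0, "unsafe": 0.1},
    ("recovery", "continue"): {"safe": 0.5, "recovery": 0.3, "unsafe": 0.2},
    ("unsafe", "continue"): {"safe": 0.0, "recovery": 0.2, "unsafe": 0.8},
    ("safe", "slow_down"): {"safe": 0.95, "recovery": 0.05, "unsafe": 0.0},
    ("recovery", "slow_down"): {"safe": 0.7, "recovery": 0.25, "unsafe": 0.05},
    ("unsafe", "slow_down"): {"safe": 0.0, "recovery": 0.6, "unsafe": 0.4},
}

# The same sensor model is assumed for both actions.
SENSOR = {
    "safe": {"clear": 0.9, "human_close": 0.1},
    "recovery": {"clear": 0.5, "human_close": 0.5},
    "unsafe": {"clear": 0.2, "human_close": 0.8},
}
O: Observation = {(s, a): SENSOR[s] for s in STATES for a in ACTIONS}

b0: Belief = {"safe": 0.8, "recovery": 0.1, "unsafe": 0.1}
b1 = belief_update(b0, "continue", "human_close", T, O)
print(b1)
```

In this toy setting, receiving `human_close` after `continue` shifts probability mass from the safe state towards the unsafe one, which is the kind of evidence a policy could use to select a recovery action.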

4.2. Prompting on Different Safety Levels

5. Application of STPA with POMDP in Collaborative Tasks

- Sequential tasks, in which the robot performs one task and after its completion the human performs the next task (e.g., the robot places the components on the workstation and, later, the human handles these components).

- Simultaneous collaboration tasks, in which the robot and the operator work together on the same component in order to complete a task (e.g., the robot holds a heavy component tight while the human processes its subordinate parts).

- Investigate the system structure, goals, components, requirements, functions and component interactions.

- Elicit the system requirements and safety constraints.

- Identify the potential accidents and unacceptable losses and the hazards at system level.

- Draw the functional control diagram of the system.

- Apply step 2 and step 3 of STPA to the control structure.

- Refine the safety requirements and constraints.

5.1. Process Analysis

- Accident 1: The robot injures the human while a task is performed in the common shared workspace.

- Accident 2: The robot injures the human while they work in separate workspaces.

- SK1: Manufacturing company, for which idle and unusable robots hinder the return on investment and reduce productivity. The identified losses could be:

  - L-1: Loss of or damage to the robot,

  - L-2: Loss of profit,

  - L-3: Loss of or damage to objects outside or inside the collaborative area (e.g., infrastructure damage).

- SK2: Human operator, whose life can be threatened by injuries and whose user experience from the involvement in robotic tasks can be degraded. The identified losses for this stakeholder group could be:

  - L-4: Loss of human life or injury to people,

  - L-5: Loss of trust in automation.

5.2. Identification of System-Level Hazards

- H-1: Robot exceeds the maximum set collaborative speed while the human enters its workspace (L-4, L-5).

  - Workspace monitoring

    - H-1.1 Workspace monitoring does not accurately report the human position relative to the robot.

- H-2: Robot violates the operator’s personal space while not being in collaborative mode (L-3, L-4, L-5).

  - Workspace monitoring

    - H-2.1 Occlusions in the proximity sensor hinder the detection of the human position.

- H-3: Robot does not complete the grasping task (L-2, L-3, L-5).

  - Grasping points inference

    - H-3.1 Selected grasping point is out of the robot’s reach;

    - H-3.2 Grasped object slips from the robot’s end effector.

- H-4: Robot does not complete the manipulation task (L-2, L-3, L-5).

  - Trajectory plan

    - H-4.1 Robot cannot compute an inverse kinematics solution to reach the grasping goal;

    - H-4.2 Trajectory planning times out due to a cluttered environment.

- H-5: Robot keeps moving when an unintentional contact occurs (L-1, L-2, L-3, L-4, L-5).

  - Hardware sensing

    - H-5.1 Force/torque sensor does not measure the excessive forces.

- H-6: Robot performs unexpected and abrupt movements that are not foreseen in the collaboration flow (L-2, L-3, L-4, L-5).

  - Task planning

    - H-6.1 Robot state does not match the monitoring feedback.

- H-7: Human performs unexpected movements that are not foreseen in the collaboration flow (L-1, L-2, L-3, L-4).

  - Communication

    - H-7.1 HMI reports an outdated manufacturing state;

    - H-7.2 AR visualizes an outdated robot state.

- SLC-H-1: Robot must satisfy collaborative standards while operating in collaborative mode.

  - SC-H-1.1 Excessive speeds should be detected to prevent unintentional contact with the human.

- SLC-H-2: Robot must maintain the minimum separation distance from the operator while not being in collaborative mode.

  - SC-H-2.1 The human personal space should be monitored to prevent unintentional contacts.

- SLC-H-3: Robot grasping should be maintained within object handling affordances.

  - SC-H-3.1 Grasp stability should be monitored during object transfer.

- SLC-H-4: Robot manipulation should be applied within manipulation affordances.

  - SC-H-4.1 Human–robot workspace and robot collision area should be continuously monitored and updated.

- SLC-H-5: Robot must differentiate between intentional and unintentional collisions and respond accordingly.

  - SC-H-5.1 Real-time switching between collaborative and non-collaborative mode.

- SLC-H-6: Robot should follow a planned sequence of tasks.

  - SC-H-6.1 Robot should move to the next task upon confirmation of completion of the current one.

- SLC-H-7: Human should follow a planned sequence of tasks.

  - SC-H-7.1 The planned sequence of tasks should be mutually understood by robot and human.
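The links between losses, system-level hazards and system-level constraints listed above can be kept in a small data structure and checked mechanically for traceability. The sketch below is an illustration only: the identifiers and the hazard-to-loss links are copied from the lists above, while the function names `hazards_by_loss` and `traceability_gaps` are hypothetical helpers and not part of STPA or of the authors' tooling.

```python
from typing import Dict, List, Set

# Losses L-1..L-5 identified for the two stakeholder groups.
LOSSES: Set[str] = {"L-1", "L-2", "L-3", "L-4", "L-5"}

# System-level hazards and the losses each one is linked to.
HAZARD_LOSSES: Dict[str, List[str]] = {
    "H-1": ["L-4", "L-5"],
    "H-2": ["L-3", "L-4", "L-5"],
    "H-3": ["L-2", "L-3", "L-5"],
    "H-4": ["L-2", "L-3", "L-5"],
    "H-5": ["L-1", "L-2", "L-3", "L-4", "L-5"],
    "H-6": ["L-2", "L-3", "L-4", "L-5"],
    "H-7": ["L-1", "L-2", "L-3", "L-4"],
}

# System-level constraints and the hazard each one addresses.
CONSTRAINTS: Dict[str, str] = {f"SLC-H-{i}": f"H-{i}" for i in range(1, 8)}


def hazards_by_loss(hazard_losses: Dict[str, List[str]]) -> Dict[str, List[str]]:
    """Invert the hazard-to-loss links: which hazards can lead to each loss."""
    index: Dict[str, List[str]] = {}
    for hazard, losses in hazard_losses.items():
        for loss in losses:
            index.setdefault(loss, []).append(hazard)
    return index


def traceability_gaps(losses: Set[str],
                      hazard_losses: Dict[str, List[str]],
                      constraints: Dict[str, str]) -> List[str]:
    """Report hazards with no valid loss link or no constraint."""
    gaps: List[str] = []
    constrained = set(constraints.values())
    for hazard, linked in hazard_losses.items():
        unknown = [loss for loss in linked if loss not in losses]
        if not linked:
            gaps.append(f"{hazard}: not linked to any loss")
        if unknown:
            gaps.append(f"{hazard}: linked to undefined losses {unknown}")
        if hazard not in constrained:
            gaps.append(f"{hazard}: no system-level constraint")
    return gaps


if __name__ == "__main__":
    print(traceability_gaps(LOSSES, HAZARD_LOSSES, CONSTRAINTS))
    print(hazards_by_loss(HAZARD_LOSSES)["L-4"])
```

An empty list from `traceability_gaps` indicates that every hazard is tied to at least one defined loss and is addressed by a constraint, while the inverted index shows, for example, which hazards threaten the human operator (L-4).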

5.3. Feasibility Study

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Symbol | Description |
|---|---|
| S | The set of different states of the environment |
| s | A specific system state |
| A | The set of different actions that can take place |
| a | A specific action |
|  | The set of all observations that appear upon the action space |
|  | A unique observation based on an action a |
|  | The probability distribution of the initial state s |
| T | The probability distribution of the transition among states |
| O | The probability distribution of the observations |
| π | Optimal policy |
|  | The safety related partitioned state space |
|  | A specific unsafe state |
|  | A specific recovery state |
|  | A specific safe state |
|  | The safety related action space |
|  | A specific unsafe control action |
|  | A specific recovery control action |
|  | A specific safe control action |

| Control Action | Not Providing Causes Hazard | Providing Causes Hazard | Too Early, Too Late, Out of Order | Stopped Too Soon, Applied Too Long |
|---|---|---|---|---|
| A-1: Report the collision space [Cobot Controller] | A-1: Collision space during HRI is not reported due to scene registration errors [H-1.1] | A-2: Erroneous collision space is reported when human pose estimation is inaccurate [H-1.1] | A-3: Collision space is reported too late due to ROS communication interruptions [H-1.1]; A-4: Outdated collision space is reported due to limited communication bandwidth [H-6.1] | - |
| A-1: Report the proximity [Monitoring Controller] | A-5: Monitoring does not report the human’s entry into the robot’s workspace due to visual occlusions [H-2.1, H-6.1] | A-6: Monitoring reports noisy proximity measurements in the presence of reflections on the optical sensors [H-1.1, H-2.1, H-6.1] | A-7: Monitoring reports obsolete proximity measurements due to ROS bandwidth [H-1.1, H-2.1, H-6.1] | - |
| A-2: Object Detection [Monitoring Controller] | A-8: Vision system does not provide a target goal to the end-effector when the object classifier fails [H-3] | A-9: Vision system provides a target goal to the end-effector with an offset due to estimation errors [H-3] | A-10: Vision system provides an unordered sequence of grasping points [H-3.1, H-3.2] | - |
| A-3: Grasping and Manipulation [Cobot Controller] | A-11: Grasp planner fails to provide a grasping strategy when the current robot state is unknown [H-3, H-4.1, H-4.2] | A-12: Manipulation planner infers a grasping strategy with outdated grasping points when the object has been moved in the scene [H-3.1, H-4] | A-13: Trajectory planner infers a sequence of grasping points before the end-effector reaches a pre-grasping pose due to ROS latency [H-3.1, H-3.2] | A-14: Grasp stops early due to erroneous estimation of the release surface [H-3.2, H-4.2] |
| A-4: Task planning [Cell Controller] | A-15: Task planner does not provide a task ID when an unregistered robot state occurs [H-6.1]; A-16: Task planner does not provide a robot task execution message when the vision system fails to return pose observations [H-4] | A-17: Task planner infers a wrong task ID when the human bypasses the scenario flow with the HMI [H-7.1, H-7.2]; A-18: Task planner provides wrong robot task execution messages when the vision system infers erroneous poses [H-6, H-6.1]; A-19: Task planner halts when receiving unexpected monitoring messages during collaborative mode | A-20: Task planner provides shuffled robot task execution messages due to inaccurate robot/environment state [H-7.1, H-7.2] | A-21: Task planner terminates the task too late by skipping specific operation states [H-6.1, H-7.1, H-7.2] |
| A-5: HMI interface [Operator and Monitoring Controller] | A-22: HMI interface does not visualize the current task state when ROS communication is disturbed [H-6.1, H-7.1]; A-23: HMI interface does not provide error diagnostic messages when robot states are not reported [H-5, H-6.1, H-7.1] | A-24: HMI interface provides access to low-level control, interrupting the robot’s trajectory [H-4, H-5.1] | A-25: HMI interface allows interruption before the robot completes a task when collaborative mode is not enabled [H-1, H-2, H-5] | - |
| A-2: AR engine [AR Controller] | A-26: AR engine does not provide robot/workspace registered information in the presence of severe occlusions [H-7.1, H-7.2] | A-27: AR engine provides a wrong environment/robot state when a localization error occurs [H-7.1, H-7.2] | A-28: AR engine visualizes the robot/environment state with a time shift due to ROS communication latency [H-7.1, H-7.2, H-6.1] | - |
| A-6: Robot HW sensors [Vision and HW Controller] | A-29: Vision system does not provide frames due to ROS bandwidth issues [H-2.1]; A-30: Robot does not provide force/torque measurements due to ROS bandwidth issues [H-5.1] | A-31: Vision system reports erroneous depth measurements due to unexpected reflections [H-3, H-4, H-6]; A-32: Robot reports erroneous force excess due to sensor calibration drift [H-5.1] | A-33: Robot vision system provides input with delay due to ROS synchronization issues [H-3, H-4, H-6]; A-34: Robot force sensor reports force/torque measurements at a lower frequency than the low-level controller due to payload excess [H-5.1] | - |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zacharaki, A.; Kostavelis, I.; Dokas, I. Decision Making with STPA through Markov Decision Process, a Theoretic Framework for Safe Human-Robot Collaboration. Appl. Sci. 2021, 11, 5212. https://doi.org/10.3390/app11115212
