Stress Analysis with Dimensions of Valence and Arousal in the Wild

Abstract

1. Introduction

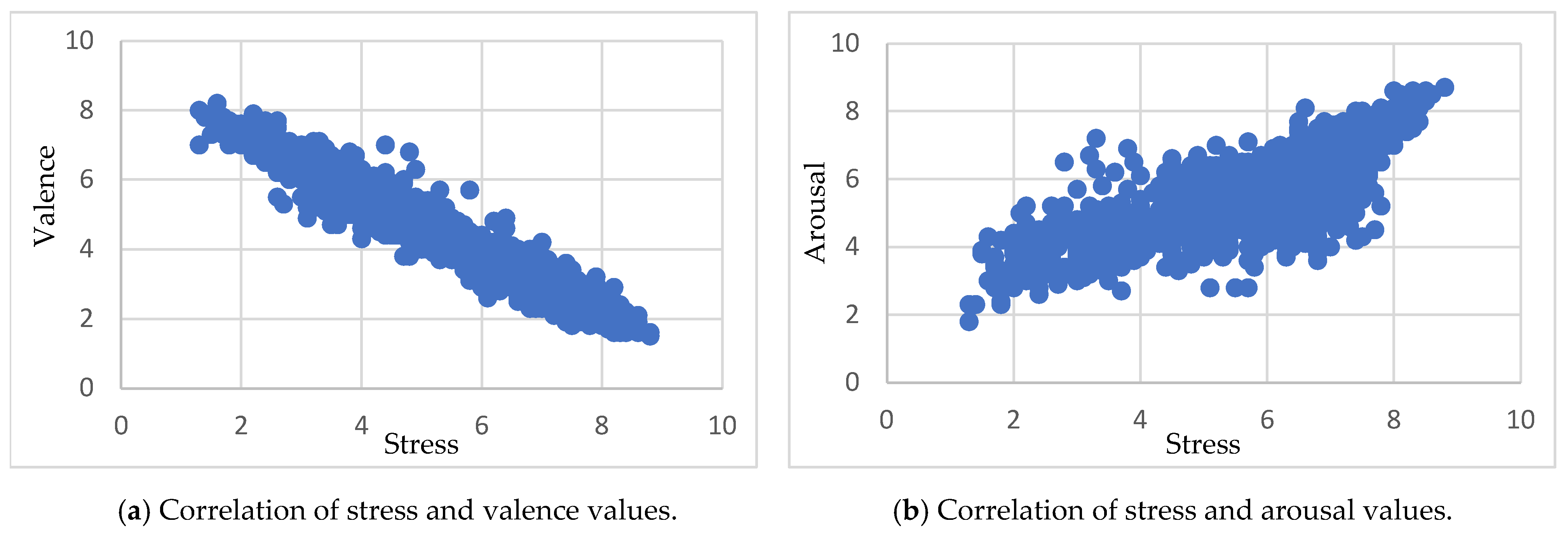

- First, we analyze the relationship between stress and the continuous dimensions of valence and arousal.

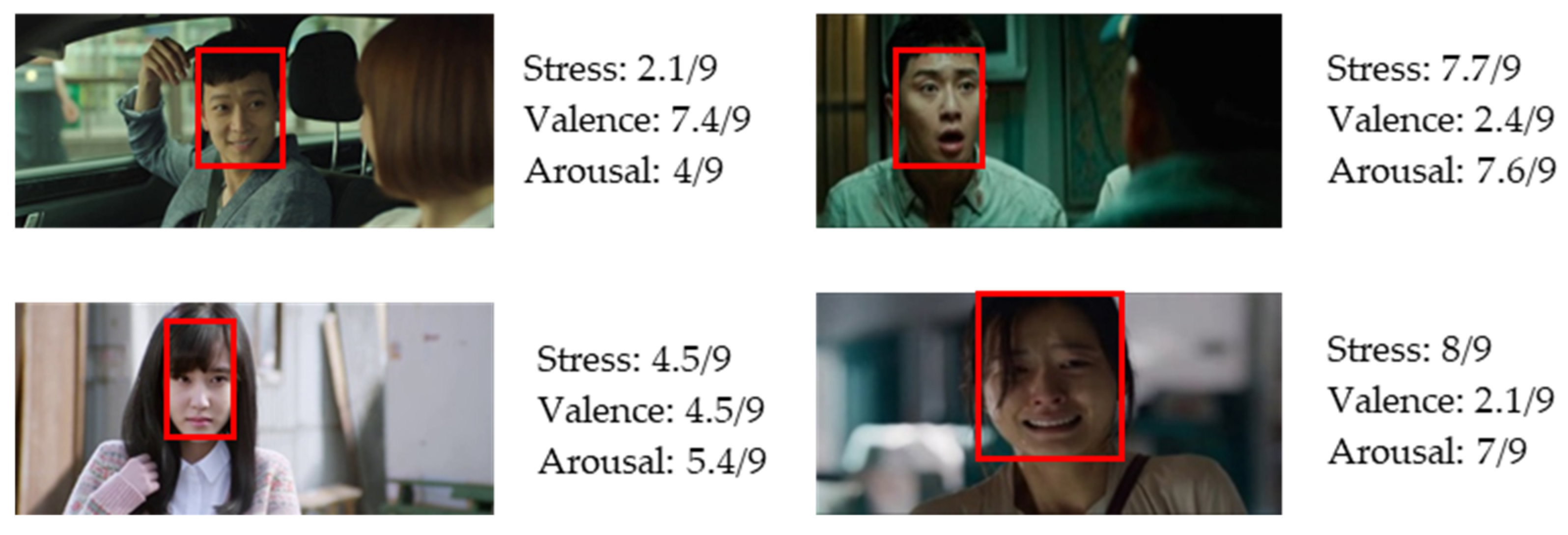

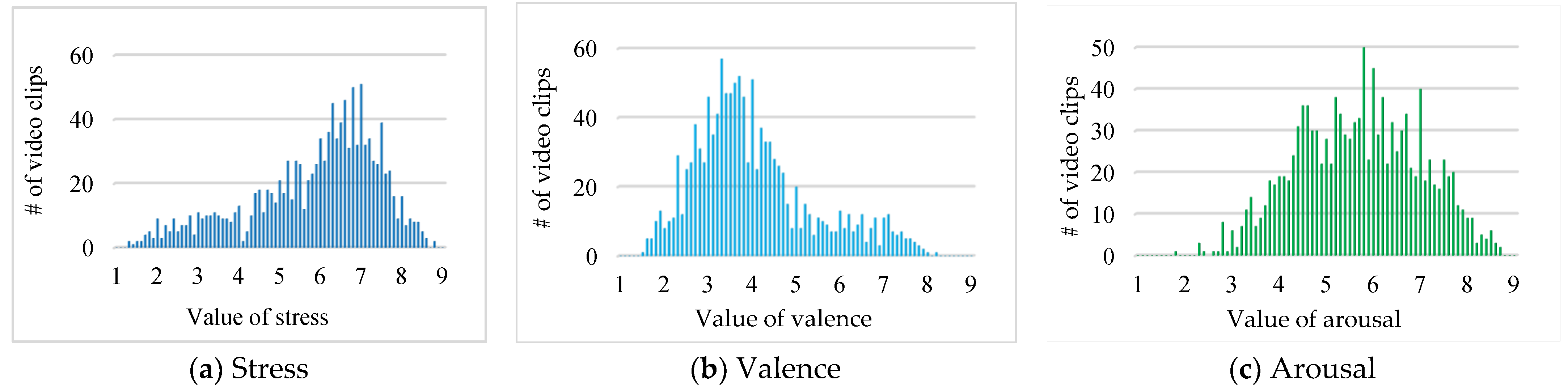

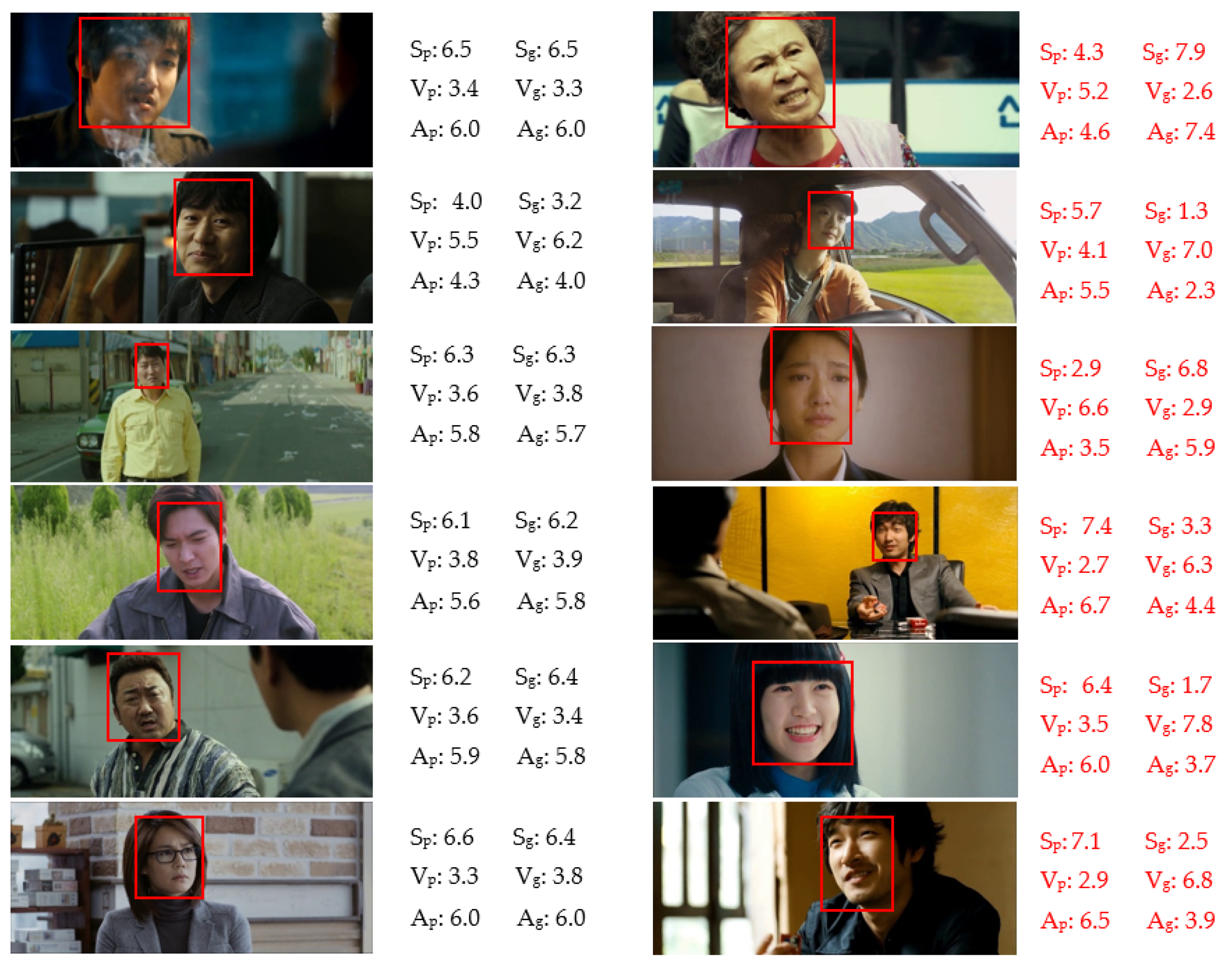

- Second, we construct the SADVAW dataset to evaluate multiple stress levels in the continuous dimensions of valence and arousal based on facial features. The SADVAW dataset consists of 1236 video clips extracted from 41 Korean movies. The clips feature many characters against different backgrounds, which brings them closer to real-world conditions. Each clip was rated on a 9-level scale for stress, valence, and arousal.

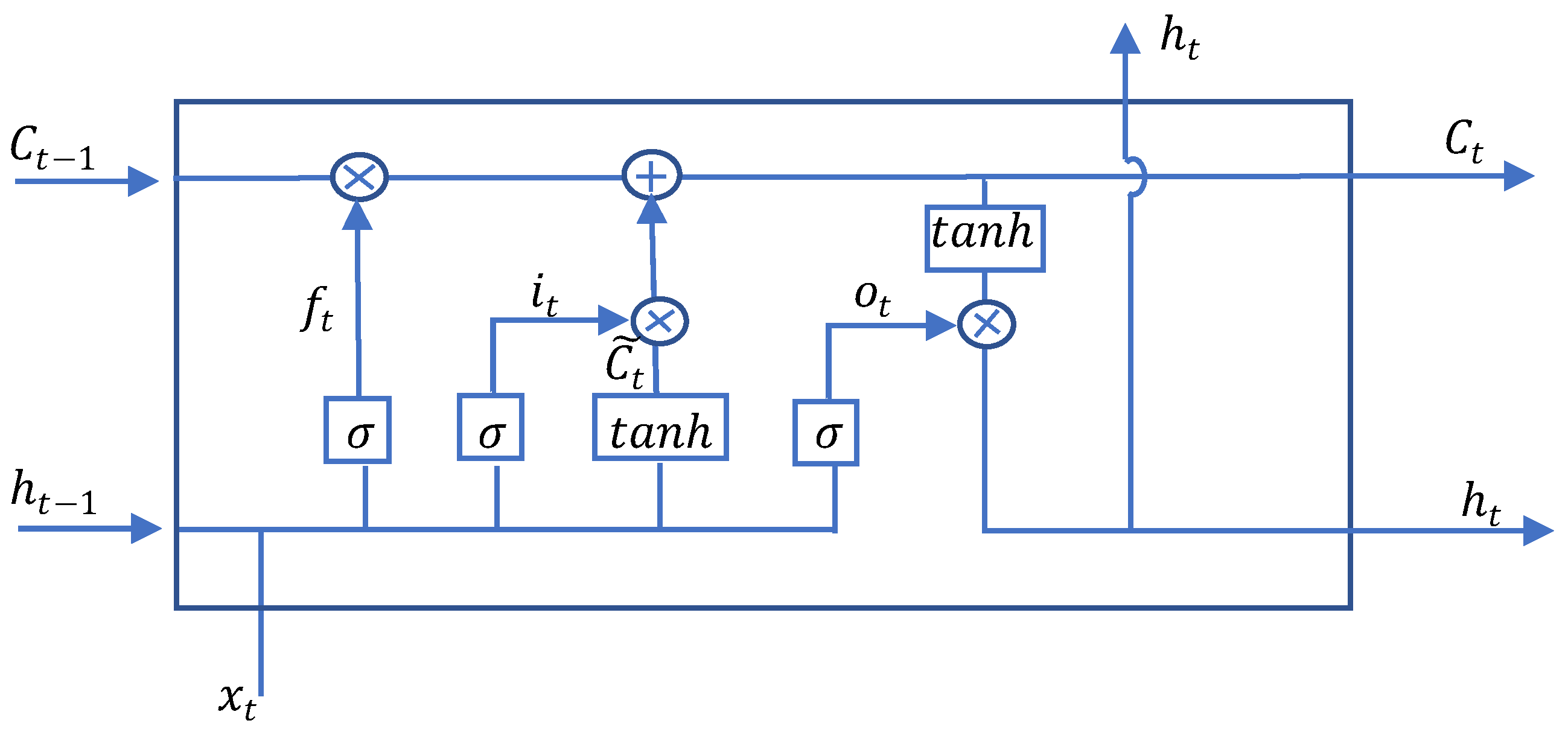

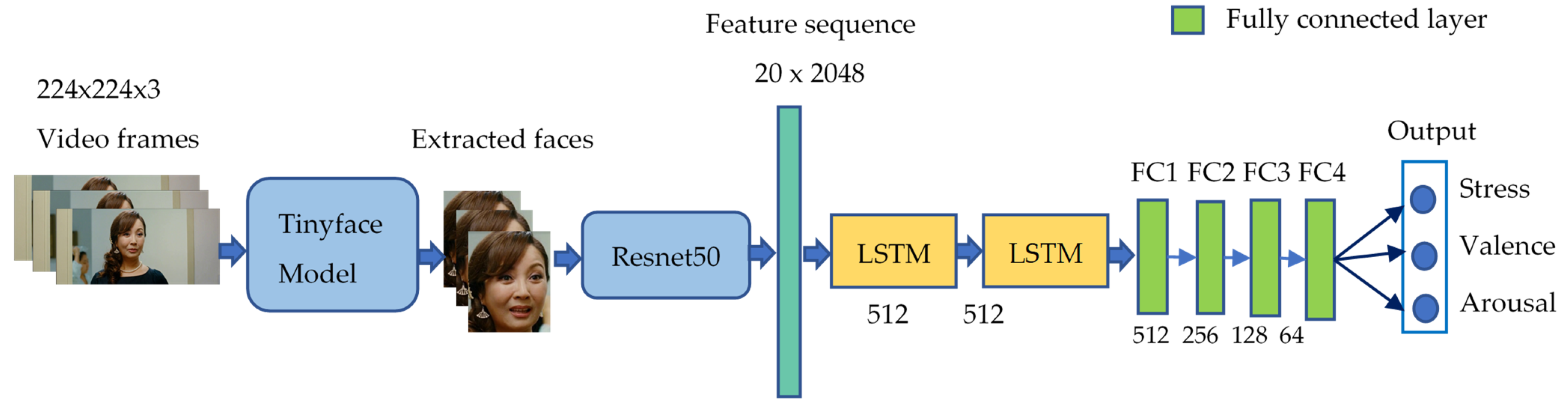

- Third, we develop a baseline model based on deep learning techniques for stress level detection. In particular, we first detect and extract human faces using the TinyFace [12] model. Then, we use ResNet [13] to extract a feature vector for each frame of the video clip. The sequence of features is fed to a Long Short-Term Memory (LSTM) [14] network, followed by fully connected layers, to predict the stress, arousal, and valence levels. Based on the SADVAW dataset, we determined the correlation of stress with valence and arousal. Furthermore, we aim to use this analysis for stress detection systems that work on images and videos captured in real-world situations.

2. Related Work

2.1. Existing Stress Dataset

2.2. LSTM Architecture

3. SADVAW Dataset and Stress Analysis

3.1. Dataset Overview

3.2. Annotators and Evaluation Process

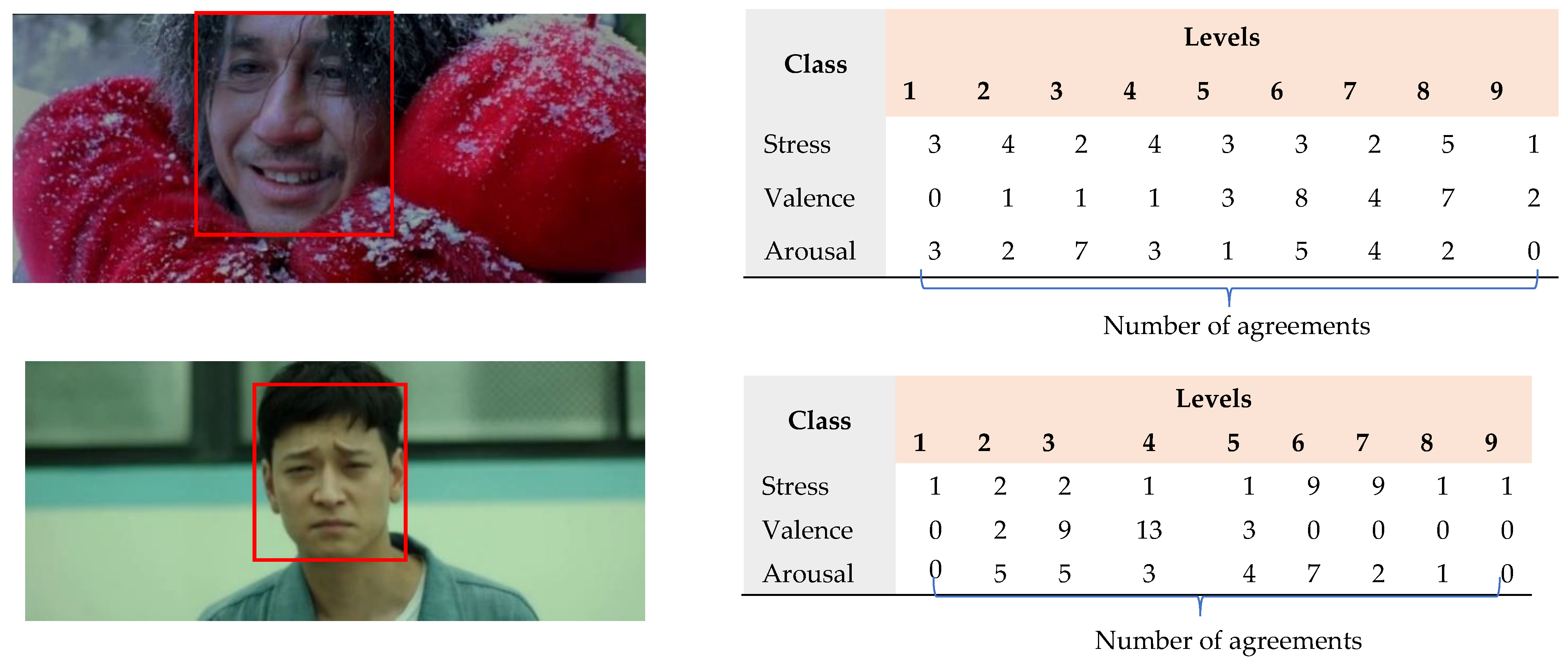

3.3. Annotation Agreement

3.4. Stress Analysis

4. Baseline Model Experiments

4.1. Experimental Setup

4.2. Evaluation Metrics

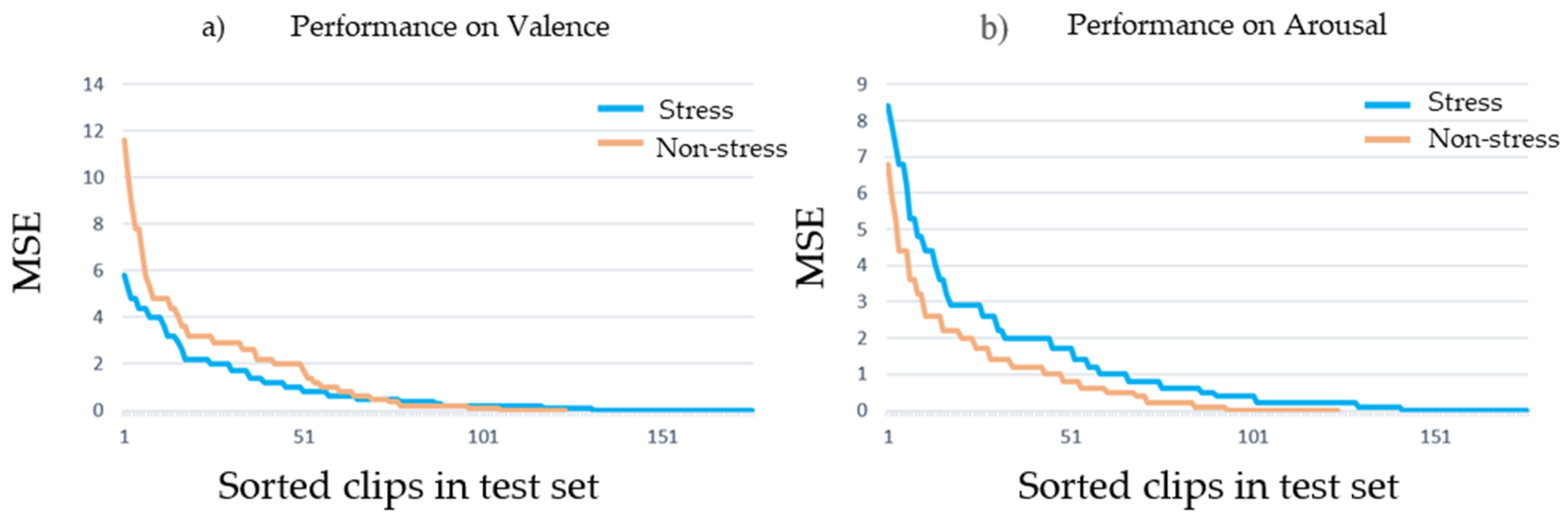

4.3. Experimental Results

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Posner, J.; Russell, J.A.; Peterson, B.S. The circumplex model of affect: An integrative approach to affective neuroscience, cognitive development, and psychopathology. Dev. Psychopathol. 2005.

- Lewis, P.A.; Critchley, H.D.; Rotshtein, P.; Dolan, R.J. Neural correlates of processing valence and arousal in affective words. Cereb. Cortex 2007.

- Oliveira, A.; Teixeira, M.; Fonseca, I.B.B.; Oliveira, M.; Kornbrot, D.; Msetfi, R. Macrae Joint model-parameter validation of self-estimates of valence and arousal: Probing a differential-weighting model of affective intensity. Meet. Int. Soc. Psychophys. 2006, 22, 245–250.

- Alvarado, N. Arousal and valence in the direct scaling of emotional response to film clips. Motiv. Emot. 1997.

- Christianson, S.-A. Emotional stress and eyewitness memory: A critical review. Psychol. Bull. 1992.

- Barrett, L.F.; Russell, J.A. The structure of current affect: Controversies and emerging consensus. Curr. Dir. Psychol. Sci. 1999.

- Wichary, S.; Mata, R.; Rieskamp, J. Probabilistic inferences under emotional stress: How arousal affects decision processes. J. Behav. Decis. Mak. 2016.

- Carneiro, D.; Novais, P.; Augusto, J.C.; Payne, N. New methods for stress assessment and monitoring at the workplace. IEEE Trans. Affect. Comput. 2019.

- Alberdi, A.; Aztiria, A.; Basarab, A. Towards an Automatic Early Stress Recognition System for Office Environments Based on Multimodal Measurements: A Review; Elsevier: Amsterdam, The Netherlands, 2016.

- Can, Y.S.; Arnrich, B.; Ersoy, C. Stress detection in daily life scenarios using smart phones and wearable sensors: A Survey. J. Biomed. Inform. 2019, 92, 103139.

- Schmidt, P.; Reiss, A.; Duerichen, R.; Van Laerhoven, K. Wearable Affect and stress recognition: A review. arXiv 2018, arXiv:1811.08854.

- Hu, P.; Ramanan, D. Finding tiny faces. In Proceedings of the 30th IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2017, Honolulu, HI, USA, 21–26 July 2017.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Hochreiter, S.; Schmidhuber, J. Long Short-Term Memory. Neural Comput. 1997, 9, 1735–1780.

- Schmidt, P.; Reiss, A.; Duerichen, R.; Van Laerhoven, K. Introducing WeSAD, a multimodal dataset for wearable stress and affect detection. In Proceedings of the ICMI 2018 International Conference on Multimodal Interaction, Boulder, CO, USA, 10–20 October 2018.

- Jaiswal, M.; Bara, C.-P.; Luo, Y.; Burzo, M.; Mihalcea, R.; Provost, E.M. MuSE: A multimodal dataset of stressed emotion. In Proceedings of the Language Resources and Evaluation, Marseille, France, 11–16 May 2020.

- Koldijk, S.; Sappelli, M.; Verberne, S.; Neerincx, M.A.; Kraaij, W. The swell knowledge work dataset for stress and user modeling research. In Proceedings of the ICMI 2014 International Conference on Multimodal Interaction, Bogazici University, Istanbul, Turkey, 12–16 November 2014.

- Chen, G. A Gentle Tutorial of Recurrent Neural Network with Error Backpropagation. arXiv 2016, arXiv:1610.02583.

- Wöllmer, M.; Kaiser, M.; Eyben, F.; Schuller, B.; Rigoll, G. LSTM-modeling of continuous emotions in an audiovisual affect recognition framework. Image Vis. Comput. 2013.

- Avots, E.; Sapiński, T.; Bachmann, M.; Kamińska, D. Audiovisual emotion recognition in wild. In Proceedings of the Machine Vision and Applications, Tokyo, Japan, 27–31 May 2019.

- Chatterjee, A.; Gupta, U.; Chinnakotla, M.K.; Srikanth, R.; Galley, M.; Agrawal, P. Understanding emotions in text using deep learning and big data. Comput. Hum. Behav. 2019.

- Ng, J.Y.H.; Hausknecht, M.; Vijayanarasimhan, S.; Vinyals, O.; Monga, R.; Toderici, G. Beyond short snippets: Deep networks for video classification. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015.

- Graves, A.; Mohamed, A.R.; Hinton, G. Speech recognition with deep recurrent neural networks. In Proceedings of the ICASSP, IEEE International Conference on Acoustics, Speech and Signal Processing, Vancouver, BC, Canada, 26–31 May 2013.

- Graves, A.; Jaitly, N. Towards end-to-end speech recognition with recurrent neural networks. In Proceedings of the 31st International Conference on Machine Learning, ICML 2014, Beijing, China, 21–26 June 2014.

- Khanh, T.L.B.; Kim, S.H.; Lee, G.; Yang, H.J.; Baek, E.T. Korean video dataset for emotion recognition in the wild. Multimed. Tools Appl. 2020.

- Bhandari, P. Standard Deviation | A Step by Step Guide with Formulas. Available online: https://www.scribbr.com/statistics/standard-deviation/ (accessed on 20 December 2020).

- Glen, S. T Test (Student’s T-Test): Definition and Examples—Statistics How To. Available online: https://www.statisticshowto.com/probability-and-statistics/t-test/ (accessed on 9 January 2021).

- Russakovsky, O.; Deng, J.; Su, H.; Krause, J.; Satheesh, S.; Ma, S.; Huang, Z.; Karpathy, A.; Khosla, A.; Bernstein, M.; et al. ImageNet large scale visual recognition challenge. Int. J. Comput. Vis. 2015.

- Glen, S. Relative Error: Definition, Formula, Examples—Statistics How To. Available online: https://www.statisticshowto.com/relative-error/ (accessed on 8 March 2021).

- Jaiswal, S.; Song, S.; Valstar, M. Automatic prediction of depression and anxiety from behaviour and personality attributes. In Proceedings of the 2019 8th International Conference on Affective Computing and Intelligent Interaction, ACII 2019, Cambridge, UK, 3–6 September 2019.

- Nicolaou, M.A.; Gunes, H.; Pantic, M. Continuous prediction of spontaneous affect from multiple cues and modalities in valence-arousal space. IEEE Trans. Affect. Comput. 2011.

- Muszynski, M.; Tian, L.; Lai, C.; Moore, J.; Kostoulas, T.; Lombardo, P.; Pun, T.; Chanel, G. Recognizing induced emotions of movie audiences from multimodal information. IEEE Trans. Affect. Comput. 2019.

| Dataset | Number of Videos | Length per Video Clip | Setting | Target Objects |
|---|---|---|---|---|
| SADVAW (proposed) | 1236 | 2–4 s | Movie | Stress, valence, arousal |
| WeSAD [15] | 15 | 36 min | Lab | Baseline, stress, amusement |
| MuSE [16] | 28 | 45 min | Lab | Activation, valence, stress |
| SWELL-KW [17] | 25 | 2 h | Lab | Stress, valence, arousal, mental effort, frustration, task load |

| Class | Description |
|---|---|
| Stress (1–9) | How stressed does the person feel? (non-stress–stress) |
| Valence (1–9) | How positive or negative is their emotion? (negative–positive) |
| Arousal (1–9) | What is the agitation level of the person? (inactive–active) |

(a) The percentage of videos with the agreement of n (max(n) = 27) annotators on each value of stress (%)

| # of People (n) \ Value of Stress | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | Total |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 2.05 | 3.04 | 3.64 | 4.32 | 2.67 | 0.93 | 1.11 | 2.06 | 2.7 | 22.52 |
| n ≥ 2 | 2.64 | 5.27 | 6.77 | 7.5 | 11.74 | 14.54 | 13.36 | 10.27 | 5.39 | 77.48 |
| Total | 4.69 | 8.31 | 10.41 | 11.82 | 14.41 | 15.47 | 14.47 | 12.33 | 8.09 | 100 |

(b) The percentage of videos with the agreement of n (max(n) = 27) annotators on each value of valence (%)

| # of People (n) \ Value of Valence | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | Total |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 4.07 | 3.12 | 1.56 | 1.17 | 3.65 | 3.12 | 1.96 | 1.78 | 0.97 | 21.4 |
| n ≥ 2 | 5 | 11.88 | 16.54 | 17.83 | 12.25 | 7.16 | 4.29 | 2.56 | 1.09 | 78.6 |
| Total | 9.07 | 15 | 18.1 | 19 | 15.9 | 10.28 | 6.25 | 4.34 | 2.06 | 100 |

(c) The percentage of videos with the agreement of n (max(n) = 27) annotators on each value of arousal (%)

| # of People (n) \ Value of Arousal | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | Total |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 1.82 | 3.56 | 2.96 | 2.75 | 1.76 | 0.49 | 1.64 | 3.27 | 2.81 | 21.06 |
| n ≥ 2 | 1.95 | 6.18 | 8.24 | 9.85 | 12.77 | 15.16 | 12.98 | 8.04 | 3.77 | 78.94 |
| Total | 3.77 | 9.74 | 11.2 | 12.6 | 14.53 | 15.65 | 14.62 | 11.31 | 6.58 | 100 |

| | Valence | Arousal |
|---|---|---|
| Stress | −0.96 | 0.78 |

| | Valence (Stress) | Valence (Non-Stress) | Arousal (Stress) | Arousal (Non-Stress) |
|---|---|---|---|---|
| Mean | 3.13 | 5.24 | 6.38 | 4.73 |
| Variance | 0.37 | 1.32 | 1.11 | 0.93 |
| t-stat | −56.34 | | 11.18 | |
| P(T ≤ t) one-tail | 2 × 10⁻²⁶⁹ | | 5.70 × 10⁻²⁸ | |
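
The t-statistics above compare the stress and non-stress groups on each dimension. The table does not state which t-test variant or which group sizes were used, so the following is only a minimal sketch of one common choice, Welch's unequal-variance t-test, computed from group means, variances, and sizes. The function name `welch_t` and the sample sizes in the usage line are hypothetical, and the sketch is not expected to reproduce the reported values.

```python
import math


def welch_t(mean_a, var_a, n_a, mean_b, var_b, n_b):
    """Welch's t-statistic and degrees of freedom from summary statistics.

    Assumption: unequal-variance (Welch) test; the source table does not
    say which variant of the t-test was actually applied.
    """
    se_a = var_a / n_a  # squared standard error of group A's mean
    se_b = var_b / n_b  # squared standard error of group B's mean
    t_stat = (mean_a - mean_b) / math.sqrt(se_a + se_b)
    # Welch-Satterthwaite approximation of the degrees of freedom.
    dof = (se_a + se_b) ** 2 / (se_a ** 2 / (n_a - 1) + se_b ** 2 / (n_b - 1))
    return t_stat, dof


# Hypothetical example: two groups of 50 ratings each with unit variance.
t_stat, dof = welch_t(3.0, 1.0, 50, 5.0, 1.0, 50)
print(f"t = {t_stat:.2f}, dof = {dof:.1f}")
```

A negative statistic means the first group has the lower mean, which matches the sign pattern in the table: stressed clips have lower valence (negative t) and higher arousal (positive t) than non-stressed clips.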

| Layer (Type) | Output Shape | Parameters |
|---|---|---|
| Input | (20, 2048) | 0 |
| Lstm_1 (LSTM) | (20, 512) | 5,244,928 |
| Lstm_2 (LSTM) | 512 | 2,099,200 |
| dense_1 (FC) | 512 | 262,656 |
| dense_2 (FC) | 256 | 131,328 |
| dense_3 (FC) | 128 | 32,896 |
| dense_4 (FC) | 64 | 8,256 |
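
The parameter counts in the layer table can be checked by hand. The sketch below assumes the standard LSTM formulation (four gates, each with input weights, recurrent weights, and a bias) and fully connected layers with biases. The helper names `lstm_params` and `dense_params` are illustrative and do not come from the paper.

```python
def lstm_params(input_dim: int, units: int) -> int:
    """Trainable parameters of a standard LSTM layer with biases."""
    # Four gates, each with input weights, recurrent weights, and a bias vector.
    return 4 * (units * (input_dim + units) + units)


def dense_params(input_dim: int, units: int) -> int:
    """Trainable parameters of a fully connected layer with biases."""
    return input_dim * units + units


# Layer sizes taken from the table: 2048-dimensional frame features,
# two LSTM layers of 512 units, then FC layers of 512, 256, 128, and 64 units.
layers = [
    ("Lstm_1 (LSTM)", lstm_params(2048, 512)),
    ("Lstm_2 (LSTM)", lstm_params(512, 512)),
    ("dense_1 (FC)", dense_params(512, 512)),
    ("dense_2 (FC)", dense_params(512, 256)),
    ("dense_3 (FC)", dense_params(256, 128)),
    ("dense_4 (FC)", dense_params(128, 64)),
]

for name, count in layers:
    print(f"{name}: {count:,}")
```

Under these assumptions the computed values agree with the Parameters column, for example 4 × (512 × (2048 + 512) + 512) = 5,244,928 for the first LSTM layer.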

| Dimension | MSE | MRE (%) | PCC |
|---|---|---|---|
| Stress | 1.66 | 22.16 | 0.52 |
| Valence | 1.23 | 22.71 | 0.53 |
| Arousal | 1.13 | 15.42 | 0.46 |
| Mean | 1.34 | 20.10 | 0.50 |
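
The three metrics in the results table can be sketched as follows. MSE and PCC follow their standard definitions. For MRE, the sketch assumes the mean of |y − ŷ| / |y| expressed as a percentage, which is a common reading of "mean relative error" but is not confirmed by the table itself. The function names and the sample ratings are illustrative only.

```python
import math


def mse(y_true, y_pred):
    """Mean squared error."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def mre_percent(y_true, y_pred):
    """Mean relative error in percent (assumed definition).

    Ratings lie on a 1-9 scale, so the ground truth is never zero.
    """
    total = sum(abs(t - p) / abs(t) for t, p in zip(y_true, y_pred))
    return 100.0 * total / len(y_true)


def pcc(y_true, y_pred):
    """Pearson correlation coefficient."""
    n = len(y_true)
    mean_t = sum(y_true) / n
    mean_p = sum(y_pred) / n
    cov = sum((t - mean_t) * (p - mean_p) for t, p in zip(y_true, y_pred))
    var_t = sum((t - mean_t) ** 2 for t in y_true)
    var_p = sum((p - mean_p) ** 2 for p in y_pred)
    return cov / math.sqrt(var_t * var_p)


# Illustrative ratings, not taken from the SADVAW dataset.
y_true = [2.0, 4.0, 5.0, 8.0]
y_pred = [3.0, 4.0, 4.0, 6.0]
print(mse(y_true, y_pred))          # 1.5
print(mre_percent(y_true, y_pred))  # 23.75
print(round(pcc(y_true, y_pred), 3))
```

Lower MSE and MRE indicate smaller prediction errors, while a PCC closer to 1 indicates that predictions track the annotated levels more closely.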

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Tran, T.-D.; Kim, J.; Ho, N.-H.; Yang, H.-J.; Pant, S.; Kim, S.-H.; Lee, G.-S. Stress Analysis with Dimensions of Valence and Arousal in the Wild. Appl. Sci. 2021, 11, 5194. https://doi.org/10.3390/app11115194
