1. Introduction

An overset mesh approach is useful for problems involving multiple moving components. Rotor blades are examples of multiple moving components in helicopter configuration [

1]. The generation of a single mesh covering all components is possible if the mesh topology is limited to unstructured mesh. However, in the vicinity of boundaries, structured mesh may also be desired to accurately capture flow characteristics such as drag in viscous flows and shock in inviscid flows. Even if satisfactory single mesh is generated, in subsequent time steps, due to relative motion of components, the mesh may stretch excessively lowering the accuracy of flow solution unless proper mesh deformation technique is implemented. Mesh stretching can be avoided by regenerating the single grid in each time step, which is time consuming. An overset mesh approach allows component meshes to be generated only once and independently with the desired mesh topology.

Since each mesh is independent from other meshes, it is necessary to assemble the meshes in order to identify different cell types. The cell types are mainly

the field cells on which flow solution is computed;

the receptor cells which interpolate flow variables across overlapping mesh cells;

the hole cells which are excluded from flow solution due to overlapping invalid regions of the domain, such as holes.

The identification of cell types is the core task of the assembler and is called donor search. In each time step, the assembler identifies and transfers the cell types to flow solver.

It is desired that the workload be distributed among multiple computing resources in order to reduce the total assembly and flow solution duration. The initial input mesh system is usually partitioned with unweighted graph partitioning, which produces partitions containing an approximately equal number of cells based on cell connectivity. With overset mesh methodology, in the absence of cell-to-cell connectivity, overset meshes require interpolation of flow variables across overlapping mesh cells. The mesh cells are partitioned spatially so that the ones which occupy the same region reside in the same partition and, therefore, the communication time for interpolation of variables is diminished. Although spatial partitioning reduces the total run time by localizing the interpolation of flow variables, it does not alleviate load imbalance. Spatial partitioning is performed adaptively by refining the most loaded bin of an octree until balanced partitions are obtained.

Several assemblers [

2,

3,

4,

5,

6,

7,

8,

9,

10] have managed to reduce total run time. To the knowledge of present authors, load balancing in the context of overset grid methodology is ignored in all but in only one of the studies given in [

11], where load balance was maintained dynamically and adaptively by examining the task duration of each processor and by transferring the workload across processors in subsequent time steps. However, the mesh system was partitioned based solely on cell connectivity and, therefore, overlapping mesh blocks had to be exchanged for donor search and processed information needed to be sent back to the host processor. In this study, time is saved by eliminating the need for sending processed information back to the host processor since overlapping mesh blocks reside in the same partitions. This is similar to spatial decomposition volume [

6], where mesh blocks are registered spatially into predefined geometric volumes such as coaxial cylinders and Cartesian mesh depending on the geometry of problem. This study improves upon [

6] by using adaptive spatial refinement without predefined shapes in order to achieve load balance independent of problem geometry.

A generic helicopter [

12] configuration is simulated in order to evaluate performance of the developed parallel assembler and flow solver in terms of load distribution, scalability and memory usage.

In

Section 2, details of adaptive spatial partitioning and load balancing are explained. In

Section 3, donor search and auxiliary algorithms are presented. Details about governing equations solved by the developed solver are presented in

Section 6. CAD models/meshes for the simulated test case are explained in

Section 7. In

Section 8, results of the test case are presented and discussed and finally, in the last section, conclusions are drawn based on the results.

2. Spatial Partitioning and Load Balancing

Unlike the single mesh approach, the overset mesh technique involves interpolation of flow variables across overlapping meshes. In a parallel environment, it is mandatory to decompose mesh system (all the meshes including component and background meshes) and store overlapping mesh blocks in the same processors. In this work, this process is termed as spatial partitioning akin to spatial decomposition volume (SDV) [

6]. In [

6], the mesh system is decomposed into predetermined volumes based on the expected path of the component meshes. As an example, rotor blade meshes are decomposed into cylindrical volumes, whereas wing–pylon–store configuration is decomposed into Cartesian volumes. One of the drawbacks of SDV is the fact that the decomposition volume is problem dependent while the other is that no load balancing is performed. In this work, an octree is used for both adaptive spatial partitioning and load balancing. Adaptive spatial partitioning is similar to decomposition with Cartesian volumes but, in addition, it has the merit of adaptive partitioning of spatial domain, eliminating problem-dependent decomposition.

First, the mesh system is partitioned by the graph-partitioner, METIS [

13], with the default (unweighted vertices) setting so that each processor contains approximately the same number of cells. Even decomposition of mesh system is suitable for applications for which execution time of tasks are expected to be a function of number of cells. Typical applications are flow solvers involving iterative solution of the same discretized governing equations on all cells.

Each processor reads separate mesh files generated by METIS and stores local mesh blocks (which are a subset of a mesh). For clarity, local data stored in a processor can have different traits than their counterparts in other processors, whereas global data identical in every processor are obtained by combining local data.

In order to organize mesh blocks spatially, a global octree is constructed to divide domain into eight non-overlapping bins (subsets) recursively. The preference of octree over other types of trees such as binary or quad trees is natural for three-dimensional geometries. Each bin in the octree is axis-aligned as shown in

Figure 1. Dimensions of the global octree are obtained by all-gathering and maximizing the dimensions of the axis-aligned bounding box (AABB) of local mesh blocks.

Processors register mesh cells in local mesh blocks to bins of octree based on the intersection of AABB of the mesh cell and the bin. Next, the load in each bin is calculated by summing up the number of cells. Bin loads are all-reduced with addition. The bins of octree are input to METIS as graph-vertices. The load of each bin corresponds to the weight of each graph-vertex. The connectivity of bins are also provided to METIS in order to obtain a connected graph, which is needed for contiguous partitions. The METIS_PartGraphKway function of METIS computes a k-way partitioning where k is the number of processors. If no partitioning with less than 10% imbalance is found, the most loaded bin of octree is refined. Once a satisfactory partitioning is obtained after all refinements, each partition (containing multiple bins) are distributed to respective processor. Finally, the mesh blocks corresponding to the same parent meshes in partitions are merged in order to reduce intra-processor communication. Note that ParMETIS, which is the parallel version of METIS, cannot be used at this stage since distribution of octree bins to processors in unknown.

With spatial partitioning, the spatial domain is decomposed into regions, and each region is assigned to a specific processor. As the mesh system is non-stationary, different mesh cells arrive to and leave the regions the processors are assigned with. On the other hand, the traditional approach is to assign certain mesh cells to processors regardless of the motion the of mesh system. One of the merits of the traditional approach is that cell-to-cell connectivity does not need to be maintained since there is no disconnection/reconnection of moving cells. In addition, no time is spent for re-organization of mesh system spatially. However, the overlapping mesh blocks are still required to be brought together regardless of the approach followed. The traditional approach solves this problem by transferring overlapping mesh blocks and sending processed information back to the host processor. The downside of this approach is that the communication has to be repeated twice in every time step. In this study, time is saved by eliminating the need for sending processed information back to the host processor since overlapping mesh blocks reside in the same partitions.

4. Hole Cutting

The hole cutting task is for determining hole cells. One of the hole cutting methods involves usage of a hole map, which is a Cartesian mesh used to represent holes approximately. When hole maps are used in conjunction with the core donor search algorithm (explained in the next section), hole cells are determined exactly. There are exact methods which are not required to be used in conjunction with donor search. Direct cutter, for example, cuts a hole profile on overlapping mesh and determines interior hole cells with flood algorithm. However, direct cutting is found to have robustness issues with three-dimensional geometries.

Hole mapping requires all wall and outer boundaries to be present in each processor. Therefore, local wall and outer boundaries are all-gathered. A Cartesian mesh which initially has one sub-block is made to fit around wall boundaries. If any sub-block of the Cartesian mesh intersects both wall and outer boundaries, the Cartesian mesh is refined by doubling each dimension. That is, the initial

Cartesian mesh becomes

and so on. Once no sub-block is left to intersect both wall and outer boundaries, the sub-blocks which intersect wall boundaries serve as an approximate hole profile. Next, flood algorithm identifies sub-blocks representing the volume of hole. The procedure is identical to that in [

11], where implementation details can be found.

If the initial AABB of wall boundary does not intersect the outer boundary, there is no refinement required, and the AABB of wall boundaries is sufficient to represent the hole inside wall boundaries.

5. Core Algorithm

In the first time step, the donor search is performed with alternating digital tree (ADT) [

17], which is a space partitioning binary tree. ADT tree splits the spatial domain into halves with axis-aligned cutting planes, recursively. The axis of cutting plane alternates in cyclic order in accordance with the levels in ADT. In three-dimensional space, there are three cutting planes with cutting axes parallel to the

x-,

y-, and

z-axis. The position of the cutting plane along the cutting axis depends on the number of dimensions required to define an object to be inserted. For example, if a point (0D) is to be inserted to an ADT, positions of cutting planes would be (

x,

y,

z) coordinates of the point along the respective cutting axes. Moreover, if the inserted object is a rectangle, the positions of cutting planes would be the six (

,

,

,

,

,

) dimensions of the rectangle in the respective orientations of the cutting plane. In general, objects to be inserted to ADT are n-orthotopes or hyperrectangles. In the example above, point and rectangle are 1- and 2-orthotopes. In this work, the objects are AABB, which is 3-orthotope, of mesh cells. Therefore, both the domain and objects to be inserted are six-dimensional.

For visualization of the insertion operation into ADT,

Figure 3 demonstrates the insertion of points into ADT in two-dimensional space. On the root level, point A is the median in the

x-axis, and it is associated with the whole domain,

. Domain

is divided by a plane with cutting axis parallel to the

x-axis at point A. Next, the cutting axis is changed to be the

y-axis. Point B, which is one of the points in the left half of

and is the median in the

y-axis, is assigned to

, which is the left half of

. Point C is inserted similar to Point B except that it belongs to the right half of

. The rest of the points are inserted into sub-branches of the tree in the same fashion.

The search operation on ADT is similar to insertion. Given a query point, AABBs which overlap the query point are returned. If the query point is outside the hypercube of the ADT, the search operation returns an empty array, meaning that none of AABBs in the tree overlaps the query point. Assuming that the query point is inside the hypercube of the tree, the search operates recursively by always starting from the root node. First, it is tested whether the query point is contained by the AABB in the current node. If so, the AABB is added to the array which is to be returned after the recursive search operation is completed. Regardless of containment of the query point by the AABB, the query point is redirected to one or both halves of the current node by testing the dimension of the query point against the cutting axis associated with the current level.

that the geometric shape of mesh cells are polyhedra which are not axis-aligned in an unstructured grid. However, ADT accepts only axis-aligned objects to be inserted. Therefore, given a mesh block,

, AABB and associated ID of each mesh cell are inserted into the respective ADT

. Once an ADT tree is constructed for every mesh block, the candidate donor cell for each mesh cell in

is searched out by providing the centroid (query point) of the cell as input to all trees

where

. As the output of search operation, each tree

returns an array of IDs corresponding to the mesh cells in

. The query point is tested for containment by each polyhedron corresponding to the returned ID. The mesh cell which contains the query point is added to the list of candidate donors. The procedure is shown in Algorithm 1.

| Algorithm 1: Donor search using ADT |

![Applsci 11 05132 i001 Applsci 11 05132 i001]() |

In the first time step, ADT is used for the donor search. In subsequent time steps, the donor search is performed with a stencil walk algorithm if the cell had a donor in the previous time step; otherwise, ADT is used as in the first time step. Referring to

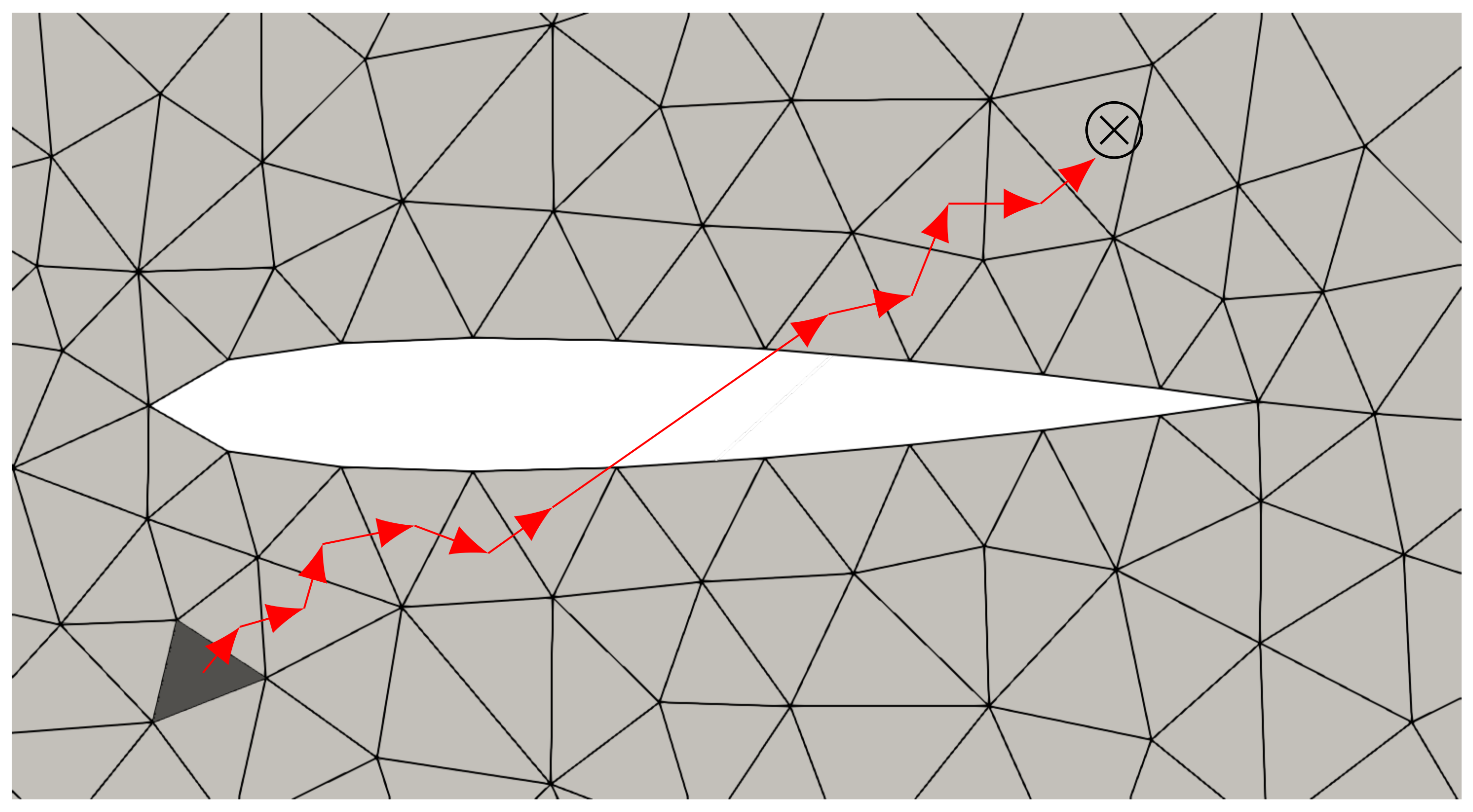

Figure 4, the stencil walk algorithm starts with choosing a starting or seed cell (shaded) and walking toward the query point (marked with a cross) by using cell-to-cell connectivity. First, a search line is drawn between the centroid of the seed cell and the query point. The search line is tested for intersection by the faces of the seed cell. The current cell (which was initially the seed cell) is updated to be the neighbor cell which shares the intersected face. When the current cell is near a boundary and, therefore, has no neighbor which shares the intersected face, all other faces of that boundary are tested for intersection by the search line. If an intersected boundary face is found, the current cell is updated to be the interior cell of that boundary. If no other boundary face is found, then the algorithm stops indicating that no cell containing the query point is found. When none of the faces of the current cell intersects the search line, the current cell is added to the list of candidate donor cells.

The reason for using the stencil walk algorithm in subsequent time steps is the absence of seed cells in the first time step. For receptors, the seeds are chosen to be the centroid of donor cells. For other cell types, seeds are not available and, therefore, as mentioned, ADT is used for the donor search. Since the stencil walk algorithm involves costly geometric intersections tests, a high number of walks is avoided by not picking random seed cells and using ADT whenever proper seed cells are not available.

After the donor search, if no candidate donor cell is found for a mesh cell, the query point is either outside of or inside a hole (if one exists) in . If has a hole, and if the query point is inside any of the bins of hole map of , then the mesh cell is labeled as hole cell. Once a mesh cell is labeled as a hole cell, its type cannot be changed regardless of interaction of the mesh cell with other mesh blocks. Otherwise, if the mesh cell is not a hole cell, it is labeled as field cell.

If a candidate donor cell is found and the volume of the query cell is larger than that of the candidate donor cell, then the mesh cell is labeled as receptor cell. A receptor cell may have multiple candidate donor cells. In this case, the candidate donor cell which has the smallest volume is assigned as the donor of the mesh cell. If the mesh cell has a smaller volume than a candidate donor cell, the mesh cell is labeled as a field cell. The reason for choosing the cell with smaller volume is to represent the domain with the highest resolution possible. Another option is to choose the cell closest to respective boundary. This kind of selection is beneficial in preserving boundary layer cells.

The cells which have a hole neighbor or are located next to an intergrid boundary are labeled as mandatory receptor cells. These receptor cells serve as intergrid boundary conditions.

The volume of intersection between mesh blocks can be reduced in order to save time by avoiding redundant interpolation of variables from donors to receptors. Minimization of the overlapped region is performed by excluding receptors having no field neighbor from the flow solution.

Once the donor search is completed, if the assembler and the solver have different partitioning layouts, the assembler maps cell types and donor IDs to the layout of the solver. In the case of sharing of the same partitioning layout, no further operation is required.

6. Governing Equations

The integral form of Euler equations is

where

V and

A are the volume and area of a cell and

and

are the vectors of conserved variables and interface fluxes:

u is the velocity component in face-normal direction,

v and

w are tangential velocity components,

E is total energy, and

p is static pressure. Euler equations are used in x-split form [

18] and, therefore, velocity components are aligned in Cartesian coordinates such that

u,

v, and

w are parallel to the

x-,

y-, and

z-axis.

For non-Cartesian unstructured meshes, to calculate the interface flux, conserved variables are rotated to face-normal coordinate system with transformation matrix:

where

and

are found from components of face-normal

:

The Riemann problem is approximately solved at each cell interface with HLLC solver [

18] in order to compute interface flux. When providing left and right states to the Riemann solver, the interface velocities are corrected by subtracting the rotated mesh velocity

from the rotated velocity such that

Discretized equations are solved with dual time stepping approach with

where superscripts

s and

n denote states in pseudo and real times. The parameter

is real time step and

is pseudo time step calculated from

where

is the maximum absolute eigenvalue among all faces of a cell. Equation (

6) is solved until steady state for maximum 100 pseudo time steps. When the left hand side of the equation vanishes, the original unsteady equation recovers. Interface flux

is evaluated explicitly as a function of conservative variable vector in previous pseudo time step, that is

.

7. CAD Models and Meshes

The assembler and solver are tested on the generic helicopter configuration [

12] consisting of a fuselage, pylon, and four NACA0012-profiled rotor blades.

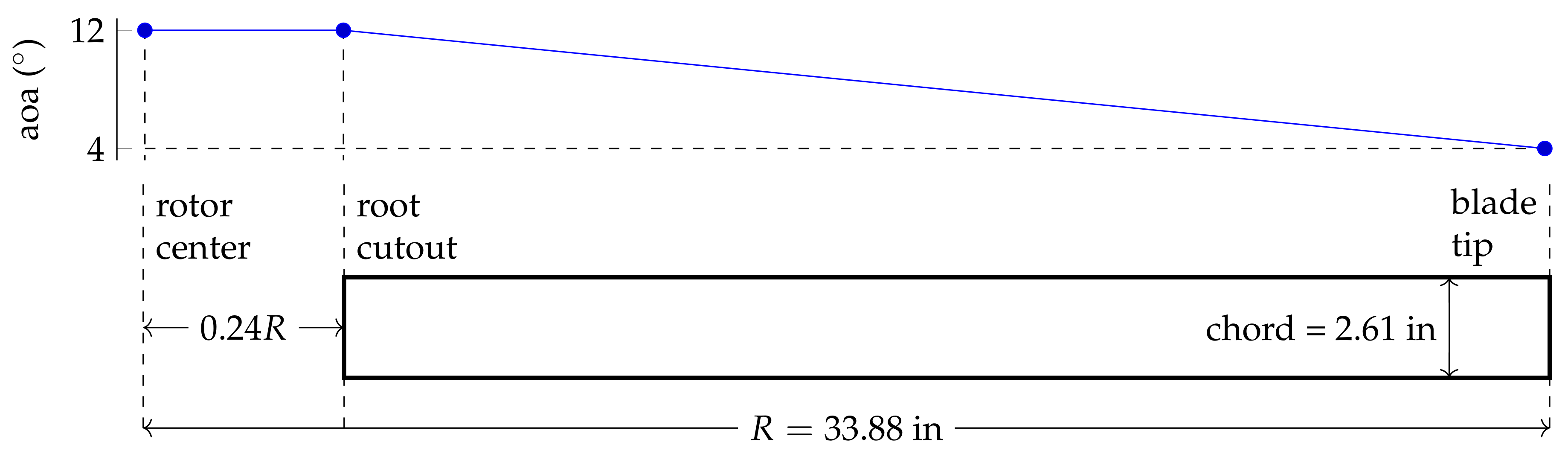

In [

12], several collective pitch angles were tested, whereas in this simulation only 4

of collective pitch is considered. Together with 8

of linear twist, the angle of attack ranges from 8

at the tip of a blade to 12

at the root cut. A sketch of a blade is shown in

Figure 5.

The shapes of the fuselage and pylon are defined with super-ellipse equations:

is the non-dimensional longitudinal coordinate with limits [0, 1.997] for fuselage and [0.400, 1.018] for pylon and

l is the desired length of fuselage which is 78.57 inches. Dimensions given in imperial units in [

12] were not converted to metric units to avoid ambiguity. Coefficients (

to

) are shown in

Table A1 in appendices. Cross-sectional coordinates

and

are obtained with

where

ranges from 0 to 2

and

r is defined as

Equation (

8) and the coefficients provided in [

12] are modified since their original counterparts caused problems such as division by zero. Equations (

8) and (

9) are different from the counterparts by modulus operator around

, and

and

. The modified coefficients are encircled in

Table A1 and

Table A2.

Figure 6 shows CAD models of fuselage, pylon, four rotor blades, and hub modeled with FreeCAD [

19]. Leading and trailing faces of fuselage are blunted slightly in order to have satisfactory mesh quality. The hub is modeled as a cylinder with diameter 0.1 m and length 0.05 m, and extensions which connect the hub to the blades have the same profile and angle of attack with the roots of respective blades. The extensions span from the hub such that they overlap 5% of respective blades.

CAD models are transferred to the mesh generator, GMSH [

20], to generate tetrahedral mesh for each component. Rotor blades have cylindrical meshes with diameter

and length

. Fuselage has a spherical mesh with diameter of 20 inches enclosing all blade and hub meshes. The hub also has a spherical mesh with diameter scaling with root cutout length such that

.

Figure 7 shows cross-sections of the fuselage, one of the blades, and the hub. Element size

s on fuselage, blade, and hub surfaces are 3, 1, and 2 mm. Volumetric element size is calculated with

for all components where

d is the shortest distance from respective wall surface.

Table 1 shows the number of cells in each mesh.

Figure 8 shows a slice of field cells after donor search. Each color corresponds to a different mesh. In the figure, there are cells that are undesirably larger than the surrounding cells such the one enclosed in a yellow circle. These cells were receptors having candidate donors which themselves were mandatory receptors, or by definition, they were orphans. Since they have to be the donors of mandatory receptors, they are converted to field cells.

Figure 9a shows receptors of a slice of fuselage mesh. It is clear that the receptors which only neighbor other receptors do not contribute to flow solution but increase simulation run time by interpolating flow variables across donors.

Figure 9b shows the same mesh after exclusion of redundant receptors.

8. Results

Test cases are run on TRUBA’s [

21] clusters. In order to reduce queue time, computations are run on different set of nodes with different specifications, as shown in

Table 2. In this work, hyperthreading is not used and virtual processors are mapped to cores; therefore, processor is an alias for core for the rest of the paper. Since a node can have a maximum of 20 cores, for a job requesting more than 20 processors, cores cannot fit into a single node but they will belong to different nodes. Therefore, data transfer takes place between cores having both shared memory and distributed memories. Open MPI’s byte transfer layer (BTL) framework uses the best component such as shared memory, TCP, Infiniband, and so on for data transfer between cores. Again, in order to reduce queue time, the number of nodes is left to be determined by the resource manager. The results in this section are obtained by using maximum allowable number of processors per user (128) usually distributed over 7–10 nodes.

The most intensive memory usage is observed during data exchange between processors. Exchange of spatial partitions during load (re)balance and exchange of mesh cells after mesh motion are two major tasks involving intensive MPI communication. Although it is verified by Valgrind [

22] that no memory leak occurs, memory usage increases after the mentioned tasks. It is expected that the reason for increasing memory usage is memory fragmentation caused by frequent allocation/deallocation of buffers leaving fragmented chunks of memory space. An obvious solution is using fixed buffers for data exchange. However, after load rebalance partitioning layout changes, therefore, the buffers used for data exchange have to be deallocated. Once memory usage hits the memory limit (8 GB per core), the simulation is halted by resource manager. In order not to lose progress, crucial data structures of assembler and solver are serialized with Boost’s serialization library (which is also used by Boost MPI for data transfer), and written to binary archives for every quarter (

) rotation. Processors read deserialized data from respective archives and restart the simulation with reset memory space.

The most time consuming tasks per time step are shown in

Figure 10. These tasks remain as the most time consuming in all time steps. However, note that

Rebalance may not be needed for all time steps. The execution time of

Solve is based on the maximum number of pseudo time steps (100) to solve Euler equations. Since the assembler constitutes less time compared to the solver, it is unnecessary to optimize load balance for the assembler. As discussed in

Section 2, it is possible to have separate partitions for the assembler and the flow solver in order to optimize load balance differently. However, having two separate partitions requires mapping of data across different partitioning layouts. The case with two different partitions is also tested, and it is found that mapping of data increases the total run time of simulation.

The frequency of load rebalance depends on relative time-cost of load rebalancing and the solver. In the case of 100 pseudo time steps for the solver, rebalancing is more costly than the solver and, therefore,

Rebalance is called when the ratio of the maximum to the minimum number of cells across processors exceeds 1000. The rebalance threshold is not the optimum and can be improved by an algorithm involving relative costs of tasks.

Figure 11 shows the run time per time step with and without rebalance. Areas under each curve represent total run time over quarter rotation of rotor. The time saved with load rebalancing is 13%, which adds up periodically for every quarter rotation due to the fact that the initial spatial partitioning is restored for every quarter rotation.

The downside of the proposed load balancing method is that the whole load balancing procedure (octree construction and adaptive refinement) should be repeated from scratch. One of the main reasons of high cost of load rebalancing is the high number of bins resulting from the refinement of octree. As explained in

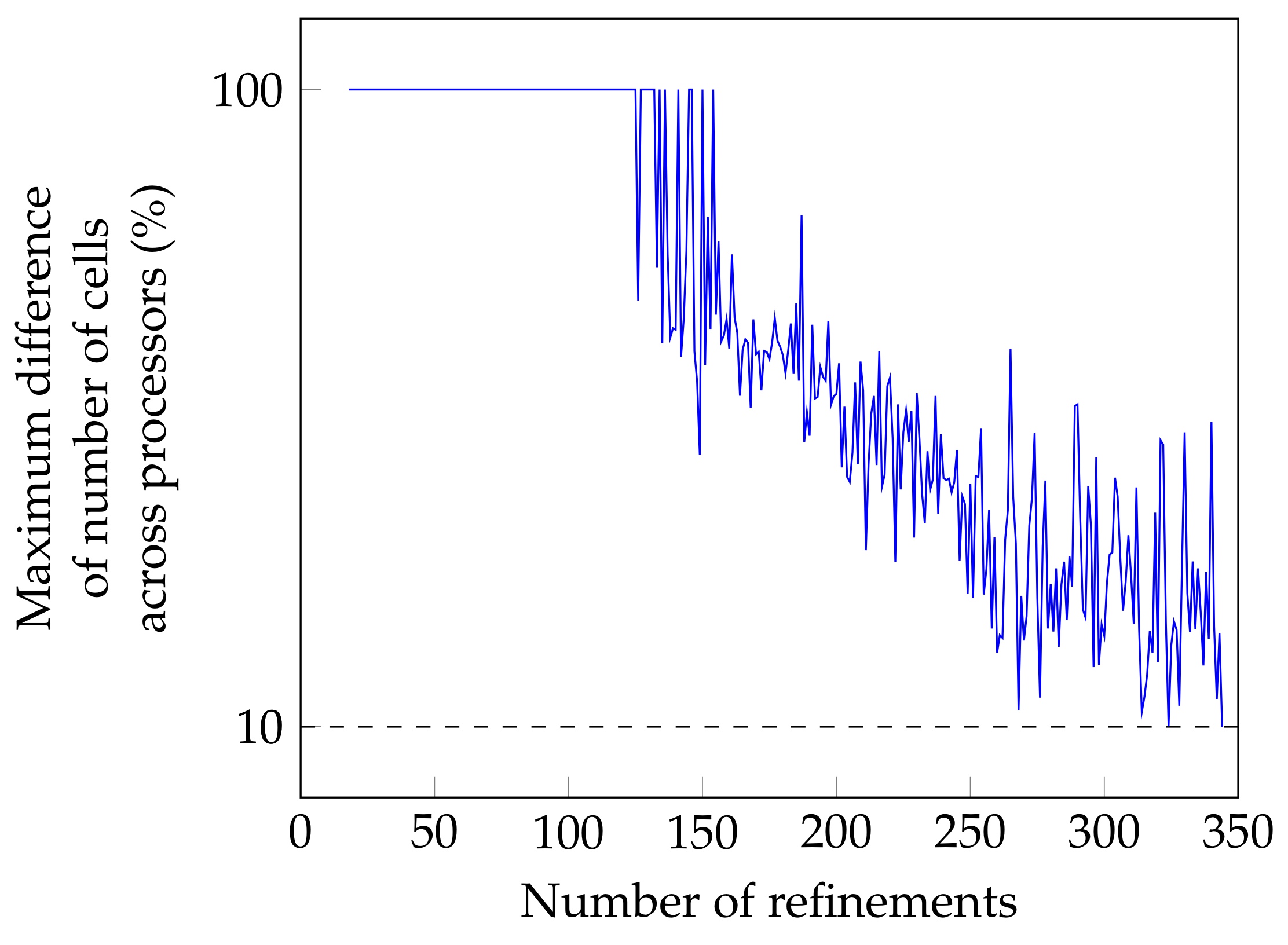

Section 2, bins are merged after refinement of octree in order to reduce communication among bins. Time spent on merging of octree bins is proportional to the number of octree bins. In order to reduce load imbalance across processors below 10%, 344 refinement steps are executed, as shown in

Figure 12 resulting in 1040 bins. Merging all 1040 bins into 128 (the number of processors) bins causes the load balancing to be the most time consuming task. Frequency of load rebalancing is also related to the number of merged octree bins. The higher the number of merged octree bins, the more is the number of mesh cells that move across octree bins after mesh motion in every time step.

The tasks Exchange and Reconnect are for exchange of mesh cells after mesh motion and reconnection of arrival mesh cells to the local mesh blocks. Exchange has to be called in every time step in order to keep mesh cells in correct partitions/processors even though load rebalancing is to be executed.

Figure 13 shows the total run time and speed-up for different number of processors. Speed-up is calculated by dividing run time by the run time for 64 processors. One of the reasons for speed-up, as expected, is distribution of computational load among more computational resources. Another reason for speed-up, in the particular case of partially shared memory computing, may be the increase in shared memory. Resource manager allocates 8 GB memory per core. Although memory is provided per core basis, cores are free to read/write any portion of cumulative shared memory space assigned to a user.

The number of ‘walks’ in the stencil walk algorithm ranged from 3 to more than 1000. Whenever the number of walks exceeded 1000, the donor search algorithm switched from stencil walking to ADT. Usage of the stencil walk algorithm saves 5% percent of the time of donor search with only using ADT in every time step. However, in problems where the time step is sufficiently high and current donor cells are sufficiently far away from the seeds, the high number of cell-to-cell walks will cause the stencil walk algorithm to perform slower than ADT.

Although some of the tasks (e.g., donor search) are common in research papers pertaining to overset mesh methodology [

2,

6,

11], due to the lack of exact definitions and implementation details of tasks, comparisons of parallel performance parameters can be misleading even if better results are obtained. In terms of partitioning method, this paper is an improvement over Suggar++ [

6] by usage of adaptive refinement instead of predetermined volumes for spatial partitioning and, therefore, it is useful to compare parallel performance results of Suggar++ with the results obtained in this paper. In [

6], the wall clock time from the combination of tasks that are hole cut, donor search, and overlap minimization and respective speed-up is presented for the case of helicopter fuselage and blades for up to 8 processors. Since problem sizes (the number of cells) are different, it is appropriate to compare speed-up results only.

Figure 14 shows speed-up for the mentioned tasks. The main inhibitor of speed-up is hole cutting task as it requires inter-processor communication for AABB of mesh walls. However, for up to 8 processors, the communication cost across processors is not substantial. Donor search and overlap minimization are completely local tasks, meaning that there is no inter-processor communication required. Therefore, speed-up for these three tasks remains nearly linear for up to 8 processors. Note that in this work, hole cutting is an integral part of donor search opposed to Suggar++’s direct hole cutting which is a standalone task. As implementation details of Suggar++ are unknown, it is unclear why Suggar++ shows such non-linear behavior. It should also be noted that spatial partitioning is a memory intensive operation. Since memory cannot explicitly be requested and is provided per core basis in this work, some of the modules such as the flow solver have to be deactivated in order to obtain performance results for 8 and less number processors.