Towards Energy Efficiency in Data Centers: An Industrial Experience Based on Reuse and Layout Changes

Abstract

1. Introduction

- Is it possible to achieve better energy efficiency in data centers just by changing the equipment’s layout and taking advantage of legacy resources?

- How can the data center keep its consumption of physical and energy resources sustainable despite the growing demand for processing and storage?

- What know-how was gained from the challenges of implementing a data center in a tropical climate?

2. Related Works

3. Proposed Solution to Improve Cooling Efficiency

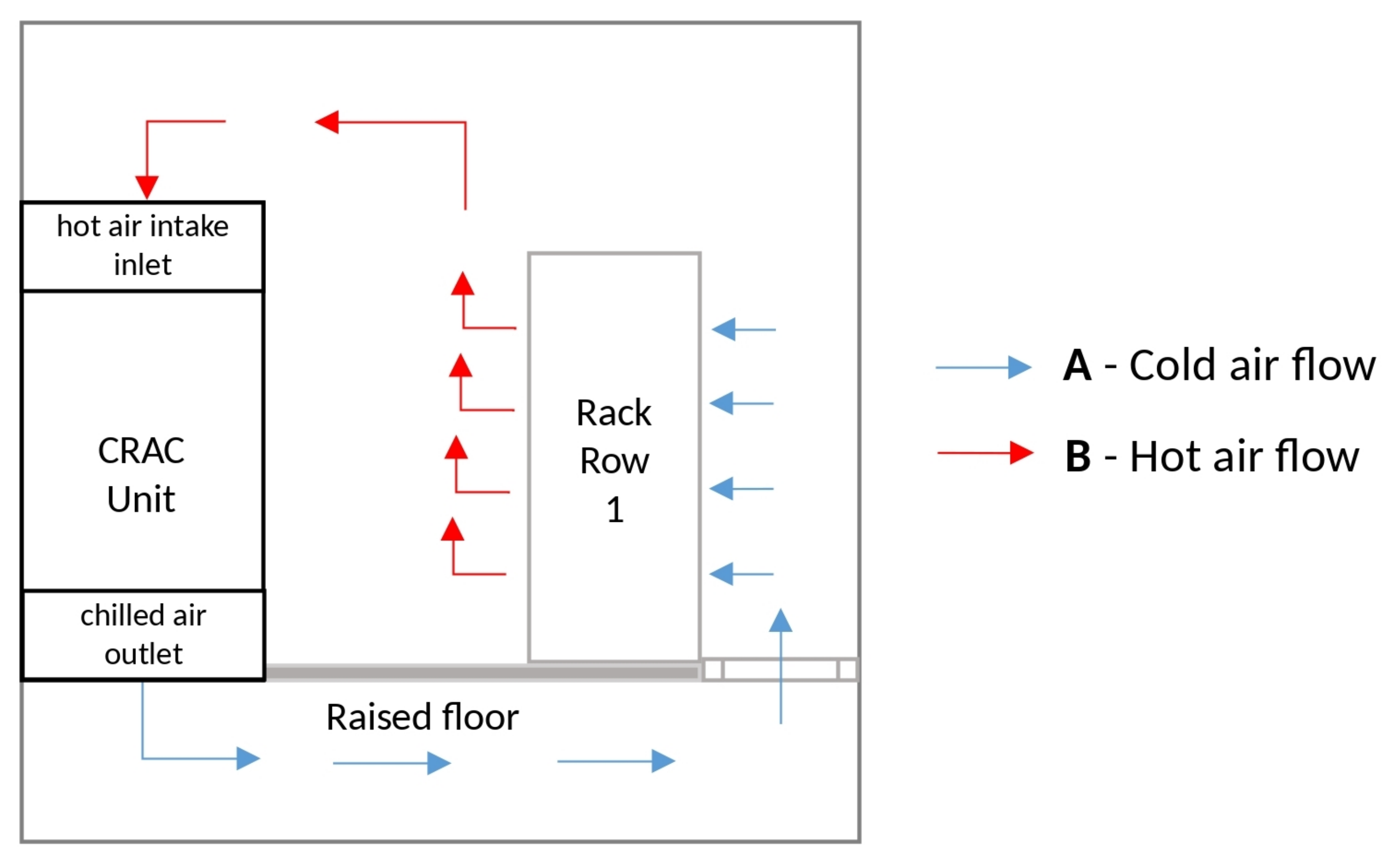

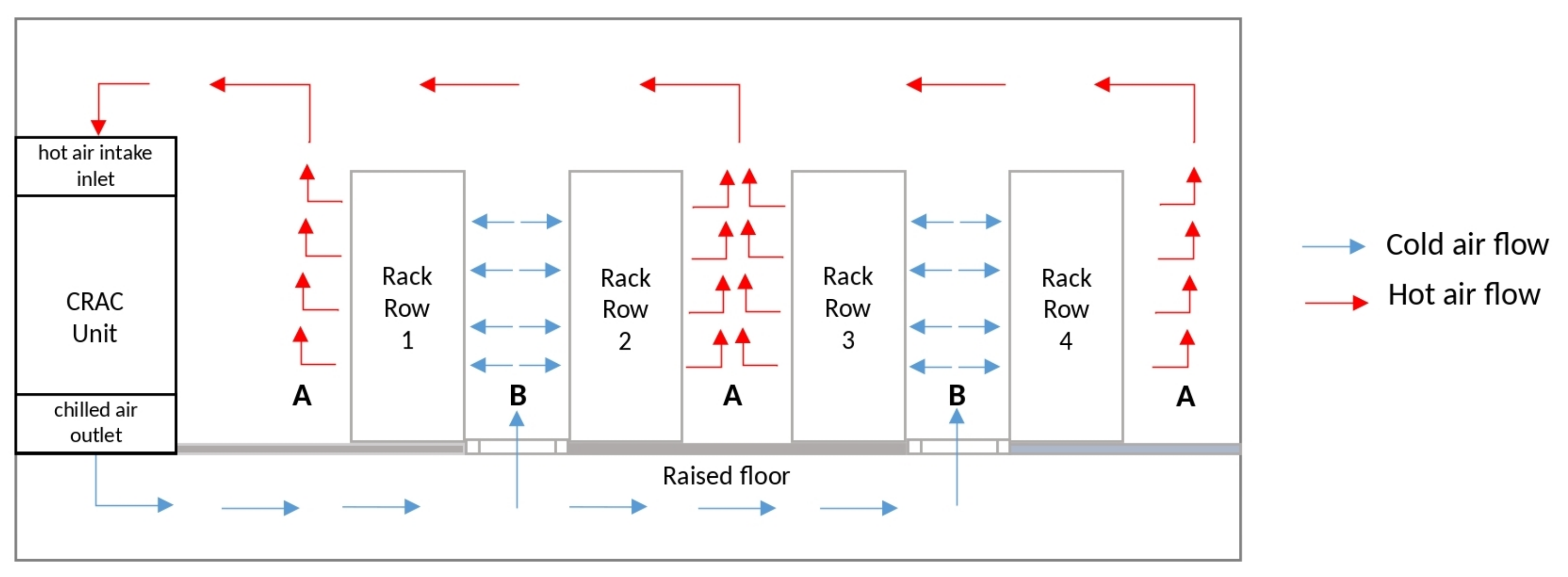

3.1. Cooling with Downward Air Supply (DownFlow)

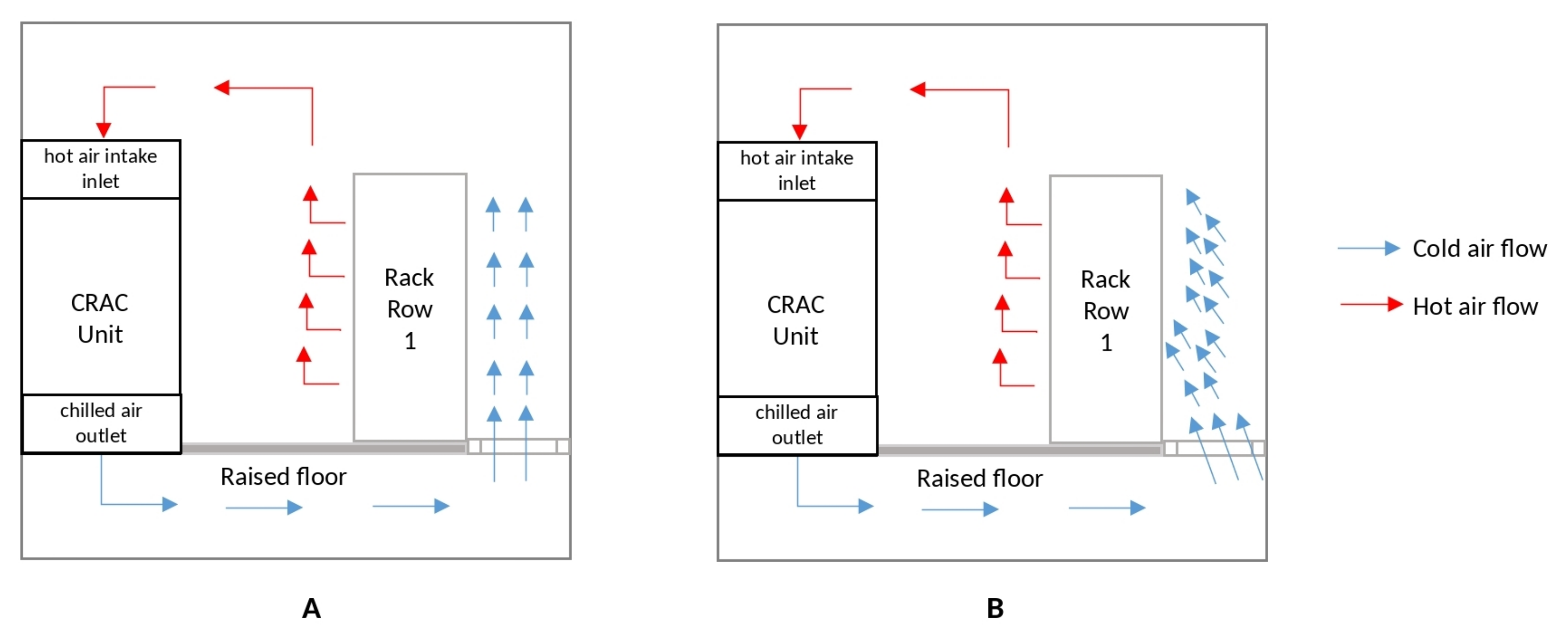

3.2. High Flow Air Diffusers

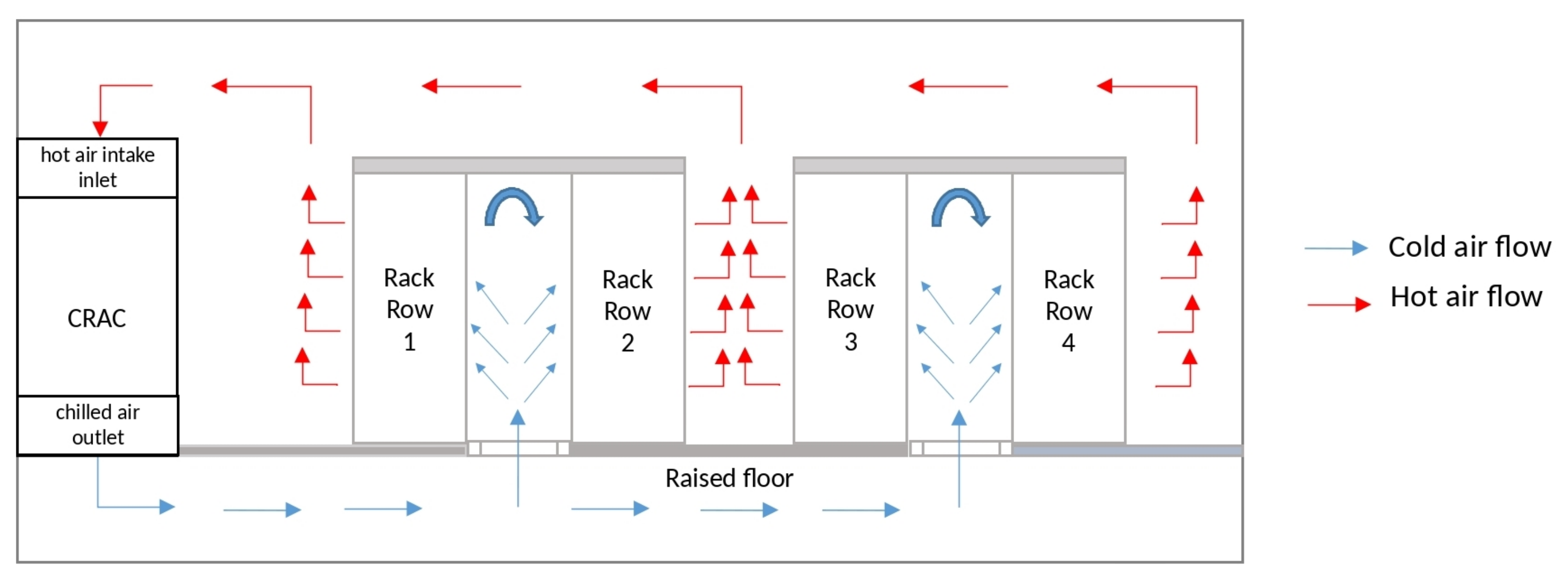

3.3. Cold Aisle Confinement

- Lower implementation cost: consistent with our goal of keeping the construction budget of the new data center as small as possible without compromising the quality of air conditioning;

- Implementation simplicity: basic confinement of the aisle requires only installing doors and a roof. This low complexity helped us keep to the planned schedule for putting the new data center into production;

- Increased operating time during power failures: if the auxiliary power system that supplies the computer room air conditioning (CRAC) units, such as the generator set, fails to start or starts late, the confined cold aisle acts as an internal reservoir of cold air that gives the servers significant additional running time before they shut down due to excessive temperature. This property of cold aisle confinement gives us greater confidence that the automated shutdown of our 346 pieces of legacy equipment, plus 80 new ones, would have enough time to complete without being compromised by excess heat.
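The ride-through benefit in the last item can be estimated to first order with a lumped energy balance: while the CRACs are off, the heat dissipated by the servers is absorbed by the cold air held in the confined aisle (and, optionally, by the thermal mass of nearby equipment and structures) until the inlet temperature reaches its limit, so t ≈ (ρ·V·c_p + C_extra)·ΔT / P. The sketch below is a simplification, not the authors' model: it assumes well-mixed air, no leakage or hot-air recirculation into the aisle, and a constant IT load. The function name, parameter names, and all numeric inputs are hypothetical.

```python
# Sketch: first-order ride-through estimate for a contained cold aisle.
# Hypothetical assumptions (not from the article): well-mixed air, no leakage
# or recirculation, constant IT heat load, constant air properties.

AIR_DENSITY = 1.2           # kg/m^3, dry air near 20 °C
AIR_SPECIFIC_HEAT = 1005.0  # J/(kg·K), dry air at constant pressure


def ride_through_seconds(aisle_volume_m3, allowed_rise_k, it_load_w,
                         extra_capacity_j_per_k=0.0):
    """Seconds until the aisle air warms by allowed_rise_k with cooling off.

    extra_capacity_j_per_k optionally adds the thermal capacitance (J/K) of
    equipment, plenum air, or structures that also absorb heat.
    """
    if it_load_w <= 0:
        raise ValueError("IT load must be positive")
    air_capacity = AIR_DENSITY * aisle_volume_m3 * AIR_SPECIFIC_HEAT  # J/K
    stored_cold = (air_capacity + extra_capacity_j_per_k) * allowed_rise_k  # J
    return stored_cold / it_load_w  # s
```

For example, `ride_through_seconds(10.0, 5.0, 6030.0)` returns 10.0: 1.2 × 10 × 1005 × 5 = 60,300 J divided by 6,030 W. Because air alone stores relatively little heat, the estimate is sensitive to how much additional thermal mass is included.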

4. Results

- April 2018–April 2019: the period before the move to the new data center, from April 2018, when these servers were installed in the old data center, until April 2019, when we shut down these eight servers to move them to the new building;

- May 2019–January 2020: the period after the move, from May 2019, when we started up the eight servers in the new data center, until January 2020, the cutoff point of this study.
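A minimal sketch of how per-server inlet readings could be grouped into the two study periods and summarized as a mean and sample standard deviation, as reported for the eight GPU servers. The `(server, day, inlet_celsius)` record layout and the function names are hypothetical, since the article does not describe its data pipeline; the period bounds follow the text, with whole months assumed to be inclusive.

```python
from datetime import date
from statistics import mean, stdev

# Study periods from the text; whole months are assumed to be inclusive.
PERIODS = {
    "before": (date(2018, 4, 1), date(2019, 4, 30)),  # old data center
    "after": (date(2019, 5, 1), date(2020, 1, 31)),   # new data center
}


def period_of(day):
    """Return the study period containing `day`, or None if outside both."""
    for name, (start, end) in PERIODS.items():
        if start <= day <= end:
            return name
    return None


def summarize(samples):
    """Group hypothetical (server, day, inlet_celsius) records by (server, period).

    Returns {(server, period): (mean, sample standard deviation)}.
    Records outside both periods are ignored.
    """
    groups = {}
    for server, day, inlet in samples:
        period = period_of(day)
        if period is not None:
            groups.setdefault((server, period), []).append(inlet)
    return {
        key: (mean(values), stdev(values) if len(values) > 1 else 0.0)
        for key, values in groups.items()
    }
```

Readings taken after the January 2020 cutoff simply fall outside both periods and are dropped.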

5. Conclusions

- Better energy efficiency in our new data center;

- Better cooling performance for both old and new servers, even though the new data center is located in a city with a humid tropical climate and an average temperature of 27 °C (with peaks of 37 °C);

- Since our equipment operates smoothly at inlet temperatures of 20–22 °C, while the inlet temperatures currently measured are around 15–16 °C, we can use this margin to reduce the operating time of the CRACs, which would reduce electricity consumption and, consequently, expenses;

- The option of bringing more servers into the new data center, since the cooling system has spare capacity for additional thermal load if we use 22 °C as the maximum inlet temperature in the cold aisles.

- Explore the techniques identified in the industrial and practical related works, providing further experimental results;

- Develop a software-based methodology to optimize energy efficiency;

- Develop a new metric to evaluate the impact of airflow direction in data centers.
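The observation that measured inlet temperatures of 15–16 °C leave margin below the 20–22 °C operating range can be illustrated with a toy on/off (hysteresis) controller: the CRAC switches off when the inlet temperature falls to the lower band edge and back on when it reaches the upper edge, rather than running continuously. This is a sketch under a hypothetical linear room model (fixed warming and cooling rates per time step); it is not the control logic used in the article, and `simulate_crac` and its parameters are illustrative names.

```python
def simulate_crac(start_temp, low, high, heat_rate, cool_rate, steps):
    """Simulate hysteresis (on/off) control of one CRAC unit.

    Hypothetical linear model: each step the inlet temperature rises by
    heat_rate (°C) while the CRAC is off and falls by cool_rate while it is on.
    Returns (fraction of steps with the CRAC on, maximum inlet temperature).
    """
    temp = start_temp
    crac_on = False
    on_steps = 0
    max_temp = temp
    for _ in range(steps):
        # Switch only at the band edges so the unit does not short-cycle.
        if crac_on and temp <= low:
            crac_on = False
        elif not crac_on and temp >= high:
            crac_on = True
        temp += -cool_rate if crac_on else heat_rate
        on_steps += crac_on
        max_temp = max(max_temp, temp)
    return on_steps / steps, max_temp
```

With `simulate_crac(15.4, 20.0, 22.0, 0.05, 0.05, 10_000)` the unit is on for roughly half of the steps, compared with a duty cycle of 1.0 for continuous operation, while the peak inlet temperature stays within one step of 22 °C. In this linear model the long-run duty cycle is simply heat_rate / (heat_rate + cool_rate); real savings also depend on how CRAC efficiency varies with load and return-air temperature, which the sketch ignores.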

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Conflicts of Interest

References

1. Hasan, M.M.; Popp, J.; Oláh, J. Current landscape and influence of big data on finance. J. Big Data 2020, 7, 1–17.
2. Klimek, M. Financial Optimization of the Resource-Constrained Project Scheduling Problem with Milestones Payments. Appl. Sci. 2021, 11, 661.
3. De Aguiar, E.J.; Faiçal, B.S.; Krishnamachari, B.; Ueyama, J. A Survey of Blockchain-Based Strategies for Healthcare. ACM Comput. Surv. 2020, 53.
4. Giuntini, F.T.; Cazzolato, M.T.; dos Reis, M.d.J.D.; Campbell, A.T.; Traina, A.J.; Ueyama, J. A review on recognizing depression in social networks: Challenges and opportunities. J. Ambient Intell. Humaniz. Comput. 2020, 11, 4713–4729.
5. Althnian, A.; AlSaeed, D.; Al-Baity, H.; Samha, A.; Dris, A.B.; Alzakari, N.; Abou Elwafa, A.; Kurdi, H. Impact of Dataset Size on Classification Performance: An Empirical Evaluation in the Medical Domain. Appl. Sci. 2021, 11, 796.
6. Fischer, C.; Pardos, Z.A.; Baker, R.S.; Williams, J.J.; Smyth, P.; Yu, R.; Slater, S.; Baker, R.; Warschauer, M. Mining Big Data in Education: Affordances and Challenges. Rev. Res. Educ. 2020, 44, 130–160.
7. Neto, J.R.; Boukerche, A.; Yokoyama, R.S.; Guidoni, D.L.; Meneguette, R.I.; Ueyama, J.; Villas, L.A. Performance evaluation of unmanned aerial vehicles in automatic power meter readings. Ad Hoc Netw. 2017, 60, 11–25.
8. Giuntini, F.T.; Beder, D.M.; Ueyama, J. Exploiting self-organization and fault tolerance in wireless sensor networks: A case study on wildfire detection application. Int. J. Distrib. Sens. Netw. 2017, 13, 1550147717704120.
9. Filho, G.P.R.; Meneguette, R.I.; Maia, G.; Pessin, G.; Gonçalves, V.P.; Weigang, L.; Ueyama, J.; Villas, L.A. A fog-enabled smart home solution for decision-making using smart objects. Future Gener. Comput. Syst. 2020, 103, 18–27.
10. Safara, F.; Souri, A.; Baker, T.; Al Ridhawi, I.; Aloqaily, M. PriNergy: A priority-based energy-efficient routing method for IoT systems. J. Supercomput. 2020, 76, 8609–8626.
11. Data Center Market—Global Outlook and Forecast 2020–2025. 2020. Available online: https://www.researchandmarkets.com/reports/4986841/data-center-market-global-outlook-and-forecast (accessed on 29 April 2021).
12. Salehi, P.; Zhang, K.; Jacobsen, H.A. On Delivery Guarantees in Distributed Content-Based Publish/Subscribe Systems. In Middleware ’20, Proceedings of the 21st International Middleware Conference, Delft, The Netherlands, 7–11 December 2020; Association for Computing Machinery: New York, NY, USA, 2020; pp. 61–73.
13. Hasenburg, J.; Stanek, F.; Tschorsch, F.; Bermbach, D. Managing Latency and Excess Data Dissemination in Fog-Based Publish/Subscribe Systems. In Proceedings of the 2020 IEEE International Conference on Fog Computing (ICFC), Sydney, NSW, Australia, 21–24 April 2020; pp. 9–16.
14. Pereira Rocha Filho, G.; Yukio Mano, L.; Demetrius Baria Valejo, A.; Aparecido Villas, L.; Ueyama, J. A Low-Cost Smart Home Automation to Enhance Decision-Making based on Fog Computing and Computational Intelligence. IEEE Lat. Am. Trans. 2018, 16, 186–191.
15. Shvachko, K.; Kuang, H.; Radia, S.; Chansler, R. The hadoop distributed file system. In Proceedings of the 2010 IEEE 26th Symposium on Mass Storage Systems and Technologies (MSST), Incline Village, NV, USA, 3–7 May 2010; pp. 1–10.
16. Widodo, R.N.S.; Abe, H.; Kato, K. HDRF: Hadoop data reduction framework for hadoop distributed file system. In Proceedings of the 11th ACM SIGOPS Asia-Pacific Workshop on Systems, Tsukuba, Japan, 24–25 August 2020; pp. 122–129.
17. Elkawkagy, M.; Elbeh, H. High Performance Hadoop Distributed File System. Int. J. Netw. Distrib. Comput. 2020, 8, 119–123.
18. Salloum, S.; Dautov, R.; Chen, X.; Peng, P.X.; Huang, J.Z. Big data analytics on Apache Spark. Int. J. Data Sci. Anal. 2016, 1, 145–164.
19. Dagdia, Z.C.; Zarges, C.; Beck, G.; Lebbah, M. A distributed rough set theory based algorithm for an efficient big data pre-processing under the spark framework. In Proceedings of the 2017 IEEE International Conference on Big Data (Big Data), Boston, MA, USA, 11–14 December 2017; pp. 911–916.
20. Jianpeng, H.; Xiyang, J.; Yifei, T.; Feng, G. Research of elevator real time monitoring method based on spark. Mater. Sci. Eng. 2020, 758, 012078.
21. Ichinose, A.; Takefusa, A.; Nakada, H.; Oguchi, M. A study of a video analysis framework using Kafka and spark streaming. In Proceedings of the 2017 IEEE International Conference on Big Data (Big Data), Boston, MA, USA, 11–14 December 2017; pp. 2396–2401.
22. Hiraman, B.R.; Viresh, M.C.; Abhijeet, C.K. A study of Apache Kafka in big data stream processing. In Proceedings of the 2018 International Conference on Information Communication, Engineering and Technology (ICICET), Pune, India, 29–31 August 2018; pp. 1–3.
23. De Alwis, B.; Sillito, J. Why are software projects moving from centralized to decentralized version control systems? In Proceedings of the 2009 ICSE Workshop on Cooperative and Human Aspects on Software Engineering, Vancouver, BC, Canada, 17 May 2009; pp. 36–39.
24. Correa, E.S.; Fletscher, L.A.; Botero, J.F. Virtual Data Center Embedding: A Survey. IEEE Lat. Am. Trans. 2015, 13, 1661–1670.
25. Jiadi, L.; Yang, H.; Huan, L.; Xinli, Z.; WenJing, L. Research on Data Center Operation and Maintenance Management Based on Big Data. In Proceedings of the 2020 International Conference on Computer Engineering and Application (ICCEA), Guangzhou, China, 18–20 March 2020; pp. 124–127.
26. Rocha, E.; Leoni Santos, G.; Endo, P.T. Analyzing the impact of power subsystem failures and checkpoint mechanisms on availability of cloud applications. IEEE Lat. Am. Trans. 2020, 18, 138–146.
27. Li, Y.; Wang, X.; Luo, P.; Pan, Q. Thermal-aware hybrid workload management in a green datacenter towards renewable energy utilization. Energies 2019, 12, 1494.
28. Song, Z.; Zhang, X.; Eriksson, C. Data center energy and cost saving evaluation. Energy Proc. 2015, 75, 1255–1260.
29. Liu, L.; Zhang, Q.; Zhai, Z.J.; Yue, C.; Ma, X. State-of-the-art on thermal energy storage technologies in data center. Energy Build. 2020, 226, 110345.
30. Patterson, M.K. The effect of data center temperature on energy efficiency. In Proceedings of the 2008 11th Intersociety Conference on Thermal and Thermomechanical Phenomena in Electronic Systems, Orlando, FL, USA, 28–31 May 2008; pp. 1167–1174.
31. Han, J.W.; Zhao, C.J.; Yang, X.T.; Qian, J.P.; Fan, B.L. Computational modeling of airflow and heat transfer in a vented box during cooling: Optimal package design. Appl. Therm. Eng. 2015, 91, 883–893.
32. Prabha, B.; Ramesh, K.; Renjith, P.N. A Review on Dynamic Virtual Machine Consolidation Approaches for Energy-Efficient Cloud Data Centers. In Data Intelligence and Cognitive Informatics; Jeena Jacob, I., Kolandapalayam Shanmugam, S., Piramuthu, S., Falkowski-Gilski, P., Eds.; Springer: Singapore, 2021; pp. 761–780.
33. Varshni, Y. Temperature dependence of the energy gap in semiconductors. Physica 1967, 34, 149–154.
34. Gerwin, H.; Scherer, W.; Teuchert, E. The TINTE Modular Code System for Computational Simulation of Transient Processes in the Primary Circuit of a Pebble-Bed High-Temperature Gas-Cooled Reactor. Nucl. Sci. Eng. 1989, 103, 302–312.
35. Ehlers, T.A.; Chaudhri, T.; Kumar, S.; Fuller, C.W.; Willett, S.D.; Ketcham, R.A.; Brandon, M.T.; Belton, D.X.; Kohn, B.P.; Gleadow, A.J.; et al. Computational Tools for Low-Temperature Thermochronometer Interpretation. Rev. Mineral. Geochem. 2005, 58, 589–622.
36. Satheesh, A.; Muthukumar, P.; Dewan, A. Computational study of metal hydride cooling system. Int. J. Hydrogen Energy 2009, 34, 3164–3172.
37. Grilli, F.; Pardo, E.; Stenvall, A.; Nguyen, D.N.; Yuan, W.; Gömöry, F. Computation of Losses in HTS Under the Action of Varying Magnetic Fields and Currents. IEEE Trans. Appl. Supercond. 2014, 24, 78–110.
38. Shahid, S.; Agelin-Chaab, M. Analysis of Cooling Effectiveness and Temperature Uniformity in a Battery Pack for Cylindrical Batteries. Energies 2017, 10, 1157.
39. Murray, A.V.; Ireland, P.T.; Wong, T.H.; Tang, S.W.; Rawlinson, A.J. High Resolution Experimental and Computational Methods for Modelling Multiple Row Effusion Cooling Performance. Int. J. Turbomach. Propuls. Power 2018, 3, 4.
40. Nebot-Andrés, L.; Llopis, R.; Sánchez, D.; Catalán-Gil, J.; Cabello, R. CO2 with Mechanical Subcooling vs. CO2 Cascade Cycles for Medium Temperature Commercial Refrigeration Applications Thermodynamic Analysis. Appl. Sci. 2017, 7, 955.
41. Heller, B.; Seetharaman, S.; Mahadevan, P.; Yiakoumis, Y.; Sharma, P.; Banerjee, S.; McKeown, N. Elastictree: Saving energy in data center networks. In Proceedings of the 7th USENIX Symposium on Networked Systems Design and Implementation (NSDI ’10), San Jose, CA, USA, 28–30 April 2010; Volume 10, pp. 249–264.
42. McKeown, N.; Anderson, T.; Balakrishnan, H.; Parulkar, G.; Peterson, L.; Rexford, J.; Shenker, S.; Turner, J. OpenFlow: Enabling innovation in campus networks. ACM SIGCOMM Comput. Commun. Rev. 2008, 38, 69–74.
43. Han, Y.; Li, J.; Chung, J.Y.; Yoo, J.H.; Hong, J.W.K. SAVE: Energy-aware virtual data center embedding and traffic engineering using SDN. In Proceedings of the 2015 1st IEEE Conference on Network Softwarization (NetSoft), London, UK, 13–17 April 2015; pp. 1–9.
44. Ma, X.; Zhang, Z.; Su, S. Energy-aware virtual data center embedding. J. Inf. Process. Syst. 2020, 16, 460–477.
45. Sun, P.; Guo, Z.; Liu, S.; Lan, J.; Wang, J.; Hu, Y. SmartFCT: Improving Power-Efficiency for Data Center Networks with Deep Reinforcement Learning. Comput. Netw. 2020, 179, 107255.
46. Saadi, Y.; El Kafhali, S. Energy-efficient strategy for virtual machine consolidation in cloud environment. Soft Comput. 2020, 14845–14859.
47. MirhoseiniNejad, S.; Moazamigoodarzi, H.; Badawy, G.; Down, D.G. Joint data center cooling and workload management: A thermal-aware approach. Future Gener. Comput. Syst. 2020, 104, 174–186.
48. Kaffes, K.; Sbirlea, D.; Lin, Y.; Lo, D.; Kozyrakis, C. Leveraging application classes to save power in highly-utilized data centers. In Proceedings of the 11th ACM Symposium on Cloud Computing, Virtual Event, USA, 19–21 October 2020; pp. 134–149.
49. IBM Knowledge Center. Raised Floors. 2020. Available online: https://www.ibm.com/support/knowledgecenter/en/POWER8/p8ebe/p8ebe_raisedfloors.htm (accessed on 28 January 2021).
50. Beitelmal, A.H. Numerical investigation of data center raised-floor plenum. In Proceedings of the ASME International Mechanical Engineering Congress and Exposition, Houston, TX, USA, 13–19 November 2015; Volume 57496, p. V08AT10A046.
51. Ni, J.; Jin, B.; Zhang, B.; Wang, X. Simulation of thermal distribution and airflow for efficient energy consumption in a small data centers. Sustainability 2017, 9, 664.
52. Wan, J.; Gui, X.; Kasahara, S.; Zhang, Y.; Zhang, R. Air flow measurement and management for improving cooling and energy efficiency in raised-floor data centers: A survey. IEEE Access 2018, 6, 48867–48901.
53. Access Floor Systems. 24″ × 24″ Floor Grill. 2018. Available online: https://www.accessfloorsystems.com/index.php/products/air-flow-options/grills/24-inch-x-24-inch-grill.html (accessed on 25 March 2021).
54. Tate Access Floors. Multi-Zone Opposed Blade Damper. 2018. Available online: https://www.tateinc.com/en-us/products/airflow-panels-and-controls/airflow-controls/multi-zone-opposed-blade-damper (accessed on 25 March 2021).
55. Lu, H.; Zhang, Z.; Yang, L. A review on airflow distribution and management in data center. Energy Build. 2018, 179, 264–277.
56. Abreu, M. Contenção do Corredor Quente ou do Corredor Frio? Qual a Melhor Técnica? [Hot Aisle or Cold Aisle Containment? Which Is the Best Technique?] 2020. Available online: https://blog.innotechno.com.br/contencao-do-corredor-quente-ou-do-corredor-frio-qual-melhor-tecnica/ (accessed on 11 May 2021).
57. Shrivastava, S.K.; Ibrahim, M. Benefit of cold aisle containment during cooling failure. In Proceedings of the International Electronic Packaging Technical Conference and Exhibition, Maui, HI, USA, 6–11 July 2003; Volume 55768, p. V002T09A021.
58. Fulton, J. Control of Server Inlet Temperatures in Data Centers-A Long Overdue Strategy; White Paper; AFCO Systems: Farmingdale, NY, USA, 2006; Available online: https://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.95.1066&rep=rep1&type=pdf (accessed on 11 May 2021).
59. Brady, G.A.; Kapur, N.; Summers, J.L.; Thompson, H.M. A case study and critical assessment in calculating power usage effectiveness for a data centre. Energy Convers. Manag. 2013, 76, 155–161.
60. Zaman, S.K.; Khan, A.u.R.; Shuja, J.; Maqsood, T.; Mustafa, S.; Rehman, F. A Systems Overview of Commercial Data Centers: Initial Energy and Cost Analysis. Int. J. Inf. Technol. Web Eng. 2019, 14, 42–65.

| Work | Approach | Energy Savings |
|---|---|---|
| Heller et al. [41] | Central control software | up to 50% |
| Han et al. [43] | Software-defined networking (SDN) | 18.75% |
| Song et al. [28] | Air controller, layout and location changes | 35% |
| Ma et al. [44] | Heuristic and optimization algorithms | 11% to 28% |
| Sun et al. [45] | Deep reinforcement learning and SDN | around 12% |
| Saadi et al. [46] | Optimizing the workload cost function | 21% on average |
| MirhoseiniNejad et al. [47] | Low-complexity holistic model | around 11% |
| Kaffes et al. [48] | Allocation mechanism for different sets of cores | 9% |

| Equipment | Average Inlet Temperature (°C), April 2018–April 2019 (SD) | Average Inlet Temperature (°C), May 2019–January 2020 (SD) | Average Temperature Difference (°C) | Percentage of Reduction |
|---|---|---|---|---|
| GPU Server 01 | 25.76 () | 15.35 () | 10.41 | 40% |
| GPU Server 02 | 22.60 () | 15.27 () | 7.33 | 32% |
| GPU Server 03 | 26.83 () | 16.31 () | 10.53 | 39% |
| GPU Server 04 | 25.55 () | 15.21 () | 10.34 | 40% |
| GPU Server 05 | 22.77 () | 15.31 () | 7.47 | 33% |
| GPU Server 06 | 28.18 () | 15.26 () | 12.91 | 46% |
| GPU Server 07 | 28.04 () | 15.28 () | 12.76 | 46% |
| GPU Server 08 | 27.34 () | 15.21 () | 12.13 | 44% |
| Average | 25.88 | 15.40 | 10.48 | 41% |
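The difference and reduction columns of the inlet-temperature table can be recomputed from the two average columns. The sketch below uses only the Python standard library; the dictionary simply transcribes the published averages, and the names are illustrative.

```python
from statistics import mean

# Published average inlet temperatures (°C): (Apr 2018–Apr 2019, May 2019–Jan 2020).
AVERAGES = {
    "GPU Server 01": (25.76, 15.35),
    "GPU Server 02": (22.60, 15.27),
    "GPU Server 03": (26.83, 16.31),
    "GPU Server 04": (25.55, 15.21),
    "GPU Server 05": (22.77, 15.31),
    "GPU Server 06": (28.18, 15.26),
    "GPU Server 07": (28.04, 15.28),
    "GPU Server 08": (27.34, 15.21),
}


def reduction(before, after):
    """Return (temperature difference in °C, reduction as % of the before value)."""
    diff = before - after
    return diff, 100.0 * diff / before


per_server = {name: reduction(b, a) for name, (b, a) in AVERAGES.items()}

mean_before = mean(b for b, _ in AVERAGES.values())
mean_after = mean(a for _, a in AVERAGES.values())
# Ratio of the mean difference to the mean "before" value.
overall_pct = 100.0 * (mean_before - mean_after) / mean_before

for name, (diff, pct) in per_server.items():
    print(f"{name}: {diff:.2f} °C, {pct:.0f}%")
print(f"Average: {mean_before:.2f} -> {mean_after:.2f} °C, {overall_pct:.0f}%")
```

Each per-server percentage rounds to the value in the table. The difference column differs by 0.01 °C in three rows (servers 03, 05 and 06), presumably because it was computed from unrounded averages. The 41% in the Average row matches the mean difference divided by the mean before-move temperature (10.48 / 25.88 ≈ 40.5%); averaging the eight per-server percentages instead gives about 40%.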

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Machado, R.d.S.; Pires, F.d.S.; Caldeira, G.R.; Giuntini, F.T.; Santos, F.d.S.; Fonseca, P.R. Towards Energy Efficiency in Data Centers: An Industrial Experience Based on Reuse and Layout Changes. Appl. Sci. 2021, 11, 4719. https://doi.org/10.3390/app11114719


Chicago/Turabian style: Machado, Romulos da S., Fabiano dos S. Pires, Giovanni R. Caldeira, Felipe T. Giuntini, Flávia de S. Santos, and Paulo R. Fonseca. 2021. "Towards Energy Efficiency in Data Centers: An Industrial Experience Based on Reuse and Layout Changes" Applied Sciences 11, no. 11: 4719. https://doi.org/10.3390/app11114719