X-Reality Museums: Unifying the Virtual and Real World Towards Realistic Virtual Museums

Abstract

1. Introduction

2. Related Work

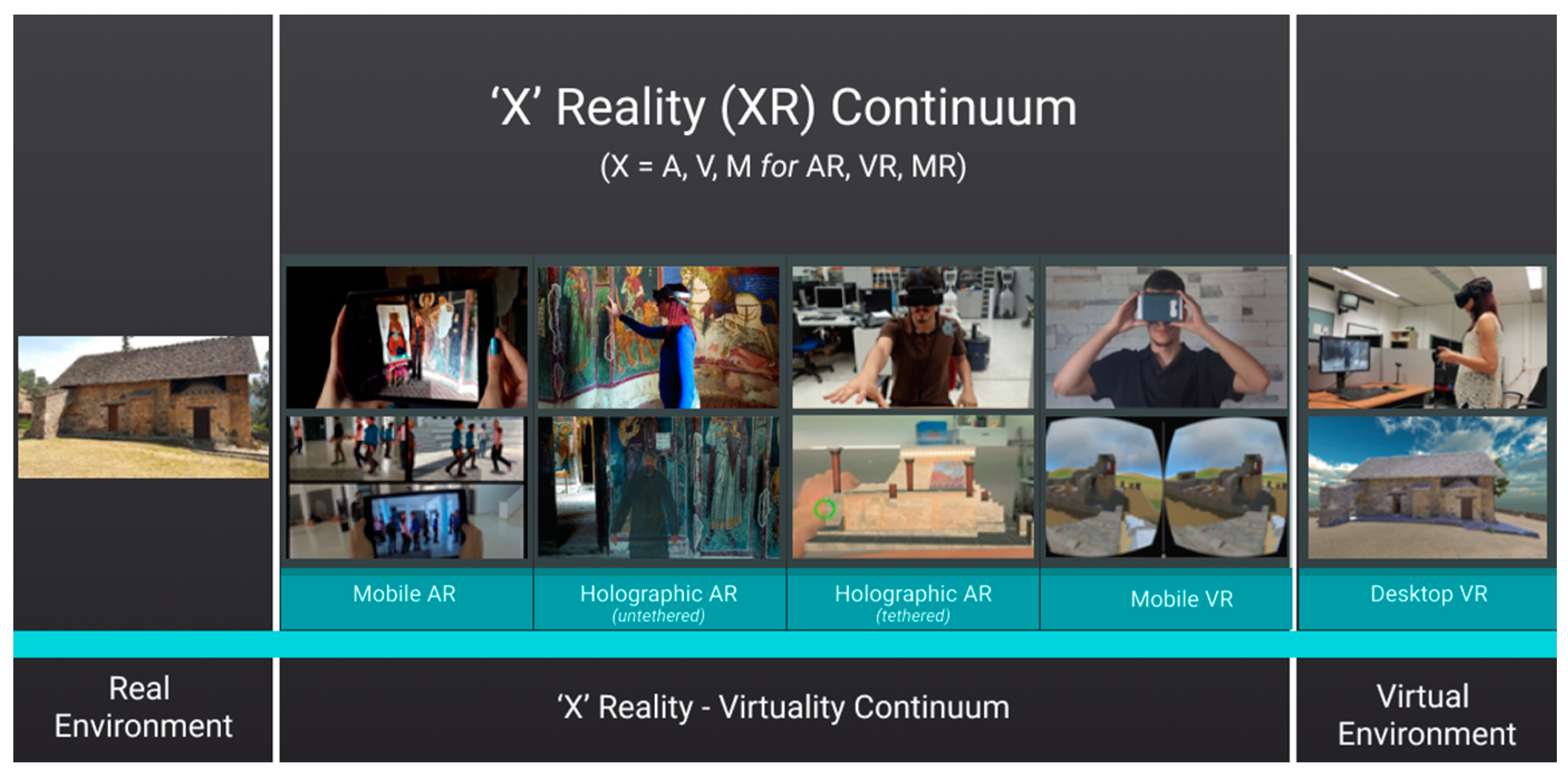

2.1. Extended Reality (XR)

2.2. Diminished Reality

2.3. True Mediated Reality

2.4. Natural Multimodal Interaction

2.5. Summary of Future Challenges

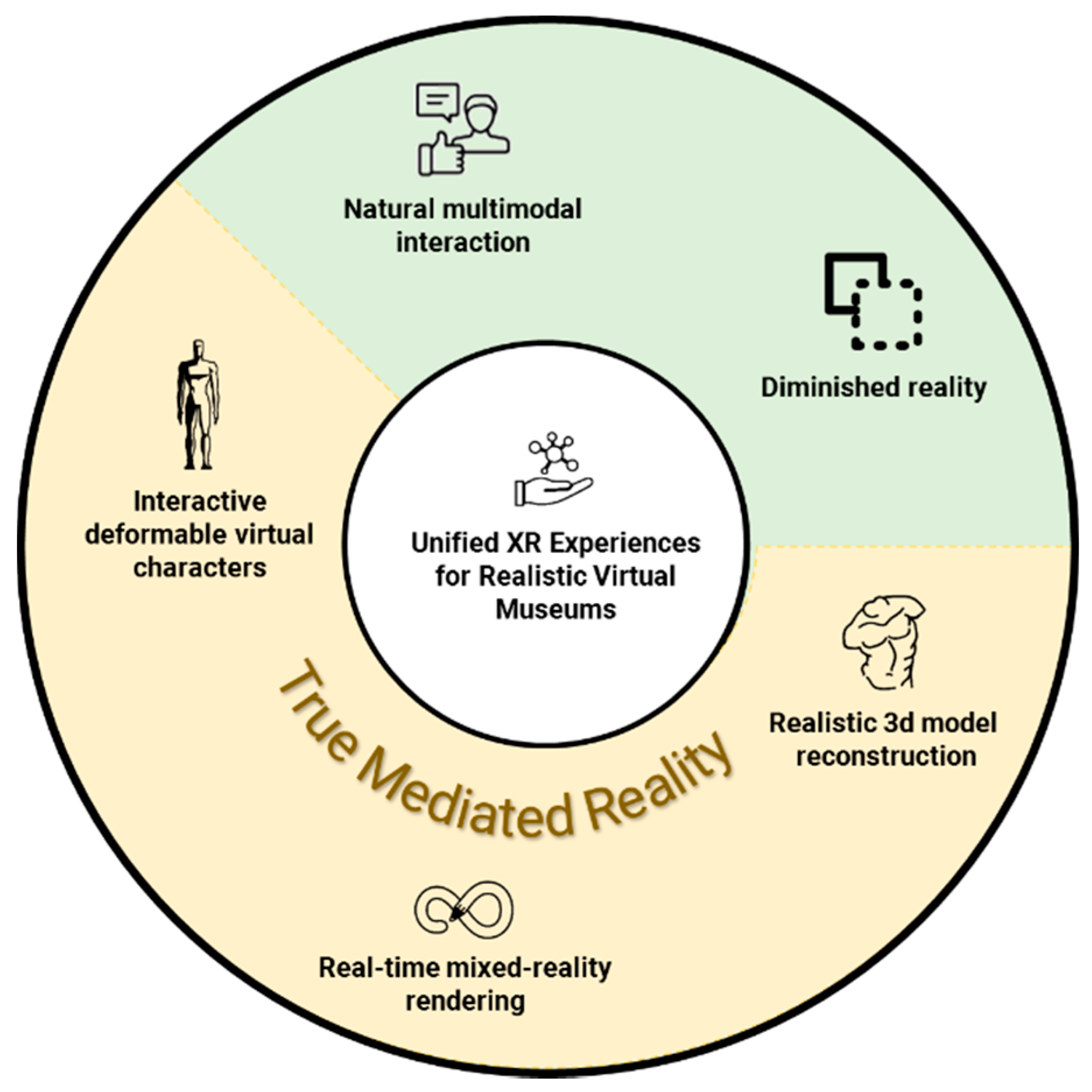

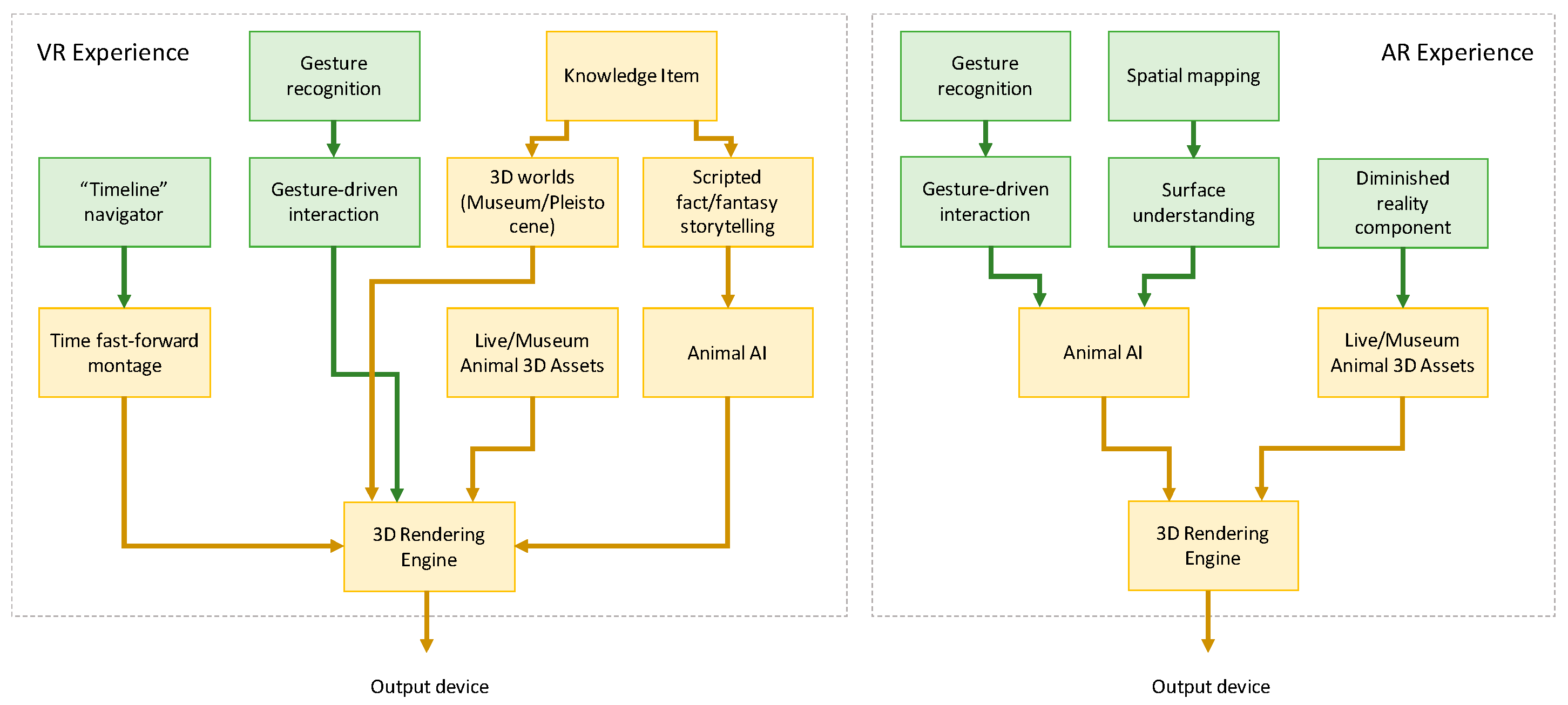

3. A Conceptual Architecture for Unifying XR Experiences for Realistic Virtual Museums

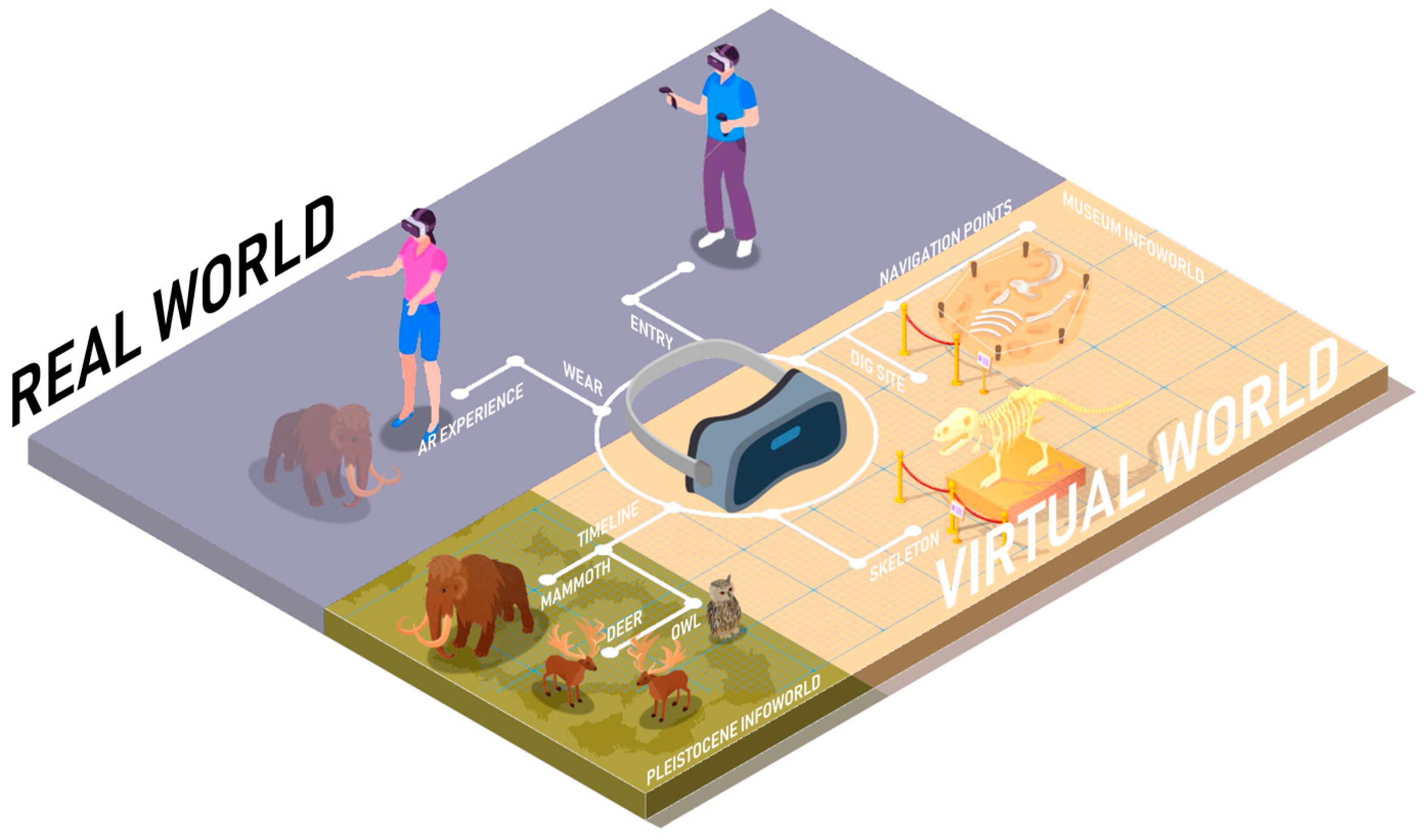

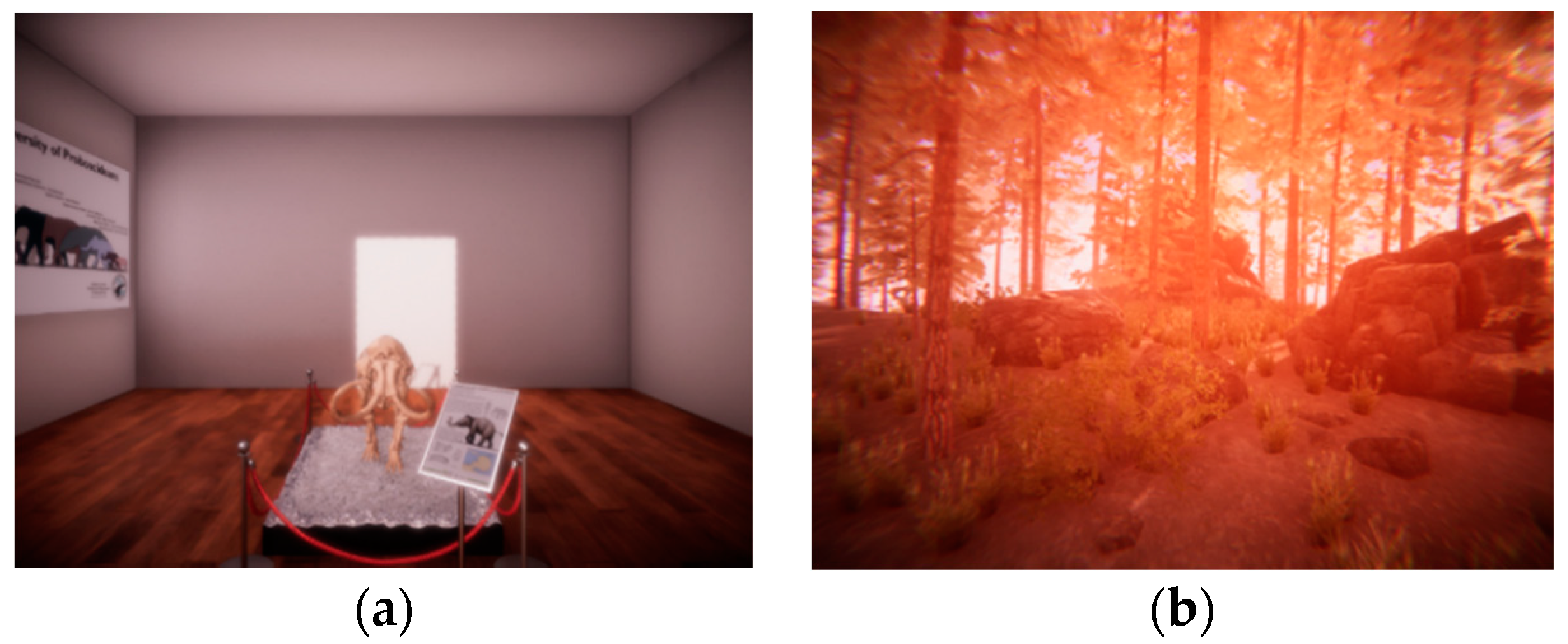

4. Case Study: XR Natural History Museum

4.1. XR Systems and Applications

4.2. Mapping to the Proposed Conceptual Model

4.3. Operational Setup and Evaluation Framework

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| | Challenges | Future Directions |
|---|---|---|
| Diminished Reality | | |
| True Mediated Reality | | |
| Natural Multimodal Interaction | | |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Margetis, G.; Apostolakis, K.C.; Ntoa, S.; Papagiannakis, G.; Stephanidis, C. X-Reality Museums: Unifying the Virtual and Real World Towards Realistic Virtual Museums. Appl. Sci. 2021, 11, 338. https://doi.org/10.3390/app11010338
