Abstract

In this study, we proposed an interval type-2 fuzzy neural network (IT2FNN) based on an improved particle swarm optimization (PSO) method for prediction and control applications. The noise-suppressing ability of the proposed IT2FNN was superior to that of the traditional type-1 fuzzy neural network. We proposed dynamic group cooperative particle swarm optimization (DGCPSO) with superior local search ability to overcome the local optimum problem of traditional PSO. The proposed model and related algorithms were verified through the accuracy of prediction and wall-following control of a mobile robot. Supervised learning was used for prediction, and reinforcement learning was used to achieve wall-following control. The experimental results demonstrated that DGCPSO exhibited superior prediction and wall-following control.

1. Introduction

Neural networks (NNs) and fuzzy NNs (FNNs) have been widely applied to system identification, prediction, and control. For example, artificial NNs can be used to accurately predict cemented paste backfill strength [1]. A nonlinear autoregressive NN with external input has been combined with Simscape electronic systems to achieve high-accuracy identification of DC motors [2]. In autonomous robot control, fuzzy logic exhibits high robustness and noise-suppressing ability [3]. Reinforcement learning has been used in autonomous driving technology to provide convenience and to avoid crashes caused by driver errors. However, this approach has limited accuracy, and adaptive control is absent [4]. Anish and Parhi [5] connected infrared sensors to an adaptive neuro-fuzzy inference system to realize obstacle detection. The steering angle and speed were appropriately adjusted to achieve navigation and collision safety. In intelligent omnidirectional robots, a proportional-integral controller was designed to adjust the steering angle toward a predetermined final position to achieve satisfactory navigation and obstacle avoidance [6].

The traditional type-1 fuzzy system (T1FS) comprises two conceptual components: a set of rules and a reasoning mechanism. The rules contain the fuzzy rule definitions, and each input variable provides a degree of membership for each fuzzy rule. The reasoning mechanism combines the degrees of membership obtained by each rule to obtain the firing strength [7,8,9]. A neural fuzzy system combined with approximation exhibits superior chaotic time-series prediction and is considerably more compact than a traditional FNN [10]. However, noise from the environment can adversely affect T1FS performance [11]. An interval type-2 fuzzy system (IT2FS) was developed to overcome the drawbacks of the T1FS [12]. However, the computational complexity of this system is high. To overcome the computational complexity problem of the IT2FS, the type-reducer method was used [13]. A type-2 FNN (T2FNN) was applied to an actual wind farm, and the results were simulated to verify the validity and applicability of the approach for accurate wind energy prediction in the power system control center [14]. Kim and Chwa [15] and Mendel [16] adopted the IT2FS for solving control and prediction problems, improving the flexibility of the type-2 fuzzy system and the error tolerance of the controller [17]. Furthermore, to reduce the uncertainty caused by large differences between patient parameters, the interval type-2 FNN (IT2FNN) was used as the controller for surgical anesthesia stimulation, and the backpropagation (BP) algorithm trained the parameters of the IT2FNN controller online [18].

The BP algorithm uses the steepest descent technique to minimize an error function and thereby adjust the parameters of the network model. Therefore, it is typically used in the parameter learning process of the FNN [19]. Although the BP algorithm converges quickly, it is susceptible to the local optimum problem. Therefore, some researchers have adopted evolutionary computation methods to solve the parameter optimization problem [20,21,22]. Evolutionary algorithms based on various biological behavior models, such as artificial bee colony (ABC), ant colony optimization (ACO), differential evolution (DE), the whale optimization algorithm (WOA), and particle swarm optimization (PSO), have been derived by observing nature [23,24,25]. In this study, PSO was used because of its fast convergence and relatively few parameter settings. These advantages are offset by instability and susceptibility to the local optimum problem. Quantum-based particle swarm optimization (QPSO) can improve on PSO performance. However, the QPSO method is susceptible to premature convergence [26]. Cooperative PSO (CPSO) can obtain a solution set with strong diversity and excellent convergence. However, CPSO uses only the personal best and global best positions of traditional PSO, and the process is time consuming. Therefore, the optimal solution may not be attained using CPSO [27]. In this study, grouping and cooperative features were introduced into PSO to enhance traditional PSO performance. The proposed dynamic group cooperative PSO (DGCPSO) learning method combines dynamic grouping with CPSO to improve the global search ability and reduce computation time, thereby avoiding suboptimal solutions and providing a search ability close to the global optimum.

The objectives of this study were as follows: (1) designing an IT2FNN based on improved PSO for prediction and control applications, (2) developing a DGCPSO with superior local search capabilities, and (3) verifying the proposed model and related algorithms on supervised learning prediction problems and on the reinforcement learning problem of mobile robot wall-following control. The contributions of this study are as follows: (1) designing the IT2FNN based on improved PSO to achieve superior noise suppression relative to the traditional type-1 FNN, and (2) developing DGCPSO to avoid the local optimum problem. The remainder of this paper is organized as follows. Section 2 describes the structure of the IT2FNN. The improved PSO and its analysis are described in Section 3. Section 4 presents experimental results of dynamic system identification, chaotic time-series prediction, and mobile robot control. Finally, the conclusions and recommendations for future studies are presented in Section 5.

2. Structure of IT2FNN

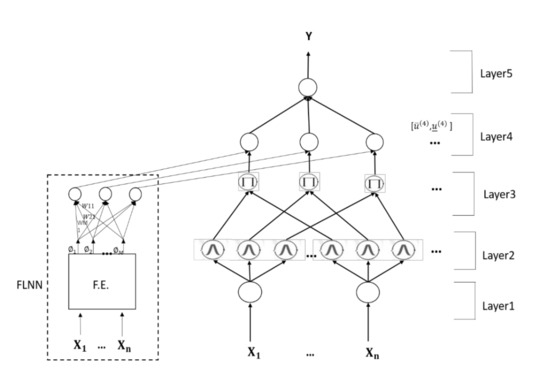

Figure 1 depicts the structure of the IT2FNN, which has multiple inputs and a single output. The input of the network is denoted by X and the output is denoted by Y. In a traditional IT2FS, the computational complexity of the defuzzification process is usually very high. Therefore, to increase the effectiveness and reduce the complexity of the fuzzy system, this study used the type-reducer method proposed by Castillo [12] in the defuzzification part. A functional link neural network (FLNN) with a nonlinear combined output was used as the consequent part of each fuzzy rule [28]. Each fuzzy rule j in the IT2FNN structure is expressed as follows:

$$\text{Rule } j:\ \text{IF } x_1 \text{ is } \tilde{A}_{1j} \text{ and } \dots \text{ and } x_n \text{ is } \tilde{A}_{nj},\ \text{THEN } y_j = \sum_{k=1}^{M} \omega_{kj}\,\phi_k$$

where $n$ is the number of inputs, $x_i$ are the input variables, $y_j$ is the local output variable, $\tilde{A}_{ij}$ is the type-2 fuzzy set, $\omega_{kj}$ is the link weight of the local output, $\phi_k$ is the trigonometric basis function of the input variables, M is the number of basis functions, and rule j is the jth fuzzy rule.

Figure 1.

Structure of an interval type-2 fuzzy neural network (IT2FNN).

The proposed IT2FNN is a five-layer NN. Here, $u^{(l)}$ represents the output of a node in the $l$th layer, and the nodes of each layer operate as follows:

- Layer 1:

- This layer is the input layer, and each node is an input node. Only the input signal is passed to the next layer of the network.

- Layer 2:

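- This layer is the membership function layer, which performs the fuzzification operation. Each node is defined as a type-2 fuzzy set. Each membership function is a Gaussian membership function with an uncertain mean $[m_{ij}^{1}, m_{ij}^{2}]$ and a fixed standard deviation (STD) $\sigma_{ij}$. The function is expressed as follows:

  $$\mu_{\tilde{A}_{ij}}(x_i) = \exp\left(-\frac{1}{2}\,\frac{(x_i - m_{ij})^2}{\sigma_{ij}^2}\right) \equiv N(m_{ij}, \sigma_{ij}; x_i), \quad m_{ij} \in [m_{ij}^{1}, m_{ij}^{2}]$$

  where $m_{ij}$ and $\sigma_{ij}$ represent the mean and STD of the Gaussian membership function of the jth term of the ith input, respectively. The footprint of uncertainty of the Gaussian membership function is the bounded interval between the upper membership function $\bar{\mu}_{\tilde{A}_{ij}}$ and the lower membership function $\underline{\mu}_{\tilde{A}_{ij}}$. The output of each node in the second layer is an interval $[\underline{u}_{ij}^{(2)}, \bar{u}_{ij}^{(2)}]$. The membership degrees are calculated as follows:

  $$\bar{u}_{ij}^{(2)} = \begin{cases} N(m_{ij}^{1}, \sigma_{ij}; x_i), & x_i < m_{ij}^{1} \\ 1, & m_{ij}^{1} \le x_i \le m_{ij}^{2} \\ N(m_{ij}^{2}, \sigma_{ij}; x_i), & x_i > m_{ij}^{2} \end{cases}$$

  and

  $$\underline{u}_{ij}^{(2)} = \begin{cases} N(m_{ij}^{2}, \sigma_{ij}; x_i), & x_i \le \dfrac{m_{ij}^{1} + m_{ij}^{2}}{2} \\ N(m_{ij}^{1}, \sigma_{ij}; x_i), & x_i > \dfrac{m_{ij}^{1} + m_{ij}^{2}}{2} \end{cases}$$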

- Layer 3:

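- This layer is also called the firing layer, and each node is a rule node. An algebraic product is used to obtain the firing strengths $\bar{u}_{j}^{(3)}$ and $\underline{u}_{j}^{(3)}$ of each rule node:

  $$\bar{u}_{j}^{(3)} = \prod_{i=1}^{n} \bar{u}_{ij}^{(2)}, \qquad \underline{u}_{j}^{(3)} = \prod_{i=1}^{n} \underline{u}_{ij}^{(2)}$$

  where $\bar{u}_{j}^{(3)}$ and $\underline{u}_{j}^{(3)}$ represent the firing strengths of rule j corresponding to the upper and lower boundaries of the interval, and $\bar{u}_{ij}^{(2)}$ and $\underline{u}_{ij}^{(2)}$ are the upper and lower membership degrees produced by Layer 2.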

- Layer 4:

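- This layer is called the consequent layer and generates type-1 fuzzy sets through a type-reducer operation. In this layer, a numerical output is obtained after the defuzzification process. The center-of-sets type-reducer was used to reduce the computational complexity of the type-reduction process [29]. This method simplifies type reduction and considers only the combinations of the upper- and lower-boundary firing strengths. The combinations of the firing strengths and the corresponding outputs are expressed as follows:

  $$y_l = \frac{\sum_{j=1}^{R} \underline{u}_{j}^{(3)} \left( \sum_{k=1}^{M} \omega_{kj}\,\phi_k \right)}{\sum_{j=1}^{R} \underline{u}_{j}^{(3)}}$$

  and

  $$y_r = \frac{\sum_{j=1}^{R} \bar{u}_{j}^{(3)} \left( \sum_{k=1}^{M} \omega_{kj}\,\phi_k \right)}{\sum_{j=1}^{R} \bar{u}_{j}^{(3)}}$$

  where R is the number of fuzzy rules, $\underline{u}_{j}^{(3)}$ and $\bar{u}_{j}^{(3)}$ are the lower and upper firing strengths of rule j, $\sum_{k=1}^{M} \omega_{kj}\,\phi_k$ is obtained from the nonlinear combination of the FLNN input variables, $\omega_{kj}$ is the link weight of each node in the FLNN, and $\phi_k$ is the functional expansion of the input variables. The functional expansion consists of the basis functions of trigonometric polynomials:

  $$[\phi_1, \phi_2, \dots, \phi_M] = [x_1, \sin(\pi x_1), \cos(\pi x_1), \dots, x_N, \sin(\pi x_N), \cos(\pi x_N)]$$

  where $M = 3 \times N$, M represents the number of basis functions, and N represents the number of input variables.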

- Layer 5:

- This layer is the output layer, which operates on the type-reduced result of the previous layer. An interval-type output $[y_l, y_r]$ is obtained. Finally, defuzzification is completed by calculating the average of $y_l$ and $y_r$, and the crisp output value y of the NN is obtained: $y = (y_l + y_r)/2$.
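The five layers above can be sketched as a single forward pass. The following Python sketch is illustrative only and is not the authors' implementation: the function names, the dictionary layout of a rule (`m1`, `m2`, `sigma`, `w`), and the small `tiny` guard against division by zero are assumptions, and the trigonometric expansion `[x, sin(pi x), cos(pi x)]` is the form commonly used with FLNNs rather than one confirmed by the text.

```python
import math


def gaussian(x, mean, sigma):
    """Gaussian membership value N(mean, sigma; x)."""
    return math.exp(-0.5 * ((x - mean) / sigma) ** 2)


def it2_membership(x, m1, m2, sigma):
    """Layer 2: (lower, upper) membership for an uncertain mean in [m1, m2]."""
    if x < m1:
        upper = gaussian(x, m1, sigma)
    elif x > m2:
        upper = gaussian(x, m2, sigma)
    else:
        upper = 1.0
    # The lower bound follows the Gaussian centred on the farther mean.
    far_mean = m2 if x <= (m1 + m2) / 2 else m1
    return gaussian(x, far_mean, sigma), upper


def flnn_basis(x):
    """Assumed trigonometric expansion: three basis functions per input."""
    phi = []
    for xi in x:
        phi.extend([xi, math.sin(math.pi * xi), math.cos(math.pi * xi)])
    return phi


def it2fnn_forward(x, rules):
    """Layers 1-5: crisp output for input list x and a list of rule dicts."""
    phi = flnn_basis(x)                      # consequent basis (FLNN)
    lower_f, upper_f, local_y = [], [], []
    for rule in rules:
        f_lo = f_up = 1.0
        for xi, m1, m2, s in zip(x, rule["m1"], rule["m2"], rule["sigma"]):
            lo, up = it2_membership(xi, m1, m2, s)
            f_lo *= lo                       # Layer 3: algebraic product
            f_up *= up
        lower_f.append(f_lo)
        upper_f.append(f_up)
        local_y.append(sum(w * p for w, p in zip(rule["w"], phi)))
    tiny = 1e-12                             # hypothetical guard, not in the text
    # Layer 4: weighted outputs for the lower and upper firing strengths.
    y_l = sum(f * y for f, y in zip(lower_f, local_y)) / max(sum(lower_f), tiny)
    y_r = sum(f * y for f, y in zip(upper_f, local_y)) / max(sum(upper_f), tiny)
    return 0.5 * (y_l + y_r)                 # Layer 5: average of the interval


# Example with one input and one hypothetical rule.
rule = {"m1": [-0.1], "m2": [0.1], "sigma": [1.0], "w": [1.0, 2.0, 3.0]}
print(it2fnn_forward([0.0], [rule]))
```

With a single rule, the firing strengths cancel in both ratios, so the output reduces to the local FLNN output of that rule; this gives a quick sanity check for an implementation.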

3. Proposed Improved Particle Swarm Optimization

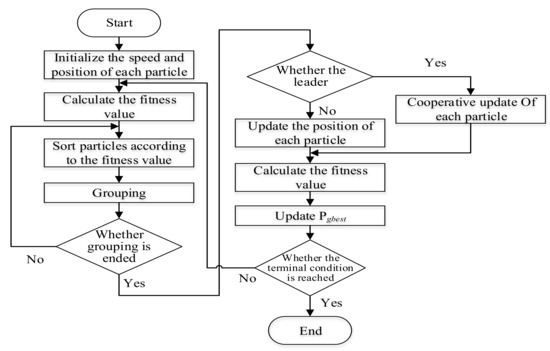

This study proposes an improved PSO method, namely DGCPSO. In DGCPSO, the concepts of grouping and cooperation are combined to improve the search ability of traditional PSO. PSO converges quickly and is simple to implement, but in complex problems it has low accuracy, converges prematurely, and is susceptible to local optimal solutions. Figure 2 depicts the flowchart of DGCPSO.

Figure 2.

Flowchart of dynamic group cooperative particle swarm optimization (DGCPSO).

- (1)

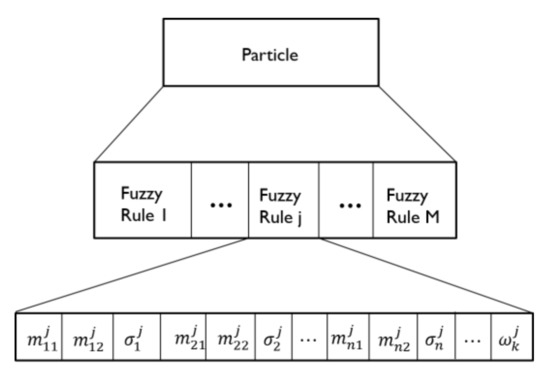

- Step 1: Coding

The parameters of the IT2FNN are used to encode a particle; each particle is an IT2FNN. The DGCPSO proposed in this study is used to change the parameters of each IT2FNN, containing the uncertain mean [], deviation , and link weight in a FLNN . The coding format is depicted in Figure 3.

Figure 3.

Coding diagram of particles.

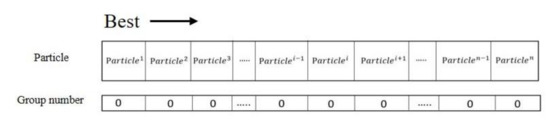

- (2)

- Step 2: Sorting

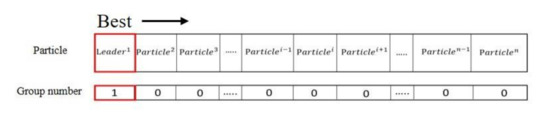

All particles are sorted from high to low according to their fitness values, and the group number of every particle is initialized to 0, as depicted in Figure 4.

Figure 4.

Sorting diagram of particles.

- (3)

- Step 3: Calculate the Similarity Threshold

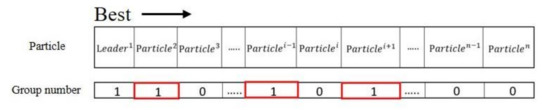

Set the current group number to 0 and the highest fitness particle as the leader of the new group; update the group number and the initial value of to 1, as depicted in Figure 5. Then calculate the similarity threshold of this group, including the average distance and the average fitness. The values are the average distance difference and average fitness value difference between the ungrouped particles (the is 0) and the group leader (L). Here, , are the distance threshold and fitness value threshold of the th group, is the jth dimension of the th group leader, is the fitness value of the th group leader, is the fitness value of the ith particle, is the total number of particles with group number 0 in the current swarm, J is the dimension of the code, and n is the total number of particles. The formulas are expressed as follows.

Figure 5.

Diagram of setting the leader of a new group.

- (4)

- Step 4: Particle Grouping

Sequentially calculate the ungrouped particles using the following formulas to determine the distance difference () and fitness value difference () between the particle and the leader particle.

When and , the particles are associated with the group leader, placed in the same particle group, and the group number is updated to ; otherwise, the particle is not classified to the group and no operation is performed (Figure 6).

Figure 6.

Particle grouping diagram.

- (5)

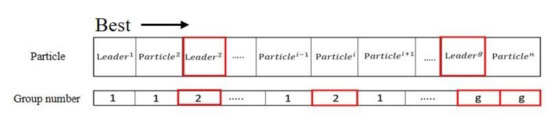

- Step 5: Determine Whether to End Grouping

If ungrouped particles are present, then go back to step 3 and set the highest-fitness particle among the ungrouped particles as the leader of a new group. Repeat steps 3 and 4 as depicted in Figure 7. If all the particles have been grouped, then the grouping step ends.

Figure 7.

Diagram of repeating steps 3 and 4.
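The sorting and grouping procedure of steps 2 to 5 can be sketched as follows. This is a minimal sketch under stated assumptions, not the authors' code: the distance between a particle and the leader is assumed to be Euclidean, the fitness difference is assumed to be an absolute difference, the thresholds are taken as the averages of these quantities over the currently ungrouped particles, and a particle is assumed to join the group when both of its differences are at or below the thresholds. The function name `dynamic_grouping` is hypothetical.

```python
import math


def dynamic_grouping(positions, fitness):
    """Assign a group number (1, 2, ...) to every particle; 0 means ungrouped."""
    n = len(positions)
    group = [0] * n
    # Step 2: sort particle indices from high to low fitness.
    order = sorted(range(n), key=lambda i: fitness[i], reverse=True)
    current = 0
    while any(group[i] == 0 for i in order):
        # Step 3: the best ungrouped particle leads a new group.
        leader = next(i for i in order if group[i] == 0)
        current += 1
        group[leader] = current
        ungrouped = [i for i in order if group[i] == 0]
        if not ungrouped:
            break
        # Assumed measures: Euclidean distance and absolute fitness difference.
        dist = {i: math.dist(positions[i], positions[leader]) for i in ungrouped}
        diff = {i: abs(fitness[leader] - fitness[i]) for i in ungrouped}
        dist_threshold = sum(dist.values()) / len(ungrouped)
        fit_threshold = sum(diff.values()) / len(ungrouped)
        # Step 4: particles similar to the leader join its group.
        for i in ungrouped:
            if dist[i] <= dist_threshold and diff[i] <= fit_threshold:
                group[i] = current
        # Step 5: the loop repeats while ungrouped particles remain.
    return group


# Two well-separated clusters of hypothetical particles.
positions = [[0.0, 0.0], [0.1, 0.0], [5.0, 5.0], [5.1, 5.0]]
fitness = [1.0, 0.95, 0.5, 0.45]
print(dynamic_grouping(positions, fitness))
```

Because every pass removes at least the leader from the ungrouped set, the loop always terminates, and the number of groups adapts to how the particles are spread in the current generation.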

- (6)

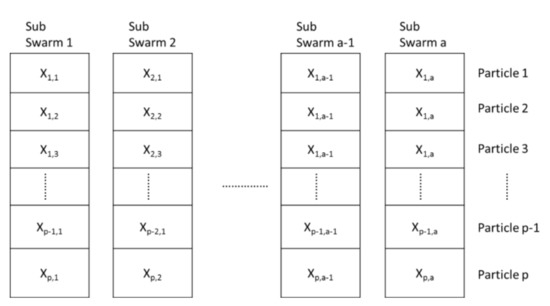

- Step 6: Updating Particles Cooperatively

CPSO decomposes the P-dimensional search of traditional PSO into P swarms of one-dimensional vectors, with each swarm representing one dimension of the original problem. Figure 8 illustrates the flowchart of the CPSO method: instead of a single swarm searching for the best P-dimensional vector, the vector is divided into its components, and each of the P one-dimensional swarms optimizes one component. This study proposes a DGCPSO learning method that combines dynamic grouping with CPSO to enhance the global search ability and reduce computation time.

Figure 8.

Cooperative update particle swarm algorithm.

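The original velocity and position update formulas of PSO are as follows.

$$v_i(t+1) = \omega\,v_i(t) + c_1 r_1 \left( pbest_i - x_i(t) \right) + c_2 r_2 \left( gbest - x_i(t) \right)$$

$$x_i(t+1) = x_i(t) + v_i(t+1)$$

where $v_i$ and $x_i$ are the velocity and position of the ith particle, $\omega$ is the inertia weight, $c_1$ and $c_2$ are the acceleration constants, $r_1$ and $r_2$ are random numbers uniformly distributed in [0, 1], $pbest_i$ is the best position found by the ith particle, and $gbest$ is the best position found by the swarm.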

The cooperative update particle formula is as follows.

- (7)

- Step 7: Whether the Terminal Condition is Reached

Repeat steps 2 to 6 until the terminal condition is reached.
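As an illustration of step 6, the sketch below shows the standard PSO velocity and position update together with the context-vector evaluation that cooperative PSO variants commonly use to score a single dimension. It is a generic sketch, not the DGCPSO update of this paper: the default values of `w`, `c1`, and `c2` and the function names are hypothetical, and how the group leaders enter the cooperative update is not reproduced here.

```python
import random


def pso_update(x, v, pbest, gbest, w=0.7, c1=1.5, c2=1.5, rng=random):
    """Standard PSO step for one particle; returns (new_position, new_velocity)."""
    new_x, new_v = [], []
    for xj, vj, pj, gj in zip(x, v, pbest, gbest):
        r1, r2 = rng.random(), rng.random()
        # Inertia term plus attraction to the personal and global bests.
        vj = w * vj + c1 * r1 * (pj - xj) + c2 * r2 * (gj - xj)
        new_v.append(vj)
        new_x.append(xj + vj)
    return new_x, new_v


def cooperative_fitness(context, dim, value, fitness_fn):
    """Score a one-dimensional candidate by placing it into the context vector."""
    trial = list(context)      # copy, so the shared context is not modified
    trial[dim] = value
    return fitness_fn(trial)


# A particle already at both best positions with zero velocity does not move.
pos, vel = pso_update([1.0, 2.0], [0.0, 0.0], [1.0, 2.0], [1.0, 2.0])
print(pos, vel)

# Candidate value 0.0 for dimension 1, scored with a toy fitness (the sum).
print(cooperative_fitness([1.0, 2.0, 3.0], 1, 0.0, sum))
```

In a cooperative scheme, each one-dimensional swarm would call `cooperative_fitness` with the current best values of the other dimensions as the context, so that a full parameter vector of the IT2FNN can still be evaluated.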

4. Experimental Results

Three experiments were performed to verify the effectiveness of the proposed method: dynamic system identification, chaotic time-series prediction, and mobile robot control.

4.1. Dynamic System Identification

The multiple time delays of the nonlinear dynamic system are expressed as follows:

where

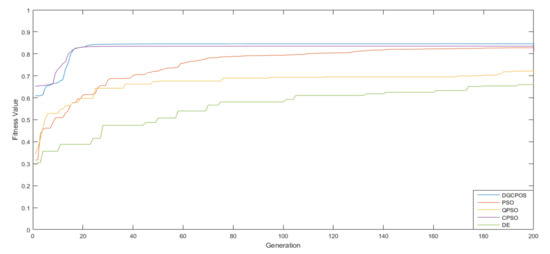

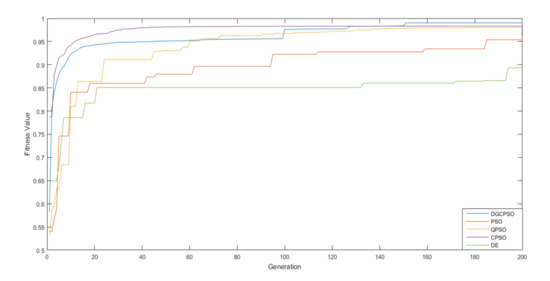

For dynamic system identification, the proposed DGCPSO was used to tune the parameters of the IT2FNN. Table 1 lists the initial parameter settings of DGCPSO, including the total number of particles (NP), inertia weight ω, acceleration constants $c_1$ and $c_2$, number of generations, and number of fuzzy rules. The algorithms were compared in terms of the best, worst, and average fitness values and the standard deviation (SD). Table 2 and Figures 9–11 reveal that the proposed method exhibited superior learning performance to the other algorithms [24,25,26,27].

Table 1.

DGCPSO parameters setting.

Table 2.

Dynamic system identification.

Figure 9.

Learning curves of dynamic system identification.

Figure 10.

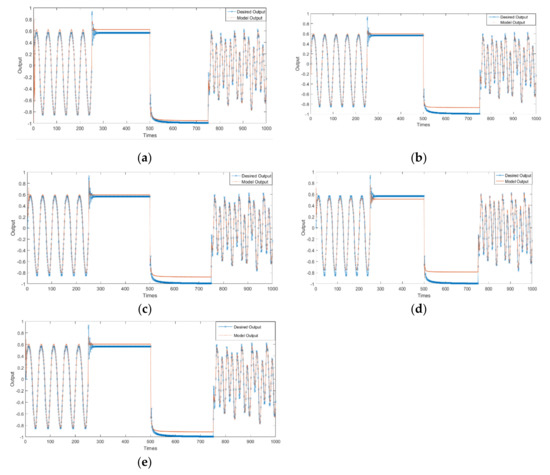

Dynamic system identification of (a) DGCPSO, (b) DE [24], (c) PSO [25], (d) QPSO [26], and (e) CPSO [27].

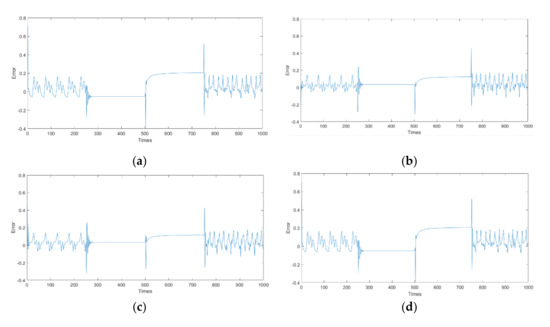

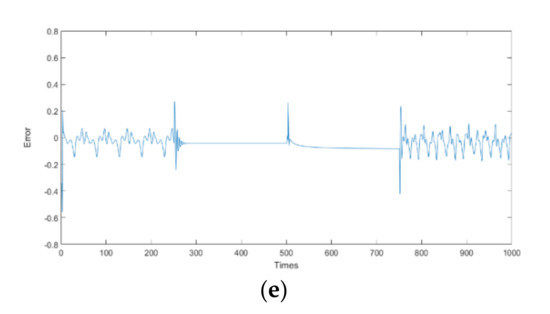

Figure 11.

Dynamic system identification error rates of (a) DGCPSO, (b) DE [24], (c) PSO [25], (d) QPSO [26], and (e) CPSO [27].

4.2. Chaotic Time-Series Prediction

In this example, the Mackey–Glass chaotic time series was considered. The series is generated by the following delay differential equation:

$$\frac{dx(t)}{dt} = \frac{0.2\,x(t-\tau)}{1 + x^{10}(t-\tau)} - 0.1\,x(t)$$

where τ = 17 and x(0) = 1.2. The proposed IT2FNN has four inputs, each corresponding to a past value of the series, and one output that represents $x(t + \Delta t)$, where Δt is the prediction horizon. The first 500 pairs were used as the training data set, and the remaining 500 pairs were used as the test data set for validating the proposed method.
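A data set of this kind can be generated as in the following sketch. Several choices here are assumptions rather than details taken from the experiment: the equation is integrated with a unit-step Euler scheme, the history before t = 0 is taken as zero, and the input lags `(18, 12, 6, 0)` and the horizon of 6 are hypothetical values chosen only to show how four inputs and one future target form a pair.

```python
def mackey_glass(n_samples, tau=17, x0=1.2):
    """Unit-step Euler discretization of the Mackey-Glass equation."""
    x = [x0]
    for t in range(n_samples - 1):
        # Assumed history: x(t) = 0 for t < 0.
        delayed = x[t - tau] if t >= tau else 0.0
        x.append(x[t] + 0.2 * delayed / (1.0 + delayed ** 10) - 0.1 * x[t])
    return x


def make_pairs(series, lags=(18, 12, 6, 0), horizon=6):
    """Build (inputs, target) pairs; lags and horizon are hypothetical values."""
    start = max(lags)
    pairs = []
    for t in range(start, len(series) - horizon):
        inputs = [series[t - lag] for lag in lags]
        pairs.append((inputs, series[t + horizon]))
    return pairs


series = mackey_glass(1024)          # long enough to yield 1000 pairs
pairs = make_pairs(series)
train, test = pairs[:500], pairs[500:]
print(len(train), len(test))
```

Keeping the first 500 pairs for training and the remaining 500 for testing mirrors the split described above; a smaller integration step would follow the continuous equation more closely.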

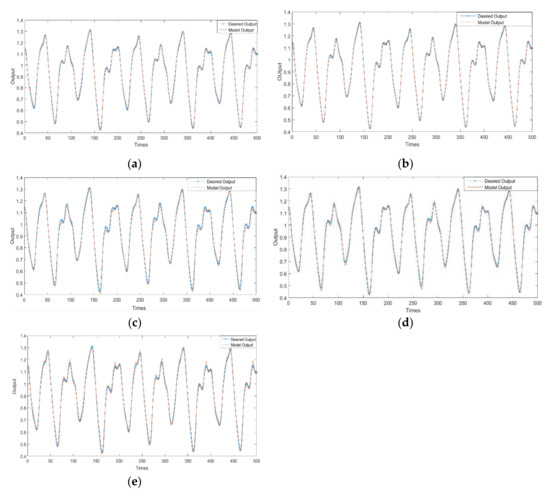

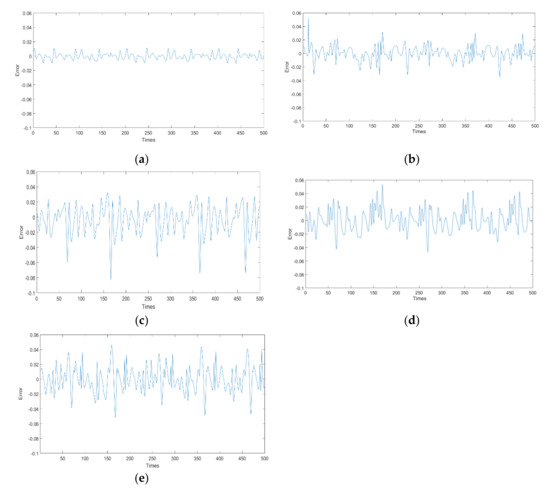

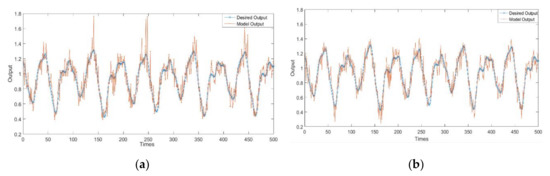

The learning phase involved using the DGCPSO method for parameter learning, and the relevant parameter settings are presented in Table 3. The learning was performed for 200 generations and repeated 30 times. Figures 12–14 and Table 4 present the results for the chaotic time series. The results indicate that the proposed method achieves superior performance to the other methods. The root mean square error (RMSE) results for the chaotic time series with noise added were 0.106 and 0.009 for the T1FNN and T2FNN, respectively (Figure 15). Therefore, under noise, the performance of the T2FNN was considerably superior to that of the T1FNN.

Table 3.

Parameter setting of chaotic time-series.

Figure 12.

Learning curves of chaotic time-series.

Figure 13.

Chaotic time-series prediction results for (a) DGCPSO, (b) DE [24], (c) PSO [25], (d) QPSO [26], and (e) CPSO [27].

Figure 14.

Chaotic time-series prediction error rates of (a) DGCPSO, (b) DE [24], (c) PSO [25], (d) QPSO [26], and (e) CPSO [27].

Table 4.

Chaotic time-series performance of various algorithms.

Figure 15.

Results after adding noise for (a) Type-1 and (b) Type-2.

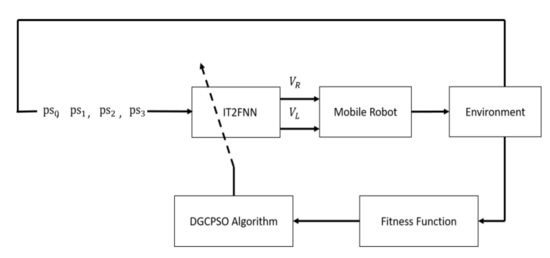

4.3. Wall-Following Control of a Mobile Robot

Reinforcement learning was used to achieve wall-following control of a mobile robot. With this approach, no complex system is needed to design the control rules of the mobile robot; only an appropriate fitness function must be defined to assess the effectiveness of the mobile robot in a training setting. Figure 16 depicts the block diagram of the entire system. The IT2FNN had four input signals and two output signals. The input signals were the distances sensed by the infrared sensors of the mobile robot, and the output signals were the turning speeds of the left and right wheels of the mobile robot.

Figure 16.

Block diagram of the system.

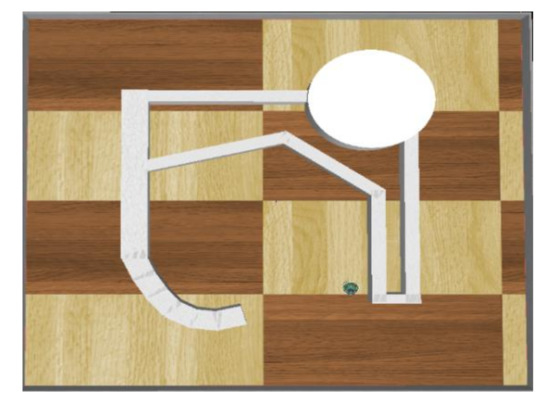

Straight lines, right angles, smooth curves, and U-shaped curves were included in the training setting to verify the response of the mobile robot to various situations in uncertain environments. The size of the training setting was 2.1 m × 2 m, as depicted in Figure 17.

Figure 17.

Training setting for wall-following control.

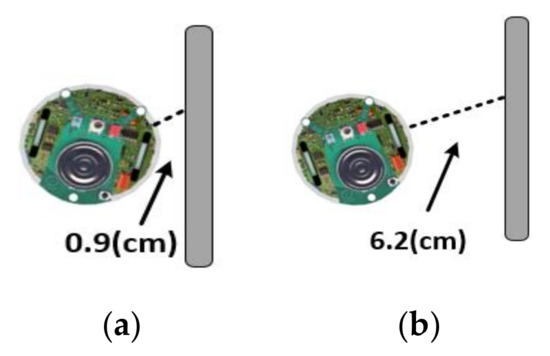

Three termination conditions were set for the mobile robot to ensure wall-following without collision or moving away from walls during the experiment.

- The distance moved by the mobile robot exceeds the length of one circuit of the training setting, indicating that the mobile robot has successfully circled the training setting.

- One sensor of the mobile robot measures a distance of less than 1 cm, indicating a collision between the mobile robot and the wall, as illustrated in Figure 18a.

- A sensor on the side of the mobile robot detects a distance greater than 6 cm, indicating that the mobile robot has deviated from the wall, as depicted in Figure 18b.

Figure 18. (a) Collision between the mobile robot and the wall; (b) deviation of the mobile robot from the wall.
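The three termination conditions can be checked in each control step as in the sketch below. The 1 cm and 6 cm thresholds come from the text; the function name, the argument names, and the order in which the conditions are tested are assumptions made for illustration.

```python
def termination_reason(travelled_cm, lap_length_cm, sensor_cm, side_sensor_cm,
                       collision_cm=1.0, deviation_cm=6.0):
    """Return 'success', 'collision', 'deviation', or None to keep moving."""
    # Condition 1: the robot has moved farther than one circuit of the setting.
    if travelled_cm > lap_length_cm:
        return "success"
    # Condition 2: any sensor reads less than the collision threshold.
    if min(sensor_cm) < collision_cm:
        return "collision"
    # Condition 3: the side sensor reads more than the deviation threshold.
    if side_sensor_cm > deviation_cm:
        return "deviation"
    return None


# Hypothetical readings in centimetres for a 700 cm circuit.
print(termination_reason(120.0, 700.0, [4.0, 5.0, 3.5, 4.2], 4.2))
print(termination_reason(120.0, 700.0, [0.5, 5.0, 3.5, 4.2], 4.2))
```

Returning `None` while no condition holds lets the simulation loop continue until one of the three outcomes ends the trial.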

Each individual represents a solution of an IT2FNN controller, and the controller controls the mobile robot to achieve the wall-following movement in the training setting. After movement, the mobile robot is returned to the initial position. The robot repeats this movement until it reaches a termination condition. The fitness function was used to assess the effectiveness of the mobile robot in the learning process.

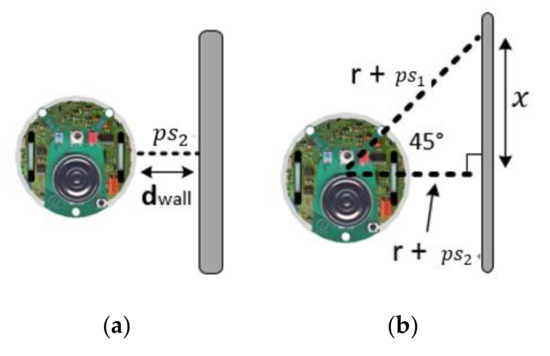

The fitness function comprises three subfitness functions, namely the total distance the mobile robot moved, the distance the mobile robot maintained from the wall, and the degree of parallelism between the mobile robot and the wall.

- Total distance the mobile robot moved: The closer the moving distance of the mobile robot is to the predefined value , the closer the mobile robot is to completing one circle around the training setting.If , then the mobile robot successfully circumvents the training setting under all conditions. If , then the fitness function is zero.

- The distance the mobile robot maintained from the wall: The fitness function is the average value of WD(t) during the moving time. The aim is to maintain a fixed distance between the mobile robot and the wall. In each time step, the distance WD(t) between the side of the mobile robot and the wall is calculated as follows:where is the expected distance of the robot from the wall. A predefined distance was set to 4 cm, as shown in Figure 19a, and is the time step of the mobile robot during the learning process of the wall-following motion. If the mobile robot maintains a fixed expected distance from the wall, then the value of is equal to zero.

- The degree of parallelism between the mobile robot and the wall: This subfitness function evaluates the degree of parallelism between the mobile robot and the wall. If the robot is parallel to the wall, then the angle between the sensor on the right side of the mobile robot and the wall is 90°. According to the law of cosines, the two corresponding sides must then be equal in length, forming an isosceles triangle, as depicted in Figure 19b. The calculation uses the distances measured by the mobile robot sensors and the radius r of the mobile robot. The subfitness function is defined as the average value of the degree of parallelism between the mobile robot and the wall during the moving time. If the mobile robot is parallel to the wall, then its value is equal to zero.

Figure 19. (a) Distance the mobile robot kept from the wall and (b) degree of parallelism between the mobile robot and the wall.

Therefore, a fitness function F(·) was used to evaluate overall control performance. This fitness function was formed by combining the three aforementioned subfitness functions. The proposed DGCPSO was compared with other evolutionary algorithms. Table 5 lists the initial parameter settings of DGCPSO. To evaluate the algorithm stability, the experiment was performed ten times for each algorithm.

Table 5. DGCPSO initialization parameter setting.


Table 6 compares the effectiveness of the algorithms for wall-following control in the training setting in terms of the best, worst, and average fitness values, the SD, and the number of successful runs (NSR), that is, the number of times the mobile robot successfully learned to circle the training setting in ten evolutionary simulations. Under the same conditions, DGCPSO exhibited superior wall-following learning. Furthermore, the SD data revealed that the algorithm proposed in this study has high stability (Table 6).

Table 6.

Efficiency of different algorithms in the training setting.

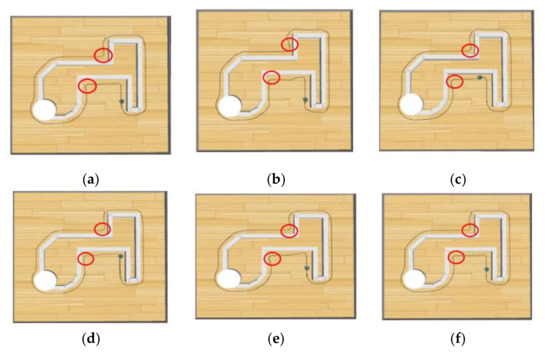

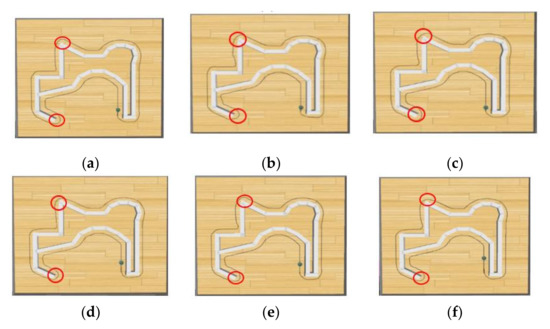

To verify whether the controllers learned by the different algorithms could adapt the mobile robot to other settings, two test settings were established, and the results were compared with those of the original training setting. The fitness value of the mobile robot was used to assess the wall-following control. Table 7 indicates that the control efficiency of the proposed DGCPSO was superior to that of the other algorithms (Figure 20 and Figure 21).

Table 7.

Efficiency of different algorithms in the testing setting.

Figure 20.

Moving path of setting 1 of (a) DGCPSO, (b) ABC [22], (c) WOA [23], (d) DE [24], (e) PSO [25], and (f) QPSO [26].

Figure 21.

Moving path of setting 2 of (a) DGCPSO, (b) ABC [22], (c) WOA [23], (d) DE [24], (e) PSO [25], and (f) QPSO [26].

5. Conclusions

In this study, an IT2FNN based on DGCPSO learning was proposed for identification, prediction, and control applications. The noise-suppressing ability of the IT2FNN was superior to that of the traditional T1FNN. In addition, the proposed DGCPSO has a superior local search ability compared with that of traditional PSO. Three problems, namely two supervised learning problems (identification and prediction) and one reinforcement learning problem (wall-following control of a mobile robot), were used to verify the proposed model and related algorithms. The results revealed that the average fitness value of the proposed DGCPSO was 1.2% higher than that of traditional PSO in identification, prediction, and wall-following control.

The advantages of the proposed IT2FNN based on DGCPSO learning are presented as follows:

- (1)

- The proposed DGCPSO uses the dynamic grouping and cooperative particle swarm optimization to improve search capabilities and reach near global optimum;

- (2)

- Interval type-2 fuzzy sets are used in IT2FNN to reduce sensor-sensing noise and disturbance;

- (3)

- The effectiveness and robustness of the proposed method are improved in identification, prediction, and control problems.

However, the proposed IT2FNN based on DGCPSO learning has limitations. In particular, determining the initial parameters, such as the number of fuzzy rules, depends on user experience or trial and error. In future research, a self-adapting number of fuzzy rules will be considered in the IT2FNN model. In addition, to achieve high-speed operation in real-time applications, the IT2FNN model will also be implemented on a field-programmable gate array in a future study.

Author Contributions

Conceptualization, C.-J.L.; methodology, C.-J.L. and S.-Y.J.; software, C.-J.L., S.-Y.J., H.-Y.L. and C.-Y.Y.; data curation, H.-Y.L. and C.-Y.Y.; writing-original draft preparation, C.-J.L. and S.-Y.J.; writing-review and editing, C.-J.L. and S.-Y.J.; funding acquisition, C.-J.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Ministry of Science and Technology of the Republic of China, grant number MOST 108-2221-E-167-026.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Qi, C.; Fourie, A.; Chen, Q. Neural network and particle swarm optimization for predicting the unconfined compressive strength of cemented paste backfill. Constr. Build. Mater. 2018, 159, 473–478.

- Naung, Y.; Schagin, A.; Oo, H.L.; Ye, K.Z.; Khaing, Z.M. Implementation of data driven control system of DC motor by using system identification process. In Proceedings of the 2018 IEEE Conference of Russian Young Researchers in Electrical and Electronic Engineering (EIConRus), Moscow, Russia, 29 January–1 February 2018.

- Cupertino, F.; Giordano, V.; Naso, D.; Delfine, L. Fuzzy control of a mobile robot. IEEE Robot. Autom. Mag. 2006, 13, 74–81.

- Zhu, M.; Wang, X.; Wang, Y. Human-like autonomous car-following model with deep reinforcement learning. Transp. Res. Part C Emerg. Technol. 2018, 97, 348–368.

- Anish, P.; Parhi, D.R. Multiple mobile robots navigation and obstacle avoidance using minimum rule based ANFIS network controller in the cluttered environment. Int. J. Adv. Robot. Autom. 2016, 1, 1–11.

- Masmoudi, M.S.; Krichen, N.; Masmoudi, M.; Derbel, N. Fuzzy logic controllers design for omnidirectional mobile robot navigation. Appl. Soft Comput. 2016, 49, 901–919.

- Hidalgo, D.; Castillo, O.; Melin, P. Type-1 and type-2 fuzzy inference systems as integration methods in modular neural networks for multimodal biometry and its optimization with genetic algorithms. Inf. Sci. 2009, 179, 2123–2145.

- Kayacan, E.; Khanesar, M.A. Chapter 2: Fundamentals of Type-1 Fuzzy Logic Theory. Fuzzy Neural Netw. Real Time Control Appl. 2016, 2, 13–24.

- Kayacan, E.; Khanesar, M.A. Chapter 3: Fundamentals of Type-2 Fuzzy Logic Theory. Fuzzy Neural Netw. Real Time Control Appl. 2016, 3, 25–35.

- Maguire, L.P.; Roche, B.; McGinnity, T.M.; McDaid, L.J. Predicting a chaotic time series using a fuzzy neural network. Inf. Sci. 1998, 112, 125–136.

- Sarabakha, A.; Fu, C.; Kayacan, E. Intuit before tuning: Type-1 and type-2 fuzzy logic controllers. Appl. Soft Comput. 2019, 81, 105495.

- Castillo, O.; Melin, P.; Kacprzyk, J.; Pedrycz, W. Type-2 Fuzzy Logic: Theory and Applications. In Proceedings of the 2007 IEEE International Conference on Granular Computing (GRC 2007), Fremont, CA, USA, 2–4 November 2007.

- Castillo, O.; Melin, P. Comparison of hybrid intelligent systems, neural networks, and interval type-2 fuzzy logic for time series prediction. In Proceedings of the International Joint Conference on Neural Networks, Orlando, FL, USA, 12–17 August 2007; pp. 3086–3091.

- El-Nagar, A.M.; El-Bardini, M. Interval type-2 fuzzy neural network controller for a multivariable anesthesia system based on a hardware-in-the-loop simulation. Artif. Intell. Med. 2014, 61, 1–10.

- Kim, C.J.; Chwa, D. Obstacle Avoidance Method for Wheeled Mobile Robots Using Interval Type-2 Fuzzy Neural Network. IEEE Trans. Fuzzy Syst. 2015, 23, 677–687.

- Mendel, J.M. General Type-2 Fuzzy Logic Systems Made Simple: A Tutorial. IEEE Trans. Fuzzy Syst. 2013, 22, 1162–1182.

- Liu, F. An efficient centroid type-reduction strategy for general type-2 fuzzy logic system. Inf. Sci. 2008, 179, 2224–2236.

- Sharifian, A.; Ghadi, M.J.; Ghavidel, S.; Li, L.; Zhang, J. A new method based on Type-2 fuzzy neural network for accurate wind power forecasting under uncertain data. Renew. Energy 2018, 120, 220–230.

- Adigun, O.; Kosko, B. Training Generative Adversarial Networks with Bidirectional Backpropagation. In Proceedings of the 2018 17th IEEE International Conference on Machine Learning and Applications (ICMLA), Orlando, FL, USA, 17–20 December 2018; pp. 1178–1185.

- Hou, Y.; Zhao, L.; Lu, H. Fuzzy neural network optimization and network traffic forecasting based on improved differential evolution. Future Gener. Comput. Syst. 2018, 81, 425–432.

- Gu, P.; Xiu, C.; Cheng, Y.; Luo, J.; Li, Y. Adaptive ant colony optimization algorithm. In Proceedings of the IEEE 2014 International Conference on Mechatronics and Control (ICMC), Jinzhou, China, 3–5 July 2014; Volume 6, pp. 6–8.

- Chen, X.; Wei, X.; Yang, G.; Du, W. Fireworks explosion based artificial bee colony for numerical optimization. Knowl.-Based Syst. 2020, 188, 105002.

- Mirjalili, S.; Lewis, A. The Whale Optimization Algorithm. Adv. Eng. Softw. 2016, 95, 51–67.

- Gong, W.; Cai, Z. Differential Evolution with Ranking-Based Mutation Operators. IEEE Trans. Cybern. 2013, 43, 2066–2081.

- Cai, X.; Gao, L.; Li, F. Sequential approximation optimization assisted particle swarm optimization for expensive problems. Appl. Soft Comput. 2019, 83, 105659.

- Gan, W.; Zhu, D.; Ji, D. QPSO-model predictive control-based approach to dynamic trajectory tracking control for unmanned underwater vehicles. Ocean Eng. 2018, 158, 208–220.

- Liu, X.F.; Zhou, Y.R.; Yu, X. Cooperative particle swarm optimization with reference-point-based prediction strategy for dynamic multiobjective optimization. Appl. Soft Comput. 2020, 87, 105988.

- Chang, J.Y.; Lin, Y.Y.; Han, M.F.; Lin, C.T. A functional-link based interval type-2 compensatory fuzzy neural network for nonlinear system modeling. In Proceedings of the 2011 IEEE International Conference on Fuzzy Systems (FUZZ-IEEE 2011), San Diego, CA, USA, 8–12 March 2011; pp. 939–943.

- Mendel, J.M. Type-2 fuzzy sets and systems: An overview. IEEE Comput. Intell. Mag. 2007, 2, 20–29.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).