GBSVM: Sentiment Classification from Unstructured Reviews Using Ensemble Classifier

Abstract

1. Introduction

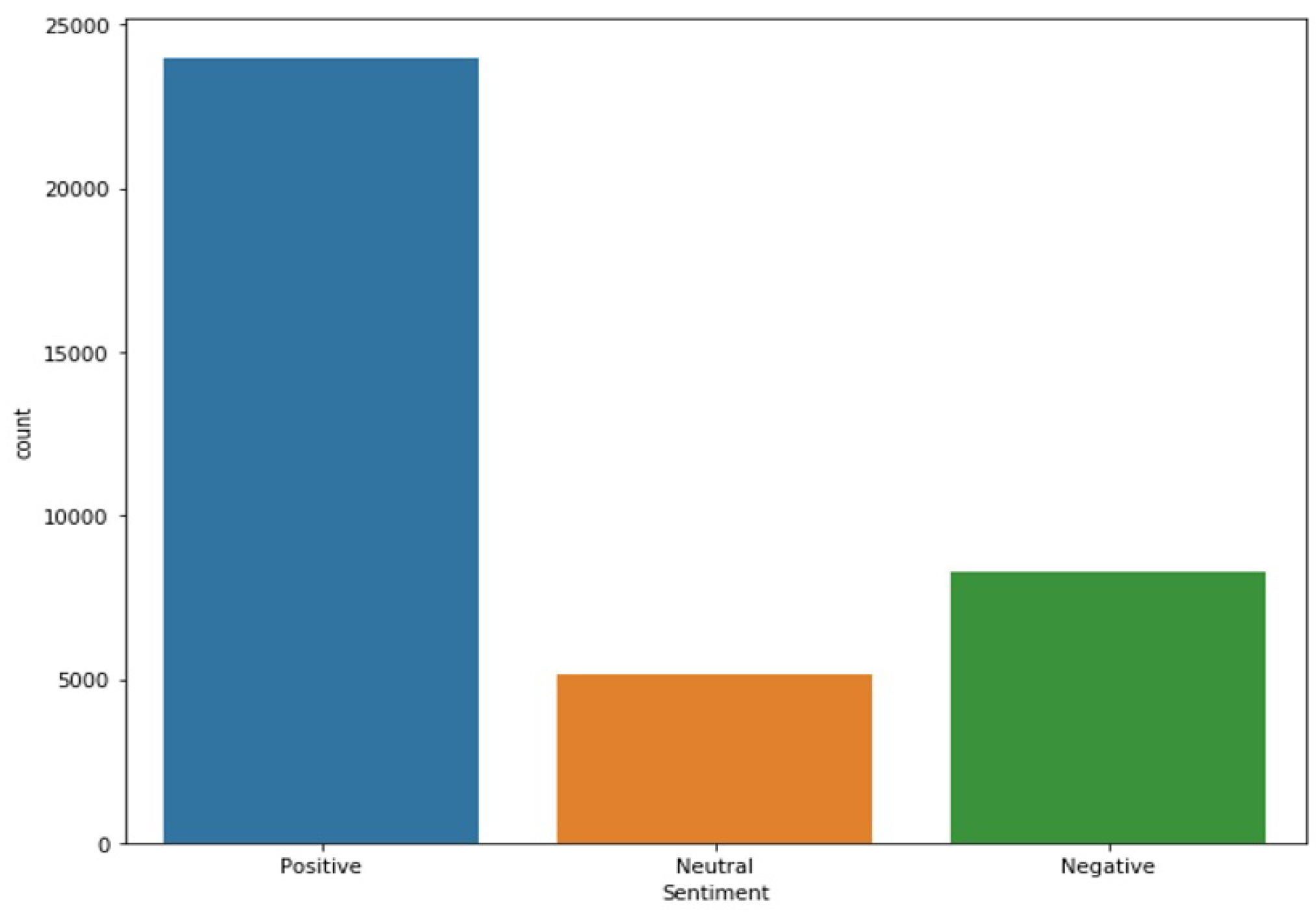

- The performance of Support Vector Machine (SVM), Gradient Boosting Machine (GBM), Logistic Regression (LR), and Random Forest (RF) is analyzed for sentiment analysis. For this purpose, the reviews in the Google apps dataset are divided by polarity into positive, negative, and neutral classes.

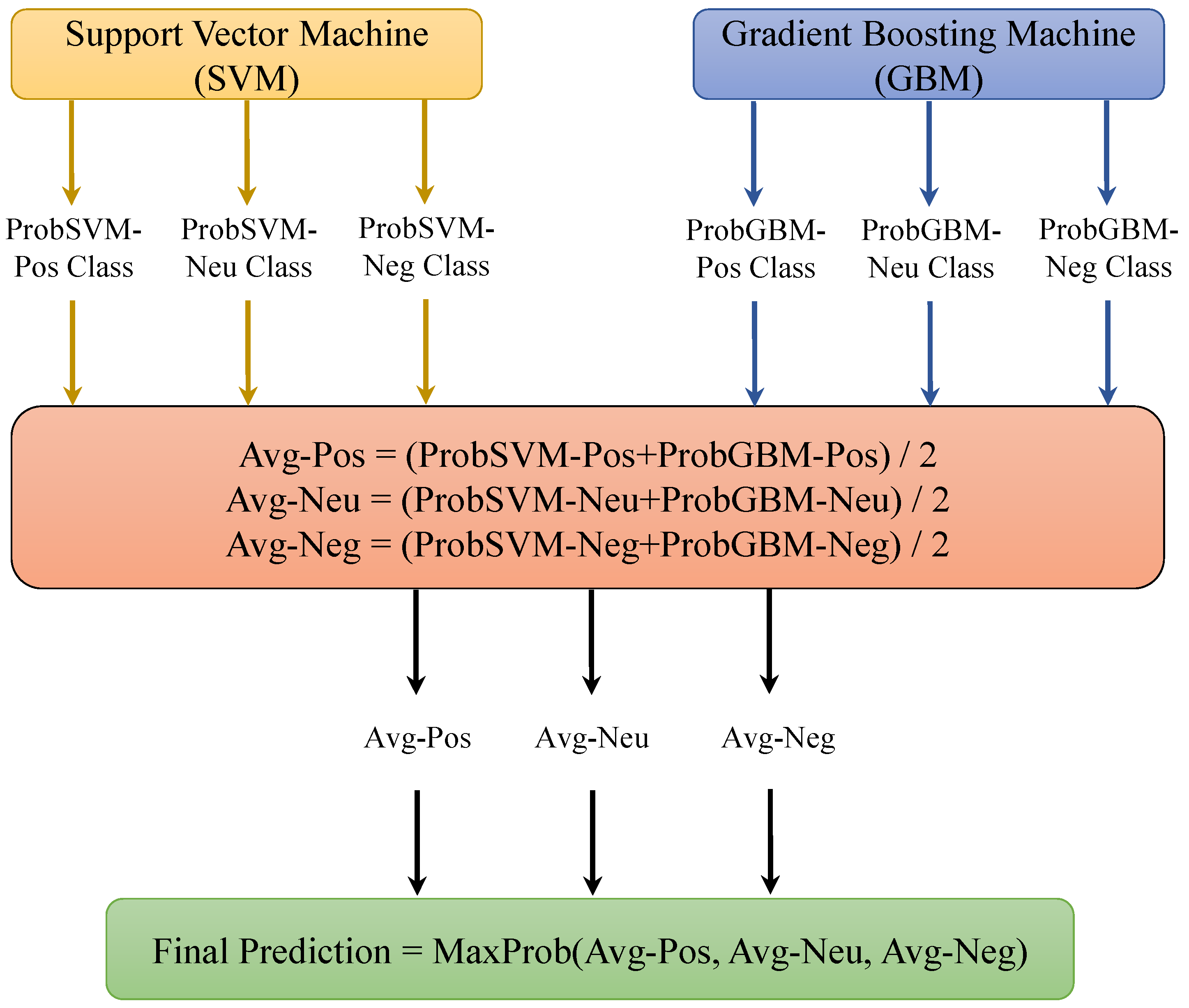

- A voting classifier called Gradient Boosted Support Vector Machine (GBSVM), which combines Gradient Boosting (GB) and SVM, is devised for sentiment analysis. Its performance is compared with that of four state-of-the-art ensemble methods.

- Term frequency (TF) and term frequency-inverse document frequency (TF-IDF) features are investigated with uni-gram, bi-gram, and tri-gram settings, using the selected classifiers as well as GBSVM, to analyze their impact on sentiment classification accuracy.
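The difference between TF and TF-IDF features over uni-, bi-, and tri-grams can be illustrated with a small sketch. The paper's exact tokenizer and weighting variant are not stated here, so this sketch assumes lower-cased whitespace tokenization and the smoothed idf, ln((1 + N) / (1 + df)) + 1, followed by L2 normalization (the default behaviour of scikit-learn's `TfidfVectorizer`). The function names are illustrative, not taken from the authors' code.

```python
import math
from collections import Counter


def extract_terms(text, ngram_range=(1, 1)):
    """Lower-case, split on whitespace, and return all n-grams for n in ngram_range."""
    tokens = text.lower().split()
    low, high = ngram_range
    terms = []
    for n in range(low, high + 1):
        # contiguous n-grams joined with a space, e.g. "great app"
        terms.extend(" ".join(tokens[i:i + n]) for i in range(len(tokens) - n + 1))
    return terms


def tf_vectors(docs, ngram_range=(1, 1)):
    """Raw term-frequency (count) vector for each document."""
    return [Counter(extract_terms(doc, ngram_range)) for doc in docs]


def tfidf_vectors(docs, ngram_range=(1, 1)):
    """TF-IDF with smoothed idf = ln((1 + N) / (1 + df)) + 1, then L2-normalized."""
    tfs = tf_vectors(docs, ngram_range)
    n_docs = len(docs)
    # document frequency: number of documents that contain each term
    df = Counter(term for tf in tfs for term in tf)
    idf = {term: math.log((1 + n_docs) / (1 + count)) + 1 for term, count in df.items()}
    vectors = []
    for tf in tfs:
        weights = {term: count * idf[term] for term, count in tf.items()}
        norm = math.sqrt(sum(w * w for w in weights.values())) or 1.0
        vectors.append({term: w / norm for term, w in weights.items()})
    return vectors


if __name__ == "__main__":
    reviews = ["great app love it", "app crashes all the time", "great app"]
    for name, rng in [("uni-gram", (1, 1)), ("bi-gram", (2, 2)), ("tri-gram", (3, 3))]:
        print(name, tf_vectors(reviews, rng)[0])
    print(tfidf_vectors(reviews, (1, 2))[2])
```

In the TF-IDF output, a term such as "app" that occurs in every review receives the smallest idf, so it is down-weighted relative to rarer, more discriminative terms such as "great".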

2. Literature Review

2.1. Review Classification Using Sentiment Analysis

2.2. Sentiment Analysis for Different Languages

2.3. Research Works on the Pre-Processing in Sentiment Analysis

3. Materials and Methods

3.1. Classifiers Selected for the Experiment

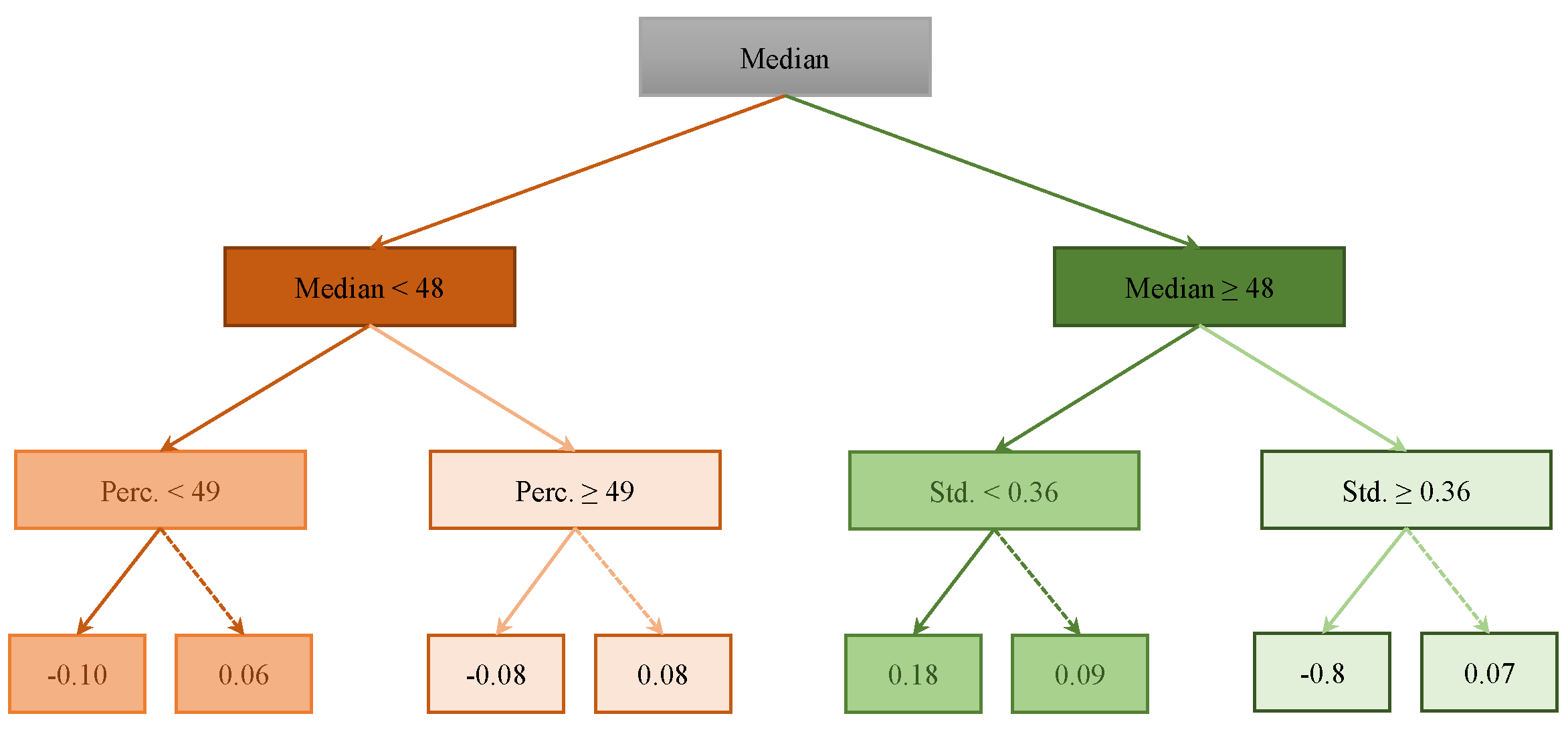

3.1.1. Random Forest

3.1.2. Logistic Regression

3.1.3. Gradient Boosting Machine

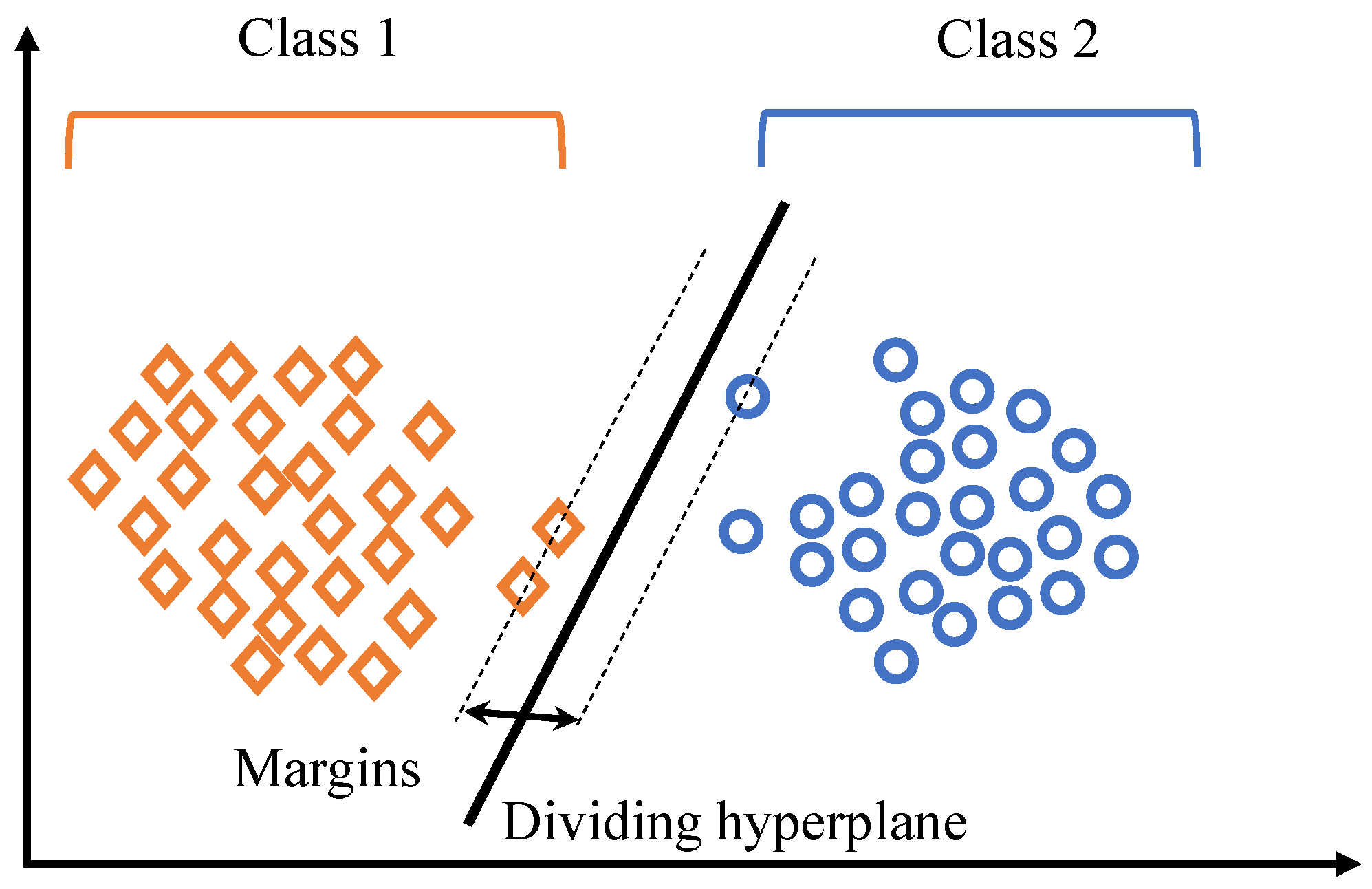

3.1.4. Support Vector Machine

Algorithm 1 Friedman’s Gradient Boosting Algorithm [31].

Inputs:
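The steps of Algorithm 1 did not survive extraction, so what follows is only a hedged sketch of Friedman's gradient boosting scheme [31] under simplifying assumptions: squared loss L(y, F) = (y − F)²/2, depth-1 regression stumps as base learners, and a fixed shrinkage `learning_rate`. Each stage fits a base learner to the pseudo-residuals (the negative gradient of the loss) and adds a shrunken copy of it to the model. All function names are illustrative. The GBM configured in this paper (max_depth 10, learning_rate 0.4, n_estimators 100) is a multiclass classifier. Implementations such as scikit-learn's `GradientBoostingClassifier` handle that case by fitting one tree per class at each stage on the gradient of the multinomial log-loss.

```python
def fit_stump(X, residuals):
    """Least-squares regression stump: returns (feature, threshold, left_value, right_value)."""
    best = None
    for j in range(len(X[0])):
        values = sorted({row[j] for row in X})
        for threshold in values[:-1]:  # candidate splits: x[j] <= threshold
            left = [r for row, r in zip(X, residuals) if row[j] <= threshold]
            right = [r for row, r in zip(X, residuals) if row[j] > threshold]
            left_mean = sum(left) / len(left)
            right_mean = sum(right) / len(right)
            sse = sum((r - left_mean) ** 2 for r in left) + sum((r - right_mean) ** 2 for r in right)
            if best is None or sse < best[0]:
                best = (sse, j, threshold, left_mean, right_mean)
    if best is None:  # every row identical: fall back to a constant
        mean = sum(residuals) / len(residuals)
        return (0, float("inf"), mean, mean)
    return best[1:]


def stump_predict(stump, x):
    j, threshold, left_value, right_value = stump
    return left_value if x[j] <= threshold else right_value


def gradient_boost(X, y, n_estimators=100, learning_rate=0.1):
    """Friedman-style gradient boosting for squared loss (y - F)^2 / 2."""
    f0 = sum(y) / len(y)  # F_0: the constant that minimizes squared loss
    pred = [f0] * len(y)
    stumps = []
    for _ in range(n_estimators):
        # pseudo-residuals: negative gradient of the loss w.r.t. F, i.e. y - F
        residuals = [yi - pi for yi, pi in zip(y, pred)]
        stump = fit_stump(X, residuals)  # base learner h_m fitted to the pseudo-residuals
        stumps.append(stump)
        # for squared loss the leaf means already solve the line search; apply shrinkage
        pred = [pi + learning_rate * stump_predict(stump, x) for pi, x in zip(pred, X)]
    return {"f0": f0, "learning_rate": learning_rate, "stumps": stumps}


def boost_predict(model, x):
    return model["f0"] + model["learning_rate"] * sum(stump_predict(s, x) for s in model["stumps"])
```

Smaller `learning_rate` values typically need more estimators to reach the same training fit. This is the usual trade-off between shrinkage and the number of boosting stages.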

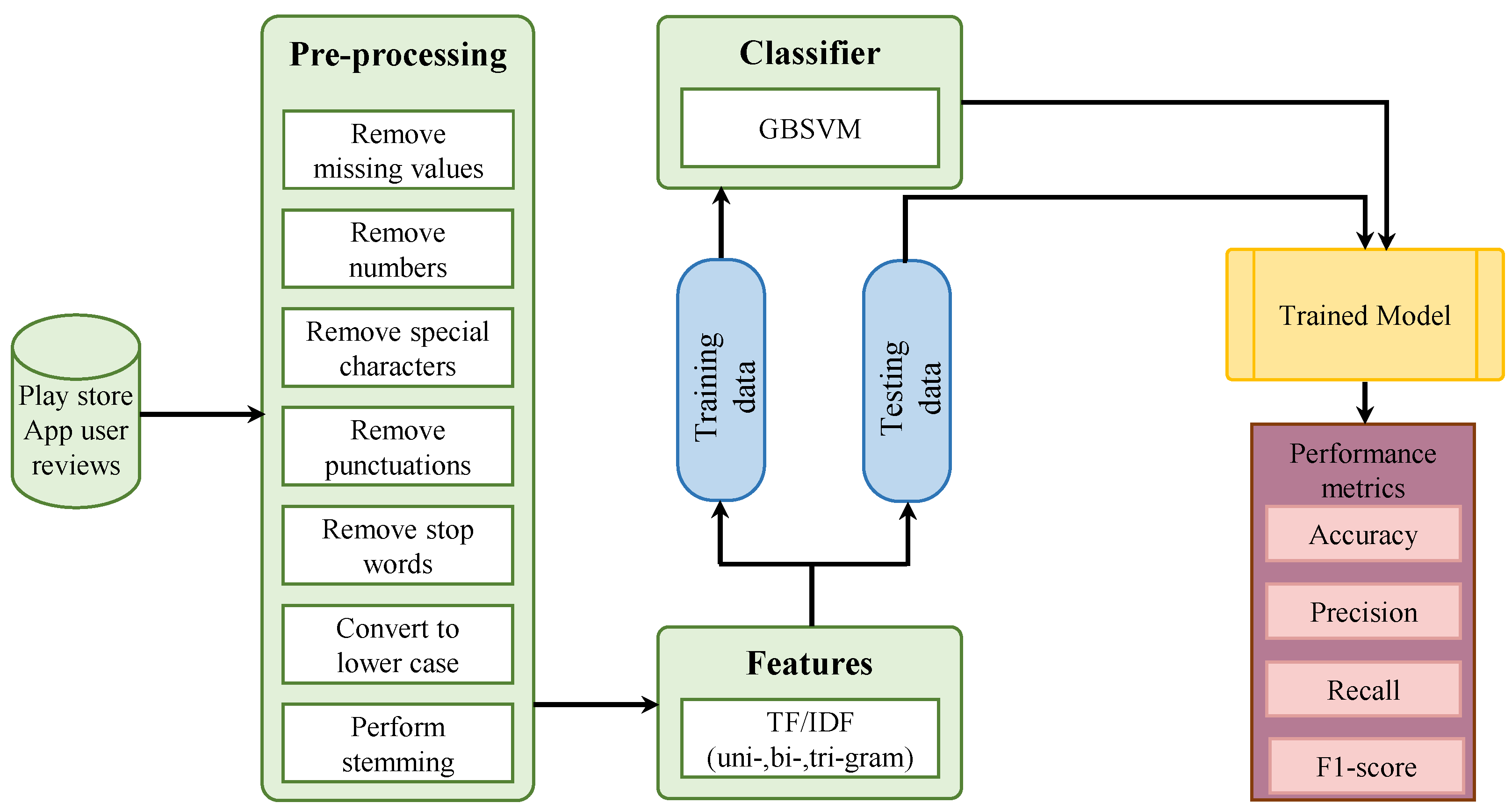

3.2. Proposed Approach

Algorithm 2 Support voting machine.

Input: input data
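The body of Algorithm 2 is not recoverable here. As a hedged illustration of the linear-kernel SVM component configured for this study (kernel = linear, C = 1.0), the sketch below trains a binary linear SVM by full-batch subgradient descent on the regularized hinge loss, with the Pegasos step size 1/(λt). This solver is swapped in for illustration only. It is not the SMO-based libsvm solver behind scikit-learn's `SVC`. The regularization weight relates to C roughly as λ = 1/(C·n) for n training samples. Extending the sketch to three polarity classes would need a one-vs-rest or one-vs-one wrapper. Note that scikit-learn's `SVC` always trains one-vs-one internally, and `decision_function_shape='ovr'` only reshapes its decision values. All names are illustrative.

```python
def _augment(x):
    # append a constant 1.0 so the last weight acts as a (regularized) bias term
    return list(x) + [1.0]


def _dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def train_linear_svm(X, y, lam=0.01, epochs=200):
    """Binary linear SVM (labels +1/-1) trained by full-batch subgradient descent on
    lam/2 * ||w||^2 + (1/n) * sum_i max(0, 1 - y_i * w.x_i), step size 1/(lam * t)."""
    Xa = [_augment(x) for x in X]
    n, d = len(Xa), len(Xa[0])
    w = [0.0] * d
    for t in range(1, epochs + 1):
        eta = 1.0 / (lam * t)
        grad = [lam * wj for wj in w]  # gradient of the L2 regularizer
        for xi, yi in zip(Xa, y):
            if yi * _dot(w, xi) < 1.0:  # margin violated: hinge subgradient is -y_i * x_i
                for j in range(d):
                    grad[j] -= yi * xi[j] / n
        w = [wj - eta * gj for wj, gj in zip(w, grad)]
    return w


def decision_function(w, x):
    return _dot(w, _augment(x))


def predict(w, x):
    return 1 if decision_function(w, x) >= 0 else -1
```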

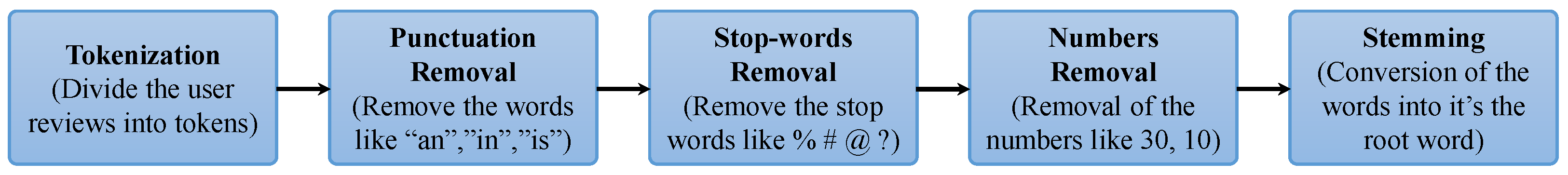

3.2.1. Data Pre-Processing

3.2.2. Proposed GBSVM

Algorithm 3 Gradient Boosted Support Vector Machine (GBSVM).

Input: input data

= Trained_GBM

= Trained_SVM
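The surviving lines of Algorithm 3 show only that GBSVM takes a trained GBM and a trained SVM as inputs. The sketch below shows one way such a two-member voting classifier can combine their outputs. Soft voting (averaging per-class probabilities, optionally weighted) is an assumption here, as are the equal default weights and the class order. With only two members, plain majority (hard) voting ties whenever the members disagree, which is why the sketch reports `None` in that case. In scikit-learn, soft voting over an `SVC` would additionally require `probability=True`, which is not listed in the hyperparameter table. All names and probability values are hypothetical.

```python
from collections import Counter

CLASSES = ("negative", "neutral", "positive")  # assumed class order


def soft_vote(prob_vectors, weights=None):
    """Weighted average of per-class probabilities; returns (label, averaged probabilities)."""
    weights = weights or [1.0] * len(prob_vectors)
    total = sum(weights)
    avg = [
        sum(w * probs[c] for w, probs in zip(weights, prob_vectors)) / total
        for c in range(len(CLASSES))
    ]
    best = max(range(len(CLASSES)), key=lambda c: avg[c])
    return CLASSES[best], avg


def hard_vote(labels):
    """Majority vote over predicted labels; returns None on a tie."""
    ranked = Counter(labels).most_common()
    if len(ranked) > 1 and ranked[0][1] == ranked[1][1]:
        return None  # e.g. GBM predicts "neutral" while SVM predicts "positive"
    return ranked[0][0]


if __name__ == "__main__":
    p_gbm = [0.2, 0.5, 0.3]  # hypothetical GBM probabilities for one review
    p_svm = [0.1, 0.3, 0.6]  # hypothetical SVM probabilities for the same review
    print(soft_vote([p_gbm, p_svm]))
    print(hard_vote(["neutral", "positive"]))
```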

3.3. Dataset

3.4. Feature Selection

3.5. Performance Evaluation Parameters

3.6. Experiment Settings

4. Results and Discussions

4.1. Performance Analysis of the Proposed GBSVM

4.2. Analyzing the Performance of GBSVM on Additional Dataset

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

1. Chen, L.; Wang, W.; Nagarajan, M.; Wang, S.; Sheth, A.P. Extracting diverse sentiment expressions with target-dependent polarity from Twitter. In Proceedings of the Sixth International AAAI Conference on Weblogs and Social Media, Dublin, Ireland, 4–7 June 2012.

2. Liu, B. Sentiment Analysis and Subjectivity. In Handbook of Natural Language Processing; Marcel Dekker, Inc.: New York, NY, USA, 2009.

3. Dave, K.; Lawrence, S.; Pennock, D.M. Mining the peanut gallery: Opinion extraction and semantic classification of product reviews. In Proceedings of the 12th International Conference on World Wide Web, Budapest, Hungary, 20–24 May 2003; pp. 519–528.

4. Kasper, W.; Vela, M. Sentiment analysis for hotel reviews. In Proceedings of the Computational Linguistics-Applications Conference, Jachranka, Poland, 17–19 October 2011; Volume 231527, pp. 45–52.

5. Hussein, D.M.E.D.M. A survey on sentiment analysis challenges. J. King Saud Univ.-Eng. Sci. 2018, 30, 330–338.

6. Mukherjee, A.; Venkataraman, V.; Liu, B.; Glance, N. What Yelp fake review filter might be doing? In Proceedings of the Seventh International AAAI Conference on Weblogs and Social Media, Cambridge, MA, USA, 8–11 July 2013.

7. Pang, B.; Lee, L.; Vaithyanathan, S. Thumbs up? Sentiment classification using machine learning techniques. In Proceedings of the ACL-02 Conference on Empirical Methods in Natural Language Processing, Stroudsburg, Philadelphia, PA, USA, 6–7 July 2002; Association for Computational Linguistics: Shumen, Bulgaria, 2002; Volume 10, pp. 79–86.

8. Pang, B.; Lee, L. Opinion mining and sentiment analysis. Found. Trends Inf. Retr. 2008, 2, 1–135.

9. Rustam, F.; Ashraf, I.; Mehmood, A.; Ullah, S.; Choi, G.S. Tweets Classification on the Base of Sentiments for US Airline Companies. Entropy 2019, 21, 1078.

10. Neethu, M.; Rajasree, R. Sentiment analysis in Twitter using machine learning techniques. In Proceedings of the 2013 Fourth International Conference on Computing, Communications and Networking Technologies (ICCCNT), Tiruchengode, India, 4–6 July 2013; pp. 1–5.

11. Ortigosa, A.; Martín, J.M.; Carro, R.M. Sentiment analysis in Facebook and its application to e-learning. Comput. Hum. Behav. 2014, 31, 527–541.

12. Bakshi, R.K.; Kaur, N.; Kaur, R.; Kaur, G. Opinion mining and sentiment analysis. In Proceedings of the 2016 3rd International Conference on Computing for Sustainable Global Development (INDIACom), New Delhi, India, 16–18 March 2016; pp. 452–455.

13. Barnaghi, P.; Ghaffari, P.; Breslin, J.G. Opinion mining and sentiment polarity on Twitter and correlation between events and sentiment. In Proceedings of the 2016 IEEE Second International Conference on Big Data Computing Service and Applications (BigDataService), Oxford, UK, 29 March–1 April 2016; pp. 52–57.

14. Ahmed, S.; Danti, A. A novel approach for Sentimental Analysis and Opinion Mining based on SentiWordNet using web data. In Proceedings of the 2015 International Conference on Trends in Automation, Communications and Computing Technology (I-TACT-15), Bangalore, India, 21–22 December 2015; pp. 1–5.

15. Duwairi, R.M.; Qarqaz, I. Arabic sentiment analysis using supervised classification. In Proceedings of the 2014 International Conference on Future Internet of Things and Cloud, Barcelona, Spain, 27–29 August 2014; pp. 579–583.

16. Le, H.S.; Van Le, T.; Pham, T.V. Aspect analysis for opinion mining of Vietnamese text. In Proceedings of the 2015 International Conference on Advanced Computing and Applications (ACOMP), Ho Chi Minh City, Vietnam, 23–25 November 2015; pp. 118–123.

17. Chumwatana, T. Using sentiment analysis technique for analyzing Thai customer satisfaction from social media. In Proceedings of the 5th International Conference on Computing and Informatics (ICOCI), Istanbul, Turkey, 11–13 August 2015.

18. Boiy, E.; Moens, M.F. A machine learning approach to sentiment analysis in multilingual Web texts. Inf. Retr. 2009, 12, 526–558.

19. Duwairi, R.; El-Orfali, M. A study of the effects of preprocessing strategies on sentiment analysis for Arabic text. J. Inf. Sci. 2014, 40, 501–513.

20. Uysal, A.K.; Gunal, S. The impact of preprocessing on text classification. Inf. Process. Manag. 2014, 50, 104–112.

21. Kalra, V.; Aggarwal, R. Importance of Text Data Preprocessing & Implementation in RapidMiner. In Proceedings of the First International Conference on Information Technology and Knowledge Management, New Delhi, India, 22–23 December 2017; Volume 14, pp. 71–75.

22. Uysal, A.K.; Gunal, S. A novel probabilistic feature selection method for text classification. Knowl.-Based Syst. 2012, 36, 226–235.

23. Hackeling, G. Mastering Machine Learning with Scikit-Learn; Packt Publishing Ltd.: Birmingham, UK, 2017.

24. Wang, G.; Sun, J.; Ma, J.; Xu, K.; Gu, J. Sentiment classification: The contribution of ensemble learning. Decis. Support Syst. 2014, 57, 77–93.

25. Whitehead, M.; Yaeger, L. Sentiment mining using ensemble classification models. In Innovations and Advances in Computer Sciences and Engineering; Springer: Dordrecht, The Netherlands, 2010; pp. 509–514.

26. Zhou, Z.H. Ensemble Learning. Encycl. Biom. 2009, 1, 270–273.

27. Deng, X.B.; Ye, Y.M.; Li, H.B.; Huang, J.Z. An improved random forest approach for detection of hidden web search interfaces. In Proceedings of the 2008 International Conference on Machine Learning and Cybernetics, Kunming, China, 12–15 July 2008; Volume 3, pp. 1586–1591.

28. Breiman, L. Random Forests. Mach. Learn. 2001, 45, 5–32.

29. Korkmaz, M.; Güney, S.; Yiğiter, Ş. The importance of logistic regression implementations in the Turkish livestock sector and logistic regression implementations/fields. Harran Tarım ve Gıda Bilimleri Dergisi 2012, 16, 25–36.

30. Johnson, R.; Zhang, T. Learning nonlinear functions using regularized greedy forest. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 36, 942–954.

31. Friedman, J.H. Greedy function approximation: A gradient boosting machine. Ann. Stat. 2001, 29, 1189–1232.

32. Natekin, A.; Knoll, A. Gradient boosting machines, a tutorial. Front. Neurorobot. 2013, 7, 21.

33. Bissacco, A.; Yang, M.H.; Soatto, S. Fast human pose estimation using appearance and motion via multi-dimensional boosting regression. In Proceedings of the 2007 IEEE Conference on Computer Vision and Pattern Recognition, Minneapolis, MN, USA, 17–22 June 2007; pp. 1–8.

34. Hutchinson, R.A.; Liu, L.P.; Dietterich, T.G. Incorporating boosted regression trees into ecological latent variable models. In Proceedings of the Twenty-Fifth AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 7–11 August 2011.

35. Cortes, C.; Vapnik, V. Support-vector networks. Mach. Learn. 1995, 20, 273–297.

36. Aslam, S.; Ashraf, I. Data mining algorithms and their applications in education data mining. Int. J. Adv. Res. Comp. Sci. Manag. Stud. 2014, 2, 50–56.

37. Byun, H.; Lee, S.W. A survey on pattern recognition applications of support vector machines. Int. J. Pattern Recognit. Artif. Intell. 2003, 17, 459–486.

38. Burges, C.J. Geometry and Invariance in Kernel Based Methods. In Advances in Kernel Methods: Support Vector Learning; MIT Press: Cambridge, MA, USA, 1999.

39. Shmilovici, A. Support vector machines. In Data Mining and Knowledge Discovery Handbook; Springer: Berlin, Germany, 2009; pp. 231–247.

40. Smola, A.; Schölkopf, B. A Tutorial on Support Vector Regression; NeuroCOLT Technical Report NC-TR-98-030; Royal Holloway Coll. Univ.: London, UK, 1998; Available online: http://www.kernel-machines (accessed on 7 April 2020).

41. Smola, A.J.; Schölkopf, B.; Müller, K.R. The connection between regularization operators and support vector kernels. Neural Netw. 1998, 11, 637–649.

42. Epanechnikov, V.A. Non-parametric estimation of a multivariate probability density. Theory Probab. Its Appl. 1969, 14, 153–158.

43. Zhang, Y. Support vector machine classification algorithm and its application. In International Conference on Information Computing and Applications; Springer: Berlin/Heidelberg, Germany, 2012; pp. 179–186.

44. Shevade, S.K.; Keerthi, S.S.; Bhattacharyya, C.; Murthy, K.R.K. Improvements to the SMO algorithm for SVM regression. IEEE Trans. Neural Netw. 2000, 11, 1188–1193.

45. Vijayarani, S.; Janani, R. Text mining: Open source tokenization tools-an analysis. Adv. Comput. Intell. Int. J. 2016, 3, 37–47.

46. Yang, S.; Zhang, H. Text mining of Twitter data using a latent Dirichlet allocation topic model and sentiment analysis. Int. J. Comput. Inf. Eng. 2018, 12, 525–529.

47. Anandarajan, M.; Nolan, T. Practical Text Analytics. Maximizing the Value of Text Data. In Advances in Analytics and Data Science; Springer Nature Switzerland AG: Cham, Switzerland, 2019; Volume 2.

48. Bennett, K.P.; Campbell, C. Support vector machines: Hype or hallelujah? ACM SIGKDD Explor. Newsl. 2000, 2, 1–13.

49. Agnihotri, D.; Verma, K.; Tripathi, P.; Singh, B.K. Soft voting technique to improve the performance of global filter based feature selection in text corpus. Appl. Intell. 2019, 49, 1597–1619.

50. Kaggle. Google Play Store Apps. 2019. Available online: https://www.kaggle.com/lava18/google-play-store-apps (accessed on 21 November 2019).

51. Tellex, S.; Katz, B.; Lin, J.; Fernandes, A.; Marton, G. Quantitative evaluation of passage retrieval algorithms for question answering. In Proceedings of the 26th Annual International ACM SIGIR Conference on Research and Development in Information Retrieval, Toronto, ON, Canada, 28 July–1 August 2003; pp. 41–47.

52. Zhao, R.; Mao, K. Fuzzy bag-of-words model for document representation. IEEE Trans. Fuzzy Syst. 2017, 26, 794–804.

53. Mikolov, T.; Chen, K.; Corrado, G.; Dean, J. Efficient estimation of word representations in vector space. arXiv 2013, arXiv:1301.3781.

54. Huang, L. Measuring Similarity Between Texts in Python. 2017. Available online: https://sites.temple.edu/tudsc/2017/03/30/measuring-similarity-between-texts-in-python/ (accessed on 21 November 2019).

55. Joulin, A.; Grave, E.; Bojanowski, P.; Mikolov, T. Bag of tricks for efficient text classification. arXiv 2016, arXiv:1607.01759.

56. Sisodia, D.S.; Nikhil, S.; Kiran, G.S.; Shrawgi, H. Performance Evaluation of Learners for Analyzing the Hotel Customer Sentiments Based on Text Reviews. In Performance Management of Integrated Systems and its Applications in Software Engineering; Springer: Singapore, 2020; pp. 199–209.

57. Oprea, C. Performance evaluation of the data mining classification methods. Inf. Soc. Sustain. Dev. 2014, 2344, 249–253.

58. Han, J.; Pei, J.; Kamber, M. Data Mining: Concepts and Techniques; Elsevier: Waltham, MA, USA, 2011.

59. Shalev-Shwartz, S.; Ben-David, S. Understanding Machine Learning: From Theory to Algorithms; Cambridge University Press: Cambridge, UK, 2014.

60. Danesh, A.; Moshiri, B.; Fatemi, O. Improve text classification accuracy based on classifier fusion methods. In Proceedings of the 2007 10th International Conference on Information Fusion, Quebec, QC, Canada, 9–12 July 2007; pp. 1–6.

61. Kaggle. 20 Newsgroups. 2017. Available online: https://www.kaggle.com/crawford/20-newsgroups (accessed on 14 January 2020).

62. Wu, F.; Zhang, T.; Souza, A.H.D., Jr.; Fifty, C.; Yu, T.; Weinberger, K.Q. Simplifying graph convolutional networks. arXiv 2019, arXiv:1902.07153.

63. Yamada, I.; Shindo, H. Neural Attentive Bag-of-Entities Model for Text Classification. arXiv 2019, arXiv:1909.01259.

| Attribute | Description |
|---|---|
| App | The name of the app on the Google Play Store. |
| Translated_Reviews | The review text written by an individual user. |
| Sentiments | The sentiment label of the review: positive, negative, or neutral. |
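A minimal loader for reviews with the three attributes above might look like the following sketch. The column names are taken from the attribute table and may differ from the header of the raw CSV file, so they are parameters. Treating empty or literal "nan" cells as missing, and lower-casing labels such as "Negative", are assumptions about how the raw file is cleaned. The sample rows are hypothetical.

```python
import csv
import io
from collections import Counter

LABELS = {"positive", "negative", "neutral"}


def load_reviews(fileobj, text_col="Translated_Reviews", label_col="Sentiments"):
    """Read (review, label) pairs from a CSV file object, skipping rows without usable text or label."""
    reviews, labels = [], []
    for row in csv.DictReader(fileobj):
        text = (row.get(text_col) or "").strip()
        label = (row.get(label_col) or "").strip().lower()  # normalize "Negative" -> "negative"
        if not text or text.lower() == "nan" or label not in LABELS:
            continue  # skip missing reviews and unlabeled rows
        reviews.append(text)
        labels.append(label)
    return reviews, labels


if __name__ == "__main__":
    # Hypothetical rows mimicking the App / Translated_Reviews / Sentiments attributes.
    sample = (
        "App,Translated_Reviews,Sentiments\n"
        "AppA,Great app,Positive\n"
        "AppA,nan,nan\n"
        'AppB,"Crashes, often",Negative\n'
    )
    reviews, labels = load_reviews(io.StringIO(sample))
    print(Counter(labels))
```

For a file on disk, pass a handle opened with `open(path, newline="", encoding="utf-8")`, as the `csv` module documentation recommends. Here `path` is whatever local copy of the dataset is used.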

| Parameter | RF | LR | GBM | SVM | GBSVM |
|---|---|---|---|---|---|
| max_depth | 10 | - | 10 | - | 10 |
| learning_rate | - | - | 0.4 | - | 0.4 |
| n_estimators | - | - | 100 | - | 100 |
| random_state | 21 | - | 2 | None | 2 |
| C | - | 1.0 | - | 1.0 | 1.0 |
| cache_size | - | - | - | 200 | 200 |
| decision_function_shape | - | - | - | 'ovr' | 'ovr' |
| degree | - | - | - | 3 | 3 |
| gamma | - | - | - | auto_deprecated | - |
| kernel | - | - | - | linear | linear |
| max_iterations | - | 100 | - | −1 | −1 |
| shrinking | - | - | - | True | True |
| tol (tolerance) | - | 0.0001 | - | 0.001 | 0.001 |
| penalty | - | L2 | - | - | - |
| fit_intercept | - | True | - | - | - |
| solver | - | lbfgs | - | - | - |

| Classifier | Accuracy | Negative Precision | Negative Recall | Negative F1 | Neutral Precision | Neutral Recall | Neutral F1 | Positive Precision | Positive Recall | Positive F1 |
|---|---|---|---|---|---|---|---|---|---|---|
| GBSVM | 0.93 | 0.92 | 0.85 | 0.88 | 0.86 | 0.94 | 0.90 | 0.95 | 0.96 | 0.96 |
| GBM | 0.91 | 0.91 | 0.80 | 0.85 | 0.78 | 0.95 | 0.86 | 0.95 | 0.95 | 0.95 |
| SVM | 0.92 | 0.87 | 0.87 | 0.87 | 0.86 | 0.92 | 0.89 | 0.95 | 0.94 | 0.95 |
| LR | 0.92 | 0.91 | 0.84 | 0.87 | 0.84 | 0.90 | 0.87 | 0.95 | 0.96 | 0.95 |
| RF | 0.87 | 0.85 | 0.71 | 0.78 | 0.80 | 0.87 | 0.83 | 0.90 | 0.94 | 0.92 |
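The per-class columns in these tables follow the standard definitions. For a class c, precision is TP/(TP + FP), recall is TP/(TP + FN), and F1 is their harmonic mean, 2PR/(P + R), so F1 always lies between the smaller and the larger of the two. The sketch below computes these quantities and overall accuracy from label lists. The function names and the toy labels are illustrative. The last line reproduces the GBSVM negative-class F1 from the precision and recall reported above.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (0.0 when both are zero)."""
    return 2 * precision * recall / (precision + recall) if precision + recall else 0.0


def per_class_metrics(y_true, y_pred, classes):
    """Precision, recall, and F1 per class, treating each class in turn as the positive one."""
    pairs = list(zip(y_true, y_pred))
    metrics = {}
    for c in classes:
        tp = sum(1 for t, p in pairs if t == c and p == c)
        fp = sum(1 for t, p in pairs if t != c and p == c)
        fn = sum(1 for t, p in pairs if t == c and p != c)
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        metrics[c] = (precision, recall, f1_score(precision, recall))
    return metrics


def accuracy(y_true, y_pred):
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


if __name__ == "__main__":
    y_true = ["positive", "positive", "negative", "neutral", "negative"]
    y_pred = ["positive", "negative", "negative", "neutral", "positive"]
    print(per_class_metrics(y_true, y_pred, ["negative", "neutral", "positive"]))
    print(accuracy(y_true, y_pred))  # 0.6
    print(round(f1_score(0.92, 0.85), 2))  # 0.88, the GBSVM negative-class F1 above
```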

| Classifier | Accuracy | Negative Precision | Negative Recall | Negative F1 | Neutral Precision | Neutral Recall | Neutral F1 | Positive Precision | Positive Recall | Positive F1 |
|---|---|---|---|---|---|---|---|---|---|---|
| GBSVM | 0.92 | 0.92 | 0.84 | 0.88 | 0.85 | 0.90 | 0.87 | 0.94 | 0.96 | 0.95 |
| GBM | 0.91 | 0.92 | 0.82 | 0.87 | 0.80 | 0.94 | 0.86 | 0.95 | 0.95 | 0.95 |
| SVM | 0.91 | 0.89 | 0.83 | 0.86 | 0.84 | 0.86 | 0.85 | 0.93 | 0.95 | 0.94 |
| LR | 0.88 | 0.91 | 0.75 | 0.82 | 0.85 | 0.71 | 0.77 | 0.88 | 0.97 | 0.92 |
| RF | 0.87 | 0.87 | 0.71 | 0.78 | 0.79 | 0.82 | 0.81 | 0.89 | 0.94 | 0.92 |

| Classifier | Accuracy | Negative Precision | Negative Recall | Negative F1 | Neutral Precision | Neutral Recall | Neutral F1 | Positive Precision | Positive Recall | Positive F1 |
|---|---|---|---|---|---|---|---|---|---|---|
| GBSVM | 0.75 | 0.75 | 0.56 | 0.64 | 0.60 | 0.26 | 0.36 | 0.76 | 0.92 | 0.83 |
| GBM | 0.70 | 0.76 | 0.36 | 0.49 | 0.46 | 0.18 | 0.26 | 0.71 | 0.94 | 0.81 |
| SVM | 0.75 | 0.83 | 0.49 | 0.61 | 0.90 | 0.20 | 0.32 | 0.74 | 0.97 | 0.84 |
| LR | 0.68 | 0.88 | 0.21 | 0.34 | 0.92 | 0.03 | 0.06 | 0.67 | 0.99 | 0.80 |
| RF | 0.73 | 0.78 | 0.49 | 0.60 | 0.55 | 0.26 | 0.35 | 0.75 | 0.93 | 0.83 |

| Classifier | Accuracy | Negative Precision | Negative Recall | Negative F1 | Neutral Precision | Neutral Recall | Neutral F1 | Positive Precision | Positive Recall | Positive F1 |
|---|---|---|---|---|---|---|---|---|---|---|
| GBSVM | 0.72 | 0.83 | 0.37 | 0.51 | 0.69 | 0.19 | 0.30 | 0.71 | 0.96 | 0.82 |
| GBM | 0.68 | 0.84 | 0.18 | 0.30 | 0.77 | 0.11 | 0.19 | 0.67 | 0.99 | 0.80 |
| SVM | 0.72 | 0.96 | 0.32 | 0.48 | 0.94 | 0.17 | 0.29 | 0.70 | 0.99 | 0.82 |
| LR | 0.66 | 0.97 | 0.11 | 0.20 | 1.00 | 0.01 | 0.03 | 0.65 | 1.00 | 0.79 |
| RF | 0.72 | 0.41 | 0.32 | 0.47 | 0.84 | 0.18 | 0.30 | 0.70 | 0.99 | 0.82 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Khalid, M.; Ashraf, I.; Mehmood, A.; Ullah, S.; Ahmad, M.; Choi, G.S. GBSVM: Sentiment Classification from Unstructured Reviews Using Ensemble Classifier. Appl. Sci. 2020, 10, 2788. https://doi.org/10.3390/app10082788
