1. Introduction

Protein–protein interactions (PPIs) play important roles in various biological processes, are of pivotal importance in the regulation of biological systems, and are consequently implicated in the development of disease states [1]. The exponentially increasing size of the biomedical literature and the limited ability of manual curators to discover PPIs in text have led to delays in keeping PPI databases, such as BIND (The Biomolecular Interaction Network Database) [2], MINT (The Molecular INTeraction Database) [3], and the IntAct molecular interaction database [4], up to date with current findings. This causes a bottleneck when leveraging the valuable information that is currently available in order to develop personalized health care solutions.

Previous studies have explored different methods for PPI extraction. The dominant techniques generally fall under three broad categories: co-occurrence-based methods [5], rule-and-pattern-based methods [6,7], and statistical machine learning (ML)-based methods [8,9,10,11,12]. Co-occurrence-based methods measure the correlation between each pair of entities by co-occurrence. A major weakness of these methods is their tendency toward high recall but low precision. This is mainly because when entities do not appear in pairs in the training set, no co-occurrence with correlation can be recorded. Rule-and-pattern-based methods employ predefined patterns and rules to match the labelled sequence. Although traditional rule-and-pattern-based methods have achieved high accuracy, the sophistication required in pattern design and their reduced recall make them unsuitable for practical usage. Compared with co-occurrence- and rule-and-pattern-based methods, ML-based methods show much better performance and generalization. In particular, the recent surge of interest in deep learning (DL) methods is due to the fact that they have been shown to outperform previous state-of-the-art techniques for PPI extraction tasks.

Generally, ML-based approaches cast the problem of PPI extraction as a classification problem. Suppose we want to extract the binary PPI between entity $e_1$ and entity $e_2$ in a given sentence $S$, where $w_i$ is a word in $S$. The classification model is constructed to output 1 when $e_1$ and $e_2$ are related, and 0 otherwise. The inputs of the classification model are the features extracted from $S$. A key difference between traditional ML and DL is in how features are extracted and represented. Traditional ML-based methods usually collect words around the target entities as features, such as unigrams, bigrams, and some semantic and syntactic features; these features are then put into a bag-of-words model and encoded into one-hot representations. However, such representations are unable to capture semantic relations among words or phrases and fail to generalize over long-range context dependencies [13]. The former issue is known as the “vocabulary gap” (e.g., the words “depend” and “rely” are different in one-hot representations, despite their similar linguistic functions). The latter arises from the n-th order Markov restriction, which is imposed to alleviate the “curse of dimensionality.” Moreover, the inability to extract features automatically leads to laborious manual effort in designing features.
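The “vocabulary gap” can be illustrated with a small sketch. The vocabulary and the dense vectors below are hypothetical values picked by hand for illustration; they are not taken from any trained embedding. Any two distinct one-hot vectors are orthogonal, so their cosine similarity is zero no matter how related the words are, whereas dense vectors can place related words close together.

```python
import math

def one_hot(word, vocab):
    """Return the one-hot vector of `word` over an ordered vocabulary."""
    return [1.0 if w == word else 0.0 for w in vocab]

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

vocab = ["depend", "rely", "bind"]

# Hypothetical 2-d dense vectors, chosen by hand for illustration only.
dense = {"depend": [0.9, 0.1], "rely": [0.8, 0.2], "bind": [-0.1, 0.9]}

# Distinct one-hot vectors never overlap, so the similarity is exactly zero.
one_hot_sim = cosine(one_hot("depend", vocab), one_hot("rely", vocab))

# Dense vectors can encode that "depend" and "rely" behave similarly.
dense_sim = cosine(dense["depend"], dense["rely"])

print(one_hot_sim, round(dense_sim, 3))
```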

In contrast to one-hot encoding, distributed word representations were proposed to solve the “vocabulary gap” problem by mapping words to dense vectors of real numbers in a low-dimensional space [14,15]. In addition to using words as features, some semantic and syntactic features, such as part-of-speech (POS), word position, and the dependency path between two entities, can also be embedded in distributed representations for feature learning.

Recent studies have proposed several feature embedding methods combining DL models for PPI extraction. Most of these studies focused on finding effective linguistic features for embedding or on tuning model hyperparameters for a certain framework of DL (e.g., convolutional neural networks (CNN) and long short-term memory (LSTM)). Different from previous work, this study focuses on exploring hybrid deep learning architecture for PPI extraction tasks. Our contributions can be summarized as follows:

- (1) We propose a hybrid architecture, a bidirectional LSTM+CNN model, for PPI extraction. The proposed model addresses the main limitation of CNNs for PPI extraction in long sentences, namely their inability to model long-distance contextual information. Furthermore, CNN is applied to encode the important information contained in the bidirectional LSTM networks, and to extract the local features and structure information effectively.

- (2) Two embeddings (word embedding and shortest dependency path (SDP) embedding) are newly applied as the inputs of the bidirectional LSTM networks, which are able to capture semantic and syntactic features effectively.

- (3) We investigate the hidden features learned by different DL models by reducing the feature dimensions and visualizing these features, which helps to compare, in an approximate way, the classification performance of the hidden features trained by different DL models.

The outline of the paper is as follows: Section 2 discusses related work. The framework of the model is introduced in Section 3. The experimental study is presented in Section 4. Section 5 is the discussion and Section 6 is the conclusion.

2. Related Work

Recent natural language processing (NLP) research is now increasingly focusing on the use of new DL architectures and algorithms to solve difficult NLP tasks, including PPI extraction from biomedical literature. This section reviews significant DL-related models and methods that have been employed for PPI extraction tasks.

The convolutional neural network (CNN) was originally developed for image recognition tasks [16,17,18] and has been successfully applied to the NLP domain by exploiting distributed representations for words (word embeddings). Collobert and Weston [19] were the first to show the utility of pre-trained word embeddings. They proposed a neural network architecture that forms the foundation of many current approaches. The work also established word embeddings as a useful tool for NLP tasks.

As in many other natural language tasks, CNNs combined with word embeddings have been shown to be effective in PPI extraction tasks [20], as they are able to extract salient n-gram features from the input sentence in order to create an informative latent semantic representation of the sentence for downstream tasks [21,22,23]. Hua and Quan [24] proposed a CNN with pre-trained word vectors of the shortest dependency path between entity pairs for PPI extraction. In [25], Quan extended that work by proposing a multichannel convolutional neural network (MCCNN) that enables the fusion of multiple pre-trained word embeddings from various data sources (PubMed, PMC (PubMed Central), MedLine, and Wikipedia), and consequently expands the coverage of the input vocabulary. A similar work is the multichannel dependency-based convolutional neural network model (McDepCNN) [26], which combines CNN with more syntactic features (e.g., part-of-speech, chunk, named entity, dependency, and position features) for input embedding. CNN has been shown to outperform previous state-of-the-art techniques for PPI extraction. However, the main issue with CNNs is their inability to model long-distance contextual information and to preserve sequential order in their representations [22,27].

Comparatively, the recurrent neural network (RNN) model [28] is able to model long-distance contextual information by memorizing previous computations and utilizing this information in current processing. However, simple RNN-based methods suffer from the vanishing gradient problem, which makes it hard to learn and tune the parameters of the earlier layers in the network. This limitation was overcome by various networks, such as long short-term memory (LSTM) [29]. In addition, Lai et al. [30] proposed the bidirectional recurrent neural network (bidirectional RNN) to capture the semantic information in different directions for sentence modeling. LSTM has also recently been applied for PPI extraction [31,32]. These studies yield a comparable performance, but much more attention has been paid to selecting the classification features or tuning model hyperparameters.

Hybrid models that combine CNN with RNN have aroused some interest in the DL domain, and several different combinations of architecture have been proposed for different subjects [33]. In the NLP domain, Zhou et al. [34] proposed C-LSTM, which combines CNN with LSTM for text classification. It takes advantage of CNN to extract a sequence of higher-level phrase representations and feeds them into LSTM to represent sentences. In [35], a CNN–BiGRU model, integrating CNN and a bidirectional gated recurrent unit (BiGRU), is proposed for sentence semantic classification. This model utilizes CNN to obtain intermediate representations, and utilizes BiGRU to obtain contextual representations and semantic distribution. Inspired by these works, this study explores a hybrid deep learning architecture for PPI extraction tasks.

3. The Model Description

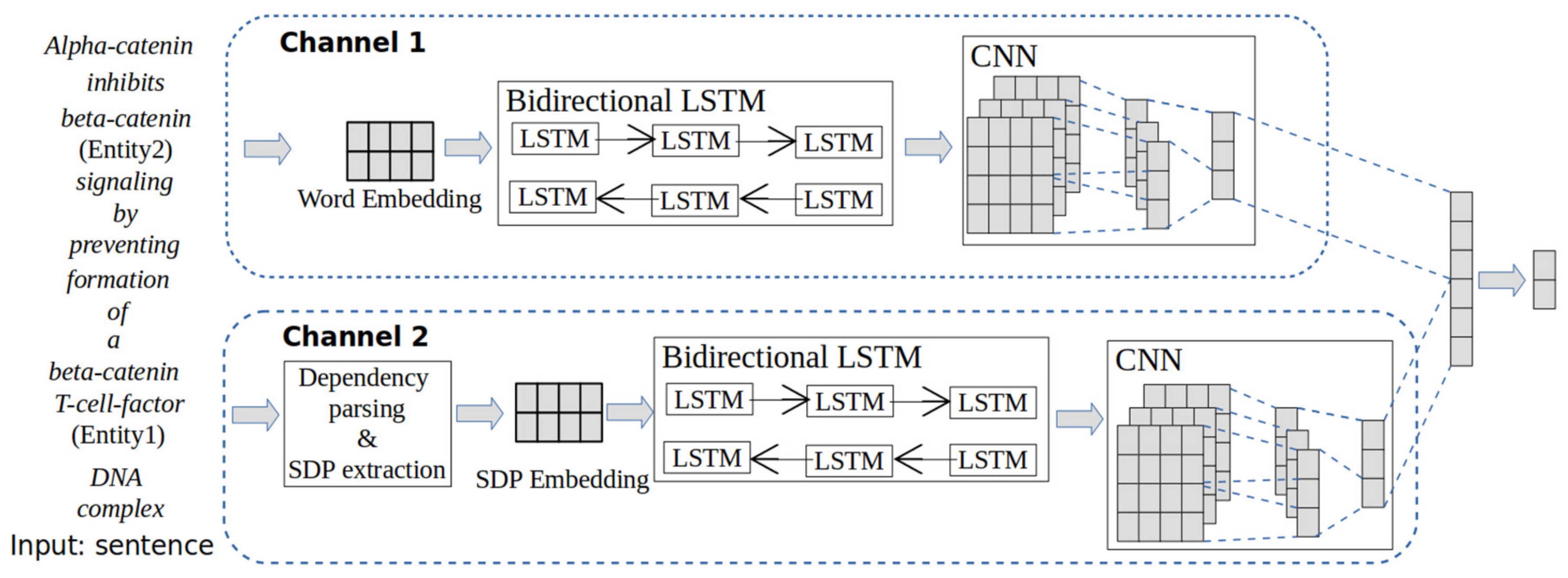

The architecture of the proposed hybrid deep learning model is shown in Figure 1. By employing bidirectional LSTM to extract the semantic information in both the preceding and succeeding contexts, the proposed model addresses the main limitation of CNNs for PPI extraction in long sentences, namely their inability to model long-distance contextual information. Furthermore, CNN is applied to encode the important information contained in the bidirectional LSTM networks, and to capture the local features and structure information effectively. As shown in Figure 1, two embeddings (word embedding and SDP embedding) are newly applied as the input of the bidirectional LSTM networks, which are able to capture semantic and syntactic features effectively.

3.1. Model Input

In the expression of PPIs, most of the interaction words are verbs and nouns, and thus dependency parsing is particularly well-suited for relation extraction, because dependency grammar (DG) views the verb as the structural center of the clause structure. Dependency parsing has been widely used for PPI extraction in previous studies [25,26,33]. Given an input sentence, we extract the SDP (shortest dependency path) by dependency parsing. Figure 2 shows the dependency parsing graph (the Stanford parser [36] and a visualization tool [37] are utilized).
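The SDP extraction step can be sketched as a breadth-first search over the dependency graph treated as undirected. This is a minimal illustration under stated assumptions: the toy parse, the `(head, relation, dependent)` edge format, and the choice to interleave relation labels with words in the output are all hypothetical, and real parser output would use token indices rather than unique word strings.

```python
from collections import deque

def shortest_dependency_path(edges, source, target):
    """Return the shortest path between two tokens as an alternating
    list of tokens and dependency relation labels.

    `edges` is a list of (head, relation, dependent) triples; the graph
    is traversed as undirected. Returns [] if no path exists.
    """
    graph = {}
    for head, rel, dep in edges:
        graph.setdefault(head, []).append((dep, rel))
        graph.setdefault(dep, []).append((head, rel))

    # Breadth-first search, remembering how each node was reached.
    parent = {source: None}
    queue = deque([source])
    while queue:
        node = queue.popleft()
        if node == target:
            break
        for neighbour, rel in graph.get(node, []):
            if neighbour not in parent:
                parent[neighbour] = (node, rel)
                queue.append(neighbour)

    if target not in parent:
        return []

    # Walk back from the target to the source, then reverse.
    path = [target]
    node = target
    while parent[node] is not None:
        prev, rel = parent[node]
        path.extend([rel, prev])
        node = prev
    return path[::-1]

# Hypothetical parse of "Entity_1 strongly binds the Entity_2".
toy_edges = [
    ("binds", "nsubj", "Entity_1"),
    ("binds", "advmod", "strongly"),
    ("binds", "dobj", "Entity_2"),
    ("Entity_2", "det", "the"),
]
sdp = shortest_dependency_path(toy_edges, "Entity_1", "Entity_2")
print(sdp)
```

Words that are off the path (here "strongly" and "the") are dropped, which leaves the interaction verb and the relation labels that connect the two entities.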

The extracted SDPs are treated as texts for the embedding. Embedding is a feature learning process where words from the vocabulary are mapped to vectors of real numbers in a space that is low-dimensional relative to the vocabulary size. For notation, we use $E \in \mathbb{R}^{|V| \times d}$ to represent the pretrained embedding, where $V$ is the vocabulary and $d$ is the embedding dimension. Specifically, for word embedding, $V$ is composed of the words from the input sentences, and for SDP embedding, $V$ is composed of the symbols from the input SDPs.

When we assign each word (or symbol) in an input sentence (or SDP) a corresponding row vector from $E$, we get a matrix representation for the input sentence (or SDP). The word embedding and SDP embedding are two tensors of shape $N \times T \times d$, where $N$ is the input size of the channel, $T$ is the max length of input sentences, and $d$ is the embedding dimension.

The input of the model is a sentence that goes through two embedding channels, namely: (1) pretrained word embedding trained on the PubMed abstract corpus [38] and (2) SDP embedding trained on the shortest dependency paths extracted between target entity pairs.
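The embedding lookup for one channel can be sketched as follows. This is only an illustration: the tiny embedding table, the reserved padding and unknown-token rows, and the function name `embed_sequence` are assumptions introduced here, not details given in the paper.

```python
PAD_ID = 0  # assumed row reserved for padding
UNK_ID = 1  # assumed row reserved for out-of-vocabulary tokens

def embed_sequence(tokens, vocab_index, embedding, max_len):
    """Map tokens to rows of the embedding table, truncating or
    padding to `max_len`, and return a max_len x d matrix."""
    ids = [vocab_index.get(tok, UNK_ID) for tok in tokens][:max_len]
    ids += [PAD_ID] * (max_len - len(ids))
    return [embedding[i] for i in ids]

# Hypothetical embedding table with |V| = 5 rows and d = 2.
vocab_index = {"<pad>": 0, "<unk>": 1, "Entity_1": 2, "binds": 3, "Entity_2": 4}
embedding = [
    [0.0, 0.0],   # <pad>
    [0.1, 0.1],   # <unk>
    [0.5, -0.2],  # Entity_1
    [0.3, 0.8],   # binds
    [0.4, -0.3],  # Entity_2
]

sentence_matrix = embed_sequence(
    ["Entity_1", "tightly", "binds", "Entity_2"], vocab_index, embedding, max_len=6
)
print(len(sentence_matrix), len(sentence_matrix[0]))
```

Stacking such matrices for all instances of a channel gives the three-dimensional input tensor for that channel; the same routine applies to SDP symbol sequences with the SDP vocabulary.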

3.2. Intermediate Structure

As shown in Figure 1, the two channel embeddings each pass separately through a bidirectional LSTM network followed by a CNN. The bidirectional LSTM enables learning long-term dependencies for both the preceding and succeeding contexts. After that, the CNN encodes the important information contained in the bidirectional LSTM networks.

The bidirectional LSTM network is a combination of bidirectional RNNs with LSTM, using an input word embedding sequence ($x_1, x_2, \ldots, x_T$), where $T$ denotes the max length of input sequences. As illustrated in Figure 3, bidirectional RNNs compute the forward hidden sequence $\overrightarrow{h}$, the backward hidden sequence $\overleftarrow{h}$, and the output sequence $y$ by iterating the forward layer from $t = 1$ to $T$, the backward layer from $t = T$ to $1$, and then updating the output layer, where $W$ denotes weight matrices, $b$ denotes bias vectors, and $\mathcal{H}$ is the hidden layer function applied to each element of a vector.
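For reference, a standard way to write the bidirectional RNN updates described here is given below. The notation is an assumption made for this sketch ($x_t$ is the input at time step $t$, $T$ the sequence length, $\mathcal{H}$ the hidden layer function, $W$ the weight matrices, and $b$ the bias vectors), so it may differ in detail from the authors' equations.

$$
\begin{aligned}
\overrightarrow{h}_t &= \mathcal{H}\left(W_{x\overrightarrow{h}}\, x_t + W_{\overrightarrow{h}\overrightarrow{h}}\, \overrightarrow{h}_{t-1} + b_{\overrightarrow{h}}\right), \quad t = 1, \ldots, T \\
\overleftarrow{h}_t &= \mathcal{H}\left(W_{x\overleftarrow{h}}\, x_t + W_{\overleftarrow{h}\overleftarrow{h}}\, \overleftarrow{h}_{t+1} + b_{\overleftarrow{h}}\right), \quad t = T, \ldots, 1 \\
y_t &= W_{\overrightarrow{h}y}\, \overrightarrow{h}_t + W_{\overleftarrow{h}y}\, \overleftarrow{h}_t + b_y
\end{aligned}
$$

The forward state depends on $\overrightarrow{h}_{t-1}$ and the backward state on $\overleftarrow{h}_{t+1}$, which is why each output $y_t$ can draw on both the preceding and the succeeding context.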

In the bidirectional LSTM network, each RNN unit is an LSTM (Figure 4), where $\sigma$ is the logistic sigmoid function, and $f_t$, $i_t$, $o_t$, and $c_t$ are the forget gate, input gate, output gate, and cell state, respectively, at time step $t$.
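As an illustration, one widely used LSTM formulation without peephole connections is shown below. It is an assumption that this variant matches the one used in the model; the weight names ($W_\ast$ for input weights, $U_\ast$ for recurrent weights) and the hidden state symbol $h_t$ are introduced here for the sketch, and $\odot$ denotes element-wise multiplication.

$$
\begin{aligned}
f_t &= \sigma\left(W_f x_t + U_f h_{t-1} + b_f\right) \\
i_t &= \sigma\left(W_i x_t + U_i h_{t-1} + b_i\right) \\
o_t &= \sigma\left(W_o x_t + U_o h_{t-1} + b_o\right) \\
c_t &= f_t \odot c_{t-1} + i_t \odot \tanh\left(W_c x_t + U_c h_{t-1} + b_c\right) \\
h_t &= o_t \odot \tanh\left(c_t\right)
\end{aligned}
$$

The additive update of $c_t$ is what lets gradients flow across many time steps, which mitigates the vanishing gradient problem of simple RNNs.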

The bidirectional LSTM network outputs for both channels are each fed separately to CNN networks. Given an output sequence $y$ of the bidirectional LSTM network, it is input into the convolutional layer. The convolutional layer contains a set of filters for different window sizes $k$, and computes the output feature map $c$, where $f$ is an activation function, $b$ is a bias term, and $\circ$ is element-wise multiplication.
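A common way to write this convolution, given here as a sketch with assumed notation, is the following: $W$ is a filter covering $k$ consecutive time steps, $y_{i:i+k-1}$ is the window of bidirectional LSTM outputs starting at position $i$, and the sum runs over all entries of the element-wise product.

$$
c_i = f\left(\sum_{j,l} \left(W \circ y_{i:i+k-1}\right)_{j,l} + b\right), \qquad i = 1, \ldots, T - k + 1
$$

The feature map for one filter is then $c = (c_1, \ldots, c_{T-k+1})$, so filters with larger windows produce shorter feature maps.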

A max-pooling layer is then applied to each feature map, and the concatenation operation joins the pooled outputs into a single tensor for each channel. Finally, the CNN outputs for both channels are concatenated into a single vector and fed to a fully connected layer.

4. Datasets and Experimental Setup

4.1. Datasets and Preprocessing

Two benchmark corpora, Aimed [39] and BioInfer [40], were used for the experiments and evaluation. The Aimed dataset, which was manually tagged and includes 1955 sentences, is considered the standard dataset for PPI extraction tasks. BioInfer was developed by the Turku BioNLP group [41], and contains 1100 sentences.

To ensure the generalization of features, we use a data preprocessing method similar to that of the authors of [9]: the two target entities are replaced with the special symbols “Entity 1” and “Entity 2”, respectively, and entities that are not target entities are all represented as “Entity”. Text preprocessing includes dependency parsing and shortest dependency path (SDP) extraction. If there is an interaction between the two entities, we consider the instance a positive one; otherwise, we consider it a negative one. Table 1 shows the statistics for the PPI corpora.
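The entity replacement and labelling steps can be sketched as follows. This is a minimal illustration with assumptions stated up front: entities are taken to be single tokens identified by position, every unordered pair of entities in a sentence is treated as one candidate instance, and the function names are hypothetical.

```python
from itertools import combinations

def blind_entities(tokens, entity_positions, pair):
    """Replace the two target entities with 'Entity 1' and 'Entity 2'
    and every other entity mention with 'Entity'."""
    first, second = pair
    blinded = []
    for idx, tok in enumerate(tokens):
        if idx == first:
            blinded.append("Entity 1")
        elif idx == second:
            blinded.append("Entity 2")
        elif idx in entity_positions:
            blinded.append("Entity")
        else:
            blinded.append(tok)
    return blinded

def make_instances(tokens, entity_positions, interacting_pairs):
    """Build one labelled instance per entity pair: 1 if the pair is
    annotated as interacting, 0 otherwise."""
    instances = []
    for pair in combinations(sorted(entity_positions), 2):
        label = 1 if pair in interacting_pairs else 0
        instances.append((blind_entities(tokens, entity_positions, pair), label))
    return instances

# Hypothetical sentence with three protein mentions at positions 0, 2, and 5.
tokens = ["ProtA", "binds", "ProtB", "but", "not", "ProtC"]
instances = make_instances(tokens, {0, 2, 5}, {(0, 2)})
for blinded, label in instances:
    print(label, " ".join(blinded))
```

A sentence with three entities therefore yields three instances, which is why the number of instances in a corpus exceeds its number of sentences.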

4.2. The Experiments

4.2.1. Pretrained Embeddings

Two pretrained embeddings are used in our model, namely word embedding and SDP embedding. The pretrained word embedding is trained on PMC and PubMed (Pyysalo et al. [38]), with a vocabulary size of 4,087,446 words. The SDP embedding is trained on the shortest dependency paths extracted between the target entity pairs from the PPI corpora with the Gensim Word2Vec tool (CBOW training algorithm, Radim Řehůřek and Petr Sojka, Brno, Czech Republic) [42]. The vocabulary size of the SDP embedding on the BioInfer corpus and Aimed corpus is 1840 words and 1349 words, respectively. Corresponding to the word and SDP embeddings, two types of input, sentences and the shortest dependency paths (SDPs) between target entity pairs, are fed separately into the model.

4.2.2. Parameter Setting

The parameter settings are summarized in Table 2.

4.2.3. Implementation and Evaluation Metrics

Keras 2.2.4 (https://keras.io/) was used to implement the models. The configuration of the machine was as follows: GPU: Quadro M1200/PCIe/SSE2 (Nvidia, Santa Clara, CA, USA); CPU: Intel® Core™ i7-7820HQ CPU @ 2.90GHz × 8 (Intel, Colorado Springs, CO, USA); System: Ubuntu 18.04.2 LTS 64-bit, 16 GiB memory (Canonical Ltd., London, UK).

We use the average macro F1 score to evaluate the performance of the DL models using 10-fold cross-validation (10-fold CV). In each fold, we calculate the macro F1 score on the entire testing data with a callback function at the end of each epoch, instead of using a batch-wise average value.
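The reason for scoring the whole test set rather than averaging per-batch scores is that F1 is not additive across batches. The sketch below, which uses hypothetical labels and hand-written helper functions rather than any library metric, computes the macro F1 score both ways on the same predictions.

```python
def f1_for_class(y_true, y_pred, cls):
    """F1 score of one class; defined as 0.0 when there are no true positives."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == cls and p == cls)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t != cls and p == cls)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == cls and p != cls)
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)

def macro_f1(y_true, y_pred, classes=(0, 1)):
    """Unweighted mean of the per-class F1 scores."""
    return sum(f1_for_class(y_true, y_pred, c) for c in classes) / len(classes)

# Hypothetical test labels and predictions for one fold.
y_true = [1, 1, 1, 0]
y_pred = [1, 1, 0, 0]

# Score computed once on the entire test set.
full_score = macro_f1(y_true, y_pred)

# Score obtained by averaging over two batches of size 2.
batch_scores = [
    macro_f1(y_true[:2], y_pred[:2]),
    macro_f1(y_true[2:], y_pred[2:]),
]
batch_average = sum(batch_scores) / len(batch_scores)

print(round(full_score, 4), round(batch_average, 4))
```

In a 10-fold setting, the full-set score would be computed once per fold and the ten values averaged to obtain the reported figure.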

4.3. Performance Comparison

The performance of the proposed DL model is compared with state-of-the-art non-hybrid and hybrid models. Table 3 shows the comparison results.

CNN-based models [24,25,26] and LSTM-based models [31,32] have been applied for PPI extraction recently. However, there are many differences in the text preprocessing strategies (e.g., whether protein entities are generalized with special symbols or protein IDs, which tokenization and parsing tools are used, and whether filtering rules are applied) and in other experimental setups (e.g., hyperparameter settings and evaluation metrics). These differences make it difficult to compare the performance of the DL models directly. In our experiments, we compare the non-hybrid models (1–5 in Table 3) and hybrid models (6–7 in Table 3) with the same experimental settings, except for the models and the inputs of the models.

Comparing CNN-based model 1 with 2, and LSTM-based model 3 with 4 in Table 3, shows that using the two embeddings (word embedding and SDP embedding) as input improves the performance of both CNN- and LSTM-based models. This result demonstrates the effectiveness of integrating word embedding and SDP embedding for PPI extraction. The interaction verbs (e.g., “affect” and “bind”) and dependency relation symbols (e.g., “nsubj” and “dobj”) in the SDPs could provide useful information for classifying target protein pairs and extracting the relations.

It is also observed that the LSTM model outperforms the CNN model in the two datasets. The bidirectional LSTM achieved the best performance among the non-hybrid models.

For the hybrid models, we compare the proposed bidirectional LSTM+CNN model (7 in Table 3) with the hybrid CNN+bidirectional LSTM model (6 in Table 3), which is a variant of C-LSTM proposed by the authors of [34]. C-LSTM learns the sentence representation by combining CNN and LSTM, and has been shown to be superior to CNN and LSTM for some text classification tasks. The results show that the proposed bidirectional LSTM+CNN model substantially outperforms the CNN+bidirectional LSTM model for PPI extraction on both datasets.

4.4. Hidden Features Visualization and Comparison

We further investigate the hidden features learned by different DL models by reducing the feature dimensions and visualizing these features. We choose four DL models (2, 4, 6, and 7 in Table 3, representing the CNN, LSTM, and two hybrid DL models, respectively) for the visualization.

We first split the dataset randomly into two parts: a training set (90%) and a testing set (10%). Using the same training set, we train the DL models separately. Then, we create a truncated model for each DL model. The truncated model keeps the same network layers up to the last hidden feature layer, and its weights are set from the trained model. The truncated model is used to predict the features for the testing data. After that, we apply principal component analysis (PCA) [43] to reduce the features predicted by the truncated model to a lower dimension. The PCA explained variance is 1.00 (on a scale of 0 to 1), which implies that the reduced dimensions represent the hidden features well. Then, t-Distributed Stochastic Neighbor Embedding (t-SNE) [44] is applied for the visualization. Figure 5 illustrates the scatter plots of the hidden features on the last layer of the DL models (CNN, LSTM, CNN+bidirectional LSTM, and bidirectional LSTM+CNN) after dimensionality reduction.

In Figure 5, the comparison of the four DL models (CNN, LSTM, CNN+bidirectional LSTM, and bidirectional LSTM+CNN) on both datasets shows that bidirectional LSTM+CNN has a much better classification performance than the other models. Figure 6 illustrates how the F1-scores of the four DL models increase as the number of epochs increases. The datasets are split randomly into two parts: a training set (90%) and a testing set (10%).

As can be seen from Figure 6, bidirectional LSTM+CNN reaches a high F1-score in fewer epochs than the other models. It also maintains a high classification performance as the number of epochs increases. We also found that LSTM can obtain a high F1-score at some points, but it needs more training than the proposed bidirectional LSTM+CNN model.

5. Discussion

Recently, DL models have been shown to be superior to traditional ML models in many NLP tasks. In PPI extraction tasks, the performance of a DL-based method is mainly affected by the architecture of the DL models and the feature embedding methods. In this study, we compared different architectures of DL models, including CNN, LSTM, and hybrid models, and found that the proposed bidirectional LSTM+CNN model is superior to the other DL models for PPI extraction. As more complex network architectures (e.g., a deep hybrid model with more layers or a deep reinforcement learning model) have not been considered in this study, there is still space for further improvement of the DL model architecture.

In addition to the DL model architecture, the feature embedding methods also have an effect on the classification performance. In DL models, the input semantic and syntactic features are embedded in distributed representations for feature learning. Previous studies have experimented with different feature embeddings (such as n-gram, part-of-speech (POS), word position, and dependency path). In this study, the pretrained word embedding and SDP embedding are fed into a two-channel embedding architecture (word embedding and SDP embedding), such that the model can extract the feature information more accurately. With more feature channels, an improved performance can be expected.

The differences in the text preprocessing strategies (e.g., whether protein entities are generalized with special symbols or protein IDs, which tokenization and parsing tools are used, and whether filtering rules are applied) and in other experimental settings (e.g., hyperparameter settings and evaluation metrics) make it difficult to compare the performance of the DL models directly. This study did not directly compare its results with those of other related studies. Instead, under the same experimental environment, our experiments covered all of the DL models that have been applied in previous studies [24,25,26,31,32]. Comparing the DL models directly under identical conditions in this way is more objective. The experiments of this study used two standard PPI datasets, and a more robust DL model can be expected when using large-scale training data.

DL models are generally opaque, meaning that although they can produce accurate predictions, it is not clear how or why a given prediction is made. This study investigated the hidden features learned by different DL models by reducing feature dimensions and visualizing these features. This would help us to compare the classification performance of these hidden features trained by different DL models in an approximate way. However, how to connect an input with the hidden features and how to control them during training stage are still challenging problems.

6. Conclusions

In this paper, a hybrid DL model is proposed for PPI extraction tasks. The model innovatively integrates bidirectional LSTM with CNN in a two-embedding channel (word embedding and SDP embedding) architecture.

CNN with word embeddings has been shown to be effective in PPI extraction tasks, as it has the ability to extract salient n-gram features from the input sentence to create an informative latent semantic representation of the sentence. However, a main issue with CNNs is their inability to model long-distance contextual information for PPI extraction in a long sentence. To solve this problem, we employ bidirectional LSTM to extract the semantic information in both the preceding and succeeding contexts, because the architecture of LSTM is able to model long-distance contextual information by memorizing over previous computations and utilizing this information in current processing. Furthermore, CNN is applied to encode the important information contained in the bidirectional LSTM networks, and to capture the local features and structure information effectively.

Under the same experimental environment, the results show that the proposed model is superior to the non-hybrid DL models, including CNN-based and LSTM-based models. In addition, the proposed bidirectional LSTM+CNN model outperformed another hybrid model (CNN+bidirectional LSTM model) greatly for PPI extraction. The visualization and comparison of the hidden features learned by different DL models further confirmed the effectiveness of the proposed model.

For future work, we will apply the proposed hybrid DL model to other NLP tasks in order to explore its applicability, and will consider more complex network architectures (e.g., a deep hybrid model with more layers or a deep reinforcement learning model) to improve the performance.