1. Introduction

Graph-structured data is ubiquitous in the real world, for example in social networks [1,2,3,4,5], citation networks [6,7,8], wireless sensor networks [9], and graph-based molecules [10,11]. Recently, graph neural networks (GNNs) have attracted a surge of research interest. The goal of GNNs is to learn representation vectors of the nodes in a graph; the learned vectors can then be used in many graph-based applications, such as link prediction and node classification [12,13,14,15,16]. The general idea of GNNs is “message propagation”, i.e., each node iteratively passes, transforms, and aggregates messages (i.e., features) from its neighbors. After k iterations, each node captures the information of the nodes within k hops of it.

Many works have developed graph neural networks. GCN [17] simplifies the localized spectral filters used in [18] by propagating information in a weighted manner. GraphSAGE [6] proposes several types of aggregation strategies to propagate messages from neighbors effectively. GAT [10] adopts self-attention [19] to dynamically propagate messages.

However, the majority of these models suffer from the “over-smoothing” problem [20,21]. Specifically, message propagation has been shown to be a type of Laplacian smoothing. Stacking too many layers (i.e., repeatedly applying Laplacian smoothing) may make the representations of nodes indistinguishable and hurt the performance of GNNs [20]. Furthermore, a random walk view of message propagation shows that GNNs converge to a random walk distribution [21], which leads to a similar conclusion.

In fact, many GNNs are shallow neural networks with only two or three layers, so they capture limited neighborhood information. Moreover, adding layers does not always improve the performance of GNNs and may even degrade it [17,20]. These observations reflect the inability of current GNN models to explore the global graph structure. Therefore, although a sufficiently large neighborhood may help models capture high-level graph patterns [12,20,21], most GNNs fall into the over-smoothing problem and can only capture the limited local structure of nodes.

In this paper, we focus on the limitations of current GNNs, i.e., the need for global information and the bottleneck it introduces. Specifically, we propose a rejection mechanism that softly rejects information from distant nodes and frees our model from the over-smoothing problem. To further capture the global graph structure, we propose a graph dilated convolution kernel, which enlarges the size of the neighborhood at each layer.

The main contributions of this paper are summarized as follows.

We propose a novel graph neural network model, i.e., Graph Dilated networks with Rejection mechanism (GraphDRej), to learn expressive node representations.

We design a rejection mechanism, which is a simple but effective strategy to address the over-smoothing problem.

We present multiple graph dilated convolution kernels to explore a sufficient size of neighborhoods in message propagation.

Extensive experimental results show that the proposed model achieves state-of-the-art results. Also, the effectiveness of both the rejection mechanism and the graph dilated convolution kernel used in GraphDRej is demonstrated.

The remainder of this paper is organized as follows.

Section 2 reviews the related work.

Section 3 presents the preliminaries.

Section 4 analyzes the limitation of existing GNNs.

Section 5 proposes the GraphDRej model, i.e., Graph Dilated Network with Rejection Mechanism.

Section 6 reports the experiments.

Section 7 concludes the paper.

2. Related Work

Recently, a vast amount of literature has focused on analyzing graph-based problems with graph neural networks [6,10,17,22,23,24]. GCN [17] is a pioneering work, which simplifies the localized spectral filters used in [18] and extracts the 1-localized information for each node in each convolution layer. Deeper relational features can then be captured by stacking multiple convolution layers. GraphSAGE [6] casts representation learning into a formal pattern, i.e., aggregation and combination, and proposes several kinds of aggregation strategies. In fact, GCN can be viewed as a special case of GraphSAGE. GAT [10] considers the diversity in neighborhoods and leverages the self-attention mechanism [19] to select important information in neighborhoods effectively. GG-NN [25] designs a gate mechanism-based aggregation function, which provides a weighted average of the messages from the neighbors and the center node. In addition, some works [26,27] consider imbalanced nodes in representation learning. Although these GNNs can characterize the node structure and learn the representations of nodes, most of them suffer from the over-smoothing problem.

Meanwhile, there are several works analyzing the mechanism of the graph neural networks, which are related to the over-smoothing problem. Li et al. (2018) [

20] took graph neural networks as a special form of Laplacian smoothing, which is the main reason why GNNs work. At the same time, it shows the limitation of current GNNs, i.e., GNNs cannot capture the global graph structure due to the over-smoothing problem. Although Li et al. (2018) [

20] proposed to leverage co- and self-training to improve the GNN performance, both co- and self-training are designed to deal with the limited validation issue. Therefore, the over-smoothing problem mentioned by Li et al. (2018) [

20] still needs to be solved. Xu et al. (2018) [

21] provide another viewpoint of GNNs. The message propagation of GNNs can be considered as a modified random walk. The distribution of influence scores between two nodes converges to a stationary distribution, which also reflects the over-smoothing problem. Xu et al. (2018) [

21] proposed a densenet-like [

28] module to aggregate neighbors from different hops adaptively as well as to address the over-smoothing problem. The PN [

29] model proposes a novel normalization layer, which is applied on the output of the graph convolution layer to address the over-smoothing problem. Normalizing the representations of nodes can prevent these representations from being too similar. MADReg [

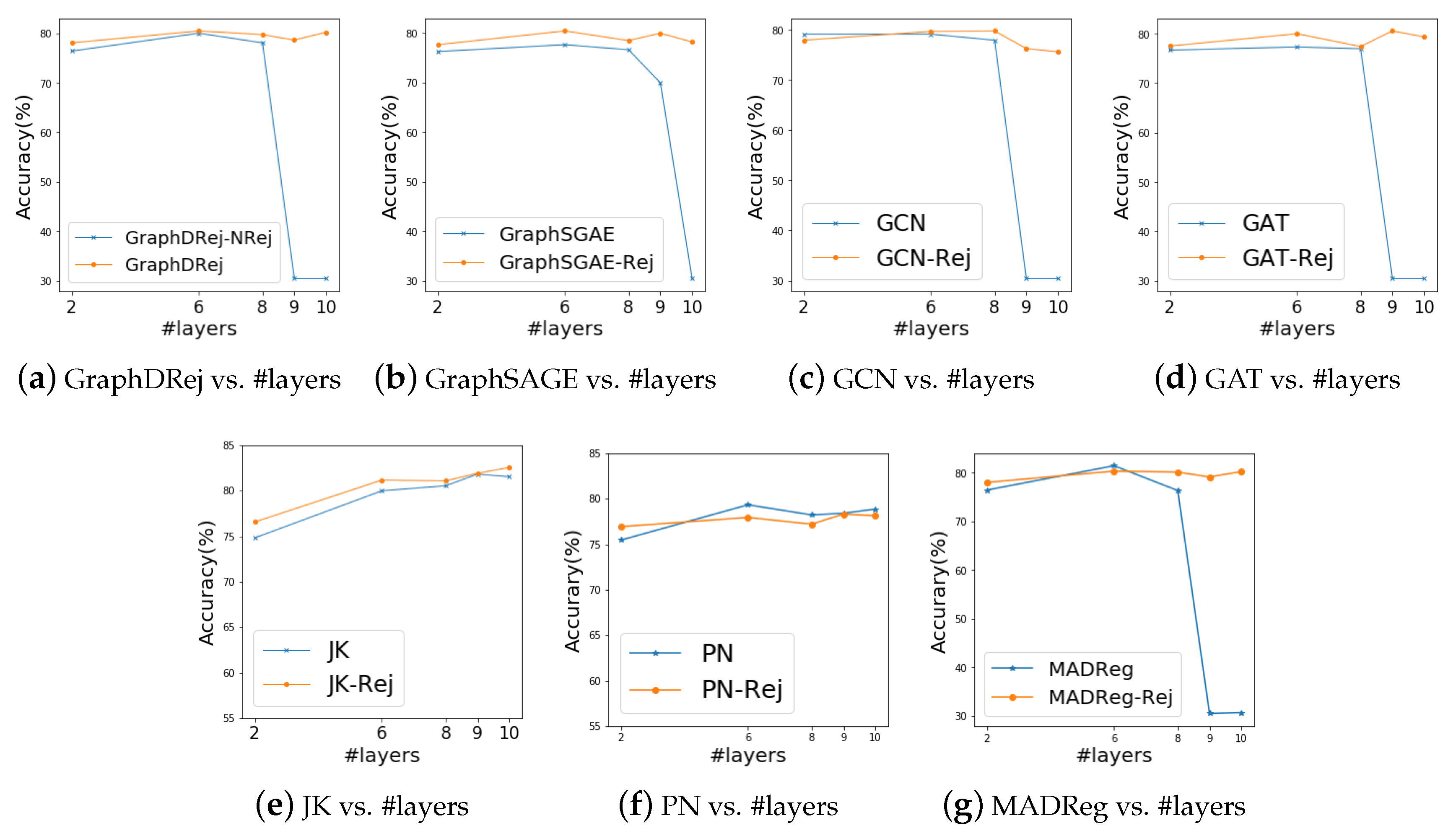

30] provides a topological view of the over-smoothing problem and brings a MADReg loss to avoid the representations of the distant nodes to be similar to the representations of the neighbor nodes. Experimental results (see

Section 6.5) show MADReg can only relieve rather than prevent this problem. Although some of these works can solve the over-smoothing problem, none of them considers how to capture a suitable larger neighborhood well to learn better node representations in a situation where GNNs are free of the over-smoothing problem. In this paper, GraphDRej can not only overcome the over-smoothing problem by a rejection mechanism, but also better characterize node structures by multiple graph dilated convolution kernels. Related experimental results are shown in

Section 6.4 and

Section 6.5.

3. Preliminaries

We begin by summarizing the common GNN models and, along the way, introduce our notation. We represent a graph $G$ as $G = (V, E)$, where $V$ denotes the node set and $E$ is the edge set. Let $A$ be the adjacency matrix of $G$. The $k$-hop neighborhood $N_k(v)$ of node $v$ is the set of all nodes that reach node $v$ in exactly $k$ steps. By default, $N(v)$ is the 1-hop neighborhood of node $v$. Each node $v \in V$ is associated with a feature vector $x_v$ and a label $y_v$.

Most GNNs aim to learn a node representation mapping function by using the graph structure and node features. Current GNNs follow a “message propagation” scheme, where at each iteration a node aggregates the information (i.e., “messages”) from its neighbors. Therefore, after $k$ iterations of propagation, the node representation captures $k$-hop structure information. Formally, at the $k$-th layer, a GNN can be expressed as

$$a_v^{(k)} = \text{AGGREGATE}^{(k)}\left(\left\{ h_u^{(k-1)} : u \in N(v) \right\}\right), \tag{1}$$

$$h_v^{(k)} = \text{COMBINE}^{(k)}\left(h_v^{(k-1)}, a_v^{(k)}\right), \tag{2}$$

where $h_v^{(k)}$ is the representation (i.e., “message”) of node $v$ at the $k$-th layer, which captures the $k$-hop structure information, and $a_v^{(k)}$ can be taken as the integrated message representation from neighbors. The initialization message is $h_v^{(0)} = x_v$.

AGGREGATE is a message propagation function that aggregates information from neighbors, and COMBINE is a function that combines the information from different hops. An illustration is shown in Figure 1. All the information from the neighbors (i.e., nodes 2–6) of the center node 1 is merged by AGGREGATE. Then, COMBINE combines the information from the neighbors and the center node 1.
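As a minimal sketch of the AGGREGATE/COMBINE scheme described above, the following Python snippet runs one propagation step on a star graph like the one in Figure 1 (re-indexed from 0). The function names, the mean aggregator, and the additive combiner are illustrative assumptions rather than the implementation used in this paper.

```python
import numpy as np

def message_passing_layer(h, neighbors, aggregate, combine):
    """One propagation step: aggregate neighbor messages, then combine them
    with the node's own previous representation."""
    new_h = np.empty_like(h)
    for v in range(h.shape[0]):
        a_v = aggregate(h[neighbors[v]])  # integrated message from 1-hop neighbors
        new_h[v] = combine(h[v], a_v)     # merge with the center node's own message
    return new_h

# Illustrative choices (assumptions): mean aggregation and additive combination.
mean_aggregate = lambda msgs: msgs.mean(axis=0)
sum_combine = lambda h_v, a_v: h_v + a_v

# Star graph: node 0 is the center, nodes 1..5 are its neighbors.
neighbors = {0: [1, 2, 3, 4, 5], 1: [0], 2: [0], 3: [0], 4: [0], 5: [0]}
h0 = np.eye(6)  # one-hot features make the mixing weights visible
h1 = message_passing_layer(h0, neighbors, mean_aggregate, sum_combine)
print(h1[0])  # center node: its own feature plus the mean of its five neighbors
```

With one-hot input features, each row of `h1` shows directly how much every input node contributes to a representation after one layer.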

Different models have different propagation functions. For example, Graph Convolutional Network (GCN) [17] proposes a degree-normalized algorithm to aggregate neighbors’ messages. The propagation rule (i.e., Equation (1)) of GCN can be presented as

where

is the weight matrix.

AGGREGATE and

COMBINE are fused into one function (i.e.,

in Equation (

1) and

in Equation (

3)), and

is a nonlinear function.
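For orientation, the widely used matrix form of the GCN rule, with self-loops and symmetric degree normalization, can be sketched as follows. This is the commonly cited formulation of GCN and is assumed, not confirmed, to match the equation referenced above; the function name and the choice of ReLU as the nonlinearity are illustrative.

```python
import numpy as np

def gcn_layer(adj, h, weight):
    """One GCN-style layer: ReLU(D^-1/2 (A + I) D^-1/2 H W)."""
    a_tilde = adj + np.eye(adj.shape[0])   # self-loops fuse AGGREGATE and COMBINE
    deg = a_tilde.sum(axis=1)              # degrees including the self-loop
    d_inv_sqrt = 1.0 / np.sqrt(deg)
    a_hat = a_tilde * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]
    return np.maximum(a_hat @ h @ weight, 0.0)  # ReLU (assumed nonlinearity)

# Path graph 0 - 1 - 2 with one-hot features and an identity weight matrix.
adj = np.array([[0.0, 1.0, 0.0],
                [1.0, 0.0, 1.0],
                [0.0, 1.0, 0.0]])
out = gcn_layer(adj, np.eye(3), np.eye(3))
print(out)
```

Adding the identity to the adjacency matrix is what lets a single degree-normalized sum cover both the neighbors and the center node.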

4. Limitation of Existing GNNs

Although GNN models significantly outperform many state-of-the-art methods on some benchmarks, there remain some problems that limit the performance of GNNs.

In fact, the message propagation scheme in GNNs can be viewed as a random walk [21]. The influence score of node u on node v, which measures the effect of the input feature of node u on the representation of node v, can be defined as

where

is the representation vector of node

v at the

k-th layer,

refers to the

i-th dimension of

, and

. Then, the expected distribution of the normalized influence score follows a slightly modified

k-step random walk distribution

starting at the root node

v [

21]. Thus, the message propagates from node

u to node

v in a random walk pattern.

As we know, the node sequence

generated by a random walk is a Markov chain. When

, the probability distribution

converges to a stationary distribution (i.e.,

for any node

) [

31]. It means that, for the message propagation scheme, the representation of each node is influenced almost equally by any other nodes (i.e.,

) [

21] when GNNs go deeper (i.e., a large value of

k). In other words, the node representations may be over-smoothed and lose their focus by the information from distant nodes [

20], which is called the over-smoothing problem.
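The convergence argument can be checked numerically on a toy graph. The sketch below uses a plain (unmodified) random walk on a small connected, non-bipartite graph chosen for illustration; it is not the modified walk of [21], and the graph and function names are assumptions.

```python
import numpy as np

# Toy graph (assumption): a triangle 0-1-2 with a tail node 3 attached to node 2.
adj = np.array([[0, 1, 1, 0],
                [1, 0, 1, 0],
                [1, 1, 0, 1],
                [0, 0, 1, 0]], dtype=float)
transition = adj / adj.sum(axis=1, keepdims=True)  # row-stochastic walk matrix

def k_step_distribution(transition, start, k):
    """Distribution over nodes after k random-walk steps from `start`."""
    dist = np.zeros(transition.shape[0])
    dist[start] = 1.0
    for _ in range(k):
        dist = dist @ transition
    return dist

# For an undirected graph the stationary distribution is proportional to degree.
stationary = adj.sum(axis=1) / adj.sum()

for k in (1, 2, 5, 100):
    gap = np.abs(k_step_distribution(transition, 0, k)
                 - k_step_distribution(transition, 3, k)).max()
    print(k, gap)  # the gap between the two starting nodes shrinks as k grows
```

As k grows, the k-step distribution no longer depends on the starting node, which is the random-walk counterpart of deep representations losing their node-specific focus.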

Therefore, one limitation of existing GNNs is that most GNN models cannot go deeper. A deeper version of these models often performs even worse than a shallow version, and the best performance is usually achieved with two or three layers [17]. Although a sufficiently large neighborhood is especially important, since it allows models to explore more complex graph structure and aggregate useful information from neighbors [20,21], existing GNNs can only capture limited structure information in small neighborhoods.

In summary, on the one hand, when we stack too many layers in a GNN model, the node representations are over-smoothed and become indistinguishable. On the other hand, there is always a need for a sufficiently large neighborhood to learn more effective representations [20,21]. Therefore, in this paper, we propose a new neural network architecture that tackles the over-smoothing problem and captures a larger neighborhood at the same time.

5. Graph Dilated Network with Rejection Mechanism

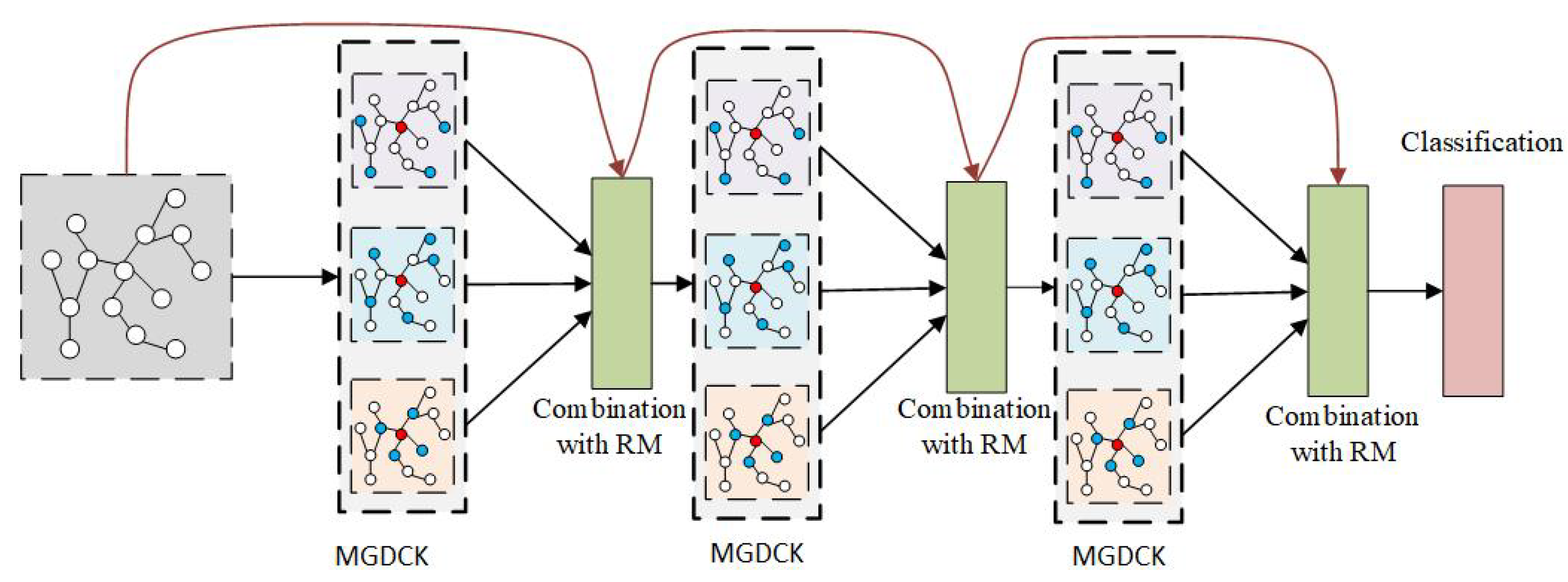

In this section, we introduce the proposed model, called Graph Dilated network with Rejection mechanism (GraphDRej). To resolve the conflict in GNNs described in the preceding section, we propose two main components, i.e., the rejection mechanism and the graph dilated convolution kernel. Generally speaking, at each layer, each node aggregates messages from a large neighborhood via graph dilated convolution kernels, and a hop penalty parameter is introduced to implicitly reject the information from distant nodes (addressing the over-smoothing problem). At the last layer, a cross-entropy loss is adopted to optimize the parameters of the proposed model. Figure 2 illustrates the architecture of the proposed model.

5.1. Rejection Mechanism

5.1.1. Method

To address the over-smoothing problem, we propose a simple but effective Rejection Mechanism (RM). As discussed in Section 4, the over-smoothing problem is caused by averaging too much information from distant neighbors. Therefore, we introduce a learnable hop penalty parameter (optimized by backpropagation) at each layer to adaptively control the messages flowing from one layer to the next (i.e., from one hop to the next). In this way, the model can reject the messages from distant nodes to address the over-smoothing problem.

Different from GAT, which dynamically selects messages from 1-hop neighbors, the rejection mechanism is a layer-wise or hop-wise operation. Specifically, the rejection mechanism focuses on the combination function (i.e., Equation (2)) introduced in the message propagation. At the k-th layer, the hop penalty parameter is applied to the integrated message representation from neighbors, and then the combination function can be rewritten as

where

is a weight matrix,

is the rescaled penalty parameter, and ⊙ refers that each element of the vector

multiplies the rescaled hop penalty parameter

. Intuitively, the value of

can be regarded as a gate to influence the message propagation from neighbors to the center node.

is an element-wise addition operation.
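A minimal sketch of a combination step with a hop penalty is given below. Several details are assumptions made for illustration only: the raw penalty is rescaled into (0, 1) with a sigmoid, the weight matrix is applied after the element-wise addition, and the nonlinearity is ReLU. The names `combine_with_rejection` and `penalty` are hypothetical.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def combine_with_rejection(h_prev, a, weight, penalty):
    """Combine the center-node message with a gated neighbor message.

    h_prev  : (n, f) representations from the previous layer
    a       : (n, f) integrated neighbor messages
    weight  : (f, f_out) weight matrix
    penalty : scalar hop penalty, shared by all nodes in the layer
    """
    gate = sigmoid(penalty)              # assumed rescaling into (0, 1)
    mixed = h_prev + gate * a            # only the neighbor message is scaled
    return np.maximum(mixed @ weight, 0.0)  # ReLU (assumed nonlinearity)

h_prev = np.array([[1.0, 0.0]])
a = np.array([[0.0, 1.0]])
for penalty in (-20.0, 0.0, 20.0):
    print(penalty, combine_with_rejection(h_prev, a, np.eye(2), penalty))
```

A strongly negative penalty drives the gate toward zero and effectively rejects the neighbor message, while the center node's own message passes through unchanged.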

To fully understand the proposed rejection mechanism, we provide an example (shown in Figure 3) to illustrate how it addresses the over-smoothing problem. For simplicity, we take a chain graph containing four nodes as an example and consider only the predecessor nodes as 1-hop neighbors. For a three-layer graph neural network that adopts the mean aggregation function and the proposed combination function with RM, the propagation in the k-th layer for this chain graph can be expressed as (ignoring the weight matrix and nonlinear function in Equation (

where

, the symbol ⊙ in Equation (

5) is ignored for expressing convenience, and

refers to the rescaled parameter

.

Then, the representation of node 3 obtained from the last layer can be represented as

It reveals that the influence from the distant node 0 to the representation of node 3 is punished by . Due to , multiple multiplications will lead to a small value, which adaptively controls the message flowing from distant nodes (e.g., node 0) to the representation node (e.g., node 3).
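The chain example can be reproduced numerically. The sketch below assumes a linearized layer in which each node adds its predecessor's representation scaled by that layer's rescaled penalty (no weight matrix, no nonlinearity), and it assumes that node 0, which has no predecessor, receives a zero message. The function name and the penalty values are illustrative.

```python
import numpy as np

def chain_influence(penalties, n_nodes=4):
    """Coefficient matrix C with C[v, u] = weight of input x_u in the final
    representation of node v, for a chain 0 -> 1 -> ... -> n_nodes - 1."""
    shift = np.eye(n_nodes, k=-1)   # shift[i, i - 1] = 1: node i - 1 precedes node i
    coeff = np.eye(n_nodes)         # layer 0: every node holds only its own feature
    for p in penalties:             # one rescaled penalty per layer
        coeff = (np.eye(n_nodes) + p * shift) @ coeff
    return coeff

coeff = chain_influence([0.5, 0.5, 0.5])
print(coeff[3])  # contributions of nodes 0, 1, 2, 3 to node 3 after three layers
```

The contribution of node 0 to node 3 is the product of all three penalties, so it shrinks quickly when each penalty lies in (0, 1), whereas the node's own feature keeps a coefficient of 1.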

Therefore, the benefits of the proposed rejection mechanism can be summarized as follows. (1) When stacking layers to build a deep GNN, the information from distant nodes is more likely to be penalized or even rejected. This is a simple but effective way to address the over-smoothing problem. (2) The information from distant nodes can still affect the node representation, which helps to capture the global structure. (3) The combination of the hop penalty parameters makes it possible to adaptively aggregate information from different hops, which contributes to building a more powerful deep graph model.

5.1.2. Discussion

We notice that the graph neural network GG-NN [25] proposes a gate mechanism to aggregate neighbors, which is similar to the rejection mechanism proposed in this paper. The differences between these two mechanisms are summarized as follows. (1) The motivations are different. For GG-NN, the gate mechanism is proposed to dynamically aggregate neighbors’ information based on the information of the neighbors and the center node. For GraphDRej, the rejection mechanism is proposed to reject the information from distant nodes to address the over-smoothing problem. (2) The rejection mechanism is simpler and requires little computation, whereas for GG-NN, the gate computation involves several matrix multiplication and addition operations [25]. (3) The rejection mechanism is a more direct approach that strictly limits the information from distant nodes through the penalty parameters, whereas in GG-NN, according to Equation (6) in the original GG-NN paper [25], not only the messages from the neighbors but also the message from the center node are rescaled. This means that the gate mechanism in GG-NN is more like a weighted average of the messages from the neighbors and the center node, and the messages from the center node may also be rejected. Experimental results (see Section 6.6) show that GG-NN still suffers from the over-smoothing problem.

To further analyze the connection between the rejection mechanism and the gate mechanism, we propose a gate-based version of GraphDRej (denoted as GraphDRej-Gate). The only difference between GraphDRej and GraphDRej-Gate is the computation of the penalty parameter. The penalty parameter in GraphDRej is shared by all the nodes in the same layer, whereas the penalty parameter in GraphDRej-Gate is computed separately for each node v in the same layer.

where

and

are the transformation matrices. Compared with GG-NN, the rejection (gate) mechanism in GraphDRej-Gate is only applied to the neighbors’ messages, which helps to address the over-smoothing problem (see Section 6.6). Note that although the gate mechanism is common in other research areas, how to apply it to GNNs to address the over-smoothing problem is still an open question. For example, GG-NN falls into the over-smoothing problem, while GraphDRej-Gate can solve this problem. In fact, the rejection mechanism is a more general idea that points to a direction for addressing this problem: the penalty parameter can be a simple learnable parameter or can be computed by a gate function.

5.2. Graph Dilated Convolution Kernel

To obtain a sufficiently large neighborhood in message propagation, we explore the idea of the dilated convolution kernel [32], which is used in computer vision to enlarge the receptive field. We then propose a graph dilated convolution kernel, which changes the standard, single message propagation scheme, i.e., one layer for searching 1-hop neighbors, and brings diversity to the propagation schemes.

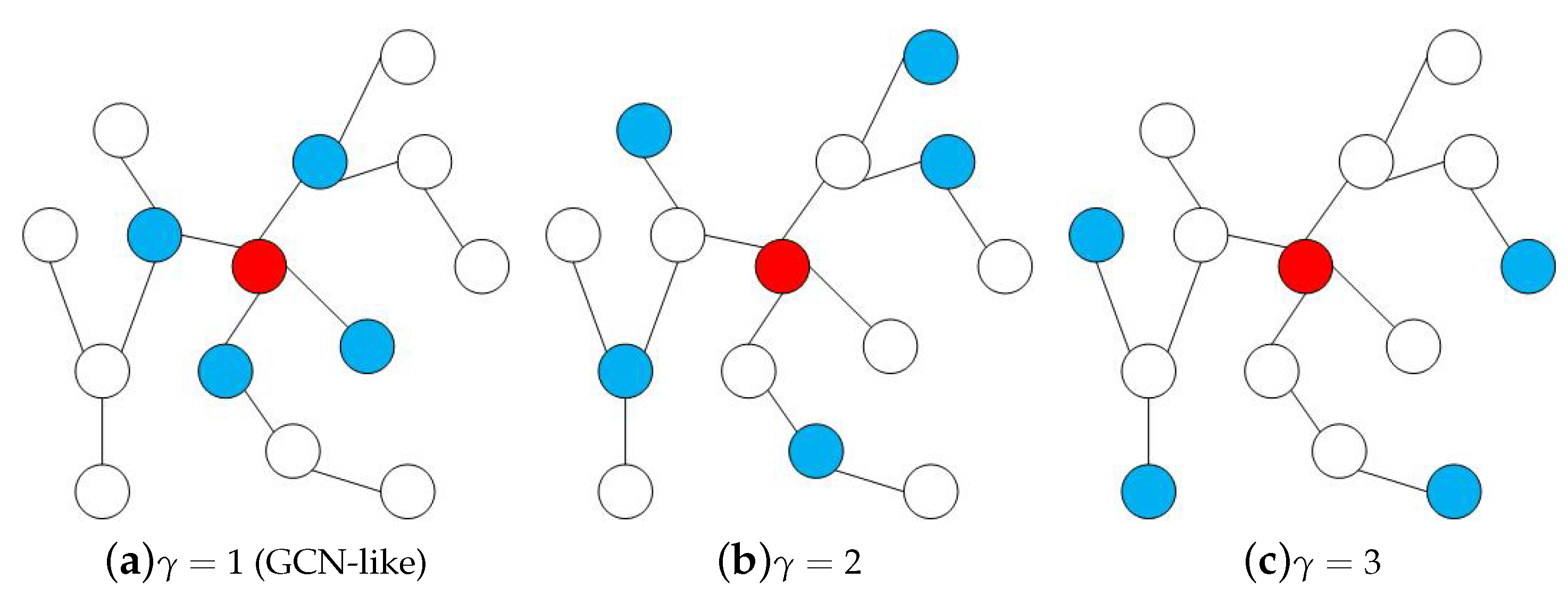

Specifically, we introduce a graph dilation rate

, which refers to the distance between the node and its neighbor nodes.

Figure 4 illustrates the examples of graph dilated convolution kernels with

. Actually, the standard graph convolution kernels used in GNNs can be taken as a special case of our graph dilated convolution kernel where

. Obviously, a larger value of

allows the model to explore nodes from a larger neighborhood. For example, a two-layer stacked graph dilated convolution (

) network can view neighbors within six hops, whereas for a standard two-layer stacked graph convolution network, only neighbors in two hops are considered. Therefore, we can enlarge the neighborhood size via graph dilated convolution kernels.
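The effect of the dilation rate on the reachable neighborhood can be illustrated with a small breadth-first search. The sketch assumes that the neighborhood at dilation rate d is the set of nodes at shortest-path distance exactly d; the function names and the path graph are illustrative, not taken from the paper.

```python
from collections import deque

def hop_distances(adj_list, source):
    """Shortest-path hop distance from `source` to every reachable node (BFS)."""
    dist = {source: 0}
    queue = deque([source])
    while queue:
        u = queue.popleft()
        for w in adj_list[u]:
            if w not in dist:
                dist[w] = dist[u] + 1
                queue.append(w)
    return dist

def dilated_neighbors(adj_list, v, d):
    """Nodes at hop distance exactly d from v (assumed meaning of dilation rate d)."""
    return {u for u, k in hop_distances(adj_list, v).items() if k == d}

def receptive_field(adj_list, v, d, num_layers):
    """Nodes whose features can reach v after stacking `num_layers` layers."""
    frontier, seen = {v}, {v}
    for _ in range(num_layers):
        frontier = set().union(*(dilated_neighbors(adj_list, u, d) for u in frontier))
        seen |= frontier
    return seen

# Path graph 0 - 1 - 2 - 3 - 4 - 5 - 6.
path = {i: [j for j in (i - 1, i + 1) if 0 <= j <= 6] for i in range(7)}
print(receptive_field(path, 0, d=1, num_layers=2))  # standard kernel
print(receptive_field(path, 0, d=3, num_layers=2))  # dilated kernel
```

On this path graph, two standard layers reach only nodes up to two hops away, while two layers with rate 3 reach the node six hops away. A single rate skips the intermediate hops, which is one reason to combine several rates in the same layer.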

As presented above, different graph dilated convolution kernels take nodes in different hops as the neighborhoods. To have a diverse and sufficient size of neighborhoods, multiple types of graph dilated convolution kernels are simultaneously applied to the center node. Then, we further propose Multi Graph Dilated Convolution Kernels (MGDCK). At each

k-th layer, multiple types of graph dilated convolution kernels are adopted. Each node

v may aggregate the messages not only from 1-hop neighbors (i.e.,

), but also from 2-hop neighbors (i.e.,

). Formally, when each layer contains

T types of graph dilated convolution kernels, the

AGGREGATE and

COMBINE functions can be expressed as

where

,

COMBINE refers to the combination function with RM mentioned in

Section 5.1, and the implementation of

AGGREGATE can be various. In this paper, we adopt a mean aggregation strategy. Other aggregation functions can also be applied. Then, the representation of node

v at the

k-th layer is the mean of all the representation vectors produced by

T graph dilated convolution kernels

Note that other pooling strategies such as max-pool, min-pool, and attention-pool can also be considered as the summarization operators on all the representations from these T graph dilated convolution kernels. Here, we only take the mean-pool as an example.
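One layer with multiple dilated kernels can be sketched as follows, under stated assumptions: each kernel has its own weight matrix, all kernels share one rescaled penalty `gate`, a node with an empty neighborhood receives a zero message, and the nonlinearity is ReLU. None of these choices is confirmed by the text above, and the function name `mgdck_layer` is hypothetical.

```python
import numpy as np

def mgdck_layer(h, neighborhoods, weights, gate):
    """Mean-aggregate per kernel, combine with rejection, then mean-pool kernels.

    h             : (n, f) node representations from the previous layer
    neighborhoods : list of T dicts; neighborhoods[t][v] lists the nodes in the
                    neighborhood of v for the t-th dilation rate
    weights       : list of T weight matrices of shape (f, f_out)
    gate          : rescaled hop penalty in (0, 1), shared by all kernels (assumption)
    """
    outputs = []
    for nbrs, w in zip(neighborhoods, weights):
        a = np.zeros_like(h)
        for v in range(h.shape[0]):
            if nbrs[v]:                           # empty neighborhood -> zero message
                a[v] = h[nbrs[v]].mean(axis=0)    # mean aggregation
        outputs.append(np.maximum((h + gate * a) @ w, 0.0))  # combination with RM
    return np.mean(outputs, axis=0)               # mean-pool over the T kernels

# Path graph 0 - 1 - 2 with 1-hop and 2-hop kernels (T = 2).
one_hop = {0: [1], 1: [0, 2], 2: [1]}
two_hop = {0: [2], 1: [], 2: [0]}
out = mgdck_layer(np.eye(3), [one_hop, two_hop], [np.eye(3), np.eye(3)], gate=0.5)
print(out)
```

Replacing `np.mean` in the last line of the function with `np.max` or a learned weighting would correspond to the other pooling strategies mentioned above.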

Although directly stacking layers can also enlarge the size of neighborhoods, too many layers may make the model difficult to train. The experimental results also show the effectiveness of graph dilated kernels compared with the direct stacking strategy (see Section 6.7).

5.3. Training

In this section, we introduce the training details of GraphDRej, including the loss function and the overall algorithm.

5.3.1. Loss Function

We follow the loss used in standard GNNs [6,10,17]. The loss function of GraphDRej is a supervised loss, i.e., it is computed from the label prediction for each training node. The supervised loss is defined as the cross-entropy.

where

is a weight matrix,

is the output of last layer and is taken as the learned node representation vector, and

is the label prediction of node

v in the training nodes.
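A minimal sketch of a supervised cross-entropy loss over the training nodes is shown below. It assumes a softmax classifier on top of the last-layer representations and a sum (rather than a mean) over training nodes; both choices, along with the function and argument names, are assumptions made for illustration.

```python
import numpy as np

def softmax(z):
    z = z - z.max(axis=1, keepdims=True)   # subtract the row max for stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

def supervised_loss(h_last, weight, labels, train_idx):
    """Cross-entropy between predicted and true labels of the training nodes.

    h_last    : (n, f) last-layer node representations
    weight    : (f, c) classification weight matrix
    labels    : (n,) integer class labels
    train_idx : indices of the labeled training nodes
    """
    probs = softmax(h_last[train_idx] @ weight)               # label predictions
    picked = probs[np.arange(len(train_idx)), labels[train_idx]]
    return -np.log(picked).sum()                              # summed over nodes (assumption)

labels = np.array([1, 0, 3])
train_idx = np.array([0, 2])
loss = supervised_loss(np.zeros((3, 2)), np.zeros((2, 4)), labels, train_idx)
print(loss)  # uniform predictions over 4 classes for 2 training nodes
```

Only the nodes in `train_idx` contribute to the loss; the remaining nodes still influence it indirectly through message propagation.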

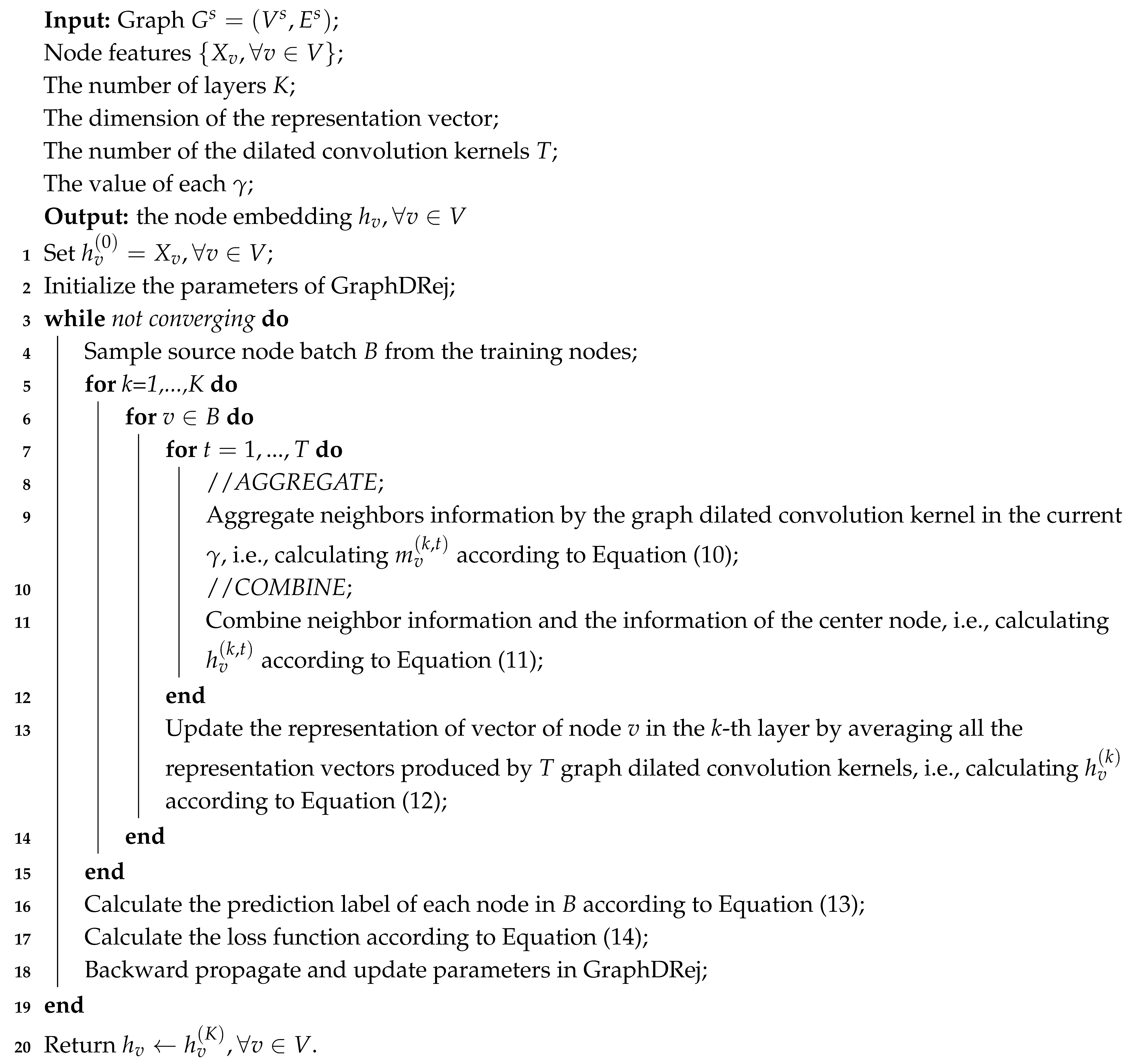

5.3.2. Overall Algorithm

The overall algorithm of GraphDRej is summarized in Algorithm 1. First, in each iteration, we sample a batch of nodes from the training nodes in Line 4. At each layer k, multiple graph dilated convolution kernels are applied to aggregate information from neighbors (Lines 8–9), and a rejection mechanism based combination is adopted to combine the information from the neighbors and the center node (Lines 10–11). Then, the node representation vector is updated by averaging the representations produced by the different graph dilated convolution kernels (Line 13). We calculate the label prediction and the cross-entropy loss in Lines 16–17. After the forward propagation (Lines 4–17), backward propagation is carried out to update the parameters in Line 18. Finally, after convergence, we take the last layer output as the embeddings of nodes in Line 20.

Algorithm 1: The Algorithm of GraphDRej.