1. Introduction

A retina consists of three types of cone cells, namely L-, M-, and S-cones, which are sensitive to long, medium, and short wavelengths of visible light, respectively. The combination of responses from these three types of cone cells determines the color that is perceived. Genetic disorders can cause one or more types of cones to be partially or totally nonfunctional, which leads to color vision deficiency (CVD), also known as color blindness [1].

About 250 million people around the world are affected by CVD [2]. Being unable to clearly differentiate certain colors causes people with CVD to face inconveniences, misconceptions, and even dangers in their daily lives. For most of those diagnosed, CVD is congenital, and there is not yet a cure for the condition; however, contact lenses and glasses with a color filter are commercially available. By applying uniform changes to the user’s field of view (FoV), these tools increase red-green contrast but may filter out other colors. Furthermore, wearing tinted glasses has been reported to produce an uncomfortable visual experience and may impair the user’s depth perception [3].

In the field of digital image processing, many methods have been proposed to support people with CVD. Some approaches increase the contrast between pairs of colors by modifying one or both of them [4,5,6,7], while others use additional visual cues, such as patterns, to encode color information [8]. Approaches based on the Daltonization process improve image quality for people with CVD by increasing or reintroducing lost color contrasts [9,10,11,12,13]. Most of these approaches first use a color deficiency simulation method to compute the decreased or lost information.

Recently, several research groups have proposed implementing Daltonization using an optical see-through head-mounted display (OST-HMD) [12,13]. An OST-HMD provides a computational semitransparent overlay on the environment. By observing the environment through an OST-HMD that displays a compensation image adapted to both the user’s deficiency and the environment, a CVD user is expected to regain the lost information.

However, color distortion must be solved to realize the Daltonization process on OST-HMDs. Distortion arises for many reasons, such as the decay of environmental light as it passes through the OST-HMD and rendering distortion [14,15]. The most challenging problem, however, is that commercially available OST-HMDs are light-additive: they can only emit light that combines with the incoming light from the environment, which makes the perceived image brighter, whereas the Daltonization process requires some pixel values of the image to be decreased.

This paper proposes a novel approach that controls the arriving light with a liquid crystal display (LCD) in order to realize the Daltonization process with an OST-HMD. To validate the feasibility of the idea, a basic prototype consisting of a scene camera, a user-perspective camera, a transmissive LCD panel, and an OST-HMD was presented in [16]. In that prototype, small lookup tables were used to calibrate the color distortions, and the feasibility of the idea was demonstrated with simple, artificially created images. This paper proposes ALCC-glasses, an improved prototype for validating and demonstrating a new arriving light chroma controllable (ALCC) augmented reality technology for CVD compensation. We designed experiments to estimate the functions required to correct the distortions that occur during rendering on the OST-HMD and during transmission through the OST-HMD and the LCD. We present algorithms that automatically compute the transparency of the LCD and the images to be displayed on the OST-HMD. The effectiveness of the proposed approach was validated on various images by comparing the results of the Daltonization process obtained with and without light subtraction.

Employing an LCD for light reduction is not itself a new idea. Wetzstein et al. [3] developed two prototypes to explore the use of a partially transparent LCD panel for light modulation. One lets users see directly through the LCD, but it may suffer from blur and diffraction artifacts. The other uses extra optical lenses to bring the LCD into focus and a beam-splitter between the LCD and the scene to obtain an on-axis image, which ensures pixel precision, but this structure makes it difficult to miniaturize the prototype into an HMD. Hiroi et al. [17] used a partially transparent LCD panel in their brightness adaptation study. However, their system uses the LCD only to suppress light in overexposed areas and does not allow the three color channels to be controlled individually.

The contributions of this paper can be summarized as follows:

1. Reproducing color precisely for each pixel is particularly important for Daltonization. The proposed prototype is the first system that can precisely reproduce color by achieving light subtraction with the LCD, while also correcting the color distortion resulting from the transmission of light through an OST-HMD and LCD as well as the rendering on an OST-HMD.

2. A new algorithm is developed for achieving final pixel-wise light chroma control with an OST-HMD while using an LCD to subtract the overall amount of light. In this way, the blur and diffraction artifacts can be alleviated without bringing the LCD into focus, which would require incorporating extra devices, such as optical lenses, into the existing system.

3. As an arriving light control technique, the proposed method can be used in other augmented reality applications that require reducing individual color channels of the arriving light.

2. Related Work

Anagnostopoulos et al. [

9] and Badlani et al. [

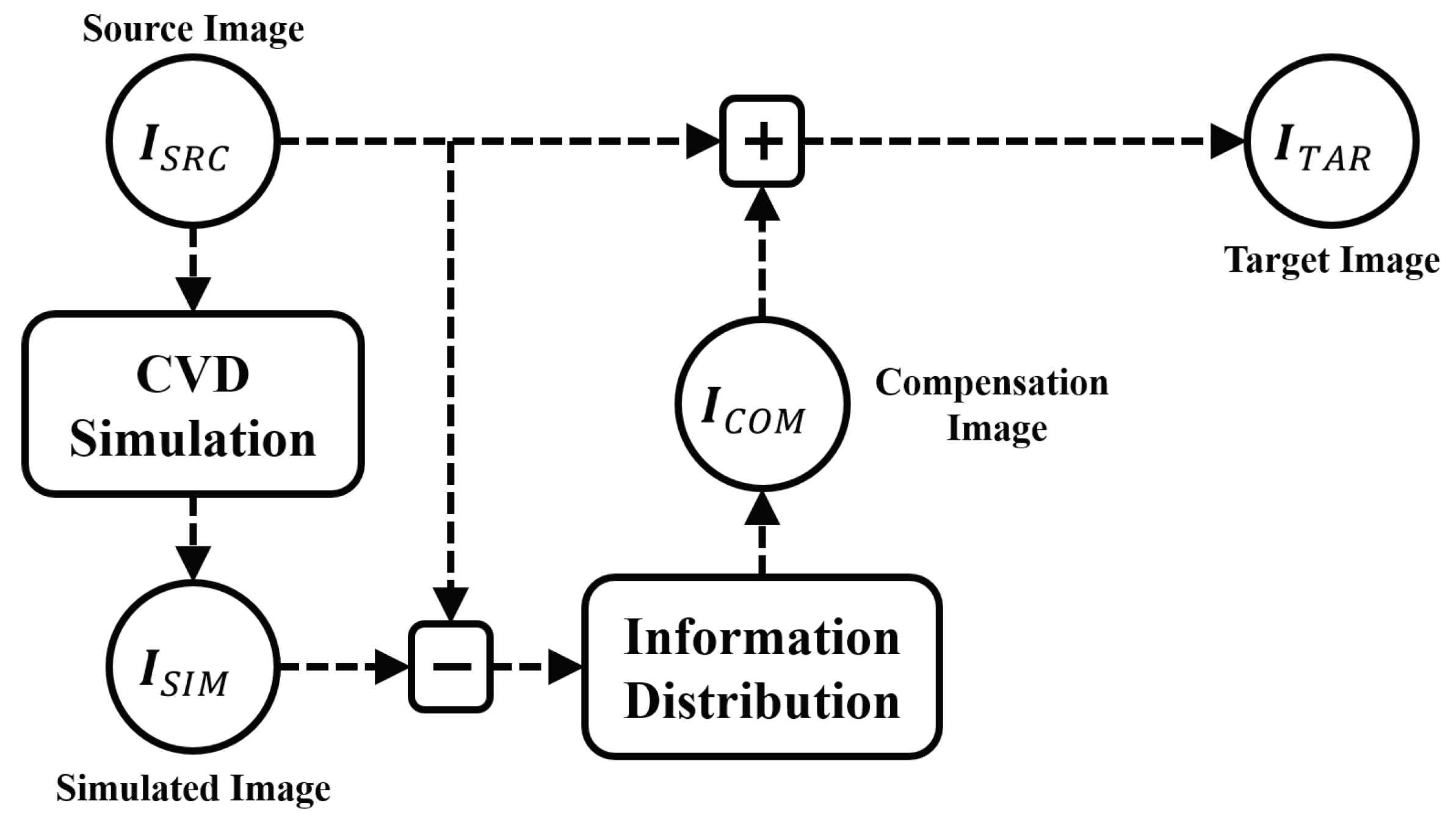

11] put forward the Daltonization process for protanopia (L-cone) and deuteranopia (M-cone) compensation, respectively. As shown in

Figure 1, in [

9,

11], a compensation image,

, was generated by referring to the original image,

, and the CVD simulated image,

. The

is then superimposed over the

to synthesize the target image,

; this image helps individuals with protanopia or deuteranopia identify red and green, which they often struggle to distinguish. In the deuteranopia case [

11],

was obtained by removing the information concerning the M-cone from the

. The difference between the

and the

was treated as the lost color information, particularly from the G channel, as a result of deuteranopia. Then, the

was generated by distributing the lost G channel information across the R and B channels through the following calculations:
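The error-redistribution step described above can be sketched as follows. This is a minimal NumPy illustration, not the calculation of Equation (1) itself: the simulated image is taken as an input (any deuteranopia simulation, such as Birch’s model, could produce it), and the weights `w_r` and `w_b` that spread the lost G-channel information onto the R and B channels are hypothetical placeholders, as is the function name.

```python
import numpy as np

def daltonize_deuteranopia(original, simulated, w_r=0.7, w_b=0.7):
    """Sketch of Daltonization by redistributing lost G-channel information.

    original, simulated: float arrays of shape (H, W, 3), RGB in [0, 1].
    w_r, w_b: hypothetical weights for moving the G-channel error to R and B.
    Returns (compensation, target); the compensation image may be negative.
    """
    original = np.asarray(original, dtype=float)
    simulated = np.asarray(simulated, dtype=float)
    # Information lost to deuteranopia, taken here from the G channel only.
    lost_g = original[..., 1] - simulated[..., 1]
    compensation = np.zeros_like(original)
    compensation[..., 0] = w_r * lost_g  # distribute onto R
    compensation[..., 2] = w_b * lost_g  # distribute onto B
    # Superimpose the compensation image over the original image.
    target = np.clip(original + compensation, 0.0, 1.0)
    return compensation, target
```

Because `lost_g` is negative wherever the simulated G value exceeds the original one, the compensation image can contain negative pixels. An additive OST-HMD cannot display these, which is the problem the LCD is meant to address.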

In this paper, we applied Birch’s model [18] to the original image for the deuteranopia simulation and used the same process as [11] to compute the compensation image. With the appearance of augmented reality (AR) techniques, CVD compensation via wearable devices has become an emerging research topic. Ananto et al. [10] and Tanuwidjaja et al. [13] both proposed CVD compensation solutions with OST-HMDs. In [10,13], the compensation image was shown on an OST-HMD, superimposed over the real scene. However, as already mentioned, the color distortion of OST-HMDs cannot be ignored. In addition, the Daltonization process requires subtracting part of the arriving light, but current OST-HMDs can only add light to the background and cannot subtract it.

2.1. Color Distortion Correction for the OST-HMD

A lookup table (LUT) that defines the relationship between input colors and the colors actually observed is one possible way of dealing with the color distortion of an OST-HMD. However, creating a 16-million-color LUT is time-consuming (it may take two to three days), and the OST-HMD would require a considerable amount of memory to store it. To reduce the size, both Sridharan et al. [19] and Hincapié-Ramos et al. [20] used a binned-profile method [21] that divides the CIELAB color space into fixed-size bins. To eliminate the LUT altogether, Itoh et al. [14] created a channel-specific color-correction model consisting of two parts, a linear transformation matrix and a nonlinear function, derived from the physical optics of rendering on an OST-HMD and perception by the user’s eyes. Ryu et al. [22] used synthesized images captured by a user-perspective camera to estimate real-space colors and performed color correction accordingly. Kim et al. [23] tackled nonlinear color changes through localized regression; they modeled the changes and used hue-constrained gamut mapping for color correction.
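The binned-profile idea can be illustrated with a short sketch: measured pairs of displayed and observed CIELAB colors are grouped into fixed-size bins, and each bin stores the average observed color, so the table grows with the number of occupied bins rather than with the 16 million input colors. The bin size of 5 and the function names are hypothetical, chosen only for illustration; this is not the implementation used in [19,20,21].

```python
import numpy as np
from collections import defaultdict

def bin_key(lab, bin_size=5.0):
    """Index of the fixed-size CIELAB bin that contains a color."""
    return tuple(int(np.floor(v / bin_size)) for v in lab)

def build_binned_profile(displayed_lab, observed_lab, bin_size=5.0):
    """Map each occupied bin to the mean observed color of its samples."""
    sums = defaultdict(lambda: np.zeros(3))
    counts = defaultdict(int)
    for shown, seen in zip(displayed_lab, observed_lab):
        key = bin_key(shown, bin_size)
        sums[key] += np.asarray(seen, dtype=float)
        counts[key] += 1
    return {key: sums[key] / counts[key] for key in sums}

def lookup(profile, lab, bin_size=5.0):
    """Observed color predicted for an input color, or None for an empty bin."""
    return profile.get(bin_key(lab, bin_size))
```

A larger bin size shrinks the table further at the cost of coarser correction within each bin.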

2.2. Light Modulation for OST-HMDs

To make arriving light subtraction possible, Wetzstein et al. [3] developed two prototypes to evaluate light modulation with a partially transparent LCD panel. One prototype used extra optical lenses to bring the LCD into focus, and a beam-splitter was placed between the LCD and the scene to obtain an on-axis image so that pixel precision could be ensured. The other prototype let users see directly through the LCD, using an off-axis camera to capture the scene image. However, the resulting image may suffer from blur and diffraction.

Hiroi et al. [17] also introduced a partially transparent LCD panel in their brightness adaptation study. In [17], a beam-splitter directed half of the scene light to the scene camera for overexposure and underexposure detection. The LCD panel was positioned between the beam-splitter and the OST-HMD to block a portion of the light before it passed through the OST-HMD. They suppressed light in overexposed areas with the LCD and compensated underexposed areas with the OST-HMD. However, their system processes the three color channels (R, G, B) uniformly and provides no control over individual color channels.

Itoh et al. [24] introduced an approach for light subtraction based on a spatial light modulator (SLM); their system also used an additional optical lens and beam-splitter and provided pixel-level color subtraction. However, because their approach also relied on the resolution of the SLM, it likewise suffered from diffraction caused by the pixel grid.

The construction of the prototype proposed in this paper is similar to [17]. However, we developed a new algorithm for computing the images to be displayed on the OST-HMD and the LCD to achieve light chroma control, including light subtraction for individual color channels. By achieving final pixel-wise light chroma control with the OST-HMD while using the LCD to subtract an overall amount of light, the blur and diffraction artifacts can be alleviated without bringing the LCD into focus, which would require extra devices such as the optical lenses in [3]. Furthermore, because reproducing color precisely for each pixel is particularly important for Daltonization, the proposed prototype also performs color correction that accounts for the color distortions resulting from the transmission of light through the OST-HMD and the LCD, as well as from rendering on the OST-HMD.

3. Proposed Method

In this section, we first present the idea of using an OST-HMD and an LCD for realizing the Daltonization process. Next, we introduce the hardware configuration of the ALCC-glasses. Finally, we explain how to control arriving light to achieve the CVD compensation goal.

3.1. Realizing the Daltonization Process with an OST-HMD and LCD

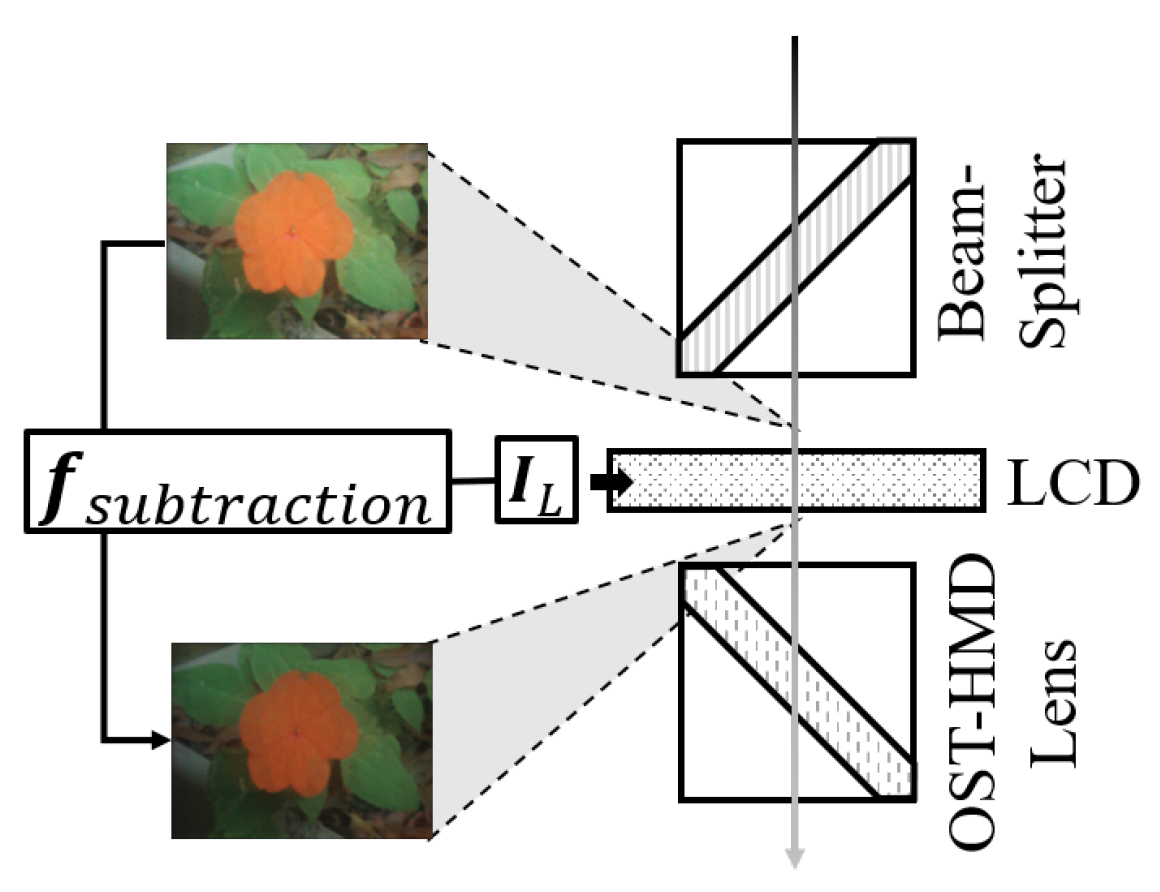

As shown in Figure 2, when the Daltonization process is realized with an OST-HMD, the scene image is the environment that a user with CVD is observing, and the target image is computed by distributing the lost color information to other color channels as described in Figure 1. The target image should equal the compensated view of the environment observed through the OST-HMD. The displayed compensation image is computed from the difference between the target image and the scene image; because the compensation image may contain negative pixel values, an LCD is used to achieve the subtractive overlay effect.

3.2. Hardware Configuration

Figure 3 shows the hardware configuration of the ALCC-glasses, which are composed of a scene camera, a beam-splitter, a transmissive LCD panel, an OST-HMD, and a user-perspective camera. The scene camera captures the scene image, which is required for computing the compensation image. To achieve pixel-specific color modification, the image shown on the LCD, the image displayed on the OST-HMD, and the image of the external environment must be aligned. We used a 50/50 beam-splitter to divide the environmental light equally between the scene camera and the path through the LCD and the OST-HMD. The environmental light decays after passing through the LCD and the OST-HMD. Moreover, nonlinear distortion usually occurs when an image is rendered on an OST-HMD. We performed color calibration by taking this decay and distortion into account when generating the compensation image. For this purpose, a camera was installed at the position of the user’s eye in the prototype to capture the user-perspective image; these images were used to collect the data required for calibration. The user-perspective images were also used to evaluate the effectiveness of the proposed system, as described in Section 4.

In the current implementation, we used two Blackfly USB color cameras (BFLY-U3-23S6C-C), a Sony LCX017 LCD panel (1024 × 768) with a polarizer and controller, an EPSON BT-35E (1280 × 720) OST-HMD, and a 50/50 beam-splitter. We also used an EIZO CS2730 LED monitor to produce the environmental light. When the LCD displays a brighter color (e.g., white), it becomes more transparent; when it displays a darker color (e.g., black), it becomes less transparent and blocks the light. We processed the image taken by the scene camera and calculated the outputs for the LCD and the OST-HMD on a PC with an Intel Xeon E3-1245 CPU, using a single-threaded naive implementation.

Both the scene camera and the user-perspective camera had all automatic adjustments and gamma correction turned off. For alignment, we placed the source of environmental light (the monitor) at a position roughly consistent with the virtual position of the image rendered on the OST-HMD. Thus, both the environment and the image shown on the OST-HMD can be seen clearly, and pixel-specific color modification becomes possible. To avoid interference from ambient light, we conducted all experiments in a dark room.

3.3. Achieving CVD Compensation with ALCC-Glasses

We ignore the light decrease from the monitor to the scene camera and assume that the scene camera observes the scene directly. The beam-splitter separates the environmental light into two rays, but the split may not be exactly 50/50. The ray toward the user-perspective camera is then attenuated by the LCD panel and the HMD lenses. We use a decay function to represent all of these changes from the scene image to the user-perspective image (Figure 4), including the difference between the two rays leaving the beam-splitter. We can control part of the decrease by changing the image displayed on the LCD, which adjusts the transmittance of the LCD; the relationship between the LCD image and the transmittance is represented by a subtraction function (Figure 5). Finally, the environmental light combines with the light emitted by the OST-HMD. When an image is displayed on the OST-HMD, its color is distorted for many reasons; this distortion is accounted for by a distortion function (Figure 6). Combining these three functions relates the scene image observed by the scene camera to the user-perspective image observed by the user, as expressed in Equation (2).

If the three functions in Equation (2) are known, we can compute the images to be displayed by the LCD and the OST-HMD using the inverse mappings of the functions. In this subsection, we first explain how to estimate the subtraction, decay, and distortion functions through experiments and then explain how to control the overlay so that CVD users observe a compensated image through the OST-HMD.

3.3.1. Subtraction Function

To estimate the subtraction function, we conducted an experiment with the OST-HMD turned off and an environment color displayed on the monitor. The image sent to the LCD was a single-colored image with input level i. At this point, we found that the transmittance of the LCD varied across the panel as a gradient because of a hardware issue. We solved this problem by displaying a linear gradient image instead, which ensured that the LCD transmittance was uniform across the panel for each i. We varied i from 0 to 255 and recorded the corresponding user-perspective image.

Figure 7a shows the observed results, with the horizontal axis representing i and the vertical axis showing the R, G, and B transmittance values as percentages. Note that the color values may vary from pixel to pixel in the user-perspective image even though the environment image is a uniform color image; the plotted R, G, and B values are therefore the averages over all pixels of the observed image. As Figure 7a shows, the subtraction function is nonlinear; we found that the curve was best fit by a fifth-order polynomial. The graphs in Figure 7b are the observed results for three different environmental light colors. All three graphs are very similar; in fact, the curves for all of the environment colors we tested are very similar. We evaluated the fitting error of the curves for the different environment colors and found that the error increased when the value of any channel of the environment color dropped. Therefore, for better accuracy in subsequent computations, we used the curve measured with the environment color whose channel values were highest.

Given a target transmittance t, we compute the required LCD input i by solving the polynomial equation with the Newton–Raphson method. Figure 7a also shows slight differences among the R, G, and B channels for the same t, which we attribute to the optical properties of the LCD’s material. Since the transmittance of each channel cannot be changed separately, we determine a single input i from t by solving the fitting equation for the average curve of the three channels with the Newton–Raphson method. The per-channel differences are nevertheless taken into account in subsequent calculations.

Note that in all the experiments in this subsection, the output still had a nonzero value when the input was 0. This offset results from the light receptor settings of the camera. Some monitors and OST-HMDs also exhibit this so-called zero-input problem [14]. These constant offsets must be removed before curve fitting.
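A minimal sketch of the fitting and inversion steps, assuming NumPy: the zero-input offset is removed by subtracting the value observed at input 0, the result is normalized so that input 255 corresponds to full transmittance, a fifth-order polynomial is fitted, and Newton–Raphson recovers the LCD input for a target transmittance. The function names, the starting point `x0 = 128`, and the normalization are illustrative assumptions; following the text, the observed values passed in would be the average curve of the three channels.

```python
import numpy as np
from numpy.polynomial import Polynomial

def fit_subtraction_curve(inputs, observed, degree=5):
    """Fit LCD transmittance t(i) as a polynomial in the input level i.

    inputs: LCD input levels (e.g., 0..255).
    observed: camera values averaged over pixels and over R, G, B.
    """
    inputs = np.asarray(inputs, dtype=float)
    observed = np.asarray(observed, dtype=float)
    # Remove the constant zero-input offset (value observed at the lowest input).
    corrected = observed - observed[inputs.argmin()]
    # Normalize so that the most transparent setting has transmittance 1.
    t = corrected / corrected[inputs.argmax()]
    return Polynomial.fit(inputs, t, degree)

def input_for_transmittance(curve, t_target, x0=128.0, tol=1e-6, max_iter=50):
    """Solve curve(i) = t_target for i with Newton-Raphson, clamped to [0, 255]."""
    slope = curve.deriv()
    x = x0
    for _ in range(max_iter):
        d = slope(x)
        if d == 0:
            break  # flat spot: no Newton step possible
        step = (curve(x) - t_target) / d
        x = min(max(x - step, 0.0), 255.0)
        if abs(step) < tol:
            break
    return x
```

The returned input is a real number; it would be rounded to the nearest integer level before being sent to the LCD.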

3.3.2. Decay Function

To estimate the decay function, we displayed each of the three color channels from 0 to 255 on the monitor with the OST-HMD turned off and the LCD input i set to 255 (highest transparency). Since the OST-HMD was off and the LCD was at its highest transparency, as defined in Equation (2), the user-perspective image should equal the decay function applied to the scene image. In Figure 8, we plot all pairs of scene and user-perspective values as points in 2D space, with the scene value on the horizontal axis and the user-perspective values in the R, G, and B channels on the vertical axis. The graphs indicate that this relationship is linear. Because the monitor’s color reproduction range differs from channel to channel, the final observable range of blue light was smaller than that of red light; hence, the line for blue light is shorter than that for red light in Figure 8.
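Because the decay relationship in Figure 8 is linear, each channel can be summarized by a gain and an offset from an ordinary least-squares line. The sketch below, assuming NumPy and hypothetical function names, fits these per-channel parameters from matched samples of scene values and user-perspective values recorded with the OST-HMD off and the LCD at 255.

```python
import numpy as np

def fit_decay(scene, observed):
    """Per-channel linear decay model: observed = gain * scene + offset.

    scene, observed: arrays of shape (N, 3) with matched samples.
    Returns (gain, offset), each of shape (3,).
    """
    scene = np.asarray(scene, dtype=float)
    observed = np.asarray(observed, dtype=float)
    # np.polyfit with degree 1 returns [slope, intercept] for each channel.
    fits = [np.polyfit(scene[:, c], observed[:, c], 1) for c in range(3)]
    gain = np.array([slope for slope, _ in fits])
    offset = np.array([intercept for _, intercept in fits])
    return gain, offset

def apply_decay(scene_image, gain, offset):
    """Predict the user-perspective image for an (H, W, 3) scene image."""
    return np.asarray(scene_image, dtype=float) * gain + offset
```

If the zero-input offsets have already been removed, the fitted offsets should be close to zero and could be dropped.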

3.3.3. Distortion Function

Figure 9a shows the response curves of the user-perspective camera when the monitor was turned off and a single-channel color was displayed on the OST-HMD. For an input image to the HMD, the distortion function gives the distorted image observed by the user. As can be seen in Figure 9a, even with a single-channel input, the observation has values in all three channels. This is the side color effect [14].

Figure 9b shows the relationship between side colors and main colors, with the main color value on the horizontal axis and the side color value on the vertical axis. The side colors are linearly related to the main colors. Therefore, denoting the main color of each of the R, G, and B channels separately, each side color is expressed as a linear function of the corresponding main color (Equation (3)): the R-channel input produces two side colors, in the G and B channels, and likewise the G- and B-channel inputs each produce two side colors. In this way, only the main color component of each channel needs to be considered when the distortion function is estimated. Given a distorted image, each of its channels is the sum of that channel’s main color and the side colors contributed by the other two channels, as given by Equation (3); this can be rewritten as a linear system (Equation (4)). Since the side color coefficients are known from the experiment (Figure 9b), we can compute the main colors by solving Equation (4). From the main color of each channel, we compute the required input for the OST-HMD by solving the polynomial equation with the Newton–Raphson method.
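The side-color correction amounts to a 3 × 3 linear system. In the sketch below (assuming NumPy), the coefficient matrix is hypothetical: entry [j, c] stands for the slope, as in Figure 9b, of the side color that input channel c produces in observed channel j, with ones on the diagonal for the main colors; its values and the helper name are illustrative only. Solving the system recovers the main colors, each of which would then be mapped to an OST-HMD input by inverting its response curve with Newton–Raphson.

```python
import numpy as np

# Hypothetical side-color slopes (row = observed channel, column = input channel).
SIDE = np.array([
    [1.00, 0.05, 0.02],   # R observed: main R plus side colors from G and B inputs
    [0.08, 1.00, 0.06],   # G observed
    [0.03, 0.10, 1.00],   # B observed
])

def main_colors(distorted, side=SIDE):
    """Recover per-channel main colors from distorted HMD colors.

    distorted: array (..., 3) of observed R, G, B values.
    Solves side @ m = distorted for every pixel; negative results are clipped
    to 0 because the additive OST-HMD cannot emit negative light.
    """
    distorted = np.asarray(distorted, dtype=float)
    flat = distorted.reshape(-1, 3).T          # shape (3, N)
    mains = np.linalg.solve(side, flat).T      # shape (N, 3)
    return np.clip(mains, 0.0, None).reshape(distorted.shape)
```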

3.4. Controlling the Overlay to Achieve the Target Image

In this subsection, we explain how to compute the LCD image and the OST-HMD image for a given scene image and target image using Equation (2).

To determine whether light subtraction is necessary and how much light should be subtracted, we calculate the difference between the target image, computed with the Daltonization process of Equation (1), and the user-perspective image captured with the OST-HMD turned off and the LCD set to its highest transparency. If this difference is below 0 for some pixels, light subtraction must be performed for those pixels. Because it is difficult to achieve focus alignment between the LCD, the OST-HMD, and the monitor, we control the transmittance of the LCD only uniformly and then achieve the target colors by compensating individual color channels of each pixel with the OST-HMD. To compute the transmittance, we propose two principles: the lowest transmittance principle (Principle I) and the histogram peak principle (Principle II). For both principles, each channel of every pixel is checked for the need for light subtraction. Consider the set of pixels that have at least one negative channel value in the difference image; these pixels require light subtraction. For each such pixel, the light subtraction rate (LSR) and the corresponding transmittance t of each channel are then calculated from the difference.

In Principle I, the lowest transmittance over all pixels is set as the transmittance of the LCD panel; channels of pixels that are over-subtracted at this transmittance t are then compensated by the OST-HMD. In Principle II, the number of pixels for each channel and LSR value (equivalently, transmittance) is counted as the height of a bin in a two-dimensional histogram H. Each bin of the histogram is indexed by a chromaticity channel c and an LSR value k expressed in hundredths. We then sum the histogram H over the chromaticity channels to obtain a one-dimensional histogram. Finally, the k with the maximal number of pixels in this histogram is set as the target LSR, and the LCD transmittance is set accordingly. In theory, Principle I should be more accurate. However, we adopted Principle II because of the limitations of the OST-HMD, which are discussed in Section 5.

Using the selected LSR and the subtraction required by channel c of each pixel, the transmittance of the LCD is determined. Then, the controlling image of the LCD is computed by the method described in Section 3.3.1, and a new user-perspective image is estimated, taking into account the per-channel transmittance differences noted in Section 3.3.1. If the difference is greater than or equal to 0 for all pixels, no subtraction is required: the LCD is set to its highest transparency, and the OST-HMD image can be calculated directly from the difference.

To compute the OST-HMD input image, the difference between the target image and the estimated user-perspective image is used to determine how much each channel of each pixel should be compensated by the OST-HMD. That is, the distorted image emitted by the OST-HMD should equal this difference; therefore, we can use Equation (4) to obtain the main colors and then compute the required input for the OST-HMD as described in Section 3.3.3.
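The final step can be sketched as follows, as an illustration under stated assumptions rather than the authors’ code. The estimated user-perspective image is assumed to be the baseline image (LCD fully transparent, OST-HMD off) scaled channel-wise by the per-channel transmittance at the chosen LCD input, reflecting the channel differences noted in Section 3.3.1. The residual that the OST-HMD must add is clipped at zero, since an additive display cannot remove light. The helper name and the scaling model are hypothetical.

```python
import numpy as np

def hmd_residual(target, baseline, t_channel):
    """Estimate the user view after LCD subtraction and the light the HMD must add.

    target, baseline: (H, W, 3) arrays of linear camera values.
    t_channel: length-3 per-channel transmittance at the selected LCD input.
    Returns (estimated_view, residual); the residual is what the OST-HMD should
    emit, before the side-color solve and the response-curve inversion.
    """
    t = np.asarray(t_channel, dtype=float).reshape(1, 1, 3)
    estimated = np.asarray(baseline, dtype=float) * t
    residual = np.asarray(target, dtype=float) - estimated
    # Negative residuals (under-subtraction) cannot be fixed by adding light.
    return estimated, np.clip(residual, 0.0, None)
```

The clipped residual would then be passed through the side-color solve of Equation (4) and the inverse response curves to obtain the OST-HMD input.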

4. Results and Evaluation

To verify the effectiveness of the proposed method, we conducted an experiment comparing our results with an existing method. Both [12] and [13] share our purpose, that is, achieving color compensation by displaying an overlay image on an OST-HMD, and neither supports light subtraction. We chose to compare with [13] because it also uses Daltonization, which allows us to confirm the effect of light subtraction while eliminating other factors caused by differences between compensation methods.

Given the scene image captured by the scene camera from the monitor, the target image for deuteranopia is obtained by adding a compensation image computed with Equation (1) to the scene image. We compared how similar the images provided to the user by our prototype system and by the existing method [13] are to this computed target image.

The image provided by our prototype, captured with the user-perspective camera, is generated by first computing the compensation image with Equation (1) from the scene image and its deuteranopia simulation, and then computing the LCD controlling image and the OST-HMD overlay image with the estimated subtraction, decay, and distortion functions using Equation (2).

The image provided by the existing method, also captured with the user-perspective camera, is generated by displaying the compensation image computed with Equation (1) directly on the OST-HMD with the LCD removed from the system. Negative values in the compensation image were set to 0.

With the LCD installed, the range of colors the user can observe is smaller than without the LCD because of the decay and the material color of the LCD. Nevertheless, as shown in Figure 10, with compensation for both the OST-HMD and the LCD, the results of our method (Figure 10, 3rd column) appeared more similar to the target images (Figure 10, 2nd column) and showed enhanced contrast compared with the existing method (Figure 10, 4th column) in areas where contrast loss tends to occur with deuteranopia. The resolution of all images is 400 × 300, and the average processing time was 30.91 milliseconds.

Furthermore, for a quantitative evaluation, we used the root mean square (RMS) error metric proposed in [24] to compare our method with the existing method. The RMS metric evaluates how consistent the local contrast between a pixel and its k randomly picked neighboring pixels in the test image is with the local contrast between the corresponding pixel and neighboring pixels in the reference image; the constant 160 in the metric normalizes the index value to the range [0, 1]. We applied the RMS metric to the CVD simulation images of the target image, of the result of the proposed method, and of the result of the existing method, using the CVD simulation of the original image as the reference image.
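A sketch of the contrast-consistency RMS described above, assuming NumPy and images already converted to CIELAB. Two details are our assumptions, not taken from the text: the local contrast between two pixels is measured as their Euclidean CIELAB distance, and the k neighbors are drawn from a Gaussian window (spread `sigma`) around each pixel, shared between the test and reference images. The names `contrast_rms`, `sigma`, and `seed` are hypothetical.

```python
import numpy as np

def contrast_rms(test_lab, ref_lab, k=8, sigma=5.0, seed=0):
    """RMS of local-contrast differences between a test and a reference image.

    test_lab, ref_lab: (H, W, 3) CIELAB images of the same size.
    For every pixel, k random neighbours are sampled; the same neighbours are
    used for both images. Differences are divided by 160 for normalization.
    """
    test_lab = np.asarray(test_lab, dtype=float)
    ref_lab = np.asarray(ref_lab, dtype=float)
    h, w, _ = ref_lab.shape
    n = h * w
    rng = np.random.default_rng(seed)
    ys, xs = np.divmod(np.arange(n), w)            # row, column of each pixel
    dy = np.rint(rng.normal(0.0, sigma, (n, k))).astype(int)
    dx = np.rint(rng.normal(0.0, sigma, (n, k))).astype(int)
    ny = np.clip(ys[:, None] + dy, 0, h - 1)
    nx = np.clip(xs[:, None] + dx, 0, w - 1)
    nbrs = ny * w + nx                             # flat neighbour indices, (n, k)
    test = test_lab.reshape(n, 3)
    ref = ref_lab.reshape(n, 3)
    d_test = np.linalg.norm(test[:, None, :] - test[nbrs], axis=2)
    d_ref = np.linalg.norm(ref[:, None, :] - ref[nbrs], axis=2)
    return float(np.sqrt(np.mean(((d_test - d_ref) / 160.0) ** 2)))
```

A lower value means that the local contrast of the test image is closer to that of the reference; fixing `seed` keeps the sampled neighbors identical across the compared methods.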

As shown in Table 1, the proposed method outperformed the existing method for all image groups except group C. Because the image of group C contains more high-frequency content in the background, the contrast loss caused by the blurring artifacts discussed in the next section becomes dominant, even though the foreground object, which is barely visible with deuteranopia, becomes much clearer than with the existing method.

5. Discussion

Introducing a beam-splitter and an LCD panel increases the complexity of the system, and the stability of the compensation depends on the specifications of each optical unit. The beam-splitter reduces the light by about 50%, and the LCD and the OST-HMD reduce it further, so the light throughput of the system is less than 10%. Moreover, although we achieved light compensation using an HMD, the extra structure makes miniaturization harder. In further studies, we are considering using a stereo scene camera and simulating the on-axis image from its input instead of using a beam-splitter.

In this study, we ignored the focus problem and forced the user-perspective camera to focus on both the monitor and the OST-HMD. This is one of the major reasons for the blurring artifacts observed in Figure 10, although these artifacts are reduced compared with the existing method [13] because the OST-HMD compensates colors for individual pixels. This problem needs to be better addressed before our system can be used in real-life applications. When the scene is viewed through the LCD panel, diffraction effects from the pixel grid are visible; they are inherent to the LCD panel used (bbs bild- und lichtsysteme GmbH, http://www.bbs-bildsysteme.com/) and might be reduced by using a lower-resolution LCD, which would not affect the results of our method because the LCD transmittance is controlled uniformly.

Because of the focus problem, pixel-wise alignment between the LCD and the other devices cannot be achieved, so we can only subtract light uniformly over the entire image. The uniform amount of light to be subtracted is determined by computing a histogram of the required subtraction percentages for the three color channels of all pixels and selecting the percentage of the highest bin. Although we compensated the over- and under-subtracted areas with the OST-HMD, errors may remain.

Figure 11a visualizes the required subtraction as intensity. Figure 11b,c visualize the errors, computed as the difference between the resulting image and the target image. When the LSR is set to the highest required percentage, which corresponds to the bright area in Figure 11a, we obtain a contrast-enhanced image, as shown in Figure 11e, but errors appear in the green channel in the flower area. This is likely because these pixels require a very small value in the green channel, and the zero-input effect described in Section 3.3.1 prevented that channel from reaching zero. When a lower LSR is used, the result, with positive compensation by the OST-HMD and color calibration, still has fair contrast but loses some of the details compared with the result obtained with the highest LSR (the yellowish part on the leaves). This indicates that a better transmittance selection policy may reduce the number of error pixels.

By making it possible to calibrate and compensate for incoming light so that the image in the user’s FoV approaches a target image, the proposed technology can be combined with other CVD compensation methods, or even with other augmented reality applications using OST-HMDs, where arriving light control is necessary.