Abstract

Natural language processing (NLP) is an effective tool for generating structured information from unstructured data, which is commonly found in clinical trial texts. This interdisciplinary research has gradually grown into a flourishing field with a substantial body of accumulated scientific output. In this study, bibliographical data collected from the Web of Science, PubMed, and Scopus databases from 1999 to 2018 were investigated using three prominent methods: performance analysis, science mapping, and, in particular, an automatic text analysis approach named structural topic modeling. Topical trend visualization and trend test analysis were further employed to quantify the effects of the year of publication on topic proportions. Topical distributions across prolific countries/regions and institutions were also visualized and compared. In addition, scientific collaborations between countries/regions, institutions, and authors were explored using social network analysis. The findings are essential for facilitating the development of NLP-enhanced clinical trial text processing, boosting scientific and technological NLP-enhanced clinical trial research, and facilitating inter-country/region and inter-institution collaborations.

1. Introduction

The adoption of natural language processing (NLP) techniques for clinical information processing has been popular for decades. Previous reviews on the NLP-enhanced clinical trial research commonly adopted manual methods like systematic literature reviews, the results of which might fail to be generalized to the whole community. Thus, it is essential to offer a thorough overview of the NLP-enhanced clinical trial studies in order to inform scholars, policymakers, and clinicians in conducting relevant research and practical activities. To review studies on a large scale, we employed bibliometrics, science mapping, and structural topic modeling (STM) [1,2] to provide a comprehensive investigation of the academic literature worldwide concerning the NLP-enhanced clinical trials research. The remainder of this section is structured as follows. Section 1.1 presents an introduction to the NLP-enhanced clinical trial research. An introduction to bibliometrics, science mapping, and topic modeling is presented in Section 1.2. Section 1.3 depicts the research aims of this study.

1.1. Introduction to the NLP-Enhanced Clinical Trials Research

Clinical trial texts contain valuable information for medical and clinical research and can help address problems regarding the quality of healthcare and clinical decision support. However, a significant portion of essential clinical trial information is documented and stored in unstructured texts, making it challenging to extract useful information effectively and precisely. Furthermore, manually converting such unstructured texts into structured ones can be time-consuming and error-prone, and may fail to capture multifarious information [3]. Thus, extracting appropriate features from clinical narratives helps unlock hidden knowledge and enables advanced reasoning tasks, for example, diagnosis explanation, disease progression modeling, and treatment effectiveness analytics [4]. At least two motivations for converting unstructured texts into structured ones are worth highlighting [5]: reducing the time spent on manual screening, and enhancing the reuse of such data so that large-scale texts can be processed automatically.

As unstructured clinical trial data are produced rapidly and on a large scale, there is a great need to mine such textual data and to generate structured representations automatically using NLP techniques. NLP concerns natural language human–machine interaction, particularly enabling machines to process and analyze large-scale natural language data. Applications of NLP have long attracted wide attention among scholars (e.g., [6,7,8]). As a computer-driven approach to facilitating human language processing [9], NLP has been adopted for clinical information processing for decades, and several effective applications are available, for example, MedLEE [10]. However, clinical NLP, that is, NLP techniques developed and adopted to support healthcare by unlocking clinical information hidden within clinical narratives, is still an emerging technique [11]. Although medical documents in the form of plain text contain essential information about patients, they are seldom available for structured queries. In addition to structured data, structured information extracted from natural language documents such as electronic health records (EHRs) [12,13,14] is expected to facilitate clinical trial research [15]. EHRs offer opportunities to improve patient healthcare, incorporate performance measurement into clinical practice, and assist clinical research [16]. EHR systems also offer significant potential for supporting quality improvement and clinical research. Furthermore, to take full advantage of electronic medical records (EMRs), scalable methods such as NLP are needed to transform unstructured text into structured data that can be used for evaluation or analysis [17]. NLP has therefore increasingly become a valuable and effective technique for processing clinical trial texts, most of which are unstructured and large-scale.

Currently, several reviews on the NLP-enhanced clinical trial research and its relevant topics are available, as summarized in Table 1. For example, based on 43 studies concerning the NLP of clinical notes on chronic diseases, Sheikhalishahi et al. (2019) [18] conducted a systematic review to explore the development and uptake of NLP approaches adopted to deal with textual clinical notes data on chronic diseases. The existing relevant reviews were conducted mainly by systematic reviews, suffering from the following disadvantages. First, as most studies used manual coding methods, the numbers of reviewed articles were relatively limited. Second, manual analyses might be error-prone as they involved a tedious and laborious coding process. In addition, the results of a small sample of the NLP-enhanced clinical trial studies could not be generalized to the whole community. To that end, this study analyzed all relevant studies regarding the NLP-enhanced clinical trial research to provide a thorough and objective understanding of the entire community by using bibliometrics and topic modeling.

Table 1.

Recent reviews on the natural language processing (NLP)-enhanced clinical trial research and its relevant topics.

1.2. Introduction to Bibliometrics, Science Mapping, and Topic Modeling

Bibliometrics involves a set of approaches or techniques for measuring research outputs using scientific publications indexed in large bibliographic databases [25]. With the steadily increasing number of scientific papers published across research fields, bibliometrics has grown into a valuable method for assessing scholarly quality and productivity. Its contributions to scientific progress include, for example, enabling the assessment of progress, recognizing groups of relevant domain scientists, recognizing reliable publication sources, serving as an academic foundation for the evaluation of new developments, assisting the implementation of specific intelligent information systems, and proposing novel indexes to evaluate scientific output [26,27,28,29,30].

In the bibliometric analysis, performance analysis and science mapping are two major approaches for exploring a specific research area [31,32]. Performance analysis concerns the assessment of the impact of academic outputs of researchers, institutions, or countries. Science mapping focuses on displaying the conceptual structures of particular research fields and their evolutions across time using network analysis, for example, co-words, co-citations, or co-authorship [30]. Performance bibliometric analysis concerns the measurement of scientific outputs using quality and quantity indicators like article count, citation count, and Hirsch index (H-index). Science mapping analysis serves as a spatial representation of the correlation of different areas. It aims to monitor an academic area and to delimit research areas for the determination of its structure and evolution [33].

In addition, topic modeling estimates a list of topics that are hidden within a collection of textual data [34]. STM is a recently proposed unsupervised machine learning approach for analyzing text corpora on a large scale [1,2,35,36]. Topic models use machine learning algorithms and a form of dimension reduction to extract latent topics from data. They identify the co-occurrence of terms and sort the terms into different latent topics. Each topic comprises terms, each with an assigned probability. Additionally, topic models assume that each document comprises different latent topics, each with an assigned probability in that document [37].
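The two-level assumption described above (topics as probability distributions over terms, documents as probability distributions over topics) can be illustrated with a toy calculation. The sketch below is only an illustration: the topic names, terms, and probabilities are invented and do not come from the fitted STM model.

```python
# Toy illustration of the mixture assumption behind topic models.
# All topic names, terms, and probabilities are invented for illustration.

# Each topic is a probability distribution over terms.
topic_term = {
    "eligibility": {"criteria": 0.5, "patient": 0.3, "extract": 0.2},
    "retrieval": {"search": 0.6, "query": 0.3, "patient": 0.1},
}

# Each document is a probability distribution over topics.
doc_topic = {"eligibility": 0.7, "retrieval": 0.3}


def term_probability(term, doc_topic, topic_term):
    """P(term | doc) = sum over topics of P(topic | doc) * P(term | topic)."""
    return sum(
        weight * topic_term[topic].get(term, 0.0)
        for topic, weight in doc_topic.items()
    )


# "patient" appears in both topics, so both contribute to its probability.
p_patient = term_probability("patient", doc_topic, topic_term)
```

A term shared by several topics, such as "patient" here, receives probability mass from each of them in proportion to how strongly the document expresses those topics.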

Bibliometric analysis and topic modeling have been widely adopted to present a general overview of various research fields (e.g., [38,39,40,41]). Some representative works include bibliometric analyses of, e.g., text mining-enhanced medical research [42], research that utilizes AI techniques in processing EHR data [43], and research on event detection using social media data [44].

1.3. Research Aims

This study aimed to provide a comprehensive overview of the NLP-enhanced clinical trial research, a purpose for which the bibliometric approach is well suited. Presently, no bibliometric study of the scientific outputs in this interdisciplinary research area is available. Thus, adopting science mapping techniques to evaluate the research area is both needed and important. To that end, this study conducted the first in-depth science mapping analysis, as well as an STM analysis, of the NLP-enhanced clinical trial research, especially to disclose its structure and evolution. Specifically, based on the full bibliographic information of 451 articles regarding the NLP-enhanced clinical trial research retrieved from Web of Science (WoS), PubMed, and Scopus, this study conducted analyses in seven aspects. First, visualize the annual trend of the NLP-enhanced clinical trial articles. Second, identify the leading publication sources, countries/regions, institutions, and authors for the publication of the NLP-enhanced clinical trial articles. Third, visualize the scientific collaborations among leading countries/regions, institutions, and authors in the NLP-enhanced clinical trial research. Fourth, uncover the major research topics covered within the NLP-enhanced clinical trial research articles. Fifth, explore the evolution of the major research topics. Sixth, visualize the topical distributions across prolific countries/regions and institutions. Seventh, identify the potential inter-topic directions in the NLP-enhanced clinical trial research.

2. Literature Review

Literature reviews fall into two major types: systematic reviews [45] and bibliometric analyses. Compared to a systematic review, bibliometric analysis is more suitable for evaluating larger-scale literature data and has thus been widely adopted in various research areas [42,44,46,47]. For example, based on 62 articles collected from the WoS and using content analysis, Arici et al. [48] presented the research trends and bibliometric results of the literature regarding the adoption of augmented reality in science education. They identified several frequently used keywords, including “science education,” “science learning,” and “e-learning,” particularly mobile learning, which has been of great concern in more recent literature. They also found that “education,” “knowledge,” “science education,” “experiment,” and “effectiveness” were the most frequently used terms in the abstracts, and that authors showed significant interest in students’ knowledge and achievement. Using the publications of Computers & Industrial Engineering, one of the top academic journals in the field of the supply chain, Cancino et al. [49] identified predominant trends in the field by analyzing the most important publications, keywords, authors, institutions, and countries.

STM has also been applied to investigate research topics within a particular research domain. For example, based on the abstracts of research articles on community forestry published during 1990–2017, Clare and Hickey [50] used bibliometric analysis and an STM method to explore trends in geographic foci and research topics. Four main research issues and 20 topics were identified. The distributions of the annual topic proportions indicated that the research focus had evolved from policy analysis to regional carbon sequestration. Based on a collection of open-ended comments from a nationwide representative sample of 16,464 drivers collected in 2016 and 2017, Lee and Kolodge [51] adopted STM to identify 13 research themes, for example, “Tested for a long time” and “Hacking & glitches.” Using STM, Bennett et al. [52] analyzed 177 responses of the mentally disabled regarding their willingness to use autonomous vehicles. In this way, they identified potential obstacles to the adoption of autonomous vehicles as perceived by the mentally disabled. Their study provided suggestions to the government and manufacturers for planning public information campaigns to promote the acceptance of autonomous vehicles by the mentally disabled.

Focusing on NLP and clinical trials research, a few bibliometric studies are available. From the NLP perspective, bibliometric analyses of NLP [53], NLP-empowered mobile computing research [54], NLP-enhanced medical research [47], and computational linguistics [55] can be found. Another aspect is research related to clinical trials or clinical studies. Representative works include bibliometric analyses of, e.g., the influence measurement of clinical trials citations [56], clinical studies on Tai Chi [57], controlled clinical research concerning traditional Chinese medicine (TCM) in cancer care [58], clinical research articles on sepsis [59], and radiologic randomized controlled trials (RCTs) [60]. For example, given the large volume of clinical studies concerning the safety and advantages of Tai Chi mind-body exercise, Yang et al. [57] systematically synthesized the findings of clinical studies concerning Tai Chi for healthcare. Based on controlled clinical articles on TCM treatments for cancers collected from four major Chinese electronic databases, Li et al. [58] conducted a bibliometric analysis to assess the reporting quality and summarize the evidence presented in the studied articles. Rosas [61] used bibliometric analysis to assess the presence, performance, and impact of scientific articles by the National Institutes of Health/National Institute of Allergy and Infectious Diseases clinical trials networks. Specifically, they evaluated the articles in three aspects: (1) assessing the impact of these articles according to journal impact measures; (2) assessing the performance of articles using bibliometric indicators; and (3) assessing the influence of articles by comparing them to similar articles. Tao et al. [59] used bibliometrics to systematically review the development of clinical studies on sepsis. They found that studies contributed by scholars from the USA and published in impactful journals were the most likely to be cited.
Hong et al. [60] conducted a bibliometric review of studies on radiologic RCTs to analyze their characteristics and qualities. Based on their analysis, it was found that the quantity and quality of radiologic RCTs had dramatically improved, while the quality of methodologies remained suboptimal. Kim et al. [62] assessed the characteristics and quality of acupuncture RCTs. With an increasing number of RCTs investigating the therapeutic value of yoga interventions, Cramer et al. [63] adopted bibliometrics to present a thorough overview of the characteristics of relevant randomized yoga trials. Cramer et al. [64] aimed to examine whether positive conclusions appeared more in yoga RCTs when they were carried out in India. According to their study, yoga RCTs performed in India were 25 times more likely to reach positive conclusions as compared to those performed somewhere else. In this sense, Indian trials ought to be handled cautiously while assessing yoga’s effectiveness for patients from other regions. Bayram et al. [65] identified and characterized the most highly cited papers regarding ultrasonography evaluations taking place in the emergency departments. According to their analysis, the most frequently cited papers concerning the adoption of ultrasonography involved various topics, and almost half of the authors of these papers were experts in emergency medicine.

Several studies discuss issues regarding NLP-enhanced clinical trial research. For example, by including all the application or methodological articles leveraging texts to support healthcare and meet customers’ needs, Demner-Fushman and Elhadad [11] presented a systematic review of articles concerning the application of NLP to clinical and consumer-produced textual data. Their study indicated that although clinical NLP continued to facilitate practical applications, with an increasing number of NLP approaches being adopted to process large-scale live health information, there was still a need to enable the adoption of NLP in clinical applications available in daily life. Zeng et al. [66] reviewed studies regarding NLP-enhanced, EHR-driven computational phenotyping, covering the most advanced NLP techniques for the task. Their review yielded several implications. First, keyword searches, rule-based approaches, and supervised NLP techniques based on machine learning were the most commonly adopted approaches. Second, well-defined keyword searches and rule-based systems usually achieved high accuracy. Third, classifications based on supervised methods could achieve higher accuracy and were easier to conduct than those based on unsupervised methods. Fourth, advances in machine learning approaches, for example, deep learning, had grown popular. Finally, there was an increasing interest in extracting relations between medical concepts.

3. Materials and Methods

3.1. Data Collection and Preprocessing

3.1.1. Data Collection

Data for bibliometric analysis concerning the NLP-enhanced clinical trial research were drawn from the WoS, PubMed, and Scopus databases. WoS is a quality-assured database for evaluating research frontiers of particular research fields [67,68]. We also included relevant papers from PubMed as it is the most comprehensive medical literature database worldwide. The PubMed database is provided by the National Library of Medicine, covering the most significant number of publications on life sciences and biomedical research. In addition, we also included Scopus, which is the most reliable [69] and comprehensive [70] academic database of peer-reviewed literature, including scientific journals, books, and conference proceedings.

In the WoS, we adopted “TS” as the search field, as suggested by Gao et al. [71]. Papers indexed in the Science Citation Index Expanded, Social Sciences Citation Index, Arts & Humanities Citation Index, Conference Proceedings Citation Index - Science, and Conference Proceedings Citation Index - Social Science & Humanities were considered. In PubMed, [Title/Abstract] was used as the search field [72]. The literature types included articles and proceedings papers written in English: research articles usually contain more original findings, and many NLP research papers are published in conference proceedings.

One critical procedure in data retrieval was to identify a set of keywords related to NLP and clinical trials. The most challenging task was to maximize the identification of relevant studies. For example, publications specific to NLP research may not mention terms like “natural language processing;” thus, a query for retrieving NLP research literature should include field-specific terms like “semantic analysis,” which was relevant to NLP. We followed steps proposed by Hassan et al. [73] to attain the keywords list for NLP. Step One: A seed list (e.g., “natural language processing,” “semantic analysis,” “bag of words,” “word sense disambiguation”) was provided by NLP domain experts based on their expertise and understanding of NLP. Step Two: The seed keywords were adopted to retrieve papers in the WoS. Step Three: We identified the author-defined keywords contained in those indexed as highly cited papers among the retrieved papers, and then presented them to NLP domain experts to exclude irrelevant ones. The relevant ones were added to the seed list. Similarly, we used the same steps to attain a keywords list for clinical trials with seed keywords, including “clinical trial*,” “randomized controlled trial*,” and “clinical research trial*.” The retrieval was conducted on April 10, 2019. We chose 1999–2018 as the study period. The final keyword queries for data retrieval are shown in Table A1 and Table A2 in the Appendix A.

Using the queries, 1595, 512, and 1580 papers were retrieved. The 3687 retrieved papers in total were then filtered by two domain experts (A and B), both with comprehensive knowledge and a deep understanding of the interdisciplinary area, to guarantee a close relation to the research target. Expert A reviewed all the articles based on the criteria listed in Table 2 and finalized 451 papers for further data analysis. Expert B conducted the same filtering process for only 100 randomly selected papers. The consistency rate between the decisions of the two experts was above 95%. Thus, the 451 articles were used as the final dataset, including 194 from the WoS, 25 from PubMed, and 232 from Scopus. As the WoS provides the research domain of each paper, we analyzed the research domains of these papers, as inspired by Kowsari et al. [74]; the result is shown in Table 3. Because one paper might belong to more than one domain, we included all of its domains in our analysis. The result shows that Computer Sciences was the top domain in the publication of NLP-enhanced clinical trial research, followed by Medical Informatics, Health Care Sciences & Services, and Mathematical & Computational Biology.
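As an illustration of how a consistency rate between two reviewers can be computed, the following minimal sketch takes the share of papers on which both made the same include/exclude decision. The decision lists are hypothetical, and the function name `agreement_rate` is introduced here for illustration only; the exact procedure used by the two experts is not specified beyond the reported rate.

```python
def agreement_rate(decisions_a, decisions_b):
    """Share of papers on which two reviewers made the same include/exclude call."""
    if len(decisions_a) != len(decisions_b) or not decisions_a:
        raise ValueError("need two non-empty decision lists of equal length")
    matches = sum(a == b for a, b in zip(decisions_a, decisions_b))
    return matches / len(decisions_a)


# Hypothetical decisions on a 100-paper sample (True = include).
expert_a = [True] * 30 + [False] * 70
expert_b = [True] * 28 + [False] * 72  # differs from expert_a on two papers
rate = agreement_rate(expert_a, expert_b)
```

Simple percentage agreement does not correct for agreement expected by chance; a chance-corrected statistic could be substituted if stricter reporting is required.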

Table 2.

Examples of inclusion and exclusion criteria for the manual verification.

Table 3.

Top research domains for the 194 papers from the Web of Science (WoS).

3.1.2. Data Pre-Processing

Full bibliographic information of the 451 articles was downloaded as comma-separated values (CSV) or plain text (TXT) files. These files contained bibliographic details such as authors’ names, affiliations, publication year, publication source, title, abstract, and keywords. We then performed feature extraction and pre-processing. Key features of each paper, namely title, abstract, keywords, publication source, publication year, and author address, were extracted using a Python program and stored in Microsoft Excel for further pre-processing, as follows. First, all the papers were checked to make sure that all features were included in the Excel file. In particular, Python was used to check the completeness of the authors’ address information automatically; where information was incomplete, it was supplemented manually based on the original text of the paper. Second, Python was used to extract authors, affiliations, and countries/regions from the authors’ addresses and to transform them into analyzable forms. An example of the authors’ address transformation is shown in Table 4. Third, normalization was carried out. For example, for the analysis of prolific countries/regions, articles originating from England, Scotland, Wales, and Northern Ireland were combined as the UK, while articles originating from Hong Kong, Macau, and Taiwan were counted individually. For the analysis of prolific affiliations, different expressions of the same affiliation were unified. For example, Educ Univ Hong Kong, Hong Kong Inst Educ, The Education University of Hong Kong, Hong Kong Institute of Education, and The Hong Kong Institute of Education were unified as the Education University of Hong Kong.

Table 4.

Example of the authors’ addresses transformation.
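The normalization rules described in this subsection can be sketched as simple lookups. The following is a minimal illustration, not the program actually used: the function names and the alias table are assumptions, and only the examples mentioned in the text are encoded.

```python
# Constituent countries that are merged into "UK" for country/region counts.
UK_PARTS = {"England", "Scotland", "Wales", "Northern Ireland"}

# Illustrative alias table: lower-cased variant -> unified affiliation name.
CANONICAL = "Education University of Hong Kong"
AFFILIATION_ALIASES = {
    "educ univ hong kong": CANONICAL,
    "hong kong inst educ": CANONICAL,
    "the education university of hong kong": CANONICAL,
    "hong kong institute of education": CANONICAL,
    "the hong kong institute of education": CANONICAL,
}


def normalize_country(country):
    """Merge UK constituent countries; keep every other name unchanged."""
    country = country.strip()
    return "UK" if country in UK_PARTS else country


def normalize_affiliation(name):
    """Map known spelling variants of an affiliation to one unified name."""
    key = " ".join(name.lower().split())  # lower-case and collapse whitespace
    return AFFILIATION_ALIASES.get(key, name.strip())
```

Because Hong Kong, Macau, and Taiwan are counted individually, they are deliberately absent from any merge rule and pass through `normalize_country` unchanged.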

For science mapping analysis, author-defined keywords, and the KeyWords Plus, PubMed MeSH, or Scopus Index keywords, were adopted. Keywords pre-processing was implemented to enhance analysis efficiency. Firstly, keywords were manually assigned to papers without keyword information, according to paper contents, especially titles. For instance, keywords “Ontology,” “data management system,” “post-genomic clinical trial,” “Grid infrastructure,” and “cancer research” were extracted from the title “Ontology based data management systems for post-genomic clinical trials within a European Grid infrastructure for cancer research.” Then, all the keywords were expressed in lower case and singular form. Keywords with similar concept meanings were grouped. For instance, “randomized controlled trial,” “randomised controlled trial,” “randomized controlled-trial,” and “randomised controlled-trial” were grouped with the united expression “randomized controlled trial.” Finally, the data were divided into three consecutive periods, 1999–2008 (91 articles), 2009–2013 (149 articles), and 2014–2018 (211 articles), for thematic evolution analysis.
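The keyword unification and period assignment described above can be sketched as follows. This is a minimal illustration under stated assumptions: the variant table contains only the example given in the text, singularization is omitted because it needs a proper morphological tool, and the function names are hypothetical.

```python
# Spelling variants mapped to one unified expression (example from the text).
VARIANTS = {
    "randomised controlled trial": "randomized controlled trial",
    "randomized controlled-trial": "randomized controlled trial",
    "randomised controlled-trial": "randomized controlled trial",
}

# The three consecutive periods used for thematic evolution analysis.
PERIODS = [(1999, 2008), (2009, 2013), (2014, 2018)]


def normalize_keyword(keyword):
    """Lower-case a keyword, collapse whitespace, and unify known variants."""
    key = " ".join(keyword.lower().split())
    return VARIANTS.get(key, key)


def period_of(year):
    """Return the (start, end) period that contains a publication year."""
    for start, end in PERIODS:
        if start <= year <= end:
            return (start, end)
    raise ValueError(f"year {year} is outside the study period")
```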

Before conducting the STM analysis, we conducted a pre-processing procedure to facilitate the analysis. First, abstracts of the 451 selected articles were collected, for which terms were extracted by a self-developed NLP program. Second, terms with similar meanings were unified (e.g., ‘modeling’ and ‘modelling;’ ‘personalize’ and ‘personalise’). Third, stop terms were excluded (e.g., “of,” “you,” “a,” and “the”). Besides, terms containing little or no meaningful information were excluded (e.g., “also,” “although,” “among,” “another,” “demonstrate,” “furthermore,” “however,” “include,” “possible,” and “towards”). In addition, term frequency-inverse document frequencies (TF-IDF), as one of the common techniques of feature extractions [75], was further adopted to filter unimportant terms. We followed the suggestion by Jiang et al. [76] to include terms with TF-IDF values more than or equal to 0.05.
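The TF-IDF filtering step can be sketched as below. The exact TF-IDF variant and the way a single score per term was derived are not specified in this section, so the sketch makes explicit assumptions: term frequency is the within-document relative frequency, inverse document frequency is log(N/df), and a term is kept if its highest TF-IDF value across documents reaches the threshold.

```python
import math
from collections import Counter


def tfidf_scores(docs):
    """Return {term: highest TF-IDF over all documents} for tokenized docs.

    Assumed variant: tf = count / document length, idf = log(N / df).
    """
    n_docs = len(docs)
    doc_freq = Counter(term for doc in docs for term in set(doc))
    best = {}
    for doc in docs:
        for term, count in Counter(doc).items():
            tf = count / len(doc)
            idf = math.log(n_docs / doc_freq[term])
            best[term] = max(best.get(term, 0.0), tf * idf)
    return best


def filter_terms(docs, threshold=0.05):
    """Keep terms whose score is greater than or equal to the threshold."""
    return {term for term, score in tfidf_scores(docs).items() if score >= threshold}


# Hypothetical tokenized abstracts.
docs = [
    ["trial", "nlp", "trial"],
    ["trial", "patient"],
    ["trial", "ontology"],
]
kept = filter_terms(docs)
```

Under this variant, a term that occurs in every document (here "trial") has an inverse document frequency of zero and is therefore filtered out, which is the intended effect of removing uninformative terms.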

3.2. Performance Bibliometric Analysis

Several bibliometric indicators were used, including paper counts, citation counts, paper counts within citation thresholds, and the Hirsch index (H-index) [77,78]. Given that PubMed does not provide citation counts for papers, for ease of comparison we used Google Scholar citation counts as of 26 April 2019 for each of the analyzed papers.
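For reference, the H-index of a set of papers is the largest number h such that h of the papers have at least h citations each. A minimal sketch of this computation is given below; the function name is illustrative.

```python
def h_index(citations):
    """Largest h such that h papers have at least h citations each."""
    ranked = sorted(citations, reverse=True)
    h = 0
    for rank, cites in enumerate(ranked, start=1):
        if cites >= rank:
            h = rank  # the top `rank` papers all have at least `rank` citations
        else:
            break
    return h
```

The same function applies to any unit of analysis (a publication source, country/region, institution, or author) once the citation counts of its papers are collected.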

The bibliometric performance indicators can help identify prolific and influential publication sources, authors, and institutions, as well as provide data on geographic distribution. Meanwhile, the indicators can also be used to enrich the science mapping analysis workflow by measuring the comparative importance of different research themes, thus helping to establish the most leading, productive, and impactful research areas [30]. The quantitative indicators (e.g., article count) can be adopted to evaluate how productive the detected themes are, while the qualitative indicators (e.g., citation count and H-index) can evaluate their quality.

3.3. Scientific Collaboration Networks Analysis

Social network analysis was used to explore the scientific collaborations among authors, institutions, and countries/regions in the NLP-enhanced clinical trial research field. It has been increasingly important and widely adopted in various research fields [79], for example, psychology, business, and education. Taking the co-author network as an example, with this type of network, we are able to discover different features, for instance, author communities [25].

In this paper, we used Gephi (https://gephi.org/) to generate the collaboration networks, in which nodes indicated authors, institutions, or countries/regions, and lines indicated collaboration relations. Line width denoted the strength of a collaboration relation, while node size indicated productivity, namely, the paper count. Node colors additionally encoded the country/region of authors and institutions, and the continent of countries/regions.
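The node sizes and line widths described above correspond to two simple counts, which can be derived from per-paper lists of collaborating entities before the network is drawn. The sketch below is an assumption about how such input could be prepared, not a description of the actual workflow; the function name and sample data are hypothetical.

```python
from collections import Counter
from itertools import combinations


def build_collaboration_network(papers):
    """Count papers per entity (node size) and per entity pair (line width).

    `papers` is a list in which each item lists the entities (authors,
    institutions, or countries/regions) appearing on one paper.
    """
    node_size = Counter()
    edge_weight = Counter()
    for entities in papers:
        unique = sorted(set(entities))  # count each entity once per paper
        node_size.update(unique)
        edge_weight.update(combinations(unique, 2))
    return node_size, edge_weight


# Hypothetical country/region lists for three papers.
papers = [["USA", "UK"], ["USA", "Germany", "UK"], ["USA"]]
node_size, edge_weight = build_collaboration_network(papers)
```

Sorting the entities within each paper gives every pair a single canonical key, so that a collaboration between two entities is counted on one edge regardless of the order in which they are listed.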

3.4. Science Mapping Analysis

As a spatial illustration of relations among disciplines and papers [80], science mapping has been broadly applied in various research areas to uncover hidden research topics. This study aimed to trace the evolution of research topics in order to discover newly emerging research themes and anticipate their development. We used SciMAT (http://sci2s.ugr.es/scimat), which integrates most of the advantages of the available science mapping tools [81]. We followed the analysis procedure defined by Cobo et al. [82], in which co-word analysis is adopted to explore how research areas evolve across consecutive time periods [83]. The approach comprised three stages: detection of research themes, layout of research themes, and thematic evolution and performance analysis.
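Co-word analysis starts from keyword co-occurrence counts, which are usually normalized before themes are detected. A commonly used normalization is the equivalence index, e_ij = c_ij² / (c_i · c_j), where c_ij is the number of papers containing both keywords and c_i and c_j are the numbers of papers containing each keyword. The sketch below illustrates this measure on hypothetical data; whether this exact setting was used in the present analysis is an assumption, as the section does not state the similarity measure.

```python
from collections import Counter
from itertools import combinations


def equivalence_index(keyword_sets):
    """Return {(kw_i, kw_j): c_ij^2 / (c_i * c_j)} for co-occurring keywords."""
    occurrences = Counter()
    co_occurrences = Counter()
    for keywords in keyword_sets:
        unique = sorted(set(keywords))
        occurrences.update(unique)
        co_occurrences.update(combinations(unique, 2))
    return {
        pair: count ** 2 / (occurrences[pair[0]] * occurrences[pair[1]])
        for pair, count in co_occurrences.items()
    }


# Hypothetical keyword lists for three papers.
keyword_sets = [["nlp", "ehr"], ["nlp", "ehr"], ["nlp", "ontology"]]
similarity = equivalence_index(keyword_sets)
```

The index ranges from 0 to 1 and equals 1 only when two keywords always appear together, which makes it suitable for grouping strongly linked keywords into themes.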

3.5. Structural Topic Modeling Analysis

The STM modeling process was as follows. First, 21 models with topic numbers from five to 25 were fitted. Second, the domain experts evaluated the fit of the 21 models based on their domain knowledge and by examining representative terms and articles for each topic. A 16-topic model was finally selected. Third, based on the results of the 16-topic STM model, we assigned a label to each topic according to its representative terms and articles. We then applied the Mann-Kendall trend test to examine the development trend of each topic throughout the whole period. Furthermore, by incorporating metadata such as the country/region, institution, and publication date of each article, we calculated and visualized the topical distributions across prolific countries/regions and institutions, as well as topic proportion distributions by year. In addition, we investigated the correlation between topics using a hierarchical clustering method.
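The Mann-Kendall trend test mentioned above can be sketched for a single series of annual topic proportions. The following is a minimal implementation of the standard test with tie correction and a normal approximation for the two-sided p-value; the function name and the sample series are hypothetical, and the software actually used for the test is not stated in this section.

```python
import math
from collections import Counter


def mann_kendall(values):
    """Return (S, Z, two-sided p) of the Mann-Kendall trend test."""
    n = len(values)
    if n < 3:
        raise ValueError("at least three observations are required")
    # S sums the signs of all later-minus-earlier differences.
    s = 0
    for i in range(n - 1):
        for j in range(i + 1, n):
            diff = values[j] - values[i]
            s += (diff > 0) - (diff < 0)
    # Variance of S with a correction term for groups of tied values.
    ties = sum(t * (t - 1) * (2 * t + 5) for t in Counter(values).values())
    var_s = (n * (n - 1) * (2 * n + 5) - ties) / 18
    # Continuity-corrected standard normal statistic.
    if s > 0:
        z = (s - 1) / math.sqrt(var_s)
    elif s < 0:
        z = (s + 1) / math.sqrt(var_s)
    else:
        z = 0.0
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided p-value
    return s, z, p


# Hypothetical annual proportions of one topic.
s, z, p = mann_kendall([0.02, 0.03, 0.05, 0.06, 0.08, 0.09])
```

A positive Z with a small p-value indicates an increasing trend in the topic proportion, and a negative Z indicates a decreasing one.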

4. Performance and Collaboration Analyses Results

The bibliometric analysis results of articles in the research area of NLP techniques for clinical trial text processing are displayed in this section, with article trend analysis, analysis of publication sources, countries/regions analysis, institutions, and authors included. In addition, results of scientific collaborations analyses and thematic evolutions analyses are also presented.

4.1. Analysis of Trend in Articles

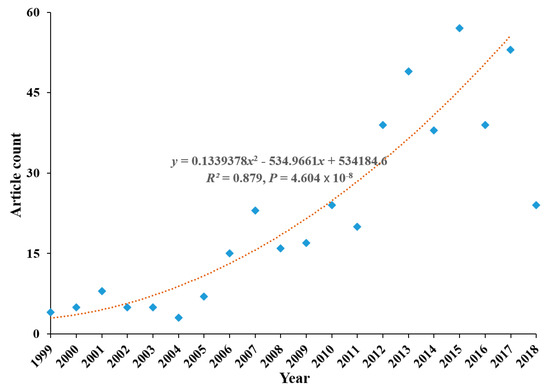

The annual distribution of articles is displayed in Figure 1. The annual number of articles had increased from four in 1999 to 53 in 2017.

Figure 1.

Analysis of trends in articles.

Until 2005, the annual number was around seven, which is quite low. From 2006 to 2011, it roughly tripled to around 20, and from 2012 to 2017 it was around 45. Thus, the research area received growing interest and attention from academia over these years. However, the number of collected articles published in 2018 dropped suddenly to 24, which might partially result from the time lag before academic papers are indexed by academic databases. It is therefore quite possible that some articles published in 2018 had not yet been indexed in the databases at the time of retrieval.

Polynomial regression analysis was further conducted to fit the article trend with the year as the independent variable. To obtain a better fit, we used the period 1999–2017 for the regression. The fitted polynomial regression curve, with a positive coefficient of x² (R² = 0.879), demonstrated growing interest and enthusiasm for the research topic.
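To make the regression step concrete, the sketch below fits a second-degree polynomial by ordinary least squares and reports R². It assumes a quadratic model, which the reference to a coefficient of x² suggests but does not confirm. The annual counts are hypothetical placeholders, with only the endpoints (four in 1999, 53 in 2017) taken from the text, so the printed values will not reproduce the reported R² of 0.879.

```python
def fit_quadratic(xs, ys):
    """Least-squares fit of y = a*x^2 + b*x + c; returns (a, b, c)."""
    # Power sums that make up the 3x3 normal equations.
    s = [sum(x ** k for x in xs) for k in range(5)]
    t = [sum(y * x ** k for x, y in zip(xs, ys)) for k in range(3)]
    # Augmented matrix; the unknowns are ordered (c, b, a).
    m = [
        [s[0], s[1], s[2], t[0]],
        [s[1], s[2], s[3], t[1]],
        [s[2], s[3], s[4], t[2]],
    ]
    # Gauss-Jordan elimination with partial pivoting.
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for row in range(3):
            if row != col:
                factor = m[row][col] / m[col][col]
                m[row] = [rv - factor * cv for rv, cv in zip(m[row], m[col])]
    c, b, a = (m[i][3] / m[i][i] for i in range(3))
    return a, b, c

def r_squared(xs, ys, coeffs):
    """Coefficient of determination of the fitted quadratic."""
    a, b, c = coeffs
    mean_y = sum(ys) / len(ys)
    ss_res = sum((y - (a * x * x + b * x + c)) ** 2 for x, y in zip(xs, ys))
    ss_tot = sum((y - mean_y) ** 2 for y in ys)
    return 1 - ss_res / ss_tot

# Year offsets keep the numbers well conditioned: 0 = 1999, ..., 18 = 2017.
offsets = list(range(19))
# Hypothetical annual article counts (illustrative only).
counts = [4, 6, 7, 8, 7, 9, 8, 15, 18, 20, 22, 21, 24, 40, 43, 45, 44, 48, 53]
coeffs = fit_quadratic(offsets, counts)
print(coeffs, r_squared(offsets, counts, coeffs))
```

A positive leading coefficient `a` corresponds to an accelerating upward trend in annual output.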

4.2. Analysis of Publication Sources

Regarding sources, two or more articles on NLP techniques for clinical trial text processing were published in each of 48 professional sources. Table 5 lists the top thirteen publication sources, those with an H-index ≥4. Most authors published their studies in medical informatics sources such as the Journal of Biomedical Informatics (JBI), the Journal of the American Medical Informatics Association (JAMIA), and the American Medical Informatics Association (AMIA) Annual Symposium, which were the top three in the list. Together, the three contributed nearly 20% of the articles. JBI was the most influential source, with an H-index of 20, and also the most productive, with 37 articles. JAMIA, with an H-index of 17, ranked second. Although JAMIA had fewer articles than JBI, its average citations per article (ACP) was much higher than that of the other sources, including JBI, showing that its articles were widely recognized in the research area. Regarding the article distribution across the two periods, JBI had considerably more articles in the second period than any other source, reflecting the fast and flourishing development of NLP-enhanced clinical trial research in recent years.

Table 5.

Distribution of articles by publication source.
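The two indicators used to rank sources, the H-index and the average citations per article (ACP), can be computed from a list of per-article citation counts. The sketch below shows the standard definitions; the function names and the citation counts are hypothetical and do not correspond to any source in Table 5.

```python
def h_index(citations):
    """Largest h such that h articles have at least h citations each."""
    ranked = sorted(citations, reverse=True)
    h = 0
    for rank, cites in enumerate(ranked, start=1):
        if cites >= rank:
            h = rank
        else:
            break
    return h

def average_citations(citations):
    """Average citations per article (ACP); 0.0 for an empty list."""
    return sum(citations) / len(citations) if citations else 0.0

# Hypothetical citation counts for the articles of one source.
source_citations = [25, 8, 5, 3, 3]
print(h_index(source_citations), average_citations(source_citations))
```

The example illustrates why the two indicators can rank sources differently: a single highly cited article raises the ACP considerably but adds at most one to the H-index.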

4.3. Analyses of Country/Region, Institution, and Author

Forty-five countries/regions had contributed to the 451 articles. Twelve countries/regions had more than ten articles about the NLP-enhanced clinical trial research, while 14 countries/regions had contributed to only one article each. Table 6 depicts the top 20 ranked by H-index. The top four were the USA (42 H-index, 231 articles), Germany (17 H-index, 57 articles), the UK (16 H-index, 51 articles), and the Netherlands (14 H-index, 39 articles), which were also the top four ranked by article count.

Table 6.

Distribution of articles by country/region.

The table also includes the total citation count (TC), average citations per article (ACP), the numbers of articles with at least 100 and at least 50 citations (≥100 and ≥50, respectively), and the numbers of articles published during 1999–2008 (A1) and 2009–2018 (A2). The USA ranked first on almost all metrics (except ACP), reflecting the high quality of its articles as well as its dominant position in research on NLP techniques for clinical trial text processing. In addition, the USA was the main collaborator for Germany, the UK, Canada, Australia, and China. Germany was the main collaborator for the USA, the UK, the Netherlands, Spain, and Greece.

A total of 605 institutions participated in the 451 studied articles. Thirty-five institutions had five or more articles on NLP-enhanced clinical trial research, while 441 institutions had participated in only one article each. Table 7 shows the top 17 ranked by H-index, among which the top three were Stanford University, the National Institutes of Health, and the University of California, San Francisco. Of the 17 listed institutions, eight were from the USA, three from the Netherlands, three from the UK, one from Germany, one from Greece, and one from Spain. By article count, the top three were Stanford University (25 articles), Columbia University (21 articles), and the Technical University of Madrid (17 articles). By ACP, the top three were the University of California, San Francisco (75.86), the National Institutes of Health (59.50), and the University of Oxford (39.67). It is worth noting that Stanford University ranked first in the number of articles published during 1999–2008, with 14 articles, while most of the articles of Columbia University were published during the second period, reflecting the flourishing development of NLP-enhanced clinical trial research.

Table 7.

Distribution of articles by institutions.

A total of 1999 authors contributed to the 451 studied articles. Thirty-three authors had five or more articles on NLP-enhanced clinical trial research, while 87.44% of authors had participated in only one article. Table 8 depicts the top 19 ranked by H-index, where the top three were Ida Sim (H-index of ten) from the University of California, San Francisco, Manolis Tsiknakis (H-index of eight) from the Foundation for Research and Technology-Hellas, and Amar K. Das (H-index of eight) from Stanford University. Of the 19 listed authors, ten were from the USA, four from Spain, two from Germany, two from Greece, and one from the Netherlands. By article count, the top three were Anca Bucur (15 articles) from Philips Research, Chunhua Weng (13 articles) from Columbia University, and Manolis Tsiknakis (13 articles) from the Foundation for Research and Technology-Hellas. By ACP, the top three were Ida Sim (84.58) from the University of California, San Francisco, Simona Carini (42.67) from the University of California, San Francisco, and Mathias Brochhausen (39.50) from Saarland University. It is worth noting that Amar K. Das from Stanford University ranked first in the number of articles published during 1999–2008, with eight articles, while Anca Bucur from Philips Research had the most articles published during the second period.

Table 8.

Distribution of articles by authors.

4.4. Analysis of Scientific Collaboration

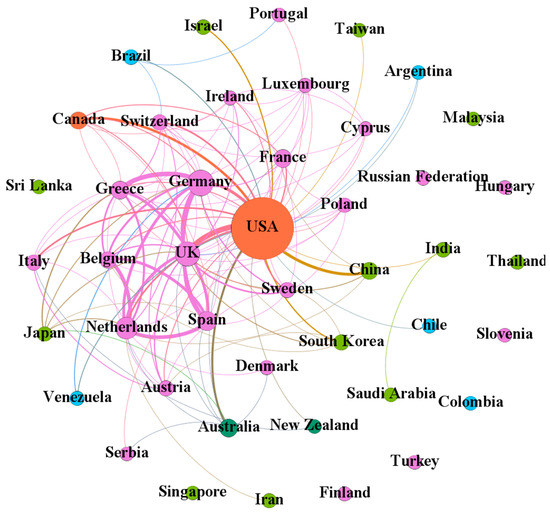

The scientific collaboration relations among the 44 countries/regions (http://home.eduhk.hk/~hxie/applied_sciences/countries.html) participating in research on NLP techniques for clinical trial text processing are illustrated in Figure 2, with 44 nodes and 124 links. Among the 44 countries/regions, 23 were from Europe (orange nodes), 12 from Asia (red nodes), five from South America (purple nodes), two from North America (blue nodes), and two from Oceania (green nodes). The collaborations among European countries/regions were close. The USA and the UK had the most collaborations (13 articles), followed by Germany-Greece (11 articles), the Netherlands-Belgium (nine articles), Germany-Spain (nine articles), and the Netherlands-Spain (nine articles). Of these 44 countries/regions, the USA was the most collaborative, with collaborations with 29 countries/regions. Thus, it was located in the center of the network. Other collaborative countries/regions included the UK, Germany, the Netherlands, and Greece, with collaborations with 29, 21, 18, 15, and 15 countries/regions, respectively.

Figure 2.

Collaboration network among countries/regions.
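The two quantities reported for the network, the number of co-authored articles per pair (link weight) and the number of distinct partners per country/region (node degree), can be derived from article-level affiliation lists. The following is a minimal sketch under that assumption; the function names and the four sample articles are hypothetical, and the study's own network construction may differ.

```python
from collections import Counter
from itertools import combinations

def collaboration_links(articles):
    """Count co-authored articles for each unordered pair of countries."""
    links = Counter()
    for countries in articles:
        # Each pair is counted once per article, whatever the author count.
        for pair in combinations(sorted(set(countries)), 2):
            links[pair] += 1
    return links

def collaborator_counts(links):
    """Number of distinct partners per country (node degree)."""
    partners = {}
    for a, b in links:
        partners.setdefault(a, set()).add(b)
        partners.setdefault(b, set()).add(a)
    return {country: len(p) for country, p in partners.items()}

# Hypothetical affiliation lists, one per article (illustrative only).
articles = [
    ["USA", "UK"],
    ["USA", "UK", "Germany"],
    ["Germany", "Greece"],
    ["USA"],
]
links = collaboration_links(articles)
degrees = collaborator_counts(links)
print(links.most_common(1), degrees)
```

The same functions apply unchanged to institution or author lists, which is how the networks in Figures 3 and 4 could be approximated.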

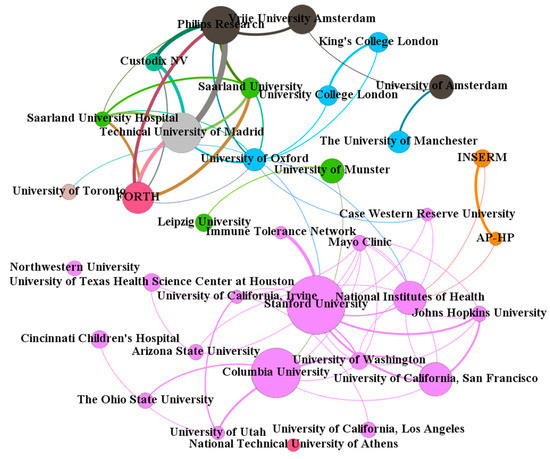

Figure 3 shows the scientific collaboration network among the 35 institutions (http://home.eduhk.hk/~hxie/applied_sciences/institutions.html) with an article count ≥5, comprising 35 nodes and 65 links. About 50% of them were from the USA (blue nodes), and the collaborations among them were very close. The Technical University of Madrid from Spain and Philips Research from the Netherlands were the closest collaborators (nine articles), followed by Philips Research from the Netherlands and Custodix NV from Belgium (six articles), as well as the Technical University of Madrid from Spain and the Foundation for Research and Technology-Hellas from Greece (six articles). Of these 35 institutions, the University of Oxford from the UK (11 collaborators) was the most collaborative, followed by the National Institutes of Health from the USA (10 collaborators) and Stanford University from the USA (nine collaborators).

Figure 3.

Collaboration network among institutions with ≥5 articles.

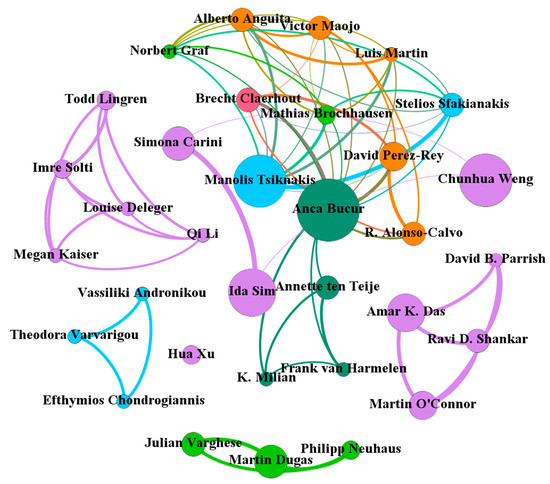

The scientific collaboration relations among the 33 authors (http://home.eduhk.hk/~hxie/applied_sciences/authors.html) with an article count ≥5 are displayed in Figure 4, with 33 nodes and 70 links. Among the 33 authors, 13 were from the USA (orange nodes). Interestingly, the collaborations among authors from the same country/region were close, with several clusters formed. For example, one cluster was formed by Philipp Neuhaus, Mathias Brochhausen, and Julian Varghese, all of whom were from Germany. Another was formed by Efthymios Chondrogiannis, Vassiliki Andronikou, and Theodora Varvarigou, all of whom were from Greece. In addition, three clusters formed by authors from the USA were clearly visible. Simona Carini and Ida Sim, both from the USA, had the most collaborations (nine articles). Of these 33 authors, Anca Bucur from the Netherlands was the most collaborative (13 collaborators), followed by Manolis Tsiknakis from Greece and Victor Maojo from Spain, each with nine collaborators.

Figure 4.

Collaboration network among authors with ≥5 articles.

5. Science Mapping Analysis Results

5.1. Analysis of the Content of the Papers Published

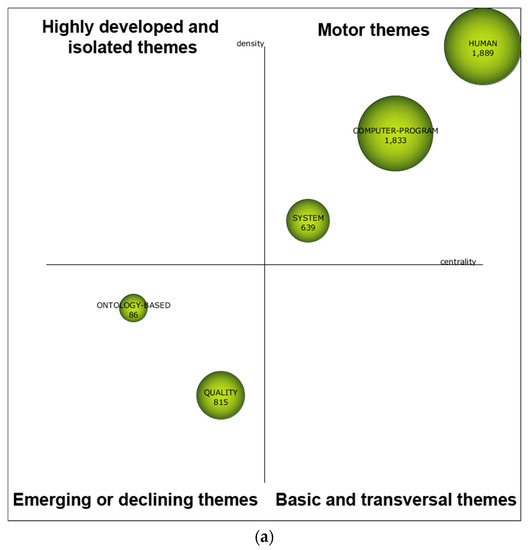

Strategic diagrams were drawn to explore the research themes of high importance in the NLP-enhanced clinical trial research field. In each diagram, the sphere size is proportional to the number of articles of each research theme, and the citation count of each theme is given in brackets.

During the first sub-period 1999–2008 (see Figure 5a), HUMAN, COMPUTER-PROGRAM, and SYSTEM were motor themes, meaning that these research themes were essential and well developed for structuring the NLP-enhanced clinical trial research field. The performance measures of each theme are given in Table 9, including article count, citation count, and H-index. Based on the three performance measures, HUMAN and COMPUTER-PROGRAM stood out: compared to the remaining themes, they had a greater impact, with more citations and higher H-indexes. For the second sub-period 2009–2013, the strategic diagram in Figure 5b highlighted five major themes: HUMAN, ARTIFICIAL-INTELLIGENCE, FACTUAL-DATABASE, MEDICAL-APPLICATION, and MEDICAL-RECORD. In Table 9, two of these themes, HUMAN and ARTIFICIAL-INTELLIGENCE, stood out with more citations and higher H-indexes. According to the strategic diagram in Figure 5c, during the last sub-period 2014–2018 the field became more diverse, with 12 research themes, seven of which were major ones (motor and basic themes): PROCEDURE, MACHINE-LEARNING, ELECTRONIC-HEALTH-RECORD, ACCURACY, FEMALE, COMPUTER-ASSISTED, and GENETICS. In Table 9, PROCEDURE stood out with the most citations. In addition, two themes were notable for their higher H-indexes: MACHINE-LEARNING and RANDOMIZED-CONTROLLED-TRIAL.

Figure 5.

Strategic diagram for the periods 1999–2008 (a), 2009–2013 (b), and 2014–2018 (c).
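In a strategic diagram, each theme is positioned by its centrality (strength of links to other themes) and density (internal cohesion), and the four quadrants correspond to motor, basic and transversal, highly developed and isolated, and emerging or declining themes. The sketch below classifies themes into these quadrants. Splitting at the median of each axis is an assumption made here for illustration; the theme names and scores are hypothetical and are not the values behind Figure 5.

```python
from statistics import median

def classify_themes(themes):
    """Map theme name -> quadrant label, given {name: (centrality, density)}."""
    # Assumption: quadrants are split at the median of each axis.
    c_mid = median(c for c, _ in themes.values())
    d_mid = median(d for _, d in themes.values())
    labels = {}
    for name, (centrality, density) in themes.items():
        if centrality >= c_mid and density >= d_mid:
            labels[name] = "motor"
        elif centrality >= c_mid:
            labels[name] = "basic and transversal"
        elif density >= d_mid:
            labels[name] = "highly developed and isolated"
        else:
            labels[name] = "emerging or declining"
    return labels

# Hypothetical (centrality, density) scores, illustrative only.
themes = {
    "THEME-A": (0.9, 0.8),
    "THEME-B": (0.7, 0.6),
    "THEME-C": (0.8, 0.2),
    "THEME-D": (0.2, 0.9),
    "THEME-E": (0.1, 0.1),
}
for name, label in classify_themes(themes).items():
    print(name, label)
```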

Table 9.

Performance measures (article count, citation count, and H-index) of the research themes in each sub-period.

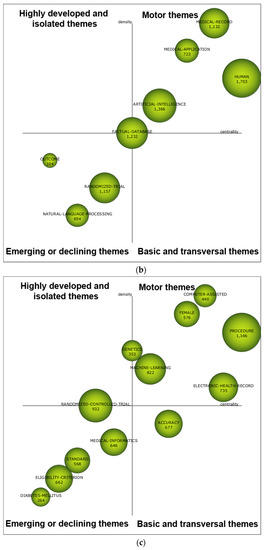

5.2. Conceptual Evolution Map

Following the strategies of Ramírez and Sánchez-Cañi [84], we conducted a conceptual evolution analysis. The period 1999–2008 contained 798 keywords, 328 of which appeared again in the period 2009–2013, while the remaining 470 did not. Thus, the similarity index between the two periods was 41%. The period 2009–2013 contained 1429 keywords, 1101 of which were new. Of these 1429 keywords, 557 appeared again in the period 2014–2018, while 872 did not, giving a similarity index of 39% between the latter two periods. Given that there were more articles in the period 2014–2018, it contained 1951 keywords, 1394 of which were new. The importance of keywords changed constantly over time (Figure 6). As terminology changed, diverse keywords were adopted to describe the contents of NLP-enhanced clinical trial studies, with some new keywords emerging while others vanished. For instance, the keywords NURSING-EDUCATION, SHORT-TERM-MEMORY, ASSOCIATE-LEARNING, ATTITUDE-TO-COMPUTER, BIOMEDICAL-GRID, and CANCER-SCREENING appeared only in the first period. However, keywords such as SEMANTICS, SYSTEM, ONTOLOGY, RANDOMIZED-CONTROLLED-TRIAL, ARTIFICIAL-INTELLIGENCE, EVIDENCE-BASED-MEDICINE, NATURAL-LANGUAGE-PROCESSING, and DECISION-SUPPORT-SYSTEM were present in all three periods. The evolution map depicted in Figure 6 was very dense. Some keywords belonged simultaneously to more than one area, while others were isolated (e.g., ONTOLOGY-BASED and QUALITY) or failed to remain prominent.

Figure 6.

Thematic evolution (1999–2018).
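The keyword figures reported in Section 5.2 can be checked with simple arithmetic. The sketch below assumes, as the reported numbers imply, that the similarity index is the share of the earlier period's keywords that reappear in the following period; the function name is hypothetical.

```python
def similarity_index(shared, previous_total):
    """Share of the earlier period's keywords that reappear in the next one."""
    return shared / previous_total

# Counts taken from the text above.
first_to_second = similarity_index(328, 798)    # 1999-2008 -> 2009-2013
second_to_third = similarity_index(557, 1429)   # 2009-2013 -> 2014-2018

# New keywords are the period total minus those carried over.
new_in_second = 1429 - 328
new_in_third = 1951 - 557

print(f"{first_to_second:.0%}, {second_to_third:.0%}")  # prints "41%, 39%"
print(new_in_second, new_in_third)                      # prints "1101 1394"
```

The computed values agree with the 41% and 39% similarity indexes and with the 1101 and 1394 new keywords stated in the text.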

6. Structural Topic Modeling Analysis Results

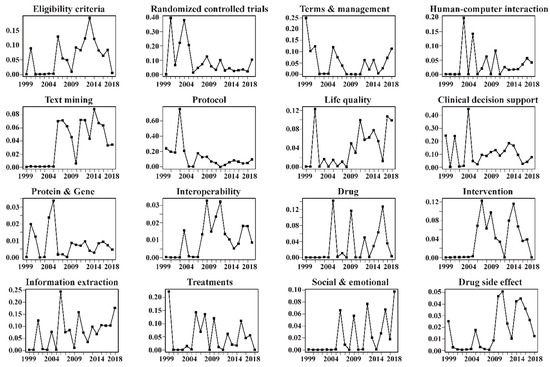

6.1. Topic Identification and Trends

Of the extracted terms, the most frequent ones included “clinical” (in 344 articles), “trial” (335), “patient” (199), “information” (197), “system” (188), “knowledge” (179), “semantic” (161), and “support” (137). Table 10 displays the results of the 16-topic STM model, including the most discriminating terms, topical proportions, suggested labels, and topical trend test results. Of the 16 topics, some concerned technologies or methods, such as Information extraction and Text mining. Two topics were drug-related, namely, Drug and Drug side effect. The five most frequently discussed topics were Interoperability (13.21%), Clinical decision support (10.38%), Information extraction (9.36%), Eligibility criteria (8.68%), and Protocol (8.08%).

Table 10.

The 16 structural topic modeling (STM) results with the discriminating terms, topical proportions, suggested topic labels, and trend test results.

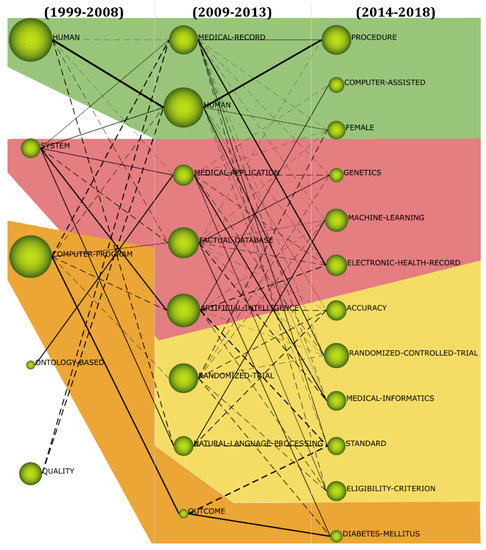

According to the Mann–Kendall trend test, most of the 16 topics presented an increasing trend. In particular, ten topics, namely, Interoperability, Information extraction, Eligibility criteria, Life quality, Intervention, Text mining, Drug, Human–computer interaction, Social & emotional, and Drug side effect, exhibited statistically significant increasing trends. The topic Protocol experienced a statistically significant decreasing trend. The annual proportion distributions of the 16 topics are visualized in Figure 7, which shows clearly that some topics were increasing, for example, Text mining, Information extraction, and Life quality.

Figure 7.

Temporal trends of the 16 topics in the whole corpus (1999–2018).

6.2. Topical Distributions Across Prolific Countries/Regions and Institutions

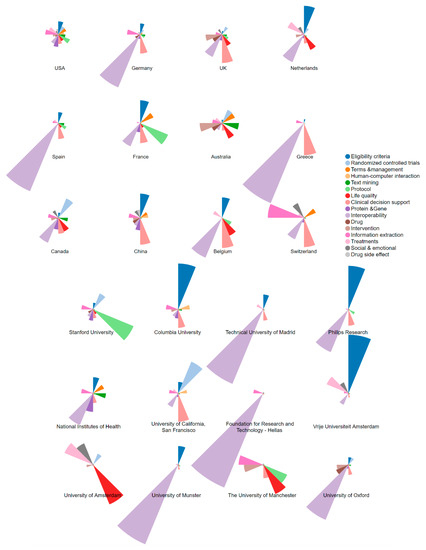

The topical distributions for prolific countries/regions and institutions in NLP-enhanced clinical trial research were also explored and are shown in Figure 8, in which the upper panel is for countries/regions and the lower panel is for institutions.

Figure 8.

Topic proportion distributions across the prolific countries/regions and institutions.

From the perspective of countries/regions, several countries such as the USA, the UK, Australia, Canada, and China showed balanced interest across diverse topics. By comparison, the others showed particular interest in one or more topics, among which Interoperability was the most favored. In addition to Interoperability, several topics received considerable interest from particular countries. For example, France was more productive in Protocol, China focused on Clinical decision support and Eligibility criteria, and Switzerland was very active in Information extraction.

From the perspective of institutions, several institutions focused mainly on the topic Interoperability, including the Technical University of Madrid, Philips Research, the National Institutes of Health, the Foundation for Research and Technology-Hellas, the University of Munster, and the University of Oxford. Three institutions showed a specific interest in the topic Eligibility criteria: Columbia University, Philips Research, and Vrije Universiteit Amsterdam. In addition, Stanford University was particularly interested in Protocol, while the University of Amsterdam was particularly concerned with Life quality.
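Topical distributions of this kind can be obtained by averaging the document-level topic proportions within each metadata group. The following is a minimal sketch of that aggregation, assuming one group label per article; the function name, the three-topic proportions, and the labels are hypothetical, and an article with several affiliations would in practice need to be counted in each of its groups.

```python
from collections import defaultdict

def mean_topic_proportions(doc_topics, groups):
    """Average each topic's proportion over the documents in each group."""
    sums = {}
    counts = defaultdict(int)
    for theta, group in zip(doc_topics, groups):
        if group not in sums:
            sums[group] = [0.0] * len(theta)
        # Accumulate the topic proportions of this document.
        sums[group] = [s + t for s, t in zip(sums[group], theta)]
        counts[group] += 1
    return {g: [s / counts[g] for s in sums[g]] for g in sums}

# Hypothetical document-topic proportions (each row sums to 1).
doc_topics = [
    [0.7, 0.2, 0.1],
    [0.5, 0.4, 0.1],
    [0.1, 0.1, 0.8],
]
groups = ["USA", "USA", "China"]
means = mean_topic_proportions(doc_topics, groups)
print(means)
```

Passing publication years instead of countries as `groups` yields topic proportion distributions by year in the same way.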

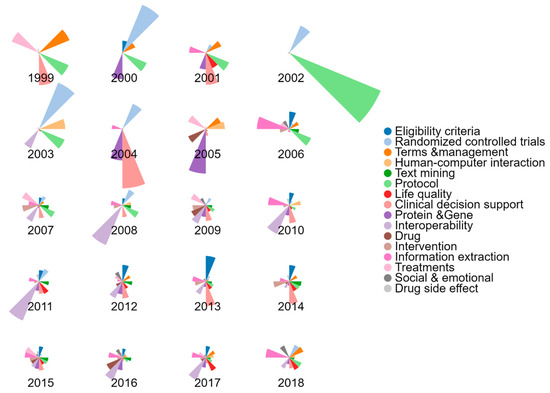

6.3. Topic Proportion Distributions by Year and Topic Clustering

Figure 9 shows the topic proportion distributions by year in the NLP-enhanced clinical trial research literature. Overall, research topics became more diverse over time, with a wider range of topics being discussed by authors. In the earlier years, only a few topics received particularly close attention, for example, Randomized controlled trials in 2000, Protocol in 2002, and Clinical decision support in 2004. Over time, however, research interest became more evenly balanced across different aspects of the research.

Figure 9.

Topic proportion distributions by year in the NLP-enhanced clinical trial research.

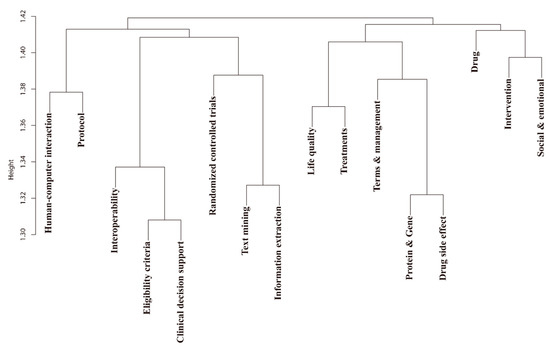

Figure 10 shows the results of the hierarchical clustering based on document-level similarity. This analysis provided insights into potential inter-topic research. For example, Eligibility criteria and Clinical decision support had a high document-level similarity, indicating that articles highly relevant to Eligibility criteria also tended to be relevant to Clinical decision support. Thus, document-level similarity clustering had the potential to reveal emerging trends in NLP-enhanced clinical trial research during the study period.

Figure 10.

Hierarchical clustering results based on the document-topic distribution matrix.
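One way to cluster topics from a document-topic distribution matrix is to treat each topic's column of proportions as a vector and merge the most similar topics step by step. The sketch below uses one minus the Pearson correlation as the distance and average linkage; both choices are assumptions for illustration, as the text does not state the distance or linkage used. The topic names and proportions are hypothetical.

```python
import math

def pearson(u, v):
    """Pearson correlation of two equal-length vectors (non-constant)."""
    n = len(u)
    mu, mv = sum(u) / n, sum(v) / n
    cov = sum((a - mu) * (b - mv) for a, b in zip(u, v))
    su = math.sqrt(sum((a - mu) ** 2 for a in u))
    sv = math.sqrt(sum((b - mv) ** 2 for b in v))
    return cov / (su * sv)

def cluster_topics(doc_topics, labels):
    """Agglomerative clustering of topics; returns the merge history."""
    columns = list(zip(*doc_topics))  # one proportion vector per topic
    k = len(columns)
    dist = {(i, j): 1 - pearson(columns[i], columns[j])
            for i in range(k) for j in range(i + 1, k)}
    clusters = {i: [i] for i in range(k)}
    merges = []
    while len(clusters) > 1:
        def linkage(a, b):
            # Average linkage: mean pairwise distance between members.
            pairs = [(min(i, j), max(i, j))
                     for i in clusters[a] for j in clusters[b]]
            return sum(dist[p] for p in pairs) / len(pairs)
        ids = sorted(clusters)
        a, b = min(((x, y) for n, x in enumerate(ids) for y in ids[n + 1:]),
                   key=lambda ab: linkage(*ab))
        merges.append(([labels[i] for i in clusters[a]],
                       [labels[i] for i in clusters[b]],
                       linkage(a, b)))
        clusters[a] = clusters[a] + clusters.pop(b)
    return merges

# Hypothetical document-topic proportions (rows: articles, columns: topics).
doc_topics = [
    [0.6, 0.3, 0.1],
    [0.5, 0.4, 0.1],
    [0.1, 0.1, 0.8],
    [0.2, 0.2, 0.6],
]
merges = cluster_topics(doc_topics, ["Topic A", "Topic B", "Topic C"])
for left, right, distance in merges:
    print(left, right, round(distance, 3))
```

Topics that are merged early co-occur in the same articles, which is the sense in which the dendrogram points to potential inter-topic research.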

7. Discussions

In this study, based on 451 articles retrieved from the WoS, PubMed, and Scopus databases, we presented a thorough bibliometric analysis of the NLP-enhanced clinical trial research domain. The results provided answers to the six research questions, based on which some critical issues are highlighted as follows.

7.1. Insights From Performance Analysis and Scientific Collaboration Analysis

The analysis of the annual publication trend demonstrated that, overall, the annual number of publications on NLP-enhanced clinical trial research had increased dramatically, although with a decrease in the final year, which was probably caused mainly by the time lag before publications are indexed by the databases. The results highlighted the increasing attention and interest shown by academia toward NLP-enhanced clinical trial research, which has a high potential to become even more popular. The analysis of publication sources identified the leading position of JBI, JAMIA, and the AMIA Symposium in terms of H-index. JBI was also the most prolific journal in publishing NLP-enhanced clinical trial research. JAMIA was particularly notable for the impact and influence of its publications, with an ACP value as high as 59. The analyses of country/region, institution, and author identified the USA, Stanford University, and Ida Sim as the most influential in terms of H-index in NLP-enhanced clinical trial research.

The scientific collaboration analysis between countries/regions, institutions, and authors highlighted that the USA and the UK, the Technical University of Madrid and Philips Research, as well as Simona Carini and Ida Sim, were the closest collaborators in NLP-enhanced clinical trial research. The analyses also demonstrated close collaboration between institutions and between authors, particularly those from the USA. However, we suggest that cross-region and cross-country collaboration be strengthened to take advantage of regional research strengths.

7.2. Insights From Science Mapping Analysis

With the use of SciMAT, research themes were analyzed by exploring keywords and their evolution in each period. Four major thematic areas in NLP-enhanced clinical trial research were identified: Human-focused issues, Systems and applications, Clinical trials processing, and Diabetes-related issues. First, within the theme Human-focused issues, there were five keywords, including HUMAN, MEDICAL RECORD, PROCEDURE, FEMALE, and COMPUTER-ASSISTED, where HUMAN was the largest one. Electronic medical records (EMRs) collect and describe events and patients’ health history, including their interaction with clinical trials [85]. With the wide adoption of EMR databases in healthcare, an extraordinary amount of clinical data in electronic form has been produced. There was a growing trend toward collaboration between institutions from different regions on medical research using EMRs [86]. However, such enormous volumes of unstructured medical text had also become a major challenge for the enrollment of patients to test targeted therapies, which is very time- and energy-consuming [87]. Thus, many scholars have attempted to advance clinical research by automating information extraction and supporting decision-making, thereby reducing the time spent on screening, identifying, and recruiting patients.

Second, within the theme Systems and applications, there were seven keywords, including SYSTEM, MEDICAL APPLICATION, GENETICS, MACHINE LEARNING, FACTUAL DATABASE, ELECTRONIC HEALTH RECORD, and ARTIFICIAL INTELLIGENCE, where ARTIFICIAL INTELLIGENCE was the largest one. Artificial intelligence techniques had been widely adopted for the processing of clinical trial data. For example, using semantic, structural, and NLP techniques, Dhuliawala et al. [88] proposed an automatic system for transforming activity schedule tables within clinical trial protocols from the portable document format into machine-interpretable forms. Their work was the first effort to adopt an artificial intelligence method for extracting procedural logic from clinical trial texts and creating a protocol knowledge base. Alonso et al. [89] applied AI techniques to support scientists engaged in bio-nutritional trials. In addition, some scholars focused on the application of machine learning techniques to clinical trial data processing. For example, using discriminative sequence classifiers for named entity recognition, Hassanpour and Langlotz [90] proposed a machine learning system for annotating radiology reports and extracting report contents. Using logistic regression and decision trees, Bakal et al. [91] focused on accurately predicting new treatment and causative relationships between biomedical entities based only on semantic graph pattern features obtained from biomedical knowledge graphs.

Third, within the theme Clinical trials processing, there were seven keywords, including ACCURACY, RANDOMIZED TRIAL, RANDOMIZED CONTROLLED TRIAL, MEDICAL INFORMATICS, STANDARD, NATURAL LANGUAGE PROCESSING, and ELIGIBILITY CRITERION, where RANDOMIZED TRIAL was the largest one. One critical application of NLP in clinical trial research is resolving eligibility criteria for recruiting patients into clinical trials. Evaluating a patient’s eligibility for a particular clinical trial usually requires manually checking each eligibility criterion against the condition of the patient, which is very time-consuming [92]. Thus, many scholars have been devoted to facilitating the assessment process. For example, using information extraction techniques, Jonnalagadda et al. [87] proposed an automatic method for converting unstructured texts into structured ones. The structured output was then cross-referenced against eligibility criteria using a rule-based system to determine whether patients qualified for a particular clinical trial. Furthermore, standardization is a critical issue in clinical trial research. It aims to transform useful information hidden within clinical trial texts into machine-interpretable data to facilitate its application in clinical studies (e.g., patient screening, trial recruitment, trial duplication detection, and clinical decision support [93]). Boscá et al. [94] worked on obtaining semantically interoperable genetic testing reports to not only enhance communication but also facilitate clinical decision support. van Leeuwen et al. [93] proposed a standards-driven trial metadata repository for capturing structured trial information, application-related formalisms, and execution logic to support various relevant applications.

Finally, within the theme Diabetes-related issues, there were three keywords, including COMPUTER PROGRAM, OUTCOME, and DIABETES MELLITUS, where COMPUTER PROGRAM was the largest one. Diabetes mellitus has gradually become a worldwide disease and a major cause of morbidity and mortality [95]. Caballero-Ruiz et al. [96] proposed an innovative system named Sinedie to enhance the accessibility of specialized healthcare, spare patients unnecessary travel, facilitate the process of patient evaluation, and avoid the negative effects of gestational diabetes. Cole-Lewis et al. [97] proposed an extendible knowledge base concerning the self-management of diabetes to serve as a foundation for supporting patient-centered decisions. The knowledge base was designed with the participation of academic diabetes educators using knowledge acquisition approaches and was further verified by a scenario-driven method.

7.3. Important Research Topics Identified by STM

Among the 16 identified research topics, several critical topics are worth highlighting, all of which were frequently discussed by authors in NLP-enhanced clinical trial research.

First of all, Interoperability accounted for the largest proportion (13.21%) and had also received increasing interest and attention from the research community, indicating its important role in the research area. Medical documentation faces several major problems, including a lack of transparency, an overwhelming amount of medical content to be documented, and missing interoperability [98]. As indicated by Priyatna et al. [99], semantic interoperability is important when conducting post-genomic clinical trials in which different affiliations cooperate. A great number of scholars have attempted to address this challenge. For example, Alonso-Calvo et al. [100] proposed a standards-driven method to guarantee semantic integration and facilitate the analysis of clinico-genomic clinical trials, by developing semantic interoperability based on various well-defined biomedical standards. Gazzarata et al. [101] adopted a service-centered architecture paradigm to facilitate technical interoperability.

The second important topic was Information extraction, accounting for a proportion of 9.36%. The reuse of EHRs can facilitate scientific research and patient recruitment for clinical trials. Unfortunately, much information is hidden in unstructured texts such as discharge letters. It therefore needs to be processed and transformed into structured data through information extraction, which can be a time-consuming and labor-intensive task [102]. Some scholars have worked on such issues to facilitate clinical trial research. For example, Patterson et al. [103] proposed an NLP system that uses dictionaries, rules, and patterns to extract measures of heart function recorded in echocardiogram reports. Further, by using curated semantic bootstrapping, a novel disambiguation approach based on semantic constraints, and a scalable framework, they enabled the proposed system to process large-scale clinical data. Milosevic et al. [104] investigated the feasibility of extracting information from tables in the academic literature that contain essential information such as the characteristics of clinical trials. They proposed a rule-driven approach to decompose tables into cell-level structures and to further extract information from them.

In addition, Eligibility criteria was also an essential topic in NLP-enhanced clinical trial research. An essential step in discovering novel treatments for diseases is to match subjects with potential clinical trials. Eligibility criteria are the inclusion and exclusion criteria for clinical trials and are written as free text. Standardizing the eligibility criteria can improve both the efficiency and the accuracy of the recruitment process [105]. As indicated by Milian et al. [106], the automated interpretation and evaluation of patients’ eligibility are challenging because the eligibility criteria of clinical trials are typically expressed as free text. Many scholars have sought effective ways to facilitate the process.

7.4. Developing Trends of Research Foci

Results from the Mann–Kendall trend test (Table 10) and the visualization of topic temporal trends (Figure 7) provided several insights into the evolution of NLP-enhanced clinical trial research. On the whole, most of the 16 identified topics showed a growing tendency, with some topics becoming important. In addition to the three topics discussed in Section 7.3, several other topics had attracted an increasing number of studies, for example, Life quality, Social & emotional, Text mining, and Drug side effect.

First, since about 2009, the topic Life quality had received increasing attention among authors, although with a decrease in 2016. This tendency indicated a growing concern for quality of life within the NLP-enhanced clinical trial research community. For example, aiming to improve the quality of cardiac rehabilitation care, van Engen-Verheul et al. [107,108] first adopted concept mapping to determine factors for the successful implementation of web-driven quality improvement systems based on audit and feedback intervention with outreach visits, and they then evaluated the effectiveness of the proposed system.

Second, the topic Social & emotional had, overall, received continually increasing attention since about 2005. As indicated by Chen [109], the internet is widely used to search for health information and to seek social support. In addition, the large volume of information on the internet requires an effective tool or method for entity resolution in biomedical records to identify name abbreviations for diseases or various symptoms of the same disease [110]. Thus, social media data have been widely adopted to support clinical trial research, particularly in terms of emotional disclosure analysis. Emotional disclosure refers to “the process of writing or talking about personally stressful or traumatic events and has been associated with improvements in mood and immune parameters in clinical and non-clinical groups [111] (Page 1).” For example, by adopting automatic sentiment analysis, Livas et al. [112] investigated the popularity and content of Invisalign patient testimonials on YouTube, as well as the sentiments of the comments.

Third, Text mining had also experienced growing research interest among scholars devoted to NLP-enhanced clinical trial research, particularly since about 2005, although with an obvious decrease in about 2010. For example, Bekhuis and Demner-Fushman [113] investigated the following three issues: (1) whether machine learning classifiers were able to facilitate the screening of nonrandomized studies eligible for a systematic review on a particular topic; (2) whether the performance of classifiers varied with optimization; and (3) whether it was possible to reduce the number of citations for screening. Li et al. [114] evaluated two machine learning approaches for detecting medication-attribute linkage in two clinical corpora: a binary classification approach based on a support vector machine and a multi-layered sequence labeling approach based on conditional random fields.

In addition, the topic Drug side effect has gained increasing attention since about 2008. Drugs play an essential role in medicine: they are produced for treating, controlling, and preventing diseases. However, in addition to therapeutic effects, drugs also bring adverse effects. As indicated by Rajapaksha and Weerasinghe [115], in spite of post-marketing drug surveillance, adverse drug reactions (ADRs) have been one of the most common causes of death worldwide. Even with expensive clinical trials, it is still difficult to discover all types of ADRs, which can be troublesome for both customers and health care providers. Many scholars have devoted themselves to the issue of drug side effects. For example, with the use of a probabilistic topic model, Bisgin et al. [116] proposed a drug repositioning method that makes use of complete safety information, in contrast to the conventional method.

7.5. Insights Into Potential Inter-Topical Research

Several potential inter-topical research directions were identified from the results of document-topic hierarchical clustering (Figure 10). First, there was a trend toward research on both Human–computer interaction and Protocol, particularly protocol eligibility criteria. For example, Petkov et al. [117] identified the optimum approach, based on an integrated clinical trial eligibility database system, for rapidly identifying patients with metastatic diseases by automatically screening unstructured radiology reports. In addition, the proposed approach could be employed at different institutions to improve the efficiency of clinical trial eligibility screening.

Second, a potential research direction was brought by Eligibility criteria and Clinical decision support. By proposing a novel system for clinical trial processing, Huang et al. [118] aimed not only to achieve interoperability by semantically integrating heterogeneous data into clinical trials but also to enable automated reasoning and data processing services in clinical trial decision support systems.

Third, there was also a tendency to combine Text mining and Information extraction in clinical trial research. For example, Oellrich et al. [119] compared the performance of four existing concept recognition systems: the clinical text analysis and knowledge extraction system, the National Center for Biomedical Ontology annotator, the biomedical concept annotation system, and MetaMap.

Fourth, there was also a tendency for combined research on Protein & Gene and Drug side effect. For example, based on the assumption that machine learning was able to predict unknown adverse reactions from existing knowledge, Bean et al. [120] created a knowledge graph involving four kinds of nodes (i.e., drugs, protein targets, indications, and adverse reactions). They further proposed an innovative machine learning approach based on a simple enrichment test. Experiments indicated that the proposed approach performed very well in classifying known causes of adverse reactions.
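To make the idea of an enrichment test concrete, the following minimal Python sketch checks whether a protein target is over-represented among the drugs known to cause a given adverse reaction. It is an illustration only and does not reproduce the method of Bean et al. [120]: the choice of a one-sided hypergeometric (Fisher-type) test, the function names, and the toy drug and target identifiers are all assumptions introduced here.

```python
from math import comb


def enrichment_p_value(k, n_with_feature, n_causing, n_total):
    """One-sided hypergeometric tail P(X >= k).

    X is the number of drugs carrying the feature among the n_causing
    drugs known to cause the reaction, out of n_total drugs in the graph.
    """
    max_k = min(n_with_feature, n_causing)
    total_ways = comb(n_total, n_causing)
    tail = sum(
        comb(n_with_feature, i) * comb(n_total - n_with_feature, n_causing - i)
        for i in range(k, max_k + 1)
    )
    return tail / total_ways


# Hypothetical toy knowledge graph: drug -> set of protein targets.
drug_targets = {
    "drug_a": {"target_1", "target_2"},
    "drug_b": {"target_1"},
    "drug_c": {"target_1", "target_3"},
    "drug_d": {"target_3"},
    "drug_e": {"target_2"},
    "drug_f": {"target_3"},
}
# Hypothetical set of drugs already known to cause the adverse reaction.
known_causes = {"drug_a", "drug_b", "drug_c"}


def target_enrichment(target):
    """P-value for a target being enriched among the known causes."""
    with_feature = {d for d, targets in drug_targets.items() if target in targets}
    overlap = len(with_feature & known_causes)
    return enrichment_p_value(
        overlap, len(with_feature), len(known_causes), len(drug_targets)
    )


for target in ("target_1", "target_2", "target_3"):
    print(target, round(target_enrichment(target), 3))
```

In this toy graph, target_1 is shared by all three known causes and by no other drug, so it receives the smallest p-value. A drug that has a strongly enriched target but no recorded link to the reaction would then be a candidate for an unknown adverse reaction.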

In addition, a potential research direction was brought by Intervention and Social & emotional. Tarazona-Santabalbina et al. [121] conducted an interventional, controlled, simple randomized study to examine two issues. The first was to determine whether a supervised-facility multicomponent exercise program was able to reverse frailty and improve cognition, emotion, and social networking. The second was to explore biological biomarkers of frailty by comparison with an untrained control population.

7.6. The Latest Trends in NLP-Enhanced Clinical Trials Research

We also highlight several recent research achievements and outcomes in the field of NLP-enhanced clinical trials. First, there was a trend toward detecting drug side-effect signals. For example, based on a collection of online posts from known statin users, Timimi et al. [123] adopted NLP techniques and hands-on linguistic analysis to identify drug side-effect signals. Experimental results indicated a statistically significant correlation between statin use and discussion of memory impairment.

Second, there was a growing trend in the development of innovative methods to select a cohort for clinical trials. Cohort selection is an especially challenging task in which NLP and deep learning techniques can make a significant difference [122]. For example, to address Track 1 of the 2018 National NLP Clinical Challenges (n2c2), Stubbs et al. [124] first selected criteria from current clinical trials to represent various NLP tasks, for example, concept extraction, temporal reasoning, and inference. They then labeled the clinical narratives of 288 patients according to whether the patients met the criteria. Vydiswaran et al. [125] proposed an innovative hybrid model combining pattern-driven, knowledge-enriched, and feature weighting approaches. Segura-Bedmar and Raez [122] investigated several deep learning architectures for cohort selection for clinical trials, for example, simple convolutional neural networks (CNNs), deep CNNs, recurrent neural networks (RNNs), and CNN–RNN hybrids. Xiong et al. [126] proposed a hierarchical neural network consisting of five components. The first was a sentence representation using a CNN or a long short-term memory (LSTM) network. The second was a highway network for adjusting information flow. The third was a self-attention neural network for reweighting sentences. The fourth was a document representation using an LSTM. The fifth was a fully connected neural network for determining whether each criterion was met. By employing an innovative generic rule-driven framework plugged in with lexical-, syntactic-, and meta-level task-specific knowledge inputs, Chen et al. [127] proposed a novel, integrated rule-driven clinical NLP system. In addition, Chen et al. [128] proposed a clinical text representation model to learn patients’ eligibility status against clinical trial criteria for participation in cohort research. Experimental results demonstrated that the proposed model outperformed other medical knowledge-related learning architectures by around 3%.

Furthermore, there was also a growing trend toward reducing information overload for trial seekers. For example, Liu et al. [129] proposed a novel system using dynamic questionnaires, which adopts a real-time, dynamic question generation approach and dynamically updates the trial set by excluding unqualified trials according to users’ responses to the generated questions.
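The interplay between question generation and trial exclusion can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions rather than the system of Liu et al. [129]: the trial records, the yes/no criteria, and the heuristic of asking the question that appears in the most remaining trials are all hypothetical.

```python
# Hypothetical trials: each maps a yes/no question to the answer
# that a participant must give to remain eligible.
trials = {
    "trial_1": {"adult": True, "diabetes": True},
    "trial_2": {"adult": True, "diabetes": False},
    "trial_3": {"adult": True, "pregnant": False},
    "trial_4": {"adult": False},
}


def next_question(remaining, asked):
    """Pick the unasked question used by the most remaining trials.

    Ties are broken alphabetically so the choice is deterministic.
    """
    counts = {}
    for criteria in remaining.values():
        for question in criteria:
            if question not in asked:
                counts[question] = counts.get(question, 0) + 1
    if not counts:
        return None
    return max(sorted(counts), key=counts.get)


def filter_trials(remaining, question, answer):
    """Keep trials that do not contradict the user's answer."""
    return {
        name: criteria
        for name, criteria in remaining.items()
        if criteria.get(question, answer) == answer
    }


def run_questionnaire(all_trials, answers):
    """Ask questions until none remain; return (asked, qualifying trials)."""
    remaining, asked = dict(all_trials), []
    while True:
        question = next_question(remaining, asked)
        if question is None or question not in answers:
            break
        asked.append(question)
        remaining = filter_trials(remaining, question, answers[question])
    return asked, sorted(remaining)


print(run_questionnaire(trials, {"adult": True, "diabetes": False, "pregnant": False}))
print(run_questionnaire(trials, {"adult": False}))
```

Because the next question is recomputed from the trials that are still eligible, a user who answers that they are not an adult is asked only one question: the criteria of the excluded trials are never raised.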

Moreover, there was also a growing trend in the use of deep NLP on radiologic reports to measure real-world oncologic outcomes (e.g., disease progression and response to therapy). For instance, Kehl et al. [130] explored whether deep NLP could extract relevant cancer outcomes from radiologic reports. Their study demonstrated that deep NLP had the potential to speed up the curation of relevant cancer outcomes, as well as to facilitate fast learning from EHRs.

In addition, there was also a growing need for structuring and learning from the EHR using novel NLP techniques. For example, Thompson et al. [131] proposed an innovative method for assisting structuring, based on an exploration of how the words most relevant to a class predict the class of a text from a positional perspective.

8. Conclusions

This study reported the first attempt to conduct a detailed bibliometric analysis of the existing body of knowledge in the NLP-enhanced clinical trial research domain. Results obtained from the bibliometric analysis, science mapping, and STM analysis offered several significant implications and insights for scholars to consider before pursuing further explorations in the NLP-enhanced clinical trial research field. From the methodological perspective, this study was the first to apply the STM approach to NLP-enhanced clinical trial research, which avoids the limitations of manual coding or word frequency analysis. Major findings and suggestions for future research on NLP-enhanced clinical trials are summarized in the following subsections.

8.1. Major Findings

We summarized the major findings in the following aspects.

First, analysis of the annual trend of publications demonstrated dramatic growth in research interest in NLP-enhanced clinical trial research. In addition, research interests had become diverse among scholars.

Second, the USA, as well as several institutions such as Stanford University, Columbia University, and the National Institutes of Health, had contributed the most to the NLP-enhanced clinical trial research.

Third, the collaborations among European countries/regions in conducting NLP-enhanced clinical trial research were very close. The collaborations among institutions and authors from the same countries/regions were also close, particularly those from the USA.

Fourth, science mapping revealed several major research themes, including Human-focused issues, Systems and applications, Clinical trials processing, as well as Diabetes-related issues.

Fifth, STM identified 16 major topics, within which the most discussed included Interoperability, Clinical decision support, Information extraction, and Eligibility criteria.

Moreover, several topics with increasing research interest among authors had been identified, for example, Life quality, Drug, Social & emotional, Information extraction, Eligibility criteria, and Drug side effect.

In addition, several potential inter-topic research directions had also been uncovered, for example, Human-computer interaction and Protocol, Eligibility criteria and Clinical decision support, Text mining and Information extraction, Protein & Gene and Drug side effect, as well as Intervention and Social & emotional.

8.2. Suggestions for Future Research on the NLP-Enhanced Clinical Trials

Based on the major findings and discussions in Section 6, suggestions for NLP-enhanced clinical trials research in the future are proposed as follows.

First, it is important for scholars to continuously focus on the applications of text mining and information extraction techniques for the processing of clinical trial data.

Second, scholars from different regions and institutions are encouraged to enhance scientific communication and collaboration with regions that hold advantages in specific research areas, so as to bolster scientific activities regarding NLP-enhanced clinical trial research.

Third, scholars are encouraged to explore the potential of combining advanced deep learning techniques, for example, deep reinforcement learning, deep neural networks, recurrent neural networks, and convolutional neural networks, with NLP techniques to deal with issues regarding clinical trials.

Moreover, there is a growing need to improve and ensure quality of life in clinical trials research with the assistance of NLP techniques.

In addition, professionals, academics, and clinicians from different areas are encouraged to conduct collaborative research on potentially promising issues, for example, Eligibility criteria and Clinical decision support, Protein & Gene and Drug side effect, as well as Intervention and Social & emotional.

Author Contributions

Conceptualization, X.C. and F.L.W.; Formal analysis, X.C.; Methodology, X.C. and H.X.; Software, G.C.; Validation, L.K.M.P. and M.L.; Writing—original draft, X.C., H.X. and L.K.M.P.; Writing—review & editing, M.L. and F.L.W. All authors have read and agreed to the published version of the manuscript.

Funding

The work has been supported by the Interdisciplinary Research Scheme of the Dean’s Research Fund 2018–19 (FLASS/DRF/IDS-3), Departmental Collaborative Research Fund 2019 (MIT/DCRF-R2/18-19), and One-off Special Fund from the Central and Faculty Fund in Support of Research (MIT02/19-20) entitled “Facilitating Artificial Intelligence and Big Data Analytics Research in Education” of The Education University of Hong Kong, Research Seed Fund, Hong Kong Institute of Business Studies Research Seed Fund (HKIBS RSF-190-009) and LEO Dr. David P. Chan Institute of Data Science, Lingnan University, Hong Kong.

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

Table A1.

Final keywords list for NLP research in data retrieval.
