Abstract

Bimanual telemanipulation is vital for enabling robots to complete complex and dexterous tasks involving two handheld objects in teleoperation scenarios. However, the bimanual configuration introduces greater complexity, dynamics, and uncertainty, especially in uncontrolled and unstructured environments, which calls for more advanced system integration. This paper presents a bimanual robotic teleoperation architecture with modular anthropomorphic hybrid grippers to improve telemanipulation capability in unstructured environments. The architecture comprises two teleoperated subsystems. The first consists of a Leap Motion Controller and the anthropomorphic hybrid robotic grippers. Two 3D printed anthropomorphic hybrid robotic grippers with modular joints and soft layer augmentations are designed, fabricated, and equipped for telemanipulation tasks. The Leap Motion Controller tracks the motion of both human hands, and each hand teleoperates one robotic gripper. The second subsystem consists of the haptic devices and the robotic arms. Two haptic devices serve as the master devices, each controlling one arm. With this framework, an average joint-tracking RMSE (root-mean-square error) of 0.0204 rad is obtained. Nine sign-language demonstrations and twelve object grasping tasks were conducted with robotic gripper teleoperation. A challenging bimanual manipulation task involving a 5.2 kg object was successfully accomplished using the integrated teleoperation system. Experimental results show that the proposed bimanual teleoperation system can effectively handle typical manipulation tasks, with excellent adaptability to a wide range of object shapes, sizes, and weights, as well as grasping modes.

1. Introduction

With significant advances over the last decades, robotic teleoperation has gained widespread acceptance and become one of the most attractive research topics due to its potential for dealing with human-unfriendly, hazardous, and unreachable scenarios [1]. Promising results have been achieved in many challenging telemanipulation tasks, such as robot-assisted surgery [2], space exploration [3], disaster response [4,5], underwater operations [6], and micromanipulation [7]. Generally, a robotic teleoperation system is composed of the human operator, master devices, communication channels, slave robots, and the environment [8]. During teleoperation, the human operator directly controls the movement of the master device, which is physically connected to the operator. The control command is generated by the master device and sent to the slave robot to perform specific tasks. The interaction force between the slave robot and the environment is fed back to the master device, while the position and orientation of the slave robot are acquired via visual perception or observed directly by the operator. Within this framework, bimanual telemanipulation can be viewed as the case in which each human–system interface is controlled by one human hand [9].

Corresponding to the components of a robotic manipulation system, robotic teleoperation can be divided into two categories: teleoperation of the robotic arm and teleoperation of the robotic gripper. Various haptic devices and sensors have been introduced for robotic teleoperation. Based on a Leap Motion Controller and a sensorized glove, Ponraj and Ren proposed a sensor fusion technique for human finger tracking and applied it to the teleoperation of a three-fingered soft robotic gripper [10]. Low et al. developed a hybrid telemanipulation system with a 3D printed soft robotic gripper and a soft fabric-based haptic glove [11], in which four sensors were matched to the four fingers of the soft robotic gripper. Sun et al. presented a multilateral teleoperation system with two haptic devices on the master side and a four-fingered robotic gripper on the slave side [12], using a type-2 fuzzy model to investigate the system dynamics. Furthermore, Zubrycki and Granosik proposed an integrated vision system using Three Gears and a Leap Motion Controller [13], in which hand gestures were used to control a three-fingered dexterous gripper. Beyond robotic grippers, Rebelo et al. put forward a bilateral robot teleoperation system based on a wearable arm exoskeleton [14], in which a modified sensoric arm exoskeleton served as the haptic master device to control a 7-DoF (degrees of freedom) Kuka robot. Xu et al. studied human–robot interaction in robot teleoperation [15], where human body motion and hand state captured by a Kinect v2 were used to control two robotic arms. Yang et al. proposed a neural-network-enhanced robotic teleoperation system with guaranteed performance [16], in which a Kinect sensor detected obstacles around the robotic arm while a Geomagic Touch served as the master device to control the Baxter arm.

Rather than teleoperating only the robotic arm or only the robotic gripper, some efforts have been devoted to hybrid teleoperation of both the arm and the gripper. Razjigaev et al. developed teleoperation of a concentric tube robot via visual tracking of hand gestures [17]. Milstein et al. investigated the grasping of rigid objects with a unilateral robot-assisted minimally invasive surgery system [18], in which a Novint Falcon haptic device was used to teleoperate the surgical robot. Bimbo et al. proposed a robotic teleoperation system enhanced with wearable haptics for telemanipulation tasks in cluttered environments [19]. A Pisa/IIT SoftHand and a robotic arm formed the slave system. Two wearable vibrotactile armbands, worn on the upper arm and forearm, provided the user with feedback about collisions of the robotic arm and hand. A Leap Motion Controller captured the user’s hand pose to control the position of the robotic arm and the grasping configuration of the robotic hand.

Although hybrid teleoperation with a robotic arm and gripper can perform multiple manipulation tasks, it is limited to tasks involving a single handheld object. However, single-handed manipulation accounts for only a small proportion of real applications, whereas most situations involve collaboration between two hands. As robotic manipulation tasks grow in complexity, bimanual manipulation is becoming increasingly popular [20,21]. Several bimanual teleoperation systems with dual arms and dual grippers have been developed for bimanual tasks. Du and Zhang developed a Kinect-based noncontact human–robot interface for potential bimanual tasks [22], in which human gestures were recorded as commands for bimanual teleoperation. Burgner et al. presented a bimanual teleoperated system for endonasal skull base surgery [23], in which two haptic devices were used to teleoperate the two cannulas of a bimanual active cannula robot. Moreover, Du et al. proposed a markerless human–robot interface for bimanual tasks using a Leap Motion Controller with an interval Kalman filter and an improved particle filter [24], in which hand gestures were used to teleoperate dual arms and dual grippers. Meli et al. proposed a sensory subtraction technique as an alternative to force feedback in robot-assisted surgery [25], adopting two Omega 7 devices and four fingertip skin deformation devices as haptic interfaces in a challenging 7-DoF bimanual teleoperation task. Makris et al. investigated the cooperation of a dual-arm robot and humans for flexible assembly [26]. In addition, research efforts have been devoted to mobile bimanual robotic teleoperation [27].

Compared with the simple configuration of one arm and one gripper, two arms are better than one for assistive bimanual manipulation tasks. With dual arms and dual grippers, a bimanual teleoperation system enables the robot to handle more complex and challenging tasks that are not feasible with a single arm and gripper, much as human beings do, which opens up more opportunities in real applications. However, the bimanual configuration also raises a number of challenges, such as higher complexity, greater uncertainty, and difficulty of reliable perception, especially in unstructured and uncontrolled scenarios [28]. Most bimanual teleoperation systems are limited to small workspaces and well-designed settings, such as robot-assisted surgery. They are not suitable for most general unstructured applications, which involve a large workspace and must rely on manual control by the human operator. In addition, the compliance of robotic grippers is far lower than that of the human hand [29]. Human-level robotic manipulation remains a critical issue, especially in unconstrained and dynamic environments. Precise manipulation requires sophisticated control strategies, which not only increase the difficulty of design but also raise manufacturing costs [30]. Balancing dexterity against complexity is therefore a dilemma for traditional rigid robotic grippers. Meanwhile, soft grippers cannot provide the grasping force needed to handle large and heavy objects because they lack rigid support. Even though soft materials can undergo large deformations to enhance grasping performance, soft contact configurations are very sensitive and prone to failure in dynamic environments.

To enhance the bimanual manipulation capability of robotic systems in teleoperated and unstructured environments, this paper presents a bimanual robotic teleoperation architecture with dual arms and dual grippers. Two 3D printed anthropomorphic hybrid robotic grippers with modular and reconfigurable joints are developed and mounted on two Universal Robots UR10 arms on the slave side, while two haptic devices and a Leap Motion Controller are set up on the master side. Multiple experiments were conducted to evaluate the performance of the proposed system, including teleoperation of the arms for target approaching, teleoperation of the grippers for sign-language demonstrations and grasping tasks, and integrated teleoperation of the dual arms and dual grippers for bimanual tasks. Several typical manipulation tasks were investigated with the developed teleoperation system. The primary contributions of this paper are as follows:

- (1) A bimanual robotic teleoperation architecture with dual arms and dual anthropomorphic hybrid grippers is presented to perform unstructured manipulation tasks under full human control. Two 3D printed anthropomorphic hybrid robotic grippers with modular joints and soft layer augmentations are designed, fabricated, and equipped as the slave devices for various manipulation tasks. With the modular fingers and soft layer enhancements, the hybrid robotic gripper gains strong adaptability and delicate manipulation ability across a wide range of grasping tasks involving objects of different sizes, weights, shapes, and hardnesses.

- (2) A markerless, natural user interface is developed for teleoperation of the anthropomorphic hybrid robotic gripper. A human hand motion tracking system is set up with a Leap Motion Controller, which captures hand gesture and finger motion information for gripper teleoperation. A relative ratio index is proposed as a measure for grasping modulation of the gripper.

- (3) Multiple experiments were conducted to demonstrate the effectiveness of the proposed bimanual teleoperation system, in which many challenging manipulation tasks were executed with dual arms and dual grippers.

The remainder of this paper is organized as follows. Section 2 provides a brief description of the proposed bimanual teleoperation architecture. Section 3 presents the methods of the teleoperation system with dual arms and dual grippers. Section 4 discusses the experimental setup and the results. Section 5 presents the discussion. Section 6 draws the conclusion.

2. System Description

2.1. Architecture of the Teleoperation System

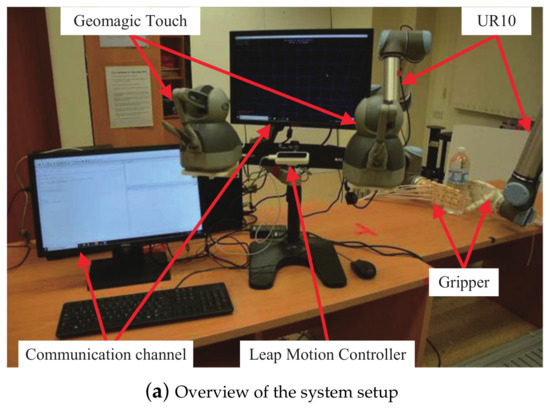

As illustrated in Figure 1, the proposed bimanual robotic teleoperation system consists of two robotic arms, two haptic devices, two robotic grippers, a Leap Motion Controller, communication channels, and a human operator. Two haptic devices and a Leap Motion Controller are set up on the master side while two robotic arms and two robotic grippers are adopted on the slave side. Generally, the overall system is made up of two subsystems, namely the robotic arm teleoperation and the robotic gripper teleoperation. The former subsystem contains two robotic arms and two haptic devices while the latter subsystem consists of two robotic grippers and a Leap Motion Controller.

Figure 1. Diagram of the bimanual robotic teleoperation system.

In the robotic arm teleoperation subsystem, two UR10 arms serve as the slave robots, while two Geomagic Touch haptic devices (3D Systems, Rock Hill, SC, USA) serve as the master devices. Each Geomagic Touch teleoperates one UR10. During execution, the human operator manipulates specific joints of the Geomagic Touch to generate target movements. Device information such as position and motion tracking data is recorded and sent to the slave robot as the control command.

In the robotic gripper teleoperation subsystem, a Leap Motion Controller (Leap Motion, San Francisco, CA, USA) and two 3D printed anthropomorphic robotic grippers are set up on the master and slave sides, respectively. Each gripper is mounted on the end effector of a UR10 for manipulation tasks. Both human hands are used in gripper teleoperation, each controlling one gripper. The Leap Motion Controller tracks the motion of both hands, and hand gestures and finger motion information are recorded as control commands and sent to the corresponding robotic gripper.

The two subsystems are independent of each other and can run simultaneously in practical teleoperation: the robotic grippers can perform manipulation tasks while the robotic arms keep moving, and no switching mechanism is needed between arm and gripper control. However, since the human operator has only two hands, at most two devices can be controlled at a time, i.e., two robotic arms, two grippers, or one robotic arm and one gripper.

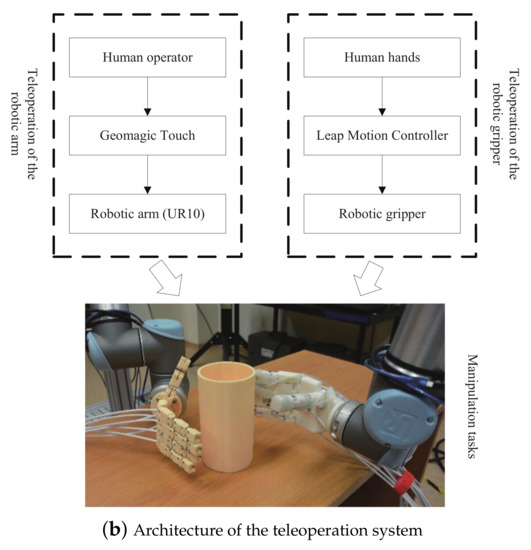

2.2. 3D Printed Anthropomorphic Hybrid Robotic Gripper

In the bimanual teleoperation system, two 3D printed anthropomorphic hybrid robotic grippers are developed for manipulation tasks. The two grippers share similar structures; both are cable-driven and five-fingered. As depicted in Figure 2, the hybrid robotic gripper is composed of a rigid structure (rigid gripper) and a soft augmented layer (soft layer). Each rigid gripper has a palm and five fingers, with 7 DoFs in total: two for the thumb and one each for the palm and the other four fingers. Correspondingly, seven Dynamixel MX-64R servo motors (Robotis, Seoul, Korea) are used as actuators. Following a bio-inspired design philosophy, the rigid structure can be seen as the bone of the human hand while the soft layer acts as the skin. The rigid structure supports the weight of the object, and the soft layer increases the friction between the gripper and the grasped object. Therefore, the hybrid rigid–soft gripper not only takes advantage of the rigid structure in manipulating rigid objects but also makes use of the soft layer to improve contact and resist slipping.

Figure 2. Illustration of the anthropomorphic hybrid robotic gripper.

In addition, the rigid gripper has a modular design. As shown in Figure 2, each finger module has a dovetail anchor and a dovetail anchor slot, so that a dovetail joint is formed between two adjacent finger modules. The length of a finger can thus be quickly adjusted by installing or removing finger modules. With modular and reconfigurable joints on each finger, the workspace of the gripper is flexible and can easily be changed for specific tasks, enabling the gripper to adapt to manipulation tasks with objects of different sizes and shapes.

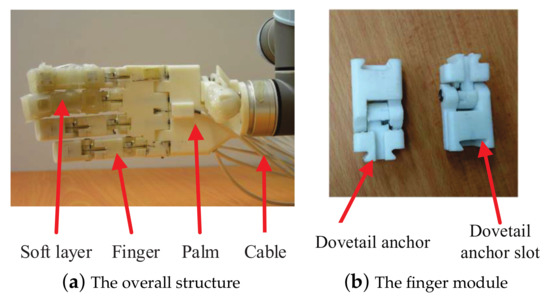

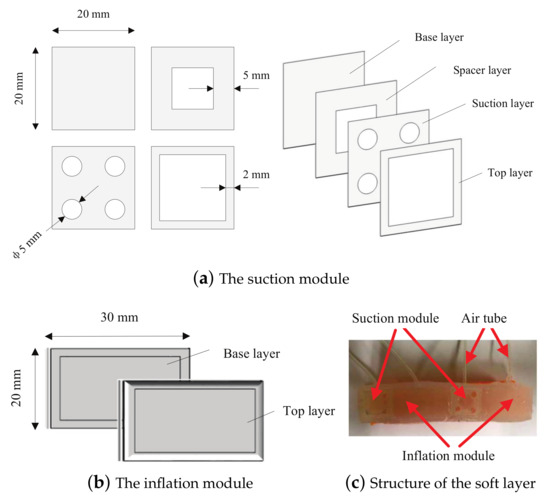

Besides the rigid modular structure, three fingers (the thumb, index, and middle fingers) are fitted with additional soft augmented layers made of a stretchable material, EcoFlex 00-30 (Smooth-On, Macungie, PA, USA). The soft layer is composed of suction and inflation modules arranged in a staggered pattern. As illustrated in Figure 3, the suction module consists of four layers, namely the base layer, spacer layer, suction layer, and top layer. The whole suction module measures approximately 20 mm × 20 mm × 6 mm, with each layer 1.5 mm thick. An air pump with one positive-pressure channel and one negative-pressure channel is connected to the suction module via a soft tube. A negative pressure ranging from −73 kPa to 0 kPa is applied to actuate suction, which holds the suction module firmly against the object. By contrast, the inflation module has two layers. The whole module measures approximately 20 mm × 30 mm × 4 mm, with each layer 2 mm thick. A soft tube is inserted, and a positive pressure ranging from 0 to 73 kPa is applied to activate inflation. The cavity between the two layers inflates under the positive pressure, increasing the contact area between the gripper and the object.
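The pressure ranges above can be captured in a small helper that turns an actuation level into a pump setpoint for each module type. This is a minimal sketch, assuming a hypothetical pump interface that accepts a gauge-pressure setpoint in kPa and a linear level-to-pressure scaling; the names `SoftModule` and `pressure_setpoint` are illustrative, and only the −73 kPa and 73 kPa limits come from the text.

```python
from enum import Enum

# Gauge-pressure limits stated in the text, in kPa.
SUCTION_LIMIT_KPA = -73.0    # negative-pressure channel (suction modules)
INFLATION_LIMIT_KPA = 73.0   # positive-pressure channel (inflation modules)


class SoftModule(Enum):
    SUCTION = "suction"
    INFLATION = "inflation"


def pressure_setpoint(module: SoftModule, level: float) -> float:
    """Convert an actuation level in [0, 1] into a gauge-pressure setpoint (kPa).

    A level of 0 leaves the module at ambient pressure (0 kPa) and a level of 1
    drives it to the limit of its channel; levels outside [0, 1] are clipped.
    The linear scaling is an illustrative assumption, not the authors' method.
    """
    level = min(max(level, 0.0), 1.0)
    limit = SUCTION_LIMIT_KPA if module is SoftModule.SUCTION else INFLATION_LIMIT_KPA
    return level * limit


# Example: full suction on the finger pads, half inflation at the joints.
print(pressure_setpoint(SoftModule.SUCTION, 1.0))    # -73.0
print(pressure_setpoint(SoftModule.INFLATION, 0.5))  # 36.5
```

Clipping keeps any commanded pressure inside the range stated for each channel, so a faulty upstream level cannot request more than ±73 kPa.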

Figure 3. Structural architecture of the soft layer.

Furthermore, the soft augmented layer can be extended to the whole rigid gripper, including all five fingers and the palm. Specifically, the suction modules are placed on the finger pads of the rigid gripper to provide suction force, and the inflation modules are placed on the joints of the rigid gripper to engage contact with the object. Thus, the soft layer not only enhances grasp strength by increasing the contact area but also provides a buffer between the rigid gripper and the grasped object, preventing damage from the hard surface. The 3D printed anthropomorphic rigid–soft gripper combines the advantages of rigid and soft grippers, as well as those of fingered grippers and suction cups. With the improvements in contact, slip resistance, and stiffness provided by the soft layer, the hybrid rigid–soft robotic gripper can handle a variety of objects with different shapes, hardnesses, and textures.

2.3. Haptic Device of Geomagic Touch

As a popular haptic device, the Geomagic Touch (formerly SensAble Phantom Omni) has been widely used as a master device in various robotic teleoperation scenarios. It provides a variety of device information, such as joint angles, positions, velocities, and accelerations, and it can render the interaction force between the slave device and the environment to the operator. With six revolute joints, the Geomagic Touch has 6 DoFs, comprising three joint angles and three gimbal angles. Since it shares a similar configuration with common 6-DoF robots, the Denavit–Hartenberg parameters can be used to build the kinematics model of the haptic device [31].
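To make the Denavit–Hartenberg modelling concrete, the sketch below chains standard DH link transforms into a forward-kinematics function. It is a generic illustration of the standard DH convention, not the Geomagic Touch model: the actual DH table of the Touch is not given here, so the example uses a hypothetical planar two-link arm with 0.1 m links as placeholder parameters.

```python
import numpy as np


def dh_transform(a: float, alpha: float, d: float, theta: float) -> np.ndarray:
    """4x4 link transform in the standard DH convention:
    Rot_z(theta) @ Trans_z(d) @ Trans_x(a) @ Rot_x(alpha)."""
    ct, st = np.cos(theta), np.sin(theta)
    ca, sa = np.cos(alpha), np.sin(alpha)
    return np.array([
        [ct, -st * ca, st * sa, a * ct],
        [st, ct * ca, -ct * sa, a * st],
        [0.0, sa, ca, d],
        [0.0, 0.0, 0.0, 1.0],
    ])


def forward_kinematics(dh_params, joint_angles) -> np.ndarray:
    """Chain link transforms; dh_params holds (a, alpha, d, theta_offset) per joint."""
    if len(dh_params) != len(joint_angles):
        raise ValueError("need one joint angle per DH row")
    T = np.eye(4)
    for (a, alpha, d, offset), q in zip(dh_params, joint_angles):
        T = T @ dh_transform(a, alpha, d, q + offset)
    return T


# Placeholder parameters: a planar two-link arm (0.1 m links), NOT the Touch's table.
planar = [(0.1, 0.0, 0.0, 0.0), (0.1, 0.0, 0.0, 0.0)]
print(np.round(forward_kinematics(planar, [np.pi / 2, 0.0])[:3, 3], 6))
```

With a six-row table for the haptic device and another for the slave arm, the same function would supply the end-effector poses needed by the Cartesian space mapping discussed next.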

Corresponding to Cartesian space and joint space, there are two strategies for mapping between the workspaces of the haptic device and the slave robot. The first is Cartesian space mapping. Built on the kinematics models of the master and slave devices, it is a universal mapping strategy for various devices and is especially useful in heterogeneous settings where the master and slave devices have different DoFs. However, it is not always possible to establish a precise coordinate transformation between the master and slave devices, and the kinematics model of the slave device is unavailable in some circumstances. The second is joint space mapping. Established on the direct mapping between corresponding joints of the master and slave devices, it is more intuitive than Cartesian space mapping. However, it suffers from a serious DoF-mismatch problem between heterogeneous devices with different DoFs.

2.4. Leap Motion Controller

The Leap Motion Controller is a small USB peripheral device designed for hand and finger motion tracking. With two monochromatic IR cameras and three infrared LEDs, it provides a natural user interface. It generates various hand and finger tracking information, such as the palm position, velocity, normal, direction, and grab strength, as well as the fingertip position, velocity, direction, length, width, and finger extended state. Owing to its small observation area and high resolution, the Leap Motion Controller has been widely used as a human–machine interface for robot control [17], sign-language recognition [32], and text segmentation [33].

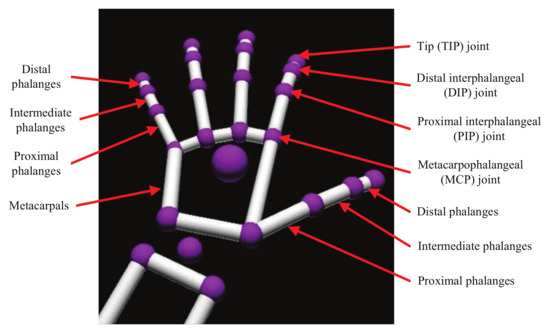

As illustrated in Figure 4, most of the common bones and joints of the human hand are captured by the Leap Motion Controller, and they correspond well to those of the 3D printed anthropomorphic hybrid gripper discussed in Section 2.2.

Figure 4. Illustration of the human hand in Leap Motion Controller.

3. Methods

In this section, the detailed methodology of the developed teleoperation system is presented, which contains the data acquisition from the master devices, the incremental-based robotic arm teleoperation, and the gesture-based robotic gripper teleoperation.

3.1. Data Acquisition

Because the two subsystems are independent of each other, data are acquired separately for each: the two arms are controlled by the Geomagic Touch devices, while the two grippers are teleoperated by the human hands. For the robotic arm subsystem, the six joint values of each Geomagic Touch are collected and then mapped to the six joints of the corresponding UR10. For the robotic gripper subsystem, human hand gestures are captured by the Leap Motion Controller. Both hands were used in this experiment, each responsible for controlling one gripper. Detailed hand gesture information, including the hand type (left or right), palm position, tip positions, and finger lengths, was collected for subsequent analysis.

3.2. Incremental-Based Robotic Arm Teleoperation

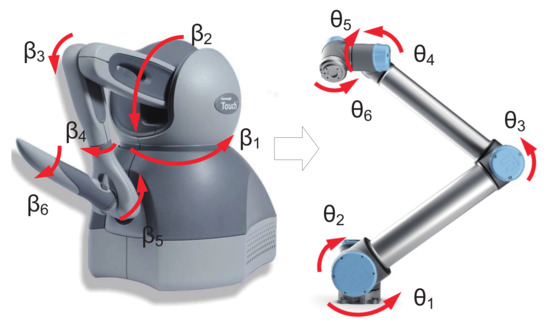

In view of the unstructured environment and the large workspace of the telemanipulation, a joint space mapping strategy is adopted for robotic arm teleoperation. As shown in Figure 5, the Geomagic Touch has the same number of DoFs (six) as the robotic arm. For simplicity, an intuitive joint-to-joint mapping is established between corresponding joints of the Geomagic Touch and the UR10. Specifically, the scaled incremental joint value of the Geomagic Touch, $\Delta\theta_i^{m}(t)$, is used as the control input, defined as:

$$\Delta\theta_i^{m}(t) = k_i\left[\theta_i^{m}(t) - \theta_i^{m}(t-1)\right]$$

where $t$ is the sample time step, $\theta_i^{m}(t)$ is the $i$th joint value of the Geomagic Touch at time step $t$, and $k_i$ is a scaling coefficient for the $i$th joint. Based on the experiments, $k_i$ was set to 0.3, which gave the best performance.

Figure 5. The mapping strategy in robotic arm teleoperation.

The front button on the Geomagic Touch serves as the switch of the robotic arm teleoperation system. While the button is pressed, the scaled incremental joint values are calculated and sent to the corresponding joints of the UR10:

$$\theta_i^{s}(t) = \theta_i^{s}(t-1) + \Delta\theta_i^{m}(t)$$

where $\theta_i^{s}(t)$ is the $i$th joint value of the UR10 at time step $t$.

Accordingly, $\Delta\theta_i^{m}(t)$ is set to zero when the button is released, and the slave robot stops. Thus, the user can easily start or stop the teleoperation of the dual arms as the manipulation task requires.
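The incremental mapping and the button switch described above can be sketched as a small stateful mapper per arm. This is an illustrative sketch, not the authors' MATLAB/Simulink implementation; the class name `IncrementalJointMapper` is hypothetical. One design choice is an assumption of this sketch: on release it forgets the last master sample, so the first sample after a new press produces a zero increment and the stylus can be repositioned while released without moving the robot.

```python
from typing import List, Optional, Sequence


class IncrementalJointMapper:
    """Joint-space incremental master-to-slave mapping with a clutch button.

    While pressed:  dq_m(t) = k_i * (q_m(t) - q_m(t-1)),  q_s(t) = q_s(t-1) + dq_m(t).
    While released: dq_m(t) = 0, so the slave holds its current joint values.
    """

    def __init__(self, slave_start: Sequence[float], gains: Sequence[float]):
        if len(slave_start) != len(gains):
            raise ValueError("one gain per joint is required")
        self.q_slave: List[float] = list(slave_start)
        self.gains: List[float] = list(gains)
        self._q_master_prev: Optional[List[float]] = None

    def step(self, q_master: Sequence[float], button_pressed: bool) -> List[float]:
        if len(q_master) != len(self.gains):
            raise ValueError("master sample has the wrong number of joints")
        if not button_pressed:
            # Released: zero increment; drop the last sample so the next press
            # starts fresh (assumption of this sketch, see lead-in).
            self._q_master_prev = None
            return list(self.q_slave)
        if self._q_master_prev is not None:
            for i, (k, q, q_prev) in enumerate(zip(self.gains, q_master, self._q_master_prev)):
                self.q_slave[i] += k * (q - q_prev)  # scaled incremental update
        self._q_master_prev = list(q_master)
        return list(self.q_slave)


# Six joints, k_i = 0.3 for all joints as in the text.
mapper = IncrementalJointMapper(slave_start=[0.0] * 6, gains=[0.3] * 6)
mapper.step([0.0] * 6, button_pressed=True)            # first sample: no motion
q = mapper.step([0.1, 0, 0, 0, 0, 0], button_pressed=True)  # joint 1 moves by about 0.03 rad
```

Because only increments are transmitted, the slave never jumps to the absolute master pose, which suits the large slave workspace compared with the small workspace of the haptic device.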

3.3. Gesture-Based Robotic Gripper Teleoperation

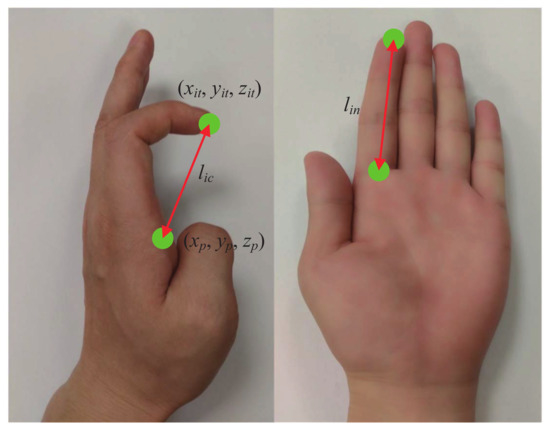

Based on the hand information acquired from the Leap Motion Controller, the attributes of hand type, palm position, finger type, finger length, and tip position are used in robotic gripper teleoperation. Instead of the binary (0 or 1) finger extended attribute, a relative ratio index (RRI, $r_i$) is proposed as the measure for grasping modulation of the gripper, defined as:

$$r_i = \frac{l_i^{c}}{l_i^{n}}$$

where $l_i^{n}$ is the nominal length of the $i$th finger, acquired from the Leap Motion Controller, and $l_i^{c}$ is the calculated length of the $i$th finger:

$$l_i^{c} = \left\lVert \mathbf{p}_i^{tip} - \mathbf{p}^{palm} \right\rVert$$

where $\mathbf{p}^{palm}$ is the palm position of the hand and $\mathbf{p}_i^{tip}$ is the tip position of the $i$th finger (Figure 6).

Figure 6. The hand parameters in robotic gripper teleoperation.

The defined RRI can be further transformed into binary values of 0 or 1 for discrete control, like the finger extended attribute. Alternatively, it can be normalized to the range [0, 1] to act as a grasping modulation index. Unlike the grab strength of the whole hand, which provides a single value for all five fingers, each finger has its own RRI, which allows each finger to modulate its grasping state individually.
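The RRI computation and its binary and normalized forms can be sketched from the palm and fingertip positions reported by the tracker. This is a minimal sketch under stated assumptions: it takes the ratio as $r_i = l_i^{c}/l_i^{n}$ with $l_i^{c}$ the Euclidean palm-to-tip distance, and the binarization threshold (1.0) and the calibration values `r_closed`/`r_open` are hypothetical. It does not call the Leap SDK; positions are plain 3D tuples.

```python
import math
from typing import Dict, Sequence

FINGERS = ("thumb", "index", "middle", "ring", "pinky")
Vec3 = Sequence[float]


def finger_rri(palm: Vec3, tip: Vec3, nominal_length: float) -> float:
    """RRI of one finger: palm-to-tip distance divided by nominal finger length."""
    if nominal_length <= 0:
        raise ValueError("nominal finger length must be positive")
    return math.dist(palm, tip) / nominal_length


def binarize(rri: float, threshold: float = 1.0) -> int:
    """1 = closed (tip pulled toward the palm), 0 = open. Threshold is hypothetical."""
    return 1 if rri < threshold else 0


def normalize(rri: float, r_closed: float, r_open: float) -> float:
    """Linear map to [0, 1] with 1 = fully closed; r_closed/r_open are calibration values."""
    x = (r_open - rri) / (r_open - r_closed)
    return min(max(x, 0.0), 1.0)


def hand_command(palm: Vec3, tips: Dict[str, Vec3], lengths: Dict[str, float],
                 threshold: float = 1.0) -> Dict[str, int]:
    """Per-finger binary open/closed command for one tracked hand."""
    return {f: binarize(finger_rri(palm, tips[f], lengths[f]), threshold) for f in FINGERS}


# Example (mm): index finger folded toward the palm, the other fingers extended.
tips = {f: (0.0, 0.0, 90.0) for f in FINGERS}
tips["index"] = (0.0, 30.0, 40.0)            # 50 mm from the palm
lengths = {f: 80.0 for f in FINGERS}
print(hand_command((0.0, 0.0, 0.0), tips, lengths))
```

Keeping one value per finger is what lets each gripper finger follow its own human finger, rather than closing all fingers together as a single grab-strength value would.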

During gripper teleoperation, each human hand is in charge of one robotic gripper. The type of each hand (left or right) is automatically identified by the Leap Motion Controller. The two robotic grippers are independent of each other and can be controlled simultaneously by the two hands. Initially, each gripper is set to the open state. When the gripper teleoperation subsystem runs, the gripper moves to the target state given by the control command and then holds that state until a new command arrives.

Moreover, position control and torque control strategies are explored in robotic gripper teleoperation, and both are combined with the gesture-based control mode. Compared with position control, torque control is more flexible and robust: it can automatically regulate grasping tightness according to the object’s shape, size, and hardness. An initial torque value is predefined and then adjusted as the RRI value changes. Hence, the human operator can easily control grasping tightness with hand gestures.

In the experiments, for the sake of simplicity, the RRI value was transformed into the binary values of 0 or 1 in both the position control and torque control modes. With this assumption, the RRI value acts as an index to control the state (open or closed) of the corresponding finger of the gripper. The binary values of 0 and 1 of the RRI are mapped to the open and closed states, respectively.
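The open/closed mapping and the hold-last-state behaviour described above can be sketched as a per-gripper command holder. This is an illustrative sketch: `GripperCommandHolder`, `OPEN_TICKS`, and `CLOSED_TICKS` are hypothetical names, the goal positions are placeholder servo ticks, and how the thumb's two servos and the palm servo are driven is not modelled here. No Dynamixel SDK calls are made; the output is a per-finger goal that a motor driver would consume.

```python
from typing import Dict, Optional

FINGERS = ("thumb", "index", "middle", "ring", "pinky")

# Hypothetical calibration (raw servo ticks); the real open/closed goal positions
# depend on the cable routing of each finger and are not given in the text.
OPEN_TICKS: Dict[str, int] = {f: 1024 for f in FINGERS}
CLOSED_TICKS: Dict[str, int] = {f: 3072 for f in FINGERS}


class GripperCommandHolder:
    """Keeps the latest per-finger state (0 = open, 1 = closed) for one gripper."""

    def __init__(self) -> None:
        self.states: Dict[str, int] = {f: 0 for f in FINGERS}  # start fully open

    def update(self, command: Optional[Dict[str, int]] = None) -> Dict[str, int]:
        """Apply a new command if one arrived, otherwise hold the last state."""
        if command is not None:
            for finger, state in command.items():
                if finger not in self.states:
                    raise KeyError(f"unknown finger: {finger}")
                if state not in (0, 1):
                    raise ValueError("binary RRI state must be 0 or 1")
                self.states[finger] = state
        return self.goal_positions()

    def goal_positions(self) -> Dict[str, int]:
        """Binary RRI 0 maps to the open goal, 1 to the closed goal."""
        return {f: CLOSED_TICKS[f] if s == 1 else OPEN_TICKS[f] for f, s in self.states.items()}


# One holder per gripper; the hand type reported by the tracker selects which one.
grippers = {"left": GripperCommandHolder(), "right": GripperCommandHolder()}
```

In torque control mode, the same binary state would instead select between a released torque and the predefined grasping torque, letting the servo stop wherever the object resists.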

4. Experiments and Results

Corresponding to the architecture of the proposed teleoperation system, three experiments were designed to evaluate the effectiveness of the proposed method, namely robotic arm teleoperation, robotic gripper teleoperation, and the integrated teleoperation for bimanual tasks.

4.1. Robotic Arm Teleoperation Execution

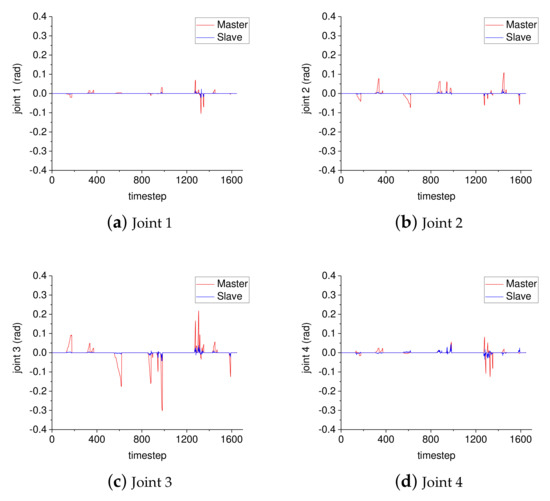

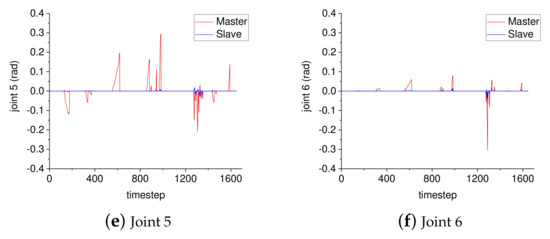

In this experiment, two Geomagic Touch devices were used as the master devices, each responsible for controlling one UR10. To illustrate the tracking performance of the proposed incremental-based method for robotic arm teleoperation, the corresponding joint values of the master and slave devices were recorded for comparison. The experiments were implemented on a PC with the MATLAB/Simulink development environment, using the OpenHaptics toolkit API as the software development kit for the Geomagic Touch. As the two robotic arm teleoperation subsystems share the same configuration, only the joint values of one Geomagic Touch and the corresponding slave robot are shown, in Figure 7.

Figure 7. Joint value tracking in robotic arm teleoperation.

In Figure 7, the slave and master joint values are the incremental joint values of the robotic arm, $\Delta\theta_i^{s}(t) = \theta_i^{s}(t) - \theta_i^{s}(t-1)$, and the corresponding scaled incremental joint values of the Geomagic Touch, $\Delta\theta_i^{m}(t)$, respectively. To evaluate the tracking performance of the slave device, the root-mean-square error (RMSE) between corresponding joint values was used:

$$\mathrm{RMSE}_i = \sqrt{\frac{1}{n}\sum_{t=1}^{n}\left[\Delta\theta_i^{s}(t) - \Delta\theta_i^{m}(t)\right]^2}$$

where $n$ is the sample size (number of sample time steps) and $i$ denotes the $i$th joint ($i = 1, \ldots, 6$).

As shown in Figure 7, the RMSEs were 0.0071, 0.0149, 0.0348, 0.0115, 0.0389, and 0.0154 rad for Joints 1–6, respectively, with an average of 0.0204 rad over the six joints. These small errors indicate that the proposed incremental-based robotic arm control strategy properly performs the joint space mapping between the master device and the slave robot during teleoperation.
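The per-joint RMSE and the six-joint average reported above can be checked with a short sketch. The `rmse` function applies the definition to two equal-length sequences of slave and master joint increments; the recorded trajectories themselves are not available here, so the example only reproduces the average from the reported per-joint values.

```python
import math
from typing import Sequence


def rmse(slave_increments: Sequence[float], master_increments: Sequence[float]) -> float:
    """Root-mean-square error between two equally long increment sequences."""
    n = len(slave_increments)
    if n == 0 or n != len(master_increments):
        raise ValueError("sequences must be non-empty and of equal length")
    return math.sqrt(sum((s - m) ** 2 for s, m in zip(slave_increments, master_increments)) / n)


# Per-joint RMSEs reported in the text for Joints 1-6 (rad).
reported = [0.0071, 0.0149, 0.0348, 0.0115, 0.0389, 0.0154]
average = sum(reported) / len(reported)
print(f"average RMSE = {average:.4f} rad")  # average RMSE = 0.0204 rad
```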

4.2. Robotic Gripper Teleoperation Execution

The two anthropomorphic grippers share similar specifications and differ only in size and minor structural connections. In this experiment, the smaller gripper was first used to demonstrate teleoperation with sign language, and the bigger gripper was then used to test teleoperation for grasping tasks. Both experiments were implemented on a PC with a Python development environment. The ROBOTIS Dynamixel SDK and LeapSDK were used as the software development kits for the Dynamixel MX-64R motors and the Leap Motion Controller, respectively.

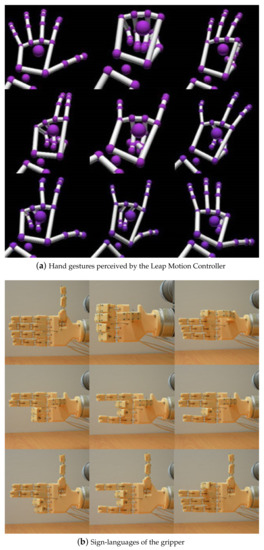

First, a wide range of common hand gestures was tested in teleoperation of the smaller gripper through sign-language demonstrations, using the position control strategy for the motors. The human hand gestures and the corresponding sign-language gestures of the robotic gripper are shown in Figure 8.

Figure 8. Sign-language gestures of the smaller gripper with human hand gestures and Leap Motion Controller.

Figure 8 shows that the sign-language gestures of the gripper properly match the corresponding human hand gestures. This suggests that the Leap Motion Controller, as a somatosensory controller, can serve as a markerless and contactless human–robot interface.

Then, the bigger gripper was used to test teleoperation for grasping tasks, with the torque control strategy used for motor control. Many common everyday objects with various shapes, hardnesses, sizes, and textures were used in this experiment, ranging from a rigid bottle, a fresh pepper, and a slender fan to a deformable balloon and a fragile egg. The grasping tests are illustrated in Figure 9, the characteristics of the grasped objects are listed in Table 1, and the RRI values of the fingers during teleoperation are given in Table 2.

Figure 9. Teleoperation of the bigger gripper for grasping tasks with human hand gestures and Leap Motion Controller.

Table 1. Characteristics of the objects used in the gripper teleoperation experiment.

Table 2. RRI values of the five fingers during the teleoperation experiment.

The bigger gripper could be teleoperated to grasp a broad range of common objects. Different objects call for different grasping modes; according to the characteristics of each object, the operator can adjust his or her hand gestures to adopt a suitable grasping mode, which is then mapped onto the corresponding gripper. With the torque control mode of the Dynamixel motors, the gripper can grasp fragile and deformable objects. The grasping tightness and target position are adjusted automatically as the object deforms, which makes grasping more flexible and robust across objects with different weights, shapes, sizes, hardnesses, and textures. As shown in Figure 9 and Table 1, the robotic gripper can grasp objects ranging from a tiny spoon to a big toy ball, from a fragile egg to a metal bottle, and from a light balloon to a heavy bottle of drinking water. Even bigger objects can be handled thanks to the modular and reconfigurable fingers, which adapt to different object sizes. As shown in Figure 9 and Table 2, the RRI values of the five fingers match the finger states (open or closed) of the gripper during the teleoperation experiment.

4.3. Bimanual Tasks with Teleoperation Execution

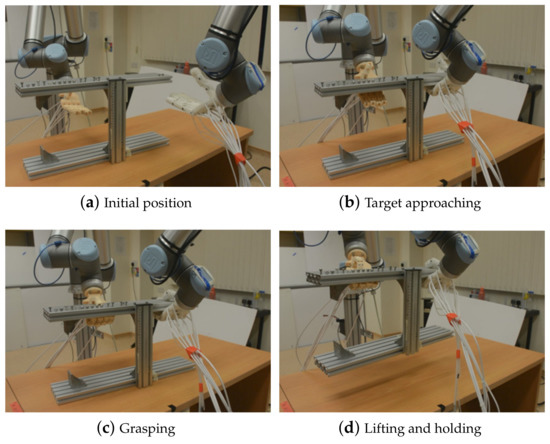

Building on the individual teleoperation experiments with the robotic arms and the grippers, we conducted an integrated teleoperation experiment with dual arms and dual grippers to test the performance of the whole system on bimanual tasks. The bimanual task was designed as a common action sequence of target approaching, grasping, lifting, and holding, performed by the dual arms and dual grippers. A 5.2 kg aluminum frame served as the target object. The teleoperation-based bimanual experiment is shown in Figure 10.
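The task can be viewed as a fixed phase sequence. As a purely illustrative sketch, the code below shows one way a supervisor could log the phase and advance only after both the left and right sides have completed it. The paper's system is directly teleoperated and does not describe such a component, so the class names, the side labels, and the "both sides done" rule are all assumptions.

```python
from enum import Enum


class Phase(Enum):
    APPROACH = 0
    GRASP = 1
    LIFT = 2
    HOLD = 3


ORDER = [Phase.APPROACH, Phase.GRASP, Phase.LIFT, Phase.HOLD]


class BimanualPhaseTracker:
    """Hypothetical supervisor: the shared phase advances only after BOTH
    arm-gripper pairs report the current phase as complete."""

    def __init__(self) -> None:
        self.index = 0
        self.done = {"left": False, "right": False}

    @property
    def phase(self) -> Phase:
        return ORDER[self.index]

    def report_done(self, side: str) -> Phase:
        if side not in self.done:
            raise ValueError(f"unknown side: {side}")
        self.done[side] = True
        # Advance only when both sides agree; HOLD is the terminal phase.
        if all(self.done.values()) and self.index < len(ORDER) - 1:
            self.index += 1
            self.done = {"left": False, "right": False}
        return self.phase
```

Requiring both sides to finish before advancing reflects the cooperative nature of the task. Lifting with only one gripper closed would load that gripper alone and defeat the purpose of sharing the weight.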

Figure 10.

Execution of the teleoperation-based bimanual tasks for the manipulation of an aluminum frame.

The frame measured 600 mm × 312 mm × 120 mm. Lifting and holding such a large, heavy frame is very challenging with the conventional configuration of one arm and one gripper, especially in an unstructured teleoperated environment. The frame is too large for a single gripper to hold, it is heavy, and its shape is asymmetrical, so slipping is likely during lifting and holding.

However, as shown in Figure 10 and the supplementary video, the integrated teleoperation system with dual arms and dual grippers effectively handled this large, heavy frame through the full sequence of target approaching, grasping, lifting, and holding. During the manipulation, the two grippers cooperated and shared the weight of the frame, so slipping and dropping were avoided, and balance was much easier to maintain. For such assistive bimanual tasks, two arms and two grippers are therefore better than one.
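The benefit of sharing the weight can be made concrete with rigid-body statics. The sketch below assumes two vertical support points at the ends of the 600 mm frame, a friction-only two-sided pinch at each gripper, a hypothetical friction coefficient μ = 0.5, and a hypothetical 50 mm center-of-mass offset to mimic the asymmetric shape. None of these values were measured in the experiment.

```python
G = 9.81  # gravitational acceleration (m/s^2)


def support_forces(mass_kg: float, x_left: float, x_right: float, x_com: float):
    """Vertical forces at two grasp points from static force and moment
    balance of a rigid body (grasp points and CoM on one horizontal axis)."""
    if not x_left < x_right:
        raise ValueError("x_left must be less than x_right")
    weight = mass_kg * G
    # Moment balance about the left grasp point gives the right-hand force.
    f_right = weight * (x_com - x_left) / (x_right - x_left)
    f_left = weight - f_right
    return f_left, f_right


def min_grip_force(load_n: float, mu: float) -> float:
    """Minimum normal force for a two-sided pinch (two friction contacts)
    to hold a vertical load without slipping: 2 * mu * N >= load."""
    return load_n / (2.0 * mu)


if __name__ == "__main__":
    mu = 0.5  # hypothetical gripper-aluminum friction coefficient
    print(f"single gripper: min grip {min_grip_force(5.2 * G, mu):.1f} N")
    for x_com in (0.30, 0.35):  # symmetric vs. hypothetical 50 mm offset
        f_l, f_r = support_forces(5.2, 0.0, 0.6, x_com)
        print(f"CoM at {x_com:.2f} m: left {f_l:.1f} N, right {f_r:.1f} N, "
              f"min grip {min_grip_force(max(f_l, f_r), mu):.1f} N")
```

Under these assumptions, a single gripper would need about 51 N of grip force to carry the whole 5.2 kg frame. With two grippers, each needs about 25.5 N in the symmetric case, and the more heavily loaded one needs about 30 N when the center of mass is offset.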

5. Discussion

With its dual-arm, dual-gripper configuration, the proposed bimanual robotic teleoperation system shows strong potential for challenging manipulation tasks. These range from single-hand grasping of objects with different weights, shapes, sizes, and hardnesses to bimanual collaborative tasks such as target approaching, grasping, lifting, and holding. Compared with a traditional teleoperation system with one arm and one gripper, the bimanual configuration enables the robotic system to perform more complex manipulation tasks, such as picking and placing heavy objects, grasping, passing, holding, and assembling. Following a bio-inspired design philosophy, the dual-arm, dual-gripper configuration closely resembles the human arrangement of two arms and two hands. A two-handed configuration is generally better suited than a one-handed system to collaborative tasks, and two-handed manipulation is also more common in daily life and industrial applications.

With the haptic devices and the joint-space mapping strategy, the human operator can manually control the robotic arms based on their own visual observation. However, the current setup is limited to motion control of the robotic arms, and no force information is fed back to the operator. In addition, the current system operates in open loop, and time delay and dynamic uncertainties have not yet been taken into account. Feedback control is indispensable for further improving the performance of the teleoperation system. Multimodal perception, including visual information and tactile sensing, could be incorporated into the teleoperation system to enhance the bilateral interaction among the human operator, the robot, and the environment. Further scenarios and multimodal control strategies could also be established, ranging from supervised autonomy to full teleoperation.
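The exact gains and offsets of the joint-space mapping are not given in this section. As a hedged sketch, the code below assumes a per-joint linear map from the haptic device's joint angles to the robot arm's joint commands, q_s = q_s0 + k(q_m − q_m0), clipped to joint limits. The reference poses, gains, and limits are hypothetical. The `rmse` helper shows the standard root-mean-square error over a joint trajectory, the metric behind the reported 0.0204 rad tracking result. Because the map outputs only position commands and receives nothing back from the slave side, it also illustrates the open-loop, force-free nature of the current setup.

```python
import math
from typing import List, Sequence, Tuple


def map_joints(q_master: Sequence[float],
               q_master_ref: Sequence[float],
               q_slave_ref: Sequence[float],
               gains: Sequence[float],
               limits: Sequence[Tuple[float, float]]) -> List[float]:
    """Hypothetical joint-space mapping: q_s = q_s0 + k * (q_m - q_m0),
    clipped to each slave joint's (lower, upper) limit in radians."""
    n = len(q_master)
    if not all(len(v) == n for v in (q_master_ref, q_slave_ref, gains, limits)):
        raise ValueError("all inputs must have one entry per joint")
    q_slave = []
    for qm, qm0, qs0, k, (lo, hi) in zip(q_master, q_master_ref,
                                         q_slave_ref, gains, limits):
        q = qs0 + k * (qm - qm0)
        q_slave.append(min(max(q, lo), hi))
    return q_slave


def rmse(reference: Sequence[float], measured: Sequence[float]) -> float:
    """Root-mean-square error between commanded and measured joint angles."""
    if len(reference) != len(measured) or not reference:
        raise ValueError("sequences must be non-empty and of equal length")
    sq = sum((r - m) ** 2 for r, m in zip(reference, measured))
    return math.sqrt(sq / len(reference))
```

Clipping at the limits is a simple safety choice. A bilateral controller would additionally return measured slave states or forces to the master, which this open-loop sketch does not do.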

The Leap Motion Controller provides a markerless, natural user interface for tracking hand motion during teleoperation of the robotic grippers. Compared with wearable devices, it offers the user a more natural and comfortable way to interact with the slave devices. Because the human hand and the anthropomorphic hybrid robotic gripper have similar configurations, the gesture-based control strategy lets the operator control the gripper intuitively and easily. However, we observed that hand-tracking accuracy drops significantly when the hand moves far from the Leap Motion Controller or far to its left or right, especially in dynamic settings. Self-occlusion is another problem: when a finger is hidden behind the palm, or when the palm is turned sideways, some fingers are overlapped by others and cannot be tracked reliably. To overcome these shortcomings, additional sensors and corresponding sensor fusion techniques should be considered to improve the tracking performance of the Leap Motion Controller.
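One candidate approach is the Kalman-filter fusion of the Leap Motion Controller with flex sensors reported in [10]. The sketch below is not that paper's formulation. It is a minimal scalar Kalman filter for a single finger flexion angle, assuming a random-walk motion model and hypothetical noise variances. The Leap Motion reading is skipped when the finger is occluded, and its variance `r_leap` could be raised as the hand approaches the edge of the tracking volume.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ScalarKalman:
    """1-D Kalman filter for one finger flexion angle (random-walk model)."""
    x: float  # current angle estimate (rad)
    p: float  # estimate variance (rad^2)
    q: float  # process-noise variance added per time step (rad^2)

    def predict(self) -> None:
        # Random-walk model: the mean is unchanged, uncertainty grows by q.
        self.p += self.q

    def update(self, z: float, r: float) -> None:
        # Standard scalar measurement update with measurement variance r.
        k = self.p / (self.p + r)
        self.x += k * (z - self.x)
        self.p *= 1.0 - k


def fuse_step(kf: ScalarKalman, flex_z: float, r_flex: float,
              leap_z: Optional[float], r_leap: float) -> float:
    """One fusion step: always use the (hypothetical) flex-sensor reading and
    use the Leap Motion reading only when the finger is visible."""
    kf.predict()
    kf.update(flex_z, r_flex)
    if leap_z is not None:  # None models an occluded finger
        kf.update(leap_z, r_leap)
    return kf.x
```

Applying the two measurement updates one after the other is equivalent to a joint update when the sensors' noises are independent. Dropping or down-weighting the optical measurement is therefore a simple way to ride through occlusions on the wearable sensor alone.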

6. Conclusions

In this paper, a bimanual robotic teleoperation architecture with dual arms and dual anthropomorphic hybrid grippers is proposed for manipulation tasks in unstructured environments. Two 3D printed five-fingered anthropomorphic hybrid robotic grippers and two UR10 robotic arms are set up at the slave side, while two Geomagic Touch haptic devices and a Leap Motion Controller are used at the master side. With soft-layer augmentations, the hybrid rigid–soft gripper can handle objects of different sizes, weights, shapes, and hardnesses. These range from a tiny plastic spoon, a fragile egg, a deformable balloon, and an irregular pineapple to a big toy ball, a metal cup, and a bottle of drinking water. A dedicated mapping strategy between the robotic gripper and the human hand is developed using the Leap Motion Controller. Multiple experiments, including sign-language demonstrations and gesture-based grasping tasks, were conducted with the developed teleoperation system. The experimental results validate the applicability of the bimanual teleoperation system to common manipulation tasks such as target approaching, object grasping, and bimanual manipulation.

In future work, time delay and dynamic uncertainty during teleoperation will be investigated to develop a more robust system for complex bimanual tasks in confined environments. Wearable devices, such as data gloves and the Myo armband, will be considered in combination with the Leap Motion Controller to develop new types of human–robot interfaces. More challenging bimanual manipulation tasks should also be introduced to evaluate the proposed teleoperation system.

Supplementary Materials

Supplementary File 1

Author Contributions

Conceptualization, G.Z. and H.R.; methodology, G.Z.; software, G.Z.; validation, G.Z., X.X., C.L., G.P., and A.V.P.; formal analysis, G.Z.; investigation, G.Z. and X.X.; resources, H.R.; data curation, G.Z. and X.X.; Writing—Original draft preparation, G.Z.; Writing—Review and editing, G.Z., J.M., and H.R.; visualization, G.Z. and X.X.; supervision, H.R.; project administration, H.R.; and funding acquisition, J.M. and H.R. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Office of Naval Research Global under Grant ONRG-NICOP-N62909-15-1-2019 awarded to Hongliang Ren and the National Natural Science Foundation of China (No. 51605302) awarded to Jin Ma.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Vertut, J. Teleoperation and Robotics: Applications and Technology; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2013; Volume 3.

- Guo, J.; Liu, C.; Poignet, P. A Scaled Bilateral Teleoperation System for Robotic-Assisted Surgery with Time Delay. J. Intell. Robot. Syst. 2019, 95, 165–192.

- Artigas, J.; Balachandran, R.; Riecke, C.; Stelzer, M.; Weber, B.; Ryu, J.H.; Albu-Schaeffer, A. Kontur-2: Force-feedback teleoperation from the international space station. In Proceedings of the 2016 IEEE International Conference on Robotics and Automation (ICRA), Stockholm, Sweden, 16–21 May 2016; pp. 1166–1173.

- Nagatani, K.; Kiribayashi, S.; Okada, Y.; Otake, K.; Yoshida, K.; Tadokoro, S.; Nishimura, T.; Yoshida, T.; Koyanagi, E.; Fukushima, M.; et al. Emergency response to the nuclear accident at the Fukushima Daiichi Nuclear Power Plants using mobile rescue robots. J. Field Robot. 2013, 30, 44–63.

- Katyal, K.D.; Brown, C.Y.; Hechtman, S.A.; Para, M.P.; McGee, T.G.; Wolfe, K.C.; Murphy, R.J.; Kutzer, M.D.; Tunstel, E.W.; McLoughlin, M.P.; et al. Approaches to robotic teleoperation in a disaster scenario: From supervised autonomy to direct control. In Proceedings of the 2014 IEEE/RSJ International Conference on Intelligent Robots and Systems, Chicago, IL, USA, 14–18 September 2014; pp. 1874–1881.

- Barbieri, L.; Bruno, F.; Gallo, A.; Muzzupappa, M.; Russo, M.L. Design, prototyping and testing of a modular small-sized underwater robotic arm controlled through a Master-Slave approach. Ocean Eng. 2018, 158, 253–262.

- Bolopion, A.; Régnier, S. A review of haptic feedback teleoperation systems for micromanipulation and microassembly. IEEE Trans. Autom. Sci. Eng. 2013, 10, 496–502.

- Yang, C.; Wang, X.; Li, Z.; Li, Y.; Su, C.Y. Teleoperation control based on combination of wave variable and neural networks. IEEE Trans. Syst. Man Cybern. Syst. 2017, 47, 2125–2136.

- Buss, M.; Lee, K.K.; Nitzsche, N.; Peer, A.; Stanczyk, B.; Unterhinninghofen, U. Advanced telerobotics: Dual-handed and mobile remote manipulation. In Advances in Telerobotics; Springer: Berlin, Germany, 2007; pp. 471–497.

- Ponraj, G.; Ren, H. Sensor Fusion of Leap Motion Controller and Flex Sensors Using Kalman Filter for Human Finger Tracking. IEEE Sens. J. 2018, 18, 2042–2049.

- Low, J.H.; Lee, W.W.; Khin, P.M.; Thakor, N.V.; Kukreja, S.L.; Ren, H.L.; Yeow, C.H. Hybrid tele-manipulation system using a sensorized 3-D-printed soft robotic gripper and a soft fabric-based haptic glove. IEEE Robot. Autom. Lett. 2017, 2, 880–887.

- Sun, D.; Liao, Q.; Gu, X.; Li, C.; Ren, H. Multilateral Teleoperation With New Cooperative Structure Based on Reconfigurable Robots and Type-2 Fuzzy Logic. IEEE Trans. Cybern. 2018, 49, 284–2859.

- Zubrycki, I.; Granosik, G. Using integrated vision systems: Three gears and leap motion, to control a 3-finger dexterous gripper. In Recent Advances in Automation, Robotics and Measuring Techniques; Springer: Berlin, Germany, 2014; pp. 553–564.

- Rebelo, J.; Sednaoui, T.; den Exter, E.B.; Krueger, T.; Schiele, A. Bilateral robot teleoperation: A wearable arm exoskeleton featuring an intuitive user interface. IEEE Robot. Autom. Mag. 2014, 21, 62–69.

- Xu, Y.; Yang, C.; Zhong, J.; Wang, N.; Zhao, L. Robot teaching by teleoperation based on visual interaction and extreme learning machine. Neurocomputing 2018, 275, 2093–2103.

- Yang, C.; Wang, X.; Cheng, L.; Ma, H. Neural-learning-based telerobot control with guaranteed performance. IEEE Trans. Cybern. 2017, 47, 3148–3159.

- Razjigaev, A.; Crawford, R.; Roberts, J.; Wu, L. Teleoperation of a concentric tube robot through hand gesture visual tracking. In Proceedings of the 2017 IEEE International Conference on Robotics and Biomimetics (ROBIO), Macau, China, 5–8 December 2017; pp. 1175–1180.

- Milstein, A.; Ganel, T.; Berman, S.; Nisky, I. Human-centered transparency of grasping via a robot-assisted minimally invasive surgery system. IEEE Trans. Hum.-Mach. Syst. 2018, 48, 349–358.

- Bimbo, J.; Pacchierotti, C.; Aggravi, M.; Tsagarakis, N.; Prattichizzo, D. Teleoperation in cluttered environments using wearable haptic feedback. In Proceedings of the 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Vancouver, BC, Canada, 24–28 September 2017; pp. 3401–3408.

- Edsinger, A.; Kemp, C.C. Two arms are better than one: A behavior based control system for assistive bimanual manipulation. In Recent Progress in Robotics: Viable Robotic Service to Human; Springer: Berlin, Germany, 2007; pp. 345–355.

- Makris, S.; Tsarouchi, P.; Surdilovic, D.; Krüger, J. Intuitive dual arm robot programming for assembly operations. CIRP Ann. 2014, 63, 13–16.

- Du, G.; Zhang, P. Markerless human–robot interface for dual robot manipulators using Kinect sensor. Robot. Comput. Integr. Manuf. 2014, 30, 150–159.

- Burgner, J.; Swaney, P.J.; Rucker, D.C.; Gilbert, H.B.; Nill, S.T.; Russell, P.T.; Weaver, K.D.; Webster, R.J. A bimanual teleoperated system for endonasal skull base surgery. In Proceedings of the 2011 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), San Francisco, CA, USA, 25–30 September 2011; pp. 2517–2523.

- Du, G.; Zhang, P.; Liu, X. Markerless human–manipulator interface using leap motion with interval Kalman filter and improved particle filter. IEEE Trans. Ind. Inform. 2016, 12, 694–704.

- Meli, L.; Pacchierotti, C.; Prattichizzo, D. Sensory subtraction in robot-assisted surgery: Fingertip skin deformation feedback to ensure safety and improve transparency in bimanual haptic interaction. IEEE Trans. Biomed. Eng. 2014, 61, 1318–1327.

- Makris, S.; Tsarouchi, P.; Matthaiakis, A.S.; Athanasatos, A.; Chatzigeorgiou, X.; Stefos, M.; Giavridis, K.; Aivaliotis, S. Dual arm robot in cooperation with humans for flexible assembly. CIRP Ann. 2017, 66, 13–16.

- Tunstel, E.; Wolfe, K.; Kutzer, M.; Johannes, M.; Brown, C.; Katyal, K.; Para, M.; Zeher, M. Recent enhancements to mobile bimanual robotic teleoperation with insight toward improving operator control. Johns Hopkins APL Tech. Dig. 2013, 32, 584–594.

- Tsarouchi, P.; Makris, S.; Chryssolouris, G. Human–robot interaction review and challenges on task planning and programming. Int. J. Comput. Integr. Manuf. 2016, 29, 916–931.

- Ponraj Joseph Vedhagiri, G.; Prituja, A.V.; Li, C.; Zhu, G.; Thakor, N.V.; Ren, H. Pinch Grasp and Suction for Delicate Object Manipulations Using Modular Anthropomorphic Robotic Gripper with Soft Layer Enhancements. Robotics 2019, 8, 67.

- Li, C.; Gu, X.; Xiao, X.; Zhu, G.; Prituja, A.; Ren, H. Transcend Anthropomorphic Robotic Grasping With Modular Antagonistic Mechanisms and Adhesive Soft Modulations. IEEE Robot. Autom. Lett. 2019, 4, 2463–2470.

- Siciliano, B.; Khatib, O. Springer Handbook of Robotics; Springer: Berlin, Germany, 2016.

- Kumar, P.; Gauba, H.; Roy, P.P.; Dogra, D.P. A multimodal framework for sensor based sign language recognition. Neurocomputing 2017, 259, 21–38.

- Kumar, P.; Saini, R.; Roy, P.P.; Dogra, D.P. Study of text segmentation and recognition using leap motion sensor. IEEE Sens. J. 2016, 17, 1293–1301.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).