Optimal Cache Deployment for Video-On-Demand in Optical Metro Edge Nodes under Limited Storage Capacity †

Abstract

1. Introduction

Contributions and Organization

- We propose an analytical model that, given the network topology, a budget in terms of storage capacity and characteristics of the video content catalog, identifies the optimal number of caches to deploy in the network and the optimal distribution of the storage capacity among caches at different network levels, with the aim of minimizing the overall amount of network resources (i.e., capacity) occupied due to VoD content request provisioning.

- To verify the correctness of the analytical model, we perform extensive simulations using a discrete, event-based dynamic simulator for VoD-content caching and distribution (presented in our previous work [9]).

- We perform a sensitivity analysis of how the popularity distribution, the location of the metro cache with respect to end-users, and the total available storage capacity affect the optimal cache deployment strategy. We summarize the takeaways of the analysis and provide guidelines to identify the optimal cache deployment among the hierarchical levels of a metro network.

2. Related Work

3. Network and VoD Content Modeling

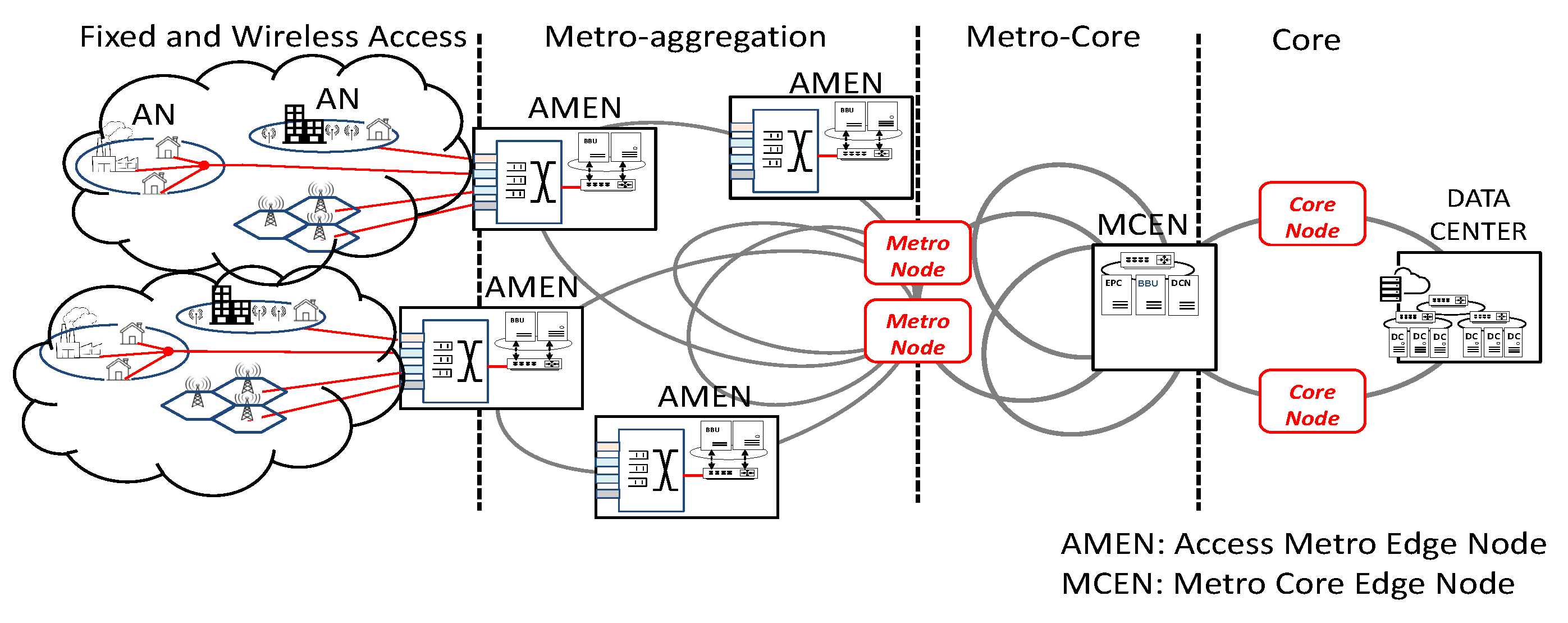

3.1. Network Model

3.2. VoD Content Catalog Modeling

3.2.1. Catalog Size and Popularity

3.2.2. VoD Content Characteristics

4. Analytical Model For Optimal Cache Deployment

4.1. Problem Statement

4.2. Analytical Model

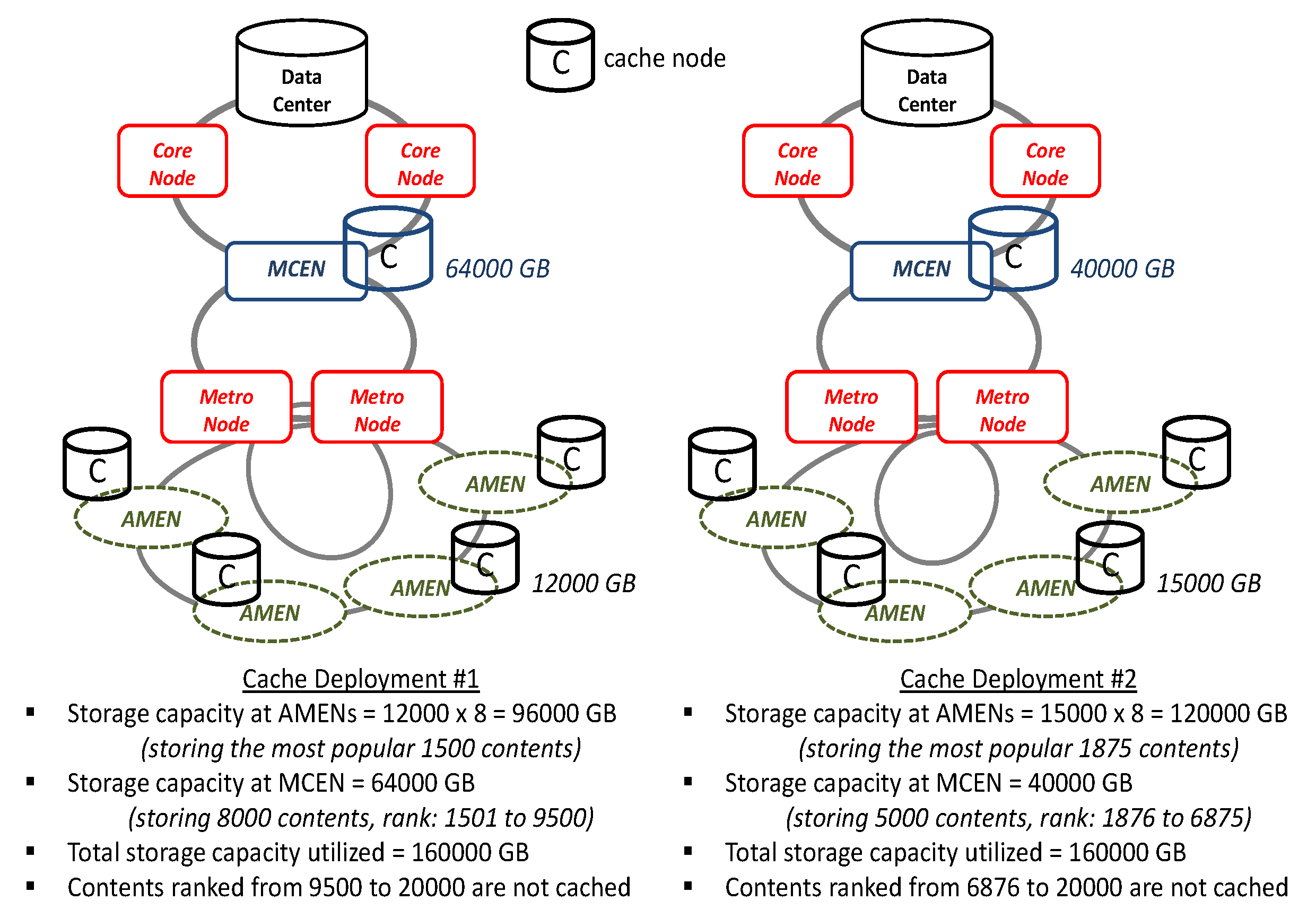

4.2.1. Notation and an Example

4.2.2. Formula Derivation

4.2.3. Example of the Analytical Model

5. Discrete Event-Based Simulator for Dynamic VoD Content Distribution

Algorithm 1 Video-on-demand (VoD)-chunk provisioning

Input: Network status: cache locations, stored content per cache and available bandwidth on links; VoD content request r(,,m,,): arriving time , user , requested content m, requested bit-rate , chunk duration .

Output: VoD provisioning (per chunk)

- path = ; bandwidth = 0;
- Allocate list of caches C storing content m;
- path = shortest path between and nearest cache with available bandwidth ≥;
- if path = then
  - bw = ;
- else
  - path = shortest path between and nearest cache with available bandwidth ≥;
  - if path = then
    - bw = ;
  - end if
- end if
- if ( = ) then
  - provision r over with bandwidth bw;
  - schedule next video-chunk event at ;
  - schedule deprovisioning event at time ;
- else
  - Block r;
- end if
- End;
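The provisioning steps of Algorithm 1 can be illustrated with a minimal Python sketch. It is not the authors' simulator: the symbols of the algorithm are not preserved in this text, so the function names, the `links` and `caches` data layout, and in particular the `fallback_rate` used for the second attempt are assumptions made for illustration. Scheduling of the next chunk and of the deprovisioning event is left out.

```python
from collections import deque


def shortest_path(links, src, dst, min_bw):
    """Fewest-hop path using only links with free bandwidth >= min_bw (BFS)."""
    queue, parent = deque([src]), {src: None}
    while queue:
        node = queue.popleft()
        if node == dst:
            path = []
            while node is not None:
                path.append(node)
                node = parent[node]
            return path[::-1]
        for nxt, free in links.get(node, {}).items():
            if nxt not in parent and free >= min_bw:
                parent[nxt] = node
                queue.append(nxt)
    return None


def provision_chunk(links, caches, user, content, rate, fallback_rate):
    """Return (path, bandwidth) for one chunk, or None if the request is blocked.

    ASSUMPTION: the second attempt uses a lower bit-rate (fallback_rate);
    the source text does not preserve which threshold is used there.
    """
    holders = [c for c, stored in caches.items() if content in stored]
    for bw in (rate, fallback_rate):
        paths = [p for c in holders if (p := shortest_path(links, user, c, bw))]
        if paths:
            path = min(paths, key=len)  # nearest cache = fewest hops
            for a, b in zip(path, path[1:]):
                links[a][b] -= bw  # reserve bandwidth in both directions
                links[b][a] -= bw
            return path, bw
    return None  # no feasible cache: block the request


# Hypothetical three-level chain: user - AMEN - MCEN - data center (DC).
links = {
    "u": {"amen": 10},
    "amen": {"u": 10, "mcen": 10},
    "mcen": {"amen": 10, "dc": 10},
    "dc": {"mcen": 10},
}
caches = {"amen": {"m1"}, "mcen": {"m1", "m2"}, "dc": {"m1", "m2", "m3"}}
print(provision_chunk(links, caches, "u", "m1", rate=8, fallback_rate=4))
```

The breadth-first search stands in for whatever shortest-path routine a real simulator would use; with weighted links, Dijkstra's algorithm would be the natural replacement.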

6. Numerical Results

6.1. Verification of the Analytical Model

6.2. Optimized Cache Deployment vs. Baseline Strategies

6.3. Analysis of Optimal Cache Deployments

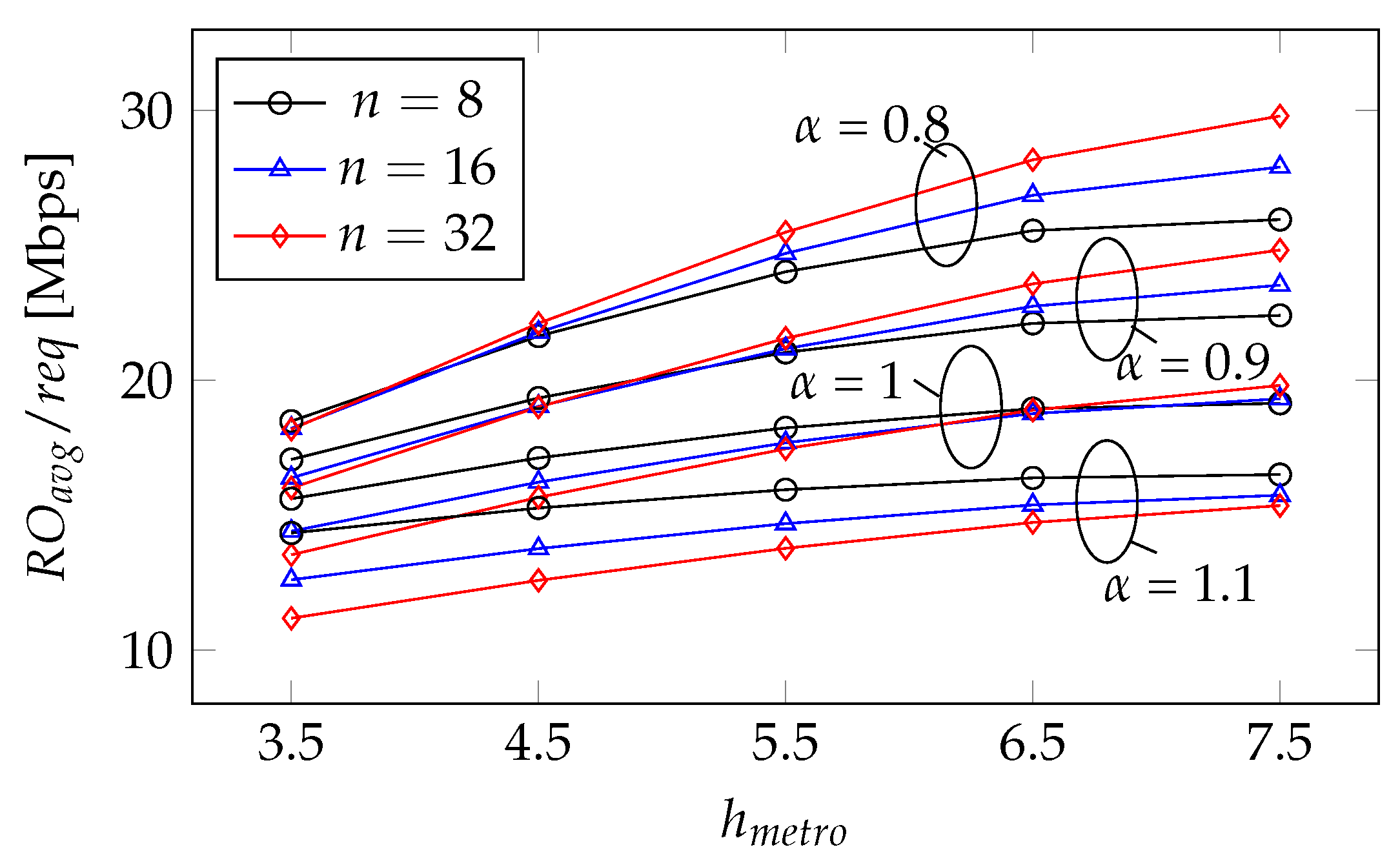

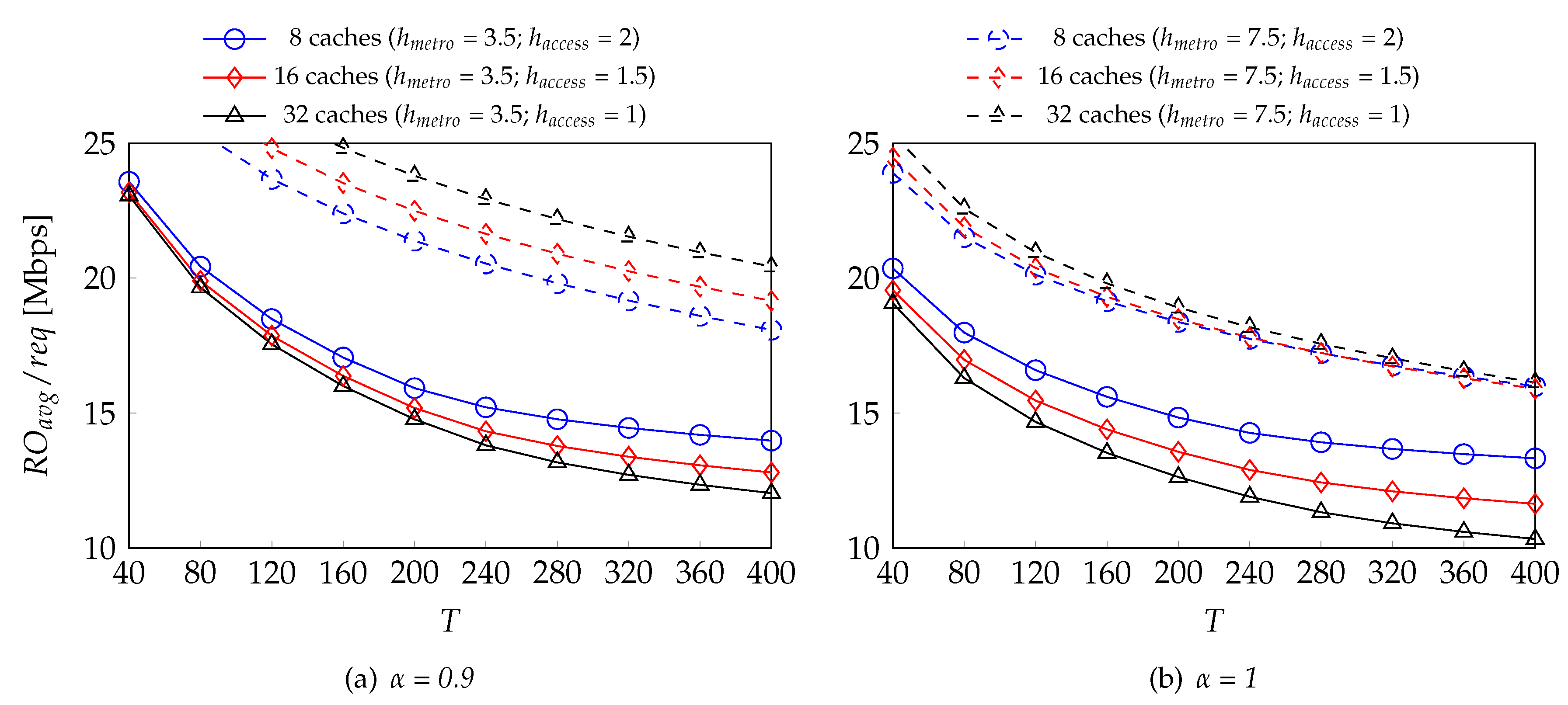

- The location of the MCEN cache, i.e., the hop-distance of users from the MCEN;

- The content catalog popularity skew parameter;

- The total allowed storage capacity T.

6.3.1. Effects of the Location of the Metro Edge Cache and the Popularity Skew Parameter

6.3.2. Effects of the Location of the Metro Edge Cache and the Available Storage Capacity (T)

7. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Peterson, L.; Al-Shabibi, A.; Anshutz, T.; Baker, S.; Bavier, A.; Das, S.; Hart, J.; Palukar, G.; Snow, W. Central office re-architected as a data center. IEEE Commun. Mag. 2016, 54, 96–101.

- Sourlas, V.; Gkatzikis, L.; Flegkas, P.; Tassiulas, L. Distributed cache management in information-centric networks. IEEE Trans. Netw. Serv. Manag. 2013, 10, 286–299.

- Yang, C.; Li, H.; Wang, L.; Xu, Z. A game theoretical framework for improving the quality of service in cooperative radio access network caching. In Proceedings of the IEEE International Conference on Communications (ICC), Paris, France, 21–25 May 2017; pp. 1–7.

- Savi, M.; Ayoub, O.; Musumeci, F.; Zhe Li, N.; Verticale, G.; Tornatore, M. Energy-efficient caching for video-on-demand in fixed-mobile convergent networks. In Proceedings of the IEEE Online Conference on Green Communications (OnlineGreenComm), Piscataway, NJ, USA, 10–12 November 2015; pp. 17–22.

- Kutscher, D.; Eum, S.; Pentikousis, K.; Psaras, I.; Corujo, D.; Saucez, D.; Schmidt, T.; Waehlisch, M. Information-centric Networking (ICN) Research Challenges; Internet Research Task Force (IRTF): Fremont, CA, USA, 2016; pp. 1–38.

- Andreoletti, D.; Rottondi, C.; Giordano, S.; Verticale, G.; Tornatore, M. An open privacy-preserving and scalable protocol for a Network-Neutrality compliant caching. In Proceedings of the ICC 2019-2019 IEEE International Conference on Communications (ICC), Shanghai, China, 20–24 May 2019; pp. 1–6.

- Andreoletti, D.; Giordano, S.; Rottondi, C.; Tornatore, M.; Verticale, G. To be neutral or not neutral? The in-network caching dilemma. IEEE Internet Comput. 2018, 22, 18–26.

- Andreoletti, D.; Ayoub, O.; Rottondi, C.; Giordano, S.; Verticale, G.; Tornatore, M. A Privacy-Preserving Protocol for Network-Neutral Caching in ISP Networks. IEEE Access 2019.

- Ayoub, O.; Musumeci, F.; Andreoletti, D.; Mussini, M.; Tornatore, M.; Pattavina, A. Optimal Cache Deployment for Video-on-Demand Delivery in Optical Metro-Area Networks. In Proceedings of the 2018 IEEE Global Communications Conference (GLOBECOM), Abu Dhabi, UAE, 9–13 December 2018; pp. 1–6.

- Ayoub, O.; Musumeci, F.; Tornatore, M.; Pattavina, A. Energy-Efficient Video-On-Demand Content Caching and Distribution in Metro Area Networks. IEEE Trans. Green Commun. Netw. 2018, 3, 159–169.

- Llorca, J.; Tulino, A.M.; Guan, K.; Esteban, J.; Varvello, M.; Choi, N.; Kilper, D.C. Dynamic in-network caching for energy efficient content delivery. In Proceedings of the IEEE INFOCOM, Turin, Italy, 14–19 April 2013; pp. 245–249.

- Applegate, D.; Archer, A.; Gopalakrishnan, V.; Lee, S.; Ramakrishnan, K. Optimal content placement for a large-scale VoD system. IEEE/ACM Trans. Netw. 2016, 24, 2114–2127.

- Ciccarella, G.; Roffinella, D.; Vari, M.; Vatalaro, F. Performance improvement and network TCO reduction by optimal deployment of caching. In Proceedings of the Euro Med Telco Conference (EMTC), Naples, Italy, 12–15 November 2014; pp. 1–6.

- Ayoub, O.; Musumeci, F.; Tornatore, M.; Pattavina, A. Techno-Economic Evaluation of CDN Deployments in Metropolitan Area Networks. In Proceedings of the International Conference on Networking and Network Applications (NaNA), Hokkaido, Japan, 16–19 October 2017; pp. 314–319.

- Natalino, C.; de Sousa, A.; Wosinska, L.; Furdek, M. Content placement in 5G-enabled edge/core datacenter networks resilient to link cut attacks. Networks 2020.

- Hasan, S.; Gorinsky, S.; Dovrolis, C.; Sitaraman, R.K. Trade-offs in optimizing the cache deployments of CDNs. In Proceedings of the INFOCOM, Toronto, ON, Canada, 27 April–2 May 2014; pp. 460–468.

- Gourdin, E.; Bauguion, P. Optimal hierarchical deployment of caches for video streaming. In Proceedings of the 6th International Conference on the Network of the Future (NOF), Montreal, QC, Canada, 30 September–2 October 2015; pp. 1–5.

- Li, H.; Wang, H.; Liu, J.; Xu, K. Video requests from online social networks: Characterization, analysis and generation. In Proceedings of the INFOCOM, Turin, Italy, 14–19 April 2013; pp. 50–54.

- Kim, D.; Ko, Y.B.; Lim, S.H. Comprehensive analysis of caching performance under probabilistic traffic patterns for content centric networking. China Commun. 2016, 13, 127–136.

- Adhikari, V.K.; Guo, Y.; Hao, F.; Hilt, V.; Zhang, Z.L.; Varvello, M.; Steiner, M. Measurement study of Netflix, Hulu, and a tale of three CDNs. IEEE/ACM Trans. Netw. 2015, 23, 1984–1997.

- Casas, P.; D’Alconzo, A.; Fiadino, P.; Bär, A.; Finamore, A.; Zseby, T. When YouTube does not work - Analysis of QoE-relevant degradation in Google CDN traffic. IEEE Trans. Netw. Serv. Manag. 2014, 11, 441–457.

- Salahuddin, M.A.; Sahoo, J.; Glitho, R.; Elbiaze, H.; Ajib, W. A survey on content placement algorithms for cloud-based content delivery networks. IEEE Access 2018, 6, 91–114.

| Parameter/Variable | Description |
|---|---|
| N | Number of items in catalog |
| T | Total allowed storage capacity (GB) |
| | Average RO per second per VoD request |
| | Average content size |
| | Average hop-distance from AMEN caches |
| | Average hop-distance from MCEN cache |
| | Average hop-distance from video server |
| | Number of AMEN caches utilized |
| k | Index of last content stored in AMEN caches |
| | Average bit-rate of all VoD requests |
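To make the role of these quantities concrete, the following is a toy sketch of how an average RO per request could be evaluated and minimized over k. It is not the paper's formula, which is not reproduced in this text. It assumes a Zipf-like popularity law with a skew parameter, equal-size contents, AMEN caches that each store the k most popular items, an MCEN cache that stores the next items with the remaining storage, and RO equal to the bit-rate times the hop-distance to the serving node. All function and parameter names, and all example values, are hypothetical.

```python
def zipf_cdf(n_items, alpha):
    """cdf[i] = share of requests that target the i most popular items."""
    weights = [1.0 / rank ** alpha for rank in range(1, n_items + 1)]
    total = sum(weights)
    cdf, acc = [0.0], 0.0
    for w in weights:
        acc += w
        cdf.append(acc / total)
    return cdf


def average_ro(k, *, cdf, T, size, n_amen, h_amen, h_mcen, h_server, bitrate):
    """Toy average RO per request when each AMEN cache stores items 1..k.

    ASSUMPTION: storage left after filling n_amen AMEN caches goes to the
    MCEN cache, which stores the next most popular items after k.
    """
    n_items = len(cdf) - 1
    mcen_slots = max(int((T - n_amen * k * size) // size), 0)
    last_mcen = min(n_items, k + mcen_slots)
    p_amen = cdf[k]                      # served by an AMEN cache
    p_mcen = cdf[last_mcen] - cdf[k]     # served by the MCEN cache
    p_server = 1.0 - cdf[last_mcen]      # served by the video server
    return bitrate * (p_amen * h_amen + p_mcen * h_mcen + p_server * h_server)


def best_split(cdf, T, size, n_amen, **hops):
    """Exhaustive search for the k that minimizes the toy average RO."""
    k_max = min(len(cdf) - 1, int(T // (n_amen * size))) if n_amen else 0
    return min(
        range(k_max + 1),
        key=lambda k: average_ro(k, cdf=cdf, T=T, size=size, n_amen=n_amen, **hops),
    )


# Hypothetical values, chosen only to exercise the functions.
params = dict(cdf=zipf_cdf(1000, 0.9), T=400, size=1, n_amen=8,
              h_amen=1, h_mcen=3, h_server=6, bitrate=1)
k_star = best_split(**params)
print(k_star, average_ro(k_star, **params))
```

Repeating the search for several values of `n_amen` and of the skew gives a rough feel for the trade-off between many small edge caches and one large metro cache, although a faithful study would also need the hop-distances to depend on the number of AMEN caches.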

| Case Study # | | (GB) | T | AMENs | | |
|---|---|---|---|---|---|---|
| 1 | 0.9 | 8 | 160,000 | 32 | 32 | 1 |
| 2 | 1 | 6 | 120,000 | 32 | 16 | 1.5 |
| 3 | 1.1 | 8 | 160,000 | 32 | 32 | 1 |

| Case Study # | | | Error |
|---|---|---|---|
| 1 | 266.475 | 267 | |
| 2 | 614.415 | 615 | |
| 3 | 376.228 | 377 | |

| Case Study #1 | | | | |
|---|---|---|---|---|
| Cache Deployment | DC | MCEN Cache | AMENs Caches | RO |
| Only MCEN | 0 | 100% | 0 | 27 |
| | 9% | 46% | 45% | 19 |
| Only AMENs | 42% | 0 | 58% | 24.43 |

| Case Study #2 | | | | |
|---|---|---|---|---|
| Cache Deployment | DC | MCEN Cache | AMENs Caches | RO |
| Only MCEN | 0 | 100% | 0 | 27 |
| | 4% | 27% | 69% | 16.21 |
| Only AMENs | 27% | 0 | 73% | 19.32 |

| Case Study #3 | | | | |
|---|---|---|---|---|
| Cache Deployment | DC | MCEN Cache | AMENs Caches | RO |
| Only MCEN | 0 | 100% | 0 | 27 |
| | 5% | 22% | 73% | 12.57 |
| Only AMENs | 22% | 0 | 78% | 15.06 |

| | ≤ 4.5 | 4.5 < < 6.5 | ≥ 6.5 |
|---|---|---|---|
| < 1 | AMENs: High number, small capacity; MCEN: Large capacity | AMENs: Low number, medium capacity; MCEN: Medium capacity | AMENs: Low number, large capacity; MCEN: no cache |
| = 1 | | | |
| > 1 | AMENs: High number, large capacity; MCEN: Small capacity | AMENs: High number, small capacity; MCEN: no cache | |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Ayoub, O.; Andreoletti, D.; Musumeci, F.; Tornatore, M.; Pattavina, A. Optimal Cache Deployment for Video-On-Demand in Optical Metro Edge Nodes under Limited Storage Capacity. Appl. Sci. 2020, 10, 1984. https://doi.org/10.3390/app10061984
