Adapted Binary Particle Swarm Optimization for Efficient Features Selection in the Case of Imbalanced Sensor Data

Abstract

1. Introduction

- (1) The development of a modified version of the standard binary particle swarm optimization (BPSO) algorithm, which introduces sensor particles whose weights are proportional to the importance of the monitoring sensors and which are used in the velocity update equations of the standard particles;

- (2) The adaptation of the proposed algorithm to DLA data, reflected in the definition of the objective function and in the ranking of the features returned by the algorithm;

- (3) The evaluation and validation of the adapted BPSO algorithm using a machine learning methodology developed in-house, which compares the proposed algorithm with other feature selection approaches and uses the Daily Life Activities (DaLiAc) dataset [16] as experimental support.

2. Background

2.1. Feature Selection Challenges for Imbalanced Data Generated by Monitoring Sensors

2.2. Feature Selection Approaches Based on Particle Swarm Optimization

2.3. Machine Learning Approaches from the Literature That Consider the Daily Life Activities (DaLiAc) Dataset

3. Materials and Methods

3.1. Mathematical Formulation of the Optimization Problem Approached Using an Adapted Variant of the BPSO Algorithm

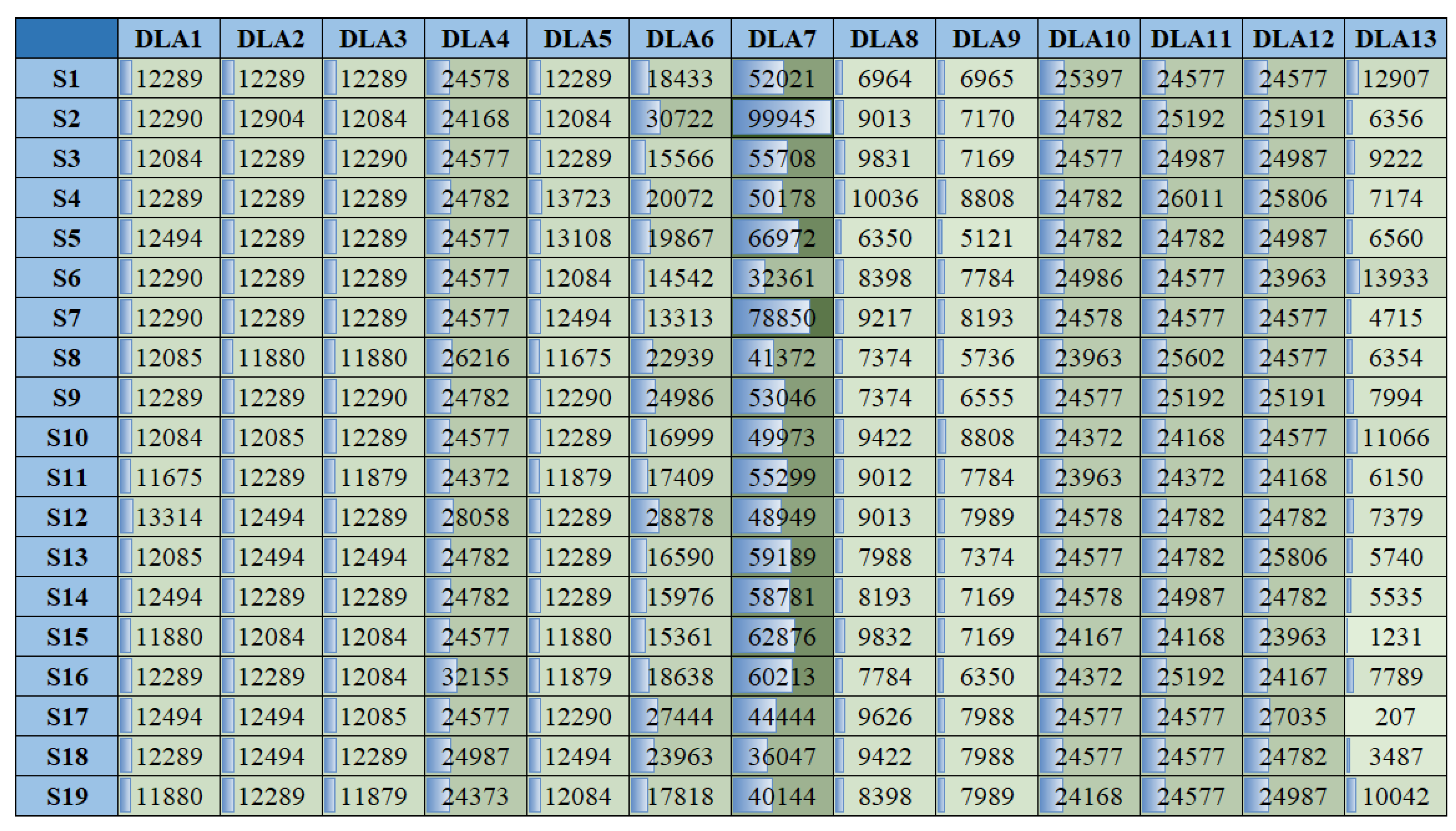

3.2. Matrix of Variability of DLAs Sensors Data for Each Monitored Subject

3.3. Metric for the Evaluation of the Matrix of Variability of DLAs Sensors Data for Each Monitored Subject

3.4. Heuristic for Features Ranking in the Optimal Solution Returned by the Adapted BPSO Algorithm for Each Monitored Subject

3.5. Mathematical Description of the Objective Function of the Adapted Version of the BPSO Algorithm

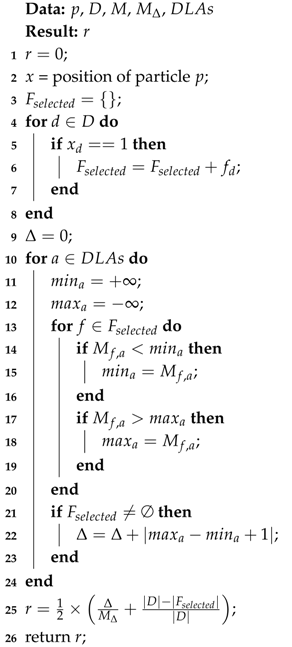

| Algorithm 1: The objective function of the adapted BPSO algorithm. |


3.6. Adapted BPSO Algorithm for Feature Selection in the Case of DLAs Sensors Data

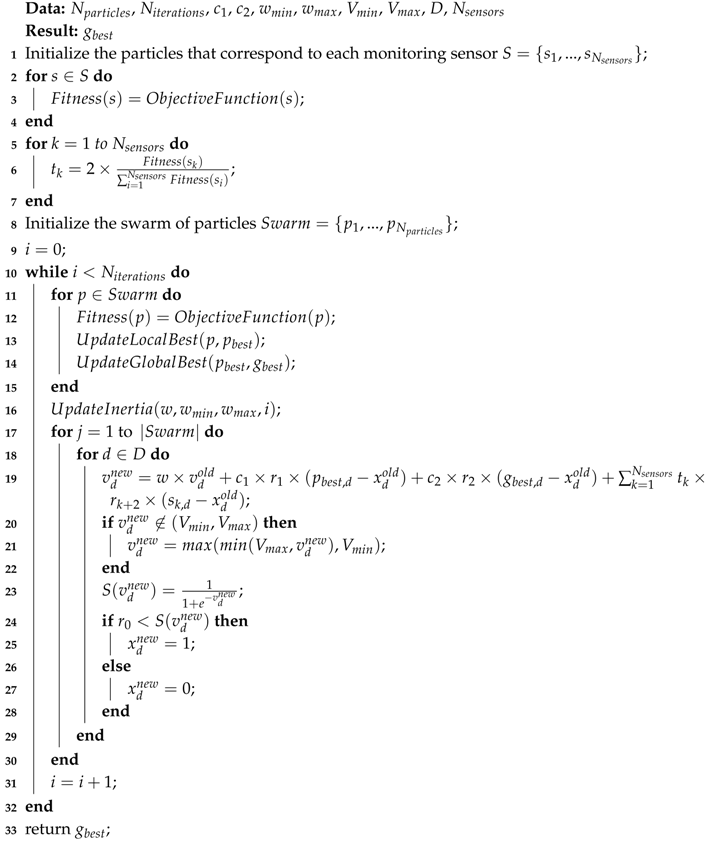

| Algorithm 2: Adapted BPSO Algorithm. |


- the inertia component;

- the cognitive component;

- the social component;

- the sensors component.
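The four velocity components listed above can be illustrated with a minimal sketch of one binary particle update. The inertia, cognitive and social terms follow the textbook BPSO form with a sigmoid transfer function. The form of the sensors term, the coefficient `c3`, the `sensor_bias` vector, and all numeric values other than the cognitive and social values of 2 are assumptions made for illustration; the source does not preserve the actual equations.

```python
import math
import random


def sigmoid(v):
    """Transfer function mapping a velocity to the probability of a 1 bit."""
    return 1.0 / (1.0 + math.exp(-v))


def update_particle(x, v, pbest, gbest, sensor_bias, w, c1, c2, c3,
                    v_min, v_max, rng):
    """Return (new_x, new_v) for one binary particle.

    sensor_bias[d] is a HYPOTHETICAL per-feature value in [0, 1] derived from
    the weight of the sensor that produces feature d. The source does not
    give the exact form of the sensors component, so this term is assumed.
    """
    new_x, new_v = [], []
    for d in range(len(x)):
        inertia = w * v[d]
        cognitive = c1 * rng.random() * (pbest[d] - x[d])
        social = c2 * rng.random() * (gbest[d] - x[d])
        sensors = c3 * rng.random() * (sensor_bias[d] - x[d])  # assumed form
        vel = inertia + cognitive + social + sensors
        vel = max(v_min, min(v_max, vel))  # clamp to [v_min, v_max]
        new_v.append(vel)
        # Standard BPSO position rule: bit is 1 with probability sigmoid(vel).
        new_x.append(1 if rng.random() < sigmoid(vel) else 0)
    return new_x, new_v


# Illustrative call with hypothetical values for w, c3 and the velocity bounds.
rng = random.Random(0)
position, velocity = update_particle(
    x=[1, 0, 1, 0], v=[0.0, 0.0, 0.0, 0.0],
    pbest=[1, 1, 0, 0], gbest=[0, 1, 1, 0],
    sensor_bias=[0.5, 0.5, 0.6, 0.6],
    w=0.7, c1=2.0, c2=2.0, c3=1.0, v_min=-6.0, v_max=6.0, rng=rng)
print(position, velocity)
```

Passing the random generator explicitly keeps the update reproducible, which is convenient when comparing runs across monitored subjects.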

4. Results

- (1) processor: Intel(R) Core(TM) i5-7600K CPU @ 3.80 GHz;

- (2) installed memory (RAM): 16.0 GB;

- (3) system type: 64-bit operating system, x64-based processor.

4.1. Machine Learning Methodology for the Classification of DLAs Based on Adapted BPSO

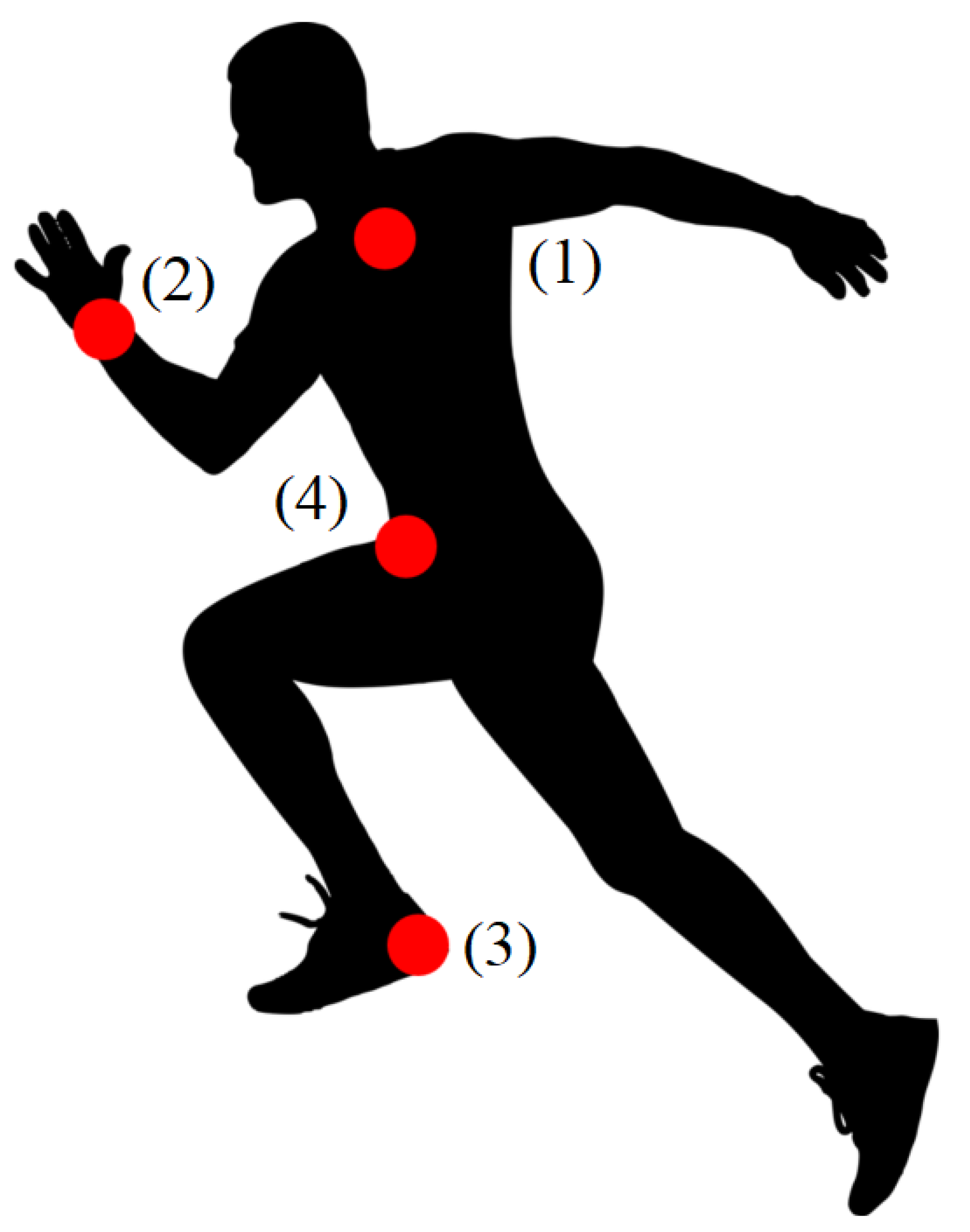

4.1.1. DLAs Sensors Data

- (1) Sitting;

- (2) Lying;

- (3) Standing;

- (4) Washing dishes;

- (5) Vacuuming;

- (6) Sweeping;

- (7) Walking outside;

- (8) Ascending stairs;

- (9) Descending stairs;

- (10) Treadmill running;

- (11) Bicycling (50 watt);

- (12) Bicycling (100 watt);

- (13) Rope jumping.

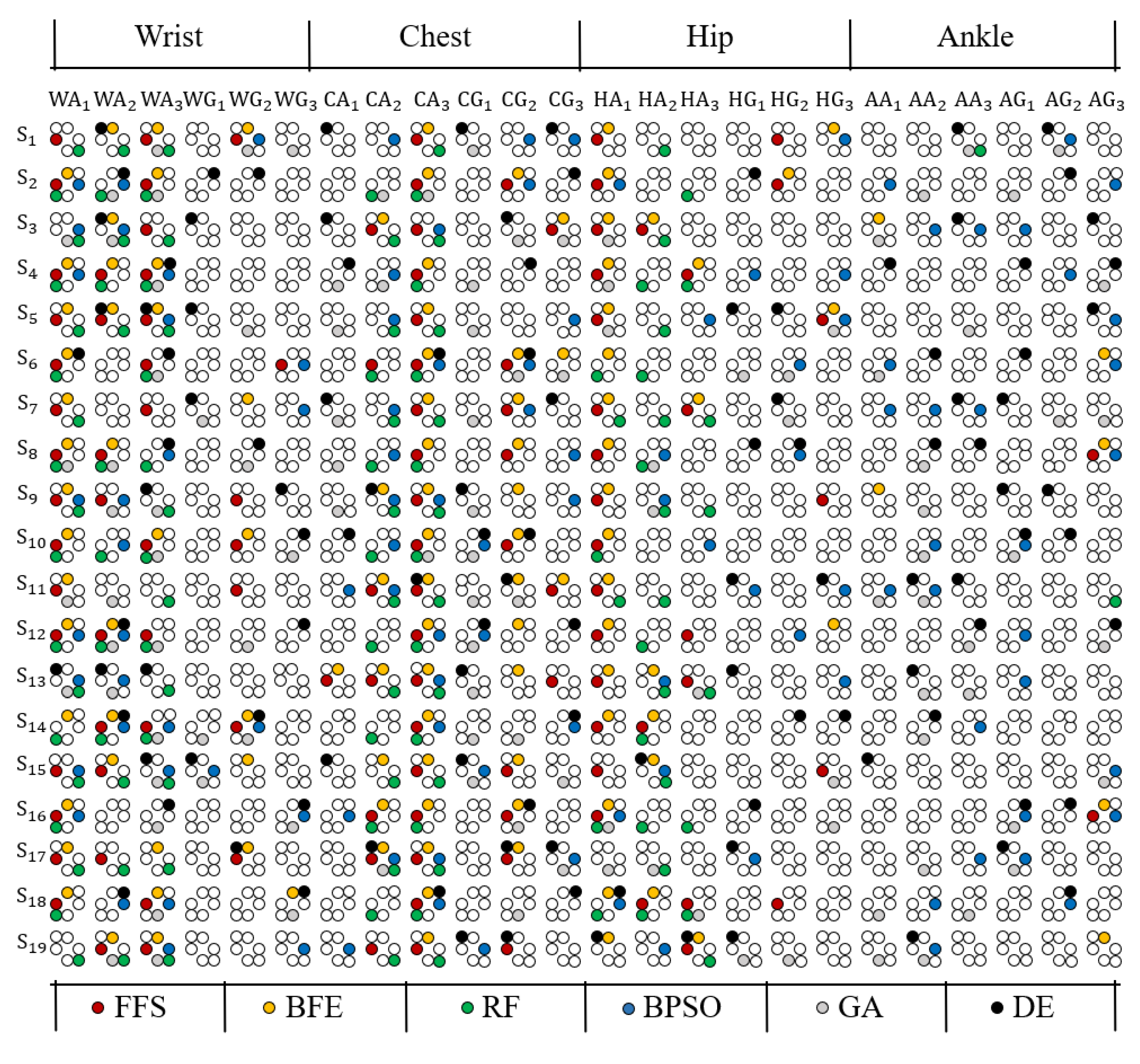

4.1.2. Feature Selection

- (1) The first technique uses the features grouped by the monitoring sensor that collected them: six features (chest sensor), six features (right wrist sensor), six features (left ankle sensor), six features (right hip sensor);

- (2)

- (3) The third technique considers the BPSO algorithm adapted for data generated by monitoring sensors placed on the bodies of the monitored subjects;

- (4)
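The sensor-wise grouping used by the first technique (four sensors with six features each, 24 features in total) can be sketched as a mapping from a binary feature mask to the sensors that produced the selected features. The assumption that features are stored sensor by sensor in the order chest, right wrist, left ankle, right hip is hypothetical, as are the names `SENSORS` and `group_selected_features`.

```python
# Sensor order is an assumption; the source only states six features per sensor.
SENSORS = ["chest", "right wrist", "left ankle", "right hip"]
FEATURES_PER_SENSOR = 6


def group_selected_features(mask):
    """Group the indices of selected features (mask bit == 1) by sensor."""
    expected = len(SENSORS) * FEATURES_PER_SENSOR
    if len(mask) != expected:
        raise ValueError(f"mask must have {expected} entries")
    grouped = {name: [] for name in SENSORS}
    for index, bit in enumerate(mask):
        if bit:
            sensor = SENSORS[index // FEATURES_PER_SENSOR]
            grouped[sensor].append(index)
    return grouped


# Example: a mask that selects features 0, 7 and 23.
example_mask = [0] * 24
for selected in (0, 7, 23):
    example_mask[selected] = 1
print(group_selected_features(example_mask))
```

A mask with all six bits of one sensor set and the rest cleared reproduces the single-sensor feature sets of the first technique.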

4.1.3. Cross Validation

4.1.4. Machine Learning Classification Model

4.1.5. DLAs Classification

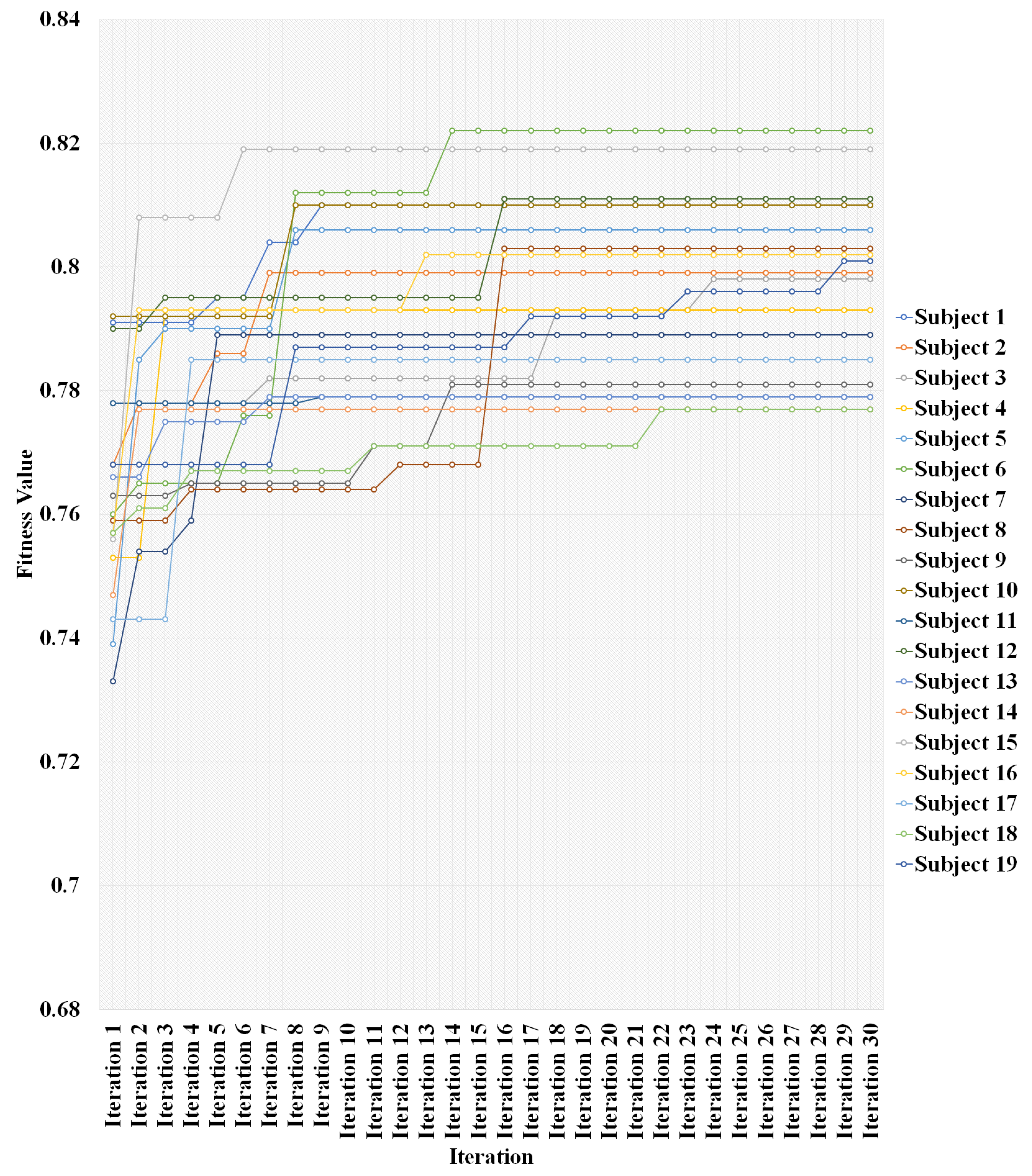

4.2. Feature Selection Results for DLAs Data Generated by Monitoring Sensors Using the Adapted BPSO Algorithm

4.3. Comparison of the Results Obtained Using the Adapted Version of BPSO for Feature Selection with the Results Obtained Using Other Methods

- (1) FFS and BFE: the standard KNIME configuration, a feature-count threshold of six, and a random drawing strategy;

- (2) RF: the standard sklearn configuration, with 1000 estimators and a maximum of six features;

- (3) GA: 20 chromosomes, 30 iterations, CR (crossover rate) = , MR (mutation rate) = ;

- (4) DE: 20 agents, 30 iterations, CR (crossover probability) = , F (differential weight) = .

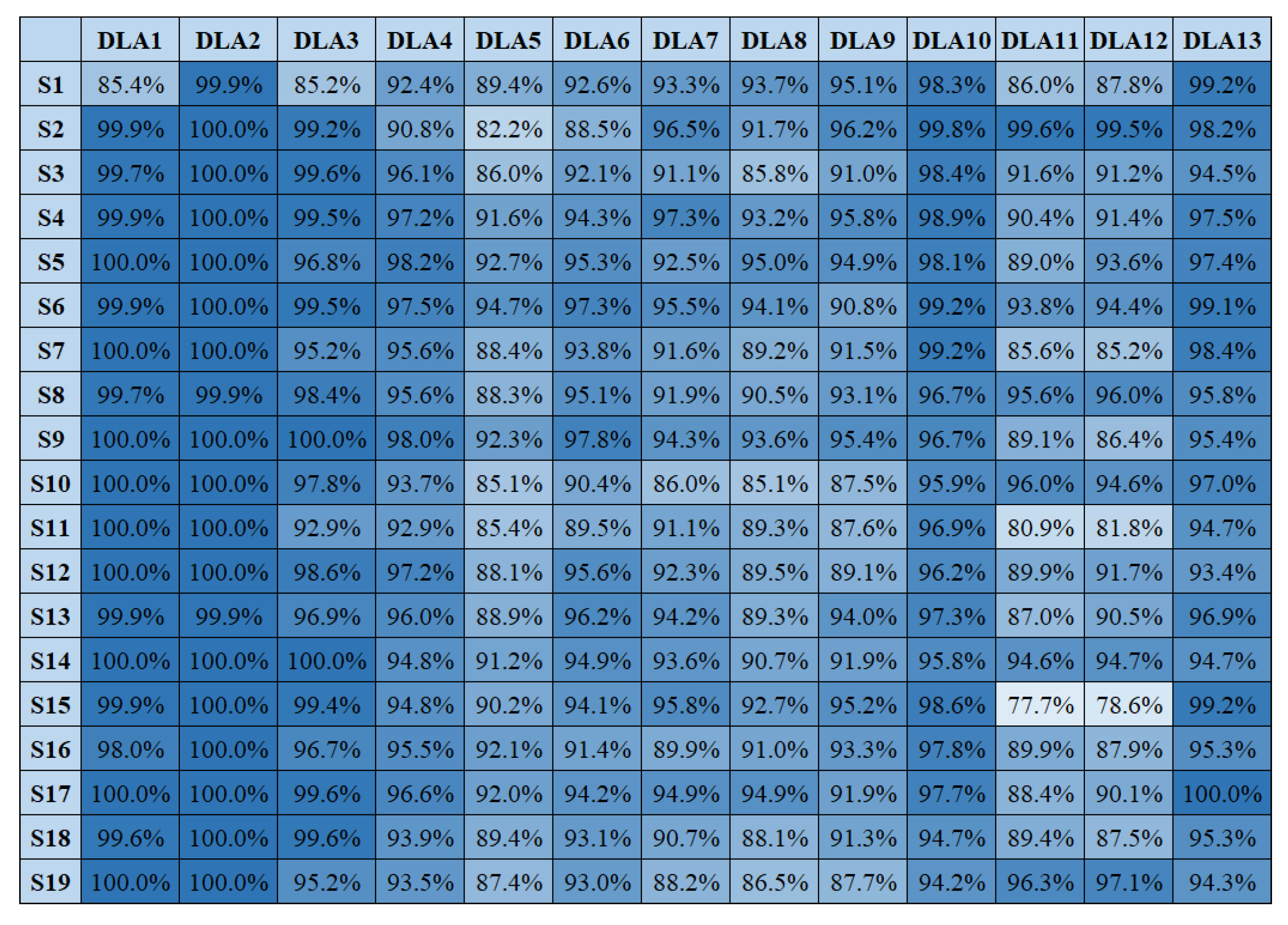

4.4. Comparison of the DLAs Classification Results Obtained Using the Adapted Version of BPSO with the Results Obtained Using Other Methods

5. Discussion

5.1. Application of Adapted BPSO Algorithm for DLAs Classification

5.2. Comparison of the Performance of the BPSO Based Approach with the Performance of Literature Approaches

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| AAL | ambient assisted living |
| BFE | backward features elimination |
| BPSO | binary particle swarm optimization |
| C | chest |
| CNN | convolutional neural network |
| CR | crossover rate or crossover probability |
| DaLiAc | Daily Life Activities |
| DE | differential evolution |
| DLA | daily living activity |
| ERT | extremely randomized trees |
| F | differential weight |
| FFS | forward feature selection |
| GA | genetic algorithm |
| k-NN | k-nearest neighbor |
| KNIME | Konstanz Information Miner |
| HPSO-LS | hybrid particle swarm optimization with local search |
| HPSO-SSM | hybrid particle swarm optimization with a spiral-shaped mechanism |
| ICT | information and communication technology |
| II | interaction information |
| IoT | internet of things |
| LA | left ankle |
| LR | logistic regression |
| MR | mutation rate |
| NB | naive Bayes |
| PSO | particle swarm optimization |
| PSO-LM | particle swarm optimization with learning memory |
| RF | random forest |
| RH | right hip |
| RSFSAID | rough-set-based feature selection algorithm for imbalanced data |
| RW | right wrist |
| SVM | support vector machine |
| UCI | University of California, Irvine |
| VAF | variance accounted for |

Appendix A


References

- ReMIND. Available online: https://www.aalremind.eu/ (accessed on 15 January 2020).

- Moldovan, D.; Anghel, I.; Cioara, T.; Salomie, I.; Chifu, V.; Pop, C. Kangaroo mob heuristic for optimizing features selection in learning the daily living activities of people with Alzheimer’s. In Proceedings of the 22nd International Conference on Control Systems and Computer Science (CSCS), Bucharest, Romania, 28–30 May 2019; pp. 236–243.

- Schneider, C.; Trukeschitz, B.; Rieser, H. Measuring the use of the active and assisted living prototype CARIMO for home care service users: Evaluation framework and results. Appl. Sci. 2020, 10, 38.

- Maskeliunas, R.; Damasevicius, R.; Segal, S. A review of internet of things technologies for ambient assisted living environments. Future Internet 2019, 11, 259.

- Dziak, D.; Jachimczyk, B.; Kulesza, W.J. IoT-based information system for healthcare application: Design methodology approach. Appl. Sci. 2017, 7, 596.

- Terashi, H.; Mitoma, H.; Yoneyama, M.; Aizawa, H. Relationship between amount of daily movement measured by a triaxial accelerometer and motor symptoms in patients with Parkinson’s disease. Appl. Sci. 2017, 7, 486.

- Samie, F.; Bauer, L.; Henkel, J. From cloud down to things: An overview of machine learning in internet of things. IEEE Internet Things J. 2019, 6, 4921–4934.

- Vitabile, S.; Marks, M.; Stojanovic, D.; Pllana, S.; Molina, J.M.; Krzyszton, M.; Sikora, A.; Jarynowski, A.; Hosseinpour, F.; Jakobik, A.; et al. Medical data processing and analysis for remote health and activities monitoring. In High-Performance Modelling and Simulation for Big Data Applications: Selected Results of the COST Action IC1406 cHiPSet; Kolodziej, J., Gonzalez-Velez, H., Eds.; Springer International Publishing: Cham, Switzerland, 2019; pp. 186–220.

- Chelli, A.; Patzold, M. A machine learning approach for fall detection and daily living activity recognition. IEEE Access 2019, 7, 38670–38687.

- Saadeh, W.; Butt, S.A.; Altaf, M.A.B. A patient-specific single sensor IoT-based wearable fall prediction and detection system. IEEE Trans. Neural Syst. Rehabil. Eng. 2019, 5, 995–1003.

- Yatbaz, H.Y.; Eraslan, S.; Yesilada, Y.; Ever, E. Activity recognition using binary sensors for elderly people living alone: Scanpath trend analysis approach. IEEE Sens. J. 2019, 19, 7575–7582.

- Awais, M.; Chiari, L.; Ihlen, E.A.F.; Helbostad, J.; Palmerini, L. Physical activity classification for elderly people in free-living conditions. IEEE J. Biomed. Health 2019, 23, 197–207.

- Yahaya, S.W.; Lotfi, A.; Mahmud, M. A consensus novelty detection ensemble approach for anomaly detection in activities of daily living. Appl. Soft Comput. 2019, 83, 105613.

- Uddin, M.Z.; Hassan, M.M.; Alsanad, A.; Savaglio, C. A body sensor data fusion and deep recurrent neural network-based behavior recognition approach for robust healthcare. Inform. Fusion 2020, 55, 105–115.

- De-La-Hoz-Franco, E.; Ariza-Colpas, P.; Quero, J.M.; Espinilla, M. Sensor-based datasets for human activity recognition – A systematic review of literature. IEEE Access 2018, 6, 59192–59210.

- Leutheuser, M.; Schludhaus, D.; Eskofier, B.M. Hierarchical, multi-sensor based classification of daily life activities: Comparison with state-of-the-art algorithms using a benchmark dataset. PLoS ONE 2013, 8.

- Lin, Q.; Liu, S.; Zhu, Q.; Tang, C.; Song, R.; Chen, J.; Coello, C.A.; Wong, K.-C.; Zhang, J. Particle swarm optimization with a balanceable fitness estimation for many-objective optimization problems. IEEE Trans. Evolut. Comput. 2018, 22, 32–46.

- Zhou, P.; Hu, X.; Li, P.; Wu, X. Online feature selection for high-dimensional class-imbalanced data. Knowl. Based Syst. 2017, 136, 187–199.

- Liu, M.; Xu, C.; Luo, Y.; Xu, C.; Wen, Y.; Tao, D. Cost-sensitive feature selection by optimizing F-measures. IEEE Trans. Image Process. 2018, 27, 1323–1335.

- Maldonado, S.; Lopez, J. Dealing with high-dimensional class-imbalanced datasets: Embedded feature selection for SVM classification. Appl. Soft Comput. 2018, 67, 94–105.

- Xu, Y. Maximum margin of twin spheres support vector machine for imbalanced data classification. IEEE Trans. Cybern. 2017, 47, 1540–1550.

- Chen, H.; Li, T.; Fan, X.; Luo, C. Feature selection for imbalanced data based on neighborhood rough sets. Inform. Sci. 2019, 483, 1–20.

- Hosseini, E.S.; Moattar, M.H. Evolutionary feature subsets selection based on interaction information for high dimensional imbalanced data classification. Appl. Soft Comput. 2019, 82, 105581.

- Xue, B.; Zhang, M.; Browne, W.N. Particle swarm optimisation for feature selection in classification: Novel initialisation and updating mechanisms. Appl. Soft Comput. 2014, 18, 261–276.

- Moradi, P.; Gholampour, M. A hybrid particle swarm optimization for feature subset selection by integrating a novel local search strategy. Appl. Soft Comput. 2016, 43, 117–130.

- Samanthula, B.K.; Elmehdwi, Y.; Jiang, W. K-nearest neighbor classification over semantically secure encrypted relational data. IEEE T Knowl. Data Eng. 2015, 27, 1261–1273.

- Wei, B.; Zhang, W.; Xia, X.; Zhang, Y.; Yu, F.; Zhu, Z. Efficient feature selection algorithm based on particle swarm optimization with learning memory. IEEE Access 2019, 7, 166066–166078.

- Xiong, B.; Li, Y.; Huang, M.; Shi, W.; Du, M.; Yang, Y. Feature selection of input variables for intelligence joint moment prediction based on binary particle swarm optimization. IEEE Access 2019, 7, 182289–182295.

- Chen, K.; Zhou, F.-Y.; Yuan, X.-F. Hybrid particle swarm optimization with spiral-shaped mechanism for feature selection. Expert Syst. Appl. 2019, 128, 140–156.

- Casale, P.; Altini, M.; Amft, O. Transfer learning in body sensor networks using ensembles of randomized trees. IEEE Internet Things 2015, 2, 33–40.

- Nazabal, A.; Garcia-Moreno, P.; Artes-Rodriguez, A.; Ghahramani, Z. Human activity recognition by combining a small number of classifiers. IEEE J. Biomed. Health 2016, 20, 1342–1351.

- Zdravevski, E.; Lameski, P.; Trajkovik, V.; Kulakov, A.; Chorbev, I.; Goleva, R.; Pombo, N.; Garcia, N. Improving activity recognition accuracy in ambient-assisted living systems by automated feature engineering. IEEE Access 2017, 5, 5262–5280.

- Hur, T.; Bang, J.; Huynh-The, T.; Lee, J.; Kim, J.-I.; Lee, S. Iss2Image: A novel signal-encoding technique for CNN-based human activity recognition. Sensors 2018, 18, 3910.

- Kumar, A.; Kim, J.; Lyndon, D.; Fulham, M.; Feng, D. An ensemble of fine-tuned convolutional neural networks for medical image classification. IEEE J. Biomed. Health 2017, 21, 31–40.

- Liu, J.; Mei, Y.; Li, X. An analysis of the inertia weight parameter for binary particle swarm optimization. IEEE Trans. Evolut. Comput. 2016, 20, 666–681.

- Too, J.; Abdullah, A.R.; Saad, N.M. A new co-evolution binary particle swarm optimization with multiple inertia weight strategy for feature selection. Informatics 2019, 6, 21.

- Feltrin, L. KNIME an open source solution for predictive analytics in the geosciences [Software and Data Sets]. IEEE Geosci. Remote Sens. Mag. 2015, 3, 28–38.

- Macedo, F.; Oliveira, M.R.; Pacheco, A.; Valadas, R. Theoretical foundations of forward feature selection methods based on mutual information. Neurocomputing 2019, 325, 67–89.

- Maldonado, S.; Weber, R.; Famili, F. Feature selection for high-dimensional class-imbalanced data sets using support vector machines. Inform. Sci. 2014, 286, 228–246.

- Bader-El-Den, M.; Teitei, E.; Perry, T. Biased random forest for dealing with the class imbalance problem. IEEE Trans. Neural Netw. Learn. Syst. 2019, 30, 2163–2172.

- Alirezazadeh, P.; Fathi, A.; Abdali-Mohammadi, F. A genetic algorithm-based feature selection for kinship verification. IEEE Signal Process. Lett. 2015, 22, 2459–2463.

- Zhang, Y.; Gong, D.-W.; Gao, X.-Z.; Tian, T.; Sun, X.-Y. Binary differential evolution with self-learning for multi-objective feature selection. Inform. Sci. 2020, 507, 67–85.

| Characteristic | Value |
|---|---|
| number of daily living activities (DLAs) | 13 |
| number of subjects | 19 |
| number of monitoring sensors | 4 |
| total number of features | 24 |
| sampling frequency | 200 Hz |

| Parameter | Significance | Value |
|---|---|---|
| | number of iterations | 30 |
| | number of particles | 20 |
| | cognitive component value | 2 |
| | social component value | 2 |
| | minimum value of velocity | |
| | maximum value of velocity | |
| | minimum value of inertia | |
| | maximum value of inertia | |
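The parameter table lists a minimum and a maximum inertia value together with a fixed number of iterations, which suggests an inertia weight that varies during the run. A minimal sketch of one common choice, a linearly decreasing schedule, is given here. The linear form and the bounds 0.4 and 0.9 are assumptions for illustration only, since the source does not preserve the actual values or the schedule.

```python
def inertia_weight(iteration, n_iterations, w_min, w_max):
    """Linearly decrease the inertia from w_max (first iteration) to w_min (last).

    The linear schedule is an assumed, commonly used choice; it is not
    confirmed by the source.
    """
    if n_iterations < 2:
        return w_max
    fraction = iteration / (n_iterations - 1)
    return w_max - (w_max - w_min) * fraction


# 30 iterations as in the parameter table; the bounds are hypothetical.
schedule = [inertia_weight(t, 30, w_min=0.4, w_max=0.9) for t in range(30)]
print(schedule[0], schedule[-1])
```

A larger inertia early in the run favors exploration, while a smaller one near the end favors refinement around the best positions found so far.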

| Monitored Subject/Sensor Particle Weight | | | | |
|---|---|---|---|---|
| | 0.478 | 0.567 | 0.448 | 0.507 |
| | 0.544 | 0.490 | 0.466 | 0.500 |
| | 0.570 | 0.535 | 0.418 | 0.477 |
| | 0.498 | 0.559 | 0.470 | 0.473 |
| | 0.565 | 0.492 | 0.465 | 0.478 |
| | 0.482 | 0.529 | 0.465 | 0.524 |
| | 0.472 | 0.502 | 0.528 | 0.498 |
| | 0.564 | 0.520 | 0.435 | 0.481 |
| | 0.537 | 0.554 | 0.429 | 0.480 |
| | 0.509 | 0.505 | 0.472 | 0.514 |
| | 0.508 | 0.494 | 0.461 | 0.537 |
| | 0.536 | 0.520 | 0.474 | 0.470 |
| | 0.594 | 0.510 | 0.441 | 0.455 |
| | 0.555 | 0.480 | 0.475 | 0.490 |
| | 0.497 | 0.525 | 0.489 | 0.489 |
| | 0.573 | 0.490 | 0.450 | 0.487 |
| | 0.555 | 0.527 | 0.451 | 0.467 |
| | 0.571 | 0.484 | 0.453 | 0.492 |
| | 0.532 | 0.524 | 0.472 | 0.472 |

| Subject | | | | |
|---|---|---|---|---|
| 684,476 | 0.818 | 8 | | |
| 910,064 | 0.806 | 6 | | |
| 739,985 | 0.795 | 7 | | |
| 714,869 | 0.799 | 7 | | |
| 726,932 | 0.811 | 7 | | |
| 614,417 | 0.814 | 7 | | |
| 733,800 | 0.796 | 5 | | |
| 625,166 | 0.808 | 7 | | |
| 665,925 | 0.800 | 7 | | |
| 658,837 | 0.804 | 6 | | |
| 761,430 | 0.802 | 7 | | |
| 793,377 | 0.803 | 7 | | |
| 802,259 | 0.798 | 7 | | |
| 760,730 | 0.803 | 6 | | |
| 813,828 | 0.811 | 7 | | |
| 885,900 | 0.805 | 7 | | |
| 875,175 | 0.798 | 6 | | |
| 774,223 | 0.793 | 7 | | |
| 753,161 | 0.798 | 6 | | |

| Subject/Features Selection | FFS | BFE | RF | BPSO | GA | DE |
|---|---|---|---|---|---|---|
| | 333,842 | 2,517,009 | 914,742 | 636,930 | 1,930,886 | 1,282,519 |
| | 409,865 | 5,253,360 | 1,117,351 | 953,376 | 2,213,781 | 1,519,955 |
| | 333,872 | 3,661,512 | 800,075 | 799,151 | 1,766,370 | 1,271,330 |
| | 337,070 | 4,481,319 | 751,205 | 716,961 | 1,787,177 | 1,269,005 |
| | 348,089 | 1,632,155 | 886,658 | 703,276 | 1,836,603 | 1,316,106 |
| | 309,679 | 1,569,169 | 680,149 | 658,894 | 1,613,077 | 1,146,485 |
| | 368,906 | 1,704,399 | 827,109 | 705,606 | 1,882,546 | 1,373,507 |
| | 326,191 | 1,540,248 | 863,979 | 588,280 | 1,663,440 | 1,207,692 |
| | 349,503 | 4,865,604 | 723,893 | 652,314 | 1,791,708 | 1,282,526 |
| | 345,890 | 3,366,558 | 695,436 | 575,586 | 1,750,748 | 1,285,746 |
| | 329,980 | 2,669,384 | 814,888 | 822,193 | 1,733,939 | 1,255,230 |
| | 342,686 | 1,580,970 | 830,055 | 868,976 | 1,832,432 | 1,319,197 |
| | 336,975 | 4,311,050 | 905,903 | 849,842 | 1,855,374 | 1,294,919 |
| | 333,746 | 3,019,609 | 902,391 | 892,880 | 1,761,730 | 1,253,549 |
| | 326,056 | 3,081,598 | 873,733 | 915,939 | 1,648,931 | 1,233,069 |
| | 349,341 | 3,455,577 | 1,109,170 | 931,524 | 1,838,868 | 1,303,958 |
| | 322,297 | 1,534,359 | 730,817 | 664,468 | 1,715,616 | 1,287,910 |
| | 318,742 | 5,478,390 | 715,003 | 875,047 | 1,644,804 | 1,157,746 |
| | 319,628 | 4,515,086 | 782,075 | 675,779 | 1,662,081 | 1,141,452 |

| Subject/Features Selection | C | RW | LA | RH | FFS | BFE | RF | BPSO | GA | DE |
|---|---|---|---|---|---|---|---|---|---|---|
| 87.1% | 92.8% | | | | | | | | | |
| 97.1% | 88.8% | | | | | | | | | |
| 86.7% | 93.4% | 93.4% | 93.4% | | | | | | | |
| 87.5% | 95.9% | 95.9% | | | | | | | | |
| 82.3% | 95.3% | 95.3% | | | | | | | | |
| 88.3% | 96.6% | | | | | | | | | |
| 87.1% | 95.7% | | | | | | | | | |
| 85.5% | 95.8% | | | | | | | | | |
| 83.6% | 94.4% | | | | | | | | | |
| 94.5% | 87.0% | 94.5% | | | | | | | | |
| 84.7% | 92.1% | | | | | | | | | |
| 85.1% | 94.6% | | | | | | | | | |
| 86.0% | 94.0% | | | | | | | | | |
| 95.6% | 86.3% | | | | | | | | | |
| 93.2% | 88.8% | | | | | | | | | |
| 81.3% | 92.8% | | | | | | | | | |
| 89.2% | 95.8% | | | | | | | | | |
| 83.6% | 93.5% | 93.5% | | | | | | | | |
| 88.4% | 96.2% | 96.2% | | | | | | | | |
| Average | 86.1% | 94.6% | | | | | | | | |

| Approach | Result | Method |
|---|---|---|
| Our approach | accuracy | The features are selected using an adapted version of BPSO and the data are classified using RF |
| Zdravevski et al. [32] | accuracy | A method that applies score-drift feature selection, based on an algorithm for feature extraction, selection, and classification |
| Leutheuser et al. [16] | accuracy | A hierarchical multi-sensor based classification system |
| Hur et al. [33] | accuracy | A method that is based on Iss2Image-UCNet6 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Moldovan, D.; Anghel, I.; Cioara, T.; Salomie, I. Adapted Binary Particle Swarm Optimization for Efficient Features Selection in the Case of Imbalanced Sensor Data. Appl. Sci. 2020, 10, 1496. https://doi.org/10.3390/app10041496
