Autonomous Underwater Vehicles: Localization, Navigation, and Communication for Collaborative Missions

Abstract

1. Introduction

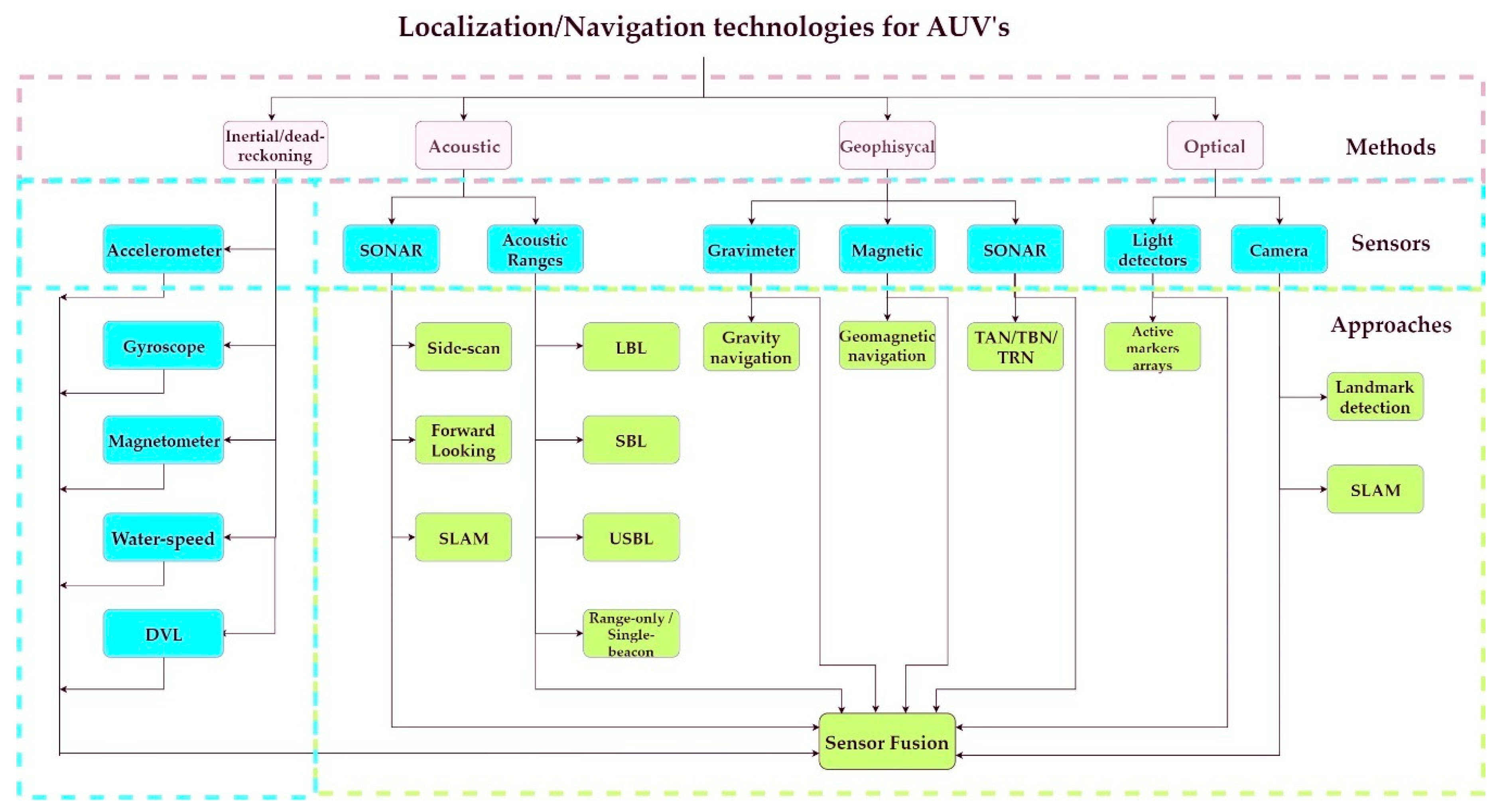

2. Navigation and Localization

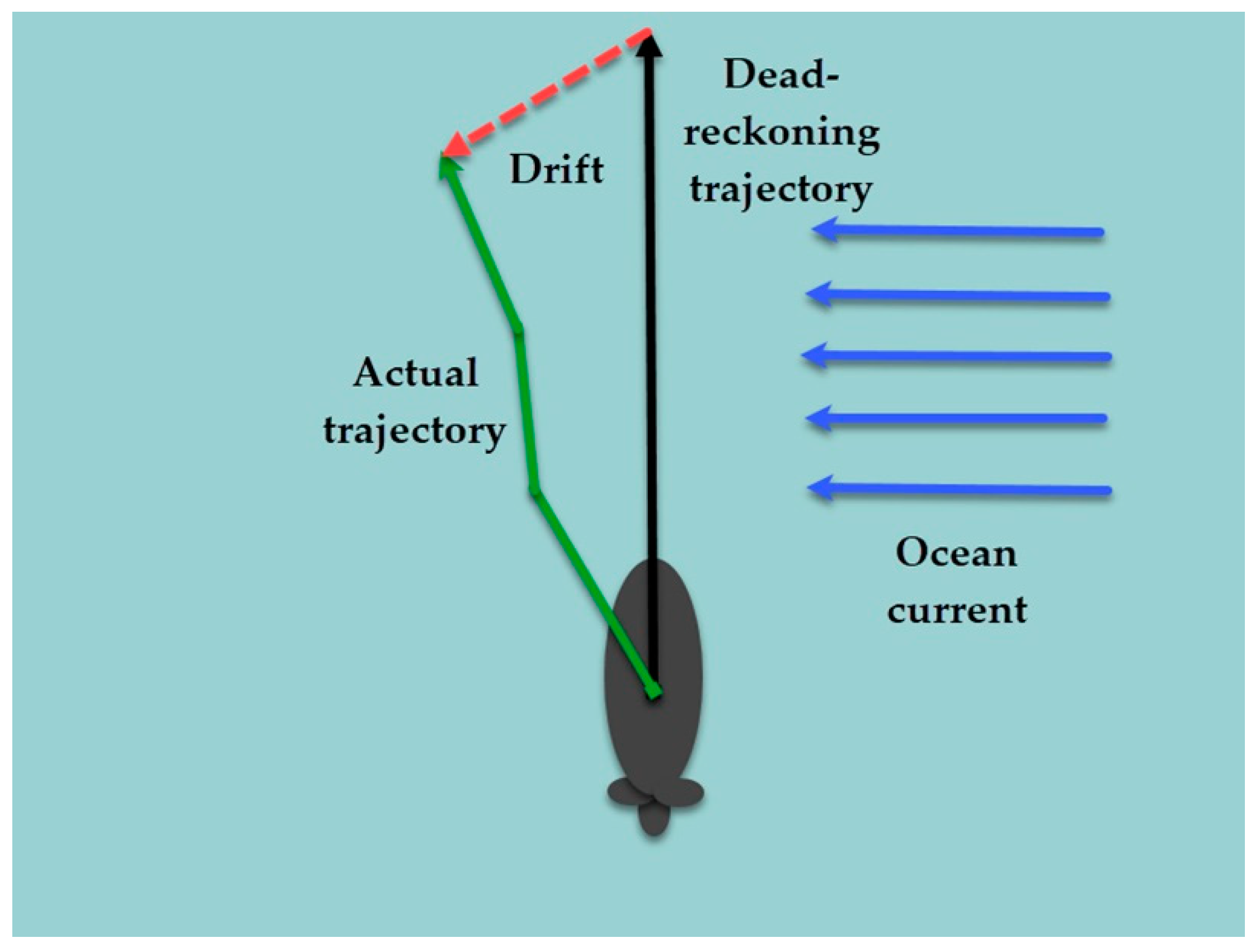

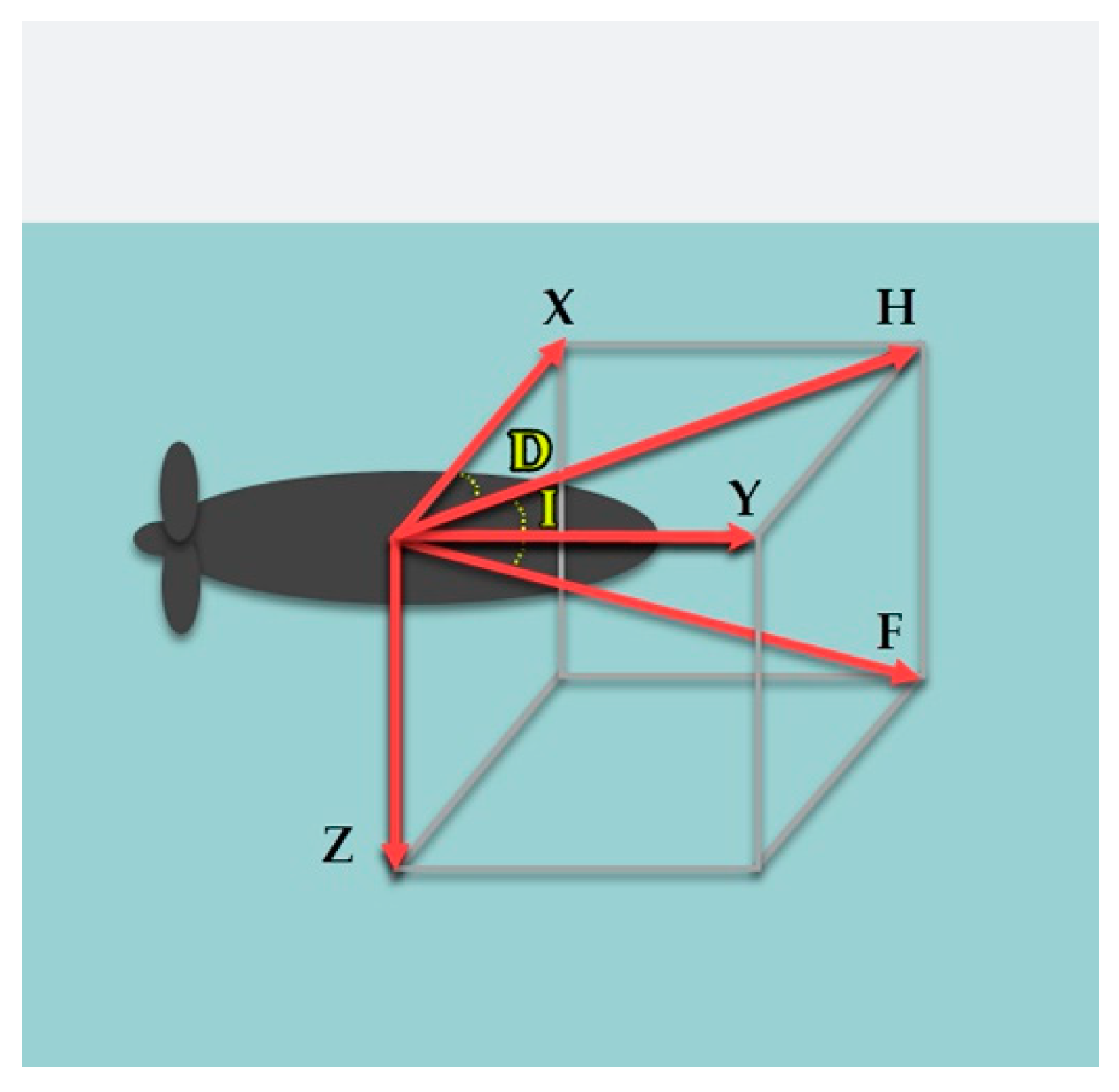

2.1. Dead-Reckoning and Inertial Navigation

2.2. Acoustic Navigation

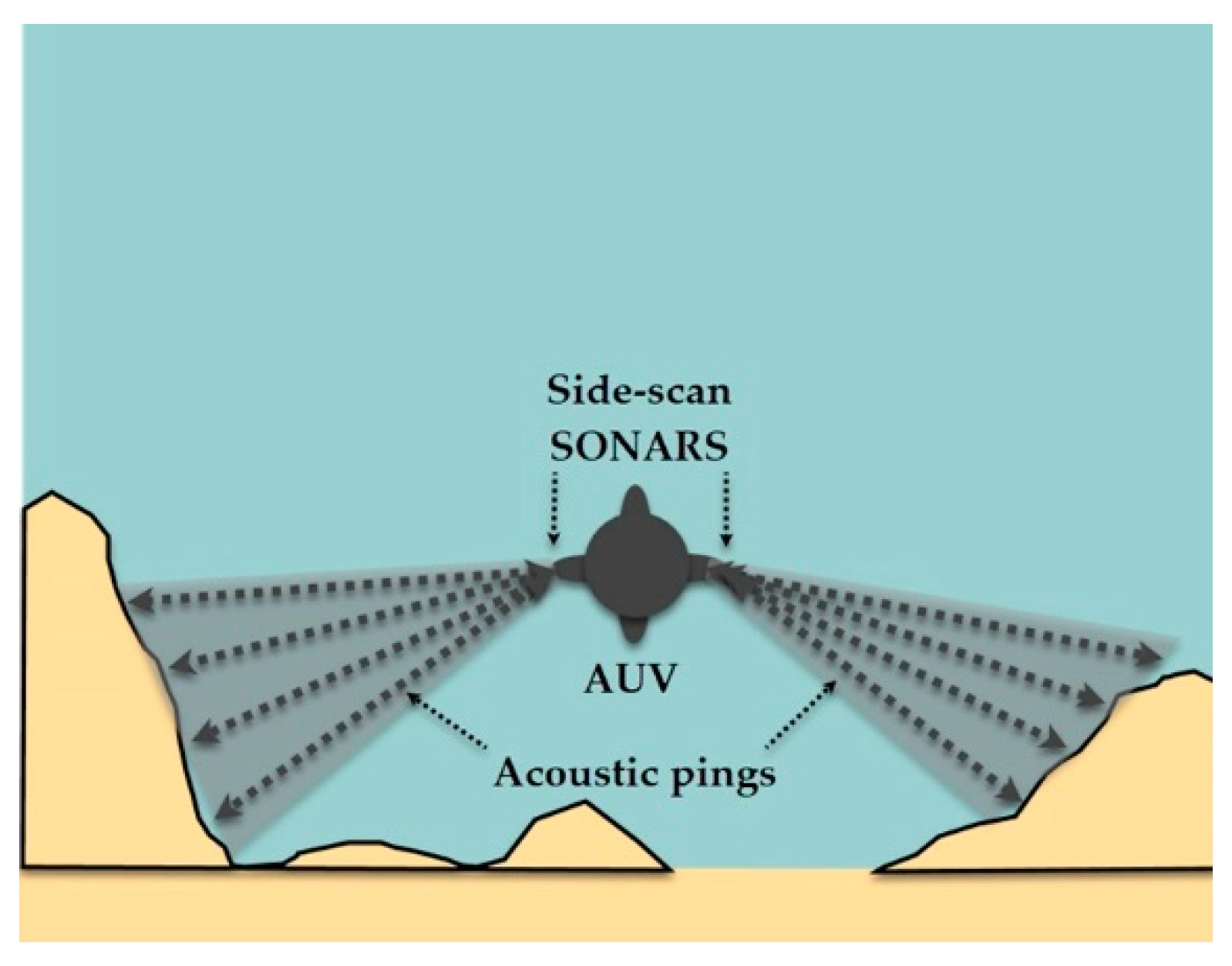

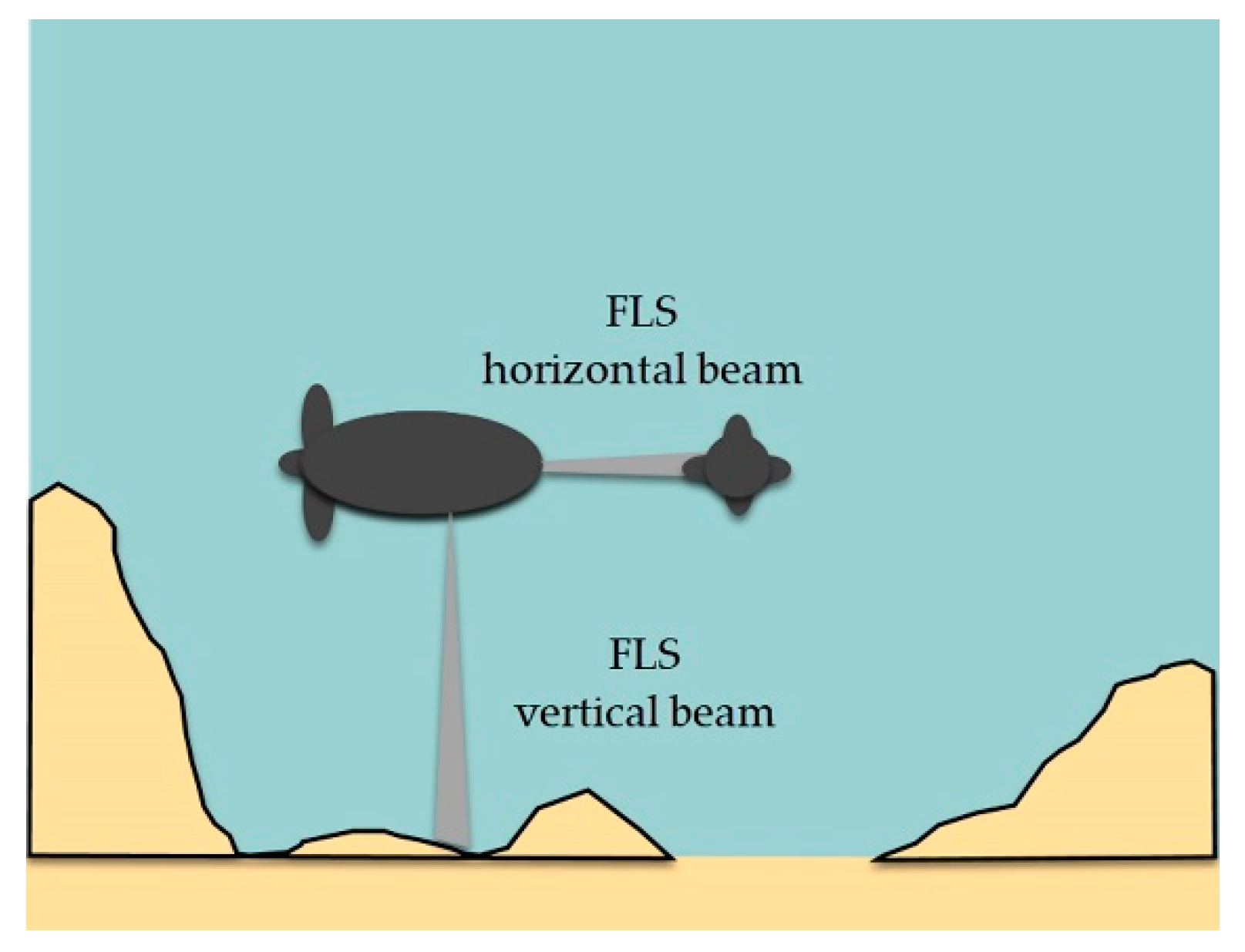

2.2.1. SONAR

2.2.2. Acoustic Ranging

2.3. Geophysical Navigation

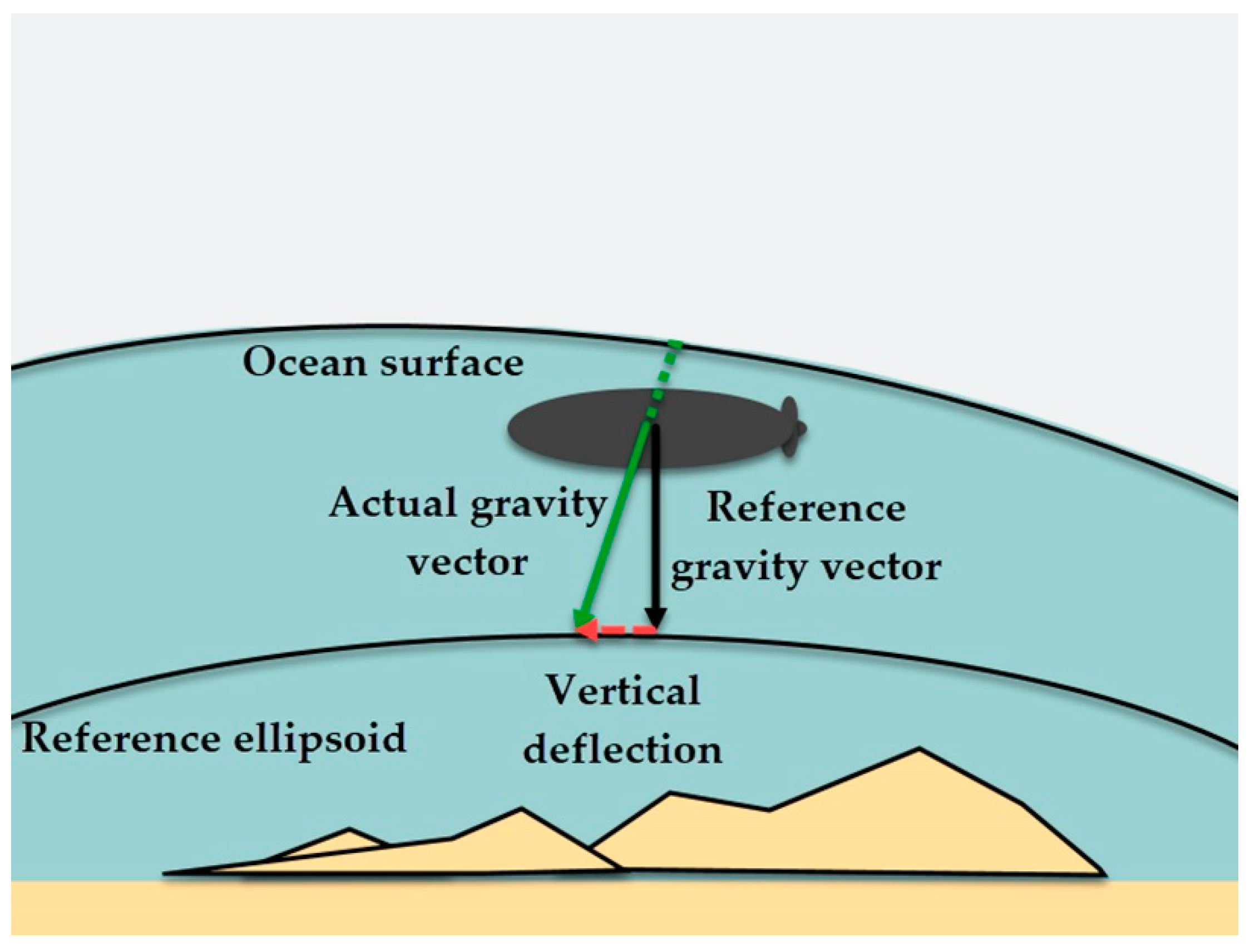

2.3.1. Gravity Navigation

2.3.2. Geomagnetic Navigation

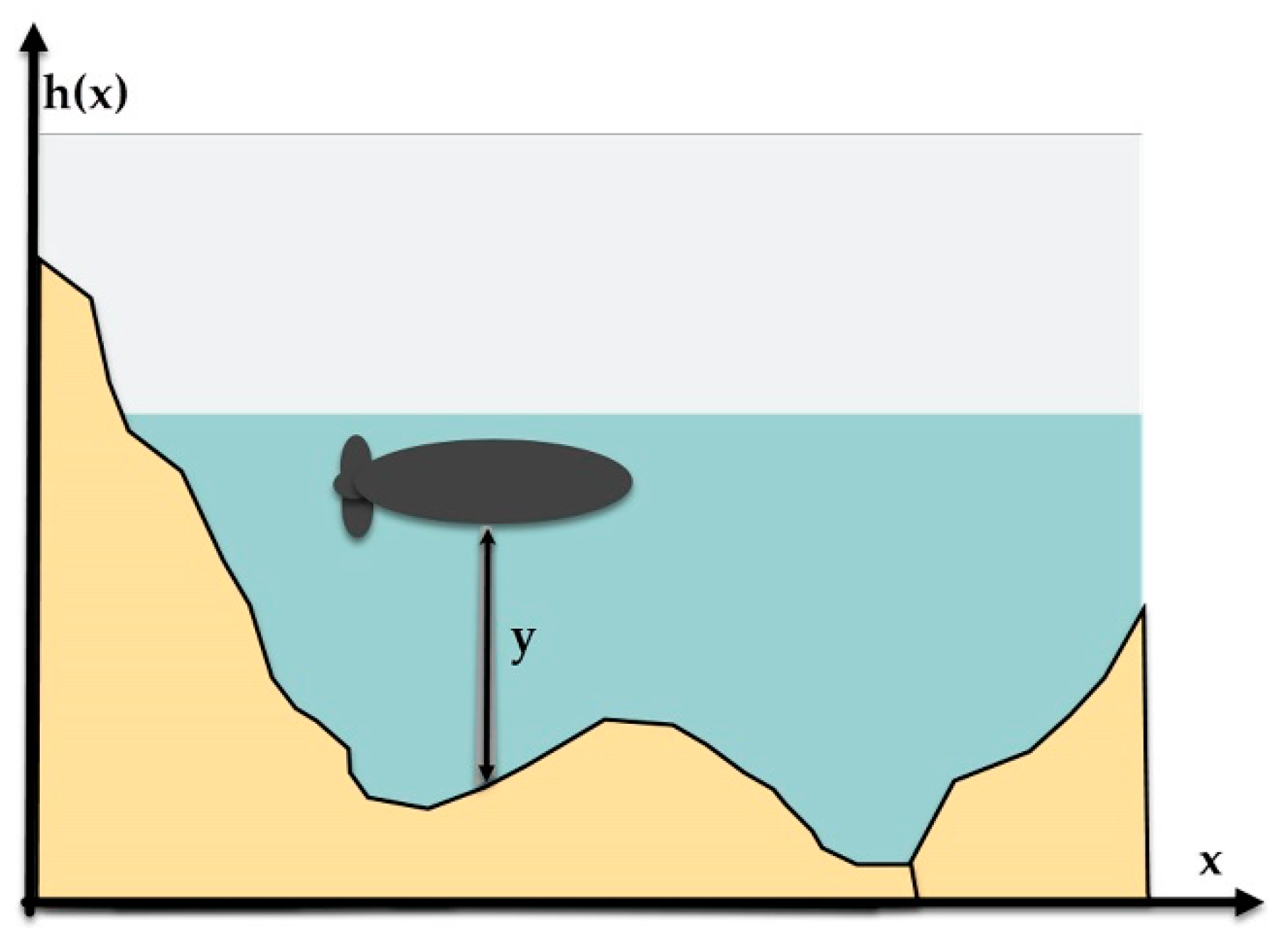

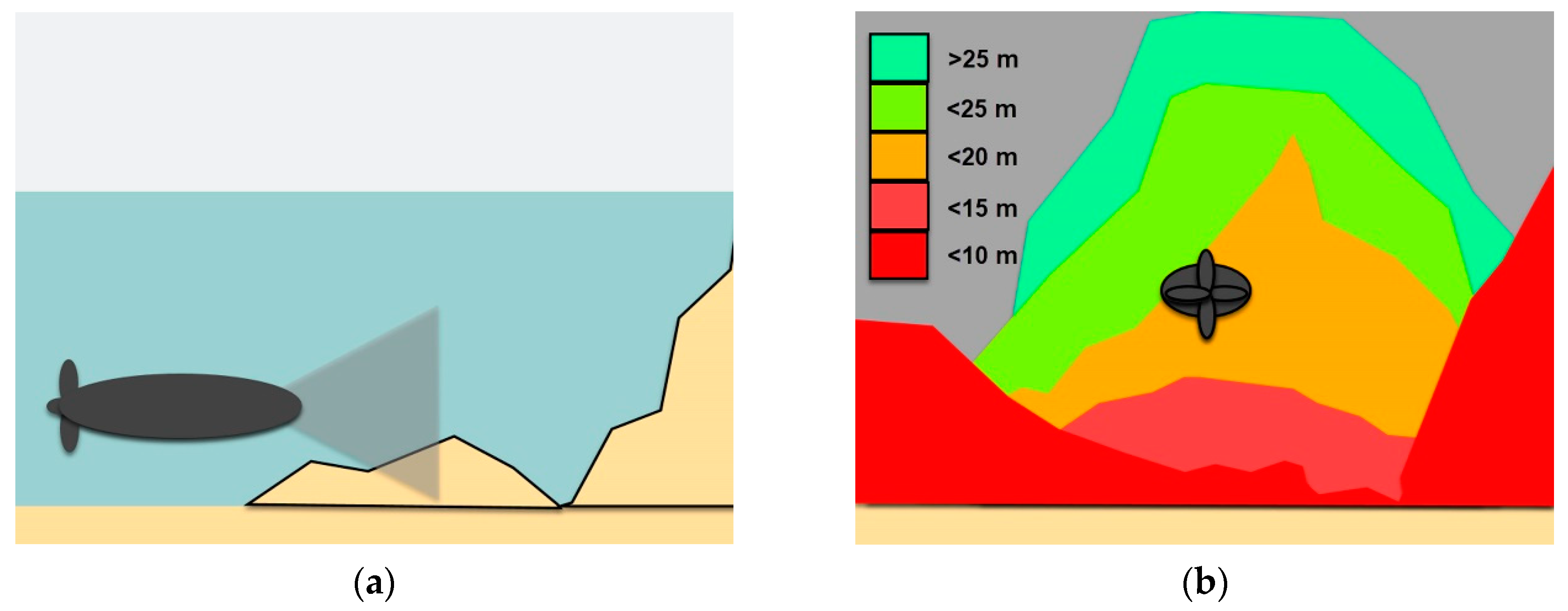

2.3.3. Bathymetric Navigation

2.4. Optical Navigation

2.5. Simultaneous Localization and Mapping (SLAM)

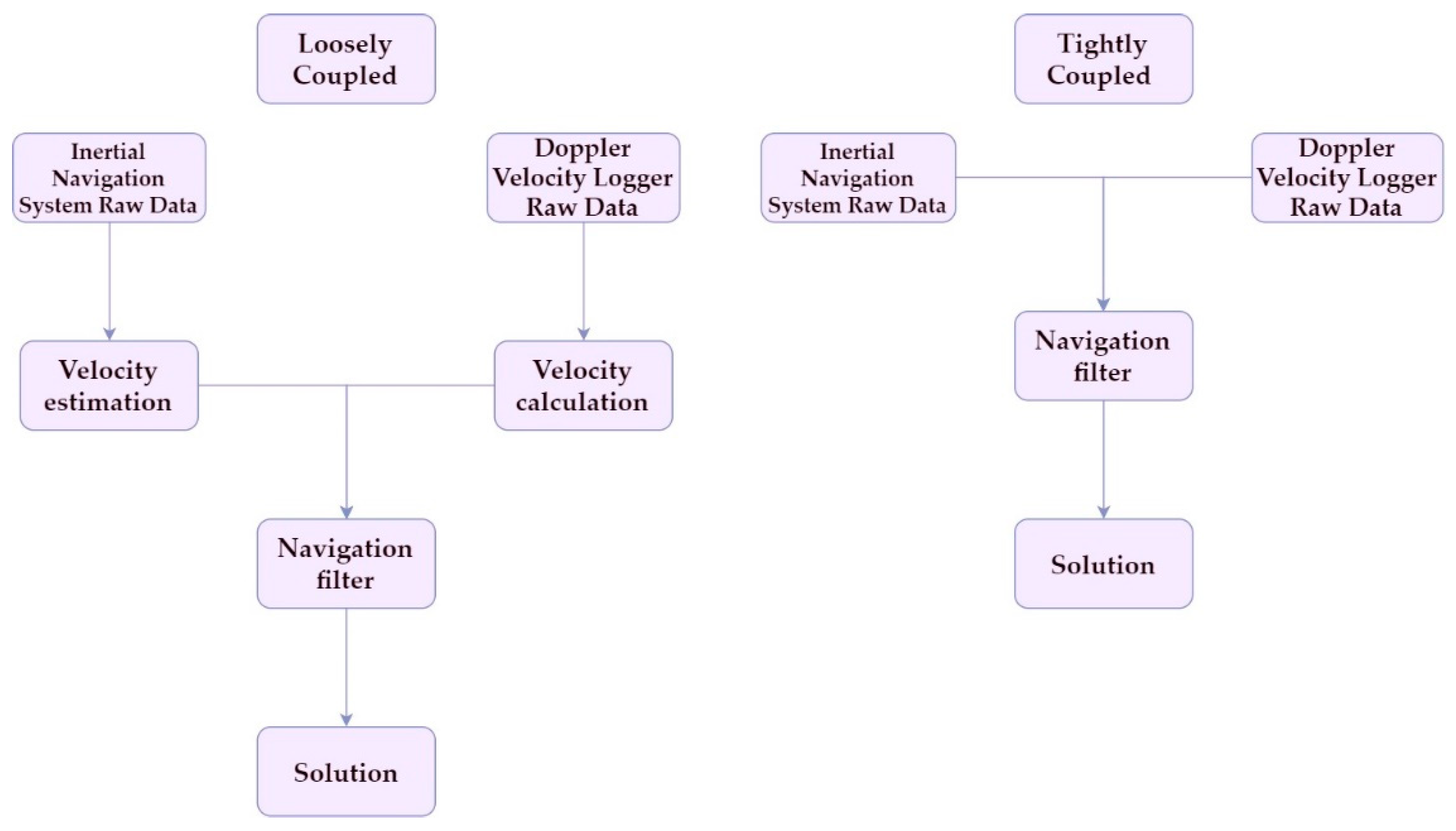

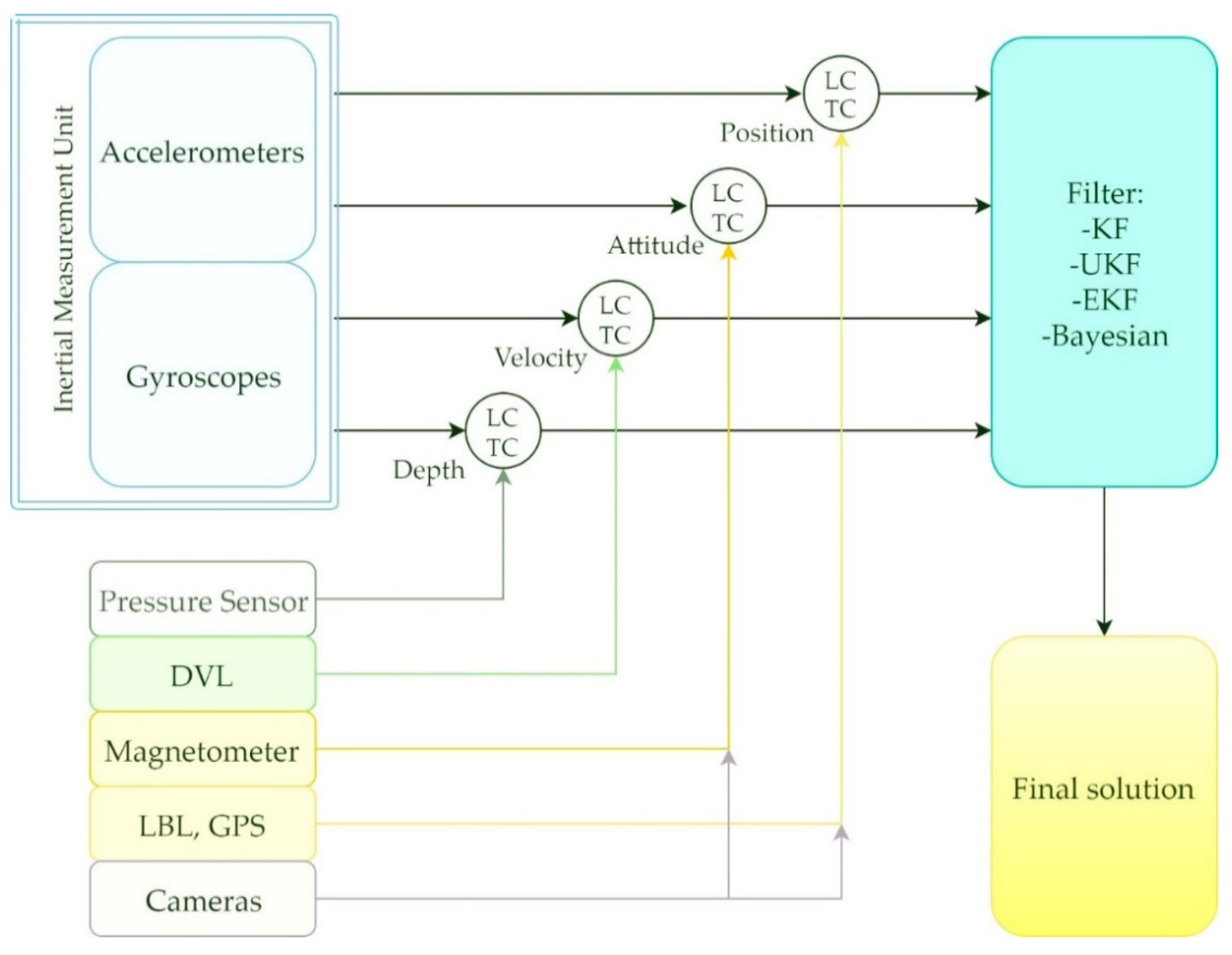

2.6. Sensor Fusion

2.7. Localization and Navigation Overview

3. Collaborative AUVs

3.1. Communication

- Application: Consider the type and length of the messages (command and control messages, voice messages, image streaming, etc.), the frequency of operation, and the operating depth.

- Cost: Depending on complexity and performance, prices range from a few hundred dollars up to $50,000 (USD).

- Size: Usually cylindrical, with lengths from 10 cm to 50 cm.

- Bandwidth: Acoustic modems can communicate underwater at up to a few kb/s, so message length and time constraints must be considered.

- Range: The operating range of the vehicle’s communication link affects the cost of the system. Acoustic modems are suitable for distances from short range up to tens of km. Consider that a longer range increases the latency and power consumption of the system.

- Power consumption: Depending on the range and modulation, the power consumption is in the range of 0.1 W to 1 W in receiving mode and 10 W to 100 W in transmission mode.
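The bandwidth, range, and power figures above interact: a longer range adds propagation delay, and a longer message keeps the transmitter on for longer. The following is a minimal sketch of that arithmetic, not a model of any particular modem. It assumes a nominal sound speed of 1500 m/s in seawater and ignores protocol overhead, retransmissions, and multipath; the names `LinkEstimate` and `estimate_link` are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass

# Assumption: nominal speed of sound in seawater; the real value varies
# with temperature, salinity, and depth.
SOUND_SPEED_M_S = 1500.0


@dataclass
class LinkEstimate:
    """First-order timing and energy figures for one acoustic message."""
    propagation_delay_s: float  # time for the signal to cover the range
    transmission_time_s: float  # time the transmitter is active
    tx_energy_j: float          # energy spent while transmitting

    @property
    def total_latency_s(self) -> float:
        # One-way latency: sending the bits plus travelling through water.
        return self.propagation_delay_s + self.transmission_time_s


def estimate_link(message_bytes: int, range_m: float,
                  bitrate_bps: float, tx_power_w: float) -> LinkEstimate:
    """Estimate latency and transmit energy for a single message (sketch)."""
    if bitrate_bps <= 0:
        raise ValueError("bitrate_bps must be positive")
    propagation = range_m / SOUND_SPEED_M_S
    transmission = message_bytes * 8 / bitrate_bps
    return LinkEstimate(propagation, transmission, tx_power_w * transmission)


# Illustrative values only: a 64-byte command sent over 3 km
# at 1 kb/s with a 20 W transmitter.
estimate = estimate_link(message_bytes=64, range_m=3000.0,
                         bitrate_bps=1000.0, tx_power_w=20.0)
print(estimate.total_latency_s, estimate.tx_energy_j)
```

With these illustrative inputs, the propagation delay (2 s) dominates the transmission time (about 0.5 s), which is why range matters as much as bandwidth when time limits apply.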

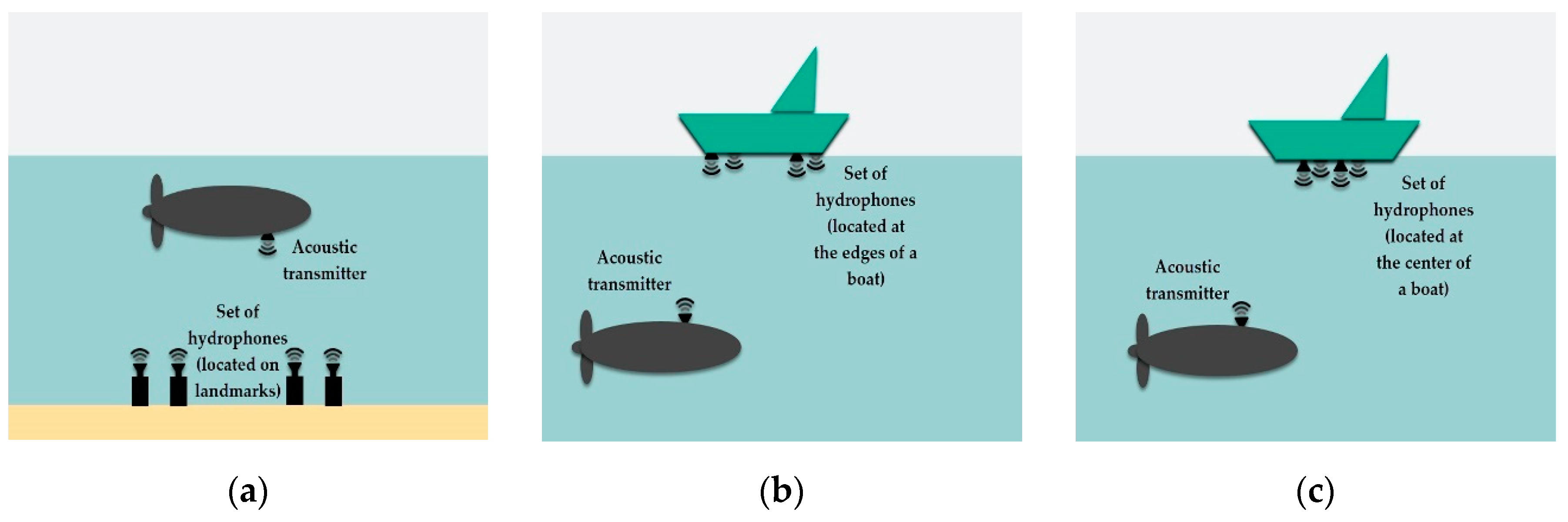

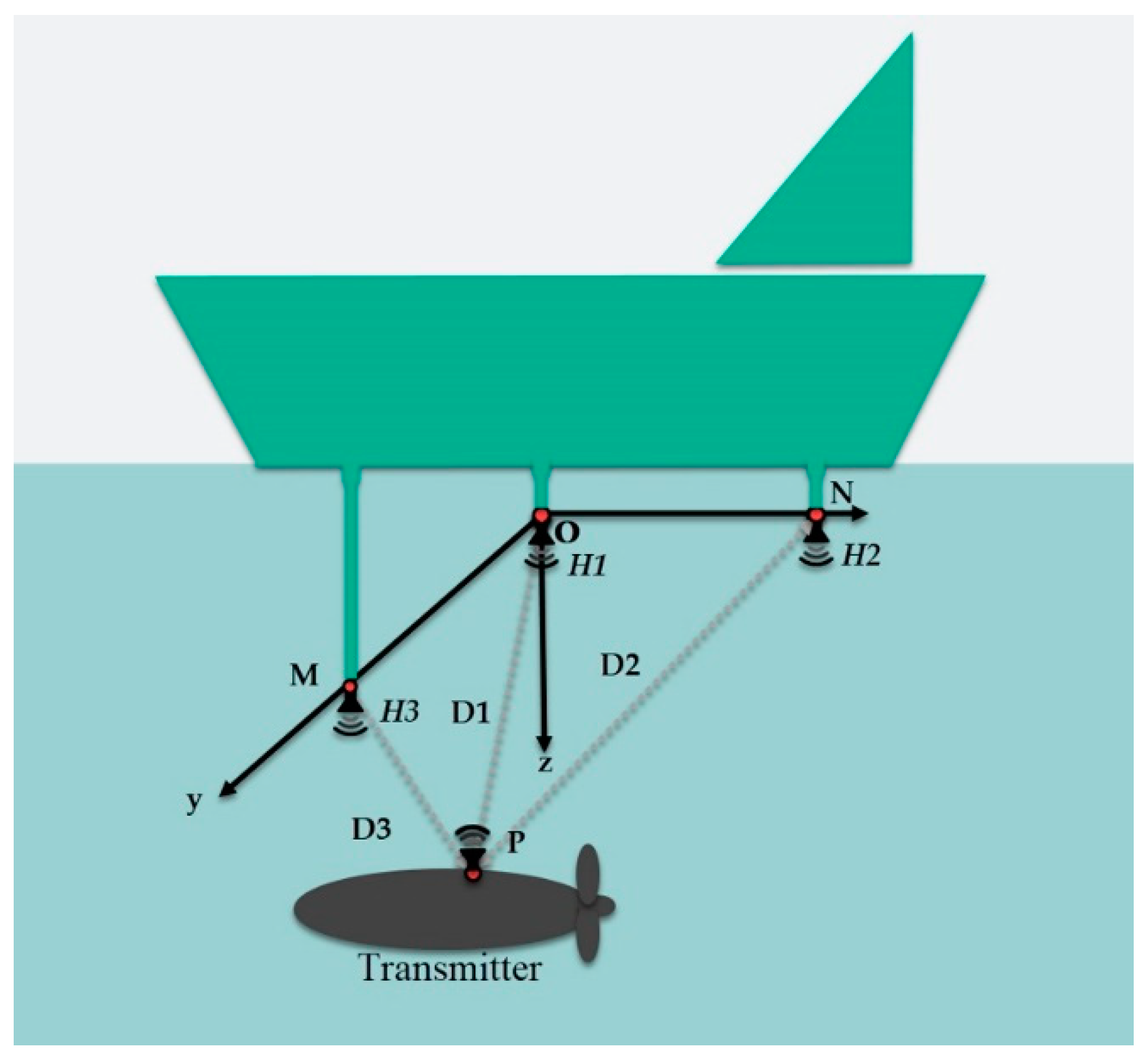

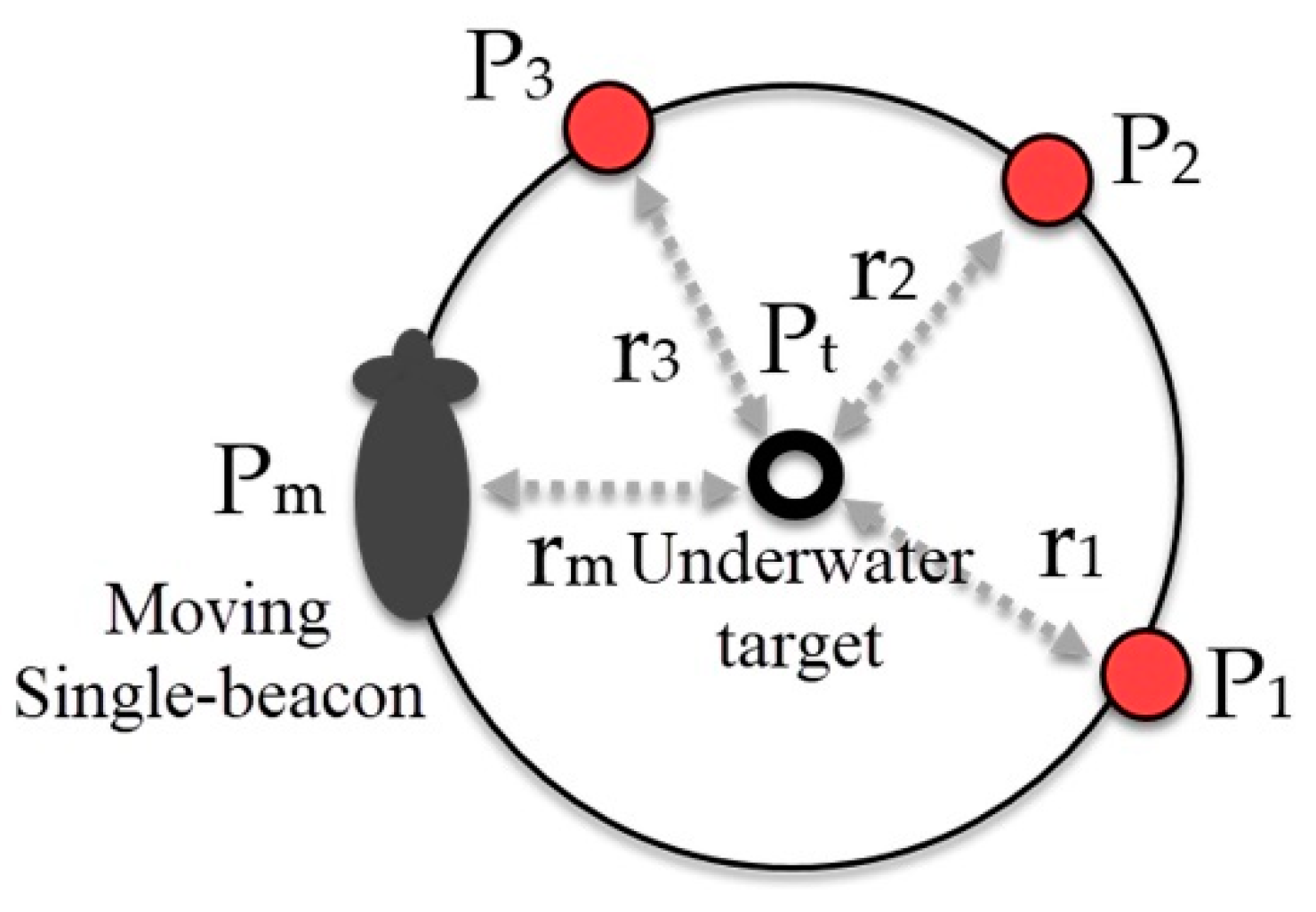

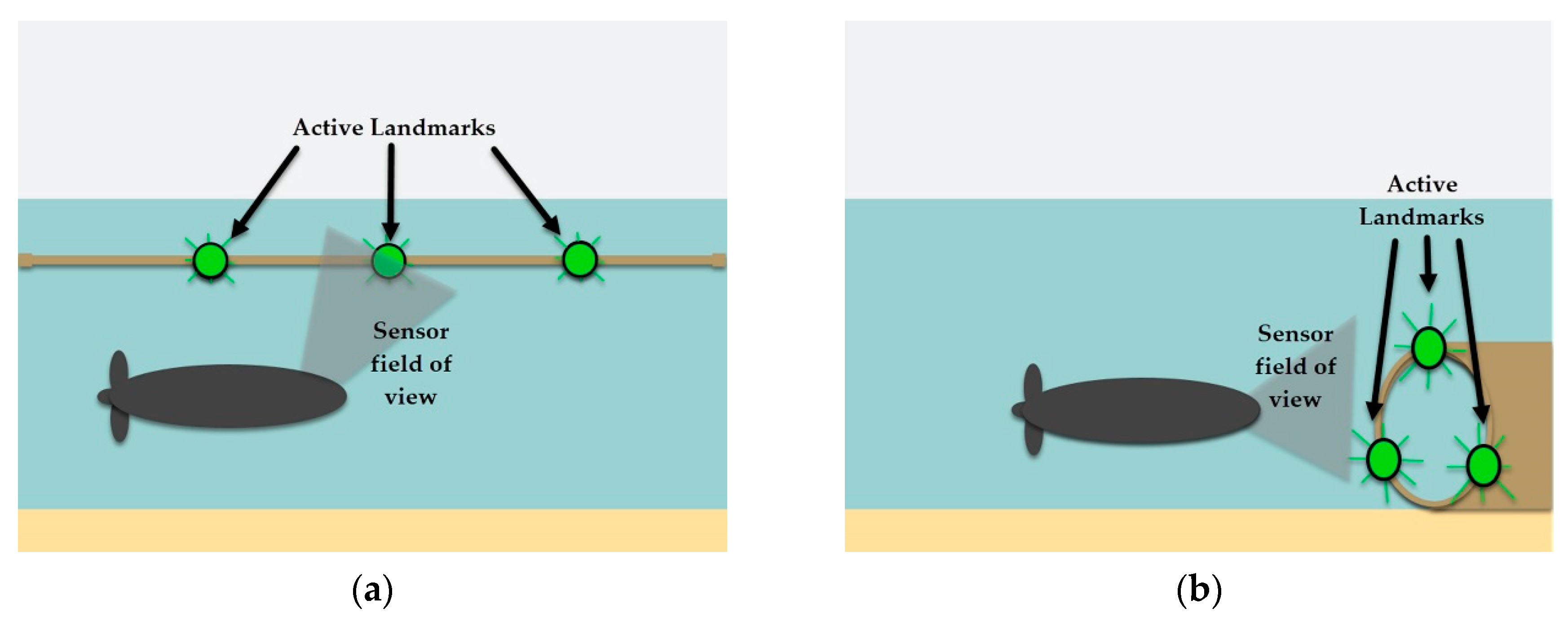

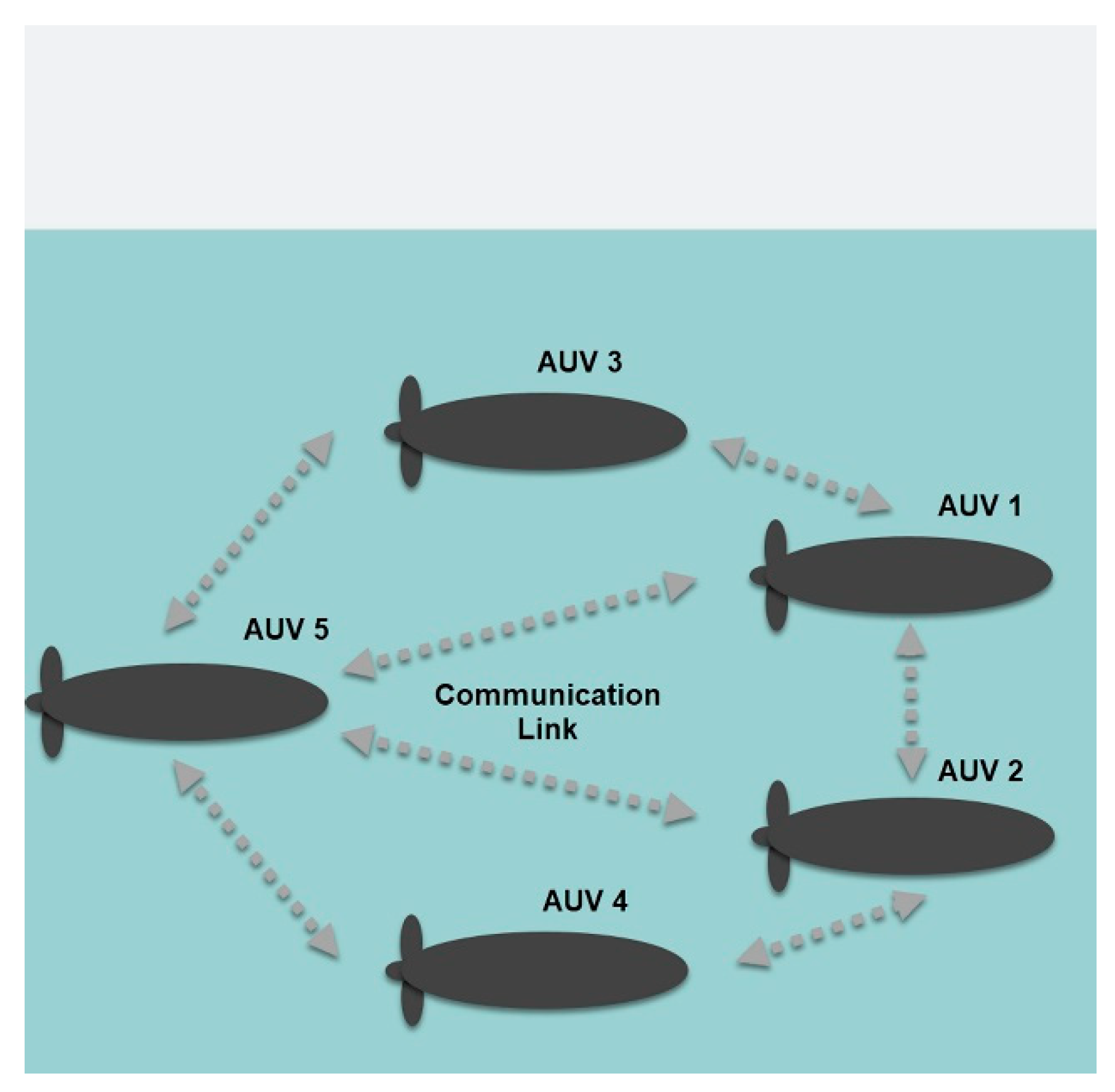

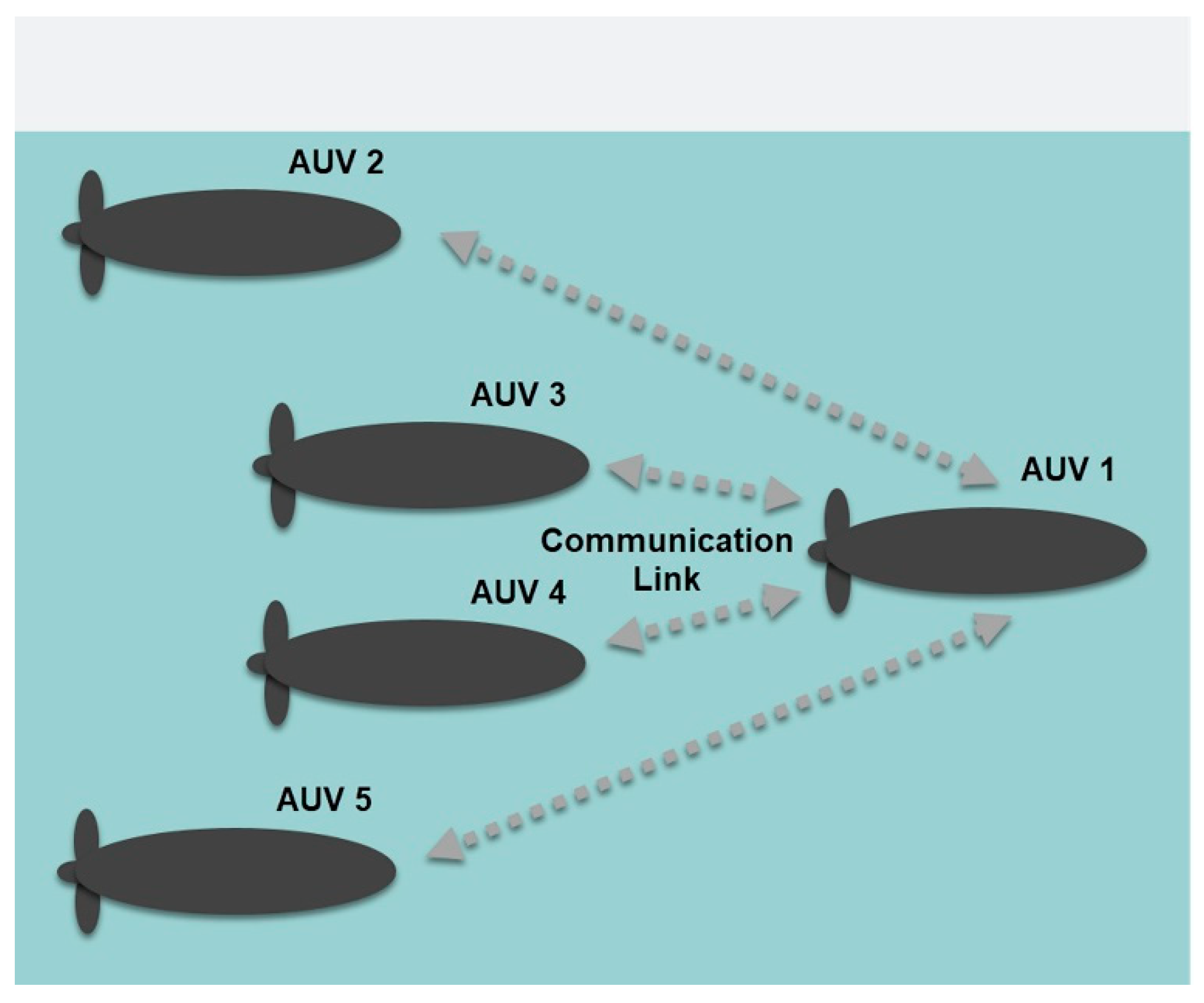

3.2. Collaborative Navigation

3.3. Collaborative Missions

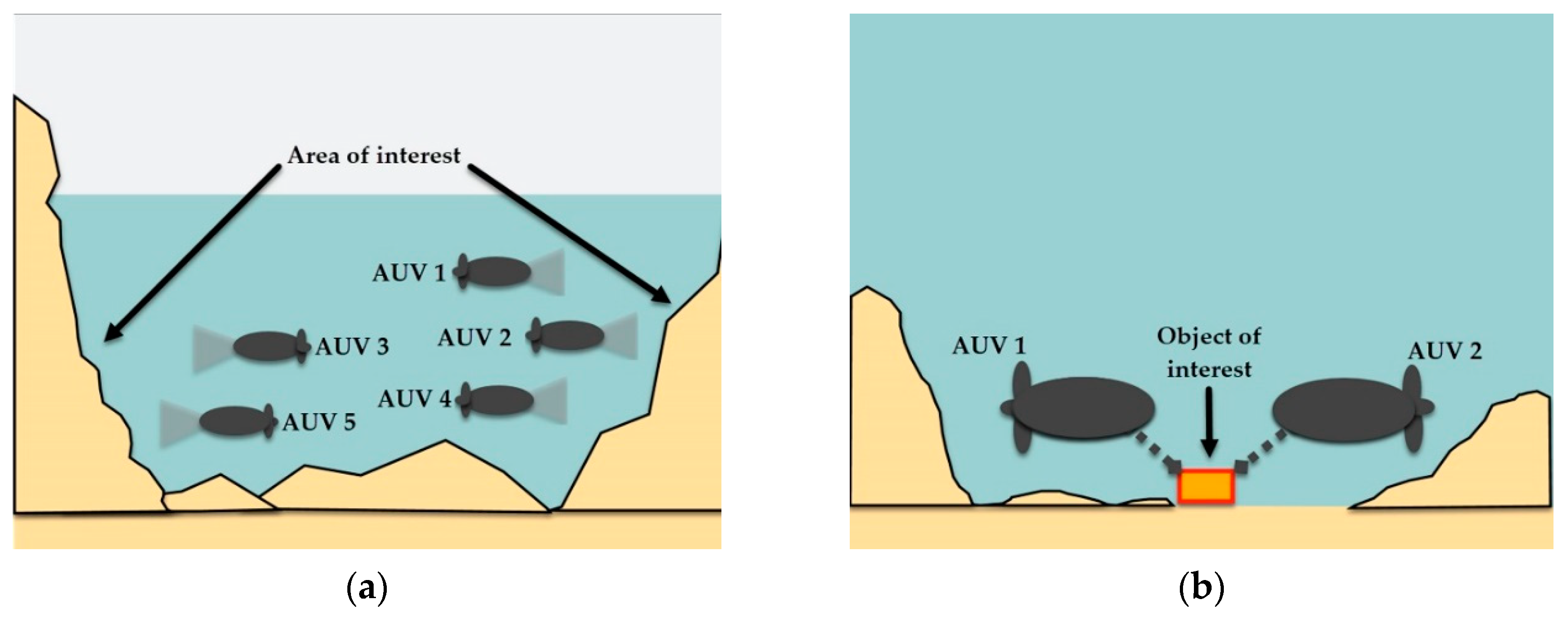

3.3.1. Search Missions

3.3.2. Intervention Missions

3.4. Collaborative AUVs Overview

4. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
| --- | --- |
| AFRL | Autonomous Field Robotics Laboratory |
| AHRS | Attitude and Heading Reference System |
| AUV | Autonomous Underwater Vehicle |
| BITAN | Beijing University of Aeronautics and Astronautics Inertial Terrain-Aided Navigation |
| BK | Bandler and Kohout |
| CRNN | Convolution Recurrent Neural Network |
| DR | Dead-Reckoning |
| DSO | Direct Sparse Odometry |
| DT | Distance Traveled |
| DVL | Doppler Velocity Log |
| EKF | Extended Kalman Filter |
| ELC | Extended Loosely Coupled |
| FLS | Forward-Looking SONAR |
| FTPS | Fitting of Two Point Sets |
| GBNN | Glasius Bio-inspired Neural Network |
| GN | Geophysical Navigation |
| GPS | Global Positioning System |
| HSV | Hue Saturation Value |
| I-AUV | Intervention AUV |
| IMU | Inertial Measurement Unit |
| INS | Inertial Navigation System |
| KF | Kalman Filter |
| LBL | Long Baseline |
| LC | Loosely Coupled |
| LCI | Language-Centered Intelligence |
| MEMS | Micro-Electro-Mechanical System |
| NRT | Near-Real-Time |
| NN | Neural Networks |
| PF | Particle Filter |
| PL-SLAM | Point and Line SLAM |
| PMF | Point Mass Filter |
| PS | Pressure Sensor |
| PTAM | Parallel Tracking And Mapping |
| RMSE | Root-Mean-Square Error |
| RNN | Recurrent Neural Network |
| ROS | Robot Operating System |
| SBL | Short Baseline |
| SINS | Strapdown Inertial Navigation System |
| SITAN | Sandia Inertial Terrain Aided Navigation |
| SLAM | Simultaneous Localization and Mapping |
| SoG | Sum of Gaussian |
| SONAR | Sound Navigation And Ranging |
| SVO | Semi-direct Visual Odometry |
| TAN | Terrain-Aided Navigation |
| TBN | Terrain-Based Navigation |
| TC | Tightly Coupled |
| TERCOM | TERrain COntour-Matching |
| TERPROM | TERrain PROfile Matching |
| TRN | Terrain-Referenced Navigation |
| UKF | Unscented Kalman Filter |
| USBL | Ultra-Short Baseline |
| USV | Unmanned Surface Vehicle |
| VIO | Visual Inertial Odometry |
| VO | Visual Odometry |

References

- Petillo, S.; Schmidt, H. Exploiting adaptive and collaborative AUV autonomy for detection and characterization of internal waves. IEEE J. Ocean. Eng. 2014, 39, 150–164. [Google Scholar] [CrossRef]

- Massot-Campos, M.; Oliver-Codina, G. Optical sensors and methods for underwater 3D reconstruction. Sensors 2015, 15, 31525–31557. [Google Scholar] [CrossRef] [PubMed]

- Xu, J.; Chen, X.; Song, X.; Li, H. Target recognition and location based on binocular vision system of UUV. Chin. Control Conf. CCC 2015, 2015, 3959–3963. [Google Scholar]

- Ridao, P.; Carreras, M.; Ribas, D.; Sanz, P.J.; Oliver, G. Intervention AUVs: The next challenge. Annu. Rev. Control 2015, 40, 227–241. [Google Scholar] [CrossRef]

- Abreu, N.; Matos, A. Minehunting Mission Planning for Autonomous Underwater Systems Using Evolutionary Algorithms. Unmanned Syst. 2014, 2, 323–349. [Google Scholar] [CrossRef]

- Reed, S.; Wood, J.; Haworth, C. The detection and disposal of IED devices within harbor regions using AUVs, smart ROVs and data processing/fusion technology. In Proceedings of the 2010 International WaterSide Security Conference, Carrara, Italy, 3–5 November 2010; pp. 1–7. [Google Scholar]

- Ren, Z.; Chen, L.; Zhang, H.; Wu, M. Research on geomagnetic-matching localization algorithm for unmanned underwater vehicles. In Proceedings of the 2008 International Conference on Information and Automation, Zhangjiajie, China, 20–23 June 2008; pp. 1025–1029. [Google Scholar]

- Leonard, J.J.; Bennett, A.A.; Smith, C.M.; Feder, H.J.S. Autonomous Underwater Vehicle Navigation. MIT Mar. Robot. Lab. Tech. Memo. 1998, 1, 1–17. [Google Scholar]

- Rice, H.; Kelmenson, S.; Mendelsohn, L. Geophysical navigation technologies and applications. In Proceedings of the PLANS 2004 Position Location and Navigation Symposium (IEEE Cat. No.04CH37556), Monterey, CA, USA, 26–29 April 2004; pp. 618–624. [Google Scholar]

- Fallon, M.F.; Kaess, M.; Johannsson, H.; Leonard, J.J. Efficient AUV navigation fusing acoustic ranging and side-scan sonar. In Proceedings of the 2011 IEEE International Conference on Robotics and Automation, Shanghai, China, 9–13 May 2011; pp. 2398–2405. [Google Scholar]

- Bosch, J.; Gracias, N.; Ridao, P.; Istenič, K.; Ribas, D. Close-range tracking of underwater vehicles using light beacons. Sensors 2016, 16, 429. [Google Scholar] [CrossRef] [PubMed]

- Nicosevici, T.; Garcia, R.; Carreras, M.; Villanueva, M. A review of sensor fusion techniques for underwater vehicle navigation. In Proceedings of the Oceans ’04 MTS/IEEE Techno-Ocean ’04 (IEEE Cat. No.04CH37600), Kobe, Japan, 9–12 November 2005; pp. 1600–1605. [Google Scholar]

- Paull, L.; Saeedi, S.; Seto, M.; Li, H. AUV navigation and localization: A review. IEEE J. Ocean. Eng. 2014, 39, 131–149. [Google Scholar] [CrossRef]

- Che, X.; Wells, I.; Dickers, G.; Kear, P.; Gong, X. Re-evaluation of RF electromagnetic communication in underwater sensor networks. IEEE Commun. Mag. 2010, 48, 143–151. [Google Scholar] [CrossRef]

- Li, Z.; Dosso, S.E.; Sun, D. Motion-compensated acoustic localization for underwater vehicles. IEEE J. Ocean. Eng. 2016, 41, 840–851. [Google Scholar] [CrossRef]

- Zhang, J.; Han, Y.; Zheng, C.; Sun, D. Underwater target localization using long baseline positioning system. Appl. Acoust. 2016, 111, 129–134. [Google Scholar] [CrossRef]

- Han, Y.; Zheng, C.; Sun, D. Accurate underwater localization using LBL positioning system. In Proceedings of the OCEANS 2015-MTS/IEEE Washington, Washington, DC, USA, 19–22 October 2016; pp. 1–4. [Google Scholar]

- Zhai, Y.; Gong, Z.; Wang, L.; Zhang, R.; Luo, H. Study of underwater positioning based on short baseline sonar system. Int. Conf. Artif. Intell. Comput. Intell. AICI 2009, 2, 343–346. [Google Scholar]

- Smith, S.M.; Kronen, D. Experimental results of an inexpensive short baseline acoustic positioning system for AUV navigation. Ocean. Conf. Rec. 1997, 1, 714–720. [Google Scholar]

- Costanzi, R.; Monnini, N.; Ridolfi, A.; Allotta, B.; Caiti, A. On field experience on underwater acoustic localization through USBL modems. In Proceedings of the OCEANS 2017, Aberdeen, UK, 19–22 June 2017; pp. 1–5. [Google Scholar]

- Morgado, M.; Oliveira, P.; Silvestre, C. Experimental evaluation of a USBL underwater positioning system. In Proceedings of the ELMAR-2010, Zadar, Croatia, 15–17 September 2010; pp. 485–488. [Google Scholar]

- Xu, Y.; Liu, W.; Ding, X.; Lv, P.; Feng, C.; He, B.; Yan, T. USBL positioning system based Adaptive Kalman filter in AUV. In Proceedings of the 2018 OCEANS-MTS/IEEE Kobe Techno-Oceans (OTO), Kobe, Japan, 28–31 May 2018. [Google Scholar]

- Reis, J.; Morgado, M.; Batista, P.; Oliveira, P.; Silvestre, C. Design and experimental validation of a USBL underwater acoustic positioning system. Sensors 2016, 16, 1491. [Google Scholar] [CrossRef] [PubMed]

- Petillot, Y.R.; Antonelli, G.; Casalino, G.; Ferreira, F. Underwater Robots: From Remotely Operated Vehicles to Intervention-Autonomous Underwater Vehicles. IEEE Robot. Autom. Mag. 2019, 26, 94–101. [Google Scholar] [CrossRef]

- Vaman, D. TRN history, trends and the unused potential. In Proceedings of the 2012 IEEE/AIAA 31st Digital Avionics Systems Conference (DASC), Williamsburg, VA, USA, 14–18 October 2012; pp. 1–16. [Google Scholar]

- Melo, J.; Matos, A. Survey on advances on terrain based navigation for autonomous underwater vehicles. Ocean Eng. 2017, 139, 250–264. [Google Scholar] [CrossRef]

- Jekeli, C. Gravity on Precise, Short-Term, 3-D Free- Inertial Navigation. J. Inst. Navig. 1997, 44, 347–357. [Google Scholar] [CrossRef]

- Perlmutter, M.; Robin, L. High-performance, low cost inertial MEMS: A market in motion! In Proceedings of the 2012 IEEE/ION Position, Location and Navigation Symposium, Myrtle Beach, SC, USA, 23–26 April 2012; pp. 225–229. [Google Scholar]

- Jekeli, C. Precision free-inertial navigation with gravity compensation by an onboard gradiometer. J. Guid. Control. Dyn. 2007, 30, 1214–1215. [Google Scholar] [CrossRef]

- Rice, H.; Mendelsohn, L.; Aarons, R.; Mazzola, D. Next generation marine precision navigation system. In Proceedings of the IEEE 2000 Position Location and Navigation Symposium (Cat. No.00CH37062), San Diego, CA, USA, 13–16 March 2000; pp. 200–206. [Google Scholar]

- ISM3D-Underwater AHRS Sensor-Impact Subsea. Available online: http://www.impactsubsea.co.uk/ism3d-2/ (accessed on 26 December 2019).

- Underwater 9-axis IMU/AHRS sensor-Seascape Subsea BV. Available online: https://www.seascapesubsea.com/product/underwater-9-axis-imu-ahrs-sensor/ (accessed on 26 December 2019).

- Inertial Labs Attitude and Heading Reference Systems (AHRS)-Inertial Labs. Available online: https://inertiallabs.com/products/ahrs/ (accessed on 26 December 2019).

- Elipse 2 series-Miniature Inertial Navigation Sensors. Available online: https://www.sbg-systems.com/products/ellipse-2-series/#ellipse2-a_miniature-ahrs (accessed on 26 December 2019).

- DSPRH-Depth and AHRS Sensor | TMI-Orion.Com. Available online: https://www.tmi-orion.com/en/robotics/underwater-robotics/dsprh-depth-and-ahrs-sensor.htm (accessed on 26 December 2019).

- VN-100-VectorNav Technologies. Available online: https://www.vectornav.com/products/vn-100 (accessed on 21 January 2020).

- XSENS-MTi 600-Series. Available online: https://www.xsens.com/products/mti-600-series (accessed on 21 January 2020).

- Hurtos, N.; Ribas, D.; Cufi, X.; Petillot, Y.; Salvi, J. Fourier-based Registration for Robust Forward-looking Sonar Mosaicing in Low-visibility Underwater Environments. J. Field Robot. 2014, 32, 123–151. [Google Scholar]

- Galarza, C.; Masmitja, I.; Prat, J.; Gomariz, S. Design of obstacle detection and avoidance system for Guanay II AUV. 24th Mediterr. Conf. Control Autom. MED 2016, 5, 410–414. [Google Scholar]

- Braginsky, B.; Guterman, H. Obstacle avoidance approaches for autonomous underwater vehicle: Simulation and experimental results. IEEE J. Ocean. Eng. 2016, 41, 882–892. [Google Scholar] [CrossRef]

- Lin, C.; Wang, H.; Yuan, J.; Yu, D.; Li, C. An improved recurrent neural network for unmanned underwater vehicle online obstacle avoidance. Ocean Eng. 2019, 189, 106327. [Google Scholar] [CrossRef]

- LBL Positioning Systems | EvoLogics. Available online: https://evologics.de/lbl#products (accessed on 26 December 2019).

- GeoTag. Available online: http://www.sercel.com/products/Pages/GeoTag.aspx?gclid=EAIaIQobChMI5sqPkY3S5gIV2v_jBx0apwTAEAAYAiAAEgILm_D_BwE (accessed on 26 December 2019).

- MicroPAP Compact Acoustic Positioning System-Kongsberg Maritime. Available online: https://www.kongsberg.com/maritime/products/Acoustics-Positioning-and-Communication/acoustic-positioning-systems/pap-micropap-compact-acoustic-positioning-system/ (accessed on 26 December 2019).

- Subsonus | Advanced Navigation. Available online: https://www.advancednavigation.com/product/subsonus?gclid=EAIaIQobChMI5sqPkY3S5gIV2v_jBx0apwTAEAAYASAAEgJNpfD_BwE (accessed on 26 December 2019).

- Underwater GPS-Water Linked AS. Available online: https://waterlinked.com/underwater-gps/ (accessed on 26 December 2019).

- Batista, P.; Silvestre, C.; Oliveira, P. Tightly coupled long baseline/ultra-short baseline integrated navigation system. Int. J. Syst. Sci. 2016, 47, 1837–1855. [Google Scholar] [CrossRef]

- Vasilijević, A.; Nad, D.; Mandi, F.; Miškovi, N.; Vukić, Z. Coordinated navigation of surface and underwater marine robotic vehicles for ocean sampling and environmental monitoring. IEEE/ASME Trans. Mechatron. 2017, 22, 1174–1184. [Google Scholar] [CrossRef]

- Sarda, E.I.; Dhanak, M.R. Launch and Recovery of an Autonomous Underwater Vehicle from a Station-Keeping Unmanned Surface Vehicle. IEEE J. Ocean. Eng. 2019, 44, 290–299. [Google Scholar] [CrossRef]

- Masmitja, I.; Gomariz, S.; Del Rio, J.; Kieft, B.; O’Reilly, T. Range-only underwater target localization: Path characterization. In Proceedings of the Oceans 2016 MTS/IEEE Monterey, Monterey, CA, USA, 19–23 September 2016; pp. 1–7. [Google Scholar]

- Bayat, M.; Crasta, N.; Aguiar, A.P.; Pascoal, A.M. Range-Based Underwater Vehicle Localization in the Presence of Unknown Ocean Currents: Theory and Experiments. IEEE Trans. Control Syst. Technol. 2016, 24, 122–139. [Google Scholar] [CrossRef]

- Vallicrosa, G.; Ridao, P. Sum of Gaussian single beacon range-only localization for AUV homing. Annu. Rev. Control 2016, 42, 177–187. [Google Scholar] [CrossRef]

- Zhang, T.; Wang, Z.; Li, Y.; Tong, J. A passive acoustic positioning algorithm based on virtual long baseline matrix window. J. Navig. 2019, 72, 193–206. [Google Scholar] [CrossRef]

- Zhang, F.; Chen, X.; Sun, M.; Yan, M.; Yang, D. Simulation study of underwater passive navigation system based on gravity gradient. Int. Geosci. Remote Sens. Symp. 2004, 5, 3111–3113. [Google Scholar]

- Meduna, D.K. Terrain Relative Navigation for Sensor-Limited Systems with Applications to Underwater Vehicles; Doctor of Philisophy, Standford University,: Stanford, CA, USA, 2011. [Google Scholar]

- Wei, E.; Dong, C.; Yang, Y.; Tang, S.; Liu, J.; Gong, G.; Deng, Z. A Robust Solution of Integrated SITAN with TERCOM Algorithm: Weight-Reducing Iteration Technique for Underwater Vehicles’ Gravity-Aided Inertial Navigation System. Navig. J. Inst. Navig. 2017, 64, 111–122. [Google Scholar] [CrossRef]

- Pei, Y.; Chen, Z.; Hung, J.C. BITAN-II: An improved terrain aided navigation algorithm. IECON Proc. Ind. Electron. Conf. 1996, 3, 1675–1680. [Google Scholar]

- Han, Y.; Wang, B.; Deng, Z.; Fu, M. A combined matching algorithm for underwater gravity-Aided navigation. IEEE/ASME Trans. Mechatron. 2018, 23, 233–241. [Google Scholar] [CrossRef]

- Guo, C.; Li, A.; Cai, H.; Yang, H. Algorithm for geomagnetic navigation and its validity evaluation. In Proceedings of the 2011 IEEE International Conference on Computer Science and Automation Engineering, Shanghai, China, 10–12 June 2011; pp. 573–577. [Google Scholar]

- Zhao, J.; Wang, S.; Wang, A. Study on underwater navigation system based on geomagnetic match technique. In Proceedings of the 2009 9th International Conference on Electronic Measurement & Instruments, Beijing, China, 16–19 August 2009; pp. 3255–3259. [Google Scholar]

- Menq, C.H.; Yau, H.; Lai, G. Automated precision measurement of surface profile in CAD-directed inspection. IEEE Trans. Robot. Autom. 1992, 8, 268–278. [Google Scholar] [CrossRef]

- Wang, S.; Zhang, H.; Kun, Y.; Tian, C. Study on the underwater geomagnetic navigation based on the integration of TERCOM and K-means clustering algorithm. In Proceedings of the OCEANS’10 IEEE SYDNEY, Sydney, Australia, 24–27 May 2010; pp. 1–4. [Google Scholar]

- Jaulin, L. Isobath following using an altimeter as a unique exteroceptive sensor. CEUR Workshop Proc. 2018, 2331, 105–110. [Google Scholar]

- Cowie, M.; Wilkinson, N.; Powlesland, R. Latest Development of the TERPROM ® Digital Terrain System ( DTS ). In Proceedings of the 2008 IEEE/ION Position, Location and Navigation Symposium, Monterey, CA, USA, 5–8 May 2008; pp. 219–1229. [Google Scholar]

- Zhao, L.; Gao, N.; Huang, B.; Wang, Q.; Zhou, J. A novel terrain-aided navigation algorithm combined with the TERCOM algorithm and particle filter. IEEE Sens. J. 2015, 15, 1124–1131. [Google Scholar] [CrossRef]

- Salavasidis, G.; Munafò, A.; Harris, C.A.; Prampart, T.; Templeton, R.; Smart, M.; Roper, D.T.; Pebody, M.; McPhail, S.D.; Rogers, E.; et al. Terrain-aided navigation for long-endurance and deep-rated autonomous underwater vehicles. J. Field Robot. 2019, 36, 447–474. [Google Scholar] [CrossRef]

- Meduna, D.K.; Rock, S.M.; McEwen, R.S. Closed-loop terrain relative navigation for AUVs with non-inertial grade navigation sensors. In Proceedings of the 2010 IEEE/OES Autonomous Underwater Vehicles, Monterey, CA, USA, 1–3 September 2010. [Google Scholar]

- Nootz, G.; Jarosz, E.; Dalgleish, F.R.; Hou, W. Quantification of optical turbulence in the ocean and its effects on beam propagation. Appl. Opt. 2016, 55, 8813. [Google Scholar] [CrossRef]

- Eren, F.; Pe’Eri, S.; Thein, M.W.; Rzhanov, Y.; Celikkol, B.; Swift, M.R. Position, orientation and velocity detection of Unmanned Underwater Vehicles (UUVs) using an optical detector array. Sensors 2017, 17, 1741. [Google Scholar] [CrossRef]

- Zhong, L.; Li, D.; Lin, M.; Lin, R.; Yang, C. A fast binocular localisation method for AUV docking. Sensors 2019, 19, 1735. [Google Scholar] [CrossRef]

- Liu, S.; Xu, H.; Lin, Y.; Gao, L. Visual navigation for recovering an AUV by another AUV in shallow water. Sensors 2019, 19, 1889. [Google Scholar] [CrossRef] [PubMed]

- Monroy-Anieva, J.A.; Rouviere, C.; Campos-Mercado, E.; Salgado-Jimenez, T.; Garcia-Valdovinos, L.G. Modeling and control of a micro AUV: Objects follower approach. Sensors 2018, 18, 2574. [Google Scholar] [CrossRef] [PubMed]

- Prats, M.; Ribas, D.; Palomeras, N.; García, J.C.; Nannen, V.; Wirth, S.; Fernández, J.J.; Beltrán, J.P.; Campos, R.; Ridao, P.; et al. Reconfigurable AUV for intervention missions: A case study on underwater object recovery. Intell. Serv. Robot. 2012, 5, 19–31. [Google Scholar] [CrossRef]

- Durrant-Whyte, H.; Bailey, T. Simultaneous localization and mapping: Part I. IEEE Robot. Autom. Mag. 2006, 13, 99–108. [Google Scholar] [CrossRef]

- Lu, Y.; Song, D. Visual Navigation Using Heterogeneous Landmarks and Unsupervised Geometric Constraints. IEEE Trans. Robot. 2015, 31, 736–749. [Google Scholar] [CrossRef]

- Carrillo, H.; Dames, P.; Kumar, V.; Castellanos, J.A. Autonomous robotic exploration using occupancy grid maps and graph SLAM based on Shannon and Rényi Entropy. In Proceedings of the 2015 IEEE International Conference on Robotics and Automation (ICRA), Seattle, WA, USA, 26–30 May 2015; pp. 487–494. [Google Scholar]

- Pizzoli, M.; Forster, C.; Scaramuzza, D. REMODE: Probabilistic, monocular dense reconstruction in real time. In Proceedings of the 2014 IEEE International Conference on Robotics and Automation (ICRA), Hong Kong, China, 31 May–7 June 2014; pp. 2609–2616. [Google Scholar]

- Brand, C.; Schuster, M.J.; Hirschmüller, H.; Suppa, M. Stereo-vision based obstacle mapping for indoor/outdoor SLAM. IEEE Int. Conf. Intell. Robot. Syst. 2014, 1846–1853. [Google Scholar]

- Hernández, J.D.; Istenič, K.; Gracias, N.; Palomeras, N.; Campos, R.; Vidal, E.; García, R.; Carreras, M. Autonomous underwater navigation and optical mapping in unknown natural environments. Sensors 2016, 16, 1174. [Google Scholar] [CrossRef] [PubMed]

- Palomer, A.; Ridao, P.; Ribas, D. Multibeam 3D underwater SLAM with probabilistic registration. Sensors 2016, 16, 560. [Google Scholar] [CrossRef] [PubMed]

- Roman, C.; Singh, H. Consistency based error evaluation for deep sea bathymetric mapping with robotic vehicles. In Proceedings of the 2006 IEEE International Conference on Robotics and Automation, Orlando, FL, USA, 15–19 May 2006; pp. 3568–3574. [Google Scholar]

- Gomez-Ojeda, R.; Moreno, F.A.; Zuñiga-Noël, D.; Scaramuzza, D.; Gonzalez-Jimenez, J. PL-SLAM: A Stereo SLAM System Through the Combination of Points and Line Segments. IEEE Trans. Robot. 2019, 35, 734–746. [Google Scholar] [CrossRef]

- Wang, R.; Wang, X.; Zhu, M.; Lin, Y. Application of a Real-Time Visualization Method of AUVs in Underwater Visual Localization. Appl. Sci. 2019, 9, 1428. [Google Scholar] [CrossRef]

- Ferrera, M.; Moras, J.; Trouvé-Peloux, P.; Creuze, V.; Dégez, D. The Aqualoc Dataset: Towards Real-Time Underwater Localization from a Visual-Inertial-Pressure Acquisition System. arXiv 2018, arXiv:1809.07076. [Google Scholar]

- Joshi, B.; Rahman, S.; Kalaitzakis, M.; Cain, B.; Johnson, J.; Xanthidis, M.; Karapetyan, N.; Hernandez, A.; Li, A.Q.; Vitzilaios, N.; et al. Experimental Comparison of Open Source Visual-Inertial-Based State Estimation Algorithms in the Underwater Domain; IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS): Macau, China, 2019. [Google Scholar]

- Allotta, B.; Chisci, L.; Costanzi, R.; Fanelli, F.; Fantacci, C.; Meli, E.; Ridolfi, A.; Caiti, A.; Di Corato, F.; Fenucci, D. A comparison between EKF-based and UKF-based navigation algorithms for AUVs localization. In Proceedings of the OCEANS 2015-Genova, Genoa, Italy, 18–21 May 2015; pp. 1–5. [Google Scholar]

- Li, W.; Zhang, L.; Sun, F.; Yang, L.; Chen, M.; Li, Y. Alignment calibration of IMU and Doppler sensors for precision INS/DVL integrated navigation. Optik (Stuttg) 2015, 126, 3872–3876. [Google Scholar] [CrossRef]

- Rossi, A.; Pasquali, M.; Pastore, M. Performance analysis of an inertial navigation algorithm with DVL auto-calibration for underwater vehicle. In Proceedings of the 2014 DGON Inertial Sensors and Systems (ISS), Karlsruhe, Germany, 16–17 September 2014; pp. 1–19. [Google Scholar]

- Gao, W.; Li, J.; Zhou, G.; Li, Q. Adaptive Kalman filtering with recursive noise estimator for integrated SINS/DVL systems. J. Navig. 2015, 68, 142–161. [Google Scholar] [CrossRef]

- Liu, P.; Wang, B.; Deng, Z.; Fu, M. INS/DVL/PS Tightly Coupled Underwater Navigation Method with Limited DVL Measurements. IEEE Sens. J. 2018, 18, 2994–3002. [Google Scholar] [CrossRef]

- Tal, A.; Klein, I.; Katz, R. Inertial navigation system/doppler velocity log (INS/DVL) fusion with partial dvl measurements. Sensors 2017, 17, 415. [Google Scholar] [CrossRef]

- Zhang, T.; Shi, H.; Chen, L.; Li, Y.; Tong, J. AUV positioning method based on tightly coupled SINS/LBL for underwater acoustic multipath propagation. Sensors 2016, 16, 357. [Google Scholar] [CrossRef]

- Zhang, T.; Chen, L.; Li, Y. AUV underwater positioning algorithm based on interactive assistance of SINS and LBL. Sensors 2016, 16, 42. [Google Scholar] [CrossRef]

- Manzanilla, A.; Reyes, S.; Garcia, M.; Mercado, D.; Lozano, R. Autonomous navigation for unmanned underwater vehicles: Real-time experiments using computer vision. IEEE Robot. Autom. Lett. 2019, 4, 1351–1356. [Google Scholar] [CrossRef]

- Li, D.; Ji, D.; Liu, J.; Lin, Y. A Multi-Model EKF Integrated Navigation Algorithm for Deep Water AUV. Int. J. Adv. Robot. Syst. 2016, 3. [Google Scholar] [CrossRef]

- Chen, Y.; Zheng, D.; Miller, P.A.; Farrell, J.A. Underwater Inertial Navigation with Long Baseline Transceivers: A Near-Real-Time Approach. IEEE Trans. Control Syst. Technol. 2016, 24, 240–251. [Google Scholar] [CrossRef]

- Ferrera, M.; Creuze, V.; Moras, J.; Trouvé-Peloux, P. AQUALOC: An underwater dataset for visual–inertial–pressure localization. Int. J. Rob. Res. 2019, 38, 1549–1559. [Google Scholar] [CrossRef]

- Autonomous Fiel Robotic Laboratory-Datasets. Available online: https://afrl.cse.sc.edu/afrl/resources/datasets/ (accessed on 30 December 2019).

- Hwang, J.; Bose, N.; Fan, S. AUV Adaptive Sampling Methods: A Review. Appl. Sci. 2019, 9, 3145. [Google Scholar] [CrossRef]

- Farr, N.; Bowen, A.; Ware, J.; Pontbriand, C.; Tivey, M. An integrated, underwater optical/acoustic communications system. In Proceedings of the OCEANS’10 IEEE SYDNEY, Sydney, Australia, 24–27 May 2010. [Google Scholar]

- Stojanovic, M.; Beaujean, P.-P.J. Acoustic Communication. In Springer Handbook of Ocean Engineering; Dhanak, M.R., Xiros, N.I., Eds.; Springer International Publishing: Cham, Switzerland, 2016; pp. 359–386. ISBN 978-3-319-16649-0. [Google Scholar]

- 920 Series ATM-925-Acoustic Modems-Benthos. Available online: http://www.teledynemarine.com/920-series-atm-925?ProductLineID=8 (accessed on 26 December 2019).

- Micromodem: Acoustic Communications Group. Available online: https://acomms.whoi.edu/micro-modem (accessed on 26 December 2019).

- LinkQuest. Available online: http://www.link-quest.com/html/uwm1000.htm (accessed on 26 December 2019).

- 48/78 Devices | EvoLogics. Available online: https://evologics.de/acoustic-modem/48-78 (accessed on 26 December 2019).

- Mats 3G, Underwater Acoustics-Sercel. Available online: http://www.sercel.com/products/Pages/mats3g.aspx (accessed on 26 December 2019).

- L3Harris | Acoustic Modem GPM300. Available online: https://www.l3oceania.com/mission-systems/undersea-communications/acoustic-modem.aspx (accessed on 26 December 2019).

- Micron Modem | Tritech | Outstanding Performance in Underwater Technology. Available online: https://www.tritech.co.uk/product/micron-data-modem (accessed on 26 December 2019).

- M64 Acoustic Modem for Wireless Underwater Communication. Available online: https://bluerobotics.com/store/comm-control-power/acoustic-modems/wl-11003-1/ (accessed on 26 December 2019).

- Yan, Z.; Wang, L.; Wang, T.; Yang, Z.; Chen, T.; Xu, J. Polar cooperative navigation algorithm for multi-unmanned underwater vehicles considering communication delays. Sensors 2018, 18, 1044. [Google Scholar] [CrossRef] [PubMed]

- Giodini, S.; Binnerts, B. Performance of acoustic communications for AUVs operating in the North Sea. In Proceedings of the OCEANS 2016 MTS/IEEE Monterey, Monterey, CA, USA, 19–23 September 2016; pp. 1–6. [Google Scholar]

- Yang, T.; Yu, S.; Yan, Y. Formation control of multiple underwater vehicles subject to communication faults and uncertainties. Appl. Ocean Res. 2019, 82, 109–116. [Google Scholar] [CrossRef]

- Abad, A.; DiLeo, N.; Fregene, K. Ieee Decentralized Model Predictive Control for UUV Collaborative Missions. In Proceedings of the OCEANS 2017-Anchorage, Anchorage, AK, USA, 18–21 September 2017. [Google Scholar]

- Hallin, N.J.; Horn, J.; Taheri, H.; O’Rourke, M.; Edwards, D. Message anticipation applied to collaborating unmanned underwater vehicles. In Proceedings of the OCEANS’11 MTS/IEEE KONA, Waikoloa, HI, USA, 19–22 September 2017; pp. 1–10. [Google Scholar]

- Beidler, G.; Bean, T.; Merrill, K.; O’Rourke, M.; Edwards, D. From language to code: Implementing AUVish. In Proceedings of the UUST07, Kos, Greece, 7–9 May 2007; pp. 19–22. [Google Scholar]

- Rajala, A.; O’Rourke, M.; Edwards, D.B. AUVish: An application-based language for cooperating AUVs. In Proceedings of the OCEANS 2006, Boston, MA, USA, 18–21 September 2006. [Google Scholar]

- Potter, J.; Alves, J.; Green, D.; Zappa, G.; Nissen, I.; McCoy, K. The JANUS underwater communications standard. Underw. Commun. Networking, UComms 2014, 1, 1–4. [Google Scholar]

- Petroccia, R.; Alves, J.; Zappa, G. Fostering the use of JANUS in operationally-relevant underwater applications. In Proceedings of the 2016 IEEE Third Underwater Communications and Networking Conference (UComms), Lerici, Italy, 30 August–1 September 2016; pp. 1–5. [Google Scholar]

- Alves, J.; Furfaro, T.; Lepage, K.; Munafo, A.; Pelekanakis, K.; Petroccia, R.; Zappa, G. Moving JANUS forward: A look into the future of underwater communications interoperability. In Proceedings of the OCEANS 2016 MTS/IEEE Monterey, Monterey, CA, USA, 19–23 September 2016; pp. 1–6. [Google Scholar]

- Petroccia, R.; Alves, J.; Zappa, G. JANUS-based services for operationally relevant underwater applications. IEEE J. Ocean. Eng. 2017, 42, 994–1006. [Google Scholar] [CrossRef]

- McCoy, K.; Djapic, V.; Ouimet, M. JANUS: Lingua Franca. In Proceedings of the OCEANS 2016 MTS/IEEE Monterey, Monterey, CA, USA, 19–23 September 2016; pp. 1–4. [Google Scholar]

- Wiener, T.F.; Karp, S. The Role of Blue/Green Laser Systems in Strategic Submarine Communications. IEEE Trans. Commun. 1980, 28, 1602–1607. [Google Scholar] [CrossRef]

- Puschell, J.J.; Giannaris, R.J.; Stotts, L. The Autonomous Data Optical Relay Experiment: First two way laser communication between an aircraft and submarine. In Proceedings of the National Telesystems Conference, NTC IEEE 1992, Washington, DC, USA, 19–20 May 1992. [Google Scholar]

- Enqi, Z.; Hongyuan, W. Research on spatial spreading effect of blue-green laser propagation through seawater and atmosphere. In Proceedings of the 2009 International Conference on E-Business and Information System Security, Wuhan, China, 23–24 May 2009; pp. 1–4. [Google Scholar]

- Sangeetha, R.S.; Awasthi, R.L.; Santhanakrishnan, T. Design and analysis of a laser communication link between an underwater body and an air platform. In Proceedings of the 2016 International Conference on Next Generation Intelligent Systems (ICNGIS), Kottayam, India, 1–3 September 2017; pp. 1–5. [Google Scholar]

- Cossu, G.; Corsini, R.; Khalid, A.M.; Balestrino, S.; Coppelli, A.; Caiti, A.; Ciaramella, E. Experimental demonstration of high speed underwater visible light communications. In Proceedings of the 2013 2nd International Workshop on Optical Wireless Communications (IWOW), Newcastle upon Tyne, UK, 21–21 October 2013; pp. 11–15. [Google Scholar]

- Luqi, L. Utilization and risk of undersea communications. In Proceedings of the Ocean MTS/IEEE Monterey, Monterey, CA, USA, 19–23 September 2016; pp. 1–7. [Google Scholar]

- Yan, Z.; Yang, Z.; Yue, L.; Wang, L.; Jia, H.; Zhou, J. Discrete-time coordinated control of leader-following multiple AUVs under switching topologies and communication delays. Ocean Eng. 2019, 172, 361–372. [Google Scholar] [CrossRef]

- Lichuan, Z.; Jingxiang, F.; Tonghao, W.; Jian, G.; Ru, Z. A new algorithm for collaborative navigation without time synchronization of multi-UUVS. Ocean. Aberdeen 2017, 2017, 1–6. [Google Scholar]

- Yan, Z.; Xu, D.; Chen, T.; Zhang, W.; Liu, Y. Leader-follower formation control of UUVs with model uncertainties, current disturbances, and unstable communication. Sensors 2018, 18, 662.

- Cui, J.; Zhao, L.; Ma, Y.; Yu, J. Adaptive consensus tracking control for multiple autonomous underwater vehicles with uncertain parameters. ICIC Express Lett. 2019, 13, 191–200.

- Teck, T.Y.; Chitre, M.; Hover, F.S. Collaborative bathymetry-based localization of a team of autonomous underwater vehicles. Proc. IEEE Int. Conf. Robot. Autom. 2014, 2475–2481.

- Tan, Y.T.; Gao, R.; Chitre, M. Cooperative path planning for range-only localization using a single moving beacon. IEEE J. Ocean. Eng. 2014, 39, 371–385.

- De Palma, D.; Indiveri, G.; Parlangeli, G. Multi-vehicle relative localization based on single range measurements. IFAC-PapersOnLine 2015, 28, 17–22.

- Baylog, J.G.; Wettergren, T.A. A ROC-Based approach for developing optimal strategies in UUV search planning. IEEE J. Ocean. Eng. 2018, 43, 843–855.

- Li, J.; Zhang, J.; Zhang, G.; Zhang, B. An adaptive prediction target search algorithm for multi-AUVs in an unknown 3D environment. Sensors 2018, 18, 3853.

- Lv, S.; Zhu, Y. A Multi-AUV Searching Algorithm Based on Neuron Network with Obstacle. Int. Symp. Auton. Syst. 2019, 131–136.

- Sun, B.; Zhu, D. Complete Coverage Autonomous Underwater Vehicles Path Planning Based on Glasius Bio-Inspired Neural Network Algorithm for Discrete and Centralized Programming. IEEE Trans. Cogn. Dev. Syst. 2019, 73–84.

- Yan, Z.; Liu, X.; Zhou, J.; Wu, D. Coordinated Target Tracking Strategy for Multiple Unmanned Underwater Vehicles with Time Delays. IEEE Access 2018, 6, 10348–10357.

- Palomeras, N.; Peñalver, A.; Massot-Campos, M.; Negre, P.L.; Fernández, J.J.; Ridao, P.; Sanz, P.J.; Oliver-Codina, G. I-AUV docking and panel intervention at sea. Sensors 2016, 16, 1673.

- Ribas, D.; Ridao, P.; Turetta, A.; Melchiorri, C.; Palli, G.; Fernandez, J.J.; Sanz, P.J. I-AUV Mechatronics Integration for the TRIDENT FP7 Project. IEEE/ASME Trans. Mechatron. 2015, 20, 2583–2592.

- Casalino, G.; Caccia, M.; Caselli, S.; Melchiorri, C.; Antonelli, G.; Caiti, A.; Indiveri, G.; Cannata, G.; Simetti, E.; Torelli, S.; et al. Underwater intervention robotics: An outline of the Italian national project Maris. Mar. Technol. Soc. J. 2016, 50, 98–107.

- Simetti, E.; Wanderlingh, F.; Torelli, S.; Bibuli, M.; Odetti, A.; Bruzzone, G.; Rizzini, D.L.; Aleotti, J.; Palli, G.; Moriello, L.; et al. Autonomous Underwater Intervention: Experimental Results of the MARIS Project. IEEE J. Ocean. Eng. 2018, 43, 620–639.

- Simetti, E.; Casalino, G.; Manerikar, N.; Sperinde, A.; Torelli, S.; Wanderlingh, F. Cooperation between autonomous underwater vehicle manipulations systems with minimal information exchange. In Proceedings of the OCEANS 2015-Genova, Genoa, Italy, 18–21 May 2015.

- Simetti, E.; Casalino, G. Manipulation and Transportation with Cooperative Underwater Vehicle Manipulator Systems. IEEE J. Ocean. Eng. 2017, 42, 782–799.

- Conti, R.; Meli, E.; Ridolfi, A.; Allotta, B. An innovative decentralized strategy for I-AUVs cooperative manipulation tasks. Rob. Auton. Syst. 2015, 72, 261–276.

- Cataldi, E.; Chiaverini, S.; Antonelli, G. Cooperative Object Transportation by Two Underwater Vehicle-Manipulator Systems. In Proceedings of the 2018 26th Mediterranean Conference on Control and Automation (MED), Zadar, Croatia, 19–22 June 2018; pp. 161–166.

- Heshmati-alamdari, S.; Karras, G.C.; Kyriakopoulos, K.J. A Distributed Predictive Control Approach for Cooperative Manipulation of Multiple Underwater Vehicle Manipulator Systems. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; pp. 4626–4632.

- Prats, M.; Perez, J.; Fernandez, J.J.; Sanz, P.J. An open source tool for simulation and supervision of underwater intervention missions. In Proceedings of the 2012 IEEE/RSJ International Conference on Intelligent Robots and Systems, Vilamoura, Portugal, 7–12 October 2012; pp. 2577–2582.

- Hernández-Alvarado, R.; García-Valdovinos, L.G.; Salgado-Jiménez, T.; Gómez-Espinosa, A.; Fonseca-Navarro, F. Neural network-based self-tuning PID control for underwater vehicles. Sensors 2016, 16, 1429.

- García-Valdovinos, L.G.; Fonseca-Navarro, F.; Aizpuru-Zinkunegi, J.; Salgado-Jiménez, T.; Gómez-Espinosa, A.; Cruz-Ledesma, J.A. Neuro-Sliding Control for Underwater ROV’s Subject to Unknown Disturbances. Sensors 2019, 19, 2943.

| Manufacturer | Product Name | Heading Accuracy/Resolution | Pitch and Roll Accuracy/Resolution | Data Rate (Hz) | Depth Rating (m) |
|---|---|---|---|---|---|
| Impact Subsea | ISM3D [31] | ±0.5°/0.1° | ±0.07°/0.01° | 250 | 1000–6000 |
| Seascape Subsea | Seascape UW9XIMU-01 [32] | ±0.5°/0.01° | ±0.5°/0.01° | 400 | 750 |
| Inertial Labs | AHRS-10P [33] | ±0.6°/0.01° | ±0.08°/0.01° | 200 | 600 |
| SBG Systems | Ellipse2-N [34] | ±1.0°/- | ±0.1°/- | 200 | - |
| TMI-Orion | DSPRH [35] | ±0.5°/0.1° | ±0.5°/0.1° | 100 | 500–2000 |
| VectorNav | VN-100 [36] | ±2.0°/0.05° | ±1.0°/0.05° | 400 | - |
| XSENS | MTi-600 [37] | ±1.0°/- | ±0.2°/- | 400 | - |
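
To give a feel for what the heading accuracies above mean for dead-reckoning, the following minimal sketch converts a heading error into lateral drift per kilometre travelled. It is only an illustration under strong assumptions that are not taken from the data sheets: the quoted accuracy is treated as a constant heading bias, the vehicle follows a straight line, and speed is measured perfectly. The function name `cross_track_error` is hypothetical.

```python
import math


def cross_track_error(distance_m: float, heading_error_deg: float) -> float:
    """Lateral offset (m) after travelling distance_m with a constant heading bias.

    Assumption: straight-line travel and no other error source.
    """
    return distance_m * math.sin(math.radians(heading_error_deg))


# Heading accuracies (degrees) copied from the table above.
heading_accuracy_deg = {
    "ISM3D": 0.5,
    "Seascape UW9XIMU-01": 0.5,
    "AHRS-10P": 0.6,
    "Ellipse2-N": 1.0,
    "DSPRH": 0.5,
    "VN-100": 2.0,
    "MTi-600": 1.0,
}

for name, error_deg in heading_accuracy_deg.items():
    drift = cross_track_error(1000.0, error_deg)
    print(f"{name}: about {drift:.1f} m of lateral drift per km")
```

Under these assumptions, a ±0.5° heading error corresponds to roughly 8.7 m of lateral drift per kilometre, which is one reason dead-reckoning is usually combined with external position fixes.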

| Name | Type | Accuracy Range (m) | Operating Depth Range (m) |
|---|---|---|---|
| EvoLogics S2C R LBL [42] | LBL | Up to 0.15 | 200–6000 |
| GeoTag seabed positioning system [43] | LBL | Up to 0.20 | 500 |
| µPAP acoustic positioning [44] | USBL | Not specified | 4000 |
| SUBSONUS [45] | USBL | 0.1–5 | 1000 |
| UNDERWATER GPS [46] | SBL/USBL | 1% of distance range (1 m for a 100 m operating range) | 100 |

| Method | Type | Description | Applications |
|---|---|---|---|
| Landmark-based maps | 2D/3D | Models the environment as a set of landmarks extracted from features such as points, lines, and corners. | Localization and mapping [75]. |
| Occupancy grid maps | 2D | Discretizes the environment into cells and assigns each cell a probability of occupancy. | Exploring and mapping [76]. |
| Raw dense representations | 3D | Describes the 3D geometry by a large unstructured set of points or polygons. | Obstacle avoidance and visualization [77]. |
| Boundary and spatial-partitioning dense representations | 3D | Generates representations of boundaries, surfaces, and volumes. | Obstacle avoidance and manipulation [78]. |

| Navigation Technology | Approaches | Information Available | Accuracy | Range | Results |
|---|---|---|---|---|---|
| Acoustic | SONAR | Distance from obstacles. | Depending on the distance from obstacles, from 5–10 cm to more than a meter (10–120 cm). | From 5 m up to hundreds of meters from obstacles. | Experimental in real conditions. |
| Acoustic | Acoustic range (LBL, SBL, USBL). | Position. | Depending on the distance from the hydrophone array and the frequency, from some centimeters up to tens of meters. | Up to tens of meters from the array. | Experimental in real conditions. |
| Geophysical | Gravity, geomagnetic, TAN, TRN, TBN. | Position. | Meters, depending on the map resolution and the filter applied. | Kilometers from initial position. | Simulation, experimental under controlled conditions. |
| Optical | Light sensors. | Position and orientation relative to a target. | Up to 20 cm for position and 10° for orientation. | 1–20 m from markers. | Simulation, experimental under controlled conditions. |
| Optical | Cameras. | Position and orientation relative to a target. | Up to 1 cm for position and 3° for orientation. | 1–20 m from markers. | Experimental in real conditions. |
| SLAM | Acoustic. | Position and orientation relative to the mapped environment. | From some centimeters up to more than a meter. | Up to tens of meters from targets. | Experimental in real conditions. |
| SLAM | Cameras. | Position and orientation relative to the mapped environment. | From some centimeters up to more than a meter. | 1–10 m from targets. | Simulation, experimental under controlled conditions. |
| Sensor fusion | ELC, LC, TC. | Position, orientation, and velocity. | Depending on the approach and the filter applied, the accumulated error can be reduced to a few meters (5–20 m). | Kilometers from initial position. | Simulation, experimental. |

| Name | Max Bit Rate (bps) | Range (m) | Frequency Band (kHz) |
|---|---|---|---|
| Teledyne Benthos ATM-925 [102] | 360 | 2000–6000 | 9–27 |
| WHOI Micromodem [103] | 5400 | 3000 | 16–21 |
| LinkQuest UWM 1000 [104] | 7000 | 350 | 27–45 |
| EvoLogics S2C R 48/78 [105] | 31,200 | 1000 | 48–78 |
| Sercel MATS 3G 34 kHz [106] | 24,600 | 5000 | 30–39 |
| L3 Oceania GPM-300 [107] | 1200 | 45,000 | Not specified |
| Tritech Micron Data Modem [108] | 40 | 500 | 20–28 |
| Blue Robotics Water Linked M64 Acoustic Modem [109] | 64 | 200 | 100–200 |
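
Because both message length and range affect how long an acoustic exchange takes, a rough one-way latency estimate can be sketched from the figures in the table above. This is an optimistic illustration, not a link-budget calculation: it assumes a nominal sound speed of 1500 m/s in seawater, that the maximum bit rate is available at the maximum listed range, and that there is no protocol overhead or retransmission. The function name `one_way_latency_s` and the 256-byte message are hypothetical.

```python
SOUND_SPEED_M_S = 1500.0  # assumed nominal speed of sound in seawater


def one_way_latency_s(message_bits: int, bit_rate_bps: float, range_m: float,
                      sound_speed_m_s: float = SOUND_SPEED_M_S) -> float:
    """Transmission time plus acoustic propagation delay, in seconds."""
    transmission_s = message_bits / bit_rate_bps
    propagation_s = range_m / sound_speed_m_s
    return transmission_s + propagation_s


# (max bit rate in bps, range in m) copied from the table above.
modems = {
    "WHOI Micromodem": (5400, 3000),
    "EvoLogics S2C R 48/78": (31200, 1000),
    "Tritech Micron Data Modem": (40, 500),
}

message_bits = 256 * 8  # hypothetical 256-byte status message

for name, (bit_rate_bps, range_m) in modems.items():
    latency = one_way_latency_s(message_bits, bit_rate_bps, range_m)
    print(f"{name}: about {latency:.1f} s one way")
```

With these numbers the propagation delay dominates for the faster modems at long range, while the transmission time dominates for the low-rate modem, so the choice depends on whether the mission exchanges short commands or larger payloads.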

| Missions | Applications | Approaches | | Results |
|---|---|---|---|---|
| Collaborative surveillance | Searching, tracking, mapping, inspecting | Game theory. | Acoustic systems | Simulation and experimental |
| Collaborative surveillance | Searching, tracking, mapping, inspecting | Dynamic prediction theory. Glasius bio-inspired neural networks. Consensus dynamics. | Active landmarks and cameras | Simulation and experimental |
| Collaborative intervention | Recovering, manipulating | Decentralized strategies. Minimal information exchange strategy. Nonlinear model predictive control. | | Simulation |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

González-García, J.; Gómez-Espinosa, A.; Cuan-Urquizo, E.; García-Valdovinos, L.G.; Salgado-Jiménez, T.; Cabello, J.A.E. Autonomous Underwater Vehicles: Localization, Navigation, and Communication for Collaborative Missions. Appl. Sci. 2020, 10, 1256. https://doi.org/10.3390/app10041256
