1. Introduction

Many kinds of sound signals are used to convey information in Japanese public spaces. These signals can be divided into three groups. First, alarm signals are used to provide information about potentially life-threatening events. Second, attention signals are used to provide information about basic infrastructure, such as the locations of stairs, toilets, and ticket gates. Third, announcements are used to convey information via speech.

The guidelines for improving the facilitation of transportation for passenger facilities of public transportation in Japan [1] recommend the use of simulated birdsong for attention signals that inform visually impaired people about the location of stairs in train stations. The guidelines also require that the sound pressure levels (SPLs) of the signals be adjusted to avoid excessive or insufficient volume and to cause no discomfort to users, staff, or surrounding residents. However, a previous study found that more than 40% of visually impaired people reported that such signals were hard to detect [2]. Thus, there is a need for attention signals that are not unpleasant even when presented at high SPLs.

Introducing birdsong into public spaces has been found to increase the subjective pleasantness of soundscapes [3]. Among natural sounds, birdsong has been judged the most effective and beneficial type of sound for improving sound environments [4,5,6]. Similarly, insect song has been shown to have a positive effect on soundscapes [5,7]. Birdsong and insect song are therefore expected to be less likely to degrade sound environments in public spaces, even when presented at high SPLs.

Previous soundscape studies of birdsong and insect song have reported positive effects. However, users’ preferences for various types of birdsong and insect song are currently unclear. Specific types of birdsong or insect song may have a more positive impact on sound environments in public spaces. The aim of the current study was to clarify which types of birdsong and/or insect song are preferred, and to determine the dominant physical characteristics related to these preferences.

2. Materials and Methods

2.1. Subjective Preference Tests

Since the guidelines [1] recommend a duration of 5 s or less for the sound signals, birdsongs and insect songs consisting of a single phrase shorter than 5 s were selected for the subjective preference tests. We tested 18 types of birdsong that were recorded using a dummy-head microphone (KU100, Neumann, Berlin, Germany) at a sampling rate of 48 kHz and a resolution of 24 bits (WILD BIRDS, Victor, Tokyo, Japan). The birdsong stimuli are summarized in Table 1.

Figure 1 and Figure 2 show the temporal waveforms and spectrograms of the 18 birdsong stimuli. We tested 16 types of insect song that were recorded using a linear pulse code modulation (PCM) recorder (LS-10, OLYMPUS, Tokyo, Japan) at a sampling rate of 44.1 kHz and a resolution of 16 bits (Japan NatureSounds Guide, UEDA NATURE SOUND, Tokyo, Japan). The insect song stimuli are summarized in Table 2.

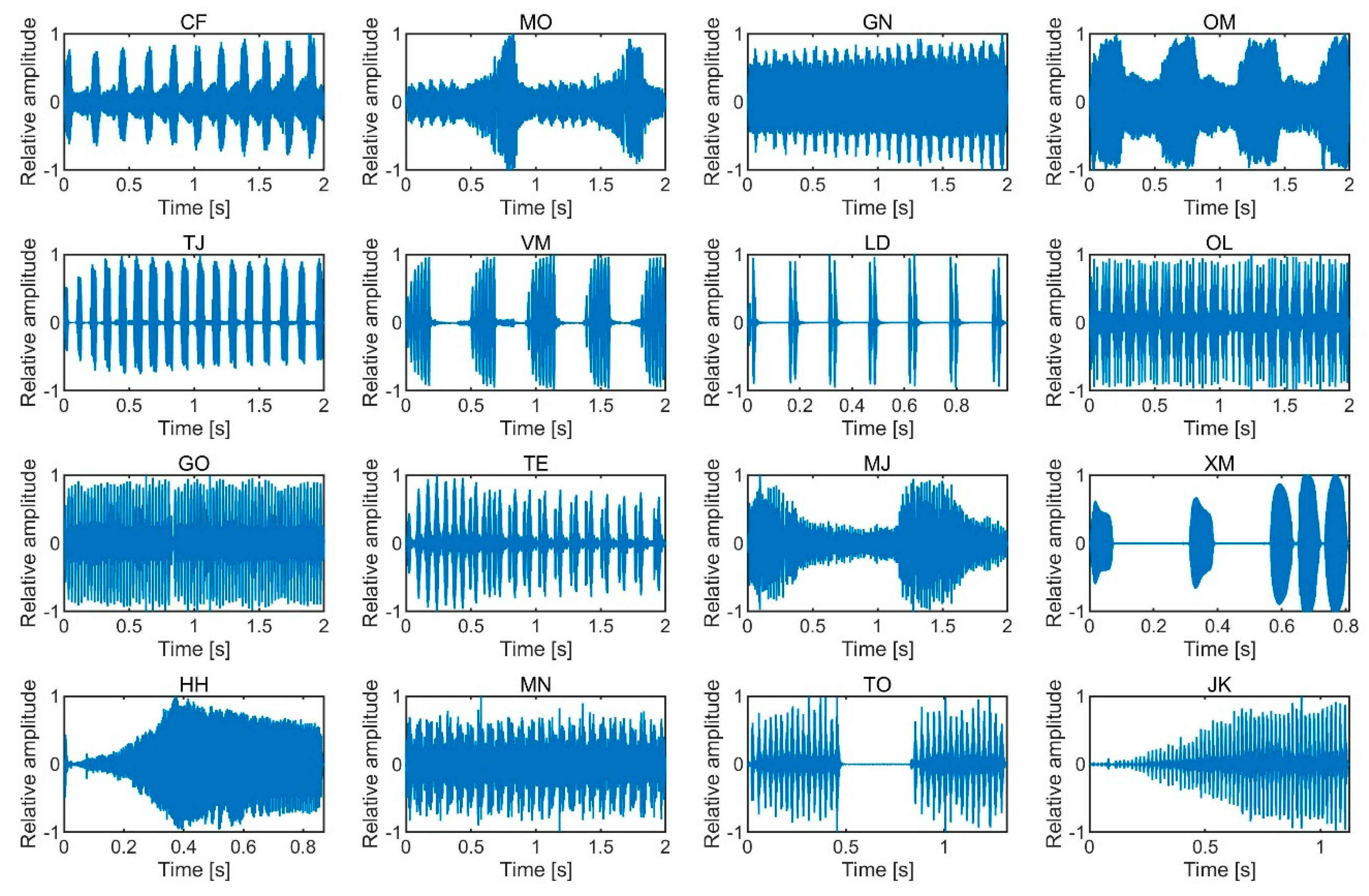

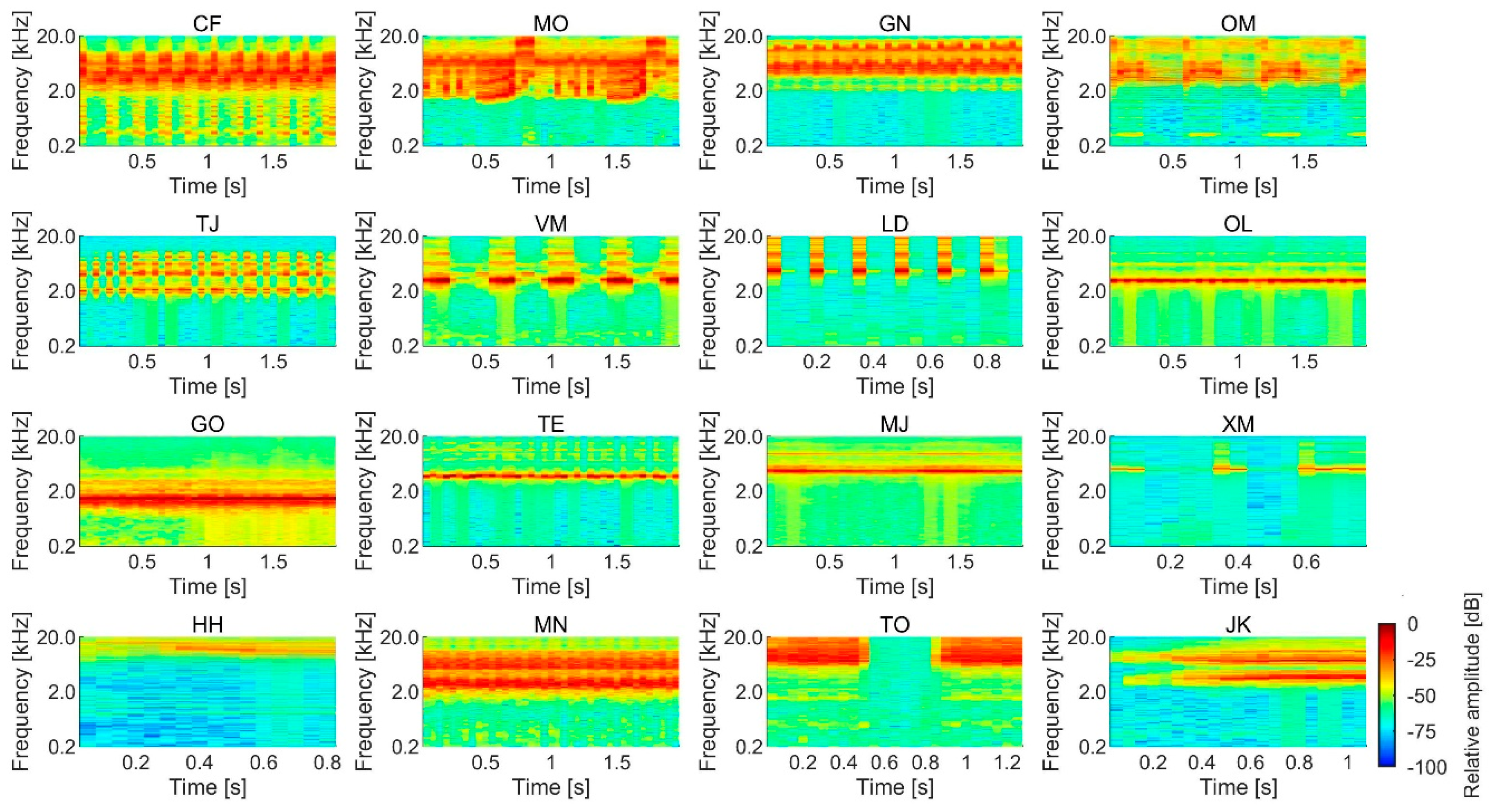

Figure 3 and Figure 4 show the temporal waveforms and spectrograms of the 16 insect song stimuli. The durations of the stimuli ranged from 0.4 to 2.0 s.

The experiments were conducted in a soundproof room with a comfortable thermal environment. The stimuli were presented to the participants' ears through a headphone amplifier (HDVD800, Sennheiser, Wedemark, Germany) and headphones (HD800, Sennheiser, Wedemark, Germany). All stimuli were presented at an equivalent continuous A-weighted SPL (LAeq) of 70 dBA, measured over a time period T equal to the stimulus duration. This level was chosen because the guidelines [1] recommend that the LAeq of sound signals be at least 10 dB greater than the LAeq of the background noise, which is approximately 60 dBA in train stations in Japan [8].

LAeq was verified using a dummy head microphone (KU100, Neumann, Berlin, Germany) and a sound calibrator (Type 4231, Brüel & Kjær, Narum, Denmark).
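For reference, LAeq over a duration T is 10 log10[(1/T) ∫ pA²(t) dt / p0²] with p0 = 20 µPa, where pA is the A-weighted sound pressure. The following minimal sketch computes this quantity and rescales a stimulus to a target level such as 70 dBA. It assumes the input is already A-weighted and calibrated in pascals (neither step is shown), and the helper names `laeq` and `scale_to_laeq` are hypothetical, not the authors' calibration procedure.

```python
import numpy as np

P_REF = 20e-6  # reference sound pressure p0 in Pa (20 µPa)


def laeq(p_a):
    """LAeq,T in dB over the whole signal, i.e. T = signal duration.

    p_a must already be A-weighted and calibrated in pascals; neither the
    A-weighting filter nor the calibration is implemented here.
    """
    p_a = np.asarray(p_a, dtype=float)
    return 10.0 * np.log10(np.mean(p_a ** 2) / P_REF ** 2)


def scale_to_laeq(p_a, target_db=70.0):
    """Return a copy of p_a rescaled so that its LAeq equals target_db."""
    gain_db = target_db - laeq(p_a)
    return np.asarray(p_a, dtype=float) * 10.0 ** (gain_db / 20.0)


# Usage: 1 kHz tone with 0.02 Pa RMS (A-weighting is 0 dB at 1 kHz)
fs = 48_000
t = np.arange(fs) / fs
tone = np.sqrt(2) * 0.02 * np.sin(2 * np.pi * 1000 * t)
print(round(laeq(tone), 1))                  # 60.0
print(round(laeq(scale_to_laeq(tone)), 1))   # 70.0
```

Because the mean of the squared samples is taken over the whole array, T is the stimulus duration, matching the measurement condition described above.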

Fifteen participants (eleven males and four females) took part in the preference test for birdsongs, and sixteen (eleven males and five females) took part in the test for insect songs. All had normal hearing and were aged between 20 and 41 years (median ages of 24.5 and 24.4 years; standard deviations of 7.2 and 6.9 years, respectively). Based on our previous studies [9], at least ten participants are considered necessary to ensure the statistical power and generality of the results of this type of psychoacoustic experiment. Informed consent was obtained from each participant after the nature of the study was explained. The study was approved by the ethics committee of the National Institute of Advanced Industrial Science and Technology (AIST) of Japan.

Scheffé's paired comparison method [10] was applied to all combinations of stimulus pairs (i.e., N(N − 1)/2 = 153 pairs for the N = 18 birdsongs and 120 pairs for the N = 16 insect songs); the order of the stimuli within each pair was interchanged, and the pairs were presented in random order. In Scheffé's original method, each participant judges only one pair [10]; in the modified Scheffé's method, each participant judges all pairs, and this is repeated across participants [11,12]. The modified method is appropriate when only a few participants are available. The silent interval between the stimuli in each pair was 1.0 s. After each pair was presented, the participants judged their preference on a seven-point scale. The preference scores of each participant were defined as the scale values (SVs) of preference. A single experimental session lasted approximately 25 to 35 min. The tests for birdsongs and insect songs were conducted separately. The normality of the SVs of preference for each stimulus was verified using the Shapiro–Wilk test [13]. The significance of the main effects was tested by analysis of variance (ANOVA) [10,11,12].
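The following minimal sketch shows how one participant's SVs can be estimated from a matrix of paired-comparison ratings. It assumes that every pair is rated once in each presentation order (the text mentions interchanging the order), that ratings run from −3 to +3, and that positive values favor the first stimulus. The function name and this rating convention are assumptions; the study's own computation, for example how order effects were modeled, may differ.

```python
import numpy as np


def scheffe_scale_values(scores) -> np.ndarray:
    """Estimate one participant's scale values (SVs) from paired-comparison ratings.

    scores[i][j] is the rating given when stimulus i was presented first and
    stimulus j second, on a -3..+3 scale where positive values favor the first
    stimulus. Every ordered pair (i, j), i != j, is assumed to be rated once.
    """
    x = np.array(scores, dtype=float)        # copy, so the caller's data is untouched
    n = x.shape[0]
    if x.ndim != 2 or x.shape[1] != n:
        raise ValueError("scores must be a square matrix")
    np.fill_diagonal(x, 0.0)                 # no self-comparisons
    # Row sums: ratings in favor of i when i came first.
    # Column sums: ratings in favor of the other stimulus when i came second,
    # so they count against i. Because each pair is rated in both orders, a
    # symmetric order effect cancels; dividing by 2n gives least-squares
    # estimates that sum to zero across stimuli.
    return (x.sum(axis=1) - x.sum(axis=0)) / (2.0 * n)


# Usage with three hypothetical stimuli (illustrative ratings, not study data)
ratings = [[0, 2, 3],
           [-1, 0, 1],
           [-3, -2, 0]]
sv = scheffe_scale_values(ratings)
print(sv.tolist())  # [1.5, 0.0, -1.5]
```

Computing SVs per participant in this way yields one SV per stimulus per participant, which is the form of data that the ANOVA on stimulus differences operates on.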

2.2. Physical Parameters

Parameters obtained from the autocorrelation function (ACF) have been proposed for noise and sound quality evaluations [9,14]. To determine the ACF parameters, the normalized ACF of the signal recorded by the microphone, $p(t)$, is defined by:

$$\phi_p(\tau) = \phi_p(\tau; t, T) = \frac{\Phi_p(\tau; t, T)}{\sqrt{\Phi_p(0; t, T)\,\Phi_p(0; \tau + t, T)}} \tag{1}$$

where:

$$\Phi_p(\tau; t, T) = \frac{1}{2T}\int_{t-T}^{t+T} p'(s)\,p'(s + \tau)\,ds \tag{2}$$

Here, $2T$ is the integration interval and $p'(t) = p(t) * s_e(t)$, where $*$ denotes convolution and $s_e(t)$ is the ear sensitivity, which is approximated by A-weighting for convenience [9,14]. The ACF is normalized by the geometric mean of the signal energies in the window at $t$ and in the window shifted by $\tau$, so that $\phi_p(0) = 1$ and $|\phi_p(\tau)| \le 1$.
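A minimal discrete sketch of the normalized ACF, φ(τ) = Φ(τ; t, T) / sqrt(Φ(0; t, T) Φ(0; t + τ, T)), with Φ computed as a sum of products over the 2T window. It assumes the input has already been A-weighted and indexes the window by its start rather than its center; the function name and the default lag range are hypothetical choices, not the authors' MATLAB program.

```python
import numpy as np


def normalized_acf(p_a, fs, t_start, window_s=0.5, max_lag_s=0.05):
    """Discrete normalized ACF of one analysis window.

    p_a      : A-weighted pressure signal p'(t); the A-weighting filter is assumed
               to have been applied already.
    t_start  : start time (s) of the 2T-long window (the continuous definition
               centres the window on t instead).
    window_s : window length 2T in seconds; max_lag_s : largest delay tau in seconds.
    Returns phi[k] for tau = k / fs, k = 0 .. round(max_lag_s * fs).
    """
    x = np.asarray(p_a, dtype=float)
    n0 = int(round(t_start * fs))
    n_win = int(round(window_s * fs))
    n_lag = int(round(max_lag_s * fs))
    if n0 < 0 or n0 + n_win + n_lag > len(x):
        raise ValueError("signal too short for this window and lag range")
    seg0 = x[n0:n0 + n_win]
    energy0 = np.dot(seg0, seg0)                  # Phi(0; t, T) up to the 1/2T factor
    phi = np.zeros(n_lag + 1)
    for lag in range(n_lag + 1):
        seg_lag = x[n0 + lag:n0 + lag + n_win]
        # The 1/2T factors cancel in the ratio, so plain sums are enough.
        denom = np.sqrt(energy0 * np.dot(seg_lag, seg_lag))
        if denom > 0:
            phi[lag] = np.dot(seg0, seg_lag) / denom
    return phi


# Usage: a 500 Hz tone (period of 32 samples at 16 kHz)
fs = 16_000
tone = np.sin(2 * np.pi * 500 * np.arange(fs) / fs)
phi = normalized_acf(tone, fs, t_start=0.0, window_s=0.5, max_lag_s=0.02)
print(round(phi[16], 3), round(phi[32], 3))   # -1.0 1.0 (half and full period)
```

Normalizing by the energy of the shifted window, rather than by the energy at τ = 0 alone, is what keeps |φ(τ)| ≤ 1 for every delay.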

The ACF parameters are calculated from the normalized ACF. τ1 and φ1 are defined as the delay time and the amplitude of the first maximum peak, respectively. τ1 and φ1 are related to the perceived pitch and the pitch strength of sounds, respectively [9,15]. Higher values of τ1 indicate a lower pitch, and higher values of φ1 indicate a stronger pitch. The effective duration of the envelope of the normalized ACF, τe, is defined by the ten-percentile delay (i.e., the delay at which the envelope decays to 0.1, or −10 dB) and represents the repetitive features contained in the sound source itself [14]. The width of the first decay, Wφ(0), is defined as the width of the delay interval around τ = 0 at which the normalized ACF has a value of 0.5. Wφ(0) corresponds to the spectral centroid [9], but inversely: higher values of Wφ(0) indicate that the sound includes a higher proportion of low-frequency components.
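The parameter definitions above can be turned into code in several ways. The following sketch makes its operational rules explicit. These rules are assumptions rather than the authors' implementation: τ1 and φ1 are taken from the first local maximum after the first local minimum; Wφ(0) is the full width on both sides of τ = 0 where φ falls to 0.5, linearly interpolated between samples; and τe is obtained by fitting a line through 0 dB to the initial 0 to −5 dB decay of the envelope peaks and extrapolating to −10 dB. The function name is hypothetical.

```python
import numpy as np


def acf_parameters(phi, fs):
    """Extract tau1, phi1, W_phi(0) and tau_e (seconds) from a normalized ACF.

    phi[k] is the normalized ACF at delay k / fs, with phi[0] == 1.
    The operational rules below are assumptions, not the authors' code:
      tau1, phi1 : first local maximum after the first local minimum
      W_phi(0)   : full width (both sides of tau = 0) where phi falls to 0.5,
                   linearly interpolated between samples
      tau_e      : delay at which a line through (0 s, 0 dB), fitted to the
                   initial 0 to -5 dB decay of the peaks of 10*log10|phi|,
                   reaches -10 dB
    """
    phi = np.asarray(phi, dtype=float)
    lags = np.arange(len(phi)) / fs
    mid = np.arange(1, len(phi) - 1)            # indices with two neighbours

    # tau1 and phi1
    is_min = (phi[mid] < phi[mid - 1]) & (phi[mid] <= phi[mid + 1])
    is_max = (phi[mid] > phi[mid - 1]) & (phi[mid] >= phi[mid + 1])
    tau1 = phi1 = np.nan
    if is_min.any():
        later_max = mid[is_max & (mid > mid[is_min][0])]
        if later_max.size:
            tau1, phi1 = lags[later_max[0]], phi[later_max[0]]

    # W_phi(0)
    w_phi0 = np.nan
    below = np.nonzero(phi < 0.5)[0]
    if below.size and below[0] > 0:
        k = below[0]                            # phi[k - 1] >= 0.5 > phi[k]
        frac = (phi[k - 1] - 0.5) / (phi[k - 1] - phi[k])
        w_phi0 = 2.0 * (k - 1 + frac) / fs

    # tau_e from the envelope peaks of |phi| in dB
    env = np.abs(phi)
    peaks = mid[(env[mid] > env[mid - 1]) & (env[mid] >= env[mid + 1])]
    peaks_db = 10.0 * np.log10(np.maximum(env[peaks], 1e-12))
    drop = np.nonzero(peaks_db < -5.0)[0]
    n_keep = drop[0] if drop.size else peaks.size   # initial decay only
    tau_e = np.inf                                  # no decay within the lag range
    if n_keep > 0:
        x, y = lags[peaks[:n_keep]], peaks_db[:n_keep]
        slope = np.dot(x, y) / np.dot(x, x)         # least squares through the origin
        if slope < 0:
            tau_e = -10.0 / slope
    return {"tau1": tau1, "phi1": phi1, "w_phi0": w_phi0, "tau_e": tau_e}


# Usage: synthetic exponentially decaying ACF of a 500 Hz periodic sound
fs = 16_000
k = np.arange(800)                                          # delays up to 50 ms
phi_demo = np.exp(-k / 160) * np.cos(2 * np.pi * k / 32)
params = acf_parameters(phi_demo, fs)
print(params)
```

For this synthetic ACF, the sketch should give τ1 = 2 ms (one period), φ1 = e^−0.2, and τe of about 23 ms, which is where the exponential envelope reaches −10 dB.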

Psychoacoustic parameters, such as loudness, sharpness, and roughness, have been applied for sound quality evaluation in previous studies [16]. Loudness corresponds to the perceived strength of a sound; its calculation includes the transfer function of the outer and middle ear and masking effects [17]. Sharpness is a measure of the high-frequency content of a sound. It is calculated from the specific loudness of the sound and a weighting function [16]. Roughness quantifies the subjective perception of fast (15–300 Hz) amplitude variations of a sound. It is calculated from the excitation patterns in critical-band filter banks [18].
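To illustrate how sharpness weights the loudness distribution, here is a sketch of one common formulation, that of Zwicker and Fastl: S = 0.11 ∫ N'(z) g(z) z dz / ∫ N'(z) dz acum. Here N'(z) is the specific loudness in sone/Bark, and g(z) equals 1 up to 16 Bark and 0.066 e^(0.171 z) above. The paper does not state which sharpness model was used, so this choice is an assumption. The loudness model that produces N'(z) is not shown, and the function name is hypothetical.

```python
import numpy as np


def zwicker_sharpness(specific_loudness, z_bark):
    """Sharpness (acum) from a specific-loudness pattern N'(z) on a uniform Bark grid.

    Uses S = 0.11 * sum(N'(z) g(z) z) / sum(N'(z)) with the Zwicker-Fastl
    weighting g(z) = 1 for z <= 16 Bark and 0.066 * exp(0.171 z) above.
    The loudness model that produces N'(z) is not shown.
    """
    n_spec = np.asarray(specific_loudness, dtype=float)
    z = np.asarray(z_bark, dtype=float)
    total = n_spec.sum()
    if total <= 0:
        return 0.0                               # silence: report 0 instead of 0/0
    g = np.where(z <= 16.0, 1.0, 0.066 * np.exp(0.171 * z))
    # The constant Bark step dz cancels between numerator and denominator.
    return float(0.11 * np.sum(n_spec * g * z) / total)


# Usage with hypothetical specific-loudness patterns on a 0.1-Bark grid
z = (np.arange(240) + 0.5) * 0.1                 # band centres from 0.05 to 23.95 Bark
low = np.where(z < 10.0, 1.0, 0.0)               # flat below 10 Bark
high = np.where(z > 14.0, 1.0, 0.0)              # same shape, shifted upward
print(round(zwicker_sharpness(low, z), 3))       # 0.55
print(zwicker_sharpness(high, z) > zwicker_sharpness(low, z))  # True
```

Because the result is a loudness-weighted mean of z, shifting the same loudness pattern toward higher critical bands raises sharpness. This is why sharpness tracks the high-frequency content of a sound.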

A range of parameters, such as the zero-crossing rate (ZCR), entropy, and spectral centroid, have been introduced for the analysis of audio features [19] and applied to soundscape classification [20,21]. The ZCR is the number of times the signal changes from positive to negative values or vice versa, divided by the duration of the signal. It can be regarded as a measure of the noisiness of a signal and as a coarse indicator of its spectral characteristics. The short-term entropy of energy, $H(i)$, of the $i$th frame, which is divided into $K$ sub-frames of fixed duration with normalized energies $e_j$, is defined as:

$$H(i) = -\sum_{j=1}^{K} e_j \log_2 e_j \tag{3}$$

where:

$$e_j = \frac{E_{\mathrm{subFrame}_j}}{\sum_{k=1}^{K} E_{\mathrm{subFrame}_k}} \tag{4}$$

and $E_{\mathrm{subFrame}_k}$ is the energy of the $k$th sub-frame. The entropy of energy is considered a measure of abrupt changes in a signal. The spectral centroid and spectral spread characterize the position and shape of the spectrum. The spectral centroid, $C_i$, is the center of gravity of the spectrum; for the $i$th audio frame, with discrete Fourier transform (DFT) magnitudes $X_i(k)$, $k = 1, \dots, M$, it is defined as:

$$C_i = \frac{\sum_{k=1}^{M} k\,X_i(k)}{\sum_{k=1}^{M} X_i(k)} \tag{5}$$

The spectral spread, $S_i$, is the square root of the second central moment of the spectrum and is defined as:

$$S_i = \sqrt{\frac{\sum_{k=1}^{M} (k - C_i)^2\,X_i(k)}{\sum_{k=1}^{M} X_i(k)}} \tag{6}$$

Spectral entropy measures the peakedness of the spectrum and has been used to discriminate between speech and music. It is calculated in the same way as the entropy of energy, but over sub-bands of the spectrum instead of sub-frames in time. The spectral flux, $F(i, i-1)$, is a measure of the variability of the spectrum over time and is defined as:

$$F(i, i-1) = \sum_{k=1}^{M} \left( NE_i(k) - NE_{i-1}(k) \right)^2 \tag{7}$$

where $NE_i(k) = X_i(k) / \sum_{l=1}^{M} X_i(l)$ is the $k$th normalized DFT coefficient of the $i$th frame. Spectral rolloff is a measure of the skewness of the spectral shape. It is defined as the frequency below which a certain percentage (usually around 90%) of the spectral magnitude distribution is concentrated. The spectral rolloff frequency is usually normalized so that it takes values between 0 and 1.
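The spectral descriptors described above can be sketched per frame from the DFT magnitudes X_i(k). Several values are assumptions because the paper does not report them: the number of sub-bands for spectral entropy (8), the rolloff fraction (0.90), and the small constant 1e-12, which only prevents division by zero in silent frames. The function name is hypothetical, and the study's MATLAB implementation may differ in details such as windowing.

```python
import numpy as np


def spectral_features(mag, prev_mag=None, n_bands=8, c=0.90):
    """Spectral descriptors of one frame from its DFT magnitudes X_i(k).

    mag      : |X_i(k)| for k = 1..M, e.g. np.abs(np.fft.rfft(frame)).
    prev_mag : magnitudes of frame i-1 (same length), needed for spectral flux.
    n_bands (sub-bands for spectral entropy) and c (rolloff fraction) are
    assumptions; the paper does not report them. Centroid and spread are in
    bin units; for rfft output, bin k corresponds to (k - 1) * fs / frame_length Hz.
    """
    X = np.asarray(mag, dtype=float)
    k = np.arange(1, X.size + 1)
    total = X.sum() + 1e-12                      # avoids 0/0 for silent frames

    centroid = np.sum(k * X) / total
    spread = np.sqrt(np.sum((k - centroid) ** 2 * X) / total)

    # Spectral entropy: entropy of normalized sub-band energies
    band_e = np.array([np.sum(b ** 2) for b in np.array_split(X, n_bands)])
    p = band_e / (band_e.sum() + 1e-12)
    p = p[p > 0]
    spectral_entropy = float(-np.sum(p * np.log2(p)))

    # Spectral flux between the normalized spectra of frames i and i-1
    flux = np.nan
    if prev_mag is not None:
        prev = np.asarray(prev_mag, dtype=float)
        flux = np.sum((X / total - prev / (prev.sum() + 1e-12)) ** 2)

    # Rolloff: first bin m whose cumulative magnitude reaches c * total,
    # normalized by the number of bins so that it lies in (0, 1]
    m = int(np.searchsorted(np.cumsum(X), c * total)) + 1
    rolloff = min(m, X.size) / X.size

    return {"centroid": centroid, "spread": spread,
            "spectral_entropy": spectral_entropy, "flux": flux, "rolloff": rolloff}


# Usage on two synthetic 0.5 s frames at 16 kHz (1 kHz tone, then 3 kHz tone)
fs = 16_000
t = np.arange(8000) / fs
spec_1k = np.abs(np.fft.rfft(np.sin(2 * np.pi * 1000 * t)))
spec_3k = np.abs(np.fft.rfft(np.sin(2 * np.pi * 3000 * t)))
print(spectral_features(spec_3k, prev_mag=spec_1k))
```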

We calculated τ1, φ1, Wφ(0), τe, loudness, sharpness, roughness, ZCR, entropy, spectral centroid, spectral spread, spectral entropy, spectral flux, and spectral rolloff to characterize the birdsongs and insect songs quantitatively. The integration interval (window length) used for the analysis was 0.5 s, and the running step was 0.1 s in all calculations. The average of each physical parameter over all analysis windows was used as its representative value. The analyses were carried out using a MATLAB-based program (MathWorks, Natick, MA, USA).
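The running analysis (0.5 s windows every 0.1 s, then averaging over windows) can be sketched as follows, using the ZCR and the entropy of energy as example features. Two choices here are assumptions because the paper does not specify them: stimuli shorter than one 0.5 s window (some last only 0.4 s) are treated as a single frame, and K = 10 sub-frames are used for the entropy of energy. All function names are hypothetical.

```python
import numpy as np


def frames(x, fs, win_s=0.5, step_s=0.1):
    """Split x into frames of win_s seconds taken every step_s seconds."""
    x = np.asarray(x, dtype=float)
    win, step = int(round(win_s * fs)), int(round(step_s * fs))
    if x.size < win:
        return x[np.newaxis, :]                  # shorter than one window: single frame
    return np.stack([x[s:s + win] for s in range(0, x.size - win + 1, step)])


def zero_crossing_rate(frame, fs):
    """Number of sign changes divided by the frame duration (crossings per second)."""
    neg = np.signbit(frame)
    return np.count_nonzero(neg[1:] != neg[:-1]) / (frame.size / fs)


def energy_entropy(frame, n_sub=10):
    """Short-term entropy of energy over n_sub sub-frames (log base 2)."""
    sub_e = np.array([np.sum(s ** 2) for s in np.array_split(frame, n_sub)])
    e = sub_e / (sub_e.sum() + 1e-12)
    e = e[e > 0]
    return float(-np.sum(e * np.log2(e)))


def mean_features(x, fs):
    """Average frame-wise ZCR and entropy of energy into one value per stimulus."""
    fr = frames(x, fs)
    return {
        "zcr": float(np.mean([zero_crossing_rate(f, fs) for f in fr])),
        "energy_entropy": float(np.mean([energy_entropy(f) for f in fr])),
    }


# Usage: a 1 s, 100 Hz tone at 8 kHz (about 200 zero crossings per second)
fs = 8_000
tone = np.sin(2 * np.pi * 100 * np.arange(fs) / fs)
print(mean_features(tone, fs))
```

A steady tone spreads its energy evenly over the sub-frames, so its entropy of energy approaches the maximum value log2(K).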

2.3. Multiple Regression Analysis

In this stimulus configuration, sharpness and spectral centroid were highly correlated with Wφ(0), ZCR, spectral rolloff, and each other (|r| > 0.80, p < 0.01). ZCR was highly correlated with spectral entropy and spectral rolloff (|r| > 0.70, p < 0.01). Therefore, sharpness, spectral centroid, and ZCR were excluded from the multiple regression analysis.
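The screening step above can be sketched as a search for parameter pairs whose absolute Pearson correlation exceeds a threshold. The sketch applies only the |r| criterion; the significance test (p < 0.01) reported in the text is omitted. The function name and the example values are hypothetical.

```python
import numpy as np


def highly_correlated_pairs(features, threshold=0.80):
    """Return (name_a, name_b, r) for parameter pairs with |Pearson r| > threshold.

    features maps a parameter name to a 1-D array of values, one per observation.
    Only the |r| criterion is applied; the significance test (p < 0.01) is omitted.
    """
    names = list(features)
    data = np.vstack([np.asarray(features[name], dtype=float) for name in names])
    r = np.corrcoef(data)                       # rows are variables
    pairs = []
    for i in range(len(names)):
        for j in range(i + 1, len(names)):
            if abs(r[i, j]) > threshold:
                pairs.append((names[i], names[j], float(r[i, j])))
    return pairs


# Usage with hypothetical values for five stimuli (not study data)
params = {
    "param_a": [1, 2, 3, 4, 5],
    "param_b": [2, 4, 6, 8, 10.5],   # nearly proportional to param_a
    "param_c": [5, 1, 4, 2, 3],
}
print(highly_correlated_pairs(params))   # only the (param_a, param_b) pair
```

Within each flagged pair, one member can then be dropped before the regression, as was done for sharpness, spectral centroid, and ZCR.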

To estimate the effects of each physical parameter on the SVs of preference, multiple linear regression analyses were carried out using SPSS statistical analysis software (SPSS version 22.0, IBM Corp., Armonk, NY, USA). Forward–backward stepwise selection was used to identify and quantify the physical parameters that significantly predicted subjective preference. The criteria for entry and removal were based on the significance level (probability) of the F-value and were set at 0.05 and 0.10, respectively. Parameters with a variance inflation factor (VIF) of 10 or higher were excluded to avoid multicollinearity.
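The multicollinearity criterion can be illustrated with the usual definition VIF_j = 1 / (1 − R_j²), where R_j² is the coefficient of determination obtained when predictor j is regressed on all other predictors with an intercept. This sketch covers only the VIF computation. It does not reproduce SPSS's stepwise procedure, and the function name is hypothetical.

```python
import numpy as np


def variance_inflation_factors(X):
    """VIF_j = 1 / (1 - R_j^2) for each column j of the predictor matrix X.

    R_j^2 comes from regressing column j on all other columns plus an intercept.
    """
    X = np.asarray(X, dtype=float)
    n, p = X.shape
    vif = np.empty(p)
    for j in range(p):
        y = X[:, j]
        others = np.column_stack([np.ones(n), np.delete(X, j, axis=1)])
        beta, *_ = np.linalg.lstsq(others, y, rcond=None)
        resid = y - others @ beta
        r2 = 1.0 - resid @ resid / np.sum((y - y.mean()) ** 2)
        vif[j] = np.inf if r2 >= 1.0 else 1.0 / (1.0 - r2)
    return vif


# Usage with hypothetical predictors (not study data)
a = np.array([1.0, -1.0, 1.0, -1.0, 1.0, -1.0])
b = np.array([1.0, 1.0, -1.0, -1.0, 0.0, 0.0])            # uncorrelated with a
c = a + 0.05 * b + np.array([0.01, -0.02, 0.0, 0.01, 0.0, 0.0])  # nearly a copy of a
print(variance_inflation_factors(np.column_stack([a, b])))     # close to [1, 1]
print(variance_inflation_factors(np.column_stack([a, b, c])))  # all well above 10
```

A predictor that is almost a linear combination of the others has R_j² close to 1, so its VIF grows without bound. This is why a cutoff of 10 removes near-duplicates.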

3. Results and Discussion

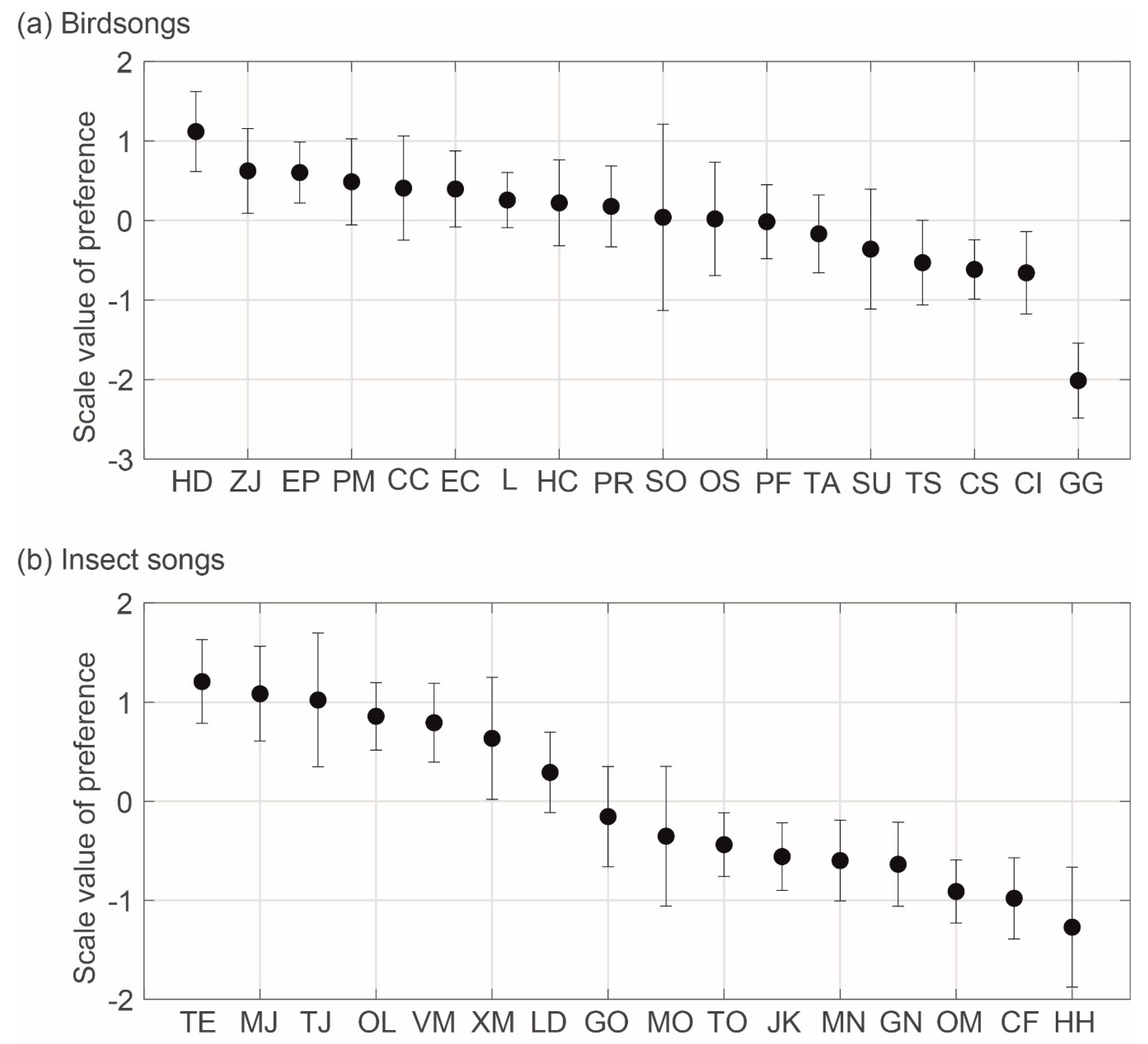

ANOVA for the SVs of preference revealed that the main effect (i.e., the difference between the stimuli) was statistically significant (F(17, 4184) = 295.7, p < 0.001, for birdsong; F(15, 3479) = 395.1, p < 0.001, for insect song).

Figure 5 shows the SVs of preference for birdsong and insect song. The most preferred birdsong was Horornis diphone. Horornis diphone is regarded as one of the three most renowned songbirds (order Passeriformes) in Japan and is considered to produce a beautiful song. The most preferred insect song was Teleogryllus emma. Teleogryllus emma is the largest cricket species (Grylloidea) in Japan, and its song is often heard throughout Japan in autumn. It is well known that lexical information about words and contextual information in sentences improve speech intelligibility and play an important role in verbal communication [22]; in other words, word familiarity plays an important role in intelligibility. Similarly, familiarity with particular birdsongs and insect songs may affect preferences. Judgments regarding Streptopelia orientalis differed among participants. All participants judged Garrulus glandarius, whose call resembles a creaky voice, to be the least preferred birdsong. The two birdsongs Zosterops japonicus and Parus minor had similar temporal patterns and similar SVs of preference, as did the two insect songs Teleogryllus emma and Tanna japonensis, suggesting that temporal patterns affect preference.

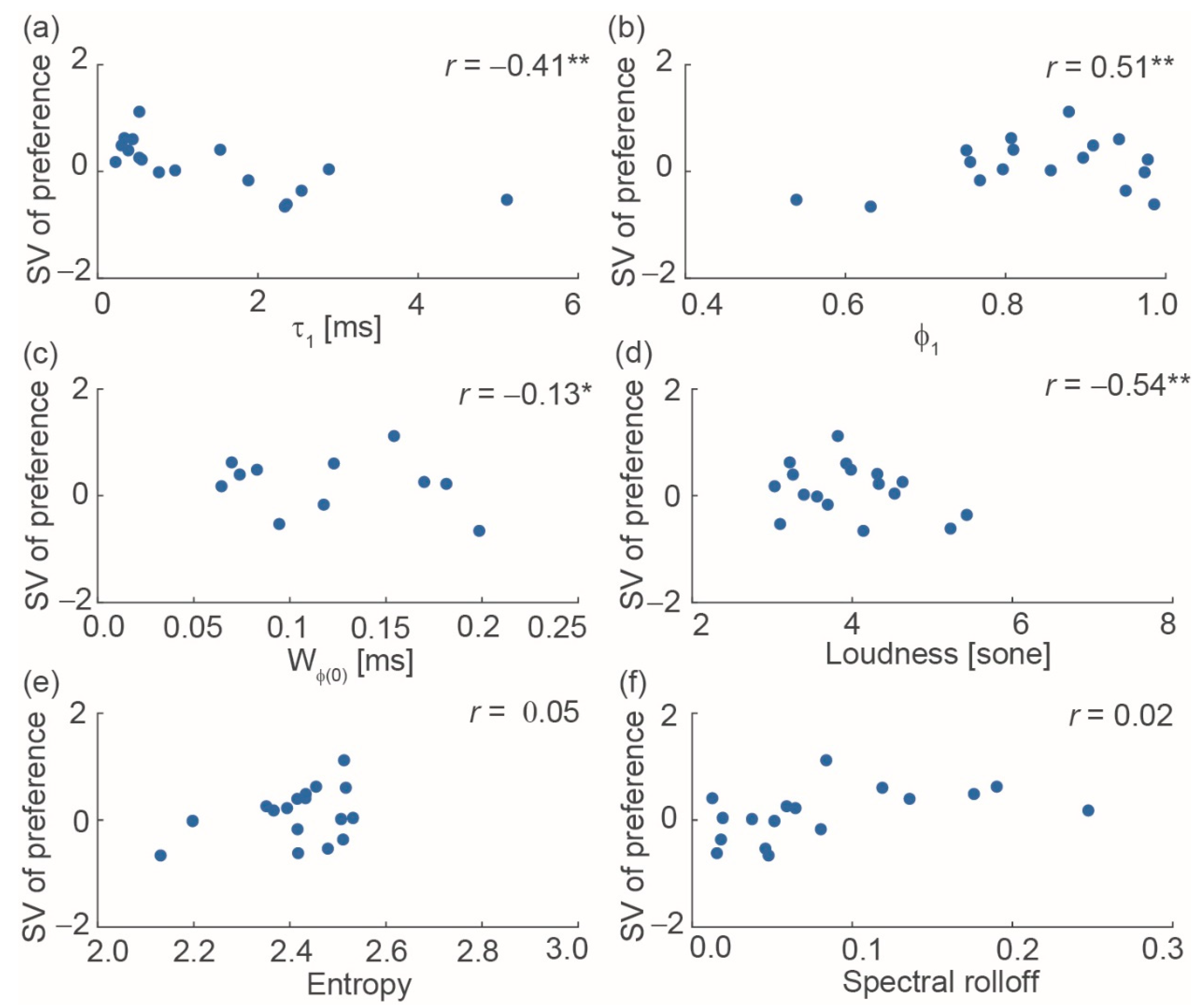

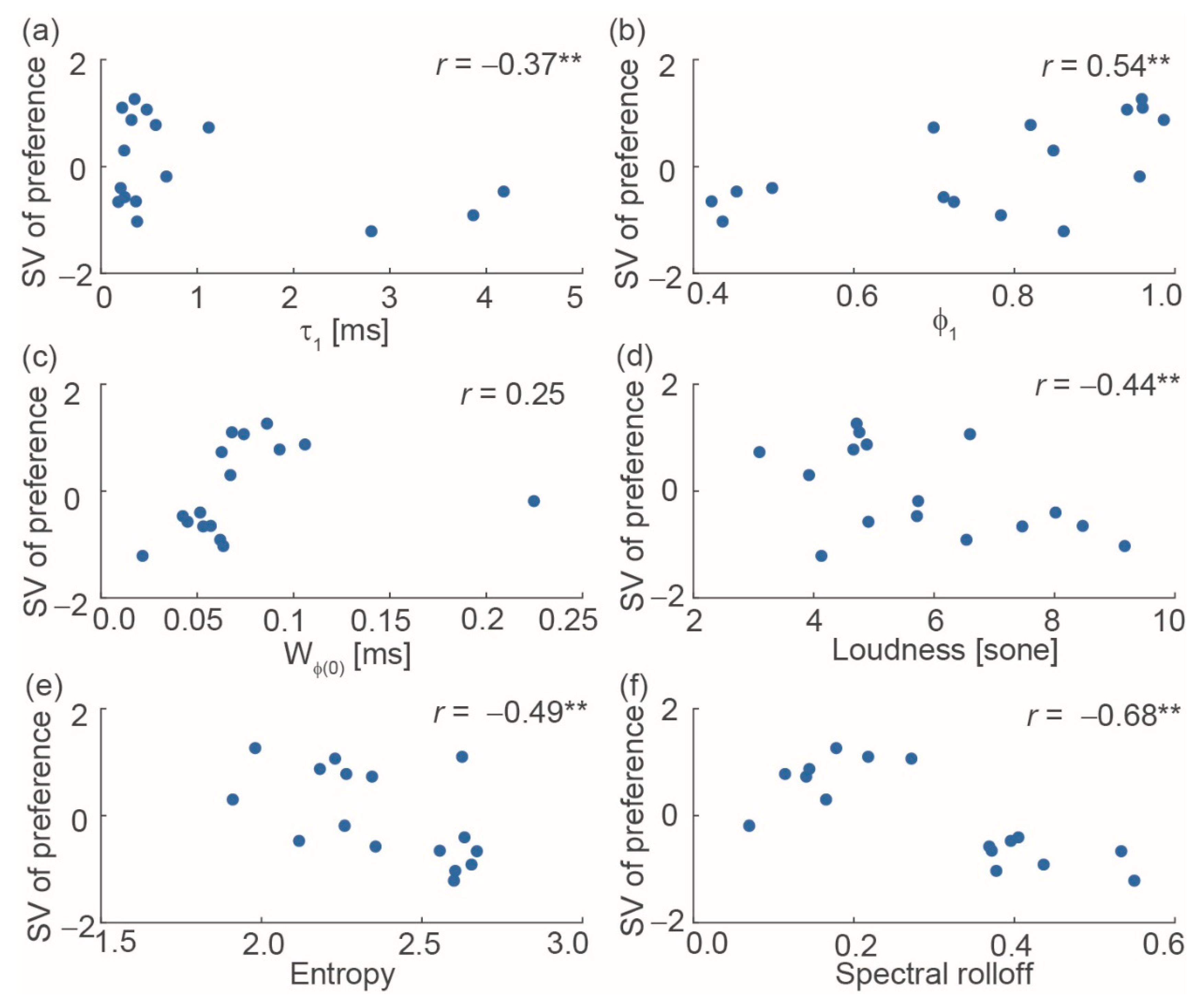

Table 3 shows the correlation coefficients between each physical parameter and subjective preference for birdsongs and insect songs. Figure 6 and Figure 7 show the relationships between representative physical parameters and subjective preference for birdsongs and insect songs. The SVs of preference for birdsong and insect song stimuli had a significant positive correlation with φ1 (r = 0.51, p < 0.01; r = 0.54, p < 0.01, respectively) and significant negative correlations with τ1 (r = −0.41, p < 0.01; r = −0.37, p < 0.01) and loudness (r = −0.54, p < 0.01; r = −0.44, p < 0.01). The correlation coefficients between subjective preference and stimulus duration were −0.17 for birdsong and 0.17 for insect song.

Sounds with higher φ1 have a salient pitch [15], and noises with lower φ1, such as those generated by a refrigerator [23] or an air conditioner [24], tend to cause more annoyance. These findings suggest that birdsong and insect song stimuli with a salient pitch, which have φ1 values of around 0.9, are preferable. It has been reported that tonal components, which are correlated with φ1, cause annoyance [25]. Although this is not consistent with the present findings, the discrepancy may be explained by the periodic variations in the amplitude and frequency of birdsongs and insect songs: such vibrato-like variation may decrease annoyance.

Since lower τ1 indicates a higher pitch, the negative correlation between subjective preference and τ1 suggests that birdsongs and insect songs with a higher pitch are preferable. However, this finding is not consistent with previous findings regarding subjective annoyance caused by refrigerator noise [23]. Taken together, these findings suggest that sounds with a higher and more salient pitch are preferred, whereas sounds with a higher but less noticeable pitch cause annoyance.

The negative correlation between subjective preference and loudness means that, at the same LAeq, birdsong stimuli with lower loudness were preferred. Since the LAeq was fixed at 70 dBA in the current experiment, loudness may be a useful index for identifying birdsong stimuli that are pleasant when presented at high SPLs.

Insect song stimuli with lower entropy were preferred, suggesting that abrupt changes in SPL were not preferred. Lower spectral entropy and rolloff were preferred, suggesting that insect song stimuli with broader bandwidth were preferred. Thus, spectral content appeared to play a more important role in determining preferences for insect song stimuli than for birdsong stimuli.

A multiple linear regression analysis was performed with the SVs of preference for birdsong and insect song stimuli as the outcome variable. The final models showed that φ1, τ1, loudness, and entropy were significant predictors for birdsong stimuli, whereas φ1, Wφ(0), τe, loudness, and spectral rolloff were significant predictors for insect song stimuli (Equations (8) and (9), respectively).

The ANOVA indicated that both models were statistically significant (F(4, 265) = 63.33, p < 0.001, for birdsong; F(5, 250) = 101.84, p < 0.001, for insect song). The adjusted coefficient of determination, R², was 0.54 for birdsong and 0.66 for insect song. The standardized partial regression coefficients in Equations (8) and (9) are summarized in Table 4.
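Standardized partial regression coefficients are the slopes obtained after z-scoring every predictor and the outcome. The adjusted coefficient of determination is 1 − (1 − R²)(n − 1)/(n − p − 1) for n observations and p predictors. For the birdsong model, p = 4 and n − p − 1 = 265, which is the denominator of F(4, 265). The following minimal sketch computes both quantities with ordinary least squares. It is not the SPSS procedure used in the study, and the function name and example data are hypothetical.

```python
import numpy as np


def standardized_ols(X, y):
    """Standardized partial regression coefficients, R^2 and adjusted R^2.

    Predictors and outcome are z-scored, so the fitted slopes are standardized
    (beta) coefficients and no intercept is needed.
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    n, p = X.shape
    Xz = (X - X.mean(axis=0)) / X.std(axis=0, ddof=1)
    yz = (y - y.mean()) / y.std(ddof=1)
    beta, *_ = np.linalg.lstsq(Xz, yz, rcond=None)
    resid = yz - Xz @ beta
    r2 = 1.0 - resid @ resid / (yz @ yz)
    adj_r2 = 1.0 - (1.0 - r2) * (n - 1) / (n - p - 1)
    return beta, r2, adj_r2


# Usage with one hypothetical predictor (not study data)
x = np.array([[1.0], [2.0], [3.0], [4.0], [5.0]])
y = np.array([2.0, 1.0, 4.0, 3.0, 5.0])
beta, r2, adj_r2 = standardized_ols(x, y)
print(beta.round(3).tolist(), round(r2, 3), round(adj_r2, 3))  # [0.8] 0.64 0.52
```

With a single predictor, the standardized coefficient equals Pearson's r. With several correlated predictors, a coefficient can take the opposite sign to its simple correlation, as observed for loudness in the insect song model.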

The maximum peak amplitude of the ACF, φ1, which signifies pitch salience, and loudness were significant predictors for both birdsong and insect song stimuli. The partial regression coefficient of loudness was negative for birdsong stimuli but positive for insect song stimuli, although the simple correlation between subjective preference and loudness was negative for both. This difference suggests that the contributions of predictors other than loudness were much larger for insect song stimuli than for birdsong stimuli. Consistent with the simple correlation analysis, spectral rolloff was also a significant predictor of preference for insect song stimuli.

The width of the first decay of the ACF, Wφ(0), which corresponds to the spectral centroid, had a relatively large negative standardized partial regression coefficient for insect song stimuli. However, Wφ(0) was not significant for birdsong stimuli. This finding could be explained by pitch strength. Birdsong stimuli have relatively high φ1 values, meaning that they have a stronger pitch; in this case, pitch is mainly judged by the delay time of the maximum peak of the ACF, τ1. Insect song stimuli have relatively low φ1 values, meaning that they have a weaker pitch; in this case, pitch is mainly judged by Wφ(0). Similar results were previously obtained in a study of pitch sensation for airplane noise [26].

4. Conclusions

In the current study, preferable birdsong and insect song stimuli and the dominant physical parameters related to preference were investigated. The results indicated that a birdsong and an insect song commonly heard in Japan, Horornis diphone and Teleogryllus emma, were rated as the most preferable birdsong and insect song stimuli, respectively. Preferences for birdsong and insect song stimuli may differ in other countries. The maximum peak amplitude of the ACF, φ1, and loudness were significant predictors for both birdsong and insect song stimuli. Pitch-related parameters were also significant: the delay time of the maximum peak of the ACF, τ1, for birdsong, and the width of the first decay of the ACF, Wφ(0), for insect song. Spectral rolloff, which measures the skewness of the spectral shape, made the largest contribution to subjective preference for insect songs. These findings provide insights for the future design of attention signals based on physical parameters such as φ1, τ1, and loudness. For example, attention signals with higher φ1 and lower τ1 and loudness values are preferable under equal-LAeq conditions.

The current findings may be useful for developing attention signals that are preferable even when presented at high SPLs. The current guidelines in Japan recommend the use of simulated birdsong, rather than real birdsong, as attention signals. Based on the current results, combining the advantages of birdsong A and insect song B may enable the development of a more pleasing sound. In addition, the present findings need to be validated with visually impaired participants, because none of the participants in this study were visually impaired.