1. Introduction

Malignant melanoma is one of the most lethal skin tumors because of its high metastatic capacity and chemoresistance. In recent years, skin cancer has become one of the leading causes of death. As of 2014, there were 2.8 million new cases and 1.3 million deaths from skin cancer in the Americas. Projections indicate that the number of cancer deaths will increase from 1.3 million in 2014 to 2.1 million in 2030. Approximately 47% of cancer deaths in the Americas occurred in Latin America and the Caribbean; research suggests that this rate is related to the amount of sun exposure and solar radiation levels [1].

The International Labor Organization (ILO) reports that 56% of the world's rural population has no health insurance, compared with 22% of people living in urban areas. In Mexico specifically, cutaneous melanoma accounts for 23% of the skin tumors seen at the National Cancer Institute, and 77.1% of melanoma patients belong to middle and low socioeconomic strata. An analysis of melanoma cases in recent years shows an increase of approximately 500%, an alarming trend [2,3,4].

In medical centers, the conventional tool for diagnosing melanoma is digital dermoscopy. Dermoscopy is a non-invasive dermatological imaging technique that uses incident light to improve the visualization of the skin, and its accuracy depends on the examiner's prior training with the method. Because dermoscopy is not a diagnostic method in itself but only improves the visualization of the skin, diagnostic precision rests on the dermatologist who evaluates the image produced by the device. It is therefore essential to develop automated equipment that guarantees a given level of reliability and efficiency [5,6,7,8,9,10,11,12].

Biomedical image processing has become one of the most advanced fields in computer vision. Its fundamental objective is to improve the medical information obtained from images, which improves diagnosis and therefore its reliability. A central difficulty with medical images is obtaining valid data to process: the images contain considerable noise and vary widely in their properties [13,14]. Image processing extracts or isolates image attributes in order to enhance the results obtained from an image. The discipline has had considerable impact and has been applied in fields such as medicine, telecommunications, industrial process control, and entertainment [7]. According to the literature, image processing can be a useful tool for melanoma detection [15].

Melanoma is a type of skin cancer that arises in melanocytes, the cells responsible for producing melanin (the pigment that gives skin its color) [16,17]. The American Academy of Dermatology uses the ABCD rule to identify melanoma. The ABCD rule was introduced in 1985 [18,19] and states that the four main descriptors for identification are:

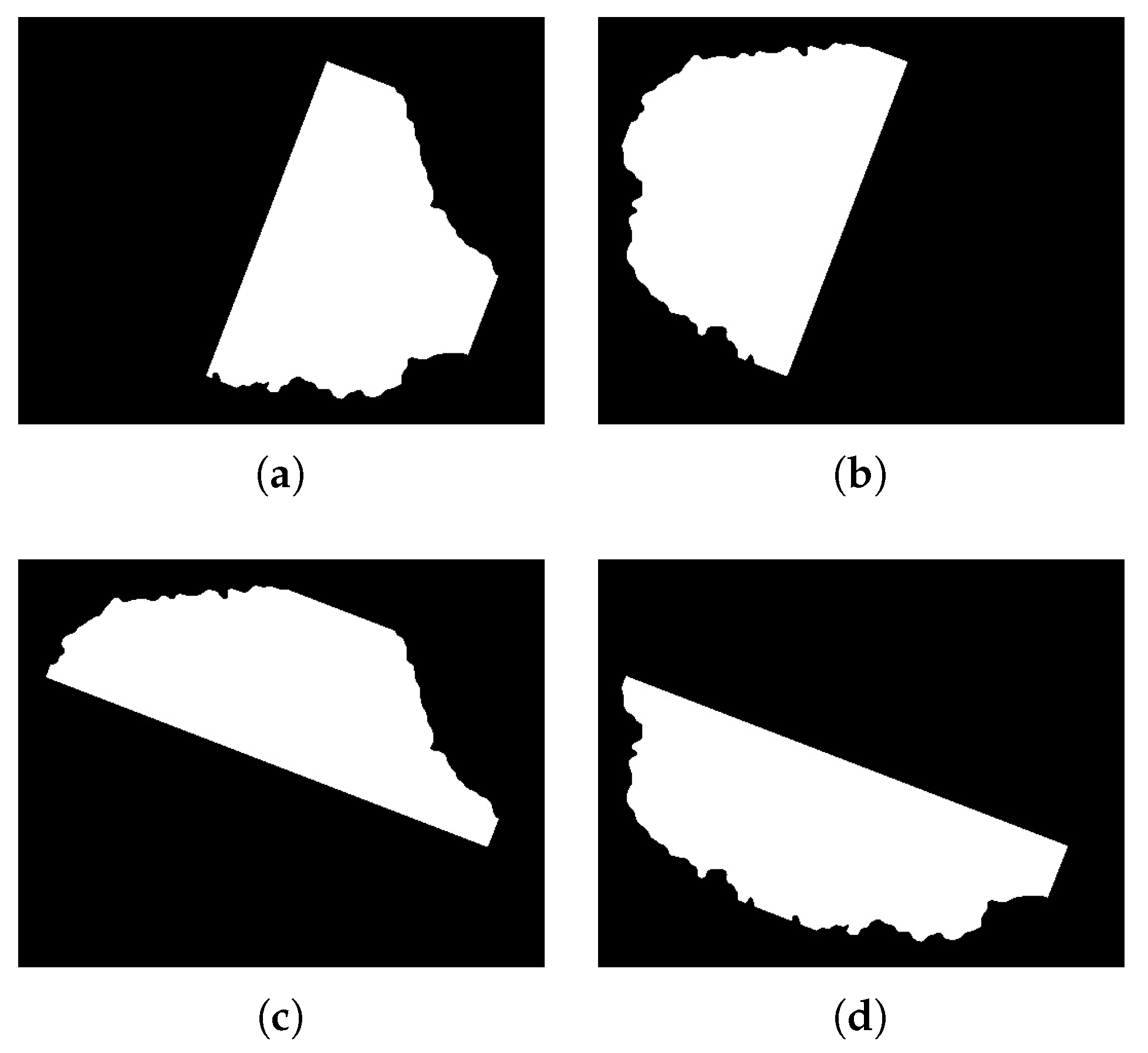

A: Asymmetry (symmetry)

B: Border (edge)

C: Color

D: Diameter (size)

Each of the above descriptors is estimated and weighted according to the technique used for classification. Although several methods have been developed over the years to detect melanoma by evaluating these descriptors, they differ in how the descriptors are estimated [20,21,22,23,24,25,26].
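To make "estimated and weighted" concrete, the sketch below implements one well-known weighting scheme, the dermoscopic ABCD rule of Stolz et al., which combines four sub-scores into a total dermoscopy score (TDS). It is an illustration only and not the weighting used in this work; note that in Stolz's scheme D stands for differential structures rather than size. The function names are hypothetical.

```python
# Weights and allowed sub-score ranges of the dermoscopic ABCD rule (Stolz et al.).
# In this scheme D means "differential structures", not diameter.
WEIGHTS = {"A": 1.3, "B": 0.1, "C": 0.5, "D": 0.5}
RANGES = {"A": (0, 2), "B": (0, 8), "C": (1, 6), "D": (1, 5)}


def total_dermoscopy_score(scores: dict[str, int]) -> float:
    """Weighted sum of the four ABCD sub-scores, with range checking."""
    tds = 0.0
    for key, weight in WEIGHTS.items():
        lo, hi = RANGES[key]
        value = scores[key]
        if not lo <= value <= hi:
            raise ValueError(f"{key}={value} outside [{lo}, {hi}]")
        tds += weight * value
    return tds


def interpret(tds: float) -> str:
    """Conventional TDS cut-offs."""
    if tds < 4.75:
        return "benign"
    if tds <= 5.45:
        return "suspicious"
    return "highly suspicious for melanoma"
```

Each method in the literature replaces these hand-set weights with its own estimation and weighting strategy, which is where the reviewed approaches diverge.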

Kostopoulos et al. [27] present an algorithm for simple images that evaluates the descriptors of the ABCD rule and classifies them with probabilistic neural networks. Symmetry is estimated by identifying the lesion contour and the longest axis that can be drawn through its center. For color, histograms are computed in the RGB color space. The best efficiency, around 90%, was achieved with multicenter images. In the same year, Do et al. [28] designed a mobile system for melanoma detection in which the phone captures images under visible light and the four ABCD descriptors are evaluated. A Gaussian model was used to estimate the descriptors, and the dataset included different skin tones. The average efficiency achieved was 85%. The application can detect only one melanoma per image.
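Kostopoulos et al. estimate symmetry from the lesion contour and its longest axis through the center. One common way to turn that idea into a number, not necessarily theirs, is to reflect the segmented lesion across its principal axis (the eigenvector of the pixel-coordinate covariance with the largest eigenvalue) and measure how much of it fails to overlap itself. The sketch assumes a binary lesion mask is already available; `asymmetry_score` is a hypothetical name.

```python
import numpy as np


def asymmetry_score(mask: np.ndarray) -> float:
    """Fraction of lesion pixels that miss the lesion after being reflected
    across its principal axis through the centroid (0.0 = symmetric)."""
    ys, xs = np.nonzero(mask)
    if xs.size == 0:
        raise ValueError("mask contains no lesion pixels")
    pts = np.stack([xs, ys], axis=1).astype(float)
    centroid = pts.mean(axis=0)
    centered = pts - centroid
    # Principal (longest) axis: eigenvector of the covariance with the largest eigenvalue.
    eigvals, eigvecs = np.linalg.eigh(np.cov(centered.T, bias=True))
    axis = eigvecs[:, np.argmax(eigvals)]
    # Reflection matrix across the line spanned by the unit vector `axis`.
    reflect = 2.0 * np.outer(axis, axis) - np.eye(2)
    mirrored = centered @ reflect.T + centroid
    # Snap mirrored points back to the pixel grid and test whether they hit the lesion.
    mx = np.rint(mirrored[:, 0]).astype(int)
    my = np.rint(mirrored[:, 1]).astype(int)
    h, w = mask.shape
    inside = (mx >= 0) & (mx < w) & (my >= 0) & (my < h)
    hits = np.zeros(len(pts), dtype=bool)
    hits[inside] = mask[my[inside], mx[inside]].astype(bool)
    return float(1.0 - hits.mean())
```

A score near 0 indicates a lesion that is nearly symmetric about its main axis; a fuller implementation would usually also test the perpendicular axis.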

Giotis et al. [29] propose a decision-support system for specialists. The system works with simple images, performs segmentation, and computes color (in the RGB color space) and texture features. Specialists provide a set of visual attributes, and a decision is made according to these attributes and the descriptor results, so the system can be regarded as semi-automatic. The final classification is obtained by majority vote over the predictions. They reported an efficiency of 81%.
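The majority-vote step described for Giotis et al. can be illustrated in a few lines of Python. The tie-breaking rule (the first label seen wins) is an assumption made for the sketch, since the text does not say how ties are resolved; `majority_vote` is a hypothetical name.

```python
from collections import Counter


def majority_vote(predictions: list[str]) -> str:
    """Return the label predicted most often; ties go to the label seen first."""
    if not predictions:
        raise ValueError("no predictions to vote on")
    counts = Counter(predictions)
    best = max(counts.values())
    # Scan in input order so the tie-break is deterministic.
    for label in predictions:
        if counts[label] == best:
            return label
```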

Zamani et al. [30] used the Daugman transformation to extract image features and achieved higher efficiency in melanoma detection. They do not evaluate symmetry; they estimate only shape, color, and texture. During the evaluation of the descriptors, the image is processed in both the RGB and CIELAB color spaces. The method targets dermatological images, uses a support vector machine (SVM) for classification, and achieved an efficiency close to 96%.
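Several of the reviewed methods work in CIELAB as well as RGB. For reference, the sketch below converts a single sRGB pixel to CIELAB using the standard sRGB-to-XYZ matrix and the D65 reference white; it assumes 8-bit values have already been scaled to [0, 1]. The function names are hypothetical, and in practice a library routine such as scikit-image's `rgb2lab` would normally be used.

```python
def _srgb_to_linear(c: float) -> float:
    # Undo the sRGB transfer curve (gamma) for one channel in [0, 1].
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


def _f(t: float) -> float:
    # CIELAB companding: cube root above the linear-segment threshold.
    delta = 6 / 29
    return t ** (1 / 3) if t > delta ** 3 else t / (3 * delta ** 2) + 4 / 29


def srgb_to_lab(r: float, g: float, b: float) -> tuple[float, float, float]:
    """Convert one sRGB pixel (components in [0, 1]) to CIELAB under D65."""
    rl, gl, bl = (_srgb_to_linear(v) for v in (r, g, b))
    # Linear sRGB -> CIE XYZ (D65).
    x = 0.4124564 * rl + 0.3575761 * gl + 0.1804375 * bl
    y = 0.2126729 * rl + 0.7151522 * gl + 0.0721750 * bl
    z = 0.0193339 * rl + 0.1191920 * gl + 0.9503041 * bl
    # Normalize by the D65 reference white, then apply the Lab formulas.
    xn, yn, zn = 0.95047, 1.0, 1.08883
    fx, fy, fz = _f(x / xn), _f(y / yn), _f(z / zn)
    return 116 * fy - 16, 500 * (fx - fy), 200 * (fy - fz)
```

CIELAB separates lightness (L*) from the two chromatic axes (a*, b*), which is why it is often preferred to RGB for measuring color differences within a lesion.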

Li et al. [31] use a double classification scheme on dermatological images to improve effectiveness, reaching an efficiency between 75% and 92%. Yuan et al. [32] also use dermatological images and combine several color spaces, including RGB and CIELAB, to improve melanoma detection. Their study focuses on contour detection and color dispersion and achieved a Jaccard index of 0.765.
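The Jaccard index reported by Yuan et al. measures segmentation overlap: the number of pixels in both the predicted and reference masks divided by the number of pixels in either. A minimal NumPy sketch follows; treating two empty masks as a perfect match is a convention chosen here, and `jaccard_index` is a hypothetical name.

```python
import numpy as np


def jaccard_index(pred: np.ndarray, truth: np.ndarray) -> float:
    """|A ∩ B| / |A ∪ B| for two binary segmentation masks of the same shape."""
    pred = pred.astype(bool)
    truth = truth.astype(bool)
    union = np.logical_or(pred, truth).sum()
    if union == 0:
        return 1.0  # both masks empty: treated as perfect agreement
    return float(np.logical_and(pred, truth).sum() / union)
```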

Table 1 shows relevant research in the literature focused on the detection of melanoma using image processing.

The studies above report efficiencies between 70% and 96%. Each is designed for only one type of image (dermatological or simple), most work in the RGB and CIELAB color spaces, and symmetry is either evaluated in a similar way or not considered at all.

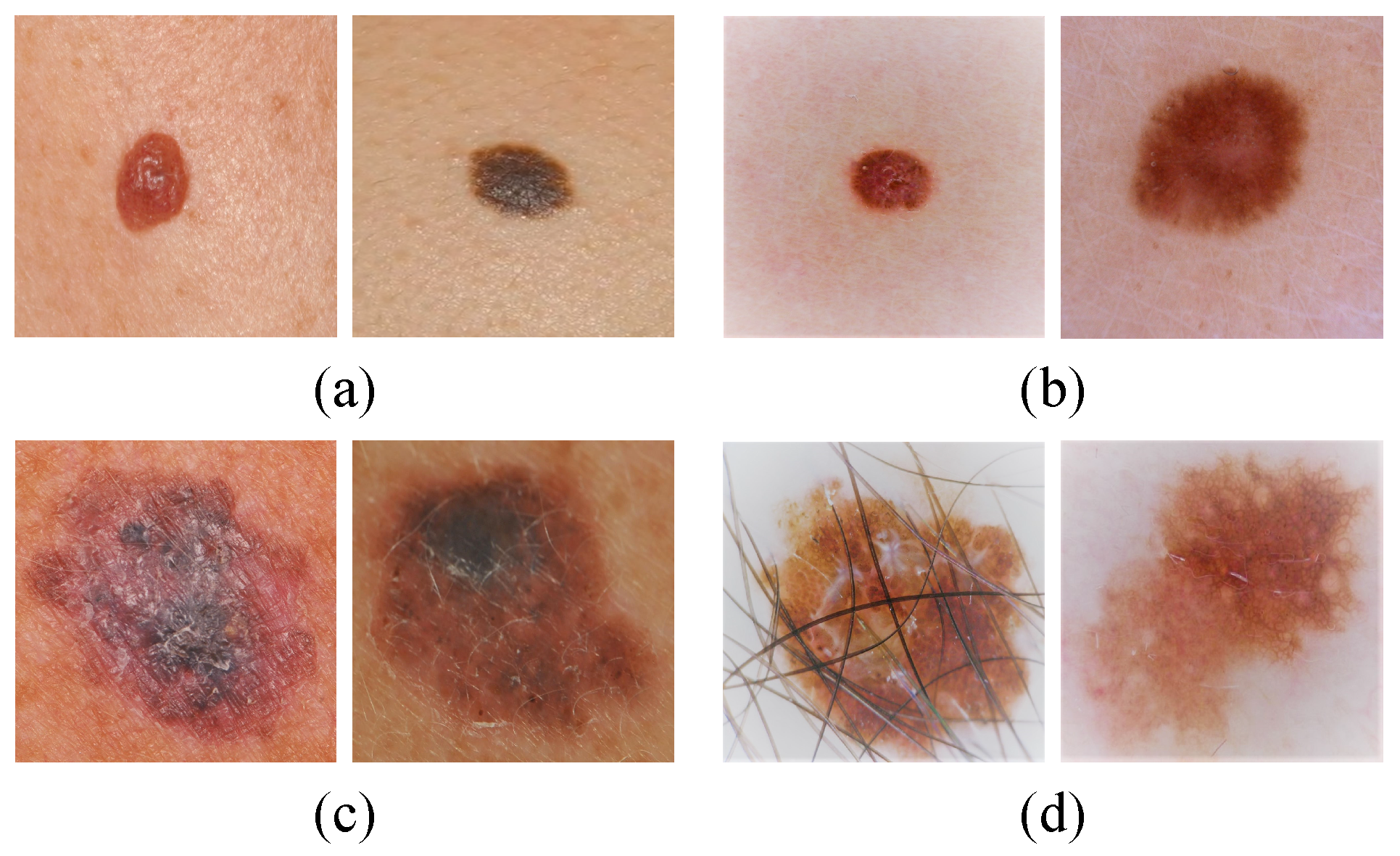

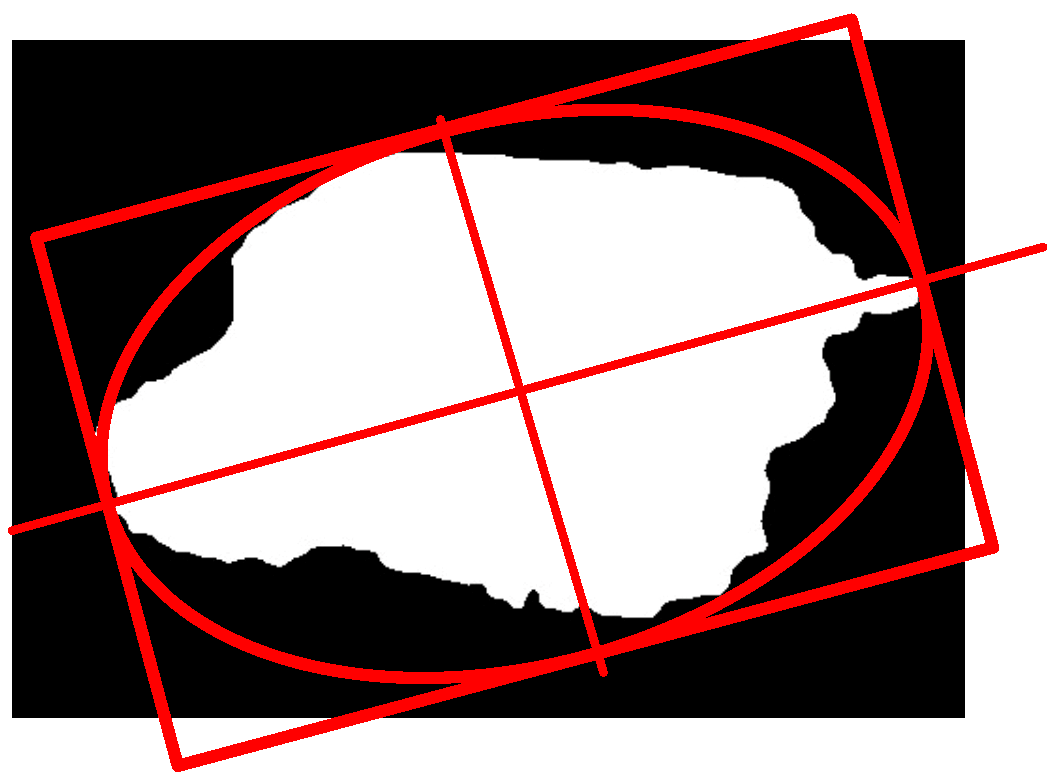

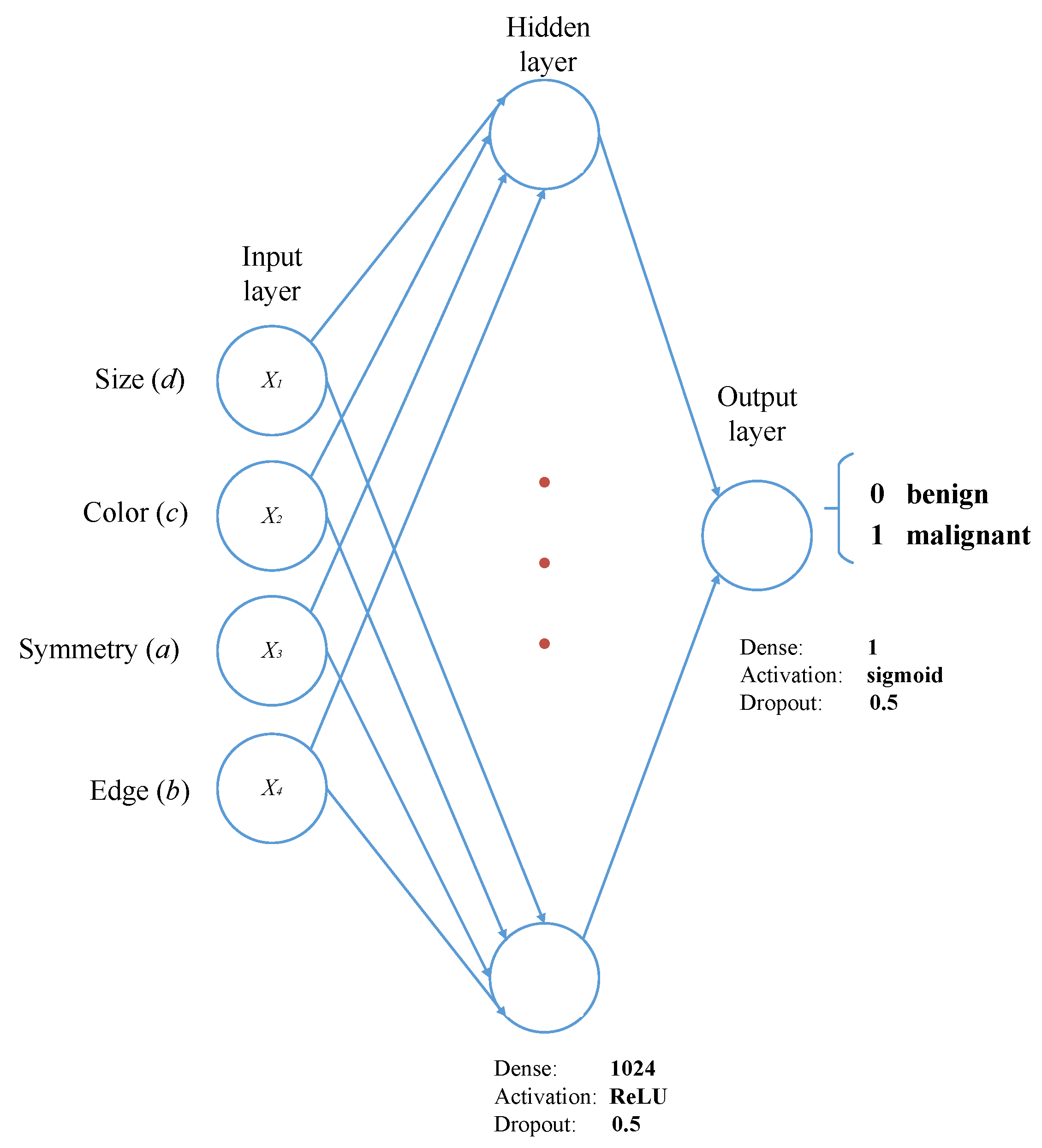

In this research, an efficient and novel method for the detection and classification of melanoma is developed. The algorithm works with both simple images, acquired with an RGB camera, and dermatological images, acquired with professional equipment. It estimates four descriptors (symmetry, edge, color, and size), which are then used as inputs to the classifier, a multilayer perceptron that distinguishes between malignant and benign melanoma. Finally, k-fold cross-validation, an analysis of variance (ANOVA), and Student's t-test were used to validate the robustness and reliability of the method. In addition, the method can detect more than one melanoma in the same image, even when hair is present.
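For readers unfamiliar with k-fold cross-validation, the sketch below shows the mechanics: the samples are shuffled once, split into k folds of near-equal size, and each fold serves once as the test set while the remaining folds are used for training. `k_fold_indices`, `cross_validate`, and the `evaluate` callback are hypothetical names, and the value of k used in this work is not stated in this section.

```python
import random


def k_fold_indices(n_samples: int, k: int, seed: int = 0):
    """Yield (train_idx, test_idx) pairs; every sample is tested exactly once."""
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and n_samples")
    idx = list(range(n_samples))
    random.Random(seed).shuffle(idx)
    # The first n_samples % k folds get one extra sample.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test = idx[start:start + size]
        train = idx[:start] + idx[start + size:]
        yield train, test
        start += size


def cross_validate(n_samples: int, k: int, evaluate) -> float:
    """Average per-fold score; `evaluate(train, test)` is a hypothetical callback
    that trains the classifier on `train` and returns e.g. accuracy on `test`."""
    scores = [evaluate(tr, te) for tr, te in k_fold_indices(n_samples, k)]
    return sum(scores) / len(scores)
```

Averaging over folds gives an efficiency estimate that does not depend on one particular train/test split.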

The paper is organized as follows. Section 2 describes the main stages of the proposed method, Section 3 presents the results and discussion, and Section 4 gives the conclusions and future work.

3. Results and Discussion

The main aim of this research was to develop an efficient and robust method for the detection and classification of melanoma in simple and dermatological images using image processing and a multilayer perceptron. The algorithm estimates the descriptors of the ABCD rule and uses their values as inputs to the multilayer perceptron, which is trained on them and classifies each case as malignant or benign melanoma.
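The section does not specify the training procedure, so the sketch below assumes a common setup: four descriptor values as inputs, one hidden layer with sigmoid activations (1024 neurons by default, following the configuration reported in the conclusions), a sigmoid output read as the probability of malignancy, and full-batch gradient descent on binary cross-entropy. The class and method names are hypothetical.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


class MLP:
    """Four descriptor inputs -> one sigmoid hidden layer -> P(malignant)."""

    def __init__(self, n_in=4, n_hidden=1024, seed=0):
        rng = np.random.default_rng(seed)
        self.W1 = rng.normal(0.0, 1.0 / np.sqrt(n_in), (n_in, n_hidden))
        self.b1 = np.zeros(n_hidden)
        self.W2 = rng.normal(0.0, 1.0 / np.sqrt(n_hidden), (n_hidden, 1))
        self.b2 = np.zeros(1)

    def forward(self, X):
        self.h = sigmoid(X @ self.W1 + self.b1)  # hidden activations, kept for backprop
        return sigmoid(self.h @ self.W2 + self.b2).ravel()

    def loss(self, X, y):
        p = np.clip(self.forward(X), 1e-12, 1 - 1e-12)
        return float(-np.mean(y * np.log(p) + (1 - y) * np.log(1 - p)))

    def train_step(self, X, y, lr=0.1):
        """One full-batch gradient-descent step on binary cross-entropy."""
        p = self.forward(X)
        dz2 = (p - y)[:, None] / len(y)                   # dL/d(output pre-activation)
        dW2 = self.h.T @ dz2
        db2 = dz2.sum(axis=0)
        dz1 = (dz2 @ self.W2.T) * self.h * (1 - self.h)   # back through hidden sigmoid
        dW1 = X.T @ dz1
        db1 = dz1.sum(axis=0)
        self.W1 -= lr * dW1
        self.b1 -= lr * db1
        self.W2 -= lr * dW2
        self.b2 -= lr * db2

    def predict(self, X, threshold=0.5):
        return (self.forward(X) >= threshold).astype(int)  # 1 = malignant
```

In practice the descriptor values would be normalized before training so that no single descriptor dominates the hidden-layer inputs.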

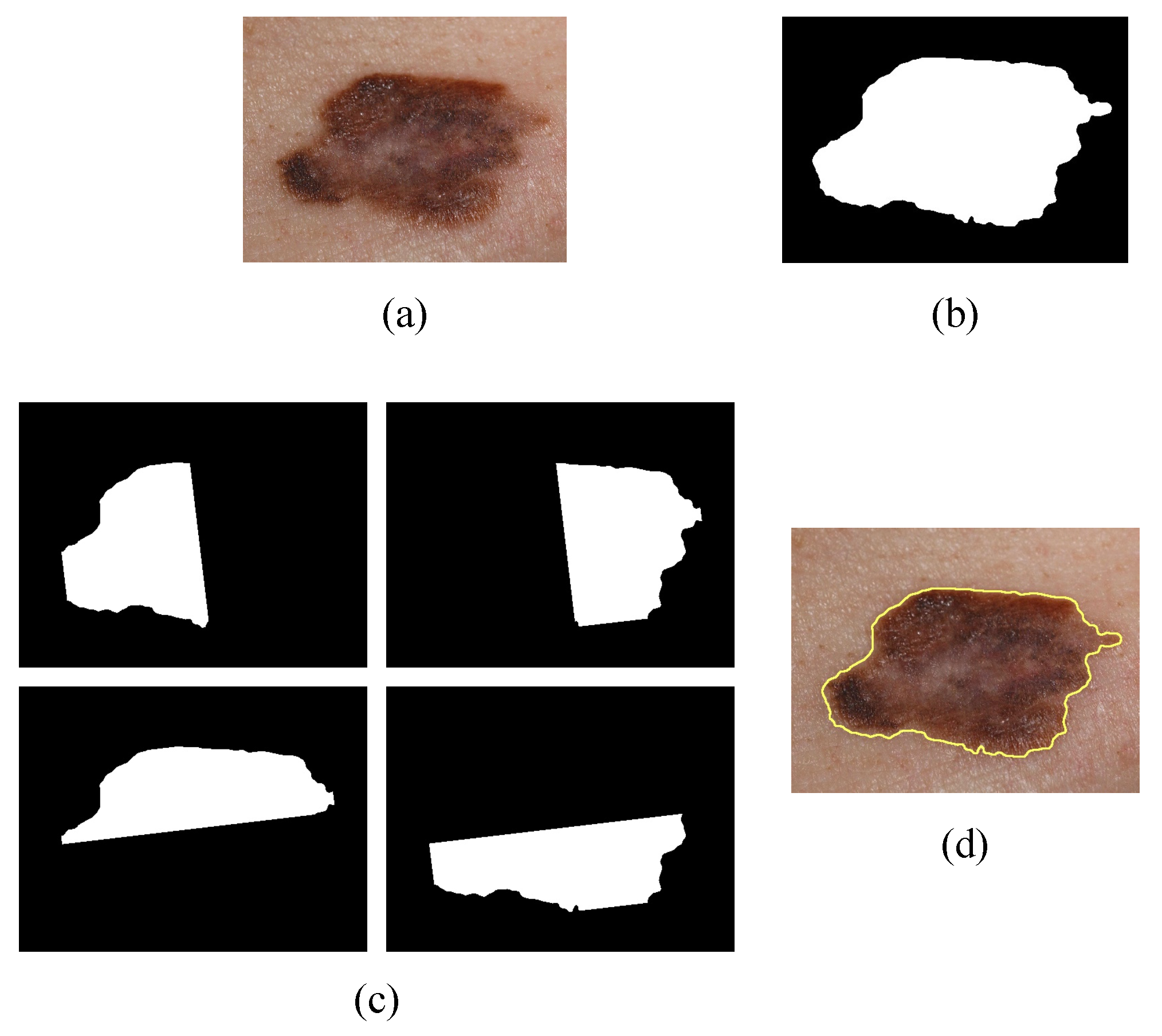

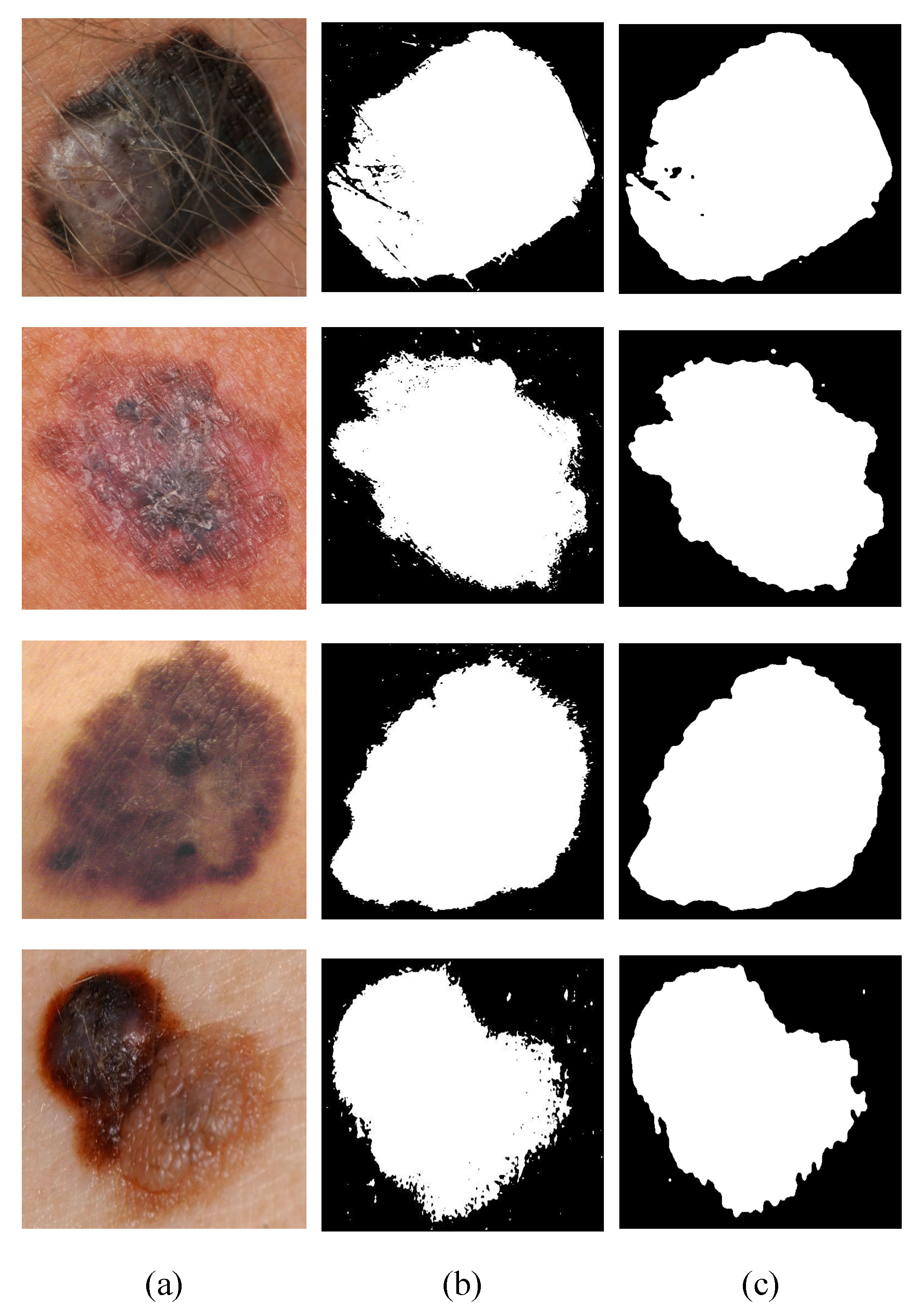

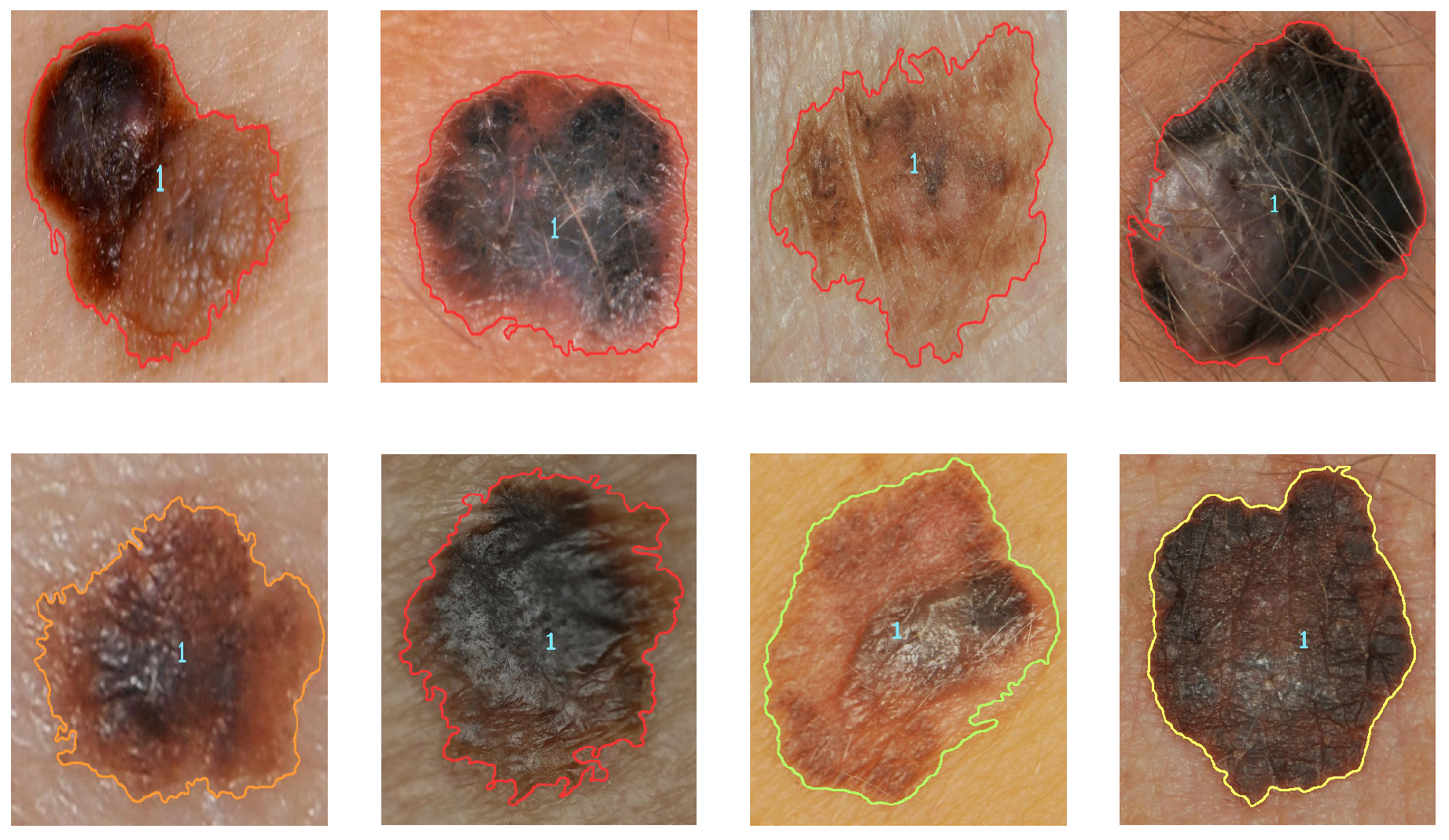

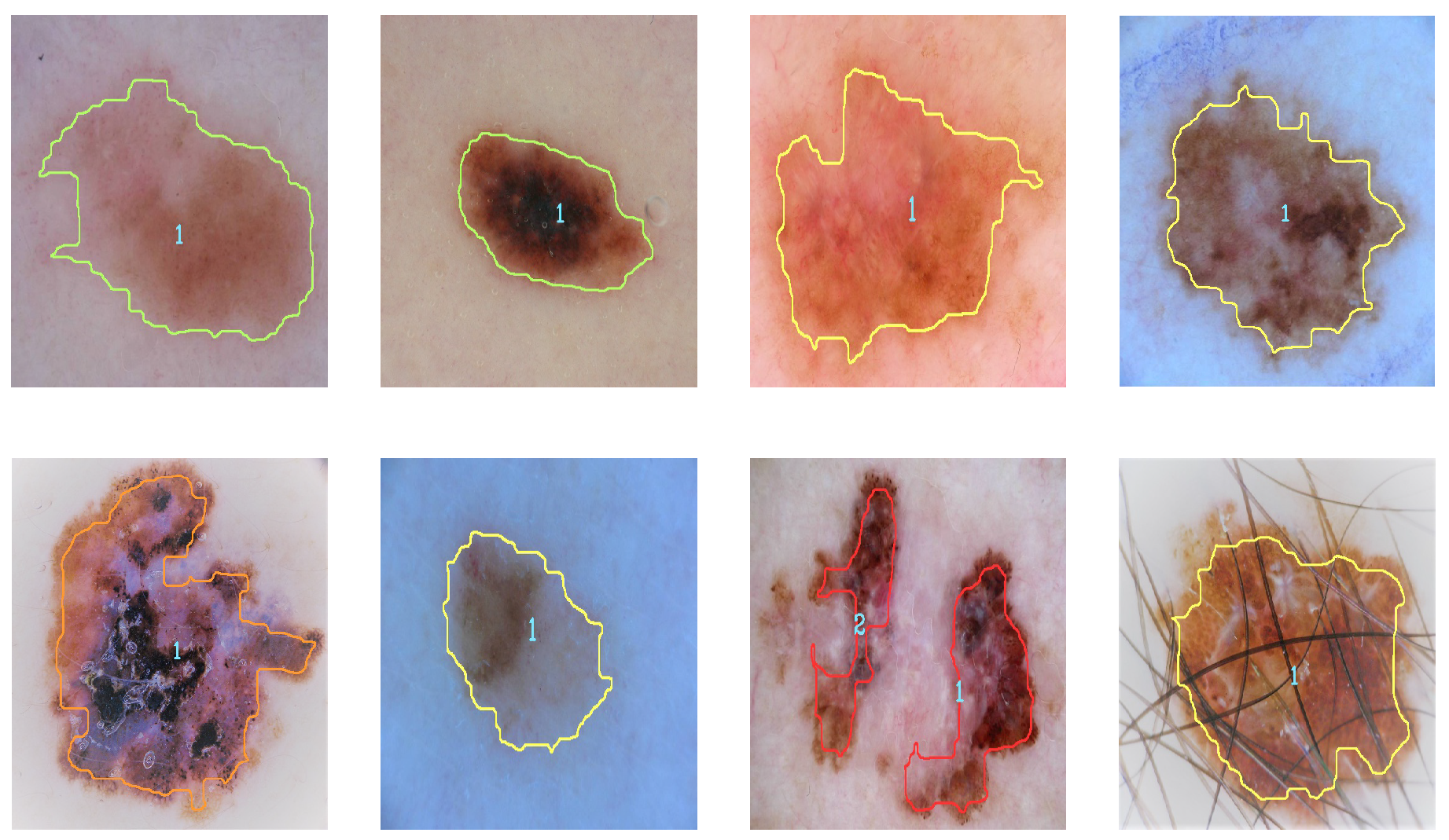

Figure 9 and Figure 10 illustrate the identified contours and the type of melanoma determined from the analysis of the results (the contour color indicates severity). Severity levels are an additional classification applied to malignant cases of melanoma.

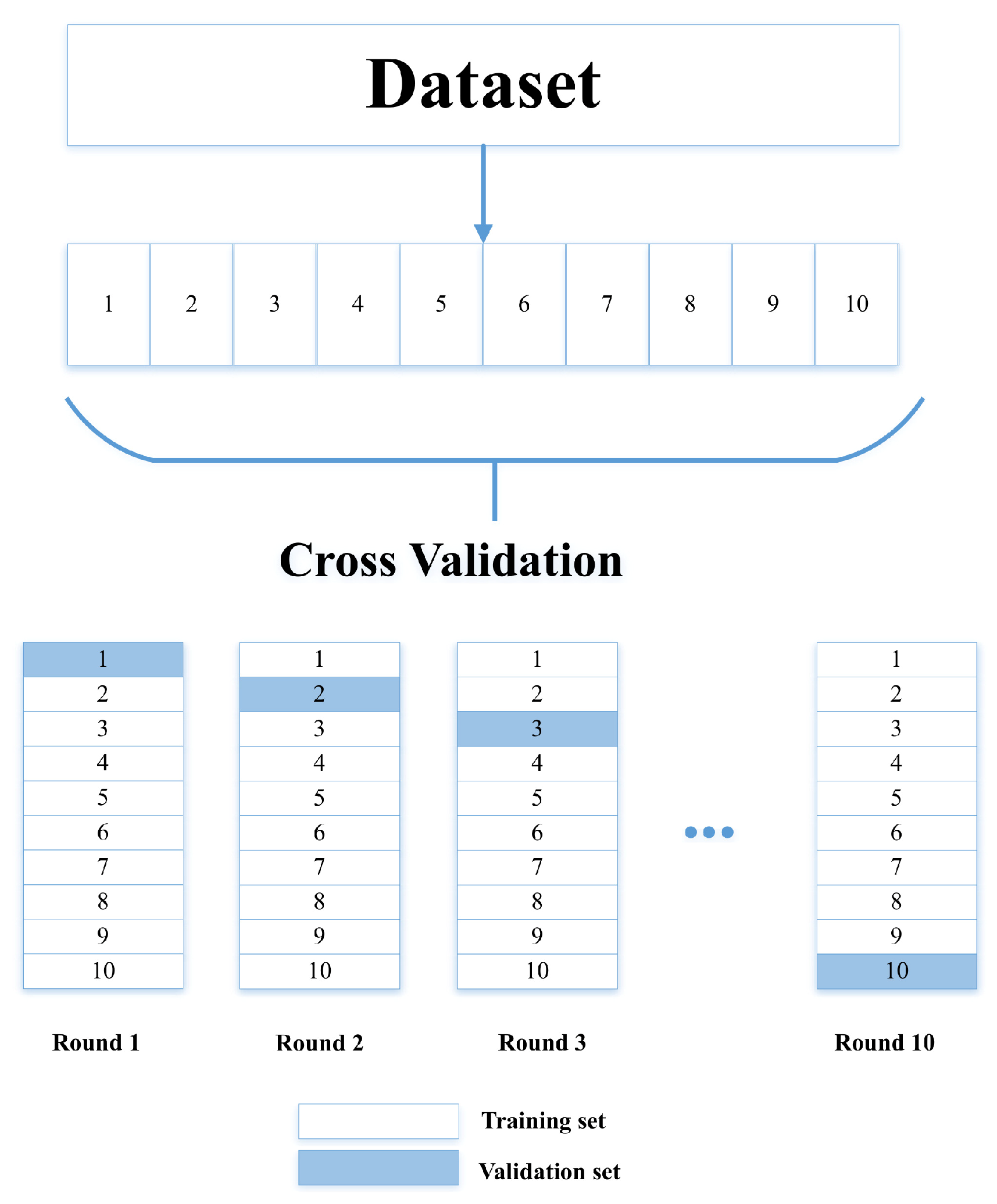

The validation was divided into two stages: cross-validation using Equations (4)–(7), followed by statistical analysis. Applying these equations yielded an efficiency of 97.78% for simple images and 98.22% for dermatological images, higher than that of the conventional methods.
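Equations (4)–(7) are not reproduced in this section. Assuming they are the usual confusion-matrix metrics, with malignant melanoma as the positive class, they can be computed as follows; the function name is hypothetical.

```python
def classification_metrics(tp: int, tn: int, fp: int, fn: int) -> dict[str, float]:
    """Standard confusion-matrix metrics (malignant = positive class)."""
    total = tp + tn + fp + fn
    return {
        "accuracy": (tp + tn) / total,   # fraction of all cases classified correctly
        "sensitivity": tp / (tp + fn),   # true-positive rate (recall)
        "specificity": tn / (tn + fp),   # true-negative rate
        "precision": tp / (tp + fp),     # positive predictive value
    }
```

Under this assumption, the reported efficiency would correspond to the accuracy averaged over the cross-validation folds.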

Table 4 shows the precision values obtained in the iterations for both simple and dermatological images.

Figure 11 compares the efficiency of the developed method with that of studies that used the same types of images and datasets.

The second classification categorizes the severity of a malignant melanoma once it has been diagnosed, using the maximum and minimum values obtained in the estimation of each descriptor. For malignant cases, the value of this second metric maps to four severity levels: level 1 below 0.35 (green), level 2 below 0.65 (yellow), level 3 below 0.85 (orange), and level 4 above 0.85 (red). Figure 9 and Figure 10 show examples of malignant melanoma with different levels of severity.
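The text gives the thresholds but not how the descriptor values are combined into a single score. The sketch below assumes each descriptor is min-max normalized with its observed minimum and maximum and that the normalized values are averaged; that aggregation is a hypothetical choice, while the cut-offs and colors come from the text. Because the text does not say which level a score of exactly 0.85 belongs to, the sketch assigns it to level 4.

```python
def min_max_normalize(value: float, lo: float, hi: float) -> float:
    """Scale a descriptor into [0, 1] using the min/max observed for it."""
    if hi == lo:
        return 0.0
    return min(1.0, max(0.0, (value - lo) / (hi - lo)))


def severity_score(descriptors: dict, ranges: dict) -> float:
    """Hypothetical aggregation: mean of the min-max normalized descriptors."""
    parts = [min_max_normalize(descriptors[k], *ranges[k]) for k in ranges]
    return sum(parts) / len(parts)


def severity_level(score: float) -> tuple[int, str]:
    """Cut-offs from the text; exactly 0.85 is assigned to level 4 here."""
    if score < 0.35:
        return 1, "green"
    if score < 0.65:
        return 2, "yellow"
    if score < 0.85:
        return 3, "orange"
    return 4, "red"
```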

Confidence intervals for the mean were then computed at a 97% confidence level, meaning that the intervals are expected to contain the true group means 97% of the time. A slight overlap was observed between the intervals of some group means; nevertheless, given the high confidence level used, the means are concluded to be statistically different among the groups at the 97% level.
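As a sketch of the statistics involved, the code below computes a one-way ANOVA F statistic across groups and a t-based confidence interval for a group mean using only the standard library. Converting F into a p-value, or looking up the critical t value for a 97% confidence level, requires the F and t distributions (for example `scipy.stats.f_oneway` and `scipy.stats.t`), so the critical value is passed in by the caller here. The function names are hypothetical.

```python
from math import sqrt
from statistics import mean, stdev


def one_way_anova_f(groups):
    """F statistic for a one-way ANOVA; returns (F, df_between, df_within)."""
    all_values = [v for g in groups for v in g]
    grand = mean(all_values)
    means = [mean(g) for g in groups]
    k, n = len(groups), len(all_values)
    # Between-group and within-group sums of squares.
    ss_between = sum(len(g) * (m - grand) ** 2 for g, m in zip(groups, means))
    ss_within = sum((v - m) ** 2 for g, m in zip(groups, means) for v in g)
    df_b, df_w = k - 1, n - k
    return (ss_between / df_b) / (ss_within / df_w), df_b, df_w


def mean_confidence_interval(sample, t_crit):
    """mean ± t_crit * s / sqrt(n); t_crit must be looked up for the chosen
    confidence level (e.g. 97%) and n - 1 degrees of freedom."""
    m = mean(sample)
    half = t_crit * stdev(sample) / sqrt(len(sample))
    return m - half, m + half
```

A large F relative to the critical value of the F distribution indicates that the group means differ, which is the conclusion drawn above; overlapping confidence intervals alone do not rule that out.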

Beyond detecting and classifying malignant and benign melanoma and grading severity, the proposed method offers other advantages: it detects several melanomas in the same image, identifies images that show only skin, and handles melanoma images that contain hair. When an image contains more than one melanoma, a diagnosis is provided for each.
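Reporting several melanomas per image implies that the segmented lesions are separated into individual regions before diagnosis. A standard way to do that, assumed here rather than taken from the method description, is connected-component labeling of the binary lesion mask; each component can then go through descriptor estimation and classification on its own. `label_lesions` and its `min_pixels` filter are hypothetical.

```python
from collections import deque


def label_lesions(mask, min_pixels=1):
    """4-connected component labeling of a binary mask (list of lists of 0/1).
    Returns one list of (row, col) pixels per component with >= min_pixels."""
    rows = len(mask)
    cols = len(mask[0]) if rows else 0
    seen = [[False] * cols for _ in range(rows)]
    components = []
    for r in range(rows):
        for c in range(cols):
            if not mask[r][c] or seen[r][c]:
                continue
            # Breadth-first flood fill from an unvisited lesion pixel.
            queue, pixels = deque([(r, c)]), []
            seen[r][c] = True
            while queue:
                y, x = queue.popleft()
                pixels.append((y, x))
                for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    ny, nx = y + dy, x + dx
                    if 0 <= ny < rows and 0 <= nx < cols and mask[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            if len(pixels) >= min_pixels:
                components.append(pixels)
    return components
```

The `min_pixels` threshold discards tiny specks left over after segmentation, so they are not reported as separate lesions.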

4. Conclusions

In this paper, a method for the detection and classification of melanoma in simple and dermatological images was proposed. It uses mathematical morphology, Gaussian filters, the HSV color space, and a multilayer perceptron for classification. Four descriptors (symmetry, size, color, and edge) were estimated. Several tests of the classifier with different parameters were performed, and the best result was achieved with 1024 neurons in the hidden layer and a sigmoid activation function.
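The conclusions name Gaussian filtering and the HSV color space among the building blocks. The sketch below shows these two generic operations with NumPy and the standard-library `colorsys` module: building a normalized Gaussian kernel, smoothing a single-channel image with it, and converting an RGB image to HSV. Kernel size, sigma, and the function names are illustrative assumptions, not the parameters of the proposed method.

```python
import colorsys

import numpy as np


def gaussian_kernel(size: int, sigma: float) -> np.ndarray:
    """Normalized 2-D Gaussian kernel (size must be odd)."""
    if size % 2 == 0:
        raise ValueError("size must be odd")
    ax = np.arange(size) - size // 2
    g = np.exp(-(ax ** 2) / (2.0 * sigma ** 2))
    kernel = np.outer(g, g)
    return kernel / kernel.sum()


def gaussian_blur(image: np.ndarray, size: int = 5, sigma: float = 1.0) -> np.ndarray:
    """Smooth a single-channel image; borders are handled by edge replication."""
    k = gaussian_kernel(size, sigma)
    pad = size // 2
    padded = np.pad(image.astype(float), pad, mode="edge")
    out = np.zeros(image.shape, dtype=float)
    # Accumulate shifted copies weighted by the (symmetric) kernel.
    for dy in range(size):
        for dx in range(size):
            out += k[dy, dx] * padded[dy:dy + image.shape[0], dx:dx + image.shape[1]]
    return out


def rgb_image_to_hsv(image: np.ndarray) -> np.ndarray:
    """Per-pixel RGB -> HSV for an (H, W, 3) float image with values in [0, 1]."""
    flat = image.reshape(-1, 3).astype(float)
    hsv = np.array([colorsys.rgb_to_hsv(*px) for px in flat])
    return hsv.reshape(image.shape)
```

Smoothing before segmentation suppresses noise and fine hair texture, and the HSV representation separates hue from brightness, which helps when lighting varies between images.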

The experimental results show higher efficiency than three state-of-the-art methods. Cross-validation confirmed a high level of reliability, with an efficiency of 97.78% for simple images and 98.22% for dermatological images.

The analysis of the results indicates that the ABCD rule measurements increase significantly in cases of malignant melanoma, with symmetry being the most significant of the four descriptors.

This work opens several lines of future work. One is to implement the proposed method in an embedded system; given its high efficiency, we expect it to improve the reliability of melanoma detection compared with the applications reported. If so, a portable, low-cost melanoma detection device could eventually become available.