A New Gaze Estimation Method Based on Homography Transformation Derived from Geometric Relationship

Abstract

1. Introduction

2. Comparison Methods

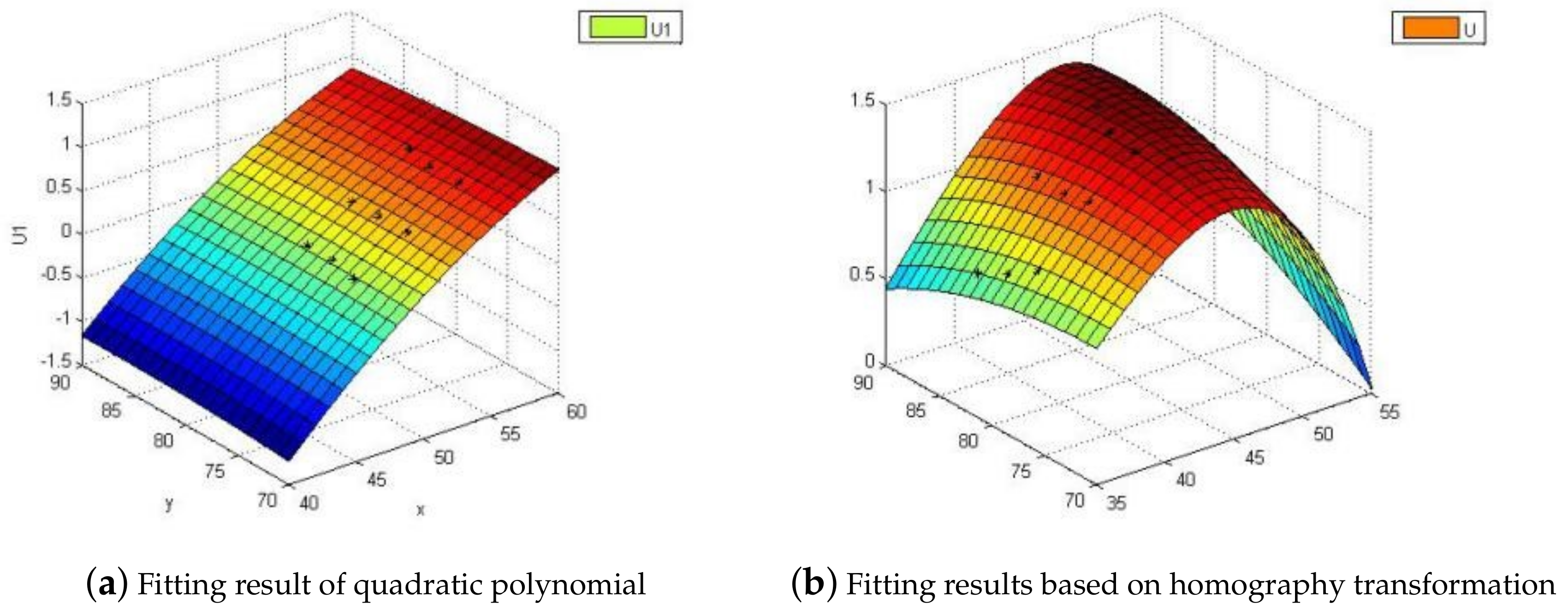

2.1. Polynomial Fitting Method
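
Polynomial fitting methods typically map a measured eye feature, such as the pupil-glint vector (x, y), to screen coordinates (U, V). They use a low-order polynomial whose coefficients are fitted by least squares on calibration points. The following Python sketch assumes a six-term second-order form, U = a0 + a1·x + a2·y + a3·xy + a4·x² + a5·y² (and likewise for V). This form and the helper names are illustrative assumptions, not necessarily the exact polynomial compared in this paper.

```python
import numpy as np


def design_matrix(x, y):
    """Second-order polynomial terms [1, x, y, xy, x^2, y^2] (an assumed form)."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    return np.column_stack([np.ones_like(x), x, y, x * y, x**2, y**2])


def fit_polynomial_mapping(x, y, u, v):
    """Least-squares coefficients for U and V; needs at least 6 well-spread calibration points."""
    A = design_matrix(x, y)
    coef_u, *_ = np.linalg.lstsq(A, np.asarray(u, dtype=float), rcond=None)
    coef_v, *_ = np.linalg.lstsq(A, np.asarray(v, dtype=float), rcond=None)
    return coef_u, coef_v


def apply_polynomial_mapping(coef_u, coef_v, x, y):
    """Map pupil-glint vectors (x, y) to estimated screen coordinates (U, V)."""
    A = design_matrix(x, y)
    return A @ coef_u, A @ coef_v


# Synthetic 3x3 calibration grid with a known quadratic mapping (illustrative values only).
gx, gy = np.meshgrid([-1.0, 0.0, 1.0], [-1.0, 0.0, 1.0])
x, y = gx.ravel(), gy.ravel()
u_true = 960 + 400 * x + 20 * x * y + 15 * x**2
v_true = 540 + 300 * y - 10 * y**2
coef_u, coef_v = fit_polynomial_mapping(x, y, u_true, v_true)
u_hat, v_hat = apply_polynomial_mapping(coef_u, coef_v, x, y)
```

Six coefficients per coordinate require at least six calibration points. A nine-point grid, as in the example, leaves some redundancy for averaging out feature-detection noise.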

2.2. Homography Normalization
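
Homography-based methods rely on a 3×3 planar homography H. It is estimated from at least four point correspondences, no three of them collinear, and applied in homogeneous coordinates. The Python sketch below shows the standard direct linear transform (DLT) for estimating H and the projective division for applying it. It is a generic building block rather than the full homography normalization pipeline. It also omits the coordinate normalization (centering and scaling the points) that is usually recommended when the inputs are in pixel units. The function names are hypothetical.

```python
import numpy as np


def estimate_homography(src, dst):
    """Direct linear transform (DLT): 3x3 H with dst ~ H @ [x, y, 1]^T from >= 4 point pairs."""
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    rows = []
    for (x, y), (u, v) in zip(src, dst):
        # Each correspondence contributes two linear equations in the 9 entries of H.
        rows.append([-x, -y, -1.0, 0.0, 0.0, 0.0, u * x, u * y, u])
        rows.append([0.0, 0.0, 0.0, -x, -y, -1.0, v * x, v * y, v])
    # h is the right singular vector with the smallest singular value (least squares, ||h|| = 1).
    _, _, vt = np.linalg.svd(np.asarray(rows))
    H = vt[-1].reshape(3, 3)
    return H / H[2, 2]  # fix the projective scale (assumes H[2, 2] != 0)


def apply_homography(H, pts):
    """Map 2D points through H and divide out the homogeneous coordinate."""
    pts = np.asarray(pts, dtype=float)
    homog = np.column_stack([pts, np.ones(len(pts))]) @ H.T
    return homog[:, :2] / homog[:, 2:3]


# Round trip with a made-up homography (illustrative values only).
H_true = np.array([[2.0, 0.1, 5.0], [0.05, 1.5, -3.0], [0.001, 0.002, 1.0]])
src = np.array([[0.0, 0.0], [1.0, 0.0], [1.0, 1.0], [0.0, 1.0], [0.5, 0.3]])
dst = apply_homography(H_true, src)
H_est = estimate_homography(src, dst)
```

With exact correspondences, the recovered matrix matches H_true up to numerical precision. With noisy points, the SVD step returns the algebraic least-squares solution.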

3. Method

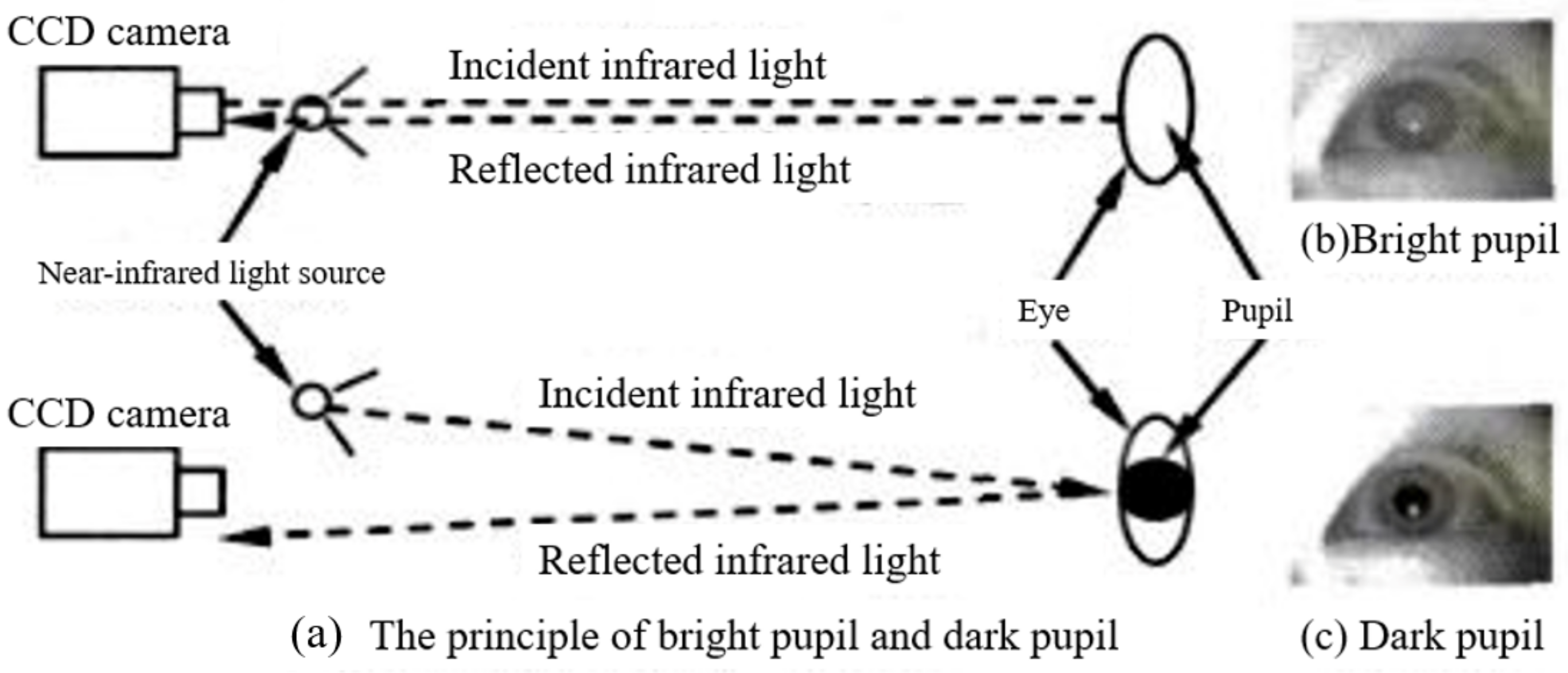

3.1. Pupil Center Location Algorithm
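
A common way to locate the pupil center is to fit an ellipse to pupil-boundary edge points and take the ellipse center. The Python sketch below assumes the edge points have already been extracted. It normalizes the coordinates and then uses a plain algebraic least-squares conic fit. This is simpler than, and not equivalent to, ellipse-specific direct least-squares fitting, and it is not presented as this paper's exact algorithm. The function name is hypothetical.

```python
import numpy as np


def ellipse_center(xs, ys):
    """Center of the conic a x^2 + b xy + c y^2 + d x + e y + f = 0 fitted to edge points."""
    xs = np.asarray(xs, dtype=float)
    ys = np.asarray(ys, dtype=float)
    # Normalize coordinates to improve the conditioning of the fit.
    mx, my = xs.mean(), ys.mean()
    s = np.sqrt(np.mean((xs - mx) ** 2 + (ys - my) ** 2))
    x, y = (xs - mx) / s, (ys - my) / s
    D = np.column_stack([x**2, x * y, y**2, x, y, np.ones_like(x)])
    # Algebraic least squares: minimize ||D theta|| subject to ||theta|| = 1.
    _, _, vt = np.linalg.svd(D)
    a, b, c, d, e, f = vt[-1]
    if b * b - 4 * a * c >= 0:
        raise ValueError("fitted conic is not an ellipse")
    # The center is where the conic's gradient vanishes: 2a x + b y + d = 0, b x + 2c y + e = 0.
    cx, cy = np.linalg.solve([[2 * a, b], [b, 2 * c]], [-d, -e])
    # Undo the normalization.
    return mx + s * cx, my + s * cy


# Synthetic pupil boundary: only a 270-degree arc is visible (e.g. partly occluded).
t = np.linspace(0.0, 1.5 * np.pi, 30)
theta = 0.3
xs = 120.5 + 30 * np.cos(t) * np.cos(theta) - 18 * np.sin(t) * np.sin(theta)
ys = 80.25 + 30 * np.cos(t) * np.sin(theta) + 18 * np.sin(t) * np.cos(theta)
cx, cy = ellipse_center(xs, ys)
```

On exact data, the fit recovers the true center from a partial arc. By contrast, the centroid of the same points is offset toward the visible side of the boundary, by several pixels in this example.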

3.2. Build the Mapping Equation

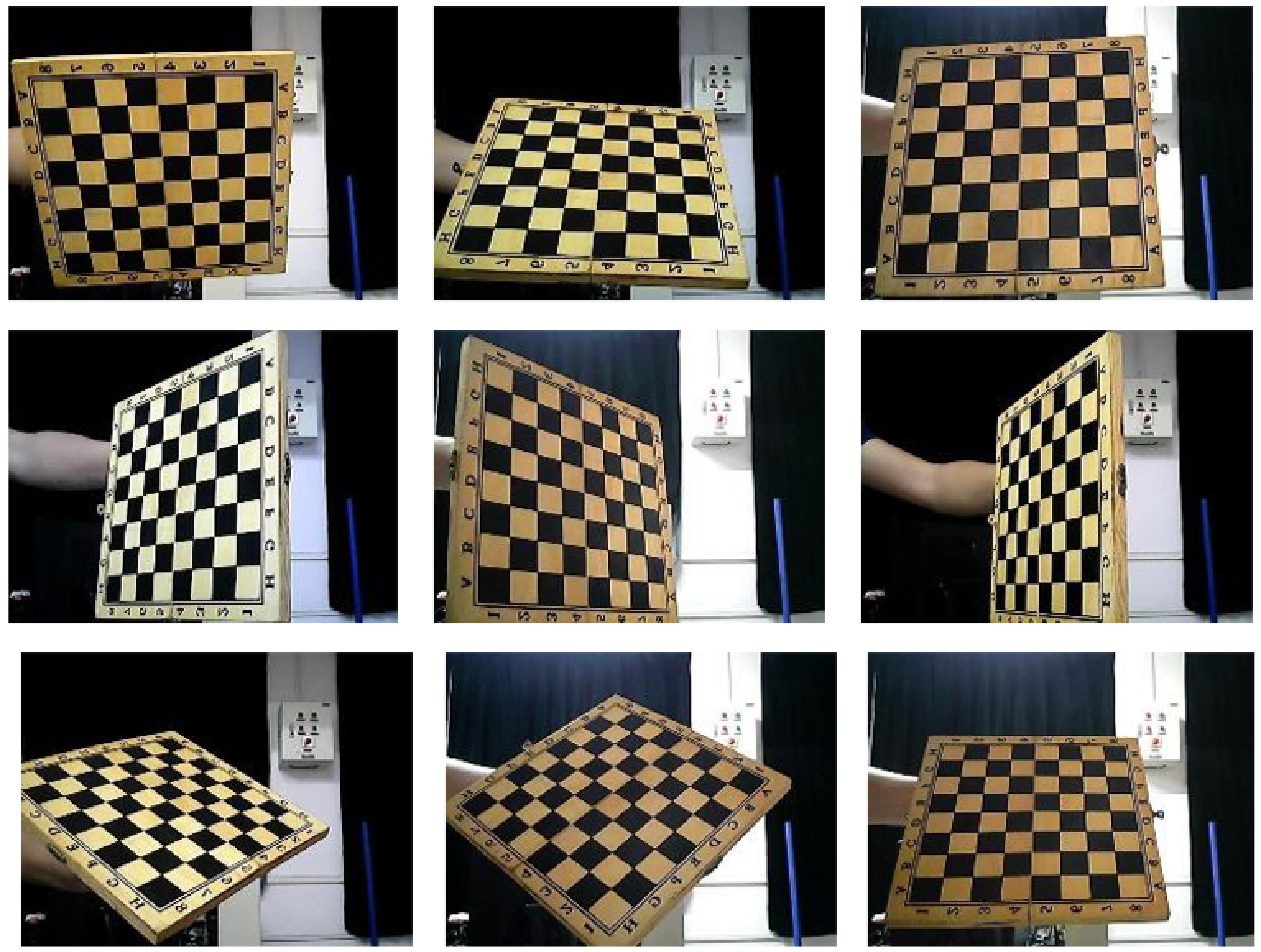

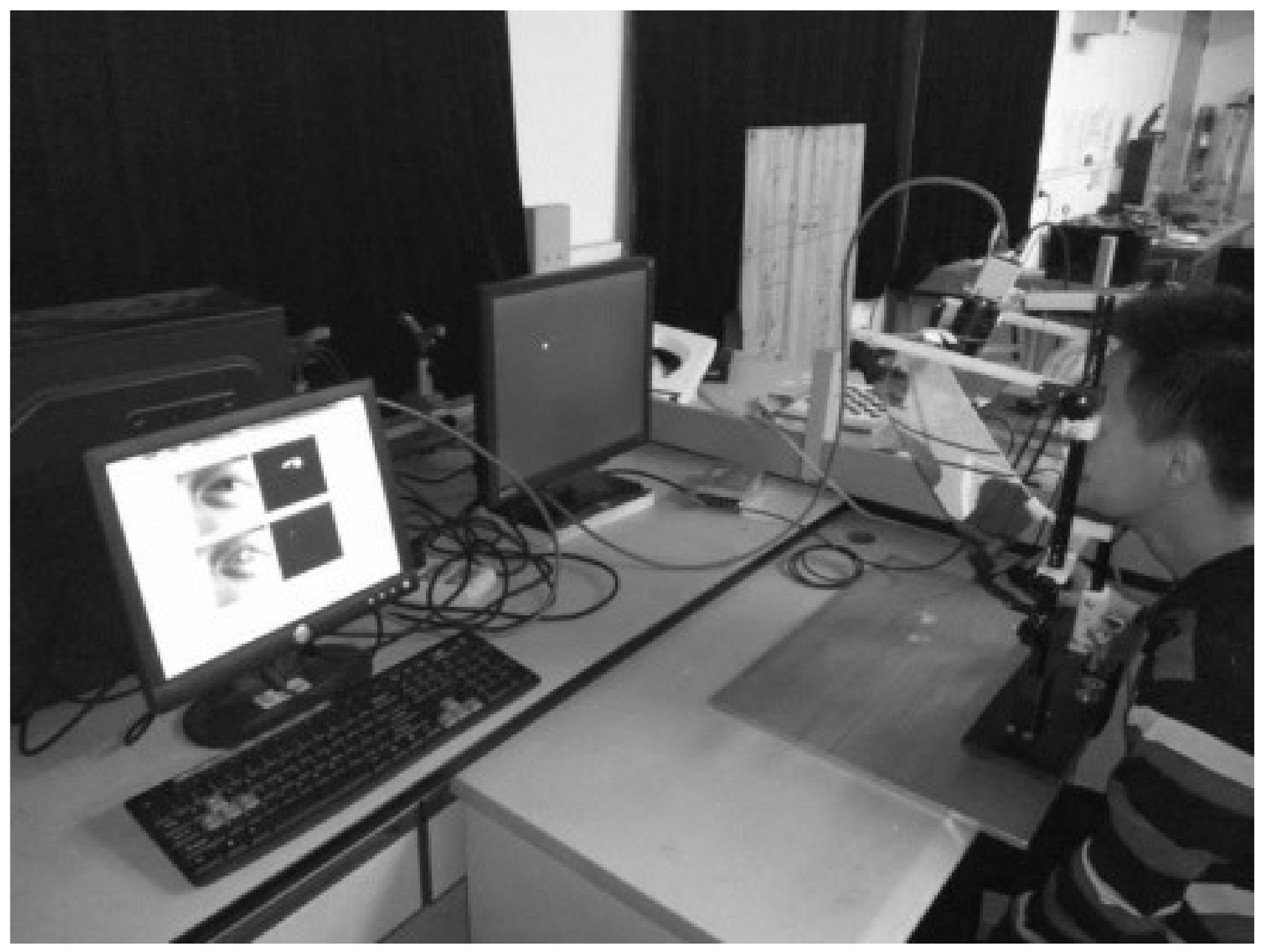

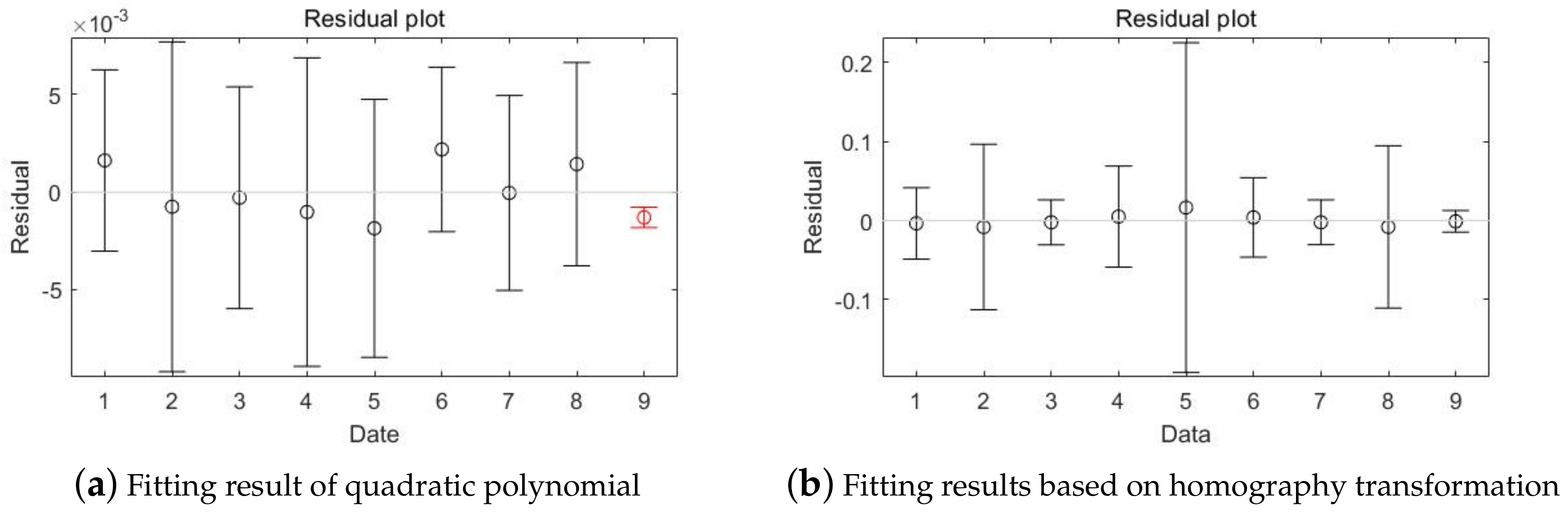

4. Experiment

5. Discussion

6. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Fixation Point | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | Accuracy |
|---|---|---|---|---|---|---|---|---|---|---|
| Experiment 1 (blue +) | 0.733 | 0.114 | 0.182 | 0.348 | 0.388 | 0.872 | 0.898 | 0.585 | 0.469 | - |
| Experiment 2 (red +) | 0.387 | 0.292 | 0.371 | 0.087 | 0.354 | 0.554 | 0.257 | 0.379 | 0.287 | - |
| Mean | 0.560 | 0.202 | 0.276 | 0.218 | 0.371 | 0.713 | 0.577 | 0.482 | 0.378 | 0.420 |
| Variance | 0.060 | 0.016 | 0.018 | 0.034 | 0.001 | 0.050 | 0.206 | 0.021 | 0.017 | 0.047 |
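
The summary rows can be reproduced from the two experiment rows. The Python sketch below assumes that "Variance" denotes the sample variance, which divides by n − 1 = 1 for two trials. It also assumes that the overall accuracy and variance are the means of the nine per-point values. Both readings are inferred from the arithmetic, not stated in the table. The recomputed values agree with the table to within about 0.001, presumably because the published figures were computed from unrounded data.

```python
import numpy as np

# Per-fixation-point values copied from the table above (experiments 1 and 2).
exp1 = np.array([0.733, 0.114, 0.182, 0.348, 0.388, 0.872, 0.898, 0.585, 0.469])
exp2 = np.array([0.387, 0.292, 0.371, 0.087, 0.354, 0.554, 0.257, 0.379, 0.287])
trials = np.vstack([exp1, exp2])

mean_per_point = trials.mean(axis=0)
# ddof=1 gives the sample (n - 1) variance, which matches the table's "Variance" row.
var_per_point = trials.var(axis=0, ddof=1)

# Overall figures taken as the means of the nine per-point values (an inferred reading).
overall_mean = mean_per_point.mean()
overall_var = var_per_point.mean()

print(np.round(mean_per_point, 3))
print(np.round(var_per_point, 3))
print(round(overall_mean, 3), round(overall_var, 3))
```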

| Serial Number | Coefficient of Determination | Mean Square Error | F-Statistic | Equation | Mapping Equation Coordinate |
|---|---|---|---|---|---|
| 1 | 0.9947471 | 5.4259*1 | 11,620 | Equation (1) | U |
| 2 | 0.9987318 | 3.1474*1 | 24,573 | Equation (7) | U |
| 3 | 0.9963974 | 5.9933*1 | 197.479 | Equation (1) | V |
| 4 | 0.9999745 | 5.1450*1 | 221.869 | Equation (7) | V |
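
The coefficient of determination, mean square error, and F-statistic in the table above are standard goodness-of-fit measures for a least-squares regression. The following Python sketch computes them under the usual ordinary-least-squares definitions. These definitions are an assumption here; the paper may, for example, normalize the MSE by n rather than n − p. The sketch does not reproduce the table's numbers, which would require the original calibration data. The function name is hypothetical.

```python
import numpy as np


def regression_stats(A, y):
    """Ordinary least squares fit of y on design matrix A (A must contain an intercept column).

    Returns (R^2, MSE, F) with the usual definitions:
      R^2 = 1 - SSE / SST,  MSE = SSE / (n - p),  F = (SSR / (p - 1)) / MSE,
    where n is the number of samples and p the number of columns of A.
    """
    A = np.asarray(A, dtype=float)
    y = np.asarray(y, dtype=float)
    n, p = A.shape
    coef, *_ = np.linalg.lstsq(A, y, rcond=None)
    residuals = y - A @ coef
    sse = float(residuals @ residuals)         # residual sum of squares
    sst = float(np.sum((y - y.mean()) ** 2))   # total sum of squares
    ssr = sst - sse                            # explained sum of squares
    mse = sse / (n - p)
    return 1.0 - sse / sst, mse, (ssr / (p - 1)) / mse


# Synthetic example: a noisy straight line (illustrative values only).
rng = np.random.default_rng(0)
x = np.linspace(0.0, 1.0, 20)
A = np.column_stack([np.ones_like(x), x])
y = 2.0 + 3.0 * x + 0.01 * rng.standard_normal(x.size)
r2, mse, f_stat = regression_stats(A, y)
```

Equivalently, F = (R²/(p − 1)) / ((1 − R²)/(n − p)). As a result, F depends on the number of fitted coefficients p as well as on R².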


© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Luo, K.; Jia, X.; Xiao, H.; Liu, D.; Peng, L.; Qiu, J.; Han, P. A New Gaze Estimation Method Based on Homography Transformation Derived from Geometric Relationship. Appl. Sci. 2020, 10, 9079. https://doi.org/10.3390/app10249079
